Abstract

Psychometric scales are useful tools for understanding people’s attitudes towards different aspects of life. As societies develop and new technologies arise, new validated scales are needed. Robots and artificial intelligences of various kinds are about to occupy nearly every niche in human society. Several tools exist to measure fears and anxieties about robots, but there is a clear lack of tools to measure hopes and expectations for these new technologies. Here, we create and validate a novel multidimensional scale that measures people’s attitudes towards robots, giving equal weight to positive and negative attitudes. Our scale differentiates (a) comfort and enjoyment around robots, (b) unease and anxiety around robots, (c) rational hopes about robots in general (at the societal level) and (d) rational worries about robots in general (at the societal level). The scale was developed by extracting items from previous scales, crowdsourcing new items, and testing three scale iterations with exploratory factor analysis (Ns = 135, 801 and 609); its final form was validated with confirmatory factor analysis (N = 477). We hope our scale will be a useful instrument for social scientists who wish to study human–technology relations with a validated scale in efficient and generalizable ways.

1 Introduction

In the near future, humans and robots will be interacting and working together. Yet it is not clear how readily humans en masse will welcome these new companions, nor what kinds of personality traits, socioeconomic factors, or experiences influence these attitudes. Social scientists wishing to understand the general socioeconomic and cultural changes currently taking place need a way of measuring attitudes towards robots and robotization. In this paper, we present a new instrument for social survey research with which to study individual differences in attitudes towards robots. Our scale differentiates between personal-level and societal-level attitudes and has separate subscales for positive and negative attitudes, thus consisting of four dimensions.

1.1 Attitudes

An attitude is a psychological tendency that is expressed by evaluating a particular entity with some degree of favour or disfavour [1]. Ajzen and Fishbein [2] described attitude as a predisposition to respond favourably or unfavourably to objects in the world. Implicit in this viewpoint is the notion of evaluation: individuals (perhaps unconsciously) rate their feelings toward an object or event on a number of dimensions, such as good–bad, harmful–beneficial, pleasant–unpleasant and likeable–dislikeable, and together these evaluations drive behaviour [3].

1.2 Attitudes Towards Robots

Recently, a systematic review by Naneva et al. [4] showed that between 2005 and 2019, at least 97 papers measured attitudes towards robots. They categorized the measurements as Affective attitudes (feelings towards robots), Cognitive attitudes (thoughts about robots), General attitudes (a mix of cognitive and affective attitudes), and, as specific cases, Acceptance (intention to use), Anxiety or Trust towards robots. Most of the studies examined how people felt about interacting with one specific robot in a real-world situation, using tools such as the Almere Model of robot acceptance [5], the Godspeed Questionnaire [6] and the Unified Theory of Acceptance and Use of Technology (UTAUT; [7]). Such studies can be very useful for design and engineering, for marketing, and for predicting the effects of implementing a specific robot in a specific work environment, but their results cannot be extrapolated to wider societal impacts and expectations. Also, as these measures ask subjects to evaluate their experience with a physical robot, they cannot be used in large-scale online general surveys. Among the studies measuring attitudes towards robots in general, Anxiety was predominantly assessed via the Robot Anxiety Scale (RAS; [8]); General attitudes were almost exclusively measured via non-validated self-report measures; and Cognitive and Affective attitudes were measured either via non-validated self-report measures or via the Negative Attitudes towards Robots Scale (NARS [9, 10]), using NARS subscales 1 (interaction with robots) and 3 (emotions in interaction with robots) for Affective attitudes and subscale 2 (social influence of robots) for Cognitive attitudes.

Similarly, Krägeloh et al. [11] found only six validated questionnaires to measure the acceptability of social robots, three of which can be used to measure attitudes towards robots in general: NARS, the Frankenstein Syndrome Questionnaire [12] measuring acceptance of humanoid robots and the Multi-dimensional Robot Attitude Scale [13] which has 12 facets (Familiarity, Interest, Negative attitude, Self-efficacy, Appearance, Utility, Cost, Variety, Control, Social support, Operation and Environmental fit) and is mainly intended for gauging the needs and wishes of potential users of domestic robots (such as robotic vacuum cleaners). There is also the Robot Perception Scale [14], which consists of two subscales (general attitudes toward robots and attitudes toward human–robot similarity and attractiveness).

In summary, the NARS measures only negative attitudes, the Frankenstein Syndrome Questionnaire is only applicable to humanoid robots, the Multi-dimensional Robot Attitude Scale is focused on surveying the needs of buyers, and the Robot Perception Scale compresses positive and negative attitudes towards robots on a single dimension. Thus, we can see that while tools to measure fears and anxieties about robots exist, there is a definite lack of compact tools to measure positive attitudes like hopes and expectations. It is then no wonder that the Special Eurobarometer [15] in 2012 ended up basing most of its socioeconomic and demographic analyses on the single question: “Generally speaking, do you have a very positive, fairly positive, fairly negative or very negative view of robots?”.

1.3 General Attitudes Towards Technologies

Previous research on attitudes towards technology in general, or towards computers and information technology specifically, shows that attitudes are often multidimensional. At a minimum, general positive (hopes, expected benefits) and negative (fears, expected risks) attitudes can be measured independently [16]. For example, Edison and Geissler [17] showed that an interest in playing with technology (a positive attitude at the personal level) does not necessarily imply low fears about how technologies can impact societies (a negative attitude at the societal level).

Fears and hopes are not two ends of a single continuum but two separate constructs. It is entirely possible for someone to have both high hopes and high fears about something (for example, many people accept that cars make moving around much faster and easier, while also knowing that car accidents are often lethal and that exhaust gases contribute to global warming). Similarly, someone can simply have no interest in something, and thus have both low hopes and low fears about it. A scale with fear at one end and hope at the other fails to differentiate between subjects who have both high hopes and high fears and those who have both low hopes and low fears. Hopes and fears are not a zero-sum game: some behaviours are predicted solely by fears, some by hopes and some by both.
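
The point about single-axis scales can be made concrete with a small worked example. The sketch below is purely illustrative: the two respondents and their 1–7 ratings are hypothetical, and "hope minus fear" is an assumed stand-in for any single-dimension hope–fear score.

```python
from dataclasses import dataclass


@dataclass
class Respondent:
    """Hypothetical respondent with separate 1-7 hope and fear ratings."""
    label: str
    hope: int
    fear: int


def bipolar_score(r: Respondent) -> int:
    """Collapse both ratings onto one hope-fear axis (range -6 to +6)."""
    return r.hope - r.fear


ambivalent = Respondent("high hopes, high fears", hope=7, fear=7)
indifferent = Respondent("low hopes, low fears", hope=1, fear=1)

for r in (ambivalent, indifferent):
    # The single axis gives both respondents the same score (0),
    # while the two-dimensional profile keeps them apart.
    print(f"{r.label}: bipolar = {bipolar_score(r)}, profile = ({r.hope}, {r.fear})")
```

Both respondents land on the midpoint of the single axis, which is exactly the information a two-dimensional (hope, fear) profile preserves.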

There is also a distinction between personal and societal levels of attitudes towards robots. At the personal level, hopes and fears are felt as innate, visceral reactions: one simply likes playing with a robot, or shudders at the thought of touching one, without needing to rationalize the feeling [3, 17]. At the societal level, we can worry about robots replacing humans in the workplace, thus creating unemployment, or hope that increasingly smart automatic driving systems will result in fewer traffic accidents, thus easing the burden on health care systems. Societal-level hopes and fears are based at least partly on information received from outside the self, i.e., they are learned [1, 18]. Thus, it is reasonable to think that societal and personal attitudes should be measured as separate constructs, although the distinction is by no means clear-cut. In addition to attitudes, experience with a subject influences the way one sees it, typically easing the worst fears but also dampening the highest hopes (for an example on robots in elderly care, see [19]).

1.4 Current Studies

To date, no instrument has been properly validated for studying multidimensional attitudes towards robots in general. Here, we attempt to fill this gap by designing and validating a scale that will allow social scientists to study attitudes towards robots. Specifically, we aim to design a scale with four attitude factors:

1. Personal level positive (P+): comfort and enjoyment around robots
2. Personal level negative (P−): unease and anxiety around robots
3. Societal level positive (S+): rational hopes about robots in general
4. Societal level negative (S−): rational worries about robots in general

and a few criterion items measuring real life experience with and knowledge about robots.

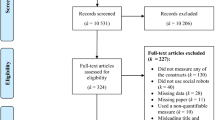

Sections 2–4 of this paper describe the iterative design process in detail. In short, we started with 23 items (V.1), dropped items based on exploratory factor analysis and content-related issues, added new items and repeated the factor analysis process (V.2), added more items and repeated the analysis again (V.3), and finally picked the strongest items of the last iteration and validated the scale. The final version of our scale (Appendix A) is described and validated in Sect. 5.

1.5 Overview on Data Analysis

There were no missing responses in any of the data sets (we used forced responses in both the laboratory and online studies, and screened the data afterwards to ensure there were no missing data). We also screened the data for straight-line responders (i.e., participants who did not respond to the survey thoughtfully but merely chose the same response option for all items). No data imputation methods were used, since the data were complete and cross-sectional. In all studies, participants were over 18 years old and were only allowed to participate if they were fluent in the language in which the study was run. In conjunction with the exploratory factor analyses (pilot, Study 1, Study 2), satisfactory internal consistency of the subscales was confirmed with Cronbach’s alphas and Tarkkonen’s rhos. We used SPSS, versions 22–24 [20]; R, with the packages psych [21] and lavaan [22]; and JASP, versions 0.7–0.11 [23] for our analyses.
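
The two screening steps described above (checking for missing responses and flagging straight-line responders) can be sketched as follows. This is a minimal illustration assuming each respondent's answers are stored as a list of 1–7 integers keyed by a hypothetical participant ID, with `None` marking a missing answer; the actual screening procedure is not described beyond the definition above.

```python
from typing import Dict, List, Optional, Sequence


def has_missing(row: Sequence[Optional[int]]) -> bool:
    """True if any item was left unanswered (None)."""
    return any(v is None for v in row)


def is_straight_liner(row: Sequence[Optional[int]]) -> bool:
    """True if the same response option was chosen for every item."""
    return len(set(row)) == 1


def screen(responses: Dict[str, List[Optional[int]]]) -> Dict[str, List[Optional[int]]]:
    """Keep only complete, non-straight-lined response vectors."""
    return {
        pid: row
        for pid, row in responses.items()
        if not has_missing(row) and not is_straight_liner(row)
    }


# Hypothetical example data: p1 straight-lines, p3 has a missing answer.
example = {
    "p1": [4, 4, 4, 4, 4],
    "p2": [2, 6, 5, 3, 7],
    "p3": [5, None, 3, 4, 2],
}
print(sorted(screen(example)))
```

The straight-lining check follows the definition in the text (an identical option on every item); a looser rule, such as near-zero variance across items, would be a different design choice.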

We decided on the number of factors to retain based on our pilot study: four factors, based on the Kaiser criterion (for a non-Heywood-case result of maximum-likelihood factor analysis). However, parallel analysis and optimal coordinates suggested two factors, and the acceleration factor suggested unidimensionality. We approached Studies 1 and 2 with a four-factor solution, which retroactively also validated the results of the pilot study. In our exploratory factor analyses, we iteratively retained items that had a factor loading of at least 0.35 on one factor and no cross-factor loadings, excluding ill-fitting items one by one. The results of these exploratory factor analyses were finally confirmed in Study 3 with a confirmatory factor analysis (CFA). As a safeguard against violations of multivariate normality, we used the Satorra–Bentler correction in our CFA.
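
Two of the factor-retention criteria mentioned above, the Kaiser criterion and parallel analysis, can be sketched in a few lines of NumPy. This is an assumed, simplified version: it uses eigenvalues of the item correlation matrix (the principal-components variant of Horn's parallel analysis with a mean-eigenvalue threshold), whereas the text applies the Kaiser criterion to a maximum-likelihood factor solution, and tools such as R's `psych::fa.parallel` offer other variants. It is not expected to reproduce the reported counts.

```python
import numpy as np


def sorted_eigenvalues(data: np.ndarray) -> np.ndarray:
    """Eigenvalues of the item correlation matrix, largest first.

    data has shape (n_respondents, n_items).
    """
    corr = np.corrcoef(data, rowvar=False)
    return np.sort(np.linalg.eigvalsh(corr))[::-1]


def kaiser_count(data: np.ndarray) -> int:
    """Kaiser criterion: number of eigenvalues greater than 1."""
    return int(np.sum(sorted_eigenvalues(data) > 1.0))


def parallel_analysis(data: np.ndarray, n_iter: int = 100, seed: int = 0) -> int:
    """Horn's parallel analysis (PCA variant, mean random eigenvalue as threshold).

    Retains leading components whose observed eigenvalue exceeds the mean
    eigenvalue of uncorrelated normal data with the same shape.
    """
    rng = np.random.default_rng(seed)
    n, p = data.shape
    observed = sorted_eigenvalues(data)
    simulated = np.array(
        [sorted_eigenvalues(rng.standard_normal((n, p))) for _ in range(n_iter)]
    )
    threshold = simulated.mean(axis=0)
    retained = 0
    for obs, thr in zip(observed, threshold):
        if obs <= thr:
            break  # stop at the first eigenvalue that does not beat random data
        retained += 1
    return retained
```

Applied to simulated data with two well-separated latent factors, both functions should point to two factors; with real item batteries the criteria often disagree, as they did here.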

2 Pilot Study

For the pilot study, we created an initial battery of questions about attitudes towards robots based on face validity and substantive content criteria. We took inspiration from NARS [9], the Robot Perception Scale [14] and the Frankenstein Syndrome Questionnaire [12], aiming to expand their scope (NARS focuses solely on negative attitudes, and the Frankenstein Syndrome Questionnaire only on humanoid robots). The purpose of the pilot was to evaluate whether the predicted four-factor solution would emerge. As a starting point, we went through existing robot attitude scales and extracted suitable items (see Appendix C); as the extracted items focused on negative attitudes, we supplemented the battery with items designed to fit the theoretical model.

2.1 Method

2.1.1 Participants and Design

The data were collected during a randomized experimental study on human–robot interaction (reported elsewhere), before any experimental manipulation took place. In total, 135 participants (56 female) with an average age of 37.10 years (SD = 17.65; range 18–80) were recruited non-intrusively (details below). Of the participants, 60% had at least a Bachelor’s degree.

2.1.2 Procedure and Materials

We collected the data at a large public library in the capital area of Helsinki. Participants were recruited non-intrusively at a table in the foyer with a sign stating ‘Participate in Psychological Research’. Research assistants in neutral clothing sat behind the table, and participants approached them voluntarily. They were informed that they could participate in a psychological experiment taking about 30 min of their time. After ensuring that the participants were legal adults (over 18 years old), we gave them an informed consent form describing the study and highlighting their right to opt out at any point. After signing the consent form, the participants were taken to our laboratory space, which had four notebook computers with 15″ screens in cubicle-like nooks to guarantee privacy. Participants were given headphones playing pink noise at a constant, pleasant level to mask background noise. The experiment was programmed using Python’s Social Psychology Questionnaire library [24], built on top of Pygame v. 1.96.

2.1.3 GAToRS Version 1

The initial version of the General Attitudes Towards Robots Scale (GAToRS) consisted of 23 statements concerning opinions about and attitudes towards robots, technology and artificial intelligences partly based on previous measurement tools [9, 12, 14]. For a full listing of items, see Table 1. There were also four items intended as criterion variables, and thus not included in the scale. These were: “Robotics is a familiar topic to me”, “Generally speaking, I have a positive view of robots”, “I have personal experience of using robots”, and “I am interested in scientific discoveries and technological developments”. All items were anchored from 1 to 7 (“Strongly disagree”–“Strongly agree”). The subscale scores were calculated by averaging the scores of the items in the subscale. We developed the items with a four-factor structure in mind: specifically, a structure with positive and negative attitudes both split into two separate factors of personal-level and societal-level attitudes. This assumption was based on earlier research showing that negative attitudes towards technologies form two separate factors of personal and societal worries regarding technologies [17]. Thus, we reasoned that positive attitudes would follow the same logic. A majority of the negatively valenced items were extracted from existing scales (see Appendix C for details on the origins of each item).
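
Subscale scoring as described (the mean of each subscale's items on the 1–7 scale) can be sketched as below. The item identifiers and their assignment to subscales are hypothetical placeholders, not the published GAToRS scoring key; the range check is an added convenience.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical scoring key (placeholder item IDs, NOT the published key).
SCORING_KEY: Dict[str, List[str]] = {
    "P+": ["item01", "item02"],
    "P-": ["item03", "item04"],
    "S+": ["item05", "item06"],
    "S-": ["item07", "item08"],
}


def subscale_scores(answers: Dict[str, int],
                    key: Dict[str, List[str]] = SCORING_KEY) -> Dict[str, float]:
    """Average each subscale's items; responses must lie on the 1-7 scale."""
    for item, value in answers.items():
        if not 1 <= value <= 7:
            raise ValueError(f"{item}: response {value} is outside the 1-7 scale")
    return {scale: float(mean(answers[i] for i in items)) for scale, items in key.items()}


answers = {"item01": 6, "item02": 7, "item03": 2, "item04": 1,
           "item05": 5, "item06": 6, "item07": 4, "item08": 3}
print(subscale_scores(answers))
```

Because positive and negative attitudes are kept in separate subscales, no reverse-scoring step is needed in this sketch.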

2.2 Results

First, we evaluated the number of factors proposed by various methods for the 23 items. Eigenvalues suggested 6 factors, parallel analysis and optimal coordinates two factors, and acceleration factor one factor. The proposed four-factor structure falls between these extremes, so we continued by running an exploratory factor analysis (EFA) with four factors. We wanted to allow for correlations between factors, since we had no strong reason to assume full orthogonality. In fact, the subscales should correlate: negative attitudes should correlate negatively with positive attitudes, and societal attitudes of a given valence should correlate with personal attitudes of the same valence. If this were not the case, it would raise questions regarding the construct validity of the scale. Thus, we used oblique promax rotation in our factor analysis. Based on the results, we dropped one item from the subsequent version of the scale. See Table 1 for results. All analyses were done with the statistical software R.

The factor with the most items (labeled S+) had 7 items relating to positive societal aspects of robots. These items were statements about robots being a natural product of civilization, developers considering the needs of people, robots being able to ease life, robots being central in future societies, robots helping people, and robots being able to do dangerous tasks and being efficient. Each of these items had a factor loading > 0.34. Items in the second-largest factor were about personal positive perceptions of robots (P+): robots being currently safe enough, robots being trustworthy, robots helping people, robots enhancing independence, feeling relaxed around robots, but also, surprisingly, that robotics should not be studied. Another factor (P−) had 4 items about negative personal attitudes towards robots: robots being scary, the thought of using robots in jobs being uncomfortable, being nervous around robots and being afraid that robots would not understand orders. The last factor (S−) focused on the negative societal aspects of robots: people losing jobs, people having fewer interactions, becoming too dependent on robots and robotics needing strict supervision. Item 16 (“Robots are a good thing for society, because they help people”) loaded on factors P+ (0.36) and S+ (0.59), and item 12 (“In the future, robots will play a key role in society”) loaded on factors S+ (0.34) and S− (0.30), showing that it does not differentiate between positive and negative valences at the societal level. Items 13 and 17 did not load on any factor. The Cronbach’s alphas were P+: 0.63, P−: 0.77, S+: 0.83, S−: 0.59; Tarkkonen’s rhos were P+: 0.61, P−: 0.74, S+: 0.71, S−: 0.54.
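
For reference, Cronbach's alpha for a k-item subscale is α = k/(k − 1) · (1 − Σσᵢ²/σ_X²), where σᵢ² are the item variances and σ_X² is the variance of the summed score. A minimal standard-library sketch follows. It covers alpha only (Tarkkonen's rho requires the factor-model estimates and is not reproduced here), and the toy data are made up.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha.

    items[j][i] is respondent i's answer to item j; all items must have
    the same respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(answers) for answers in zip(*items)]  # summed score per respondent
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Toy data (made up): three items answered by five respondents.
toy = [
    [1, 2, 4, 5, 6],
    [2, 2, 5, 5, 7],
    [1, 3, 4, 6, 6],
]
print(round(cronbach_alpha(toy), 2))
```

Using sample variances throughout is harmless here: the n/(n − 1) factor cancels in the variance ratio.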

The factor structure is in line with our expectations about 4 factors (personal level positive, personal level negative, societal level positive, societal level negative). The model accounted for 42% of the total variance, with the explained variance spread among the factors quite evenly.

The correlations between factors are presented in Table 2. As expected, the two positive attitude factors correlated positively, as did the two negative attitude factors. Positive and negative attitudes correlated negatively within both the personal and the societal level, as well as across levels.

2.3 Discussion of Pilot Study Results

The factors roughly corresponded to the hypothesized constructs but were not without cross-loadings. Items intended for the personal and societal factors sometimes loaded onto both, indicating that these items did not distinguish between the two constructs as well as intended. Thus, the current set of items could be improved. The main problem with the suggested factor solution was that the subfactors were formed from very uneven numbers of items, with both negative attitude subfactors having only four items with loadings above 0.35. Ideally, each subscale would have the same number of items, each intended to primarily measure one latent variable. Furthermore, Cronbach's alphas and Tarkkonen’s rhos did not reach the minimum acceptable level (> 0.71) for two of the subscales (P+ and S−).

Thus, we decided to add new items in the following studies. Of the items included in the pilot, all except item 8 (“Robots are a crime against nature”) were retained, as we judged even the items that did not load on any factor, or that cross-loaded on several, to be face valid.

It is also noteworthy that the pilot study was conducted in Finnish, and mainly served as a proof of concept as we were developing a tool for international use. Nuances between languages can easily make a difference in factor loadings. In subsequent studies, we attempted to refine the scale by iterating the item battery in international samples in English.

3 Study 1

In Study 1, we developed an extended version of the questionnaire. We retained 22 of the 23 items in the pilot study and translated those into English. We extracted more items from previous instruments ([9, 12, 14], see Appendix C) and crowdsourced new items that we hoped would fit the expected factors better. We posted open questions (“Can you think of a question that would clearly relate to personal comfort in using robots / personal fear of using robots / hopes/worries about the societal impact of robots”) in 4 science fiction fandom and 3 robotics-oriented Facebook groups. This yielded 73 items which we evaluated for suitability for the theoretical structure. Of these, we chose the 21 items that we found the most suitable for the scale. Combined with questions from the previous iteration, we ended up with 43 questions in total.

We chose items based on face validity (i.e., items that fit the presumed theoretical structure), moderateness of suggested phrasing (i.e., no extreme negative or positive language), and general “tone” (i.e., avoiding fanciful or far-fetched subject matter, even if the question was technically about attitudes towards robots). For example, we excluded items such as “Stumbling on a Roomba can be dangerous” (no face validity), “Horrible killer robots should be banned” (extreme negative phrasing), and “Sending robotic probes to outer space can give aliens the wrong impression about humanity” (a far-fetched theme). We intentionally excluded all suggested items that had to do with real-life experiences with robots, since these were not suitable as measures of attitudes. Regarding certain themes, we received several item suggestions that were quite similar to one another. For some of the themes, we included more than one item variation, picking items that were different enough from one another to cover different aspects of attitudes (see, e.g., items 23 and 24 in Table 3).

3.1 Method

3.1.1 Participants

A total of 801 participants (340 women) were pooled together from two datasets collected for experiments reported elsewhere. In these experiments, we collected the revised item battery for GAToRS as an experimental covariate prior to the actual experiment. The mean age of participants was 36.43 (SD = 11.52), and 59% of participants had at least a Bachelor’s degree.

3.1.2 Procedure and Materials

Participants completed the surveys on the Qualtrics survey platform. GAToRS was presented before participants were directed to an unrelated experiment.

3.1.3 GAToRS Version 2

This iteration of the item battery had a total of 43 items plus 4 criterion items. The criterion items were the same as in the previous version, and were not included in factor analysis. All items were anchored from 1 (“strongly disagree”) to 7 (“strongly agree”). The subscale scores were calculated by averaging the scores of the items in the subscale. For detailed listing of the items in this study see Table 3 below.

3.2 Results and Discussion

In the dimensionality assessment, optimal coordinates, the acceleration factor, eigenvalues and the Kaiser criterion suggested solutions ranging from one to four factors. Thus, the analyses did not rule out a four-factor solution (i.e., the methods did not agree on the number of factors). As a four-factor solution fit our preliminary theoretical model, we proceeded by constraining the number of factors to four. We ran an exploratory factor analysis with oblique promax rotation, as in the pilot study (see Table 3 below for results).

Moving forward, we selected items for further analysis by immediately ruling out items with cross-loadings greater than 0.17 in absolute value on more than two factors (Items 1, 17, 19) and items that had no loadings greater than 0.35 in absolute value (e.g., items 13, 14, 42). We then iteratively ran the factor analysis by dropping out items that did not cluster neatly with other items, or which we felt did not match in terms of content with the other items in the factor in light of our a priori structure (see Appendix D for details and rationale for item exclusions). Our final solution based on this iterative process is shown in Table 4 below.
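
The first-pass screening rule can be expressed as a small function over an item-by-factor loading matrix. This is a sketch under one assumption: we read "cross-loadings greater than 0.17 on more than two factors" as counting every factor on which an item's absolute loading exceeds 0.17, including its primary factor. The loadings in the example are invented, and the later, content-based iterative exclusions are a matter of judgment and are not modelled.

```python
from typing import List, Sequence


def screen_items(loadings: Sequence[Sequence[float]],
                 primary_cutoff: float = 0.35,
                 cross_cutoff: float = 0.17,
                 max_factors_over_cross: int = 2) -> List[int]:
    """Return indices of items that survive the first-pass screen.

    loadings[i][f] is item i's pattern loading on factor f. An item is
    dropped if |loading| > cross_cutoff on more than max_factors_over_cross
    factors, or if no |loading| exceeds primary_cutoff.
    """
    kept = []
    for i, row in enumerate(loadings):
        n_over_cross = sum(abs(x) > cross_cutoff for x in row)
        has_primary = any(abs(x) > primary_cutoff for x in row)
        if n_over_cross > max_factors_over_cross or not has_primary:
            continue
        kept.append(i)
    return kept


# Invented loadings for four items on four factors.
example_loadings = [
    [0.60, 0.10, 0.05, 0.00],   # clean item
    [0.30, 0.20, 0.25, 0.10],   # weak and spread over three factors
    [0.50, 0.20, 0.20, 0.30],   # primary loading but spread over four factors
    [0.10, -0.70, 0.00, 0.10],  # clean negative loading
]
print(screen_items(example_loadings))
```

Absolute values are used throughout, so strongly negative loadings count as primary loadings, matching the "in absolute value" wording above.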

The items in this second iteration (Study 1) conformed reasonably well to our intended model. A majority of the items in the P+ factor (10, 18, 21, 32) related to personal-level positive attitudes or positive reliance on robots; all five items in the P− factor were clearly individual-level items focusing on negatively felt aspects of robots; the S+ factor had its highest loadings on three items related to labour allocation (3, 4, 29); and a majority of the items in the S− factor (5, 23, 34, 44) related to society-level worries about robots. The Cronbach’s alphas were P+: 0.78, P−: 0.79, S+: 0.83, S−: 0.72; Tarkkonen’s rhos were P+: 0.65, P−: 0.66, S+: 0.72, S−: 0.56.

However, the factors were still uneven after this iteration, with P+ having seven items and each of the other three factors five items with loadings above 0.35, although Cronbach’s alphas were now at acceptable levels (> 0.71). Moreover, some factors had content-validity issues due to their item loadings. In this solution, the S+ factor had only one item (33) that was not directly related to labour allocation, indicating a risk that it would become a factor about this single societal theme rather than a more general factor. We felt that item 27 (“Robots are a natural product of our civilization”), which had a loading of 0.30 on this factor, should be re-tested, as it was clearly a more general society-level item; we therefore included it in Study 2. With respect to the P+ factor, we dropped item 43 from further analysis since it was clearly unrelated to personal issues (it concerned labour markets in developed countries). Similarly, item 15 in the S− factor was clearly about personal dependency and was dropped. Item 26 cross-loaded on the P+ and S+ factors; we decided to retain it for Study 2 to see whether this would replicate.

4 Study 2

In Study 1, we examined whether the initial structure of our item pool conformed to the predicted theoretical structure. Because the S+ factor was deficient and some items had to be dropped because their content did not match the theoretical factor model, we again crowdsourced new items. We used exactly the same crowdsourcing method and item-selection criteria as in Study 1 (described in Sect. 3). This yielded 34 items, which we evaluated for suitability for the theoretical structure; we chose the 13 most suitable. We kept the 22 items from Study 1, marked in Table 3, and added the crowdsourced items, for a total of 35 items. Additionally, we reworded two items to better match the personal or societal factor they had loaded onto in Study 1: “Robots can be trusted” became “I can trust a robot” (P+), and “Robots can make my life easier” became “Robots can make life easier” (S+).

4.1 Method

4.1.1 Participants

We pooled data from two experimental online studies in which we included the scale in an exploratory manner. This resulted in 609 observations (318 male) for the 39-item version of GAToRS (35 scale items plus 4 criterion items), with no missing values. The mean participant age was 30.96 years (SD = 11.90). Participants were recruited from English-speaking countries via Prolific (www.prolific.co).

4.1.2 Procedure and Materials

Participants read and agreed to an informed consent form, after which they were directed to the survey form. They responded to several personality and attitude questionnaires, after which they participated in the experimental part of the study (reported elsewhere).

4.1.3 GAToRS Version 3

The refined version of the scale had 35 items, with the same 4 criterion items as used in previous iterations (not included in factor analysis). All items were anchored from 1 (“strongly disagree”) to 7 (“strongly agree”). The subscale scores were calculated by averaging the scores of the items in the subscale. For a list of all items, see Table 5.

4.2 Results of Study 2

We again started by running an exploratory factor analysis, constrained to four factors, with promax rotation on the full set of 35 items (see results in Table 5 below).

For each subscale, we selected the five items with the highest loadings, resulting in a final set of 20 items (see Table 6 and Appendix D). We then reran the factor analysis for these items. The results showed a good fit, with these items loading strongly on four factors without significant cross-loadings.
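
The selection step (keeping the five highest-loading items per factor) can be sketched as below, assuming each item is assigned to the factor on which its absolute loading is largest. Item labels and loadings in the example are invented, and `n_per_factor` is set to 2 only to keep the example short.

```python
from typing import Dict, List, Sequence, Tuple


def top_items_per_factor(loadings: Dict[str, Sequence[float]],
                         n_per_factor: int = 5) -> Dict[int, List[str]]:
    """Assign items to their primary factor and keep the strongest n per factor."""
    by_factor: Dict[int, List[Tuple[float, str]]] = {}
    for item, row in loadings.items():
        # Primary factor = largest absolute loading (an assumption of this sketch).
        primary = max(range(len(row)), key=lambda f: abs(row[f]))
        by_factor.setdefault(primary, []).append((abs(row[primary]), item))
    return {
        factor: [item for _, item in sorted(pairs, reverse=True)[:n_per_factor]]
        for factor, pairs in sorted(by_factor.items())
    }


# Invented loadings on two factors.
example = {
    "a": [0.8, 0.1], "b": [0.6, 0.0], "c": [0.7, 0.2],
    "d": [0.1, 0.9], "e": [0.0, -0.5], "f": [0.2, 0.4],
}
print(top_items_per_factor(example, n_per_factor=2))
```

In practice the retained set would then be refitted, as the text describes, to confirm that the selected items still load cleanly.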

Based on these factor analysis results, we then computed the reliability estimate Tarkkonen’s rho for each factor (P+ 0.81, S+ 0.83, P− 0.79 and S− 0.67); the Cronbach’s alphas were P+: 0.85, P−: 0.82, S+: 0.89, S−: 0.76. Taken together, the Tarkkonen’s rhos and Cronbach’s alphas suggest that this set of items is better than the previous iterations, since all values were either above the cut-off point (0.71) or very close to it. This structure of four factors with five items each was then subjected to a confirmatory factor analysis in Study 3.

5 Study 3 Validation of Final Form

After three studies (n = 1545) and three scale iterations, we settled on a 20-item questionnaire consisting of four subscales with five items each, plus four criterion items. This final version of the proposed scale, which was subjected to confirmatory factor analysis (CFA) in Study 3, is shown in Table 7 below. For comparison, we also administered the existing Negative Attitudes towards Robots Scale (NARS). We chose the NARS because it was the closest match to our scale in terms of content, so comparing the two served as a test of convergent validity.

5.1 Method

5.1.1 Participants

A total of 502 participants were recruited from Prolific. Twenty-five participants were excluded based on an attention check question (“It is important that you pay attention to this study, please respond 6”), leaving a final sample of 477 (283 female, 192 male, 2 non-binary). The mean age of the sample was 40.23 years (SD = 13.51), and 61% of participants had at least a Bachelor's degree.
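
The exclusion rule amounts to keeping only respondents who answered the instructed-response item with 6. A minimal sketch, assuming each response is a dict and that the item is stored under a hypothetical key `attention_check`:

```python
from typing import Dict, List

ATTENTION_KEY = "attention_check"  # hypothetical column name
INSTRUCTED_ANSWER = 6  # the item asks respondents to "respond 6"


def passes_attention_check(row: Dict[str, int]) -> bool:
    """True only if the instructed answer was given."""
    return row.get(ATTENTION_KEY) == INSTRUCTED_ANSWER


def apply_exclusions(rows: List[Dict[str, int]]) -> List[Dict[str, int]]:
    """Keep respondents who gave the instructed answer."""
    return [row for row in rows if passes_attention_check(row)]


# Hypothetical illustration using the study's counts: 502 recruited, 25 failing.
recruited = [{ATTENTION_KEY: 6}] * 477 + [{ATTENTION_KEY: 3}] * 25
final_sample = apply_exclusions(recruited)
print(len(recruited), len(final_sample))
```

A missing attention-check answer is treated as a failure here, which is one reasonable convention rather than something the text specifies.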

5.1.2 Procedure and Materials

5.1.2.1 General Attitudes Towards Robots Scale (GAToRS) Final Version

The final version of the scale had 20 items, plus the 4 criterion items that were included in all previous versions. All items were presented as statements, and subjects were asked to indicate how much they agreed with each statement. All items were anchored from 1 (“strongly disagree”) to 7 (“strongly agree”). The subscale scores are calculated by averaging the scores of the items in the subscale. See below for reliabilities.

5.1.2.2 Negative Attitudes Towards Robots Scale (NARS)

The NARS was developed in Japanese and has been validated in at least English, Portuguese, Polish and Turkish. It has three factors (Negative Attitudes toward Situations of Interaction with Robots, six items; Negative Attitudes toward Social Influence of Robots, five items; and Negative Attitudes toward Emotions in Interaction with Robots, three items) [9]. Cronbach’s alphas were 0.84, 0.77, and 0.78, respectively, in our sample. The full questionnaire can be found in Appendix B. All items were presented as statements, and subjects were asked to indicate how much they agreed with each statement. All items were anchored from 1 (“completely disagree”) to 7 (“completely agree”).

5.1.3 Data Analysis

We ran a confirmatory factor analysis (CFA) of the four-factor structure theorized and observed in the previous studies, using the lavaan package for R. We used the Satorra–Bentler robust estimation method, which corrects for violations of multivariate normality. In this model, 20 items load on 4 factors, five on each. We also examined the intercorrelations between the subscales, between the criterion items and the subscales, and between the GAToRS and NARS subscales.
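
For readers replicating the model, the CFA described above can be written in lavaan's model syntax, one `=~` line per factor. The Python sketch below only builds that syntax string and counts degrees of freedom; the factor and item names are hypothetical. It assumes lavaan's `cfa()` defaults (first loading of each factor fixed to 1, factors allowed to correlate); in R the string would be fitted with something like `cfa(model, data = d, estimator = "MLM")`, where `MLM` requests the Satorra–Bentler scaled test statistic. The degrees-of-freedom count for 20 items on 4 correlated factors comes to 164, which agrees with the χ² degrees of freedom reported in Sect. 5.2.

```python
from typing import Dict, List


def lavaan_cfa_syntax(factors: Dict[str, List[str]]) -> str:
    """One 'factor =~ item1 + item2 + ...' line per latent factor."""
    return "\n".join(f"{name} =~ " + " + ".join(items) for name, items in factors.items())


def cfa_degrees_of_freedom(n_items: int, n_factors: int) -> int:
    """df for a simple-structure CFA with correlated factors.

    Assumes each item loads on exactly one factor, one marker loading per
    factor is fixed to 1, and no residual covariances are estimated.
    """
    moments = n_items * (n_items + 1) // 2           # unique variances/covariances
    free_loadings = n_items - n_factors              # marker loadings are fixed
    residual_variances = n_items
    factor_cov = n_factors * (n_factors + 1) // 2    # factor variances + covariances
    return moments - (free_loadings + residual_variances + factor_cov)


# Hypothetical variable names: five indicators per factor.
factors = {
    name: [f"{name.lower()}{i}" for i in range(1, 6)]
    for name in ("Ppos", "Pneg", "Spos", "Sneg")
}
model = lavaan_cfa_syntax(factors)
print(model)
print(cfa_degrees_of_freedom(20, 4))
```

Factor names avoid "+" and "−" because those characters are operators in lavaan's syntax.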

5.2 Results

The four-factor model fit the GAToRS data well: Satorra-Bentler χ²(164) = 429.98, p < 0.001, CFI = 0.91, TLI = 0.896, RMSEA = 0.058, 90% CI = [0.052, 0.064], SRMR = 0.057 (see Table 8 and Fig. 1). The model-based reliability coefficients omega (see footnote 3) were P+: 0.75, P−: 0.85, S+: 0.81 and S−: 0.76. Cronbach’s alphas were P+: 0.74, P−: 0.84, S+: 0.83 and S−: 0.76. We did not modify the model, since the initial model was already adequate [25, 26].
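The reported RMSEA can be checked against the other fit statistics using the standard point-estimate formula, RMSEA = sqrt(max(χ² − df, 0) / (df (N − 1))). This sketch plugs in the scaled χ² reported above. lavaan also offers robust RMSEA variants that are computed differently, and some programs use N instead of N − 1. Treat this as a back-of-the-envelope check, not a reproduction of the authors' output.

```python
import math


def rmsea(chi2, df, n):
    """Point estimate of RMSEA: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    if df <= 0 or n <= 1:
        raise ValueError("df must be positive and n greater than 1")
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Values reported for the four-factor GAToRS model (N = 477).
value = rmsea(chi2=429.98, df=164, n=477)
print(round(value, 3))  # 0.058
```

A χ² smaller than its degrees of freedom is truncated to an RMSEA of 0, which is the usual convention.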

The subscales were intercorrelated but not identical. The positive and negative personal subscales had a moderate negative correlation, whereas the societal subscales were more distinct from each other, with only a small correlation between them. The positive societal and personal subscales had a moderate positive correlation, indicating that people who view robots favorably at the personal level tend to do so at the societal level too. The same was true for the negative subscales. See Table 8 for details and Appendix C for scatterplots of the distributions.

Next, we conducted a set of multivariate regression analyses in which the GAToRS subscales predicted the criterion items. Each subscale score was computed by averaging its items. The criterion items were “Generally speaking, I have a positive view of robots” (DV1), “I have personal experience of using robots” (DV2), “I am interested in scientific discoveries and technological developments” (DV3) and “Robotics is a familiar topic to me” (DV4). See Table 9 for details.

The criterion item regarding a general positive attitude towards robots (DV1) was positively predicted by the positive attitude subscales (P+ and S+) and negatively predicted by the personal negative attitude subscale (P−; see Table 9 for B-values). The societal negative attitude subscale (S−) did not predict DV1. The criterion item regarding personal experience (DV2) was positively predicted only by P+. Thus, societal-level attitudes are decoupled from personal experience. Personal experience with robots may lead to positive personal-level attitudes towards robots, or people who already hold such attitudes may be more likely to, e.g., buy or build robots; the causal direction is beyond the scope of this analysis. The criterion item regarding general interest in science and technology (DV3) was predicted positively by P+, negatively by P− and positively by S+. However, it was also predicted positively by S−: plausibly, people more interested in science and technology are also more aware of the societal risks related to robots. Finally, the criterion item regarding familiarity (DV4) was predicted positively by P+ and negatively by P−. In sum, the criterion items had theoretically sensible associations with the subscales. The null associations between societal attitudes and experience or familiarity also provide evidence of discriminant validity: personal experience with robots and familiarity with robotics may affect personal-level attitudes (or vice versa), but seem unrelated to societal-level attitudes.
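The regression step can be sketched as one ordinary least-squares model per criterion item, with the four subscale means as predictors. This illustration rests on several assumptions. The text does not specify the software or model options. The solver is a plain normal-equations implementation, chosen only to keep the example dependency-free. The data and coefficients are simulated and do not reflect the study's results.

```python
import random


def solve(a, b):
    """Solve the square linear system a x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ols(predictors, outcome):
    """Unstandardized OLS coefficients [intercept, B1, ..., Bk] via the normal equations."""
    rows = [[1.0] + list(p) for p in predictors]  # prepend an intercept column
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, outcome)) for i in range(k)]
    return solve(xtx, xty)


# Simulated data only: four subscale scores per participant and one criterion
# item generated from hypothetical coefficients plus a little noise.
rng = random.Random(42)
true_b = [1.0, 0.5, -0.3, 0.4, 0.0]  # intercept, P+, P-, S+, S-
scores = [[rng.uniform(1, 7) for _ in range(4)] for _ in range(500)]
dv = [true_b[0] + sum(b * x for b, x in zip(true_b[1:], s)) + rng.gauss(0, 0.1) for s in scores]

b_hat = ols(scores, dv)
for name, b in zip(["intercept", "P+", "P-", "S+", "S-"], b_hat):
    print(f"{name}: B = {b:.3f}")
```

In practice a statistics package would also report standard errors and p-values, which are needed to decide whether a subscale "predicts" a criterion item. This sketch shows only the point estimates.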

All NARS subscales (Negative Attitudes toward Situations of Interaction with Robots, Negative Attitudes toward Social Influence of Robots and Negative Attitudes toward Emotions in Interaction with Robots) correlated with the GAToRS subscales in the expected directions, but at varying strengths, indicating that the two scales measure related but different constructs. GAToRS P+ and S+ were negatively correlated with all NARS subscales, and P− and S− were positively correlated with all NARS subscales, but the absolute correlations ranged from a relatively modest 0.2 to a very strong 0.8. P+ was most strongly associated with NARS Emotions, P− with NARS Situations, and S− with NARS Social Influence. See Table 10 for details and Appendix C for scatterplots.
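These cross-scale results rest on a Pearson correlation for every pair of GAToRS and NARS subscale scores. For each GAToRS subscale, the strongest NARS counterpart is the one with the largest absolute correlation. The sketch below is illustrative only: the data are five made-up participants, and the function names are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def cross_correlations(scale_a, scale_b):
    """Pearson r for every (subscale of A, subscale of B) pair.

    Each argument maps a subscale name to per-participant scores, with
    participants in the same order in every list.
    """
    return {(a, b): pearson(xs, ys) for a, xs in scale_a.items() for b, ys in scale_b.items()}


def strongest_partner(table):
    """For each subscale of A, the subscale of B with the largest absolute r."""
    best = {}
    for (a, b), r in table.items():
        if a not in best or abs(r) > abs(best[a][1]):
            best[a] = (b, r)
    return best


# Toy scores for five hypothetical participants (not the study data).
gators = {"P+": [1, 2, 3, 4, 5], "P-": [2, 1, 4, 3, 5]}
nars = {"Emotions": [5, 4, 3, 2, 1], "Situations": [1, 3, 2, 5, 4]}

table = cross_correlations(gators, nars)
for pair, r in table.items():
    print(pair, round(r, 2))
print(strongest_partner(table))
```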

5.3 Discussion of Study 3

The final version of GAToRS yielded a coherent factor structure with good fit indices. The subscales were intercorrelated but relatively independent, and their item content approximately matched the proposed structure. Thus, we have untangled the complex of “attitudes towards robots” into four facets, each measured by its own subscale. The GAToRS subscales correlate in a theoretically sensible way with the NARS subscales, providing evidence of convergent validity. The lack of associations between the societal GAToRS subscales and familiarity with or experience of robots provides evidence of discriminant validity: societal attitudes towards robotics are unrelated to prior personal affiliation with robots.

6 General Discussion

Across three iterations, four studies (including a pilot study) and more than 2000 participants, we developed and validated a scale that differentiates between a) comfort and enjoyment around robots, b) unease and anxiety around robots, c) reasonable hopes about robots in general and d) reasonable worries about robots in general. We named these facets Personal level positive (P+), Personal level negative (P−), Societal level positive (S+) and Societal level negative (S−) attitudes. In addition to its psychometric qualities, the scale is compact (only 20 items) and appears to reproduce the same factor structure in EFA (Study 2) and CFA (Study 3) across different samples. We hope that it will be a useful instrument for social scientists who wish to study human-technology relations.

The GAToR scale is partly similar to existing scales, since it incorporates some of the previous work by other teams [9, 12, 14]. Of the 20 items in GAToRS, only seven were ultimately our own additions; the rest were adapted from prior work by others with a new subscale structure in mind (see Appendix C for details on each item). Our work expands on this previous work by including aspects that have previously received less attention but are implied by more general attitude theories and research [3, 16, 17, 18]. First, GAToRS includes measures of the previously omitted positive societal aspects of robots, and is explicitly based on the theoretical stance that positive and negative attitudes are not necessarily polar opposites but at least partially independent. As a case in point, the intercorrelations between the GAToRS subscales (Table 8) show that a negative attitude on the personal level is not simply a lack of positive attitude or vice versa, and the same applies on the societal level. Second, GAToRS differentiates between types of negative and positive attitudes in a way that has rarely been used in the study of attitudes towards robots, but that has some precedent in technology studies [17]. Again, the intercorrelations of the subscales (Table 8) show that personal-level likes and dislikes do not directly translate into matching societal-level attitudes. The multivariate regression analysis in which the GAToRS subscales predicted the criterion items (Table 9) indicates that the subscales measure constructs that are relatively independent of experience with robots. NARS, against which our scale was validated, converges with our instrument: all three of its subscales correlate meaningfully with the GAToRS subscales (see Table 10). Thus, we have evidence of both discriminant (associations with criterion items) and convergent (associations with NARS subscales) validity. However, further research is needed, especially on discriminant validity through comparisons with other similar scales.

GAToRS makes it possible to compare attitudes beyond negative ones, which have been the main target of most previous attitude measurement instruments in technology studies [4, 11]. GAToRS also allows the study of attitudes towards robots to be extended to larger-scale, population-based research. For example, attitudes towards labour robots [27] and self-driving cars [28] have been studied with instruments whose scope is limited to the specific situational contexts for which they were designed.

GAToRS is part of a larger, recent trend in which researchers are trying to understand and explain moral evaluations in human–robot interaction (see, e.g., [29, 30]) and human attitudes towards biomedical, silicon-based enhancements [31]. For instance, the work of Malle and Ullman [29] on trust in various aspects of human–robot interaction clearly shows that higher trust in robots leads to a better interaction experience, but what drives the initial level of trust remains open: do some people instinctively trust or distrust robots? Is trust in robots driven by liking robots, and distrust by disliking them? More obscure topics, such as the moral psychology of mind upload [32, 33], have also been investigated. Even in these special cases, we believe it would be important to examine how attitudes towards robots might act as mediators, potentially allowing more basic explanations of existing findings.

In terms of limitations, we must first note that our sample is constrained to WEIRD (Western, educated, industrialized, rich and democratic) countries, primarily in the English-speaking world. More research is needed to confirm whether the scale works in other cultures. Because we collected our data online, the scale should also be tested in populations that are less familiar with digital technologies. As previously mentioned, more research on the construct validity of the scale is needed, especially regarding discriminant validity, though we are uncertain which measurement instruments would be the best points of comparison to this end.

Correlations between GAToRS and other personality assessment scales are an important avenue for gaining further insight into the variety of factors influencing attitudes towards robots. Ultimately, the true test of the scale will be whether GAToRS can predict real-life outcomes in interactions with robots and artificial intelligences. Furthermore, attitudes towards robots are clearly both historically and culturally contingent. It would therefore be both interesting and relevant for the social sciences to study how attitudes towards robots change over time and across socioeconomic contexts, allowing social scientists to test several theories on how changes in labour markets and modes of production are reflected in attitudes towards technologies [34, 35].

6.1 Conclusions

To summarize, in four studies (N > 2000; including pilot), we document the process of developing a new instrument to measure attitudes towards robots. The result is a multidimensional, validated instrument for studying attitudes and their formation. We hope this General Attitudes Towards Robots Scale will prove to be reliable for social scientists and other researchers trying to tease apart what kinds of personality traits, socioeconomic factors, or experiences influence attitudes towards robots and artificial intelligences in general, and how those attitudes are reflected in real life interactions with robots.

Availability of Data and Materials

Upon request from corresponding author.

Code availability

Upon request from corresponding author.

Notes

Tarkkonen’s rho provides the same kind of information as Cronbach’s alpha. However, Cronbach’s alpha has known psychometric problems, such as giving inflated estimates of internal consistency as the number of items increases, so we also report Tarkkonen’s rho, which is corrected for this problem.
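Alpha depends on the number of items k as well as on how strongly the items correlate, which is the problem the note above describes. The sketch below computes raw alpha from item scores. Separately, it computes standardized alpha from an assumed average inter-item correlation. With that correlation held fixed at an arbitrary 0.3, alpha still increases as items are added. Tarkkonen's rho itself is not implemented here.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha from raw scores.

    `items` is a list of item columns, each a list of per-participant scores:
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score)).
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]
    return k / (k - 1) * (1 - sum(variance(col) for col in items) / variance(totals))


def standardized_alpha(k, mean_r):
    """Standardized alpha for k items with average inter-item correlation mean_r."""
    return k * mean_r / (1 + (k - 1) * mean_r)


# Holding the average inter-item correlation fixed, alpha still rises with k.
for k in (5, 10, 20):
    print(k, round(standardized_alpha(k, 0.3), 3))  # 0.682, 0.811, 0.896
```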

In this case, the omegas substitute for Tarkkonen’s rhos. Tarkkonen’s rhos are calculated from maximum likelihood factor analyses, whereas our modelling here is based on confirmatory factor analysis.
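For reference, the textbook model-based omega for a single factor with uncorrelated residuals is the squared sum of loadings divided by that same quantity plus the summed residual variances. This is a minimal sketch of that formula. The text does not state which omega variant was computed from the lavaan model, and the loadings below are hypothetical.

```python
def omega(loadings, residual_variances=None):
    """McDonald's omega for one factor with uncorrelated residuals.

    omega = (sum of loadings)^2 / ((sum of loadings)^2 + sum of residual variances).
    If residual variances are not given, the loadings are assumed to be
    standardized and each residual variance is taken as 1 - loading^2.
    """
    if residual_variances is None:
        residual_variances = [1 - lam ** 2 for lam in loadings]
    common = sum(loadings) ** 2
    return common / (common + sum(residual_variances))


# Hypothetical standardized loadings for a five-item subscale (not the study's estimates).
print(round(omega([0.7, 0.7, 0.7, 0.7, 0.7]), 3))
```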

References

Eagly AH, Chaiken S (1993) The psychology of attitudes. Harcourt Brace, Fort Worth

Ajzen I, Fishbein M (1980) Understanding and predicting social behavior. Prentice Hall, Englewood Cliffs

Ajzen I (2001) Nature and operation of attitudes. Annu Rev Psychol 52:27–58

Naneva S, Sarda Gou M, Webb T, Prescott T (2020) A systematic review of attitudes, anxiety, acceptance, and trust towards social robots. Int J Soc Robot 12:1179–1201

Heerink M, Kröse B, Evers V, Wielinga B (2010) Assessing acceptance of assistive social agent technology by older adults: the Almere model. Int J Soc Robot 2(4):361–375

Bartneck C, Croft E, Kulic D, Zoghbi S (2009) Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int J Soc Robot 1(1):71–81

Venkatesh V, Morris M, Davis G, Davis F (2003) User acceptance of information technology: toward a unified view. MIS Q 27:425–478

Nomura T, Suzuki T, Kanda T, Kato K (2006) Measurement of anxiety toward robots. In: ROMAN 2006-the 15th IEEE international symposium robot human interaction communication. IEEE, pp 372–377

Nomura T, Suzuki T, Kanda T, Kato K (2006) Measurement of negative attitudes toward robots. Interact Stud Soc Behav Commun Biol Artif Syst 7(3):437–454

Nomura T, Kanda T, Suzuki T (2006) Experimental investigation into influence of negative attitudes toward robots on human–robot interaction. AI & Soc 20(2):138–150

Krägeloh CU, Bharatharaj J, Kutty S, Nirmala P, Huang L (2019) Questionnaires to measure acceptability of social robots: a critical review. Robotics 8:88

Syrdal DS, Nomura T, Dautenhahn K (2013) The Frankenstein Syndrome Questionnaire—results from a quantitative cross-cultural survey. In: International conference on social robotics

Ninomiya T, Fujita A, Suzuki D, Umemuro H (2015) Development of the multi-dimensional robot attitude scale: constructs of people’s attitudes towards domestic robots. In: International conference on social robotics

Warta S (2015) I don’t always have positive attitudes, but when I do it is usually about a robot: development of the robot perception scale. In: The twenty-eighth international FLAIRS conference

EC/Wave (2012) Special eurobarometer 382 (2012). Public attitudes towards robots. EC/Wave

Fischhoff B, Slovic P, Lichtenstein S, Read S, Combs B (1978) How safe is safe enough? A psychometric study of attitudes towards technological risks and benefits. Policy Sci 9:127–152

Edison S, Geissler G (2003) Measuring attitudes towards general technology: Antecedents, hypotheses and scale development. J Target Meas Anal Mark 12:137–156

Bandura A (1986) Social foundations of thought and action: a social cognitive theory. Prentice Hall, Hoboken

Stafford R, Broadbent E, Jayawardena C, Unger U, Kuo I, Igic A, Wong R, Kerse N, Watson C, MacDonald B (2010) Improved robot attitudes and emotions at a retirement home after meeting a robot. In: 19th International symposium in robot and human interactive communication

IBM Corp (2013) IBM SPSS Statistics for Windows, Version 22.0. IBM Corp, Armonk

Revelle W (2020) psych: Procedures for personality and psychological research. Northwestern University, Evanston

Rosseel Y (2012) lavaan: an R package for structural equation modeling. J Stat Softw 48(2):1–36

JASP Team (2021) JASP (Version 0.7–0.11) [Computer software]

Laakasuo M (in preparation) Social psychology questionnaire library for Python Pygame

Byrne BM (2010) Structural equation modeling with AMOS: basic concepts, applications, and programming. Routledge, London

Kline RB (2015) Principles and practice of structural equation modeling. Guilford Publications, New York

Turja T, Rantanen T, Oksanen A (2019) Robot use self-efficacy in healthcare work (RUSH): development and validation of a new measure. AI & Soc 34:137–143

Charness N, Yoon JS, Souders D, Stothart C, Yehnert C (2018) Predictors of attitudes toward autonomous vehicles: the roles of age, gender, prior knowledge, and personality. Front Psychol 9:2589

Malle BF, Ullman D (2021) A multi-dimensional conception and measure of human-robot trust. In: Nam CS, Lyons JB (eds) Trust in human–robot interaction: research and applications. Elsevier, San Diego, pp 3–25

Malle BF, Thapa Magar S, Scheutz M (2019) AI in the sky: How people morally evaluate human and machine decisions in a lethal strike dilemma. In: Ferreira A, Silva Sequeira J, Virk GS, Kadar EE, Tokhi O (eds) Robots and well-being. Springer, Cham

Castelo N, Schmitt B, Sarvary M (2019) Human or robot? Consumer responses to radical cognitive enhancement products. J Assoc Consum Res 4(3):217–230

Laakasuo M, Drosinou M, Koverola M, Kunnari A, Halonen J, Lehtonen N, Palomäki J (2018) What makes people approve or condemn mind upload technology? Untangling the effects of sexual disgust, purity and science fiction familiarity. Palgrave Commun 4:84

Laakasuo M, Repo M, Berg A, Drosinou M, Kunnari A, Koverola M, Saikkonen T, Visala A, Sundvall J (2021) The dark path to eternal life: machiavellianism predicts approval of mind upload technology. Personal Individ Differ 177:110731

Frank MR, Autor D, Bessen JE, Brynjolfsson E, Cebrian M, Deming DJ, Feldman M, Groh M, Lobo J, Moro E, Wang D, Youn H, Rahwan I (2019) Toward understanding the impact of artificial intelligence on labor. Proc Natl Acad Sci 116(14):6531–6539

Czarniawska B, Joerges B (2020) Robotization of Work? Answers from popular culture, media and social sciences. Edward Elgar Publishing, Cheltenham

Acknowledgements

We wish to thank Marianna Drosinou for the graphics and Tuire Korvuo and Teemu Saikkonen for their help in preparing the manuscript.

Funding

Open Access funding provided by University of Helsinki including Helsinki University Central Hospital. This research was funded by the Jane and Aatos Erkko Foundation and the Academy of Finland. The funders had no role in conducting the studies, analysing the data, preparing the manuscript or deciding to publish.

Author information

Contributions

The authors made the following contributions. Mika Koverola: Conceptualization, Data Collection, Data-Analysis, Writing – Original Draft Preparation, Writing – Review & Editing; Anton Kunnari: Data Collection, Data-Analysis, Writing – Review & Editing; Jukka Sundvall: Data Collection, Data-Analysis, Writing – Review & Editing; Michael Laakasuo: Conceptualization, Data Collection, Writing – Review & Editing, Funding Acquisition, Project Management.

Ethics declarations

Conflict of interests

The authors declare no conflicts of interests.

Consent to participate

All participants gave their informed consent.

Ethics approval

This study was exempted from ethical review by the Ethical Review Board in the Humanities and Social and Behavioral Sciences at the University of Helsinki. We certify that the study was performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki and its later amendments.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A. General Attitudes towards Robots Scale (GAToRS)
B. Negative Attitudes towards Robots Scale (NARS)
C. Item extractions
D. Item removals
E. Correlations of the GAToRS and NARS subscales in the Validation study

Appendix A: General Attitudes Towards Robots Scale (GAToRS)

Please read the following statements and answer based on how much you agree with each statement. All items are anchored from 1 to 7 (“Completely disagree”–“Completely agree”).

2.1 Personal Level Positive Attitude (P+)

1. I can trust persons and organizations related to development of robots
2. Persons and organizations related to development of robots will consider the needs, thoughts and feelings of their users
3. I can trust a robot
4. I would feel relaxed talking with a robot
5. If robots had emotions, I would be able to befriend them

2.2 Personal Level Negative Attitude (P−)

1. I would feel uneasy if I was given a job where I had to use robots
2. I fear that a robot would not understand my commands
3. Robots scare me
4. I would feel very nervous just being around a robot
5. I don’t want a robot to touch me

2.3 Societal Level Positive Attitude (S+)

1. Robots are necessary because they can do jobs that are too hard or too dangerous for people
2. Robots can make life easier
3. Assigning routine tasks to robots lets people do more meaningful tasks
4. Dangerous tasks should primarily be given to robots
5. Robots are a good thing for society, because they help people

2.4 Societal Level Negative Attitude (S−)

1. Robots may make us even lazier
2. Widespread use of robots is going to take away jobs from people
3. I am afraid that robots will encourage less interaction between humans
4. Robotics is one of the areas of technology that needs to be closely monitored
5. Unregulated use of robotics can lead to societal upheavals

Appendix B

Negative Attitudes towards Robots Scale (NARS) Items with Subscales.

| Item No. | Questionnaire item | Sub-scale |
|---|---|---|
| 1 | I would feel uneasy if robots really had emotions. | S2 |
| 2 | Something bad might happen if robots developed into living beings. | S2 |
| 3 | I would feel relaxed talking with robots. | *S3 |
| 4 | I would feel uneasy if I was given a job where I had to use robots. | S1 |
| 5 | If robots had emotions I would be able to make friends with them. | *S3 |
| 6 | I feel comforted being with robots that have emotions. | *S3 |
| 7 | The word “robot” means nothing to me. | S1 |
| 8 | I would feel nervous operating a robot in front of other people. | S1 |
| 9 | I would hate the idea that robots or artificial intelligences were making judgements about things. | S1 |
| 10 | I would feel very nervous just standing in front of a robot. | S1 |
| 11 | I feel that if I depend on robots too much, something bad might happen. | S2 |
| 12 | I would feel paranoid talking with a robot. | S1 |
| 13 | I am concerned that robots would be a bad influence on children. | S2 |
| 14 | I feel that in the future society will be dominated by robots. | S2 |

*reverse coded

Sub-scale 1: Negative Attitudes toward Situations and Interactions with Robots.

Sub-scale 2: Negative Attitudes toward Social Influence of Robots.

Sub-scale 3: Negative Attitudes toward Emotions in Interaction with Robots.
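Below is a scoring sketch for the NARS, using the subscale membership and reverse-coded items listed above. It makes two assumptions that this appendix does not state. First, reverse-coding on the 1 to 7 response format used in this study is done as 8 − x. Second, subscale scores are item means, mirroring how GAToRS is scored. All names are illustrative.

```python
from statistics import mean

# Subscale membership of the 14 NARS items, as listed in Appendix B.
NARS_SUBSCALES = {
    "S1": (4, 7, 8, 9, 10, 12),  # situations of interaction with robots
    "S2": (1, 2, 11, 13, 14),    # social influence of robots
    "S3": (3, 5, 6),             # emotions in interaction with robots
}
REVERSE_CODED = {3, 5, 6}  # items marked * in Appendix B
SCALE_MIN, SCALE_MAX = 1, 7  # response format used in this study (assumed for recoding)


def recode(item, value):
    """Reverse-code starred items (1 <-> 7, 2 <-> 6, ...); leave others unchanged."""
    if not SCALE_MIN <= value <= SCALE_MAX:
        raise ValueError(f"item {item}: rating {value} outside {SCALE_MIN}-{SCALE_MAX}")
    return SCALE_MIN + SCALE_MAX - value if item in REVERSE_CODED else value


def score_nars(responses):
    """Mean of the (recoded) items in each subscale; `responses` maps item number to rating."""
    return {
        name: mean(recode(i, responses[i]) for i in items)
        for name, items in NARS_SUBSCALES.items()
    }


# A respondent who answers 7 to every item scores high on S1 and S2 but low on S3,
# because all S3 items are reverse-coded.
print(score_nars({i: 7 for i in range(1, 15)}))
```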

Appendix C: Item Extractions

| Pilot | Extracted from |
|---|---|
| 1. Widespread use of robots is going to take away jobs from people | Frankenstein rw |
| 2. I am afraid that robots will encourage less interaction between humans | Frankenstein rw |
| 3. Robots are a natural product of our civilization | Frankenstein rw |
| 4. Persons and organizations related to development of robots will consider the needs, thoughts and feelings of their users | Frankenstein rw |
| 5. Robots can make my life easier | Frankenstein rw |
| 6. Robots scare me | Frankenstein rw |
| 7. Robotics is one of the areas of technology that people should not study | Frankenstein rw |
| 8. Robots are a crime against nature | Frankenstein rw |
| 9. Professionally supervised robots are safe enough to use in assistive tasks in care for the elderly | RPS rw |
| 10. Robots can be trusted | RPS |
| 11. I would feel uneasy if I was given a job where I had to use robots | NARS |
| 12. In the future, robots will play a key role in society | NARS rw |
| 13. Robots should always obey humans | RPS rw |
| 14. If I became too dependent on robots, something bad might happen | NARS rw, Frankenstein rw |
| 15. Robotics is one of the areas of technology that needs to be closely monitored | |
| 16. Robots are a good thing for society, because they help people | |
| 17. Widespread use of robotics can boost job opportunities in the developed nations | |
| 18. Robots are necessary because they can do jobs that are too hard or too dangerous for people | |
| 19. I feel more independent when I get help from a robot rather than a human being | |
| 20. I would feel relaxed talking with robots | NARS |
| 21. I would feel very nervous just being around a robot | NARS rw |
| 22. I fear that a robot would not understand my commands | |
| 23. Robots are hardworking | RPS |

| Study 1 | Extracted from |
|---|---|
| 1. A robot is responsible for its actions | |
| 2. A robot's actions are always the responsibility of the owner | |
| 3. Assigning routine tasks to robots lets people do more meaningful tasks | |
| 4. Dangerous tasks should primarily be given to robots | Frankenstein rw |
| 5. I am afraid that robots will encourage less interaction between humans | Frankenstein rw |
| 6. I can trust persons and organizations related to development of robots | Frankenstein rw |
| 7. I fear that a robot would not understand my commands | |
| 8. I feel more independent when I get help from a robot rather than a human being | |
| 9. I want to know if I'm dealing with a human or a robot, even if only by email or text message | |
| 10. I would feel relaxed talking with robots | NARS |
| 11. I would feel uneasy if I was given a job where I had to use robots | NARS |
| 12. I would feel very nervous just being around a robot | NARS rw |
| 13. I would prefer to interact with a robot that looks like a machine | |
| 14. I would prefer to interact with a robot with humanoid appearance (head, arms, facial expressions etc.) | |
| 15. If I became too dependent on robots, something bad might happen | NARS rw, Frankenstein rw |
| 16. If robots cause accidents or trouble, persons and organizations related to development of them should give sufficient compensation to the victims | Frankenstein rw |
| 17. If robots cause accidents or trouble, persons or organizations owning the robot should give sufficient compensation to the victims | |
| 18. If robots had emotions, I would be able to befriend them | NARS |
| 19. In the future, robots will play a key role in society | NARS rw |
| 20. Military robots could reduce the human suffering caused by armed conflicts | |
| 21. Persons and organizations related to development of robots will consider the needs, thoughts and feelings of their users | Frankenstein rw |
| 22. Professionally supervised robots are safe enough to use in assistive tasks in care for the elderly | RPS rw |
| 23. Robotics is one of the areas of technology that needs to be closely monitored | |
| 24. Robotics is one of the areas of technology that people should not study | Frankenstein rw |
| 25. Robot's actions are always the responsibility of the programmer | |
| 26. Robots are a good thing for society, because they help people | |
| 27. Robots are a natural product of our civilization | Frankenstein rw |
| 28. Robots are hardworking | RPS |
| 29. Robots are necessary because they can do jobs that are too hard or too dangerous for people | |
| 30. Robots are nothing more than fancy pets | RPS |
| 31. Robots are sufficiently safe to assist in childcare under professional supervision | |
| 32. Robots can be trusted | RPS |
| 33. Robots can make my life easier | Frankenstein rw |
| 34. Robots may make us even lazier | Frankenstein rw |
| 35. Robots scare me | Frankenstein rw |
| 36. Robots should always obey humans | |
| 37. Robots should never make decisions concerning people | NARS rw, Frankenstein rw |
| 38. The idea of a robot with emotions is unpleasant to me | NARS rw |
| 39. The idea of an armed robot is frightening to me | |
| 41. The idea of self-thinking robots makes me feel uncomfortable | Frankenstein rw |
| 42. There is nothing especially wonderful or weird about robots | |
| 43. Widespread use of robotics can boost job opportunities in the developed nations | |
| 44. Widespread use of robots is going to take away jobs from people | Frankenstein rw |

| Study 2 | Extracted from |
|---|---|
| 1. I can trust persons and organizations related to development of robots | Frankenstein rw |
| 2. Persons and organizations related to development of robots will consider the needs, thoughts and feelings of their users | Frankenstein rw |
| 3. I can trust a robot | RPS rw |
| 4. I would feel relaxed talking with a robot | NARS |
| 5. If robots had emotions, I would be able to befriend them | NARS |
| 6. Should the occasion arise, I’d be happy to test a sex robot | |
| 7. I’d be happy to let a robot take care of household chores | |
| 8. I’d feel less embarrassed asking a stupid question from a robot than from a human | |
| 9. I’d rather ask a machine for help than a human | |
| 10. If, because of an illness or an accident, I would need help in my daily chores, I’d feel more independent if the help came from a robot rather than a human | |
| 11. I would feel uneasy if I was given a job where I had to use robots | NARS |
| 12. I fear that a robot would not understand my commands | |
| 13. The idea of self-thinking robots makes me feel uncomfortable | Frankenstein rw |
| 14. Robots scare me | Frankenstein rw |
| 15. I would feel very nervous just being around a robot | NARS rw |
| 16. If I became too dependent on robots, something bad might happen | NARS rw, Frankenstein rw |
| 17. I don’t want a robot to touch me | |
| 18. I think the media is painting a too rosy picture of future robots | |
| 19. Robots are necessary because they can do jobs that are too hard or too dangerous for people | |
| 20. Robots can make life easier | Frankenstein rw |
| 21. Assigning routine tasks to robots lets people do more meaningful tasks | |
| 22. Dangerous tasks should primarily be given to robots | Frankenstein rw |
| 23. Robots are a good thing for society, because they help people | |
| 24. Robots are a natural product of our civilization | Frankenstein rw |
| 25. I can’t wait for robots to become more common | |
| 26. Media exaggerates worries about robotics | |
| 27. Robots may make us even lazier | Frankenstein rw |
| 28. Widespread use of robots is going to take away jobs from people | Frankenstein rw |
| 29. I am afraid that robots will encourage less interaction between humans | Frankenstein rw |
| 30. Robotics is one of the areas of technology that needs to be closely monitored | |
| 31. I’m worried about robots entering our daily life | |
| 32. In my opinion, robots should not be used in the care of children or the elderly | |
| 33. Man is the crown of creation | |
| 34. Robots can take the meaning out of human life | |
| 35. Unregulated use of robotics can lead to societal upheavals | |

| GAToRS Final Version | Extracted from |
|---|---|
| 1. I can trust persons and organizations related to development of robots | Frankenstein rw |
| 2. Persons and organizations related to development of robots will consider the needs, thoughts and feelings of their users | Frankenstein rw |
| 3. I can trust a robot | RPS rw |
| 4. I would feel relaxed talking with a robot | NARS |
| 5. If robots had emotions, I would be able to befriend them | NARS |
| 6. I would feel uneasy if I was given a job where I had to use robots | NARS |
| 7. I fear that a robot would not understand my commands | |
| 8. Robots scare me | Frankenstein rw |
| 9. I would feel very nervous just being around a robot | NARS rw |
| 10. I don’t want a robot to touch me | |
| 11. Robots are necessary because they can do jobs that are too hard or too dangerous for people | |
| 12. Robots can make life easier | Frankenstein rw |
| 13. Assigning routine tasks to robots lets people do more meaningful tasks | |
| 14. Dangerous tasks should primarily be given to robots | Frankenstein rw |
| 15. Robots are a good thing for society, because they help people | |
| 16. Robots may make us even lazier | Frankenstein rw |
| 17. Widespread use of robots is going to take away jobs from people | Frankenstein rw |
| 18. I am afraid that robots will encourage less interaction between humans | Frankenstein rw |
| 19. Robotics is one of the areas of technology that needs to be closely monitored | |
| 20. Unregulated use of robotics can lead to societal upheavals | |

Appendix D: Item removals

| Study 1 | Reason for removal |
|---|---|
| 1. A robot is responsible for its actions | Cross |
| 2. A robot's actions are always the responsibility of the owner | Low |
| 8. I feel more independent when I get help from a robot rather than a human being | Low |
| 9. I want to know if I'm dealing with a human or a robot, even if only by email or text message | Low |
| 13. I would prefer to interact with a robot that looks like a machine | No |
| 14. I would prefer to interact with a robot with humanoid appearance (head, arms, facial expressions etc.) | Low |
| 16. If robots cause accidents or trouble, persons and organizations related to development of them should give sufficient compensation to the victims | Cross |
| 17. If robots cause accidents or trouble, persons or organizations owning the robot should give sufficient compensation to the victims | Cross |
| 19. In the future, robots will play a key role in society | Low |
| 20. Military robots could reduce the human suffering caused by armed conflicts | No |
| 22. Professionally supervised robots are safe enough to use in assistive tasks in care for the elderly | Low |
| 24. Robotics is one of the areas of technology that people should not study | Low |
| 25. Robot's actions are always the responsibility of the programmer | Low |
| 28. Robots are hardworking | Low |
| 30. Robots are nothing more than fancy pets | Low |
| 31. Robots are sufficiently safe to assist in childcare under professional supervision | Low |
| 36. Robots should always obey humans | Low |
| 37. Robots should never make decisions concerning people | Low |
| 38. The idea of a robot with emotions is unpleasant to me | Cross |
| 39. The idea of an armed robot is frightening to me | Low |
| 42. There is nothing especially wonderful or weird about robots | No |

| Study 2 item | Reason for removal |
|---|---|
| 6. Should the occasion arise, I’d be happy to test a sex robot | Low |
| 7. I’d be happy to let a robot take care of household chores | Low |
| 8. I’d feel less embarrassed asking a stupid question from a robot than from a human | Low |
| 9. I’d rather ask a machine for help than a human | Low |
| 10. If, because of an illness or an accident, I would need help in my daily chores, I’d feel more independent if the help came from a robot rather than a human | Low |
| 13. The idea of self-thinking robots makes me feel uncomfortable | Low |
| 16. If I became too dependent on robots, something bad might happen | Cross |
| 18. I think the media is painting a too rosy picture of future robots | Cross |
| 24. Robots are a natural product of our civilization | Low |
| 25. I can’t wait for robots to become more common | Cross |
| 26. Media exaggerates worries about robotics | Low |
| 28. Widespread use of robots is going to take away jobs from people | Low |
| 31. I’m worried about robots entering our daily life | Cross |
| 32. In my opinion, robots should not be used in the care of children or the elderly | Low |
| 33. Man is the crown of creation | Low |
| 34. Robots can take the meaning out of human life | Low |

Appendix E

Correlations between the GAToRS and NARS subscales in the validation study (Study 3).

Negative Attitudes towards Robots Scale:

NARS sit: Negative Attitudes toward Situations and Interactions with Robots.

NARS soc: Negative Attitudes toward Social Influence of Robots.

NARS emo: Negative Attitudes toward Emotions in Interaction with Robots.

General Attitudes Towards Robots Scale:

P+: Personal Level Positive Attitude.

P−: Personal Level Negative Attitude.

S+: Societal Level Positive Attitude.

S−: Societal Level Negative Attitude.
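The correlations summarized in this appendix relate respondents' subscale scores to one another. The source does not state which coefficient was used, so the following sketch assumes Pearson product-moment correlations. As an illustration only, it computes a pairwise correlation matrix over any set of named subscale score columns (for example P+ and NARS emo). The function names are hypothetical, and the example scores are invented rather than taken from the study.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation of two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("samples must have equal length of at least 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    # Sum of cross-products and sums of squared deviations from the means.
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / sqrt(sxx * syy)


def correlation_matrix(scores: dict[str, list[float]]) -> dict[tuple[str, str], float]:
    """Pairwise Pearson r for every ordered pair of named score columns."""
    names = list(scores)
    return {(a, b): pearson_r(scores[a], scores[b]) for a in names for b in names}


# Hypothetical subscale scores for four respondents (illustrative values only).
example = {
    "P+": [4.2, 3.0, 5.6, 2.4],
    "P-": [2.0, 4.4, 1.8, 5.0],
    "NARS emo": [2.2, 3.8, 1.6, 4.6],
}
for (a, b), r in correlation_matrix(example).items():
    if a < b:  # print each unordered pair once, skipping the diagonal
        print(f"{a} vs {b}: r = {r:.2f}")
```

In practice, a statistics package would also report significance tests and handle missing responses. This sketch only shows how the coefficient itself is computed.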

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Koverola, M., Kunnari, A., Sundvall, J. et al. General Attitudes Towards Robots Scale (GAToRS): A New Instrument for Social Surveys. Int J of Soc Robotics 14, 1559–1581 (2022). https://doi.org/10.1007/s12369-022-00880-3
