Abstract

When interacting with sophisticated digital technologies, people often fall back on the same interaction scripts they apply to communication with other humans, especially if the technology in question provides strong anthropomorphic cues (e.g., a human-like embodiment). Accordingly, research indicates that observers tend to interpret the body language of social robots in the same way as they would that of another human being. Backed by initial evidence, we assumed that a humanoid robot would be considered more dominant and competent, but also more eerie and threatening, once it strikes a so-called power pose. Moreover, we examined whether these effects might be accentuated by the robot’s body size. To this end, the current study presented 204 participants with pictures of the robot NAO in different poses (expansive vs. constrictive), while also manipulating its height (child-sized vs. adult-sized). Our results show that NAO’s posture indeed exerted strong effects on perceptions of dominance and competence. Conversely, participants’ threat and eeriness ratings remained statistically independent of the robot’s depicted body language. Further, we found that the machine’s size did not affect any of the measured interpersonal perceptions in a notable way. The study findings are discussed in light of limitations and future research directions.

1 Introduction

In the field of human nonverbal communication, the effects of assertive body poses remain a popular and hotly debated topic. Adopting certain body postures is suspected to be a key to increasing self-worth, risk tolerance, and dominance in social situations (power-posing, [1,2,3]). A controversial psychological publication of the previous decade initially suggested that these effects might be rooted in bodily feedback loops and hormonal processes [1], but this claim was quickly met with skepticism and empirical objections by other researchers [4,5,6]. Yet, while the physiological aspects of power-posing have been mostly refuted, its cognitive effects on people’s self-perceptions remain a much-discussed (and researched) topic to this day [1, 7,8,9].

Apart from the controversial question of whether people’s body language modulates the way they see themselves, however, there is much more scholarly agreement on how power poses influence perceptions by others. In fact, decades’ worth of research from the field of nonverbal communication clearly demonstrates that body postures, walking patterns, and hand gestures all impact which traits observers attribute to a person [10]. As a particularly prominent finding in this regard, numerous studies have shown that people displaying so-called expansive nonverbal behavior (e.g., a wide stance, sweeping hand movements, direct eye contact) are usually seen as much more persuasive, admirable, and confident than those standing or moving in a constricted manner [11,12,13]. Considering the high social relevance of these perceptions, it comes as no surprise that expansive body language has also been connected to several practical outcomes, such as increased success in job interviews [14], stronger romantic desirability [15], and more favorable ratings for politicians [16].

1.1 Applying Principles of Human Interaction to Technology

Due to notable advancements in the areas of computer science and engineering, contemporary digital technology can reach impressive levels of human likeness. Indeed, since many modern-day technologies appear inherently social in nature (for instance by talking back to the user in a human voice), it has been shown that people often apply the same scripts they use with other humans to their interactions with technology [17]. In consequence of this so-called computers are social actors (CASA) phenomenon, technologies such as robots, smart speakers, or even phones are not only ascribed their own personality [18, 19], but also regarded with genuine emotional attachment by their owners [20]. Of course, it should be noted that not each and every theory from the area of human–human interaction may be transferred seamlessly to people’s interactions with machines; for instance, since emotional bonds can only be developed unilaterally (from the user to the machine), relational expectations may turn out quite different [21]. Along the same lines, several recent publications have cautioned against generalizing social psychological insight to all types of human–robot interaction, instead advocating for a more nuanced perspective [22,23,24]. Regardless of these limitations, however, the CASA paradigm continues to offer most valuable reference points for researchers to make sense of people’s approach to intelligent technology [25,26,27,28].

The tendency to treat computers as social actors can be evoked by the most machine-looking, or even completely bodiless technologies, including smart home appliances or online text chatbots [29]. At the same time, research shows that by adding a human-like physical embodiment (e.g., a robotic body) to a digital system, it naturally becomes even easier for people to anthropomorphize it [30, 31]. Regarding the actual acceptance of technology, however, increased human likeness does not necessarily equal more liking as well. Instead, research embedded within the impressionistic uncanny valley framework [32, 33] has suggested that highly anthropomorphic technologies can also be perceived as eerily imperfect [34,35,36] or downright threatening [37, 38]. More specifically, it has been argued that by reaching high (yet not entirely flawless) levels of human likeness, technologies may trigger aversion on both a cognitive and affective level [39], for instance by eliciting cognitive dissonance [40], prompting mortality salience [41], or raising concerns about threatened human uniqueness [42, 43]. In turn, these processes may then manifest as an eerie sensation or the expectation of immediate danger. While earlier research mainly attributed the uncanny valley effect to visual features, recent literature shows that eeriness and threat may also be prompted by certain behaviors and mental capacities of artificial beings [38, 44, 45]. Thus, even if the face or body of a robot might not appear particularly threatening to an observer, its displayed actions and skills may still trigger a negative user response.

1.2 The Current Study

Considering the social quality that accompanies many human–machine interactions, a growing body of research has investigated whether nonverbal behavior by embodied digital technologies translates into the same interpersonal perceptions that occur among humans. Serving as the groundwork for this line of research, several studies were able to establish that the body language of social robots and virtual avatars is often recognized accurately by participants [46,47,48]. Subsequently, it was shown that by assuming specific body postures, human-like technologies may indeed appear more or less competent [49], persuasive [50], cooperative [51], authoritative [52], or dominant [53] to their human users.

Based on the reviewed literature, it becomes evident that fundamental principles of human body language might also apply to interactions with social robots. Nevertheless, some notable research gaps remain. For instance, we know of no previous research that has investigated potential interaction effects between a robot’s body language and its size on users’ evaluations and dispositional attributions. Considering that the popular NAO robot used in most scientific studies is rather small in size—and might therefore trigger associations such as “a toy” or “a child,” as well as corresponding cognitive schemas—it stands to reason that different effects might emerge for robots with larger body dimensions. In particular, we expected that perceptions of dominance or competence, which are typically associated more with adult age [54, 55], would turn out even stronger for adult-sized than for child-sized robots displaying expansive behavior.

H1a: A robot that strikes an expansive pose will appear more dominant than a robot striking a constrictive pose.

H1b: This effect will turn out stronger for an adult-sized than for a child-sized robot.

H2a: A robot that strikes an expansive pose will appear more competent than a robot striking a constrictive pose.

H2b: This effect will turn out stronger for an adult-sized than for a child-sized robot.

Although threat is an increasingly prominent construct in the field of human–robot interaction [38, 56], we are not familiar with any research that has connected robot postures to its perception. In our opinion, this presents another empirical shortcoming, not least considering that the perceived safety and danger of robots are strongly related to their mass adoption [57]. With our third hypothesis, we therefore scrutinized participants’ threat experience in the face of robots displaying different body language. Building upon the effects proposed in H1 and H2, we expected:

H3a: A robot that strikes an expansive pose will appear more threatening than a robot striking a constrictive pose.

H3b: This effect will turn out stronger for an adult-sized than for a child-sized robot.

Lastly, we strived to situate the current study in the tradition of the uncanny valley framework, which has inspired scholars in many technology-related disciplines for several decades [33, 39]. Based on the emerging idea that threat perceptions might be the underlying reason for the eerie sensation often observed in robot experiments [32, 58], we hypothesized:

H4a: A robot that strikes an expansive pose will appear eerier than a robot striking a constrictive pose.

H4b: This effect will turn out stronger for an adult-sized than for a child-sized robot.

2 Method

The current study was conducted in the form of an online experiment, using self-created sets of robot photographs in a 2 (robot size: child vs. adult) × 2 (robot pose: expansive vs. constrictive) between-subject design. Hypotheses, measures, and analysis strategies were preregistered at https://aspredicted.org/pa7ta.pdf. Furthermore, we provide all data, code, and materials of this study in an Open Science Framework repository (https://osf.io/2zx89/).

2.1 Participants

An a priori calculation of minimum sample size, assuming a test power of 80% and a medium multivariate effect, resulted in a lower threshold of at least 125 participants. Ultimately, 214 participants (120 female, 93 male, 1 other; age M = 34.47 years, SD = 17.38) were recruited via social media, university mailing lists, and personal contacts for the current study. However, by using a five-point conscientiousness item, we were able to identify seven participants who had responded rather carelessly, leading to their exclusion from the data. Furthermore, three participants were excluded due to technical difficulties during their participation. Although we had initially considered also excluding all participants who recognized the portrayed robot, which would have led to the additional exclusion of 39 individuals, our statistical analyses showed that removing these participants did not change our findings in any meaningful way (Footnote 1). As such, we opted to keep the respective datasets in our study, resulting in a final sample of 204 participants (114 female, 89 male, 1 other) with an average age of M = 34.28 years (SD = 17.13). In terms of professional background, our participants were predominantly students (44.8%), employees (35.8%), and retirees (11.3%). To participate, each person had to give informed consent. Additionally, all participants were offered the chance to enter a raffle for two €15 shopping vouchers as an incentive.

2.2 Procedure and Materials

At the start of our online study, each participant was randomly assigned to one of four experimental groups according to our two-factorial design: child-sized robot in expansive pose, child-sized robot in constrictive pose, adult-sized robot in expansive pose, adult-sized robot in constrictive pose. Subsequently, we presented all participants with two self-created robot images matching their assigned condition (Fig. 1), which had to be viewed for a minimum duration of twenty seconds each before it was possible to proceed to the prepared evaluation questionnaires.
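The random assignment to the four cells of the 2 × 2 design can be sketched as follows. This is only an illustration under stated assumptions: the source does not describe how the survey software randomized participants, so simple (unrestricted) randomization is assumed here, and the names `assign_condition` and `assign_all` are hypothetical.

```python
import random
from itertools import product

# Factor levels taken from the 2 x 2 between-subject design described above.
SIZES = ("child-sized", "adult-sized")
POSES = ("expansive", "constrictive")
CONDITIONS = list(product(SIZES, POSES))  # the four experimental groups


def assign_condition(rng):
    """Draw one of the four conditions with equal probability.

    Assumption: simple randomization; the study's actual procedure is not documented.
    """
    return rng.choice(CONDITIONS)


def assign_all(n_participants, seed=None):
    """Assign every participant independently; group sizes may differ by chance."""
    rng = random.Random(seed)
    return [assign_condition(rng) for _ in range(n_participants)]


assignments = assign_all(204, seed=1)
```

With simple randomization the four groups end up roughly, but not exactly, equal in size; a blocked scheme would be needed to force identical group sizes.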

As for the creation of our stimuli, we first took several photographs of the robot NAOv5 in a neutral setting. In order to depict expansive and constrictive body language in a conceptually valid way, we consulted psychological literature [1, 4, 10, 59], as well as a taxonomy proposed by human–computer interaction scholars [51]. By these means, the following criteria were identified for expansive poses: A wide stance (standing) or spread legs (sitting), hands placed on the hips (standing) or behind the head (sitting), head slightly lifted, and direct eye contact. In contrast to this, constrictive poses were characterized by the robot putting its arms together in front of its body, lowering its head, averting its gaze, and assuming a slightly bent posture.

To avoid the mono-stimulus bias often encountered in media-based research [60], we decided to prepare two stimulus pictures for each experimental group: (a) NAO sitting alone in front of a neutral background, and (b) NAO standing next to a group of people. Adobe Photoshop software and license-free stock photos were used to assemble the final stimuli. For the size manipulation, we either portrayed NAO in its original size of 55 cm (≈1′10″) or depicted a version that appeared approximately 160 cm tall (≈5′3″). In order to achieve this manipulation in the images with a neutral background, we added electric sockets as a well-known comparison standard so that participants could infer the intended size of the machine.

2.3 Measures

2.3.1 Dominance

To assess perceptions of dominance, we used five items provided by Straßmann and colleagues [51]. All items (e.g., “dominant”, “decisive”, “submissive”) were presented using 7-point scales (1 = not at all; 7 = completely) and averaged into a composite score after recoding the negatively valenced items. The resulting dominance index achieved good internal consistency, Cronbach’s α = 0.83.
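The scoring steps described for this and the following scales (recoding negatively valenced items, averaging into a composite, checking internal consistency) can be sketched in a few lines. The ratings below are made up for illustration and are not study data; the helper names are hypothetical, and the alpha formula is the standard one based on item variances and the variance of the sum score.

```python
from statistics import mean, variance

SCALE_MIN, SCALE_MAX = 1, 7  # 7-point response scale, as described above


def reverse_code(score):
    """Mirror a rating on the 1-7 scale (1 <-> 7, 2 <-> 6, ...)."""
    return SCALE_MIN + SCALE_MAX - score


def prepare(responses, reversed_items):
    """Recode the negatively valenced item columns for every participant."""
    return [
        [reverse_code(x) if i in reversed_items else x for i, x in enumerate(row)]
        for row in responses
    ]


def composite(row):
    """Average one participant's (already recoded) item ratings."""
    return mean(row)


def cronbach_alpha(rows):
    """alpha = k/(k-1) * (1 - sum of item variances / variance of sum scores)."""
    k = len(rows[0])
    item_vars = [variance(col) for col in zip(*rows)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings from four participants on three items; the third item
# stands in for a negatively valenced one (e.g., "submissive").
raw = [[6, 5, 2], [3, 3, 5], [7, 6, 1], [2, 3, 6]]
recoded = prepare(raw, reversed_items={2})
scores = [composite(row) for row in recoded]
alpha = cronbach_alpha(recoded)
```

Recoding has to happen before both the averaging and the reliability check; otherwise the reversed item correlates negatively with the others and alpha is deflated.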

2.3.2 Competence

In this study, we strived to assess competence as a relatively general interpersonal impression, i.e., the potential for effective action in any given domain [54]. A suitable instrument was obtained from prior power-posing research [61], which addresses perceived competence rather broadly via seven semantic differentials (e.g., “novice/experienced”, “unable/able to compete”). All items were presented with seven gradation points. We observed good internal consistency for the averaged index, Cronbach’s α = 0.84.

2.3.3 Threat

As a measure of technology-related threat, we added the ten-item scale (e.g., “This robot gives a peaceful impression.”, “I know that this robot would not harm me.”, “This robot is up to no good.”) developed by Stein and colleagues [38]. All items had to be answered on seven-point Likert scales. Reliability for the averaged scale turned out good, Cronbach’s α = 0.84.

2.3.4 Eeriness

The robot’s eeriness was assessed using eight semantic differentials (e.g., “bland/uncanny”, “boring/shocking”) provided by Ho and MacDorman [62]. Again, participants were provided with seven-point scales to express how they perceived the depicted NAO robot. For the resulting eeriness index, we observed acceptable internal consistency, Cronbach’s α = 0.79.

2.3.5 Interest in Robots (Covariate)

Previous research shows that positive attitudes towards robotic technology in general strongly affect participants’ evaluation of specific robots as well [63, 64]. As such, we decided to include participants’ interest in robots as a covariate, measuring it with three items (“I would love to interact more with robots.”, “I am really interested in robots.”, “I don’t find robots fascinating at all.”) using seven-point scales. The averaged robot interest index showed good internal consistency, Cronbach’s α = 0.82.

3 Results

Table 1 gives an overview of the zero-order correlations between the measured variables, whereas Table 2 shows the means and standard deviations obtained in our study. Additionally, Fig. 2 can be used for a graphical inspection of our obtained group differences.

Since we found our four dependent variables to be significantly intercorrelated, we decided to investigate the effects of our experimental manipulation in a multivariate analysis of covariance (MANCOVA; Footnote 2). Specifically, we entered both experimental factors, the four dependent variables (dominance, competence, threat, and eeriness), and participants’ interest in robots as a covariate into the procedure. Doing so, a significant multivariate main effect of robot pose was observed, V = 0.19, F(4,196) = 11.81, p < .001, with a large effect size of ηp² = .19. Conversely, neither the main effect of robot size (p = .458) nor the interaction effect combining both factors (p = .357) turned out significant. The effect of the covariate narrowly missed the conventional threshold of significance (p = .056).

Based on the significant multivariate effect of robot pose, we proceeded with univariate analyses regarding this factor. By these means, we encountered significant effects of the robot’s body language on perceived dominance, F(1,199) = 35.77, p < .001, ηp² = .15, and perceived competence, F(1,199) = 31.81, p < .001, ηp² = .14. Numerically, the large effect sizes are echoed in substantial rating differences between the groups: Participants who viewed expansively posing NAOs rated them much higher in both measures (dominance: M = 3.09, SD = 1.44; competence: M = 4.17, SD = 1.14) than the groups that were shown NAO in constrictive poses (dominance: M = 2.01, SD = 1.05; competence: M = 3.31, SD = 1.03). As such, we confirm hypotheses H1a and H2a, although the corresponding hypotheses H1b and H2b have to be rejected. Lastly, neither the effect of NAO’s posing on perceived eeriness (p = .372) nor that on perceived threat (p = .438) was found to be significant, resulting in the rejection of hypotheses H3 and H4.
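As a plausibility check, the reported partial eta squared values can be recovered from the F statistics and their degrees of freedom with the standard conversion (F × df_effect) / (F × df_effect + df_error). The sketch below is a reader's aid rather than the authors' analysis code, and the function name is hypothetical. For the multivariate test, the conversion is applied on the assumption that the effect has a single hypothesis degree of freedom (a two-level factor), in which case the result coincides with Pillai's V.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Convert an F statistic into partial eta squared."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error) as reported in the Results section.
reported = {
    "pose, multivariate": (11.81, 4, 196),
    "pose on dominance": (35.77, 1, 199),
    "pose on competence": (31.81, 1, 199),
}

for label, (f_value, df_effect, df_error) in reported.items():
    print(f"{label}: {partial_eta_squared(f_value, df_effect, df_error):.2f}")
# prints 0.19, 0.15, and 0.14, matching the values given in the text
```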

Concluding our data analysis with an exploratory look into potential gender differences, we carried out separate subgroup analyses using the data of our female and male participants. Doing so, we found similar effect patterns emerging for both examined genders, matching the results described above. The main effect was similar in size for both genders (women: ηp² = .25; men: ηp² = .20), and the overall pattern of significant results remained the same. As one notable distinction, we observed that the included covariate (interest in robots) only exerted a significant influence in the MANCOVA using the female participants’ data (p = .009, ηp² = .12), but not in the analysis focusing on the participating men (p = .955; ηp² = .01).

4 Discussion

In order to successfully establish social robots in the roles they are being developed for, positive user experiences are of utmost importance. Acknowledging this, a growing body of literature has discussed how the nonverbal behavior of robots might contribute to smooth human–robot interactions, for instance by evoking certain desirable impressions among users. In our contribution to this emerging research area, we obtained two noteworthy results. First, confident, assertive power poses indeed made robots seem more dominant and competent than more constrictive body language. Second, and to our surprise, these effects emerged regardless of the robot’s size: Statistically, it did not matter whether the portrayed robot was depicted as a mere 55 cm tall or at the size of a human adult. From a psychological perspective, this implies that, in contrast to human-to-human contexts, body size might not necessarily elicit different impressions and expectations when engaging with a robot. At the same time, we want to caution readers against overgeneralizing our findings, as they are merely based on static, mediated stimuli (i.e., photographs). In fact, we cannot rule out that different effects would occur when presenting participants with video sequences of robots, which include many more nonverbal cues such as timing, sequence, and variation, or even real-life interactions. Especially in the latter case, we would expect participants to feel quite differently in front of an adult-sized than a toy-sized robot, as the machine’s sheer mass and physicality would probably suffice to make it seem more dominant. Still, given that most people get acquainted with robots through media instead of real-life contact [66], we suggest that our study offers relevant insight to researchers and developers of robotic technology, as well as to media producers who strive to portray robots in a certain way.

In the same vein, a worthwhile discussion may be invited by our findings (or lack thereof) concerning negative user perceptions, i.e., threat and eeriness. For both of these study variables, no significant group differences could be observed after manipulating the robot’s pose and size. If taken at face value, this suggests that feelings of uncanniness or potential danger might not depend on the posture or dimensions of a social robot, so that engineers might feel free to make use of expansive body language without having to worry about backfiring effects. However, we again have to consider that different results could emerge once people actually stood in front of a robot; in that case, observers might think much more about the machine’s ability to grab or hurt them, which would likely prompt more negative reactions. Similarly, the uncanny valley hypothesis in its original form includes the assumption that moving stimuli evoke stronger creepiness and aversion than static ones [32]—a possibility that also needs to be acknowledged in the interpretation of the observed null findings.

In terms of future research directions, we believe that studies focusing on natural human–robot interactions constitute the most important next step to alleviate our study’s limitations. At the same time, this might turn out quite difficult, as identical robots of different sizes would be required to secure internal validity. Given the necessary resources, however, any scientific efforts that investigate the effects of robotic body language in live interactions will certainly be of great value. Additionally, we believe that follow-up studies could advance the current line of research by going beyond our dichotomous differentiation between expansive and constrictive poses and looking into the effects of more nuanced nonverbal behaviors instead. After all, it stands to reason that even minuscule details in the presented stimulus pictures might have affected participants’ perceptions; for instance, portraying the robot in a relaxed seating position might have subverted any notion of threat, as the machine may not have seemed ready to execute relevant actions in this situation. Likewise, in the stimulus image that depicted the robot among a group of humans, the shown individuals looked rather happy and shared close personal space with the machine, potentially biasing participants towards competence and against threat perceptions. Hence, further studies (e.g., based on insight from the field of proxemics) are clearly needed to elucidate the intricate interactions that determine users’ reactions to different robot poses.

Pointing out another methodological limitation of our study, we would like to note that the current experiment made use of only one specific robot design, namely the robot NAO, which is often described as “cute” by study participants [67]. Further research could therefore try to replicate the reported findings using more serious-looking robots, which might inherently appear more threatening or eerie. Then again, as studies have shown that even relatively similar types of social robots can trigger different dispositional attributions [68], it might also be worthwhile to repeat the current study with only slight visual modifications (e.g., NAO robots with different coloration). Of course, all replication and follow-up efforts will need to consider sample characteristics, as literature clearly shows that age, gender, cultural norms, and personality all affect the level of comfort people experience in the face of autonomous technology [63, 69,70,71]. While our exploratory subgroup analysis of potential gender differences suggested rather similar effects for both men and women, it should be noted that our findings are still based on a single convenience sample. As such, more diverse groups of participants should be recruited in order to understand whether the observed results hold consistently among people from different age brackets, sociodemographic backgrounds, or countries.

Last but not least, researchers might also pursue conceptual extensions of the presented work. As a specific recommendation in this regard, we encourage scholars to look deeper into users’ perception of intentionality and trust, two aspects that have been highlighted as most crucial for the successful incorporation of robots into human society [72,73,74]. In all probability, trusting a robot (and its intentions) may shield observers against threat-inducing cues, so that measuring this variable will certainly result in a more complete picture in future studies. Along the same lines, we anticipate that the intelligence ascribed to a robot might constitute another crucial moderator of how people evaluate robotic body language; after all, assuming a robot to be genuinely competent will likely change the impression it conveys with an assertive, powerful pose. As such, including users’ mind attributions concerning robots may offer another ideal starting point for further research.

5 Conclusion

Conveying information about one’s emotions, attitudes, and dispositions constitutes one of the primary functions of using body language. Robot engineers can make use of this fundamental principle of human communication to improve the acceptance (and thematic fit) of their creations. According to our study, a robot placing its hands confidently on its hips may indeed seem more competent and powerful than a machine displaying constrictive body language, without necessarily evoking stronger unease among observers. Furthermore, our results suggest that similar effects of nonverbal behavior might apply to robots of different sizes—at least if they remain at a safe (or mediated) distance.

Data Availability

We provide all data and materials of this study in an Open Science Framework repository (https://osf.io/2zx89/).

Notes

Footnote 1: Please see the provided OSF link for detailed output files of both MANCOVAs, with and without the participants who recognized the robot used.

Footnote 2: Although our data violated the assumption of homogeneous covariance matrices, MANCOVAs with comparable group sizes have been shown to be robust nonetheless [65].

References

Carney DR, Cuddy AJC, Yap AJ (2010) Power posing: brief nonverbal displays affect neuroendocrine levels and risk tolerance. Psychol Sci 21:1363–1368. https://doi.org/10.1177/0956797610383437

Cuddy AJC (2015) Presence: bringing your boldest self to your biggest challenges. Little Brown and Company, Boston

Cuddy AJC, Schultz SJ, Fosse NE (2018) P-curving a more comprehensive body of research on postural feedback reveals clear evidential value for power-posing effects: reply to Simmons and Simonsohn. Psychol Sci 29:656–666. https://doi.org/10.1177/0956797617746749

Cesario J, Johnson DJ (2017) Power poseur: bodily expansiveness does not matter in dyadic interactions. Soc Psychol Pers Sci 9:781–789. https://doi.org/10.1177/1948550617725153

Ranehill E, Dreber A, Johannesson M, Leiberg S, Sul S, Weber RA (2015) Assessing the robustness of power posing: no effect on hormones and risk tolerance in a large sample of men and women. Psychol Sci 26:653–656. https://doi.org/10.1177/0956797614553946

Simmons JP, Simonsohn U (2017) Power posing: P-curving the evidence. Psychol Sci 28:687–693. https://doi.org/10.1177/0956797616658563

Allen J, Gervais SJ, Smith JL (2013) Sit big to eat big: the interaction of body posture and body concern on restrained eating. Psychol Women Quart 37:325–336. https://doi.org/10.1177/036168431347647

Elkjær E, Mikkelsen MB, Michalak J, Mennin DS, O’Toole MS (2020) Expansive and contractive postures and movement: a systematic review and meta-analysis of the effect of motor displays on affective and behavioral responses. Perspect Psychol Sci. https://doi.org/10.1177/1745691620919358

Gronau QF, van Erp S, Heck DW, Cesario J, Jonas KJ, Wagenmakers EJ (2017) A Bayesian model-averaged meta-analysis of the power pose effect with informed and default priors: the case of felt power. Compreh Results Soc Psychol 2:123–138. https://doi.org/10.1080/23743603.2017.1326760

Hall JA, Horgan TG, Murphy NA (2019) Nonverbal communication. Annu Rev Psychol 70:271–294. https://doi.org/10.1146/annurev-psych-010418-103145

Burgoon JK, Birk T, Pfau M (1990) Nonverbal behaviors, persuasion, and credibility. Hum Commun Res 17:140–169. https://doi.org/10.1111/j.1468-2958.1990.tb00229.x

Cashdan E (1998) Smiles, speech, and body posture: how women and men display sociometric status and power. J Nonverbal Behav 22:209–228. https://doi.org/10.1023/A:1022967721884

Newman R, Furnham A, Weis L, Gee M, Cardos R, Lay A, McClelland A (2016) Non-verbal presence: How changing your behaviour can increase your ratings for persuasion, leadership and confidence. Psychology 7:488–499. https://doi.org/10.4236/psych.2016.74050

Bonaccio S, O’Reilly J, O’Sullivan SL, Chiocchio F (2016) Nonverbal behavior and communication in the workplace. J Manag 42:1044–1074. https://doi.org/10.1177/0149206315621146

Vacharkulksemsuk T, Reit E, Khambatta P, Eastwick PW, Finkel EJ, Carney DR (2016) Dominant, open nonverbal displays are attractive at zero-acquaintance. Proc Natl Acad Sci USA 113:4009–4014. https://doi.org/10.1073/pnas.1508932113

Spezio ML, Loesch L, Gosselin F, Mattes K, Alvarez RM (2012) Thin-slice decisions do not need faces to be predictive of election outcomes. Polit Psychol 33:331–341. https://doi.org/10.1111/j.1467-9221.2012.00897.x

Nass C, Moon Y (2000) Machines and mindlessness: Social responses to computers. J Soc Issues 56:81–103. https://doi.org/10.1111/0022-4537.00153

Lee KM, Peng W, Jin S-A, Yan C (2006) Can robots manifest personality? An empirical test of personality recognition, social responses, and social presence in human–robot interaction. J Commun 56:754–772. https://doi.org/10.1111/j.1460-2466.2006.00318.x

Wang W (2017) Smartphones as Social Actors? Social dispositional factors in assessing anthropomorphism. Comput Hum Behav 68:334–344. https://doi.org/10.1016/j.chb.2016.11.022

Wan EW, Chen RP (2021) Anthropomorphism and object attachment. Curr Opin Psychol 39:88–93. https://doi.org/10.1016/j.copsyc.2020.08.009

de Graaf MMA (2016) An ethical evaluation of human–robot relationships. Int J Soc Robot 8:589–598. https://doi.org/10.1007/s12369-016-0368-5

Fox J, Gambino A (2021) Relationship development with humanoid social robots: Applying interpersonal theories to human/robot interaction. Cyberpsychol Behav Soc Netw 24:294–299. https://doi.org/10.1089/cyber.2020.0181

Wullenkord R, Eyssel F (2020) Societal and ethical issues in HRI. Curr Robot Rep 1:85–96. https://doi.org/10.1007/s43154-020-00010-9

Seibt J, Vestergaard C, Damholdt MF (2020) Sociomorphing, not anthropomorphizing: towards a typology of experienced sociality. In: Nørskov M, Seibt J, Quick OS (eds) Culturally sustainable social robotics: proceedings of robophilosophy 2020. IOS Press, Amsterdam, pp 51–67. https://doi.org/10.3233/FAIA200900

Edwards C, Edwards A, Stoll B, Lin X, Massey N (2019) Evaluations of an artificial intelligence instructor’s voice: social identity theory in human–robot interactions. Comput Hum Behav 90:357–362. https://doi.org/10.1016/j.chb.2018.08.027

Hong JW (2020) Why is artificial intelligence blamed more? Analysis of faulting artificial intelligence for self-driving car accidents in experimental settings. Int J Hum-Comput Int 36:1768–1774. https://doi.org/10.1080/10447318.2020.1785693

Lee-Won RJ, Joo YK, Park SG (2020) Media equation. Int Encycl Media Psychol. https://doi.org/10.1002/9781119011071.iemp0158

Nielsen YA, Pfattheicher S, Keijsers M (2022) Prosocial behavior towards machines. Curr Opin Psychol 43:260–265. https://doi.org/10.1016/j.copsyc.2021.08.004

Liu B, Sundar SS (2018) Should machines express sympathy and empathy? Experiments with a health advice chatbot. Cyberpsychol Behav Soc Netw 21:625–636. https://doi.org/10.1089/cyber.2018.0110

Broadbent E, Kumar V, Li X, Sollers J, Stafford RQ, MacDonald BA, Wegner DM (2013) Robots with display screens: A robot with a more humanlike face display is perceived to have more mind and a better personality. PLoS ONE 8:e72589. https://doi.org/10.1371/journal.pone.0072589

Krach S, Hegel F, Wrede B, Sagerer G, Binkofski F, Kircher T (2008) Can machines think? Interaction and perspective taking with robots investigated via fMRI. PLoS ONE 3:e2597. https://doi.org/10.1371/journal.pone.0002597

Mori M (1970) The uncanny valley. Energy 7:33–35

Kätsyri J, Förger K, Mäkäräinen M, Takala T (2015) A review of empirical evidence on different uncanny valley hypotheses: support for perceptual mismatch as one road to the valley of eeriness. Front Psychol 6:390. https://doi.org/10.3389/fpsyg.2015.00390

Perez JA, Garcia Goo H, Sánchez Ramos A, Contreras V, Strait MK (2020) The uncanny valley manifests even with exposure to robots. In: Proceedings of the 2020 ACM/IEEE International Conference on Human–Robot Interaction. IEEE Press, New York, pp 101–103. https://doi.org/10.1145/3371382.3378312

Seyama J, Nagayama RS (2007) The uncanny valley: Effect of realism on the impression of artificial human faces. Presen Teleop Virt 16:337–351. https://doi.org/10.1162/pres.16.4.337

Strait MK, Floerke VA, Ju W, Maddox K, Remedios JD, Jung MF, Urry HL (2017) Understanding the uncanny: both atypical features and category ambiguity provoke aversion against humanlike robots. Front Psychol 8:1366. https://doi.org/10.3389/fpsyg.2017.01366

Ferrari F, Paladino MP, Jetten J (2016) Blurring human-machine distinctions: Anthropomorphic appearance in social robots as a threat to human distinctiveness. Int J Soc Robot 8:287–302. https://doi.org/10.1007/s12369-016-0338-y

Stein J-P, Liebold B, Ohler P (2019) Stay back, clever thing! Linking situational control and human uniqueness concerns to the aversion against autonomous technology. Comput Hum Behav 95:73–82. https://doi.org/10.1016/j.chb.2019.01.021

Diel A, MacDorman KF (2021) Creepy cats and strange high houses: Support for configural processing in testing predictions of nine uncanny valley theories. J Vis 21:1–20. https://doi.org/10.1167/jov.21.4.1

Moore RK (2012) A Bayesian explanation of the ‘Uncanny Valley’ effect and related psychological phenomena. Sci Rep 2:864. https://doi.org/10.1038/srep00864

MacDorman KF (2005) Mortality salience and the uncanny valley. In: Proceedings of the 5th IEEE-RAS International Conference on Humanoid Robots. IEEE Press, New York, pp 399–405. https://doi.org/10.1109/ICHR.2005.1573600

Stein JP, Ohler P (2017) Venturing into the uncanny valley of mind—The influence of mind attribution on the acceptance of human-like characters in a virtual reality setting. Cognition 160:43–50. https://doi.org/10.1016/j.cognition.2016.12.010

Złotowski J, Yogeeswaran K, Bartneck C (2017) Can we control it? Autonomous robots threaten human identity, uniqueness, safety, and resources. Int J Hum Comput St 100:48–54. https://doi.org/10.1016/j.ijhcs.2016.12.008

Appel M, Izydorczyk D, Weber S, Mara M, Lischetzke T (2020) The uncanny of mind in a machine: Humanoid robots as tools, agents, and experiencers. Comput Hum Behav 102:274–286. https://doi.org/10.1016/j.chb.2019.07.031

Gray K, Wegner D (2012) Feeling robots and human zombies: Mind perception and the uncanny valley. Cognition 125:125–130. https://doi.org/10.1016/j.cognition.2012.06.007

Beck A, Cañamero L, Hiolle A, Damiano L, Cosi P, Tesser F, Sommavilla G (2013) Interpretation of emotional body language displayed by a humanoid robot: a case study with children. Int J Soc Robot 5:325–334. https://doi.org/10.1007/s12369-013-0193-z

Breazeal C (2003) Emotion and sociable humanoid robots. Int J Hum Comput St 59:119–155. https://doi.org/10.1016/S1071-5819(03)00018-1

Destephe M, Henning A, Zecca M, Hashimoto K, Takanishi A (2013) Perception of emotion and emotional intensity in humanoid robots’ gait. In: Proceedings of the 2013 IEEE international conference on robotics and biomimetics. IEEE Press, New York, pp 1276–1281. https://doi.org/10.1109/robio.2013.6739640

Bergmann K, Eyssel F, Kopp S (2012) A second chance to make a first impression? How appearance and nonverbal behavior affect perceived warmth and competence of virtual agents over time. In: Nakano Y, Neff M, Paiva A, Walker M (eds) Proceedings of the 2012 international conference on intelligent virtual agents. Springer, Berlin, pp 126–138. https://doi.org/10.1007/978-3-642-33197-8_13

Chidambaram V, Chiang Y-H, Mutlu B (2012) Designing persuasive robots: How robots might persuade people using vocal and nonverbal cues. In: Proceedings of the 7th annual ACM/IEEE international conference on human-robot interaction. ACM Press, New York, pp 293–300. https://doi.org/10.1145/2157689.2157798

Straßmann C, Rosenthal-von der Pütten A, Yaghoubzadeh R, Kaminski R, Krämer N (2016) The effect of an intelligent virtual agent’s nonverbal behavior with regard to dominance and cooperativity. In: Proceedings of the 2016 international conference on intelligent virtual agents. Springer, Berlin, pp 15–28. https://doi.org/10.1007/978-3-319-47665-0_2

Johal W, Pesty S, Calvary G (2014) Towards companion robots behaving with style. In: Proceedings of the 23rd IEEE international symposium on robot and human interactive communication. IEEE Press, New York, pp 1063–1068. https://doi.org/10.1109/ROMAN.2014.6926393

Peters R, Broekens J, Li K, Neerincx MA (2019) Robots expressing dominance: Effects of behaviours and modulation. In: Proceedings of the 8th international conference on affective computing and intelligent interaction (ACII). IEEE Press, New York, pp 1–7. https://doi.org/10.1109/ACII.2019.8925500

Heckhausen J (2007) Competence and motivation in adulthood and old age. In: Elliot AJ, Dweck CS (eds) Handbook of competence and motivation. The Guilford Press, New York, pp 240–258

Jones C, Peskin H, Wandeler C (2017) Femininity and dominance across the lifespan: Longitudinal findings from two cohorts of women. J Adult Dev 24:22–30. https://doi.org/10.1007/s10804-016-9243-8

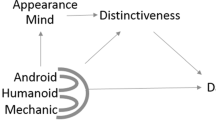

Müller BCN, Gao X, Nijssen SRR, Damen TGE (2020) I, robot: how human appearance and mind attribution relate to the perceived danger of robots. Int J Soc Robot. https://doi.org/10.1007/s12369-020-00663-8

Gnambs T, Appel M (2019) Are robots becoming unpopular? Changes in attitudes towards autonomous robotic systems in Europe. Comput Hum Behav 93:53–61. https://doi.org/10.1016/j.chb.2018.11.045

Wang S, Rochat P (2017) Human perception of animacy in light of the uncanny valley phenomenon. Perception 46:1386–1411. https://doi.org/10.1177/0301006617722742

Givens DB (2005) The nonverbal dictionary of gestures, signs and body language cues. Center for Nonverbal Studies Press, Spokane

Reeves B, Yeykelis L, Cummings JJ (2016) The use of media in media psychology. Media Psych 19:49–71. https://doi.org/10.1080/15213269.2015.1030083

Furley P, Dicks M, Memmert D (2012) Nonverbal behavior in soccer: the influence of dominant and submissive body language on the impression formation and expectancy of success of soccer players. J Sport Exerc Psy 34:61–82. https://doi.org/10.1123/jsep.34.1.61

Ho C-C, MacDorman KF (2010) Revisiting the uncanny valley theory: developing and validating an alternative to the Godspeed indices. Comput Hum Behav 26:1508–1518. https://doi.org/10.1016/j.chb.2010.05.015

MacDorman KF, Entezari S (2015) Individual differences predict sensitivity to the uncanny valley. Interact Stud 16:141–172. https://doi.org/10.1075/is.16.2.01mac

Stafford RQ, MacDonald BA, Jayawardena C, Wegner DM, Broadbent E (2014) Does the robot have a mind? Mind perception and attitudes towards robots predict use of an eldercare robot. Int J Soc Robot 6:17–32. https://doi.org/10.1007/s12369-013-0186-y

Field A (2013) Discovering statistics using IBM SPSS statistics. SAGE Publications, Thousand Oaks

Mara M, Stein JP, Latoschik ME, Lugrin B, Schreiner C, Hostettler R, Appel M (2021) User responses to a humanoid robot observed in real life, virtual reality, 3D and 2D. Front Psychol 12:1152. https://doi.org/10.3389/fpsyg.2021.633178

Rosenthal-von der Pütten AM, Krämer N (2014) How design characteristics of robots determine evaluation and uncanny valley related responses. Comput Hum Behav 36:422–439. https://doi.org/10.1016/j.chb.2014.03.066

Thunberg S, Thellman S, Ziemke T (2017) Don’t judge a book by its cover: a study of the social acceptance of NAO vs. Pepper. In: Proceedings of the 5th international conference on human agent interaction. ACM Press, New York, pp 443–446. https://doi.org/10.1145/3125739.3132583

de Graaf MMA, ben Allouch S, van Dijk JAGM (2019) Why would I use this in my home? A model of domestic social robot acceptance. Hum Comput Int 34:115–173. https://doi.org/10.1080/07370024.2017.1312406

Kaplan F (2004) Who is afraid of the humanoid? Investigating cultural differences in the acceptance of robots. Int J Humanoid Robot 1:1–16. https://doi.org/10.1142/S0219843604000289

Liang Y, Lee SA (2017) Fear of autonomous robots and artificial intelligence: Evidence from national representative data with probability sampling. Int J Soc Robot 9:379–384. https://doi.org/10.1007/s12369-017-0401-3

Hancock PA, Billings DR, Schaefer KE, Chen JYC, de Visser E, Parasuraman R (2011) A meta-analysis of factors affecting trust in human–robot interaction. Hum Fact 53:517–527. https://doi.org/10.1177/0018720811417254

Wiese E, Metta G, Wykowska A (2017) Robots as intentional agents: Using neuroscientific methods to make robots appear more social. Front Psychol 8:1663. https://doi.org/10.3389/fpsyg.2017.01663

Złotowski J, Sumioka H, Nishio S, Glas DF, Bartneck C, Ishiguro H (2016) Appearance of a robot affects the impact of its behaviour on perceived trustworthiness and empathy. Paladyn J Behav Robot 7:55–66. https://doi.org/10.1515/pjbr-2016-000

Acknowledgements

All listed authors offered substantial contributions to the study conception and drafting of the manuscript. Furthermore, all authors approved of the final submission and agreed to be accountable for all aspects of the work.

Funding

Open Access funding enabled and organized by Projekt DEAL. There is no funding information to declare.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical Statement

While there is no formal requirement for psychological studies in Germany to be approved by institutional review boards, the authors declare that this research was conducted in full accordance with the Declaration of Helsinki, as well as the ethical guidelines provided by the German Psychological Society (DGPs). Of course, this also included obtaining informed consent from all participants before they were able to take part in this study.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Stein, JP., Cimander, P. & Appel, M. Power-Posing Robots: The Influence of a Humanoid Robot’s Posture and Size on its Perceived Dominance, Competence, Eeriness, and Threat. Int J of Soc Robotics 14, 1413–1422 (2022). https://doi.org/10.1007/s12369-022-00878-x

DOI: https://doi.org/10.1007/s12369-022-00878-x