Abstract

The aim of this paper is to investigate performance in collaborative human–robot interaction on a shared serious game task. Furthermore, the effect of elicited emotions and perceived social behavior categories on players’ performance is investigated. The participants collaboratively played a turn-taking version of the Tower of Hanoi serious game with human and robot collaborators. The elicited emotions were analyzed with regard to the arousal and valence variables, computed from the Geneva Emotion Wheel questionnaire. Moreover, the perceived social behavior categories were obtained by analyzing and grouping replies to the Interactive Experiences and Trust and Respect questionnaires. The results did not show a statistically significant difference in participants’ performance between the human and robot collaborators. Moreover, all of the collaborators elicited similar emotions, although the human collaborator was perceived as more credible and socially present than the robot one. It is suggested that robot collaborators might be as efficient as human ones in the context of collaborative serious game tasks.


1 Introduction

Collaborative interaction between humans and robots has long been a subject of interest for writers and scholars [1,2,3]. Motivated by these ideas, researchers working in Human–Robot Interaction (HRI) are investigating robots as peers and colleagues with a variety of social and emotional abilities (e.g., [4, 5]). These endeavors have motivated HRI research ranging from robotic movement and control to modeling cognition and social behavior [6]. The future might witness an increase in the number of robots performing tasks in collaboration with humans in the same task space [7,8,9,10]. Such scenarios, where humans must work closely with robots in social interaction situations, are becoming increasingly relevant today [11,12,13,14,15]. This vision gives rise to a need to investigate how humans perceive their robot partners when interacting collaboratively on a shared task. Moreover, it indicates a need for a deeper understanding of how the social cues of a robot collaborator affect the performance of a human partner, in order to inform the design of robot collaborators and tasks.

Previous research employing questionnaires shows that embodied robots are sometimes as engaging as humans [16, 17]. The study of perceived social behavior is important for many aspects of robotics, as it investigates perception in HRI and should be considered when designing human–robot social interaction [18]. Research shows that engaging physical non-humanoid robot collaborators can convey social cues, be perceived as socially present, and elicit emotional responses [6, 19]. People are sensitive to the social cues of a robot; in particular, they prefer that a robot moves at a speed slower than that of a walking human [20, 21]. Moreover, the curves of gestured movements influence the perception of collaborating robots [22]. Embodiment characterizes the role of a body in intelligent behavior [23]. These authors state that there is a direct link between embodiment and the information provided by the social cues of robot collaborators. They also state that human perception of robot collaborators depends on the physical interactions, actions, shapes, and the serious game environment itself. Previous research found that the social cues (i.e., gestured motion and speed) of collaborating robots elicit emotions in their human partners [24]. That study varied the gestured motion and speed of the collaborating robot, from a direct path at fixed speed to a gestured path at varying speeds. The present investigation is motivated by these findings: it follows up on the previous study [24] by also including perceived social categories, with a robot collaborator acting as a stimulus that elicits emotions and perceived social behavior. The perceived social categories of such interaction with a robot collaborator (i.e., engagement, reliability, credibility, interaction, and presence) could affect performance on the task presented [6, 25].

Humans use mechanisms from Human–Human Interaction (HHI) to perceive robots as autonomous social agents that are more or less as socially present as real human collaborators [6]. This proposition motivates the present research to consider both HHI and HRI when investigating the perception of social behavior and emotions. This research extends the previous investigation [6] in terms of perceived social presence and contributes further social categories and elicited emotions, relating them to performance on a game task.

Previous studies investigating interactions between humans and robots in serious games are sparse. Simulated computer agents playing games such as chess or checkers with or against humans are a familiar concept [26]. However, interaction between humans and robots on a collaborative serious game task within a physical environment is rare. This investigation therefore aims to extend research in this domain, and uses a collaborative serious game task to do so. Although electronic games are popular in current research methods, many traditional games are played in the physical world and require tangible interaction [27]. The physicality of games is important because humans perceive robots as physical entities capable of interacting with the physical world while having access to the virtual domain. Such HRI-enhanced traditional serious games can support this physical and virtual duality.

A traditional serious game that can be solved by following a sequential set of steps was used in the experiment. The game has an optimal solution, against which any non-optimal solution can easily be compared to measure human participants’ performance. Emerging emotions might influence performance on a game task, as evidence shows that emotions are a key factor that critically influences human decision-making [28,29,30]. For this reason, the serious game used in this study is turn-based and allows players to proceed at a pace they feel comfortable with, so that the effects of robot collaborators’ social cues on human performance can be investigated.

The goal of this paper is to investigate the performance of human participants on a shared serious game task with a robot partner in proximate interactive collaboration, in an attempt to understand how humans perceive robots as social agents and entities in shared-task spaces. In this study, it is of interest to find how a small subset of social cues is perceived and interpreted by humans collaborating with the robot. Thus, this paper investigates the effects of perceived social categories and elicited emotions in proximate interaction, as proposed by Ijsselsteijn and Harper [18], and relates these effects to performance on a serious game task in collaborative HRI. The contributions of this paper are: (1) investigating how people perceive robots as social agents in shared-task spaces, capable of interacting with human collaborators, and how this affects performance on a task; (2) understanding how the social cues of robot collaborators elicit emotions and how those cues are perceived as social categories, affecting the performance of human partners working collaboratively on a shared game task; and (3) understanding both HHI and HRI with regard to the perception of social categories and elicited emotions, relating the results to performance on a game task. These insights could provide a deeper understanding of how emotions and social categories underlie such interaction from the human collaborator’s perspective, potentially informing the design of more meaningful collaborative game tasks with helpful and intelligent robot collaborators that act according to behavioral patterns humans can understand and relate to [31].

The remainder of the paper is structured as follows: related work is given in Sect. 2. Section 3 presents the research questions, while Sect. 4 details the experimental set-up and the methodological approach to answering them. The results are given in Sect. 5, while the discussion and the conclusion are given in Sects. 6 and 7 respectively.

2 Related Work

A large body of previous work has motivated and supported this research.

2.1 Human–Robot Interaction

HRI requires communication between robots and humans [22]. The authors describe it as “the process of working together to accomplish a goal.” Such communication may take several forms. In proximate interaction, humans and robots are collocated (in this study, the collaborative robot is in the same room as the human participants) [22]; in such interaction, a robot is likely to be perceived as animate, eliciting social and emotional responses [6]. In social interaction, humans and robots interact as peers or companions [32]. HRI challenges include supporting effective social interaction through social and emotional cues, such as cues associated with the robot’s proxemic behavior (e.g., gestures and speed). These cues were found to significantly affect participants’ perception of different social categories and emotional states of a collaborating robot [6, 22].

2.2 Serious Games

Serious games are (digital) games used for purposes other than mere entertainment [33]. They need to captivate and engage the player for a specific purpose [34]. Social interaction in serious games requires more than one player. Collaboration could be an important means of improving players’ motivation, by proposing serious game activities in which the player collaborates with a physical partner on the same task [35]. In traditional collaboration-based digital serious games, one plays together with a computer (a virtual entity). In HRI-enhanced serious games, one plays together with a physical entity that elicits diverse behaviors and stronger emotional responses in players, which might support higher motivation and focus on the task [35].

Robots have been used in serious game settings for the treatment of autism [36] and stress [37], in game scenarios interacting with groups of children [38], and in games and other natural social interactions in which humans convey emotions and robots provide feedback [5, 39]. Furthermore, robots have been used as game companions in an educational setting, where engagement was automatically detected in children playing chess with an “iCat” robot displaying affective and attention cues [40]. Previous research on interaction between humans and robots in a collaborative serious game within a physical environment is sparse. “Sheep and Wolves” is a classic board game in which humans and robots collaborate as independent members of the wolf team, hunting a single sheep in an attempt to surround it [27, 31]. In “Tic-tac-toe”, the robot and a human can move game pieces on a physical board and collaborate as equals [41]. Another example is the “Mastermind” game, where the robot suggests to human players which colors to pick, with both engaged in cooperation [42].

2.3 Emotions

Evidence shows that emotions influence decision-making, but they do not always impair it. Emotions have been found to have a positive influence on decision-making and to facilitate it [28,29,30]. The experience of emotions has been found to be a mandatory prerequisite for advantageous decision-making [43], thereby having a positive impact on performance [44,45,46,47]. Russell [48] classified emotions along two independent dimensions, arousal and valence. Arousal represents the level of excitement, while valence represents whether the current emotional state is positive or negative. Under this model, measuring an emotion amounts to measuring a combination of valence and arousal.

2.4 Social Interaction

As with other aspects of social interaction, many issues affect perceived engagement, as a number of studies have shown [16, 49,50,51]. Robot collaborators have been found capable of interacting socially with other players [52]. Robots need the skills to interact, as well as to behave in a social manner that is perceived as acceptable and appropriate in human environments. Table 1 gives a concise description of the social categories investigated in this study.

Interaction Previous studies have investigated robot motion and human perception of robots. For example, the comfort level of test subjects was investigated through Wizard-of-Oz experiments, in which a hidden experimenter fully or partially operated a robot, thus mimicking autonomous behavior [53,54,55]. Studies have investigated comfort in HRI [56, 57]. These studies specifically investigated social interactions requiring trust and respect, because these were found to be fundamental to many social interactions, including cooperation [51]. Studies have shown that people who hold negative attitudes toward robots feel less comfortable in such human–robot situations [55, 58].

Reliability Goetz et al. [59] reported that people complied more with a serious, more authoritative robot than with a playful robot on a task that was itself serious. This suggests that the manner in which a robot is presented to the humans collaborating with it may affect the extent to which people are willing to rely on it [9]. A dependable robot helped to increase human collaborators’ trust, as they believed it would be consistent in its operations and available when needed [25].

Credibility How a person perceives a robot’s motives was found to be important for how credible the robot is perceived to be [25]. A desirable robot is one perceived to have the person’s best interests in mind, and it is often viewed as beneficial to someone interacting with it. Credibility may be affected by various factors, such as the presence of robots [25, 51].

Engagement Results show that engagement with robots depends on the cues displayed in the game and on the social context, with the former playing a more important role [60]. An engaged person was found to have a direct and natural experience, rather than merely processing the symbolic data presented [61]. Moreover, Sidner et al. [62] defined engagement as the process of establishing, maintaining and ending an interaction among partners; such interaction was found likely to be more compelling.

Presence Social presence can briefly be described as the “sense of being together with another” [52]. It may influence task performance, as impoverished social presence undermines it [25]. If human players perceive artificial opponents as not socially present, their enjoyment of interacting with them decreases [63]. Social presence was found to be important for understanding another agent’s intentions [6]. In tasks where a robot conveys information to a person (such as giving directions, teaching a new skill, or collaborating towards a particular goal), it has been perceived as persuasive, with clear intentions [16, 25, 64].

3 Research Questions

Following up on the findings and the proposition that the perception of robots as autonomous social agents uses mechanisms from HHI and could affect performance [6], this study applies these mechanisms to the influence of robot collaborators on performance in the game task. Furthermore, this study extends previous research [6] by taking the human collaborator condition into consideration. Thus, the following research question is presented:

RQ 1

How do collaborator conditions (human and robot) affect performance in a collaborative serious game?

Research suggests that robot collaborators elicit emotions in human partners [22, 24]. Research has also shown that a high level of focus on a task maximizes performance and is correlated with positive emotions and sufficient arousal [66, 67]. This holds unless the challenge is sufficiently above or below one’s abilities, which generates anxiety or boredom, respectively, resulting in worse performance. These studies warrant further investigation of how performance on a collaborative task is affected by the different dimensions of emotions elicited by robot collaborators. To expand on these investigations, the following research question is presented:

RQ 2

How do elicited emotions affect performance in a collaborative serious game between collaborator conditions (human and robot), with regard to valence and arousal?

Research has shown that engaging physical non-humanoid robot collaborators can convey social cues and be perceived as social agents [6, 22]. High social presence was found to be correlated with higher performance on the task [6, 25]. This study extends previous investigations to identify which social categories have an impact on performance in a collaborative task with robot partners. Accordingly, the following research question is proposed:

RQ 3

How do social categories (engagement, reliability, credibility, interaction, and presence) affect performance in a collaborative serious game, between collaborator conditions (human and robot)?

4 Methodology

4.1 Participants

This study included 70 participants (58 male and 12 female). Participants’ ages ranged from 19 to 31 years (mean \(23.56 \pm 2.338\)). Participants were students from the Blekinge Institute of Technology and were given a movie ticket as a reward for participating. Demographic data were collected regarding familiarity with the ToH game task, board games in general, and solving mathematical problems.

4.2 Compliance with Ethical Standards

Before running a trial involving physical interaction between humans and robots, certain legal and ethical requirements must be satisfied. A risk assessment was carried out as suggested in [68], and mandatory activities were considered [38]. The experiments were carried out by the Game Systems and Interaction Laboratory (GSIL) at Blekinge Institute of Technology, Karlskrona, Sweden. The Ethical Review Board in Lund, Sweden, approved all experiments conducted in this study (reference number 2012/737). Each participant signed an informed consent form.

4.3 Experimental Setup

A crossover study with controlled experiments was conducted in a laboratory setting. Lighting and temperature were controlled: artificial fixture lighting was used throughout the experiment, and the temperature was held constant at \(23 \pm 1\,^{\circ }\hbox {C}\). The participants were seated in a chair with a fixed height and predefined position, both of which were kept constant during the experiment. Two experimenters were always present in the laboratory room to monitor the experiments, but they were completely hidden behind a screen. The experimenters were present for safety reasons when the robot arms were in use and to verify that all data were recorded correctly. The robot arms and the participants were monitored via a live video feed. In addition, the robot control software included an emergency stop sequence, triggered if defects in program execution were detected, which was controlled at all times by one of the experimenters. An additional software-based robot blockage was introduced in case of any software failures.

4.3.1 Data Collection

The Geneva Emotion Wheel (GEW) was used for self-assessment of the elicited emotional experience [69]. This tool allows fine-grained sampling of a large spectrum of emotional experiences, as it includes 20 emotion categories (plus ‘other emotion’ and ‘no emotion’ categories), evenly sampling both negative and positive emotions. Arranged in a circle, the tool maps emotion quality onto a two-dimensional valence-arousal space. Emotion intensity is represented as the distance from the origin, shown graphically as a set of circles of increasing circumference (creating an ordinal scale from 0 to 5). The participants were instructed to rate their emotional experience after every run of the game task by marking the intensity of the elicited emotions for which the experience was most salient.

The original Interactive Experiences Questionnaire was developed as a standardized survey for testing presence, specifically feelings of presence in films [61]. The questionnaire was adapted by Kidd and Breazeal [70] to measure perceived presence with three characters: a human, a robot, and a cartoon robot. The survey for this study was adapted from Kidd and Breazeal’s Interactive Experiences Questionnaire. Replies to both questionnaires were grouped based on previous research [65, 70] (see “Appendix A”). The replies were given on a seven-point Likert scale, after which they were summed and normalized before the analysis [71].
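The summing and normalization step can be sketched as follows. This is a minimal Python sketch under stated assumptions: the item identifiers and their grouping into categories (`CATEGORY_ITEMS`) are hypothetical placeholders for the grouping in Appendix A, and min-max normalization over the theoretical score range is assumed, since the text does not specify the normalization method of [71].

```python
from typing import Dict, List, Mapping, Sequence

LIKERT_MIN, LIKERT_MAX = 1, 7  # seven-point scale, as stated in the text

# Hypothetical grouping of questionnaire items into social categories;
# the real grouping is listed in Appendix A.
CATEGORY_ITEMS: Dict[str, List[str]] = {
    "engagement": ["q1", "q4"],
    "presence": ["q2", "q3", "q5"],
}


def category_score(replies: Mapping[str, int], items: Sequence[str]) -> float:
    """Sum one category's Likert replies and min-max normalize the sum to [0, 1]."""
    for item in items:
        if not LIKERT_MIN <= replies[item] <= LIKERT_MAX:
            raise ValueError(f"reply to {item!r} is outside {LIKERT_MIN}..{LIKERT_MAX}")
    total = sum(replies[item] for item in items)
    lowest = LIKERT_MIN * len(items)   # every item answered with 1
    highest = LIKERT_MAX * len(items)  # every item answered with 7
    return (total - lowest) / (highest - lowest)


# Hypothetical replies of one participant in one condition.
replies = {"q1": 7, "q2": 4, "q3": 4, "q4": 7, "q5": 4}
scores = {name: category_score(replies, items) for name, items in CATEGORY_ITEMS.items()}
print(scores)  # {'engagement': 1.0, 'presence': 0.5}
```

Normalizing by the theoretical range keeps categories with different numbers of items on the same 0 to 1 scale, so they can be compared directly.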

Regarding the Trust and Respect questionnaire, the questions in the first two sections were taken from an earlier questionnaire designed to measure five of the six dimensions of social presence [61]. The concept of presence as social richness refers to a medium being seen as “sociable, warm, sensitive, personal or intimate when it is used to interact with other people” [61]. As in the study by Burgoon et al. [25], responses from the Solo, Human and Robot collaborator conditions were counterbalanced to avoid ordering effects.

To measure the trust a person feels towards a robot, the receptivity/trust sub-scale of the Relational Communication Scale was adapted to apply to the robot [72]. The adaptation simply replaced “he/she” in the original scale with “the robot” or “the human” (see “Appendix B”). The Cronbach’s alpha values reported in the appendices were calculated on the data presented in [25].
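For reference, Cronbach’s alpha for a scale of \(k\) items is \(\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_j \sigma^2_j}{\sigma^2_X}\right)\), where \(\sigma^2_j\) is the variance of item \(j\) and \(\sigma^2_X\) is the variance of the summed scale score. The sketch below computes it from a respondents-by-items matrix; it is an illustrative implementation using sample variances, not the procedure used to obtain the values in the appendices, and the function name is hypothetical.

```python
import statistics
from typing import Sequence


def cronbach_alpha(rows: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha; rows[i][j] is respondent i's score on item j."""
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    items = list(zip(*rows))  # one tuple of scores per item
    sum_item_var = sum(statistics.variance(col) for col in items)
    total_var = statistics.variance([sum(r) for r in rows])  # variance of scale scores
    return k / (k - 1) * (1 - sum_item_var / total_var)


# Three items that rank four respondents identically are perfectly consistent,
# so alpha is (numerically) 1.
print(cronbach_alpha([[1, 1, 1], [2, 2, 2], [3, 3, 3], [4, 4, 4]]))
```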

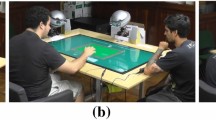

Fig. 1 Experiment conditions: (a) the Solo condition, in which a participant plays the game on his or her own; (b) playing with the Human Collaborator, emulating the Direct Robot Collaborator condition with a direct path at fixed speed; (c) the Direct Robot Collaborator condition, always moving in a similar fashion with a direct path at fixed speed; (d) the Non-Direct Robot Collaborator condition, which had one additional non-direct random point inserted in its path when performing the moves at varying speeds

4.3.2 Task Description and Reasoning

The Tower of Hanoi (ToH) serious game was used as a tool for investigating human–robot collaborative interaction. The game was easy for the robots to handle, since an optimal solution to the game exists, and it was a reasonable challenge for most humans. ToH was originally a single-player game; during collaborative gameplay, the human–human or human–robot pair took turns to complete the game. The rules are explained in [73]. In short, ToH is a mathematical game consisting of three rods (pegs) on which a number of disks of different sizes can be placed. The goal of the game is to start from a given configuration of the disks on the leftmost peg and to arrive, with a minimal number of moves, at the same configuration on the rightmost peg [73]. In this study, the serious game started from a given configuration of the disks, which was the same for all participants and corresponds to the starting configuration in the game definition above. Each turn consisted of moving a single disk to a legal position, alternating between the human participant and the human or robot collaborator, until the final configuration of disks was reached on the peg opposite the start. The human participant always started first. Also, similar to implementing artificial intelligence (AI) for checkerboard games [27], ToH’s AI rules were relatively simple. In games where social actions are not required (e.g., chess or checkers) and there exists only one human opponent, AI agents that play optimally are possible [52]. At any given moment during the game, there was just one optimal step that moved a disk towards the final configuration. Both the participants and the collaborators always had the option of selecting the optimal step as their next move. The participants also had the option of moving a disk to any other legal position, which would not necessarily lead towards the final configuration. Such a non-optimal step was only available to the participants, as the collaborators always took the optimal step to move a disk towards the final configuration.
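The optimal collaborator move described above can be computed from any legal configuration with a short greedy procedure. The following is a minimal Python sketch, not the implementation used in the experiment; the function names and the peg/disk encoding (pegs 0 to 2, disk 0 the smallest, `pos[d]` holding disk `d`’s peg) are assumptions made for illustration. The procedure scans disks from largest to smallest: a disk already on its target peg needs no action, while a disk that is not must move to its target, which requires all smaller disks to gather on the remaining spare peg first.

```python
from typing import List, Optional, Tuple

Move = Tuple[int, int, int]  # (disk, from_peg, to_peg); disk 0 is the smallest


def optimal_next_move(pos: List[int], target: int = 2) -> Optional[Move]:
    """Next move on the shortest path from `pos` to all disks on peg `target`.

    pos[d] is the peg (0, 1 or 2) holding disk d. Returns None if already solved.
    """
    move: Optional[Move] = None
    for disk in range(len(pos) - 1, -1, -1):  # scan from the largest disk down
        if pos[disk] != target:
            # This disk must go to `target`; before it can, every smaller disk
            # has to gather on the third (spare) peg, which becomes their target.
            move = (disk, pos[disk], target)
            target = 3 - pos[disk] - target
    # The last candidate is the smallest disk not on its current target;
    # all smaller disks sit on its spare peg, so the move is legal.
    return move


def play_optimally(n: int, start: int = 0, goal: int = 2) -> List[Move]:
    """Play a whole game with optimal moves only, as the collaborators did."""
    pos = [start] * n
    moves: List[Move] = []
    while (move := optimal_next_move(pos, goal)) is not None:
        disk, _, to_peg = move
        pos[disk] = to_peg
        moves.append(move)
    return moves


print(len(play_optimally(3)))        # 7
print(optimal_next_move([1, 0, 0]))  # (0, 1, 2)
```

Starting from all disks on the leftmost peg, repeatedly applying `optimal_next_move` reaches the goal in the minimum \(2^n - 1\) moves for \(n\) disks; after a participant’s non-optimal move, the same function gives the optimal reply from the new configuration. Moves beyond this minimum could serve as a simple measure of deviation from the optimal solution.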

The effect of human and robot collaborator conditions on performance in a serious game was investigated in this experiment. The main manipulations are presented in Fig. 1: (a) the Solo condition, in which the participant plays the game on his or her own; (b) playing with the Human Collaborator, emulating the Direct Robot Collaborator condition with a direct path at fixed speed; (c) the Direct Robot Collaborator condition, always moving in a similar fashion with a direct path at fixed speed; and (d) the Non-Direct Robot Collaborator condition, which had one additional non-direct random point inserted in its path when performing the moves at varying speeds. The experimental setup was identical across trials and participants: the human participants played the turn-taking ToH game together with a robot or human collaborator, with the same goal of moving the disks from the starting to the final configuration. The obvious exception was the Solo condition, where no collaborators were present and the participants had to play the game on their own. The experimenter had been trained to interact in the same way with every participant according to a well-rehearsed script, following an optimal algorithm at every move. Participants were instructed on the rules of the ToH game and trained through a practice session with the experimenter until they could finish the simple setup with three disks.

Fig. 3 Overview of the software system for the ToH serious game. The gray boxes are third-party modules for communication with the hardware, while the black boxes are controller modules for the hardware and the serious game. The experiment data for the analysis were logged to the database (bottom right)

Emotions and social behavior were elicited through different gestured motions and speeds of the collaborating robot, designed as a platform for investigating human players’ perception. For robot arms, speeds of 51 cm/s for a large robot and 63 cm/s for a small robot are considered comfortable [21]. The Direct gestured motion traced a path between the two end points of the current disk movement at a fixed speed of 30 cm/s (30% of the robot’s speed); see Fig. 1c. The Non-Direct gestured motion traced a path between the two end points of the current disk movement, with a random point in space above the disks, randomized on each turn, inserted in between; furthermore, this “random” path was traced at a speed between 5 cm/s and 70 cm/s (5–70% of the robot’s speed), also randomized on each turn; see Fig. 1d. Speeds for the Non-Direct Robot Collaborator were evenly distributed across the exploration space, resulting in a homogeneous distribution of speeds. The robot arm passed through all three of these points in space while making its move before arriving at the final disk position. Fig. 2 shows the experimental setup during human–robot collaboration.
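The two gestured motion profiles can be summarized as move plans. In this minimal Python sketch, the `MovePlan` type, the function names and the workspace bounds for the random via-point are hypothetical; the speeds follow the text (a fixed 30 cm/s for the Direct motion and a per-turn speed drawn uniformly from 5 to 70 cm/s for the Non-Direct motion), and a 100 cm/s reference speed is inferred from the stated percentages. The sketch does not model the robot controller commands that actually executed the paths.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in cm, hypothetical robot frame
Bounds = Tuple[Tuple[float, float], Tuple[float, float], Tuple[float, float]]

REFERENCE_SPEED_CM_S = 100.0  # inferred: 30 cm/s is described as 30% of robot speed
DIRECT_SPEED_CM_S = 30.0
NON_DIRECT_SPEED_RANGE_CM_S = (5.0, 70.0)


@dataclass
class MovePlan:
    waypoints: List[Point]
    speed_cm_s: float

    @property
    def speed_percent(self) -> float:
        return 100.0 * self.speed_cm_s / REFERENCE_SPEED_CM_S


def direct_plan(pick: Point, place: Point) -> MovePlan:
    """Direct condition: straight between the two end points at a fixed speed."""
    return MovePlan([pick, place], DIRECT_SPEED_CM_S)


def non_direct_plan(pick: Point, place: Point, rng: random.Random, box: Bounds) -> MovePlan:
    """Non-Direct condition: one random via-point above the disks and a random speed.

    `box` holds hypothetical (min, max) bounds for the via-point's x, y and z.
    """
    via = (rng.uniform(*box[0]), rng.uniform(*box[1]), rng.uniform(*box[2]))
    speed = rng.uniform(*NON_DIRECT_SPEED_RANGE_CM_S)  # uniform draw: even spread of speeds
    return MovePlan([pick, via, place], speed)


ABOVE_DISKS: Bounds = ((-20.0, 20.0), (30.0, 50.0), (15.0, 35.0))  # hypothetical, cm
rng = random.Random(42)  # new via-point and speed on every call, i.e. every turn
plan = non_direct_plan((0.0, 40.0, 5.0), (20.0, 40.0, 5.0), rng, ABOVE_DISKS)
print(len(plan.waypoints), round(plan.speed_percent, 1))
```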

4.4 Hardware System

The hardware system [74] contained two Adept Viper S650 6-DOF robot arms with Robotiq Adaptive 2-Finger Grippers as the end effectors. The robot arms were controlled using an Adept SmartController CX control box and two Adept Motionblox-40-60R power adapters. The end effectors were controlled using two Robotiq K-1035 control boxes. A Microsoft Kinect camera was used to monitor the game state by tracking the moves made by the humans and robots during the ToH game. A camera was also used for surveillance of the participants and robot arms in case of an emergency. A single PC running Windows 7 controlled the system.

4.5 Software Platform

The overview of the software system is shown in Fig. 3. The Action module was the core of the software system; it decided which moves to make and when to make them. The Scene module provided information about the moves made and which player was next to move. All moves, together with the timestamps at which they were made, were saved to disk by the MoveLogging module. The RobotControl module was responsible for executing moves: it handled the movement of the robot arms to pick up and drop the specified game disk, and when to close and release the grippers. The Scene module used the Microsoft Kinect SDK 6 to connect to the Kinect camera, and the EmguCV5 library (a C# version of OpenCV) to construct a scene of the current game state. The RobotControl module used the Adept ACE software to control the robot arms. The data collection software, the robot control software and the Kinect camera software ran on the same computer in order to synchronize the timestamps across the different data files.
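The role of the MoveLogging module, recording every move with a timestamp from one clock, can be illustrated with a small Python sketch. The record fields and class names below are hypothetical (the log format of the original system is not described); the sketch only shows how such a log supports the performance measure used later, the number of moves per game, and how strict turn alternation can be checked.

```python
import time
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MoveRecord:
    player: str       # hypothetical labels, e.g. "participant" or "robot"
    disk: int
    from_peg: int
    to_peg: int
    timestamp: float  # seconds from one clock on the single PC, so files align


@dataclass
class MoveLog:
    records: List[MoveRecord] = field(default_factory=list)

    def log(self, player: str, disk: int, from_peg: int, to_peg: int,
            timestamp: Optional[float] = None) -> None:
        ts = time.monotonic() if timestamp is None else timestamp
        self.records.append(MoveRecord(player, disk, from_peg, to_peg, ts))

    def move_count(self) -> int:
        return len(self.records)

    def duration(self) -> float:
        """Seconds between the first and the last logged move."""
        if len(self.records) < 2:
            return 0.0
        return self.records[-1].timestamp - self.records[0].timestamp

    def turns_alternate(self) -> bool:
        """True if no player moved twice in a row (collaborative conditions)."""
        return all(a.player != b.player for a, b in zip(self.records, self.records[1:]))


game = MoveLog()
game.log("participant", 0, 0, 2, timestamp=0.0)
game.log("robot", 1, 0, 1, timestamp=2.5)
game.log("participant", 0, 2, 1, timestamp=4.0)
print(game.move_count(), game.duration(), game.turns_alternate())  # 3 4.0 True
```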

4.6 Experiment Procedure

The Electrocardiograph, Galvanic Skin Response and Electroencephalograph physiological sensors were present, but they were not used to analyze social behavior categories or elicited emotions reported through the questionnaires in this study.

Upon arrival, the following procedure was employed:

1. After entering the lab room, each participant was seated in a fixed chair at the table, facing the game task at a distance of 50–60 cm.
2. Participants were given written information about the experiment and a description explaining the ToH game. Before starting the experiment session, participants played a practice ToH game with three disks to familiarize themselves with the game task. They were also given written information explaining that the data would be stored confidentially. Participants who agreed to take part in the experiments signed an informed consent form.
3. Before the experiment started, participants filled in a demographics questionnaire.
4. Each participant performed an experiment with four conditions: Solo, Human Collaborator, Direct Robot Collaborator with direct gestured movements, and Non-Direct Robot Collaborator with non-direct gestured movements. The order of the conditions was counterbalanced between participants in order to minimize ordering effects. Each experiment condition was conducted as follows:
   (a) The participant played the ToH game.
   (b) After a trial was finished, the participant was asked to mark his or her emotional state on the GEW.
   (c) The operator informed the participant which game task to perform next by showing information signs on a laptop placed next to the participant. The operator controlled the laptop via remote desktop.

The four conditions (Solo, Human Collaborator, Direct Robot Collaborator and Non-Direct Robot Collaborator) were presented to each participant, as proposed in [25]. For each of the four conditions, the participants played the ToH game three times (thus, a total of 12 games were played per participant). Each experimental session took around 90 minutes to complete.
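The text states that the order of conditions was counterbalanced but does not specify the scheme. One common option, shown here as an assumption rather than the authors’ method, is a balanced (Williams) Latin square: for an even number of conditions, each condition appears once in every position, and each condition immediately follows every other condition exactly once. The Python sketch below builds such a square for the four conditions and cycles participants through its rows; the row-assignment rule `participant % 4` is hypothetical.

```python
from typing import List

CONDITIONS = ["Solo", "Human", "Direct Robot", "Non-Direct Robot"]


def balanced_latin_square(n: int) -> List[List[int]]:
    """Williams-style balanced Latin square for an even number of conditions."""
    if n < 2 or n % 2:
        raise ValueError("this construction needs an even number of conditions")
    # First row: 0, 1, n-1, 2, n-2, ...; later rows shift every entry by the row index.
    first = [0] + [(j + 1) // 2 if j % 2 else n - j // 2 for j in range(1, n)]
    return [[(c + r) % n for c in first] for r in range(n)]


def condition_order(participant: int) -> List[str]:
    """Hypothetical rule: participants cycle through the rows of the square."""
    square = balanced_latin_square(len(CONDITIONS))
    return [CONDITIONS[i] for i in square[participant % len(square)]]


for p in range(4):
    print(p, condition_order(p))
```

With 70 participants, which is not a multiple of four, two of the four orders would be used one more time than the other two under this rule.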

4.7 Data Processing

Each reply to the GEW questionnaire was mapped to a point in Russell’s valence-arousal space [48], where valence describes whether an emotion is negative, positive, or neutral, while arousal describes the physiological activation state of the body, ranging from low to high. Replies to the other questionnaires were scored on a seven-point Likert scale, where 1 meant “strongly disagree” and 7 meant “strongly agree”.
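A GEW reply (a category and an intensity from 0 to 5) can be mapped to valence-arousal coordinates by treating the wheel as polar coordinates. The Python sketch below assumes, purely for illustration, that the 20 categories are equally spaced around the circle, with category `k` centred at angle \(2\pi(k + 0.5)/20\), the positive valence axis at angle 0, and arousal increasing counterclockwise; the actual angular positions of the GEW categories are not given in the text, so `gew_to_valence_arousal` and its angle convention are hypothetical.

```python
import math
from typing import Tuple

N_CATEGORIES = 20  # GEW emotion categories, per the text
MAX_INTENSITY = 5  # ordinal intensity scale 0..5


def gew_to_valence_arousal(category: int, intensity: int,
                           n_categories: int = N_CATEGORIES) -> Tuple[float, float]:
    """Map a GEW reply to (valence, arousal) via polar coordinates.

    Hypothetical layout: categories equally spaced, category k centred at angle
    2*pi*(k + 0.5)/n, with angle 0 on the positive-valence axis.
    """
    if not 0 <= category < n_categories:
        raise ValueError("unknown category")
    if not 0 <= intensity <= MAX_INTENSITY:
        raise ValueError("intensity must be in 0..5")
    angle = 2 * math.pi * (category + 0.5) / n_categories
    return intensity * math.cos(angle), intensity * math.sin(angle)


v, a = gew_to_valence_arousal(0, 4)
print(round(v, 2), round(a, 2))  # 3.95 0.63
```

Intensity becomes the distance from the origin, matching the description of the wheel, so a reply of intensity 0 always maps to the neutral point.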

The reply scores to all questionnaires were imported into the SPSS Statistics software and analyzed together with the performance measures, which consisted of the total number of moves per game and round. Prior to the analysis, the questionnaire data were checked for errors and normalized. Furthermore, outliers were removed using z-scores (standardized values of 3.0 or greater).
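The z-score outlier rule can be sketched in a few lines. This is an illustration, not the SPSS procedure used in the study; it assumes that z-scores are computed with the sample mean and sample standard deviation and that values with an absolute z-score of 3.0 or greater are treated as outliers. The function name `remove_outliers` and the example data are hypothetical.

```python
import statistics


def remove_outliers(values: list[float], threshold: float = 3.0) -> list[float]:
    """Return the values whose absolute z-score is below `threshold`.

    Assumes z-scores use the sample mean and sample standard deviation,
    and that |z| >= threshold marks an outlier.
    """
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    if sd == 0:
        # All values identical: nothing can be an outlier.
        return list(values)
    return [v for v in values if abs(v - mean) / sd < threshold]


# Hypothetical move counts: nineteen typical games and one extreme game.
moves = [16.0] * 10 + [17.0] * 9 + [60.0]
cleaned = remove_outliers(moves)
print(len(cleaned), max(cleaned))
```

Because an extreme value also inflates the standard deviation, this single-pass rule cannot flag anything in very small samples: with \(n \le 10\) observations the largest attainable \(|z|\) is \((n-1)/\sqrt{n} < 3\). With the hundreds of games per condition analyzed here, that limitation does not apply.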

5 Results

Seventy participants from the Blekinge Institute of Technology took part in the ToH serious game experiment. The design was counterbalanced such that every participant performed all of the task conditions (Human Collaborator, Direct Robot Collaborator, Non-Direct Robot Collaborator and Solo), in a randomly allocated order. Reported arousal and valence variables were computed from the GEW questionnaire, while the social category variables (engagement, reliability, credibility, interaction and presence) were obtained by analyzing and grouping replies to the Interactive Experiences [65, 70] and the Trust and Respect questionnaires [61, 72], shown in Appendices A and B. Analyses were performed in SPSS with an alpha level of 0.05 for all statistical tests. Differences were analyzed using one-way ANOVA, and correlations between continuous variables were explored using the Pearson product-moment correlation coefficient. The data showed no violation of normality, linearity or homoscedasticity (the data were normalized and outliers were removed before the analysis). Participants reported their previous experience with robotics on a seven-point Likert scale (\(\mu = 1.7, \sigma = 1.095, N = 70\)), and 43 of the 70 participants had no previous experience with the ToH. All of the participants in the experiment were able to finish the trials.
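The analyses above were run in SPSS. For readers who want to check the two core statistics by hand, the sketch below computes a one-way ANOVA F statistic and the Pearson product-moment correlation coefficient in plain Python. It is an illustrative re-implementation, not the study’s analysis code; the function names `one_way_anova` and `pearson_r` and the toy data are hypothetical, and p-values are omitted because they require the F and t distributions (available, for example, through SciPy’s `scipy.stats.f_oneway` and `scipy.stats.pearsonr`).

```python
import math
from statistics import mean


def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA over `groups`."""
    all_values = [v for g in groups for v in g]
    k, n = len(groups), len(all_values)
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    # Between-group sum of squares: group size times squared deviation of its mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: deviations from each group's own mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    if ss_within == 0:
        raise ValueError("F is undefined when there is no within-group variance")
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation coefficient of two paired samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be paired samples of length >= 2")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Toy data (hypothetical), not the study's measurements.
f, df1, df2 = one_way_anova([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(f"F({df1}, {df2}) = {f:.1f}")
print(f"r = {pearson_r([1, 2, 3, 4], [8, 6, 4, 2]):.2f}")
```

The \(n-1\) normalization factors cancel in the ratio for \(r\), so the raw sums of products and squares can be used directly.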

5.1 Collaborator Conditions and Performances

Regarding RQ 1, all of the collaborator conditions yielded comparable performance scores: a one-way ANOVA found no statistically significant difference in the total number of moves per round among them (\(F(2, 624) = 1.652\), \(p>.05\)), as presented in Fig. 4, where higher values reflect worse performance. A higher number of moves was, however, expected in the Solo condition than with any of the collaborators, as the participants were not expected to know the optimal solution for the ToH game task. When the Solo condition was included, the difference between the groups was statistically significant, as determined by one-way ANOVA (\(F(3, 824) = 21.215\), \(p < 0.001\)). A Tukey post-hoc test revealed that the number of moves needed to complete the serious game task was significantly lower for the Human Collaborator (\(16.077 \pm 1.73, p< 0.001\)), Direct Robot Collaborator (\(16.382 \pm 2.184, p < 0.001\)) and Non-Direct Robot Collaborator (\(16.442 \pm 2.607, p < 0.001\)) conditions than for playing Solo (\(18.597 \pm 6.158\)).
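The degrees of freedom reported for these ANOVAs can be checked against the design. For a one-way ANOVA with \(k\) groups and \(N\) observations, \(df_{between} = k - 1\) and \(df_{within} = N - k\). Assuming one observation per game (70 participants, three games per condition), a worked check gives:

\[
\begin{aligned}
F(3, 824)&:\quad k = 4,\quad N = 824 + 4 = 828 \quad \text{of } 70 \times 4 \times 3 = 840 \text{ games},\\
F(2, 624)&:\quad k = 3,\quad N = 624 + 3 = 627 \quad \text{of } 70 \times 3 \times 3 = 630 \text{ collaborator games}.
\end{aligned}
\]

The shortfalls of 12 and 3 games are consistent with the z-score outlier removal described in the data processing step, although the number of excluded observations is not reported.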

5.2 Elicited Emotions and Performances

To answer RQ 2, the elicited emotions were analyzed to understand their effect on the performance scores (number of moves) on the game task. Participants reported the serious game presented in this study as arousing, with an average arousal score of 1.65 (\(SD = 3.017\)) on the 10-point scale derived from the GEW, where higher positive values reflect higher arousal. Furthermore, all of the conditions were reported as similarly arousing: a one-way ANOVA found no statistically significant difference in the reported arousal values between the conditions (\(F(3, 834) = 0.194, p > 0.05\)), as shown in Fig. 5.

Fig. 6: The average score for the perceived social categories in the ToH serious game for each collaborator condition. The scores represent replies to the Interactive Experiences and Trust and Respect questionnaires, given on a seven-point Likert scale. The replies were grouped and summed for each social presence cue, normalized and averaged over the whole experiment, and are shown with 95% confidence intervals. Asterisks indicate a significant difference in the Credibility and Presence scores between the Human Collaborator and both robot collaborator conditions, at the \(p < 0.01\) probability level.

To examine how arousal related to performance, a Pearson product-moment correlation was computed between the participants’ number of moves and their reported GEW arousal values. There was a weak, negative correlation between the total number of moves per round and the reported arousal values, which was statistically significant (\(r = -\,0.094, N = 828, p = 0.006\)). Thus, better-performing participants (those needing fewer moves) tended to report slightly higher arousal after each round.
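Why a correlation this weak can still be significant follows from the usual t-test for Pearson’s \(r\) with \(N - 2\) degrees of freedom. Plugging in the reported values (a worked check, not part of the original analysis):

\[
t = \frac{r\sqrt{N-2}}{\sqrt{1-r^{2}}} = \frac{-0.094\sqrt{826}}{\sqrt{1-0.094^{2}}} \approx \frac{-2.702}{0.996} \approx -2.71
\]

With 826 degrees of freedom, \(|t| \approx 2.71\) exceeds the two-tailed critical value of about 1.96 at \(\alpha = 0.05\), so the correlation is significant. At the same time, \(r^{2} \approx 0.009\), meaning that arousal accounts for less than 1% of the variance in the number of moves.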

Participants also reported the serious game as emotionally positive, with an average valence score of 2.0 (\(SD = 2.424\)) on the 10-point scale derived from the GEW, where higher positive values reflect more positive valence. All of the conditions were reported as similarly positive: a one-way ANOVA found no statistically significant difference in the reported valence values between the conditions (\(F(3, 834) = 0.266, p > 0.05\)), as presented in Fig. 5.

Reported valence was not related to performance: no significant correlation was found between reported valence and the number of moves for the Human Collaborator (\( r = -\,0.109, N = 209, p > 0.05\)), Direct Robot Collaborator (\(r = -\,0.079, N = 210, p > 0.05\)) or Non-Direct Robot Collaborator (\(r = -\,0.029, N = 206, p > 0.05\)) conditions.

5.3 Social Presence and Performances

To address RQ 3, concerning the social presence attributed to the robot collaborators, this section reports the effect of the social categories on game task performance.

The serious game task environment presented in this study received high scores in the social categories during the interaction (scores are on a Likert scale from 1 to 7, with higher values reflecting a stronger presence of social cues), as shown in Fig. 6. The two robot collaborator conditions received similarly high scores in the social categories in the replies to both questionnaires. One-way ANOVA showed statistically significant differences between the conditions only in the Credibility (\(F(2, 207) = 10.341, p < 0.001\)) and Presence (\(F(2, 207) = 8.751, p< 0.001\)) scores. A Tukey post-hoc test revealed that the Credibility score was significantly lower for the Direct Robot Collaborator (\(5.378 \pm 0.889, p < 0.001\)) and Non-Direct Robot Collaborator (\(5.636 \pm 0.802, p = 0.027\)) conditions than for the Human Collaborator condition (\(5.985 \pm 0.672\)). The Tukey post-hoc test revealed a similar pattern for the Presence score, which was also significantly lower for the Direct Robot Collaborator (\(4.564 \pm 1.093, p < 0.001\)) and Non-Direct Robot Collaborator (\(4.822 \pm 0.94, p = 0.031\)) conditions than for the Human Collaborator condition (\(5.23 \pm 0.79\)). A difference between the human and robot collaborators in the Credibility and Presence scores was expected, as humans use mechanisms from HHI to perceive robots as autonomous social agents that are more or less as socially present as real human collaborators [6]. There were no statistically significant differences between the conditions in the Engagement, Reliability and Interaction scores (\(p > 0.05\)). This finding supports the claim from [16, 17] that embodied robots are sometimes perceived as being as engaging as humans.
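The social category scores were obtained by grouping and summing questionnaire replies per category, then normalizing and averaging them over the experiment. The sketch below shows one way to do this, assuming that "normalized" means dividing each category sum by its number of items so that scores stay on the 1 to 7 Likert scale. The item-to-category assignment in `CATEGORY_ITEMS`, the function names and the example replies are all hypothetical; the study’s actual grouping (including the Reliability and Credibility items from the Trust and Respect questionnaire) is not reproduced here.

```python
from statistics import mean

# Hypothetical assignment of Interactive Experience item numbers to categories;
# not the grouping used in the study.
CATEGORY_ITEMS = {
    "Engagement": [1, 6, 9],
    "Presence": [3, 5, 12],
    "Interaction": [11, 13, 16],
}


def category_scores(replies: dict[int, int]) -> dict[str, float]:
    """Sum one participant's 1-7 Likert replies per category, then divide by
    the number of items so each category score stays on the 1-7 scale."""
    scores = {}
    for category, items in CATEGORY_ITEMS.items():
        total = sum(replies[i] for i in items)
        scores[category] = total / len(items)
    return scores


def condition_average(all_replies: list[dict[int, int]]) -> dict[str, float]:
    """Average the per-participant category scores within one condition."""
    per_participant = [category_scores(r) for r in all_replies]
    return {c: mean(s[c] for s in per_participant) for c in CATEGORY_ITEMS}


# Two hypothetical participants' replies (item number -> Likert score).
participants = [
    {1: 6, 6: 5, 9: 7, 3: 5, 5: 4, 12: 6, 11: 6, 13: 5, 16: 4},
    {1: 7, 6: 6, 9: 6, 3: 4, 5: 4, 12: 4, 11: 5, 13: 5, 16: 5},
]
print(condition_average(participants))
```

Dividing by the item count rather than reporting raw sums keeps categories with different numbers of items comparable, which matches the 1 to 7 range of the scores reported above.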

The participants who performed worse reported higher social category scores, as shown by a Pearson product-moment correlation between the participants’ number of moves and their questionnaire replies. There was a moderate, positive correlation between the total number of moves per round and the social category scores for Engagement, Credibility, Interaction and Presence, which was statistically significant (\(r = 0.300, N = 210, p < 0.001\)). The Reliability score, in contrast, showed no significant correlation with the total number of moves per trial.

6 Discussion

The aim of this research was to investigate the performance of participants in a proximate interaction with human and robot collaborators on a shared serious game task. Furthermore, this study examined the effects of elicited emotions and perceived social categories on human–robot collaborative performance in a game task. The investigation was based on social cues (i.e., gestured movement and speed) of the collaborating robot. Overall, the collaborators in the ToH serious game elicited arousing, pleasantly valenced emotions and were perceived with high scores in the social categories. The participants performed comparably well on the serious game task regardless of the collaborator condition. Furthermore, the human and robot collaborators elicited emotions that were comparably arousing and comparably positive in valence. The results further showed that higher arousal scores were associated with better performance. The participants also reported significantly higher Credibility and Presence scores for the human collaborator than for either of the robot collaborators.

Considering RQ 1, the findings regarding performance on the serious game task suggested that collaborating with a robot might have been as effective as collaborating with a human. The results did not show a statistically significant difference in the participants’ performance between any of the collaborator conditions. This may have been because participants were highly focused on the game task, since collaborating with a physical entity that elicits diverse behaviors and strong emotional responses might have promoted a higher focus on the task [35, 66, 67]. As expected, performance was worse in the Solo condition, possibly because no help was available from a collaborator, which left participants to rely on their own skills, with more room for non-optimal moves. By contrast, all of the collaborators played optimally on each move.

RQ 2, which explored performance in relation to the emotions elicited during the collaborative serious game task, lent further support to these claims. The results indicated that the human and robot collaborators elicited emotions that were comparably arousing and comparably positive in valence in the context of the ToH serious game. This implied that the investigated social cues and the human collaborator condition had no significant effect on the elicited emotions. These findings are consistent with previous research in which participants collaborated with physical entities eliciting diverse behaviors and strong emotional responses (positive emotions and sufficient arousal), which might have supported a higher focus on the task [35, 66, 67]. Furthermore, higher arousal scores were associated with better performance, while valence had no significant effect, and these findings held even for the Solo condition in this investigation. They agree with previous investigations in which high levels of focus on a task were correlated with positive emotions and sufficient arousal, which might have increased task performance [66, 67]. These results provided further evidence that interacting with a robot collaborator might be as effective as collaborating with a human one in eliciting comparable levels of arousal and valence.

Regarding RQ 3, the investigation of performance in relation to the perceived social categories showed that the human collaborator was rated significantly higher in both the Credibility and Presence categories than both of the robot collaborators. This difference is consistent with previous research [6]: humans use mechanisms from HHI to perceive robots as autonomous social agents, more or less as socially present as real human collaborators, and human collaborators were found to be perceived as more present and credible than robot collaborators. Despite this difference, participants in this study performed similarly with the human and robot collaborators. These findings are comparable with previous studies, where similarly perceived robot collaborators resulted in similar performance regarding social presence [6]. Moreover, these social categories could inform the design of embodiment in robots, as embodiment is a reciprocal and dynamical coupling between brain (control), body and environment [75]. Further investigations of human–human collaboration are therefore needed to map out the social cues responsible for this difference, in an attempt to design more meaningful robot collaborators and serious games. Furthermore, the worse-performing participants reported higher scores on all of the perceived social categories except Reliability, for which no significant correlation was found. A possible explanation is that the participants who performed worse had a longer and more meaningful interaction with the collaborators, which could have resulted in higher reported scores.

6.1 Limitations

This study has several limitations. The collaborative serious game context of this experiment, and the single serious game involved, might limit the generalizability of the results to other collaborative tasks between humans and robots. Future studies would therefore need to include other task and serious game contexts to reach a convincing general conclusion. Furthermore, the human collaborator was in a position to express other social cues that were not investigated in this study through the robot collaborators; future studies should further investigate the social cues of human–human collaboration. The Trust and Respect Questionnaire might not have been exhaustive enough to describe social presence. Therefore, when findings regarding social presence were presented, social presence was regarded as a sense of working together with another to accomplish a goal [22, 52], as previously used in the literature [61]. The sample consisted entirely of college undergraduates and graduates. As social robots will be integrated into various contexts, future research should deepen the understanding of how different populations interact with robot collaborators. Finally, the manipulation of the two intended social cues might have been relatively weak, as these cues had no significant influence on task performance.

Fatigue effects might have been present in this experiment, but they were assumed to be minimal, particularly since performance still increased during the later trials for each participant. The experiment lasted around 90 minutes, with around 30 minutes of time on task, which is comparable to other similar experiments reported in the literature.

7 Conclusion

This research contributes to the current body of knowledge by using a realistic setting in which participants actually interacted with an autonomous robot collaborator to solve a serious game task. This stands in contrast to previous Wizard-of-Oz experiments, in which a hidden experimenter fully or partially operated a robot, thus mimicking autonomous behavior [53,54,55]. Furthermore, questionnaire data were collected continuously throughout a session, directly after each game task had ended (the moment of the actual experience). Such reports might be more accurate than reports collected after the whole session or experiment, which rely on recollection of the experience. Additionally, the reporting was automated, so that no one other than the participant and the human or robot collaborator was present in the experimental environment.

Overall, the collaborators in this serious game elicited arousing, pleasantly valenced emotions and were perceived with high scores in the social categories. The results of this experiment indicated that participants’ performance on the serious game task is comparable between the human and robot collaborator conditions. Moreover, all of the collaborator conditions elicited similar levels of emotional arousal and valence, which might have been beneficial for task performance. These findings motivate the introduction of autonomous robots as collaborators on tasks. Notably, the (non-humanoid) robots and the human collaborator in this study elicited similarly positive emotional valence in the participants. This is important as robots are introduced into different aspects of human life, possibly as team members [6]. On the other hand, the human collaborator was perceived as more credible and socially present, but this had no effect on actual task performance, as there was no significant difference in participants’ performance between the collaborator conditions. The current results support the notion that, by understanding the emotional and social cues underlying such collaboration from the human perspective, it would be possible to design more helpful and intelligent robots that act according to behavioral patterns humans can understand and relate to [31]. This is especially relevant for people who will have longer and more meaningful interactions with robot collaborators, as this study found that the participants who performed worse, and possibly interacted longer with the collaborators, reported higher scores on all of the perceived social categories except Reliability. Such investigations would seek to improve the quality of HRI by designing autonomous robot collaborators and serious games that rely on perceived social behavior and elicited emotions in an attempt to be more natural, intuitive and familiar for their users [5].
If one also considers that robots possess a physical-virtual duality and have access to the game task information, one can see why choosing a robot collaborator for a task could be a sound choice.

Nevertheless, in the context of a serious game collaborative task, the findings from this study support the notion that using robot collaborators might be as efficient as using human ones, particularly since no significant difference in participants’ performance was found between the human and robot collaborator conditions. The future might witness the design of robot collaborators that are perceived as socially close as their human counterparts in terms of social presence and engagement. Future studies should further investigate social cues in HHI and HRI for other serious game contexts, to inform a more general design of robot collaborators that would be as credible and as socially present as human ones. Furthermore, future research should also investigate participants’ emotions and their detection using psychophysiology as an important part of HRI, where embodiment is a powerful concept in the development of adaptive autonomous systems [75]. As proposed in [52], such studies could increase the perceived social categories of robot collaborators and facilitate a more meaningful social interaction with their partners.

Notes

www.adept.com/products/robots/6-axis/viper-s650/general, accessed 06/10/2017 09:09.

http://robotiq.com/products/2-finger-adaptive-robot-gripper, accessed 06/10/2017 09:11.

http://developer.microsoft.com/en-us/windows/kinect, accessed 06/10/2017 09:14.

http://opencv.org, accessed 06/10/2017 09:14.

www.adept.com/products/software/pc/adept-ace/general, accessed 06/10/2017 09:15.

References

Licklider JCR (1960) Man-computer symbiosis. IRE Trans Hum Factors Electron 1:4–11

Moravec HP (1998) Robot: mere machine to transcendent mind. Oxford University Press, Oxford

Dick PK (1968) Do androids dream of electric sheep? Ballantine Books, New York

Norman DA (2003) Emotional design: why we love (or hate) everyday things, 1st edn. Basic Books, New York

Breazeal CL (2002) Designing sociable robots. The MIT Press, Cambridge, MA

Fiore SM, Wiltshire TJ, Lobato EJ, Jentsch FG, Huang WH, Axelrod B (2013) Toward understanding social cues and signals in human–robot interaction: effects of robot gaze and proxemic behavior. Front Psychol 4:1–15

Dautenhahn K (2007) Methodology and themes of human–robot interaction: a growing research field. Int J Adv Robot Syst 4(1):103–108

Kanda T (2007) Field trial approach for communication robots. In: Proceedings of 16th IEEE international symposium on robot and human interactive communication (RO-MAN 2007), pp 665–666

Hinds PJ, Roberts TL, Jones H (2004) Whose job is it anyway? A study of human–robot interaction in a collaborative task. Hum Comput Interact 19(1):151–181

Thrun S, Bennewitz M (1999) MINERVA: a second-generation museum tour-guide robot. In: Proceedings of IEEE international conference on robotics and automation, vol 3. IEEE

Ros R, Baroni I, Demiris Y (2014) Adaptive human–robot interaction in sensorimotor task instruction: from human to robot dance tutors. Robot Auton Syst 62(6):707–720

Cooney M, Nishio S, Ishiguro H (2014) Affectionate interaction with a small humanoid robot capable of recognizing social touch behavior. ACM Trans Interact Intell Syst (TIIS) 4(4):1–32

Goetz J, Kiesler S (2002) Cooperation with a robotic assistant. In: CHI’02 extended abstracts on human factors in computing systems, pp 578–579. ACM

Rehnmark F, Bluethmann W (2005) Robonaut: the ‘short list’ of technology hurdles. Computer 38(1):28–37

Sidner CL, Lee C, Kidd CD, Lesh N, Rich C (2005) Explorations in engagement for humans and robots. Artif Intell 166(1–2):140–164

Burgoon JK, Bonito JA (2000) Interactivity in human–computer interaction: a study of credibility, understanding, and influence. Comput Hum Behav 16(6):553–574

Jung Y, Lee KM (2004) Effects of physical embodiment on social presence of social robots. In: Proceedings of the seventh international workshop on presence, Spain, pp 80–87

Ijsselsteijn W, Harper B (2000) TPRW group. Virtually there? A vision on presence research. Technical report, Eindhoven University of Technology

Scholl BJ, Tremoulet PD (2000) Perceptual causality and animacy. Trends Cognit Sci 4(8):299–309

Butler JT, Agah A (2001) Psychological effects of behavior patterns of a mobile personal robot. Auton Robots 10(2):185–202

Karwowski W, Rahimi M (1991) Worker selection of safe speed and idle condition in simulated monitoring of two industrial robots. Ergonomics 34(5):531–546

Goodrich M, Schultz AC (2007) Human–robot interaction: a survey. Found Trends Hum Comput Interact 1(3):203–275

Pfeifer R, Lungarella M, Iida F (2012) The challenges ahead for ‘soft’ robotics. Commun ACM 56(11):76

Zoghbi S, Parker C, Croft E, Van der Loos HFM (2010) Enhancing collaborative human–robot interaction through physiological-signal based communication. In: Proceedings of workshop on multimodal human robot interfaces at IEEE international conference on robotics and automation (ICRA 2010). IEEE

Burgoon JK, Bonito JA (2002) Testing the interactivity principle: effects of mediation, propinquity, and verbal and nonverbal modalities in interpersonal interaction. J Commun 52(3):657–677

Schaeffer J (1997) One jump ahead: challenging human supremacy in checkers. Springer, New York

Xin M, Sharlin E (2007) Playing games with robots—a method for evaluating human-robot interaction. In: Sarkar N (ed) Human–robot interaction. Itech Education and Publishing, Vienna, p 522

Loewenstein G (2000) Emotions in economic theory and economic behavior. Am Econ Rev 90(2):426–432

Adam MTP, Gamer M (2011) Measuring emotions in electronic markets. In: Proceedings of the international conference on information systems (ICIS), Shanghai, China, pp 1–19

Picard RW, Vyzas E, Healey J (2001) Toward machine emotional intelligence: analysis of affective physiological state. IEEE Trans Pattern Anal Mach Intell 23(10):1175–1191

Xin M, Sharlin E (2006) Exploring human–robot interaction through telepresence board games. In: Advances in artificial reality and tele-existence, Springer, Berlin, pp 249–261

Fong T, Nourbakhsh I, Dautenhahn K (2003) A survey of socially interactive robots. Robot Auton Syst 42(3):143–166

Susi T, Johannesson M (2007) Serious games: an overview. Technical report, School of Humanities and Informatics, University of Skövde

Corti K (2006) Games-based learning; a serious business application. Informe de PixelLearning 34(6):1–20

Hocine N, Gouaich A (2013) Difficulty and scenario adaptation: an approach to customize therapeutic games. In: Serious games for healthcare: applications and implications, 1st edn. IGI Global, Hershey, PA, p 30

Wainer J, Dautenhahn K, Robins B, Amirabdollahian F (2013) A pilot study with a novel setup for collaborative play of the humanoid robot KASPAR with children with autism. Int J Soc Robot 6(1):45–65

Dang T-H-H, Tapus A (2013) Coping with stress using social robots as emotion-oriented tool: potential factors discovered from stress game experiment. In: Herrmann G, Pearson MJ, Lenz A, Bremner P, Spiers A, Leonards U (eds) Social robotics. Springer International Publishing, Cham, pp 160–169

Walters ML, Woods S, Koay KL, Dautenhahn K (2005) Practical and methodological challenges in designing and conducting human–robot interaction studies. In: Proceedings of the artificial intelligence and simulation of behaviour (AISB)

Brooks AG, Gray J, Hoffman G (2004) Robot’s play: interactive games with sociable machines. Comput Entertain 2(3):10–10

Sanghvi J, Castellano G, Leite I, Pereira A, McOwan PW, Paiva A (2011) Automatic analysis of affective postures and body motion to detect engagement with a game companion. In: Proceedings of the 6th international conference on human–robot interaction—HRI ’11, pp 305

Tira-Thompson EJ, Halelamien NS (2004) Tekkotsu: cognitive robotics on the Sony AIBO. In: Abstract for poster at international conference on cognitive modeling, Pittsburgh, PA

Bartneck C, Van Der Hoek M (2007) Daisy, Daisy, give me your answer do!: switching off a robot. In: Proceedings of the ACM/IEEE international conference on human–robot interaction, Arlington, VA. ACM Press, New York, pp 217–222

Bechara A, Damasio AR (2005) The somatic marker hypothesis: a neural theory of economic decision. Games Econ Behav 52(2):336–372

Gross JJ (1998) The emerging field of emotion regulation: an integrative review. Rev Gen Psychol 2(3):271–299

Fenton-O’Creevy M, Soane E, Nicholson N, Willman P (2011) Thinking, feeling and deciding: the influence of emotions on the decision making and performance of traders. J Org Behav 32(8):1044–1061

Gross JJ (2009) Handbook of emotion regulation, 1st edn. The Guilford Press, New York, 654 p

Loewenstein G (1996) Out of control: visceral influences on behavior. Org Behav Hum Decis Process 65(3):272–292

Russell JA (1980) A circumplex model of affect. J Personal Soc Psychol 39(6):1161–1178

Koda T, Maes P (1996) Agents with faces: the effect of personification. In: Fifth IEEE international workshop on robot and human communication. IEEE, pp 189–194

Reeves B, Nass C (1996) The media equation: how people treat computers, television, and new media like real people and places. Cambridge University Press, Cambridge

Reeves B, Wise K, Maldonado H, Kogure K, Shinozawa K, Naya F (2003) Robots versus on-screen agents: effects on social and emotional responses. In: The conference on human factors in computing systems (CHI 2003), Fort Lauderdale. ACM

Pereira A, Prada R, Paiva A (2012) Socially present board game opponents. In: Nijholt A, Romão T, Reidsma D (eds) Advances in computer entertainment. Springer Berlin, Heidelberg, pp 101–116

Koay KL (2005) Methodological issues using a comfort level device in human–robot interactions. In: Proceedings of IEEE international workshop on robot and human interactive communication (ROMAN 2005). IEEE, pp 359–364

Sisbot EA, Alami R (2005) Navigation in the presence of humans. In: Proceedings of 5th international conference on humanoid robots (IEEE-RAS). IEEE, pp 181–188

Syrdal DS, Dautenhahn K (2006) ‘Doing the right thing wrong’: personality and tolerance to uncomfortable robot approaches. In: The 15th IEEE international symposium on robot and human interactive communication (RO-MAN06). IEEE, pp 183–188

Powers A, Kiesler S (2007) Comparing a computer agent with a humanoid robot. In: Proceedings of 2004 ACM/IEEE international conference on human–robot interaction, Washington, D.C, pp 145–152

Yamato J, Brooks R, Shinozawa K, Naya F (2003) Human-robot dynamic social interaction. NTT Tech Rev 1(6):37–43

Walters ML (2005) The influence of subjects’ personality traits on personal spatial zones in a human–robot interaction experiment. In: Proceedings of the IEEE international workshop on robot and human interactive communication (ROMAN), pp 347–352

Goetz J, Kiesler S, Powers A (2003) Matching robot appearance and behavior to tasks to improve human-robot cooperation. In: Proceedings of the 12th IEEE workshop on robot and human interactive communication (ROMAN 2003), San Francisco, CA

Castellano G, Leite I, Pereira A, Martinho C, Paiva A, McOwan PW (2012) Detecting engagement in HRI: an exploration of social and task-based context. In: Proceedings—2012 ASE/IEEE international conference on privacy, security, risk and trust and 2012 ASE/IEEE international conference on social computing, SocialCom/PASSAT 2012, pp 421–428

Lombard M, Ditton TB, Crane D (2000) Measuring presence: a literature-based approach to the development of a standardized paper-and-pencil instrument. In: Presence 2000: the third international workshop on presence, The Netherlands

Sidner CL, Kidd CD, Lee C, Lesh N (2004) Where to look: a study of human-robot engagement. In: Proceedings of the 9th international conference on intelligent user interfaces, Funchal, Madeira, Portugal. ACM, New York, NY, pp 78–84

Heerink M, Kröse B, Evers V, Wielinga B (2008) The influence of social presence on acceptance of a companion robot by older people. J Phys Agents 2(2):33–40

Bengtsson B, Burgoon JK (1999) The impact of anthropomorphic interfaces on influence, understanding, and credibility. In: Proceedings of the 32nd Hawaii international conference on system sciences, pp 1–15

Kidd CD (2003) Sociable robots: the role of presence and task in human–robot interaction. Ph.D. thesis, Massachusetts Institute of Technology

Chen J (2007) Flow in games (and everything else). Commun ACM 50(4):31

Csikszentmihalyi M (2008) Flow: the psychology of optimal experience, 1st edn. Harper Perennial Modern Classics, New York, 336 p

Crown Copyright (2003) An introduction to health and safety. HSE Books, London

Scherer KR (2005) What are emotions? And how can they be measured? Soc Sci Inf 44(4):695–729

Kidd CD, Breazeal C (2004) Effect of a robot on user perceptions. In: Proceedings of 2004 IEEE/RSJ international conference on intelligent robots and systems, Japan. IEEE, pp 3559–3564

Likert R (1932) A technique for the measurement of attitudes. Arch Psychol 22:136–165

Rubin RB, Rubin AM, Graham E, Perse EM, Seibold D (2010) Communication research measures II: a sourcebook. The Guilford Press, New York

Lucas E (1893) Récréations mathématiques. Gauthier-Villars, Paris

Hagelbäck J, Hilborn O, Jerčić P, Johansson S, Lindley C, Svensson J, Wen W (2014) Psychophysiological interaction and empathic cognition for human-robot cooperative work (PsyIntEC). In: Gearing up and accelerating cross-fertilization between academic and industrial robotics research in Europe. Springer, pp 283–299

Pfeifer R, Iida F, Lungarella M (2014) Cognition from the bottom up: on biological inspiration, body morphology, and soft materials. Trends Cognit Sci 18(8):404–413

Acknowledgements

The authors are grateful for the support and suggestions of our many colleagues. Special thanks to Craig Lindley and Stefan Johansson for their guidance and suggestions, and to the other members of the CogNeuro group at the Blekinge Institute of Technology, including Olle Hilborn and Johan Svensson, without whom this project would not have been possible. Finally, we would like to thank the reviewers for their valuable insights and comments.


Additional information

This work was funded by the PsyIntEC EU Project (FP7-ICT-231143) through the European Clearing House for Open Robotics Development (ECHORD).

Appendices

Appendix A: Interactive Experience Questionnaire [65, 70]

Every question was rated on a seven-point Likert scale, using the scale for the collaborator condition (robot or human) being rated.

Robot: Not at all 1 2 3 4 5 6 7 Very much

Human: Not at all 1 2 3 4 5 6 7 Very much

1. How engaging was the interaction?
2. How relaxing or exciting was the experience?
3. To what extent did you experience a sensation of reality?
4. How much attention did you pay to the display devices or equipment rather than to the interaction?
5. How often did you feel that the character was really alive and interacting with you?
6. How completely were your senses engaged?
7. How natural was the interaction with the character?
8. The experience caused real feelings and emotions for me.
9. I was so involved in the interaction that I lost track of time.
10. How often did you want to or did you move your body or part of your body either closer to or farther away from the characters you saw/heard?
11. To what extent did you feel you could interact with the character?
12. How often did you have the sensation that the character could also see/hear you?
13. How much control over the interaction with the character did you feel that you had?
14. How often did you make a sound out loud (e.g., laugh, speak) in response to someone you saw or heard in the interaction?
15. How often did you smile in response to the character?
16. How often did you want to or did you speak to the character?
17. How often did it feel as if the character was talking directly to you?
18. He/she is a lot like me.
19. I would like him/her to be a friend of mine.
20. I would like to talk with him/her.
21. If he/she were feeling bad, I’d try to cheer him/her up.
22. I looked at him/her often.
23. He/she seemed to look at me often.
24. He/she makes me feel comfortable, as if I am with a friend.
25. I like hearing his/her voice.
26. If there were a story about him/her in a newspaper or magazine, I would read it.
27. I like him/her.
28. I’d like to see/hear him/her again.

Appendix B: Trust and Respect Questionnaire [61, 72]

Engagement (Calculated Cronbach’s alpha: 0.85)

Robot: Not at all 1 2 3 4 5 6 7 Very much

Human: Not at all 1 2 3 4 5 6 7 Very much

- How engaging was the interaction?
- How relaxing or exciting was the experience?
- How completely were your senses engaged?
- The experience caused real feelings and emotions for me.
- I was so involved in the interaction that I lost track of time.

Reliability (Calculated Cronbach’s alpha: 0.85)

All items were rated on a seven-point Likert scale ranging from “strongly disagree” (1) to “strongly agree” (7).

- I could depend on this robot to work correctly every time.
- The robot seems reliable.
- I could trust this robot to work whenever I might need it.
- If I did the same task with the robot again, it would be equally as helpful.
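The Engagement and Reliability subscales above each report a calculated Cronbach’s alpha, the usual internal-consistency coefficient for a set of k items: alpha = k/(k − 1) · (1 − Σ var(item_i) / var(total)), where var(total) is the variance of each respondent’s summed score. The Python sketch below computes it from a respondents-by-items matrix of Likert scores. The function name `cronbach_alpha` and the matrix layout are our own assumptions for illustration, not the authors’ analysis code.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix.

    responses[r][i] is respondent r's score on item i (e.g. 1-7 Likert).
    Requires at least two respondents and at least two items.
    """
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    if any(len(row) != n_items for row in responses):
        raise ValueError("every respondent must answer every item")

    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(n_items)]
    # Sample variance of the respondents' summed (scale) scores.
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("summed scores have zero variance; alpha is undefined")

    return n_items / (n_items - 1) * (1 - sum(item_vars) / total_var)
```

For the Engagement subscale, `responses` would hold one row of five scores per participant; the 0.85 reported above comes from the study’s own data, which is not reproduced here. Because the same variance estimator is used for the items and for the total, the choice between sample and population variance cancels out of the ratio.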

Credibility (Reported Cronbach’s alpha: 0.90)

Rate the robot on each of the following pairs (Likert scale 1–7):

- Kind to Cruel
- Safe to Dangerous
- Friendly to Unfriendly
- Just to Unjust
- Honest to Dishonest
- Trained to Untrained
- Experienced to Inexperienced
- Qualified to Unqualified
- Skilled to Unskilled
- Informed to Uninformed
- Aggressive to Meek
- Emphatic to Hesitant
- Bold to Timid
- Active to Passive
- Energetic to Tired

Presence

Please give your impression of the robot: Describes poorly 1 2 3 4 5 6 7 Describes well

- Annoying
- Balanced
- Compelling
- Convincing
- Credible
- Enjoyable
- Entertaining
- Fair
- Favorable
- Good
- Helpful
- Honest
- Homogeneous
- Informative
- Likable
- Negative
- Persuasive
- Reliable
- Satisfying
- Trustworthy
- Useful
- Varied
- Well-composed
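The Presence adjectives mix favorable and unfavorable descriptors, so averaging them into a single score first requires reverse-scoring items such as “Annoying” and “Negative”. This appendix does not state how the authors scored the scale; the Python sketch below assumes simple reverse-coding on the 1–7 range (a score x becomes 8 − x) followed by an unweighted mean. The set `REVERSED` and the functions `reverse` and `composite` are hypothetical names chosen for illustration.

```python
# Bounds of the seven-point "Describes poorly (1) ... Describes well (7)" format.
SCALE_MIN, SCALE_MAX = 1, 7

# ASSUMPTION: our reading of which Presence items are negatively worded,
# not a scoring key taken from the paper.
REVERSED = frozenset({"Annoying", "Negative"})


def reverse(score: int) -> int:
    """Mirror a score on the 1-7 scale: 1 <-> 7, 2 <-> 6, 4 stays 4."""
    return SCALE_MIN + SCALE_MAX - score


def composite(ratings: dict[str, int], reversed_items: frozenset[str] = REVERSED) -> float:
    """Unweighted mean of item scores after reverse-coding the listed items."""
    if not ratings:
        raise ValueError("no ratings given")
    for item, score in ratings.items():
        if not SCALE_MIN <= score <= SCALE_MAX:
            raise ValueError(f"{item!r} score {score} outside {SCALE_MIN}-{SCALE_MAX}")
    adjusted = [reverse(s) if item in reversed_items else s for item, s in ratings.items()]
    return sum(adjusted) / len(adjusted)
```

For example, ratings of 2 for “Annoying”, 6 for “Helpful” and 5 for “Likable” give (6 + 6 + 5) / 3, roughly 5.67. The same helper could be reused for the Credibility pairs, which list the favorable pole first, if a higher score is meant to indicate higher credibility; whether the authors scored them that way is not stated here.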

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Jerčić, P., Wen, W., Hagelbäck, J. et al. The Effect of Emotions and Social Behavior on Performance in a Collaborative Serious Game Between Humans and Autonomous Robots. Int J of Soc Robotics 10, 115–129 (2018). https://doi.org/10.1007/s12369-017-0437-4


DOI: https://doi.org/10.1007/s12369-017-0437-4