Abstract

Glycated hemoglobin A1c (HbA1c) is routinely used as a marker of average glycemic control, but it fails to provide data on hypoglycemia and glycemic variability, both of which are associated with adverse clinical outcomes. Self-monitoring of blood glucose (SMBG), particularly in insulin-treated patients, is a cornerstone in the management of patients with diabetes. SMBG helps with treatment decisions that aim to reduce high glucose levels while avoiding hypoglycemia and limiting glucose variability. However, repeated SMBG can be inconvenient to patients and difficult to maintain in the long term. By contrast, continuous glucose monitoring (CGM) provides a convenient, comprehensive assessment of blood glucose levels, allowing the identification of high and low glucose levels, in addition to evaluating glycemic variability. CGM using newer detection and visualization systems can overcome many of the limitations of an HbA1c-based approach while addressing the inconvenience and fragmented glucose data associated with SMBG. When used together with HbA1c monitoring, CGM provides complementary information on glucose levels, thus facilitating the optimization of diabetes therapy while reducing the fear and risk of hypoglycemia. Here we review the capabilities and benefits of CGM, including cost-effectiveness data, and discuss the potential limitations of this glucose-monitoring strategy for the management of patients with diabetes.

Funding

Sanofi US, Inc.


Introduction

Glycated Hemoglobin A1c as a Marker of Glycemic Control

Identified in the mid-1960s, and subsequently recognized for its role in metabolic control, glycated hemoglobin A1c (HbA1c) is the foremost indicator of blood glucose control [1]. Its value in type 1 diabetes (T1D) and type 2 diabetes (T2D) was established in landmark studies, which showed that reducing HbA1c to close-to-normal levels decreases the risk of diabetes-related conditions (including short- and long-term microvascular complications such as retinopathy and neuropathy and long-term macrovascular diseases such as coronary artery disease and stroke) [2,3,4,5,6,7], hence the recommendations for its use as a measure of glycemic control [8, 9]. Standardized assays provide an easy, reliable, and relatively inexpensive means of obtaining HbA1c measurements [10], which are familiar to both healthcare providers and patients [1].

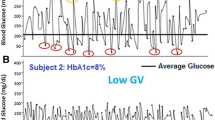

HbA1c measurement does, however, have limitations (Table 1) [11,12,13]. HbA1c values indicate the average glucose concentration over a period of 8–12 weeks. An HbA1c of 7%, for example, reflects an average glucose concentration of approximately 154 mg/dl (8.6 mmol/l), but may represent a range of 123–185 mg/dl (6.8–10.3 mmol/l), and this range broadens as HbA1c values increase [14]. However, a broader range of 50–258 mg/dl (2.8–14.3 mmol/l) would also reflect an average of 154 mg/dl, but would indicate a very different glucose response during the measurement period. Moreover, HbA1c values give no indication of intra- or inter-day fluctuations in blood glucose [15] or of episodes of hyperglycemia and hypoglycemia; patients with similar values can in fact have very different patterns of glycemic variability (Fig. 1) [15, 16]. HbA1c can also be affected by factors unrelated to glycemia (e.g., conditions affecting erythrocyte turnover, iron deficiency, genetics, and race) [17].
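The correspondence between an HbA1c of 7% and an average glucose of about 154 mg/dl can be reproduced with the linear regression reported by the A1c-Derived Average Glucose study [14]. The following is a minimal sketch for illustration only; the function names and the conversion factor of 18.016 mg/dl per mmol/l are assumptions introduced here and do not come from this review.

```python
# Illustrative sketch: estimated average glucose (eAG) from HbA1c.
# Regression from the A1c-Derived Average Glucose study [14]:
#   eAG (mg/dl) = 28.7 * HbA1c (%) - 46.7
# Function names and the conversion constant are assumptions for this example.

MG_DL_PER_MMOL_L = 18.016  # approximate factor for glucose (assumed here)


def estimated_average_glucose_mg_dl(hba1c_percent: float) -> float:
    """Return the estimated average glucose in mg/dl for an HbA1c in %."""
    return 28.7 * hba1c_percent - 46.7


def mg_dl_to_mmol_l(glucose_mg_dl: float) -> float:
    """Convert a glucose concentration from mg/dl to mmol/l."""
    return glucose_mg_dl / MG_DL_PER_MMOL_L


eag = estimated_average_glucose_mg_dl(7.0)
print(round(eag), round(mg_dl_to_mmol_l(eag), 1))  # prints: 154 8.6
```

The estimate is a population average; as noted above, it says nothing about how widely an individual patient's readings are spread around that value.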

Fig. 1 Differences in glycemic variability over 15 days for two patients with similar HbA1c levels. BG blood glucose, GV glycemic variability, HbA1c glycated hemoglobin A1c. Reproduced from Kovatchev and Cobelli [16] © 2016 by the American Diabetes Association

This article is based on previously conducted studies and does not contain any work performed by any of the authors with human participants or animals.

Self-Monitoring of Blood Glucose and Current Limitations

When the first blood glucose monitors for self-testing were developed in the early 1970s, concerns over their practicality, accuracy, and precision limited their use by patients [18], but monitors are now compact and convenient, providing results in a few seconds from only 0.3–1 μl of blood [15, 18]. Self-monitoring of blood glucose (SMBG) is fast, relatively inexpensive, and generally accurate [18], although low-cost meters and strips are usually less accurate and have higher lot-to-lot variability [19].

SMBG facilitates self-management and the involvement of patients in care. SMBG results can guide patients on nutrition and exercise, hypoglycemia prevention, and adjustment of medication to individual circumstances [8]. More frequent SMBG has been linked to lower HbA1c levels in patients with T1D [20] and in insulin-treated patients with T2D [21, 22], but is believed to be of limited value in those patients with T2D who are not using insulin [23]. Although SMBG frequency should be dictated by individual needs and goals, the American Diabetes Association recommends SMBG for most patients on intensive insulin regimens [i.e., those using multiple doses or continuous subcutaneous insulin infusion (CSII), known as the insulin pump] and further recommends its use to guide treatment decisions for patients on less-intensive regimens or noninsulin therapy [8].

The limitations of SMBG (Table 1) [11,12,13] largely relate to its perceived intrusiveness: it requires fingersticks several times daily [8], which can be time consuming, inconvenient, and painful, consequently leading to poor compliance [24] and impaired quality of life. SMBG data can be misreported, often because manually entered data are accidentally or deliberately incorrect (e.g., to show favorable results or to hide hyperglycemia or hypoglycemia) [25,26,27,28]. Misreporting in clinical studies is often due to data entries that cannot be correlated with a corresponding meter reading [28], and many physicians are familiar with logbooks that are filled out ‘retrospectively’ in the waiting room. Patients using SMBG need instruction and regular evaluation of their technique and use of their data to adjust therapy [8], which is a time-consuming process for healthcare providers. Ultimately, SMBG can provide only a ‘snapshot’ of a patient’s glycemic status at the time of sampling that, as for HbA1c, may not identify glucose excursions [11, 12].

Hypoglycemia

Attainment of near-normal HbA1c levels can be challenging for patients, largely because tightening glycemic control increases the risk of hypoglycemia [8, 9, 29]. In a recent observational study, 97.4% of patients with T1D, and 78.3% of patients with T2D, had experienced hypoglycemia; this experience, and fear of future hypoglycemia episodes, may lead patients to eat defensively, restrict exercise, miss work or school, or skip insulin doses [30]. Hypoglycemia, however, is not restricted to insulin use. Sulfonylureas are also associated with increased risk of hypoglycemia, particularly in older patients and those with significant renal insufficiency, which may raise questions regarding their use in these populations [31, 32]. Due to concerns regarding occurrence of hypoglycemia with sulfonylurea therapy, glucose testing is recommended, an additional burden that can limit the use of these agents.

Hypoglycemia negatively affects many aspects of a patient’s quality of life. It is associated with inadequate glycemic control, injuries due to falls or accidents (including traffic accidents) [8], and other serious complications. Long-term risks include diminished cognition (a particular concern for elderly patients) [8] and increased cardiovascular morbidity [33, 34]. Recurrent hypoglycemia may also negatively affect cognitive performance in children with T1D and in adults with long-standing diabetes [35, 36], whereas severe hypoglycemia can lead to seizure, coma, or death [8, 37,38,39,40] and has been linked to increased mortality in both clinical trials [41, 42] and in clinical practice [39].

Fear of hypoglycemia is a major barrier to glycemic control as it results in reluctance to adhere to or intensify therapy and in avoidance strategies relating to food and exercise that can adversely affect glycemic management [43]. However, recognition of hypoglycemia, especially if asymptomatic (‘silent’) or nocturnal, can be problematic [44], particularly for patients who only sporadically test their glucose levels using SMBG.

Glycemic Variability

Glycemic variability, characterized by the amplitude, frequency, and duration of fluctuations in blood glucose, can be expressed in terms of standard deviation, mean amplitude of glucose excursions, and coefficient of variation (CV) (Table 2) [45,46,47]. The CV is a measure of short-term within-day variability [45]; generally, a value < 36% defines stability, whereas a value ≥ 36% reflects instability with significantly increased risk of hypoglycemia [47].
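As an illustration of the CV and the 36% threshold, the sketch below computes the CV (standard deviation divided by mean, expressed as a percentage) for two sets of readings that share a mean of 154 mg/dl. The readings are hypothetical values chosen for this example, the function names are assumptions, and the use of the sample standard deviation is one possible choice rather than a prescribed method.

```python
# Illustrative sketch: coefficient of variation (CV) of glucose readings.
# All readings below are hypothetical; function names are assumptions.
from statistics import mean, stdev

CV_STABILITY_THRESHOLD = 36.0  # percent; < 36% is taken as stable [47]


def coefficient_of_variation(readings_mg_dl):
    """Return the CV in percent: 100 * SD / mean (sample SD assumed)."""
    return 100.0 * stdev(readings_mg_dl) / mean(readings_mg_dl)


def is_stable(readings_mg_dl):
    """True when the CV is below the 36% threshold."""
    return coefficient_of_variation(readings_mg_dl) < CV_STABILITY_THRESHOLD


# Two hypothetical patients with the same mean glucose (154 mg/dl)
steady = [140, 150, 154, 158, 168]
labile = [50, 110, 154, 198, 258]

for name, readings in (("steady", steady), ("labile", labile)):
    cv = coefficient_of_variation(readings)
    print(f"{name}: mean={mean(readings)} mg/dl, CV={cv:.1f}%, stable={is_stable(readings)}")
```

Both series would correspond to a similar average glucose, yet only the first falls below the stability threshold, which is the situation that HbA1c alone cannot distinguish.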

In the short and medium term, wide glycemic variability is associated with adverse clinical outcomes (e.g., microvascular and macrovascular complications, increased mortality, and longer hospital stays) [48,49,50]. The extent of this variability has been associated with two different components of dysglycemia, namely chronic sustained hyperglycemia and acute dysglycemic fluctuations (peaks and nadirs). In the case of chronic sustained hyperglycemia, there is excessive and accelerated protein glycation, whereas in the case of acute dysglycemic fluctuations there is increased oxidative stress [51].

Minimizing glycemic variability thus appears to be a sensible treatment goal alongside that of reducing glycemic burden as measured by HbA1c, but only recently has accurate and reliable measurement of glycemic variability become possible [15, 52]. Real-time measurement of glucose levels 24 h per day is possible using continuous glucose monitoring (CGM). While CGM was initially expected to revolutionize intensive insulin therapy, progress has been gradual, largely because of issues of cost and reliability, and difficulties in use, as well as lack of a standardized format for data display and uncertainty about the best use of the copious data [53].

Technologic developments have made CGM devices easier to use, more reliable, and more cost effective; for example, some systems warn patients when blood glucose falls (or may fall) below or rises above set levels [53]. Most manufacturers recommend frequent recalibration using capillary blood glucose meters and reagent strips to maintain sensor accuracy [54]; failure to do so may result in inappropriate and risky treatment decisions.

The identification of the most clinically useful CGM metrics and the increased use of standardized, simplified data presentation allow the newer systems to provide clear and visual information upon which physicians and patients can base management decisions [53].

Current Status of CGM

CGM can be considered an advance on SMBG (Table 1) [11,12,13]. It provides a comprehensive picture of glycemic variability and allows glucose fluctuations to be linked to events such as meals, exercise, sleep, and medication intake—information that can help guide diabetes management [47].

CGM uses a fixed sensor with a subcutaneous, glucose-oxidase platinum electrode that measures glucose concentrations in the interstitial fluids [13]. However, a time lag between the measurement and display of the result due to a physiologic delay (while glucose diffuses from the vascular space to the interstitial fluid) [55, 56] can adversely affect accuracy and hamper the detection of hypoglycemia, particularly during rapid changes. The delays are smaller in adolescents than in adults, increase with age, and differ between devices for the same subject [56].

CGM devices either continuously track the glucose concentration and provide near real-time data or retrospectively show continuous measurements intermittently (i.e., when the user looks at the device). Intermittent devices include ‘flash’ systems, from which stored data can be uploaded at any time [47]. Their overall high cost can be offset by patients having intermittent blinded glucose sensors with a single reader kept by the attending healthcare professional (termed ‘professional’ CGM systems). Data are not seen in real time but are downloaded after a set period [57]; 14 days is the recommended period of time as this is the estimated minimum time of monitoring needed to obtain an accurate assessment of long-term glucose control [58].

The advantage of real-time over intermittent CGM is that it can warn users of impending hypoglycemia or hyperglycemia. Data may be masked/blinded (unavailable to the patient and retrospectively viewed by the physician) or unmasked/unblinded (available to the patient and remotely to physicians and caregivers, either in real time or retrospectively) [47, 59]. Although blinding may be preferred to avoid influencing patient behavior and to help understand patients’ usual habits [57], an unblinded system may facilitate improvements in glycemic variability and help patients avoid hypoglycemia and hyperglycemia [60], and immediate feedback can help patients learn to manage the effects of food, exercise, and medication [57]. Therefore, some healthcare providers argue that real-time CGM should replace blinded methodologies, noting that blinding can result in increased risk and potential for harm in cases of unrecognized severe hypoglycemic episodes [12]; in addition, a retrospective evaluation of patients with T1D and T2D using blinded CGM for 3 days did not show significant differences in pre- and post-study HbA1c levels [61].

Regardless of its type, CGM provides a measure of the time for which blood glucose is within the target range (‘time in range’) [70–180 mg/dl (3.9–10 mmol/l)] and of the duration and severity of hypoglycemia; it can alert to low glucose at level 1 [54 to < 70 mg/dl (3.0 to < 3.9 mmol/l) with or without symptoms], level 2 [< 54 mg/dl (3.0 mmol/l) with or without symptoms], and level 3 (severe hypoglycemia with cognitive impairment, when external assistance is needed for recovery) [47].
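The thresholds above can be turned into summary percentages in a few lines. The sketch below is a simplified illustration that assumes evenly spaced readings, so that the share of readings in a category approximates the share of time; the category labels, function names, and sample readings are hypothetical. Level 3 hypoglycemia is defined clinically rather than by a glucose value, so it is not classified here.

```python
# Illustrative sketch: time in range and time below range from CGM readings.
# Assumes evenly spaced readings; labels, names, and data are hypothetical.

CATEGORIES = ("level 2 low", "level 1 low", "in range", "above range")


def classify(glucose_mg_dl):
    """Assign one reading to a category using the thresholds cited in [47]."""
    if glucose_mg_dl < 54:
        return "level 2 low"   # < 54 mg/dl
    if glucose_mg_dl < 70:
        return "level 1 low"   # 54 to < 70 mg/dl
    if glucose_mg_dl <= 180:
        return "in range"      # 70-180 mg/dl
    return "above range"       # > 180 mg/dl


def percent_time_by_category(readings_mg_dl):
    """Return the percentage of readings that fall in each category."""
    if not readings_mg_dl:
        raise ValueError("at least one reading is required")
    counts = {category: 0 for category in CATEGORIES}
    for reading in readings_mg_dl:
        counts[classify(reading)] += 1
    total = len(readings_mg_dl)
    return {category: 100.0 * n / total for category, n in counts.items()}


sample = [50, 65, 100, 150, 180, 200, 250, 120, 90, 75]
print(percent_time_by_category(sample))
```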

Overall, the new CGM devices are simpler, less expensive to use, and more accurate than older devices, and they require fewer or no daily calibrations against SMBG data [62]. Sensor accuracy is assessed through metrics such as the precision absolute relative deviation (PARD), continuous glucose error–grid analysis (CG-EGA), and mean absolute relative difference (MARD) (Table 2) [45,46,47]; a potentially overwhelming range of blood-glucose metrics can be provided by these systems [63]. A study comparing the accuracy of CGM and that of a capillary blood glucose meter built into the reader in insulin-treated patients with T1D or T2D revealed a MARD of 11.4% for CGM with stability of readings over 14 days of use [64]. A discrepancy of 10% between CGM and reference values has thus been proposed as sufficient to permit effective use of CGM without SMBG [47, 63], but this value is not based on outcome data. However, a study that used data on CGM, CSII, SMBG, and meals from patients with T1D, together with computer simulations, to determine the level of accuracy needed to forgo SMBG readings estimated an in silico MARD of 10% [65].
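For readers unfamiliar with MARD, the metric is the mean of the absolute differences between paired sensor and reference values, each expressed as a percentage of the reference value. The sketch below shows the calculation under that definition; the function name and the paired values are hypothetical and are not data from any of the cited studies.

```python
# Illustrative sketch: mean absolute relative difference (MARD).
# Paired values are hypothetical; the function name is an assumption.


def mard_percent(cgm_mg_dl, reference_mg_dl):
    """Return MARD in percent for paired CGM and reference readings."""
    if len(cgm_mg_dl) != len(reference_mg_dl) or not cgm_mg_dl:
        raise ValueError("inputs must be non-empty and of equal length")
    relative_differences = [
        abs(cgm - ref) / ref for cgm, ref in zip(cgm_mg_dl, reference_mg_dl)
    ]
    return 100.0 * sum(relative_differences) / len(relative_differences)


cgm = [90, 110, 200]
reference = [100, 100, 200]
print(f"MARD = {mard_percent(cgm, reference):.1f}%")  # prints: MARD = 6.7%
```

Because every pair is weighted equally, MARD summarizes overall accuracy but does not show whether errors cluster in the hypoglycemic range, which is one reason it is reported alongside error-grid analyses.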

The use of standalone CGM systems is supported by data from an open-label, randomized trial conducted in 226 adults with T1D and point-of-care HbA1c ≤ 9.0%. Compared with CGM plus SMBG, use of CGM alone had no negative effect on time in range, and time in hypoglycemia was not significantly different between groups. One severe hypoglycemic event occurred in the CGM plus SMBG group, but there were no reported hypoglycemic events in the CGM alone group [66].

Standardized, easily understood data display formats are increasingly being used, such as the Ambulatory Glucose Profile (AGP) system [17], which provides summary statistics, graphs showing 24-h glucose and daily glucose (pooled over multiple days), and insulin doses (Fig. 2) [17, 67]. The AGP system can help primary care physicians and patients decide how best to increase the glucose time in range without increasing the risk of hypoglycemia [57], since observed glucose excursions, for example, can be related to events such as the timing and content of meals, type of exercise, medications (e.g., prandial insulin), or periods of stress or illness [68].

Fig. 2 Ambulatory glucose profile for use in CGM devices. IQR interquartile range, CGM continuous glucose monitoring
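The pooled daily profile at the heart of an AGP-style report can be approximated by grouping readings from several days by time of day and summarizing each group with its median and interquartile range. The sketch below illustrates that idea only; it is not the AGP software, the hourly grouping is a simplification, and the function name and readings are hypothetical. It relies on `statistics.quantiles` (Python 3.8 or later) and needs at least two readings per hour.

```python
# Illustrative sketch: median and IQR by hour of day, pooled over several days.
# Not the AGP software; names and data are hypothetical.
from collections import defaultdict
from statistics import quantiles


def hourly_profile(samples):
    """Map hour of day -> (25th percentile, median, 75th percentile).

    `samples` is an iterable of (hour_of_day, glucose_mg_dl) pairs that
    may come from many different days.
    """
    by_hour = defaultdict(list)
    for hour, glucose in samples:
        by_hour[hour].append(glucose)
    profile = {}
    for hour in sorted(by_hour):
        q1, q2, q3 = quantiles(by_hour[hour], n=4, method="inclusive")
        profile[hour] = (q1, q2, q3)
    return profile


# Hypothetical readings at 08:00 and 13:00 collected on five different days
pooled = [(8, g) for g in (100, 120, 140, 160, 180)]
pooled += [(13, g) for g in (150, 155, 160, 165, 170)]

for hour, (q1, med, q3) in hourly_profile(pooled).items():
    print(f"{hour:02d}:00  median={med}  IQR={q1}-{q3}")
```

A wide interquartile band at a particular time of day points to inconsistent glucose levels at that time, which can then be related to meals, exercise, or medication as described above.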

Several CGM devices are commercially available (Table 3) [69, 70]. Unblinded, real-time CGM systems with hyperglycemia or hypoglycemia alerts may be particularly useful for patients with hypoglycemia unawareness [71], whereas personal or professional ‘flash’ CGM systems may be attractive to other groups given their longer sensor life, ease of use, relatively low cost, and no need for calibration [11].

Possible Benefits of CGM

Several studies have comprehensively demonstrated the benefits of CGM in patients with T1D, who show consistently improved glycemic control with fewer hypoglycemic events [72,73,74,75,76,77,78,79]. In a trial conducted in 322 adults and children with well-controlled T1D (HbA1c = 7.0–10%) who were predominantly receiving CSII, CGM use resulted in a significant improvement in all glycemic measures, including HbA1c reduction, at 26 weeks. This reduction was significantly greater than that achieved with SMBG in patients aged > 25 years (mean difference in change: − 0.53%; 95% confidence interval: − 0.71%, − 0.35%; P < 0.001) [72]. A subsequent analysis of the same group of patients showed that CGM significantly reduced HbA1c and time spent out of range (377 vs. 491 min/day; P = 0.003) and was associated with a numerical reduction in time spent in hypoglycemia compared with the control group (median 54 vs. 91 min/day; not statistically significant) [73]. Data from the DIAMOND study showed that use of CGM (vs. usual care, which was SMBG ≥ 4 times daily) for 24 weeks resulted in a greater decrease in HbA1c (mean reduction from baseline: 1.0% vs. 0.4%; P < 0.001) and a shorter duration of hypoglycemia (median duration of blood glucose < 70 mg/dl: 43 min/day vs. 80 min/day; P = 0.002) [76].

Other studies have assessed the effectiveness of CGM in helping individuals with impaired hypoglycemia awareness or history of severe hypoglycemia [80,81,82]. In a 24-week study, improvements in hypoglycemia awareness and reductions in the number of severe hypoglycemic events were similar whether subjects used SMBG or CGM or whether they were treated with multiple daily injections (MDIs) of insulin or CSII [80]. Furthermore, in a 16-week study of patients with T1D and impaired awareness of hypoglycemia who received CSII or MDIs of insulin, CGM resulted in fewer severe hypoglycemic events (P = 0.003), more time in normoglycemia (P < 0.0001), and less time in hypoglycemia (P < 0.0001) vs. SMBG [81]. A recent trial has shown that, in high-risk subjects with T1D treated with MDIs of insulin, the use of CGM reduced the incidence of hypoglycemic events by 72% (P < 0.0001) compared with SMBG [82].

CGM may also help elucidate patients’ responses to different insulin formulations and other hypoglycemic agents. A study of patients with T1D treated with two concentrations of insulin glargine used CGM to evaluate several aspects of glycemic control. Patients were randomly assigned to inject insulin glargine 100 or 300 U/ml in the morning or the evening for 8 weeks and then in the evening (if previously morning) or morning (if previously evening) at the same dose for 8 more weeks. CGM revealed a 24-h glucose profile that was more consistent, with fewer glucose fluctuations, with insulin glargine 300 U/ml vs. the 100 U/ml preparation, regardless of time of injection. Patients using insulin glargine 300 U/ml also had fewer episodes of confirmed severe nocturnal hypoglycemia [83]. In addition, the use of CGM in the investigational evaluation of a sodium-glucose co-transporter 2 inhibitor as adjunctive treatment in patients with T1D demonstrated the clinical value of the agent beyond HbA1c control through improvement of time in range [84].

Although conflicting data exist, clinical trials have shown that use of CGM not only reduces HbA1c and hypoglycemia, but it may also attenuate the fear of hypoglycemia and diabetes-related stress and improve quality of life [79, 85,86,87,88]. A real-world study of adults with T1D using CSII who started CGM showed decreased hospitalizations due to hypoglycemia and/or ketoacidosis and reduction in hospital stays and work absenteeism after 1 year [89].

Data in T2D are more limited but nevertheless provide evidence of greater benefits of CGM over SMBG in glycemic control for patients receiving MDIs of insulin [77, 90] or other regimens [91, 92]. In a 52-week randomized trial in patients with T2D treated with various regimens (except prandial insulin), the mean decline in HbA1c at 12 weeks was 1.0% (± 1.1%) with CGM for four 2-week cycles (2 weeks on, 1 week off) and 0.5% (± 0.8%) with SMBG (P = 0.006) [91]. In the DIAMOND study, patients with T2D who received MDIs of insulin showed a mean HbA1c reduction of 1.0% with CGM vs. 0.6% with usual care (adjusted difference: − 0.3%; P = 0.005); there were no meaningful differences in time spent in hypoglycemia or changes from baseline in insulin dose [76]. Older adults (aged ≥ 60 years) with T1D or T2D in the DIAMOND study also had significantly greater reductions from baseline in HbA1c with CGM vs. SMBG (− 0.9 ± 0.7% vs. − 0.5 ± 0.7%, adjusted difference in mean change: − 0.4 ± 0.1%; P < 0.001) [93].

Recent data show the benefits of ‘flash’ CGM. Its use in two randomized clinical trials reduced the time spent in hypoglycemia by 38% in patients with well-controlled T1D [94] and by 43% in insulin-treated adults (56% for those aged ≥ 65 years) with T2D [95]. Both reductions were significantly greater than those seen with SMBG. A real-world study of patients with T1D or T2D showed greater reductions in HbA1c levels with ‘flash’ CGM vs. SMBG, with a more marked difference between groups in T1D [96]. A recent large-scale, real-world study of more than 50,000 users has further shown that the number of glucose checks using ‘flash’ glucose monitoring is inversely associated with time spent in hypoglycemia or hyperglycemia and positively correlated with time spent in euglycemia [97]. However, the I HART CGM study showed that switching from ‘flash’ to real-time CGM has a greater favorable impact on hypoglycemia for adults with T1D at high risk of hypoglycemia (reduction in percentage time in hypoglycemia from 5% to 0.8%) [98], suggesting that ‘flash’ CGM is not the appropriate choice for this specific patient population. In addition, a direct comparison of glucose concentrations measured by ‘flash’ vs. CGM systems showed overall lower values for the ‘flash’ system compared with SMBG, with discrepancies between systems seen during hypoglycemia [99]. This further highlights the need for frequent collection of glucose data to optimize glycemic control and minimize the risk of hypoglycemia.

There is also evidence on the clinical effectiveness of integrated CGM and insulin pump systems (sensor-augmented pump therapy) for the management of T1D. These systems warn of abnormal blood glucose levels so that the user can adjust the insulin infusion rate. More recent devices can automatically stop insulin delivery for up to 2 h (and then the insulin infusion basal rate is restored) if they predict a hypoglycemic episode. A systematic review showed that attainment of HbA1c < 7% and improved quality of life at 6 months of follow-up were reported in a higher proportion of patients using integrated CSII plus CGM systems than in those using SMBG or CSII plus SMBG and MDI [100].

It is important to note that the benefits of CGM were shown in clinical trials with treatment adherence rates higher than 85% [73, 76, 101], but the compliance rate in patients with T1D in the real world is much lower (for example, only 8–17% for patients treated in specialty clinics) [102, 103]. In the US, CGM is least used by adolescents and young adults (< 10%) and most used in adults aged 26–49 years (23%) [104]. Barriers to routine CGM use include limited accuracy, inadequate reimbursement/cost, educational needs, patient annoyance due to frequent alarms, insertion pain, body image issues, and interference with daily life [103].

Guidelines on the Use of CGM

CGM recommendations by professional bodies vary and are more consistent for T1D than for T2D (Table 4) [8, 47, 67, 105]; they are, in general, conservative. The broadest and most recent guidelines are those from an international panel of physicians, researchers, and experts in CGM technology, who recommend CGM alongside HbA1c monitoring to assess glycemic status and inform adjustments to therapy in all patients with T1D and in patients with T2D receiving intensive insulin therapy but not reaching targets, especially if hypoglycemia is problematic [47].

Glycemic control should be assessed on the basis of key metrics provided by CGM, including glycemic variability, time in range, time above range (i.e., hyperglycemia), and time below range (i.e., hypoglycemia). These metrics should be obtained from 70 to 80% of all possible readings made during ≥ 14 days of CGM [47].
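Whether a CGM download meets this data-sufficiency recommendation can be checked with simple arithmetic. The sketch below assumes a sensor that records one value every 5 minutes and applies the lower bound of the recommended capture (70%); both the sampling interval and the function name are assumptions made for this example, and actual intervals differ between devices.

```python
# Illustrative sketch: is there enough CGM data to report the key metrics?
# The 5-minute sampling interval and function name are assumptions.


def cgm_data_sufficient(
    n_readings,
    days_worn,
    minutes_between_readings=5,   # hypothetical device setting
    min_days=14,                  # >= 14 days of CGM [47]
    min_capture_fraction=0.70,    # lower bound of the 70-80% recommendation [47]
):
    """Return True when wear time and data capture meet the recommendation."""
    if days_worn < min_days:
        return False
    possible_readings = days_worn * 24 * 60 // minutes_between_readings
    return n_readings / possible_readings >= min_capture_fraction


# 14 days at one reading every 5 minutes gives 4032 possible readings
print(cgm_data_sufficient(n_readings=3000, days_worn=14))  # prints: True
print(cgm_data_sufficient(n_readings=2500, days_worn=14))  # prints: False
```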

Recent guidelines have highlighted the need to consider outcomes beyond HbA1c control, such as a standard time in range, as an endpoint in clinical trials [53, 106]. This is supported by advocacy groups and the US Food and Drug Administration [107].

In general, despite mounting evidence of its effectiveness, only a small proportion of patients have access to CGM. This is mainly due to cost/reimbursement issues, an absence of specific guidelines on CGM use, and the complexity of the technology. Additional real-world cost-effectiveness data are needed to support routine use of CGM. Once technologic improvements produce easier-to-use devices with easy-to-understand data displays that are more cost effective than SMBG, CGM can be used alongside HbA1c monitoring to guide management strategies that better achieve optimal and stable glycemic control with a low risk of hypoglycemia [17].

Cost Effectiveness of CGM Devices

Overall, CGM is more effective than SMBG but is associated with higher costs [100, 108]. A meta-analysis showed that the most cost-effective use of CGM is probably for people with T1D and continued poor glycemic control despite intensified insulin therapy [109]. A cost-effectiveness analysis of data from the DIAMOND study showed that, in adults with T1D and elevated HbA1c (≥ 7.5%), CGM increased costs compared with SMBG but was a lifetime cost-effective intervention when clinical benefits (HbA1c reduction, daily strip use, and frequency of non-severe hypoglycemia) were taken into account [110]. Similarly, for high-risk patients with T1D and impaired hypoglycemia awareness, the economic impact of CGM is counteracted by lower hypoglycemia-related costs, reduced SMBG strip use, avoidance of HbA1c-related complications, and reduced insulin pump use [111]. CGM is also known to be cost effective in the management of patients with T2D not treated with prandial insulin [112].

Future of CGM

Closed-loop systems (‘artificial pancreas’), which consist of a CGM device and an insulin pump that delivers insulin through a standardized algorithm, have recently emerged as a means to attain glucose control in T1D. A systematic review and meta-analysis of randomized studies evaluating artificial pancreas systems in adult and pediatric patients with T1D in the outpatient setting showed improved glucose control, with more time in target, compared with conventional pumps [113]. While these systems ensure a smooth glucose profile overnight with low risk of hypoglycemia, user input is usually required at meal times. Some devices are exploring fully automated options, but these carry a higher risk of hypoglycemia than systems that require input of information on meals [114].

Conclusions

Although HbA1c remains the cornerstone of glycemic status monitoring, even ‘good’ glycemic control may include substantial blood glucose fluctuations and excursions into hyperglycemia and hypoglycemia, both of which are associated with short- and long-term complications. Hypoglycemia in particular represents a key challenge to safely achieving and maintaining glycemic targets.

SMBG gives an indication of glycemic variability, but each measurement provides only a ‘snapshot’ of blood glucose levels, and glucose excursions may be missed. The need for repeated daily fingersticks limits its usefulness, and ‘forgotten’ logbooks or meters and incomplete or inaccurate SMBG data may leave physicians without the information they need to optimize their patients’ glycemic control.

CGM has demonstrated the importance of glycemic variability and its association with hypoglycemia independently of HbA1c values. In both clinical trials and in the real-world setting, CGM was effective in reducing glucose levels and hypoglycemia as well as in attenuating diabetes stress and improving quality of life vs. usual care, but treatment compliance is key for effectiveness. Modern, easy-to-use CGM systems, particularly devices that do not require regular calibration, and simplified data displays could overcome many of the limitations of HbA1c monitoring and SMBG.

In summary, CGM provides physicians with the ability to improve on conventional methods of blood glucose monitoring and offers valuable additional data to inform better and safer decision-making.

References

Gebel E. The start of something good: the discovery of HbA1c and the American Diabetes Association Samuel Rahbar Outstanding Discovery Award. Diabetes Care. 2012;35:2429–31.

Diabetes Control and Complications Trial Research Group. The effect of intensive treatment of diabetes on the development and progression of long-term complications in insulin-dependent diabetes mellitus. N Engl J Med. 1993;329:977–86.

UK Prospective Diabetes Study (UKPDS) Group. Intensive blood-glucose control with sulphonylureas or insulin compared with conventional treatment and risk of complications in patients with type 2 diabetes (UKPDS 33). Lancet. 1998;352:837–53.

UK Prospective Diabetes Study (UKPDS) Group. Effect of intensive blood-glucose control with metformin on complications in overweight patients with type 2 diabetes (UKPDS 34). Lancet. 1998;352:854–65.

Diabetes Control and Complications Trial/Epidemiology of Diabetes Interventions and Complications Research Group, Lachin JM, Genuth S, Cleary P, Davis MD, Nathan DM. Retinopathy and nephropathy in patients with type 1 diabetes four years after a trial of intensive therapy. N Engl J Med. 2000;342:381–9.

Writing Team for the Diabetes Control and Complications Trial/Epidemiology of Diabetes Interventions and Complications Research Group. Effect of intensive therapy on the microvascular complications of type 1 diabetes mellitus. JAMA. 2002;287:2563–9.

Diabetes Control and Complications Trial (DCCT)/Epidemiology of Diabetes Interventions and Complications (EDIC) Study Research Group. Intensive diabetes treatment and cardiovascular outcomes in type 1 diabetes: the DCCT/EDIC study 30-year follow-up. Diabetes Care. 2016;39:686–93.

American Diabetes Association. Standards of medical care in diabetes—2018. Diabetes Care. 2018;41(Suppl. 1):S1–153.

Garber AJ, Abrahamson MJ, Barzilay JI, et al. Consensus statement by the American Association of Clinical Endocrinologists and American College of Endocrinology on the comprehensive type 2 diabetes management algorithm—2018 executive summary. Endocr Pract. 2018;24:91–120.

Weykamp C, John WG, Mosca A. A review of the challenge in measuring haemoglobin A1c. J Diabetes Sci Technol. 2009;3:439–45.

Ajjan RA. How can we realize the clinical benefits of continuous glucose monitoring? Diabetes Technol Ther. 2017;19:S27–36.

Ahn D, Pettus J, Edelman S. Unblinded CGM should replace blinded CGM in the clinical management of diabetes. J Diabetes Sci Technol. 2016;10:793–8.

Patton SR, Clements MA. Continuous glucose monitoring versus self-monitoring of blood glucose in children with type 1 diabetes—are there pros and cons for both? US Endocrinol. 2012;8:27–9.

Nathan DM, Kuenen J, Borg R, Zheng H, Schoenfeld D, Heine RJ, A1c-Derived Average Glucose Study Group. Translating the A1C assay into estimated average glucose values. Diabetes Care. 2008;31:1473–8.

Rayman G. Glycaemic control, glucose variability and the triangle of diabetes care. Br J Diabetes. 2016;16(Suppl. 11):S3–6.

Kovatchev B, Cobelli C. Glucose variability: timing, risk analysis, and relationship to hypoglycemia in diabetes. Diabetes Care. 2016;39:502–10.

Hirsch IB, Verderese CA. Professional flash continuous glucose monitoring with ambulatory glucose profile reporting to supplement A1C: rationale and practical implementation. Endocr Pract. 2017;23:1333–44.

Clarke SF, Foster JR. A history of blood glucose meters and their role in self-monitoring of diabetes mellitus. Br J Biomed Sci. 2012;69:83–93.

Brazg RL, Klaff LJ, Parkin CG. Performance variability of seven commonly used self-monitoring of blood glucose systems: clinical considerations for patients and providers. J Diabetes Sci Technol. 2013;7:144–52.

Miller KM, Beck RW, Bergenstal RM, et al. Evidence of a strong association between frequency of self-monitoring of blood glucose and hemoglobin A1c levels in T1D Exchange clinic registry participants. Diabetes Care. 2013;36:2009–14.

Schütt M, Kern W, Krause U, et al. Is the frequency of self-monitoring of blood glucose related to long-term metabolic control? Multicenter analysis including 24,500 patients from 191 centers in Germany and Austria. Exp Clin Endocrinol Diabetes. 2006;114:384–8.

Karter AJ, Ackerson LM, Darbinian JA, et al. Self-monitoring of blood glucose levels and glycemic control: the Northern California Kaiser Permanente Diabetes registry. Am J Med. 2001;111:1–9.

Malanda UL, Welschen LM, Riphagen II, Dekker JM, Nijpels G, Bot SD. Self-monitoring of blood glucose in patients with type 2 diabetes mellitus who are not using insulin. Cochrane Database Syst Rev. 2012;1:CD005060.

Moström P, Ahlén E, Imberg H, Hansson PO, Lind M. Adherence of self-monitoring of blood glucose in persons with type 1 diabetes in Sweden. BMJ Open Diabetes Res Care. 2017;5:e000342.

Mazze RS, Shamoon H, Pasmantier R, et al. Reliability of blood glucose monitoring by patients with diabetes mellitus. Am J Med. 1984;77:211–7.

Kalergis M, Nadeau J, Pacaud D, Yared Z, Yale J-F. Accuracy and reliability of reporting self-monitoring of blood glucose results in adults with type 1 and type 2 diabetes. Can J Diabetes. 2006;30:241–7.

Blackwell M, Tomlinson PA, Rayns J, Hunter J, Sjoeholm A, Wheeler BJ. Exploring the motivations behind misreporting self-measured blood glucose in adolescents with type 1 diabetes—a qualitative study. J Diabetes Metab Disord. 2016;15:16.

Blackwell M, Wheeler BJ. Clinical review: the misreporting of logbook, download, and verbal self-measured blood glucose in adults and children with type I diabetes. Acta Diabetol. 2017;54:1–8.

Cryer PE. Glycemic goals in diabetes: trade-off between glycemic control and iatrogenic hypoglycemia. Diabetes. 2014;63:2188–95.

Khunti K, Alsifri S, Aronson R, et al. Impact of hypoglycaemia on patient-reported outcomes from a global, 24-country study of 27,585 people with type 1 and insulin-treated type 2 diabetes. Diabetes Res Clin Pract. 2017;130:121–9.

Sola D, Rossi L, Schianca GP, et al. Sulfonylureas and their use in clinical practice. Arch Med Sci. 2015;11:840–8.

van Dalem J, Brouwers MC, Stehouwer CD, et al. Risk of hypoglycaemia in users of sulphonylureas compared with metformin in relation to renal function and sulphonylurea metabolite group: population based cohort study. BMJ. 2016;354:i3625.

King R, Ajjan R. Hypoglycaemia, thrombosis and vascular events in diabetes. Expert Rev Cardiovasc Ther. 2016;14:1099–101.

Kalra S, Mukherjee JJ, Venkataraman S, et al. Hypoglycemia: the neglected complication. Indian J Endocrinol Metab. 2013;17:819–34.

Brands AMA, Biessels GJ, de Haan EHF, Kappelle LJ, Kessels RPC. The effects of type 1 diabetes on cognitive performance. A meta-analysis. Diabetes Care. 2005;28:726–35.

Blasetti A, Chiuri RM, Tocco AM, et al. The effect of recurrent severe hypoglycemia on cognitive performance in children with type 1 diabetes: a meta-analysis. J Child Neurol. 2011;26:1383–91.

Khunti K, Davies M, Majeed A, Thorsted BL, Wolden ML, Paul SK. Hypoglycemia and risk of cardiovascular disease and all-cause mortality in insulin-treated people with type 1 and type 2 diabetes: a cohort study. Diabetes Care. 2015;38:316–22.

Elwen FR, Huskinson A, Clapham L, et al. An observational study of patient characteristics and mortality following hypoglycemia in the community. BMJ Open Diabetes Res Care. 2015;3:e000094.

McCoy RG, Van Houten HK, Ziegenfuss JY, Shah ND, Wermers RA, Smith SA. Increased mortality of patients with diabetes reporting severe hypoglycemia. Diabetes Care. 2012;35:1897–901.

Pieber TR, Marso SP, McGuire DK, et al. DEVOTE 3: temporal relationships between severe hypoglycaemia, cardiovascular outcomes and mortality. Diabetologia. 2018;61:58–65.

Ismail-Beigi F, Craven T, Banerji MA, et al. Effect of intensive treatment of hyperglycaemia on microvascular outcomes in type 2 diabetes: an analysis of the ACCORD randomised trial. Lancet. 2010;376:419–30.

Zoungas S, Patel A, Chalmers J, et al. Severe hypoglycemia and risks of vascular events and death. N Engl J Med. 2010;363:1410–8.

Amiel SA, Dixon T, Mann R, Jameson K. Hypoglycaemia in type 2 diabetes. Diabet Med. 2008;25:245–54.

Martín-Timón I, Del Cañizo-Gómez FJ. Mechanisms of hypoglycemia unawareness and implications in diabetic patients. World J Diabetes. 2015;6:912–26.

Monnier L, Colette C, Owens DR. The application of simple metrics in the assessment of glycaemic variability. Diabetes Metab. 2018;44:313–9.

Freckmann G, Pleus S, Link M, Haug C. Accuracy of BG meters and CGM systems: possible influence factors for the glucose prediction based on tissue glucose concentrations. In: Kirchsteiger H, Jørgensen JB, Renard E, del Re L, editors. Prediction methods for blood glucose concentration: design, use and evaluation. Cham: Springer International Publishing; 2016. p. 31–42.

Danne T, Nimri R, Battelino T, et al. International consensus on use of continuous glucose monitoring. Diabetes Care. 2017;40:1631–40.

Mendez CE, Mok KT, Ata A, Tanenberg RJ, Calles-Escandon J, Umpierrez GE. Increased glycemic variability is independently associated with length of stay and mortality in noncritically ill hospitalized patients. Diabetes Care. 2013;36:4091–7.

Gorst C, Kwok CS, Aslam S, et al. Long-term glycemic variability and risk of adverse outcomes: a systematic review and meta-analysis. Diabetes Care. 2015;38:2354–69.

Hirakawa Y, Arima H, Zoungas S, et al. Impact of visit-to-visit glycemic variability on the risks of macrovascular and microvascular events and all-cause mortality in type 2 diabetes: the ADVANCE trial. Diabetes Care. 2014;37:2359–65.

Monnier L, Colette C. Glycemic variability: should we and can we prevent it? Diabetes Care. 2008;31(Suppl. 2):S150–4.

Kovatchev BP. Metrics for glycaemic control—from HbA1c to continuous glucose monitoring. Nat Rev Endocrinol. 2017;13:425–36.

Petrie JR, Peters AL, Bergenstal RM, Holl RW, Fleming GA, Heinemann L. Improving the clinical value and utility of CGM systems: issues and recommendations: a joint statement of the European Association for the Study of Diabetes and the American Diabetes Association Diabetes Technology Working Group. Diabetes Care. 2017;40:1614–21.

Acciaroli G, Vettoretti M, Facchinetti A, Sparacino G. Calibration of minimally invasive continuous glucose monitoring sensors: state-of-the-art and current perspectives. Biosensors (Basel). 2018;8:E24.

Schmelzeisen-Redeker G, Schoemaker M, Kirchsteiger H, Freckmann G, Heinemann L, Del Re L. Time delay of CGM sensors: relevance, causes, and countermeasures. J Diabetes Sci Technol. 2015;9:1006–15.

Sinha M, McKeon KM, Parker S, et al. A comparison of time delay in three continuous glucose monitors for adolescents and adults. J Diabetes Sci Technol. 2017;11:1132–7.

Carlson AL, Mullen DM, Bergenstal RM. Clinical use of continuous glucose monitoring in adults with type 2 diabetes. Diabetes Technol Ther. 2017;19:S4–11.

Riddlesworth TD, Beck RW, Gal RL, et al. Optimal sampling duration for continuous glucose monitoring to determine long-term glycemic control. Diabetes Technol Ther. 2018;20:314–6.

Edelman SV, Argento NB, Pettus J, Hirsch IB. Clinical implications of real-time and intermittently scanned continuous glucose monitoring. Diabetes Care. 2018;41:2265–74.

Rodbard D, Bailey T, Jovanovic L, Zisser H, Kaplan R, Garg SK. Improved quality of glycemic control and reduced glycemic variability with use of continuous glucose monitoring. Diabetes Technol Ther. 2009;11:717–23.

Pepper GM, Steinsapir J, Reynolds K. Effect of short-term iPRO continuous glucose monitoring on hemoglobin A1c levels in clinical practice. Diabetes Technol Ther. 2012;14:654–7.

Rodbard D. Continuous glucose monitoring: a review of recent studies demonstrating improved glycemic outcomes. Diabetes Technol Ther. 2017;19:S25–37.

Bailey TS. Clinical implications of accuracy measurements of continuous glucose sensors. Diabetes Technol Ther. 2017;19:S51–4.

Bailey T, Bode BW, Christiansen MP, Klaff LJ, Alva S. The performance and usability of a factory-calibrated flash glucose monitoring system. Diabetes Technol Ther. 2015;17:787–94.

Kovatchev BP, Patek SD, Ortiz EA, Breton MD. Assessing sensor accuracy for non-adjunct use of continuous glucose monitoring. Diabetes Technol Ther. 2015;17:177–86.

Aleppo G, Ruedy KJ, Riddlesworth TD, et al. REPLACE-BG: a randomized trial comparing continuous glucose monitoring with and without routine blood glucose monitoring in adults with well-controlled type 1 diabetes. Diabetes Care. 2017;40:538–45.

Bailey TS, Grunberger G, Bode BW, et al. American Association of Clinical Endocrinologists and American College of Endocrinology 2016 outpatient glucose monitoring consensus statement. Endocr Pract. 2016;22:231–61.

Hirsch IB. Professional flash continuous glucose monitoring as a supplement to A1C in primary care. Postgrad Med. 2017;129:781–90.

Cappon G, Acciaroli G, Vettoretti M, Facchinetti A, Sparacino G. Wearable continuous glucose monitoring sensors: a revolution in diabetes treatment. Electronics. 2017;6:65.

Dexcom G6 CGM. Available from: https://www.dexcom.com/g6-cgm-system. Accessed Oct 2018.

Reddy M, Jugnee N, El Laboudi A, Spanudakis E, Anantharaja S, Oliver N. A randomized controlled pilot study of continuous glucose monitoring and flash glucose monitoring in people with type 1 diabetes and impaired awareness of hypoglycaemia. Diabet Med. 2018;35:483–90.

Juvenile Diabetes Research Foundation Continuous Glucose Monitoring Study Group, Tamborlane WV, Beck RW, et al. Continuous glucose monitoring and intensive treatment of type 1 diabetes. N Engl J Med. 2008;359:1464–76.

Juvenile Diabetes Research Foundation Continuous Glucose Monitoring Study Group, Beck RW, Buckingham B, et al. Factors predictive of use and of benefit from continuous glucose monitoring in type 1 diabetes. Diabetes Care. 2009;32:1947–53.

Juvenile Diabetes Research Foundation Continuous Glucose Monitoring Study Group. Effectiveness of continuous glucose monitoring in a clinical care environment: evidence from the Juvenile Diabetes Research Foundation continuous glucose monitoring (JDRF-CGM) trial. Diabetes Care. 2010;33:17–22.

Deiss D, Bolinder J, Riveline JP, et al. Improved glycemic control in poorly controlled patients with type 1 diabetes using real-time continuous glucose monitoring. Diabetes Care. 2006;29:2730–2.

Beck RW, Riddlesworth T, Ruedy K, et al. Effect of continuous glucose monitoring on glycemic control in adults with type 1 diabetes using insulin injections: the DIAMOND randomized clinical trial. JAMA. 2017;317:371–8.

Gandhi GY, Kovalaske M, Kudva Y, et al. Efficacy of continuous glucose monitoring in improving glycemic control and reducing hypoglycemia: a systematic review and meta-analysis of randomized trials. J Diabetes Sci Technol. 2011;5:952–65.

Yeh HC, Brown TT, Maruthur N, et al. Comparative effectiveness and safety of methods of insulin delivery and glucose monitoring for diabetes mellitus: a systematic review and meta-analysis. Ann Intern Med. 2012;157:336–47.

Lind M, Polonsky W, Hirsch IB, et al. Continuous glucose monitoring vs conventional therapy for glycemic control in adults with type 1 diabetes treated with multiple daily insulin injections: the GOLD randomized clinical trial. JAMA. 2017;317:379–87.

Little SA, Leelarathna L, Walkinshaw E, et al. Recovery of hypoglycemia awareness in long-standing type 1 diabetes: a multicenter 2 × 2 factorial randomized controlled trial comparing insulin pump with multiple daily injections and continuous with conventional glucose self-monitoring (HypoCOMPaSS). Diabetes Care. 2014;37:2114–22.

van Beers CA, DeVries JH, Kleijer SJ, Smits MM, Geelhoed-Duijvestijn PH, Kramer MH, et al. Continuous glucose monitoring for patients with type 1 diabetes and impaired awareness of hypoglycaemia (IN CONTROL): a randomised, open-label, crossover trial. Lancet Diabetes Endocrinol. 2016;4:893–902.

Heinemann L, Freckmann G, Ehrmann D, et al. Real-time continuous glucose monitoring in adults with type 1 diabetes and impaired hypoglycaemia awareness or severe hypoglycaemia treated with multiple daily insulin injections (HypoDE): a multicentre, randomised controlled trial. Lancet. 2018;391:1367–77.

Bergenstal RM, Bailey TS, Rodbard D, et al. Comparison of insulin glargine 300 U/mL and 100 U/mL in adults with type 1 diabetes: continuous glucose monitoring profiles and variability using morning or evening injections. Diabetes Care. 2017;40:554–60.

Argento NB, Nakamura K. Glycemic effects of SGLT-2 inhibitor canagliflozin in type 1 diabetes patients using the Dexcom G4 PLATINUM CGM. Endocr Pract. 2016;22:315–22.

Juvenile Diabetes Research Foundation Continuous Glucose Monitoring Study Group, Beck RW, Lawrence JM, Laffel L, et al. Quality-of-life measures in children and adults with type 1 diabetes: Juvenile Diabetes Research Foundation continuous glucose monitoring randomized trial. Diabetes Care. 2010;33:2175–7.

Polonsky WH, Hessler D, Ruedy KJ, Beck RW, DIAMOND Study Group. The impact of continuous glucose monitoring on markers of quality of life in adults with type 1 diabetes: further findings from the DIAMOND randomized clinical trial. Diabetes Care. 2017;40:736–41.

Kubiak T, Mann CG, Barnard KC, Heinemann L. Psychosocial aspects of continuous glucose monitoring: connecting to the patients’ experience. J Diabetes Sci Technol. 2016;10:859–63.

Patton SR, Clements MA. Psychological reactions associated with continuous glucose monitoring in youth. J Diabetes Sci Technol. 2016;10:656–61.

Charleer S, Mathieu C, Nobels F, et al. Effect of continuous glucose monitoring on glycemic control, acute admissions, and quality of life: a real-world study. J Clin Endocrinol Metab. 2018;103:1224–32.

Beck RW, Riddlesworth TD, Ruedy K, et al. Continuous glucose monitoring versus usual care in patients with type 2 diabetes receiving multiple daily insulin injections: a randomized trial. Ann Intern Med. 2017;167:365–74.

Ehrhardt NM, Chellappa M, Walker MS, Fonda SJ, Vigersky RA. The effect of real-time continuous glucose monitoring on glycemic control in patients with type 2 diabetes mellitus. J Diabetes Sci Technol. 2011;5:668–75.

Vigersky RA, Fonda SJ, Chellappa M, Walker MS, Ehrhardt NM. Short- and long-term effects of real-time continuous glucose monitoring in patients with type 2 diabetes. Diabetes Care. 2012;35:32–8.

Ruedy KJ, Parkin CG, Riddlesworth TD, Graham C, DIAMOND Study Group. Continuous glucose monitoring in older adults with type 1 and type 2 diabetes using multiple daily injections of insulin: results from the DIAMOND trial. J Diabetes Sci Technol. 2017;11:1138–46.

Bolinder J, Antuna R, Geelhoed-Duijvestijn P, Kröger J, Weitgasser R. Novel glucose-sensing technology and hypoglycaemia in type 1 diabetes: a multicentre, non-masked, randomised controlled trial. Lancet. 2016;388:2254–63.

Haak T, Hanaire H, Ajjan R, Hermanns N, Riveline JP, Rayman G. Flash glucose-sensing technology as a replacement for blood glucose monitoring for the management of insulin-treated type 2 diabetes: a multicenter, open-label randomized controlled trial. Diabetes Ther. 2017;8:55–73.

Anjana RM, Kesavadev J, Neeta D, Tiwaskar M, Pradeepa R, Jebarani S, et al. A multicenter real-life study on the effect of flash glucose monitoring on glycemic control in patients with type 1 and type 2 diabetes. Diabetes Technol Ther. 2017;19:533–40.

Dunn TC, Xu Y, Hayter G, Ajjan RA. Real-world flash glucose monitoring patterns and associations between self-monitoring frequency and glycaemic measures: a European analysis of over 60 million glucose tests. Diabetes Res Clin Pract. 2018;137:37–46.

Reddy M, Jugnee N, Anantharaja S, Oliver N. Switching from flash glucose monitoring to continuous glucose monitoring on hypoglycemia in adults with type 1 diabetes at high hypoglycemia risk: the extension phase of the I HART CGM study. Diabetes Technol Ther. 2018;20:751–7.

Kumagai R, Muramatsu A, Fujii M, et al. Comparison of glucose monitoring between FreeStyle Libre Pro and iPro2 in patients with diabetes mellitus. J Diabetes Investig. 2018. https://doi.org/10.1111/jdi.12970 (Epub ahead of print).

Riemsma R, Corro Ramos I, Birnie R, et al. Integrated sensor-augmented pump therapy systems [the MiniMed® Paradigm™ Veo system and the Vibe™ and G4® PLATINUM CGM (continuous glucose monitoring) system] for managing blood glucose levels in type 1 diabetes: a systematic review and economic evaluation. Health Technol Assess. 2016;20:1–251.

Chase HP, Beck RW, Xing D, et al. Continuous glucose monitoring in youth with type 1 diabetes: 12-month follow-up of the Juvenile Diabetes Research Foundation continuous glucose monitoring randomized trial. Diabetes Technol Ther. 2010;12:507–15.

Engler R, Routh TL, Lucisano JY. Adoption barriers for continuous glucose monitoring and their potential reduction with a fully implanted system: results from patient preference surveys. Clin Diabetes. 2018;36:50–8.

Messer LH, Johnson R, Driscoll KA, Jones J. Best friend or spy: a qualitative meta-synthesis on the impact of continuous glucose monitoring on life with type 1 diabetes. Diabet Med. 2018;35:409–18.

Miller KM, Foster NC, Beck RW, et al. Current state of type 1 diabetes treatment in the US: updated data from the T1D Exchange clinic registry. Diabetes Care. 2015;38:971–8.

Peters AL, Ahmann AJ, Battelino T, et al. Diabetes technology-continuous subcutaneous insulin infusion therapy and continuous glucose monitoring in adults: an Endocrine Society clinical practice guideline. J Clin Endocrinol Metab. 2016;101:3922–37.

Agiostratidou G, Anhalt H, Ball D, et al. Standardizing clinically meaningful outcome measures beyond HbA1C for type 1 diabetes: a consensus report of the American Association of Clinical Endocrinologists, the American Association of Diabetes Educators, the American Diabetes Association, the Endocrine Society, JDRF International, The Leona M. and Harry B. Helmsley Charitable Trust, the Pediatric Endocrine Society, and the T1D Exchange. Diabetes Care. 2017;40:1622–30.

diaTribe Learn. 2016. Going beyond A1c—one outcome can’t do it all. Available from: https://diatribe.org/BeyondA1c. Accessed Oct 2018.

Health Quality Ontario. Continuous monitoring of glucose for type 1 diabetes: a health technology assessment. Ont Health Technol Assess Ser. 2018;18(2):1–160.

Pickup JC, Freeman SC, Sutton AJ. Glycaemic control in type 1 diabetes during real time continuous glucose monitoring compared with self monitoring of blood glucose: meta-analysis of randomised controlled trials using individual patient data. BMJ. 2011;343:d3805.

Wan W, Skandari MR, Minc A, et al. Cost-effectiveness of continuous glucose monitoring for adults with type 1 diabetes compared with self-monitoring of blood glucose: the DIAMOND randomized trial. Diabetes Care. 2018;41:1227–34.

Chaugule S, Oliver N, Klinkenbijl B, Graham C. An economic evaluation of continuous glucose monitoring for people with type 1 diabetes and impaired awareness of hypoglycaemia within North West London Clinical Commissioning Groups in England. Eur Endocrinol. 2017;13:81–8.

Fonda SJ, Graham C, Munakata J, Powers JM, Price D, Vigersky RA. The cost-effectiveness of real-time continuous glucose monitoring (RT-CGM) in type 2 diabetes. J Diabetes Sci Technol. 2016;10:898–904.

Weisman A, Bai JW, Cardinez M, Kramer CK, Perkins BA. Effect of artificial pancreas systems on glycaemic control in patients with type 1 diabetes: a systematic review and meta-analysis of outpatient randomised controlled trials. Lancet Diabetes Endocrinol. 2017;5:501–12.

Cameron FM, Ly TT, Buckingham BA, et al. Closed-loop control without meal announcement in type 1 diabetes. Diabetes Technol Ther. 2017;19:527–32.

Acknowledgements

Funding

The development of this manuscript was funded by Sanofi US, Inc. Publication processing charges and the Open Access fee were covered by Sanofi US, Inc. All authors had full access to all of the data in this study and take complete responsibility for the integrity of the data and accuracy of the data analysis.

Medical Writing and Editorial Assistance

The authors received writing and editorial support in the preparation of this manuscript from Patricia Fonseca, PhD, from Excerpta Medica, funded by Sanofi US, Inc.

Authorship

All named authors meet the International Committee of Medical Journal Editors (ICMJE) criteria for authorship for this article, take responsibility for the integrity of the work as a whole, and have given their approval for this version to be published.

Authorship Contributions

All authors contributed to the concept/design and writing of this manuscript, including critical review, editing of each draft, and approval of the submitted version.

Disclosures

Ramzi Ajjan has received institutional research grants from Abbott, Bayer, Eli Lilly, Novo Nordisk, Roche, and Takeda and has received honoraria/education support and served as a consultant for Abbott, AstraZeneca, Bayer, Boehringer Ingelheim, Bristol-Myers Squibb, Eli Lilly, GlaxoSmithKline, Merck Sharp & Dohme, Novo Nordisk, and Takeda. David Slattery has no conflicts of interest to declare. Eugene Wright has participated in the speakers’ bureau of Abbott Diabetes, Boehringer Ingelheim, and Eli Lilly, has served as a board member/advisory panel member for Abbott Diabetes, Boehringer Ingelheim, Eli Lilly, Voluntis, Sanofi, and PTS Diagnostics, and has served as a consultant for Abbott Diabetes, Boehringer Ingelheim, Eli Lilly, and Voluntis.

Compliance with Ethics Guidelines

This article is based on previously conducted studies and does not contain any studies performed by any of the authors with human participants or animals.

Data Availability

Data sharing is not applicable to this article as no data sets were generated or analyzed during the current study.

Additional information

Enhanced digital features

To view enhanced digital features for this article go to https://doi.org/10.6084/m9.figshare.7542944.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

Ajjan, R., Slattery, D. & Wright, E. Continuous Glucose Monitoring: A Brief Review for Primary Care Practitioners. Adv Ther 36, 579–596 (2019). https://doi.org/10.1007/s12325-019-0870-x

DOI: https://doi.org/10.1007/s12325-019-0870-x