Abstract

This paper proposes the Self-Adaptive algorithm for Multi-Objective Constrained Optimization by using Radial Basis Function Approximations, SAMO-COBRA. This algorithm automatically determines the best Radial Basis Function-fit as surrogates for the objectives as well as the constraints, to find new feasible Pareto-optimal solutions. SAMO-COBRA is compared to a wide set of other state-of-the-art algorithms (IC-SA-NSGA-II, SA-NSGA-II, NSGA-II, NSGA-III, CEGO, SMES-RBF) on 18 constrained multi-objective problems. In the first experiment, SAMO-COBRA outperforms the other algorithms in terms of achieved Hypervolume (HV) after being given a fixed small evaluation budget on the majority of test functions. In the second experiment, SAMO-COBRA outperforms the majority of competitors in terms of required function evaluations to achieve \(95\%\) of the maximum achievable Hypervolume. In addition to academic test functions, SAMO-COBRA has been applied on a real-world ship design optimization problem with three objectives, two complex constraints, and five decision variables.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Real-world optimization problems often have multiple conflicting objectives, several constraints, and decision variables in the continuous domain [4, 16, 43]. Without loss of generality, a constrained multi-objective optimization problem can be defined as follows [13]:

where k represents the number of objectives, m represents the number of constraints, and d represents the number of parameters in the optimization problem. Solving this problem type can be done by searching for feasible Pareto-optimal solutions. The definition of a solution that is feasible and Pareto-optimal can be found in Def. 1.

Definition 1

(Feasible Pareto-optimal solution): \(\mathbf {x} \in \varOmega \) is called feasible Pareto-optimal with respect to \(\varOmega \) and \(g_i(\mathbf {x}) \le 0 \; \forall i \in \{1,\dots ,m\}\), if and only if there is no solution \(\mathbf {x}'\) for which \(\mathbf {v} = f(\mathbf {x}') = (f_1(\mathbf {x}'),\dots ,f_k(\mathbf {x}'))^\top \) dominates \(\mathbf {u} = f(\mathbf {x})= (f_1(\mathbf {x}),\dots ,f_k(\mathbf {x}))^\top \) where \(g_i(\mathbf {x}) \le 0\) and \(g_i(\mathbf {x}') \le 0 \; \forall i \in \{1,\dots ,m\}\).

On top of the already complex constrained multi-objective optimization problem characteristics, there is also a need to reduce the computational and licensing cost involved. There are many engineering examples which require expensive function evaluations [35], special hardware, or software licences [37]. Practical examples of such problems can be found in the automotive industry, aerospace engineering, and the maritime industry.

Handling constraints in optimization problems can be done in several ways: using penalty functions, separation of constraints and objectives, treating constraints as additional objectives, or hybrid methods [3, 16]. In our approach we only consider separation of constraints and objectives because the main issue with penalty functions is that the ideal penalty factors cannot be known in advance, and tuning the parameters requires a lot of additional function evaluations. The issue with treating constraints as additional objectives is that it makes the objective space unnecessarily more complex with a too strong bias towards the constraints.

An algorithm which uses the separation of constraints and objectives in combination with surrogates is SAMO-COBRA [41]. SAMO-COBRA, which is an abbreviation for Self-Adaptive Multi-Objective Constrained Optimization by using Radial Basis Function Approximations, owes its name to the very efficient constraint handling algorithms: COBRA [31] and SACOBRA [3]. Besides constraint handling, SAMO-COBRA shows to be efficient in finding Pareto-optimal solutions, thereby solving constrained multi-objective problems by using a limited number of function evaluations.

Compared to our previous work describing SAMO-COBRA [41], in this paper the SAMO-COBRA algorithm is described in more detail, SAMO-COBRA is compared to two new state-of-the-art algorithms, additional experiments are conducted to evaluate its performance, and SAMO-COBRA has been used in practice to solve a real-world optimization problem. In the real-world application SAMO-COBRA is used to solve a real-world ship design optimization problem with five variables, two complex constraints, and three objectives.

1.1 Outline

The remainder of this paper is organized as follows. In Sect. 2 related work is discussed. In Sect. 3 the SAMO-COBRA algorithm is proposed and described in detail. In Sect. 4 the experimental setup is given on how SAMO-COBRA is compared to other state of the art algorithms. In Sect. 5 the results of the experiments are reported. In Sect. 6 an example is given of a real-world application of SAMO-COBRA. The final concluding remarks are given in Sect. 7.

2 Related work

Existing work on surrogate assisted optimization is typically limited to a subset of three relevant requirements: multi-objective, constrained, and speed. For example, methods exist for quickly solving constrained single-objective problems (e.g. SACOBRA [3]), for multi-objective optimization without efficient constraint handling techniques (e.g. SMS-EGO [29] and PAREGO [25]), or for constrained multi-objective optimization without using meta-models, leading to a large number of required function evaluations (e.g. NSGA-II [14], NSGA-III [24], SPEA2 [44], and SMS-EMOA [6]). Some recently proposed algorithms address all three requirements, however, their computational cost grows cubically in every iteration and exponentially for each additional decision parameter due to the use of Kriging surrogates (e.g. CEGO [42], ECMO [36]).

Only very occasionally a surrogate-based algorithm is published that deals with both constraints and multiple objectives in an effective manner without using a Kriging surrogate (e.g., Datta’s and Regis’ SMES-RBF [11], Blank and Deb’s SA-NSGA-II [8], and Blank’s and Deb’s IC-SA-NSGA-II [8]).

SMES-RBF is a surrogate assisted evolutionary strategy that uses cubic Radial Basis Functions as a surrogate for the objectives and constraints to estimate the actual function values. The most promising solution(s) according to a non-dominated sorting procedure are then evaluated on the real objective and constraint function until the evaluation budget limit has been reached.

SA-NSGA-II is the surrogate assisted NSGA-II algorithm that exploits the Cubic Radial Basis Functions with a linear tail as a surrogate to find the Pareto frontier. SA-NSGA-II starts with a Latin Hypercube Sample to train the surrogates. Afterwards, in every iteration the surrogates are updated and used by the NSGA-II algorithm to determine the solutions to be evaluated next.

IC-SA-NSGA-II assumes that the constraints are inexpensive and exploits this assumption by only evaluating the objective functions if the constraints are satisfied. IC-SA-NSGA-II starts by creating a Riesz s-Energy sample (Energy) [22], which results in a well-spaced feasible point set. After the initial sample is evaluated, cubic Radial Basis Functions with a linear tail are fitted as surrogates for the objective and constraint functions. The surrogates are then used by the NSGA-II algorithm to find novel solutions. The constraint functions are evaluated first, the objective functions are only evaluated if the constraints are not violated. The novel evaluated solutions are added to the archive which is used in the next iteration to retrain the surrogates. This continues until the objective evaluation budget has been exhausted.

3 Constrained multi-objective optimization algorithm

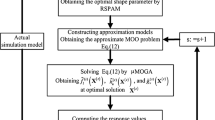

In this section, the new SAMO-COBRA algorithm is introduced. It is designed for dealing with continuous decision variables, multiple objectives, multiple complex constraints, and expensive objective function evaluations in an efficient manner. The idea behind the algorithm is that in every iteration, for each objective and for each constraint independently, the best transformation and the best RBF kernel is sought. In each iteration the best fit is used to search for a new unseen feasible Pareto efficient point that contributes the most to the HyperVolume (HV) which is computed between a reference point and the Pareto-frontier (PF). The pseudocode of SAMO-COBRA can be found in Algorithm 1. The Python implementation can be found on the Github page [39]. In the subsections below, the algorithm is explained in more detail.

3.1 Initial design of experiments

Bossek et al. showed empirically that, when dealing with sequential model-based optimization, in most cases it is best to use the Halton sampling strategy [21] with an initial sample that is as small as possible [9]. A few experiments (See Appendix A.1) confirmed that a small initial sample size and Halton sampling, also leads to the best results when applied on six constrained multi-objective problems from Sect. 4.

An RBF model that models the relationship between the input space and the output space can already be trained with \(d+1\) evaluated solutions. Therefore, it is advised to create an initial Halton sample of size \(d+1\) before the sequential optimization procedure starts, when using SAMO-COBRA. Every sample in the initial design is then evaluated (lines 2-4 of Algorithm 1) so that all samples have their corresponding constraint and objective scores.

3.2 Radial basis function fitting and interpolation

RBF interpolation approximates a function by fitting a linear weighted combination of RBFs [3]. The challenge is to find correct weights (\(\varvec{\theta }\)) and a good RBF kernel \(\upvarphi (\Vert \mathbf {x}-\mathbf {c} \Vert )\). An RBF is only dependent on the distance between the input point \(\mathbf {x}\) to the center \(\mathbf {c}\). The RBFs used in this work take each evaluated point as the centroid of the function, and the weighted linear combination of RBFs always produces a perfect fit through the training points. Besides the perfect fit on the training points, the linear combination of the RBFs can also give a reasonable approximation of the unknown area.

Any function which is only dependent on the distance from a specific point to another point belongs to the group of RBFs. The RBF kernels (\(\upvarphi \)) considered in this work are the cubic with \(\upvarphi (r)=r^3\), Gaussian with \(\upvarphi (r)=\exp {(-(\epsilon \cdot r)^2)}\), multiquadric with \(\upvarphi (r)=\sqrt{1+(\epsilon \cdot r)^2}\), inverse quadratic with \(\upvarphi (r)=(1+(\epsilon \cdot r)^2)^{-1}\), inverse multiquadric with \(\upvarphi (r)=(\sqrt{1+(\epsilon \cdot r)^2})^{-1}\), and thin plate spline with \(\upvarphi (r)=r^2\log r\). Note that the shape/width parameter \(\epsilon \) for every individual RBF is kept constant such as proposed by Urquhart et al. [38]. Moreover, all shape parameters are fixed to \(\epsilon = 1\).

Finding suitable linear weighted combinations \(\varvec{\theta }\) of the RBFs can be done by inverting \(\varvec{\varPhi }\in \mathbb {R}^{n \times n}\) where \(\varvec{\varPhi }_{i,j} = \upvarphi (\Vert \mathbf {x}_i-\mathbf {x}_j \Vert )\):

Here \(\mathbf {f}\) is a vector of length n with the function values belonging to one of the objectives or constraints. Because \(\varvec{\varPhi }\) is not always invertible, Micchelli introduced RBFs with a polynomial tail, better known as augmented RBFs [26]. In this work augmented RBFs are used with a second order polynomial tail. The polynomial tail is created by extending the original matrix \(\varvec{\varPhi }\) with \(\mathbf {P} = (1,x_{i1},\dots ,x_{id},x^2_{i,1},\dots ,x^2_{id})\), in its ith row, where \(x_{ij}\) is the jth component of vector \(\mathbf {x}_i\), for \(i=1,\dots ,n\) and \(j=1,\dots ,d\), \(\mathbf {P}^\top \), and zeros \(\varvec{0}_{(2d+1) \times (2d+1)}\), leading to \(1 + 2d\) more weights \(\varvec{\mu }\) to learn.

Now that the weights \(\varvec{\theta }\) can be computed and \(\varvec{\mu }\) with Eq. 1 (Lines 16, 17, 20, 21 of Algorithm 1), for each unseen input \(\mathbf {x}'\) the function value (\(f'\)) can be interpolated/predicted by using Eq. (3).

3.3 Scaling

In SAMO-COBRA, various scaling and transformation functions are used in lines 9-13 of the algorithm. This is done to improve the predictive accuracy of the RBF surrogate models. The four functions Scale, Plog, Standardize and the Scale Constraint are described below.

- Scale::

-

The input space/decision variables are scaled into the range \([-1,1]\) with \(x = 2\cdot (x-x_{lb})/(x_{ub}-x_{lb})-1\). By scaling large values in the input space, computationally singular (ill-conditioned) coefficient matrices in Eq. (1) can be prevented. In case the large values in the input space are kept, the linear equation solver will terminate with an error, or it will result in a large root mean square error [3]. Additionally, when fitting the RBFs, a small change in one of the variables, is relatively the same small change in all the other variables, making each variable in the basis equally important and equally sensitive.

- Standardize::

-

The relationship between the input space and the objective function values is modelled with RBF surrogates. Besides this relationship, Bagheri et al. also exploited similarities between RBF and Kriging surrogates to come up with an uncertainty quantification method [2]. The formula for this uncertainty quantification method is given in Eq. (4).

$$\begin{aligned} \widehat{U}_{RBF} = \upvarphi (\Vert \mathbf {x}'-\mathbf {x}' \Vert ) - \varvec{\varPhi }'^\top \varvec{\varPhi }^{-1}\varvec{\varPhi }'\nonumber \\ \end{aligned}$$(4)The uncertainty (\(\widehat{U}_{RBF}\)) of solutions far away from earlier evaluated solutions is higher compared to solutions close to earlier evaluated solutions. This uncertainty quantification method can therefore help in exploration, and prevent the algorithm from getting stuck in a local optimal solution. However, as can be derived from Eq. (4), the uncertainty quantification method is only dependent on the input space and not on the scale of the objective and/or weights of the RBF models. The objective values are therefore standardized as \(y' = (y-\bar{y})/\sigma \) so that the uncertainty scale and the objective scale match. Here \(\sigma \) is the standard deviation of y, and \(\bar{y}\) the mean of y.

- Scale Constraint::

-

The constraint evaluation function should return a continuous value, namely the amount by which the constraint is violated. Since it is possible to have multiple constraints, and each constraint is equally important, every constraint output is scaled with \(c' = c/(\max (c)-\min (c))\), where \(\max (c)\) is the maximum constraint violation encountered so far, and \(\min (c)\) is the smallest constraint value seen so far. After scaling, the difference between \(\min (c)\) and \(\max (c)\) becomes 1 for all constraints, making every constraint equally important while 0 remains the feasibility boundary.

- Plog::

-

In cases where there are very steep slopes, a logarithmic transformation of the objective and/or constraint scores can be beneficial for the predictive accuracy [32]. Therefore, the scores are transformed with the Plog transformation function. The extension to a matrix argument \(\mathbf {Y}\) is defined component-wise, i.e., each matrix element \(y_{ij}\) is subject to Plog.

$$\begin{aligned} \textsc {Plog}(y) = {\left\{ \begin{array}{ll} + \ln (1+ y), &{} \quad \text{ if }\quad y \ge 0 \\ -\ln (1- y), &{} \quad \text{ if }\quad y < 0 \end{array}\right. } \end{aligned}$$(5)

3.4 Maximize hypervolume contribution

After modelling the relationship between the input space and the response variables with the RBFs, the RBFs are used as cheap surrogates. For each constraint and objective, the best RBF configuration is chosen as described in Sect. 3.6. By using Eq. (3) for each unseen input \(\mathbf {x}'\), every corresponding constraint and objective prediction can be calculated. Given the RBF approximations for a solution \(\mathbf {x}'\), the constraint predictions can be used to check if the solution is predicted to satisfy all the constraints. Besides the constraint predictions, the objective predictions can be used to see if the solution is a highly preferred solution or not. Whether one solution is preferred above another solution can be computed with an infill criteria, also known as acquisition function. There are two infill criteria considered in this work, the S-Metric Selection criteria (SMS), and the Predicted Hyper-Volume criteria (PHV). Computation of the two infill criteria is done as follows:

-

1.

Compute all objective scores for a given solution \(\mathbf {x}'\) with Eq. (3). With the interpolated objective scores, compute the additional Predicted Hyper-Volume (PHV) score this solution adds to the PF. This is a purely exploitative infill criteria without any uncertainty quantification method.

-

2.

Compute all objective scores for a given solution \(\mathbf {x}'\) with Eq. (3) and subtract the uncertainty of each objective given \(\mathbf {x}'\) and Eq. (4). With the interpolated objective score minus the uncertainty, the potential HV that this solution could add to the PF is calculated. This infill criteria is similar to the Kriging \(\mathcal {S}\)-metric Selection (SMS) criterion from Emmerich et al. [6]. Because of the subtracted uncertainty, it will be more exploratory compared to the PHV criterion.

How much a solution adds to the PF is based on how much HV the solution adds between the already evaluated non-dominated solutions and a predefined reference point. A visual representation of the HV scores of two different solutions is displayed in Figure 1. By using any of the two infill criteria, the constrained multi-objective problem has been translated into a constrained single-objective problem.

After an infill criteria is chosen by the user, the constrained single-objective problem can be formulated and optimized. The COBYLA (Constrained Optimization BY Linear Approximations) algorithm [30] is used to maximize the infill criteria (Line 26 of Algorithm 1). COBYLA is allowed to vary \(\mathbf {x}'\) between the lower and the upper bound of the design space \(\mathbf {x}' \in [\mathbf {lb}\),\(\mathbf {ub}]\). COBYLA deals with constraints by preferring feasible solutions above infeasible solutions. This way, COBYLA searches for a Pareto-optimal solution which does not violate any of the constraints and has the highest possible infill criteria score.

If no feasible solution can be found by COBYLA, the solution with the smallest constraint violation is searched for. Note that COBYLA does not use the real objective and constraint function evaluations during the search for the next best solution. Instead, COBYLA uses the cheap RBF surrogates as surrogate for the real objective and constraint functions. Only after the next best solution on the surrogates is found, it is evaluated on the real objective and constraint functions (Lines 27-31 of Algorithm 1).

3.5 Surrogate exploration and RBF adaptation

To increase the chance of finding the best feasible pareto-optimal solution in every iteration, two hyper parameter updates are done. In every iteration of SAMO-COBRA the surrogate search budget and the allowed constraint RBF approximation error margin are updated.

The chances of finding the best feasible Pareto-optimal solution can be increased by starting the surrogate search not from one solution but from multiple randomly generated solutions independently. Each independent local search done by COBYLA gets an allocated search budget, which is updated every iteration together with the number of starting points. The problem characteristics (number of variables d, constraints m, and objectives k) influence the optimization problem complexity. Therefore, the surrogate evaluation budget and number of starting points are empirically chosen and set at \(50\cdot (d+m+k)\) and \(2\cdot (d+m+k)\) respectively. In every iteration of SAMO-COBRA the convergence of COBYLA is checked. If COBYLA converges every time to a feasible solution, the number of randomly generated points is increased by \(10\%\) and the surrogate search budget is decreased by \(10\%\). The opposite update is done if COBYLA did not converge from one of the starting points. The \(10\%\) search budget update step size is empirically chosen as a well working step size (See Appendix A.2 for the experiment). The search budget is updated this way to limit the time spent on exploring the surrogates and to further increase the chances of finding a solution that adds the most HV to the PF.

Because in the first iterations the RBFs do not model the constraints very well yet, an allowed error (\(\epsilon \)) of \(1\%\) for each constraint is built in. If the solution evaluated on the real constraint function is feasible, the error margin of this constraint approximation is reduced by \(10\%\). If a solution is infeasible, the RBFs surrogate approximation is clearly still wrong. Therefore, the error margin of the corresponding constraint is increased by \(10\%\). The \(10\%\) \(\epsilon \) update step size is empirically chosen as a well working step size (See Appendix A.2 for the experiment).

3.6 Selection of the best RBF

In every iteration, the best RBF kernel and transformation strategy is chosen (Line 32 of Algorithm 1). The pseudocode of this function can be found in Algorithm 2. Finding the best RBF kernel and transformation strategy is done by computing the difference between the RBF interpolated solution and the solution computed with the real constraint and objective functions. This difference is computed every iteration, resulting in a list of historical RBF approximation errors for each constraint and objective function, for each kernel, with and without the Plog transformation.

Based on the RBF approximation errors, the best RBF kernel and transformation are chosen. Bagheri et al. show empirically, that if only the last approximation error is considered in the single objective case, the algorithm converges to the best solution faster [1]. This is the case because when closer to the optimum, the vicinity of the last solution is the most important. In the multi-objective case, the vicinities of all the feasible Pareto-optimal solutions are important. Experiments confirmed that the approximation errors of the feasible Pareto-optimal solutions and the last four solutions should be considered. The approximation errors of the last four solutions ensure that the algorithm does not get stuck on one RBF configuration and the error of the Pareto-efficient solutions ensures that all the vicinities of the optimal solutions are considered. The Mean Squared Error measure is used to quantify which RBF kernel and which transformation function in the previous iterations resulted in the smallest approximation error.

To give insight into this RBF kernel and transformation switching approach, an additional experiment is conducted. In Appendix A.3, an experiment is described and results are given on how frequent the RBF kernel is changed and how often the Plog transformation strategy is changed.

4 Experimental setup

Two experiments are set up to compare SAMO-COBRA with other state of the art algorithms. In these experiments, two variants of the SAMO-COBRA algorithm are tested, one without the uncertainty quantification method (PHV), and one with the uncertainty quantification method (SMS). The performance of the two variants are compared to the performance of the following algorithms: CEGO [42], IC-SA-NSGA-II [8], SA-NSGA-II [8], NSGA-II [14], NSGA-III [24], and SMES-RBF [11]. The performance of the algorithms except for SMES-RBF are assessed on 18 academic benchmark functions. SMES-RBF is not tested since the implementation of SMES-RBF has not been made available and as such it could only be compared to the results reported in the SMES-RBF publication.

The 18 test functions and their characteristics are listed in Table 1. Some of the problems are real-world-like-problems while others are artificially created benchmark problems, proposed by several authors over the past years [37]. Each algorithm is tested 10 times on every test function to get a trustworthy result. The results for NSGA-II and NSGA-III had a high variance. Therefore, 100 runs are executed for those algorithms. In the first experiment, the algorithms are given a fixed budget to find a feasible Pareto-frontier. In the second experiment the algorithms are evaluated to see how many function evaluations they require to achieve a predefined threshold performance.

4.1 Hyper parameter settings

In the experiments for each algorithm either the original implementation is used or an implementation which was readily available in Python. For all algorithms, the recommended hyper parameters from the original implementations are used. Since there are no clear recommendations for the hyper parameters of NSGA-II and NSGA-III, a grid search is conducted. In the grid search the optimal population size and number of generations are determined for NSGA-II. For NSGA-III a grid search is done to find the best parameter value for the number of divisions that influence the spacing of the reference points of NSGA-III. For the sake of brevity, only the results with the best scores from this grid search are reported.

The implementations of the different algorithms are listed here: the original implementation of CEGO can be found on the dedicated Github page.Footnote 1 The original implementation of IC-SA-NSGA-II and SA-NSGA-II can be found on the personal page of Julian Blank.Footnote 2 For NSGA-II and NSGA-III the implementation of Platypus is used.Footnote 3 The implementation of the SMES-RBF algorithm is not provided. Therefore, only the reported results from the SMES-RBF paper [11] can be compared.

More details concerning the implementation of SAMO-COBRA, the experiments, and the statistical comparison can be found on Github [39].

4.2 Fixed budget experiment

In the first experiment, each algorithm was given a limited fixed number of function evaluation after which the HV performance metric is computed [7]. Each algorithm is allowed to do \(40\cdot d\) function evaluations, here d represents the number of decision variables of the optimization test function.

The HV metric is selected as the performance metric to quantify the results. This because HV simultaneously measures accuracy and diversity, and because it is the most common performance metric [34]. The HV is computed between the obtained Feasible Pareto-optimal solutions and the reference point reported in Table 1. Higher HV scores mean that more HV is covered and therefore a better approximation of the Pareto-frontier is found.

4.3 Convergence experiment

In the second experiment, each algorithm is tested to see when it reaches a threshold value of the HV metric. The threshold is set to \(95\%\) of the maximum achievable HV per test function between the reference points in Table 1 and the Pareto-frontier. Since the Pareto-front is not known for every function, NSGA-II is used to find the maximal HV between a reference point and the Pareto-frontier by running it with a population size of \(100 \cdot d\) and allowing the algorithm to run for 1000 generations.

For each algorithm, after each iteration or generation, the HV is computed. As soon as the threshold value is achieved, the number of function evaluations are used as the evaluation metric.

4.3.1 SMES-RBF convergence experiment

To be able to compare the results of SMES-RBF with the results of SAMO-COBRA, a different experiment is conducted. In this experiment the number of function evaluations are compared between SAMO-COBRA and SMES-RBF to achieve the HV as reported in the SMES-RBF paper [11].

5 Results

The complete set of results from the experiments can be found on Github [39]. It is important to keep in mind when analysing the results that IC-SA-NSGA-II as opposed to the other algorithms, uses many more constraint function evaluations.

5.1 Fixed budget experiment results

The results of the first experiment, in which the HV is computed after \(40\cdot d\) function evaluations, can be found in Table 2. A Wilcoxon rank-sum test with Bonferroni correction is used to determine if there is a significant difference between the algorithm with the best results compared to the algorithm with the lesser results.

Interestingly, the SAMO-COBRA with the predicted HV infill criterion (PHV) outperforms the state-of-the art algorithms in 16 out of the 18 test functions with the exception of the SAMO-COBRA SMS variant. Only on the WB and C3DTLZ4 test function the IC-SA-NSGA-II algorithm finds a significantly larger HV score. However, for the IC-SA-NSGA-II algorithm only the objective function evaluations are counted and not the constraint function calls. It is therefore not remarkable that for some test functions IC-SA-NSGA-II finds a higher HV.

Inspection showed that IC-SA-NSGA-II uses 10 000 constraint function evaluations to find a well spread feasible initial sample, after which IC-SA-NSGA-II starts optimizing. In order to obtain the Pareto frontier, the IC-SA-NSGA-II used on average 10 537 and 10776 constraint function evaluations for respectively the WB and C3DTLZ4 test function.

5.2 Convergence experiment results

In Table 3, the number of function evaluations are reported that are required to achieve the 95% threshold value of the maximum HV. For some test functions this was quite easy to achieve since it only required to evaluate the initial sample. On other test functions the algorithms required many more evaluations to achieve the threshold. Note again that for IC-SA-NSGA-II only the objective function calls are reported and not the constrained function evaluations.

NSGA-II and NSGA-III are terminated after 5000 function evaluations on the C3DLTZ4, OSY, SPD, and SRD test function. CEGO was not able to obtain the threshold value for the SPD and CSI function within 24 hours.

As can be seen in Table 3, SAMO-COBRA with the Predicted HV (PHV) infill criterion again outperforms the other algorithms for the majority of the test functions. This is interesting because this infill criterion is designed to be exploitative.

5.2.1 SMES-RBF convergence experiment results

As mentioned before, the implementation of SMES-RBF is not provided. Therefore, the reported results of SMES-RBF are compared with the results of SAMO-COBRA. In Table 4 the number of function evaluations are reported that are required to obtain the same HV as reported in the SMES-RBF paper.

As shown in Table 4, the number of function evaluations of SAMO-COBRA is much smaller. Interestingly, the HV results for the BICOP1 test function after 1000, 2000, and 5000 function evaluations of SMES-RBF reported in the original paper are, given the Nadir point, not possible.

To further inspect the performance of the algorithms over time, convergence plots are made for the BNH and TRICOP test function. The convergence plots show the HV computed in each iteration. In this experiment the same estimation of Nadir points as in the original SMES-RBF paper [11] are used as the reference points. The convergence of the HV on the BNH test function can be found in Figure 2. The convergence of the HV on the TRICOP test function can be found in Figure 3.

5.3 PHV vs SMS infill criterion

An interesting conclusion from all the experiments is that the exploiting strategy of the PHV infill criterion leads in most cases to the highest HV and to the least number of required function evaluations to obtain the \(95\%\) threshold. It is no surprise that this exploiting strategy works well in a constrained multi-objective setting, since a similar effect was already shown by Rehbach et al. [33]. Rehbach et al. show that in the single objective case it is only useful to include an expected improvement infill criterion if the dimensionality of the problem is low, if it is multimodal, and if the algorithm can get stuck in a local optimum. The results in Table 2 and Table 3 allow us to give the following advice based on empirical results: When searching for a set of Pareto-optimal solutions, an uncertainty quantification method should not be used. This is due to the fact that, when searching for a trade-off between objectives, the algorithm is forced to explore more of the objective space in the different objective directions. The exploration of objectives stimulates diversity, which makes the algorithm less likely to get stuck in a local optimum, thereby making the uncertainty quantification method redundant.

6 Real-world application

SAMO-COBRA has been used in practice to design a wind feeder vessel to support the installation of windmills at sea. Although high winds are good for power production, they usually also result in rough seas. These rough seas around the wind park installation sites increase the demand for reliable vessels. An example of such a reliable vessel (See Figure 4) has been designed and later optimized with SAMO-COBRA at C-Job Naval Architects.Footnote 4 This vessel is designed to support the construction of wind farms and to transport the materials from the shore to the installation sites.

The objectives of the optimization case of the wind feeder vessel are to have a robust seakeeping performance to maximize the year-round operability, while also keeping the operational cost and capital expenses at a minimum. The operability can be optimized by maximizing the so-called Operability Robustness Index (ORI) [20]. The seakeeping assessment is done with a strip theory code of NAPA.Footnote 5 Strip theory is proven to be fast and reliable with a sufficient accuracy for conventional hull forms [5, 19]. The capital expenses can be translated into the cost of steel that is required to build the vessel, this is roughly equal to the Lightship Weight (LSW) of the vessel. The LSW is calculated by summing the weight of all the equipment plus the minimum amount of steel that is required to fulfil the longitudinal strength requirements. The operational expenses can be dealt with by minimizing the ship resistance in the water at service speed (Rt[kN]). This resistance is calculated with the Holtrop Mennen method [23].

All objectives, practical constraints, relevant rules, regulations, and loading conditions can be evaluated with the modular Accelerated Concept Design framework [40]. In this software, a parametric 3D model of the ship is set up by a naval architect after which automated software tools can evaluate any design variation in one function call. In the wind feeder vessel case, five design parameters are defined: Aftship Length, Midship Length, Foreship length, Beam at Waterline, and Draught. Since sea-keeping and longitudinal strength are already captured in the objectives, only two constraints are needed. The two constraints are for space reservation of the wind turbine blades and the meta-centric height for intact stability of the vessel. Based on 50 Halton samples, \(36\%\) of the design space is feasible.

SAMO-COBRA is then used to optimize this ship design optimization problem. To enhance the exploration in this case study, SAMO-COBRA started with more than advised 50 initial Halton samples. After evaluation of the initial sample, the SAMO-COBRA algorithm with the PHV infill criterion is used to propose 250 more solutions. On a desktop with an Intel Xeon Processor E3-1245 V3 quad-core processor with 16 GB of working memory, the 300 evaluations required three and a half hours of wall clock time.

After analysing the results from the optimization study, the base design by the naval architect was shown to be much too large, causing the ship to be too heavy with a sub-optimal performance. If we zoom in on a few Pareto-optimal solutions and compare them with the original, then the solution with the same ORI score has a \(10.3\%\) smaller resistance value, and \(19.64\%\) less light ship weight. The solution with the same resistance score has a \(4\%\) better ORI score, and \(13.68\%\) less light ship weight. All the evaluated feasible solutions are visualized on the Pareto frontier in Figure 5.

7 Conclusion and future work

In this paper, two variants of the SAMO-COBRA algorithm are introduced, based on using two different infill criteria: S-Metric-Selection and Predicted Hypervolume (PHV), of which the latter is more exploitative than the former. The performance of the two SAMO-COBRA variants is compared to six other state-of-the-art algorithms: IC-SA-NSGA-II, SA-NSGA-II, NSGA-II, NSGA-III and SMES-RBF. On 16 out of the 18 test functions, SAMO-COBRA with the PHV infill criterion showed similar or better results. On two test functions, IC-SA-NSGA-II obtained significantly better results which can be explained by the fact that IC-SA-NSGA-II uses at least 10 000 more constraint function evaluations.

The SAMO-COBRA algorithm with the PHV infill criterion showed to be very efficient at solving constrained multi-objective optimization problems in terms of required function evaluations. We speculate that this exploiting infill criterion works best in most cases because of the characteristics of multi-objective problems. While dealing with multi-objective problems, the algorithm is already forced to explore more of the objective space, making the uncertainty quantification method redundant.

Besides such a performance comparison on test functions, SAMO-COBRA has also already been used in practice on a ship design optimization problem with three objectives, two constraints, and five decision variables. In this application, the algorithm demonstrates its ability to outperform the human expert in all objectives simultaneously.

Further research efforts will be put into creating infill criteria which can propose multiple solutions simultaneously. This way, in each iteration, evaluations can be run in parallel and wall clock time can be reduced even further. Besides parallelization, effort will be put into dealing with mixed integer decision parameters and solving multi-fidelity optimization problems.

Notes

Dedicated Naval Architects, https://c-job.com/.

Intelligent solutions for the maritime industry, https://www.napa.fi/.

References

Bagheri S, Konen W, Bäck T (2016) Online selection of surrogate models for constrained black-box optimization. In: 2016 IEEE Symposium Series on Computational Intelligence (SSCI), pp. 1–8. IEEE

Bagheri S, Konen W, Bäck T (2017) Comparing kriging and radial basis function surrogates. In: Proc. 27. Workshop Computational Intelligence, pp. 243–259

Bagheri S, Konen W, Emmerich M, Bäck T (2017) Self-adjusting parameter control for surrogate-assisted constrained optimization under limited budgets. Appl Soft Comput 61:377–393. https://doi.org/10.1016/j.asoc.2017.07.060

Bandaru S, Ng AH, Deb K (2017) Data mining methods for knowledge discovery in multi-objective optimization: Part a-survey. Expert Syst Appl 70:139–159

Beck RF, Reed AM, Sclavounos PD, Hutchison BL (2001) Modern computational methods for ships in a seaway. discussion. author’s closure. Trans-Soc Naval Archit Marine Eng 109:1–51

Beume N, Naujoks B, Emmerich M (2007) SMS-EMOA: Multiobjective selection based on dominated hypervolume. European J Oper Res 181(3):1653–1669. https://doi.org/10.1016/j.ejor.2006.08.008

Beume N, Naujoks B, Emmerich M (2007) Sms-emoa: Multiobjective selection based on dominated hypervolume. European J Oper Res 181(3):1653–1669

Blank J, Deb K (2021) Constrained bi-objective surrogate-assisted optimization of problems with heterogeneous evaluation times: Expensive objectives and inexpensive constraints. In: Ishibuchi H, Zhang Q, Cheng R, Li K, Li H, Wang H, Zhou A (eds) Evolutionary multi-criterion optimization. Springer International Publishing, Cham, pp 257–269

Bossek J, Doerr C, Kerschke P (2020) Initial design strategies and their effects on sequential model-based optimization. arXiv preprint arXiv:2003.13826

Coello CAC, Lamont GB, Van Veldhuizen DA et al (2007) Evolutionary algorithms for solving multi-objective problems, vol 5. Springer, New York

Datta R, Regis RG (2016) A surrogate-assisted evolution strategy for constrained multi-objective optimization. Expert Syst Appl 57:270–284. https://doi.org/10.1016/j.eswa.2016.03.044

Deb K (2001) Multi-objective optimization using evolutionary algorithms, vol 16. John Wiley & Sons, New Jersey

Deb K (2011) Multi-objective optimisation using evolutionary algorithms: an introduction. In: Multi-objective evolutionary optimisation for product design and manufacturing, pp. 3–34. Springer

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evolut Comput 6(2):182–197. https://doi.org/10.1109/4235.996017

Deb K, Pratap A, Meyarivan T (2001) Constrained test problems for multi-objective evolutionary optimization. In: International conference on evolutionary multi-criterion optimization, pp. 284–298. Springer. https://doi.org/10.1007/3-540-44719-9_20

Fan Z, Fang Y, Li W, Lu J, Cai X, Wei C (2017) A comparative study of constrained multi-objective evolutionary algorithms on constrained multi-objective optimization problems. In: 2017 IEEE congress on evolutionary computation (CEC), pp. 209–216. IEEE

Forrester A, Sobester A, Keane A (2008) Engineering design via surrogate modelling: a practical guide. John Wiley & Sons, New Jersey. https://doi.org/10.2514/4.479557

Gong W, Cai Z, Zhu L (2009) An efficient multiobjective differential evolution algorithm for engineering design. Struct Multidiscip Optim 38(2):137–157. https://doi.org/10.1007/s00158-008-0269-9

Gourlay T, von Graefe A, Shigunov V, Lataire E (2015) Comparison of aqwa, gl rankine, moses, octopus, pdstrip and wamit with model test results for cargo ship wave-induced motions in shallow water. In: International Conference on Offshore Mechanics and Arctic Engineering, vol. 56598, p. V011T12A006. American Society of Mechanical Engineers

Gutsch M, Steen S, Sprenger F (2020) Operability robustness index as seakeeping performance criterion for offshore vessels. Ocean Eng 217:107931

Halton JH (1960) On the efficiency of certain quasi-random sequences of points in evaluating multi-dimensional integrals. Numerische Mathematik 2(1):84–90

Hardin D, Saff E (2005) Minimal riesz energy point configurations for rectifiable d-dimensional manifolds. Adv Math 193(1):174–204

Holtrop J, Mennen G (1982) An approximate power prediction method. Int Shipbuild Prog 29(335):166–170

Jain H, Deb K (2014) An evolutionary many-objective optimization algorithm using reference-point based nondominated sorting approach, part II: Handling constraints and extending to an adaptive approach. IEEE Trans Evolut Comput 18(4):602–622. https://doi.org/10.1109/tevc.2013.2281534

Knowles J (2006) ParEGO: a hybrid algorithm with on-line landscape approximation for expensive multiobjective optimization problems. IEEE Trans Evolut Comput 10(1):50–66. https://doi.org/10.1109/tevc.2005.851274

Micchelli CA (1986) Interpolation of scattered data: distance matrices and conditionally positive definite functions. Construct Approx 2(1):11–22

Mirjalili S, Jangir P, Saremi S (2017) Multi-objective ant lion optimizer: a multi-objective optimization algorithm for solving engineering problems. Appl Intell 46(1):79–95. https://doi.org/10.1007/s10489-016-0825-8

Parsons MG, Scott RL (2004) Formulation of multicriterion design optimization problems for solution with scalar numerical optimization methods. J Ship Res 48(1):61–76. https://doi.org/10.1007/s10489-016-0825-8

Ponweiser W, Wagner T, Biermann D, Vincze M (2008) Multiobjective optimization on a limited budget of evaluations using model-assisted \(\cal{S}\)-metric selection. In: International Conference on Parallel Problem Solving from Nature, pp. 784–794. Springer. https://doi.org/10.1007/978-3-540-87700-4_78

Powell MJD (1994) A direct search optimization method that models the objective and constraint functions by linear interpolation. In: Advances in Optimization and Numerical Analysis, pp. 51–67. Springer Netherlands. https://doi.org/10.1007/978-94-015-8330-5_4

Regis RG (2014) Constrained optimization by radial basis function interpolation for high-dimensional expensive black-box problems with infeasible initial points. Eng Optim 46(2):218–243

Regis RG, Shoemaker CA (2013) A quasi-multistart framework for global optimization of expensive functions using response surface models. J Global Optim 56(4):1719–1753. https://doi.org/10.1007/s10898-012-9940-1

Rehbach F, Zaefferer M, Naujoks B, Bartz-Beielstein T (2020) Expected improvement versus predicted value in surrogate-based optimization. arXiv preprint arXiv:2001.02957. https://doi.org/10.1145/3377930.3389816

Riquelme N, Von Lücken C, Baran B (2015) Performance metrics in multi-objective optimization. In: 2015 Latin American Computing Conference (CLEI), pp. 1–11. IEEE

Santana-Quintero LV, Montano AA, Coello CAC (2010) A review of techniques for handling expensive functions in evolutionary multi-objective optimization. Comput Intell Expens Optim Prob 29–59

Singh P, Couckuyt I, Ferranti F, Dhaene T (2014) A constrained multi-objective surrogate-based optimization algorithm. In: 2014 IEEE Congress on Evolutionary Computation (CEC). IEEE. https://doi.org/10.1109/cec.2014.6900581

Tanabe R, Oyama A (2017) A note on constrained multi-objective optimization benchmark problems. In: 2017 IEEE Congress on Evolutionary Computation (CEC), pp. 1127–1134. IEEE

Urquhart M, Ljungskog E, Sebben S (2020) Surrogate-based optimisation using adaptively scaled radial basis functions. Appl Soft Comput 88:106050

de Winter R (2020) Roydezomer/samo-cobra: Release with new experiments. https://doi.org/10.5281/zenodo.5105636

de Winter R, Furustam J, Bäck T, Muller T (2021) Optimizing ships using the holistic accelerated concept design methodology. In: Okada T, Suzuki K, Kawamura Y (eds) Practical design of ships and other floating structures. Springer Singapore, Singapore, pp 38–50

de Winter R, van Stein B, Bäck T (2021) Samo-cobra: A fast surrogate assisted constrained multi-objective optimization algorithm. In: Ishibuchi H, Zhang Q, Cheng R, Li K, Li H, Wang H, Zhou A (eds) Evolutionary multi-criterion optimization. Springer International Publishing, Cham, pp 270–282

de Winter R, van Stein B, Dijkman M, Bäck T (2018) Designing ships using constrained multi-objective efficient global optimization. In: International Conference on Machine Learning, Optimization, and Data Science, pp. 191–203. Springer. https://doi.org/10.1007/978-3-030-13709-0_16

Yang Y, Liu J, Tan S (2021) A multi-objective evolutionary algorithm for steady-state constrained multi-objective optimization problems. Appl Soft Comput 101:107042

Zitzler E, Laumanns M, Thiele L (2001) SPEA2: Improving the strength pareto evolutionary algorithm. TIK-report 103

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A Appendix

A Appendix

The SAMO-COBRA algorithm has several hyper-parameters which can be tuned. For the most important one, the infill criteria (SMS or PHV) the results from our experiments are shown in the Experiment Sect. 4. Other hyper-parameters like the initial sample strategy, the RBF kernel, and \(\epsilon \) adaptation step size have a minor influence on the results as presented in the experiments below.

1.1 A.1 Sample strategy experiment

The initial sample strategy chosen is based on the comparison of the Hyper-volume results of six test functions after \(40\cdot d\) function evaluations in 10 independent runs. The strategy resulting in the highest Hyper-volume in most cases is recommended as the best sampling strategy for the SAMO-COBRA algorithm. The following subset of test functions are selected for the experiments: BNH, CEXP, SRN, TNK, CTP1, and TRICOP.

As can be seen from the results in Table 5, the Halton sampling strategy with \(d+1\) initial samples in most cases lead to better or similar results compared to the other two initial sampling strategies.

1.2 A.2 RBF and \(\epsilon \) adaptation experiment

The \(10\%\) update step size for the RBF evaluation budget, the number of starting points, and the \(\epsilon \) constraint margin are empirically chosen based on the below experiments. \(5\%\), \(10\%\), \(20\%\), and \(50\%\) are the update step sizes experimented with. The update step size which leads to the highest Hyper-volume in most cases is selected and recommended for the SAMO-COBRA algorithm. The following subset of test functions are selected for the experiments: BNH, CEXP, SRN, TNK, CTP1, and TRICOP. Each function is optimized 10 times with independent runs.

As can be seen from the results in Table 6, the \(10\%\) update step size lead to better or statistically indifferent results compared to the other tried step sizes.

1.3 A.3 RBF kernel and scaling selection experiment

In each iteration of the SAMO-COBRA algorithm, the best RBF kernel (Cubic, Gaussian, Multiquadric, Inverse Multiquadric, Thin Plate Spline) and best transformation function are chosen (Scale, Plog, and Standardize) for each objective and constraint independently. In Table 7 the amount of strategy switches per test function and the most frequent chosen RBF kernel per test function are displayed. The results are based on one run with 3 initial samples and 77 iterations of the SAMO-COBRA algorithm with the PHV infill criteria on the BNH, CEXP, SRN, TNK, CTP1, and TRICOP test functions.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

de Winter, R., Bronkhorst, P., van Stein, B. et al. Constrained Multi-Objective Optimization with a Limited Budget of Function Evaluations. Memetic Comp. 14, 151–164 (2022). https://doi.org/10.1007/s12293-022-00363-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12293-022-00363-y