Abstract

For every \(\beta \in (0,\infty )\), \(\beta \ne 1\), we prove that a positive measure subset A of the unit square contains a point \((x_0,y_0)\) such that A nontrivially intersects curves \(y-y_0 = a (x-x_0)^\beta \) for a whole interval \(I\subseteq (0,\infty )\) of parameters \(a\in I\). A classical Nikodym set counterexample prevents one from taking \(\beta =1\), which is the case of straight lines. Moreover, for a planar set A of positive density, we show that the interval I can be arbitrarily large on the logarithmic scale. These results can be thought of as Bourgain-style large-set variants of a recent continuous-parameter Sárközy-type theorem by Kuca, Orponen, and Sahlsten.

1 Introduction

Geometric measure theory often tries to identify patterns in sufficiently large, but otherwise arbitrary, measurable sets. Recently, nonlinear or curved patterns have begun to attract much attention [1,2,3,4,5,6,7,8,9,10]; most of these references will be discussed below. In this note, we follow one of the many open lines of research.

Kuca et al. [8] showed that there exists \(\varepsilon >0\) with the following property: every compact set \(K\subseteq {\mathbb {R}}^2\) with Hausdorff dimension at least \(2-\varepsilon \) necessarily contains a pair of points of the form

\[ (x,y),\quad (x+u,\,y+u^2) \tag{1.1} \]

for some \(u\ne 0\). We can imagine that we started from a point \((x,y)\in K\), translated the parabola \(v=u^2\) so that its vertex lies at (x, y), and moved along that parabola to find another point in the set K; see Fig. 1. Their result can be thought of as a continuous-parameter analogue of the classical Furstenberg–Sárközy theorem [11, 12], on \({\mathbb {R}}^2\) instead of \({\mathbb {Z}}\). The parabola cannot be replaced with a vertical straight line (see the comments in [8]); curvature is crucial.

The authors of [8] mention that a set \(A\subseteq [0,1]^2\) of Lebesgue measure at least \(\delta \), where \(0<\delta \leqslant 1/2\), contains a pair of points (1.1) that also satisfy the gap bound

for some absolute constant C. This property is seen either by an easy adaptation of Bourgain’s argument from [1] for quadratic progressions

or by merely considering the last two points of the three-point quadratic corner

studied by Christ, Roos, and one of the present authors [4, Theorem 4]. A gap bound is needed in order to have a nontrivial result, as the Steinhaus theorem would identify sufficiently small copies of any finite configuration inside a set of positive measure. Namely, if A has positive measure, then the difference set \(A-A\) contains a ball around the origin, so it certainly intersects the parabola \(v=u^2\) in a point other than (0, 0). More on polynomial patterns like these can be found in recent preprints [9] and [10].
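The Steinhaus step above can be made explicit. The following worked computation is only a sketch, assuming that \(A-A\) contains the open ball of radius \(r>0\) around the origin; the radius \(r\) is notation introduced here for illustration and does not appear in the original argument.

```latex
% Assumption: B((0,0), r) \subseteq A - A for some r > 0 (r is our notation).
\[
  0 < |u| < \min\{1,\, r/2\}
  \quad\Longrightarrow\quad
  |(u,u^2)| = |u|\sqrt{1+u^2} \leqslant \sqrt{2}\,|u| < r ,
\]
% Hence (u,u^2) \in A - A, i.e. (u,u^2) = (x',y') - (x,y) with (x,y), (x',y') \in A:
\[
  (x,y)\in A, \qquad (x+u,\,y+u^2)\in A, \qquad u \neq 0 .
\]
```

Since \(u\) can be taken arbitrarily small in this computation, the pair obtained this way carries no quantitative information; the lower bound on \(|u|\) is the substantive part of the statement.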

It is natural to wonder if sets \(A\subseteq [0,1]^2\) of positive measure also possess some stronger property of Furstenberg–Sárközy type. For instance, we can consider many parabolas \(v=au^2\) with their vertex translated to the point (x, y). Reasoning from the previous paragraph applies equally well for any fixed \(a>0\) to the vertically scaled set, giving a well-separated pair of points

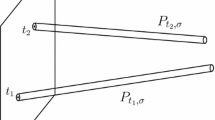

in the set A. However, it is not obvious if there exists a common starting point \((x,y)\in A\) from which we could move along “many” parabolas and always find points in the set A; see Fig. 2. This is the content of our main theorem below; here, by “many” we mean a whole “beam” of parabolas with parameter a running over a non-degenerate interval I. In fact, a parabola can be replaced with any power curve \(v=au^\beta \), for a fixed \(\beta \ne 1\) and a varying \(a>0\).

Here is the main result of the paper. Let \(|E |\) denote the Lebesgue measure of a measurable set \(E\subseteq {\mathbb {R}}^2\).

Theorem 1

For a given \(\beta \in (0,\infty )\), \(\beta \ne 1\), there exists a finite constant \(C\geqslant 1\) with the following property: for every \(0<\delta \leqslant 1/2\) and every measurable set \(A\subseteq [0,1]^2\) of Lebesgue measure \(|A |\) at least \(\delta \), there exist a point \((x,y)\in A\) and an interval \(I\subseteq (0,\infty )\) such that

and that for every \(a\in I\), the set A intersects the arc of the power curve

The following short argument shows that Theorem 1 fails in the limiting case \(\beta =1\), i.e., when the power curves are replaced with straight lines through (x, y). Let \(N\subseteq [0,1]^2\) be a Nikodym set, which is a set of full Lebesgue measure such that through every point of N, one can draw a line that intersects N only at a single point; let us call such lines exceptional. If \({\mathcal {R}}_{\alpha } :{\mathbb {R}}^2\rightarrow {\mathbb {R}}^2\) denotes the rotation about the point (1/2, 1/2) by the angle \(\alpha \), while \({\mathcal {D}}_c:{\mathbb {R}}^2\rightarrow {\mathbb {R}}^2\) denotes the dilation centered at (1/2, 1/2) by the factor \(c>0\), then

is a Nikodym set such that its exceptional lines determine a dense set of directions through each of its points. In particular, there can be no beam of lines

through any point \((x,y)\in A\) that would nontrivially intersect A for each \(a\in I\), as required in Theorem 1. In fact, Davies [13] has already constructed a Nikodym set whose exceptional lines through each of its points form both dense and uncountable sets of directions. On the other hand, if we repeat the simple construction (1.3) starting with a Nikodym-type set found by Chang et al. [14, Corollary 1.2], then we can also rule out curves composed of countably many pieces of straight lines.

Finally, it is also legitimate to ask if an even stronger result holds for “really large” sets, namely for the sets \(A\subseteq {\mathbb {R}}^2\) that occupy a positive “share” of the plane. Recall that the upper Banach density of a measurable set A is defined as

Theorem 2

For a given \(\beta >1\) (resp. \(0<\beta <1\)) and a measurable set \(A\subseteq {\mathbb {R}}^2\) with \(\overline{\delta }(A)>0\), there is a number \(a_0\in (0,\infty )\) with the following property: for every \(a_1\) satisfying \(0<a_1<a_0\) (resp. \(a_1>a_0\)), there exists a point \((x,y)\in A\) such that for every \(a\in {\mathbb {R}}\) satisfying \(a_1\leqslant a\leqslant a_0\) (resp. \(a_0\leqslant a\leqslant a_1\)) the set A intersects the power curve

In comparison with Theorem 1, an improvement coming from Theorem 2 is in the fact that the interval \(I=[a_1,a_0]\) (resp. \(I=[a_0,a_1]\)) can have an arbitrarily small (resp. large) left (resp. right) endpoint \(a_1\). It is not clear to us if the latter result also holds with \(I=(0,\infty )\); this extension would probably be very difficult to prove. Our proof will rely on Bourgain’s dyadic pigeonholing in the parameter a, and as such, it is unable to assert anything for every single value of \(a\in (0,\infty )\). Thus, it is not coincidental that Theorem 2 is quite reminiscent of the so-called pinned distances theorem of Bourgain [15, Theorem 1’]. Our proof will closely follow Bourgain’s proof of that theorem, replacing circles with arcs of the curves \(v=a u^\beta \) and also invoking Bourgain’s results on generalized circular maximal functions in the plane [16].

Theorems 1 and 2 might also be interesting because they initiate the study of strong-type (a.k.a. Bourgain-type) results for finite curved Euclidean configurations, asserting their existence in A for a whole interval I of parameters/scales. The two-point pattern (1.2) studied here could possibly be replaced with larger and more complicated configurations in the future.

2 Analytical Reformulation

It is sufficient to study the case \(\beta >1\). Afterward, one can cover \(0<\beta <1\) simply by interchanging the roles of the coordinate axes and applying the previous case to \(1/\beta \). Note that all bounds formulated in Theorem 1 and the statement of Theorem 2 are sufficiently symmetric to allow such swapping. Thus, let us fix the parameter \(\beta \in (1,\infty )\).
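The axis swap behind this reduction can be written out as a short computation. This is a sketch in notation introduced here (\(\tilde{a}\) denotes the parameter of the swapped curve and is not the paper's symbol); it is restricted to \(u,v>0\), where the power curve is a graph over either axis.

```latex
% For u, v > 0, a > 0 and 0 < \beta < 1:
\[
  v = a\,u^{\beta}
  \quad\Longleftrightarrow\quad
  u = \tilde{a}\, v^{1/\beta},
  \qquad \tilde{a} := a^{-1/\beta} .
\]
% After interchanging the coordinate axes the curve has exponent 1/\beta > 1.
% The map a \mapsto a^{-1/\beta} is a decreasing bijection of (0,\infty), so
\[
  \tilde{a} \in [\tilde{a}_1, \tilde{a}_0]
  \quad\Longleftrightarrow\quad
  a \in \big[\tilde{a}_0^{-\beta},\, \tilde{a}_1^{-\beta}\big] .
\]
```

In particular, small values of \(\tilde{a}\) correspond to large values of \(a\), which matches the way the roles of small and large endpoints are exchanged between the two cases of Theorem 2.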

It is geometrically evident that one can realize an arc of the power curve \(v=u^\beta \) as a part of a smooth closed simple curve \(\Gamma \), which has non-vanishing curvature and which is the boundary of a centrally symmetric convex set in the plane. More precisely, take parameters \(0<\eta <\theta \) such that

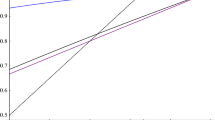

Figure 3 depicts how the arc

can be extended by its tangents at the endpoints to a boundary of a centrally symmetric convex set. It is then easy to curve and smooth this boundary a little in order to make it \(\textrm{C}^\infty \) with non-vanishing curvature while still containing the above arc. The trick of realizing a power arc as a part of the boundary of an appropriate centrally symmetric convex set with the intention of applying Bourgain’s results [16] has already been used by Marletta and Ricci [17, Section 1, p. 59].

Define \(\nu \) to be the arc length measure of \(\Gamma \). We can also parametrize the curve \(\Gamma \) by arc length (i.e., traversing it at unit speed) as

so that we have

for every bounded measurable function f. Now take a nonnegative smooth function \(\Psi \) such that its support intersects \(\Gamma \) precisely in the arc (2.1), and which is constant 1 on a major part of that arc. Let \(\sigma \) be the measure given by

note that it is normalized as \(\sigma ({\mathbb {R}}^2)=\sigma (\Gamma )=1\). Then

for every bounded measurable function f, where \(\psi (u)\) is a constant multiple of

Thus, \(\psi \) is a nonnegative \(\textrm{C}^\infty \) function whose support is contained in \([\eta ,\theta ]\). All constants appearing in the proof are allowed to depend on \(\Gamma ,\beta ,\eta ,\theta ,\Psi \) without further mention.

If \(\sigma _t\) is the dilate of \(\sigma \) by a number \(t>0\), i.e., \(\sigma _t(E):=\sigma (t^{-1}E)\), then we have

so \(\sigma _{t}\) “detects” points on the curve \(v=au^\beta \), where

\[ a = t^{1-\beta }. \tag{2.2} \]

Finally, let \(\tilde{\sigma }\) be the reflection of \(\sigma \), i.e., \(\tilde{\sigma }(E):=\sigma (-E)\). Note that

Both theorems will be consequences of the following purely analytical result. Let \(\mathbbm {1}_E\) denote the indicator function of a set \(E\subseteq {\mathbb {R}}^2\).

Proposition 3

Take \(0<\delta \leqslant 1/2\) and a measurable set \(A\subseteq [0,1]^2\) of measure \(|A |\geqslant \delta \). Suppose that there exist dyadic numbers (i.e., elements of \(2^{\mathbb {Z}}\))

having the property

for every point \((x,y)\in A\) and every index \(1\leqslant j\leqslant J\). Then \(J\leqslant \delta ^{-C'}\) for some constant \(C'\geqslant 1\) independent of \(\delta \) or A.

Our main task is to establish Proposition 3, and its proof will occupy the next section.

3 Proof of Proposition 3

Let us write \(A\lesssim B\) and \(B\gtrsim A\) if the inequality \(A\leqslant CB\) holds for a constant \(C\in (0,\infty )\). This constant C is always understood to depend on \(\Gamma ,\beta ,\eta ,\theta ,\Psi \) from previous sections. Let \(\tau >0\) be a fixed positive number and \(\varrho >0\) a fixed dyadic number; their values will be small and they will be chosen later.

Take a measurable set \(A\subseteq [0,1]^2\) with \(|A |\geqslant \delta \). We write

If we take an index j such that

then

so for every \((x,y)\in A\cap [\tau ,1-\tau ]^2\) and \(t\in [c_j,b_j]\), we have

For such points (x, y), the assumption (2.4) then implies

which in turn leads to a lower bound

provided j is chosen large enough that (3.1) holds.

Let \(\varphi _t\) be the Poisson kernel on \({\mathbb {R}}^2\), i.e.,

for every \(t>0\), where the normalization is chosen such that \(\int _{{\mathbb {R}}^2}\varphi _t = 1\). For a bounded measurable function h we will write

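Also, for \(k\in {\mathbb {Z}}\) let \({\mathbb {E}}_k\) denote the martingale averages with respect to the dyadic filtration, i.e.,

\[ {\mathbb {E}}_k h := \sum _{|Q|=2^{-2k}} \Big ( \frac{1}{|Q|} \int _Q h \Big ) \mathbbm {1}_Q, \]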

where \(h\in \textrm{L}^1_{\textrm{loc}}({\mathbb {R}}^2)\) and the sum is taken over all dyadic squares Q in \({\mathbb {R}}^2\) of area \(2^{-2k}\) (and side length \(2^{-k}\)).
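For orientation, the standard Poisson kernel on \({\mathbb {R}}^2\) with unit mass can be written down and its normalization checked directly. The display below is a sketch of that standard formula; the paper's own display may be written in a different but equivalent form.

```latex
% Standard Poisson kernel on R^2 (the paper's exact display may differ in form).
\[
  \varphi_t(x,y) = \frac{1}{2\pi}\,\frac{t}{(t^2+x^2+y^2)^{3/2}}
  = t^{-2}\,\varphi_1\!\Big(\frac{x}{t},\frac{y}{t}\Big), \qquad t>0 .
\]
% Normalization check in polar coordinates:
\[
  \int_{\mathbb{R}^2}\varphi_t
  = \int_0^{\infty} \frac{t\,r}{(t^2+r^2)^{3/2}}\,\mathrm{d}r
  = \Big[-\frac{t}{\sqrt{t^2+r^2}}\Big]_{r=0}^{r=\infty}
  = 1 .
\]
```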

Take \(t\in [c_j,b_j]\) and \(k_j = -\log _2(\varrho c_j)\), which is an integer. We decompose

Taking the triangle inequality and the supremum over t gives

We will estimate each of the terms separately, using Hölder’s inequality. For the first term on the right-hand side of (3.3), we will use the bound

whenever \(p>2\), where \(\alpha \) is a positive constant depending only on p. (Any fixed finite value of p greater than 2 will do.) This bound will follow from the central estimate (10) in Bourgain’s paper [16], which can be written in our notation as

whenever \({\mathbb {E}}_i h=0\), while \(n\leqslant i\) are positive integers and \(p,\alpha \) are as before. Bourgain [16, (10)] actually formulated (3.8) for the full arc length measure \(\textrm{d}\nu \), but the very same proof establishes it also for the smooth truncation \(\Psi \,\textrm{d}\nu \). In fact, Bourgain has already performed several decompositions of \(\nu \) [16, Sections 3–6], and an additional smooth angular finite decomposition of \(\Gamma \) can be added freely to the proof of his upper bound [16, (10)], so the proof is unaffected by a smooth truncation by \(\Psi \).

In order to prove (3.7), let \(d_j=-\log _2(c_j)\). We split \([c_j,1)\) into dyadic intervals \([2^{-n}, 2^{-n+1})\), estimate the maximum in n by the \(\ell ^p\)-sum, write

where \(\Delta _i = {\mathbb {E}}_{i+1} - {\mathbb {E}}_{i}\), and use the triangle inequality, after which it suffices to show

The left-hand side can be rewritten as

and then estimated by Minkowski’s inequality with

Finally, the inequality (3.8) with \(i=m+k_j\) bounds this by

as desired.

To control (3.4) and (3.5), we use Bourgain’s maximal estimate in the plane [16, Theorem 1],

for \(p>2\). Here, it gives

and

for an absolute constant \(C_2\).

To estimate (3.6), we claim that for each \((x,y)\in {\mathbb {R}}^2\), j, and \(t\leqslant b_j\),

for some absolute constant \(C_3\). To see this, we first use that

for each \((x,y)\in {\mathbb {R}}^2\). Since \(\Vert g\Vert _{\textrm{L}^\infty ({\mathbb {R}}^2)}\leqslant 1\), it only remains to bound, using (2.3),

where we also changed variables in x, y. By the mean value theorem, the last display is

for

and some \(0<a<1\). This is further bounded by

where we also used \(|u |\lesssim t\leqslant b_j<1\), and dominated a non-centered \(|\nabla \varphi _1|\) by a centered integrable function. Integrating in u and (x, y), we obtain a bound by \(C_3\varrho \).

Therefore, using (3.2) to obtain a lower bound, estimates (3.7), (3.9), (3.10), (3.11) for upper bounds, and Hölder’s inequality, we obtain

provided j is large enough.

Next,

and we have

and

for some absolute constant \(c_0>0\). The estimate (3.13) follows by the trivial \(\textrm{L}^\infty \) bound for the convolution. To see (3.14), we note that by the Cauchy-Schwarz inequality, for any \(k\in {\mathbb {Z}}\),

Then it remains to bound the martingale averages from above by the Poisson averages. The reader can find the details in the proof of Lemma 2.1 in [3]. Therefore, from (3.12) and \(\int _{{\mathbb {R}}^2}f=|A|\geqslant \delta \), we get

which will turn out to be useful provided that \(\tau \) is small enough.

Furthermore, we claim that for \(p>2\) and for any \(J>J_0\), we have

and

with the constant \(C_4\) independent of \(J_0,J\). These will be consequences of boundedness on \(\textrm{L}^p({\mathbb {R}}^2)\), \(1<p<\infty \), of the square functions

and

The bound for \(S_1\) follows from the classical Calderón–Zygmund theory [18, Subsection 6.1.3], while boundedness of \(S_2\) was proven by Jones, Seeger, and Wright [19, Sections 3–4]. In fact, the emphasis of the paper [19] was on more general dilation structures and more general martingales, while the square function estimate from the last display is essentially due to Calderón; see [18, Subsection 6.4.4]. Now, (3.16) follows by recalling \(p>2\) and writing

Similarly we deduce (3.17):

To be completely concrete, one can simply take \(p=3\). From (3.16) and (3.17), we conclude that there exists \(j\in \{J_0+1,\ldots , J\}\) such that

Together with (3.15) applied for this particular j and \(\tau =c_0\delta ^2/8\) we obtain

i.e.,

Now we recall that we actually chose \(J_0\) in (3.1) at the beginning of the proof, which guarantees that \(J_0\leqslant \log _2(C_5 \delta ^{-2})\) for a suitable constant \(C_5\). Taking \(\varrho \) to be a small multiple of \(\min \{\delta ^{2/\alpha }, \delta ^2\}\) we obtain \(J \leqslant \delta ^{-C'}\) for a suitable constant \(C'\).
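The pigeonholing used at the end of this proof follows a general pattern, sketched here for hypothetical nonnegative quantities \(X_j\) and bounds \(M\), \(c\). These symbols are placeholders introduced for illustration and are not the paper's notation; the actual quantities are the ones appearing in (3.16) and (3.17).

```latex
% Placeholders: X_j >= 0 for J_0 < j <= J, M > 0, c > 0, p finite.
\[
  \sum_{j=J_0+1}^{J} X_j^{\,p} \leqslant M^{p}
  \quad\Longrightarrow\quad
  \min_{J_0 < j \leqslant J} X_j \leqslant (J-J_0)^{-1/p}\, M ,
\]
% because (J - J_0) \min_j X_j^p \leqslant \sum_j X_j^p.
% Consequently, a uniform lower bound caps the number of indices:
\[
  X_j \geqslant c \ \text{ for all } J_0 < j \leqslant J
  \quad\Longrightarrow\quad
  J - J_0 \leqslant (M/c)^{p} .
\]
```

When \(M/c\) is bounded by a fixed power of \(1/\delta \), the second implication is what yields a bound of the shape \(J\leqslant \delta ^{-C'}\).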

4 Proofs of Theorems 1 and 2

In this section, we deduce the two main theorems from Proposition 3. Once again, it is sufficient to consider \(\beta \in (1,\infty )\).

Proof of Theorem 1

Set \(J=\lfloor \delta ^{-C'}\rfloor +1\), where \(C'\) is the constant from Proposition 3. Let us simply choose consecutive dyadic scales, \(b_j=2^{-2j+1}\) and \(c_j=2^{-2j}\) for every \(1\leqslant j\leqslant J\). By the contrapositive of Proposition 3 and using formula (2.3), we conclude that there exist a point \((x,y)\in A\) and an index \(1\leqslant j\leqslant J\) such that for every \(c_j\leqslant t\leqslant b_j\), the set A contains a point of the form

Substituting (2.2), we get

which now means that for every

there exists

such that \((x+u,y+au^\beta )\in A\). Observing

and that any such u satisfies

we finally establish Theorem 1. \(\square \)
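The parameter interval produced by the dyadic scales chosen in this proof can be computed explicitly. The following is a sketch under the assumption that the change of variables (2.2) is \(a=t^{1-\beta }\), which is what the dilation \((u,u^\beta )\mapsto (tu,tu^\beta )\) suggests; it is not a substitute for the displays of the original proof.

```latex
% Assumption: (2.2) reads a = t^{1-\beta}; since \beta > 1, a is decreasing in t.
\[
  c_j = 2^{-2j} \leqslant t \leqslant 2^{-2j+1} = b_j
  \quad\Longleftrightarrow\quad
  2^{(2j-1)(\beta-1)} \leqslant a \leqslant 2^{2j(\beta-1)} .
\]
% Length of the resulting parameter interval:
\[
  2^{2j(\beta-1)} - 2^{(2j-1)(\beta-1)}
  = 2^{(2j-1)(\beta-1)}\big(2^{\beta-1}-1\big) .
\]
```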

Proof of Theorem 2

Suppose that the claim does not hold for some measurable set \(A\subseteq {\mathbb {R}}^2\) with \(\overline{\delta }(A)>0\). Take \(\delta :=\overline{\delta }(A)/2\) and \(J=\lfloor \delta ^{-C'}\rfloor +1\), where \(C'\) is the constant from Proposition 3. Inductively, we construct positive numbers

satisfying \(C_{j+1}\leqslant B_j/8^{\beta -1}\) and such that for each \(j\geqslant 1\) and every point \((x,y)\in A\), there exists \(a\in [B_j,C_j]\) with the property that A does not contain a point of the form

After the change of variables (2.2), we see that for each \(j\geqslant 1\) and every point \((x,y)\in A\), there exists

such that A does not contain a point of the form (4.1), so

By the definition of the upper Banach density, there exist a number \(R\geqslant B_J^{-1/(\beta -1)}\) and a point \((x_0,y_0)\in {\mathbb {R}}^2\) such that

Define

and let \(b_j\) and \(c_j\), respectively, be the number \(B_{J+1-j}^{-1/(\beta -1)}/2R\) rounded up to the nearest dyadic number and the number \(C_{J+1-j}^{-1/(\beta -1)}/2R\) rounded down to the nearest dyadic number, i.e.,

for every \(1\leqslant j\leqslant J\). Finally, for every \((x,y)\in A'\) and every \(1\leqslant j\leqslant J\), this implies

for some \(c_j\leqslant t\leqslant b_j\), while we have chosen J so that \(J>\delta ^{-C'}\). Note that also \(|A'|\geqslant \delta \), so the set (4.2) and the numbers (4.3) violate Proposition 3, which leads us to a contradiction. \(\square \)

References

1. Bourgain, J.: A nonlinear version of Roth’s theorem for sets of positive density in the real line. J. Anal. Math. 50, 169–181 (1988). https://doi.org/10.1007/BF02796120

2. Henriot, K., Łaba, I., Pramanik, M.: On polynomial configurations in fractal sets. Anal. PDE 9(5), 1153–1184 (2016). https://doi.org/10.2140/apde.2016.9.1153

3. Durcik, P., Guo, S., Roos, J.: A polynomial Roth theorem on the real line. Trans. Am. Math. Soc. 371(10), 6973–6993 (2019). https://doi.org/10.1090/tran/7574

4. Christ, M., Durcik, P., Roos, J.: Trilinear smoothing inequalities and a variant of the triangular Hilbert transform. Adv. Math. 390, 107863–60 (2021). https://doi.org/10.1016/j.aim.2021.107863

5. Chen, X., Guo, J., Li, X.: Two bipolynomial Roth theorems in \({\mathbb{R} }\). J. Funct. Anal. 281(2), 109024–10935 (2021). https://doi.org/10.1016/j.jfa.2021.109024

6. Fraser, R., Guo, S., Pramanik, M.: Polynomial Roth theorems on sets of fractional dimensions. Int. Math. Res. Not. IMRN 2022(10), 7809–7838 (2022). https://doi.org/10.1093/imrn/rnaa377

7. Kovač, V.: Density theorems for anisotropic point configurations. Can. J. Math. 74(5), 1244–1276 (2022). https://doi.org/10.4153/S0008414X21000225

8. Kuca, B., Orponen, T., Sahlsten, T.: On a continuous Sárközy type problem. Int. Math. Res. Not. IMRN. http://arxiv.org/abs/2110.15065 (2022)

9. Krause, B., Mirek, M., Peluse, S., Wright, J.: Polynomial progressions in topological fields. http://arxiv.org/abs/2210.00670 (2022)

10. Durcik, P., Roos, J.: A new proof of an inequality of Bourgain. http://arxiv.org/abs/2210.01326 (2022)

11. Furstenberg, H.: Ergodic behavior of diagonal measures and a theorem of Szemerédi on arithmetic progressions. J. Anal. Math. 31, 204–256 (1977). https://doi.org/10.1007/BF02813304

12. Sárközy, A.: On difference sets of sequences of integers. I. Acta Math. Acad. Sci. Hung. 31(1–2), 125–149 (1978). https://doi.org/10.1007/BF01896079

13. Davies, R.O.: On accessibility of plane sets and differentiation of functions of two real variables. Proc. Camb. Philos. Soc. 48, 215–232 (1952). https://doi.org/10.1017/s0305004100027584

14. Chang, A., Csörnyei, M., Héra, K., Keleti, T.: Small unions of affine subspaces and skeletons via Baire category. Adv. Math. 328, 801–821 (2018). https://doi.org/10.1016/j.aim.2018.02.009

15. Bourgain, J.: A Szemerédi type theorem for sets of positive density in \({\mathbb{R} }^{k}\). Isr. J. Math. 54(3), 307–316 (1986). https://doi.org/10.1007/BF02764959

16. Bourgain, J.: Averages in the plane over convex curves and maximal operators. J. Anal. Math. 47, 69–85 (1986). https://doi.org/10.1007/BF02792533

17. Marletta, G., Ricci, F.: Two-parameter maximal functions associated with homogeneous surfaces in \(\textbf{R} ^n\). Studia Math. 130(1), 53–65 (1998)

18. Grafakos, L.: Classical Fourier Analysis. In: Gehring, F.W., Halmos, P.R. (eds.) Graduate Texts in Mathematics, vol. 249, 3rd edn., p. 638. Springer, New York (2014). https://doi.org/10.1007/978-1-4939-1194-3

19. Jones, R.L., Seeger, A., Wright, J.: Strong variational and jump inequalities in harmonic analysis. Trans. Am. Math. Soc. 360(12), 6711–6742 (2008). https://doi.org/10.1090/S0002-9947-08-04538-8

Funding

Open access funding provided by SCELC, Statewide California Electronic Library Consortium. P.D. is partially supported by the NSF Grant DMS-2154356. P.D. and V.K. were supported by the NSF Grant DMS-1929284 while the authors were in residence at the Institute for Computational and Experimental Research in Mathematics in Providence, RI, during the Harmonic Analysis and Convexity program. V.K. and M.S. are partially supported by the Croatian Science Foundation project UIP-2017-05-4129 (MUNHANAP). M.S. is supported by a fellowship through the Grand Challenges Initiative at Chapman University.

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Durcik, P., Kovač, V. & Stipčić, M. A Strong-Type Furstenberg–Sárközy Theorem for Sets of Positive Measure. J Geom Anal 33, 255 (2023). https://doi.org/10.1007/s12220-023-01309-7

DOI: https://doi.org/10.1007/s12220-023-01309-7