Abstract

We review known results in shape optimization from the point of view of Geometric Analysis. The paper is devoted to the mathematical aspects of shape optimization theory, with a focus on gradient flows of objective functions and their regularizations. Shape optimization is a branch of the calculus of variations in which the unknown is a geometric object, and it is closely related to free boundary problems in the theory of Partial Differential Equations. We consider smooth perturbations of geometrical domains in order to develop the shape calculus for the analysis of shape optimization problems. This framework has many applications in solid and fluid mechanics as well as in the solution of inverse problems. For the sake of simplicity we consider model problems, primarily in two spatial dimensions; the methods presented apply in three spatial dimensions as well. We present a result on the convergence of the shape gradient method for a model problem. To the best of our knowledge, it is the first convergence result in shape optimization. Complete proofs of some results are presented in the report (Plotnikov and Sokolowski, Gradient flow for Kohn–Vogelius functional).

1 Introduction

The theory of shape optimization is a mathematical discipline located at the intersection of the calculus of variations and the theory of free boundary value problems. Historically, its origin is attributed to Newton’s studies (1685, Principia Mathematica) of the problem of finding the shape of a body that moves in a fluid with minimal resistance. It seems that the paper [72] was the first publication devoted to shape optimization problems in the mechanics of solids. Another early direction was the optimization of eigenvalues of elliptic operators; see monograph [55] for references and historical remarks. We should also mention the pioneering works [45, 46] on the application of variational methods to problems of ideal fluid flows with free boundaries.

The foundations of the modern mathematical theory of shape optimization were laid in monographs [34, 93, 104], where it was first singled out as an independent scientific discipline. At present, the theory covers a large number of applied problems, and a number of different approaches have been developed to solve them. The purpose of this paper is to give the reader an idea of the main problems of the theory and of the methods for their solution. We will focus on the geometric aspects of the theory.

Typically, the shape optimization problem admits the following general formulation. First, a fixed bounded domain \(\Omega \) of the Euclidean space \(\mathbb R^d\), \(d=2,3\), is specified. It is supposed to contain the inclusion \(\Omega _i\) such that \(\overline{\Omega _i}\subset \Omega \). The shape of the inclusion is unknown and must be determined together with the solution of the boundary value problem. It is also assumed that the regions \(\Omega _i\) and \(\Omega _e=\Omega \setminus \overline{\Omega _i} \) are filled with some physical substances. Such substances may be solid elastic materials, liquids, physical fields (electric field, gravitational field), or simply void. The state of each substance is described by solutions to the system of governing partial differential equations equipped with appropriate boundary conditions. These solutions are completely determined by the inclusion \(\Omega _i\). In this framework, the compact set \(\Gamma =\partial \Omega _i\) defines the interface between domains occupied by materials with different physical properties.

Finally, an objective function J is specified. Usually it is considered as a function of \(\Omega _i\) and solutions to the governing equations. Its value is completely determined by the inclusion \(\Omega _i\). Therefore, we will denote the objective function as \(J(\Omega _i)\) or equivalently \(J(\Gamma )\). The shape optimization problem is to find \(\Omega _i\) that minimizes the objective function,

Here the minimum is taken over the admissible set of inclusions.
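In symbols, the problem just described can be sketched as follows. This display is assembled from the surrounding text and is not a quotation; the symbol \(\mathcal A\) for the admissible set anticipates the notation introduced later in this section.

```latex
\[
  \min_{\Omega_i \in \mathcal{A}} J(\Omega_i),
  \qquad
  \mathcal{A} \subset \{\, \Omega_i \ \text{open} : \ \overline{\Omega_i} \subset \Omega \,\}.
\]
```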

Let us give some basic typical examples of applied shape optimization problems. The simplest are problems of shape identification in electric tomography and geophysics. Electrical impedance tomography is used in medical imaging to reconstruct the electric conductivity of a part of the body from measurements of currents and voltages at the surface [26]. The same technique is also used in geophysical explorations. An important special case consists in reconstructing the shape of an unknown inclusion or void assuming (piecewise) constant conductivities.

Transmission single measurement identification problem Let us assume that a material occupies the bounded region \(\Omega \) in the space of points \(x\in \mathbb R^d\), \(d=2,3\). Without loss of generality, we may assume that the boundary of \(\Omega \) is infinitely differentiable. Furthermore, we assume there are two disjoint open arcs \(\Gamma _N, \Gamma _D\subset \partial \Omega \) such that \(\text {cl}\;\Gamma _N\cup \Gamma _D=\partial \Omega \). The inclusion, which is unknown and must be determined together with the solution, occupies the subdomain \(\Omega _i\Subset \Omega \) with the boundary \(\Gamma \). The equilibrium equations for the electric field potential \(u:\Omega \rightarrow \mathbb R\) in the simplest case can be written as

Here n is the outward normal vector to \(\partial \Omega \), \(h_n\) is a given voltage, and \(h_d\) is a given distribution of electric potential. We assume that \(h_n\) and \(h_d\) are extended to \(\partial \Omega \) and

The conductivity a is defined by the equalities

where \(a_0\) is a given positive constant. If \(\Gamma _D\ne \emptyset \), then for every \(h_n\) and \(h_d\) satisfying condition (1.3), problem (1.2) admits a unique solution \(u\in W^{1,2}(\Omega )\). If, in addition, the arcs \(\Gamma _N\), \(\Gamma _D\) belong to different connected components of \(\partial \Omega \), \(h_n, h_d\in C^\infty (\partial \Omega )\), and \(\partial \Omega \), \(\partial \Omega _i\) belong to the class \(C^\infty \), then \(u\in C^\infty (\Omega )\). The problem of identifying the inclusion \(\Omega _i\) is formulated as follows. For a given function \(g: \Gamma _D\rightarrow \mathbb R\) it is necessary to find an inclusion \(\Omega _i\) such that the solution to problem (1.2) satisfies the extra boundary condition

It is assumed that g satisfies the orthogonality condition

More generally, the problem of identification is to determine the shape of the inclusion from the additional boundary condition. This inverse problem is ill-posed and in general has no solution. In practice, its approximate solution can be found by solving the variational problem

where the objective function \(J(\Omega _i)\) is a nonnegative function that vanishes if and only if a solution to problem (1.2) satisfies the condition (1.5), and \({\mathcal {A}}\) is some class of admissible inclusions. Notice that the mapping \( \Omega _i\rightarrow u\), where u is a weak solution to problem (1.2), determines a nonlinear operator, which takes the set of admissible shapes \({\mathcal {A}}\) into \(W^{1,2}(\Omega )\). The most successful choice of the objective function is the Kohn–Vogelius energy functional [61], which is defined as follows:

Here \(v,w:\Omega \rightarrow \mathbb R\) satisfy the equations and boundary conditions

Single measurement identification problem with void The other example is the electrical impedance tomography problem, which can be formulated as follows. For a domain \(\Omega \subset \mathbb R^2\) and functions \(h\in W^{1/2, 2}(\partial \Omega )\), \(g\in W^{-1/2,2}(\partial \Omega )\), find a subdomain \(\Omega _i\Subset \Omega \) and a function \(u\in W^{1,2}(\Omega )\) such that

These equations define an overdetermined boundary value problem which has a solution only for the true inclusion \(\Omega _i\). Following Roche and Sokolowski [97], we can replace boundary value problem (1.9) by the variational problem for a Kohn–Vogelius type functional. To this end, denote by v and w solutions to the boundary value problems

In this case the Kohn–Vogelius functional reads

Shape optimization problems in mechanics of solids Again consider the standard geometric configuration that consists of bounded domain \(\Omega \subset \mathbb R^d\), \(d=2,3\), and the inclusion \(\Omega _i\Subset \Omega \). The state of linear elastic solid is completely characterized by the displacement field \(u:\Omega \rightarrow \mathbb R^d\) satisfying the equilibrium equation

where F is a given mass force, the strain tensor e, and the Hooke’s law matrix A are defined by the equality

Here the characteristic function \(\chi _i:\Omega \rightarrow \{0,1\}\) of the domain \(\Omega _i\) is defined by the equality

The constant matrices \(A^\beta \), \(\beta =i,e\), with entries \(A^\beta _{lmpq}\) characterize the properties of the elastic material and satisfy the symmetry and positivity conditions:

Note that the stress tensor \(\sigma \) is defined by the equality \(\sigma (u)= A\, e(u)\). Equation (1.12) should be endowed with boundary conditions. For example, we can take the Neumann and Dirichlet boundary conditions in the form

where \(h_n\) and \(h_d\) are given tractions and displacements, \(\Gamma _N\) and \(\Gamma _D\) are open disjoint subsets of \(\partial \Omega \) such that \( \Gamma _N\cup \overline{\Gamma _D}=\partial \Omega \). There are various formulations of the shape optimization problems in the solid mechanics corresponding to different objective functions. The typical choice of an objective function is

We also can consider the single measurement identification problem for elastic material similar to transmission single measurement identification problem formulated above.

Shape optimization problems in fluid mechanics The analysis of hydrodynamic forces acting on an object traveling through a fluid is fundamental to the design of aircraft and cars, and to many other practical problems. The design of optimal shapes with minimal (maximal) drag is one of the most important problems of applied hydrodynamics. It can be regarded as a shape optimization problem for the equations of fluid dynamics. This problem has been widely discussed in the literature. We refer the reader to review [75] and to monograph [94].

Again, assume that \(\Omega \subset \mathbb R^d\), \(d=2,3\), is a bounded hold-all domain with smooth boundary \(\partial \Omega \). It is supposed that \(\Omega \) contains a nonpermeable body \(\Omega _i\) with the boundary \(\Gamma \). A viscous incompressible fluid occupies the flow domain \(\Omega _e=\Omega \setminus \overline{\Omega _i}\). The state of the fluid is completely characterized by the velocity field \(u:\Omega _e\rightarrow \mathbb R^d\) and the pressure function \(p:\Omega _e\rightarrow \mathbb R\), which satisfy the Navier–Stokes equations and the boundary conditions

where the constant vector \(u_\infty \) is the flow direction. If \(\partial \Omega \) is Lipschitz, then this boundary value problem admits at least one solution \(u\in W^{1,2}(\Omega _e)\), \(p\in L^2(\Omega _e)\). If in addition \(\partial \Omega \) and \(\Gamma \) belong to the class \(C^{l+\alpha }\) with \(l\ge 2\) and \(\alpha \in (0,1)\), then \(u\in C^{l+\alpha }(\Omega _e)\) and \(p\in C^{l-1+\alpha }(\Omega _e)\).

The drag \({\textbf{F}}_D\) is the projection of the hydrodynamic force acting on the body onto the direction \(u_\infty \), i.e.,

It was proved in the seminal paper [11] that the expression for the drag can be equivalently rewritten in the form of the volume integral

It should be noted that the absolute minimum drag is achieved with an empty set \(\Omega _i\). Hence the drag minimization problem only makes sense if there are additional constraints on the geometry of \(\Omega _i\), which guarantee the nontriviality of solution. As such constraints, we can choose the area (length) \({\mathcal {L}}\) of the boundary \(\Gamma =\partial \Omega _i\)

or the volume of the body

In addition, in the two-dimensional case, we can define the lift (lifting force) \({\textbf{F}}_L\) as the projection of the hydrodynamic force onto the direction orthogonal to \(u_\infty \),

The problem of minimizing the drag for a given lifting force, as well as the problem of the ratio \({\textbf{F}}_D/{\textbf{F}}_L\) optimization, are natural optimum design problems for the Navier–Stokes equations, see e.g., [47, 63].

We listed the main applications of optimization theory to problems in solid and fluid mechanics. In fact, the theory of shape optimization finds applications in various fields of science, for example, in biology, [5], and photonics, [64].

Methods Unfortunately, shape optimization problems as stated with no additional geometric constraints are usually ill-posed, see [60, 79, 107] for examples. The reason is that microstructures tend to form, which are associated with a weak convergence of the characteristic functions \(\chi _i^m\) along a minimizing sequence \(\Omega _i^m\), \(m\ge 1\). Indeed, in the absence of strong compactness of the minimizing sequences of designs, the optimal state should be attained by a fine mixture of different phases. There are two different ways to cope with these difficulties.

First, well-posed problems can be generated by a relaxation (homogenization) procedure. The homogenization of the material properties leads to the formation of microstructures. In this way, the set of admissible shapes is extended to include the microstructures. The quasi-convexification of the integrand in J is performed by taking the infimum over all possible microstructures; therefore, the existence of minimizers is ensured. The relaxation procedure usually yields continuous design variables \(\chi _i\) over the reference domain \(\Omega \). In such a case, it is impossible to define any shape from the homogenized solution for solids, liquids, or voids. Hence the relaxed optimal solutions may not lead directly to practical designs. The analysis of the relaxation method is beyond the scope of this paper. We refer the reader, e.g., to monographs [19, 25] and paper [4] for a description of the relaxation method.

The second approach is the regularization of the objective function with the geometric energy functionals. The first-order penalization of J reads:

where \({\mathcal {L}}\) is the perimeter of \(\Omega _i\) and \(\epsilon _p>0\) is the regularization parameter. If \(\Gamma =\partial \Omega _i\) is a regular manifold, then \({\mathcal {L}}\) is the area of \(\Gamma \) in the 3D case and the length of \(\Gamma \) in the 2D case. We refer to monograph [42] for the theory of sets with finite perimeter (Caccioppoli sets). This penalization was proposed in [8] by analogy with the Mumford–Shah functional, [78], in the theory of image segmentation processes. Note that the perimeter regularization is motivated by the difficulties in the mathematical treatment of shape optimization. If the shape optimization problem is supplemented with a perimeter penalization, then positive results concerning the existence of optimal shapes can be obtained (see for instance [105]). However, sets with finite perimeter may be irregular in general. Hence penalization (1.19) can be regarded as a weak regularization of shape optimization problems. A stronger regularization may be obtained if we impose constraints on the curvatures of \(\Gamma \). This approach was also motivated by the theory of image processing, [77]. The only possible conformally and geometrically invariant penalization functional depending on curvatures is the Willmore functional defined by the equality

where H is the mean curvature of \(\Gamma \). We refer the reader to monographs [53, 115] for the basic theory of surfaces with finite Willmore energy. In the 2D case \({\mathcal {E}}_e\) coincides with the famous Euler elastica functional. Therefore, we can define the strong regularization of an objective function as follows:

Here \(\epsilon _j\), \(j=e,p\), are some positive constants. Note that the penalization term can be interpreted as the cost of manufacturing the structure. Hence the constants \(\epsilon _j\) are not necessarily assumed to be small.
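The two regularizations described above can be written schematically as follows. This is a sketch assembled from the surrounding text, not a quotation: the normalization of the Willmore energy (a factor such as 1/2 or 1/4 in front of the integral varies between references) and the labels \(J_p\), \(J_{\mathrm{reg}}\) for the penalized functionals are assumptions.

```latex
\begin{align*}
  J_p(\Gamma) &= J(\Gamma) + \epsilon_p\,\mathcal{L}(\Gamma)
    && \text{(perimeter, or weak, regularization)},\\
  \mathcal{E}_e(\Gamma) &= \int_\Gamma |H|^2 \, ds
    && \text{(Willmore energy; normalization assumed)},\\
  \mathcal{E}(\Gamma) &= \epsilon_e\,\mathcal{E}_e(\Gamma) + \epsilon_p\,\mathcal{L}(\Gamma),
  \qquad
  J_{\mathrm{reg}}(\Gamma) = J(\Gamma) + \mathcal{E}(\Gamma)
    && \text{(strong regularization)}.
\end{align*}
```

As a quick check of how the two geometric terms compete, take \(\Gamma \) to be a circle of radius R in the plane. With the normalization assumed above, \({\mathcal {L}}=2\pi R\) and \({\mathcal {E}}_e=2\pi /R\), so \(\epsilon _e{\mathcal {E}}_e+\epsilon _p{\mathcal {L}}\) is minimized over circles at \(R=\sqrt{\epsilon _e/\epsilon _p}\): the perimeter term favors small circles, while the elastic term penalizes them.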

Remark 1.1

On the other hand, the influence of the geometric energy penalization on the optimal design should be further studied both from theoretical and numerical points of view. It is known that in the case of level set method with the topological derivatives adding the perimeter requires special construction of the numerical method of solution to obtain useful optimal designs, see [9].

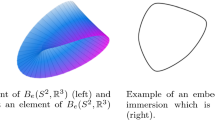

The most important question of the theory is the construction of a robust algorithm for the numerical study of shape optimization problems. The standard approach is to use the steepest descent method based on the shape calculus developed by Sokolowski and Zolesio [104]. See also Delfour and Zolesio [34], and references therein. The shape calculus works for inclusions \( \Omega _i\) with the regular boundary \(\Gamma =\partial \Omega _i \). In this setting, the objective function J is considered as a functional defined on the totality of smooth curves \(\Gamma \). This assumption is natural from the practical point of view. Without loss of generality we may restrict our considerations to the class of twice differentiable immersions (parametrized surfaces, curves) \(f:\mathbb S^{d-1}\rightarrow \mathbb R^d\) with \(\Gamma = f(\mathbb S^{d-1})\) diffeomorphic to the sphere \(\mathbb S^{d-1}\). In this framework, we will use the notation J(f) along with the notation \(J(\Gamma )\). The main goal of the shape calculus is to develop the method of differentiation of objective functions with respect to shapes of geometrical objects.

Following the general method of the shape calculus, we define the shape derivative of an objective function. To this end, choose an arbitrary vector field \(X:\mathbb S^{d-1}\rightarrow \mathbb R^d\) and consider the immersion

The manifolds \(\Gamma ^t=f^t(\mathbb S^{d-1})\), \(t\in (-1,1)\), define the one-parametric family of perturbations of \(\Gamma \). The shape derivative \(\dot{J}\) of J in the direction X is defined by the equality

If it admits the Hadamard representation

where n is the inward normal to \(\Gamma =\partial \Omega _i\), then the vector field

is said to be the gradient of J at the point f. The same definition holds for the geometric energy functional \({\mathcal {E}}\).
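The definitions above can be summarized in formulas. The following is a sketch consistent with the text rather than a quotation: the linear form of the perturbation and the name \(\Phi \) for the scalar density in the Hadamard representation are assumptions.

```latex
\begin{align*}
  f^t &= f + t\,X, \qquad \Gamma^t = f^t(\mathbb{S}^{d-1}), \qquad t\in(-1,1),\\
  \dot{J}[X] &= \frac{d}{dt}\, J(f^t)\Big|_{t=0},\\
  \dot{J}[X] &= \int_\Gamma \Phi\,(n\cdot X)\, ds
  \quad\Longrightarrow\quad
  \mathrm{d}J(f) = \Phi\, n .
\end{align*}
```

With this normalization the shape derivative is the \(L^2(\Gamma )\) pairing of the gradient with the perturbation field, \(\dot{J}[X]=\int _\Gamma \textrm{d}J\cdot X\, ds\), and only the normal component of X contributes.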

1.1 The Steepest Descent Method and the Gradient Flow

It follows from the definition that the shape gradient dJ can be regarded as a normal vector field on \(\Gamma \). If f is sufficiently smooth, for example \(f\in C^{2+\alpha }\), then the mapping \(f+\delta \, \textrm{d}J\,(f)\) defines an immersion of \(\mathbb S^{d-1}\) into \(\mathbb R^d\) for all sufficiently small \(\delta >0\). In the steepest descent method, the optimal immersion f and the corresponding shape \(\Gamma =f(\mathbb S^{d-1})\) are determined as a limit of the sequence of immersions

and the corresponding sequence of surfaces \( \Gamma _n=f_n(\mathbb S^{d-1})\). Here the energy \({\mathcal {E}}\) is defined by (1.21), \(\delta \) is a fixed positive number, usually small, and \(f_0\) is an arbitrary admissible initial shape. Relation (1.25) can be considered as the time discretization of the Cauchy problem

Since \(\mathcal {E}(f(t))+J(f(t))\) is a decreasing function of t, a solution to problem (1.26) can be considered as an approximate solution to the penalized variational problem

Hence the existence of a solution to Cauchy problem (1.26) guarantees the well-posedness of the steepest descent method. In its turn, the existence of the limit \(\lim _{t\rightarrow \infty } f(t)\) guarantees the convergence of the method.
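The scheme and the monotonicity claim can be sketched as follows. The display is a reconstruction under stated assumptions, not a quotation: gradients are taken to be normalized so that \(\dot{J}[X]=\int _\Gamma \textrm{d}J\cdot X\, ds\) (and likewise for \({\mathcal {E}}\)), and the signs are chosen so that the iteration is a descent step.

```latex
\begin{align*}
  f_{n+1} &= f_n - \delta\,\big(\mathrm{d}\mathcal{E}(f_n) + \mathrm{d}J(f_n)\big),
    \qquad n \ge 0,\\
  \partial_t f &= -\big(\mathrm{d}\mathcal{E}(f) + \mathrm{d}J(f)\big),
    \qquad f(0) = f_0,\\
  \frac{d}{dt}\Big(\mathcal{E}(f(t)) + J(f(t))\Big)
    &= \int_{\Gamma(t)} \big(\mathrm{d}\mathcal{E} + \mathrm{d}J\big)\cdot \partial_t f \, ds
     = -\int_{\Gamma(t)} \big|\mathrm{d}\mathcal{E} + \mathrm{d}J\big|^2 \, ds \;\le\; 0 .
\end{align*}
```

The first line is the explicit Euler step of size \(\delta \) for the evolution equation in the second line, and the third line shows why the regularized objective cannot increase along a smooth solution of the flow.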

This paper is devoted to the mathematical aspects of the shape optimization theory. We focus on the theory of gradient flows of objective functions and their regularization. However, a number of important ideas and methods are left out of the scope of this article. For example:

1. Topological optimization, which is based on the concept of a topological derivative used in the level set type method, [30,31,32, 80, 82,83,84,85, 103].

2.

3. Application of direct methods of the calculus of variations using the theory of capacity, [34].

4. Shape optimization problems with uncertainty conditions and random data, [27, 28, 50,51,52].

5. The optimal layout theory in optimum design, [14, 15, 65, 72, 87].

The paper is organized as follows. Shape sensitivity analysis is one of the main tools of the theory of shape optimization. In Sect. 2, we present the outline of main ideas of the shape calculus. In order to be clear, we restrict the considerations to the relatively simple example of the single measurement identification problem. We give the derivation of the basic formulas for the material derivatives of the solutions to this problem and derive the representations of shape derivatives of objective functions. The formulations are given both in the distributed form and in the form of a contour integral in the Hadamard form.

In the general case, shape optimization problems can be attributed to the class of problems with free boundaries of mathematical physics. Such problems are difficult for mathematical analysis. Their numerical solution encounters significant difficulties. There are several approaches that help simplify the problem. In the next two sections, we will cover two of the most popular approaches: the phase field method and the level set method.

Sect. 3 is devoted to modeling shape optimization problems using the phase field (diffusive surface) method. This method allows us to reduce the original problem with a free boundary to a boundary value problem for a weakly nonlinear system of parabolic–elliptic equations. In this section, we give the construction of a phase field approximation for shape optimization problems in rigid body mechanics and viscous fluid dynamics. We will also proceed with the derivation of the phase field equations for the corresponding gradient flows.

Sect. 4 contains a description of the level set method, which is one of the most common methods for studying shape optimization problems. This method is a special algorithm for the numerical solution of optimization problems. It is based on the representation of the moving surface of the gradient flow of an objective function in the form of a solution to the Hamilton–Jacobi equation. A rigorous mathematical justification of the level set method is hardly possible, but it allows constructing efficient numerical algorithms.

In the last Section A, we consider the question of the correctness of the theory of gradient flows for shape optimization problems. For the model problem of identification of the inclusion form we establish the existence of a smooth solution of the equations.

2 Shape Calculus

In this section, we give the outline of the main ideas of the shape calculus theory.

This theory traces its origins to Hadamard’s pioneering paper [49]. Now the shape calculus is one of the main mathematical tools of the general shape optimization theory. We refer the reader to monographs [10, 34, 37, 54, 104, 109] and papers [2, 86, 102] for details and references.

To make the exposition clearer, we restrict our considerations to the 2D single measurement identification problem and the simplest scalar version of the compliance problem. We start with the analysis of the shape derivative of the transmission problem for the Laplace equation.

Assume as before that an electric field occupies a simply connected bounded domain \(\Omega \subset \mathbb R^2\) with smooth Jordan boundary \(\partial \Omega \). Furthermore, assume that an inclusion occupies a bounded domain \(\Omega _i\) with \(\overline{\Omega }_i\subset \Omega \). Denote by \(\Gamma \) the boundary of \(\Omega _i\) and set \(\Omega _e=\Omega \setminus \overline{\Omega }_i\). Suppose also that the conductivity \(a:\Omega \rightarrow \mathbb R\) satisfies the condition

The problem is to find the electric field \(u:\Omega \rightarrow \mathbb R \) satisfying the following equations and boundary conditions:

Here \(u^-\) and \(u^+\) are restrictions of u on \(\Omega _e\) and \(\Omega _i\), \(\nu \) is the outward normal vector to \(\partial \Omega \), n is the inward normal vector to \(\partial \Omega _i=\Gamma \), g is a given voltage.

Further we will assume that the given function g satisfies the solvability condition

These equations can be rewritten in the equivalent form

Equations (2.1.4) are in divergence form and admit a weak solution, which is defined as follows. We say that the function \(u\in W^{1,2}(\Omega )\) is a weak solution to problem (2.1.4) if the integral identity

holds for every function \(\zeta \in W^{1,2}(\Omega )\). It is well known that for every \(g\in L^2(\partial \Omega )\) satisfying solvability condition (2.1.3), problem (2.1.4) has a unique solution satisfying the orthogonality condition

This solution admits the estimate
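For orientation, a weak formulation of this type usually takes the following shape. The display is a sketch, not a quotation: the sign convention for the Neumann datum (here \(a\,\partial _\nu u=g\) on \(\partial \Omega \)) and the generic constant c are assumptions, while the zero-average normalization over \(\partial \Omega \) agrees with the function space used later in this section.

```latex
\begin{align*}
  &\int_\Omega a\,\nabla u\cdot\nabla\zeta\,dx = \int_{\partial\Omega} g\,\zeta\,ds
    \quad \text{for all } \zeta\in W^{1,2}(\Omega),\\
  &\int_{\partial\Omega} g\,ds = 0
    \quad\text{(solvability; necessary by taking } \zeta\equiv 1\text{)},
  \qquad
  \int_{\partial\Omega} u\,ds = 0
    \quad\text{(normalization)},\\
  &\|u\|_{W^{1,2}(\Omega)} \le c\,\|g\|_{L^2(\partial\Omega)} .
\end{align*}
```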

2.1 Material Shape Derivative of Solution to Problem (2.1.4)

The definition of the shape derivatives of solutions to problem (2.1.4) is based on the following construction.

Choose an arbitrary mapping \(\varphi : \Omega \rightarrow \mathbb R^2\) of the class \(C^\infty _0(\Omega )\) and consider the family of \(C^\infty \) mappings \(y^t:\Omega \rightarrow \Omega \) defined by the equality

By the contraction mapping principle, the mapping \(y^t\) takes the domain \(\Omega \) diffeomorphically onto itself for all t in a small interval \((-t^*,t^*)\). Here the small positive \(t^*\) depends only on \(\varphi \). Obviously, \(y^t\) coincides with the identity mapping outside of the support of \(\varphi \). Moreover, it is an analytic function of t in the interval \((-t^*, t^*)\).
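The family of mappings and the reason it is invertible for small t can be sketched as follows. The explicit form \(y^t=I+t\varphi \) matches the one used later in the text; the assumption that \(\varphi \) is compactly supported in \(\Omega \) (so that it can be extended by zero to \(\mathbb R^2\)) and the particular threshold \(t^*\) are one admissible choice, not necessarily the authors'.

```latex
\begin{align*}
  y^t(x) &= x + t\,\varphi(x), \qquad x\in\Omega,\\
  |y^t(x_1) - y^t(x_2)|
    &\ge \big(1 - |t|\,\|\mathrm{d}\varphi\|_{C(\mathbb{R}^2)}\big)\,|x_1 - x_2|,\\
  \det\big(I + t\,\mathrm{d}\varphi(x)\big) &> 0
    \quad\text{for } |t| < t^* := \big(2\,\|\mathrm{d}\varphi\|_{C(\mathbb{R}^2)}\big)^{-1}.
\end{align*}
```

The second line gives injectivity, the third gives local invertibility, and since \(y^t\) is the identity near \(\partial \Omega \), together they indicate why \(y^t\) maps \(\Omega \) diffeomorphically onto itself.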

The diffeomorphism \(y^t\) defines the one-parametric families of the sets \(\Omega ^t_i\), \(\Gamma ^t\), and the functions \(a^t\),

They can be regarded as the perturbations of the inclusion \(\Omega _i\), the interface \(\Gamma \), and the conductivity coefficient a. The perturbed electric potential \(u^t(y)\) serves as a weak solution to the elliptic boundary value problem

It is clear that \(u\equiv u^0\) is a weak solution to problem (2.1.4), (2.1.6). In other words, \(u^t\) defines the perturbation of the original solution u. The calculation of the derivative of \(u^t\) with respect to t (the Eulerian derivative) is difficult, since the set of discontinuity of the coefficient \(a^t\) strongly depends on t. Hence the derivative \(\partial _t u^t\) at \(t=0\) can be defined only outside of \(\Gamma \). In order to cope with this difficulty, the theory of shape calculus deals with the so-called material derivative, which is defined as follows. Introduce the one-parametric family of functions \(v^t:\Omega \rightarrow \mathbb R\) given by the equality

The material derivative \(\dot{u}:\Omega \rightarrow \mathbb R\) is defined by the relation

Here u is a solution to problem (2.1.4), (2.1.6). The limit is taken in some suitable Banach space. In our case, an appropriate space is \(W^{1,2}(\Omega )\).
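In formulas, the construction of the material derivative can be sketched as follows. The first two lines follow the standard definition in shape calculus and are a reconstruction from the text; the third line is the formal chain-rule relation with the Eulerian derivative, here denoted \(u'\) (a notation not used in the source), valid where u is smooth.

```latex
\begin{align*}
  v^t(x) &= u^t\big(y^t(x)\big) = u^t\big(x + t\,\varphi(x)\big), \qquad x\in\Omega,\\
  \dot{u} &= \lim_{t\to 0}\frac{v^t - u}{t} \quad\text{in } W^{1,2}(\Omega),\\
  \dot{u} &= u' + \nabla u\cdot\varphi \quad\text{away from } \Gamma,
  \qquad u' := \partial_t u^t\big|_{t=0}.
\end{align*}
```

Pulling \(u^t\) back to the fixed domain is what removes the moving discontinuity of \(a^t\): the functions \(v^t\) all solve problems with the same coefficient jump across the fixed curve \(\Gamma \).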

Now our task is to obtain the effective representation for the derivatives \(\dot{u}\) and \(\ddot{u}\). Recall that \(u^t\) is a solution to boundary problem (2.2.3). The change of the independent variable in (2.2.3) leads to the following equations for the function \(v^t\).

Here the symmetric positive matrix N is defined by the equalities

where the notation \(\textrm{d}\varphi \) stands for the Jacobi matrix of the mapping \(\varphi \). By virtue of the Neumann theorem, we have

It follows that the matrix N admits the decomposition

Note that for a suitable choice of \(t^*\), the series in the right-hand side converges in any space \(C^j(\mathbb R^2)\), \(0\le j<\infty \). Calculations give the following representations for the first two terms in decomposition (2.2.8).
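A direct computation of the first terms of such an expansion can be sketched as follows. This is a reconstruction under stated assumptions, not a quotation: N is taken to be the matrix produced by the change of variables \(y=x+t\varphi (x)\) in the Dirichlet form, the Jacobi matrix is written \(D=\textrm{d}\varphi \) with entries \(D_{ij}=\partial \varphi _i/\partial x_j\), and the dimension is two.

```latex
\begin{align*}
  N(t) &= \det(I + tD)\,(I + tD)^{-1}(I + tD)^{-\top},
    \qquad D := \mathrm{d}\varphi,\\
  (I + tD)^{-1} &= \sum_{k\ge 0} (-t)^k D^k,
    \qquad
  \det(I + tD) = 1 + t\,\operatorname{tr} D + t^2 \det D,\\
  N(t) &= I + t\,\mathbf{S}_1 + t^2\,\mathbf{S}_2 + \dots,\\
  \mathbf{S}_1 &= (\operatorname{div}\varphi)\,I - D - D^\top,\\
  \mathbf{S}_2 &= (\det D)\,I - (\operatorname{tr} D)\,(D + D^\top)
                 + D^2 + (D^\top)^2 + D\,D^\top .
\end{align*}
```

Both coefficients are symmetric matrices, as they must be for a symmetric N, and \(\operatorname{tr} D=\operatorname{div}\varphi \).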

The following lemma shows that the formula for \({\textbf{S}}_2\) can be essentially simplified.

Lemma 2.1

Under the above assumptions, we have

Proof

Introduce the temporary notation

It is necessary to prove that

We begin with the observation that

which yields

On the other hand, we have

We thus get

Next, we have

Combining this result with (2.2.12) we arrive at the identity

which obviously yields the desired equality (2.2.11). \(\square \)
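For orientation, in two dimensions the Cayley–Hamilton theorem gives a short route to a simplification of this kind. The sketch below assumes that \({\textbf{S}}_2\) is the second-order coefficient in the expansion of \(\det (I+tD)\,(I+tD)^{-1}(I+tD)^{-\top }\) with \(D=\textrm{d}\varphi \), \(D_{ij}=\partial \varphi _i/\partial x_j\); the resulting form may differ in notation or convention from the statement of the lemma.

```latex
\begin{align*}
  D^2 - (\operatorname{tr} D)\,D + (\det D)\,I &= 0
    \qquad (\text{Cayley--Hamilton},\ D\in\mathbb{R}^{2\times 2}),\\
  D^2 - (\operatorname{tr} D)\,D
    = -(\det D)\,I,
  \qquad
  (D^\top)^2 - (\operatorname{tr} D)\,D^\top &= -(\det D)\,I,\\
  (\det D)\,I - (\operatorname{tr} D)\,(D + D^\top) + D^2 + (D^\top)^2 + D\,D^\top
    &= D\,D^\top - (\det D)\,I .
\end{align*}
```

A one-line check: for \(D=\begin{pmatrix}0&1\\0&0\end{pmatrix}\) the trace and determinant vanish, \(D^2=0\), and both sides of the last identity reduce to \(D\,D^\top \).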

Let us turn to the derivation of the representations for \(\dot{u}\) and \(\ddot{u}\). Notice that a weak solution to problem (2.2.6) satisfies the integral identity

for all test functions \(\zeta \in W^{1,2}(\Omega )\). The first integral in the left-hand side of this integral identity defines a positive continuous sesquilinear form in the Hilbert space of all functions \(v\in W^{1,2}(\Omega )\) with zero average over \(\partial \Omega \). Moreover, this form is an analytic function of the parameter t on the interval \((-t^*, t^*)\). It follows from the analytic theory of perturbations of self-adjoint operators, [59], ch. 7, that the weak solution \(v^t\) to problem (2.2.13) is an analytic function of the parameter \(t\in (-t^*,t^*)\). Moreover, \(v^t\) admits the representation

The series in the right-hand side converges strongly in the space \(W^{1,2}(\Omega )\). It is clear that the material derivative \(\dot{u}[\varphi ]\) and the second material derivative \(\ddot{u}[\varphi ,\varphi ]\) in the direction of the vector field \(\varphi \) are defined by the equalities

In order to complete the derivation of the material derivatives of u, it remains to obtain the equations for \(v_1\) and \(v_2\). Substituting decompositions (2.2.8) and (2.2.14) into (2.2.13) and retaining the first three terms in the obtained equality we conclude that the integral identities

hold for all test functions \(\zeta \in W^{1,2}(\Omega )\). It follows that \(v_1\) and \(v_2\) serve as weak solutions to the boundary value problems

Equations (2.2.18) and (2.2.19) along with relations (2.2.15) define the material shape derivative of u.

2.2 Distributed Shape Derivatives of the Kohn–Vogelius Functional

We define the objective function J by the equality

where u is the solution to problem (2.1.4), (2.1.6). The diffeomorphism \(y^t(x)=(I+t\varphi )(x)\), \(\varphi \in C^\infty _0(\Omega )\), takes the curve \(\Gamma \), the inclusion \(\Omega _i\), and the coefficient a to the perturbed \(\Gamma ^t\), \(\Omega _i^t\), and \(a^t\) given by relations (2.2.2). Let \(u^t\) be a solution to perturbed problem (2.2.3). Thus we get the one-parametric family J(t) of perturbations of J given by the equalities

where N and \(v^t\) are defined by (2.2.6) and (2.2.7). The shape derivatives of the functional J in the direction \(\varphi \) are given by the equalities

In order to obtain the representation for the shape derivatives of J, we substitute the decompositions (2.2.8) and (2.2.14) into (2.3.1) to obtain

It is clear that the power series at the right-hand side converges on the interval \((-t^*, t^*)\). Thus we get

The direct calculations show that

On the other hand, identities (2.2.16) and (2.2.17) with \(\zeta \) replaced by u and \( v_1\) imply

Substituting these equalities into (2.3.3) and recalling relations (2.2.15) we finally obtain

Equalities (2.3.4) give the desired representation for the shape derivatives of J.
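The mechanism behind the first-order formula can be illustrated on a model case. The sketch below rests on assumptions that are not taken from the source: the objective function is assumed to be the energy \(J=\int _\Omega a|\nabla u|^2\,dx\), the field \(\varphi \) is assumed to vanish near \(\partial \Omega \) (so the boundary term of the weak form does not depend on t), and the expansions are written as \(N=I+t{\textbf{S}}_1+\dots \), \(v^t=u+tv_1+\dots \).

```latex
\begin{align*}
  J(t) &= \int_\Omega a\,N\nabla v^t\cdot\nabla v^t\,dx
        = J(0) + t\Big(\int_\Omega a\,\mathbf{S}_1\nabla u\cdot\nabla u\,dx
          + 2\int_\Omega a\,\nabla u\cdot\nabla v_1\,dx\Big) + O(t^2),\\
  0 &= \int_\Omega a\,\big(\nabla v_1 + \mathbf{S}_1\nabla u\big)\cdot\nabla\zeta\,dx
     \quad\text{for all } \zeta\in W^{1,2}(\Omega)
     \quad(\text{first-order terms of the weak form}),\\
  \dot{J}[\varphi] &= -\int_\Omega a\,\mathbf{S}_1\nabla u\cdot\nabla u\,dx
     \quad(\text{take } \zeta = u \text{ and use the symmetry of } \mathbf{S}_1).
\end{align*}
```

The point of the computation is that the material derivative \(v_1\) drops out of the final expression: the first-order shape derivative is expressed through the unperturbed state u and the field \(\varphi \) alone.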

2.3 Hadamard Representation of the First-Order Derivative of the Kohn–Vogelius Functional

The representation of the shape derivatives of the Kohn–Vogelius functional given by formulae (2.3.4) depends on the vector field \(\varphi \) defined in the whole domain \(\Omega \). However, the perturbation of J must depend only on the perturbation of the interface \(\Gamma \). Therefore, we may expect that the shape derivatives depend only on the restriction of the vector field \(\varphi \) to \(\Gamma \). In other words, the integrals in (2.3.4) should be independent of the choice of extension of \(\varphi |_\Gamma \) to \(\Omega \). This means that the area integrals in (2.3.4) can be reduced to integrals over the interface \(\Gamma \). This leads to the so-called Hadamard formulae for the shape derivatives. In this subsection we obtain the Hadamard representation for the first-order derivative \(\dot{J}\). Our considerations are based on the following auxiliary lemma.

Assume that \(\Gamma \) is a \(C^2\) Jordan curve. Let us consider two vector fields \({\textbf{p}}, {\textbf{q}}:\Omega \rightarrow \mathbb R^2\) satisfying the following conditions:

where \({\textbf{p}}^-\), \({\textbf{q}}^-\) are the restrictions of \({\textbf{p}}\), \({\textbf{q}}\) to \(\Omega _e\) and \({\textbf{p}}^+\), \({\textbf{q}}^+\) are the restrictions of \({\textbf{p}}\), \({\textbf{q}}\) to \(\Omega _i\). We denote by \(\big [\cdot \big ]\) the jump across the interface \(\Gamma \). For example, we have

Next set

Similar notation is used for \({\textbf{q}}\).

Lemma 2.2

Under the above assumptions we have

where

Proof

Note that

From this and the equalities

we conclude that

Integrating by parts we obtain

Next, we have

Substituting this result into (2.4.6) we finally arrive at the desired identity (2.4.4). \(\square \)

We are now in a position to derive the representation for the first derivative of the objective function J. The result is given by the following proposition.

Proposition 2.3

Let a weak solution u to problem (2.2.6) and the interface \(\Gamma \) satisfy the condition

Then we have

Proof

The proof is based on Lemma 2.2. Set

Since \(a=\text {const}\) in domains \(\Omega _e\) and \(\Omega _i\), u is a harmonic function in these domains. It follows that

Applying Lemma 2.2 we obtain

Since u and \(a\nabla u\) are continuous in \(\Omega \), we have

Substituting this result into (2.4.9) and recalling formula (2.3.4) for \(\dot{J}\) we finally obtain

and the proposition follows. \(\square \)

Recall that the gradient \(\textrm{d}J\) of an arbitrary objective function is defined by the equalities

Thus we get the following:

Corollary 2.4

Under the assumptions of Proposition 2.3, we have

Remark 2.5

Let us consider the “complementary” problem with the Dirichlet boundary condition for the functional

where \(w\in W^{1,2}(\Omega )\) is a solution to the Dirichlet problem

Arguing as in the proof of Corollary 2.4 we obtain the following equality:

In particular, representations (2.4.10) and (2.4.11) for the Neumann and Dirichlet problems differ only in sign. Obviously the integral

is independent of \(\Gamma \). From this and from (2.4.10) and (2.4.11) we obtain formula (2.5.17) for the gradient of the Kohn–Vogelius functional

2.4 The Second-Order Shape Derivative of Kohn–Vogelius Functional

In this subsection we derive the Hadamard representation for the second-order derivative \(\ddot{J}\). The result is given by the following proposition.

Proposition 2.6

Let all conditions of Proposition 2.3 be satisfied. Furthermore assume that the weak solutions \(v_i\), \(i=1,2,\) to problems (2.2.18) and (2.2.19) satisfy the condition

Then we have

where

We split the proof into a sequence of lemmas.

Lemma 2.7

Under the assumptions of Proposition 2.6, we have

where \({\textbf{D}}_1\) is given by (2.5.3).

Proof

It follows from (2.3.4) that

Next, we apply Lemma 2.2 with

to obtain

Since \(a=\text {const.}\) in \(\Omega _e\) and \(\Omega _i\), it follows from equations (2.1.4), (2.2.18) that

in \(\Omega _i\cup \Omega _e\). Thus we get

Substituting this relation into (2.5.5) we finally obtain the desired equality (2.5.4). \(\square \)

Lemma 2.8

Under the assumptions of Proposition 2.6, we have

where

Proof

Note that

It follows that

It remains to note that

and the lemma follows. \(\square \)

Lemma 2.9

Under the assumptions of Proposition 2.6, we have

where

Proof

Note that

We thus get

It remains to note that

and the lemma follows. \(\square \)

Lemma 2.10

Under the assumptions of Proposition 2.6, we have

Proof

Since u is a harmonic function in \(\Omega \setminus \Gamma \), it follows from (2.5.6) and (2.5.9) that

Next, we have

Repeating these arguments we conclude that equalities (2.5.10) hold for \(\mathcal {A}_{22}+{\mathcal {B}}_{22}\) and \({\mathcal {A}}_{21}+{\mathcal {B}}_{21}\). \(\square \)

Lemma 2.11

Under the assumptions of Proposition 2.6, we have

Proof

Recall that

From this and equalities (2.5.6), (2.5.8) we conclude that

Equalities (2.5.10) in Lemma 2.10 imply

Substituting this result into (2.5.12) we arrive at the desired equality (2.5.11). \(\square \)

Lemma 2.12

Under the assumptions of Proposition 2.6, we have

Proof

We begin with the observation that

We have

Since \(\Delta u=0\) in \(\Omega \setminus \Gamma \), straightforward calculations lead to the representation

where

Substituting the expression for \({\mathcal {R}}_i\) into (2.5.14) and noting that

we finally obtain equality (2.5.13). \(\square \)

Lemma 2.13

Under the assumptions of Proposition 2.6, we have

where \({\mathcal {D}}_i\) are given by (2.5.7) and (2.5.9).

Proof

It follows from Lemma 2.11 that

On the other hand, Lemma 2.12 yields

Combining these equalities we arrive at (2.5.15). \(\square \)

We are now in a position to complete the proof of Proposition 2.6. Equality (2.5.4) reads

Since a is a constant in each component of \(\Omega {\setminus } \Gamma \), equality (2.5.15) implies

It follows from (2.5.7) and (2.5.9) that

Noting that

and

with \(\varphi ^\bot =(-\varphi _2,\varphi _1)\) we get

This leads to the equality

Substituting this relation into (2.5.16) we finally arrive at the desired representation

where \({\textbf{D}}_i\), \(i=1,2\), are defined by (2.5.3). This completes the proof of Proposition 2.6.

The Hadamard representation (2.4.8) of the first derivative \(\dot{J}\) depends only on the restriction of the perturbation \(\varphi \) to the interface \(\Gamma \), i.e., \(\dot{J}\) is localized on \(\Gamma \). For the second-order derivative this fact is not obvious, since representation (2.5.2) contains the nonlocal terms \(\dot{u}\) and \(\nabla _n\varphi \). The following lemma, which we present without proof, establishes the localization property for \(\ddot{J}\).

Lemma 2.14

Assume that all conditions of Proposition 2.6 are satisfied. Let \(\psi :\Omega \rightarrow \mathbb R^2\) belong to the class \(C^\infty _0(\Omega )\) and vanish on \(\Gamma \). Then for every vector field \(\varphi \in C^\infty _0(\Omega )\) we have

Remark 2.15

Formula (2.5.2) defines the quadratic differential \(d^2 J\). The Hessian of J is the bilinear form \({\mathcal {H}}\) defined by the equality

2.5 Examples

For many objective functions, explicit expressions for their gradients are available. Below we list some of them.

2.5.1 Transmission Single Measurement Identification Problem

In this case the gradient \(\textrm{d}J\) of the Kohn–Vogelius objective function (1.7) is defined as follows, see [2]

where \(\big [\cdot \big ]\) denotes the jump across \(\Gamma \), n is the inward normal to \(\partial \Omega _i=\Gamma \), and v and w are solutions to equations (1.8).

2.5.2 Single Measurement Identification Problem with Void

In this case the gradient of the objective function is defined by the equality, [97],

where v and w are solutions to problem (1.10).

2.5.3 Drag Minimization Problem for Navier–Stokes Equations

In the drag minimization problem, the objective function \(J={\textbf{F}}_D\) is defined by formulae (1.17) and (1.18). The analysis of the shape derivative and the gradient of J has been conducted in [11, 92]. In particular, [92] gives the following expression for \(\textrm{d}J\),

The state (u, p) and the costate (w, q) are given by the solutions to the boundary value problems

Hence the shape gradient \(\textrm{d}J\), in contrast to the shape gradients of Kohn–Vogelius type functionals, depends on shape derivatives of solutions to the governing equations. It is important to note that formula (2.5.19) makes sense if and only if solutions to problems (2.5.20) and (2.5.21) are unique. The uniqueness criterion was discussed in [92] and [47]. We only note that solutions are unique if the viscosity \(\nu \) is sufficiently large with respect to \(|u_\infty |\) and the diameter of \(\Omega \).

3 Phase Field Models in the Shape Optimization Theory

3.1 Preliminaries

In the previous sections, we considered shape optimization problems in a fixed hold-all domain \( \Omega \subset \mathbb R^d\), \(d=2,3\), containing an unknown inclusion \(\Omega _i\Subset \Omega \). The goal was to find the shape of \(\Omega _i\) in order to minimize the objective function J, depending on \(\Omega _i\) and some physical field u defined in \(\Omega \) or in \(\Omega _i\) or in \(\Omega _e=\Omega \setminus \overline{\Omega _i}\). The unknown inclusion is completely characterized by the design variable \(\rho :\Omega \rightarrow \{-1,1\}\) defined by the equality

\[\rho =2\chi _i-1, \qquad (3.1)\]

where \(\chi _i\) is the characteristic function of \(\Omega _i\). Hence \(\rho =1\) in \(\Omega _i\) and \(\rho =-1\) in \(\Omega _e\).

The main idea of the phase field method (diffuse interface method) is to replace the discontinuous design variable \(\rho \) by a continuous phase field function (an order parameter) \(\varphi :\Omega \rightarrow \mathbb R\). The interface \(\Gamma \) between the material components in the phase field approximation can be roughly represented as a small neighborhood of the level set \(\{\varphi =0\}\), called the diffuse interface. The domains \(\Omega _i\) and \(\Omega _e\) occupied by different materials approximately correspond to the sets \(\{\varphi >0\}\) and \(\{\varphi <0\}\). Hence no restrictions are imposed on the topology of the diffuse interface. In addition, the boundaries of the sets occupied by different components may intersect the boundary of the hold-all domain \(\Omega \). The latter is important for the analysis of the compliance problem in solid mechanics, see [12, 13] for the statement of the compliance problem. Thus, the phase field theory is one of the most appropriate mathematical tools for solving topological optimization problems.

The phase field method was first developed as a way to represent the surface dynamics of phase transition phenomena. It dates back to the historical papers [7, 20]. This method has been used in many interface dynamics studies as a general interface tracking method. The first application of the phase field method to the structural optimization problem was given in [16]. The idea of applying the phase field method to shape optimization in hydrodynamics was first proposed in [47]. In this section, we briefly describe the possible applications of the phase field theory to the basic shape optimization problems. It is important to note that the phase field method works only for penalized problems with the perimeter and/or elastic penalization. Hence, we first consider the phase field approximation for geometric problems. Throughout this section the notation W stands for the Ginzburg–Landau potential

Phase field approximation of geometric problems. In this paragraph, we consider the phase field approximation for the area functional and the Willmore–Helfrich functional. The approach is based on the general theory of \(\Gamma \)-limits. We refer the reader to monographs [17, 25] for the state of the art in this area. Following [17], the \(\Gamma \)-limit of a sequence of functionals is defined as follows.

Definition 3.1

Let us consider a family of functionals \(F_\varepsilon : X\rightarrow [-\infty , \infty ]\), \(\varepsilon \in (0,1)\), defined on a topological space X. We say that \(F_\varepsilon \) \(\Gamma \)-converges to \(F: X \rightarrow [-\infty , \infty ]\) at \(x \in X\) as \(\varepsilon \rightarrow 0\) if we have

where \({\mathcal {N}}(x)\) denotes the family of all neighborhoods of x in X. In this case we say that F(x) is the \(\Gamma \)-limit of \(F_\varepsilon \) at x and we write

If this equality holds for all \(x \in X\) then we say that \(F_\varepsilon \) \(\Gamma \)-converges to F (on the whole X).

The phase field approximation of the area functional was first considered in paper [74], see also [73]. In these papers, it was shown that for every bounded Lipschitz domain \(\Omega \subset \mathbb R^d\), \(d=2,3\), the \(\Gamma \)-limit in \(L^1(\Omega )\) of the energy functional

and \(F_\varepsilon (\varphi )=\infty \) otherwise, admits the representation

Here \({\mathcal {H}}_{d-1}\) is \((d-1)\)-dimensional Hausdorff measure, \(\partial ^*\) is the essential De Giorgi boundary, see [17], the constant \(c_W\) is defined by the equality

The question of the phase field approximation of the Willmore–Helfrich functional was raised by De Giorgi, [29], Conjecture 4. Later De Giorgi’s conjecture was modified in the context of the phase transition theory, see [98] and references therein. The modified De Giorgi’s problem can be formulated as follows. Let \(\Omega \subset \mathbb R^d\) be a bounded domain with Lipschitz boundary, let W be the double-well potential defined by (3.2). For every \(\epsilon >0\) and \(\gamma >0\) define the functional \({\mathcal {F}}_\varepsilon : L^1(\Omega )\rightarrow \mathbb R\) by the equalities

if \(\varphi \in W^{2,2}(\Omega )\) and \(\mathcal F_\varepsilon (\varphi )=\infty \) if \(\varphi \in L^1(\Omega ){\setminus } W^{2,2}(\Omega )\). Now introduce a set \(\Omega _i\subset \Omega \) such that \(\partial \Omega _i\cap \Omega \) belongs to the class \(C^2\). Denote by \(\rho \) the design variable given by (3.1). In [98] it was proved that

where

Here \({\textbf{H}}\) is the mean curvature of \(\partial \Omega _i\cap \Omega \). The requirement that the interface \(\Omega \cap \partial \Omega _i\) be smooth is essential. There are numerous examples of two-dimensional Euler’s elastica with singular points. The phase field approximation does not work in neighborhoods of such points. It should also be noted that the phase field approximation (3.4) is neither the only one nor the most accurate one. For a discussion of the problem, see for instance [18] and references therein.
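The \(\Gamma \)-limit statement for the area functional can be checked numerically in one dimension, where a single transition point has \({\mathcal {H}}_0\)-measure one. The Python sketch below is only an illustration under stated assumptions: it takes the normalization \(W(s)=(1-s^2)^2/4\), the energy density \(\frac{\varepsilon }{2}|\varphi '|^2+\frac{1}{\varepsilon }W(\varphi )\), and \(c_W=\int _{-1}^{1}\sqrt{2W(s)}\,ds\). The constants in (3.2) and (3.3) may be normalized differently. The profile \(\tanh (x/(\varepsilon \sqrt{2}))\) is the optimal transition layer for this normalization.

```python
import math

def W(s):
    """Double-well potential; this normalization is an assumption of the sketch."""
    return 0.25 * (1.0 - s * s) ** 2

def energy(eps, half_width=1.0, cells=20000):
    """Energy of the profile tanh(x / (eps * sqrt(2))) on (-half_width, half_width)."""
    h = 2.0 * half_width / cells
    scale = eps * math.sqrt(2.0)
    total = 0.0
    for k in range(cells):
        xl = -half_width + k * h
        xr = xl + h
        pl, pr = math.tanh(xl / scale), math.tanh(xr / scale)
        dphi = (pr - pl) / h      # cell-wise difference quotient
        mid = 0.5 * (pl + pr)     # cell-wise mean value of the profile
        total += (0.5 * eps * dphi * dphi + W(mid) / eps) * h
    return total

# c_W = integral of sqrt(2 W(s)) over (-1, 1) = 2 * sqrt(2) / 3 for this W
c_W = 2.0 * math.sqrt(2.0) / 3.0
for eps in (0.05, 0.02):
    print(eps, energy(eps), c_W)
```

The energy of the profile stays close to \(c_W\) for both values of \(\varepsilon \), in agreement with the limit \(c_W{\mathcal {H}}_{d-1}\) of a single interface point.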

It follows from what was mentioned above that the functionals (3.3) and (3.4) define the phase field approximations of the area and Willmore–Helfrich functionals. For sufficiently smooth phase functions \(\varphi \) and smooth compactly supported perturbations \(\delta \varphi \), the \(L^2\)-gradients \(dF_\epsilon \) and \(d\mathcal F_\epsilon \) of the functionals \(F_\varepsilon \) and \(\mathcal F_\epsilon \) are defined by the equalities

Calculations show that

where the chemical potential \(\varvec{\mu }\) is defined by

Gradient flow. There is a massive body of literature devoted to the \(L^2\)-gradient flow (mean curvature flow) of the area phase field approximation \(F_\epsilon \) given by (3.3). We refer the reader to papers [57, 58] for details. The gradient flow of the Willmore functionals was considered in the papers [38, 69], and [44]. It follows from these papers that the \(L^2\)-gradient flow equation for the functional \({\mathcal {F}}_\varepsilon \) reads

where \(\varvec{\mu }_\epsilon \) is given by (3.5). For the sake of simplicity, we take \(\epsilon =1\) and rewrite this equation in the form

The natural boundary and initial conditions for equation (3.6) can be taken in the form

Equation (3.6) along with boundary and initial conditions (3.7) determines a well-posed boundary value problem for a weakly nonlinear fourth-order parabolic equation. The global existence and uniqueness of strong solutions for this and more general phase field models are proved in [23].
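The dissipative structure of such flows is easiest to see on the simpler second-order \(L^2\)-gradient flow of the area approximation mentioned at the beginning of this paragraph, rather than on the fourth-order equation (3.6). The Python sketch below integrates \(\partial _t\varphi =\varepsilon \varphi ''-W'(\varphi )/\varepsilon \) in one dimension with homogeneous Neumann conditions and records the discrete energy. The potential \(W(s)=(1-s^2)^2/4\), the scaling of the equation, the explicit Euler scheme, and the initial data are assumptions of the sketch.

```python
import math

def dW(s):
    """Derivative of the assumed potential W(s) = (1 - s^2)^2 / 4."""
    return s * s * s - s

def energy(phi, h, eps):
    """Discrete energy: gradient part on the edges, potential part by the trapezoid rule."""
    e = 0.0
    for k in range(len(phi) - 1):
        d = (phi[k + 1] - phi[k]) / h
        e += 0.5 * eps * d * d * h
    last = len(phi) - 1
    for k, p in enumerate(phi):
        weight = 0.5 if k in (0, last) else 1.0
        e += weight * 0.25 * (1.0 - p * p) ** 2 / eps * h
    return e

def step(phi, h, eps, dt):
    """One explicit Euler step; mirrored end values impose the Neumann condition."""
    n = len(phi)
    new = [0.0] * n
    for k in range(n):
        left = phi[k - 1] if k > 0 else phi[1]
        right = phi[k + 1] if k < n - 1 else phi[n - 2]
        lap = (left - 2.0 * phi[k] + right) / (h * h)
        new[k] = phi[k] + dt * (eps * lap - dW(phi[k]) / eps)
    return new

eps, n = 0.05, 201
h = 2.0 / (n - 1)
dt = 0.2 * h * h / eps  # small enough for stability of the explicit scheme
phi = [0.5 * math.sin(math.pi * (-1.0 + k * h)) + 0.2 * math.cos(3.0 * math.pi * (-1.0 + k * h))
       for k in range(n)]

energies = [energy(phi, h, eps)]
for _ in range(500):
    phi = step(phi, h, eps, dt)
    energies.append(energy(phi, h, eps))
print(energies[0], energies[-1])
```

The recorded energies form a non-increasing sequence, which is the discrete counterpart of the energy dissipation that underlies the well-posedness results cited above.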

3.2 Phase Field Approximations of Objective Functions

Two-component problems. The main goal of the shape optimization theory is minimizing an objective function J as well as minimizing its penalization \({\mathcal {E}}+J\). Therefore, in order to develop a theory of the phase field approximation for shape optimization problems, it is necessary to determine the phase field approximations of the objective function J. It is important to note that the theory of the phase field models can be developed only for penalized objective functions \({\mathcal {E}}+J\) or \({\mathcal {L}}+J\). In the previous paragraph, we considered the phase field approximations of geometric functionals \({\mathcal {L}}\) and \({\mathcal {E}}\). Now, we give several examples of phase field approximations for the objective functions J listed in Sect. 1.

The identification problem. We start with the simplest example of an identification problem for transmission equations, which was formulated at the beginning of Sect. 1. For this problem, the objective function coincides with the Kohn–Vogelius functional. By virtue of the definition of the design variable \(\rho \), the expression for the Kohn–Vogelius functional reads

Here the function \(a:\{-1,1\}\rightarrow \{1,a_0\} \) is defined by the equalities

We restrict our considerations to the simple version of the identification problem formulated in Sect. 1 with

In this setting, the functions v and w satisfy the equations and boundary conditions

where \(h\in W^{1/2,2}(\partial \Omega )\), \(g\in L^2(\partial \Omega )\) are given functions and n is the outward normal to \(\partial \Omega \). We also add the following conditions:

which guarantee the solvability and uniqueness of solutions to problem (3.8). In the theory of the phase field models, the discontinuous design variable \(\rho \) is replaced by a continuous phase function \(\varphi \). Therefore, it is most natural to choose the phase approximation of the conductivity \(a(\rho )\) in the form \(a=a(\varphi )\), where the monotone function \(a\in C^\infty (\mathbb R)\) satisfies the conditions

Thus we arrive at the following expression for the phase field approximation of the Kohn–Vogelius functional

where the functions v and w satisfy the equations and boundary conditions

and additional conditions (3.9). It should be noted that the solutions to problems (3.11) are uniquely determined by the phase field \(\varphi \). Hence \(J=J(\varphi )\) is a function of the phase variable.

Now, our task is to derive the expression for the gradient of the Kohn–Vogelius functional (3.10). Recall that the gradient \(\textrm{d}J\) of an objective function J is defined by the equality

where \(\psi \) is an arbitrary smooth function. The gradient of the phase field approximation of the Kohn–Vogelius functional is given by the following lemma.

Lemma 3.2

For every \(\varphi \in C^2(\Omega )\), the gradient \(\textrm{d}J\) of the functional J defined by (3.10) and (3.11) admits the representation

where v and w are solutions to boundary value problems (3.11) with the additional conditions (3.9).

Proof

Without loss of generality we may assume that \(\varphi \in C^\infty (\Omega )\). Fix an arbitrary \(\psi \in C^\infty (\Omega )\) and consider the one-parametric family of coefficients

Denote by \(v_s, w_s\in W^{1,2}(\Omega )\) the solutions to the boundary value problems

Since \(a_s\) is an infinitely differentiable function of s, it follows from the general theory of elliptic equations, see [59], that \(v_s\) and \(w_s\) are infinitely differentiable functions of s with values in \(W^{1,2}(\Omega )\). Set

Differentiation of equations (3.14) with respect to s at \(s=0\) gives the following system of equations and boundary conditions for \(\omega \) and \(\varsigma \):

which are understood in the sense of distributions. This means that \(\omega \in W^{1,2}(\Omega )\), \(\varsigma \in W^{1,2}_0(\Omega )\), and the integral identities

hold for all \(\xi \in W^{1,2}(\Omega )\) and \(\eta \in W^{1,2}_0(\Omega )\). Next notice that for \(s=0\),

Let us evaluate the integral in the right-hand side. Since \(\varsigma \in W^{1,2}_0(\Omega )\), it follows from (3.11) that

Thus we get

It follows from this and the first integral identity in (3.15) with \(\xi =v-w\) that

Combining the obtained results we arrive at the identity

Substituting this equality into (3.16) we obtain the desired relation (3.12). \(\square \)
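A gradient representation of this kind can be verified by finite differences in one dimension, where both boundary value problems are solved in closed form. The Python sketch below is an illustration and not a reproduction of (3.12). It assumes \(J(\varphi )=\int _0^1 a(\varphi )|(v-w)'|^2\,dx\) and the gradient \(\textrm{d}J=a'(\varphi )\big (|w'|^2-|v'|^2\big )\), with v the Neumann and w the Dirichlet solution. The normalization and sign in (3.10) and (3.12) may differ. The conductivity `a_of`, the value `A0`, the boundary data, and the fields `phi`, `psi` are hypothetical choices.

```python
import math

A0 = 3.0  # hypothetical conductivity of the inclusion; the background value is 1

def a_of(phi):
    """Assumed monotone conductivity: 1 for phi <= -1 and A0 for phi >= 1."""
    s = min(1.0, max(0.0, 0.5 * (phi + 1.0)))
    return 1.0 + (A0 - 1.0) * s * s * (3.0 - 2.0 * s)

def da_of(phi):
    """Derivative of a_of with respect to phi."""
    s = 0.5 * (phi + 1.0)
    if s <= 0.0 or s >= 1.0:
        return 0.0
    return (A0 - 1.0) * 3.0 * s * (1.0 - s)

def state(phi, q, h0, h1):
    """Return J and the cell-wise derivatives v', w' on a uniform grid of (0, 1)."""
    n = len(phi)
    h = 1.0 / n
    a = [a_of(p) for p in phi]
    resistance = sum(h / ak for ak in a)
    c = (h1 - h0) / resistance   # constant flux a w' of the Dirichlet solution
    dv = [q / ak for ak in a]    # Neumann solution: a v' = q
    dw = [c / ak for ak in a]
    J = sum(ak * (x - y) ** 2 * h for ak, x, y in zip(a, dv, dw))
    return J, dv, dw

n = 400
h = 1.0 / n
xs = [(k + 0.5) * h for k in range(n)]
phi = [0.8 * math.sin(2.0 * math.pi * x) for x in xs]  # phase field
psi = [1.0 + x for x in xs]                            # test direction
q, h0, h1 = 1.0, 0.0, 0.2                              # Neumann flux and Dirichlet data

J0, dv, dw = state(phi, q, h0, h1)
grad = [da_of(p) * (y * y - x * x) for p, x, y in zip(phi, dv, dw)]
formula = sum(g * s * h for g, s in zip(grad, psi))    # integral of dJ * psi

step = 1e-5
Jp = state([p + step * s for p, s in zip(phi, psi)], q, h0, h1)[0]
Jm = state([p - step * s for p, s in zip(phi, psi)], q, h0, h1)[0]
fd = (Jp - Jm) / (2.0 * step)                          # central difference in s
print(formula, fd)
```

The two printed numbers agree to within the finite difference error. Note that the assumed gradient involves only the states v and w and no additional adjoint state.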

The following consequence of Lemma 3.2 may be useful. Let us consider the functionals

where v and w are solutions to boundary value problems (3.11).

Corollary 3.3

Under the assumptions of Lemma 3.2, we have

Proof

Notice that if \(h=0\) (\(g=0\)), then \(w=0\) (\(v=0\)). Hence the corollary is a straightforward consequence of Lemma 3.2. \(\square \)

Compliance minimization problem. Assume that a two-component elastic material occupies the bounded hold-all domain \(\Omega \subset \mathbb R^d\), \(d=2,3\), with the \(C^\infty \) boundary \(\partial \Omega \). Denote by \(\Omega _i\) and \(\Omega _e=\Omega \setminus \overline{\Omega _i}\) the domains occupied by the different components. Usually \(\Omega _i\) is considered as an elastic inclusion. It is important to note that in the compliance minimization problem, it is not assumed that \(\partial \Omega _i\cap \partial \Omega =\emptyset \). The state of the material is characterized by the displacement vector field \(u:\Omega \rightarrow \mathbb R^d\). Introduce the strain tensor e and the Hooke’s law stiffness matrix A defined by the equalities

Here \(\chi _i\) is the characteristic function of the domain \(\Omega _i\), and the constant matrices \(A^i\) and \(A^e\) characterize the properties of the elastic material and satisfy the symmetry and positivity conditions (1.14). With this notation the stress tensor \(\sigma \) is defined by the equality \(\sigma =Ae(u)\). In the absence of material forces, the equilibrium equation reads

This equation should be supplemented with boundary conditions. We take the traction boundary conditions

where g is a given force acting on the material. The compliance J equals the work done by the applied load

By virtue of the equilibrium equation, we can rewrite this expression in the equivalent form

The functional J can be regarded as an objective function for the compliance minimization problem. Recall the notation \(\rho =2\chi _i-1\) for the design variable. It is easily seen that

In the theory of phase field approximation, the design variable \(\rho \) should be replaced by a continuous phase field function \(\varphi \). We choose the phase field approximation of the tensor A in the form

where \(b\in C^\infty (\mathbb R)\) is a monotone function such that \(b(\varphi )=0\) for \(\varphi \le -1\) and \(b(\varphi )=1\) for \(\varphi \ge 1\). Obviously \(A(\varphi )\) satisfies the symmetry and positivity conditions (1.14). Thus we get the following phase field approximation of the objective function:

Arguing as in the proof of Lemma 3.2 and Corollary 3.3 we arrive at the following formula for the gradient of J:

Phase field approximation of objective functions. One-component problems. An important characteristic of the phase field models is that the phase function should be defined in the whole hold-all domain \(\Omega \). The peculiarity of one-component problems is the presence of a void, where the phase function is not defined. To overcome this difficulty, we make the classical assumption that the void region, say \(\Omega _e\), is filled with some fictitious material. Below we give two examples of the application of this approach.

One-component compliance problem. In the one-component compliance problem, it is assumed that the domain \(\Omega _e\) is a void region. This means that \(A^e=0\). Next, we suppose that the domain \(\Omega _e\) is filled with some fictitious material with the Hooke’s law matrix \(A^e=\delta A^*\), \(\delta \in (0,1)\). We also assume that the constant matrix \(A^*\) satisfies the symmetry and positivity condition (1.14). Now take the phase field matrix \(A(\varphi )\) in the form

Thus we reduce the one-component compliance problem to the two-component problem considered above. We may expect that solutions to the problem with fictitious material converge to a solution of the one-component problem as \(\delta \rightarrow 0\). For positive \(\delta \), the expression for the gradient of the objective function coincides with (3.18).

Drag minimization problem. Recall the formulation of the drag minimization problem. Let \(\Omega \subset \mathbb R^d\), \(d=2,3\), be a bounded domain with the smooth boundary \(\partial \Omega \). It is supposed that \(\Omega \) contains an impermeable body \(\Omega _i\) with the boundary \(\Gamma \). A viscous incompressible fluid occupies the flow domain \(\Omega _e=\Omega \setminus \overline{\Omega _i}\). The state of the fluid is characterized by the velocity field \(u:\Omega _e\rightarrow \mathbb R^d\) and the pressure function \(p:\Omega _e\rightarrow \mathbb R\) which satisfy the Navier–Stokes equations and the boundary conditions

where the constant vector \(u_\infty \) is the flow direction. The objective function J is the projection of the hydrodynamic force acting on the body onto the direction \(u_\infty \). Calculations show that in the case of the viscous incompressible fluid it is defined by the equality, see [11, 92],

Note that the drag minimization problem makes sense if there are additional constraints on the geometry of \(\Omega _i\), which guarantee the nontriviality of the solution. As such a constraint, we choose the area (length) \({\mathcal {L}}\) of the boundary \(\Gamma =\partial \Omega _i\),

In order to derive the expression for the phase field approximation of the objective function and its gradient, we have to define the equations for a fictitious fluid in the whole domain \(\Omega \). The following approach was proposed in [47]. Notice that the flow domain \(\Omega _e\) approximately coincides with the set \(\{\varphi <0\}\). On the other hand, the streamlined body \(\Omega _i\) approximately coincides with the set \(\{\varphi >0\}\). Now introduce a one-parametric family of functions \(a_\delta (\varphi )\) with the following properties:

Next we introduce the fictitious resistance force \(-a_\delta (\varphi ) u\) and define the phase field approximation of the Navier–Stokes equations as follows:

We take the phase field approximation of the objective function in the form

Our task is to derive the expression for the gradient of J by using the shape calculus. Along the way we meet the following critical difficulty, which is typical for nonlinear problems. Notice that for every \(\varphi \in L^\infty (\Omega )\), problem (3.22) has a weak solution \(u\in W^{1,2}(\Omega )\), \(p\in L^2(\Omega )\). In particular, for \(\nu>\nu _0>0\), this solution admits the estimate

It is worth noting that \(c_u\) is independent of \(a_\delta \) and \(\varphi \). Moreover, if \(\varphi \in C^{l+\alpha }(\Omega )\), then \(u\in C^{l+2+\alpha }(\Omega )\). However, this solution need not be unique, and the number of solutions may depend on \(\varphi \). If this is the case, then we cannot define the shape gradient of the objective function. In order to cope with this difficulty we impose additional restrictions on the data and the viscosity coefficient that ensure the uniqueness of solutions to problem (3.22). It is known, see [56], that a solution u to problem (3.22) with \(a_\delta =0\) is unique, if it satisfies the inequality

where

In particular, u is unique if \(\nu>\nu _0>0\) with arbitrary fixed \(\nu _0\) and

This result also holds true for an arbitrary positive a. In what follows we assume that the viscosity coefficient satisfies inequality (3.25). In order to derive the formula for \(\textrm{d}J\) we proceed as in the proof of Lemma 3.2. We restrict our considerations to the case of a fixed small parameter \(\delta \) and write simply \(a(\varphi )\) instead of \(a_\delta (\varphi )\).

Without loss of generality we may assume that \(\varphi \in C^\infty (\Omega )\). Fix an arbitrary \(\psi \in C^\infty (\Omega )\) and consider the one-parametric family of coefficients

Denote by \((u_s, p_s)\in C^\infty (\Omega )\) the solution to the boundary value problem

Since \(a_s\) is an infinitely differentiable function of s, it follows that \(u_s\) and \(p_s\) are infinitely differentiable functions of s. Set

Differentiation of equations (3.26) with respect to s at \(s=0\) gives the equations and boundary conditions for \(\upsilon \) and q

Note that for \(s=0\), we have

Integrating by parts and noting that \(\text {~div~}u=0\), we obtain

Combining this relation with equation (3.26) we arrive at the equality

Here we use the identity

The analysis of the uniqueness proof in [56] shows that condition (3.25) guarantees the existence and boundedness of the linear operator

Next notice that

which gives

where

In other words, \(\omega \) is a solution to the adjoint boundary value problem

Thus we arrive at the following formula for the gradient of the phase field approximation of the drag functional

3.3 Gradient Flows

It follows from the analysis in Sects. 3.1 and 3.2 that in the framework of the phase field theory, the shape optimization problem is reduced to the problem of minimizing the functionals

Here \(F_\varepsilon \) and \({\mathcal {F}}_\varepsilon \) are given by equalities (3.3) and (3.4), \(J(\varphi )\) is the phase approximation of the objective function generated by the original shape optimization problem. For example, the functionals J are given by formulae (3.10), (3.17), and (3.23) for the problems listed in Sect. 3.2. In all these cases the functionals are weakly lower semicontinuous in suitable Sobolev spaces. In particular, the existence of minimizers can be proved by applying the direct methods of the calculus of variations.

The justification of the steepest descent method requires the study of the gradient flows of the functionals (3.31). The well-posedness of the evolutionary gradient flow equation guarantees that this method is well defined. We restrict our consideration to the case of strong regularization with the Willmore–Helfrich penalty functional. It follows from (3.6) that we can take the gradient flow equations in the form

The problems with constraints. In many applications, additional constraints on admissible shapes are imposed. We consider in detail the case of the perimeter constraint. The key observation is that in the phase field theory the length element \(\textrm{d}s\) of the unknown interface \(\Gamma \) admits the approximation

Hence for \(\epsilon =1\), the corresponding constraint can be written in the form

where \({\mathcal {L}}_0\) is a given positive constant such that

In this case the parameter \(\gamma \) in equation (3.32) becomes a function of the temporal variable and can be regarded as the Lagrange multiplier. It is easily seen that

In this case the function \({\mathcal {C}}\) is independent of t and equals \({\mathcal {C}}(0)\). At the end of this section we give two examples of gradient flow equations for the problems listed in Sect. 3.2.

Two-component compliance minimization problem. In this case we have the following elliptic–parabolic problem for the displacement field u and the phase function \(\varphi \).

Here the matrix \(A(\varphi )\) is defined by (3.19).

Drag minimization problem. In this case we have the parabolic equation for \(\varphi \), the Navier–Stokes equation with fictitious resistance force for the velocity u and the pressure p, and the adjoint linear equation for the adjoint variables \(\omega \) and \(\pi \).

Here \(a(\varphi )=a_\delta (\varphi )\) is defined by (3.21).

4 Level Set Method

The level set method, first introduced in [91], is a simple and efficient method for computing the motion of an interface in two or three dimensions. Over the years it has found a wide range of applications, including problems in fluid mechanics, solid mechanics, and image processing [5, 90, 91, 100]. Level set methods for structural shape and topology optimization problems were proposed and developed in papers [5, 101, 111, 112]. There is now a growing body of literature devoted to the application of the level set method to numerous applied shape optimization problems. We refer the reader to the review [6] and references therein for the state of the art in this area. However, it is important to note that in general this method does not have a rigorous mathematical justification. It remains unclear whether the differential equations underlying this method are mathematically well posed. Nevertheless, the level set method remains a powerful and efficient method for the numerical analysis of applied problems.

In this section, we give a brief outline of the main ideas of the level set method. In order to illustrate the main features of the method, we restrict our considerations to a simple geometric configuration. Assume that a two-component material occupies a bounded domain \(\Omega \subset \mathbb R^2\) with the smooth boundary. Assume that the components occupy two disjoint subdomains \(\Omega _i\) and \(\Omega _e\) of the hold-all domain \(\Omega \) separated by an interface \(\Gamma \Subset \Omega \), i.e., \( \Omega _i\cup \Omega _e\cup \Gamma =\Omega \). For simplicity we assume that the inclusion \(\Omega _i\Subset \Omega \) is a simply connected domain with regular boundary \(\Gamma \). The main idea is to define a one-parametric family of moving surfaces (curves) \(\Gamma (t)\) such that the objective function \(J(\Gamma (t))\) decreases as the quasi-time variable t increases. The curve \(\Gamma (t)\) separates the moving domains \(\Omega _i(t)\) and \(\Omega _e(t)\).

The level set method occupies an intermediate position between the phase field method and the gradient flow method. Just as in the phase field model, we introduce a phase function \(\varphi (x,t)\) satisfying the conditions

In this setting the interface \(\Gamma (t)\) coincides with the level set \(\{\varphi (\cdot , t)=0\}\). Hence the task is to define the evolution of the phase function \(\varphi (x,t)\).
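As a reading aid, one sign convention compatible with Remark 4.1 below, where \(\nabla \varphi /|\nabla \varphi |\) points out of the inclusion \(\Omega _i\), is the following. It is stated here as an assumption for illustration and not as the authors' displayed conditions:

\[
\varphi (x,t)<0 \ \text{ in } \Omega _i(t),\qquad \varphi (x,t)>0 \ \text{ in } \Omega _e(t),\qquad \varphi (x,t)=0 \ \text{ on } \Gamma (t).
\]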

In contrast to the phase field model, the phase function \(\varphi \) is determined directly from the kinematic equation, which describes the motion of the interface points along the trajectories of some velocity field V(x, t). Denote by \(V_\Gamma (x,t)\), \(x\in \Gamma (t)\), the velocity of the points of the interface. It is assumed that \(V_\Gamma \) is extended to \(\Omega \) in such a way that the extended field V(x, t) is Lipschitz in the variable x and the normal component of V vanishes on \(\partial \Omega \). The evolution of the phase function along the field V is defined by the solution to the Cauchy problem for the linear transport equation

Here \(\varphi _0\) is the initial distribution of the phase function. The moving interface is defined as the level set \(\{\varphi (\cdot ,t)=0\}\). The function \(\varphi \) can be defined by the method of characteristics through the equality

where X(t, x, s) is a solution to the Cauchy problem

The tangential component of the field V merely makes the interface slide along itself. Therefore, it is always assumed that the vector field V is directed along the normal to \(\Gamma \). Note that the normal to the level surface is oriented toward increasing values of the phase function. We thus get

where v is some scalar field. As a result, we arrive at the Hamilton–Jacobi equation for the phase function

This equation is widely used in wave optics and acoustics as a mathematical model of the motion of wave fronts. Almost all results on the global existence and uniqueness of solutions to the Hamilton–Jacobi equations have been obtained using the method of viscosity solutions. We refer the reader to the basic monograph [68] and review article [24] to get acquainted with the general theory of viscosity solutions. Note that the viscosity solutions method is applicable to autonomous equations with a Lipschitz velocity field and Lipschitz initial data. In this case, the solution is also Lipschitz.
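The chain of relations behind this derivation can be sketched in standard notation as follows. The precise form is an assumption made for illustration, in particular the convention that \(X(t,x,s)\) denotes the position at time t of the point located at x at time s:

\[
\partial _t\varphi + V\cdot \nabla \varphi = 0 \ \text{ in } \Omega \times (0,T),\qquad \varphi (x,0)=\varphi _0(x),
\]

\[
\partial _t X(t,x,s) = V\big (X(t,x,s),t\big ),\qquad X(s,x,s)=x,\qquad \varphi (x,t)=\varphi _0\big (X(0,x,t)\big ),
\]

\[
V = v\,\frac{\nabla \varphi }{|\nabla \varphi |}\quad \Longrightarrow \quad \partial _t\varphi + v\,|\nabla \varphi | = 0.
\]

The second line expresses that \(\varphi \) is constant along the trajectories of V, and the last implication uses \(V\cdot \nabla \varphi = v\,|\nabla \varphi |\).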

To obtain the equation of the level set method for optimization problems, the velocity v must be specified. The main requirement is that the objective function \(J(\Gamma (t))\) decreases as t increases. This leads to the following expression for the restriction \(v_\Gamma \) of the scalar field v to the interface \(\Gamma (t)\):

Here, H is an arbitrary smooth function satisfying the conditions

\( \textrm{d}J:\Gamma (t)\rightarrow \mathbb R^d\) is the gradient of the objective function J given by relations (1.23), (1.24). Recall the basic examples of the objective functions and their gradients listed in Sect. 1. The difficult

Remark 4.1

The positivity condition in (4.6) is due to the fact that the normal vector in definition (1.23) of \(\textrm{d}J\) is directed inside the inclusion \(\Omega _i\) and is opposite to the vector field \(\nabla \varphi /|\nabla \varphi |\).

With this notation the equation for the phase function reads

where \(v^*\) is an extension of the scalar field \(v_\Gamma \) given by (4.5) to the hold-all domain \(\Omega \). The question of constructing such an extension is nontrivial. We refer the reader to paper [1] for a discussion of this issue.

In particular, \(v^*\) strongly depends on the choice of the extension operator. Note that \(v^*\) and \(v_\Gamma \) depend on \(\Gamma \) in an implicit and complicated manner. In fact, equation (4.7) is a nonlinear operator equation. The well-posedness of the Cauchy problem (4.7) has never been investigated. The only exception is the mean curvature flow with the objective function

A rigorous treatment of this specific case was developed in [21, 43]. See also the important monograph [48] and the references therein.
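For orientation, in the standard level set formulation of motion by mean curvature the objective is the length of the interface and the phase function solves a degenerate parabolic equation. The normalization and sign below are assumptions of this sketch:

\[
J(\Gamma )=\int _\Gamma \textrm{d}s,\qquad \partial _t\varphi = |\nabla \varphi |\,\operatorname {div}\Big (\frac{\nabla \varphi }{|\nabla \varphi |}\Big ).
\]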

To conclude the section, following [6], we describe an iterative process for finding approximate solutions to problem (4.7). The process is divided into three steps.

1. The first step is to discretize the process with respect to time. For this purpose, the interval (0, T) is divided into subintervals

The velocity \(v^*\) is approximated by functions \(v^*_{a }\) such that

2. The second step is to determine the velocity field \(v^*_n\). Assume that the approximate velocity \(v^*_{a}\) and the approximate phase function \(\varphi _{a}\) are well defined. Set

Next set

Following [6], define the scalar field \(v^*_{n}\in W^{1,2}_0(\Omega )\) as a weak solution to the transmission problem,

where \(\epsilon \) is a small parameter.

3. Finally, the approximate solution \(\varphi _{ a}\) on the interval \([\tau _{n-1}, \tau _n]\) is defined as a solution to the Cauchy problem

If \(\Gamma _0\) is sufficiently smooth, then the process is well defined. However, proving its convergence remains problematic.
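The third step, advancing the phase function over one time interval with a given normal velocity, can be illustrated numerically. The following is a minimal sketch and not the scheme of [6]. It assumes the equation \(\partial _t\varphi + v|\nabla \varphi |=0\) on a uniform grid, a velocity `v` that is already known on the whole grid (the extension of step 2 is not implemented), and a standard first-order upwind discretization swapped in for illustration. The function names `upwind_gradient_norms` and `evolve_level_set` are hypothetical.

```python
import numpy as np


def upwind_gradient_norms(phi, h):
    """First-order upwind approximations of |grad phi| on a uniform grid.

    Returns (grad_plus, grad_minus): grad_plus is used where v > 0 and
    grad_minus where v < 0.
    """
    # Edge padding copies boundary values, so one-sided differences
    # pointing out of the grid are zero.
    p = np.pad(phi, 1, mode="edge")
    c = p[1:-1, 1:-1]
    dxm = (c - p[:-2, 1:-1]) / h   # backward difference in x
    dxp = (p[2:, 1:-1] - c) / h    # forward difference in x
    dym = (c - p[1:-1, :-2]) / h   # backward difference in y
    dyp = (p[1:-1, 2:] - c) / h    # forward difference in y
    grad_plus = np.sqrt(np.maximum(dxm, 0.0) ** 2 + np.minimum(dxp, 0.0) ** 2
                        + np.maximum(dym, 0.0) ** 2 + np.minimum(dyp, 0.0) ** 2)
    grad_minus = np.sqrt(np.minimum(dxm, 0.0) ** 2 + np.maximum(dxp, 0.0) ** 2
                         + np.minimum(dym, 0.0) ** 2 + np.maximum(dyp, 0.0) ** 2)
    return grad_plus, grad_minus


def evolve_level_set(phi0, v, h, t_final, cfl=0.5):
    """Explicit Euler time stepping for phi_t + v |grad phi| = 0.

    v may be a scalar or an array of the same shape as phi0 (assumed given).
    """
    phi = np.array(phi0, dtype=float)
    vmax = float(np.max(np.abs(v)))
    if vmax == 0.0:
        return phi
    # Time step restricted by a CFL-type condition dt <= cfl * h / max|v|.
    n_steps = int(np.ceil(t_final * vmax / (cfl * h)))
    dt = t_final / n_steps
    for _ in range(n_steps):
        grad_plus, grad_minus = upwind_gradient_norms(phi, h)
        phi = phi - dt * (np.maximum(v, 0.0) * grad_plus
                          + np.minimum(v, 0.0) * grad_minus)
    return phi


# Illustrative test case (an assumption of this sketch): a circle of radius 0.3
# expanding with unit normal speed, so the exact radius at time 0.2 is 0.5.
n = 101
x = np.linspace(-1.0, 1.0, n)
h = x[1] - x[0]
X, Y = np.meshgrid(x, x, indexing="ij")
phi0 = np.sqrt(X ** 2 + Y ** 2) - 0.3       # negative inside the inclusion
phi = evolve_level_set(phi0, v=1.0, h=h, t_final=0.2)
# Estimate the radius from the area of the region {phi < 0}.
radius = np.sqrt(np.count_nonzero(phi < 0.0) * h ** 2 / np.pi)
print(f"estimated radius: {radius:.3f} (exact value for this test: 0.5)")
```

In an optimization loop, `v` would be recomputed from the shape gradient and extended to the grid before each call, which is the part of the process whose convergence is not covered by this sketch.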

References

Adalsteinsson, D., Sethian, J.A.: The fast construction of extension velocities in level set methods. J. Comput. Phys. 148, 2–22 (1999)

Afraites, L., Dambrine, M., Kateb, D.: Shape methods for the transmission problem with a single measurement. Numer. Funct. Anal. Optim. 28, 519–551 (2007)

Allaire, G.: Shape Optimization by the Homogenization Method, vol. 146. Springer, New York (2002)

Allaire, G., Bonnetier, E., Francfort, G., Jouve, F.: Shape optimization by the homogenization method. Numer. Math. 76, 27–68 (1997)

Allaire, G., Jouve, F., Toader, A.-M.: Structural optimization using sensitivity analysis and a level-set method. J. Comput. Phys. 194, 363–393 (2004)

Allaire, G., Dapogny, C., Jouve, F.: Shape and topology optimization. In: Bonito, A., Nochetto, R.H. (eds.) Geometric Partial Differential Equations. Handbook of Numerical Analysis, Part II, vol. 22. Elsevier, Amsterdam (2021)

Allen, S.M., Cahn, J.: A microscopic theory for antiphase boundary motion and its application to antiphase domain coarsening. Acta Metall. 27, 1085–1095 (1979)

Ambrosio, L., Buttazzo, G.: An optimal design problem with perimeter penalization. Calc. Var. Partial Differ. Equ. 1, 55–69 (1993)

Amstutz, S., Dapogny, C., Ferrer, A.: A consistent approximation of the total perimeter functional for topology optimization algorithms. ESAIM Control Optim. Calc. Var. 28, paper 18 (2022)

Azegami, H.: Shape Optimization Problems. Springer Optimization and Its Applications, vol. 164. Springer, Singapore (2020)

Bello, J., Fernández-Cara, E., Lemoine, J., Simon, J.: The differentiability of the drag with respect to the variation of Lipschitz domain in a Navier-Stokes flow. SIAM J. Control Optim. 35, 620–640 (1997)

Bendsoe, M.P.: Optimization of Structural Topology, Shape, and Material. Springer, Berlin (1995)

Bendsoe, M.P., Sigmund, O.: Material interpolation schemes in topology optimization. Arch. Appl. Mech. 69, 635–654 (1999)

Bolbotowski, K.: Elastic Bodies and Structures of the Optimum Form, Material Distribution, and Anisotropy. PhD Thesis, Oficyna Wydawnicza Politechniki Warszawskiej, Warsaw (2021)

Bouchitté, G., Buttazzo, G.: Characterization of optimal shapes and masses through Monge-Kantorovich equation. J. Eur. Math. Soc. 3, 139–168 (2001)

Bourdin, B., Chambolle, A.: Design dependent loads in topology optimization. ESAIM Control Optim. Calc. Var. 9, 19–48 (2003)

Braides, A.: \(\Gamma \)-Convergence for Beginners. Oxford Lecture Series in Mathematics and Its Applications. Oxford University Press, Oxford (2002)

Bretin, E., Masnou, S., Oudet, E.: Phase-field approximations of the Willmore functional and flow. Numer. Math. 131, 115–171 (2015)

Bucur, D., Buttazzo, G.: Variational Methods in Shape Optimization Problems. Birkhäuser, Boston (2005)

Cahn, J.W., Hilliard, J.E.: Free energy of a nonuniform system. I. Interfacial free energy. J. Chem. Phys. 28, 258–267 (1958) https://doi.org/10.1063/1.1744102

Chen, Y.G., Giga, Y., Goto, S.: Uniqueness and existence of viscosity solutions of generalized mean curvature flow equations. J. Differ. Geom. 33, 749–786 (1991)

Chou, K.-S., Zhu, X.-P.: The Curve Shortening Problem. Chapman & Hall/CRC Press, Boca Raton (2001)

Colli, P., Laurençot, P.: A phase field approximation of the Willmore flow with volume and area constraints. SIAM J. Math. Anal. 44, 3734–3754 (2012)

Crandall, M.G., Ishii, H., Lions, P.-L.: User’s guide to viscosity solutions of second order partial differential equations. Bull. Am. Math. Soc. (N.S.) 27(1), 1–67 (1992)

Dal Maso, G.: An Introduction to \(\Gamma \)-Convergence. Birkhäuser, Boston (1993)

Dall’Acqua, A., Pozzi, P.: A Willmore-Helfrich \(L^2\)-flow of curves with natural boundary conditions. Commun. Anal. Geom. 22(4), 617–669 (2014)

Dambrine, M., Greff, I., Harbrecht, H., Puig, B.: Numerical solution of the Poisson equation with a thin layer of random thickness. SIAM J. Numer. Anal. 54(2), 921–941 (2016)

Dambrine, M., Harbrecht, H., Puig, B.: Incorporating knowledge on the measurement noise in electrical impedance tomography. ESAIM Control Optim. Calc. Var. 25, 1–16 (2019)

De Giorgi, E.: Some remarks on \(\Gamma \)-convergence and least squares methods. In: Dal Maso, G., Dell’Antonio, G.F. (eds.) Composite Media and Homogenization Theory. Progress in Nonlinear Differential Equations and Their Applications, vol. 5, pp. 135–142. Birkhäuser, Boston (1991)

Delfour, M.C.: Topological derivative: a semidifferential via the Minkowski content. J. Convex Anal. 25(3), 957–982 (2018)

Delfour, M.C.: Topological derivatives via one-sided derivative of parametrized minima and minimax. Eng. Comput. 39, 34–59 (2022)

Delfour, M.C.: Topological derivative of state constrained objective functions: a direct approach. SIAM J. Control Optim. 60, 22–47 (2022)

Delfour, M.C.: One-sided derivative of parametrized minima for shape and topological derivatives. SIAM J. Control Optim. 61, (2023) to appear

Delfour, M.C., Zolésio, J.P.: Shapes and Geometries. Analysis, Differential Calculus, and Optimization. Advances in Design and Control, vol. 4. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (2001)

Delfour, M.C., Zolésio, J.-P.: Oriented distance function and its evolution equation for initial sets with thin boundary. SIAM J. Control Optim. 42(6), 2286–2304 (2004)

Delfour, M.C., Zolésio, J.-P.: Evolution equations for shapes and geometries. J. Evol. Equ. 6(3), 399–417 (2006)

Delfour, M.C., Zolésio, J.P.: Shapes and Geometries. Metrics, Analysis, Differential Calculus, and Optimization, 2nd edn. Advances in Design and Control, vol. 22. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (2011)

Du, Q., Liu, C., Ryham, R., Wang, X.: A phase field approximation of the Willmore problem. Nonlinearity 18, 1249–1267 (2005)

Dziuk, G., Kuwert, E., Schätzle, R.: Evolution of elastic curves in \(\mathbb{R} ^n\): existence and computation. SIAM J. Math. Anal. 33(5), 1228–1245 (2002)

Eppler, K., Harbrecht, H.: Shape optimization for 3D electrical impedance tomography. In: Glowinski, R., Zolésio, J. (eds.) Free and Moving Boundaries: Analysis, Simulation and Control. Lecture Notes in Pure and Applied Mathematics, vol. 252, pp. 165–184. Chapman & Hall/CRC, Boca Raton (2007)

Eppler, K., Harbrecht, H.: On a Kohn-Vogelius like formulation of free boundary problems. Comput. Optim. Appl. 52, 69–85 (2012)

Evans, L.C., Gariepy, R.F.: Measure Theory and Fine Properties of Functions. CRC Press, Boca Raton (1992)

Evans, L.C., Spruck, J.: Motion of level sets by mean curvature. I. J. Differ. Geom. 33, 635–681 (1991)

Fei, M., Liu, Y.: Phase field approximation of the Willmore flow. Arch. Ration. Mech. Anal. 241, 1665–1706 (2021)

Garabedian, P., Spencer, D.: Extremal methods in cavitational flow. Arch. Ration. Mech. Anal. 1, 359–409 (1952)

Garabedian, P., Lewy, H., Schiffer, M.: Axially symmetric cavitational flow. Ann. Math. 56, 560–604 (1952)