Abstract

In two recent papers, Boman (J Geom Anal 31:2726–2741, 2020, https://doi.org/10.1007/s12220-020-00372-8, J Ill-posed Inverse Probl 2021, https://doi.org/10.1515/jiip-2020-0139), we proved that the Radon transform of a compactly supported distribution can be supported in the set of supporting planes to a bounded, convex domain \(D\subset {\mathbb {R}}^n\) only if the boundary of D is an ellipsoid. Using closely related methods we study here the relationship between the analytic wave front set for the characteristic function, \(\chi _D\), of a domain \(D \subset {\mathbb {R}}^n\) and singularities of the boundary \(\partial D\) of the domain. For instance we prove that the boundary surface must be real analytic in a neighborhood of a point \(z \in \partial D \in C^1\), if the analytic wave front set of \(\chi _D\) at z contains no other elements than the conormals to \(\partial D\) at z.

1 Introduction

Hörmander’s famous proof of Holmgren’s uniqueness theorem showed that a so-called exterior conormal of the support of a distribution f (for instance the conormal of a supporting plane for the support) must belong to the analytic wave front set, \(W\!F_A(f)\), of f, [6, Theorem 8.5.6]. The analogous fact for hyperfunctions was given independently by Kawai and Kashiwara, [9, Theorem 4.4.1]. The question to be studied here is whether singularities of the boundary of the support imply further singularities of the distribution in addition to the exterior conormal of the boundary. Later results by Hörmander [8] and Kashiwara showed that this is actually the case, if the boundary is sufficiently irregular in a certain sense. For instance, Corollary 2.7 in [8] implies that if \(z = (0,0) \in {{\,\textrm{supp}\,}}f\) and \({{\,\textrm{supp}\,}}f \subset \{|x_2|\ge |x_1|^{\gamma }\}\) for some \(\gamma < 2\), then \((z, \xi ) \in W\!F_A(f)\) for all \(\xi \in {\mathbb {R}}^2 \setminus \{0\}\). On the other hand, simple examples show that an analytic singularity of the boundary surface in general does not lead to new singularities in \(W\!F_A(f)\) (see Example 1 below). However, if f is the characteristic function \(\chi _D\) of an open subset \(D\subset {\mathbb {R}}^n\) with \(C^1\) boundary, or a product of such a characteristic function with a real analytic function, we show here that analytic singularities of the boundary do imply additional singularities of the function. For instance, if \(W\!F_A(\chi _D)\) at the boundary point z contains no other singularities than the conormals to the boundary at z, then the boundary must be real analytic in a neighborhood of z (Corollary 1).

It turns out that it is better to begin by studying distributions with support in a hypersurface. Let \(\Sigma \) be a \(C^1\) hypersurface \(y = \Psi (x)\) in an open set \(U \subset {\mathbb {R}}^{n+1} \), let \(q_j\) be continuous functions on \(\Sigma \), and define a distribution f supported in \(\Sigma \) by

\[ \langle f, \varphi \rangle = \sum _{j=0}^{m-1} \int q_j(x)\, \partial _y^j \varphi (x, \Psi (x))\, dx, \quad \varphi \in C_c^{\infty }(U). \tag{1.1} \]

If \(\Sigma \) is \(C^{\infty }\) smooth and all \(q_j \in C^{\infty }\), then the wave front set of f is contained in the conormal bundle of \(\Sigma \), in Hörmander’s notation

\[ W\!F(f) \subset N^*(\Sigma ). \tag{1.2} \]

Similarly, if the hypersurface \(\Sigma \) is real analytic, and all \(q_j\) are real analytic functions, then the same inclusion holds for the analytic wave front set of f

\[ W\!F_A(f) \subset N^*(\Sigma ). \tag{1.3} \]

We are interested in strong converses to those implications, stating that if f has some microlocal regularity, much weaker than (1.2) or (1.3), then \(\Sigma \) and \(q_j\) must be \(C^{\infty }\) or real analytic, respectively. In this direction we prove the following.

Theorem 1

Let \(\Sigma \) be a \(C^1\) hypersurface in a real analytic manifold M, let \(f \in {\mathcal {D}}'(M)\) be supported in \(\Sigma \), and let \(z \in {{\,\textrm{supp}\,}}f\). Assume that \(v \in T_{z}(M)\) is a tangent vector to M at z that is transversal to \(\Sigma \) and that

Then there exists a neighborhood U of z such that the surface \(\Sigma \) is real analytic in U and the distribution f has the form (1.1) in suitable local coordinates in U with all \(q_j\) real analytic.

Corollary 1

Let \(y = \Psi (x)\) be a \(C^1\) surface in a neighborhood U of \(z = (x^0, y^0) \in {\mathbb {R}}^{n+1}\), let u(x, y) be a real analytic function in U with \(u(z) \ne 0\), and define the function f in U by \(f(x, y) = u(x, y) H(y - \Psi (x))\), where H is the Heaviside function. Assume furthermore that the set of conormals to the line \({\mathbb {R}}\ni y \mapsto (x^0, y)\) is disjoint from \(W\!F_A(f)\). Then there exists a neighborhood \(U_0\) of z such that the surface \(\Sigma \) is real analytic in \(U_0\).

Proof

If u(x, y) is independent of y, it is enough to observe that the distribution \(\partial _y f\) must satisfy the hypotheses of Theorem 1. For the general case we note that

and hence

satisfies the hypotheses of Theorem 1, since differentiation and multiplication by real analytic functions preserve the analytic wave front set. \(\square \)

Remark

As we saw above, (1.3), the conclusion of Theorem 1 implies \(W\!F_A(f) \subset N^*(\Sigma )\), which is a much stronger statement than the assumption. This is not surprising, because the fact that \(W\!F_A(f) \subset N^*(\Sigma )\) follows directly from the assumptions by Kashiwara’s Watermelon theorem [9, Theorem 4.4.3].

Theorem 1 can be used to obtain a considerably simplified proof of the main result, Theorem 1.1, of our recent article [3]. See Sect. 3 for details.

Here is an example of a function f in \({\mathbb {R}}^2\) whose support is bounded by a \(C^2\) curve with a discontinuity in the third derivative at \(z = (0, 0)\), yet \(W\!F_A(f)_z\) consists precisely of the conormal to the boundary at z.

Example 1

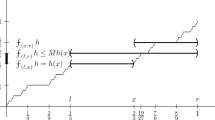

Let u(x, y) be the characteristic function for the region \(y > x^2\) and v(x, y) the characteristic function for the region \(y > x^2 - x^3\). Set \(f = u + v\). Then \(W\!F(f) = W\!F_A(f)\) is equal to the union of the conormals of the two curves, and since the curves have the x-axis as common tangent at the origin, the only wave front directions of f at the origin are \((0, \pm 1)\). On the other hand, the boundary of the support of f is the curve \(y = \psi (x) = \min (x^2, x^2 - x^3)\), which is \(C^2\) but not \(C^3\), since \(\psi (x) = x^2\) for \(x < 0\) and \(\psi (x) = x^2 - x^3 \) for \(x > 0\), and hence \(\psi '''(x) = - 6 H(x)\). This shows that a singularity of the boundary curve does not imply additional singularities of the distribution beyond the conormal of the boundary surface.
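The regularity claim for the boundary curve in Example 1 can be checked mechanically. The following minimal sketch, in plain Python, stores the two branches \(x^2\) and \(x^2 - x^3\) as coefficient lists and compares their derivatives at the origin; the helper names `deriv` and `derivatives_at_zero` are introduced here for illustration only.

```python
def deriv(coeffs):
    """Derivative of a polynomial given by its coefficients [c0, c1, c2, ...]."""
    return [k * c for k, c in enumerate(coeffs)][1:]

def derivatives_at_zero(coeffs, order):
    """Values at x = 0 of the polynomial and its derivatives up to the given order."""
    out = []
    for _ in range(order + 1):
        out.append(coeffs[0] if coeffs else 0)
        coeffs = deriv(coeffs)
    return out

left = [0, 0, 1]        # x^2, the branch of psi for x < 0
right = [0, 0, 1, -1]   # x^2 - x^3, the branch of psi for x > 0

d_left = derivatives_at_zero(left, 3)
d_right = derivatives_at_zero(right, 3)

# Orders 0, 1, 2 agree at the origin, so psi is C^2 there;
# the third derivatives differ, which is the jump -6 H(x).
jump = d_right[3] - d_left[3]
```

The one-sided derivatives agree up to order two and differ at order three, in accordance with \(\psi '''(x) = -6 H(x)\).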

The assertion of Theorem 1 is no longer true if the analytic wave front set is replaced by the \(C^{\infty }\) wave front set.

Example 2

Let \(\Sigma \) be the curve \(y = |x|^3\) in \({\mathbb {R}}^2\) and let q(x) be the function

where \(\psi \in C_c^{\infty }({\mathbb {R}})\) and \(\psi = 1\) in some neighborhood of the origin. Define a distribution \(f \in {\mathcal {E}}'({\mathbb {R}}^2)\) supported on \(\Sigma \) by

Then \(W\!F(f) \subset N^*(\Sigma )\), although \(\Sigma \) is not smooth.

Proof

Since q(x) is smooth and the curve \(\Sigma \) is smooth outside the origin we need only prove that the only wave fronts above the origin are \((\xi , \eta ) = (0, \pm 1)\). Let us compute the Fourier transform of f along an arbitrary ray through the origin, not parallel to the \(\eta \) axis, \({\mathbb {R}}\ni \lambda \mapsto (\lambda , a \lambda ) = (\xi , \eta )\) with \( a \in \mathbb R\)

Replacing f by \(\chi f\), where \(\chi \) is a smooth cut-off function, equal to 1 in a neighborhood of the origin and supported in \(|x|\,\le 1/\sqrt{2|a|}\), and making the change of variable \(x(1 \pm a x^2) = t\) transforms the last two integrals into the form \(\widehat{u_a}(\lambda )\) where \(u_a \in C_c^{\infty }\) and depends smoothly on a. This shows that \(\widehat{\chi f}(\xi , \eta )\) tends rapidly to zero in the region \(|\xi | \ge \varepsilon |\eta |\) for every \(\varepsilon > 0\), hence \(W\!F(f) \subset N^*(\Sigma )\) as claimed. \(\square \)

2 Proofs

We will first show that the assumptions of Theorem 1 imply that f can be represented as in (1.1) with \(\Psi \in C^1\) and \(q_j\) continuous.

Proposition 1

Under the assumptions of Theorem 1 there exists a neighborhood U of z and local coordinates in U such that \(\Sigma \) can be written \(y = \Psi (x)\) in U with \(\Psi \in C^1\), \(\Psi > 0\), and the distribution f can be written as in (1.1) with \(q_j\) continuous.

Proof

Since the tangent vector v is transversal to \(\Sigma \) we can choose coordinates \((x, y) = (x_1, \ldots , x_n, y)\) in a neighborhood of z such that \(v(\varphi ) = \partial _y \varphi \) at z, in other words, \(v = (0, \ldots , 0, 1)\). From now on we shall denote the coordinates of the point z by \((x^0, y^0)\). The hypersurface \(\Sigma \) can then be represented \(y = \Psi (x)\) for some function \(\Psi \in C^1\) in a neighborhood U of \((x^0, y^0)\). By a translation of the y-coordinate we can of course achieve that \(\Psi > 0\). The assumption (1.4) can now be written

and this implies of course the same for \(W\!F(f)\), that is,

By Theorem 8.2.4 in [6] condition (2.2) implies that the restriction, \(f_x\), of f to the line \(y \mapsto (x, y)\) must exist as a distribution in \({\mathcal {E}}'({\mathbb {R}})\) for x near \(x^0\). Intuitively it is now obvious that f can be written in the form (1.1), because \(f_x\) is supported in the point \(y = \Psi (x)\), so \(f_x\) must be a linear combination of the Dirac measure at \(y = \Psi (x)\) and its derivatives for each x. It remains to make this argument rigorous and prove that each \(q_j(x)\) must be continuous.

By the definition of \(W\!F(f)\) the condition (2.2) implies that a suitable smooth cut-off of f in the x-direction, which we shall also denote by f, must satisfy

for some \(\delta > 0\). For any \(f \in C_c^{\infty }({\mathbb {R}}^{n+1})\) and \(\psi \in C_c^{\infty }({\mathbb {R}})\), denoting the partial Fourier transform with respect to x by \({\mathcal {F}}_x\), we can write

If f satisfies (2.3), then (2.4) makes sense even if f(x, y) does not make sense for fixed (x, y). Hence (2.4) can be used as the definition of \(\langle f_x, \psi \rangle \) for a compactly supported distribution satisfying (2.3). Note that the condition (2.3) implies that the expression (2.4) must depend continuously on x. If, as in our case, \(f_x\) is supported at the point \(y = \Psi (x)\), then each \(f_x\) can be written \(f_x = \sum _{j=0}^{m-1} (-1)^j q_j(x) \delta ^{(j)}(y - \Psi (x))\) and hence

\[ \langle f_x, \psi \rangle = \sum _{j=0}^{m-1} q_j(x)\, \psi ^{(j)}(\Psi (x)). \]

To see that we can choose a common m for all \(x \in U\) it is sufficient to observe that the distribution f must have finite order in U, after U has been replaced by a slightly smaller neighborhood of z, if necessary. To prove that all \(q_k(x)\) must be continuous we choose a test function \(\psi (y) = \psi _x(y)\) such that \(\psi _x^{(k)}(\Psi (x)) = 1\) and \(\psi _x^{(j)}(\Psi (x)) = 0\) for all other \(j \le m-1\). Since \(\psi _x(y)\) can be chosen to depend continuously on x and \(x \mapsto \langle f_x, \psi \rangle \) is continuous for all \(\psi \), it follows that \(q_k(x) = \langle f_x, \psi _x\rangle \) is continuous. This completes the proof of the proposition. \(\square \)

We next prove that the assertions of Theorem 1 must hold under the simplifying assumption that the highest coefficient \(q_{m-1}\) in the expression (1.1) for f is different from zero at \(x = x^0\). As in [2] and [3] the arguments consist essentially of algebraic manipulations of sets of equations of the type (2.6).

Proposition 2

Let the distribution f be given by (1.1), where all \(q_j\) are continuous and \(\Psi \in C^1\). Let \((x^0, \Psi (x^0)) \in \Sigma \), assume that \(q_{m-1}(x^0) \ne 0\) and that

Then \(\Psi \) and \(q_j\), \(j = 0, 1, \ldots , m-1\), are real analytic in some neighborhood of \(x^0\).

The proof of Proposition 2 will fill almost all of the rest of this section.

Let \(\pi \) be the projection \({\mathbb {R}}^{n+1} \ni (x, y) \mapsto x \in {\mathbb {R}}^n\) and \(\pi ^*\) the pullback of test functions \(\pi ^* \psi = \psi \circ \pi \) for \(\psi \in C_c^{\infty }({\mathbb {R}}^n)\). The pushforward \(\pi _* f\) is the distribution on \({\mathbb {R}}^n\) that is defined for \(\psi \in C_c^{\infty }({\mathbb {R}}^n)\) by

The assumption (2.5) implies that \(\pi _* f \) is real analytic near \(x^0\). If \(m=1\), the proof is now immediate: since \(\pi _*(f) = q_0(x)\) and \(\pi _*(y f) = q_0(x) \Psi (x)\) are real analytic and \(q_0(x^0) \ne 0\) by assumption, it follows that \(\Psi (x) = \pi _*(y f) /q_0(x)\) must be real analytic in some neighborhood of \(x^0\).

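To explain the idea of the proof we next consider the case \(m = 2\). Choosing in turn \(\varphi (x, y) = \psi (x), \psi (x) y, \psi (x) y^2\), and \(\psi (x) y^3\) in (1.1) and using the wave front assumption we see that the functions

\[ \begin{aligned} \pi _*(f) &= q_0, \\ \pi _*(y f) &= q_0 \Psi + q_1, \\ \pi _*(y^2 f) &= q_0 \Psi ^2 + 2 q_1 \Psi , \\ \pi _*(y^3 f) &= q_0 \Psi ^3 + 3 q_1 \Psi ^2 \end{aligned} \tag{2.6} \]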

must be real analytic. In matrix form the system (2.6) can be written

Our main goal is to prove that the function \(\Psi \) is real analytic. When this is done it will follow immediately from the equations (2.6) that all \(q_j\) are real analytic. We can eliminate the quantities \(q_0\) and \(q_1\) from the system (2.6) in the following way. Multiply the first equation by \(\Psi ^2\), the second by \(- 2 \Psi \), and the third by 1 and add all three equations. Then do the same with the second through fourth equations. This gives the system

\[ \begin{aligned} \Psi ^2\, \pi _*(f) - 2 \Psi \, \pi _*(y f) + \pi _*(y^2 f) &= 0, \\ \Psi ^2\, \pi _*(y f) - 2 \Psi \, \pi _*(y^2 f) + \pi _*(y^3 f) &= 0, \end{aligned} \tag{2.7} \]

which in matrix notation can be written

The determinant of the matrix

is different from zero at \(x^0\), because by (2.6)

and \(q_1(x^0) \ne 0\) by assumption. Hence we can solve for instance \(\Psi \) from the system (2.7) and obtain

where F and G are real analytic and \(G(x^0) = \det A(x^0) \ne 0\), hence \(\Psi (x)\) is analytic in a neighborhood of \(x^0\).
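The elimination for \(m = 2\) can be tested in exact rational arithmetic. The sketch below assumes that the four pushforwards have the form \(h_k = q_0 \Psi ^k + k q_1 \Psi ^{k-1}\), \(k = 0, \ldots , 3\), and that the \(2 \times 2\) matrix of the eliminated system is the Hankel matrix with rows \((h_0, h_1)\) and \((h_1, h_2)\); the function names and the sample values of \(q_0\), \(q_1\), \(\Psi \) are hypothetical and serve only as an illustration.

```python
from fractions import Fraction as F

def moments(q0, q1, psi, count=4):
    """h_k = q0*psi**k + k*q1*psi**(k-1), the assumed form of pi_*(y^k f) for m = 2."""
    return [q0 * psi**k + (k * q1 * psi**(k - 1) if k > 0 else 0)
            for k in range(count)]

def recover_psi(h):
    """Solve the eliminated 2x2 system for psi by Cramer's rule.

    The unknowns a = psi^2 and b = -2*psi satisfy
        h0*a + h1*b = -h2,
        h1*a + h2*b = -h3.
    """
    h0, h1, h2, h3 = h
    det = h0 * h2 - h1 * h1                  # works out to -q1**2
    b = (h0 * (-h3) - h1 * (-h2)) / det      # second unknown, equal to -2*psi
    return det, -b / 2

# Sample values at a single point x; any rationals with q1 != 0 will do.
q0, q1, psi = F(3), F(-2), F(5, 7)
det, psi_recovered = recover_psi(moments(q0, q1, psi))
```

In this sample the determinant equals \(-q_1^2\), so it is non-zero exactly when \(q_1 \ne 0\), and \(\Psi \) is recovered as a quotient of polynomial expressions in the \(h_k\).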

The corresponding fact for the general case is the main point of the proof of Proposition 2. We formulate it as a lemma.

Lemma 1

Under the hypotheses of Proposition 2 the function \(\Psi (x)\) must be real analytic in some neighborhood of \(x^0\).

Proof

The proof for the general case is parallel to that of the special case just discussed: we will show (1) that we can eliminate all the functions \(q_j\) to obtain a linear system in the “unknowns” \(\Psi , \ldots , \Psi ^m\), and (2) that the matrix of the resulting system—analogous to A in (2.8)—is non-singular at \(x^0\).

Introduce the matrix M that consists of m columns and infinitely many rows and is defined as follows. The first column is \(1, \Psi , \Psi ^2, \ldots \), the elements of the second column are the formal derivatives of those of the first, \(0, 1, 2 \Psi , 3 \Psi ^2, \ldots \), and the elements of the third column are the second derivatives of the same elements and so on. In other words, the entries \(m_{k, j}\) of the matrix M can be written (D denotes formal differentiation with respect to \(\Psi \))

where \(c_{k, j} = k!/(k-j)!\) for \(0 \le j \le k\). Denote the successive \(m \times m\) submatrices of M by \(M_0, M_1, M_2, \) etc. For instance, if \(m = 4\)

We are interested in the dependence of the matrix \(M_s\) on s. We will first show that

where S is the matrix

with

and \(T = \Psi I + N\), where N is the nilpotent matrix

For instance if \(m = 4\) this means that

The first \(m-1\) rows in the matrix identity \(S M_0 = M_1\) are trivial. The last row of this identity reads

or, taking account of the definition of \(s_{\nu }\),

Observing that all derivatives of order \(< m\) with respect to t of the expression

vanish at \(t = \Psi \) gives (2.14). This proves \(S M_0 = M_1\). The fact that \(M_s T = M_{s+1}\) for all \(s\ge 0\), which implies the second identity in (2.10), follows immediately from the formula

The identity \(S M_s = M_{s+1}\) for arbitrary s now follows from

This completes the proof of (2.10).
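The two matrix identities can be verified numerically for a given m. The sketch below is written under explicit assumptions about the matrices involved: S is taken to be the shift matrix whose last row consists of the coefficients \(s_{\nu }\) of the polynomial \(t^m - (t - \Psi )^m\), and N is taken to have the entries \(1, 2, \ldots , m-1\) on the superdiagonal and zeros elsewhere. Both choices are our reading of the construction described in the text, and the function names are illustrative.

```python
from fractions import Fraction as F
from math import comb, factorial

def M(s, m, psi):
    """Rows s..s+m-1 of the matrix with entries D^j psi^k = k!/(k-j)! * psi^(k-j)."""
    return [[F(factorial(k), factorial(k - j)) * psi**(k - j) if j <= k else F(0)
             for j in range(m)] for k in range(s, s + m)]

def S(m, psi):
    """Shift matrix; the last row holds the coefficients of t^m - (t - psi)^m."""
    rows = [[F(int(c == r + 1)) for c in range(m)] for r in range(m - 1)]
    rows.append([-comb(m, nu) * (-psi)**(m - nu) for nu in range(m)])
    return rows

def T(m, psi):
    """psi*I + N, with N carrying 1, 2, ..., m-1 on the superdiagonal."""
    return [[psi if c == r else F(c) if c == r + 1 else F(0) for c in range(m)]
            for r in range(m)]

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

m, psi = 4, F(2, 3)   # sample size and sample value of Psi at one point
left_shift_ok = all(matmul(S(m, psi), M(s, m, psi)) == M(s + 1, m, psi) for s in range(4))
right_mult_ok = all(matmul(M(s, m, psi), T(m, psi)) == M(s + 1, m, psi) for s in range(4))
```

Under these assumptions both `left_shift_ok` and `right_mult_ok` come out true, reflecting the Leibniz rule \(D^j(t \cdot t^k) = t D^j t^k + j D^{j-1} t^k\) and the fact that \(t^m - \sum s_{\nu } t^{\nu } = (t - \Psi )^m\) vanishes to order m at \(t = \Psi \).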

Note that the identity \(S M_0 = M_0 T\) shows that

Introduce a notation for column vectors as follows

and

Sets of equations \(\pi _*(f y^k) = h_k\) of the type (2.6) can then be written

It is now very easy to eliminate the functions \(q_j\) from systems of equations (2.16). Indeed, from \(M_0 Q = H_0\) and \(M_1 Q = S M_0 Q = H_1\) we get \(S H_0 = H_1\) and similarly

Considering a row of column vectors as a matrix we then have the following identity of \(m\times m\) matrices

The matrix \((H_0, H_1, \ldots , H_{m-1})\) is the analogue of the matrix A in (2.8) and will play an important role in this article. We therefore introduce the short notation

Equality of determinants in (2.18) together with (2.15) now shows that

In particular, if \(\det {\mathcal {H}} \ne 0\) at \(x^0\), \(\Psi ^m\) must be analytic near \(x^0\). [Equation (2.18) actually implies (2.19) with \(m=1\), but we will not need this fact.]

To complete the proof of Lemma 1 it remains to prove that \(q_{m-1}(x^0) \ne 0\) implies that \(\det {\mathcal {H}} \ne 0\) at \(x^0\).

Lemma 2

Under the hypotheses of Proposition 2 the matrix \({\mathcal {H}} = (H_0, H_1, \ldots , H_{m-1})\) is non-singular at \(x^0\). In fact

where \(c_m\) is given by (2.22) and

Proof

Combining (2.16) and (2.10) we obtain the following identity of \(m \times m\) matrices:

\(M_0\) is a triangular matrix whose determinant is equal to a constant \(c_m > 0\). For instance, if \(m = 4\) we have \(c_m = 1!\, 2!\, 3! = 3 \cdot 2^2 = 12\), and for arbitrary m

Set \(B = (Q, TQ, \ldots , T^{m-1} Q)\). We have seen that \(T = \Psi I + N\), where N is the nilpotent matrix (2.12). Replace for a moment T by \(T({t}) = {t} \Psi I + N\), where t is a real variable and define B(t) correspondingly. We will prove that the determinant of B(t) is independent of t, so in fact

The derivative of \(\det B({t})\) with respect to t is equal to a sum of terms of the form

But \(T'({t})\) is equal to \(\Psi \) times the identity matrix. Therefore each of the matrices above contains two proportional columns, which implies that all the determinants are zero, so \(\det B(t)\) is independent of t as claimed. B(0) is a triangular matrix, since

and \(\det B(0)\) is therefore easily seen to be equal to \(b_m q_{m-1}^m\) with \(b_m \ne 0\). From (2.24) we see that for instance if \(m=4\)

and similarly for arbitrary m we get (2.20). This completes the proof of Lemma 2.\(\square \)

The sign of \(b_m\) is not important for us. However, it is easily seen that the sign of \(b_m\) is equal to the sign of the permutation that reverses the order of m elements, so that \(b_m\) is positive if and only if m is congruent to 0 or 1 modulo 4. Writing

and using (2.20) we see that in fact

End of proof of Lemma 1

Since the matrix \({\mathcal {H}}\) is non-singular at \(x^0\) by Lemma 2 and we may assume that \(\Psi (x) > 0\) it follows from (2.19) that \(\Psi (x)\) is real analytic in a neighborhood of \(x^0\). \(\square \)
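The two determinant facts used in the proof of Lemma 1 can be checked in exact arithmetic. The sketch below assumes that \(h_k = \sum _j c_{k,j}\, q_j \Psi ^{k-j}\) with \(c_{k,j} = k!/(k-j)!\), and that \(H_s\) is the column \((h_s, \ldots , h_{s+m-1})\), so that \({\mathcal {H}}\) is a Hankel matrix. The closed form \((-1)^{m(m-1)/2} ((m-1)!)^m q_{m-1}^m\) for \(\det {\mathcal {H}}\) used below is worked out here from the triangular shape of B(0) and should be read as an illustration; all names in the code are hypothetical.

```python
from fractions import Fraction as F
from math import factorial

def moments(q, psi, count):
    """h_k = sum_j k!/(k-j)! * q[j] * psi**(k-j), the assumed form of pi_*(y^k f)."""
    return [sum(F(factorial(k), factorial(k - j)) * q[j] * psi**(k - j)
                for j in range(min(k, len(q) - 1) + 1)) for k in range(count)]

def det(A):
    """Determinant by exact Gaussian elimination over the rationals."""
    A = [row[:] for row in A]
    n, result = len(A), F(1)
    for c in range(n):
        pivot = next((r for r in range(c, n) if A[r][c] != 0), None)
        if pivot is None:
            return F(0)
        if pivot != c:
            A[c], A[pivot] = A[pivot], A[c]
            result = -result
        result *= A[c][c]
        for r in range(c + 1, n):
            factor = A[r][c] / A[c][c]
            A[r] = [x - factor * y for x, y in zip(A[r], A[c])]
    return result

def hankel(h, shift, m):
    """Matrix (H_shift, ..., H_{shift+m-1}) with H_s = (h_s, ..., h_{s+m-1})."""
    return [[h[shift + r + c] for c in range(m)] for r in range(m)]

def check(q, psi):
    """Return det(H_0, ..., H_{m-1}) and det(H_1, ..., H_m) for sample data."""
    m = len(q)
    h = moments(q, psi, 2 * m)
    return det(hankel(h, 0, m)), det(hankel(h, 1, m))

q, psi = [F(1), F(-3), F(2, 5)], F(4, 3)   # sample q_0, q_1, q_2 and Psi; m = 3
m = len(q)
d0, d1 = check(q, psi)
expected = (-1) ** (m * (m - 1) // 2) * factorial(m - 1) ** m * q[-1] ** m
```

For these sample values `d0` agrees with `expected`, so it depends only on the highest coefficient, and `d1` equals \(\Psi ^m\) times `d0`, which is how \(\Psi ^m\) is obtained as a quotient of two determinants.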

End of proof of Proposition 2

By Lemma 1 we know that \(\Psi (x)\) must be real analytic in some neighborhood of \(x^0\). The triangular structure of the system of the first m of the equations (2.6) for arbitrary m shows that the argument given in the paragraph following (2.6) to prove that all \(q_j\) must be real analytic in a neighborhood of \(x^0\) is actually valid for arbitrary m. This completes the proof of Proposition 2. \(\square \)

Proof of Theorem 1

Choose coordinates according to Proposition 1. Let m be the largest number for which \(x^0 \in {{\,\textrm{supp}\,}}q_{m-1}\). It remains only to consider the case when \(q_{m-1}(x^0) = 0\). By definition a function h that is real analytic in a neighborhood of \(x^0 \in {\mathbb {R}}^n\) can be extended to a holomorphic function in a complex neighborhood in \({\mathbb {C}}^n\) of \(x^0\). From now on we will therefore think of holomorphic germs at \(x^0 \in {\mathbb {R}}^n \subset {\mathbb {C}}^n\). We will use the fact that unique factorization holds in the ring of germs of holomorphic functions of several variables, [7, Theorem 6.2.2]. It follows from (2.19) that the germ of \(\Psi ^m\) at \(x^0\) must be a quotient of holomorphic germs, say \(\Psi ^m = u/v\), where u and v are holomorphic germs at \(x^0\) without common factor. Arguing similarly replacing (2.18) by

and denoting by \(c_{m,s}\) a constant \(\ne 0\) that depends on m and s we then see that the germ

must be real analytic near \(x^0\) for every s. For sufficiently large s this leads to a contradiction unless \(\Psi ^m = u/v\) is analytic near \(x^0\). Since we may assume that \(\Psi (x^0) > 0\), we can conclude that \(\Psi \) is real analytic. As was mentioned earlier, the equations (2.6) now show that all \(q_j\) must be real analytic. This completes the proof of Theorem 1. \(\square \)

3 Radon Transforms Supported in Hypersurfaces

For continuous compactly supported functions f we define the Radon transform Rf as the integral of f over the hyperplane \(L(\omega , p) = \{x \in {\mathbb {R}}^n;\, \omega \cdot x = p\}\) with respect to surface measure ds

Rf is of course an even function, \(R f(\omega , p) = R f(-\omega , -p)\). The manifold \({\mathcal {H}}_n\) of hyperplanes in \({\mathbb {R}}^n\) is identified with \(S^{n-1} \times {\mathbb {R}}\) with \((\omega , p)\) and \((-\omega , -p)\) identified. It is well known that the Radon transform Rf of a compactly supported distribution f can be defined by

for even test functions \(\varphi (\omega , p)\) on \(S^{n-1} \times {\mathbb {R}}\). Here the action of the distribution \(g \in {\mathcal {D}}'({\mathcal {H}}_n)\) on the test function \(\varphi \) is denoted \(\langle g, \varphi \rangle \), and a locally integrable function \(g(\omega , p)\) is identified with a distribution by the formula

where \(d\omega \) is area measure on \(S^{n-1}\), and \(R^* \varphi \) is defined by

For more details see [4] or [2, Sect. 2]. It was proved in [2] and [3] that the Radon transform of a compactly supported distribution can be supported in the set of tangent planes to a bounded convex domain \(D \subset {\mathbb {R}}^n\) only if the boundary of D is an ellipsoid. It has been asked if the following local version of that theorem is valid. Let D be a convex (not necessarily bounded) domain with \(C^1\) boundary, and denote by \(\Sigma _D \) the set of tangent planes to \(\partial D\), which is a hypersurface in \({\mathcal {H}}_n\). Let \(L_0\) be a tangent plane to \(\partial D\) at the point \(z \in \partial D\). Assume that there exists a distribution f that is supported in \({\overline{D}}\) such that the restriction of Rf to a neighborhood \(V \subset {\mathcal {H}}_n\) of \(L_0\) is supported in \(V \cap \Sigma _D\). The question is if \(\partial D\) must then be an ellipsoid in some neighborhood of z. A partial answer to this question was given by Mark Agranovsky [1]. If \(\partial D\) is \(C^1\) in a neighborhood of z, then Theorem 1 implies that under those conditions \(\Sigma _D\) must be a real analytic hypersurface in the manifold \({\mathcal {H}}_n\) in a neighborhood of \(L_0\) (Theorem 2). Moreover, if \(\partial D\) is assumed to be \(C^{1,1}\), then \(\partial D\) must itself be real analytic (Theorem 3). Here we have used the standard notation \(C^{1,1}\) to denote the set of \(C^1\) functions whose first derivatives are Lipschitz continuous, and a hypersurface is said to be \(C^{1,1}\) if its defining function is \(C^{1,1}\). We wish to point out that in the proofs of those facts we do not need to use the assumption that the distribution g is a Radon transform, but only the fact that certain elements are not in the analytic wave front set of g.

Let D be a strictly convex (not necessarily bounded) domain in \({\mathbb {R}}^n\) with \(C^1\) boundary and let \(L_0\) be a hyperplane that is tangent to the boundary \(\partial D\) of D at the point z. Note that the intersection \(L \cap D\) must be bounded for all hyperplanes L in some neighborhood of \(L_0\), since D is strictly convex. The set \({\mathcal {H}}_n\) of hyperplanes in \({\mathbb {R}}^n\) has a natural structure as a real analytic manifold. In a neighborhood of a hyperplane \(L(\omega ^0, p_0) = \{x \in {\mathbb {R}}^n;\, x \cdot \omega ^0 = p_0\}\) with \(\omega ^0_n \ne 0\) we can take \(\omega _1, \ldots , \omega _{n-1}, p\) as coordinates on \({\mathcal {H}}_n\).

Theorem 2

Assume that there exists a distribution f, supported in \({\overline{D}}\), and a neighborhood V of \(L_0\) such that the restriction \(g = \left. R f\right| _V\) is supported in the set \(\Sigma _D \subset {\mathcal {H}}_n\) of tangent planes to \(\partial D\). Then g has the form (1.1) in suitable coordinates, and the surface \(\Sigma = \Sigma _D\) and all \(q_j\) are real analytic in a neighborhood of \(L_0\).

For the proof of Theorem 2 we shall need the following well known fact.

Lemma 3

If \(g(\omega , p) = R f(\omega , p)\) is the Radon transform of a compactly supported distribution, then the conormal of every line \({\mathbb {R}}\ni p \mapsto (\omega , p)\) for \(\omega \in S^{n-1}\) is disjoint from the analytic wave front set of g.

Proof

In two dimensions, the assertion of the lemma is closely related to the well known fact that the so-called sinogram, which is a density plot of the Radon transform \(R f(\omega , p)\) in an \(\alpha \, p\) plane where \(\cos \alpha = \omega _1\), never contains discontinuities along curves with vertical tangents (the p-axis is vertical). The lemma is a special case of much stronger statements that are well known (see e.g. [6], Sects. 8.2 and 8.5), so we only sketch the proof. We first consider the case of the \(C^{\infty }\) wave front set, \(W\!F(g)\). Let \(L_0\) be an arbitrary hyperplane in \({\mathbb {R}}^n\). Choose coordinates so that \(L_0\) is defined by \(x_n = 0\). In a neighborhood V of \(L_0\), in fact in the open set of all hyperplanes \(L(\omega , p)\) with \(\omega _n \ne 0\), we can choose coordinates \(y_1, \ldots , y_n\) on the manifold of hyperplanes by \(y_j = \omega _j/\omega _n\) for \(1 \le j \le n-1\) and \(y_n = p/\omega _n\). Writing \(x = (x', x_n)\) and \(\omega = (\omega ', \omega _n)\) we note that \(x \cdot \omega = x' \cdot \omega ' + x_n \omega _n = p\) is equivalent to

The so-called parametric Radon transform \(R_p f\) can be written in terms of the y-coordinates

Since \(ds/dx' = \sqrt{1 + |y'|^2}\) this gives the following expression for Rf in terms of the y-coordinates

The factor \( \sqrt{1 + |y'|^2}\) is a real analytic function of y, so we don’t need to pay attention to it here. For each fixed \(x'\) the integrand, as a function of y, has the form

for some function h of one variable. The wave front set of such a function must be contained in the conormal of the level sets \(y_n - x' \cdot y' = c\), which consists of elements of the form \((y, \eta )\), where

with \(\lambda \in {\mathbb {R}}\setminus \{0\}\) and \(x = (x', x_n) \in {{\,\textrm{supp}\,}}f\). If f is supported in the ball \(|x| \le b\), it follows that, for any \((y, \eta ) \in W\!F(g)\), \(\eta \) must have the form (3.1) with \(|x'| \le b\), that is

In particular, if \((y, \eta ) \in W\!F(g)\), then \(\eta _n\) must be different from zero. This proves the assertion of the lemma with \(W\!F(g)\) instead of \(W\!F_A(g)\). The argument is exactly the same for the case of \(W\!F_A(g)\). This completes the proof. \(\square \)

Proof of Theorem 2

Lemma 3 shows that we can apply Theorem 1 to the distribution g and the hypersurface \(p = \Psi (\omega )\) in the manifold of hyperplanes. The conclusion is that \(\Psi (\omega )\) must be real analytic and that \(g(\omega , p)\) must be expressible in the form (1.1) in suitable coordinates, where the surface \(\Sigma \) and all densities \(q_j\) are real analytic.\(\square \)

It is important to observe that the set of tangent planes to a hypersurface \(S \subset {\mathbb {R}}^n\) can be a real analytic hypersurface in the manifold of hyperplanes, even if S is not smooth. For example, if D is the set \(\{x \in {\mathbb {R}}^2;\, x_2 > (3/4)|x_1|^{4/3} \}\), then the line \(x_2 = \xi _1 x_1 + \xi _2\) is tangent to \(\partial D\) if and only if \(\xi _2 = - \xi _1^4/4\). However, if the boundary \(\partial D\) in Theorem 2 is assumed to be in \(C^{1,1}\), then we can in fact conclude that the boundary is real analytic. This is an immediate consequence of the following property of the Legendre transform.

Proposition 3

Assume that the real-valued function \(h(x) = h(x_1, \ldots , x_n)\) is real analytic and strictly convex, and that its Legendre transform \(\widetilde{h}(\xi )\) is in \(C^{1,1}\). Then \(h''(x)\) is non-degenerate (as a quadratic form) and \(\widetilde{h}(\xi )\) is real analytic.

The assertion of Proposition 3 is a special case of Theorem 4.2.2 in Chapter X of [5].
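The example \(D = \{x_2 > (3/4)|x_1|^{4/3}\}\) mentioned before Proposition 3 can be checked numerically. The sketch below approximates the Legendre transform \(\sup _x (x \xi - h(x))\) of \(h(x) = (3/4)|x|^{4/3}\) by a brute-force search on a grid and compares it with \(\xi ^4/4\); the grid parameters and sample points are arbitrary choices made for this illustration.

```python
def h(x):
    """Boundary function of the example domain: h(x) = (3/4)|x|^(4/3)."""
    return 0.75 * abs(x) ** (4.0 / 3.0)

def legendre_on_grid(xi, half_width=10.0, steps=20001):
    """Approximate sup_x (x*xi - h(x)) by brute force on a uniform grid."""
    best = float("-inf")
    for i in range(steps):
        x = -half_width + 2.0 * half_width * i / (steps - 1)
        best = max(best, x * xi - h(x))
    return best

# The maximizer is x = xi**3, which lies inside the grid for these samples.
samples = [-1.5, -0.5, 0.0, 0.8, 2.0]
errors = [abs(legendre_on_grid(xi) - xi**4 / 4.0) for xi in samples]
```

The errors stay at the level of the grid resolution, consistent with the statement that the line \(x_2 = \xi _1 x_1 + \xi _2\) is tangent to \(\partial D\) if and only if \(\xi _2 = -\xi _1^4/4\), a real analytic relation even though h itself is only \(C^1\) at the origin.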

Theorem 3

Assume, in addition to the hypotheses of Theorem 2, that the boundary of D is in \(C^{1,1}\) in a neighborhood of \(z \in L_0 \cap \partial D\). Then the boundary of D is real analytic in a neighborhood of z.

Proof

Choose coordinates so that \(L_0\) is the hyperplane \(x_n = 0\) and D is given by \(x_n > h(x')\) in a neighborhood of \(z = (0, \ldots , 0)\). The hyperplane \(x_n = x' \cdot \xi ' - q\) is disjoint from D if and only if \(x' \cdot \xi ' - q \le h(x')\) for all \(x'\), that is,

Hence the hyperplane \(x_n = x' \cdot \xi ' - q\) is tangent to \(\partial D\) if and only if \(\widetilde{h}(\xi ') = q\). From Theorem 2 we know that the surface \(\Sigma = \Sigma _D\subset {\mathcal {H}}_n\) of tangent planes to \(\partial D\) is real analytic in a neighborhood V of \(L_0\). Using \(\xi _1, \ldots , \xi _{n-1}, q\) as coordinates on \(V \subset \mathcal H_n\) we see that the function \(\widetilde{h}(\xi ')\) must be real analytic near \(\xi ' = (0, \ldots , 0)\). Since h is convex, the Legendre transform of \(\widetilde{h}\) is equal to h, [5, ch. X, vol. 2, Sect. 1.3], [10, Sect. 12]. And since \(h(x')\) was assumed to be \(C^{1,1}\), Proposition 3 now implies that \(h(x')\) is real analytic, which completes the proof. \(\square \)

Since the proof of Proposition 3 is much easier than that of the considerably sharper Theorem 4.2.2 in [5], we have included a proof of Proposition 3 here. Our arguments give without additional effort the following slightly stronger statement.

Proposition 4

Assume that the real-valued function \(h(x) = h(x_1, \ldots , x_n)\) is strictly convex and in \(C^p\) for some integer \(p \ge 2\), and that its Legendre transform \(\widetilde{h}(\xi )\) is in \(C^{1,1}\). Then \(h''(x)\) is non-degenerate and \(\widetilde{h}(\xi )\) is in \(C^p\). Moreover, if h is real analytic, then \(\widetilde{h}\) is real analytic.

If \(h \in C^p\), \(p \ge 2\), and the second derivative \(h''(x)\) (considered as a quadratic form) is non-degenerate, then it is very easy to see that \(\widetilde{h}\) must belong to \(C^{p-1}\), and hence \(\widetilde{h} \in C^{\infty }\) if \(h \in C^{\infty }\). In fact

where x is given by

If \(h''(x)\) is non-degenerate, then the Jacobian of the map \(x \mapsto h'(x)\) is non-singular and \(h' \in C^{p-1}\), so x can be solved from (3.3) as a \(C^{p-1}\) function of \(\xi \), and plugging the result into (3.2) proves that \(\widetilde{h}(\xi )\) is \(C^{p-1}\). The same argument shows that \(\widetilde{h}\) is real analytic if h is real analytic. We shall see in a moment that this argument can be improved to show that \(\widetilde{h} \in C^p\) if \(h \in C^p\).
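The procedure just described, solving \(h'(x) = \xi \) for x and inserting the result into \(x \cdot \xi - h(x)\), can be sketched in one variable as follows. The sample function \(h(x) = x^2/2 + x^4/4\) is our choice (it is real analytic with \(h'' \ge 1\)), and Newton's method stands in for the implicit function theorem; none of this is taken from the text beyond the two defining relations.

```python
def h(x):
    return x**2 / 2 + x**4 / 4

def dh(x):
    return x + x**3

def d2h(x):
    return 1 + 3 * x**2

def solve_x(xi, iterations=50):
    """Solve h'(x) = xi by Newton's method; h'' >= 1, so the root is unique."""
    x = 0.0
    for _ in range(iterations):
        x -= (dh(x) - xi) / d2h(x)
    return x

def legendre(xi):
    """Legendre transform obtained by inserting x(xi) into x*xi - h(x)."""
    x = solve_x(xi)
    return x * xi - h(x)

# Central difference approximation of the derivative of the transform.
xi, eps = 0.7, 1e-5
slope = (legendre(xi + eps) - legendre(xi - eps)) / (2 * eps)
x_at_xi = solve_x(xi)
```

The finite-difference slope of the transform agrees with `x_at_xi` to within the discretization error, illustrating that the derivative of \(\widetilde{h}\) at \(\xi \) is the point x at which \(h'(x) = \xi \).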

If h is convex, the Legendre transform of \(\widetilde{h}(\xi )\) is equal to h. If h is \(C^1\) and strictly convex, then the map \(x \mapsto \xi \) that is defined by (3.3) is injective. Therefore its inverse must be continuous, since the inverse of a continuous and injective map on a compact set is continuous. Moreover, it is known that \(\widetilde{h}\) is differentiable if h is strictly convex, [5, ch. X, vol. 2, Sect. 4.1]. Interchanging the roles of h and \(\widetilde{h}\) we can conclude that

Alternatively, from (3.2) we get

This identity confirms that \(\widetilde{h}'(\xi )\) is continuous, if h is \(C^1\) and strictly convex. Moreover, if \(h \in C^p\), \(p \ge 2\), and \(h''(x)\) is non-degenerate, then it is clear that the map \(x \mapsto h'(x) = \xi \) is \(C^{p-1}\). If \(h''(x)\) is non-degenerate, the inverse of that map must then also be \(C^{p-1}\), that is, the map \(\xi \mapsto x = \widetilde{h}'(\xi )\) must be \(C^{p-1}\). This shows that \(\widetilde{h} \in C^p\) as claimed.

Therefore the assertions of Proposition 4 follow after we have proved the following.

Proposition 5

If \(h \in C^2\) is strictly convex and \(h''(0)\) is degenerate, then the Legendre transform \(\widetilde{h}(\xi )\) is not \(C^{1,1}\) in a neighborhood of \(\xi ^0 = h'(0)\).

Proof

If \(n = 1\) the assertion of Proposition 5 is trivial. To see this, assume that \(h\in C^{2}\) is strictly convex and that \(h(0) = h'(0) = 0\). The assumption that \(h''(0)\) is degenerate means in this case that \(h''(0) = 0\). Then \(h(x) = o(x^2)\) and \(h'(x) = o(x)\) as \(x \rightarrow 0\), so

\[ \frac{h'(x)}{x} \rightarrow 0 \quad \text{as } x \rightarrow 0. \tag{3.5} \]

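We shall use (3.4). Let x and \(\xi \) be connected by \(h'(x) = \xi \). Then \(\widetilde{h}'(\xi ) = x\), so \(h'(x)/x = \xi /\widetilde{h}'(\xi )\). Formula (3.5) now shows that \(\xi /\widetilde{h}'(\xi )\) tends to zero as \(\xi \rightarrow 0\), and hence

\[ \frac{\widetilde{h}'(\xi ) - \widetilde{h}'(0)}{\xi } = \frac{\widetilde{h}'(\xi )}{\xi } \]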

is unbounded, which proves that \(\widetilde{h}\) is not \(C^{1,1}\).
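A concrete instance of the one-dimensional case may help. The function \(h(x) = \tfrac{1}{4}x^4\) is chosen here purely for illustration and does not appear in the original text. It is strictly convex, belongs to \(C^2\), and satisfies \(h(0) = h'(0) = h''(0) = 0\). Its Legendre transform can be computed explicitly:

```latex
\[
\begin{aligned}
h'(x) &= x^3 = \xi \quad\Longrightarrow\quad
  x = \operatorname{sgn}(\xi )\,|\xi |^{1/3}, \\
\widetilde{h}(\xi ) &= x\xi - h(x)
  = |\xi |^{4/3} - \tfrac{1}{4}|\xi |^{4/3} = \tfrac{3}{4}|\xi |^{4/3}, \\
\widetilde{h}'(\xi ) &= \operatorname{sgn}(\xi )\,|\xi |^{1/3}, \qquad
\frac{\widetilde{h}'(\xi )}{\xi } = |\xi |^{-2/3} \rightarrow \infty
  \quad (\xi \rightarrow 0).
\end{aligned}
\]
```

Thus \(\widetilde{h}\) is \(C^1\), but \(\widetilde{h}'\) is only Hölder continuous of order 1/3 at the origin, so \(\widetilde{h}\) is not \(C^{1,1}\) near \(\xi = 0\).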

In considering the general case we may assume that \(h(0) = \partial _j h(0) = 0\) for all j. Then we can write a Taylor expansion of h(x) in the form

\[ h(x) = q_2(x) + o(|x|^2) \quad \text{as } x \rightarrow 0, \tag{3.6} \]

where \(q_2(x)\) is a homogeneous polynomial of degree 2. Choose coordinates so that

\[ q_2(x) = \sum _{j=1}^{k} a_j x_j^2, \tag{3.7} \]

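where all \(a_j > 0\). The assumption that \(h''(0)\) is degenerate implies that \(0 \le k<n\), and hence \(\partial _n^2 h(0) = 0\). Set \(v = (0, \ldots , 0,1)\) and

\[ \xi (t) = h'(tv), \quad |t| < \varepsilon , \tag{3.8} \]

for some \(\varepsilon > 0\). Then in particular

\[ |\xi (t)| = o(|t|) \quad \text{as } t \rightarrow 0. \tag{3.9} \]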

By (3.4) we have \(\widetilde{h}'(\xi (t)) = t v\) and in particular

\[ |\widetilde{h}'(\xi (t)) - \widetilde{h}'(\xi (0))| = |t|. \]

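Combining this with (3.8) and (3.9) we see that

\[ \frac{|\widetilde{h}'(\xi (t)) - \widetilde{h}'(\xi (0))|}{|\xi (t) - \xi (0)|} = \frac{|t|}{|\xi (t)|} \]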

must tend to infinity as \(t \rightarrow 0\), so \(\widetilde{h}(\xi )\) cannot be \(C^{1,1}\). This completes the proof of Proposition 5.\(\square \)
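The mechanism of the proof can be checked on a simple two-dimensional example, which is an illustrative assumption and not part of the original argument. Take \(n = 2\) and \(h(x) = \tfrac{1}{2}x_1^2 + \tfrac{1}{4}x_2^4\), so that \(q_2(x) = \tfrac{1}{2}x_1^2\), \(k = 1\), and \(v = (0,1)\). Writing \(\xi (t) = h'(tv)\), one finds:

```latex
\[
\begin{aligned}
h'(x) &= (x_1, x_2^3), \qquad \xi (t) = h'(tv) = (0, t^3), \\
\widetilde{h}(\xi ) &= \tfrac{1}{2}\xi _1^2 + \tfrac{3}{4}|\xi _2|^{4/3}, \qquad
\widetilde{h}'(\xi (t)) = (0, t) = tv, \\
\frac{|\widetilde{h}'(\xi (t)) - \widetilde{h}'(\xi (0))|}{|\xi (t) - \xi (0)|}
  &= \frac{|t|}{|t|^3} = t^{-2} \rightarrow \infty \quad (t \rightarrow 0).
\end{aligned}
\]
```

The difference quotient of \(\widetilde{h}'\) blows up along the degenerate direction v, while \(\widetilde{h}\) remains smooth in the \(\xi _1\) variable.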

As mentioned above, Theorem 1 can be used to simplify the proof of Theorem 1.1 in [3]. A crucial step in the proof of Theorem 3.1, which implies Theorem 1.1, was to show that the determinant of \(\Pi _0(\omega )\) in (5.4) is not identically zero. The determinant of \(\Pi _0(\omega )\) is equal to a multiple of \(q_{m-1}(\omega )q_{m-1}(-\omega )\), as can be seen from formula (5.5). Here \(q_{m-1}(\omega )\) is a function on \(S^{n-1}\), and it is the highest coefficient in an expression for a distribution on \({\mathcal {H}}_n\) with support in a hypersurface, similar to (1.1) above. Now, Theorem 1 shows that \(q_{m-1}(\omega )\) must be real analytic, and therefore it is clear that \(q_{m-1}(\omega )q_{m-1}(-\omega )\) cannot vanish identically unless \(q_{m-1}\) vanishes identically.

Change history

21 November 2022

The original online version of this article was revised: Missing Open Access funding information has been added in the Funding Note.

References

1. Agranovsky, M.: Locally polynomially integrable surfaces and finite stationary phase expansions. J. d’Anal. Math. 141, 23–47 (2020)

2. Boman, J.: A hypersurface containing the support of a Radon transform must be an ellipsoid. I: The symmetric case. J. Geom. Anal. 31, 2726–2741 (2020). https://doi.org/10.1007/s12220-020-00372-8

3. Boman, J.: A hypersurface containing the support of a Radon transform must be an ellipsoid. II: The general case. J. Ill-posed Inverse Probl. (2021). https://doi.org/10.1515/jiip-2020-0139

4. Helgason, S.: The Radon transform. Birkhäuser, Boston (1980)

5. Hiriart-Urruty, J.-B., Lemaréchal, C.: Convex analysis and minimization algorithms, vol. II. Springer-Verlag (1993)

6. Hörmander, L.: The analysis of linear partial differential operators. I. Springer, Berlin (1983)

7. Hörmander, L.: An introduction to complex analysis in several variables. Van Nostrand, Princeton (1966)

8. Hörmander, L.: Remarks on Holmgren’s uniqueness theorem. Ann. Inst. Fourier (Grenoble) 43, 1223–1251 (1993)

9. Kaneko, A.: Introduction to hyperfunctions. Kluwer, Tokyo (1988)

10. Rockafellar, R.: Convex analysis. Princeton University Press, Princeton (1970)

Funding

Open access funding provided by Stockholm University.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Boman, J. Regularity of a Distribution and of the Boundary of Its Support. J Geom Anal 32, 300 (2022). https://doi.org/10.1007/s12220-022-01021-y
