Abstract

We analyze the problem of reconstruction of a bandlimited function f from the space–time samples of its states \(f_t=\phi _t*f\) resulting from the convolution with a kernel \(\phi _t\). It is well-known that, in natural phenomena, uniform space–time samples of f are not sufficient to reconstruct f in a stable way. To enable stable reconstruction, a space–time sampling with periodic nonuniformly spaced samples must be used, as was shown by Lu and Vetterli. We show that the stability of reconstruction, as measured by a condition number, controls the maximal gap between the spatial samples. We provide a quantitative statement of this result. In addition, instead of irregular space–time samples, we show that uniform dynamical samples at sub-Nyquist spatial rate allow one to stably reconstruct the function \(\widehat{f}\) away from certain, explicitly described blind spots. We also consider several classes of finite dimensional subsets of bandlimited functions in which the stable reconstruction is possible, even inside the blind spots. We obtain quantitative estimates for it using Remez-Turán type inequalities. En route, we obtain a Remez-Turán inequality for prolate spheroidal wave functions. To illustrate our results, we present some numerics and explicit estimates for the heat flow problem.


1 Introduction

In this paper, we consider the sampling and reconstruction problem of signals \(u = u(t,x)\) that arise as an evolution of an initial signal \(f = f(x)\) under the action of convolution operators. The initial signal f is assumed to be in the Paley-Wiener space \(PW_c\), \(c>0\) (fixed throughout this paper) given by

\[ PW_c=\left\{ f\in L^2({\mathbb {R}}):\ {\text {supp}}\,\widehat{f}\subseteq [-c,c]\right\} , \]

with the Fourier transform normalized as \(\widehat{f}(\xi )=\int _{{\mathbb {R}}}f(t)e^{- it\xi }\,\mathrm {d}t\).

The functions u are solutions of initial value problems stemming from a physical system. Thus, due to the semigroup properties of such solutions, there is a family of kernels \(\{\phi _t: t> 0\}\) such that \(u(t,x)=\phi _t*f(x)\), \(\phi _{t+s}=\phi _t*\phi _s\) for all \(t,s\in (0,\infty )\), and \(f= \lim \limits _{t\rightarrow 0+}\phi _t*f\), \(f\in L^2({{\mathbb {R}}})\).

As we are primarily interested in physical systems, we typically consider the following set of kernels:

Observe that \(\phi \in L^1\) implies that \({\widehat{\phi }}\) is continuous and, therefore, the existence of \(\kappa _\phi > 0\) such that \(\widehat{\phi }\ge \kappa _\phi \) on \([-c,c]\) is equivalent to \(\widehat{\phi }>0\) on \([-c,c]\). We remark that some of our results hold for a less restrictive class of kernels.

Example 1.1

A prototypical example is the diffusion process with \({\widehat{\phi }}_t(\xi ) = e^{-t\sigma ^2\xi ^2}\), \(t>0\). It corresponds to the initial value problem (IVP) for the heat equation (with a diffusion parameter \(\sigma \not =0\))

for which the solution is given by \(u(x,t)=(\phi _t*f)(x)\).

Other examples include the IVP for the fractional diffusion equation

for which the solution is given by \(u(x,t)=(\phi _t*f)(x)\) with \({\widehat{\phi }}_t(\xi ) = e^{-t|\xi |^\alpha }\), and the IVP for the Laplace equation in the upper half plane

for which the solution is given by \(u(x,y)=(\phi _y*f)(x)\) with \({\widehat{\phi }}_y(\xi ) := e^{-y|\xi |}\).
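The three kernels of Example 1.1 are all given by explicit Fourier multipliers, so the semigroup law \(\phi _{t+s}=\phi _t*\phi _s\) reduces to a pointwise product on the Fourier side. The following is a minimal numerical sketch of that check; the function names, the frequency grid, and the values of t, s, and \(\alpha \) are illustrative assumptions, not part of the paper.

```python
import numpy as np

def heat_multiplier(xi, t, sigma=1.0):
    """Fourier multiplier exp(-t sigma^2 xi^2) of the heat kernel."""
    return np.exp(-t * sigma**2 * xi**2)

def fractional_multiplier(xi, t, alpha=1.5):
    """Fourier multiplier exp(-t |xi|^alpha) of fractional diffusion."""
    return np.exp(-t * np.abs(xi) ** alpha)

def poisson_multiplier(xi, y):
    """Fourier multiplier exp(-y |xi|) for the Laplace equation in the half plane."""
    return np.exp(-y * np.abs(xi))

xi = np.linspace(-3.0, 3.0, 601)   # illustrative frequency grid (assumption)
t, s = 0.3, 0.7                    # illustrative times (assumption)

multipliers = {
    "heat": heat_multiplier,
    "fractional": fractional_multiplier,
    "poisson": poisson_multiplier,
}

# phi_{t+s} = phi_t * phi_s becomes a pointwise product of multipliers.
semigroup_error = {
    name: float(np.max(np.abs(mult(xi, t + s) - mult(xi, t) * mult(xi, s))))
    for name, mult in multipliers.items()
}
print(semigroup_error)
```

Each multiplier equals 1 at \(\xi =0\) and stays strictly positive on any bounded band, which is the positivity requirement discussed for the class \(\Phi \).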

The following problem serves as a motivation for this paper.

Problem 1

Let \( \phi \in \Phi \), \(L>0\), and \(\Lambda \subset {{\mathbb {R}}}\) be a discrete subset of \({{\mathbb {R}}}\). What are the conditions that allow one to recover a function \(f \in PW_c\) in a stable way from the data set

The set of measurements (1.3) is the image of an operator \({\mathcal {T}}:PW_c\rightarrow L^2\big (\Lambda \times [0,L]\big )\). Thus, the stable recovery of f from (1.3) amounts to finding conditions on \(\Lambda , \phi \) and L such that \({\mathcal {T}}\) has a bounded inverse from \({\mathcal {T}}(PW_c)\) to \(PW_c\) or, equivalently, the existence of \(A,B>0\) such that

If for a given \(\phi \) and L the frame condition (1.4) is satisfied, we say that \(\Lambda =\Lambda _{\phi ,L}\) is a stable sampling set.

Remark 1.2

It was shown in [5, Theorem 5.5] that \(\Lambda _{\phi ,L}\) is a stable sampling set for some \(L>0\) if and only if \(\Lambda _{\phi ,1}\) is a stable sampling set.

Thus, for qualitative results, we will only consider the case of \(L=1\). For quantitative results, however, we may keep L in order to estimate the optimal time length of measurements.

Remark 1.3

Whenever (1.4) holds, standard frame methods can be used for the stable reconstruction of f [11].

Let us discuss Problem 1 in more detail in the case of our prototypical example.

1.1 Sampling the Heat Flow

Consider the problem of sampling the temperature in a heat diffusion process initiated by a bandlimited function \(f \in PW_c\):

where \(\phi _t\) is the heat kernel at time t:

with a parameter \(\sigma \not =0\). According to Shannon’s sampling theorem, f can be stably reconstructed from equispaced samples \(\{f(k/T): k \in {\mathbb {Z}}\}\) if and only if the sampling rate T is bigger than or equal to the critical value \(T =\displaystyle \frac{c}{\pi }\), known as the Nyquist rate. The Nyquist bound is universal in the sense that it also applies to irregular sampling patterns: if a bandlimited function can be stably reconstructed from its samples at \(\Lambda \subseteq {\mathbb {R}}\), then the lower Beurling density

satisfies \(D^{-}(\Lambda ) \ge \displaystyle \frac{c}{\pi }\). Recall that the upper Beurling density is defined by

We would like to know whether the spatial sampling rate can be reduced by using the information provided by the following spatio-temporal samples:

Observe that the amount of collected data in (1.6) is not smaller than that in the case of sampling at the Nyquist rate \(T=\displaystyle \frac{c}{\pi }\). If \(T <\displaystyle \frac{c}{\pi }\), however, the density of sensors is smaller, and thus such a sampling procedure may provide considerable cost savings.

Lu and Vetterli showed [16] that for all \(T < \displaystyle \frac{c}{\pi }\) there exist bandlimited signals with norm 1 that almost vanish on the samples (1.6), i.e. stable reconstruction is impossible from (1.6). As a remedy, they introduced periodic, nonuniform sampling patterns \(\Lambda \subseteq {\mathbb {R}}\) that do lead to a meaningful spatio-temporal trade-off: there exist sets \(\Lambda \subseteq {\mathbb {R}}\) that have sub-Nyquist density and, yet, lead to the frame inequality:

with \(A,B>0\); see Example 4.1 for a concrete construction. The emerging field of dynamical sampling investigates such phenomena in great generality (see, e.g., [1,2,3,4,5]).

As follows from Example 4.1, the estimates (1.7) may hold with an arbitrarily small sensor density. The meaningful trade-off between spatial and temporal resolution, however, is limited by the desired numerical accuracy. For example, in the following theorem we relate the maximal gap of a stable sampling set to the bounds from (1.7).

Theorem 1.4

Let \(\Lambda \subseteq {\mathbb {R}}\) be such that (1.7) holds. Then there exists an absolute constant \(K>0\) such that, for \(\displaystyle R\ge K\max \left( \frac{B}{A},\frac{1}{c}\right) \) and every \(a\in {\mathbb {R}}\), we have \([a-R,a+R]\cap \Lambda \ne \emptyset \). In particular, we have \(D^-(\Lambda )\ge K^{-1}\min \left( \frac{A}{B},c\right) \) and \(D^+(\Lambda )\le KB\).

Theorem 4.4, which is a more general version of the above result, provides a more explicit dependence of K on the parameters of the problem.

Besides the constraints implied by Theorem 1.4, the special sampling configurations of Lu and Vetterli that lead to (1.7) lack the simplicity of regular sampling patterns. In this article, we explore a different solution to the diffusion sampling problem. We consider sub-Nyquist equispaced spatial sampling patterns (1.6) with \(T=\displaystyle \frac{c}{m\pi }\), \(m \in \mathbb {N}\), and restrict the sampling/reconstruction problem to a subset \(V \subseteq PW_c\), aiming for an inequality of the form:

Specifically, we consider the following signal models.

Away from blind spots. We will identify a set E with measure arbitrarily close to \(2c\) (the measure of \([-c,c]\)) such that (1.8) holds with \(V=V_E=\{f\in PW_c: {\text {supp}}\widehat{f} \subseteq E\}\). In effect, E is the set \([-c,c]\setminus {\mathcal {O}}\) where \({\mathcal {O}}\) is a small open neighborhood of a finite set, i.e., E avoids a certain number of “blind spots.”

Theorem 1.5

Let \( \phi \in \Phi \) and \(m\ge 2\) be an integer. Then for any \(r>0\) there exists a certain compact set \(E\subseteq [-c,c]\) of measure at least \(2c-r\) such that any \(f\in V_E\) can be recovered from the samples

in a stable way.

The set E in the above theorem depends only on \(\phi \) and the choice of r. The stable recovery in this case means that (1.8) holds with \(B=1\) and some \(A > 0\) which is estimated in a more explicit version of the above result, Theorem 2.8.

Prolate spheroidal wave functions. The Prolate Spheroidal Wave Functions (PSWFs) are eigenfunctions of an integral operator known as the time-band limiting operator or sinc-kernel operator

Using the min-max theorem, we get that \(\psi _{n,c}\) is the norm-one solution of the following extremal problem

where the condition \(f\in {\text {span}}\{\psi _{k,c}:\ k<n\}^\perp \) is void for \(n=0\). The family \((\psi _{n,c})_{n\ge 0}\) forms an orthogonal basis for \(PW_c\) and, at the same time, an orthonormal sequence in \(L^2(-1,1)\).

We consider the N-dimensional space

The Landau-Pollak-Slepian theory shows that this subspace provides an optimal approximation of a bandlimited function that is concentrated on \([-1,1]\). More precisely, \(V=V_N\) minimizes the approximation error

among all N-dimensional subspaces of \(PW_c\).

Sparse sinc translates with free nodes. In this model, we let

be the class of linear combinations of N arbitrary translates of the sinc kernel \( {\text {sinc}}(x)=\frac{\sin x}{ x} \). Note that \(V_N\) is not a linear space. However, \(V_N - V_N \subseteq V_{2N}\). Therefore, (1.8) with \(V=V_{2N}\) implies

which ensures the numerical stability of the sampling problem \(f \mapsto \{f_t(m\pi k/c): k \in {\mathbb {Z}},\ 0 \le t \le 1 \}\) restricted non-linearly to the class \(V_N\). In other words, if (1.8) holds with \(V=V_{2N}\) then any \(f\in V_N\) can be stably reconstructed from the samples (1.6).

Fourier polynomials. As our last model, we consider the Fourier image of the space of polynomials of degree at most N restricted to the unit interval. Explicitly,

where \(D: PW_c \rightarrow PW_c\) is the differential operator \(Df = f^\prime \). Observe that the union of such \(V_N\), \(N\in {\mathbb {N}}\), is dense in \(PW_c\).

In this article, we show that each of the above-mentioned signal models regularizes the diffusion sampling problem, albeit with possibly very large condition numbers.

Theorem 1.6

Let \(m \ge 2\) be an integer and let \(\phi \) be given by \(\widehat{\phi }(\xi )=e^{-\sigma ^2\xi ^2}\). Let \(V=V_N\) be given by (1.9), (1.10), or (1.11). Then (1.8) holds with

where the \(\kappa _j\)’s are positive constants that depend on c only.

We provide a more precise expression for the lower frame constant in Theorem 3.5. Note that the lower bound deteriorates when \(\sigma ^2 \rightarrow 0\) (no diffusion) and \(\sigma ^2 \rightarrow +\infty \) (very rapid diffusion). This agrees with the intuition and numerical experiments for (non-bandlimited) sparse initial conditions presented in [20]: if \(\sigma ^2\) is very small, because of spatial undersampling, some components of f may be hidden from the sensors, while for large \(\sigma ^2\) the diffusion completely blurs out the signal and no information can be extracted.

Remark 1.7

To simplify the discussion we take \(c=1/2\) in this remark. There are instances when Theorem 1.6 applies for a signal \(f\in V_N\) which cannot be recovered simply from its samples on, say, \(2{{\mathbb {Z}}}\). As an example, we offer \(V_1\) given by (1.10) with \(\lambda _1 = 1\). The samples at time \(t=0\) are not sufficient to identify each signal since \({\text {sinc}}(\cdot -1)\in V_N\) vanishes on \(m {\mathbb {Z}}\), \(m\ge 2\). Similarly, for Theorem 1.5: the function \(\sin (\omega \cdot ) {\text {sinc}}(\frac{\cdot }{a})\), with an appropriately chosen a and \(\omega \), belongs to \(V_E\) and vanishes on \(m{{\mathbb {Z}}}\) for \(m\ge 2\). In finite dimensional subspaces \(V_N\), e.g., given by (1.9) and (1.11), sampling at time \(t=0\) with any \(m \in {\mathbb {N}}\) may be sufficient for stable recovery. However, the expected error of reconstruction in the presence of noise will be reduced if temporal samples are used in addition to those at \(t=0\). Theorems 1.5 and 1.6 can be used together. For example, a function f can be reconstructed away from the blind spots using Theorem 1.5 and approximated around the blind spots using Theorem 1.6.

1.2 Technical Overview

Lu and Vetterli explain the impossibility of subsampling the heat-flow of a bandlimited function on a grid (1.6) as follows [16]. The function with Fourier transform

is formally bandlimited to \(I =[-c,c]\) if \(T<c\), and vanishes on the lattice \(\tfrac{\pi }{T} {\mathbb {Z}}\). Moreover, f is an eigenfunction of the diffusion operator since

see (1.2) and (1.5). Hence, all the diffusion samples (1.6) vanish, although \(f \not \equiv 0\). While no Paley-Wiener function is infinitely concentrated at \(\{-T,T\}\), a more formal argument can be given by regularization. If \(\eta : {\mathbb {R}} \rightarrow {\mathbb {R}}\) is continuous and supported on \([-1,1]\) and \(\eta _\varepsilon (x) = \varepsilon ^{-1} \eta (x/\varepsilon )\), then \(f \cdot \widehat{\eta }_\varepsilon \in PW_c\) and provides a counterexample to (1.4), provided that \(\varepsilon \) is sufficiently small.
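The obstruction described above can be illustrated numerically. The sketch below is not the regularized counterexample itself: it uses the pure sine \(\sin (cx/m)\), which is only formally bandlimited (it is not in \(L^2\)), and the sub-Nyquist grid \(\frac{m\pi }{c}{\mathbb {Z}}\) that appears in Sect. 1.1. The values of c, m, \(\sigma \), the quadrature window, and the helper name `heat_flow_of_sine` are assumptions made for illustration.

```python
import numpy as np

c, m, sigma = 1.0, 2, 1.0          # illustrative parameters (assumptions)
omega = c / m                      # frequency inside the band [-c, c]
k = np.arange(-50, 51)
grid = m * np.pi * k / c           # sub-Nyquist sampling grid (m*pi/c) Z

def heat_flow_of_sine(x0, t, half_width=40.0, n=80001):
    """Evaluate (phi_t * sin(omega .))(x0) by a Riemann sum.

    phi_t is the Gaussian with Fourier transform exp(-t sigma^2 xi^2),
    i.e. phi_t(y) = exp(-y^2 / (4 a)) / sqrt(4 pi a) with a = t sigma^2.
    """
    y = np.linspace(-half_width, half_width, n)
    dy = y[1] - y[0]
    a = t * sigma**2
    kernel = np.exp(-y**2 / (4.0 * a)) / np.sqrt(4.0 * np.pi * a)
    return float(np.sum(kernel * np.sin(omega * (x0 - y))) * dy)

t = 0.5
# The sine vanishes on the grid at time 0 ...
samples_at_zero = np.sin(omega * grid)
# ... it is an eigenfunction of the diffusion, with eigenvalue exp(-t sigma^2 omega^2) ...
eigen_gap = heat_flow_of_sine(1.3, t) - np.exp(-t * sigma**2 * omega**2) * np.sin(omega * 1.3)
# ... so its diffused states vanish on the grid as well.
sample_at_later_time = heat_flow_of_sine(grid[60], t)
print(np.max(np.abs(samples_at_zero)), eigen_gap, sample_at_later_time)
```

All three printed quantities should be at the level of rounding error, which is the numerical counterpart of the statement that every diffusion sample vanishes although the function does not.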

As we show below in Sect. 2.1, a similar phenomenon holds for more general diffusion kernels \(\phi \) as in (1.1). Indeed, an analysis along the lines of the Papoulis sampling theorem [18] shows that the diffusion samples (1.6) of a function \(f \in PW_c\) do not lead to a stable recovery of \(\widehat{f}\). However, these samples do allow for the stable recovery away from certain blind spots determined by \(\phi \); that is, one can effectively recover \(\widehat{f} \cdot \mathbf {1}_{E}\), for a certain subset \(E \subseteq I\) of positive measure (\(\mathbf {1}_E\) denotes the characteristic function of the set E). If we, furthermore, restrict the sampling problem to one of the finite dimensional spaces \(V=V_N\) given by (1.9), (1.10), or (1.11), we may then infer all other values of \(\widehat{f}\). The main tools, in this case, are Remez-Turán-like inequalities of the form:

For Fourier polynomials (1.11) the classical Remez-Turán inequality provides an explicit constant \(C_E\), while the case of sparse sinc translates (1.10) is due to Nazarov [17]. The corresponding inequality for prolate spheroidal wave functions (1.9) is new and a contribution of this article (our technique relies on [15]).

1.3 Paper Organization and Contributions

In Sect. 2, we show that uniform dynamical samples at sub-Nyquist rate allow one to stably reconstruct the function \(\widehat{f}\) away from certain, explicitly described blind spots determined by the kernel \(\phi \). We also provide an upper and lower estimate for the lower frame bound in (1.8). The upper estimate relies on the standard formulas for Pick matrices (see, e.g. [7, 10]). The lower estimate is far more intricate and is based on the analysis of certain Vandermonde matrices. We also provide some numerics and explicit estimates in the case of the heat flow problem.

In Sect. 3, we restrict the problem to the sets \(V=V_N\) given by (1.9), (1.10), or (1.11). We provide quantitative estimates for the frame bounds in (1.8). En route, we obtain an explicit Remez-Turán inequality for prolate spheroidal wave functions – a result which we find interesting in its own right.

In Sect. 4, we discuss the case of irregular spatial sampling. We recall that a stable reconstruction may be possible with sets \(\Lambda \) that have an arbitrarily small (but positive) lower density. Nevertheless, we show that the maximal gap between the spatial samples (and, hence, the lower Beurling density) is controlled by the condition number of the problem (i.e. the ratio \(\frac{B}{A}\) of the frame bounds).

2 Recovering a Bandlimited Function Away from the Blind-Spot

2.1 Dynamical Sampling in \(PW_c\)

In this section, we recall some of the results on dynamical sampling from [4, 5] and adapt them for problems studied in this paper.

For \(\phi \in L^1\), consider the function

that is, the 2c-periodization of the piece of \({\widehat{\phi }}\) supported in \([-c,c)\). Recall that we consider kernels from the set \(\Phi \) given by (1.1). Hence,

We also write

Next, we introduce the sampled diffusion matrix, which is the \(m\times m\) matrix-valued function given by

where

Remark 2.1

Observe that the matrix function \({\mathcal {B}}_m\) is m-periodic. Its eigenvalues, however, are 1-periodic because the matrices \({\mathcal {B}}_m(\xi )\) and \({\mathcal {B}}_m(\xi +k)\), \(k\in {{\mathbb {Z}}}\), are similar via a circular shift matrix.

The following lemma explains the role of the sampled diffusion matrix. In the lemma, we let

Note that if we recover \(\mathbf{f}(\xi )\) for \(\xi \in [0,1]\) then we can recover \(f_p\). Observe also that

In other words, \(f\mapsto \sqrt{\frac{2c}{m}}\mathbf{f}: PW_c\rightarrow L^2([0,1],{\mathcal {M}}_{m,1}(\mathbb {C}))\) is an isometric isomorphism.

Lemma 2.2

For \(f \in PW_c\),

Proof

Observe that it suffices to prove the result in \(PW_c\cap {\mathcal S}({{\mathbb {R}}})\) (the Schwartz class). Consider the function

Using the Poisson summation formula and the definition of \(f_t\), we get

Note that the functions \(b(\cdot , t)\) are 1-periodic,

and thus

Combining the last equation with Parseval’s relation

yields the desired conclusion. \(\square \)

Remark 2.3

Lemma 2.2 shows that the stability of reconstruction from spatio-temporal samples is controlled by the condition number of the self-adjoint matrices \({\mathcal {B}}_m(\xi )\) in (2.12). For symmetric \(\phi \in \Phi \) and \(m \ge 2\), however,

which precludes the stable reconstruction of all \(f \in PW_c\), see, e.g., [4]. This adds to our explanation of the phenomenon of blind spots in Sect. 1.2. We can nonetheless hope to find a large set \(\widetilde{E} \subseteq [0,1]\) such that \(\lambda _{\mathrm {min}} \big ({\mathcal {B}}_m(\xi ) \big )\ge \kappa \) for \(\xi \in \widetilde{E}\). Then, repeating the computation in (2.14), we get

where \(E=\displaystyle \left( \frac{2c}{m}(\tilde{E}+{{\mathbb {Z}}})\right) \cap [-c,c]\).

In the following example, we offer some numerics. To simplify the computations, we represent \({\mathcal {B}}_m(\xi )\) in (2.12) as a Pick matrix (see, e.g., [7, 10]). For \(\xi \in [-c,c)\), we write \(\widehat{\phi }(\xi ) = e^{-\psi (\xi )},\) so that \(\psi \ge 0\) and \(\psi (0)= 0,\) and obtain for \(j, k = 0,\ldots , m-1\),

where the indices \(j^\prime ,k^\prime \) are in the set

m divides \(|j-j^\prime |\) and \(|k-k^\prime |\), and j, k, and \(\xi \) are not 0 simultaneously. Thus

Observe that \(({\mathcal {B}}_m)_{00}(0) = 1\).

Example 2.4

Here, we choose \(\phi \) to be the Gaussian function, i.e.,

for various values of \(\sigma \not = 0\). Hence, \(\psi (\xi ) = \sigma ^2\xi ^2\), and we get

with \(j^\prime \), \(k^\prime \), and \(({\mathcal {B}}_m)_{00}(0)\) as above.

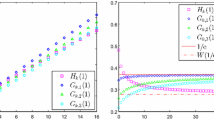

In Figure 1, we show the condition numbers of the matrices \({\mathcal {B}}_m(\xi )\) with \(\xi =0.45\), \(c=1/2\), \(m\in \{2,3,5\}\), and \(\sigma \) varying from 1 to 200.

In Figure 2, we also show the condition numbers of the matrices \({\mathcal {B}}_m(\xi )\). This time, however, still \(c=1/2\), the parameter \(\sigma \) is fixed to be 200, whereas the point \(\xi \) is allowed to vary from 0.35 to 0.49. We still have \(m\in \{2,3,5\}\).
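The kind of computation behind Figures 1 and 2 can be sketched as follows. The sketch assumes that the entries of \({\mathcal {B}}_m(\xi )\) have the Pick-type form \(\frac{1-c_jc_k}{x_j+x_k}\) with \(x_j=\psi \left( \frac{2c}{m}(\xi +j)\right) \) evaluated at the 2c-periodized node and \(c_j=e^{-x_j}\), as described in Sect. 2.2, with the value 1 when \(x_j+x_k=0\). The function name `sampled_diffusion_matrix` and the chosen values of \(\sigma \) are hypothetical and are not taken from the authors' experiments.

```python
import numpy as np

def sampled_diffusion_matrix(xi, m, sigma, c=0.5):
    """Assumed closed form of B_m(xi) for the Gaussian kernel.

    Entry (j, k) is (1 - exp(-(x_j + x_k))) / (x_j + x_k), where
    x_j = sigma^2 * node_j^2 and node_j is 2c/m * (xi + j) wrapped into [-c, c).
    """
    j = np.arange(m)
    nodes = 2.0 * c / m * (xi + j)
    nodes = (nodes + c) % (2.0 * c) - c          # 2c-periodization
    x = sigma**2 * nodes**2                      # psi(xi) = sigma^2 xi^2
    s = x[:, None] + x[None, :]
    safe = np.where(s > 0, s, 1.0)               # avoid 0/0 when x_j = x_k = 0
    return np.where(s > 0, -np.expm1(-safe) / safe, 1.0)

# Condition numbers at xi = 0.45, c = 1/2, for a few illustrative sigma values.
conds = {
    (m, sigma): float(np.linalg.cond(sampled_diffusion_matrix(0.45, m, sigma)))
    for m in (2, 3, 5)
    for sigma in (10.0, 50.0, 200.0)
}
for key, value in sorted(conds.items()):
    print(key, value)
```

Replacing the fixed point \(\xi =0.45\) by a grid of values in \([0.35,0.49]\) with \(\sigma =200\) gives the setting described for Figure 2.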

2.2 Estimating the Minimal Eigenvalue of the Sampled Diffusion Matrix

In this subsection, we use Vandermonde matrices to obtain a lower estimate for the eigenvalue \(\lambda ^{(m)}_{\min }(\xi )\) of the matrices \({\mathcal {B}}_m(\xi )\) in (2.12). We also present an upper estimate for \(\lambda ^{(m)}_{\min }(\xi )\), which follows from the general theory of Pick matrices [7, 10].

We begin with the following auxiliary result.

Lemma 2.5

Let \(v_0, v_1, \ldots , v_{m-1}\) be m distinct non-zero real numbers and let \(\mathbf {v}=(v_0,\ldots ,v_{m-1})\). For \(k\in {\mathbb {N}}\), define a function \(\Psi _k:{{\mathbb {R}}}\rightarrow {{\mathbb {R}}}\) by \(\Psi _k(t)=\tfrac{1-t^2}{1-t^{2/k}}\) if \(t\ne 1\) and \(\Psi _k(1)=k\). For \(j=0,\ldots ,m-1\), define

Let \(\sigma =\left( \sum _{j=0}^{m-1}\sigma _j^2\right) ^{1/2}\), \(\gamma _- = \min _{j} |v_j| >0\), \(\gamma _+=\max _{j} |v_j|\) and let

For \(N\in \mathbb N\), let \(W_N\) be the \((mN)\times m\) Vandermonde matrix associated to \(\mathbf {v}_N = (v_0^{\frac{1}{N}}, v_1^{\frac{1}{N}}, \ldots , v_{m-1}^{\frac{1}{N}})\), i.e.,

Then for each \(x\in \mathbb {C}^m\), we have

Proof

Let V be the \(m\times m\) Vandermonde matrix associated to \(\mathbf {v}\):

Note that the Frobenius norm of V and its determinant are given by

Recall from [23] an estimate for the minimal singular value of an \(m\times m\) matrix A:

Specifying this to V we get \(\sigma _{\min }(V)\ge \alpha \). As \(\Vert V\Vert \le \Vert V\Vert _F\), it follows that, for all \(x\in \mathbb {C}^m\),

Let \(D_N\) be the diagonal matrix with \(\mathbf{v}_{N}\) on the main diagonal. Since

we deduce from (2.22) that

Moreover, we have \(\gamma _-^{\frac{2\ell }{N}}\Vert x\Vert ^2\le \Vert D_N^\ell x\Vert ^2 \le \gamma _+^{\frac{2\ell }{N}}\Vert x\Vert ^2\) by definition of \(D_N\). The conclusion now follows by summing the two geometric sequences. \(\square \)
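The ingredients of this proof can be checked numerically. The sketch below makes three assumptions that are not confirmed by the text: the nodes are taken positive so that the roots \(v_j^{1/N}\) are real; the estimate from [23] is taken in the form \(\sigma _{\min }(A)\ge |\det A|\left( \frac{m-1}{\Vert A\Vert _F^2}\right) ^{(m-1)/2}\) (an inequality that holds in general by the arithmetic-geometric mean inequality applied to the squared singular values); and \(W_N\) is taken to split, after reordering its rows, into the blocks \(VD_N^r\), \(r=0,\ldots ,N-1\), as the use of \(D_N^\ell \) in the proof suggests. The helper names and the node values are illustrative.

```python
import numpy as np

def vandermonde(v, rows):
    """rows x len(v) matrix with entries v_j**l, l = 0, ..., rows - 1."""
    return np.vander(v, N=rows, increasing=True).T

def Psi(k, t):
    """Psi_k(t) = (1 - t^2) / (1 - t^(2/k)), with Psi_k(1) = k."""
    return float(k) if t == 1 else (1.0 - t**2) / (1.0 - t ** (2.0 / k))

v = np.array([0.3, 0.55, 0.8, 0.95])     # illustrative positive nodes (assumption)
m, N = len(v), 3
V = vandermonde(v, m)

# Determinant and Frobenius norm of the square Vandermonde matrix.
det_V = np.prod([v[k] - v[j] for j in range(m) for k in range(j + 1, m)])
fro_sq = np.sum(V**2)

# Assumed form of the singular value estimate, compared with the true value.
lower_bound = abs(det_V) * ((m - 1) / fro_sq) ** ((m - 1) / 2)
sigma_min = np.linalg.svd(V, compute_uv=False)[-1]

# Block decomposition: ||W_N x||^2 = sum_r ||V D_N^r x||^2.
W = vandermonde(v ** (1.0 / N), m * N)
D = np.diag(v ** (1.0 / N))
rng = np.random.default_rng(0)
x = rng.standard_normal(m) + 1j * rng.standard_normal(m)
lhs = np.linalg.norm(W @ x) ** 2
rhs = sum(np.linalg.norm(V @ np.linalg.matrix_power(D, r) @ x) ** 2 for r in range(N))

# The geometric sums appearing at the end of the proof are values of Psi_N.
gamma = v.min()
geometric_sum = sum(gamma ** (2.0 * r / N) for r in range(N))
print(sigma_min, lower_bound, lhs, rhs, geometric_sum, Psi(N, gamma))
```

The last comparison shows where \(\Psi _N\) comes from: \(\sum _{\ell =0}^{N-1}\gamma ^{2\ell /N}=\Psi _N(\gamma )\) for \(\gamma \ne 1\).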

Note that the function \(\Psi _N\) is increasing on \((0,+\infty )\) and that, for \(t\ne 1\), \(t>0\)

Corollary 2.6

With the notation of Lemma 2.5, assume further that \(0<\nu \le v_j\le 1\) and \(m\ge 2\). Let

Then for each \(x\in \mathbb {C}^m\), we have

Proof

Indeed, \(\nu \le \gamma _-\le \gamma _+\le 1\) so \(\Psi _N(\nu )\le \Psi _N(\gamma _-)\) and \(\Psi _N(\gamma _+)\le \Psi _N(1)=N\).

Further, since \(v_j\le 1\), \(\sigma ^2\le m^2\). Moreover, the derivative of \(\left( \frac{t-1}{t}\right) ^{(t-1)/2}=\left( 1-\frac{1}{t}\right) ^{(t-1)/2}\) is

for \(t\ge 1\). Thus,

It follows that \(\alpha \) in the statement of Lemma 2.5 satisfies

and the result is established. \(\square \)

Proposition 2.7

Let \( \phi \in \Phi \). Define

Then, for each \(x\in \mathbb {C}^m\), we have

Proof

We fix \(\xi \) and apply Corollary 2.6 to \(v_j =\displaystyle ({\widehat{\phi }})_p\left( \frac{2c}{m}(\xi +j)\right) ^{\frac{1}{m}}\). With \({\widetilde{\alpha }}\) given by (2.24), we get

On the other hand, \(\frac{1}{mN} W_N^*W_N\) equals the left-end mN-term Riemann sum for the integral defining \({\mathcal {B}}_m(\xi )\). It follows that

Using (2.23), we get

Finally, note that if \(0<a,b\le 1\), using the mean value theorem, there is an \(\eta \in (a,b)\) such that

Therefore

establishing the postulated estimates. \(\square \)
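The Riemann sum step of the proof can be illustrated numerically. The sketch assumes that the entries of \({\mathcal {B}}_m(\xi )\) are the integrals \(\int _0^1 (a_ja_k)^t\,\mathrm {d}t\) with \(a_j=({\widehat{\phi }})_p\left( \frac{2c}{m}(\xi +j)\right) \in (0,1]\), which is consistent with the Pick form used in this section. The values of \(a_j\) and the function names are hypothetical.

```python
import numpy as np

def riemann_gram(a, N):
    """(1 / (mN)) W_N^* W_N for the Vandermonde matrix of v_j^(1/N), v_j = a_j^(1/m)."""
    m = len(a)
    ell = np.arange(m * N)
    W = a[None, :] ** (ell[:, None] / (m * N))   # entries a_j^(ell / (mN))
    return W.T @ W / (m * N)

def closed_form(a):
    """Integral of (a_j a_k)^t over [0, 1], entrywise (assumed form of B_m)."""
    s = -np.log(a)[:, None] - np.log(a)[None, :]
    safe = np.where(s > 0, s, 1.0)
    return np.where(s > 0, -np.expm1(-safe) / safe, 1.0)

a = np.array([0.9, 0.6, 0.3])     # illustrative values of the periodized multiplier
N = 500
difference = riemann_gram(a, N) - closed_form(a)
print(difference.max(), 1.0 / (len(a) * N))
```

Since \(t\mapsto (a_ja_k)^t\) is non-increasing, the left-end sum overestimates the integral by at most \(\frac{1}{mN}\) in each entry, so the difference vanishes as \(N\rightarrow \infty \).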

For an upper estimate of the minimal eigenvalue \(\lambda ^{(m)}_{\min }(\xi )\) we use the estimates of the singular values of Pick matrices by Beckermann and Townsend [7]. For \(p_j\in \mathbb {C}\), \( j = 1 , \dots , m\), and \(0<a\le x_1< x_2< \dots < x_m \le b\) let

be the corresponding Pick matrix. Then the smallest singular value \(s_{\min }\) of \(P_m\) is bounded above by

where \(s_{\max }\) is the largest singular value.

If \((\widetilde{ P}_m)_{jk} = \frac{1-c_jc_k}{x_j+x_k}\), then \(\widetilde{P}_m\) is related to a Pick matrix of the form (2.25) via the diagonal matrix \(D = \mathrm {diag} (1+c_j)\):

with \(p_j = \frac{1-c_j}{1+c_j}\), \(c_j\ne -1\).

In our case, see (2.20), \(x_j= \psi \left( \frac{2c}{m}(\xi +j)\right) \) and \(c_j = e^{-x_j} \in (0,1]\), so \(\mathrm {Id}\le D \le 2\mathrm {Id}\) and the singular values of \(\mathcal {B}_m (\xi )\) and the corresponding Pick matrix \(P_m\) differ at most by a factor 4. Therefore, (2.26) holds with \(a (\xi ) = \min \left\{ \psi \left( \frac{2c}{m}(\xi +k)\right) : k\in I_\xi \right\} \) and \(b (\xi ) = \max \left\{ \psi \left( \frac{2c}{m}(\xi +k)\right) : k\in I_\xi \right\} \), \(I_\xi \) defined in (2.19), and an additional factor 4 provided that \(a(\xi ) \ne 0\).

For our main examples, we have \(\psi (\xi ) = |\xi |^\alpha \), \(\alpha > 0\). This yields

So for the smallest singular value of \(\mathcal {B}_m(\xi )\) we obtain the estimate

Observe that the Beckermann-Townsend estimate (2.26) holds for all Pick matrices with the same values for \(a=\min x_j\) and \(b=\max x_j\) and is completely independent of the particular distribution of the \(x_j\). Regardless, it shows that the condition number grows nearly exponentially with m, establishing limitations on how well the space–time trade-off can work numerically. Of course, the condition number may be much worse if two values \(x_j\) and \(x_{j+1}\) are close together (if \(x_j = x_{j+1}\), then \(P_m\) is singular). Thus, (2.27) is an optimistic upper estimate for \(\lambda ^{(m)}_{\min }(\xi )\). By comparison, our lower estimate in Proposition 2.7 depends crucially on the distribution of the parameters \(x_j\) and is much harder to obtain. It does, however, establish an upper bound on the condition number and, thus, shows that the space–time trade-off may be useful. A precise result is formulated in the following subsection.

2.3 Partial Recoverability

Theorem 2.8

Let \( \phi \in \Phi \), let \(m\ge 2\) be an integer, and let \(\widetilde{E}\subseteq I = [0,1] \) be a compact set. Assume that there exists \(\delta >0\) such that, for every \(0\le j<k\le m-1\) and every \(\xi \in \widetilde{E}\)

Let \(E=\displaystyle \left( \frac{2c}{m}(\tilde{E}+{{\mathbb {Z}}})\right) \cap [-c,c]\). Then for any \(f\in PW_c\), the function \(\widehat{f}\mathbf {1}_{ E}\) can be recovered from the samples

in a stable way. Moreover, we have

where

Proof

Recall from (2.15) that we need to estimate

The upper bound follows directly from Proposition 2.7 and (2.14):

Let us now prove the lower bound using (2.18). First, \(\Delta _m(\xi )\ge \delta ^{\frac{m(m-1)}{2}}\). It follows from Proposition 2.7 that, if \(\xi \in \widetilde{E}\) then

Taking \(\displaystyle \kappa =\frac{\kappa _\phi ^{2/m}-1}{2e m^{m^2}\ln \kappa _\phi } \delta ^{m(m-1)}\) in (2.18) gives the result. \(\square \)

Remark 2.9

The condition number implied by the above theorem is not the best possible one can obtain through this method. For instance, a better estimate for the \(\sigma _{\min }\) of a Vandermonde matrix may be used in place of (2.21).

However, the method will always lead to a deteriorating estimate of the condition number as m increases. This follows from the Beckermann-Townsend estimate (2.26) we discussed in the previous subsection.

Corollary 2.10

Assume that \(\phi \in \Phi \), \({\widehat{\phi }}\) is even and strictly decreasing on \({{\mathbb {R}}}_+\), and \(m\ge 2\) is an integer. Given \(\eta \in (0,\frac{1}{4})\), let \(\widetilde{E} = [-\frac{1}{2} + \eta , -\eta ]\cup [\eta , \frac{1}{2} -\eta ]\) and \(E=\displaystyle \left( \frac{2c}{m}(\tilde{E}+{{\mathbb {Z}}})\right) \cap [-c,c]\). Then there exists \(A>0\) such that, for any \(f\in PW_c\),

Proof

We look into the main condition of Theorem 2.8: there exists \(\delta >0\) such that, for every \(0\le j<k\le m-1\) and every \(\xi \in \widetilde{E}\)

For a general \(\phi \in \Phi \), the function \({\widehat{\phi }}\) is continuous and, therefore, \({\widehat{\phi }}_p\) is continuous, except possibly on \(\displaystyle c+2c{{\mathbb {Z}}}\) where a jump discontinuity occurs if \({\widehat{\phi }}(-c)\ne {\widehat{\phi }}(c)\). Under current assumptions, however, \({\widehat{\phi }}\) is even and, therefore \({\widehat{\phi }}_p\) is continuous everywhere.

For \(0\le \ell \le m-1\) and \(\xi \in I\), we have \(\displaystyle -\frac{1}{2m} \le \frac{\xi + \ell }{m}\le 1- \frac{1}{2m} \) and

Thus, the condition of Theorem 2.8 would be satisfied with \(\widetilde{E}=I\) if \(|{\widehat{\phi }}|\) were one-to-one on I, that is, either strictly decreasing or strictly increasing. However, \({\widehat{\phi }}\) is even and strictly decreasing on \({{\mathbb {R}}}_+\), so that \({\widehat{\phi }}_p\) is continuous, strictly decreasing on [0, c] and strictly increasing on \([-c,0]\). It follows that (2.30) may only fail in small intervals around the points \(\xi \in I\) where \(\displaystyle {\widehat{\phi }}_p\left( \frac{2c}{m}(\xi +j)\right) -{\widehat{\phi }}_p\left( \frac{2c}{m}(\xi + k)\right) = 0\) for some \(j,k\in {{\mathbb {Z}}}\). Such points must satisfy

Thus, we need \(\xi = \frac{1}{2}(m-j-\ell )\), i.e. \(\xi \in \{0,\pm \frac{1}{2} \}\). In view of the continuity of \({\widehat{\phi }}_p\), it follows that there exists \(\eta > 0\) such that (2.30) holds for \(\xi \in \widetilde{E} = [-\frac{1}{2} + \eta , -\eta ]\cup [\eta , \frac{1}{2} -\eta ]\). It remains to observe that with any given \(\eta \in (0,\frac{1}{4})\) inequality (2.30) will hold for \(\delta \) sufficiently small. \(\square \)
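The location of the blind spots can be observed numerically for the Gaussian kernel. The sketch below assumes the Pick-type form \(\frac{1-e^{-(x_j+x_k)}}{x_j+x_k}\) of the entries of \({\mathcal {B}}_m(\xi )\), with \(x_j=\sigma ^2\xi _j^2\) and \(\xi _j\) the 2c-periodized node \(\frac{2c}{m}(\xi +j)\); the helper name `min_eigenvalue` and the parameters \(m=3\), \(\sigma =3\), \(c=1/2\) are illustrative choices.

```python
import numpy as np

def min_eigenvalue(xi, m=3, sigma=3.0, c=0.5):
    """Smallest eigenvalue of the assumed closed form of B_m(xi) (Gaussian kernel)."""
    j = np.arange(m)
    nodes = (2.0 * c / m * (xi + j) + c) % (2.0 * c) - c   # wrap into [-c, c)
    x = sigma**2 * nodes**2
    s = x[:, None] + x[None, :]
    safe = np.where(s > 0, s, 1.0)
    B = np.where(s > 0, -np.expm1(-safe) / safe, 1.0)
    return float(np.linalg.eigvalsh(B)[0])

# At xi in {0, +-1/2} two nodes have the same absolute value, so two columns
# of B_m(xi) coincide (up to rounding) and the smallest eigenvalue collapses.
at_blind_spots = {xi: min_eigenvalue(xi) for xi in (-0.5, 0.0, 0.5)}
away_from_blind_spots = {xi: min_eigenvalue(xi) for xi in (-0.25, 0.25)}
print(at_blind_spots)
print(away_from_blind_spots)
```

Scanning \(\xi \) over a fine grid of \([-\frac{1}{2},\frac{1}{2}]\) shows how quickly the smallest eigenvalue recovers as \(\xi \) moves a distance \(\eta \) away from the three exceptional points.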

2.4 Explicit Quantitative Estimates for the Gaussian

To obtain explicit estimates, we need to establish a precise relation between \(\eta \) and \(\delta \) in the proof of Corollary 2.10. In other words, we need to estimate \(\min \limits _{\xi \in \widetilde{E}} \psi (\xi )\), where, as above, \(\widetilde{E} = [-\frac{1}{2} + \eta , -\eta ]\cup [\eta , \frac{1}{2} -\eta ]\), \(\eta \in (0, \frac{1}{4})\), and the function \(\psi \) is given by

Lemma 2.11

Let E and \(\widetilde{E}\) be as in Corollary 2.10. Assume that the kernel \(\phi \in \Phi \) is such that \({\widehat{\phi }}\) is differentiable on E and

Then

Proof

Observe that

With this, the assertion of the lemma follows immediately from the mean value theorem. \(\square \)

The above observation leads to the following explicit estimate for the Gaussian kernel.

Proposition 2.12

Let \({\widehat{\phi }}(\xi )=e^{-\sigma ^2\xi ^2}\), \(\sigma \not =0\), and \(m\ge 2\) be an integer. Given \(\eta \in (0,\frac{1}{4})\), let \(\widetilde{E} = [-\frac{1}{2} + \eta , -\eta ]\cup [\eta , \frac{1}{2} -\eta ]\) and \(E=\displaystyle \left( \frac{2c}{m}(\tilde{E}+{{\mathbb {Z}}})\right) \cap [-c,c]\). Then, for any \(f\in PW_c\), we have

where

Proof

Observe that Lemma 2.11 applies with R given by (2.32). It remains to apply Theorem 2.8 with \(\kappa _\phi = e^{-(\sigma c)^2}\) and \(\delta = {4cR\eta /m}\). We deduce that (2.31) holds with

Using \(\displaystyle \frac{1-e^{-t}}{t}\ge \frac{1}{t+1}\), we obtain the claimed bound. \(\square \)
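The elementary inequality used in the last step follows, for \(t>0\), from the convexity bound \(e^{t}\ge 1+t\); a short derivation is sketched here for completeness.

```latex
\[
e^{t}\ge 1+t
\;\Longrightarrow\;
e^{-t}\le \frac{1}{1+t}
\;\Longrightarrow\;
\frac{1-e^{-t}}{t}\ge \frac{1}{t}\left(1-\frac{1}{1+t}\right)=\frac{1}{t+1},
\qquad t>0 .
\]
```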

We remark that the estimate in the above proposition is quite pessimistic. Our numerical experiments showed that the true bound may be much better.

3 Remez-Turán Property and Fixing the Blind Spots

In Theorem 2.8, the main issue is that the lower bound is only in terms of \(\Vert \widehat{f}\mathbf {1}_{E}\Vert \) and not \(\Vert \widehat{f}\Vert \) so that stability is not obtained. In this section, we consider a certain class of subsets of \(PW_c\) for which Theorem 2.8 does lead to stable reconstruction.

3.1 Remez-Turán Property

Definition 3.1

Let \(V\subset PW_c\) and write \(\widehat{V}=\{\widehat{f}\,:\ f\in V\}\subset L^2([-c,c])\). We will say that \(\widehat{V}\) has the Remez-Turán property if, for every \(E\subset [-c,c]\) of positive Lebesgue measure, there exists \(C=C(E,V)\) such that, for every \(f\in V\),

When V is a finite dimensional subspace of \(PW_c\) such that \(\widehat{V}\) consists of analytic functions (restricted to I), then \(\widehat{V}\) has the Remez-Turán property since \(\Vert \widehat{f}\mathbf {1}_E\Vert _2\) is then a norm on V which, by finite dimensionality of V, is equivalent to \(\Vert \widehat{f}\mathbf {1}_{[-c,c]}\Vert _2\). However, the previous argument does not provide any quantitative estimate on the constant C(E, V). Let us start with two fundamental examples of spaces that have the Remez-Turán property, and for which quantitative estimates are known.

3.2 Fourier Polynomials

Let \(V_N\) be given by (1.11), so that \(\widehat{V}_N=\{P\mathbf {1}_{[-c,c]}, P\in {\mathbb {C}}_N[x]\}\) is the space of polynomials of degree at most N, restricted to I. The quantitative form of the Remez-Turán property for \(\widehat{V}_N\) is then known as the Remez Inequality [9]: for every polynomial of degree at most N,

3.3 Sparse Sinc Translates with Free Nodes

Let \(V_N\) be given by (1.10), so that \(\widehat{V}_N=\displaystyle \left\{ P\mathbf {1}_{[-c,c]}: P(\xi )=\sum _{n=1}^Nc_ne^{2i\pi \lambda _n\xi }\right\} \). Recall that \(\widehat{V}_N\) is not a linear subspace. The fact that \(\widehat{V}_N\) has the Remez-Turán property is a deep result of Nazarov [17]: for every exponential polynomial of order at most N, i.e. every P of the form \(P(\xi )=\sum _{n=1}^Nc_ne^{2i\pi \lambda _n\xi }\) one has

where \(\gamma \) is an absolute constant.

3.4 Prolate Spheroidal Wave Functions (PSWF)

The prolate spheroidal wave functions (PSWFs), denoted by \((\psi _{n,c}(\cdot ))_{n\ge 0}\), are defined as the bounded eigenfunctions of the Sturm-Liouville differential operator \({\mathcal {L}}_c\), defined on \(C^2([-1,1])\) by

They are also the eigenfunctions of the finite Fourier transform \({\mathcal {F}}_c\), as well as the ones of the operator \(\displaystyle {\mathcal {Q}}_c= \frac{c}{2\pi } {\mathcal {F}}^*_c {\mathcal {F}}_c ,\) which are defined on \(L^2([-1,1])\) by

They are normalized so that \(\Vert \psi _{n,c}\Vert _{L^2([-1,1])}=1\) and \(\psi _{n,c}(1)>0\). We call \((\chi _n(c))_{n\ge 0}\) the corresponding eigenvalues of \({\mathcal {L}}_c\), \(\mu _n(c)\) the eigenvalues of \(\mathcal {F}_c\)

and \(\lambda _n(c)\) the ones of \({\mathcal {Q}}_c\) which are arranged in decreasing order. They are related by

A well known property is then that \(\Vert \psi _{n,c}\Vert _{L^2({{\mathbb {R}}})}=\frac{1}{\sqrt{\lambda _n(c)}}\). Further, their Fourier transform is given by

The crucial commuting property of \({\mathcal {L}}_c\) and \({\mathcal {Q}}_c\) was first observed by Slepian and co-authors [21], whose name is closely associated with the properties of PSWFs, the spectrum of the operators \({\mathcal {L}}_c\) and \({\mathcal {Q}}_c\), and almost time- and band-limited functions. Among the basic properties of PSWFs, we cite their analytic extension to the whole real line and the fact that they form both an orthonormal basis of \(L^2([-1,1])\) and an orthogonal basis of \(PW_c\).
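The eigenvalues \(\lambda _n(c)\) can be approximated numerically. The sketch below assumes the standard sinc-kernel form of \({\mathcal {Q}}_c\) on \(L^2([-1,1])\), with kernel \(\frac{\sin c(x-y)}{\pi (x-y)}\); this explicit form is an assumption of the example, since the defining display is not reproduced above. It uses a Nyström discretization with Gauss-Legendre nodes, and `sinc_kernel_eigenvalues` is a hypothetical name.

```python
import numpy as np


def sinc_kernel_eigenvalues(c, n_nodes=80):
    """Approximate eigenvalues of the operator with kernel
    sin(c(x-y)) / (pi (x-y)) on L2[-1, 1], in decreasing order."""
    x, w = np.polynomial.legendre.leggauss(n_nodes)
    diff = x[:, None] - x[None, :]
    # np.sinc(t) = sin(pi t)/(pi t), so this equals sin(c d)/(pi d),
    # with the limiting value c/pi on the diagonal.
    kernel = (c / np.pi) * np.sinc(c * diff / np.pi)
    # Symmetrize with the square roots of the quadrature weights.
    sw = np.sqrt(w)
    sym = sw[:, None] * kernel * sw[None, :]
    return np.linalg.eigvalsh(sym)[::-1]


if __name__ == "__main__":
    lam = sinc_kernel_eigenvalues(c=4.0)
    print(lam[:8])            # leading eigenvalues, all below 1
    print(lam.sum(), 8 / np.pi)  # trace check: sum of eigenvalues vs 2c/pi
```

The leading values stay close to 1 for roughly \(2c/\pi \) indices and then decay very fast, which is the regime in which the estimates of this section are used.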

The prolate spheroidal wave functions admit a good representation in terms of the orthonormal basis of Legendre polynomials. In agreement with the standard practice, we will be denoting by \(P_k\) the classical Legendre polynomials, defined by the three-term recursion

with the initial conditions

These polynomials are orthogonal in \(L^2([-1,1])\) and are normalized so that

We will denote by \(P_{k,c}\) the normalized Legendre polynomial \(\displaystyle P_{k,c}(x)=\sqrt{\frac{2k+1}{2c}}P_k\left( \frac{x}{c}\right) \); the \(P_{k,c}\)’s then form an orthonormal basis of \(L^2([-c,c])\).

We start from the following identity relating Bessel functions of the first kind to the finite Fourier transform of the Legendre polynomials, see [6]: for every \(x\in {{\mathbb {R}}}\),

where \(j_k\) is the spherical Bessel function defined by \(j_k(x)=\displaystyle (-x)^k\left( \frac{1}{x}\frac{\text{ d }}{\text{ d }x}\right) ^k\frac{\sin x}{x}\). Note that \(j_k\) has the same parity as k and recall that, for \(x\ge 0\), \(j_k(x)=\sqrt{\frac{\pi }{2x}}J_{k+1/2}(x)\) where \(J_\alpha \) is the Bessel function of the first kind. In particular, from the well-known bound \(|J_{\alpha }(x)|\le \frac{|x|^{\alpha }}{2^{\alpha }\Gamma (\alpha +1)}\), valid for all \(x\in {{\mathbb {R}}}\), we deduce that

Using the bound \(\Gamma (x)\ge \sqrt{2\pi }x^{x-1/2}e^{-x}\) we get

We have the following lemma.

Lemma 3.2

Write \(\widehat{\psi _{n,c}}=\sum _{k\ge 0}\beta _k^n(c)P_{k,c}\). Then for every \(k,n\ge 0\)

This bound is an adaptation of techniques from [15] to improve the proof of the exponential decay from [22].

Proof

Using (3.39), we have

with (3.38). Recalling that \(\lambda _n(c)=\displaystyle \frac{c}{2\pi }|\mu _n(c)|^2\) and using Fubini, we get

with (3.40). But then, from (3.41) and Cauchy-Schwarz, we deduce that

As \(4\sqrt{2e}\le 10\), the result follows. \(\square \)

We will also need the following estimate.

Lemma 3.3

The eigenvalues (3.38) of \({\mathcal {Q}}_c\) satisfy

Proof

Precise pointwise estimates of the \(\lambda _n(c)\)’s have been obtained in [15, Section 4 & Appendix C] and have been further improved in [8] to

while we always have \(\lambda _n(c)<1\).

It follows that

The result follows. \(\square \)

We can now prove our Remez lemma for prolate spheroidal wave functions.

Theorem 3.4

(Remez’s Lemma for PSWF) Let N be an integer and

Then, for every \(\psi \in \widehat{V}_N\) and every \(E\subseteq [-c,c]\) of positive measure,

where

Proof

Let \(\psi =\sum _{n=0}^Nc_n\psi _{n,c}\) so that, by orthogonality and the fact that \(\Vert \psi _{n,c}\Vert =\lambda _n(c)^{-1/2}\),

On the other hand

Let K be an integer that will be fixed later and write

Note that \(F_K\) is a polynomial of degree K so that

by (3.34). On the other hand,

so that

by Cauchy-Schwarz. We now apply Lemma 3.2 to get

Using Lemmas 3.3 and 3.2 we can rewrite this in the form \(\Vert R_K\mathbf {1}_E\Vert \le \Lambda _N\Phi _K\Vert \psi \Vert \) with

Next

since \(E\subset [-c,c]\) implies \(\left( \frac{|E|}{8c}\right) ^{K+\frac{1}{2}}\le 1\). Therefore

It remains to choose K so that \(\displaystyle \Lambda _N\Phi _K\le \frac{1}{4}\left( \frac{|E|}{8c}\right) ^{K+\frac{1}{2}}\).

First, if \(N\le \max (ec,2)\), then we want

so that it is enough that \(\displaystyle \frac{e}{2K+5}\le \frac{c^3}{48^2(3+ec)}\) and \(\displaystyle \frac{e}{2K+5}\le \frac{|E|}{8c}\) so we take

On the other hand, if \(N\ge \max (ec,2)\), then we want

Taking \(K:=K(N)= \max \left( 20,N,\left\lceil \frac{8(N+1)}{|E|}\right\rceil \right) \), we get

which gives the desired estimate since \(2(N+1)>ec\) and \(K\ge N\). \(\square \)
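The choice of K in the second case of the proof is easy to tabulate. The helper below simply evaluates \(K(N)= \max \left( 20,N,\left\lceil \frac{8(N+1)}{|E|}\right\rceil \right) \) as stated above; the function name `remez_degree` is hypothetical and the sketch only covers the case \(N\ge \max (ec,2)\).

```python
import math


def remez_degree(N, measure_E):
    """K(N) = max(20, N, ceil(8 (N + 1) / |E|)), the truncation degree
    chosen in the proof for N >= max(ec, 2) (illustrative helper)."""
    return max(20, N, math.ceil(8 * (N + 1) / measure_E))


if __name__ == "__main__":
    for measure_E in (2.0, 1.0, 0.25):
        print(measure_E, [remez_degree(N, measure_E) for N in (2, 5, 10, 20)])
```

The table makes visible that K grows linearly in N and inversely in \(|E|\), so the Remez factor \(\left( \frac{|E|}{8c}\right) ^{K+\frac{1}{2}}\) deteriorates quickly as the set E shrinks.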

3.5 Sampling the Heat Flow

Equipped with the Remez-Turán Property, we are ready to close the blind spots in Theorem 2.8. We do it only in the case of heat flow as it should be clear how to obtain similar estimates in the case of other kernels \(\phi \in \Phi \).

Theorem 3.5

Let \({\widehat{\phi }}(\xi )=e^{-\sigma ^2\xi ^2}\), \(\sigma \not =0\), and \(m \ge 2\) be an integer. Let \(V=V_N\) be given by (1.9), (1.10), or (1.11). Then, for every \(f\in V\),

where

with \(\kappa _j\) positive constants that depend on c only.

Remark 3.6

For \(V=V_N\) given by (1.10), (1.11) and for \(V=V_N\) given by (1.9) when \(N\ge \max (2,ec)\), \(\kappa _0,\kappa _1\) do not depend on c.

Proof

To obtain this result, we take \(\eta =\displaystyle \frac{1}{8}\) in Proposition 2.12. First note that if \(\widetilde{E} = \displaystyle \left[ -\frac{3}{8} ,-\frac{1}{8}\right] \cup \left[ \frac{1}{8}, \frac{3}{8}\right] \) and \(E=\displaystyle \left( \frac{2c}{m}(\widetilde{E}+{{\mathbb {Z}}})\right) \cap [-c,c]\) then \(\displaystyle \frac{c}{|E|}\ge \frac{1}{8}\) (say). Then (2.31) tells us that

for any \(f\in PW_c\), where

Note that

so that

Finally \(A\ge \frac{c\gamma _0}{\bigl ((\sigma c)^2+m\bigr )}\exp \Bigl (-m^2\bigl (-\gamma _1(c)\ln \sigma +\gamma _2(c)\sigma ^2+\ln m\bigr )\Bigr )\) where \(\gamma _0,\gamma _1(c),\gamma _2(c)\) are constants depending on c only.

It remains to fix the blind spots \(\Vert \widehat{f}\mathbf {1}_{E}\Vert ^2\) with the help of a Remez type inequality. For \(V=V_N\) given by (1.10), (1.11) and \(f\in V_N\), we simply have \(\Vert \widehat{f}\mathbf {1}_{E}\Vert ^2\ge \gamma _3^{2N+1}\Vert f\Vert ^2\) where \(\gamma _3<1\) is a constant.

For \(V=V_N\) given by (1.9), we have \(\Vert \widehat{f}\mathbf {1}_{E}\Vert ^2\ge \gamma _3^{2K(N)}\Vert f\Vert ^2\), where K(N) is given by (3.44).

Adding the estimates for fixing the blind spot yields (3.47). \(\square \)

Remark 3.7

Theorem 3.5 immediately implies Theorem 1.6. We also note that if \(V=V_N\) is given by (1.9) or (1.11), the reconstruction can be done from measurements at a finite number of spatial locations. Indeed, our results imply that in this case one can find the coefficients of f in its decomposition in a basis of V via simple least squares.
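The least-squares step can be sketched as follows for the heat flow and the Fourier polynomial space, where \(\widehat{f}=P\mathbf {1}_{[-c,c]}\) with P of degree at most N. Everything specific in the example is an assumption made for illustration: the expansion of \(\widehat{f}\) in normalized Legendre polynomials, the sample locations and times, and the hypothetical function names. The samples are modelled by \(u(t,x)=\frac{1}{2\pi }\int _{-c}^{c}e^{-t\sigma ^2\xi ^2}\widehat{f}(\xi )e^{ix\xi }\,\mathrm {d}\xi \), evaluated with Gauss-Legendre quadrature.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def design_matrix(xs, ts, N, c, sigma, quad_order=64):
    """Rows: samples u(t, x) of the heat flow of each basis function whose
    Fourier transform is a normalized Legendre polynomial on [-c, c]."""
    nodes, weights = leg.leggauss(quad_order)
    xi, w = c * nodes, c * weights
    norm = np.sqrt((2 * np.arange(N + 1) + 1) / (2 * c))
    basis = leg.legvander(nodes, N) * norm          # shape (quad_order, N + 1)
    rows = []
    for t in ts:
        damp = np.exp(-t * sigma**2 * xi**2)        # Gaussian multiplier at time t
        for x in xs:
            kern = w * damp * np.exp(1j * x * xi) / (2 * np.pi)
            rows.append(kern @ basis)
    return np.array(rows)


def recover_coefficients(samples, xs, ts, N, c, sigma):
    """Least-squares estimate of the Legendre coefficients of f-hat."""
    A = design_matrix(xs, ts, N, c, sigma)
    coeffs, *_ = np.linalg.lstsq(A, samples, rcond=None)
    return coeffs


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    xs, ts = np.linspace(-6.0, 6.0, 8), [0.0, 0.5, 1.0]
    true = rng.standard_normal(4)
    samples = design_matrix(xs, ts, 3, 1.0, 1.0) @ true
    print(np.abs(recover_coefficients(samples, xs, ts, 3, 1.0, 1.0) - true).max())
```

In this noiseless toy setting the coefficients are recovered up to rounding. With noisy data, the conditioning of the design matrix governs the error, which is where the quantitative bounds of Theorem 3.5 enter.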

4 Sensor Density, Maximal Spatial Gaps and Condition Numbers

In this section, we discuss irregular spatio-temporal sampling. We establish that stable reconstruction from dynamical samples may occur when the set \(\Lambda \) has an arbitrarily small density. More importantly, however, we show that the density cannot be arbitrarily small for fixed frame bounds in (1.4). In fact, we provide an explicit estimate for the maximal spatial gap in terms of the condition number \(\frac{B}{A}\).

Example 4.1

In this example, we take \(c=1/2\) to simplify the discussion. Assume that \(\phi \in \Phi \) is such that \(\widehat{\phi }\) is real, even, and decreasing on [0, 1/2]. Let \(\Lambda _0=m {{\mathbb {Z}}}\), with \(m \in \mathbb {N}\) odd, and \(\Lambda _k=mn{{\mathbb {Z}}}+k\), where n is any fixed odd number and \(k=1,\dots ,\frac{m-1}{2}\). Then \(\Lambda =\bigcup \limits _{k=0}^{\frac{m-1}{2}} \Lambda _k\) has density \(D^{-}(\Lambda ) \le 1/n+1/m\) and is a stable set of sampling, i.e., (1.7) is satisfied.
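The density claim in the example can be checked by counting points in one period. The set \(\Lambda \) is mn-periodic, so its density equals the number of its points in \([0,mn)\) divided by mn. The sketch below does this count with exact rational arithmetic; the function names are hypothetical.

```python
from fractions import Fraction


def sampling_set_one_period(m, n):
    """Points of Lambda = m Z ∪ (mn Z + k), k = 1..(m-1)/2, inside [0, mn)."""
    period = m * n
    points = set(range(0, period, m))            # Lambda_0 = m Z
    points.update(range(1, (m - 1) // 2 + 1))    # one point of each Lambda_k
    return sorted(points), period


def density(m, n):
    """Density of the mn-periodic set Lambda as an exact fraction."""
    points, period = sampling_set_one_period(m, n)
    return Fraction(len(points), period)


if __name__ == "__main__":
    for m, n in ((5, 7), (11, 101), (101, 1001)):
        d = density(m, n)
        print(m, n, d, d <= Fraction(1, n) + Fraction(1, m))
```

The count gives density \(\frac{1}{m}+\frac{m-1}{2mn}\), which is indeed at most \(\frac{1}{n}+\frac{1}{m}\) and can be made arbitrarily small by taking m and n large.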

The claim in the last example follows by stringing together several theorems on dynamical sampling. Firstly, [4, Theorems 2.4 and 2.5] yield that any \(f \in \ell ^2({{\mathbb {Z}}})\) can be recovered from the space–time samples \(\{\phi ^j*f(x_k): j=0,\dots ,m-1, \; x_k \in \Lambda \}\) and that the problem of sampling and reconstruction in \(PW_c\) on subsets of \({{\mathbb {Z}}}\) is equivalent to the sampling and reconstruction problem of sequences in \(\ell ^2({{\mathbb {Z}}})\). Secondly, combining [5, Theorems 5.4 and 5.5] shows that for \(\phi \in \Phi \), \(f\in PW_c\) can be stably reconstructed from \(\{\phi ^j*f(x_k): j=0,\dots ,m-1, \; x_k \in \Lambda \}\) if and only if (1.7) is satisfied.

Example 4.1 thus shows that (1.7) can hold with sets having arbitrarily small densities. The goal of this section is to show that the maximal gap in such sets is controlled by the condition number B/A.

We first establish the following lemma, which parallels [13, Proposition 4.4].

Lemma 4.2

Let \(\phi \in \Phi \) be such that \({\widehat{\phi }}\) is \(\mathcal {C}^1\)-smooth on \(I = [-c,c]\). Then there exists a finite constant \(C_{\phi , L}\) such that

On the other hand, setting \(c_{\phi ,L}= \frac{2(\kappa _\phi ^{2L}-1)}{\pi ^2\ln \kappa _\phi }>0\), for \(|x|\le \pi /2c\), we have

Proof

Firstly, writing the Fourier inversion formula shows that

from which it follows that

due to \(|{\widehat{\phi }}|\le 1\).

Secondly, note that, due to its smoothness, \({\widehat{\phi }}^\prime \) is bounded on \([-c,c]\) by \(E_\phi :=\sup _{\xi \in [-c,c]}|{\widehat{\phi }}^\prime (\xi )|<+\infty \). Then, integrating (4.50) by parts leads to

and, as \(\kappa _\phi \le \widehat{\phi }\le 1\) on I, we deduce that

Consequently,

and the estimate (4.48) follows in view of (4.51).

On the other hand (4.50) implies that

But, for \(|\xi |\le c\), we have \({\widehat{\phi }}(\xi )^t\ge \kappa _\phi ^t\). Further, if we also have \(|x|\le \pi /2c\), then \(|x\xi |\le \pi /2\) and thus \(\cos x\xi \ge 0\). Therefore,

since \({\text {sinc}}(cx)\) is decreasing on \([0,\pi /2c]\) and \(\displaystyle {\text {sinc}}\left( c\frac{\pi }{2c}\right) =\frac{2}{\pi }\). It follows that

and we get the desired result. \(\square \)
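The lower bound of the lemma can be tested numerically for the Gaussian kernel. The sketch below assumes the representation \(({\text {sinc}}(c\cdot )*\phi _t)(x)=\frac{1}{c}\int _0^c{\widehat{\phi }}(\xi )^t\cos (x\xi )\,\mathrm {d}\xi \), which follows from Fourier inversion with the normalization of the Introduction, \(\widehat{{\text {sinc}}(c\cdot )}=\frac{\pi }{c}\mathbf {1}_{[-c,c]}\), and an even \({\widehat{\phi }}\). The function names are hypothetical, and \({\widehat{\phi }}(\xi )=e^{-\sigma ^2\xi ^2}\) is used throughout.

```python
import numpy as np


def flow_of_sinc(x, t, c, sigma, quad_order=64):
    """(sinc(c.) * phi_t)(x) = (1/c) int_0^c exp(-t sigma^2 xi^2) cos(x xi) d xi."""
    nodes, weights = np.polynomial.legendre.leggauss(quad_order)
    xi = 0.5 * c * (nodes + 1.0)       # Gauss nodes mapped to [0, c]
    w = 0.5 * c * weights
    return np.sum(w * np.exp(-t * sigma**2 * xi**2) * np.cos(x * xi)) / c


def time_energy(x, L, c, sigma, quad_order=64):
    """int_0^L |(sinc(c.) * phi_t)(x)|^2 dt by Gauss-Legendre quadrature in t."""
    nodes, weights = np.polynomial.legendre.leggauss(quad_order)
    t = 0.5 * L * (nodes + 1.0)
    w = 0.5 * L * weights
    vals = np.array([flow_of_sinc(x, ti, c, sigma) for ti in t])
    return np.sum(w * vals**2)


def lower_constant(L, c, sigma):
    """c_{phi,L} = 2 (kappa^{2L} - 1) / (pi^2 ln kappa), kappa = exp(-(sigma c)^2)."""
    kappa = np.exp(-(sigma * c) ** 2)
    return 2.0 * (kappa ** (2 * L) - 1.0) / (np.pi**2 * np.log(kappa))


if __name__ == "__main__":
    c, sigma, L = 1.0, 0.5, 2.0
    print("c_phi_L =", lower_constant(L, c, sigma))
    for x in np.linspace(0.0, np.pi / (2 * c), 5):
        print(x, time_energy(x, L, c, sigma))
```

For these parameters the computed energies stay above \(c_{\phi ,L}\) on the whole range \(|x|\le \pi /2c\), and the gap between the two indicates how much room the lemma leaves.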

Remark 4.3

If \({\widehat{\phi }}(\xi )=e^{-\sigma ^2\xi ^2}\), \(\sigma \not =0\), then \(\kappa _\phi = e^{-(\sigma c)^2}\) and we may take \(E_\phi = \sqrt{\frac{2}{e}}|\sigma |\). Therefore, the constants \(c_{\phi ,L}\) and \(C_{\phi ,L}\) in the above lemma can be taken as

For the estimate of \(C_{\phi ,L}\) we have used that

with Hölder.

Theorem 4.4

Let \(\phi \in \Phi \) and assume that \({\widehat{\phi }}\) is \(\mathcal {C}^1\)-smooth on \([-c,c]\). Assume that \(\Lambda \subseteq {\mathbb {R}}\) is a stable sampling set for Problem 1 with frame bounds A, B, i.e., that (1.4) holds.

Let \(c_{\phi ,L}\) and \(C_{\phi ,L}\) be the constants from Lemma 4.2. Then for \(R\ge \displaystyle \max \left( \frac{\pi }{c},\frac{8c}{\pi }\frac{B}{A}\frac{C_{\phi ,L}}{c_{\phi ,L}}\right) \) and every \(a\in {\mathbb {R}}\), we have \([a-R,a+R]\cap \Lambda \ne \emptyset \). Further, we have \(D^-(\Lambda )\ge \displaystyle \min \left( \frac{c}{2\pi }, \frac{\pi }{16c}\frac{A}{B}\frac{c_{\phi ,L}}{C_{\phi ,L}}\right) \) and \(\displaystyle D^+(\Lambda )\le 4\frac{B}{c_{\phi ,L}}\).

Proof

Denoting \(I_a = [a-\pi /4c,a+\pi /4c]\), \(a\in {{\mathbb {R}}}\), let us bound the covering number

We use (4.49), i.e., the fact that \(\displaystyle \int _0^L|({\text {sinc}}(c\cdot )*\phi _t)(x)|^2\,\mathrm {d}t\ge c_{\phi ,L}\) for \(|x|\le \pi /2c\), and our first observation to obtain

where we applied (1.4) to \(f(t)={\text {sinc}}(c(t - a))\), \(a\in {\mathbb {R}}\). As \(|\widehat{f}(\xi )|=\displaystyle \frac{\pi }{c}\mathbf {1}_{[-c,c]}(\xi )\), Parseval’s relation gives \(\Vert f\Vert ^2 =\frac{\pi }{c}\), hence

As a first consequence, this implies that \(D^+(\Lambda )\le 4\frac{B}{c_{\phi ,L}}\).

Now we assume that for some \(a_0\in {\mathbb {R}}\), and some \(R\ge \displaystyle \frac{\pi }{c}\), \(\Lambda \cap [a_0-R,a_0+R]=\emptyset \). As the Paley-Wiener space is invariant under translation, if (1.4) holds for \(\Lambda \), it also holds for its translates, so that we may assume that \(a_0=0\).

From Lemma 4.2, there exists \(C_{\phi ,L}\) such that \(\displaystyle \int _{0}^{L}|({\text {sinc}}(c\cdot )*\phi _t)(x)|^2\mathrm {d}t\le C_{\phi ,L}/(1+x^2)\). Therefore, we have the following estimates

since we assumed that \(R\ge \pi /c\). It follows that \(R\le \displaystyle \frac{8c}{\pi }\frac{B}{A}\frac{C_{\phi ,L}}{c_{\phi ,L}}\). Finally, note that this implies that \(D^-(\Lambda )\ge \frac{1}{2R}\). \(\square \)
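The quantities in Theorem 4.4 are simple to evaluate once the frame bounds and the constants of Lemma 4.2 are known. The helper functions below are hypothetical and only transcribe the formulas of the statement; the numerical inputs in the usage example are made up for illustration.

```python
import math


def max_gap_radius(c, A, B, C_upper, c_lower):
    """Radius R of Theorem 4.4: every interval [a - R, a + R] meets Lambda."""
    return max(math.pi / c, (8.0 * c / math.pi) * (B / A) * (C_upper / c_lower))


def lower_density_bound(c, A, B, C_upper, c_lower):
    """Lower bound on D^-(Lambda); it equals 1 / (2 R) for the R above."""
    return min(c / (2.0 * math.pi),
               (math.pi / (16.0 * c)) * (A / B) * (c_lower / C_upper))


def upper_density_bound(B, c_lower):
    """Upper bound 4 B / c_{phi,L} on D^+(Lambda)."""
    return 4.0 * B / c_lower


if __name__ == "__main__":
    # Illustrative values only: condition number B/A = 10, C/c = 10.
    R = max_gap_radius(c=1.0, A=1.0, B=10.0, C_upper=5.0, c_lower=0.5)
    print(R, lower_density_bound(1.0, 1.0, 10.0, 5.0, 0.5), 1.0 / (2.0 * R))
```

The gap radius scales linearly with the condition number \(\frac{B}{A}\) once the second term of the maximum dominates, which is the quantitative content of the theorem.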

Remark 4.5

Computing the explicit estimate for \(\frac{C_{\phi ,L}}{c_{\phi ,L}}\), we observe that the maximal allowed gap in spatial measurements grows with L, which is to be expected. For the Gaussian, we may take the constant \(\frac{C_{\phi ,L}}{c_{\phi ,L}}\) to be \(O(L^2)\) (see (4.52)). The above result also shows that, for kernels \(\phi \) with \(\mathcal {C}^1\)-smooth \({\widehat{\phi }}\), stable sampling sets must have positive lower density.

Remark 4.6

References

Aldroubi, A., Cabrelli, C., Çakmak, A.F., Molter, U., Petrosyan, A.: Iterative actions of normal operators. J. Funct. Anal. 272(3), 1121–1146 (2017)

Aldroubi, A., Cabrelli, C., Molter, U., Tang, S.: Dynamical sampling. Appl. Comput. Harmon. Anal. 42(3), 378–401 (2017). https://doi.org/10.1016/j.acha.2015.08.014

Aldroubi, A., Davis, J., Krishtal, I.: Dynamical sampling: time-space trade-off. Appl. Comput. Harmon. Anal. 34, 495–503 (2013)

Aldroubi, A., Davis, J., Krishtal, I.: Exact reconstruction of signals in evolutionary systems via spatiotemporal trade-off. J. Fourier Anal. Appl. 21(1), 11–31 (2015)

Aldroubi, A., Huang, L.X., Petrosyan, A.: Frames induced by the action of continuous powers of an operator. J. Math. Anal. Appl. 478, 1059–1084 (2019)

Andrews, G.E., Askey, R., Roy, R.: Special Functions. Cambridge University Press, Cambridge (2000)

Beckermann, B., Townsend, A.: On the singular values of matrices with displacement structure. SIAM J. Matrix Anal. Appl. 38, 1227–1248 (2017)

Bonami, A., Jaming, P., Karoui, A.: Non-asymptotic behaviour of the spectrum of the sinc-kernel operator and related applications. arXiv:1804.01257

Borwein, P., Erdélyi, T.: Polynomials and Polynomial Inequalities. Springer, New York (1995)

Fasino, D., Olshevsky, V.: How bad are symmetric Pick matrices? In: Structured Matrices in Mathematics, Computer Science, and Engineering, I (Boulder, CO, 1999). Contemp. Math., vol. 280, pp. 301–311. Amer. Math. Soc., Providence, RI (2001)

Fornasier, M., Rauhut, H.: Continuous frames, function spaces, and the discretization problem. J. Fourier Anal. Appl. 11(3), 245–287 (2005)

Gautschi, W.: On inverses of Vandermonde and confluent Vandermonde matrices. Numer. Math. 4, 117–123 (1962)

Gröchenig, K., Romero, J.L., Unnikrishnan, J., Vetterli, M.: On minimal trajectories for mobile sampling of bandlimited fields. Appl. Comput. Harmon. Anal. 39(3), 487–510 (2015)

Güngör, A.D.: Erratum to “An upper bound for the condition number of a matrix in spectral norm” [J. Comput. Appl. Math. 143 (2002) 141–144]. J. Comput. Appl. Math. 234, 316 (2010)

Jaming, Ph, Karoui, A., Spektor, S.: The approximation of almost time and band limited functions by their expansion in some orthogonal polynomials bases. J. Approx. Theory 212, 41–65 (2016)

Lu, Y.M., Vetterli, M.: Spatial super-resolution of a diffusion field by temporal oversampling in sensor networks. In: IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE (2009)

Nazarov, F.: Local estimates for exponential polynomials and their applications to inequalities of the uncertainty principle type. Algebra i Analiz. 5, 3–66 (1993)

Papoulis, A.: Generalized sampling expansion. IEEE Trans. Circuit. Syst. CAS 24(11), 652–654 (1977)

Piazza, G., Politi, T.: An upper bound for the condition number of a matrix in spectral norm. J. Comput. Appl. Math. 143(1), 141–144 (2002)

Ranieri, J., Chebira, A., Lu, Y.M., Vetterli, M.: Sampling and reconstructing diffusion fields with localized sources. In: 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 4016–4019 (2011)

Slepian, D., Pollak, H.O.: Prolate spheroidal wave functions, Fourier analysis and uncertainty I. Bell Syst. Tech. J. 43, 3009–3058 (1964)

Xiao, H., Rokhlin, V., Yarvin, N.: Prolate spheroidal wave functions, quadrature and interpolation. Inverse Probl. 17, 805–838 (2001)

Yu, Y.-S., Gu, D.-H.: A note on a lower bound for the smallest singular value. Linear Algebra Appl. 253, 25–38 (1997)

Acknowledgements

K. G. was supported in part by the project P31887-N32 of the Austrian Science Fund (FWF), and J. L. R. gratefully acknowledges support from the Austrian Science Fund (FWF): Y 1199 and P 29462. The authors are also grateful for the hospitality of various conferences, where we were able to work together on this project. We thank the hosts and organizers of all those events, in particular: ICERM, University of Bordeaux, and Vanderbilt University. Finally, it gives us great pleasure to dedicate this paper to Guido Weiss on the occasion of his \(90^{th}\) birthday. To a dear friend who taught so much to so many: Merry Guidmas!

Funding

Open Access funding provided by University of Vienna

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aldroubi, A., Gröchenig, K., Huang, L. et al. Sampling the Flow of a Bandlimited Function. J Geom Anal 31, 9241–9275 (2021). https://doi.org/10.1007/s12220-021-00617-0

DOI: https://doi.org/10.1007/s12220-021-00617-0