Abstract

Noncredible cognitive performance among chronic pain patients is garnering increasing clinical attention. The Word Memory Test (WMT), a well-established stand-alone validity indicator, was recently integrated with an eye tracker, and its utility was assessed using healthy simulators. The current study expands on this earlier work by assessing the utility of the eye-tracker integrated WMT for detecting noncredible cognitive performance in the context of chronic pain. Chronic pain outpatients were randomly assigned to either a simulation condition (i.e., patients simulating cognitive impairment; n = 22) or an honest control condition (i.e., patients performing to the best of their ability; n = 23). They then completed the WMT’s immediate recognition (IR) subtest while their eye movements were recorded. Simulators gazed less at relevant stimuli and more at irrelevant stimuli than controls. Sensitivity levels tended to be low to moderate when maintaining specificities ≥ 90%, as is customary in the field. While a previously developed scale that integrates eye movement measures using logistic regression did not adequately differentiate the groups, conjunctive rules (i.e., the participant was required to fail both the WMT's classification scheme and the eye movement measure with the strongest discriminative capacity) were associated with higher specificities than the WMT’s conventional classification scheme. Overall, the eye-tracker integrated WMT shows initial clinical utility for detecting noncredible cognitive performance. The decreasing cost and increasing usability of eye trackers will hopefully encourage further research on their utility for detecting noncredible cognitive performance and the integration of this novel technology with other stand-alone validity indicators.

Introduction

Chronic pain is experienced by a significant percentage of the population (Turk & Patel, 2022; Yong et al., 2022). It reduces quality of life and is associated with emotional distress, reduced job productivity, and significant healthcare costs (Cohen et al., 2021). Chronic pain is also associated with cognitive impairment, including slower processing speed and deficits in attention, memory, and executive functioning (Khera & Rangasamy, 2021). This impairment burdens patients and further decreases their functioning (Nahin & DeKosky, 2020). Consequently, increasing efforts have been devoted to determining the extent of the cognitive impairment of chronic pain patients (Beckmann & Mano, 2021; Nahin & DeKosky, 2020) and developing treatments to remediate it (Baker et al., 2018; Jacobsen et al., 2020).

Feigning cognitive impairment is a growing concern when assessing chronic pain patients (Tuck et al., 2019). Up to half of the patients evaluated in a medicolegal context and 7–12% of patients tested outside litigation/compensation settings feign such impairment (Suhr & Bryant, 2021). Such feigning is a significant source of bias in cognitive testing, alongside other sources of noncredible cognitive performance (Cottingham, 2021; Suhr & Lee, 2023). The latter include, among others, disengagement from the evaluation process and the impact of psychiatric disorders (e.g., factitious disorder; Schroeder et al., 2022). Notably, clinical judgment is limited in detecting noncredible cognitive performance (Dandachi-FitzGerald & Martin, 2022; Guilmette, 2013). Consequently, objective validity indicators have been developed, and their incorporation in cognitive evaluations is currently considered a standard of practice (Bush et al., 2005; Chafetz et al., 2015; Sweet et al., 2021). Stand-alone validity indicators, also termed performance validity tests (PVTs), were explicitly designed to detect noncredible cognitive performance. They are used as part of a holistic approach that also considers embedded validity indicators and other indications of noncredible cognitive performance (Larrabee, 2022).

Eye movements reveal cognitive processes by indicating which information the examinee is attending to while engaging with a task (Duchowski, 2017b; Graham et al., 2022; Pouget, 2019). These measures have attracted interest in recent years as potential validity indicators (e.g., Ales et al., 2023; Barry & Ettenhofer, 2016; Kanser et al., 2020; Mahoney et al., 2018; Patrick et al., 2021; Rizzo et al., 2021), driven by research indicating that eye movements can be used to detect other types of deception (Proudfoot et al., 2016; Seymour et al., 2013; Walczyk et al., 2012). Capitalizing on the computerized interface of the Word Memory Test (WMT), a well-established forced-choice recognition-memory-based PVT (Armistead-Jehle et al., 2021; Schroeder & Martin, 2021, pp. 40–43), Tomer et al. (2020) integrated it with an eye tracker. The WMT is widely used in clinical settings (Martin et al., 2015; Schroeder et al., 2016; Young et al., 2016). Importantly, studies of various neuropsychiatric disorders, including chronic pain (Greve et al., 2013), attest to its satisfactory classification accuracy (see reviews: Armistead-Jehle et al., 2021; Stevens & Licha, 2019). Tomer et al. (2020) demonstrated the feasibility of integrating the WMT with an eye tracker and the tentative utility of eye movement measures as validity indicators. More specifically, when performing the WMT’s immediate recognition (IR) subtest, simulators (i.e., healthy participants feigning cognitive impairment) gazed less at task-relevant stimuli, spent more time gazing at irrelevant stimuli, and had a lower saccade rate than healthy honest controls. However, Tomer et al. (2020) did not include clinical patients, a notable limitation considering that such patients may exhibit genuine cognitive impairment, and their poor performance may consequently be erroneously interpreted as noncredible (Rogers, 2018, p. 11).

The current study aimed to further assess the effectiveness of eye movement measures as validity indicators in forced-choice recognition-memory-based PVTs. More specifically, chronic pain patients simulating cognitive impairment while performing the WMT were compared to those who completed the PVT to the best of their ability. Based on Tomer et al. (2020), we hypothesized that (a) simulators will gaze less at the targets (i.e., correct/foil words) than controls; (b) simulators will gaze more at screen areas containing task instructions than controls; (c) simulators will have a lower saccade rate than controls (see the Discussion section for the theoretical underpinning of this hypothesis); and (d) the WMT’s eye behavior scale (WEBS), which was derived by combining the contributions of the eye movement measures using logistic regression (Tomer et al., 2020), will significantly differentiate the groups. We expected the eye movement measures to maintain clinical utility (i.e., cutoffs maintaining specificities ≥ 90% will be associated with at least moderate sensitivities; see elaboration in the Data Analysis subsection). However, we did expect at least a minor decrease in discriminative capacities compared to our earlier study, since Tomer et al. (2020) included healthy controls, while chronic pain patients served as controls in the current study. More specifically, since chronic pain is associated with cognitive impairment (Khera & Rangasamy, 2021), studies in which these patients serve as controls are expected to show smaller group differences than simulation studies in which participants are healthy.
Finally, we tentatively hypothesized that integrating eye movement data with the WMT’s conventional classification scheme would enable better classification of participants than using the WMT’s classification scheme as the sole validity indicator (in line with the increased use of multivariate models of performance validity; Davis, 2021; Erdodi, 2023).

Method

Participants

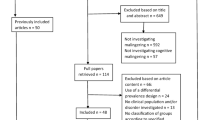

Participants were recruited from the pain outpatient unit of Loewenstein Hospital (N = 63). Inclusion criteria were as follows: (a) adult age (18 to 65 years old); (b) chronic pain, operationalized as pain persisting for over three months (see the definition by the International Association for the Study of Pain, IASP; Treede et al., 2019); and (c) proficiency in Hebrew. Exclusion criteria were as follows: (a) a past or present severe neuropsychiatric diagnosis (e.g., cerebrovascular accident, CVA) according to medical records or self-report; (b) visual impairment (e.g., near-sightedness [myopia], far-sightedness [hyperopia], or nystagmus) that interferes with the ability to calibrate the eye tracker; and (c) involvement in litigation or other legal proceedings at the time of the study.

Eight participants did not complete the experiment, due to difficulty calibrating the eye tracker (n = 2), self-reported pain/fatigue (n = 3), or technical difficulties (n = 3). The data of ten additional participants were discarded due to poor eye-tracking data quality (n = 7), difficulty understanding instructions (n = 2), or insufficient motivation to follow the experimental instructions (n = 1). The last criterion was deemed necessary since even healthy university students may exert suboptimal effort when cognitive tests are administered for research purposes (An et al., 2012, 2017). All participants therefore completed a debriefing survey, which included an item inquiring about their motivation to follow the experimental instructions (1–7 Likert-type scale; higher scores indicate stronger motivation). An a priori decision rule was then used to identify poorly motivated participants (motivation item < 4, as in Berger et al., 2021). The final sample included 45 participants (age, M = 47.6, SD = 12.9, range = 21–65; education, M = 13.3, SD = 2.2, range = 8–19). Table 1 presents the demographic and clinical data of the participants.

The Loewenstein Hospital Institutional Review Board (IRB) approved the study, and all participants signed a written informed consent form before entering the study.

Tools

Word Memory Test (WMT): The WMT is a stand-alone recognition-memory-based computerized PVT (Allen & Green, 1999; Green, 2003; Green & Allen, 1999; Sherman et al., 2022, pp. 1002–1018). The WMT has six subtests, including its two primary performance validity subtests, immediate and delayed recognition (IR and DR, respectively), which were used in the current study. In the IR subtest, the participant is twice presented with a list of semantically associated noun pairs (e.g., “Lion–Zoo”) and then identifies the previously shown nouns in a series of forced-choice trials (each target paired with a foil). The DR subtest, administered 30 min after the IR subtest, is identical to the IR subtest except that the foils are changed. The current study utilized the Hebrew version of the WMT (Hegedish & Hoofien, 2013). Performance validity was determined using the WMT’s classification scheme (Green, 2005, p. 9), which considers the examinee’s IR-score (% correct responses in the IR subtest), DR-score (% correct responses in the DR subtest), and the consistency of responses (CNS). More specifically, participants with an accuracy score ≤ 82.5% on any of the accuracy-based validity indicators (IR-score, DR-score, or CNS) were classified as failing the WMT.
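The pass/fail rule stated above can be expressed as a short function. This is a minimal sketch, assuming each score is already expressed as a percentage. The names `WMTScores` and `fails_wmt` are hypothetical, and this is not the test publisher's scoring software, which may apply interpretive steps not described here.

```python
from dataclasses import dataclass

# Cutoff stated in the text: a score at or below 82.5% on any of the
# accuracy-based indicators counts as failing the WMT.
WMT_FAIL_CUTOFF = 82.5


@dataclass
class WMTScores:
    """Hypothetical container for one examinee's WMT accuracy scores (in %)."""
    ir: float   # immediate recognition (IR) score
    dr: float   # delayed recognition (DR) score
    cns: float  # consistency of responses (CNS)


def fails_wmt(scores: WMTScores, cutoff: float = WMT_FAIL_CUTOFF) -> bool:
    """Return True if any accuracy-based indicator is at or below the cutoff."""
    return any(value <= cutoff for value in (scores.ir, scores.dr, scores.cns))


if __name__ == "__main__":
    print(fails_wmt(WMTScores(ir=97.5, dr=95.0, cns=92.5)))  # False
    print(fails_wmt(WMTScores(ir=97.5, dr=82.5, cns=90.0)))  # True (DR at the cutoff)
```

Because the rule is an "any" over three indicators, a single borderline score is enough to fail the scheme. This helps explain why the scheme can be highly sensitive but less specific.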

Self-report Questionnaires: (a) Brief Pain Inventory–Short Form (BPI-SF): A commonly used pain scale (Cleeland, 2009; Jumbo et al., 2021). It includes a screening question, a body chart indicating painful regions, four items assessing pain severity, and seven items assessing pain interference with daily functioning (range: 0 to 10; higher scores indicate worse pain or greater interference). In the current study, the mean of the four pain severity items served as the total pain severity score (Jumbo et al., 2021). (b) Patient Health Questionnaire (PHQ-9): A 9-item questionnaire of depressive symptoms (Aagaard et al., 2023; Kroenke et al., 2001). (c) Generalized Anxiety Disorder-7 (GAD-7): A 7-item questionnaire assessing participants’ anxiety levels in the preceding 2 weeks (Plummer et al., 2016; Spitzer et al., 2006). In both the PHQ-9 and GAD-7, responses range from 0 (“not at all”) to 3 (“nearly every day”). (d) Debriefing survey: A questionnaire assessing the participants’ motivation to follow the experimental instructions (see also the Participants subsection), additional post-experimental inquiries that were not the focus of the current study (e.g., an open question regarding the use of feigning strategies by simulators), and a field in which willing participants could provide contact information to receive a brief overview of the study after its completion.

Eye-Tracking Apparatus and Eye Movement Measures: An EyeLink Portable Duo eye-tracking system (SR Research Ltd., Mississauga, Canada) recorded binocular eye movements at a sampling rate of 250 Hz. Stimuli were presented on an Alienware OptX AW2310 display screen (1920 × 1080 pixels; 120 Hz refresh rate) located approximately 65 cm from the participant. Eye movements were extracted using EyeLink Data Viewer (SR Research Ltd., Mississauga, Canada) and analyzed using an SR Research event detection algorithm (SR Research, 2010, p. 91). The analyzed measures were as follows:

(a) Spatial eye movement measures: Dwell rate (i.e., average number of entries per second) in the areas of interest (AOIs) in which the correct/foil words or the instructions were presented (“response AOI dwell rate” and “instructions AOI dwell rate,” respectively). The partition of the display screen into AOIs is shown in Tomer et al. (2020, p. 52).

(b) General eye movement measure (“saccade rate”): Average number of saccades per second. A saccade is a rapid, ballistic movement in which the eyes jump between fixations, which are periods in which the eyes remain fairly still and attended information is processed (Duchowski, 2017a, pp. 40–43).

(c) WMT's eye behavior scale (WEBS): A regression formula combining eye movement measures into a continuous scale. The scale’s values estimate the probability of classifying the participant as performing in a noncredible manner (range: 0–1):

$$P(\mathrm{noncredible\;performance}) = \frac{1}{1 + e^{-(-4.486 \times \mathrm{Response\;AOI\;dwell\;rate} + 3.218 \times \mathrm{Instructions\;AOI\;dwell\;rate} - 0.981 \times \mathrm{Saccade\;rate} + 12.28)}}$$

The scale was created as part of our previous study, in which it was named the combined eye movements scale (Tomer et al., 2020).

(d) Exploratory eye movement measure: Average number of times per second the participants’ gaze crossed the screen’s midline (“side crossings”). The measure reflects gaze oscillations between correct and foil words and, thereby, indecisiveness.
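The following Python sketch makes the measure definitions above more concrete. It computes a dwell rate, a side-crossing rate, and the WEBS probability from the published coefficients. The sketch assumes a hypothetical data layout: a time-ordered list of per-fixation AOI labels and a list of horizontal gaze positions in pixels. It counts an AOI entry whenever a fixation lands in the AOI after a fixation that was not in it. The study itself derived its measures with SR Research software, whose event detection and AOI bookkeeping may differ. All function names and example input values are illustrative.

```python
import math
from typing import Optional, Sequence

SCREEN_WIDTH_PX = 1920  # horizontal resolution of the display described above


def dwell_rate(aoi_labels: Sequence[Optional[str]], aoi: str, duration_s: float) -> float:
    """Entries into `aoi` per second.

    `aoi_labels` is a time-ordered list holding the AOI of each fixation
    (None if the fixation fell outside every AOI). An entry is counted each
    time a fixation lands in `aoi` after a fixation that was not in it.
    """
    entries = 0
    previous: Optional[str] = None
    for label in aoi_labels:
        if label == aoi and previous != aoi:
            entries += 1
        previous = label
    return entries / duration_s


def side_crossing_rate(gaze_x: Sequence[float], duration_s: float,
                       midline: float = SCREEN_WIDTH_PX / 2) -> float:
    """Midline crossings per second from time-ordered horizontal gaze positions (px)."""
    crossings = sum(
        1 for x0, x1 in zip(gaze_x, gaze_x[1:]) if (x0 < midline) != (x1 < midline)
    )
    return crossings / duration_s


def webs(response_dwell: float, instructions_dwell: float, saccade_rate: float) -> float:
    """WEBS probability of noncredible performance, using the published coefficients."""
    z = (-4.486 * response_dwell
         + 3.218 * instructions_dwell
         - 0.981 * saccade_rate
         + 12.28)
    # Numerically stable logistic function, equal to 1 / (1 + e^(-z)).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    exp_z = math.exp(z)
    return exp_z / (1.0 + exp_z)


if __name__ == "__main__":
    fixations = ["response", "response", None, "instructions", "response", None]
    print(dwell_rate(fixations, "response", duration_s=2.0))          # 1.0
    print(side_crossing_rate([400, 1500, 1400, 300], duration_s=2.0))  # 1.0
    print(round(webs(1.2, 0.35, 3.0), 3))  # hypothetical inputs
```

In the exponent, the coefficients for response AOI dwell rate and saccade rate are negative, while the coefficient for instructions AOI dwell rate is positive. As a result, the scale rises when gaze shifts away from the words and toward the instructions, which matches the hypothesized direction of the group differences.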

Procedure

Participants filled out a demographic-medical questionnaire, the BPI-SF, the PHQ-9, and the GAD-7. They were then randomly assigned to one of two conditions, as in Lupu et al. (2022): (a) Simulators (n = 22): these patients read a script according to which their attorney advised them that, by appearing cognitively impaired in a neuropsychological assessment they were scheduled to undergo, they might receive a larger monetary settlement in an insurance claim they had recently filed. (b) Honest patients (n = 23): these patients were asked to perform the tasks to the best of their ability. Appendix Table 4 presents the experimental instructions given to the participants. Comprehension was assessed by asking the participants to restate the experimental instructions. They were also informed that they would receive a coupon for coffee and a pastry if the research team members judged them to have performed the tasks as instructed (all participants received the incentive).

Because the study included cognitive testing and was performed in a clinical setting, we were concerned that participants might manipulate their performance during the experiment (i.e., malinger), later request information regarding their performance, and use it in medicolegal proceedings. We attempted to minimize this confound by stating in advance that participants’ data would be used solely for research purposes and would not be available for future use (e.g., in medicolegal proceedings). Next, the participants were moved to a dimmed, noise-isolated room in which a second experimenter, blind to group allocation, seated them in front of the display screen and calibrated the eye tracker. The participants then performed the WMT's IR subtest, the Cogstate Brief Battery (CBB; see Footnote 1), and the WMT’s DR subtest, with a brief recess provided to ensure that 30 min elapsed between the IR and DR subtests. Participants then filled out the debriefing survey.

Data Analysis

Group Comparisons in Demographic, Clinical, and WMT Outcome Measures

The groups (chronic pain simulators/chronic pain honest controls) were compared on demographic and clinical measures and on motivation to follow the experimental instructions using independent samples t-tests and chi-square analyses (for continuous and categorical variables, respectively). The groups were compared on the WMT’s accuracy outcome measures (IR-score, DR-score, and CNS) using a multivariate analysis of variance (MANOVA). Failure rates in each of these measures, as well as in the WMT’s classification scheme, were compared using chi-square analyses. Since the groups differed significantly in pain severity (see Table 1), we repeated the parametric analyses as analyses of covariance, with group (simulators/controls) as a between-subjects factor and the total pain severity score as a covariate. These findings are not reported, as they were similar to those of the original analyses (presented in the Results section). Note that the baseline group difference in pain severity was unexpected, as the BPI-SF was filled out before randomization and the experimental manipulation.
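The following dependency-free Python sketch shows what the 2 × 2 chi-square comparison of failure rates involves. It is illustrative only. The study used SPSS, and the text does not state whether a continuity correction was applied, so the statistic below need not match the values in Table 2. The counts come from the Results: 21 of 22 simulators and 6 of 23 controls failed the WMT classification scheme. The function name is hypothetical.

```python
import math
from typing import Sequence, Tuple


def chi_square_2x2(table: Sequence[Sequence[int]], yates: bool = False) -> Tuple[float, float]:
    """Pearson chi-square test of independence for a 2 x 2 table (df = 1).

    `table` is [[a, b], [c, d]], with groups as rows and outcomes as columns.
    With one degree of freedom, the statistic is the square of a standard
    normal variable, so the p value equals erfc(sqrt(statistic / 2)).
    Set `yates=True` to apply Yates' continuity correction.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    n = sum(row_totals)
    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            deviation = abs(table[i][j] - expected)
            if yates:
                deviation = max(deviation - 0.5, 0.0)
            statistic += deviation ** 2 / expected
    return statistic, math.erfc(math.sqrt(statistic / 2))


if __name__ == "__main__":
    # Rows: simulators, controls; columns: failed, passed the WMT classification scheme.
    counts = [[21, 1], [6, 17]]
    for correction in (False, True):
        stat, p = chi_square_2x2(counts, yates=correction)
        print(f"Yates={correction}: chi2(1) = {stat:.2f}, p = {p:.2g}")
```

All expected cell counts here exceed 5, so the chi-square approximation is reasonable. With smaller cells, an exact test would be the safer choice.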

Establishing the Discriminative Capacities of the WMT Outcome Measures

The discriminative capacities of the WMT's eye movement measures were assessed using receiver operating characteristic (ROC) curve analyses (Obuchowski & Bullen, 2018). Next, utility estimates (sensitivity, specificity, positive predictive power [PPP], and negative predictive power [NPP]) were calculated for cutoffs of the eye movement measure with the strongest discriminative capacity (Heilbronner et al., 2009, pp. 1119–1120). PPP and NPP were calculated based on the expected base rates of noncredible cognitive performance in the context of chronic pain (i.e., 10 to 50%; Suhr & Bryant, 2021). Cutoffs were then selected according to the conventions in the field (Schroeder et al., 2021, p. 28; Sherman et al., 2020, p. 12 & p. 14; Sweet et al., 2021, p. 1069): cutoffs had to maintain high specificity (≥ 90%; i.e., a false positive rate ≤ 10%) and be at least moderately sensitive (sensitivity, or true positive rate, ≥ 50%). For comparison’s sake, we calculated utility estimates (i.e., sensitivity and specificity) for the WMT’s conventional classification scheme (Green, 2005, p. 9). In addition, we assessed the utility of a conjunctive (“and”) decision rule for determining noncredible cognitive performance (i.e., the participant was required to fail both the WMT’s classification scheme and the eye movement measure with the strongest discriminative capacity).
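The cutoff-selection logic described above, which maximizes sensitivity subject to specificity ≥ 90%, can be sketched in Python together with the base-rate adjustment of PPP and NPP. This is a minimal illustration that makes three assumptions:

- Higher scores indicate noncredible performance, as for instructions AOI dwell rate. Scores on measures where lower values are noncredible, such as response AOI dwell rate, can be negated first.
- The candidate cutoffs are the observed values themselves. SPSS instead lists midpoints between adjacent observed values, so exact cutoffs may differ.
- The AUC is computed with the Mann–Whitney formulation, which equals the trapezoidal area under the empirical ROC curve.

All function names and the example scores are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def auc(noncredible: Sequence[float], credible: Sequence[float]) -> float:
    """Empirical ROC AUC via the Mann-Whitney formulation.

    Probability that a randomly chosen noncredible score exceeds a randomly
    chosen credible score, counting ties as one half.
    """
    wins = 0.0
    for x in noncredible:
        for y in credible:
            if x > y:
                wins += 1.0
            elif x == y:
                wins += 0.5
    return wins / (len(noncredible) * len(credible))


def sens_spec(noncredible: Sequence[float], credible: Sequence[float],
              cutoff: float) -> Tuple[float, float]:
    """Sensitivity and specificity when scores >= cutoff are flagged as noncredible."""
    sensitivity = sum(x >= cutoff for x in noncredible) / len(noncredible)
    specificity = sum(y < cutoff for y in credible) / len(credible)
    return sensitivity, specificity


def best_cutoff(noncredible: Sequence[float], credible: Sequence[float],
                min_specificity: float = 0.90) -> Optional[Tuple[float, float, float]]:
    """Most sensitive observed cutoff with specificity >= min_specificity.

    Ties in sensitivity are broken in favor of higher specificity.
    Returns (cutoff, sensitivity, specificity), or None if no cutoff qualifies.
    """
    best = None
    for cutoff in sorted(set(noncredible) | set(credible)):
        sensitivity, specificity = sens_spec(noncredible, credible, cutoff)
        if specificity < min_specificity:
            continue
        if best is None or (sensitivity, specificity) > (best[1], best[2]):
            best = (cutoff, sensitivity, specificity)
    return best


def predictive_values(sensitivity: float, specificity: float,
                      base_rate: float) -> Tuple[float, float]:
    """PPP and NPP at a given base rate of noncredible performance (Bayes' rule).

    PPP is undefined (division by zero) if the cutoff flags nobody.
    """
    true_pos = sensitivity * base_rate
    false_pos = (1 - specificity) * (1 - base_rate)
    true_neg = specificity * (1 - base_rate)
    false_neg = (1 - sensitivity) * base_rate
    return true_pos / (true_pos + false_pos), true_neg / (true_neg + false_neg)


if __name__ == "__main__":
    simulators = [0.50, 0.40, 0.30]  # hypothetical scores, not study data
    controls = [0.10, 0.20, 0.30]
    print(auc(simulators, controls))
    print(best_cutoff(simulators, controls))
    # Sensitivity/specificity reported in the Results for the 0.354 cutoff.
    for base_rate in (0.10, 0.30, 0.50):
        ppp, npp = predictive_values(0.455, 0.913, base_rate)
        print(f"base rate {base_rate:.0%}: PPP = {ppp:.2f}, NPP = {npp:.2f}")
```

At a 10% base rate, the reported sensitivity and specificity of the 0.354 cutoff give PPP ≈ 0.37 and NPP ≈ 0.94. This illustrates why PPP is modest at low base rates even when specificity is high.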

General Remarks

Assumptions for parametric analyses were checked using skewness, kurtosis, and the Kolmogorov–Smirnov test (Mishra et al., 2019). A significance level of p < 0.05 was used in all statistical analyses. AUCs were interpreted following Hosmer et al. (2013, p. 177): poor (0.60–0.69), acceptable (0.70–0.79), excellent (0.80–0.89), and outstanding (≥ 0.90). Sensitivity values were interpreted following Boone (2013, p. 32): low (< 40%), moderate (40–69%), and strong (≥ 70%). The analyses were performed using IBM SPSS Statistics version 29.0.
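The interpretive bands above can be encoded as simple lookup functions, which makes the labels used in the Results reproducible. This sketch assumes that values falling between the printed two-decimal bounds (e.g., 0.795) belong to the lower band, a choice the text does not specify. The function names are hypothetical.

```python
def interpret_auc(auc: float) -> str:
    """AUC label using the bands quoted above (Hosmer et al., 2013, p. 177)."""
    if auc >= 0.90:
        return "outstanding"
    if auc >= 0.80:
        return "excellent"
    if auc >= 0.70:
        return "acceptable"
    if auc >= 0.60:
        return "poor"
    return "below the listed bands"


def interpret_sensitivity(sensitivity_pct: float) -> str:
    """Sensitivity label using the bands quoted above (Boone, 2013, p. 32)."""
    if sensitivity_pct >= 70:
        return "strong"
    if sensitivity_pct >= 40:
        return "moderate"
    return "low"


if __name__ == "__main__":
    for value in (0.809, 0.725, 0.685):
        print(value, interpret_auc(value))
    for value in (40.9, 27.3):
        print(value, interpret_sensitivity(value))
```

With the values reported in the Results, these functions return "excellent", "acceptable", and "poor" for the three AUCs, and "moderate" and "low" for the two sensitivities.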

Results

Group Comparisons in the Eye-Tracker Integrated WMT Outcome Measures

The groups differed significantly on all eye movement measures except saccade rate (p = 0.12). More specifically, chronic pain simulators had a lower response AOI dwell rate, a higher instructions AOI dwell rate, and a larger WEBS than chronic pain controls (p = 0.03, p < 0.001, and p = 0.02, respectively). Simulators also made fewer side crossings (i.e., their gaze crossed the screen’s midline fewer times; p = 0.02). The MANOVA of the WMT outcome measures indicated a significant main effect of group, F(3, 40) = 21.67, p < 0.001, ηp² = 0.62. Simulators had lower IR-scores, DR-scores, and CNS than controls (i.e., performed more poorly; ps < 0.001; see Table 2).

Establishing the Discriminative Capacity and Cutoffs of the WMT Outcome Measures

AUCs were significant for all eye movement measures except saccade rate (AUC = 0.666, SE = 0.09, p = 0.06, 95% CI, 0.496–0.836). The discriminative capacity of instructions AOI dwell rate was excellent (AUC = 0.809, SE = 0.07, p < 0.001, 95% CI, 0.677–0.942), while that of the WEBS was acceptable (AUC = 0.725, SE = 0.08, p = 0.01, 95% CI, 0.578–0.872). Response AOI dwell rate had poor discriminative capacity (AUC = 0.685, SE = 0.08, p = 0.03, 95% CI, 0.530–0.840). Table 3 presents utility estimates for the eye movement measure with the strongest discriminative capacity (instructions AOI dwell rate). Notably, perfect specificity (100%) for detecting noncredible performance was associated with moderate sensitivity (cutoff = 0.425, sensitivity = 40.9%). Sensitivity could be somewhat enhanced (specificity = 91.3%, sensitivity = 45.5%) using a 0.354 cutoff (≥ 0.354 indicating noncredible performance). Lower cutoffs, however, were associated with inadequate specificities (< 90%). The lower discriminative capacities of the other measures were reflected in the utility estimates associated with their suggested cutoffs: (a) Response AOI dwell rate: a 1.235 cutoff (≤ 1.235 indicating noncredible performance) was associated with 91.3% specificity and 27.3% sensitivity. Perfect specificity was achievable (0.026 cutoff) but was associated with 0% sensitivity. (b) WEBS: a 0.9965 cutoff (≥ 0.9965 indicating noncredible performance) was associated with perfect specificity and 27.3% sensitivity, while specificity was 91.3% and sensitivity was 36.4% when using a 0.9910 cutoff.

As shown in Table 2, significantly more simulators than controls failed the WMT’s primary performance validity indicators (IR-score, DR-score, and CNS), as well as the WMT’s classification scheme (Green, 2005, p. 9). Notably, the classification scheme achieved 73.9% specificity and 95.5% sensitivity (17/23 controls and 21/22 simulators were correctly classified). A conjunctive (“and”) rule requiring the participant to fail both the WMT's classification scheme and the instructions AOI dwell rate measure (i.e., the eye movement measure with the strongest discriminative capacity) was associated with (a) perfect specificity (100%) and 40.9% sensitivity when using the conservative instructions AOI dwell rate cutoff (0.425), and (b) 95.7% specificity (i.e., one honest control was misclassified as performing in a noncredible manner) and 45.5% sensitivity when using the liberal cutoff (0.354).
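A conjunctive rule flags a participant only when both indicators are failed, so the flagged set is the intersection of the two components' flagged sets. Consequently, its false positives can never exceed those of either component: specificity can only stay equal or rise. Its sensitivity, however, can never exceed that of the less sensitive component. The Python sketch below illustrates this on a small hypothetical data set (not the study's data). The names are illustrative.

```python
from typing import List, Sequence, Tuple


def conjunctive_flags(wmt_fail: Sequence[bool], instructions_dwell: Sequence[float],
                      cutoff: float) -> List[bool]:
    """Flag a participant only if they fail the WMT scheme AND reach the dwell-rate cutoff."""
    return [fail and dwell >= cutoff for fail, dwell in zip(wmt_fail, instructions_dwell)]


def sensitivity_specificity(flags: Sequence[bool],
                            is_simulator: Sequence[bool]) -> Tuple[float, float]:
    """Share of simulators flagged (sensitivity) and of controls not flagged (specificity)."""
    n_simulators = sum(is_simulator)
    n_controls = len(is_simulator) - n_simulators
    flagged_simulators = sum(f for f, s in zip(flags, is_simulator) if s)
    cleared_controls = sum(not f for f, s in zip(flags, is_simulator) if not s)
    return flagged_simulators / n_simulators, cleared_controls / n_controls


if __name__ == "__main__":
    # Hypothetical participants (not study data): four simulators, then four controls.
    is_simulator = [True, True, True, True, False, False, False, False]
    wmt_fail = [True, True, True, False, True, True, False, False]
    dwell = [0.50, 0.20, 0.40, 0.60, 0.45, 0.10, 0.50, 0.30]
    cutoff = 0.354  # mirrors the liberal cutoff reported in the Results

    dwell_fail = [d >= cutoff for d in dwell]
    combined = conjunctive_flags(wmt_fail, dwell, cutoff)
    for name, flags in (("WMT", wmt_fail), ("dwell", dwell_fail), ("AND", combined)):
        sens, spec = sensitivity_specificity(flags, is_simulator)
        print(f"{name}: sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

On these hypothetical data, each single indicator reaches 50% specificity. The conjunctive rule reaches 75%, at the cost of lower sensitivity (50% vs. 75%).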

Discussion

Tomer et al. (2020) indicated the feasibility of integrating an eye tracker with the WMT and the utility of eye movement measures as a supplementary technique for detecting noncredible cognitive performance. As part of the current study, we aimed to enhance the methodological rigor of our previous study by recruiting clinical patients. Specifically, we compared the performance of chronic pain patients simulating cognitive impairment and control patients in the eye-tracker integrated WMT. The prevalence of complaints regarding cognitive impairment among chronic pain patients (Cuevas et al., 2022; Khera & Rangasamy, 2021) and the need to clarify the credibility of their performance in cognitive evaluations (Suhr & Bryant, 2021) were additional incentives for conducting the study.

The study’s analyses indicated significant group differences in spatial eye movement measures. Simulators gazed less than controls at screen areas containing correct/foil words. Conversely, they gazed more than controls at screen areas that were irrelevant for successful WMT performance (i.e., areas in which the instructions were presented). This replicated our earlier findings, gathered using healthy simulators (Tomer et al., 2020). As in our earlier publication, we speculate that the simulators’ performance reflects three consecutive cognitive processes. First, simulators experience increased cognitive load as they attempt to avoid detection while misrepresenting their true cognitive ability. This corresponds to the cognitive approach to deception, according to which deception is more cognitively demanding than telling the truth (Driskell & Driskell, 2019; Vrij et al., 2017). Second, simulators visually disengage from relevant stimuli (i.e., correct/foil words) to decrease the impact of external distractors and thereby lower their experienced cognitive load. Third, task instructions draw the simulators’ gaze (i.e., they serve as “attentional hooks”). The latter may stem from the automatic processing of language and its ability to draw attention involuntarily (D'Mello & Mills, 2021), a phenomenon previously used to detect malingered illiteracy (Lu et al., 2004).

Besides the group differences in spatial eye movement measures (i.e., the tendency to gaze at instructions instead of word-foil pairs), we previously noted two findings supporting this three-stage cognitive model underlying simulators’ eye movements (Tomer et al., 2020): (a) a significant group difference in saccade rate, an eye movement measure affected by cognitive load (Skaramagkas et al., 2023; Tao et al., 2019); and (b) fewer side crossings (i.e., the number of times the gaze crossed the midline of the screen) among simulators than controls, interpreted as supporting the capacity of task instructions to draw the simulators’ gaze (see elaboration in Tomer et al., 2020). It should be noted, however, that while simulators also made fewer side crossings in the current study, we did not find a significant group difference in saccade rate. This raises doubts regarding the cognitive load that the simulators experienced, stressing the need for further research before reaching firm conclusions regarding the cognitive processes involved in simulating cognitive impairment.

Gazing at the instructions (i.e., instructions AOI dwell rate) best differentiated the groups. Correspondingly, group differences in this measure were associated with excellent discriminative capacity (AUC = 0.809). Notably, a 0.425 instructions AOI dwell rate cutoff was associated with perfect (100%) specificity and moderate sensitivity (40.9%). Lowering the cutoff to 0.354 led to a slight increase in sensitivity (45.5%) while keeping specificity above the recommended minimum level (91.3%). Response AOI dwell rate, the other spatial eye movement measure in which the groups significantly differed, was associated with poor discriminative capacity (AUC = 0.685). Correspondingly, the highest sensitivity that could be reached while maintaining specificity ≥ 90% was 27.3% (response AOI dwell rate cutoff = 1.235).

False positives are minimized when using data derived from several, preferably not highly correlated, validity indicators (Cottingham, 2021). We therefore integrated data derived from individual validity indicators into a single scale, the WEBS (termed the “combined eye movements scale” in Tomer et al., 2020). As hypothesized, simulators in the current study had significantly larger WEBS values than controls. However, the scale had only acceptable discriminative capacity (AUC = 0.725), lower than that of instructions AOI dwell rate. This contrasts with Tomer et al. (2020), in which the WEBS had the strongest discriminative capacity of the eye movement measures. The nonsignificant group difference in saccade rate in the current study likely decreased the WEBS’s discriminative capacity, as saccade rate is one of the predictors in the scale’s regression formula (Tomer et al., 2020).

Overall, instructions AOI dwell rate proved to be the most useful eye-movement-based validity indicator, even when compared to a logistic regression-based scale (WEBS). The latter conclusion is also supported by the comparison with our earlier study (Tomer et al., 2020). The discriminative capacities of validity indicators in clinical settings are usually lower than suggested by simulation studies of healthy participants, reflecting the cognitive impairment of clinical patients and the tendency of simulators to produce an exaggerated impairment profile (Sherman et al., 2020, p. 14). Correspondingly, there was a major decrease in the discriminative capacity of response AOI dwell rate between the two studies (AUC = 0.685 vs. AUC = 0.916). Similarly, the discriminative capacity of WEBS in the current study decreased from its outstanding capacity (AUC = 0.923; Δ = 0.198) in Tomer et al. (2020). In contrast, instructions AOI dwell rate had a stronger discriminative capacity in the current study than our previous one (AUC = 0.809 vs. AUC = 0.764; Tomer et al., 2020). Thus, instructions AOI dwell rate was both the most potent eye movement-based validity indicator and proved robust to changes in sample characteristics.

Overall, the current study provides tentative support for the use of instructions AOI dwell rate as a validity indicator when assessing chronic pain patients. The other eye movement measures, in contrast, proved less sensitive to noncredible performance, raising doubts regarding their clinical utility. Interestingly, instructions AOI dwell rate seems to complement the WMT’s conventional classification scheme. While the WMT’s classification scheme proved to be highly sensitive (95.5%) but lacking in specificity (73.9%), instructions AOI dwell rate showed an almost opposite profile (i.e., the two cutoffs suggested earlier had specificities of 100%/91.3% and sensitivities of 40.9%/45.5%). Considering the enhanced sensitivity of the WMT to noncredible performance compared to other forced-choice recognition-memory-based PVTs (see discussion in Armistead-Jehle et al., 2021), clinicians may in the future utilize a conjunctive (“and”) rule for determining noncredible performance. Such decision rules have been suggested as a means of minimizing false positives (Sherman et al., 2020; Sweet et al., 2021), as was also found in the current study: requiring the participant to fail both the WMT's classification scheme and instructions AOI dwell rate (cutoff = 0.354) was associated with 95.7% specificity and 45.5% sensitivity. Admittedly, this is only a marginal improvement in discriminative capacity compared to using instructions AOI dwell rate as the sole validity indicator. However, using such a conjunctive rule may provide some reassurance to clinicians when testing clinical populations using the WMT.

Several limitations of the current study should be noted. First, the use of a simulation research design limits the generalizability of the findings (Schroeder et al., 2021). We attempted to address this limitation by recruiting clinical patients and using manipulation checks to ensure participants followed the experimental instructions. However, the use of a “known-groups comparison” research design in future studies (i.e., classifying examinees based on well-established validity indicators; Rogers, 2018) is encouraged and will allow for better evaluation of the generalizability of our findings. Second, the study’s sample size was modest. Besides enhancing statistical power, recruiting larger samples in future studies will enable researchers to compare the impact of different chronic pain etiologies and to estimate the contribution of other relevant factors (e.g., medications and sleep quality; Babiloni et al., 2020; Khera & Rangasamy, 2021). Third, as noted by an anonymous reviewer, the groups significantly differed in the variance of several measures (i.e., “motivation to follow experimental procedures,” the three WMT accuracy outcome measures, and two of the eye movement measures; see Tables 1 and 2). The group differences in variance found for the WMT’s accuracy measures were unsurprising, considering a ceiling effect in the controls’ performance (an expected finding in simulation studies). However, the differences in variance in the other measures were unexpected: for two measures (“instructions AOI dwell rate” and “motivation to follow experimental procedures”), the variance was larger among simulators than controls, while the opposite pattern was found for WEBS. It would be interesting to assess whether such differences in variance are replicated in future studies and, if so, to explore explanations for them.
Researchers may also explore the following lines of research: (a) assessing the utility of integrating an eye tracker with other subtests of the WMT, chiefly its other primary performance validity subtest (Delayed Recognition, DR). This can also be done with other forced-choice memory-based PVTs (e.g., Test of Memory Malingering, TOMM) to assess the generalizability of the findings across stand-alone validity indicators with a similar methodology. (b) An anonymous reviewer noted that eye tracking might be particularly useful for uncovering intent to feign impairment. If so, this would allow the determination of malingering (the intentional feigning of cognitive impairment), as well as of deliberate deception in the context of a factitious disorder (American Psychiatric Association, 2022; Sweet et al., 2021, p. 1058). At present, determining intent requires below-chance performance on forced-choice recognition-memory-based PVTs or documentation (e.g., surveillance footage) markedly inconsistent with self-report or with performance during the assessment (Sherman et al., 2020). Since eye movements are only partially under volitional control (Bridgeman, 1992; Theeuwes et al., 1998), eye tracking may offer a novel method to determine the examinee’s intent. For example, eye tracking may reveal inconsistencies between an examinee’s statements (i.e., claiming not to remember a stimulus) and eye movement data suggesting that the stimulus was, in actuality, remembered. Furthermore, examinees are likely to struggle to devise countermeasures against such eye movement-based validity indicators. Studies of deceptive behaviors tentatively support such future use of eye tracking. Notably, when participants view two stimuli, one familiar and one unfamiliar, their gaze is preferentially directed toward the unfamiliar one (for a review, see Lancry-Dayan et al., 2023).
Familiar stimuli also elicit fewer and longer fixations, and pupil size increases when participants encounter previously studied stimuli (Heaver & Hutton, 2011; Lancry-Dayan et al., 2023). To explore the possibilities that eye tracking offers, two lines of research can be suggested. First, researchers can study the ability of eye movement measures to detect coached malingerers by providing simulators with information regarding key eye movement-based validity indicators and exploring their ability to suppress them and thereby avoid detection. Second, researchers may explore item-level eye movements. As discussed by Tomer et al. (2020), this will necessitate maintaining similar luminance levels throughout the study and either modifying established tasks (e.g., creating a “baseline block” in the WMT to establish baseline pupil size, and adding fixation points) or creating tasks designed from their inception for integration with an eye tracker (e.g., Omer & Braw, 2021). Though a major undertaking, such a research endeavor will hopefully pave the way for using eye tracking to determine intent to feign cognitive impairment. (c) Eye movements may clarify the cognitive processes that underlie noncredible performance, and we earlier postulated a tentative three-stage cognitive model underlying the simulators’ eye movements. Though this may be premature, we hope to encourage further research that will help elucidate such models, as such endeavors have been fruitful in adjacent research fields (e.g., deception detection; Nortje & Tredoux, 2019).
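A baseline block of the kind proposed above would let pupil size on each item be expressed relative to the examinee's own resting level, which is what makes item-level comparisons (e.g., studied versus new words) interpretable. The Python sketch below shows subtractive baseline correction under stated assumptions: the function name and sample values are hypothetical (arbitrary units, not study data), and subtractive correction is only one common convention (divisive, percent-change correction is another), chosen here for illustration rather than taken from the study.

```python
from statistics import fmean
from typing import Sequence


def baseline_corrected_dilation(
    item_samples: Sequence[float], baseline_samples: Sequence[float]
) -> float:
    """Mean pupil size during one item minus mean pupil size in a baseline block.

    ASSUMPTION: subtractive correction; a divisive (percent-change) correction
    would instead return (item - baseline) / baseline.
    """
    return fmean(item_samples) - fmean(baseline_samples)


# Hypothetical pupil samples in arbitrary units (NOT study data).
baseline_block = [4.0, 4.5, 5.0]
studied_word = baseline_corrected_dilation([5.0, 5.5], baseline_block)
new_word = baseline_corrected_dilation([4.0, 4.5], baseline_block)
print(studied_word, new_word)  # 0.75 -0.25
```

Since the pupil also responds to light, such a correction is only meaningful if luminance is held similar across the baseline block and the test items, which is why the text stresses that requirement.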

Conclusions

The current study further demonstrates the feasibility of integrating an eye tracker with a forced-choice recognition memory PVT and the as yet untapped potential of this novel technology to enhance the detection of noncredible cognitive performance. More specifically, spatial eye movement measures, but not saccade rate, successfully differentiated chronic pain patients simulating cognitive impairment from those performing honestly; simulators gazed less at relevant stimuli while gazing more at irrelevant stimuli than controls. The former measure (i.e., instructions AOI dwell rate) had the strongest discriminative capacity, with cutoffs maintaining adequate specificities associated with sensitivities between 40.9% and 45.5%. Notably, this validity indicator was robust to changes in population, as evident from a comparison with our earlier study of healthy simulators (Tomer et al., 2020). In addition, conjunctive rules (i.e., the participant was required to fail both the WMT’s classification scheme and the eye movement measure with the strongest discriminative capacity) were associated with higher specificities than the WMT’s conventional classification scheme. They may, therefore, prove valuable when testing clinical populations, pending further validation. The other eye movement measures, including a scale that combines the contribution of eye movement measures (WEBS), showed reduced discriminative capacity compared to Tomer et al. (2020). Their clinical utility was, therefore, not supported by the current study’s findings. Further research on the eye-tracker integrated WMT in clinical settings is warranted to assess its utility and to model the cognitive underpinnings of noncredible performance on recognition-memory-based PVTs. This endeavor is worth pursuing, as psychophysiological measures may be harder to manipulate volitionally than conventional accuracy measures.
Improved usability and reduced costs of eye trackers in recent years are further incentives for such efforts (Novák et al., 2023).
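Selecting cutoffs that maintain adequate specificity amounts to a constrained search: among candidate cutoffs, keep those whose specificity meets a floor (customarily 90%) and choose the one with the highest sensitivity. The Python sketch below illustrates that logic only; it is not the authors' procedure (which may have relied on ROC coordinates), the function name `pick_cutoff` is hypothetical, candidate cutoffs are restricted to observed scores, scores at or below the cutoff are assumed to count as failures, and the example scores are invented.

```python
from __future__ import annotations

from typing import Optional, Sequence


def pick_cutoff(
    sim_scores: Sequence[float],
    ctrl_scores: Sequence[float],
    min_specificity: float = 0.90,
) -> Optional[tuple[float, float, float]]:
    """Return (cutoff, sensitivity, specificity) for the observed-score cutoff
    with the highest sensitivity among those with specificity >= min_specificity.

    ASSUMPTION: scores at or below the cutoff are classified as failing.
    Returns None if no candidate cutoff meets the specificity floor.
    """
    best = None
    for c in sorted(set(sim_scores) | set(ctrl_scores)):
        sens = sum(s <= c for s in sim_scores) / len(sim_scores)
        spec = sum(s > c for s in ctrl_scores) / len(ctrl_scores)
        if spec < min_specificity:
            continue
        # Prefer higher sensitivity; on ties, prefer higher specificity.
        if best is None or (sens, spec) > (best[1], best[2]):
            best = (c, sens, spec)
    return best


# Invented toy scores (NOT study data).
sim = [0.10, 0.20, 0.30, 0.50]
ctrl = [0.25, 0.40, 0.45, 0.50, 0.55, 0.60, 0.65, 0.70, 0.75, 0.80]
print(pick_cutoff(sim, ctrl))                       # (0.3, 0.75, 0.9)
print(pick_cutoff(sim, ctrl, min_specificity=1.0))  # (0.2, 0.5, 1.0)
```

Raising the specificity floor moves the selected cutoff toward more conservative values and lowers sensitivity, which mirrors the pair of cutoffs reported for instructions AOI dwell rate (100% versus 91.3% specificity).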

Data Availability

The data that support the findings of this study are openly available in Zenodo at https://doi.org/10.5281/zenodo.10488100.

Notes

The Cogstate Brief Battery (CBB) is a computerized cognitive assessment battery (duration approximately 10 min). It was used as a filler task in the current study (for additional information, see Lupu, Braw, Sacher, & Ratmansky, 2022).

References

Aagaard, A., Ravn, S. L., Andersen, T. E., & Vaegter, H. B. (2023). Interpretation of the patient health questionnaire 9 in high-impact chronic pain: Do we measure depressive symptoms the way we think? The Clinical Journal of Pain, 39(10), 501–515. https://doi.org/10.1097/AJP.0000000000001142

Ales, F., Giromini, L., Warmelink, L., Polden, M., Wilcockson, T., Kelly, C., Winters, C., Zennaro, A., & Crawford, T. (2023). On the use of eye movements in symptom validity assessment of feigned schizophrenia. Psychological Injury & Law, 16(1), 83–97. https://doi.org/10.1007/s12207-022-09462-0

Allen, L., & Green, P. (1999). Severe TBI sample performance on CARB and the WMT: Supplement to the CARB ‘97 and Word Memory Test manuals. CogniSyst.

American Psychiatric Association. (2022). Diagnostic and statistical manual of mental disorders, fifth edition, text revision (DSM-5-TR). American Psychiatric Association.

An, K. Y., Kaploun, K., Erdodi, L. A., & Abeare, C. A. (2017). Performance validity in undergraduate research participants: A comparison of failure rates across tests and cutoffs. Clinical Neuropsychologist, 31(1), 193–206. https://doi.org/10.1080/13854046.2016.1217046

An, K. Y., Zakzanis, K. K., & Joordens, S. (2012). Conducting research with non-clinical healthy undergraduates: Does effort play a role in neuropsychological test performance? Archives of Clinical Neuropsychology, 27(8), 849–857. https://doi.org/10.1093/arclin/acs085

Armistead-Jehle, P., Denney, R. L., & Shura, R. D. (2021). Use of the Word Memory Test (WMT), Medical Symptom Validity Test (MSVT) and Nonverbal Medical Symptom Validity Test (NV-MSVT) in assessment following head injury (ch. 5). In A. M. J. Horton & C. R. Reynolds (Eds.), Detection of malingering during head injury litigation (3 ed., pp. 223–243). Springer. https://doi.org/10.1007/978-3-030-54656-4_5

Babiloni, A. H., De Koninck, B. P., Beetz, G., De Beaumont, L., Martel, M. O., & Lavigne, G. J. (2020). Sleep and pain: Recent insights, mechanisms, and future directions in the investigation of this relationship. Journal of Neural Transmission, 127(4), 647–660. https://doi.org/10.1007/s00702-019-02067-z

Baker, K. S., Georgiou-Karistianis, N., Lampit, A., Valenzuela, M., Gibson, S. J., & Giummarra, M. J. (2018). Computerised training improves cognitive performance in chronic pain: A participant-blinded randomised active-controlled trial with remote supervision. Pain, 159(4), 644–655. https://doi.org/10.1097/j.pain.0000000000001150

Barry, D. M., & Ettenhofer, M. L. (2016). Assessment of performance validity using embedded saccadic and manual indices on a continuous performance test. Archives of Clinical Neuropsychology, 31(8), 963–975. https://doi.org/10.1093/arclin/acw070

Beckmann, E. A., & Mano, K. E. J. (2021). Advancing the measurement of executive functioning in pediatric chronic pain. Children, 8(8), 1–12. https://doi.org/10.3390/children8080630

Berger, C., & L., A., Braw, Y., Elbaum, T., Wagner, M., & Rassovsky, Y. (2021). Detection of feigned ADHD using the MOXO-d-CPT. Journal of Attention Disorders, 25(7), 1032–1047. https://doi.org/10.1177/1087054719864656

Boone, K. B. (2013). Clinical practice of forensic psychology: An evidence-based approach. Guilford Press.

Bridgeman, B. (1992). Conscious vs unconscious processes: The case of vision. Theory & Psychology, 2(1), 73–88.

Bush, S. S., Ruff, R. M., Troster, A. I., Barth, J. T., Koffler, S. P., Pliskin, N. H., Reynolds, C. R., & Silver, C. H. (2005). Symptom validity assessment: Practice issues and medical necessity NAN policy & planning committee. Archives of Clinical Neuropsychology, 20(4), 419–426. https://doi.org/10.1016/j.acn.2005.02.002

Chafetz, M. D., Williams, M. A., Ben-Porath, Y. S., Bianchini, K. J., Boone, K. B., Kirkwood, M. W., Larrabee, G. J., & Ord, J. S. (2015). Official position of the American Academy of Clinical Neuropsychology Social Security Administration policy on validity testing: Guidance and recommendations for change. The Clinical Neuropsychologist, 29(6), 723–740. https://doi.org/10.1080/13854046.2015.1099738

Cleeland, C. S. (2009). The brief pain inventory: User guide. University of Texas. http://www.mdanderson.org/

Cohen, S. P., Vase, L., & Hooten, W. M. (2021). Chronic pain: An update on burden, best practices, and new advances. Lancet, 397(10289), 2082–2097. https://doi.org/10.1016/S0140-6736(21)00393-7

Cottingham, M. E. (2021). Clinician’s guide to navigating performance validity testing (ch. 1). In K. B. Boone (Ed.), Assessment of feigned cognitive impairment: A neuropsychological perspective (2 ed., pp. 3–10). Guilford Press.

Cuevas, H., Danesh, V., & Henneghan, A. (2022). Self-reported cognitive function in persons with nonneurological chronic diseases: A systematic review. Journal of Aging Research, 2022, 5803337. https://doi.org/10.1155/2022/5803337

Dandachi-FitzGerald, B., & Martin, P. K. (2022). Clinical judgment and clinically applied statistics: Description, benefits, and potential dangers when relying on either one individually in clinical practice (ch. 7). In R. W. Schroeder & P. K. Martin (Eds.), Validity assessment in clinical neuropsychological practice: Evaluating and managing noncredible performance (pp. 107–125). Guilford.

Davis, J. J. (2021). Interpretation of data from multiple performance validity tests (ch. 14). In K. B. Boone (Ed.), Assessment of feigned cognitive impairment: A neuropsychological perspective (2 ed., pp. 283–306). Guilford Press.

D’Mello, S. K., & Mills, C. S. (2021). Mind wandering during reading: An interdisciplinary and integrative review of psychological, computing, and intervention research and theory. Language and Linguistics Compass, 15(4), e12412. https://doi.org/10.1111/lnc3.12412

Driskell, T., & Driskell, J. E. (2019). Got theory? Multitasking, cognitive load, and deception (ch. 8). In T. Docan-Morgan (Ed.), The Palgrave Handbook of Deceptive Communication (pp. 145–167). Palgrave Macmillan. https://doi.org/10.1007/978-3-319-96334-1_8

Duchowski, A. T. (2017a). Eye tracking methodology: Theory and practice (3 ed.). Springer. https://doi.org/10.1007/978-3-319-57883-5

Duchowski, A. T. (2017b). Neuroscience and psychology (ch. 21). In Eye tracking methodology: Theory and practice (3 ed., pp. 249–280). Springer. https://doi.org/10.1007/978-1-4471-3750-4_11

Erdodi, L. A. (2023). Multivariate models of performance validity: The Erdodi Index captures the dual nature of non-credible responding (continuous and categorical). Assessment, 30(5), 1467–1485. https://doi.org/10.1177/10731911221101910

Graham, L., Das, J., Moore, J., Godfrey, A., & Stuart, S. (2022). The eyes as a window to the brain and mind (ch. 1). In S. Stuart (Ed.), Eye Tracking: Background, Methods, and Applications (pp. 1–14). Springer. https://doi.org/10.1007/978-1-0716-2391-6_1

Green, P., & Allen, L. M. (1999). Performance of neurological patients on the Word Memory Test (WMT) and Computerised Assessment of Response Bias (CARB): Supplement to the Word Memory Test and CARB ‘97 manuals. CogniSyst.

Green, P. (2003). Green’s Word Memory Test for windows: User’s manual. Green’s Publishing.

Green, P. (2005). Green's Word Memory Test for windows: User’s manual (revised June 2005). Green's Publications.

Greve, K. W., Bianchini, K. J., & Brewer, S. T. (2013). The assessment of performance and self-report validity in persons claiming pain-related disability. The Clinical Neuropsychologist, 27(1), 108–137. https://doi.org/10.1080/13854046.2012.739646

Guilmette, T. J. (2013). The role of clinical judgment in symptom validity assessment (ch. 2). In D. A. Carone & S. S. Bush (Eds.), Mild Traumatic Brain Injury: Symptom Validity Assessment and Malingering (pp. 31–43). Springer.

Heaver, B., & Hutton, S. B. (2011). Keeping an eye on the truth? Pupil size changes associated with recognition memory. Memory, 19(4), 398–405. https://doi.org/10.1080/09658211.2011.575788

Hegedish, O., & Hoofien, D. (2013). Detection of malingered neurocognitive dysfunction among patients with acquired brain injuries: A Word Memory Test study. European Journal of Psychological Assessment, 29(4), 253–262. https://doi.org/10.1027/1015-5759/a000154

Heilbronner, R. L., Sweet, J. J., Morgan, J. E., Larrabee, G. J., & Millis, S. R. (2009). American Academy of Clinical Neuropsychology consensus conference statement on the neuropsychological assessment of effort, response bias, and malingering. The Clinical Neuropsychologist, 23(7), 1093–1129. https://doi.org/10.1080/13854040903155063

Hosmer, D. W., Lemeshow, S., & Sturdivant, R. X. (2013). Applied Logistic Regression (3 ed.). John Wiley & Sons.

Jacobsen, H. B., Klungsoyr, O., Landro, N. I., Stiles, T. C., & Roche, B. T. (2020). Mindflex training for cognitive flexibility in chronic pain: A randomized, controlled cross-over trial. Frontiers in Psychology, 11, 1–16. https://doi.org/10.3389/fpsyg.2020.604832

Jumbo, S. U., MacDermid, J. C., Kalu, M. E., Packham, T. L., Athwal, G. S., & Faber, K. J. (2021). Measurement properties of the Brief Pain Inventory-Short Form (BPI-SF) and Revised Short McGill Pain Questionnaire version-2 (SF-MPQ-2) in pain-related musculoskeletal conditions: A systematic review. Clinical Journal of Pain, 37(6), 454–474. https://doi.org/10.1097/Ajp.0000000000000933

Kanser, R. J., Bashem, J. R., Patrick, S. D., Hanks, R. A., & Rapport, L. J. (2020). Detecting feigned traumatic brain injury with eye tracking during a test of performance validity. Neuropsychology, 34(3), 308–320. https://doi.org/10.1037/neu0000613

Khera, T., & Rangasamy, V. (2021). Cognition and pain: A review. Frontiers in Psychology, 12, 1–11. https://doi.org/10.3389/fpsyg.2021.673962

Kroenke, K., Spitzer, R. L., & Williams, J. B. (2001). The PHQ-9: Validity of a brief depression severity measure. Journal of General Internal Medicine, 16(9), 606–613. https://doi.org/10.1046/j.1525-1497.2001.016009606.x

Lancry-Dayan, O. C., Ben-Shakhar, G., & Pertzov, Y. (2023). The promise of eye-tracking in the detection of concealed memories. Trends in Cognitive Sciences, 27(1), 13–16. https://doi.org/10.1016/j.tics.2022.08.019

Larrabee, G. J. (2022). Synthesizing data to reach clinical conclusions regarding validity status (ch. 11). In R. W. Schroeder & P. K. Martin (Eds.), Validity Assessment in Clinical Neuropsychological Practice: Evaluating and Managing Noncredible Performance. Guilford.

Lu, P. H., Boone, K. B., Jimenez, N., & Razani, J. (2004). Failure to inhibit the reading response on the Stroop Test: A pathognomonic indicator of suspect effort. Journal of Clinical and Experimental Neuropsychology, 26(2), 180–189. https://doi.org/10.1076/jcen.26.2.180.28082

Lupu, T., Braw, Y., Sacher, Y., & Ratmansky, M. (2022). Cogstate brief battery: Cognition and the feigning of cognitive impairment in chronic pain. Applied Neuropsychology: Adult, 29(6), 1332–1343. https://doi.org/10.1080/23279095.2021.1873138

Mahoney, E. J., Kapur, N., Osmon, D. C., & Hannula, D. E. (2018). Eye tracking as a tool for the detection of simulated memory impairment. Journal of Applied Research in Memory and Cognition, 7(3), 441–453. https://doi.org/10.1016/j.jarmac.2018.05.004

Martin, P. K., Schroeder, R. W., & Odland, A. P. (2015). Neuropsychologists’ validity testing beliefs and practices: A survey of North American professionals. The Clinical Neuropsychologist, 29(6), 741–776. https://doi.org/10.1080/13854046.2015.1087597

Mishra, P., Pandey, C. M., Singh, U., Gupta, A., Sahu, C., & Keshri, A. (2019). Descriptive statistics and normality tests for statistical data. Annals of Cardiac Anaesthesia, 22(1), 67–72. https://doi.org/10.4103/aca.ACA_157_18

Nahin, R. L., & DeKosky, S. T. (2020). Comorbid pain and cognitive impairment in a nationally representative adult population: Prevalence and associations with health status, health care utilization, and satisfaction with care. Clinical Journal of Pain, 36(10), 725–739. https://doi.org/10.1097/AJP.0000000000000863

Nortje, A., & Tredoux, C. (2019). How good are we at detecting deception? A review of current techniques and theories. South African Journal of Psychology, 49(4), 491–504. https://doi.org/10.1177/0081246318822953

Novák, J. Š, Masner, J., Benda, P., Šimek, P., & Merunka, V. (2023). Eye tracking, usability, and user experience: A systematic review. International Journal of Human-Computer Interaction, 1–17. https://doi.org/10.1080/10447318.2023.2221600

Obuchowski, N. A., & Bullen, J. A. (2018). Receiver operating characteristic (ROC) curves: Review of methods with applications in diagnostic medicine. Physics in Medicine and Biology, 63(7), 07TR01. https://doi.org/10.1088/1361-6560/aab4b1

Omer, E., & Braw, Y. (2021). The Multi-Level Pattern Memory Test (MPMT): Initial validation of a novel performance validity test. Brain Sciences, 11(8). https://doi.org/10.3390/brainsci11081039

Patrick, S. D., Rapport, L. J., Kanser, R. J., Hanks, R. A., & Bashem, J. R. (2021). Detecting simulated versus bona fide traumatic brain injury using pupillometry. Neuropsychology, 35(5), 472–485. https://doi.org/10.1037/neu0000747

Plummer, F., Manea, L., Trepel, D., & McMillan, D. (2016). Screening for anxiety disorders with the GAD-7 and GAD-2: A systematic review and diagnostic metaanalysis. General Hospital Psychiatry, 39, 24–31. https://doi.org/10.1016/j.genhosppsych.2015.11.005

Pouget, P. (2019). Introduction to the study of eye movements (ch. 1). In C. Klein & U. Ettinger (Eds.), Eye Movement Research: An Introduction to Its Scientific Foundations and Applications (pp. 3–10). Springer. https://doi.org/10.1007/978-3-030-20085-5_1

Proudfoot, J. G., Jenkins, J. L., Burgoon, J. K., & Nunamaker, J. F., Jr. (2016). More than meets the eye: How oculometric behaviors evolve over the course of automated deception detection interactions. Journal of Management Information Systems, 33(2), 332–360. https://doi.org/10.1080/07421222.2016.1205929

Rizzo, J. R., Hudson, T. E., Martone, J., Dai, W. W., Ihionu, O., Chaudhry, Y., Selesnick, I., Balcer, L. J., Galetta, S. L., & Rucker, J. C. (2021). How sandbag-able are concussion sideline assessments? A close look at eye movements to uncover strategies. Brain Injury, 35(4), 426–435. https://doi.org/10.1080/02699052.2021.1878554

Rogers, R. (2018). An introduction to response styles (ch. 1). In R. Rogers & S. D. Bender (Eds.), Clinical Assessment of Malingering and Deception (4 ed., pp. 3–17). Guilford Press.

Schroeder, R. W., & Martin, P. K. (2021). Forced-choice performance validity tests (ch. 3). In K. B. Boone (Ed.), Assessment of Feigned Cognitive Impairment: A Neuropsychological Perspective (2 ed., pp. 34–63). Guilford Press.

Schroeder, R. W., Boone, K. B., & Larrabee, G. J. (2021). Design methods in neuropsychological performance validity, symptom validity, and malingering research (ch. 2). In K. B. Boone (Ed.), Assessment of Feigned Cognitive Impairment: A Neuropsychological Perspective (2 ed., pp. 11–33). Guilford.

Schroeder, R. W., Martin, P. K., & Larrabee, G. J. (2022). A framework for providing clinical feedback when patients invalidate testing (ch. 4). In P. K. Martin & R. W. Schroeder (Eds.), Validity Assessment in Clinical Neuropsychological Practice: Evaluating and Managing Noncredible Performance (pp. 47–69). Guilford.

Schroeder, R. W., Martin, P. K., & Odland, A. P. (2016). Expert beliefs and practices regarding neuropsychological validity testing. The Clinical Neuropsychologist, 30(4), 515–535. https://doi.org/10.1080/13854046.2016.1177118

Seymour, T. L., Baker, C. A., & Gaunt, J. T. (2013). Combining blink, pupil, and response time measures in a concealed knowledge test. Frontiers in Psychology, 3, 1–15. https://doi.org/10.3389/fpsyg.2012.00614

Sherman, E. M. S., Tan, J. E., & Hrabok, M. (2022). Performance validity (ch. 16). In A compendium of neuropsychological tests: Fundamentals of neuropsychological assessment and test reviews for clinical practice (4 ed.). Oxford University Press.

Sherman, E. M. S., Slick, D. J., & Iverson, G. L. (2020). Multidimensional malingering criteria for neuropsychological assessment: A 20-year update of the malingered neuropsychological dysfunction criteria. Archives of Clinical Neuropsychology, 35, 735–764. https://doi.org/10.1093/arclin/acaa019

Skaramagkas, V., Giannakakis, G., Ktistakis, E., Manousos, D., Karatzanis, I., Tachos, N., Tripoliti, E. E., Marias, K., Fotiadis, D. I., & Tsiknakis, M. (2023). Review of eye tracking metrics involved in emotional and cognitive processes. IEEE Reviews in Biomedical Engineering, 16, 260–277. https://doi.org/10.1109/RBME.2021.3066072

Spitzer, R. L., Kroenke, K., Williams, J. B., & Lowe, B. (2006). A brief measure for assessing generalized anxiety disorder: The GAD-7. Archives of Internal Medicine, 166(10), 1092–1097. https://doi.org/10.1001/archinte.166.10.1092

SR Research. (2010). EyeLink 1000 user’s manual, version 1.5.2. SR Research Ltd.

Stevens, A., & Licha, C. (2019). The Word Memory Test in medicolegal assessment: A measure of effort and malingering? The Journal of Forensic Psychiatry & Psychology, 30(2), 220–249. https://doi.org/10.1080/14789949.2018.1539509

Suhr, J. A., & Bryant, A. (2021). Noncredible presentations in neuropsychological assessment of pain- and fatigue-related disorders: Clinical and research implications (ch. 18). In K. B. Boone (Ed.), Assessment of Feigned Cognitive Impairment: A Neuropsychological Perspective. Guilford.

Suhr, J. A., & Lee, T. M. C. (2023). Assessment of invalid presentations in neuropsychological evaluations (ch. 4). In G. J. Boyle, Y. Stern, D. J. Stein, B. J. Sahakian, C. J. Golden, T. M.-C. Lee, & S.-H. A. Chen (Eds.), The Sage Handbook of Clinical Neuropsychology: Clinical Neuropsychological Assessment and Diagnosis (pp. 39–60).

Sweet, J. J., Heilbronner, R. L., Morgan, J. E., Larrabee, G. J., Rohling, M. L., Boone, K. B., Kirkwood, M. W., Schroeder, R. W., Suhr, J. A., & Conference Participants. (2021). American Academy of Clinical Neuropsychology (AACN) 2021 consensus statement on validity assessment: Update of the 2009 AACN consensus conference statement on neuropsychological assessment of effort, response bias, and malingering. The Clinical Neuropsychologist, 35(6), 1053–1106. https://doi.org/10.1080/13854046.2021.1896036

Tao, D., Tan, H., Wang, H., Zhang, X., Qu, X., & Zhang, T. (2019). A systematic review of physiological measures of mental workload. International Journal of Environmental Research and Public Health, 16(15), 2716. https://doi.org/10.3390/ijerph16152716

Theeuwes, J., Kramer, A. F., Hahn, S., & Irwin, D. E. (1998). Our eyes do not always go where we want them to go: Capture of the eyes by new objects. Psychological Science, 9(5), 379–385. https://doi.org/10.1111/1467-9280.00071

Tomer, E., Lupu, T., Golan, L., Wagner, M., & Braw, Y. (2020). Eye tracking as a mean to detect feigned cognitive impairment in the Word Memory Test. Applied Neuropsychology: Adult, 27(1), 49–61. https://doi.org/10.1080/23279095.2018.1480483

Treede, R. D., Rief, W., Barke, A., Aziz, Q., Bennett, M. I., Benoliel, R., Cohen, M., Evers, S., Finnerup, N. B., First, M. B., Giamberardino, M. A., Kaasa, S., Korwisi, B., Kosek, E., Lavand’homme, P., Nicholas, M., Perrot, S., Scholz, J., Schug, S., & Wang, S. J. (2019). Chronic pain as a symptom or a disease: The IASP classification of chronic pain for the International Classification of Diseases (ICD-11). Pain, 160(1), 19–27. https://doi.org/10.1097/j.pain.0000000000001384

Tuck, N. L., Johnson, M. H., & Bean, D. J. (2019). You’d better believe it: The conceptual and practical challenges of assessing malingering in patients with chronic pain. Journal of Pain, 20(2), 133–145. https://doi.org/10.1016/j.jpain.2018.07.002

Turk, D. C., & Patel, K. V. (2022). Epidemiology and economics of chronic and recurrent pain (ch. 2). In Clinical Pain Management (pp. 6–24). https://doi.org/10.1002/9781119701170.ch2

Vrij, A., Fisher, R. P., & Blank, H. (2017). A cognitive approach to lie detection: A meta-analysis. Legal and Criminological Psychology, 22(1), 1–21. https://doi.org/10.1111/lcrp.12088

Walczyk, J. J., Griffith, D. A., Yates, R., Visconte, S. R., Simoneaux, B., & Harris, L. L. (2012). Lie detection by inducing cognitive load: Eye movements and other cues to the false answers of “witnesses” to crimes. Criminal Justice and Behavior, 39(7), 887–909. https://doi.org/10.1177/0093854812437014

Yong, R. J., Mullins, P. M., & Bhattacharyya, N. (2022). Prevalence of chronic pain among adults in the United States. Pain, 163(2), e328–e332. https://doi.org/10.1097/j.pain.0000000000002291

Young, J. C., Roper, B. L., & Arentsen, T. J. (2016). Validity testing and neuropsychology practice in the VA healthcare system: Results from recent practitioner survey. The Clinical Neuropsychologist, 30(4), 497–514. https://doi.org/10.1080/13854046.2016.1159730

Acknowledgements

The research reported in this paper was completed as part of the second author’s (Tamar Lupu) Ph.D. dissertation (Ariel University, Ariel, Israel). Shoval Ben-Shushan, Tair Cohen, and Niva Laufer aided in performing the experimental procedures as part of the psychology undergraduate program at Ariel University (Ariel, Israel). They were supervised by Lior Golan, a research assistant at the corresponding author's laboratory. We thank them for their invaluable contribution to the study. We thank the late Dr. Paul Green for supporting the project and helpful advice throughout the years.

Funding

Open access funding provided by Ariel University. This research received funding from Loewenstein Hospital (grant: NCT03201887). This research received no additional grants from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Contributions

All authors contributed to the study’s conception and design. Yoram Braw acquired funding. Tamar Lupu prepared material and collected data. Tomer Elbaum aided in eye-tracker integration and data extraction. Tamar Lupu, Tomer Elbaum, and Yoram Braw performed the analysis. Yoram Braw wrote the first draft of the manuscript submitted for publication, and all authors commented on previous versions of the manuscript.

Ethics declarations

Ethics Approval

This study was performed in line with the principles of the Declaration of Helsinki. Approval was granted by the Loewenstein Hospital's Institutional Review Board (IRB) committee (June/02/2016; 0024–15-LOE).

Consent to Participate

All participants signed a written informed consent form before entering the study.

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Braw, Y.C., Elbaum, T., Lupu, T. et al. Chronic Pain: Utility of an Eye-Tracker Integrated Stand-alone Performance Validity Test. Psychol. Inj. and Law 17, 139–151 (2024). https://doi.org/10.1007/s12207-024-09507-6

DOI: https://doi.org/10.1007/s12207-024-09507-6