Abstract

The treatment of gait disorders and impairments is a major challenge in physical therapy. The broad and rapid development of low-cost, miniaturized, and wireless sensing technologies supports the development of embedded and unobtrusive systems for robust gait-related data acquisition and analysis. In addition to serving as portable and low-cost diagnostic tools, such systems can also be used as feedback devices for gait retraining. The approach described in this article applies movement-based sonification of gait to foster motor learning. This article presents and evaluates a prototype of a pair of instrumented insoles for real-time sonification of gait (SONIGait) and assesses its immediate effects on spatio-temporal gait parameters. For this purpose, a convenience sample of six healthy males (age \(35\pm 5~\hbox {years}\), height \(178\pm 4~\hbox {cm}\), mass \(78\pm 12~\hbox {kg}\)) and six healthy females (age \(38\pm 7~\hbox {years}\), height \(166\pm 5~\hbox {cm}\), mass \(63\pm 8~\hbox {kg}\)) was recruited. They walked at a self-selected walking speed across a force distribution measurement system (FDM) to quantify spatio-temporal gait parameters during walking without and with five different types of sonification. The primary results from this pilot study revealed that participants exhibited decreased cadence (p < 0.01) and differences in gait velocity (p < 0.05) when walking with sonification. The results suggest that sonification has an effect on gait parameters; however, further investigation and development are needed to understand its role as a tool for gait rehabilitation.

1 Introduction

Within the United States alone, an estimated one-third of the elderly population is clinically diagnosed with some form of gait disorder [34]. Disorders and impairments of healthy gait patterns not only impede an individual’s mobility and independence, but also have an impact on other aspects of life, as walking forms the basis for several activities of daily living. Therefore, the diagnosis and treatment of gait disorders are major concerns for physical therapists. Currently, several methods exist for evaluating and diagnosing gait disorders, ranging from simple visual inspection by physical therapists to advanced motion capture systems. Three-dimensional motion capture systems combined with force plates are typically used to accurately measure the kinematic and kinetic aspects of gait. However, the high accuracy afforded by these systems is accompanied by several limiting factors such as large monetary and infrastructural costs. Additionally, these systems are only capable of functioning within a laboratory setting, which limits the number of footsteps that can be captured. Thus, the resulting assessment of participants’ gait patterns may be an insufficient representation of their typical locomotion. Due to the rapid and broad development of low-cost, miniaturized, and wireless sensing technologies, wearable mobile platforms for gait analysis have emerged in the field of clinical rehabilitation and tele-monitoring. These advancements allow for the development of embedded and unobtrusive systems for robust gait-related data acquisition.

The development of these wearable mobile platforms has the potential to provide diagnostic tools. In addition, such platforms may also provide portable feedback during gait retraining to foster motor learning. The approach described within this article involves the use of such a system for real-time auditory display (sonification) of gait. This system is based on unobtrusive, wireless, instrumented insole technology for plantar force distribution acquisition. Specifically, sonification is a means of providing real-time auditory feedback to the participant that serves to augment neural pathways involved in motor learning. Real-time provision of augmented feedback to participants has been a traditional method of fostering motor learning for rehabilitation in clinical settings [32]. Furthermore, it has typically been described as an effective means of guiding the learner towards the correct motor response, minimizing movement execution errors, and reinforcing consistent behavior performance. However, real-time augmented feedback has predominantly been provided visually and remains relatively underexamined for sonification methods. Effenberg [16] suggests that movement sonification can be used to enhance human perception in the field of motor control and motor learning. A possible explanation for Effenberg’s view may be the advantage of multisensory integration over solely unisensory impressions of a performed movement [33]. Further explanations may be the high accuracy of the human auditory system in detecting changes in sound as well as its faster processing time compared to the visual system [26].

2 Related work

Several approaches have been published in the literature proposing sonification as a promising approach for training and rehabilitation purposes. For example, sonification applications have been used in rowing [17, 31], handwriting [13] and speed skating [20]. Additionally, a small number of studies have examined sonification for gait retraining in various patient populations [5, 24, 28–30]. Although these studies have generally reported positive results, they are limited in several aspects. For instance, Malucci et al. [24] and Rodger et al. [30] synthesized sounds by using spatial and temporal information of specific biomechanical gait parameters, but based their data capturing methods on expensive and large laboratory equipment, such as three-dimensional motion analysis systems, which restricts their systems to laboratory settings. In contrast, both Redd and Bamberg [28] as well as Riskowski et al. [29] developed devices that are low cost and portable (such as instrumented insoles or knee braces) but only generate basic auditory cues, such as error identification with distinct sounds. Similar shortcomings were exhibited by the system developed by Baram and Miller [5]. Their apparatus is a small, portable, ankle-mounted device for people with multiple sclerosis that generates a ticking sound each time the user takes a step. By highlighting temporal aspects of an individual’s walking pattern, the device was intended to make the participant attend to possible asymmetries and aberrations in gait fluency. This procedure was meant to help harmonize a non-rhythmic walking pattern. The advantage of this system clearly lies in its practicality and affordability for the broader population.

However, the representation of a person’s temporal step frequency alone may be insufficient for advances in gait rehabilitation. Considering the above-mentioned approaches and limitations in gait sonification, there is a demand for a system that is unobtrusive, practical, and affordable, and that provides the user with real-time information through advanced sonification procedures incorporating more complex information such as temporal aspects, weight distribution or kinetics. In addition, the success of such systems clearly depends on the design of the auditory display and its underlying sonification methods.

3 Objective and approach

For an everyday application in physical therapy, the design of the generated sounds has to satisfy two requirements. First, to support the therapeutic process, sounds should be positively perceived by the patients. The pleasantness of sounds is an important aspect to consider when providing auditory feedback. In two separate literature reviews, Avanzini et al. [4] and Cameirao et al. [9] outlined that sounds with positive connotations serve as learned reward functions that reinforce the intended effects on outcome parameters, reduce ratings of perceived exertion, and help attain optimal arousal levels during physical activity. The authors also indicate that opposite effects are plausible outcomes if participants perceive the provided sounds as monotonous or unpleasant. Second, the auditory displays should give patients an accurate representation of gait to foster motor learning.

Therefore, one of the aims of this research was to identify sonification types that provide an appropriate balance between pleasantness and effectiveness to patients. Based on these considerations the objective of this research was the design and manufacturing of a real-time, portable, and low-cost gait sonification application for use by patients and clinicians within and outside of a traditional clinical environment. The auditory display should hereby serve as a support for therapeutic interventions and self-directed learning at home.

This article aims to (1) present the prototype development of a pair of wireless insoles instrumented with force sensors for real-time data transmission to, and acquisition on, a mobile client (SONIGait); (2) present the development and evaluation of a set of sonification modules for audible feedback on a mobile client in combination with the SONIGait device; and (3) present data from a pilot study addressing the question of whether sonification causes any changes in spatio-temporal gait parameters, and thus alters normal gait patterns of healthy participants walking at their self-selected walking speed. We hypothesized that sonification would affect participants’ gait parameters.

4 Design of the SONIGait device

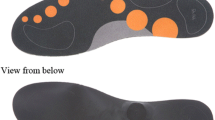

Priorities for the design and manufacturing of the SONIGait device (Fig. 1) were: (1) capturing force data during the entire stance phase for both feet simultaneously with sufficient sampling rate, (2) providing real-time acoustic feedback, (3) low-cost, (4) low-energy, and (5) unobtrusive. Based on these requirements a prototype of a force-sensing insole platform with a modular and generalizable approach was developed.

Accordingly, the developed device is (1) capable of capturing plantar pressure distribution data from two commercial shoe insoles, each equipped with seven force sensors. In comparison to other devices described in the literature, this rather large number of sensors allows for a more precise representation of the plantar pressure during ankle-foot roll-over (the motion between heel strike and toe-off). The captured sensor data are sampled at 100 Hz and transmitted wirelessly via Bluetooth LE to a mobile client running Android 4.3 or greater. Tests showed that the overall latency (2) of the SONIGait device is less than 140 ms. Therefore, the SONIGait device fulfills the basic requirements for the real-time sonification of gait data. Actual costs (3) of less than $500 for the entire device (without the mobile client) are in an acceptable range for affordable clinical devices. Based on a microcontroller and a wireless transmission protocol with minimal power consumption (4), the SONIGait device has an actual current draw of about 100 mA per controller. The 500 mAh LiPo batteries allow for continuous operation of approximately five hours, which is a basic requirement for the intended application of the device in therapeutic settings as well as in self-directed home-based training sessions. At the present stage of development, the microcontroller unit and the battery are not yet directly embedded into the instep of the insole. Future developments will focus on the refinement and miniaturization of both modules (5). In the current model, these components are stored within a small ankle- or dorsum-mounted box (7.5 \(\times \) 5 \(\times \) 3 cm) on each leg. An outline of the SONIGait device and its system architecture is depicted in Fig. 2.
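The runtime figure quoted above follows from simple arithmetic on the battery capacity and the current draw. The following minimal sketch reproduces that estimate; the function name `runtime_hours` is hypothetical, and the calculation assumes a constant draw and ignores converter losses and discharge cut-off, so it is an idealized upper bound rather than a measured value.

```python
def runtime_hours(capacity_mah: float, current_ma: float) -> float:
    """Idealized runtime: battery capacity divided by a constant current draw."""
    if current_ma <= 0:
        raise ValueError("current draw must be positive")
    return capacity_mah / current_ma


# Figures quoted in the text: 500 mAh battery, about 100 mA per controller.
hours = runtime_hours(500.0, 100.0)
print(f"Estimated continuous operation: {hours:.1f} h")
```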

4.1 Embedded sensors

The SONIGait device has two instrumented insoles, each with its own processing and data transmission unit. The instrumented insoles for both the left and right foot are equipped with seven circular (diameter 9.53 mm), ultra-thin (0.2 mm) and flexible force sensors with a force range of 0–445 N (Tekscan, FlexiForce A301) to sample plantar force distribution during walking. These force sensors are located at the heel area and continue along the lateral part of the insole to the forefoot and metatarsophalangeal joints. This sensor arrangement allows for the calculation of gait timing parameters as well as the approximation of the vertical ground reaction force and plantar force distribution. Additionally, a SparkFun IMU (SparkFun Electronics, Colorado, USA) combining an ADXL345 3-axis accelerometer (Analog Devices) and an ITG3200 3-axis gyroscope (InvenSense Inc.) allows for additional data capture. Within this article, only sonification applications based on the force sensor data are presented.

4.2 Microcontroller and wireless connection

The data from the embedded force sensors are sampled by a SparkFun Arduino Fio v3 board (ATmega32U4, 8-MHz processor) at a 10-bit resolution. The SONIGait device is powered by a 3.7 V lithium-ion battery supply. Through the provided XBee socket for RF communication, the Arduino board is connected to an XBee-compatible module (BLEBee) based on the Bluegiga BLE112 Bluetooth LE chip. The provided firmware of the BLEBee module was slightly modified to increase the data packet size from 1 to 20 bytes, which allowed an increase in sampling rate from 40 to 100 Hz for the two simultaneous Bluetooth LE connections.
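To illustrate why a 20-byte packet is large enough to carry one complete sample frame, the sketch below packs seven 10-bit readings into a single payload. The byte layout (seven unsigned 16-bit little-endian integers) and the names `pack_frame` and `unpack_frame` are assumptions made for illustration; the article does not describe the actual packet format used by the firmware.

```python
import struct

NUM_SENSORS = 7         # force sensors per insole (from the text)
MAX_PAYLOAD_BYTES = 20  # packet size after the firmware change (from the text)
ADC_MAX = 1023          # largest value of a 10-bit reading


def pack_frame(readings):
    """Pack seven 10-bit readings as uint16 little-endian (hypothetical layout)."""
    if len(readings) != NUM_SENSORS:
        raise ValueError("expected one reading per sensor")
    if any(not 0 <= r <= ADC_MAX for r in readings):
        raise ValueError("reading outside the 10-bit range")
    payload = struct.pack("<7H", *readings)
    if len(payload) > MAX_PAYLOAD_BYTES:
        raise ValueError("frame does not fit into one packet")
    return payload


def unpack_frame(payload):
    """Inverse of pack_frame: recover the seven readings."""
    return list(struct.unpack("<7H", payload))


frame = pack_frame([0, 30, 500, 1023, 1, 2, 3])
print(len(frame), unpack_frame(frame))
```

Under this assumed layout one frame occupies 14 bytes, so a full frame fits in a single 20-byte packet, whereas a 1-byte packet would require many transmissions per frame.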

To estimate the total latency of the system, two series of tests were performed. The latency of the Bluetooth LE transmission was measured by assessing the round-trip time of a single data packet. Specifically, a time stamp was sent from the mobile device to the microcontroller board over the Bluetooth connection and then returned to the mobile device. Once the data packet was returned, a second time stamp was taken. The latency was measured as the interval between these two events. Based on 15 measurements, the average round-trip latency was 90.3 ± 24.6 ms. Half of this value (approximately 45 ms) was taken as the one-way latency. However, the round-trip measurement does not include the latency of the audio generation on the mobile device. Therefore, a Tektronix TDS 2002 60 MHz oscilloscope was used to measure the overall latency of the analog-to-digital conversion of the force signals, the Bluetooth transmission of the data, and the audio generation on the mobile device. The analog voltage after the amplifying circuit (input) and a generated 5 kHz sine signal at the 3.5 mm jack (output) were each connected to one channel of the oscilloscope for comparison. The resulting overall latency was 139.5 ± 11.3 ms (averaged over ten measurements). Analog components such as pressure sensors, amplifying circuits and the wireless RF headphones were excluded from the analysis, as they have a negligible impact on the latency.
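The round-trip evaluation described above can be summarized in a few lines. The sketch below computes the mean, the sample standard deviation, and the one-way estimate (half the mean round-trip time); the function name `summarize_round_trips` is hypothetical and the input values are illustrative placeholders, not the measurements from this study.

```python
import statistics


def summarize_round_trips(rtt_ms):
    """Return (mean, sample SD, one-way estimate) for round-trip times in ms."""
    mean = statistics.mean(rtt_ms)
    sd = statistics.stdev(rtt_ms)  # sample standard deviation (n - 1)
    one_way = mean / 2.0           # assumes symmetric up- and downlink delay
    return mean, sd, one_way


# Illustrative values only; these are NOT the study's measurements.
example_rtt_ms = [80.0, 100.0, 90.0]
mean_ms, sd_ms, one_way_ms = summarize_round_trips(example_rtt_ms)
print(f"round trip {mean_ms:.1f} ± {sd_ms:.1f} ms, one way about {one_way_ms:.1f} ms")
```

Halving the round-trip time presumes that both directions of the link are equally fast, which is an approximation for an asymmetric protocol such as Bluetooth LE.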

4.3 Mobile device and audio generation

SONIGait is designed as a portable system. Thus data processing and audio generation for real-time sonification of gait were implemented in a mobile application for Android 4.3 devices or above. A background service handles two simultaneous Bluetooth LE connections and offers a constant data stream to the PureData (PD) audio generation engine.

PD is a visual programming language mainly for digital signal processing and audio synthesis. It allows sound designers to rapidly develop sound modules. These modules can be integrated into other native programming environments using an embeddable audio synthesis library called libPD. SONIGait generates sound on the Android platform using a limited standard set of digital signal processing (DSP) objects from the PD 0.46.7 “Vanilla” distribution. Whereas complex DSP chains such as convolution reverb are currently too computationally expensive for mobile devices, simple synthesis algorithms can generate audio in real time. PD accepts arbitrary numeric data as input from Android, synthesizes sound, and passes the generated audio data to the Android low-level audio application programming interface for immediate playback.

5 Sonification of force sensor data

Appropriate sound design of footsteps has been a challenge for numerous applications in fields related to entertainment, sports training, and medical rehabilitation, the latter using sounds of footsteps [22, 30] to treat balance and gait disorders as well as motor deficiencies. In general, the sound design of these approaches can be divided into sample-based implementations using recordings of real-life footsteps and synthesized sounds. The latter are further classified into models aiming to simulate real-world walking sounds on different ground textures [11, 12, 18, 19, 30] and abstract sounds designed to provide additional information about gait characteristics to the recipient [21, 22]. Bresin et al. [7, 8] analyzed the impact of acoustically augmented footsteps on walkers and investigated to what extent their emotional state was represented by the audio recordings of their walking movements. The researchers suggested that there were perceivable differences among gaits and that, in a closed-loop interactive gait sonification, the sound character of the augmented footsteps influenced walking behavior.

One further conceptual distinction concerns the intended purpose of closed-loop sonifications in the context of sports training and medical rehabilitation. In order to enhance the periodicity of footsteps, Godbout and Boyd [20] used phase-locked loops to synchronize generated sound events to walking and running. Additionally, Rodger et al. [30] used computationally generated rhythmic sound patterns to support walking actions of patients with Parkinson’s disease. However, synchronization of walking periodicity is not an issue at the present stage of the SONIGait project. The presented approach therefore focuses on the immediate acoustic mapping of plantar pressure (measured by seven force sensors per foot) during the ankle-foot roll-over motion between heel strike and toe-off during walking.

5.1 Parameter mapping of force sensor data

Existing studies using force sensors for footstep sonification employed between one and three force sensors [8, 21, 22] per foot. Due to the limited number of measured force points, this approach seemed more suitable for triggering sound events than for directly mapping the plantar force distribution of the feet (during ankle-foot roll-over) to a sonification model.

To get a more precise representation of the ankle-foot roll-over motion, seven sensors were implemented and distributed across the insole to provide sufficient data for comprehensible sonifications (see Figs. 1, 3). Measured force values of each sensor are mapped to different notes. Specifically, these values are converted to 10-bit integers and then passed to a corresponding sound generator used for sonification. These data are mapped to amplitude values in all sonification models (explained in further detail in Sect. 5.2). In some models they are additionally mapped to frequency or modulation depth. The audio outputs of the seven sound generators are then mixed into one audio channel and delivered to the corresponding ear via stereo headphones, thus allowing participants to differentiate between their left and right ankle-foot roll-over motions. Force sensor data of the left and right insoles are always sonified with identical parameter settings. For the presented approach, no additional adjustments (e.g. by adaptive calibration) of the incoming values with respect to sensor positioning and the mass of the test persons were performed. In order to optimize the range of the 10-bit sampled data and to avoid unnecessary signal noise, values between 30 and 500 were mapped linearly to the minimum and maximum amplitude.
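The linear mapping of raw sensor values to amplitude can be sketched as follows. The bounds 30 and 500 come from the text; the normalized amplitude range of 0.0 to 1.0, the clamping of values outside the bounds, and the function name `force_to_amplitude` are assumptions made for this illustration.

```python
def force_to_amplitude(raw: int, lower: int = 30, upper: int = 500) -> float:
    """Map a 10-bit sensor value linearly to an amplitude in [0.0, 1.0].

    Values at or below `lower` are treated as noise and give silence;
    values at or above `upper` are clamped to full amplitude (assumption).
    """
    if raw <= lower:
        return 0.0
    if raw >= upper:
        return 1.0
    return (raw - lower) / (upper - lower)


for value in (0, 30, 265, 500, 1023):
    print(value, force_to_amplitude(value))
```

With these bounds, the midpoint of the usable range (265) maps to half amplitude, and the lower threshold doubles as a noise gate.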

5.2 Aspects of sound design

A comparable universal sound design approach by Grosshauser et al. [21] indicates that elementary sound generation using sine and sawtooth tones outperforms more advanced synthesis methods such as granular synthesis and amplitude modulation. Therefore, we decided to keep the sound design as comprehensible and intuitive as possible. For the two sets of sound generators (left and right insole), five arbitrarily combinable synthesis modules (SYN1-5) were developed. For a detailed technical description see Online Resource 1. All synthesis modules have in common that the amplitude of the outgoing sound signal is zero as long as no pressure above the mentioned threshold is applied to the corresponding sensor. Pressure values rising above the threshold result in proportional signal amplitudes.

- SYN1: Subtractive synthesis using band-pass filtered noise. For each sound generator representing one of the seven force sensors, white noise is filtered by a formant filter bank of six band-pass filters, providing a characteristic sound for each of the seven sensors of the insole. The parameters of the heel sensor are adapted from the formants of a wooden door panel [19]. The fundamental and formant frequencies of the generators consecutively activated during the ankle-foot roll-over are set in increasing order. The character of the generated sound resembles walking in snow. In contrast to the following synthesis approaches, SYN1 is meant to provide a rather realistic sound experience during an ankle-foot roll-over.

- SYN2: Wavetable synthesis using a sinusoidal waveform moderately enriched by two harmonics, thus slightly approximating the shape of a sawtooth wave. The attribution of individual pitches to the seven sound generators facilitates the generation of harmonic and melodic patterns.

- SYN3: FM synthesis with statically defined carrier and modulator frequencies for each sound generator. The modulation index [10] is controlled by normalized incoming force sensor data.

- SYN4: A sinusoidal oscillator with its frequency controlled by incoming force data (force-dependent frequency). Thus, unlike in the modules described above, the sound generators are not characterized by their fundamental frequency.

- SYN5: Implementation of a simple Karplus-Strong algorithm (Fig. 4). An “impulse” (white noise) is triggered when the force begins to decrease (change of sign of the derivative). The delay time of the damped feedback loop, which determines the frequency of the sound, relates to the force maximum.

Fig. 4 Implementation of the Karplus-Strong algorithm [35]
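The plucked-string principle behind SYN5 can be sketched in a few lines: a delay line is filled with a white-noise burst and then repeatedly passed through a damped averaging filter, so that the delay length sets the pitch. This is a generic textbook version of the algorithm written in Python, not the PureData patch used in SONIGait; the function name `karplus_strong`, the damping factor, and the sample rate are assumptions. How the force maximum is translated into a delay time is not specified in the text, so the frequency is simply passed in as a parameter.

```python
import random


def karplus_strong(frequency_hz, duration_s, sample_rate=44100,
                   damping=0.996, seed=0):
    """Generate a decaying plucked-string tone as a list of float samples."""
    rng = random.Random(seed)
    # The delay-line length determines the perceived pitch.
    period = max(2, round(sample_rate / frequency_hz))
    # The "impulse": one period of white noise.
    delay_line = [rng.uniform(-1.0, 1.0) for _ in range(period)]
    samples = []
    for i in range(round(duration_s * sample_rate)):
        idx = i % period
        nxt = (idx + 1) % period
        samples.append(delay_line[idx])
        # Damped two-point average acts as the low-pass feedback filter.
        delay_line[idx] = damping * 0.5 * (delay_line[idx] + delay_line[nxt])
    return samples


tone = karplus_strong(220.0, 0.5)
print(len(tone), max(abs(s) for s in tone))
```

In an event-driven setting such as the one described for SYN5, a call like this would be issued once per detected force peak rather than continuously.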

Unlike SYN1 and SYN2, where incoming data are mapped only to the amplitude of the generated sound signals, in SYN3, SYN4 and SYN5 the measured force values are additionally mapped to a second sound parameter. Because the modulation index of FM synthesis (SYN3) controls the amplitudes of the induced sidebands, the spectral complexity of the generated sounds increases with the force applied. By providing different qualities of sound we intended to increase the auditory awareness of the applied force. Additionally mapping incoming force values to the frequency of the generated sound, as in SYN4 and SYN5, is an alternative approach in this regard. Only in the Karplus-Strong implementation in SYN5 is sound triggered by an event (reaching the maximum force of the sensor during one ankle-foot roll-over); it is therefore not an immediate transformation of incoming data. The intention behind this implementation is to further increase the awareness of the maximum pressure values applied to each area of the insole. To smooth the audio output, a dynamic range compressor (Footnote 1) and an optional reverb are implemented in the mixing section of the sonification software interface.
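The role of the modulation index in FM synthesis can be made concrete with a short sketch. The carrier phase is perturbed by a sinusoidal modulator whose depth is the index; an index of zero yields a pure sine, and larger values add sidebands. The function names `fm_tone` and `force_to_index`, the maximum index of 5.0, and the frequencies used are illustrative assumptions, not the parameters of the SONIGait presets.

```python
import math


def fm_tone(carrier_hz, modulator_hz, index, duration_s, sample_rate=44100):
    """Simple two-operator FM: sin(2*pi*fc*t + index * sin(2*pi*fm*t))."""
    samples = []
    for i in range(round(duration_s * sample_rate)):
        t = i / sample_rate
        phase = (2.0 * math.pi * carrier_hz * t
                 + index * math.sin(2.0 * math.pi * modulator_hz * t))
        samples.append(math.sin(phase))
    return samples


def force_to_index(normalized_force, max_index=5.0):
    """Scale a normalized force (0..1) to a modulation index (assumed range)."""
    return max(0.0, min(1.0, normalized_force)) * max_index


soft_step = fm_tone(440.0, 110.0, force_to_index(0.1), 0.01)
hard_step = fm_tone(440.0, 110.0, force_to_index(0.9), 0.01)
print(len(soft_step), len(hard_step))
```

Higher force therefore produces a brighter, spectrally richer tone at the same carrier frequency, which matches the intent described above.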

Based on these five sound synthesis modules, 18 presets were defined. The presets differ in various filter and frequency settings, in pitch relation (e.g. building up fifths, chords, and melodies), in the amount of reverb, and in whether input data averaging is applied. For detailed technical specifications see Online Resource 1. Five presets (SONI1-5) were then pre-selected by a group of two physical therapists and two movement scientists with experience in gait analysis and rehabilitation. This four-person group tested the device, including all sonification types, by wearing the insoles and walking continuously as patients would. They then jointly selected, by majority consensus, the five sonification types they believed to be most suitable for use in therapeutic settings. These were then used for the pilot study. Sample audio files for SONI1-5 are given in Online Resource 2. The five selected presets were as follows:

- SONI1: wavetable synthesis with a tone sequence based on the opening tones from Beethoven’s “Für Elise”

- SONI2: frequency modulation

- SONI3: a second wavetable synthesis with a tone sequence based on a boogie riff

- SONI4: subtractive synthesis

- SONI5: sine oscillator with a data-driven frequency

What SONI1-5 have in common is that the sensor data are processed using a moving average filter and that reverb is applied. Data smoothing and reverb tend to shift the character of the generated sounds toward a holistic rather than a detailed analytical impression. This preselection suggests that the pleasantness of the sounds was weighted more heavily as a selection criterion than a purely analytical display. As for the selection of synthesis modules, the chosen presets comprise two with melodic patterns (Footnote 2) generated by wavetable synthesis, as well as one each based on FM synthesis (Footnote 3), subtractive synthesis, and force-dependent frequency. Here, a tendency towards “musical” rather than “realistic” sound generation was exhibited. Interestingly, this is in slight contrast to the study of Maculewicz [23], which analyzed different forms of temporal feedback (a 1-kHz sinusoid and synthetic footstep sounds on wood and on gravel) in rhythmic walking interactions and found that participants favored the more natural synthetic footstep sounds over the sinusoidal tone.
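The moving average filter mentioned above can be sketched as a small streaming class that smooths each sensor channel before it reaches the sound generator. The window length and the class name `MovingAverage` are assumptions; the article does not state the window size used in the presets.

```python
from collections import deque


class MovingAverage:
    """Streaming moving average over the most recent `window` samples."""

    def __init__(self, window: int):
        if window < 1:
            raise ValueError("window must be at least 1")
        self._values = deque(maxlen=window)

    def update(self, value: float) -> float:
        """Add a sample and return the mean of the samples currently held."""
        self._values.append(value)
        return sum(self._values) / len(self._values)


smoother = MovingAverage(window=3)
smoothed = [smoother.update(v) for v in (3, 6, 9, 12)]
print(smoothed)
```

A longer window gives a smoother but more delayed response, which is a relevant trade-off for a system that already has a transmission latency budget.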

Combinations of sound generation modules in one preset have only been tested during the development phase, although some of the combinations appeared to provide promising results. Presets for left and right insole sound generation were identical. Due to restrictions of the graphical interface of the prototyped Android application, presets cannot be adjusted during runtime. However, input data and generated audio are saved as text and audio files, respectively. The recorded data files can be played back at variable speed within the sonification software for further development and evaluation.

6 Methods

6.1 Participants

To examine the immediate effects of sonification on gait, a convenience sample of six healthy males (age 35 ± 5 years, height 178 ± 4 cm, mass 78 ± 12 kg) and six healthy females (age 38 ± 7 years, height 166 ± 5 cm, mass 63 ± 8 kg) was recruited from the St. Pölten University of Applied Sciences. Participants were excluded if they had any orthopedic, neurological, psychological or cognitive constraints affecting their gait. Additionally, participants were excluded if they were suffering from a hearing deficiency. Participants were instructed in detail about the study and denoted their voluntary participation by signing a university approved informed consent form.

6.2 Study protocol

To test whether real-time sonification of gait affects the gait pattern, the following experimental procedure was performed. Each participant was initially introduced to the SONIGait device and its purpose. Once the initial setup was completed, participants walked on an 8-meter straight walkway for a total of seven trials. Participants were instructed to walk at a self-selected speed and to keep walking continuously throughout the seven trials. This procedure was repeated a total of six times, five times using one of the sonification types (SONI1-5) described above and once without sonification. The order of these six conditions was randomly assigned using computer-generated random numbers.
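A randomized condition order of this kind can be generated in a few lines. The sketch below is only an illustration of the idea; the function name `randomized_order`, the use of a per-participant seed, and the shuffle-based approach are assumptions, since the article does not describe the tool that was actually used.

```python
import random

CONDITIONS = ["no sonification", "SONI1", "SONI2", "SONI3", "SONI4", "SONI5"]


def randomized_order(seed: int):
    """Return the six conditions in a reproducible random order."""
    rng = random.Random(seed)  # seeding makes the order reproducible
    order = list(CONDITIONS)
    rng.shuffle(order)
    return order


# Hypothetical use: one seed per participant.
for participant_id in (1, 2, 3):
    print(participant_id, randomized_order(participant_id))
```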

6.3 Instrumentation

Spatio-temporal gait parameters (cadence, gait velocity, step length) of each participant were captured during the last five trials of each walking condition (without sonification or with one of the five sonification types) using two synchronized FDM 1.5 systems (ZEBRIS, Germany). Each system is constructed as a 1.5 \(\times \) 0.5 m electronic mat with 11,264 capacitive sensors embedded into its surface. These two measurement systems are fully integrated into one walkway and fitted flush with the surface, forming a measuring area of 3 \(\times \) 0.5 m. When participants walked across the surface of the measurement system, forces exerted by the feet were recorded by the sensors at 100 Hz, allowing the mapping of force distribution and timing at a high resolution. The recorded data were transferred to and stored on a stationary PC via USB 2.0 for further analysis. The WIN FDM (v2.21) software was used to extract spatio-temporal parameters of each trial and participant. For each participant and sonification type, a minimum of 15 steps were captured and used to calculate gait velocity (m s\(^{-1}\)), step length (cm) and cadence (steps/minute) for the dominant leg. In addition, the coefficient of variation (COV = \(\sigma /\bar{x}\)) was used to analyze the variability of the spatio-temporal parameters. The dominant leg was determined by asking each participant to kick a soccer ball placed centrally in front of them [6].
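The coefficient of variation defined above is the standard deviation divided by the mean. A minimal sketch follows; the function name `coefficient_of_variation` is hypothetical, the use of the sample standard deviation (n - 1) is an assumption because the article does not state which estimator was used, and the step lengths are illustrative values rather than study data.

```python
import statistics


def coefficient_of_variation(values):
    """COV = standard deviation / mean (sample SD assumed)."""
    mean = statistics.mean(values)
    if mean == 0:
        raise ValueError("COV is undefined for a zero mean")
    return statistics.stdev(values) / mean


# Illustrative step lengths in cm; NOT data from the study.
step_lengths_cm = [60.0, 62.0, 64.0]
cov = coefficient_of_variation(step_lengths_cm)
print(f"COV = {cov:.4f} ({cov * 100:.2f} %)")
```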

6.4 Questionnaire

Participants were immediately surveyed after each walking situation with one of the sonification types (SONI1-5) by a questionnaire comprising a total of 10 questions (Q1-Q10). Questions 1-8 were ranked on a 4-point Likert Scale: not at all (1), rudimentary (2), good (3), excellent (4). Questions 9 and 10 were ranked on a 5-point Likert Scale: very pleasant (1), pleasant (2), neutral (3), unpleasant (4), very unpleasant (5). For details regarding each specific question see Table 1. The purpose of the survey was to assess how the participants perceived each sonification variation.

6.5 Statistical analysis

Statistical analyses were conducted using IBM SPSS Statistics 22 (Somers, NY, USA). The dependent variables were the spatio-temporal gait parameters (cadence, gait velocity, step length) and their corresponding coefficients of variation. Compliance with the required statistical assumptions was tested using Mauchly’s test of sphericity and the Shapiro–Wilk test. The level of significance was set a priori at p = 0.05 for all analyses. To analyze the spatio-temporal parameters and their variability (COV), a one-way repeated measures ANOVA was performed for each dependent variable, with sonification type as a within-subject factor with six levels (no sonification and SONI1-5). Partial eta-squared \((\eta _p^2)\) was used as the corresponding effect size. If differences were present, post-hoc analyses were performed using Bonferroni-adjusted p values, i.e. each p value was multiplied by the number of possible comparisons (six levels resulting in 15 possible comparisons). A Friedman test was used to assess statistical differences in the questionnaire responses, with sonification type as the within-subjects factor with five levels (SONI1-5). A Wilcoxon signed-rank test was used if main effects were observed. The resulting p values were Bonferroni adjusted based on 10 possible comparisons for five levels.
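The number of pairwise comparisons and the Bonferroni adjustment described above can be verified with a short calculation. The sketch below is independent of SPSS; the function name `bonferroni_adjust` and the example p values are illustrative, and capping adjusted values at 1.0 is a common convention assumed here.

```python
from math import comb


def bonferroni_adjust(p_values, n_comparisons):
    """Multiply each p value by the number of comparisons, capped at 1.0."""
    return [min(1.0, p * n_comparisons) for p in p_values]


# Pairwise comparisons among k levels: k choose 2.
print(comb(6, 2))  # six gait conditions
print(comb(5, 2))  # five sonification types in the questionnaire

# Illustrative raw p values; NOT results from the study.
print(bonferroni_adjust([0.001, 0.2], comb(6, 2)))
```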

7 Results of the pilot study

7.1 Spatio-temporal parameters

Mauchly’s test indicated that the assumption of sphericity was not violated in the performed ANOVAs. No significant main effects were observed in the ANOVAs for the variability of the spatio-temporal parameters across the different sonification types and walking without sonification. However, the ANOVAs did reveal significant main effects (Fig. 5) of sonification type (including no sonification) on cadence [F(5,55) = 9.514, p < 0.001, \(\eta _p^2\) = 0.464] and gait velocity [F(5,55) = 5.195, p = 0.001, \(\eta _p^2\) = 0.321]. Post-hoc analyses revealed that participants had a significantly decreased cadence (p < 0.01) when walking with sonification (regardless of the type) compared to walking without sonification. Walking velocity was significantly higher (p = 0.04) when walking without sonification compared to SONI1. No other main effects were observed.
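The reported effect sizes can be cross-checked against the F statistics using the standard identity for partial eta-squared, \(\eta _p^2 = F \cdot df_{effect} / (F \cdot df_{effect} + df_{error})\). The sketch below applies it to the values reported above; the function name `partial_eta_squared` is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta-squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F statistics reported in the text, both with (5, 55) degrees of freedom.
cadence_effect = partial_eta_squared(9.514, 5, 55)
velocity_effect = partial_eta_squared(5.195, 5, 55)
print(round(cadence_effect, 3), round(velocity_effect, 3))
```

Both values agree with the effect sizes reported in the text after rounding to three decimals.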

7.2 Questionnaire

Questionnaire descriptive statistics are presented in Table 1. Results from the Shapiro–Wilk test revealed that data from the questionnaire violated the assumption of normality. Therefore, a non-parametric Friedman test was used to statistically analyze differences between the sonification types for each question. A main effect was found for Question 3 (“if the ankle-foot roll-over motion produced a comprehensible sound during walking”), \(\chi ^2\)(4, N = 12) = 11.27, p = 0.02. However, post-hoc tests with a Bonferroni adjustment revealed no significant differences between sonifications (p > 0.05).
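
The reported p value for Question 3 can be cross-checked from the test statistic alone. The sketch below assumes that the Friedman statistic is referred to a chi-square distribution with 4 degrees of freedom and uses the closed-form upper tail that exists for even degrees of freedom; the function name is hypothetical.

```python
from math import exp, factorial


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square variable for even df.

    For df = 2k: P(X > x) = exp(-x/2) * sum_{i=0}^{k-1} (x/2)^i / i!
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form requires a positive even df")
    half = x / 2.0
    return exp(-half) * sum(half**i / factorial(i) for i in range(df // 2))


p_q3 = chi2_sf_even_df(11.27, 4)
print(round(p_q3, 2))
```

The result rounds to the reported p = 0.02.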

8 Discussion

8.1 Evaluation of the questionnaire

Analysis of Q1–4 revealed that participants perceived the sonification device as an effective means of audibly representing the following parameters, as indicated by the majority of sonifications receiving rankings of good: their personal gait patterns, matching their left and right feet, providing a comprehensible sound of the ankle-foot rollover motion, and mirroring their gait rhythm. However, the participants did not perceive any specific sonification variation as significantly better than another on the aforementioned questions. Statistical analysis revealed a main effect only for Q3, which, however, could not be confirmed in the subsequent post-hoc comparisons. This might be explained by the fact that the Bonferroni correction is a rather conservative method and may have reduced statistical power too greatly in this pilot study. From the view of sound design, it can be concluded (as stated in Sect. 5.2) that musical, realistic (resembling walking in snow), and rather abstract (frequency dependent force) sonifications are perceived equally as a means of providing an auditory display for gait.

Based on the outcomes of the questionnaire, it is clear that the participants had difficulty consciously detecting a difference in their gait patterns due to the sonification feedback, as reflected by the rankings of not at all or rudimentary on questions 6–8. In comparison to questions 1–4, these results may indicate that sonification was only consciously perceived as a means of representing gait rather than influencing it. However, this perception is contrary to the results from the spatio-temporal gait parameters, as discussed in the following section. Effenberg [15] suggested that, within sonification, two information processing pathways, conscious and unconscious, exist for the individual. The author suggests that the unconscious pathway, termed the unconscious dorsal stream, is the primary path for altering motor control through sonification. Therefore, changes in participants’ gait parameters may have occurred without their conscious recognition. Finally, the participants had an overall positive emotional affect, as measured by the 5-point Likert scale, regarding the pleasantness of the presented sonifications. Previous researchers [4, 9] suggested that tones associated with positive emotional affect have the potential to reinforce learning via reward functions, reduce perceived exertion levels, and elicit an optimal arousal level during physical activity. Therefore, the participants’ positive perceptions of the sonification variations indicate that they hold potential as a positive reward tool that can encourage motor learning. Further research, however, is needed to understand exactly how sonification may function as a reward mechanism to foster motor learning and how this may contribute to gait rehabilitation.

8.2 Gait analysis results

Spatio-temporal gait parameters were analyzed during walking with the five sonification types (SONI1-5) as well as without sonification. The primary aim of this analysis was to test whether or not real-time auditory display of a participant’s gait pattern would have an immediate effect on locomotion. The measured data showed differences in spatio-temporal parameters when walking with specific sonifications compared to walking without sonification. The most dominant effect was present for cadence, where participants showed a clear decrease (regardless of sonification type) compared to walking without sonification (Fig. 5). This effect was partly accompanied by a decrease in gait velocity. This link is not surprising, as gait velocity and cadence are strongly related to each other. Cadence is often described as one of the two key determinants (next to step length) for regulating self-selected walking speed [27].
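
The link between cadence, step length, and gait velocity mentioned above can be illustrated with the usual relation velocity = step length × cadence. The sketch below is only an illustration; the function name and the input values are hypothetical and are not measurements from this study.

```python
def gait_velocity_m_per_s(step_length_m: float, cadence_steps_per_min: float) -> float:
    """Walking speed as step length times step rate (converted to steps per second)."""
    return step_length_m * cadence_steps_per_min / 60.0


# Hypothetical values for illustration only.
baseline = gait_velocity_m_per_s(0.70, 110.0)
lower_cadence = gait_velocity_m_per_s(0.70, 100.0)
print(f"{baseline:.2f} m/s vs. {lower_cadence:.2f} m/s")
```

With step length held constant, a lower cadence directly yields a lower velocity, which is consistent with the two parameters decreasing together.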

Significant differences were present only between certain sonification types and no sonification. No differences in spatio-temporal parameters were observed between the individual types of sonification. However, regardless of how participants perceived real-time auditory feedback, this additional information seemed to cause them to walk slower. Schmidt and Wulf [32] suggest that continuous concurrent feedback is an effective method for improving task performance during practice, in accordance with the Guidance Hypothesis, as it is a powerful guide for directing participants’ attention to the correct movement response. However, according to the questionnaire, participants had difficulty comprehending the sonification feedback as an influencing factor on their gait cycle. This may in turn have led to ambiguity in how they should process and use the feedback during the trials. Although this explanation may account for the aforementioned discrepancy, the goal of this pilot study was solely to examine the effect of the SONIGait device on participants’ gait outcome parameters. Therefore, only a brief and general overview of the SONIGait device, along with its purpose, was provided to each participant at the beginning of the sessions. Dyer et al. [14] suggested that sonification feedback can be limited in the sense that if the appropriate relationship between the user’s movement and the sound variable (i.e. the mapping) is not adequately established, then little to no task performance enhancement would be observed. In other words, user performance is enhanced only when the user understands how movements produce sounds. For sonification feedback to be effective, it may therefore be necessary to provide additional instructions as to how participants should interpret and use it. Additionally, only a relatively small amount of research currently exists regarding the application of concurrent acoustic feedback to alter gait parameters. As gait is inherently defined as an automatic motor process, it is plausible that the ambiguity of how the user should implement the feedback disrupted their automatic control of gait, as demonstrated by reductions in cadence and gait velocity. However, the reductions in cadence and gait velocity indicate that sonification does serve as a mechanism to alter gait in some manner.

8.3 Limitations

Results obtained within this pilot study need to be considered with regard to some limitations. The success of such systems and of sonification-based therapeutic interventions certainly depends on latency. The overall latency of approximately 140 ms for the SONIGait device may seem critical at first glance. Wessel and Wright [36], for example, state a maximum latency of 10 ms between a stimulus and its response for control gestures and resulting sounds on a computer. In contrast, Askenfelt and Jansson [3] refer to an acceptable latency of up to 100 ms between the touch of a piano key and its hammer striking the string. Pneumatic pipe organs may even have latencies of several hundred milliseconds without affecting the musician’s performance. Van Vugt and Tillmann [35] state an even greater latency, placing the threshold for intermodal detection of synchrony or asynchrony at approximately 100 ± 70 ms. Latency is a critical issue in gait sonification; however, 140 ms seems to be a promising start for future developments. This is also in line with the questionnaire results for Q1, where most of the participants rated that the sonification represented their personal gait pattern well. Nonetheless, reducing the latency of such systems needs to be addressed in future developments. For the SONIGait device this may be achieved by changing the platform of the mobile device from Google Android to Apple iOS, as it has a lower latency in audio generation compared to the Android device used within this article [25]. However, changing to iOS would lead to a higher connection interval of the Bluetooth LE transmission [2], which consequently would reduce the sampling rate to approximately 50 Hz.
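
The trade-off between connection interval and sampling rate can be sketched with a simple calculation. The sketch assumes that one sensor sample is transmitted per Bluetooth LE connection event, and the 20 ms and 7.5 ms intervals are illustrative values chosen to match the approximate rates mentioned in this article; neither assumption is stated in the original text, and the function name is hypothetical.

```python
def max_sampling_rate_hz(connection_interval_ms: float, samples_per_event: int = 1) -> float:
    """Upper bound on the sample rate if samples are sent only at connection events."""
    if connection_interval_ms <= 0:
        raise ValueError("connection interval must be positive")
    return samples_per_event * 1000.0 / connection_interval_ms


# Assumed intervals (illustrative): 20 ms and 7.5 ms.
for interval_ms in (20.0, 7.5):
    print(f"{interval_ms} ms -> {max_sampling_rate_hz(interval_ms):.1f} Hz")
```

Under these assumptions, a 20 ms interval corresponds to 50 Hz and a 7.5 ms interval to roughly 133 Hz.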

Fig. 6 Preview of a single insole with its microcontroller of the SONIGait 2.0 device. For better visibility of the sensor placement and associated wiring, the top layer of the insole is illustrated transparently. Utilizing the A401 model will increase the sensing area per force sensor from 0.71 to 5.07 cm\(^2\)

8.4 Future prospects

Further research is still necessary to understand the applications of insole auditory feedback systems for rehabilitating gait. Results from the current pilot data indicate that the present prototype was successful in altering participants’ gait in some manner. However, the exact mechanism that caused the gait alterations requires further investigation. Thus, future aims of this project will be to examine how sonification, as a motor learning tool, specifically alters an individual’s gait pattern, for instance whether sonification encourages a more regular gait pattern in unhealthy populations, such as those with neuromuscular impairments. Furthermore, subsequent research is recommended to examine how much sonification feedback is optimal for rehabilitating participants’ gait. Investigating the optimal feedback frequency schedule is crucial, as previous researchers suggested that too much or too little feedback could be detrimental to performance [1, 37, 38]. Subsequent investigations will implement retention tests to determine if any gait alterations due to sonification persist in the absence of feedback.

Currently, advancements of the SONIGait hard- and software are being developed to address some drawbacks of the first prototype presented in this article. The new device, SONIGait 2.0, will be equipped with seven FlexiForce A401 force sensors (diameter 25.4 mm) as opposed to the smaller FlexiForce A301 (diameter 9.53 mm). The A401 model will increase the sensing area (per force sensor) from 0.71 to 5.07 cm\(^2\). Thus, the vertical ground reaction forces that act through each specific sensor during any moment of the stance phase will be captured with a higher precision level. For performance optimization a new computing platform will be selected. The Teensy 3.1 microcontroller (32 bit ARM Cortex-M4 72 MHz CPU) in combination with a Bluegiga BLE 112 Bluetooth LE module will allow for a data sampling and transmission rate of approximately 133 Hz simultaneously on both foot-worn devices. To further miniaturize the hardware, all components such as the microcontroller, BLE module, and IMU (Bosch BNO 055) will include sensor fusion. In addition, the measurement circuit for linearization of the pressure sensor values will be sketched and placed on a printed circuit board. Including a 500 mAh battery, the size of the prototype is reduced to 5.8 \(\times \) 5.6 \(\times \) 2.1 cm. In contrast to the first prototype, custom-made shoe insoles will be produced from a thermoplastic resin with the top covered by a thin leather layer (see Fig. 6). The construction of the new prototype will facilitate sensor placement and its associated wiring, while simultaneously increasing the durability of the embedded hardware. These future improvements represent crucial steps toward the practicality of the device in therapeutic settings.
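
The stated sensing areas follow from the sensor diameters if each sensing area is treated as a circle, which is the assumption behind this short check. The function name is hypothetical.

```python
from math import pi


def circular_area_cm2(diameter_mm: float) -> float:
    """Area of a circular sensing surface in cm^2, given its diameter in mm."""
    diameter_cm = diameter_mm / 10.0
    return pi * diameter_cm**2 / 4.0


a301 = circular_area_cm2(9.53)   # FlexiForce A301
a401 = circular_area_cm2(25.4)   # FlexiForce A401
print(round(a301, 2), round(a401, 2))  # 0.71 5.07
```

The larger sensor thus covers roughly seven times the area of the smaller one.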

9 Conclusion

A prototype platform (SONIGait) for real-time sonification of gait-related data was developed. Priorities in the design and manufacturing were the ability to capture force data during the entire stance phase for both feet simultaneously and to provide real-time acoustic feedback. In a pilot study, the changes in gait patterns elicited by the sonification modules were evaluated. Results revealed that sonification, through the SONIGait device, was an effective method for altering the participants’ gait parameters. However, the clinical implications of using sonification for gait rehabilitation still require further investigation.

Notes

Adapted from Frank Barknecht’s rjlib (www.github.com/rjdj/rjlib).

Beethoven’s “Für Elise” and part of a boogie riff.

Carrier frequencies based on harmonic series, modulator using inharmonic frequencies, modulation index dependent on force sensor data.

References

Anderson DI, Magill RA, Sekiya H, Ryan G (2005) Support for an explanation of the guidance effect in motor skill learning. J Mot Behav 37(3):231–238. doi:10.1111/j.1532-5415.2005.00580

Apple Inc (2013) Bluetooth accessory design guidelines for apple products. Release R7. https://developer.apple.com

Askenfelt A, Jansson EV (1990) From touch to string vibrations. I. Timing in the grand piano action. J Acoust Soc Am 88(1):52–63. doi:10.1121/1.399933

Avanzini F, De Götzen A, Spagnol S, Rodà A (2009) Integrating auditory feedback in motor rehabilitation systems. In: Proceedings of international conference on multimodal interfaces for skills transfer (SKILLS09), Bilbao, Spain, vol 232, pp 53–58

Baram Y, Miller A (2007) Auditory feedback control for improvement of gait in patients with Multiple Sclerosis. J Neurol Sci 254(1–2):90–94. doi:10.1016/j.jns.2007.01.003

Bisson EJ, McEwen D, Lajoie Y, Bilodeau M (2011) Effects of ankle and hip muscle fatigue on postural sway and attentional demands during unipedal stance. Gait Posture 33(1):83–87. doi:10.1016/j.gaitpost.2010.10.001

Bresin R, Friberg A, Dahl S (2001) Toward a new model for sound control. In: Proceedings of the COST G-6 conference on digital audio effects (DAFX-01), Limerick, Ireland, pp DAFX1–DAFX5

Bresin R, De Witt A, Papetti S, Civolani M, Fontana F (2010) Expressive sonification of footstep sounds. In: Proceedings of ISon 2010, 3rd interactive sonification workshop, Stockholm, Sweden, pp 51–54

Cameirao M, Badia SBI, Zimmerli L, Oller E, Verschure PF (2007) The Rehabilitation gaming system: a virtual reality based system for the evaluation and rehabilitation of motor deficits. In: Virtual rehabilitation, 2007, Venice, Italy, pp 29–33, doi:10.1109/ICVR.2007.4362125

Chowning JM (1973) The synthesis of complex audio spectra by means of frequency modulation. J Audio Eng Soc 21(7):526–534

Cook PR (2002) Modeling Bill’s gait: analysis and parametric synthesis of walking sounds. In: Proceedings of the 22nd international conference: virtual, synthetic, and entertainment audio, Audio Engineering Society, Espoo, Finland, pp 1–6

Cook PR (2003) Real sound synthesis for interactive applications. Taylor and Francis Group, Boca Raton, FL

Danna J, Velay J, Paz-Villagrán V, Capel A, Pétroz C, Gondre C, Martinet R (2013) Handwriting movement sonification for the rehabilitation of dysgraphia. In: Proceedings of the 10th international symposium on computer music multidisciplinary research, Marseille, France, pp 200–208

Dyer FJ, Stapleton P, Rodger WM (2015) Sonification as concurrent augmented feedback for motor skill learning and the importance of mapping design. Open Psych J 8(Suppl 3: M 4):192–202

Effenberg AO (2004) Using sonification to enhance perception and reproduction accuracy of human movement patterns. In: Proceedings of the international workshop on interactive sonification. Bielefeld, Germany, pp 1–5

Effenberg AO (2005) Movement sonification: effects on perception and action. IEEE Multimed 12(2):53–59. doi:10.1109/MMUL.2005.31

Effenberg AO, Fehse U, Weber A (2011) Movement Sonification: audiovisual benefits on motor learning. In: BIO web of conferences 1:22 (1–5). doi:10.1051/bioconf/20110100022

Farnell AJ (2007) Procedural synthetic footsteps for video games and animation. In: Proceedings of the Pd convention 2007. Montréal, Québec, pp 1–5

Farnell AJ (2010) Designing sound. University Press Group Ltd, Cambridge, MA

Godbout A, Boyd JE (2010) Corrective sonic feedback for speed skating: a case study. In: Proceedings of the 16th international conference on auditory display, Washington, DC, USA, pp 23–30

Grosshauser T, Bläsing B, Spieth C, Hermann T (2012) Wearable sensor-based real-time sonification of motion and foot pressure in dance teaching and training. J Audio Eng Soc 60(7/8):580–589

Lecuyer A, Marchal M, Hamelin A, Wolinski D, Fontana F, Civolani M, Papetti S, Serafin S (2011) Shoes-your-style: changing sound of footsteps to create new walking experiences. In: Proceedings of workshop on sound and music computing for human–computer interaction. Alghero, Italy, pp 1–4

Maculewicz J, Jylhä A, Serafin S, Erkut C (2015) The effects of ecological auditory feedback on rhythmic walking interaction. IEEE Multimed 22:24–31. doi:10.1109/MMUL.2015.17

Maulucci RA, Eckhouse RH (2011) A real-time auditory feedback system for retraining gait. In: Proceedings of the annual international conference of the IEEE EMBS, Minneapolis, Minnesota, USA, pp 1–4

Michon R, Orlarey Y (2015) MobileFaust: a set of tools to make musical mobile applications with the Faust programming language. In: Proceedings of the 15th international conference on new interfaces for musical expression, Baton Rouge, Louisiana, pp 1–6

Neuhoff JG (2011) Perception, cognition and action in auditory display. In: Hermann T, Hunt A, Neuhoff JG (eds) The sonification handbook. Logos Publishing House, Berlin, pp 63–85

Perry J, Burnfield JM (2010) Gait analysis: normal and pathological function, 2nd edn. Slack Inc, Thorofare, NJ

Redd CB, Bamberg SJM (2012) A wireless sensory feedback device for real-time gait feedback and training. IEEE/ASME Trans Mechatron 17(3):425–433. doi:10.1109/TMECH.2012.2189014

Riskowski JL, Mikesky AE, Bahamonde RE, Burr DB (2009) Design and validation of a knee brace with feedback to reduce the rate of loading. J Biomech Eng 131(8):1–6. doi:10.1115/1.3148858

Rodger MM, William RY, Cathy MC (2013) Synthesis of walking sounds for alleviating gait disturbances in Parkinson’s disease. IEEE Trans Neural Syst Rehabil Eng 22(3):543–548. doi:10.1109/TNSRE.2013.2285410

Schaffert N, Mattes K (2012) Acoustic feedback training in adaptive rowing. In: Proceedings of 18th international conference on auditory display, Atlanta, GA, pp 83–88

Schmidt RA, Wulf G (1997) Continuous concurrent feedback degrades skill learning: implications for training and simulation. Hum Factors 39(4):509–525

Shams L, Seitz AR (2008) Benefits of multisensory learning. Trends Cogn Sci 12(11):411–417. doi:10.1016/j.tics.2008.07.006

Verghese J, LeValley A, Hall CB, Katz MJ, Ambrose AF, Lipton RB (2006) Epidemiology of gait disorders in community-residing older adults. J Am Geriatr Soc 54(2):255–261. doi:10.1111/j.1532-5415.2005.00580

van Vugt FT, Tillmann B (2014) Thresholds of auditory-motor coupling measured with a simple task in musicians and non-musicians: was the sound simultaneous to the key press? PLoS One 9(2):e87176. doi:10.1371/journal.pone.0087176

Wessel D, Wright M (2002) Problems and prospects for intimate musical control of computers. Comput Music J 26(3):11–22. doi:10.1162/014892602320582945

Winstein CJ, Schmidt RA (1990) Reduced frequency of knowledge of results enhances motor skill learning. J Exp Psychol Learn Mem Cognit 16(4):677. doi:10.1037/0278-7393.16.4.677

Wulf G, Chiviacowsky S, Schiller E, Vila LTG (2010) Frequent external-focus feedback enhances motor learning. Front Psychol 1:1–7. doi:10.3389/fpsyg.2010.00190

Acknowledgments

Open access funding provided by FH St. Pölten - University of Applied Sciences.

Additional information

This study was funded by the Austrian Research Promotion Agency (grant number 839092).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Horsak, B., Dlapka, R., Iber, M. et al. SONIGait: a wireless instrumented insole device for real-time sonification of gait. J Multimodal User Interfaces 10, 195–206 (2016). https://doi.org/10.1007/s12193-016-0216-9

DOI: https://doi.org/10.1007/s12193-016-0216-9