Abstract

Two types of stochastic epidemic models are formulated, both incorporating infectivity in the latent period and household quarantine of the susceptible. Using Lyapunov functions and Has’minskii’s theory, we show that the nonautonomous periodic version with white noise has a positive periodic solution. For the other version, with white and telegraph noise, we construct a stochastic Lyapunov function with regime switching and obtain easily verifiable sufficient criteria for the existence of an ergodic stationary distribution. Numerical simulations are presented to support the analytical findings. Finally, a brief discussion of the theoretical results shows that stochastic perturbations and household quarantine measures can significantly affect both the periodicity and the stationary distribution.


1 Introduction

It is well known that infectious diseases have catastrophic effects on the development of the world, and the struggle between human beings and infectious diseases has never stopped. Since Kermack and McKendrick [1] pioneered the SIR (susceptible-infected-removed) compartmental epidemic model, a wide variety of mathematical models have been established, and many results useful for the prevention and control of epidemics have been obtained [2,3,4,5,6,7,8,9,10,11]. Many diseases, such as mumps and HIV, have a latent period, that is, a period between exposure to a disease-causing agent and the onset of the disease it causes. It is therefore reasonable to consider SEIR models, which represent the latent period by an additional compartment, and SEIR models of many kinds have been studied extensively [2, 6, 9]. Classic SEIR models generally assume that latent individuals are not contagious, so that susceptible individuals can be infected only through contact with infective individuals. This assumption, however, does not match diseases such as SARS [12] and COVID-19 [13], which are infectious during the latent period. This feature has attracted the attention of several researchers, and SEIR models with infectivity in the latent period have been studied in [12, 14, 15].

COVID-19, a highly contagious and potentially lethal respiratory disease caused by a coronavirus with incubation and generation times similar to those of the SARS coronavirus (see [16]), has spread to almost every country since 2019 and become a pandemic. The time from infection to symptom onset ranges from 2 to 14 days, and infected individuals are already infectious during the latent period. To date, COVID-19 has ravaged more than two hundred countries and caused enormous economic and human losses. Worse still, no specific treatment or vaccine was available in the early stages of the outbreak, so the response had to rely on traditional public health measures such as isolation, quarantine, social distancing and community containment [16,17,18]. Many of these measures were implemented successfully during the SARS outbreak in 2003, which provided impetus for applying similarly stringent measures to COVID-19. Drawing on lessons learnt from SARS almost 20 years earlier, China implemented unprecedented large-scale interventions to prevent the spread of COVID-19, including household quarantine, mask wearing, lockdown and strict contact tracing [19,20,21,22,23,24]. In a recent study, the following deterministic SEIR epidemic model

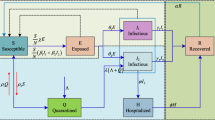

has been used by Jiao et al. [25] to model the spread of an epidemic with infectivity in the latent period and household quarantine of the susceptible, focusing on the transmission features of COVID-19. Here \(\Lambda >0\) is the recruitment rate; \(\beta >0\) is the infection rate from S to E; \(\eta _1(0<\eta _1<1)\) is the household quarantine rate of the susceptible; \(\eta _2(0<\eta _2<1)\) is the rate at which exposed individuals become infectious in the latent period; \(\delta >0\) is the rate at which exposed individuals become infectious after the latent period (\(\delta >\eta _2(\theta +\alpha +\mu )\)); \(\mu >0\) is the natural death rate; \(\alpha >0\) is the hospitalization rate of I; \(\theta >0\) is the rate at which I moves to R; and \(\eta _3>0\) is the recovery rate of I. The schematic diagram of model (1.1) is shown in Fig. 1.

Fig. 1 Schematic diagram of the deterministic model (1.1)

Since R(t) does not appear in the other equations of model (1.1), Jiao et al. [25] focused only on the following three-dimensional model

and obtained the basic reproduction number \(R_0=\frac{\Lambda \beta (1-\eta _1)[\delta +\eta _2(\theta +\alpha +\mu )]}{\mu (\theta +\alpha +\mu )(\delta +\mu )}\). Furthermore, they proved that model (1.2) admits a globally asymptotically stable disease-free equilibrium if \(R_0<1\), while the positive equilibrium of model (1.2) is globally asymptotically stable if \(R_0>1\).
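To make the threshold concrete, the short Python sketch below evaluates \(R_0\) as stated above and cross-checks it with the next-generation matrix method. The cross-check rests on an assumption, since model (1.2) itself is not written out in this text: new infections enter E at rate \(\beta (1-\eta _1)S(\eta _2E+I)\), exposed individuals leave E at rate \(\delta +\mu \), and infectives leave I at rate \(\theta +\alpha +\mu \). Under that assumption the spectral radius of \(FV^{-1}\) at \(S_0=\Lambda /\mu \) reproduces the formula. The function names are hypothetical, and the sample values (the period averages of the coefficients in Example 1 of Sect. 4) are illustrative inputs only.

```python
import math


def r0_formula(Lam, beta, eta1, eta2, delta, theta, alpha, mu):
    """Basic reproduction number R_0 of model (1.2) as stated in the text."""
    num = Lam * beta * (1 - eta1) * (delta + eta2 * (theta + alpha + mu))
    den = mu * (theta + alpha + mu) * (delta + mu)
    return num / den


def r0_next_generation(Lam, beta, eta1, eta2, delta, theta, alpha, mu):
    """Spectral radius of F V^{-1} at the disease-free state S_0 = Lam / mu.

    ASSUMED structure: incidence beta*(1-eta1)*S*(eta2*E + I) enters E,
    E is left at rate delta + mu, and I is left at rate theta + alpha + mu.
    """
    a = beta * (1 - eta1) * Lam / mu
    k_e, k_i = delta + mu, theta + alpha + mu
    F = [[a * eta2, a], [0.0, 0.0]]  # new infections in (E, I)
    # Inverse of the lower-triangular V = [[k_e, 0], [-delta, k_i]].
    V_inv = [[1.0 / k_e, 0.0], [delta / (k_e * k_i), 1.0 / k_i]]
    K = [[sum(F[r][m] * V_inv[m][c] for m in range(2)) for c in range(2)]
         for r in range(2)]
    # Largest eigenvalue of the 2x2 matrix K from its trace and determinant;
    # the discriminant is a perfect square here because K has a zero row.
    tr = K[0][0] + K[1][1]
    det = K[0][0] * K[1][1] - K[0][1] * K[1][0]
    return (tr + math.sqrt(max(tr * tr - 4.0 * det, 0.0))) / 2.0


params = dict(Lam=1.0, beta=0.5, eta1=0.2, eta2=0.2, delta=0.5,
              theta=0.15, alpha=0.25, mu=0.2)
print(r0_formula(**params), r0_next_generation(**params))  # both about 2.952
```

Agreement of the two functions only shows that the stated formula is consistent with the assumed compartmental structure; it is not a substitute for the derivation in [25].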

Because ecosystems are subject to random phenomena, epidemic models are unavoidably affected by environmental fluctuations; temperature changes, humidity and media coverage, for example, inevitably affect the spread of an epidemic. To capture such effects, many researchers have included stochastic perturbations in epidemic models and obtained numerous results [26,27,28,29,30,31,32,33,34,35,36]. With this in mind, Shangguan et al. [37] recently incorporated white noise into model (1.2), assuming that the stochastic perturbations are proportional to S(t), E(t) and I(t), and derived the following stochastic model

where \(B_i(t)\) (\(i=1,2,3\)) are independent standard Brownian motions with \(B_i(0)=0\), and \(\sigma _i^2>0\) are the noise intensities. They investigated the stochastic dynamics of this model, including extinction, persistence in the mean and the ergodic stationary distribution (ESD), and, by comparison with model (1.2), revealed the effect of white noise on the spread of the disease.

Periodicity is another important factor: many human infectious diseases fluctuate over time and often spread seasonally. The birth rate, death rate, recovery rate and other parameters typically vary periodically rather than remaining constant, so it is reasonable to take periodic variation into account when studying stochastic epidemic models. The existence of periodic solutions is important for understanding and controlling the transmissibility of diseases and has been studied extensively [38,39,40,41,42,43,44]. For instance, Lin et al. [39] studied a stochastic nonautonomous periodic SIR model, established a threshold for the occurrence of the disease and verified the existence of periodic solutions. Rifhat et al. [41] discussed the existence of periodic solutions of a stochastic periodic SIS model with general nonlinear incidence. Motivated by these works, we extend the stochastic model (1.3) to the following model with periodic coefficients

where the parameters \(\Lambda (t)\), \(\beta (t)\), \(\mu (t)\), \(\delta (t)\), \(\theta (t)\), \(\alpha (t)\) and \(\sigma _i(t) (i=1,2,3)\) of model (1.4) are positive continuous T-periodic functions.
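Several quantities in Sect. 2 are built from period averages of these coefficients, for example \(\langle \mu +\frac{1}{2}\sigma _1^2\rangle _T\) (see the notation \(\langle \aleph \rangle _t\) introduced in the preliminaries). As a minimal sketch, assuming the coefficients are available as Python callables, the average over one period can be approximated with the trapezoidal rule, which is highly accurate for smooth periodic integrands. The helper names are hypothetical, and the sample coefficients are those of Example 1 in Sect. 4, whose period is T = 2.

```python
import math


def period_average(f, T, n=1000):
    """Approximate <f>_T = (1/T) * integral_0^T f(s) ds by the trapezoidal rule."""
    h = T / n
    total = 0.5 * (f(0.0) + f(T)) + sum(f(k * h) for k in range(1, n))
    return total * h / T


def Lam(t):
    """Recruitment rate Lambda(t) = 1 + 0.1 sin(pi t) from Example 1."""
    return 1.0 + 0.1 * math.sin(math.pi * t)


def half_sigma_sq(t):
    """(1/2) sigma_1(t)^2 with sigma_1(t) = 0.01 + 0.05 sin(pi t) from Example 1."""
    return 0.5 * (0.01 + 0.05 * math.sin(math.pi * t)) ** 2


print(period_average(Lam, 2.0))            # close to 1.0
print(period_average(half_sigma_sq, 2.0))  # close to 0.5 * (0.01**2 + 0.05**2 / 2)
```

For trigonometric coefficients like these the equally spaced rule is exact up to rounding once n exceeds the highest harmonic, so n = 1000 is generous.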

Model (1.3) incorporates white noise into the deterministic model (1.2). Building on model (1.3), we next investigate the influence of telegraph noise, which can be described as switching between finitely many environmental regimes. The switching is memoryless, and the waiting time until the next switch is exponentially distributed [45], so it can be modeled by a finite-state Markov chain. Stochastic epidemic models with Markov switching have received considerable attention; see [35, 42, 44, 46, 47] and the references therein. Motivated by this idea, we suppose that there are N regimes, and in each regime the model is governed by

Switching among these N regimes is governed by a Markov chain on the state space \({{\mathbb {S}}}=\{1, 2, \ldots , N\}\), which turns the model into the following stochastic model with regime switching

where \(\xi (t)\) is a right-continuous Markov chain taking values in the finite state space \({{\mathbb {S}}}\). We assume that the Brownian motions \(B_i(\cdot )\) are independent of the Markov chain \(\xi (\cdot )\). For each \(k\in {{\mathbb {S}}}\), the parameters \(\Lambda (k),\beta (k),\delta (k),\theta (k),\alpha (k),\mu (k)\) and \(\sigma _i(k)(i = 1, 2, 3)\) are positive constants. Model (1.5) is called a subsystem of model (1.6), and model (1.6) can be regarded as switching among the subsystems (1.5), \(k\in {{\mathbb {S}}}\), according to the law of the Markov chain.
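The switching mechanism just described, memoryless with exponentially distributed holding times, can be simulated exactly. The Python sketch below is a generic construction, not the authors' code: in state k it draws an exponential holding time with rate \(-\gamma _{kk}\) and then jumps to \(l\ne k\) with probability \(\gamma _{kl}/(-\gamma _{kk})\). States are indexed from 0 rather than 1, the function names are hypothetical, and the two-state generator in the usage lines is a made-up example. For an irreducible chain, the long-run fraction of time spent in each state should approach the stationary distribution \(\pi \) defined in the preliminaries.

```python
import random


def simulate_ctmc(gamma, k0, t_end, rng):
    """Exact simulation of a continuous-time Markov chain on states 0..N-1.

    gamma is the generator as a list of rows (off-diagonal rates >= 0, rows sum
    to zero). Returns the jump skeleton [(t_0, k_0), (t_1, k_1), ...] with
    t_0 = 0; the chain stays in k_m on [t_m, t_{m+1}) (right-continuous paths).
    """
    path = [(0.0, k0)]
    t, k = 0.0, k0
    while True:
        rate = -gamma[k][k]
        if rate <= 0.0:  # absorbing state: no further switches
            break
        t += rng.expovariate(rate)  # exponential holding time in state k
        if t >= t_end:
            break
        # Choose the next state l != k with probability gamma[k][l] / rate.
        others = [l for l in range(len(gamma)) if l != k]
        u = rng.random() * rate
        nxt, acc = others[-1], 0.0  # fallback guards against round-off
        for l in others:
            acc += gamma[k][l]
            if u < acc:
                nxt = l
                break
        k = nxt
        path.append((t, k))
    return path


def occupation_fractions(path, t_end, n_states):
    """Fraction of [0, t_end] spent in each state along a jump skeleton."""
    occ = [0.0] * n_states
    ends = [t for t, _ in path[1:]] + [t_end]
    for (t, k), t_next in zip(path, ends):
        occ[k] += t_next - t
    return [x / t_end for x in occ]


rng = random.Random(1)
gamma = [[-3.0, 3.0], [2.0, -2.0]]  # hypothetical two-state generator
path = simulate_ctmc(gamma, 0, 20000.0, rng)
print(occupation_fractions(path, 20000.0, 2))  # roughly [0.4, 0.6]
```

With these rates the mean holding times are 1/3 and 1/2, so the long-run share of time in the first state is (1/3)/(1/3 + 1/2) = 0.4.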

For convenience in subsequent sections, we list some preliminaries. Let \({{\mathbb {R}}}_+=[0,\infty )\) and \({{\mathbb {R}}}_+^3=\{(x_1,x_2,x_3)\in {{\mathbb {R}}}^3:x_i>0,i=1,2,3\}\), and let \((\Omega ,{\mathcal {F}},\{{\mathcal {F}}_t\}_{t\ge 0},{{\mathbb {P}}})\) be a complete probability space with a filtration \(\{{\mathcal {F}}_t\}_{t\ge 0}\) satisfying the usual conditions. For an integrable function \(\aleph (t)\) defined for \(t\in {{\mathbb {R}}}_+\), we denote \(\langle \aleph \rangle _t=\frac{1}{t}\int _0^t\aleph (s)ds\), \(\aleph ^l=\inf _{t\in {{\mathbb {R}}}_+}\aleph (t)\) and \(\aleph ^u=\sup _{t\in {{\mathbb {R}}}_+}\aleph (t)\). For each vector \(\pounds =(\pounds (1),\ldots ,\pounds (N))\), we define \({\hat{\pounds }}=\min _{k\in {{\mathbb {S}}}}\{\pounds (k)\}\) and \(\check{\pounds }=\max _{k\in {{\mathbb {S}}}}\{\pounds (k)\}\). The generator of \(\xi (t)\) is \(\Gamma =(\gamma _{ij})_{N\times N}\), where \(\gamma _{ij}\ge 0\ (i\ne j)\) is the transition rate from i to j and \(\gamma _{ii}=-\sum _{j\ne i}\gamma _{ij}\), that is,

\[{{\mathbb {P}}}\{\xi (t+\Delta )=j\mid \xi (t)=i\}=\begin{cases}\gamma _{ij}\Delta +o(\Delta ), & i\ne j,\\ 1+\gamma _{ii}\Delta +o(\Delta ), & i=j,\end{cases}\]

where \(\Delta >0\). Assume that \(\xi (t)\) is irreducible; then it has a unique stationary distribution \(\pi =(\pi _1, \pi _2,\ldots ,\pi _N)\) determined by \(\pi \Gamma =0\) subject to \(\sum _{h=1}^N\pi _h=1\) and \(\pi _h>0\) for all \(h\in {{\mathbb {S}}}\).
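For small N the stationary distribution can be computed directly from \(\pi \Gamma =0\) and \(\sum _h\pi _h=1\). A minimal Python sketch, assuming \(\Gamma \) is given as a list of rows and is irreducible: because every row of \(\Gamma \) sums to zero, one of the N equations \(\sum _h\pi _h\gamma _{hj}=0\) is implied by the others, so we replace it with the normalisation condition and solve the resulting linear system by Gaussian elimination. The function names and the sample generators are illustrative.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-14:
            raise ValueError("singular system")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def stationary_distribution(gamma):
    """Stationary distribution pi of an irreducible generator: pi Gamma = 0, sum = 1."""
    n = len(gamma)
    # Column equations sum_h pi_h * gamma[h][j] = 0 for j = 0..n-2; the last one
    # is implied because every row of gamma sums to zero ...
    A = [[gamma[h][j] for h in range(n)] for j in range(n - 1)]
    # ... plus the normalisation sum_h pi_h = 1.
    A.append([1.0] * n)
    b = [0.0] * (n - 1) + [1.0]
    return solve_linear(A, b)


print(stationary_distribution([[-3.0, 3.0], [2.0, -2.0]]))  # approximately [0.4, 0.6]
```

For a two-state generator with off-diagonal rates \(\gamma _{12},\gamma _{21}\) this reduces to \(\pi =(\gamma _{21},\gamma _{12})/(\gamma _{12}+\gamma _{21})\).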

As a continuation of our previous work [37], the aim of the present paper is to examine the effect of environmental variability on the disease transmission dynamics of models (1.4) and (1.6). The rest of the paper is organized as follows. In Sect. 2, we discuss the existence of a positive periodic solution of model (1.4). Section 3 establishes sufficient criteria for the existence of an ESD of model (1.6). In Sect. 4, numerical examples are presented to illustrate the analytical results. Concluding remarks are given in Sect. 5.

2 Existence of a positive periodic solution of (1.4)

In this section, we discuss the existence of positive periodic solutions of model (1.4). We first give the following definition and two lemmas.

Definition 2.1

[49] A stochastic process \(\zeta (t)=\zeta (t,\omega )(-\infty<t<+\infty )\) is T-periodic if, for every finite sequence of numbers \(t_1, t_2,\cdots , t_n\), the joint distribution of the random variables \(\zeta (t_1+h),\zeta (t_2+h),\cdots ,\zeta (t_n+h)\) is independent of h, where \(h = kT\), \(k=\pm 1,\pm 2,\cdots \).

A periodic stochastic system is given by

where both functions h(t) and g(t) are T-periodic in t.

Lemma 2.1

[49] Suppose that the coefficients of (2.1) are T-periodic and that system (2.1) has a unique global solution. Suppose further that there exists a \(C^2\)-function \(W(t,x)\), T-periodic in t, satisfying

- \((\mathbf{A}_1)\): \(\inf _{|x|>R}W(t,x)\rightarrow +\infty \) as \(R\rightarrow +\infty \);

- \((\mathbf{A}_2)\): \(LW(t,x)\le -1\) outside some compact set.

Then system (2.1) has a T-periodic solution.

Lemma 2.2

For any initial value \((S(0),E(0),I(0))\in {{\mathbb {R}}}_+^3\), model (1.4) has a unique global positive solution \((S(t),E(t),I(t))\in {{\mathbb {R}}}_+^3\) for all \(t\ge 0\) almost surely.

Proof

Define a \(C^2\)-function \(V:{{\mathbb {R}}}_+^3\rightarrow {{\mathbb {R}}}_+\) as follows

where

By Itô’s formula, we derive that

where

Recalling (2.2), we can easily get

Then

where \({\mathcal {K}}>0\) is a constant. Since the remainder of the proof is the same as that of Theorem 2.1 in [48], the details are omitted. \(\square \)

Define

We now state our main result on the existence of a positive periodic solution of model (1.4).

Theorem 2.1

Model (1.4) has a positive T-periodic solution if \({\mathfrak {R}}>1\).

Proof

Let us consider a \(C^2\)-function \(W:[0,+\infty )\times {{\mathbb {R}}}_+^3\rightarrow {{\mathbb {R}}}_+\) with the form

where

and w(t) is a T-periodic function with

where \({\mathfrak {R}}_0(t)\) will be given later. Let \(p>0\), and let \({\mathcal {M}}_1>0\) be a sufficiently large constant satisfying

where \(\vartheta :=\langle \mu +\frac{1}{2}\sigma _1^2\rangle _T({\mathfrak {R}}-1)\) and \(f_2\) will be supplied later.

We can easily derive that W(S, E, I, t) is T-periodic in t, and

where \(U_\kappa =({1}/{\kappa },\kappa )\times ({1}/{\kappa },\kappa )\times ({1}/{\kappa },\kappa )\) and \(\kappa >1\) is sufficiently large. This verifies assumption \((\mathbf{A}_1)\) of Lemma 2.1.

Next, we verify assumption \((\mathbf{A}_2)\) of Lemma 2.1. Define

where \(W_1=-\ln S-a_1\ln E-a_2\ln I-vI,W_2=\frac{1}{p+1}(S+E+I)^{p+1},W_3=-\ln S-\ln E\).

Applying Itô’s formula to \(W_1\) yields

where

It follows from (2.3) and (2.4) that

Applying Itô’s formula to \(W_2\) leads to

where

Similarly to \(LW_2\), we obtain

By virtue of (2.5)-(2.7), we have

where \(H={\mathcal {M}}_1[\beta ^u(1-\eta _1)+v(\theta ^u+\alpha ^u+\mu ^u)]+\beta ^u(1-\eta _1)\).

Define the bounded closed set

where the constant \(\varepsilon >0\) is small enough that

where \(f_1,f_2,f_3,f_4,f_5\) are positive constants given in (2.15)-(2.19), respectively. For convenience, we divide \({{\mathbb {R}}}_+^3\setminus U\) into the following six domains

Clearly, \({{\mathbb {R}}}_+^3\setminus U=U_1\cup U_2\cup U_3\cup U_4\cup U_5\cup U_6\). We now show, case by case, that \(LW\le -1\) on \({{\mathbb {R}}}_+^3\setminus U\).

Case 1 If \((S,E,I)\in U_1\), then it follows from (2.8) and (2.9) that

where

Case 2 If \((S,E,I)\in U_2\), then we have from (2.8) and (2.11) that

Case 3 If \((S,E,I)\in U_3\), then combining (2.8) with (2.12) results in

where

Case 4 If \((S,E,I)\in U_4\), then using (2.8) and (2.12) yields

where

Case 5 If \((S,E,I)\in U_5\), then it follows from (2.8) and (2.13) that

where

Case 6 If \((S,E,I)\in U_6\), then by (2.8) and (2.14) we have

where

The six cases above show that \(LW\le -1\) for all \((S,E,I)\in {{\mathbb {R}}}_+^3\setminus U\), so assumption \((\mathbf{A}_2)\) of Lemma 2.1 also holds. Hence model (1.4) has a positive T-periodic solution. \(\square \)

3 Ergodic stationary distribution of (1.6)

In this section, we analyze the ESD of model (1.6). We first give two lemmas needed in the subsequent discussion.

Let \((X(t),\xi (t))\) be the diffusion process governed by

\[\mathrm{d}X(t)=b(X(t),\xi (t))\,\mathrm{d}t+\sigma (X(t),\xi (t))\,\mathrm{d}B(t),\]

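where \(b(\cdot , \cdot ) : {{\mathbb {R}}}^n\times {{\mathbb {S}}}\rightarrow {{\mathbb {R}}}^n, \sigma (\cdot , \cdot ) : {{\mathbb {R}}}^n\times {{\mathbb {S}}}\rightarrow {{\mathbb {R}}}^{n\times n}\), \(D(x, k)=\sigma (x, k)\sigma ^\top (x, k)=(d_{ij}(x,k))\), and B(t) is an n-dimensional standard Brownian motion. For each \(k\in {{\mathbb {S}}}\), let \({\mathcal {W}}(\cdot , k)\) be any twice continuously differentiable function; the operator L is then given by

\[L{\mathcal {W}}(x,k)=\sum _{i=1}^{n}b_i(x,k)\frac{\partial {\mathcal {W}}(x,k)}{\partial x_i}+\frac{1}{2}\sum _{i,j=1}^{n}d_{ij}(x,k)\frac{\partial ^2{\mathcal {W}}(x,k)}{\partial x_i\partial x_j}+\sum _{l=1}^{N}\gamma _{kl}{\mathcal {W}}(x,l).\]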

Lemma 3.1

[49] Assume that the following three conditions hold:

- \(\mathrm{(B_1)}\): for \(i\ne j\), \(\gamma _{ij}>0\), \(i,j\in {{\mathbb {S}}}\);

- \(\mathrm{(B_2)}\): for each \(k\in {{\mathbb {S}}}\),

  with some constant \(\psi \in (0,1]\) for all \(x\in {{\mathbb {R}}}^n\);

- \(\mathrm{(B_3)}\): there exists a nonempty open set D with compact closure such that, for each \(k\in {{\mathbb {S}}}\), there is a nonnegative function \({\mathcal {W}}(\cdot , k):D^c\rightarrow {{\mathbb {R}}}\) which is twice continuously differentiable and satisfies, for some \(\alpha >0\),

then the process \((X(t),\xi (t))\) is ergodic and positive recurrent; that is, there exists a unique stationary distribution \(\pi (\cdot , \cdot )\) such that, for any Borel measurable function \(h(\cdot , \cdot ):{{\mathbb {R}}}^n\times {{\mathbb {S}}}\rightarrow {{\mathbb {R}}}\) satisfying

one has

Lemma 3.2

For any initial value \((S(0),E(0),I(0),\xi (0))\in {{\mathbb {R}}}_+^3\times {{\mathbb {S}}}\), model (1.6) has a unique global positive solution \((S(t),E(t),I(t),\xi (t))\) for all \(t\ge 0\) almost surely.

Proof

Since the proof is very similar to that of Lemma 2.2, we give only the formulas that differ from those in Lemma 2.2. Define

where \(b_1=\frac{{\hat{\delta }}+{\hat{\mu }}}{\check{\beta }(1-\eta _1)[\eta _2+{\check{\delta }}/({\hat{\mu }}+{\hat{\alpha }}+{\hat{\theta }})]}\) and \(b_2=\frac{b_1\check{\beta }(1-\eta _1)}{{\hat{\mu }}+{\hat{\alpha }}+{\hat{\theta }}}\). The rest of the proof follows as in Lemma 2.2. \(\square \)

Denote

Next, we focus on the existence of a unique ESD of model (1.6).

Theorem 3.1

For any initial value \((S(0),E(0),I(0),\xi (0))\in {{\mathbb {R}}}_+^3\times {{\mathbb {S}}}\), the solution \((S(t),E(t),I(t),\xi (t))\) of model (1.6) has a unique ESD if \({\mathfrak {R}}_0^s>1\).

Proof

First, the transition rates of the generator \(\Gamma \) introduced in Sect. 1 are taken to satisfy \(\gamma _{ij}>0\) (\(i\ne j\)), which is exactly assumption \((\mathbf{B}_1)\) of Lemma 3.1.

Next, we verify assumption \((\mathbf{B}_2)\) of Lemma 3.1. The diffusion matrix of model (1.6) is

We have

for any \((S,E,I)\in D^c\times {{\mathbb {S}}},\zeta =(\zeta _1,\zeta _2,\zeta _3)\in {{\mathbb {R}}}^3\), where

\(D=[\epsilon ,{1}/{\epsilon }]\times [\epsilon ,{1}/{\epsilon }]\times [\epsilon ,{1}/{\epsilon }]\) and the constant \(\epsilon >0\) is sufficiently small. Thus, assumption \((\mathbf{B}_2)\) of Lemma 3.1 holds.

Finally, we verify assumption \((\mathbf{B}_3)\). Since the argument parallels the verification of \((\mathbf{A}_2)\) in the proof of Theorem 2.1, we give only the formulas that differ from those in Sect. 2.

A \(C^2\)-function is constructed as follows

where

\(\lambda (k)\) will be determined later, \(\varrho >0\), and \({\mathcal {M}}_2>0\) is chosen such that

where \({\tilde{\vartheta }}=\sum _{k=1}^{N}\pi _k(\mu (k)+\frac{1}{2}\sigma _1^2(k))(\tilde{{\mathfrak {R}}}_0-1)\) and

Clearly, \({\tilde{W}}(S,E,I,k)\) attains a minimum at a point \((S_*,E_*,I_*,k)\) in the interior of \({{\mathbb {R}}}_+^3\times {{\mathbb {S}}}\). Consider the following nonnegative \(C^2\)-function

where \({\mathcal {W}}_1=-\ln S-c_1\ln E-c_2\ln I-\frac{\check{\beta }(1-\eta _1)\eta _2}{{\hat{\delta }}}I,{\mathcal {W}}_2=\frac{1}{\varrho +1}(S+E+I)^{\varrho +1}, {\mathcal {W}}_3=-\ln S-\ln E-{\tilde{W}}(S_*,E_*,I_*,k)\). Applying Itô’s formula to \({\mathcal {W}}_1\) gives

where

Now, we define \({\bar{R}}_0=({\bar{R}}_0(1),{\bar{R}}_0(2),\ldots ,{\bar{R}}_0(N))^\top \). Since the generator matrix \(\Gamma \) is irreducible, there exists a solution \(\lambda =(\lambda (1),\lambda (2),\ldots ,\lambda (N))^\top \) of the following Poisson system

This shows that

which together with the definitions of \(c_1\) and \(c_2\) leads to

The rest of the proof parallels (2.6)-(2.19) and is omitted. Therefore, model (1.6) admits a unique ESD. \(\square \)
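For the two-regime case used in Example 2, the Poisson system in the proof can be solved in closed form. The sketch below assumes the form commonly used in regime-switching analyses, \(\Gamma \lambda =\bigl(\sum _{h}\pi _h{\bar{R}}_0(h)\bigr){\mathbf {1}}-{\bar{R}}_0\). With \(\Gamma \) having off-diagonal rates \(\gamma _{12},\gamma _{21}>0\), the right-hand side is orthogonal to \(\pi \), which is exactly the solvability condition, and the solution is unique up to an additive constant. The function name is hypothetical, and the values of \({\bar{R}}_0(k)\) in the usage line are placeholders, not quantities computed in the paper.

```python
def poisson_two_state(g12, g21, rbar):
    """Solve Gamma lam = (pi . rbar) * 1 - rbar for a two-state generator.

    Gamma = [[-g12, g12], [g21, -g21]] with g12, g21 > 0 (irreducible), so
    pi = (g21, g12) / (g12 + g21). The solution is unique up to an additive
    constant; we normalise it by lam[0] = 0.
    """
    pi = (g21 / (g12 + g21), g12 / (g12 + g21))
    mean = pi[0] * rbar[0] + pi[1] * rbar[1]
    rhs = (mean - rbar[0], mean - rbar[1])
    # Row 1 of Gamma lam reads g12 * (lam[1] - lam[0]) = rhs[0]; row 2 then
    # holds automatically because pi . rhs = 0.
    lam = (0.0, rhs[0] / g12)
    return lam, rhs


lam, rhs = poisson_two_state(3.0, 2.0, (1.3, 0.8))  # placeholder rbar values
print(lam, rhs)
```

For general N the same system can be solved numerically by replacing one redundant equation with a normalisation such as \(\lambda (1)=0\).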

4 Numerical simulations

In this section, we illustrate the theoretical results for models (1.4) and (1.6) with two numerical examples. The initial values of both models are taken as \((S(0),E(0),I(0))=(0.3,0.4,0.2)\).

Example 1

In model (1.4), we fix the parameters \(\Lambda (t)=1+0.1\sin \pi t\), \(\mu (t)=0.2+0.1\sin \pi t\), \(\eta _1=0.2\), \(\eta _2=0.2\), \(\beta (t)=0.5+0.1\sin \pi t\), \(\theta (t)=0.15+0.1\sin \pi t\), \(\delta (t)=0.5+0.1\sin \pi t\), \(\alpha (t)=0.25+0.1\sin \pi t\), \(\sigma _1(t)=\sigma _2(t)=\sigma _3(t)=0.01+0.05\sin \pi t\), so that all coefficients are periodic with period \(T=2\). A calculation shows that \({\mathfrak {R}}\approx 2.462713>1\). Hence, by Theorem 2.1, model (1.4) has a positive T-periodic solution, as illustrated in Fig. 2; panels (a) and (b) of Fig. 2 show that S(t), E(t) and I(t) fluctuate periodically.
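A sample path of the kind shown in Fig. 2 can be generated with the Euler–Maruyama scheme. The Python sketch below illustrates the idea under stated assumptions and is not the authors' simulation code: the drift is assumed to be the periodic analogue of model (1.2) with incidence \(\beta (t)(1-\eta _1)S(\eta _2E+I)\) (the form consistent with the expression for \(R_0\) in Sect. 1), the noise terms are proportional to S, E and I as in model (1.3), and the step size, horizon and seed are arbitrary choices. The helper names `coefficients` and `euler_maruyama` are hypothetical; the coefficient values are those of Example 1.

```python
import math
import random


def coefficients(t):
    """Example 1 coefficients of model (1.4); every one has period T = 2."""
    s = math.sin(math.pi * t)
    return {
        "Lam": 1.0 + 0.1 * s, "mu": 0.2 + 0.1 * s, "beta": 0.5 + 0.1 * s,
        "theta": 0.15 + 0.1 * s, "delta": 0.5 + 0.1 * s,
        "alpha": 0.25 + 0.1 * s,
        "sigma": 0.01 + 0.05 * s,  # sigma_1 = sigma_2 = sigma_3
    }


def euler_maruyama(x0, t_end, dt, eta1=0.2, eta2=0.2, seed=0):
    """Euler-Maruyama path [(t, S, E, I), ...] of model (1.4) with an ASSUMED drift."""
    rng = random.Random(seed)
    S, E, I = x0
    sqdt = math.sqrt(dt)
    path = [(0.0, S, E, I)]
    for n in range(int(round(t_end / dt))):
        c = coefficients(n * dt)
        # Assumed incidence: exposed individuals infect with relative weight eta2.
        inc = c["beta"] * (1.0 - eta1) * S * (eta2 * E + I)
        fS = c["Lam"] - inc - c["mu"] * S
        fE = inc - (c["delta"] + c["mu"]) * E
        fI = c["delta"] * E - (c["theta"] + c["alpha"] + c["mu"]) * I
        # Independent Gaussian increments for B_1, B_2, B_3; noise proportional to state.
        S, E, I = (
            S + fS * dt + c["sigma"] * S * sqdt * rng.gauss(0.0, 1.0),
            E + fE * dt + c["sigma"] * E * sqdt * rng.gauss(0.0, 1.0),
            I + fI * dt + c["sigma"] * I * sqdt * rng.gauss(0.0, 1.0),
        )
        path.append(((n + 1) * dt, S, E, I))
    return path


path = euler_maruyama((0.3, 0.4, 0.2), t_end=40.0, dt=0.01)
print(path[-1])
```

The explicit scheme does not preserve positivity in general; with noise intensities this small and dt = 0.01 it stays positive in practice, but a smaller step or a positivity-preserving variant would be safer for larger noise.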

Example 2

In model (1.6), we assume that the Markov chain \(\xi (t)\) switches among these states \({{\mathbb {S}}}=\{1,2\}\) with the generator

and the corresponding stationary distribution is \(\pi =(\pi _1,\pi _2)=(0.4,0.6)\). We take \(\eta _1=\eta _2=0.2\) in both regimes and select \(\Lambda (1)=0.9\), \(\beta (1)=0.4\), \(\alpha (1)=0.25\), \(\mu (1)=0.2\), \(\theta (1)=0.15\), \(\sigma _1(1)=\sigma _2(1)=\sigma _3(1)=0.05\), and \(\Lambda (2)=1\), \(\beta (2)=0.6\), \(\alpha (2)=0.3\), \(\mu (2)=0.3\), \(\theta (2)=0.25\), \(\sigma _1(2)=\sigma _2(2)=\sigma _3(2)=0.1\); then \({\mathfrak {R}}_0^s\approx 1.0528286>1\). Hence, by Theorem 3.1, model (1.6) has a unique ESD, as illustrated in Fig. 3.
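A path of model (1.6) can be sketched by combining an Euler–Maruyama step with a first-order approximation of the Markov chain, in which the chain leaves regime k during a step of length dt with probability approximately \(-\gamma _{kk}\,dt\). Two inputs are hypothetical because they are not listed in Example 2: the values of \(\delta (k)\) and the generator, which is chosen here only so that \(\pi =(0.4,0.6)\). The drift is assumed to use the incidence \(\beta (k)(1-\eta _1)S(\eta _2E+I)\), consistent with the expression for \(R_0\) in Sect. 1. The function name `simulate_switching` is hypothetical, and regimes are indexed from 0.

```python
import math
import random

# Example 2 parameters; index 0 <-> regime 1, index 1 <-> regime 2.
LAM = (0.9, 1.0)
BETA = (0.4, 0.6)
ALPHA = (0.25, 0.3)
MU = (0.2, 0.3)
THETA = (0.15, 0.25)
SIGMA = (0.05, 0.1)  # sigma_1 = sigma_2 = sigma_3 within each regime
ETA1, ETA2 = 0.2, 0.2
DELTA = (0.5, 0.5)  # HYPOTHETICAL: delta(k) is not listed in Example 2
GAMMA = ((-3.0, 3.0), (2.0, -2.0))  # HYPOTHETICAL generator with pi = (0.4, 0.6)


def simulate_switching(x0, k0, t_end, dt, seed=0):
    """Euler-Maruyama path [(t, k, S, E, I), ...] of model (1.6), ASSUMED drift."""
    rng = random.Random(seed)
    S, E, I = x0
    k = k0
    sqdt = math.sqrt(dt)
    path = [(0.0, k, S, E, I)]
    for n in range(int(round(t_end / dt))):
        inc = BETA[k] * (1.0 - ETA1) * S * (ETA2 * E + I)  # assumed incidence
        fS = LAM[k] - inc - MU[k] * S
        fE = inc - (DELTA[k] + MU[k]) * E
        fI = DELTA[k] * E - (THETA[k] + ALPHA[k] + MU[k]) * I
        s = SIGMA[k]
        S, E, I = (
            S + fS * dt + s * S * sqdt * rng.gauss(0.0, 1.0),
            E + fE * dt + s * E * sqdt * rng.gauss(0.0, 1.0),
            I + fI * dt + s * I * sqdt * rng.gauss(0.0, 1.0),
        )
        # First-order switching: leave regime k with probability -gamma_kk * dt.
        if rng.random() < -GAMMA[k][k] * dt:
            k = 1 - k  # only two regimes, so the jump target is the other one
        path.append(((n + 1) * dt, k, S, E, I))
    return path


path = simulate_switching((0.3, 0.4, 0.2), 0, 50.0, 0.01)
print(path[-1])
```

The first-order switching rule is adequate only when \(-\gamma _{kk}\,dt\) is small (here at most 0.03); for larger rates, the exponential holding-time construction of the chain is more accurate.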

5 Discussions

Continuing our previous work [37] on the stochastic model (1.3) with infectivity in the latent period and household quarantine, we have considered two further stochastic models, which incorporate periodic variation (model (1.4)) and telegraph noise (model (1.6)), respectively. Sufficient criteria have been established for the existence of a positive T-periodic solution (Theorem 2.1) and of a unique ESD (Theorem 3.1). Our conclusions are summarised below.

- By Theorem 2.1, model (1.4) has a positive T-periodic solution if \({\mathfrak {R}}>1\). From the expression for \({\mathfrak {R}}\), low noise intensities \(\sigma _i^2(i=1,2,3)\) and a low household quarantine rate \(\eta _1\), reflecting inadequate cooperation with household quarantine, can prevent the disease from dying out; in other words, the disease persists and fluctuates periodically.

- By Theorem 3.1, model (1.6) has a unique ESD if \({\mathfrak {R}}_0^s>1\). This implies that decreasing the noise intensities \(\sigma _i^2(i=1,2,3)\) and the household quarantine rate \(\eta _1\) can lead to persistence of the disease at a positive prevalence level. Household quarantine, if strictly implemented, therefore plays a crucial role in controlling the spread of the disease, which is consistent with the findings of [25, 37]. It is worth pointing out that China's achievement in containing the epidemic benefited from the government's swift and decisive response, ranging from case detection and immediate isolation to strict contact tracing, medical observation of all contacts and household quarantine. In particular, both household quarantine and the tracing of latent individuals have a significant influence on the control of the epidemic.

Finally, we point out that the present work considers only the stochastic dynamics of the three-dimensional models (1.4) and (1.6) in the variables S, E and I. It would be worthwhile to incorporate periodic variation and telegraph noise, respectively, into the four-dimensional model that includes R. Other topics also deserve further investigation: for example, the effects of delay [36] or impulses [30] on the periodic model (1.4), or a version of model (1.6) driven by Lévy jumps [29]. We leave these issues for future work.

References

Kermack, W.O., McKendrick, A.G.: A contribution to the mathematical theory of epidemics. Proc. R. Soc. A 115, 700–721 (1927)

Schwartz, I.B., Smith, H.L.: Infinite subharmonic bifurcation in an SEIR epidemic model. J. Math. Biol. 18, 233–253 (1983)

Hethcote, H.W., van den Driessche, P.: An SIS epidemic model with variable population size and a delay. J. Math. Biol. 34, 177–194 (1995)

Cooke, K.L., van den Driessche, P.: Analysis of an SEIRS epidemic model with two delays. J. Math. Biol. 35, 240–260 (1996)

Hethcote, H.W.: The mathematics of infectious disease. SIAM Rev. 42, 599–653 (2000)

Li, G.H., Jin, Z.: Global stability of a SEIR epidemic model with infectious force in latent, infected and immune period. Chaos Solitons Fractals 25, 1177–1184 (2005)

Gray, A., Greenhalgh, D., Hu, L., Mao, X., Pan, J.: A stochastic differential equation SIS epidemic model. SIAM J. Appl. Math. 71, 876–902 (2011)

Zhao, Y.N., Jiang, D.Q.: The threshold of a stochastic SIS epidemic model with vaccination. Appl. Math. Comput. 243, 718–727 (2014)

Wang, L.W., Liu, Z.J., Zhang, X.A.: Global dynamics for an age-structured epidemic model with media impact and incomplete vaccination. Nonlinear Anal. Real World Appl. 32, 136–158 (2016)

Wang, L.W., Zhang, X.A., Liu, Z.J.: An SEIR epidemic model with relapse and general nonlinear incidence rate with application to media impact. Qual. Theory Dyn. Syst. 17, 309–329 (2018)

Han, S.Y., Lei, C.X.: Global stability of equilibria of a diffusive SEIR epidemic model with nonlinear incidence. Appl. Math. Lett. 98, 114–120 (2019)

Li, G.H., Jin, Z.: Global stability of an SEI epidemic model with general contact rate. Chaos Solitons Fractals 23, 997–1004 (2005)

Manou-Abi, S., Balicchi, J.: Analysis of the COVID-19 epidemic in French overseas department Mayotte based on a modified deterministic and stochastic SEIR model. medRxiv. (2020). https://doi.org/10.1101/2020.04.15.20062752

Li, G.H., Wang, W.D., Jin, Z.: Global stability of an SEIR epidemic model with constant immigration. Chaos Solitons Fractals 30, 1012–1019 (2006)

Li, X.Z., Fang, B.: Stability of an age-structured SEIR epidemic model with infectivity in latent period. Appl. Appl. Math. 4, 218–236 (2009)

Wilder-Smith, A., Freedman, D.O.: Isolation, quarantine, social distancing and community containment: pivotal role for old-style public health measures in the novel coronavirus (2019-nCoV) outbreak. J. Travel Med. 27, 1–4 (2020)

Hellewell, J., Abbott, S., Gimma, A., et al.: Feasibility of controlling COVID-19 outbreaks by isolation of cases and contacts. Lancet Glob. Health 8, e488–e496 (2020)

Feng, S., Shen, C., Xia, N., et al.: Rational use of face masks in the COVID-19 pandemic. Lancet Respir. Med. 8, 434–436 (2020)

Chu, D.K., Akl, E.A., Duda, S., et al.: Physical distancing, face masks, and eye protection to prevent person-to-person transmission of SARS-CoV-2 and COVID-19: a systematic review and meta-analysis. Lancet 395, 1973–1987 (2020)

Prem, K., Liu, Y., Russell, T.W., et al.: The effect of control strategies to reduce social mixing on outcomes of the COVID-19 epidemic in Wuhan, China: a modelling study. Lancet Public Health 5, e261–e270 (2020)

Maier, B.F., Brockmann, D.: Effective containment explains subexponential growth in recent confirmed COVID-19 cases in China. Science 368, 742–746 (2020)

Wu, Z., McGoogan, J.M.: Characteristics of and important lessons from the coronavirus disease 2019 (COVID-19) outbreak in China: summary of a report of 72314 cases from the Chinese Center for Disease Control and Prevention. JAMA 323, 1239–1242 (2020)

Cheng, V.C.C., Wong, S.C., Chuang, V.W.M., et al.: The role of community-wide wearing of face mask for control of coronavirus disease 2019 (COVID-19) epidemic due to SARS-CoV-2. J. Infect. 81, 107–114 (2020)

Kucharski, A.J., Klepac, P., Conlan, A.J.K., et al.: Effectiveness of isolation, testing, contact tracing, and physical distancing on reducing transmission of SARS-CoV-2 in different settings: a mathematical modelling study. Lancet Infect. Dis. 20, 1151–1160 (2020)

Jiao, J.J., Liu, Z.Z., Cai, S.H.: Dynamics of an SEIR model with infectivity in incubation period and homestead-isolation on the susceptible. Appl. Math. Lett. 107, 106442 (2020)

Yang, Q.S., Mao, X.R.: Extinction and recurrence of multi-group SEIR epidemic models with stochastic perturbations. Nonlinear Anal. Real World Appl. 14, 1434–1456 (2013)

Lahrouz, A., Omari, L.: Extinction and stationary distribution of a stochastic SIRS epidemic model with non-linear incidence. Statist. Probab. Lett. 83, 960–968 (2013)

Liu, M., Bai, C.Z., Wang, K.: Asymptotic stability of a two-group stochastic SEIR model with infinite delays. Commun. Nonlinear Sci. Numer. Simul. 19, 3444–3453 (2014)

Zhang, X.H., Wang, K.: Stochastic SEIR model with jumps. Appl. Math. Comput. 239, 133–143 (2014)

Wang, F.Y., Wang, X.Y., Zhang, S.W., Ding, C.M.: On pulse vaccine strategy in a periodic stochastic SIR epidemic model. Chaos Solitons Fractals 66, 127–135 (2014)

Liu, Q., Jiang, D.Q., Shi, N.Z., Hayat, T., Ahmad, A.: Asymptotic behavior of a stochastic delayed SEIR epidemic model with nonlinear incidence. Phys. A 462, 870–882 (2016)

Zhang, X.B., Shi, Q.H., Ma, S.H., Huo, H.F., Li, D.G.: Dynamic behavior of a stochastic SIQS epidemic model with Lévy jumps. Nonlinear Dyn. 93, 1481–1493 (2018)

Mu, X.J., Zhang, Q.M., Rong, L.B.: Optimal vaccination strategy for an SIRS model with imprecise parameters and Lévy noise. J. Frankl. Inst. 356, 11385–11413 (2019)

Wei, F.Y., Xue, R.: Stability and extinction of SEIR epidemic models with generalized nonlinear incidence. Math. Comput. Simul. 170, 1–15 (2020)

Zhao, X., He, X., Feng, T., Qiu, Z.P.: A stochastic switched SIRS epidemic model with nonlinear incidence and vaccination: stationary distribution and extinction. Int. J. Biomath. 13, 2050020 (2020)

Shi, X.Y., Cao, Y.M.: Dynamics of a stochastic periodic SIRS model with time-delay. Int. J. Biomath. 13, 2050072 (2020)

Shangguan, D.C., Liu, Z.J., Wang, L.W., Tan, R.H.: A stochastic epidemic model with infectivity in incubation period and homestead-isolation on the susceptible. J. Appl. Math. Comput. (2021). https://doi.org/10.1007/s12190-021-01504-1

Cao, Z.W., Cao, W.J., Xu, X.J., Han, Q.X., Jiang, D.Q.: The threshold behavior and periodic solution of stochastic SIR epidemic model with saturated incidence. J. Nonlinear Sci. Appl. 9, 4909–4923 (2016)

Lin, Y.G., Jiang, D.Q., Liu, T.H.: Nontrivial periodic solution of a stochastic epidemic model with seasonal variation. Appl. Math. Lett. 45, 103–107 (2015)

Liu, S.N., Xu, X.J., Jiang, D.Q., Hayat, T., Ahmad, B.: Stationary distribution and extinction of the DS-I-A model disease with periodic parameter function and Markovian switching. Appl. Math. Comput. 311, 66–84 (2017)

Rifhat, R., Wang, L., Teng, Z.D.: Dynamics for a class of stochastic SIS epidemic models with nonlinear incidence and periodic coefficients. Phys. A 481, 176–190 (2017)

Zhang, X.H., Jiang, D.Q., Hayat, T., Alsaedi, A.: Periodic solution and stationary distribution of stochastic S-DI-A epidemic models. Appl. Anal. 97, 179–193 (2018)

Qi, H.K., Leng, X.N., Meng, X.Z., Zhang, T.H.: Periodic solution and ergodic stationary distribution of SEIS dynamical systems with active and latent patients. Qual. Theory Dyn. Syst. 18, 347–369 (2019)

Zhang, W.W., Meng, X.Z., Dong, Y.L.: Periodic solution and ergodic stationary distribution of stochastic SIRI epidemic systems with nonlinear perturbations. J. Syst. Sci. Complex. 32, 1104–1124 (2019)

Settati, A., Lahrouz, A.: Stationary distribution of stochastic population systems under regime switching. Appl. Math. Comput. 244, 235–243 (2014)

Zhang, X.H., Jiang, D.Q., Alsaedi, A., Hayat, T.: Stationary distribution of stochastic SIS epidemic model with vaccination under regime switching. Appl. Math. Lett. 59, 87–93 (2016)

Qi, H.K., Zhang, S.Q., Meng, X.Z., Dong, H.H.: Periodic solution and ergodic stationary distribution of two stochastic SIQS epidemic systems. Phys. A 508, 223–241 (2018)

Has’minskii, R.Z.: Stochastic Stability of Differential Equations. Sijthoff Noordhoff, Alphen aan den Rijn, The Netherlands (1980)

Mao, X.R., Marion, G., Renshaw, E.: Environmental Brownian noise suppresses explosions in population dynamics. Stoch. Process. Appl. 97, 95–110 (2002)

Acknowledgements

We would like to thank the referees and editor for their careful reading of the original manuscript and their valuable comments and suggestions that greatly improved the presentation of this work. The work is supported by the NNSFs of China (Nos. 11871201, 12001178).


Cite this article

Shangguan, D., Liu, Z., Wang, L. et al. Periodicity and stationary distribution of two novel stochastic epidemic models with infectivity in the latent period and household quarantine. J. Appl. Math. Comput. 68, 2551–2570 (2022). https://doi.org/10.1007/s12190-021-01627-5


DOI: https://doi.org/10.1007/s12190-021-01627-5