Abstract

Medical students encounter specific stressors that can lead to higher levels of psychological distress than those of same-age peers who study other subjects. The Medical Student Stressor Questionnaire (MSSQ) was developed to identify stressors among medical students and to measure the intensity of the stress they cause. However, no Italian version has been validated to date. The aim of this study was to develop an Italian version of the MSSQ (MSSQ-I) and to assess its psychometric properties. The MSSQ was translated and culturally validated into Italian. All medical students from the University of Brescia were invited to take part in a web-survey in which they were asked to complete the MSSQ-I. To analyse stability over time, participating students were asked to complete the MSSQ-I again after about one month. Among 1754 medical students, 964 completed the MSSQ-I for the validation analyses. A first Confirmatory Factor Analysis and a subsequent Exploratory Factor Analysis outlined a slightly modified factor structure of the scale, with five factors instead of the six in the original scale. The internal consistency of the overall scale was high (α = 0.94), as was the internal consistency of each of the new factors (α > 0.83 for the first four factors and α = 0.67 for the fifth). External validity analysis confirmed moderate/high concurrent validity. These results suggest that the MSSQ-I can be used as a tool to identify stressors among Italian medical students, although further research is needed to confirm its individual test-retest reliability.

Introduction

University has always been regarded as a highly stressful environment for students (Bayram and Bilgel 2008; Stanley and Manthorpe 2001; Leppink et al. 2016) and the rates of psychological stress and morbidity among University students are higher than those seen in the general population (Deasy et al. 2014; Stallman 2010). Medical training further adds to the already stressful environment, with medical students reporting higher levels of psychological distress than their same-age peers (Dyrbye et al. 2011; Brazeau et al. 2014) and than non-medical students (de La Rosa-Rojas et al. 2015; Moreira de Sousa et al. 2018). This suggests that medical education itself contributes to student distress by presenting specific stressors, defined as personal or environmental events that cause stress (Lazarus 1990).

Many researchers have highlighted the importance of early identification of psychological distress and stressors among medical students, which can prevent the onset of possible future mental disorders (Firth 1986; Dyrbye et al. 2011; Saxena et al. 2014; Matheson et al. 2016; McLuckie et al. 2018). Several studies have identified different major stressors for medical students, including excessive workload, difficulties with studying and time management, exposure to patient death and suffering, and need to succeed (Dyrbye et al. 2005; Santen et al. 2010; Chang et al. 2012; Hill et al. 2018).

Studies evaluating stress in medical students (Chen et al. 2015; Ludwig et al. 2015; Park et al. 2015; Saeed et al. 2016; Heinen et al. 2017) usually rely on scales such as the Perceived Stress Scale (Cohen et al. 1983) or the Kessler Psychological Distress Scale (Kessler et al. 2002), whose validity and reliability are well established but which are not specific to medical students.

Only a few instruments have been developed to assess stress in medical students. The Perceived Medical School Stress Instrument (Vitaliano et al. 1989) includes items which describe negative attitudes and perceived dissatisfaction regarding the medical school experience, while the Medical Student Well-Being Index (Dyrbye et al. 2011) evaluates symptoms of burnout, depression, fatigue, and quality of life.

Several countries have addressed the need for validated instruments assessing psychological distress in medical students through national projects and studies. For example, Kim et al. (2014) developed and validated a stress scale for medical students in Korea, and in the same country Shim and colleagues validated the Korean version of the Higher Education Stress Inventory with medical students (Shim et al. 2016). In Germany, Kötter and Voltmer validated the German version of the Perceived Medical School Stress Instrument (Kötter and Voltmer 2013). However, such tools do not specifically and comprehensively assess the different stressors encountered by medical students.

The Medical Student Stressor Questionnaire (MSSQ) was developed to provide a valid and reliable self-administered instrument to identify stressors among medical students as well as to measure the intensity of stress caused by the stressors (Yusoff et al. 2010). The results found that the MSSQ has good psychometric properties; factor analysis showed that all the items are well distributed and reliability analysis showed that the MSSQ has a high internal consistency as Cronbach’s alpha coefficient value was 0.95 (Yusoff et al. 2010; Yusoff 2011).

MSSQ authors grouped stressors of medical students into categories identified by the six factors of the scale: (i) Academic Related Stressors, (ii) Intrapersonal and Interpersonal Related Stressors, (iii) Teaching and Learning-Related Stressors, (iv) Social Related Stressors, (v) Drive and Desire Related Stressors, and (vi) Group Activities Related Stressors.

The 40 items of the MSSQ represent events that have been identified as the most probable stressors in medical students. Respondents are asked to rate each event by choosing one of five responses: 0 = ‘causing no stress at all’, 1 = ‘causing mild stress’, 2 = ‘causing moderate stress’, 3 = ‘causing high stress’, and 4 = ‘causing severe stress’.
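The response format described above can be illustrated with a minimal Python sketch that encodes the five options as integers and averages them over a group of items. The averaging rule and the names `RESPONSE_LABELS` and `mean_item_score` are assumptions made for this illustration; the text does not state a scoring procedure.

```python
# Response options of the MSSQ as described in the text (coded 0 to 4).
RESPONSE_LABELS = {
    0: "causing no stress at all",
    1: "causing mild stress",
    2: "causing moderate stress",
    3: "causing high stress",
    4: "causing severe stress",
}


def mean_item_score(responses):
    """Average item responses coded 0-4 (assumed scoring rule, not from the text)."""
    if not responses:
        raise ValueError("at least one response is required")
    for r in responses:
        if r not in RESPONSE_LABELS:
            raise ValueError(f"invalid response code: {r!r}")
    return sum(responses) / len(responses)


# Hypothetical answers of one student to four items belonging to one factor.
example = [3, 4, 2, 3]
score = mean_item_score(example)
print(score, RESPONSE_LABELS[round(score)])
```

Under this assumed rule, a factor score stays on the same 0 to 4 scale as the individual items, which makes it easy to read against the response labels.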

The MSSQ has been used to assess stressors in medical students in Asia (Yusoff et al. 2011; Saxena et al. 2014; Eva et al. 2015; Patil et al. 2017; Ghosal and Behera 2018), Africa (Melaku et al. 2015) and Europe (Romania: Bob et al. 2014; The Netherlands: Yee and Yusoff 2013).

In Italy, in recent years, there has been growing interest in studying stress in University students (Cavallo et al. 2016; Portoghese et al. 2019; Salvarani et al. 2020), but only a few studies have evaluated psychological distress in medical students (Serenari et al. 2019; Molodynski et al. 2020) and there is a lack of Italian instruments for the assessment of stressors in this population. The objective of this study was to develop an Italian version of the MSSQ (MSSQ-I) and to assess its psychometric properties. More specifically, we aimed to translate and culturally validate the MSSQ into Italian, test the questionnaire in a representative sample of medical students, and collect data to analyse its construct validity, internal consistency, external validity and stability over time (Boateng et al. 2018).

Methods

Design and Sample

This study was conducted at the University of Brescia, in the North of Italy. It is a medium-sized University that currently has 13,886 students enrolled, of whom 1754 attend the degree course in Medicine and Surgery.

In April 2019, all medical students were invited to take part in a web-survey in which they were asked to complete the MSSQ-I and provide information about their age, gender and academic year. Students were recruited through the institutional email address that the University of Brescia automatically sets up for each student after confirmation of enrolment and uses for all institutional communications. The online survey was created with LimeSurvey (www.limesurvey.org), an open-source online survey application that allows completely anonymous data collection. The software automatically sends an email to all participants with a personal link to access the survey. Once a participant completes the survey, LimeSurvey deletes any link between the participant and their answers. Thus, only de-identified data are delivered to the investigators, preserving participants’ anonymity.

Each student received a first email from LimeSurvey with a detailed description of the study and information about the questionnaire, voluntary participation and survey anonymity, together with the link to access the survey. Up to three reminder emails were sent by the software to students who had not completed the survey. On accessing the online survey, students were asked to confirm their consent to participate. The study protocol was approved by the University of Brescia Institutional Review Board.

In order to test the stability over time of the MSSQ-I, students involved in the first survey were asked to complete the MSSQ-I again after about one month. In this case the MSSQ-I was included in a broader online survey aimed at evaluating the well-being of students. This survey also included the University Stress Scale (USS; Stallman 2008), which provides a measure of the cognitive appraisal of demands across the range of environmental stressors experienced by University students and was used to perform the external validity analysis.

The Italian Version of MSSQ

Firstly, the authors of the original MSSQ were contacted to obtain the English version of the questionnaire. The MSSQ was linguistically validated and translated into Italian by two authors (JD and CB) and an intern collaborating with AG, all with fluent English skills, according to the Principles of Good Practice for the Translation and Cultural Adaptation Process for Patient-Reported Outcome Measures (Wild et al. 2005).

These three translations were synoptically compared in order to overcome any divergent translations and to achieve a full consensus. This resulted in a preliminary MSSQ-I. Then, a native English-speaking translator with fluent Italian skills was asked to translate the preliminary MSSQ-I back into English. The authors performed the review of this secondary English translation and any incongruities were rationalized and cleared up.

With the help of a representative group of medical students, we conducted a cultural validation assessing the level of comprehensibility and cognitive equivalence of the translation. In this phase, all 40 items were examined and item 13 “Partecipazione alle presentazioni in aula” (“Participation in class presentation” in the English version of the MSSQ) was judged not applicable to the context of the University of Brescia (as students in the medical course of this University are not required to give class presentations) and was excluded from the MSSQ-I.

The final version of the MSSQ-I was formulated, checking for minor errors which had been missed during the translation process.

Accessing the web-survey, students were asked to complete a digital version of the MSSQ-I graphically equivalent to the paper version. A brief paragraph with a description of the questionnaire and the Italian translation of the original instructions for completing the questionnaire were added.

On each row an item was presented (e.g. “Exams”), and five response options were given, from 0=“causing no stress at all” to 4=“causing severe stress”.

Data Analysis

The data were described as the mean and standard deviation (SD) for continuous variables or as frequencies and percentages for categorical variables. The Gaussianity assumption of continuous variables was assessed using Kolmogorov–Smirnov and Shapiro–Wilk tests.

As item 13 was considered non-applicable for our sample, the original construct of the scale needed to be reassessed. For this purpose, we performed a first Confirmatory Factor Analysis (CFA) to test the validity of the factor structure (construct validity) shown in the original validation study (Yusoff et al. 2010). Goodness of fit of the CFA was evaluated by the following indices: Root Mean Square Error of Approximation (RMSEA) smaller than 0.05, Comparative Fit Index (CFI) greater than 0.90, Tucker-Lewis coefficient (TLI) greater than 0.90 and ratio of the Chi-square value to its degrees of freedom (chi2/df) of less than 2.5. Subsequently, we performed an Exploratory Factor Analysis (EFA) to determine the new factor structure of the MSSQ-I. According to recommendations on scale development and validation studies (Worthington and Whittaker 2006; Cabrera-Nguyen 2010), we randomly split the sample in order to conduct EFA and CFA on two different samples of the same size. More specifically, we first performed EFA on one randomly selected half of the sample (calibration sample), and then tested the factor structure derived from EFA by CFA on the other half of the sample (validation sample). EFA was carried out using orthogonal varimax rotation to ensure clarity of the factor structure and ease of interpretation (Bartholomew et al. 2008). The Kaiser–Meyer–Olkin (KMO) test verified the sampling adequacy for this analysis, and Bartlett’s Test of Sphericity assessed the degree of inter-correlation between variables. The number of factors was determined from the scree plot. A factor loading greater than 0.35 was taken as the threshold indicating that an item contributed sufficiently to the factor.
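The random split into calibration and validation samples can be sketched as follows. This is a minimal illustration in Python, not the procedure actually run in SPSS; the function name `split_half`, the use of integer respondent identifiers and the seed value are assumptions made for the example.

```python
import random


def split_half(respondent_ids, seed=0):
    """Randomly split respondents into two halves: (calibration, validation)."""
    ids = list(respondent_ids)
    rng = random.Random(seed)  # fixed seed only so the example is reproducible
    rng.shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


# 964 is the number of respondents reported for the validation analyses;
# the identifiers 0..963 are hypothetical placeholders.
calibration, validation = split_half(range(964), seed=42)
print(len(calibration), len(validation))
```

EFA would then be run on the calibration half only, and the resulting factor structure tested by CFA on the validation half, so that the structure is not confirmed on the same data that generated it.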

Cronbach’s alpha coefficients were calculated to determine the internal consistency for both the whole scale and each factor, and values ≥0.70 were considered adequate. Any change in consistency was evaluated by removing one item at a time.
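For readers less familiar with these statistics, the following Python sketch shows how Cronbach’s alpha and the "alpha if item deleted" values can be computed from a respondents-by-items matrix. It uses the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores); the function names and the toy data are hypothetical and are not taken from the study.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha; rows is a list of respondents, each a list of item scores."""
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def alpha_if_item_deleted(rows):
    """Alpha recomputed after removing each item in turn."""
    k = len(rows[0])
    return [
        cronbach_alpha([row[:j] + row[j + 1:] for row in rows])
        for j in range(k)
    ]


# Hypothetical toy data: 5 respondents, 4 items; the last item is noisy.
toy = [
    [0, 1, 0, 3],
    [1, 1, 2, 0],
    [2, 2, 2, 4],
    [3, 3, 4, 1],
    [4, 4, 4, 2],
]
print(round(cronbach_alpha(toy), 3))
print([round(a, 3) for a in alpha_if_item_deleted(toy)])
```

In the toy data, dropping the noisy last item raises alpha, which is the same kind of evidence used to judge whether an item fits its subscale.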

In addition, McDonald’s omega coefficient was computed (Zinbarg et al. 2005).

External validity (concurrent/divergent validity) was evaluated using Pearson’s correlation coefficient between each factor of the MSSQ-I and the total score of the USS.
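As a reminder of what this coefficient measures, a minimal Python sketch of Pearson’s r is given below. The function name `pearson_r` and the two score vectors are hypothetical and serve only to illustrate the computation.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical scores: one MSSQ-I factor and the USS total for six students.
factor_scores = [1.2, 2.5, 3.1, 0.8, 2.9, 1.9]
uss_totals = [14, 22, 30, 10, 25, 21]
print(round(pearson_r(factor_scores, uss_totals), 2))
```

A high positive r between a factor and the USS total would support concurrent validity, while a low r would be read as divergent validity for that factor.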

Finally, the stability of the instrument over time was assessed at the aggregate level, because the anonymized survey made it impossible to compute Pearson’s correlation on individual pre-post measures. In detail, the stability of the MSSQ-I was assessed by comparing indices (mean, SD, median, interquartile range) and violin plots of the group distributions of each MSSQ-I factor, computed from the scale items administered on the two occasions: at baseline and one month later. In addition, we computed bootstrap estimates of the distribution indices of the five factors in order to provide an exhaustive evaluation of their stability over time.
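The aggregate comparison can be illustrated with a percentile bootstrap, sketched below in Python. This is an assumption about the general approach, not the SPSS procedure used in the study; the function name `bootstrap_ci`, the number of resamples and all scores are hypothetical.

```python
import random
from statistics import mean


def bootstrap_ci(sample, stat=mean, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for a statistic of one sample."""
    rng = random.Random(seed)  # fixed seed only for reproducibility
    n = len(sample)
    estimates = sorted(
        stat(rng.choices(sample, k=n)) for _ in range(n_boot)
    )
    lo_idx = int((1 - level) / 2 * n_boot)
    hi_idx = int((1 + level) / 2 * n_boot) - 1
    return estimates[lo_idx], estimates[hi_idx]


# Hypothetical factor scores at the second evaluation.
second = [2.1, 1.8, 2.5, 2.0, 2.3, 1.9, 2.2, 2.4, 1.7, 2.1]
# Hypothetical mean of the same factor at baseline.
baseline_mean = 2.05

low, high = bootstrap_ci(second)
print(low <= baseline_mean <= high)
```

If the baseline value of an index falls inside the bootstrap interval obtained at the second evaluation, the two group distributions are treated as consistent on that index. The same function can be applied to the median or other indices by passing a different `stat`.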

All statistical analyses were carried out using the SPSS 21.0 and SPSS-AMOS programs. The significance level was set at 0.05.

Results

Characteristics of Participants

Of the 1754 students in the study population, 1027 (58.6%) agreed to participate by accessing the web-survey. Only responses with a survey completion rate greater than 65% were included (964/1754, 55.0%); among these, fewer than 5% of respondents had incomplete data. A Bayesian data imputation procedure (through the SPSS-AMOS package) was applied to impute the missing values.

The mean age of participants was 23.6 years (SD = 3.9) and most students were women (61.3%). With regard to the distribution of students by academic year, 13.5% were enrolled in the first year, 13.4% in the second year, 16.4% in the third year, 16.6% in the fourth year and 16.1% in the fifth year, while the largest group of respondents was enrolled in the sixth (and last) year (24.0%).

The table in Online Resource 1 shows descriptive statistics for the 39 MSSQ-I items. The items with the highest mean scores were Tests/examinations (mean = 3.08, SD = 0.75), Falling behind in reading schedule (mean = 3.05, SD = 0.96) and Heavy workload (mean = 3.01, SD = 0.90).

Construct Validity of MSSQ-I

CFA was performed on the overall sample (n = 964) to evaluate the goodness of fit of the factor solution proposed by Yusoff et al. (2010). As reported in the figure in Online Resource 2, the CFA showed poor fit: RMSEA = 0.079 (90% CI: 0.020, 0.102); CFI = 0.757; TLI = 0.724; chi2/df = 7.064; p < .001, indicating that the original six-factor MSSQ construct did not fit our sample. Thus, a subsequent EFA was performed in order to determine the optimal factor structure of the MSSQ-I.

After splitting the sample into two halves, EFA was conducted on one half of the sample (calibration sample) and the results were confirmed by applying the CFA to the other half (validation sample). Descriptive statistics of the two split samples (confirming homogeneity in terms of age, sex and academic year) are reported in Online Resource 3. The EFA outlined five factors (based on the scree plot evaluation, see figure in Online Resource 4), explaining 52.2% of the total variability. The factors were labelled according to the item content, as follows: Academic Related Stressors (ARS), Teaching and Learning Related Stressors (TLRS), Staff and Students Related Stressors (SSRS), Intrapersonal and Expectations Related Stressors (IERS), and Patients Related Stressors (PRS) (see Table 1). The ARS and TLRS subscales appeared semantically similar to two subscales proposed in the original MSSQ (Yusoff et al. 2010), so we maintained the same labels. Item 11 “Partecipazione alle discussioni in aula” (“Participation in class discussion” in the English version) showed a factor loading under the cut-off (0.35) and was excluded from the MSSQ-I.

Internal Consistency

The overall scale showed high internal consistency (Cronbach’s alpha = 0.94; McDonald’s omega = 0.96). Cronbach’s alpha and McDonald’s omega values for the subscales were as follows: ARS α = 0.89, ω = 0.91; TLRS α = 0.87, ω = 0.89; SSRS α = 0.86, ω = 0.89; IERS α = 0.83, ω = 0.88; PRS α = 0.67, ω = 0.73. As reported in Table 1, the alpha value of the PRS subscale was higher (0.721) when item 38 (“Lavorare con i computer”, which corresponds to “Working with computer” in the original English MSSQ) was removed from the scale. Considering also that item 38 was semantically inconsistent with the other PRS items, it was excluded from the MSSQ-I.

CFA on the Five Factors of the Italian Version of MSSQ-I

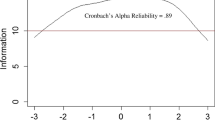

CFA on the newly derived five factors was performed on the validation sample. As shown in Fig. 1, the fit of the model to the data was excellent (RMSEA = 0, [90% CI: 0, 0.03]; CFI = 1.000; TLI = 1.002; chi2/df = 0.569; p = 0.451). The factor loadings were all high (larger than 0.70) except for the PRS factor (equal to 0.39), indicating that the PRS subscale was less (but still significantly) associated with the common latent domain measuring the stress caused by medical course stressors.
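The goodness-of-fit criteria stated in the Data Analysis section can be applied to the two sets of reported indices with a short Python check. The function name `acceptable_fit` is hypothetical; the thresholds and the index values are those given in the text.

```python
def acceptable_fit(rmsea, cfi, tli, chi2_df):
    """Apply the cut-offs from the Data Analysis section to one CFA model."""
    return rmsea < 0.05 and cfi > 0.90 and tli > 0.90 and chi2_df < 2.5


# Original six-factor structure, CFA on the overall sample (n = 964).
original_six_factor = acceptable_fit(rmsea=0.079, cfi=0.757, tli=0.724, chi2_df=7.064)

# EFA-derived five-factor structure, CFA on the validation sample.
new_five_factor = acceptable_fit(rmsea=0.0, cfi=1.000, tli=1.002, chi2_df=0.569)

print(original_six_factor, new_five_factor)  # False True
```

The original six-factor model misses all four cut-offs, whereas the five-factor model meets every one of them.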

External Validity

The total USS score showed significant, moderate-to-high Pearson’s correlations with the ARS and IERS factors (r = 0.52 and r = 0.58, p < 0.001 for both), moderate correlations with the TLRS and SSRS factors (r = 0.39 and r = 0.40, p < 0.001 for both) and only a weak correlation with PRS (r = 0.16, p = 0.002) (see Table 2). These results confirmed good concurrent validity of the first four factors with the domain measured by the USS (the domains and extent of stress experienced by University students), and indicated fairly good divergent validity for PRS (as expected, considering that the domain measured by the USS does not include the relationship with patients).

Stability over Time of the MSSQ-I

Among the 964 students who completed the survey at baseline, 617 (64%) completed the second survey after about one month (second evaluation). Because of anonymization, the stability over time of the five factors of the MSSQ-I was evaluated by inspecting their distributions (figure in Online Resource 5) and the corresponding indices (table in Online Resource 6). The violin plots of the five factors computed at the first and second group evaluations showed substantially equal distributions, confirming the stability of the five MSSQ-I factors across time. The medians and interquartile ranges depicted by the white box-plot inside each violin plot show very similar values. A mean difference between the first and second evaluation was found for the TLRS factor only (p < 0.001); however, it is worth noting that this difference is purely statistical (owing to the large samples). Moreover, all the 95% bootstrap confidence intervals computed for the distribution indices at the second evaluation included the corresponding values of the first evaluation, indicating consistent stability over time of the factor distributions. Details about these and other statistical distribution indices are shown in the table in Online Resource 6 and in the figure in Online Resource 5.

Discussion

The objective of this study was to develop the MSSQ-I and to assess its psychometric properties in a sample of 964 medical students of the University of Brescia. The MSSQ-I has 37 items instead of 40: one item (item 13) was discarded during the cultural validation and two items were excluded during the psychometric analyses (item 11 because of its low factor loading in the EFA, and item 38 after the internal consistency analysis).

The multidimensional structure of the MSSQ was confirmed; however, the EFA outlined five factors instead of the six in the original scale. More specifically, the ARS factor refers to any University, educational or student event that causes stress to students, such as the examination system, the academic schedule, and lack of time. This factor was very similar to the ARS factor described in the original MSSQ, so we decided to keep the same label. The TLRS factor refers to any event related to teaching or learning that causes stress, including teachers’ competency and the quality of feedback given by teachers. Like the ARS factor, the TLRS factor was very similar to the corresponding factor in the original MSSQ, so we again kept the same label. In the MSSQ-I we could not confirm the original factors Intrapersonal and Interpersonal Related Stressors, Social Related Stressors, Drive and Desire Related Stressors, and Group Activities Related Stressors. However, some comparisons can be made between these original factors and the new factors of the MSSQ-I.

In MSSQ-I, SSRS factor refers to any form or aspect of relationships with University personnel, teachers and other students that causes stress. This category of stressors is included in the original Social Related Stressors factor, which also included other forms of relationship that can cause stress, for example the relationship with patients. In MSSQ-I, dealing with patients and their suffering is included in a specific factor, the PRS.

In MSSQ-I, IERS factor refers to both intrapersonal stressors including poor motivation, self-conflict, unwillingness to study medicine, and stressors related to expectations that other people (e.g. parents) may have for the student. These areas are also relevant in the original version of MSSQ and are included in the Intrapersonal and Interpersonal Related Stressors factor and in the Drive And Desire Related Stressors factor.

Such differences in factor structure between the MSSQ-I and the original MSSQ may be explained by differences in the medical course system and in culture between our Italian sample and the original Malaysian sample. For example, in Italy the degree in Medicine and Surgery lasts six years, while in Malaysia the typical duration is five years. Group activities such as class presentations and discussions are common in Malaysian medical courses, while they are quite unusual in Italy. Methods of assessment vary as well: in Italy oral examinations are very frequent, while in Malaysia other methods such as multiple choice questions and one best answer are preferred (Lim 2008).

In our sample, the top stressors were all included in the ARS factor and were therefore related to academic matters: Tests/examinations, Falling behind in reading schedule, and Heavy workload. Such results are consistent with those presented in other studies from different countries (Yee and Yusoff 2013; Bob et al. 2014; Melaku et al. 2015; Patil et al. 2017) and suggest that the most stressful aspects of medical training are similar for students from different cultures and school systems.

The validation found that the MSSQ-I has good psychometric properties: the five factors are clearly distinct and their loadings were all high, with the exception of the PRS factor, indicating that, in our sample, dealing with patients is not strongly associated with the stress caused by medical course stressors. The CFA carried out on the EFA-derived factor structure showed an excellent fit of the model to the data, providing a robust validation of the MSSQ-I construct structure.

The external validity analysis also confirmed good concurrent validity with the USS, which measures the stress experienced by University students, and indicated fairly good divergent validity for PRS (as expected, considering that the USS is not specific to medical students and therefore does not include the relationship with patients, which is the domain covered by PRS).

Similarly, the analysis of stability over time supported the reliability of the MSSQ-I, although a mean difference between the first and second evaluation (2.01 and 2.20) was found for the TLRS factor. Clinically, the two means do not indicate a noteworthy difference in the intensity of stress; the difference is statistically significant because of the large samples.

Therefore, results suggest that the MSSQ-I is a valid and reliable instrument that can be used to identify stressors and measure the intensity of stress caused by stressors.

Strengths and Limitations

The large sample of students who completed the survey at baseline, together with the similarly large amount of data gathered in the second administration of the MSSQ-I to assess its stability over time, supports the robustness of the findings and an adequate generalization of the results to the medical student population. Another strength of this study is the application of the internationally accepted Principles of Good Practice for the Translation and Cultural Adaptation Process (Wild et al. 2005) in developing the MSSQ-I. Among the limitations, standard test-retest reliability could not be computed because the survey was anonymized, which compelled us to assess the stability over time of the MSSQ-I at the aggregate level, by comparing distribution indices of the scale factors. Nevertheless, the information obtained from the factor distributions over time still allowed stability to be assessed. Another methodological weakness is the unavailability of Italian scales measuring specific stressors in medical students. However, we performed the external validity analysis of the MSSQ-I using the USS, which allowed us to assess both concurrent and divergent validity. Similarly, we could not compare the factor solution originally proposed by Yusoff et al. (2010) with the factor solution proposed for the MSSQ-I in terms of CFA goodness of fit, because no CFA on the six factors of the MSSQ was performed in the validation paper of the original scale.

Available data on web-surveys among University student populations show variable response rates. In this web-survey the response rate was higher than in other studies (Ridner et al. 2016; Lanthier-Veilleux et al. 2016; Auerbach et al. 2018; Mortier et al. 2018); however, we could not analyse possible nonresponse bias because we were unable to collect data on students who declined to participate, and this may be considered a limitation of the current study. Finally, confirmation of these findings through multicentre studies would be appropriate.

Conclusions

The study was conducted on a large sample of Italian medical students and it supported the validity and the reliability of the MSSQ-I. Considering the high prevalence of psychological distress in medical students potentially leading to severe impairment in their academic achievement and personal development, the MSSQ-I could be adopted by Universities as a guide for tailored strategies to reduce stress and promote mental health.

Acknowledgements

We thank Robert Alexander Coates for his kind collaboration in the back-translation of the MSSQ-I and Benedetta Santini for her support in the development of the MSSQ-I. Finally, we are particularly grateful to all the medical students of the University of Brescia who participated in this study.

Data Availability

The datasets generated during the current study are available from the corresponding author on reasonable request.

References

Auerbach, R. P., Mortier, P., Bruffaerts, R., Alonso, J., Benjet, C., Cuijpers, P., Demyttenaere, K., Ebert, D. D., Green, J. G., Hasking, P., Murray, E., Nock, M. K., Pinder-Amaker, S., Sampson, N. A., Stein, D. J., Vilagut, G., Zaslavsky, A. M., Kessler, R. C., & WHO WMH-ICS Collaborators. (2018). WHO world mental health surveys international college student project: Prevalence and distribution of mental disorders. Journal of Abnormal Psychology, 127(7), 623–638.

Bartholomew, D. J., Steele, F., Galbraith, J., & Moustaki, I. (2008). Analysis of multivariate social science data (2nd ed., Statistics in the Social and Behavioral Sciences Series). Boca Raton: Taylor & Francis.

Bayram, N., & Bilgel, N. (2008). The prevalence and socio-demographic correlations of depression, anxiety and stress among a group of university students. Social Psychiatry and Psychiatric Epidemiology, 43(8), 667–672.

Boateng, G. O., Neilands, T. B., Frongillo, E. A., Melgar-Quiñonez, H. R., & Young, S. L. (2018). Best practices for developing and validating scales for health, social, and behavioral research: A primer. Frontiers in Public Health, 6, 149.

Bob, M. H., Popescu, C. A., Pîrlog, R., & Buzoianu, A. D. (2014). Personality factors associated with academic stress in first year medical students. HVM Bioflux, 6(1), 40–44.

Brazeau, C. M., Shanafelt, T., Durning, S. J., Massie, F. S., Eacker, A., Moutier, C., Satele, D. V., Sloan, J. A., & Dyrbye, L. N. (2014). Distress among matriculating medical students relative to the general population. Academic Medicine, 89(11), 1520–1525.

Cabrera-Nguyen, P. (2010). Author guidelines for reporting scale development and validation results. Journal of the Society for Social Work and Research, 1, 99–103.

Cavallo, P., Carpinelli, L., & Savarese, G. (2016). Perceived stress and bruxism in university students. BMC Research Notes, 9(1), 514.

Chang, E., Eddins-Folensbee, F., & Coverdale, J. (2012). Survey of the prevalence of burnout, stress, depression, and the use of supports by medical students at one school. Academic Psychiatry, 36, 177–182.

Chen, Y., Henning, M., Yielder, J., Jones, R., Wearn, A., & Weller, J. (2015). Progress testing in the medical curriculum: students' approaches to learning and perceived stress. BMC Medical Education, 15, 147.

Cohen, S., Kamarck, T., & Mermelstein, R. (1983). A global measure of perceived stress. Journal of Health and Social Behavior, 24(4), 385–396.

de La Rosa-Rojas, G., Chang-Grozo, S., Delgado-Flores, L., Oliveros-Lijap, L., Murillo-Pérez, D., Ortiz-Lozada, R., Vela-Ulloa, G., & Yhuri Carreazo, N. (2015). Level of stress and coping strategy in medical students compared with students of other careers. Gaceta Medica de Mexico, 151(4), 443–449.

Deasy, C., Coughlan, B., Pironom, J., Jourdan, D., & Mannix-McNamara, P. (2014). Psychological distress and coping amongst higher education students: A mixed method enquiry. PLoS One, 9(12), e115193.

Dyrbye, L. N., Thomas, M. R., & Shanafelt, T. D. (2005). Medical student distress: Causes, consequences, and proposed solutions. Mayo Clinic Proceedings, 80, 1613–1622.

Dyrbye, L. N., Harper, W., Durning, S. J., Moutier, C., Thomas, M. R., Massie, F. S. Jr., Eacker, A., Power, D. V., Szydlo, D. W., Sloan, J. A., & Shanafelt, T. D. (2011). Patterns of distress in US medical students. Medical Teacher, 33(10), 834–839.

Eva, E. O., Islam, M. Z., Mosaddek, A. S., Rahman, M. F., Rozario, R. J., Iftekhar, A. F., Ahmed, T. S., Jahan, I., Abubakar, A. R., Dali, W. P., Razzaque, M. S., Habib, R. B., & Haque, M. (2015). Prevalence of stress among medical students: A comparative study between public and private medical schools in Bangladesh. BMC Research Notes, 8(327), 5.

Firth, J. (1986). Levels and sources of stress in medical students. British Medical Journal (Clin Res Ed), 292(6529), 1177–1180.

Ghosal, K., & Behera, A. (2018). Study on prevalence of stress in medical students. Journal of Research in Medical and Dental Science, 6(5), 182–186.

Heinen, I., Bullinger, M., & Kocalevent, R. D. (2017). Perceived stress in first year medical students - associations with personal resources and emotional distress. BMC Medical Education, 17(1), 4.

Hill, M. R., Goicochea, S., & Merlo, L. J. (2018). In their own words: Stressors facing medical students in the millennial generation. Medical Education Online, 23(1), 1530558.

Kessler, R. C., Andrews, G., Colpe, L. J., Hiripi, E., Mroczek, D. K., Normand, S. L., Walters, E. E., & Zaslavsky, A. M. (2002). Short screening scales to monitor population prevalences and trends in non-specific psychological distress. Psychological Medicine, 32(6), 959–976.

Kim, M. J., Park, K. H., Yoo, H. H., Park, I. B., & Yim, J. (2014). Development and validation of the medical student stress scale in Korea. Korean Journal of Medical Education, 26(3), 197–208.

Kötter, T., & Voltmer, E. (2013). Measurement of specific medical school stress: Translation of the “perceived medical school stress instrument” to the German language. GMS Zeitschrift für Medizinische Ausbildung, 30(2), Doc22.

Lanthier-Veilleux, M., Généreux, M., & Baron, G. (2016). Prevalence of residential dampness and mold exposure in a university student population. International Journal of Environmental Research and Public Health, 13(2), 194.

Lazarus, R. S. (1990). Theory-based stress measurement. Psychological Inquiry, 1(1), 3–13.

Leppink, E. W., Odlaug, B. L., Lust, K., Christenson, G., & Grant, J. E. (2016). The young and the stressed: Stress, impulse control, and health in college students. The Journal of Nervous and Mental Disease, 204(12), 931–938.

Lim, V. K. E. (2008). Medical education in Malaysia. Medical Teacher, 30(2), 119–123.

Ludwig, A. B., Burton, W., Weingarten, J., Milan, F., Myers, D. C., & Kligler, B. (2015). Depression and stress amongst undergraduate medical students. BMC Medical Education, 15, 141.

Matheson, K. M., Barrett, T., Landine, J., McLuckie, A., Soh, N. L., & Walter, G. (2016). Experiences of psychological distress and sources of stress and support during medical training: A survey of medical students. Academic Psychiatry, 40(1), 63–68.

McLuckie, A., Matheson, K. M., Landers, A. L., Landine, J., Novick, J., Barrett, T., & Dimitropoulos, G. (2018). The relationship between psychological distress and perception of emotional support in medical students and residents and implications for educational institutions. Academic Psychiatry, 42(1), 41–47.

Melaku, L., Mossie, A., & Negash, A. (2015). Stress among medical students and its association with substance use and academic performance. Journal of Biomedical Education, 1–9.

Molodynski, A., Lewis, T., Kadhum, M., Farrell, S. M., Lemtiri Chelieh, M., Falcão De Almeida, T., Masri, R., Kar, A., Volpe, U., Moir, F., Torales, J., Castaldelli-Maia, J. M., Chau, S. W. H., Wilkes, C., & Bhugra, D. (2020). Cultural variations in wellbeing, burnout and substance use amongst medical students in twelve countries. International Review of Psychiatry, 1–6.

Moreira de Sousa, J., Moreira, C. A., & Telles-Correia, D. (2018). Anxiety, depression and academic performance: A study amongst Portuguese medical students versus non-medical students. Acta Medica Portuguesa, 31(9), 454–462.

Mortier, P., Auerbach, R. P., Alonso, J., Bantjes, J., Benjet, C., Cuijpers, P., Ebert, D. D., Green, J. G., Hasking, P., Nock, M. K., O'Neill, S., Pinder-Amaker, S., Sampson, N. A., Vilagut, G., Zaslavsky, A. M., Bruffaerts, R., Kessler, R. C., & WHO WMH-ICS Collaborators. (2018). Suicidal thoughts and behaviors among first-year college students: Results from the WMH-ICS project. Journal of the American Academy of Child and Adolescent Psychiatry, 57(4), 263–273.e1.

Park, K. H., Kim, D. H., Kim, S. K., Yi, Y. H., Jeong, J. H., Chae, J., Hwang, J., & Roh, H. (2015). The relationships between empathy, stress and social support among medical students. International Journal of Medical Education, 6, 103–108.

Patil, S. P., Sadhanala, S., Srivastav, M. U., & BansodeGokhe, S. S. (2017). Study of stressors among undergraduate medical students of a teaching medical institution. International Journal of Community Medicine and Public Health, 4, 3151–3154.

Portoghese, I., Galletta, M., Porru, F., Burdorf, A., Sardo, S., D'Aloja, E., Finco, G., & Campagna, M. (2019). Stress among university students: Factorial structure and measurement invariance of the Italian version of the effort-reward imbalance student questionnaire. BMC Psychology, 7(1), 68.

Ridner, S. L., Newton, K. S., Staten, R. R., Crawford, T. N., & Hall, L. A. (2016). Predictors of well-being among college students. Journal of American College Health, 64(2), 116–124.

Saeed, A. A., Bahnassy, A. A., Al-Hamdan, N. A., Almudhaibery, F. S., & Alyahya, A. Z. (2016). Perceived stress and associated factors among medical students. Journal of Family and Community Medicine, 23(3), 166–171.

Salvarani, V., Ardenghi, S., Rampoldi, G., Bani, M., Cannata, P., Ausili, D., Di Mauro, S., & Strepparava, M. G. (2020). Predictors of psychological distress amongst nursing students: A multicenter cross-sectional study. Nurse Education in Practice, 44, 102758.

Santen, S. A., Holt, D. B., Kemp, J. D., & Hemphill, R. R. (2010). Burnout in medical students: Examining the prevalence and associated factors. The Southern Medical Journal, 103, 758–763.

Saxena, Y., Shrivastava, A., & Singhi, P. (2014). Gender correlation of stress levels and sources of stress among first year students in a medical college. Indian Journal of Physiology and Pharmacology, 58(2), 147–151.

Serenari, M., Cucchetti, A., Russo, P. M., Fallani, G., Mattarozzi, K., Pinna, A. D., Colonnello, V., Poggioli, G., & Cescon, M. (2019). Burnout and psychological distress between surgical and non-surgical residents. Updates in Surgery, 71(2), 323–330.

Shim, E. J., Jeon, H. J., Kim, H., Lee, K. M., Jung, D., Noh, H. L., Roh, M. S., & Hahm, B. J. (2016). Measuring stress in medical education: Validation of the Korean version of the higher education stress inventory with medical students. BMC Medical Education, 16(1), 302.

Stallman, H. M. (2008). University stress scale. Brisbane: Queensland University of Technology.

Stallman, H. M. (2010). Psychological distress in university students: A comparison with general population data. Australian Psychologist, 45(4), 249–257.

Stanley, N., & Manthorpe, J. (2001). Responding to students' mental health needs: Impermeable systems and diverse users. Journal of Mental Health, 10(1), 41–52.

Vitaliano, P. P., Maiuro, R., Mitchell, E., & Russo, J. (1989). Perceived stress in medical school: Resistors, persistors, adaptors and maladaptors. Social Science & Medicine, 28, 1321–1329.

Wild, D., Grove, A., Martin, M., Eremenco, S., McElroy, S., Verjee-Lorenz, A., Erikson, P., & ISPOR Task Force for Translation and Cultural Adaptation. (2005). Principles of good practice for the translation and cultural adaptation process for patient-reported outcomes (PRO) measures: Report of the ISPOR task force for translation and cultural adaptation. Value in Health, 8(2), 94–104.

Worthington, R. L., & Whittaker, T. A. (2006). Scale development research: A content analysis and recommendations for best practices. The Counseling Psychologist, 34, 806–838.

Yee, L. Y., & Yusoff, M. S. B. (2013). Prevalence and sources of stress among medical students in Universiti Sains Malaysia and Universiteit Maastricht. Education in Medicine Journal, 5(4), 34–41.

Yusoff, M. S. (2011). A confirmatory factor analysis study on the medical student stressor questionnaire among Malaysian medical students. Education in Medicine Journal, 3(1), e44–e53.

Yusoff, M. S. B., Abdul Rahim, A. F., & Yaacob, M. J. (2010). The development and validity of the medical student stressor questionnaire (MSSQ). ASEAN Journal of Psychiatry, 11, 13–24.

Yusoff, M. S. B., Yee, L. Y., Heng Wei, L., Hon Meng, L., Xue Bin, L., Chin Siong, C., & Abdul Rahim, A. F. (2011). A study on stress, stressors and coping strategies among Malaysian medical students. International Journal of Students' Research, 1(2), 45–50.

Zinbarg, R. E., Revelle, W., Yovel, I., & Li, W. (2005). Cronbach’s alpha, Revelle’s beta, McDonald’s omega: Their relations with each other and two alternative conceptualizations of reliability. Psychometrika, 70, 123–133.

Funding

Open access funding provided by Università degli Studi di Brescia within the CRUI-CARE Agreement.

Author information

Contributions

Alberto Ghilardi, Chiara Buizza and Jessica Dagani contributed to the study conception and design and to material preparation. Data collection was performed by Jessica Dagani, and analyses were performed by Clarissa Ferrari. The first draft of the manuscript was written by Jessica Dagani, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethics Approval

This study was performed in line with the principles of the Declaration of Helsinki. The study protocol was approved by the University of Brescia Institutional Review Board.

Consent

All participants received a detailed description of the study and information about the questionnaire, voluntary participation and survey anonymity, and they gave their informed consent prior to participation.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic Supplementary Material

12144_2020_922_MOESM2_ESM.docx

Online Resource 2 CFA on the 39 items of the original MSSQ (item 13 was considered non-applicable for our sample and discarded) (DOCX 169 kb)

12144_2020_922_MOESM5_ESM.docx

Online Resource 5 Stability over time of the five factor distributions: violin plots at first (baseline, dark plots) and at second (one month later, light plots) evaluation (DOCX 95 kb)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dagani, J., Buizza, C., Ferrari, C. et al. Psychometric validation and cultural adaptation of the Italian medical student stressor questionnaire. Curr Psychol 41, 4132–4140 (2022). https://doi.org/10.1007/s12144-020-00922-x

DOI: https://doi.org/10.1007/s12144-020-00922-x