Abstract

Self-efficacy describes people’s belief in their own ability to perform the behaviors required to produce a desired outcome. The purpose of this study was to examine the psychometric properties of the Swedish version of the General Self-Efficacy Scale (GSES) with an adolescent sample, using Rasch analysis. The scale was examined with a focus on invariant functioning along the latent trait as well as across sample groups. The data were collected 2009 and 2010 among 3764 students aged between 13 and 15 years, in the 7th to 9th grade, in compulsory schools in the municipality of Karlstad, Sweden. The item fit was acceptable, the categorization of the items worked well and the scale worked invariantly between years of investigations. Although the GSES worked well as a whole, there was some evidence of misfit indicating room for improvements. The targeting may be improved by adding more questions of medium difficulty. Also, further attention needs to be paid to the dimensionality of the GSES as well as to whether the psychometric properties of GSES are affected by using more recent data.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The concept of self-efficacy was developed by Albert Bandura in his social cognitive theory. Self-efficacy is defined as people’s belief in their ability to perform the behaviors required to produce a desired outcome (Bandura 1977). The concept of self-efficacy has been used in several research areas, such as educational research (see Schunk 1991; Silver et al. 2001; Usher and Pajares 2008; Zimmerman 2000), organizational research (see Chen et al. 2001), and social work research (see Jackson and Huang 2000; Ramo et al. 2010; Schmall 1994).

There are four sources of information that impact a person’s self-efficacy: performance accomplishment, vicarious experience, verbal persuasion, and physiological states (Bandura 1977). According to Bandura (1993, 1997), self-efficacy affect how people feel, think, motivate themselves, and behave. It is hypothesized that people with low self-efficacy for accomplishing a task may refrain from performing the task at hand (Schunk 1991; Bandura 1997). Low self-efficacy then becomes a vicious circle: “Lack of faith in ability produces lack of action. Lack of action contributes to more self-doubt. They become doubtful of their own capabilities and are more easily stressed and more frequently depressed than people with high self-efficacy” (Singh and Udainiya 2009). People with high self-efficacy for accomplishing a task should readily attempt the task. Bandura (1977) also stated that these people work harder, and are more persistent when difficulties arise, than people with low self-efficacy. Self-efficacy differs conceptually from related motivational constructs, such as outcome expectations (Zimmerman 2000), perceived control (Endler et al. 2001), self-concept, or locus of control (Zimmerman 2000; Pastorelli et al. 2001).

The theory originally stated that self-efficacy is situation-specific (Bandura 1977), meaning that a person could experience high self-efficacy in one situation and low in another. As a result, a large number of domain-specific instruments measuring self-efficacy have been developed, for example, a career decision-making self-efficacy scale (Betz et al. 1996), a nursing self-efficacy scale (Hagquist et al. 2009), and an alcohol abstinence self-efficacy scale (DiClemente et al. 1994). However, other researchers have proposed that self-efficacy may be generalized (Schwarzer and Jerusalem 1995; Sherer et al. 1982; Eden 1988). As the most important source of information that contributes to a person’s self-efficacy is performance accomplishment, it has been argued that an individual’s experience of failure or success in different situations should result in a generalized type of self-efficacy (Sherer et al. 1982). Even though Bandura (1997) was against an ‘”all-purpose measure”, he acknowledged that self-efficacy can be generalized when commonalities are cognitively structured across activities, for example, when tasks require similar subskills or when the skills required to accomplish dissimilar activities are acquired together. Bandura (1997) also described “transforming experiences” that can strengthen a person’s beliefs in other areas than where the success was achieved. Several scales to measure general self-efficacy exist within research; one was developed by Schwarzer and Jerusalem (1995) and another by Sherer and colleagues (1982), which in turn has a short version that was developed by Chen and colleagues (Chen et al. 2001).

The General Self-Efficacy Scale (GSES), developed by Schwarzer and Jerusalem (1995), has been translated into 31 different languages (see http://userpage.fu-berlin.de/health/selfscal.htm). The scale has been psychometrically tested in different populations and in different cultures (e.g Scholz et al. 2002; Luszczynska et al. 2005). Results show it to be a reliable, valid, and unidimensional scale. However, most research testing the psychometric properties of the scale have been based on theories within the classical test theory paradigm; only two studies have evaluated the psychometric properties of the GSES using Rasch analysis, one with a sample of adults with spinal cord injury (Peter et al. 2014) and the other with morbidly obese adults (Bonsaksen et al. 2013). Thus, neither of these studies used samples of adolescents. The scale was in fact intended to be used with adolescents as well as the general adult population (Schwarzer and Jerusalem 1995). The way in which adolescents develop and exercise their self-efficacy during this transitional period can play a key role in setting the course their life paths take (Bandura 2006). Studies have shown that an adolescent’s self-efficacy affects their physical activity (Feltz and Magyar 2006), their risk-taking behavior, and their health decisions (Schwarzer and Luszczynska 2006). The Swedish version of the GSES (Koskinen-Hagman et al. 1999) has been psychometrically evaluated based on classical test theory. The sample consisted of people from the general population and people on sick leave, aged 19 to 64 years. Results showed high internal consistency (α = 0.90), unidimensionality, and factor loadings ranging between 0.64 and 0.80 (Löve et al. 2012).

The purpose of the present study was to examine the psychometric properties of the Swedish version of the GSES with an adolescent sample. Since invariant comparisons of general self-efficacy between different samples are essential, we used the Rasch model, which has invariance as an integral property. The Rasch model has not previously been applied on general self-efficacy adolescent data.

Methods

Material

The data for this study were collected as part of a school prevention project in collaboration between the municipality of Karlstad in Sweden and the Centre for Research on Child and Adolescent Mental Health (CFBUPH) at Karlstad University, Sweden. Data were collected about social relations, classroom climate, bullying, and mental health. The overall aim of the project was to promote good mental health among children and adolescents.

Data Collection

The data in the present study were collected 2009 and 2010 among students aged between 13 and 15 years in the 7th to 9th grades in compulsory schools in the municipality of Karlstad. The data collection was carried out by a research team at CFBUPH. All students received both written and oral information about the aim of the study, that their participation was voluntary, and that they had the right to withdraw their participation at any time. Due to the age of the children in the 7th and 8th grades, written information was given to the parents, and those who did not want their children to participate were asked to notify the grade teacher. Eight out of nine compulsory schools participated in the data collection in 2009. The questionnaire consisted of 109 questions, with the items about general self-efficacy as number 58. The questionnaire for 2010 consisted of 116 questions, with the items about general self-efficacy as number 63. By 2010, one of the compulsory schools in the municipality had closed and that year all eight of the remaining compulsory schools participated. Table 1 shows the number of participants and non-participants for 2009 and 2010.

Table 1 show that the proportion of the non-participants decreased from 17.1% for 2009 to 9.7% for 2010. In both years, a higher proportion of non-participants were found in the higher grades.

Instrument

The GSES consists of ten items, see Appendix Table 6. The responses to the items are summarized across respondents, yielding a score between 10 and 40; higher scores indicate higher self-efficacy. The Swedish version of the GSES is the instrument investigated in this study. The GSES was translated into Swedish in 1999 (Koskinen-Hagman et al. 1999) and the adaptation followed a group consensus model (personal communication with Koskinen-Hagman Dec 5, 2015). Although, the process of translation into Swedish has not been reported in any papers, the general principles for the translation process have previously been described, as part of a study investigating general self-efficacy as a universal construct, in the following way: “The procedure included back translations and group discussions. Since the goal was to achieve cultural-sensitive adaptations of the construct rather than mere literal translations, the translators acquired a thorough understanding of the general self-efficacy construct” (Scholz et al. 2002). This description corresponds to the group consensus model described by the Swedish translator.

Analysis Using the Rasch Model

Rasch analysis can be used to examine whether responses to individual items can be combined into a unidimensional composite measure, enabling us to distinguish individuals at the high and low levels of the latent trait (Andrich 1988). The Rasch model estimates item and person parameters independently of each other and places both parameter estimates on the same latent variable, which offers opportunities to examine the targeting, in other words, the locations of the items relative to the respondents. If the targeting is bad, the reliability will be lower, which makes it hard to differentiate people along the latent trait

Since invariance is an integral property of the Rasch model, a test of fit between the data and the model is a test of whether the instrument works invariantly or not. In the Rasch analysis, the focus is on the operating characteristics of the items along the whole continuum of a latent trait, not on a single summary measure. Expected value curve (EVC), sometimes called Item Characteristic Curves, are useful graphical tools for checking the fit of the data to the Rasch model, complementing formal test statistics. The EVC predicts the responses to the items as a function of the items and the respondent’s locations on the latent trait. These expected values are compared with the observed values. If an item shows differential item functioning (DIF), more than one EVC is required to predict the responses to that item. This means that members of one group score differently on an item than members of another group, given the same location on the latent trait. In DIF analysis of an item set, several items may show evidence of DIF, consisting of real DIF items as well as artificial DIF items (Andrich and Hagquist 2012, 2015). Real DIF is inherent to an item and affects the person measures, while artificial DIF does not, because it is an artifact of the procedure to identify DIF. Since real DIF affects the person measurement and the comparisons between groups, different options to address real DIF may be considered. One option is to simply remove the real DIF item(s); another option is to take the DIF into account based on principles of equating. While the first option will decrease the reliability and person separation, the second will not have any effect on the reliability and person separation. Therefore, it is usually preferable to resolve an item instead of removing it. Given that resolving an item, like removing an item, may affect the validity, from that perspective resolving DIF is only justified if the source of DIF can be shown to arise from some source irrelevant to the variable being assessed and therefore deemed dispensable.

The data may statistically fit the polytomous Rasch model (Andrich 1978) although the response categories do not operate in the intended order. Such threats to measurement may also be detected by the Rasch model thanks to its sensitivity to the categorization of the items. Hence, the Rasch analysis may facilitate decisions about the number of response categories that would be optimal as well as the phrasing of the categories.

In the present study, the following issues were analyzed:

-

a.

Person separation

-

b.

Whether items cover the full range of ability levels of the latent trait i.e. targeting

-

c.

Whether there is any threshold disordering

-

d.

Item fit

-

e.

Differential item functioning (DIF) for gender, age, and grade

-

f.

Local dependency

The procedures for analyzing theses aspects are described below. The analyses were conducted using the software program RUMM 2030 (Andrich et al. 2012).

-

a.

The person separation index (PSI) is the measure of reliability employed in this study; it is analogous with Cronbach’s alpha when the data is normally distributed (Pallant and Tennant 2007).

-

b.

Targeting for GSES was analyzed in the present study by examining the person–item threshold distribution (Tennant and Conaghan 2007).

-

c.

Disordered thresholds occur when respondents cannot discriminate between the response options. This could reflect the phrasing of the response, or it could be misinterpreted or confusing, or there could be too many response options (Pallant and Tennant 2007). The ordering of the thresholds is therefore important.

-

d.

The item fit can be examined in different ways: graphically with the EVC and formally with fit residuals and chi-square tests (Hagquist et al. 2009). A value between -2.5 and 2.5 is considered acceptable for the fit residuals (Pallant and Tennant 2007). The reported p-values are chi-square statistics based on a comparison between the observed means and the expected values (Hagquist 2001) in equal-sized class intervals of people (i.e. groups representing different ability levels) along the latent trait. One thing to bear in mind is that a large sample size always gives low p-values. With a large sample size, the parameters are estimated with great precision, and any misfit will be exposed (Andrich 1988). Therefore, even if there are significant p-values indicating that the expected values and the model do not fit, the items may be retained. To reconcile the sensitivity of the formal test but still have the advantage of the graphical representation, Andrich and Styles (2010) suggest adjusting the sample size. The chi-square statistic is proportional to the sample size, and what happens in practice according to Andrich and Styles (2010) is that the chi-square statistic is multiplied by the new sample size divided by N. For this reason, formal tests with an adjusted sample size (n = 900) were performed in the present study.

-

e.

In order to make invariant comparisons, a measurement instrument has to function the same way along the latent trait and across the groups that are to be compared, for example between girls and boys, across age groups, across sampling years, between cultures, and across countries (Andrich 1988; Hagquist et al. 2009). Lack of invariance across groups is referred to as DIF. We analyzed DIF graphically by the EVCs and formally by analysis of variance (ANOVA). There are two types of DIF: uniform and non-uniform. Uniform DIF is when the EVCs for each group are parallel, and non-uniform DIF means that the EVCs are non-parallel (Andrich and Hagquist 2012). The groups of interest (person factors) in this analysis are: grade (7th, 8th or 9th), year (2009 or 2010) and gender (girl or boy). The ANOVA of standardized residuals, shows main effects for class interval and a main effect for person factors (uniform DIF), as well as an interaction effect between class interval and person factors (non-uniform DIF). To distinguish between real and artificial DIF, the item with the highest F-value must be identified. Andrich and Hagquist (2012) suggest that you should resolve the item with the highest F-value. Resolving an item means that an item is split into the specific sample groups, for example, gender will result in one item for girls and one item for boys, in which the values for the excluded group are treated as missing. In the present study, items were resolved for DIF starting with the worst-fitting item; thereafter a new ANOVA was performed to identify whether any additional items needed to be resolved.

-

f.

Local independence refers to the idea that for the same value of β (person parameter, that is, a person’s ability), there is no further relationship between responses to any pair of items (Marais and Andrich 2008). Correlations between residuals may indicate local independence. There are two different types of local dependency: one is response dependency (Marais and Andrich 2008). It means that the response that a person gives to one item depends on the response that the same person gave to a previous item. Evidence of response dependency was analyzed in the present study by identifying items with residual correlations above 0.3 in the Person–item residual correlation matrix. The second type of local dependency is violation of unidimensionality, or what Marais and Andrich (2008) refer to as trait dependence, which reflects the presence of more than one trait. Unidimensionality is an important aspect of construct validity. Evidence of multidimensionality was analyzed in the present study by first identifying positive or negative principal component loadings and then conducting t-tests of differences in person–location values generated from these two subsets of items. Although trait and response dependence are conceptually different, they are hard to distinguish, both empirically and in the literature (Marais and Andrich 2008).

Results

Original Item Set (10 items)

Power of analysis of fit was “Excellent” on all tests conducted. This indicates that the people are spread out throughout the continuum, and not clustered around the same location.

Reliability, Targeting, and Threshold Ordering

The PSI value for all ten items was 0.8998 (Cronbach’s α 0.93788) with extremes and 0.8737 (Cronbach’s α 0.90001) without extremes.

The person–item threshold distribution showed that the mean location was 1.411 with a standard deviation of 2.241. It also showed that there were a lot of easier items but there were no items covering the range from 0.5 to 1.5 logits. This was also where most people were located; i.e. there were no items targeting these people. Furthermore there were extreme values at both ends. There were no questions covering locations over 4.25 logits or under -3.25 logits. So no items targeted these people either. The different means for the person factor subgroups differ between -0.271 and +0.242 from the mean of the whole sample. The means for the person factor grade were 1.497 for 7th grade, 1.394 for 8th grade, and 1.356 for 9th grade, means for the person factor year were 1.397 for 2009 and 1.424 for 2010, and means for the person factor gender were 1.169 for girls and 1.685 for boys. This implies that out of all the subgroups, the instrument was targeted best for girls (1.169). Also, these values were interpreted as showing that the boys as a group have higher self-efficacy.

The analysis showed no disordered thresholds, which indicates that the response format works well.

Item Fit – Test of Invariance at a General Level

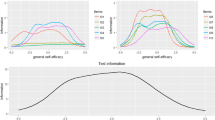

Figure 1 shows the EVCs, along with results from formal tests of item fit.

Figure 1 shows that two items (1 and 3) were under-discriminating and five items (5, 6, 8, 9, and 10) were over-discriminating. Negative residuals indicate over-discrimination and positive residuals indicate under-discrimination. The p-values for the items are less than 0.001 (Bonferroni adjustment) for all the items except items 2, 5, 6, and 7. To reconcile the sensitivity of the formal test due to the large sample size, the sample size was adjusted, in this case to 900; the p-values for the formal chi-square test with the adjusted sample size can also be seen in Fig. 1. Using this adjusted sample size, no items show evidence of statistical misfit.

Figure 1 g shows item 7, which has a good fit in both the graphical and formal investigation. Figure 1c shows item 3, an item that shows under-discrimination according to the fit residual. This indicates that students with low self-efficacy tend to score too high on this particular item and students with high self-efficacy tend to score too low, according to the Rasch model. Figure 1e shows item 5, an item that shows over-discrimination according to the fit residual. The opposite pattern occurs here, this indicates that students with low self-efficacy tend to score too low on this particular item and students with high self-efficacy tend to score too high, according to the Rasch model.

Even though the graphical representations in Fig. 1c and e show a little over- and under-discrimination, the deviation from the EVC is minor. These minor deviations also apply for the EVC curves for the rest of the items shown in Fig. 1.

Table 2 shows the item location for each item, and the spread of the item location values corresponds to the severity of each item. Item 4 represents the most difficult item whereas item 1 represents the easiest. Item 5 and 9 have almost the same item location.

Differential Item Functioning – Test of Invariance at a Finer Level

The groups of interest (person factors) in this analysis were: grade (7th, 8th, or 9th), year (2009 or 2010), and gender (girl or boy). Table 3 shows the results from the analysis concerning the person factor gender. The analysis showed no non-uniform DIF for that person factor. Table 3 also shows that for the person factor gender, uniform DIF was found in items 1, 2, 3, 4, and 6 when the sample size was intact. After the sample size was adjusted, only the DIF in item 6 remained statistically significant.

Analyses showed (not shown in the table) that uniform DIF was found in items 1, 5, and 9, for the person factor grade. When the sample size was adjusted, DIF found in those items was no longer statistically significant. Analyses also showed (not shown in the table) that there was no uniform or non-uniform DIF for the person factor year, irrespective of sample size, which indicates that the instrument works invariantly between years.

Local Independence

Looking at the residual correlation matrix, no correlations above a value of 0.3 were found, which indicates that there is no evidence, or only minor evidence, of local independence in the form of response dependence.

Investigations of PC loadings revealed that there might be a violation of unidimensionality, hence trait dependence. The loadings suggest that item 1, 2, and 3 represented one dimension and items 4, 5, 6, 7, 8, 9, and 10 represented another dimension.

To investigate this further, a paired t-test was conducted, where one set contained items 1, 2, and 3, which had a positive component–item residual, and the second set contained items 4, 5, 6, 7, 8, 9, and 10, which had a negative component–item residual. Analyses showed that the person location values from the two subsets were significantly different for 8.38%, thus exceeded the critical value of 5%, which may indicate trait dependence.

Revised Item Set (11 Items)

The following results describe the instrument after item 6 (I can remain calm when facing difficulties because I can rely on my coping abilities) was resolved for gender. To resolve an item in this case refers to splitting item 6 into two items, one for girls and one for boys, so this set includes 11 items.

Differential Item Functioning

To distinguish between real and artificial DIF, the item with the highest F-value was identified. This meant that item 6 for the person factor gender was resolved. Table 3 shows that the F-value for item 6 was 81.51663.

Figure 2 displays a graphical representation of a uniform DIF for item 6, for the person factor gender. The curves for boys and girls deviated in a parallel way, as seen in Fig. 2a. Irrespective of a boy’s or girl’s ability, the boys tended to answer higher on this item than girls, given the same location on the latent trait. The same pattern appeared for item 4. The opposite pattern occurred that girls tended to answer “higher” than boys on items 1, 2, and 3.

EVC for item 6, I can remain calm when facing difficulties because I can rely on my coping abilities. a) EVC before resolving the item (the line with x represents the boys and the line with o represents the girls); b) EVC after the item was resolved (here, the signs are reversed, so o represents boys and x represents girls (n = 3272))

After item 6 was resolved, no DIF was statistically significant in either the person factor gender or grade when the sample size was adjusted, as shown in Table 4.

Targeting

Figure 3 shows the person–item threshold distribution. The number of people was 3639, and the mean location was 1.393, with a standard deviation of 2.242.

The targeting of the instrument when item 6 was resolved compared to when the item set was intact does not differ significantly. The revised set had a slightly lower mean person location. There were a lot of easier items but there were no items to cover the range from 0.5 to 1.5 logits. This was also where the figure shows the most people; i.e. there were no items targeting these people. Furthermore there were extreme values at both ends. There were no questions to cover location values over 4.25 logits or lower than -3.25 logits. So no items target these people either.

Threshold Ordering

The analysis showed no disordered thresholds, which indicates that the response format works well.

Comparison of Person Measures

To examine the effect of the DIF items on person measurement, the mean values of the person–item threshold distribution for boys and girls were compared before and after the DIF for the person factor gender was resolved. The results can be seen in Table 5. Also included in the analysis and shown in Table 5 are the person measures if item 6 is removed.

Table 4 shows that the differences between the mean value for boys and girls in the original set, before any item was resolved, was 0.516, and the difference between the mean value for boys and girls in the revised 11-item set, after item 6 was resolved, was 0.457. Given that artificial DIF never affects the person measure, this confirms that the DIF was real. Table 4 also shows that the PSI value is slightly higher if item 6 is resolved rather than removed from the item set.

Discussion

The purpose of this study was to examine the psychometric properties of the Swedish version of the GSES with an adolescent sample, using Rasch analysis. The analysis of the fit residual for the GSES shows seven items that are over- or under-discriminating, of which five show statistically significant misfit. Given the large sample size and the fact that even the smallest deviation is detected, which in turn will result in fit residuals, the graphical investigation of the EVC is important to judge the magnitude of the misfit. In the items that show misfit according to the formal test statistics, the observations are located close to the EVC, indicating that the misfit might be only minor. This hypothesis is confirmed when an adjustment of the sample size is used as a heuristic tool for the evaluation of an instrument. When the sample size is adjusted, the DIF is no longer statistically significant for any of the items for grade and only one item remains statistically significant for gender. But after that item is resolved, no more DIF is found when the sample size is adjusted. It is important to bear in mind that, when adjusting the sample size, the parameters are estimated with good precision but have less power to detect misfit (Bergh 2014).

There are a few results that are quite similar between the original 10-item set and the revised 11-item set. The high PSI values, 0.8998 for the original set and 0.89963 for the revised set, indicate high reliability for the GSES. As expected, the PSI is slightly higher if item 6 is resolved rather than removed. In this study, the mean location is 1.411 in the original set and 1.393 in the revised set, indicating that the population has higher self-efficacy than the instrument is supposed to capture. The person–item distribution also shows that there are no questions covering the range where the largest proportion of people is located, so one improvement that can be made to the instrument is to add items with medium difficulty. This interpretation applies in both the original and the revised set. Another result shared by the two item sets concerns the response format, where the four qualitative response options (Not true at all; Hardly true; Moderately true; Exactly true) seem to work well.

The results presented may be discussed in relation to previous concerns about the GSES and also in relation to theoretical assumptions. The results reveal that there might be a violation of unidimensionality; it might be a trait dependence in the original 10-item set of the GSES, in so far that items 1, 2, and 3 represent one dimension and items 4, 5, 6, 7, 8, 9, and 10 represent another. The former group contains aspects of how much a person perseveres. The latter group of items covers the aspect of self-efficacy that relates to new, surprising or unexpected situations. It seems unlikely that the questions cover different dimensionalities, but rather that items in the latter group are phrased more similarly. Indeed, trait dependence is also found in items that are linked by attributes such as common stimulus materials, common item stems, common item structures, or common item content (Marais and Andrich 2008). Other research (Zhou 2015) has questioned the unidimensionality of the 10-item version of GSES, talking about action self-efficacy (items 1, 6, 7, 8, and 9), meaning self-efficacy in a pre-intentional phase, and coping self-efficacy (items 2, 3, 4, 5, and 10), meaning self-efficacy in a post-intentional phase. Further analysis (not shown in the paper) with five different samples (7th grade, 8th grade, 9th grade, girls and boys) all shows evidence of items 1, 2, and 3 belonging to one dimension. All but one sample have had positive PC-loadings; the 8th grade sample is the one sample where items 1, 2, and 3 have negative PC loadings. What could these results imply for the usage of the instrument?

There may also be some concerns about the phrasing of some items. In particular, item 6 seems to be problematic. The phrasing of that statement in Swedish is a bit ambiguous. The back translation is, “Because of my own ability, I feel calm even when I am facing difficulties”, which does not explicitly specify coping, as in the English version (“I can remain calm when facing difficulties because I can rely on my coping abilities.”) There are a few aspects to consider when answering item 6. But this is also the only item that directly taps information about the aspect of physiological state that could impact a person’s self-efficacy beliefs according to Bandura (1977). Another item that might be a cause for concern regarding the translation is item 7, which does not capture a person’s belief about their own internal resources as the English version does. The back translation is, “Whatever happens, I’ll always manage” (compared to the English version, ”I can solve most problems if I invest the necessary effort.”) A person could interpret the Swedish item 7 in terms of external resources, for example, having supportive family or friends, enough money, or a place to live. Analysis, when item 7 is removed (not included in the paper) was performed showing results that did not considerably alter the outcomes from the psychometric evaluation of the GSES, indicating that item 7 was probably read in the context of the other items. In other words, the students interpreted item 7 to be about internal resources. Other researchers (Bonsaksen et al. 2013) have also discussed the content of item 2 (“If someone opposes me, I can find the means and ways to get what I want”) as problematic, as it is the only item to include an interpersonal aspect, both in the English and translated versions (Norwegian and Swedish). But this item might reflect another one of the sources of information that impact a person’s self-efficacy according to Bandura (1977), namely, verbal persuasion. If someone opposes you, this could mean that the person may perform some hostile actions or it could be in the form of discouraging words. And this is the only question that takes that aspect of information into consideration.

In conclusion, our analyses show that the GSES works reasonable well as a whole. Since the analyses are based on a large data set even relatively small evidence of misfit will appear to be statistically significant. There is clearly room for improvements of GSES. The targeting may for example be improved by adding more questions of medium difficulty. Also, further attention needs to be paid to the dimensionality of the GSES as well as to whether the psychometric properties of GSES are affected by using more recent data.

References

Andrich, D. (1978). A rating formulation for ordered response categories. Psychometrika, 43(4), 561–573.

Andrich, D. (1988). Rasch models for measurement (Vol. 68). Newbury Park: Sage Publications.

Andrich, D., & Hagquist, C. (2012). Real and artificial differential item functioning. Journal of Educational and Behavioral Statistics, 37(3), 387–416.

Andrich, D., & Hagquist, C. (2015). Real and artificial differential item functioning in polytomous items. Educational and Psychological Measurement, 75(2), 185–207.

Andrich, D., & Styles, I. (2010). Distractors with information in multiple choice items: a rationale based on the Rasch model. Journal of Applied Measurement, 12(1), 67–95.

Andrich, D., Sheridan, B. S., & Luo, G. (2012). Rumm 2030: Rasch unidimensional measurement models (software). Perth: RUMM Laboratory.

Bandura, A. (1977). Self-efficacy: toward a unifying theory of behavioral change. Psychological Review, 84(2), 191.

Bandura, A. (1993). Perceived self-efficacy in cognitive development and functioning. [Article]. Educational Psychologist, 28(2), 117.

Bandura, A. (1997). Self-efficacy: The exercise of control. New York: W. H. Freeman and Company.

Bandura, A. (2006). Adolescent development from an agentic perspective. In F. Pajares & T. C. Urdan (Eds.), Self-efficacy of adolecents (pp. 1–43). Greenwich: Information Age Publishing Inc.

Bergh, D. (2014). Chi-squared test of fit and sample size: a comparison between a random sample approach and a chi-square value adjustment method. Journal of Applied Measurement, 16(2), 204–217.

Betz, N. E., Klein, K. L., & Taylor, K. M. (1996). Evaluation of a short form of the career decision-making self-efficacy scale. Journal of Career Assessment, 4(1), 47–57.

Bonsaksen, T., Kottorp, A., Gay, C., Fagermoen, M. S., & Lerdal, A. (2013). Rasch analysis of the general self-efficacy scale in a sample of persons with morbid obesity. Health and Quality of Life Outcomes, 11(1), 1–11.

Chen, G., Gully, S. M., & Eden, D. (2001). Validation of a new general self-efficacy scale. Organizational Research Methods, 4(1), 62–83.

DiClemente, C. C., Carbonari, J. P., Montgomery, R., & Hughes, S. O. (1994). The alcohol abstinence self-efficacy scale. Journal of Studies on Alcohol, 55(2), 141–148.

Eden, D. (1988). Pygmalion, goal setting, and expectancy: compatible ways to boost productivity. Academy of Management Review, 13(4), 639–652.

Endler, N. S., Speer, R. L., Johnson, J. M., & Flett, G. L. (2001). General self-efficacy and control in relation to anxiety and cognitive performance. Current Psychology, 20(1), 36–52.

Feltz, D. L., & Magyar, M. (2006). Self-efficacy and adolecents in sport and physical activity. In F. Pajares & T. C. Urdan (Eds.), Self-efficacy beliefs of adolescents (pp. 161–179). Greenwich: Information Age Publishing Inc.

Hagquist, C. (2001). Evaluating composite health measures using Rasch modelling: an illustrative example. Sozial-und Präventivmedizin, 46(6), 369–378.

Hagquist, C., Bruce, M., & Gustavsson, J. P. (2009). Using the Rasch model in nursing research: an introduction and illustrative example. International Journal of Nursing Studies, 46(3), 380–393.

Jackson, A. P., & Huang, C. C. (2000). Parenting stress and behavior among single mothers of preschoolers: the mediating role of self‐efficacy. Journal of Social Service Research, 26(4), 29–42.

Koskinen-Hagman, M., Schwarzer, R., & Jerusalem, M. (1999). Swedish version of the gereral self-efficacy scale. http://userpage.fu-berlin.de/~health/swedish.htm.

Löve, J., Moore, C. D., & Hensing, G. (2012). Validation of the Swedish translation of the general self-efficacy scale. Quality of Life Research, 21(7), 1249–1253.

Luszczynska, A., Scholz, U., & Schwarzer, R. (2005). The general self-efficacy scale: multicultural validation studies. The Journal of Psychology, 139(5), 439–457.

Marais, I., & Andrich, D. (2008). Formalizing dimension and response violations of local independence in the unidimensional Rasch model. Journal of Applied Measurement, 9(3), 200–215.

Pallant, J. F., & Tennant, A. (2007). An introduction to the Rasch measurement model: an example using the Hospital Anxiety and Depression Scale (HADS). British Journal of Clinical Psychology, 46(1), 1–18.

Pastorelli, C., Caprara, G. V., Barbaranelli, C., Rola, J., Rozsa, S., & Bandura, A. (2001). The structure of children’s perceived self-efficacy: a cross-national study. European Journal of Psychological Assessment, 17(2), 87–97.

Peter, C., Cieza, A., & Geyh, S. (2014). Rasch analysis of the general self-efficacy scale in spinal cord injury. Journal of Health Psychology, 19(4), 544–555. doi:10.1177/1359105313475897.

Ramo, D. E., Myers, M. G., & Brown, S. A. (2010). Self-efficacy mediates the relationship between depression and length of abstinence after treatment among youth but not among adults. Substance Use & Misuse, 45(13), 2301–2322.

Schmall, V. (1994). Family caregiver education and training: enhancing self-efficacy. Journal of Case Management, 4(4), 156–162.

Scholz, U., Doña, B. G., Sud, S., & Schwarzer, R. (2002). Is general self-efficacy a universal construct? Psychometric findings from 25 countries. European Journal of Psychological Assessment, 18(3), 242–251.

Schunk, D. H. (1991). Self-efficacy and academic motivation. Educational Psychologist, 26(3-4), 207–231.

Schwarzer, R., & Jerusalem, M. (1995). Generalized self-efficacy scale. In J. Weinman, S. Wright, & M. Johnston (Eds.), Measures in health psychology: A user’s portfolio. Causal and control beliefs (Vol. 1, pp. 35–37). Windsor: NFER-NELSON.

Schwarzer, R., & Luszczynska, A. (2006). Self-efficacy, adolecents’ risk-taking behaviors, and health. In F. Pajares & T. C. Urdan (Eds.), Self-efficacy beliefs of adolescents (pp. 139–159). Greenwich: Information Age Publishing Inc.

Sherer, M., Maddux, J. E., Mercandante, B., Prentice-Dunn, S., Jacobs, B., & Rogers, R. W. (1982). The self-efficacy scale: Construction and validation. Psychological Reports, 51(2), 663–671.

Silver, B. B., Smith, E. V., Jr., & Creene, B. A. (2001). A study strategies self-efficacy instrument for use with community college students. Educational and Psychological Measurement, 61(5), 849–865.

Singh, B., & Udainiya, R. (2009). Self-efficacy and well-being of adolescents. Journal of the Indian Academy of Applied Psychology, 35(2), 227–232.

Tennant, A., & Conaghan, P. G. (2007). The Rasch measurement model in rheumatology: what is it and why use it? When should it be applied, and what should one look for in a Rasch paper? Arthritis Care & Research, 57(8), 1358–1362.

Usher, E. L., & Pajares, F. (2008). Sources of self-efficacy in school: critical review of the literature and future directions. Review of Educational Research, 78(4), 751–796.

Zhou, M. (2015). A revisit of general self-efficacy scale: uni-or multi-dimensional? Current Psychology, 1-15.

Zimmerman, B. J. (2000). Self-efficacy: an essential motive to learn. Contemporary Educational Psychology, 25(1), 82–91.

Acknowledgments

The authors gratefully acknowledge the funding that was supported by Forte: the Swedish Research Council for Health, Working Life and Welfare.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Disclosure of Potential Conflicts of Interest

This work was supported by Forte: the Swedish Research Council for Health, Working Life and Welfare (Program grant number: 2012–1736)

Research Involving Human Participants and Informed Consent

The principals guiding the data collection were reviewed by the ethical committee at Karlstad University and no objections were raised.

Appendix 1

Appendix 1

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Lönnfjord, V., Hagquist, C. The Psychometric Properties of the Swedish Version of the General Self-Efficacy Scale: A Rasch Analysis Based on Adolescent Data. Curr Psychol 37, 703–715 (2018). https://doi.org/10.1007/s12144-016-9551-y

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12144-016-9551-y