Abstract

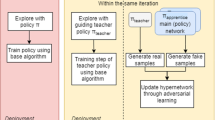

In Hierarchical Federated Learning (HFL), the data sample sizes and data distributions of different clients vary greatly. Because of this data heterogeneity, it is crucial to select appropriate clients to participate in model training while preserving the model quality of HFL. We investigate the problem of optimizing client selection for model quality. Studying the impact of Non-Independent and Identically Distributed (Non-IID) data on HFL, we found that selecting clients based on their losses can improve model quality. We therefore propose CS-Loss, a client selection method based on Client Quality Records that utilizes client losses. Because selecting clients to participate in training at each iteration round changes both the client losses and the model parameters, the selection process is dynamic. We therefore formulate client selection as a Markov Decision Process and design an algorithm based on Synchronous Advantage Actor-Critic (CS-A2C) to solve it. Simulation results demonstrate that CS-A2C outperforms both the existing FedAvg and Favor algorithms on the MNIST dataset. On the CIFAR-10 dataset, CS-A2C improves model accuracy by 13% and 7% over FedAvg and Favor, respectively.
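The abstract does not spell out how Client Quality Records or the A2C formulation work internally. The following is a minimal Python sketch under stated assumptions: `ClientQualityRecord`, `update`, `state`, `select`, and `one_step_advantage` are hypothetical names; the record smooths each client's reported loss with an exponential moving average; and selection favors the highest-loss clients, in the spirit of power-of-choice selection. The paper's actual CS-Loss rule, MDP state, and reward may differ. The last function shows the standard one-step advantage estimate that an advantage actor-critic method such as A2C uses to weight its policy update.

```python
from dataclasses import dataclass, field
from typing import Dict, Hashable, List


@dataclass
class ClientQualityRecord:
    """Hypothetical per-client quality record holding a smoothed training loss.

    The smoothing scheme (exponential moving average) and the selection rule
    (highest loss first) are illustrative assumptions, not the paper's CS-Loss.
    """

    alpha: float = 0.5  # assumed EMA weight given to the newest reported loss
    losses: Dict[Hashable, float] = field(default_factory=dict)

    def update(self, client_id: Hashable, loss: float) -> None:
        """Blend a client's newly reported loss into its record."""
        prev = self.losses.get(client_id)
        if prev is None:
            self.losses[client_id] = loss  # first report: store as-is
        else:
            self.losses[client_id] = self.alpha * loss + (1 - self.alpha) * prev

    def state(self, client_ids: List[Hashable]) -> List[float]:
        """MDP state sketch: recorded losses in a fixed client order.

        Clients with no record yet default to 0.0 (assumption).
        """
        return [self.losses.get(c, 0.0) for c in client_ids]

    def select(self, k: int) -> List[Hashable]:
        """Return up to k recorded clients with the highest smoothed loss."""
        ranked = sorted(self.losses, key=lambda c: self.losses[c], reverse=True)
        return ranked[:k]


def one_step_advantage(reward: float, value_s: float, value_next: float,
                       gamma: float = 0.99, done: bool = False) -> float:
    """A2C-style one-step advantage: r + gamma * V(s') - V(s).

    The actor's policy-gradient term is weighted by this quantity, and the
    critic is trained to shrink it toward zero.
    """
    target = reward + (0.0 if done else gamma * value_next)
    return target - value_s
```

In a full CS-A2C-style loop, one could feed `state(...)` to an actor network that scores clients, aggregate the selected clients' updates, and use a quantity such as the resulting accuracy gain as the reward passed to `one_step_advantage`; that reward choice is likewise an assumption here, not something the abstract specifies.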


Data Availability

The datasets used in this article are all publicly available online datasets that can be obtained and used.


Funding

This work is supported in part by the National Key R&D Program of China under grant 2022YFF0604502, the Beijing Natural Science Foundation (4232024), and the National Natural Science Foundation of China (61872044).

Ethics declarations

Consent to Publish

We confirm that this work has not been published before, that its publication has been approved by all co-authors, that our contribution is original, and that we have full power to grant this consent.

Conflict of Interest

The authors declare no conflict of interest.

Additional information


This article belongs to the Topical Collection: 4 - Track on IoT

Guest Editor: Peter Langendoerfer

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, Z., Dang, Y. & Chen, X. Node selection for model quality optimization in hierarchical federated learning based on deep reinforcement learning. Peer-to-Peer Netw. Appl. (2024). https://doi.org/10.1007/s12083-024-01660-8


DOI: https://doi.org/10.1007/s12083-024-01660-8