Abstract

Flexibility is a main mean to create resilient supply chains. The most flexible resources are often human resources but creating high, homogenous skill levels is not cost efficient. Heterogenous labour provides an alternative. The literature on Dual Resource Constrained (DRC) shops modelled heterogeneous labour with multi-functionality and efficiency matrices that indicate if a worker can perform at a station and according to which level of efficiency. However, this literature typically considered these matrices as a given environmental factor rather than a factor under firm control. Consequently, it typically neglected literature that can be used to guide matrix design. In response, this study draws on the literature on unbalanced lines to test eight different matrices to guide worker training. Discrete event simulation is then used to evaluate their performance in a DRC pure flow shop with high variability in demand and processing times. Results demonstrate that the matrix design impacts performance and that an unbalanced design has the potential to improve performance compared to a balanced design and full multi-functionality. Specifically, the bowl configuration shows much promise, which further confirms the “bowl phenomenon”. However, performance gains are dependent on the combination of When, Where and Who rules used to guide the worker assignment decision. So, the decision on worker training is also contingent on the decision which worker assignment rule to use. Findings have important implications for research and practice, providing guidance on how to design more resilient shops and supply chains.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Flexible capacity is widely recognized as a mean to accommodate uncertain market demand (e.g. Altendorfer et al. 2020; Chou et al. 2016; Fan et al. 2022). This potential becomes even more important with the increase of uncertainty caused by changes in consumer behaviour patterns witnessed recently (Ardolino et al. 2022). These changes together with shortages in manufacturing resources (Ivanov and Dolgui 2022) lead to systemwide disruptions that effect whole supply chains. Disruptions, such as those caused by COVID-19, create ripple and bullwhip effects that create uncertainties in demand within and across whole industries (e.g. KEK et al. 2022). In this context, flexibility is a main mean for firms to build resilience capability into their systems to survive and grow (Piprani et al. 2022; Badhotiya et al. 2022), specifically reconfigurability (Pansare and Yadav 2022).

The most flexible resource on the shop floor is often the worker; in practice workers are often flexible and can be shifted from one station to another (Erhard 2019; Hopp and Oyen 2004) reconfiguring systems at short notice. While it is obvious that the best performance can be obtained if workers are homogenous in terms of high levels of multi-functionality and efficiency, it is often not desirable to rely on such a highly skilled workforce. First, there is a trade-off between efficiency gains through standardization and flexibility (Assad Neto et al. 2021). Second, employing skilled workers is generally limited in real-world scenarios because of high wages in high-cost environments, such as developed economies (Mirzaei et al. 2021), and the absence of trained labour in less developed contexts (Zheng and Wang 2016).

Using a heterogeneous (Kim and Nembhard 2013; Lian et al. 2018) instead of a homogenous workforce can provide significant reduction in training needs and costs (Brusco and Johns 1998). If the workforces is heterogenous, then managers need to decide on the most efficient assignments of machines and workers, while keeping worker workload balanced (Vital-Soto et al. 2023). This decision has a major impact on efficiency and resilience. But in addition, managers also need to decide on worker training, since this determines the assignments that can be realized. Compared to a specialized system where specific tasks (or operations) can only be handled by one worker, cross-training creates a flexible system through overlapping skills that enable tasks to be processed by alternative workers (Bokhorst and Gaalman 2009). This enabling may occur in terms of so-called multi-functionality, which is the number of tasks a worker can execute, and in terms of efficiency, which is the rate at which a task can be executed.

There exists a broad literature on heterogeneous labour, specifically in the context of Dual Resource Constraint (DRC) shops, where workers are considered a constraining resource that can be transferred across a given set of stations (Xu et al. 2011; Araz and Salum 2010). However, this literature considers worker skills to be an environmental variable, and performance of, for example, labour assignment rules are evaluated taking this environmental variable into account. Meanwhile, there also exists a broad literature considering the DRC scheduling problem. But only very few studies have considered heterogeneous worker. For example, Liu et al. (2022) considered processing time reductions resulting from increased experience at the job level and motivation at the workforce level, Dunke and Nickel (2022) combined a multi-method approach to solve the DRC job shop scheduling problem with data uncertainty and worker efficiency variation over time, Lei and Guo (2014) considered a DRC interval job shop with heterogeneous workers considering environmental objectives, while Zheng and Sui (2019) considered the same problem but with the objective to minimize energy consumption. Geurtsen et al. (2023) provides an overview of the literature on resource scheduling that consider resources that reduce the processing time (speed-up resource). A major shortcoming of this scheduling literature is its restriction to deterministic contexts, i.e. a solution for a given set of jobs is obtained. But in practice, many shops experience stochastic contexts, specifically shops that operate as make-to-order shops, and were jobs can arrive at any moment in time. This makes the use of scheduling questionable. Moreover, again the worker skill levels are seen as environmental factors not under the influence of management. This means, some standard levels of heterogeneity are tested, and no specific matrices designed with the objective to improve performance.

To the best of our knowledge, the only two existing study that test different multi-functionality and efficiency matrices for heterogeneous labour with the objective to guide worker training and eventually improve performance are Brusco and Johns (1998) and Bokhorst et al. (2004a, b). Brusco and Johns (1998) tested different cross-training configurations, showing results that asymmetric efficiency matrices always outperformed symmetric matrices, which reinforces the importance of matrix design for performance improvement. However, these results are based on integer programming models and cost calculations. The actual operational impact of different labour multi-functionality and efficiency matrices on performance remains unknown. Bokhorst et al. (2004a, b) found that in parallel and job shop structures an equal multi-functionality and an equal machine coverage are important for achieving an optimal mean flow, whilst serial structures require more attention and unequal multi-functionality. Our study extends Brusco and Johns (1998) and Bokhorst et al. (2004a, b) by considering heterogeneous efficiencies and by drawing on the wider Operations Management literature to identify rules for matrix design. For example, the bowl phenomenon (McNamara et al. 2016) would suggest an increase in capacity at central stations through either increasing worker efficiency or multi-functionality at these stations. However, none of the matrices presented in the DRC literature creates this bowl shape. In this study, we therefore explore the broader Operations Management literature to identify different matrix designs for a DRC shop with heterogeneous labour to answer the following research question that motivates the study “What is the best design for multi-functionality and efficiency matrices in a high variety make-to-order flow shop with heterogeneous labour?”

We then use simulation to assess performance of the different designs in a DRC pure flow shop with high variability in demand and processing times. From a theoretical perspective, this integrates different related but so far unconnected streams of literature. It also reframes the DRC problem as a design problem, which is important when shops and entire supply chains are rebuild for resilience. From a practical perspective, we seek to provide guidance to managers on worker training in this type of shop, which is a common shop type, e.g. for companies that focus on producing prototypes and making small runs, e.g. of 1 to 4 units, sometimes referred to as “one offs” (Rossini et al. 2019; Thürer et al. 2015).

The remainder of this paper is structured as follows. In Section 2, we review the relevant DRC literature on heterogeneous labour in terms of multi-functionality and efficiency, and the literature on unbalanced lines. The simulation model used to evaluate performance is then described in Section 3 together with the design rules that emerged from the literature and the associated training matrices to be considered in our study. Finally, the results are presented, discussed, and analysed in Section 4 before conclusions are presented in Section 5, where managerial implications, limitations, and future research directions are also outlined.

2 Literature review

This study links into two streams of literature. First, the DRC literature that considers a heterogeneous workforce in terms of multi-functionality and efficiency. Second, the literature on unbalanced lines. Both are discussed in Sections 2.1 and 2.2 respectively.

2.1 Heterogeneous labour in DRC shops

This section reviews the literature on heterogeneous multi-functionality and heterogeneous efficiency. Note that we only focus on literature in stochastic environments. This excludes most of the literature on advanced scheduling techniques (e.g. Lei and Guo 2014, 2015; Li et al. 2016; Zheng and Wang 2016; Zhang et al. 2017; Li et al. 2016; Liu et al. 2022; Dunke and Nickel 2022; Gong et al. 2018), which presupposes that demand and capacity availability are known in advance and therefore deterministic. Most literature on stochastic environments approaches the problem of worker assignment in DRC shops via the greedy heuristic of When?, Who?, and Where?. The When rule just triggers the assignment decision and needs to be executed even for more advanced assignment procedures. Meanwhile, the Who rule is only meaningful if there is more than one worker available, while the Where rule is only meaningful if there is more than one station in need of a worker. Given our stochastic environment, it is very unlikely that both situations occur simultaneously, except for low load periods. We therefore consider that the focus on studies that use above greedy heuristic is justified.

2.1.1 Heterogeneous multi-functionality

Labour multi-functionality has been defined as the number of different departments or stations at which a worker can perform operations (Costa and Portioli-Staudacher 2021; Fry et al. 1995; Park and Bobrowski 1989). Nelson (1967) was one of the first to introduce the concept of labour multi-functionality, but then only considered a completely inflexible scenario, where each worker can work at only one station, and a completely flexible scenario, where each worker can work at any station. These scenarios were extended by two scenarios where worker can work at two or three stations in a five station DRC job shop by Park and Bobrowski (1989), while Park (1991) also included the scenario where a worker can work at four stations. A major conclusion from these earlier studies is that most of the benefits associated with worker multi-functionality can be realized without extreme high multi-functionality levels or full multi-functionality with all workers cross-trained on all machines (e.g. Fry et al. 1995; Campbell 1999). Fry et al. (1995) consequently only considered scenarios where workers can work at 1, 2 and 3 stations.

Felan and Fry (2001) later suggest that it is better to have a mix of workers with no multi-functionality and some workers with very high multi-functionality rather than all workers with equal multi-functionality. Meanwhile, Bokhorst et al. (2004a, b), Slomp et al. (2005) and Yue et al. (2008) incorporate the concept of chaining (Jordan and Graves 1995; Iravani et al. 2007) in cross-training configurations that link workers to machines. For example, Yue et al. (2008) showed that a minimum level of heterogeneous multi-functionality is desired and that long chain, where all workers have overlapping capabilities, is in all situations better than several short chains, where capabilities do not always overlap.

2.1.2 Heterogeneous efficiency

Labour efficiency is the level of service rate at which a worker can work at a station (Bobrowski and Park 1993). If a worker is assigned to a new station, then a productivity loss is likely to occur. The concept of multi-functionality does not consider this effect; rather workers are assumed to either have the maximum level of efficiency or to be unable to work at a station (see e.g. Thürer et al. 2019). This would be an ideal situation where operators are perfectly interchangeable without productivity losses due to different sets of skills or experience, an assumption first questioned by Bobrowski and Park (1993). Bobrowski and Park (1993) used three heterogeneous labour efficiency matrices: (i) each worker is fully efficient at one station but only realizes 95% of its service rate at the next four downstream stations and 85% at the remaining stations; (ii) the overall aggregate worker efficiency is decreasing, and the worker realizes 95% of its service rate at the next downstream station, 90% at the three following downstream stations and 80% at the remaining stations; and (iii) workers are divided in two groups, a senior group made up of five workers and a junior group made up of the four remaining workers, and junior workers are able to operate stations with an efficiency that can at best match the worst of the senior worker’s efficiency for those stations. Results in Bobrowski and Park (1993) show that efficiency is likely to dominate the labour assignment rules in contexts with heterogeneous efficiencies. This result was later confirmed by Bokhorst et al. (2004a, b), who found that heterogeneity outperforms homogeneity because the former increases the effectiveness of the Who rule. A similar finding was obtained by Thürer et al. (2020). While this result is important in terms of assignment rules, no real information on the impact of differences in the efficiency matrices could be gained. As expected, reducing worker’s average efficiency across stations results in worse performance.

The study by Bobrowski and Park (1993) was later extended by Malhotra and Kher (1994) who modelled three different heterogeneous efficiency matrices. In the first, workers where subdivided according to efficiency, e.g. worker A 100% efficient at all stations, worker B 95% efficient at all station and so on. In the second, stations where subdivided according to efficiency, i.e. all workers are 100% efficient at station 1, 95% at station 2 etc. The third resembles the matrices in Bobrowski and Park (1993). According to Malhotra and Kher (1994), rather than having both heterogeneous workers and stations (the third matrix) it is better to train workers in order to have some stations that always work faster, for example the first machine, or having some workers completely interchangeable and some others less efficient; the latter is similar to the senior and junior worker concept proposed by Bobrowski and Park (1993) and overlaps with the results in Felan and Fry (2001) on labour multi-functionality.

2.2 Theoretical background on unbalanced lines

Line balancing is an important problem in the Operations Management literature and has consequently received broad research attention (see the reviews by Boysen et al. 2008; Battaïa and Dolgui 2013). Line balancing may hereby focus on an equal distribution of work content across the stations of a line (e.g. Parvin et al. 2012), or on an equal distribution of capacity. The focus of this study is on the latter. Balanced lines – where service time means of stations are all equal – generally lead to best performance (Salveson 1955), but some studies also recognized the importance of unbalanced lines (i.e. Lau 1992; Shaaban and McNamara 2009), since they can perform equally well as balanced lines in certain contexts (McNamara, Shaaban, and Hudson 2016) but require less capacity. Unbalanced line hereby means that stations do not operate at the same service rate (McNamara et al. 2016), i.e. resulting in some stations being faster in processing a job than some other stations. This is equivalent to the impact of a heterogeneous labour force discussed in the context of DRC shops above, since the service rate is a result of the capacity, which itself is realized by the machine and the worker in a DRC shop. Machine and worker can constrain the service rate, being the impact of the worker typically more pronounced in less automated process, such as in high variety contexts.

One of the most important discoveries in the area of unbalanced lines was the “bowl phenomenon”. The bowl phenomenon was first observed by Hillier and Boling (1967), who demonstrated that the output of a serial production line with up to 4 stations with exponentially distributed processing times and limited inter-station buffers could be increased by unbalancing the line: faster stations should be positioned in the middle of the line. Hillier and Boling (1967, 1979) further argued that a symmetrical bowl shape was the optimal for all line length, while El-Rayah’s (1979) findings supported the bowl phenomenon for lines having up to 12 stations, and Pike and Martin’s (1994) for lines with 30 stations.

More recently, there has been a resurgence of interest in the bowl phenomenon (e.g. Lopes et al. 2020; McNamara et al. 2016; Romero-Silva and Shaaban 2019) and the literature explored the bowl phenomenon considering different aspects, such as the effect of variance of the operations times, the effect of the buffer capacity and the effect of considering unreliable lines. In these contexts, the bowl phenomenon may not always lead to the expected improvements (e.g. Romero-Silva et al. 2021). In general, the bowl phenomenon is argued to be more important in context with limited inventory buffer size. In this study, we will assess whether it has an impact in DRC shops where labour is heterogeneous.

3 Simulation

We model companies whose production follows a dominant sequence flow, i.e. a production line. A simulation model of a pure flow shop has been implemented in Simio, a specialized software for discrete event simulation (Rossini and Portioli-Staudacher 2018; Kundu et al. 2018). A stylized standard model of a pure flow shop will be used in this study to avoid interactions that may otherwise interfere with our understanding of the main experimental factors (Portioli-Staudacher et al. 2020). While any individual flow shop in practice will differ in many aspects from our stylized environment, the model used in this study captures the job and shop characteristics of high variety make-to-order flow shops, i.e. high processing time variability,

3.1 Overview of modelled shop and job characteristics

We have kept our flow shop relatively small since this allows causal factors to be identified more easily. Small systems provide a better insight into the role of operating variables and, in practice, large systems can often be decomposed into several smaller systems (Bokhorst et al. 2004a, b). The shop has five stations with unlimited buffers, which also allows for comparison with previous DRC studies (Davis et al. 2009; Park and Bobrowski 1989; Park 1991). Each station has two identical machines. We choose a staffing level of 50% (e.g. Felan et al. 1993). In other words, there are five workers that can be shifted across the ten machines and worker’s availability constrains the capacity. Only if a worker is assigned to a machine, the capacity of the machine is realized and orders can be processed. As in previous DRC research, we consider machine capacity to be constant and we instead focus on different levels of labour capacity (Thürer et al. 2019; Thürer et al. 2020).

As in most previous DRC studies, travel time is considered negligible. Operation processing times follow a 2-Erlang distribution with a mean equal to 60 time units (e.g. Thürer et al. 2020; Bokhorst et al. 2004a, b). Set-up times are considered sequence independent and consequently part of the operation processing time. The inter-arrival time of jobs follows an exponential distribution with a \(\lambda\) that ensures that workers are, on average, occupied for 90% of their time if a worker is chosen at random among the set of available workers. Due dates are set exogenously by adding a random allowance factor, uniformly distributed between 1600 and 1900 time units, to the job entry time. The minimum is set considering the maximum processing time and the routing length of jobs that is equal to the number of stations, five. The maximum was set such that the percentage tardy is neither too high nor too low. The percentage tardy should not be too high to avoid certain adverse effects, since rules that reduce the variance of lateness across jobs may even lead to an increase in the percentage tardy when due date allowances are too tight on average. The percentage tardy should not be too low to avoid our results being affected by incidental effects, as very few jobs would be responsible for the performance of the shop. Finally, the first come first served rule is used for priority dispatching.

3.2 Matrix design

The following design rules could be identified from the literature:

-

Design Rules from the Literature on Heterogeneous Multi-Functionality (Section 2.1.1): High levels of multi-functionality are not required. Rather, a small number of highly skilled workers (being all other workers lower skilled) is preferred to a scenario where all workers are equally skilled. Long overlapping chains are preferred.

-

Design Rules from the Literature on Heterogeneous Efficiency (Section 2.1.2): Efficiency matrices should focus on equipment heterogeneity, i.e. the first station works faster than the others, or labour heterogeneity, i.e. some workers are more proficient on all machines then the others. Asymmetric chaining configuration, such as the CT4 long chain configuration in Brusco and Johns (1998), are preferred to symmetric matrix configurations, such as the CT1 small chain configuration in Brusco and Johns (1998).

-

Design Rules from the Literature on Unbalanced Lines (Section 2.2): Placing faster workers at middle stations (bowl shape) can lead to lower throughput times. Placing faster workers at stations either at the beginning or the end of a line (inverted bowl) can lead to improvements in total completion time. Placing workers from slow to fast following the workflow (ascending order) can reduce work-in-process.

While these rules were obtained across differing contexts, and may not be directly comparable and transferable, they provide important indications on which matrix designs to consider in our study. Given these rules, eight different matrices for multi-functionality will be tested in this study as follows:

-

1.

Bowl: more workers are trained for central stations;

-

2.

Inverted bowl or bell: less workers are trained for central stations;

-

3.

Downstream increasing (DSI) or monotone ascending: less workers are trained for upstream stations;

-

4.

Upstream increasing (USI) or monotone descending: more workers are trained for downstream stations;

-

5.

Long chain: all workers are involved in a long chain of skill overlaps with the average number of skills that overlaps equal to 2 (as in Yue et al. 2008);

-

6.

Complete Long Chain: all workers are involved in a chain of skill overlaps with the average number of skills that overlaps equal to 2 (as in Yue et al. 2008) and the long chain is completed and closed with the first worker being able to operate on the fifth station (Inman et al. 2004);

-

7.

Small chain: two short chains with two workers being involved in each chain of skill overlaps with the average number of skills that overlap equal to 2 (as in Yue et al. 2008); and,

-

8.

Full multi-functionality (Full): all workers can work at all stations. This homogeneous scenario is considered to provide a baseline for comparison with the previous matrices.

In terms of efficiency matrices, we consider workers to be able to work at each station, however at different proficiency. For this reason, we only consider small, long chain and complete long chain. The different matrices for multi-functionality and efficiency considered in this study are summarized in Table 1, which indicates the stations at which a worker can work for the multi-functionality scenarios, and at which a worker realizes 100% efficiency for the efficiency scenarios. At other stations, workers can either not work (multi-functionality scenarios) or work at reduced efficiency. The efficiency loss \(\alpha\) of each worker is modelled at three levels: 10%, 20% and 30%, meaning that the worker needs p/(1-\(\alpha\)) time units to finish the job, where p is the processing time if the job was processed by a fully efficient worker. Since the average efficiency of the workers is reduced, we adjust the inter-arrival rate of jobs as in e.g. Thürer et al. (2019) to ensure comparable worker utilization levels across experiments. This adjustment is realized by simply multiplying the inter-arrival rate by the average level of efficiency.

3.3 Labour assignment rules

There are three major worker assignment decisions. First, when should the worker assignment decision be executed (i.e. the When Rule)? Second, for the case that there are multiple stations without a worker and therefore available to receive workers, where should a worker go (i.e. the Where Rule)? And third, for the case that more than one worker is not assigned to a station and is therefore available, who should be assigned to the station (i.e. the Who Rule)?

In this study, two When Rules are considered: (i) centralized, where a worker is eligible for transfer after each job completion; and, (ii) decentralized (exhaustive), where a worker is eligible for transfer once the queue of the current station is empty (or exhausted). We also consider three Where Rules: (i) maximum number of jobs in queue (Max Jobs), where a worker is transferred to the station with the longest queue measured in terms of number of jobs; (ii) earliest due date (EDD), where a worker is transferred to the station with the queue that contains the job with the most urgent due date; and, (iii) maximum efficiency (Max Eff), where a worker is transferred to the station where it has the maximum efficiency. For all three, the selected station may be the current station or a station without a worker. The Max Jobs rule was included as a standard rule that has been widely applied in previous research. The EDD rule was included due to its good performance in Jensen (2000), while the Max Eff rule should be considered because we consider a workforce that is heterogeneous in proficiency. Other rules, such as the shortest processing time rule were not considered since they did not lead to performance improvements in previous studies (see, e.g. Park and Bobrowski 1989). Finally, we also consider two Who Rules: (i) the Random rule, where the worker will be chosen randomly; and, (ii) the Max Eff rule, where the most efficient worker will be chosen from the set of available workers.

3.4 Experimental design and performance measures

The experimental factors are summarized in Table 2, for multi-functionality and Table 3, for efficiency. A full factorial design with 32 (2x2x8) scenarios for multi-functionality and 180 (2x3x2x5x3) scenarios for efficiency was used. Each scenario was replicated 100 times. Results were collected over 1.000.000 time units following a warm-up period of 400.000 time units. These parameters allowed us to obtain stable results while keeping the simulation runtime to a reasonable level. Three main performances measures are used to assess both workload balancing and delivery performance: (i) the lead time (i.e. the time when a job is completed minus the time when it entered the shop); (ii) the percentage of tardy jobs; and, (iii) the mean tardiness – that is Tj=max (0,Lj), with Lj being the lateness of job j (i.e. the actual delivery date minus the due date of job j). We do not explicitly consider training costs since these costs are idiosyncratic to each company. Similar holds for the revenue increase realized through the different matrices, to which training costs would have to be compared. We therefore do not consider cost, but companies can use the operational results to estimate costs applying their idiosyncratic cost and revenue factors.

4 Results

Statistical analysis of our results was first conducted using an ANOVA (Analysis of Variance). Given our experimental set-up, we conducted two ANOVA’s, one for multi-functionality and one for efficiency. For the efficiency related experiments, efficiency loss was treated as blocking factor, which is commonly used to model known sources of variance. Results are not presented given space restrictions. Most main effects, two-way interactions, three-way interactions, and the four-way interaction (for efficiency experiments) were shown to be statistically significant at \(\alpha =\) 0.05. Detailed results will be presented next to explore these performance differences. Section 4.1 first focusses on multi-functionality. Section 4.2 then presents the results for efficiency matrices. Finally, a discussion of results is presented in Section 4.3.

4.1 Performance assessment for multi-functionality

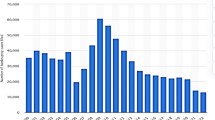

The results obtained for the experiments considering the eight different matrix configurations for multi-functionality are summarized in Fig. 1. We structured the presentation of results along the performance of the different matrix configurations, given that this is the focus of our study. The performance of each configuration is highly dependent on the assignment rules applied. The impact of assignment rules in isolation will be discussed further below.

As somewhat expected, Full results in best performance. However, Bowl realizes comparable performances, especially for Max Jobs (with both Centralized and Decentralized When Rule) and EDD (with Decentralized When Rule). The USI configuration realizes good performance with EDD and Centralized When Rule. With USI only 3 workers can work at station 4 and only 2 workers can work at station 5. EDD incentivizes the transfer of worker to downstream stations, which partly overcomes this shortage specifically if a Centralized When Rule is used, and workers can move more frequently. This also explains why Bell and DSI show poor performances with EDD and a Centralized When Rule. In general, USI performs well with EDD and poorly with Max Jobs, while DSI performs well with Max Jobs and poorly with EDD. Bell performs well with Max Jobs and a Centralized When Rule.

Meanwhile, Small Chain performs better than Long Chain and Closed Long Chain. Long Chain shows very poor performance with Max Jobs and a Centralized When Rule. Under this setting Long Chain leads to larger queues at downstream stations, especially in front of station 5, as shown in Table 4.

These larger queues lead to workers 4 and 5 having a higher utilization compared to other workers when Long Chain is used. This is due to station 4 and 5 only realizing a 50% utilization when worker 4 is at station 3. It only occurs with Max Jobs since the likelihood that worker 4 is at station 3 is higher compared to EDD. Closing the Long Chain improves performance only when Max Jobs and a Centralized When Rule is used, because at station 5 the utilization is increased, and consequently there are no large queues at downstream stations. Meanwhile, if the Max Jobs Where Rule is combined with a Decentralized When Rule, the Long Chain and Small Chain matrices have comparable performances. These findings are not in line with Yue et al. (2008), where long chains – in which all workers have overlapping capabilities – perform better than small chains, in a job shop with a Max Jobs Where Rule and a Centralized When Rule. This will be discussed further in Section 4.3 below.

Finally, and in terms of labour assignment rule, we observe that the Decentralized (exhaustive) outperforms the Centralized When rule with Max Jobs, except for Bell. For Bell only worker 2 and 4 can work at station 2 and 4, while worker 3 cannot. A Centralized When Rule increases the chances that a worker is shifted. Meanwhile, a EDD Where Rule performs better than Max Jobs for Full, Bowl and USI if the Decentralized When Rule is applied and for Small Chain, Long Chain, USI and Full if a Centralized When Rule is applied.

4.2 Performance assessment for efficiency

The results for the experiments considering the five different matrix configurations for efficiency are summarized in Fig. 2 for the Max Eff Who Rule. The results for the Random Who rule are provided in an Appendix since this factor was not significant. Note that results across the three different levels of efficiency loss \(\alpha\) (0,3; 0,2 and 0,1) are not directly comparable since an adjustment in inter-arrival time was required to ensure equal worker utilization. Differences across \(\alpha\) are therefore not discussed, which is justified by the focus of our study, which is on the impact of matrices for each setting of \(\alpha\). We again present results along the performance of the different matrix configurations, and the impact of assignment rules in isolation further below.

For all setting of \(\alpha\), Bowl performs better than Full if a Max Eff Where Rule and a Decentralized When Rule is used. This confirms the findings in the literature that heterogeneous configurations are better than homogeneous configurations. Differences compared to the results for multi-functionality can be explained by inter-arrival times being adjusted to account for differences in efficiencies across matrices. So, in a sense the comparison to Full is fairer for efficiency than for multi-functionality. The Bowl, Bell and DSI configuration perform poorly if a Centralized When Rule is used. This is explained by the fact that with Max Eff, workers tend to stay where they are more efficient, and a Centralized When Rule aggravates this effect. Meanwhile, USI works well with EDD, as in the multi-functionality scenario. DSI has workers that have an efficiency loss at upstream stations, however, when efficiency loss is low, DSI performs similarly to USI. This is also the reason why with Max Jobs, DSI outperforms Full, especially with a Decentralized When Rule while Bowl and Bell perform poorly.

Finally, in terms of worker assignment rule, we observe that with Max Jobs and EDD Where Rule, a Centralized When Rule outperforms a Decentralized When Rule for each configuration for low levels of \(\alpha\). But when \(\alpha\) is high, Decentralized is better for DSI and Full (with Max Jobs) and for Bowl and Bell (with EDD). In general, EDD outperforms Max Jobs and the Max Eff Where rule outperforms EDD, especially when \(\alpha\) is high. As shown in previous literature, if labour is heterogeneous in terms of proficiency, then efficiency considerations tend to dominate the choice of assignment rule.

4.2.1 Robustness analysis

Results in Fig. 2 highlight that with \(\alpha\) equal to 0,3, in addition to Bowl, also USI and Bell perform better than Full while DSI performs similarly to Full. This means that when workers select the station where they are most efficient, configurations where most efficient workers are placed at the central or upstream stations outperform configurations where workers are all equally efficient or where most efficient workers are placed downstream. This raises the questions: What would happen if the most efficient workers are placed at the second and fourth station? Do the previous findings hold? To answer these questions, we designed a new matrix where the maximum average efficiency is at station 2 and 4, while the least efficient stations are stations 1, 3 and 5. This matrix creates “2 peaks”. It takes from Bowl that the first and last stations have the lowest efficiency, and from Bell that the central station has the lowest efficiency. Results in Fig. 3 show that this new matrix performs well with EDD, when it performs better than Bowl, Bell and USI, especially with a Max Eff Who rule and a Decentralized When rule. This is because there are more efficient workers on downstream stations, especially at station four. In accordance with previous results, the new matrix does not perform well with Max Jobs, especially with Decentralized and with Max Eff Where rule. This largely confirms our previous findings (Fig. 4) .

4.3 Discussion of results

Our results confirm the DRC literature in that a heterogeneous workforce can perform similar to a homogeneous workforce in terms of multi-functionality and that a heterogeneous workforce can outperform a homogenous workforce in terms of efficiency (Bobrowski and Park 1993; Brusco and Johns 1998; Bokhorst et al. 2004a, b). It is further argued here that this is due to the experimental setting, being parameters typically adjusted in experiments related to efficiencies to create a fair comparison. Our results further confirm the literature on unbalanced lines in that placing more flexible workers at the middle stations is the best configuration (Shaaban and McNamara 2009). Our results extend this literature by showing that the bowl phenomenon is relevant in lines without limited inventory buffers if capacity is dual resource constrained. This is an important finding extending the applicability of the bowl phenomenon integrating two related but hitherto unconnected streams of literature. Moreover, results show that USI can outperform Bowl if combined with an EDD Where and a Centralized When Rule. In general, which matrix performs best is largely dependent on the assignment rules applied. There is a strong interaction between cross-training configuration and assignment rules. Another important implication is that the Where Rule appears to be more important in our study than the When Rule. This is in contrast to the general guideline in the DRC literature that the Where Rule has less of a performance impact than the When Rule (Xu et al. 2011). For example, USI and 2 Peaks should be combined with EDD, while DSI should be combined with Max Jobs Where rule.

In terms of assignment rules, our results confirm that EDD tends to move workers downstream (Thürer et al. 2019). At the same time, the Max Eff rule prevents workers to be shifted amongst stations. Both effects determine which matrices work best with these two rules, and which matrices should not be combined with these two rules. Bell, and DSI should not be combined with an EDD Where rule in the case of heterogeneous multi-functionality, USI should not be combined with Max Jobs while it performs well with EDD, and Long Chain should not be combined with Max Jobs. For heterogeneous efficiency, DSI and 2 Peaks should not be combined with a Max Eff Where rule; this is also valid for Bell, if combined with a Centralized When rule. Meanwhile, Bowl should not be combined with a Centralized Max Eff Where rule, especially if the efficiency loss is low. It performs however best with a Decentralized Max Eff Where rule. Finally, USI should be combined with a Max Eff Where rule or EDD, depending on the efficiency loss, DSI and Bell with Max Jobs and 2 Peaks with a EDD Where rule.

Finally, our results question Yue et al.’s (2008) argument that a Long Chain configuration outperforms a Small Chain since it allows for a better balance of the workload. This finding was obtained in a job shop where more than one worker can work at each station. In a pure flow shop, small chain may outperform long chain, unless the long chain is closed and a Centralized When and Max Jobs Where rule applied. The likelihood that downstream stations have a low utilization rate increases for Long Chain because worker 4 is shifted to work at station 3. Given the directed flow in the pure flow shop, this causes a build-up of the queue in front of station 4. This has important implications for future research and practice. If a directed flow is considered, it may be preferable to have some skills overlapping between some workers rather than having all workers with overlapping skills.

5 Conclusions

A major aim of the DRC literature is to exploit labour flexibility by shifting workers from one station to another to improve throughput times and delivery related performance. In this context, a heterogeneous workforce can provide significant reduction in training needs and costs compared to a homogenous workforce. Heterogeneous labour, specifically in the context of DRC shops, has been modelled either in the form of a multi-functionality matrix, which indicates whether a worker can work at a station, or an efficiency matrix, which gives the efficiency of each worker at each station. However, these matrices are typically considered to be an environmental factor, and, to the best of our knowledge, existing research did not seek to design matrices with the objective to improve performance. In response, this study asked: What is the best design for multi-functionality and efficiency matrices in a high variety make-to-order flow shop with heterogeneous labour? This research advances existing DRC theory by showing: (i) which are the best designs for multi-functionality and efficiency matrices in terms of performance improvement in a DRC flow shop; and (ii) how the performances of those matrices are dependent on the When, Where and Who worker assignment rules. A major contribution of this study is to highlight the potential that lies in the conscious design of worker skill matrices. Specifically, a bowl-shaped design showed much promise. However which design to choose is contingent on the labour assignment rule applied. In general, results show that a heterogeneous workforce can realize performance that is comparable or even superior to a homogeneous workforce. This overcomes the trade-off between increases in flexibility and increases in cost. It allows to build resilient shops and supply chains cost efficiently, which is of utmost importance in the current uncertain business environment.

5.1 Managerial implications

Our results show that lead time and delivery related performance can be improved if certain unbalances are created across stations. Our results answer the practical managerial question How to best unbalance a DRC line? Or better How to cross-train workers on a line in order to maximise lead time and delivery related performances? Managers can use the result of the present research to design training programs that create the best unbalances according to the worker assignment rules used. In fact, results also highlight the importance of choosing the right Where Rule in combination with a certain multi-functionality or efficiency configuration. For example, when workers are more trained to work at upstream stations, an EDD rule should be used, while, on the contrary, when workers are more trained to work on downstream stations, a Max Jobs rule should be used. It is of utmost importance, not to take the worker cross training decision without the decision on worker assignment rule that will be applied. Results also advise to prefer small chains of skills overlap over long chains of skills overlap in pure flow shops. This is different from findings for job shops and avoids low utilizations at downstream stations and the ensuing queue built up at those stations incurred otherwise.

5.2 Limitations and future research

A main limitation of our study is that we have only considered one layout configuration, which is a DRC pure flow shop. Future research could consider different layout configurations, such as job shops. The experimental setting could also be extended by incorporating other environmental factors, such as different coefficients of variation for the processing times, or different degrees of due date tightness. We recognize these limitations, but we also consider our experimental design to be justified by the need to keep our study reasonably focused. Future research could also remove some of the simplifying assumption of the model, for example, by considering transfer time, or learning and forgetting effects of the workers. We considered worker efficiency and multi-functionality to be static in order to keep our study focused, but fatigue or forgetting are likely to create dynamic efficiencies in practice. Future research could assess how dynamic efficiencies effect our results. Finally, future research could also consider the impact of higher-level planning functions, such as order release rules, which focus on line balancing in terms of work content. The literature reports that the bowl phenomenon is even more important in context with limited buffer size.

Data availability

Data are made available on request.

References

Altendorfer K, Schober A, Karder J, Beham A (2020) Service level improvement due to worker cross training with stochastic worker absence. Int J Prod Res 59(14):4416–4433

Araz ÖU, Salum L (2010) A Multi-Criteria Adaptive Control Scheme Based on Neural Networks and Fuzzy Inference for DRC Manufacturing Systems. Int J Prod Res 48(1):251–270

Ardolino M, Bacchetti A, Ivanov D (2022) Analysis of the COVID-19 pandemic’s impacts on manufacturing: a systematic literature review and future research agenda. Opera Manag Res 15(1–2):551–566

Assad Neto A, Sampaio J, Detro SP, Deschamps F, Santos EAP, Loures EDFR (2021) Reconciling process flexibility and standardization: a case study in the automotive industry. Opera Manag Res 14(3–4):507–524

Badhotiya GK, Soni G, Jain V, Joshi R, Mittal S (2022) Assessing supply chain resilience to the outbreak of COVID-19 in Indian manufacturing firms. Opera Manag Res 15(3–4):1161–1180

Battaïa O, Dolgui A (2013) A Taxonomy of Line Balancing Problems and Their Solution Approaches. Int J Prod Econ 142(2):259–277

Bobrowski PM, Park PS (1993) An Evaluation of Labor Assignment Rules when Workers are Not Perfectly Interchangeable. J Opera Manag 11:257–268

Bokhorst JA, Slomp J, Molleman E (2004) Development and evaluation of cross-training policies for manufacturing teams. IIE Transactions 36(10):969–984

Bokhorst JCA, Slomp J, Gaalman GJC (2004) On the Who-Rule in Dual Resource Constrained (DRC) Manufacturing Systems. Int J Prod Res 42(23):5049–5074

Bokhorst JAC, Gaalman GJC (2009) Cross-Training Workers in Dual Resource Constrained Systems with Heterogeneous Processing Times. Int J Prod Res 47(22):6333–6356

Boysen N, Fliedner M, Scholl A (2008) Assembly line balancing: Which model to use when? Int J Prod Econ 111(2):509–528

Brusco MJ, Johns TR (1998) Staffing a Multiskilled Workforce with Varying Level of Productivity: An Analysis of Cross-Training Policies. Decision Sci 29(2):499–515

Campbell GM (1999) Cross-utilization of workers whose capabilities differ. Mana Sci 45(5):722–732

Chou YC, Yen HY, Yu SJ (2016) Developing a temporary workforce transaction mechanism from risk sharing perspectives. Int J Prod Res 56(5):1865–1881

Costa F, Portioli-Staudacher A (2021) Labor flexibility integration in workload control in Industry 4.0 era. Opera Manag Res 14:420–433

Davis DJ, Kher HV, Wagner BJ (2009) Influence of workload imbalances on the need for worker flexibility. Com Indust Eng 57(1):319–329

Dunke F, Nickel S (2022) A multi-method approach to scheduling and efficiency analysis in dual-resource constrained job shops with processing time uncertainty. Com Indust Eng 168:108067

El-Rayah TE (1979) The effect of inequality of interstage buffer capacities and operation time variability on the efficiency of production line systems. Int J Prod Res 17(1):77

Erhard M (2019) Flexible staffing of physicians with column generation. Flex Serv Manuf J 33(1):212–252

Fan J, Zhang C, Liu Q, Shen W, Gao L (2022) An improved genetic algorithm for flexible job shop scheduling problem considering reconfigurable machine tools with limited auxiliary modules. J Manuf Syst 62:650–667

Felan JT, Fry TD, Philipoom PR (1993) Labor Flexibility and Staffing Levels in a Dual-Resource Constrained job Shop. Int J Prod Res 31(10):2487–2506

Felan JT, Fry TD (2001) Multi-Level Heterogeneous Worker Flexibility in a Dual Resource Constrained (DRC) Job-Shop. Int J Prod Res 39(14):3041–3059

Fry TD, Kher HV, Malhotra MK (1995) Managing Worker Flexibility and Attrition in Dual Resource Constrained Job Shops. Int J Prod Res 33(8):2163–2179

Geurtsen M, Didden JB, Adan J, Atan Z, Adan I (2023) Production, maintenance and resource scheduling: A review. Eur J Opera Res 305(2):501–529

Gong X, Deng Q, Gong G, Liu W, Ren Q (2018) A memetic algorithm for multi-objective flexible job shop problem with worker flexibility. Int J Prod Res 56:2506–2522

Hillier FS, Boling RW (1967) Finite Queues in Series with Exponential or Erlang Service Times-A Numerical Approach. Opera Res 15:286–303

Hillier FS, Boling RW (1979) On the Optimal Allocation of Work in Symmetrically Unbalanced Production Line Systems with Variable Operation times. Manag Sci 25(8):721–728

Hopp JW, Oyen PM (2004) Agile workforce evaluation: a framework for cross-training and coordination. IIE Transactions 36(10):919–940

Inman RR, Jordan WC, Blumenfeld DE (2004) Chained cross-training of assembly line workers. Int J Prod Res 42(10):1899–1910

Iravani SMR, Kolfal B, Van Oyen MP (2007) Call-center labor cross-training: it’s a small world after all. Manag Sci 53(7):1102–1112

Ivanov D, Dolgui A (2022) The shortage economy and its implications for supply chain and operations management. Int J Prod Res 60(24):7141–7154

Jensen JB (2000) The Impact of Resource Flexibility and Staffing Decisions on Cellular and Departmental Shop Performance. Eur J Opera Res 127:279–296

Jordan WC, Graves SC (1995) Principles on the Benefits of Manufacturing Process Flexibility. Manag Sci 41(4):577–594

KEK V, Nadeem SP, Ravichandran M, Ethirajan M, Kandasamy J (2022) Resilience strategies to recover from the cascading ripple effect in a copper supply chain through project management. Opera Manag Res 15(1–2):440–460

Kim S, Nembhard DA (2013) Rule mining for scheduling cross training with a heterogeneous workforce. Int J Prod Res 51(8):2281–2300

Kundu K, Rossini M, Portioli-Staudacher A (2018) Analysing the impact of uncertainty reduction on WLC methods in MTO flow shops. Prod Manuf Res 6(1):328–344

Lau HS (1992) On Balancing Variances of Station Processing times in Unpaced Lines. Eur J Opera Res 61(3):345–356

Lei D, Guo X (2014) Variable Neighbourhood Search for Dual-Resource Constrained Flexible Job Shop Scheduling. Int J Prod Res 52(9):2519–2529

Lei D, Guo X (2015) An Effective Neighbourhood Search for Scheduling in Dual-Resource Constrained Interval Job Shop with Environmental Objective. Int J Prod Res 159:296–303

Li J, Huang Y, Niu X (2016) A Branch Population Genetic Algorithm for Dual-Resource Constrained Job Shop Scheduling Problem. Com Indust Eng 102:113–131

Lian J, Liu CG, Li WJ, Yin Y (2018) A Multi-Skilled Worker Assignment Problem in Seru Production Systems Considering the Worker Heterogeneity. Com Indust Eng 118:366–382

Liu M, Liu Z, Chu F, Liu R, Zheng F, Chu C (2022) Risk-averse assembly line worker assignment and balancing problem with limited temporary workers and moving workers. Int J Prod Res 60(23):7074–7092

Lopes TC, Michels AS, Lüders R, Magatão L (2020) A simheuristic approach for throughput maximization of asynchronous buffered stochastic mixed-model assembly lines. Com Opera Res 115:104863

Malhotra MK, Kher HV (1994) An Evaluation of Worker Assignment Policies in Dual Resource-Constrained Job Shops with Heterogeneous Resources and Worker Transfer Delays. Int J Prod Res 32(5):1087–1103

McNamara T, Shaaban S, Hudson S (2016) Fifty years of the bowl phenomenon. J Manuf Syst 41:1–7

Mirzaei N, Hilletofth P, Pal R (2021) Challenges to competitive manufacturing in high-cost environments: checklist and insights from Swedish manufacturing firms. Opera Manag Res 14(3–4):272–292

Nelson RT (1967) Labor and Machine Limited Production Systems. Manag Sci 13(9):648–671

Pansare R, Yadav G (2022) Repurposing production operations during COVID-19 pandemic by integrating Industry 4.0 and reconfigurable manufacturing practices: an emerging economy perspective. Opera Manag Res 15:1270–1289

Park SP (1991) The Examination of Worker Cross-Training in a Dual Resource Constrained Job Shop. Eur J Opera Res 52:291–299

Park SP, Bobrowski PM (1989) Job Release and Labor Flexibility in a Dual Resource Constrained Job Shop. J Opera Manag 8(3):230–249

Parvin H, Van Oyen MP, Pandelis DG, Williams DP, Lee J (2012) Fixed task zone chaining: worker coordination and zone design for inexpensive cross-training in serial CONWIP lines. IIE Trans 44(10):894–914

Pike R, Martin G (1994) The bowl phenomenon in unpaced lines. Int J Prod Res 32(3):483–99

Piprani AZ, Jaafar NI, Ali SM, Mubarik MS, Shahbaz M (2022) Multi-dimensional supply chain flexibility and supply chain resilience: the role of supply chain risks exposure. Opera Manag Res 15(1–2):307–325

Portioli-Staudacher A, Costa F, Thürer M (2020) The use of labour flexibility for output control in workload controlled flow shops: A simulation analysis. Int J Indust Eng Comput 11(3):429–442

Romero-Silva R, Shaaban S (2019) Influence of unbalanced operation time means and uneven buffer allocation on unreliable merging assembly line efficiency. Int J Prod Res 57(6):1645–1666

Romero-Silva R, Shaaban S, Marsillac E, Laarraf Z (2021) The impact of unequal processing time variability on reliable and unreliable merging line performance. Int J Prod Econ 235:108108

Rossini M, Portioli-Staudacher A (2018) Supply Chain Planning: A quantitative comparison between lean and info-sharing models. Prod Manuf Res 6(1):264–283

Rossini M, Audino F, Costa F, Cifone FD, Kundu K, Portioli-Staudacher A (2019) Extending lean frontiers: a kaizen case study in an Italian MTO manufacturing company. Int J Adv Manuf Technol 104(5–8):1869–1888

Salveson ME (1955) The assembly line balancing problem. J Indust Eng 6(3):18–25

Shaaban S, McNamara T (2009) The effects of joint operations time means and variability unbalance on production line performance. Int J Manuf Technol Manag 18(1):59–78

Slomp J, Bokhorst J, Molleman E (2005) Cross-training in a cellular manufacturing environment. Com Indust Eng 48(3):609–624

Thürer M, Stevenson M, Protzman CW (2015) COBACABANA (Control of Balance by Card Based Navigation): An Alternative to Kanban in the Pure Flow Shop? Int J Prod Econ 166:143–151

Thürer M, Stevenson M, Renna P (2019) Workload Control in Dual Resource Constrained High-Variety Shops: An Assessment by Simulation. Int J Prod Res 57(3):931–947

Thürer M, Zhang H, Stevenson M, Costa F, Ma L (2020) Worker assignment in dual resource constrained assembly job shops with worker heterogeneity: an assessment by simulation. Int J Prod Res 58:6336–6349

Vital-Soto A, Baki MF, Azab A (2023) A multi-objective mathematical model and evolutionary algorithm for the dual-resource flexible job-shop scheduling problem with sequencing flexibility. Flexible Serv Manuf J, (in print)

Xu J, Xu X, Xie SQ (2011) Recent Developments in Dual Resource Constrained (DRC) System Research. Eur J Operation Res 215:309–318

Yue H, Slomp J, Molleman E, Van Der Zee DJ (2008) Worker Flexibility in a Parallel Dual Resource Constrained Job Shop. Int J Prod Res 46(2):451–467

Zhang J, Wang W, Xu X (2017) A Hybrid Discrete Particle Swarm Optimization for Dual-Resource Constrained Job Shop Scheduling with Resource Flexibility. J Intell Manuf 28:1961–1972

Zheng XL, Wang L (2016) A Knowledge-Guided Fruit Fly Optimization Algorithm for Dual Resource Constrained Flexible Job-Shop Scheduling Problem. Int J Prod Res 54(18):5554–5566

Zheng F, Sui Y (2019) A Bi-objective Model for Dual-resource Constrained Job Shop Scheduling with the Consideration of Energy Consumption. In 2019 In-ternational conference on industrial engineering and systems management (IESM) (pp. 1–6). IEEE

Funding

Open access funding provided by Politecnico di Milano within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Costa, F., Thürer, M. & Portioli-Staudacher, A. Heterogeneous worker multi-functionality and efficiency in dual resource constrained manufacturing lines: an assessment by simulation. Oper Manag Res 16, 1476–1489 (2023). https://doi.org/10.1007/s12063-023-00371-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12063-023-00371-2