Abstract

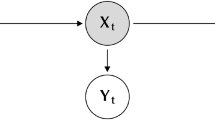

Using the expression for the unnormalized nonlinear filter for a hidden Markov model, we develop a dynamic-programming-like backward recursion for the filter. We combine this recursion with ideas from reinforcement learning and a conditional version of importance sampling to obtain a stochastic approximation scheme for estimating the desired conditional expectation. The scheme is then extended to a smoothing problem. Applying these ideas to the EM algorithm, we develop a reinforcement learning scheme for estimating the partially observed log-likelihood function, together with a stochastic approximation scheme that maximizes this function over the unknown parameter. The two procedures run on two different time scales, emulating the alternating ‘expectation’ and ‘maximization’ operations of the EM algorithm. We also extend the approach to a continuous state space problem. Numerical results are presented in support of our schemes.
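As a rough illustration of the backward recursion mentioned in the abstract, the following minimal Python sketch computes a filtered expectation for a finite-state hidden Markov model in two ways: by the usual forward unnormalized recursion, and by a backward, dynamic-programming-style recursion run once with terminal condition \(f\) and once with terminal condition \(\mathbf{1}\). This is a textbook exact computation under assumed notation, not the paper's sampling-based scheme; the names `pi0`, `P`, `lik`, `obs`, `backward_value`, `filter_backward` and `filter_forward` are hypothetical and chosen only for this example.

```python
# Assumed (hypothetical) setup: finite state space {0, ..., S-1},
# pi0[i]    = P(X_0 = i),
# P[i][j]   = P(X_{k+1} = j | X_k = i),
# lik[j][y] = P(Y_k = y | X_k = j),
# obs       = [y_1, ..., y_n].

def backward_value(P, lik, obs, terminal):
    """Backward recursion: V_n = terminal,
    V_k(i) = sum_j P[i][j] * lik[j][y_{k+1}] * V_{k+1}(j)."""
    V = list(terminal)
    S = len(V)
    for y in reversed(obs):  # processes y_n first, y_1 last
        V = [sum(P[i][j] * lik[j][y] * V[j] for j in range(S)) for i in range(S)]
    return V

def filter_backward(pi0, P, lik, obs, f):
    """E[f(X_n) | y_1..y_n] as a ratio of two backward 'value functions'."""
    Vf = backward_value(P, lik, obs, f)               # terminal condition f
    V1 = backward_value(P, lik, obs, [1.0] * len(f))  # terminal condition 1
    num = sum(p * v for p, v in zip(pi0, Vf))
    den = sum(p * v for p, v in zip(pi0, V1))
    return num / den

def filter_forward(pi0, P, lik, obs, f):
    """Same quantity via the forward unnormalized filter, then normalization."""
    alpha = list(pi0)
    S = len(alpha)
    for y in obs:
        alpha = [sum(alpha[i] * P[i][j] for i in range(S)) * lik[j][y]
                 for j in range(S)]
    return sum(a * fx for a, fx in zip(alpha, f)) / sum(alpha)

# Illustrative numbers only (not taken from the paper).
pi0 = [0.6, 0.4]
P = [[0.9, 0.1], [0.2, 0.8]]
lik = [[0.7, 0.3], [0.1, 0.9]]
obs = [0, 1, 1]
f = [0.0, 1.0]  # indicator of state 1, so the result is P(X_n = 1 | obs)

print(filter_backward(pi0, P, lik, obs, f))
print(filter_forward(pi0, P, lik, obs, f))
```

The two printed values should agree up to floating-point error. In a sampling-based scheme such as the one the abstract describes, the exact sums over states would presumably be replaced by simulated transitions and incremental updates; that step is not shown here.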

Notes

Double, because the importance sampling measures for the two ‘value functions’ corresponding to the terminal conditions \(f\) and \(\mathbf{1}\) differ, unlike in the non-adaptive case, where the measure was fixed as the common \(q(\cdot | \cdot )\).

References

Borkar V S and Jain A V 2014 Reinforcement learning, particle filters and the EM algorithm. In: Proceedings of the Workshop on Information Theory and Applications, San Diego, February

Bain A and Crisan D 2009 Fundamentals of stochastic filtering. Berlin–Heidelberg: Springer Verlag

Asmussen S and Glynn P W 2007 Stochastic simulation: algorithms and analysis. New York: Springer Verlag

Borkar V S 2009 Reinforcement learning – a bridge between numerical methods and Monte Carlo. In: Sastry N S N, Rao T S S R K, Delampady M and Rajeev B (Eds.) Perspectives in mathematical sciences I: probability and statistics. Singapore: World Scientific, pp. 71–91

Zakai M 1969 On the optimal filtering of diffusion processes. Zeitschrift für Wahrscheinlichkeitstheorie und Verwandte Gebiete 11(3): 230–243

Borkar V S 1991 A remark on control of partially observed Markov chains. Annals of Operations Research 29(1): 429–438

Borkar V S 1991 Topics in controlled Markov chains. Pitman Research Notes in Maths. No. 240. Harlow, UK: Longman Scientific and Technical

Elliott R J, Aggoun L and Moore J B 1995 Hidden Markov models: estimation and control. New York: Springer Verlag

Ahamed T P I, Borkar V S and Juneja S 2006 Adaptive importance sampling technique for Markov chains using stochastic approximation. Operations Research 54(3): 489–504

Lindsten F and Schön T B 2013 Backward simulation methods for Monte Carlo statistical inference. In: Foundations and Trends in Machine Learning, vol. 6(1), Hanover, MA: NOW Publishers, pp. 1–143

Cappé O, Moulines E and Rydén T 2005 Inference in hidden Markov models. New York: Springer Verlag

Borkar V S 2008 Stochastic approximation: a dynamical systems viewpoint. New Delhi, India: Hindustan Book Agency and Cambridge, UK: Cambridge University Press

Dempster A P, Laird N M and Rubin D B 1977 Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society Series B 39(1): 1–38

Hirsch M W 1989 Convergent activation dynamics in continuous time networks. Neural Networks 2: 331–349

Additional information

Work of VSB supported in part by a J C Bose Fellowship and a grant for ‘Distributed Computation for Optimization over Large Networks and High Dimensional Data Analysis’ from the Department of Science and Technology, Government of India. A part of this work was presented at the Workshop on Information Theory and Applications, San Diego, CA, February 2014 [1].

Cite this article

BORKAR, V.S., JAIN, A.V. Reinforcement learning, Sequential Monte Carlo and the EM algorithm. Sādhanā 43, 123 (2018). https://doi.org/10.1007/s12046-018-0889-8

DOI: https://doi.org/10.1007/s12046-018-0889-8