Abstract

New consumer needs have led industries to create virtual platforms on which users can customize products by combining options in a practically unlimited number of ways. This has made it possible to expand sales by offering a choice wide enough to satisfy most requests. The dynamic and flexible evolution of factories is enabled by new technologies such as robotization and 3D printing, recognized as two of the pillars of Industry 4.0. The main aim of this paper is to define a workflow for the creation and manufacture of personalised jewellery based on faces with different emotional expressions. To date, few works in the literature investigate the intersection between smart manufacturing and emotion recognition, and these mainly concern improving human–machine interaction. The authors seek innovation at the intersection of three fields of study, namely parametric modelling, smart manufacturing and emotion recognition, in order to create personalized and innovative manufacturable models. To this end, an application has been developed that exploits both visual scripting, typical of parametric modelling, and textual scripting in the Python programming language. The resulting algorithm uses a machine learning model for emotion recognition that assigns a label to each user-generated face, validating the effectiveness of the method.

1 Introduction

Facial expressions play an important role in non-verbal communication. They matter in daily communication between human beings as indicators of feelings, allowing people to express their moods. Consequently, information on facial expressions is often used in automatic emotion recognition systems. Traditional methods analyze human expressions through static images or frames extracted from video.

In addition, the development of digital skills and the increasing popularity of additive manufacturing tools, including domestic ones, are driving people to seek out and produce customized objects. It is up to research to illustrate the potential that this new branch of object customization can offer consumers. The aim of this work is to create a complete workflow that allows parametric modelling of a face and its expressions to be used in smart manufacturing, validating the modelling with automatic recognition techniques. Each user will be able to reconstruct his or her own face and use it to customize a parametric jewellery object that is physicalized using a 3D printer and then cast through traditional techniques.

The 3D geometry of a human face is required for a wide range of applications, including 3D face recognition and authentication [21, 55], simulation of facial plastic surgery [45], simulation of facial expression [75] and facial animation [47]. The challenge in developing a facial model is to derive a model that is ever closer to reality and, at the same time, can be used efficiently for a specific purpose. In this work the authors initially used parametric modeling to create a set of faces which, when the input parameters are modified, reproduce the different emotions.

For the workflow presented here to be effective, its components (modelling, emotion recognition and additive manufacturing) must interact effectively with each other. Specifically, through the use of scripts, the parametric DAG will send .STL files to the printing platform and images to the machine learning (ML) component for recognition.
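As an illustration of the hand-off to the printing platform, the following is a minimal sketch of how a triangle mesh could be serialized as an ASCII STL file from a script. The function names `triangle_normal` and `to_ascii_stl` are hypothetical and are not taken from the authors' implementation, which relies on the export facilities of the parametric environment.

```python
def triangle_normal(a, b, c):
    """Unit normal of triangle (a, b, c) using the right-hand rule."""
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = (nx * nx + ny * ny + nz * nz) ** 0.5
    if length == 0:
        return (0.0, 0.0, 0.0)  # degenerate triangle
    return (nx / length, ny / length, nz / length)


def to_ascii_stl(name, vertices, faces):
    """Serialize a triangle mesh (vertex list + index triples) as ASCII STL text."""
    lines = [f"solid {name}"]
    for i, j, k in faces:
        a, b, c = vertices[i], vertices[j], vertices[k]
        nx, ny, nz = triangle_normal(a, b, c)
        lines.append(f"  facet normal {nx:.6e} {ny:.6e} {nz:.6e}")
        lines.append("    outer loop")
        for x, y, z in (a, b, c):
            lines.append(f"      vertex {x:.6e} {y:.6e} {z:.6e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"
```

The returned string can be written to a `.stl` file and opened in the slicing software of the printer.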

1.1 Parametric modelling for manufacturing

In order to maintain competitiveness in the long term, industries must increase their ability to respond quickly to customer needs, satisfying requests and producing numerous variants of the same product even in small batches [29]. Individualization and personalization of products lead to manufacturing concepts like X-to-order (Engineer-to-Order, Make-to-Order, Build-to-Order and Configure-to-Order) [72] and finally to mass customization, which means manufacturing products customized according to customers’ needs at production costs similar to those of mass-produced products. Traditional factories cannot meet these production requirements. Only digitized factories are able to sustain such a level of dynamism in large-scale production.

The digitization of the productive industry is based on nine pillars: Internet of Things (IoT), big data, cloud, system integration, augmented reality, cyber security, autonomous robots, simulation and Additive Manufacturing (AM). These nine pillars create physical-digital production systems in which machines and computers are integrated. Computer systems such as parametric and generative modelling play an important role in digital factories. Traditional CAD systems allow designers to create and display extremely complex objects with precise details; however, these static systems do not allow the exploration of variants in the design space. Parametric Design (PD) is a tool that requires an explicit visual dataflow in the form of a graph known as a Directed Acyclic Graph (DAG). This method defines a geometry as a logical sequence of linked operations, allowing the generation of very complex 3D models. PD permits a wider range of design possibilities to be explored than traditional processes, supporting human creativity and producing design variations simply by changing the input parameters [57].
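The idea of a parametric definition as a DAG can be sketched in a few lines of Python. The example below is only illustrative: the node names, the ring parameters and the function `evaluate_ring` are hypothetical, and a visual-scripting environment builds and evaluates such a graph interactively rather than through code like this.

```python
import math
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def evaluate_ring(params):
    """Evaluate a tiny parametric definition expressed as a DAG.

    Each node maps to (function, list of upstream node names).
    """
    nodes = {
        "radius": (lambda: params["finger_diameter"] / 2.0, []),
        "band_width": (lambda: params["band_width"], []),
        "circumference": (lambda radius: 2.0 * math.pi * radius, ["radius"]),
        "band_area": (
            lambda circumference, band_width: circumference * band_width,
            ["circumference", "band_width"],
        ),
    }
    # Topological order guarantees that inputs are computed before their users.
    graph = {name: deps for name, (_, deps) in nodes.items()}
    values = {}
    for name in TopologicalSorter(graph).static_order():
        func, deps = nodes[name]
        values[name] = func(*(values[d] for d in deps))
    return values
```

Changing a single input, for example `finger_diameter`, and re-evaluating the graph propagates the change to every downstream node, which is the behaviour that sliders expose in a visual editor.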

The complex virtual geometries generated through algorithmic modelling can be achieved only with the aid of robotic processes. Customisation and complexity are bringing the concept of uniqueness back into large scale production. In particular, in order to test the workflow proposed in this paper, an application was realized for the jewelry sector. This application consists in the creation of a parametric ring, the variations of which are obtained by simply varying numerical sliders. The top part of this object consists of faces representing the various emotions, each of which is validated by an automatic label generated by the ML. Each user, by manipulating the different sliders, can select and combine the different expressions that will always result in an object that can be manufactured by stereolithographic 3D printing.

1.2 Facial and emotion recognition

The face is the first non-verbal communication tool and the first point of interaction between human beings. It changes according to what a person feels at a given moment and, therefore, according to the emotion that moment arouses. Emotion is expressed by the slightest change in the facial muscles, and the face continues to change until the emotion is fully expressed; such bodily changes are a fundamental channel of information on the emotional state of a person at that particular moment [46].

In the analysis of facial expressions, reference is made to facial recognition and to the different movements and changes that occur in the face. Emotion is often expressed through subtle changes in facial features, such as the stretching of the lips when a person is angry, the lowering of the corners of the lips when a person is sad [25], or changes in the eyebrows or eyelids [34]. The movement of the face takes place in a specific space defined by the reference points of a face (facial landmarks). The dynamics of change in this space can reveal emotions, pain and cognitive states and regulate social interaction. Thus, the movement of the reference points of a face, such as the corners of the mouth and eyes, constitutes a “space of reference points” [63].

Facial expressions are an important tool in non-verbal communication. The role of the classification of facial expressions is useful for the behavioral analysis of human beings [46] and the automated analysis of facial expressions is important for effective human–computer interaction. The facial expression is processed in order to extract information from it and to recognize the six basic expressions. This is called Emotion Recognition Method.

Research has developed different approaches and methods for the analysis of fully automatic facial expressions, useful in human–computer interaction or computer-robot systems [2, 38, 42, 51].

Neural networks and machine learning techniques make facial expression analysis possible [30, 44, 61].

1.3 Parametric modeling for animations: emotion animation

The modelling of emotions in a Virtual Environment (VE) owes its diffusion and constant improvement to the gaming industry. Two different groups of virtual characters can be distinguished. The first group consists of characters, or non-playable characters (NPC), which represent artificial agents [16, 20, 53]. The second group consists of avatars, also known as embodiments, which are the virtual representation of a user. To obtain realistic representations, the characters must simulate human behaviour, including emotional behaviour. A virtual character is in fact nothing more than a 3D mesh made up of a set of vertices and faces. To give expression to these 3D models, an animation process must be introduced. This process requires a designer, or animator, who manually builds a complex system of point movements that simulates human behaviour such as an emotion [7, 26].

The main method for character animation is known as rigging and consists of two phases. In the first phase, known as rig building, an ordered, hierarchical structure similar to a skeleton is built, to which a displacement field that simulates movement is applied [8, 56]. For each rig a process called weight painting must be carried out, in which the designer assigns a weight, i.e. the ability of a given rig to influence a set of vertices. Essentially, each rig is assigned an area of influence, where the number of vertices selected is determined by the weight assigned to that rig. Thanks to this system, when a rig moves it imposes its displacement on all the vertices in its area of influence, as well as on its sub-hierarchies of rigs, thereby transforming the model. The second phase starts when the rig structure is complete and consists of the actual animation: the designer defines the displacement vector in terms of time, position and speed [37, 68]. The main advantage of the method is that the skeleton is independent of the animation, so several expressions can be produced with the same skeleton or rig [58]. The method is extremely widespread and well documented, and can be applied on a wide variety of platforms (Blender, Unity, etc.). However, there is no specific technique for building the rig for facial expressions, and each designer defines the skeleton according to need. One example of rig distribution driven by design needs is the creation of virtual avatars with a very complex skeleton for accurate simulation of eye movement, in order to improve human–machine interaction [65]. In contrast, playable characters have complex rigs to achieve realistic movements, at the expense of facial expression.
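The effect of weight painting can be sketched as follows. This is a simplified illustration with a single rig and one scalar weight per vertex; the function name `apply_rig` and the animation parameter `t` are assumptions made for the example and do not describe any particular animation package.

```python
def apply_rig(vertices, weights, displacement, t):
    """Displace each vertex by t * weight * displacement.

    vertices     -- list of (x, y, z) tuples
    weights      -- one weight in [0, 1] per vertex (the painted influence)
    displacement -- (dx, dy, dz) imposed by the rig at full animation
    t            -- animation parameter in [0, 1]
    """
    dx, dy, dz = displacement
    moved = []
    for (x, y, z), w in zip(vertices, weights):
        moved.append((x + t * w * dx, y + t * w * dy, z + t * w * dz))
    return moved
```

A vertex with weight 1 follows the rig completely, a vertex with weight 0 stays in place, and intermediate weights give the smooth falloff that makes the deformation look natural.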

Another animation method, known as blend shapes or morph targets, has been used successfully in recent years. With this approach the designer manually deforms the mesh point by point for the initial and final configurations, and the animation is obtained by interpolating between the positions of these two limit configurations [1, 17, 67]. This method allows the designer to deform the mesh freely, without the limitations imposed by rig structures. Regardless of the method adopted, animation is a complex and extremely time-consuming process that requires advanced modelling tools; for this reason the quality of the animation depends on the skills of the author.

1.4 Parametric modeling for emotion animation

The research proposed in this paper is based on three distinct topics that correspond to three poorly-related research areas. The authors believe that innovation should be sought in the intersection areas of different sectors and for this reason they have chosen to investigate the intersections between manufacturing and emotion modelling and recognition.

The paper is structured as follows: Sect. 2 presents the background in which the three research topics (parametric modelling, animation and smart manufacturing) should be framed; Sect. 3 illustrates the theoretical and logical process that gives life to the dynamic 3D model that generates and validates the emotions modelled; in Sect. 4 the implementation of the method and the results obtained and their validation by machine learning label are shown; finally, the conclusions and open issues to be explored in the future are drawn.

2 Related works

To date, there are few works in the literature investigating the intersection between smart manufacturing and emotion recognition, and these are mainly related to improving human–machine interaction [14, 74]. On the other hand, it is not possible to identify work that exploits modelling and emotion recognition as a design technique for customizable and innovative objects. For this reason, the literature reviews of the three different fields: emotion recognition, parametric modelling and additive manufacturing are given separately below. The lack of work in the literature on this intersection makes it clear how it is not adequately investigated and how it can be an area of expansion for customizable object manufacturing.

2.1 Parametric modelling for emotion animation

One of the first parametric models of the human face in computer graphics was “Candide”, first published in 1987 by Rydfalk [59] and then updated in 2001 by Ahlberg [ahlberg2001candide]. The “Candide” model implements a set of action units (AUs), which represent micro-expressions derived from each person’s natural expressions. Combinations of AUs can replicate a specific human emotion. The accuracy with which a model in a Virtual Environment represents emotion is also extremely important, a concept first introduced by Fabri et al. [36]. That research highlights how important accuracy of expression is in the absence of verbal language. The importance of communicating emotions in a virtual environment has been explored in works by Bertacchini et al. [9, 12, 15], which analyse how a guide that displays human emotions creates a more immersive environment, even in the case of augmented reality.

Wu et al. [73] create a Facial Expression Feature in a 3D character. This feature still exploits human performance: it is based on a video recording from which six emotions are recognized and projected onto a 3D avatar. However, these expressions are created a priori, so they are static, cannot be modified and can only be applied to a single 3D model. Kim et al. [43] propose a novel method to create emotive 3D models. They use image processing techniques to extract information from pictures of humans and map the emotion onto an avatar in a Virtual Environment (VE). These works show a clear improvement in the creation of virtual 3D models able to simulate emotions, but the most accurate techniques still rely on reading human expressions from images or videos. Animations made with purely manual techniques are less realistic, even if face landmarks are used, as defined in the previous section [50, 63]. In this sense it is important to consider the work of Molano et al. [53], who tried to create a facial animation process that could be applied to any type of humanoid 3D model, even to several models together, without the need for an actor from whom to extrapolate human emotions.

2.2 Emotion recognition

According to a survey by Samal and Iyengar [60], research on the automation of facial expression recognition was not very active before the 1990s. Previously, Ekman & Friesen [35] had devised a system for coding the actions of the human face, called FACS (Facial Action Coding System), which encodes different facial movements in Action Units (AU) based on the activity of the facial muscles that generates temporary changes in facial expression according to specific organizations. The movements of one or more facial muscles thus determine the six expressions recognized as universal: fearful, happy, sad, angry, disgusted and surprised. In their works Bartlett et al. [5, 6] attempted to automate FACS annotation by exploring various representations based on holistic analyses, wrinkles and flow-of-motion fields. The results indicated that computer vision methods can simplify this task. Since then, there have been many advances in face-related fields, such as face detection [69], facial landmark localization [76] and face verification [19, 27]. These advances have helped improve facial expression recognition systems and data set collection.

Many emotion recognition approaches use neural networks only to classify hand-designed, pre-extracted features, although end-to-end learning methods are becoming increasingly popular. Corneanu et al. [28], Matsugu et al. [52] and Viola et al. [69] provided a primary classification for emotion recognition using multimodal approaches. Subsequently, Li et al. [49] stated that emotion recognition is based on visual information and conducted an experiment based on smile recognition. Subjects were asked to produce a smile, and emotion recognition in 3D and in 2D was compared. More recently, Anil et al. [3] surveyed the techniques used for emotion recognition together with the accuracies measured on various databases, differentiating between 2D and 3D techniques. They carried out a standard classification consisting of algorithms that fall into the category of methods based on geometric characteristics and appearance [18, 33]. Wibowo et al. [70] show that 3D modeling techniques make it possible to simulate and understand the emotions in a face. Virtual models capable of simulating human emotions have proved significant not only in the computer industry but also in specific contexts, such as interaction with users with autism spectrum disorders.

2.3 State of the art in digital and physical tools for manufacturing

Additive manufacturing is one of the three main components of the workflow presented in this paper. To identify the most appropriate technology for physicalizing prototyped objects, an analysis of the main available numerical control technologies was conducted. As a result of the analysis presented below, stereolithographic resin printing was identified as the most appropriate technology for producing jewelry models. It was chosen because it has low production costs and relatively short printing times. In addition, as the authors have already shown in their work on parametric jewelry, print files can be transferred automatically and in optimized form directly from the generative DAG in Grasshopper [11]. Several authors have also shown how digital design tools have evolved beyond their initial use and can be considered collaborative and output management tools for manufacturing [13, 32, 54]. Parametric modelling in modern digital design tools has introduced the modification, through parameters, sliders or numerical inputs, of three-dimensional models obtained through a strategic arrangement of rules in the form of an explicit data flow [31]. This ability is due to the possibility of adding simulation tools and programming complex tasks associated with parametric and generative modeling techniques. Besides producing many variants of the same model, these tools provide effective information management for generative design strategies. Jabi defines Parametric Design as: “the process based on algorithmic thinking that enables the expression of parameters and rules that, together, define, encode and clarify the relationship between design intent and design response” [41]. This sentence summarises the complexity of the concept of parametric modelling, which combines mathematics, geometry, logic and computer science applied to Art and Design.

Many authors still discuss the univocal definition of parametric design techniques and theory, for example Burry [22] and Woodbury [71]. According to the latter, parametric design is to be considered a large family of techniques that drive changes in geometry through changes in parameters: “Design is change. Parametric modelling represents change.” The algorithmic structure of parametric tools lends itself to the application of iterations and loops; if these are applied with a specific design intent, the process can be defined as Generative Design. In addition, scripting is a fundamental tool for the creation of rig animations, as it allows the displacement vectors and their variation to be defined [53].

The 3D models generated with parametric modelling are intended for additive manufacturing and are physicalised using 3D printers. “3D printing” is based on building real parts layer by layer from virtual data, be it with power glue, laser, UV light processing or electron beam [40]. In recent years “Additive Manufacturing” has become an important new field of engineering, and it is the professional application of the concept of “3D printing”. It allows 3D models to be produced in an assortment of reliable materials, including carbon, steel, aluminum, glass, high-performance plastics and ceramics [24, 39]. With AM within Industry 4.0, the ultimate purpose of the application becomes less important: whether the goal is “design for function” or “design for production”, cutting-edge technologies can be combined with increasingly ambitious industrial objectives [4]. Since 1987, when the first 3D printer was invented, the concept of “Rapid Prototyping” (RP) has become a useful and fast tool to create and materialize ideas and design concepts. This is possible thanks to physicalizable digital 3D models from various fields of engineering, industry and jewelry, up to, for example, working pieces of machinery. The limits on the use of 3D printers within industry are linked to various technological aspects that initially restricted their use. The materials, the complexity of the models to be made and the small print size led researchers and developers of business innovation towards fields where prototyping of small and not too complex models was required.

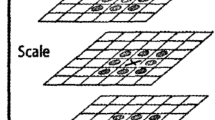

This has driven remarkable technological development of RP over the last 20 years, although there are still limitations related to surface finish and to measurement uncertainty between the nominal model and the model obtained by the process. The technology provides the complete freedom of shape needed to produce customized objects, to the point that 3D printers capable of building concrete constructions have been developed [23]. The technological advancement of 3D printers and the concept of RP have led to other types of applications within the field of industrial design: “Rapid Manufacturing” (RM) and “Rapid Tooling” (RT) [40]. RM is a production process designed to obtain complete products that are immediately usable without the need for subsequent finishing phases [48]. RT, on the other hand, is a design and creation process connected to the revolution in the manufacturing and design of production tools, which must increasingly respond to the demands of Industry 4.0 [64]. Figure 1 shows and schematizes the various additive processes available for making objects with the aid of AM. The basic principle lies in creating each artifact as a superimposition of layers printed one on top of the other, which give shape to the final 3D model. All these processes differ from traditional ones, such as subtractive or formative manufacturing, in which the raw material is removed or shaped [66].

There are three main technologies used for 3D printing: Fused Deposition Modeling, Stereolithographic Apparatus, and Selective Laser Sintering [62].

Fused Deposition Modeling (FDM) operates on an additive principle, releasing the material in layers. The plastic filament, wound on a reel, is liquefied inside a melting chamber and then released by an extruder nozzle onto the printing plate (bed), following a pattern of lines that form each layer on top of the previous ones. Stereolithographic Apparatus (SLA) was the first technique adopted in rapid prototyping. It uses a tank containing a special liquid resin that polymerizes when hit by light (photopolymerization); the printing plate, called the bed, is the surface designed to accommodate the resin and can move up and down. A laser beam is projected through a system of mirrors, which shapes the liquid surface and modulates a raster image that is used to reconstruct the first section of the object to be created. After the first scan, the bed lowers so that the resin can form a new liquid surface, and a subsequent laser scan generates a second section. The process is repeated until the desired object is completed. Selective Laser Sintering (SLS) is based on the use of powders that can be thermoplastic, metallic or siliceous. The sintering of the powders, i.e. their compaction and transformation into an indivisible compound, is performed by a laser, thus generating the prototype to be printed. To date, the industry uses SLA printers with wax-based resins to create complex jewelry objects that would otherwise be impossible to produce. The printed models are subsequently cast with artisan processes to obtain jewels in gold, silver or other metals.

3 Methods

As stated in the previous sections, this work illustrates a workflow that allows 3D models capable of showing emotional facial expressions to be produced and verified for manufacturing. Figure 2 shows, in the form of a graph, the main steps and interactions that constitute the proposed workflow. As input, the program takes a 3D model of a static face; as output, it returns models for additive manufacturing that are capable of conveying emotions through facial expressions. The first step concerns the creation of a basic element of the model, called rig building, which can deform the mesh representing the face at specific points. The second step is a verification process in which ML technologies are exploited to certify success in modeling emotions. Face recognition technology is used to return a recognition percentage for the 6 main emotions communicated by the human face. In the last step, the 3D models are prepared for additive manufacturing. In this phase, for example, the faces obtained can be joined to create a jewel that will then be physicalized with the aid of a 3D printer. The steps that structure the workflow are described in detail below.

3.1 Emotion modelling and animation

The starting point for the emotion animation system is an input model of a human face with a regular, well-structured 3D polygon mesh. These models are intended for manufacturing, so there are no requirements on the texture and its mapping, as there are for 3D models intended for VE. This is a necessary and sufficient condition for starting the process identified as step 1 of the workflow. In this phase, the characteristic points of the human face are identified; these are the same points that the classification algorithm detects for emotion recognition (Fig. 3). The characteristic points are then used to create the blend shapes, as already mentioned in Sect. 1.3, which define the sections of the face that will be deformed and those that will remain static for each emotion. Because this process depends on the recognition of the characteristic landmark points and not directly on the 3D mesh, the algorithm can be used for any model for which the characteristic points of the face can be detected. As shown in Fig. 3, landmark points are the characteristic points of the face: their number is fixed and equal for every individual, and they highlight the features of a face, corresponding to the facial contour, eyebrows, mouth contours, eyes and nose. A change in expression causes these characteristic points to shift, and ML techniques use them to abstract information about each emotion more easily. Fig. 3 shows an example image with the landmark points identified for each of the main emotions used to train the ML.

The structure composed of the landmark points is then assigned an area of influence, i.e. the set of mesh points that will be affected by the imposed displacement. The topological deformation of the mesh provides the ability to simulate human emotions.

In the field of PD, the animation of a system is obtained using Eq. 1, where A and B are 3D vectors that describe the initial and destination coordinates of the displacement of each vertex of the base blend shapes. This allows the vertices to be moved in space to assigned characteristic positions that change for each emotion and depend strictly on the parameter t. The weight painting described previously applies the deformation to the remaining points of the mesh, making the model resemble real human facial behavior. The move statements, that is, the vectors and the points subject to displacement, are defined by scripting. This definition process therefore requires a combination of scripting and visual scripting, which together constitute the instructions and rules for simulating emotions. Modifying the displacement vectors modifies the animations.

Equation 1 was implemented through visual scripting and therefore does not appear in explicit form here.
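Since Eq. 1 is not reproduced in this text, the following is only a plausible form of it, written under the assumption that the displacement is a linear interpolation between the initial position A and the destination position B, driven by a parameter t between 0 and 1. The symbol P(t) for the interpolated vertex position is introduced here for illustration; the authors' visual-scripting definition may differ.

```latex
P(t) = A + t\,(B - A) = (1 - t)\,A + t\,B, \qquad t \in [0, 1]
```

Under this assumption, t = 0 leaves the vertex at its neutral position A, t = 1 places it at the target position B for the given emotion, and intermediate values of t give intermediate intensities of the expression.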

3.2 Classify, emotion recognition

The second step analyzes the output of the previous phase by applying Emotion Recognition with machine learning software. Before analyzing the data obtained in the first phase, an internal system was created within the chosen software using the appropriate programming language. The images obtained were then tested with it, in order to validate and verify the performance of the system for the automatic recognition of emotions.

The library used is “FER” (Facial Expression Recognition) for Python, a high-level programming language widely used for ML, in which a system learns what to do just by looking at examples; it allows algorithms to analyze data or images automatically and to manipulate the images themselves. The library implements functions such as detect_emotions() which, combined with the OpenCV library, make it possible to work on images by identifying the faces and the expressions they show.

The method used in Python for this paper is the Classify method, which assigns the data in the dataset to different categories. In particular, it is used to classify images in the context of facial recognition and of the emotion expressed by the face. The classification process assigns to each datum (in this case an image) an emotion class by associating a label with it.

The process consists of the following steps:

- acquisition of the image representing the face modelled in phase 1;
- extraction of basic features for facial recognition and for the landmark points;
- final classification of the expression in the face with the label corresponding to the emotion expressed.

Specifically, the algorithm tested in this work starts from the images provided by step 1 and uses the emotion detection function of the Python FER library to associate a machine-provided emotion label with each face. To broaden the recognition, seven basic emotions were chosen and implemented (happiness, fear, disgust, anger, surprise, sadness, neutrality), and the machine associates exactly one of them with each image.
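The classification step can be sketched in Python as shown here. This is a hedged illustration, not the authors' script: the helper names `pick_label` and `classify_image` are hypothetical, the default FER detector settings are assumed, and the import path of FER may differ between library versions. The FER and OpenCV imports are kept inside the function so that the label-selection helper can be used on its own.

```python
from typing import Dict, Tuple


def pick_label(scores: Dict[str, float]) -> Tuple[str, float]:
    """Return the emotion with the highest score, together with that score."""
    if not scores:
        raise ValueError("no emotion scores to choose from")
    label = max(scores, key=scores.get)
    return label, scores[label]


def classify_image(image_path: str) -> Tuple[str, float]:
    """Label the first face found in an image (hypothetical helper)."""
    import cv2           # OpenCV, used here only to read the image
    from fer import FER  # assumption: import path as in common FER releases

    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(image_path)
    # detect_emotions returns one dict per detected face, each holding a
    # bounding box and a dictionary of scores for the seven emotions.
    faces = FER().detect_emotions(image)
    if not faces:
        raise ValueError("no face detected in " + image_path)
    return pick_label(faces[0]["emotions"])


# Example with made-up scores (not results from the paper).
example = pick_label({"happy": 0.7, "sad": 0.2, "neutral": 0.1})
```

Choosing the single highest score is what makes the association between image and label univocal.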

3.3 Additive manufacturing

The two steps described in the previous paragraphs yield, as a final result, a dynamic 3D model capable of assuming different expressions through the variation of several sliders, together with a label for each emotion. This model is evidence of the effectiveness of parametric modeling; however, it does not yet have the characteristics, or a precise purpose, required for use in manufacturing. To guarantee that the created object can be prototyped, the mesh must be checked: it must be closed, and its normals must point outward.
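The two mesh checks mentioned above can be illustrated with a short, library-free Python sketch. It assumes a triangular mesh given as a vertex list and a list of index triples; the function names are hypothetical, and in practice the modelling software's own mesh diagnostics would normally be used. A mesh passes the first check when every edge is shared by exactly two faces that traverse it in opposite directions; a positive signed volume then indicates outward-pointing normals for counter-clockwise faces.

```python
from collections import Counter
from typing import Sequence, Tuple

Face = Tuple[int, int, int]
Point = Tuple[float, float, float]


def is_closed_and_consistent(faces: Sequence[Face]) -> bool:
    """True if each edge is used by exactly two faces, in opposite directions."""
    directed = Counter()
    for a, b, c in faces:
        for edge in ((a, b), (b, c), (c, a)):
            directed[edge] += 1
    # Every directed edge must occur once and be matched by its reverse.
    return bool(directed) and all(
        count == 1 and directed.get((v, u), 0) == 1
        for (u, v), count in directed.items()
    )


def signed_volume(vertices: Sequence[Point], faces: Sequence[Face]) -> float:
    """Sum of signed tetrahedron volumes; positive when normals point outward."""
    total = 0.0
    for a, b, c in faces:
        (ax, ay, az), (bx, by, bz), (cx, cy, cz) = vertices[a], vertices[b], vertices[c]
        total += (ax * (by * cz - bz * cy)
                  + ay * (bz * cx - bx * cz)
                  + az * (bx * cy - by * cx))
    return total / 6.0


# Example: a unit tetrahedron with outward-facing triangles.
tetra_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
tetra_faces = [(0, 2, 1), (0, 1, 3), (1, 2, 3), (0, 3, 2)]
printable = (is_closed_and_consistent(tetra_faces)
             and signed_volume(tetra_vertices, tetra_faces) > 0)
```

Removing any face from the tetrahedron leaves boundary edges and the first check fails, while reversing all faces keeps the mesh closed but makes the signed volume negative.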

As in any custom PD process, the ultimate purpose of the created object depends on the consumer’s imagination. In this specific case, it was decided to create a ring consisting of faces with different emotions. This choice was determined both by the experience of the authors, who have dealt with the subject several times, and by the availability of stereolithographic printers. These are characterized by limited print volumes but are perfectly suited to creating prototypes with many small-scale details. A Formlabs Form 2 printer was used, employing a wax-based resin designed specifically for jewelry, which can be used in classic casting processes. The resin was created by the Formlabs research and development center to provide a product with the same chemical and physical characteristics as the common wax used in traditional jewelry.

The final purpose of the paper is to obtain an integrated system that guarantees not only the virtual design of the object but also its physicalization. In fact, once designed, the ring can be prototyped and subsequently cast and manufactured industrially, with a choice of metal and surface finishes. This process highlights the possibility of creating infinite customized and unique models that meet the needs of different consumers.

4 Results

The workflow described in the previous paragraphs (schematized in Fig. 2) was finally implemented by the authors using the parametric modeling software Grasshopper, a plug-in for Rhino. This software is based on the creation of visual scripts and allows custom components to be programmed in Python. Figure 4 shows the visual script created, which animates the three-dimensional model of the face. The script takes as input a model of a face with a well-structured mesh in a neutral expression (1). Landmark points (2) are then identified on that input model and divided into groups:

- eyebrows;
- eyes;
- mouth;
- forehead.

These points are fundamental to the script, allowing it to simulate the movement of the facial muscles. They are superimposed on the mesh without belonging to it, and are identified by the ML algorithm. The implemented technique creates areas of influence around key points of the face (landmark points), which act as control points. Sections (3), (4), (5) and (6) of the script shown in Fig. 4 assign areas of influence to the different groups of points. Each of these points is assigned an initial and a final position vector, as reported in Eq. 1, and the expression of the face is obtained by varying the parameter t (Eq. 1) between the imposed extremes. For each group of points, the parameter t is controlled by a different slider, shown in section (7). The points identified by the ML are shown in the upper part of Fig. 5. In addition to the groups assigned to the main movements, the script contains other functions: group (8) harmonizes the various displacements, preventing concentrated deformations in the mesh. Finally, group (9) presents the outputs of the script, generating two kinds of output: (1) deformed meshes and (2) semi-realistic images needed to identify the represented emotion.
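The idea of areas of influence driven by per-group sliders can be illustrated with the following Python sketch. It is not a transcription of the Grasshopper definition: the smooth falloff function, the single shared radius, and the names `falloff` and `deform` are all assumptions introduced for illustration. Each landmark carries a group name, a start position and an end position, and each group has a slider value t.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Landmark = Tuple[str, Vec3, Vec3]  # (group, start position, end position)


def falloff(distance: float, radius: float) -> float:
    """Hypothetical smooth weight: 1 at the landmark, 0 at the radius and beyond."""
    if distance >= radius:
        return 0.0
    x = distance / radius
    return (1.0 - x * x) ** 2


def deform(vertices: Sequence[Vec3], landmarks: Sequence[Landmark],
           sliders: Dict[str, float], radius: float) -> List[Vec3]:
    """Move each vertex by the weighted, slider-scaled landmark displacements."""
    moved = []
    for v in vertices:
        dx = dy = dz = 0.0
        for group, start, end in landmarks:
            # Weight = spatial influence of the landmark times the group slider t.
            w = falloff(math.dist(v, start), radius) * sliders.get(group, 0.0)
            dx += w * (end[0] - start[0])
            dy += w * (end[1] - start[1])
            dz += w * (end[2] - start[2])
        moved.append((v[0] + dx, v[1] + dy, v[2] + dz))
    return moved


# Example: one "mouth" landmark pulled upward; a distant vertex is unaffected.
mesh_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
mouth = [("mouth", (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))]
result = deform(mesh_points, mouth, {"mouth": 1.0}, radius=2.0)
```

Because the weight decays smoothly to zero at the edge of the area of influence, neighbouring vertices follow the landmark only partially, which avoids concentrated deformations around a single point.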

In order to assign labels to the individual facial expressions created by the user, the ML emotion recognition system was implemented in the Python programming language. The algorithm uses two libraries, FER and Dlib, which identify, respectively, the emotions and the landmark points employed to create the movements of the facial muscles.

The combination of the different displacement groups, obtained by varying the numerical sliders shown in Fig. 4 (7), results in a series of faces characterized by different facial expressions. As mentioned, these outputs are produced both as a 3D model (mesh) and as images. This duality is necessary to meet the purpose of the work: employing face models for the production of custom manufacturable objects, using ML techniques to validate the emotion represented. In fact, the employed ML algorithm (FER) is trained to extract features and classify emotions on images consisting of a fixed number of pixels. In order to query the algorithm multiple times and obtain results with the best possible accuracy, all images are generated with the camera in a fixed position, varying only the input sliders. Each saved image corresponds to a static 3D file representing the same expression. The resulting models have a one-to-one correspondence with the labels assigned by the ML and are used as input for the creation of custom objects. Figure 5 shows the images obtained from the visual script (Fig. 4), each with a size of \(500\times 500\) px. The upper part of the figure shows the model with a neutral expression, on which the landmark points are identified by ML feature extraction and divided into four groups, as clarified in the legend. These are the points used for face deformation; each is assigned an area of influence and a displacement vector.

By varying the input sliders (Fig. 4 (7)), the user combines the displacements of the characteristic points and their areas of influence, deforming the model and obtaining the various expressions. Once the desired configuration is achieved, the appropriate “bake” function makes the parametric model static, and the i-th configuration is fixed and saved. As mentioned earlier, an .STL file and a \(500\times 500\) px image are obtained as output. The lower part of Fig. 5 shows the renderings made for the selected configurations and used to query the ML. For each input, the algorithm identifies the location of the feature points and returns a recognition rate for each of the six main emotions. Below each render in Fig. 5, the recognition rate for each emotion is listed, normalized to the interval [0, 1]. The reported data show high recognition rates for some emotions (happy, angry and sad) and lower rates for the others (disgust, fear and surprise). These results are supported by previous studies conducted by the authors [30]. Even when the recognition rate is lower, the correct label is still assigned, because the rate of the intended emotion remains higher than those assigned to the other emotions. The Python algorithm assigns as the label the emotion with the highest rate.

For each of the labeled images, a 3D model is also obtained, configured as a closed, manifold solid (only two faces join at each edge) with aligned normal vectors. These features are essential for physicalization with additive technologies; if they are not met, errors occur in the prototypes. The resulting models are used to design customized objects; in the case study implemented for this experimentation, a ring was made on which two faces are embedded. The object customization process is carried out in Rhino using Grasshopper through the implementation of a DAG: visual scripts generate, in real time, three-dimensional models that meet the conditions specified above. For customized objects too, the DAG produces a dual output consisting of an .STL file for 3D printing and a photorealistic representation. A possible configuration of a custom object is shown in Fig. 6, where a ring reproducing the sad and happy expressions has been generated. The parametric system allows the user to vary the configuration of the object and observe the possible result in real time and, if satisfied, to proceed with prototyping the model on a stereolithographic wax printer and then create the jewel. Figure 7 shows the steps followed to go from the virtual 3D model to the castable resin prototype using a Form 2 stereolithographic printer: (a) the model is sent to the “PreForm” slicing software, where the supports are generated; (b) the printed model is removed from the print base and undergoes post-processing washing; (c) the model is freed from the supports and is ready for casting. For the case study, a ring of EU size 16.0 was prototyped, which corresponds to an internal diameter of 19 mm.
As also illustrated by the authors [10], additive technologies introduce inaccuracies in prototypes, attributable specifically to two stages of approximation: virtual (remeshing with triangular polygons) and physical (layer printing). As demonstrated in [10], it is possible to act on the approximation stage with triangular polygons by applying face subdivision algorithms that reduce the geometric deviations between the nominal model and the prototype, where geometric deviation is defined as the distance between a point belonging to the nominal model and its projection on the model approximated with triangular polygons. Such an algorithm was also applied in the present case, leading to visibly better results in the final prototype and reducing the average geometric deviation from the order of magnitude of \(10^{-2}\) mm to \(10^{-3}\) mm.
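The effect of subdivision on geometric deviation can be illustrated with a simplified two-dimensional analogue, in which a circle is approximated by an inscribed regular polygon. This is not the algorithm of [10]; it is only a sketch of the underlying principle, and the function names are hypothetical. The largest distance between the circle and the polygon is \(r\,(1-\cos(\pi/n))\) for n segments, so each doubling of the segment count reduces the deviation by a factor of almost four.

```python
import math


def max_chord_deviation(radius: float, segments: int) -> float:
    """Largest gap between a circle and its inscribed regular polygon."""
    if segments < 3:
        raise ValueError("a polygon needs at least three sides")
    return radius * (1.0 - math.cos(math.pi / segments))


def subdivide_until(radius: float, tolerance: float, segments: int = 8) -> int:
    """Double the segment count until the deviation is within the tolerance."""
    if tolerance <= 0:
        raise ValueError("tolerance must be positive")
    while max_chord_deviation(radius, segments) > tolerance:
        segments *= 2
    return segments


# Example: internal radius of the case-study ring (19 mm diameter), in mm.
ring_radius = 9.5
coarse = subdivide_until(ring_radius, 1e-2)
fine = subdivide_until(ring_radius, 1e-3)
```

In this analogue, moving from a tolerance of \(10^{-2}\) mm to \(10^{-3}\) mm requires only one further doubling of the number of segments.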

5 Conclusions

The principal aim of this work is to obtain, from the virtual environment, a real user-designed object created through interaction with human emotions. The main result was the creation of a workflow capable of importing a face model and automating the animation of facial expressions, validating the represented emotions through machine learning. This process is used in the field of parametric design and user customization of products. The benefits highlighted by the workflow relate to the ability to produce personalized objects optimized for prototyping through additive manufacturing. In fact, the DAG designed for the case study allows users to customize virtual objects in real time, obtaining two outputs: images and prototypal 3D models. The images are used to query the ML and assign a label to the 3D model, which is then used to make custom objects ready for additive technology. The preliminary results demonstrate that the workflow realizes and labels some emotions (happiness, anger, and sadness) better than others (disgust, fear, and surprise), which show recognition levels hovering around 50 percent. This may be attributed to the difficulty the neural network has in recognizing negative emotions simulated on a neutral virtual face. Future developments include using a dataset of photorealistic avatars to train the ML in an attempt to improve the recognition rates of negative emotions.

References

Abdechiri, M., Faez, K., Amindavar, H., Bilotta, E.: The chaotic dynamics of high-dimensional systems. Nonlinear Dyn. 87(4), 2597–2610 (2017)

Adamo, A., Bertacchini, P.A., Bilotta, E., Pantano, P., Tavernise, A.: Connecting art and science for education: learning through an advanced virtual theater with “talking heads”. Leonardo 43(5), 442–448 (2010)

Anil, J., Suresh, L. P.: Literature survey on face and face expression recognition. In: 2016 International Conference on Circuit, Power and Computing Technologies (ICCPCT), pp. 1–6. IEEE (2016)

Attaran, M.: The rise of 3-d printing: the advantages of additive manufacturing over traditional manufacturing. Bus. Horiz. 60(5), 677–688 (2017)

Bartlett, M.S., Hager, J.C., Ekman, P., Sejnowski, T.J.: Measuring facial expressions by computer image analysis. Psychophysiology 36(2), 253–263 (1999)

Bartlett, M.S., Viola, P.A., Sejnowski, T.J., Golomb, B.A., Larsen, J., Hager, J.C., Ekman, P.: Classifying facial action. In: Advances in Neural Information Processing Systems, pp. 823–829 (1996)

Bertacchini, F., Bilotta, E., Caldarola, F., Pantano, P.: The role of computer simulations in learning analytic mechanics towards chaos theory: a course experimentation. Int. J. Math. Educ. Sci. Technol. 50(1), 100–120 (2019)

Bertacchini, F., Bilotta, E., Caldarola, F., Pantano, P., Bustamante, L.R.: Emergence of linguistic-like structures in one-dimensional cellular automata. In: AIP Conference Proceedings, vol. 1776, p. 090044. AIP Publishing LLC (2016)

Bertacchini, F., Bilotta, E., Carini, M., Gabriele, L., Pantano, P., Tavernise, A.: Learning in the smart city: a virtual and augmented museum devoted to chaos theory. In: International Conference on Web-Based Learning, pp. 261–270. Springer, Berlin (2012)

Bertacchini, F., Bilotta, E., Carnì, D. L., Demarco, F., Pantano, P., Scuro, C., Lamonaca, F.: Preliminary study of an innovative method to increase the accuracy in direct 3D-printing of nurbs objects. In: 2021 IEEE International Workshop on Metrology for Industry 4.0 & IoT (MetroInd4.0&IoT), pp. 94–98. IEEE (2021)

Bertacchini, F., Bilotta, E., Demarco, F., Pantano, P., Scuro, C.: Multi-objective optimization and rapid prototyping for jewelry industry: methodologies and case studies. Int. J. Adv. Manuf. Technol. 112(9), 2943–2959 (2021)

Bertacchini, F., Bilotta, E., Pantano, P.: Shopping with a robotic companion. Comput. Hum. Behav. 77, 382–395 (2017)

Bertacchini, F., Bilotta, E., Pantano, P.S.: On the temporal spreading of the SARS-CoV-2. PLoS ONE 15(10), e0240777 (2020)

Bertacchini, F., Scuro, C., Pantano, P., Bilotta, E.: A project based learning approach for improving students’ computational thinking skills. Front. Robot. AI 9 (2022)

Bertacchini, F., Tavernise, A.: Knowledge sharing for cultural heritage 2.0: prosumers in a digital agora. Int. J. Virtual Commun. Soc. Netw. (IJVCSN) 6(2), 24–36 (2014)

Bilotta, E., Di Blasi, G., Stranges, F., Pantano, P.: A gallery of Chua attractors Part VI. Int. J. Bifurc. Chaos 17(06), 1801–1910 (2007)

Bilotta, E., Lafusa, A., Pantano, P.: Life-like self-reproducers. Complexity 9(1), 38–55 (2003)

Bilotta, E., Pantano, P.: Structural and functional growth in self-reproducing cellular automata. Complexity 11(6), 12–29 (2006)

Bilotta, E., Pantano, P., Cupellini, E., Rizzuti, C.: Evolutionary methods for melodic sequences generation from non-linear dynamic systems. In: Workshops on Applications of Evolutionary Computation, pp. 585–592. Springer, Berlin (2007)

Bilotta, E., Stranges, F., Pantano, P.: A gallery of Chua attractors: Part III. Int. J. Bifurc. Chaos 17(03), 657–734 (2007)

Blanz, V., Vetter, T.: Face recognition based on fitting a 3D morphable model. IEEE Trans. Pattern Anal. Mach. Intell. 25(9), 1063–1074 (2003)

Burry, M.: Scripting Cultures: Architectural Design and Programming. Wiley, New York (2011)

Buswell, R.A., De Silva, W.L., Jones, S.Z., Dirrenberger, J.: 3d printing using concrete extrusion: a roadmap for research. Cem. Concr. Res. 112, 37–49 (2018)

Calignano, F., Manfredi, D., Ambrosio, E.P., Biamino, S., Lombardi, M., Atzeni, E., Salmi, A., Minetola, P., Iuliano, L., Fino, P.: Overview on additive manufacturing technologies. Proc. IEEE 105(4), 593–612 (2017)

Carroll, J.M., Russell, J.A.: Facial expressions in Hollywood’s portrayal of emotion. J. Pers. Soc. Psychol. 72(1), 164 (1997)

Chan, C.S., Tsai, F.S.: Computer animation of facial emotions. In: 2010 International Conference on Cyberworlds, pp. 425–429. IEEE (2010)

Chopra, S., Hadsell, R., LeCun, Y.: Learning a similarity metric discriminatively, with application to face verification. In: 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), vol. 1, pp. 539–546. IEEE (2005)

Corneanu, C.A., Simón, M.O., Cohn, J.F., Guerrero, S.E.: Survey on RGB, 3D, thermal, and multimodal approaches for facial expression recognition: history, trends, and affect-related applications. IEEE Trans. Pattern Anal. Mach. Intell. 38(8), 1548–1568 (2016)

Culot, G., Orzes, G., Sartor, M., Nassimbeni, G.: The future of manufacturing: a Delphi-based scenario analysis on industry 4.0. Technol. Forecast. Soc. Change 157, 120092 (2020)

De Pietro, M., Bertacchini, F., Pantano, P., Bilotta, E.: Modelling on human intelligence a machine learning system. In: International Conference on Numerical Computations: Theory and Algorithms, pp. 410–424. Springer, Berlin (2019)

Demarco, F., Bertacchini, F., Scuro, C., Bilotta, E., Pantano, P.: Algorithms for jewelry industry 4.0. In: International Conference on Numerical Computations: Theory and Algorithms, pp. 425–436. Springer, Berlin (2019)

Demarco, F., Bertacchini, F., Scuro, C., Bilotta, E., Pantano, P.: The development and application of an optimization tool in industrial design. Int. J. Interact. Design Manuf. (IJIDeM) 14(3), 955–970 (2020)

Dhall, A., Goecke, R., Joshi, J., Hoey, J., Gedeon, T.: Emotiw 2016: video and group-level emotion recognition challenges. In: Proceedings of the 18th ACM International Conference on Multimodal Interaction, pp. 427–432 (2016)

Eibl-Eibesfeldt, I.: Human Ethology. Routledge, London (2017)

Ekman, P., Friesen, W.V.: Facial Action Coding Systems. Consulting Psychologists Press, Palo Alto (1978)

Fabri, M., Moore, D.J., Hobbs, D.J.: The emotional avatar: Non-verbal communication between inhabitants of collaborative virtual environments. In: International Gesture Workshop, pp. 269–273. Springer, Berlin (1999)

Gabriele, L., Bertacchini, F., Tavernise, A., Vaca-Cárdenas, L., Pantano, P., Bilotta, E.: Lesson planning by computational thinking skills in Italian pre-service teachers. Inform. Educ. 18(1), 69–104 (2019)

Gabriele, L., Marocco, D., Bertacchini, F., Pantano, P., Bilotta, E.: An educational robotics lab to investigate cognitive strategies and to foster learning in an arts and humanities course degree. Int. J. Online Biomed. Eng. (iJOE) 13(04), 7–19 (2017)

Gibson, I., Rosen, D.W., Stucker, B., et al.: Additive Manufacturing Technologies, vol. 17. Springer, Berlin (2014)

Hopkinson, N., Hague, R., Dickens, P.: Rapid Manufacturing: An Industrial Revolution for the Digital Age. Wiley, New York (2006)

Jabi, W.: Parametric Design for Architecture. Laurence King Publishing, London (2013)

Kanade, T., Cohn, J.F., Tian, Y.: Comprehensive database for facial expression analysis. In: Proceedings Fourth IEEE International Conference on Automatic Face and Gesture Recognition (Cat. No. PR00580), pp. 46–53. IEEE (2000)

Kim, M.-S., Hong, K.-S.: An avatar expression method using biological signals of face-images. In: 2016 International Conference on Information and Communication Technology Convergence (ICTC), pp. 1101–1103. IEEE (2016)

Kobayashi, H., Hara, F.: Facial interaction between animated 3d face robot and human beings. In: 1997 IEEE International Conference on Systems, Man, and Cybernetics. Computational Cybernetics and Simulation, vol. 4, pp. 3732–3737. IEEE (1997)

Koch, R.M., Roth, S.M., Gross, M.H., Zimmermann, A.P., Sailer, H.F.: A framework for facial surgery simulation. In: Proceedings of the 18th Spring Conference on Computer Graphics, pp. 33–42 (2002)

Kulkarni, S.S., Reddy, N.P., Hariharan, S.: Facial expression (mood) recognition from facial images using committee neural networks. Biomed. Eng. Online 8(1), 1–12 (2009)

Lee, Y., Terzopoulos, D., Waters, K.: Realistic modeling for facial animation. In: Proceedings of the 22nd Annual Conference on Computer Graphics and Interactive Techniques, pp. 55–62 (1995)

Levy, G.N., Schindel, R., Kruth, J.-P.: Rapid manufacturing and rapid tooling with layer manufacturing (LM) technologies, state of the art and future perspectives. CIRP Ann. 52(2), 589–609 (2003)

Li, C., Soares, A.: Automatic facial expression recognition using 3D faces. Int. J. Eng. Res. Innov. 3, 30–34 (2011)

Lombardo, M., Barresi, R., Bilotta, E., Gargano, F., Pantano, P., Sammartino, M.: Demyelination patterns in a mathematical model of multiple sclerosis. J. Math. Biol. 75(2), 373–417 (2017)

Mane, S., Shah, G.: Facial recognition, expression recognition, and gender identification. In: Data Management, Analytics and Innovation, pp. 275–290. Springer, Berlin (2019)

Matsugu, M., Mori, K., Mitari, Y., Kaneda, Y.: Subject independent facial expression recognition with robust face detection using a convolutional neural network. Neural Netw. 16(5–6), 555–559 (2003)

Molano, J.S.V., Díaz, G.M., Sarmiento, W.J.: Parametric facial animation for affective interaction workflow for avatar retargeting. Electronic Notes Theor. Comput. Sci. 343, 73–88 (2019)

Monizza, G.P., Bendetti, C., Matt, D.T.: Parametric and generative design techniques in mass-production environments as effective enablers of industry 4.0 approaches in the building industry. Autom. Constr. 92, 270–285 (2018)

Onofrio, D., Tubaro, S.: A model based energy minimization method for 3D face reconstruction. In: 2005 IEEE International Conference on Multimedia and Expo, pp. 1274–1277. IEEE (2005)

Ortiz, A., Oyarzun, D., Aizpurua, I., Posada, J.: Three-dimensional whole body of virtual character animation for its behavior in a virtual environment using H-Anim and inverse kinematics. In: Proceedings Computer Graphics International, pp. 307–310. IEEE (2004)

Oxman, R.: Thinking difference: Theories and models of parametric design thinking. Des. Stud. 52, 4–39 (2017)

Pranatio, G., Kosala, R.: A comparative study of skeletal and keyframe animations in a multiplayer online game. In: 2010 Second International Conference on Advances in Computing, Control, and Telecommunication Technologies, pp. 143–145. IEEE (2010)

Rydfalk, M.: CANDIDE: A Parameterised Face. Linköping University, Linköping (1987)

Samal, A., Iyengar, P.A.: Automatic recognition and analysis of human faces and facial expressions: a survey. Pattern Recogn. 25(1), 65–77 (1992)

Schachter, S., Singer, J.: Cognitive, social, and physiological determinants of emotional state. Psychol. Rev. 69(5), 379 (1962)

Schubert, C., Van Langeveld, M.C., Donoso, L.A.: Innovations in 3d printing: a 3D overview from optics to organs. Br. J. Ophthalmol. 98(2), 159–161 (2014)

Sebe, N., Lew, M.S., Sun, Y., Cohen, I., Gevers, T., Huang, T.S.: Authentic facial expression analysis. Image Vis. Comput. 25(12), 1856–1863 (2007)

Singh, G., Pandey, P.M.: Rapid manufacturing of copper-graphene composites using a novel rapid tooling technique. Rapid Prototyping J. (2020)

Song, J., Im, J., Lee, D.: A study on facial blendshape rig cloning method based on deformation transfer algorithm. J. Korea Multimedia Soc. 24(9), 1279–1284 (2021)

Strauss, H., Knaack, U.: Additive manufacturing for future facades: the potential of 3D printed parts for the building envelope. J. Facade Des. Eng. 3(3–4), 225–235 (2015)

Thobie, S.-A.: An advanced interpolation for synthetical animation. In: Proceedings of 1st International Conference on Image Processing, vol. 3, pp. 562–566. IEEE (1994)

Togawa, H., Okuda, M.: Position-based keyframe selection for human motion animation. In: 11th International Conference on Parallel and Distributed Systems (ICPADS’05), vol. 2, pp. 182–185. IEEE (2005)

Viola, P., Jones, M.: Rapid object detection using a boosted cascade of simple features. In: Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, vol. 1, pp. I–I. IEEE (2001)

Wibowo, H., Firdausi, F., Suharso, W., Kusuma, W.A., Harmanto, D.: Facial expression recognition of 3D image using facial action coding system (FACS). Telkomnika 17(2), 628–636 (2019)

Woodbury, R., et al.: Elements of parametric design (2010)

Wortmann, J.: A classification scheme for master production scheduling. In: Efficiency of Manufacturing Systems, pp. 101–109. Springer (1983)

Wu, Z., Zhang, S., Cai, L., Meng, H.M.: Real-time synthesis of Chinese visual speech and facial expressions using mpeg-4 fap features in a three-dimensional avatar. In: Ninth International Conference on Spoken Language Processing (2006)

Zhang, G., Luo, T., Pedrycz, W., El-Meligy, M.A., Sharaf, M.A.F., Li, Z.: Outlier processing in multimodal emotion recognition. IEEE Access 8, 55688–55701 (2020)

Zhang, Y., Prakash, E.C., Sung, E.: Face alive. J. Vis. Lang. Comput. 15(2), 125–160 (2004)

Zhu, X., Ramanan, D.: Face detection, pose estimation, and landmark localization in the wild. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition, pp. 2879–2886. IEEE (2012)

Acknowledgements

This work was supported by “National Group of Mathematical Physics” (GNFM-INdAM).

Funding

Open access funding provided by Università della Calabria within the CRUI-CARE Agreement.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bertacchini, F., Bilotta, E., De Pietro, M. et al. Modeling and recognition of emotions in manufacturing. Int J Interact Des Manuf 16, 1357–1370 (2022). https://doi.org/10.1007/s12008-022-01028-3

DOI: https://doi.org/10.1007/s12008-022-01028-3