Abstract

Artificial intelligence (AI) is deemed to increase workers’ productivity by enhancing their creative abilities and acting as a general-purpose tool for innovation. While much is known about AI’s ability to create value through innovation, less is known about how AI’s limitations drive innovative work behaviour (IWB). With AI’s limits in perspective, innovative work behaviour might serve as workarounds to compensate for AI limitations. Therefore, the guiding research question is: How will AI limitations, rather than its apparent transformational strengths, drive workers’ innovative work behaviour in a workplace? A search protocol was employed to identify 65 articles based on relevant keywords and article selection criteria using the Scopus database. The thematic analysis suggests several themes: (i) Robots make mistakes, and such mistakes stimulate workers’ IWB, (ii) AI triggers ‘fear’ in workers, and this ‘fear’ stimulates workers’ IWB, (iii) Workers are reskilled and upskilled to compensate for AI limitations, (iv) AI interface stimulates worker engagement, (v) Algorithmic bias requires IWB, and (vi) AI works as a general-purpose tool for IWB. In contrast to prior reviews, which generally focus on the apparent transformational strengths of AI in the workplace, this review primarily identifies AI limitations before suggesting that the limitations could also drive innovative work behaviour. Propositions are included after each theme to encourage future research.

1 Introduction

Artificial Intelligence (AI) is increasingly taking over physical motion and performance activities, data processing and analysis, repetitive physical equipment management, and individual effective performance in workplaces (Chuang 2020; Malik et al. 2021). One might, therefore, argue that workers engage in more innovative work behaviour (IWB) (Henkel et al. 2020; Jaiswal et al. 2021). But then, how will AI in a workplace make workers innovative? The answer seems to be more nuanced.

While the existing literature (Chuang 2020; Klotz 2018; Malik et al. 2021; Wilson and Daugherty 2019) suggests that AI enhances workers’ abilities (intuition, empathy, and imagination) by automating mundane, repetitive, and boring activities, there is a debate in academic research on whether this enhancement in workers’ abilities results from AI augmenting human intelligence and skills (Farrow 2019; Klotz 2018). Workplace AI that helps doctors diagnose disease and helps bankers detect fraud improves workers’ detection abilities rather than innovative work behaviour. However, the use of AI in this context suggests that AI cannot act independently of human workers (Klotz 2018; Wilson and Daugherty 2019).

It is reasonable to infer that AI’s limitations require human intelligence and abilities, for instance, to diagnose disease and detect fraud, because current AI advancements cannot do so without humans (Klotz 2018; Wilson and Daugherty 2019). Workers, therefore, compensate for algorithmic flaws rather than relying on AI to enhance their creativity. While AI can influence workers’ behaviour (Malik et al. 2021; Yam et al. 2020), it is unclear how it might enable innovative work behaviour.

The research question that guides this review is: how will AI make workers innovative in their work? Following a protocol, the analysis involved reviewing 65 articles found in the Scopus database (Tranfield et al. 2003). Insights in the form of themes are obtained, along with propositions and prospective research areas, to highlight gaps in current knowledge and encourage further AI-enabled IWB research.

There are five sections to this study. The second section offers background for the two concepts. The third section describes the research method. Section four presents the research themes and propositions. The fifth section provides the concluding remarks, including discussion and conclusion, contributions, and study limitations.

2 Background

2.1 Artificial Intelligence (AI) in the workplace

AI refers to computers’ ability to learn from experience and perform human-like complex tasks, such as rational decision-making (Pomerol 1997; Wang 2019). A common trend emerging from AI definitions is that machines can perform complex human-like tasks based on algorithms and data in the workplace and society. AI’s purpose is, therefore, to imitate human cognitive functions like perception, learning, reasoning, and decision-making (Holford 2019; Lopes de Sousa Jabbour et al. 2018).

However, the performance of intelligent systems depends on the data fed into them (Farrow 2019; Thesmar et al. 2019). Intelligent systems are unable to obtain missing parts of data. Therefore, data consistency and quantity are significant issues for AI applications in the workplace. Human intervention to support AI is required, as human intelligence and innovative behaviour are needed to find missing parts of data and categorise appropriate data for AI systems (Shute and Rahimi 2021). Human intervention is also necessary to override or interpret the outputs of these systems (Yam et al. 2020).

However, a core issue for workers with workplace AI is the loss of employment (Braganza et al. 2020; Rampersad 2020). Many workers will lose their jobs to AI applications in the workplace (Balsmeier and Woerter 2019). Consequently, the chances are high that the work performed by workplace AI would no longer need the workers’ involvement (Holford 2019; Wright and Schultz 2018). Workers, therefore, would not feel comfortable if they could not understand how an AI application helps or affects them.

The strategy that appears to help with this dilemma is to let workers see how this advancement activates their innovative behaviour (Fügener et al. 2022; Klotz 2018; Wilson and Daugherty 2019). However, the reality is quite the opposite (Davenport 2019; Gligor et al. 2021; Waterson 2020a, b). In such a technological context, while the inner workings of such systems generally remain unknown (Gligor et al. 2021; Klotz 2018), it is up to workers to upskill and reskill themselves to engage in innovative behaviour to coexist with AI systems (Afsar et al. 2014; Jaiswal et al. 2021; Sousa and Wilks 2018).

2.2 Innovative work behaviour (IWB)

Scholarly research combines idea conception through completion under ‘innovative behaviour’ (Baer 2012; Baer and Frese 2003; Scott and Bruce 1994; Somech and Drach-Zahavy 2013). The generation and implementation of new and original ideas into newly designed goods, services, or ways of working are examples of innovative work behaviour (Baer 2012; Perry-Smith and Mannucci 2017).

Further, innovative behaviour is often associated with creative problem-solving in engineering literature (Anantrasirichai and Bull 2021; Colin et al. 2016). AI technologies intend to enhance and support individuals’ creativity in problem-solving. In this literature, AI is a creative tool and can be a creator in its own right (Anantrasirichai and Bull 2021; Colin et al. 2016). On the other hand, workers can use AI applications to demonstrate ‘creativity in problem-solving’ in the workplace. They could use AI to create something new, like a unique ice cream flavour. However, IWB is generally reactive rather than proactive in management literature, such as devising workarounds (Alter 2014). Idea generation, elaboration, promotion, and implementation are parts of the process of developing workarounds (Alter 2014; Perry-Smith and Mannucci 2017). With AI’s limits in perspective, innovative work behaviour serves as workarounds to compensate for AI limitations.

Moreover, as per engineering literature (Anantrasirichai and Bull 2021; Colin et al. 2016), being creative is more about the creative process and an individual’s intellect than the creative outcome and behaviour. Solving a problem through a dramatic shift in viewpoint by employing technology is vital in the creative process (Colin et al. 2016). As per management literature, innovative behaviour manifests in the creative product (the behaviour) through idea generation, elaboration, championing, and implementation (Alter 2014; Perry-Smith and Mannucci 2017). In this review, IWB is spontaneous activity to compensate for AI flaws or make sense of AI system outputs rather than a dramatic shift in an individual’s perspective.

IWB necessitates workers’ deliberate intervention in the workplace (Perry-Smith and Mannucci 2017). IWB is thus the identification of issues, together with the generation, initiation, and implementation of new and original ideas, as well as the set of behaviours required to develop, initiate, and execute ideas with the intent of enhancing personal and business performance (Jong and Hartog 2010).

The behavioural element is critical, which means the conception through completion of ideas is not enough to demonstrate IWB without workers’ engagement with others in this process (Perry-Smith and Mannucci 2017). A worker collaborates with others to drive new ideas and determine feasibility (Perry-Smith and Mannucci 2017). However, when a worker engages with others as part of IWB, groupthink is likely to kill an idea prematurely (Moorhead and Montanari 1986). In addition, IWB reflects organisational contexts, which means ideation and the involvement of workers with others reflect the context of an organisation (Perry-Smith and Mannucci 2017; Saether 2019).

While workers identify potential problems and initiate behaviours that allow sharing of knowledge and insights (Chatterjee et al. 2021), IWB is expected to produce innovative output (Farrow 2019). It is, therefore, reasonable to suggest that IWB is a worker’s deliberate action of ideation and adopting new ideas, goods, processes, and procedures to their tasks, unit, department, or organisation (Jong and Hartog 2010). In this analysis, IWB examples include supporting the design, implementation, introduction and use of AI applications in the workplace (Desouza et al. 2020), implementing AI-related technologies (Choi et al. 2019), and proposing ways of achieving goals and executing work tasks using AI technologies (Mahroof 2019). However, this is not an exhaustive list; any behaviour in an organisation with an ‘innovative’ element falls under IWB (Jong and Hartog 2010).

3 Research method

The researcher formulated the research question, determined the keywords, and identified, collected, analysed and synthesised the relevant literature (Klein and Potosky 2019; Tranfield et al. 2003). The research began with a review of relevant literature on ‘artificial intelligence’ and ‘innovative work behaviour.’ The review question – how will AI in a workplace make workers innovative in their work? – allowed for a ‘concept-centric’ approach to the review, as mentioned in Method 1 below (Rousseau et al. 2008).

Three stages were followed to find studies on ‘artificial intelligence’ and ‘innovative behaviour’: First, a manual search was performed in Google Scholar using a combination of the two concepts. This search aimed to delve into the potential keywords for the data collection. Second, a search was performed in the electronic and multidisciplinary database of Scopus using a combination of the keywords: ALL( ( “artificial intelligence” OR “augmented intelligence” OR robot* ) AND ( “human innovati*” OR “human creativ*” OR “innovative work behavi?r” ) ).

Previous literature (e.g., Bos-Nehles et al. 2017; Charlwood and Guenole 2021; Farrow 2019) has used these keywords. Truncation and wildcard techniques were also used to optimise the search string. Truncation and wildcards are ‘search string broadening strategies’ that capture various word endings and spellings. For example, in “innovative work behavi?r”, the wildcard symbol “?” in “behavi?r” was used so that the search string would return both the British and American spellings of “innovative work behaviour.” The search string used the Scopus field code ‘ALL()’ rather than ‘TITLE-ABS-KEY()’ to search document contents for the combination of the keywords. It therefore returned documents that included the combination of the keywords anywhere in the body of the documents, as well as in the title, abstract, and keywords.
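The structure of the search string described above can be illustrated with a minimal sketch. The keyword lists and the two field codes come from the text; the helper names `or_group` and `build_query` are hypothetical and only show how the two concepts are combined. This is not the tooling used in the review.

```python
# Keyword groups as reported in the search string (one list per concept).
AI_TERMS = ['"artificial intelligence"', '"augmented intelligence"', "robot*"]
IWB_TERMS = ['"human innovati*"', '"human creativ*"', '"innovative work behavi?r"']


def or_group(terms):
    """Join the alternative terms of one concept with OR (hypothetical helper)."""
    return "( " + " OR ".join(terms) + " )"


def build_query(field_code, *concept_groups):
    """Combine the concept groups with AND inside a Scopus field code (hypothetical helper)."""
    combined = " AND ".join(or_group(group) for group in concept_groups)
    return f"{field_code}( {combined} )"


# ALL() searches the whole document; TITLE-ABS-KEY() would restrict the
# search to title, abstract, and keywords.
full_text_query = build_query("ALL", AI_TERMS, IWB_TERMS)
narrow_query = build_query("TITLE-ABS-KEY", AI_TERMS, IWB_TERMS)

print(full_text_query)
```

Keeping each concept in its own list makes it easy to see that terms within a concept are alternatives (OR), while the two concepts must co-occur (AND).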

The final list of articles was retrieved from the Scopus database. The breadth of publications was prioritised over the depth. The Scopus database provides this, as it covers peer-reviewed journals published by major publishing houses and goes beyond only influential journals (Ballew 2009; Burnham 2006; Sharma et al. 2020). Further, due to the nature of the ‘artificial intelligence’ and ‘innovative behaviour’ concepts, an interdisciplinary field coverage was chosen, a strength of the Scopus database compared to other databases such as the Web of Science. While Google Scholar could have provided such interdisciplinary field coverage, it has significant drawbacks compared to Scopus, including difficulty narrowing down search results, limited sorting options, questionable content quality, and difficulties accurately extracting metadata from PDF files, to name a few.

When the Scopus database was searched, the returned results were limited to publications from the last ten years (Fig. 1), written in English, with journal as the source type and article as the document type, published in ABS-ranked journals, and belonging to the following subject areas: Business, Management, and Accounting; Social Sciences; Psychology; Arts and Humanities; and Decision Sciences.

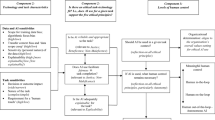

The Scopus field code EXACTSRCTITLE() was used to limit the returned results to ABS-listed journals (Table 1). This field code was supplied with a list of ABS-ranked journals. Then, journal titles were checked manually to verify that the returned records came from ABS-ranked journals. A graphic representation of the process is shown in Fig. 2.
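As an illustration only, the inclusion criteria above can be expressed as a simple filter over exported records. The record fields, the year cut-off `FIRST_YEAR`, and the journal names in `ABS_JOURNALS` are hypothetical placeholders, since the exact year window and the journal list are not given in the text; the subject areas are those listed above.

```python
from dataclasses import dataclass

# Hypothetical placeholders: the exact year window and ABS journal list are not stated here.
FIRST_YEAR = 2012
ABS_JOURNALS = {"example journal of management", "example review of technology"}

# Subject areas as listed in the text.
SUBJECT_AREAS = {
    "Business, Management, and Accounting",
    "Social Sciences",
    "Psychology",
    "Arts and Humanities",
    "Decision Sciences",
}


@dataclass
class Record:
    """One exported search result (field names are assumptions for this sketch)."""
    title: str
    year: int
    language: str
    source_type: str
    document_type: str
    journal: str
    subject_areas: frozenset


def meets_criteria(record):
    """Return True only if the record satisfies every inclusion criterion."""
    return (
        record.year >= FIRST_YEAR
        and record.language == "English"
        and record.source_type == "Journal"
        and record.document_type == "Article"
        and record.journal.strip().lower() in ABS_JOURNALS
        and bool(SUBJECT_AREAS & record.subject_areas)
    )


records = [
    Record("Included example", 2020, "English", "Journal", "Article",
           "Example Journal of Management", frozenset({"Psychology"})),
    Record("Wrong document type", 2020, "English", "Journal", "Review",
           "Example Journal of Management", frozenset({"Psychology"})),
    Record("Journal not on the list", 2019, "English", "Journal", "Article",
           "Unlisted Journal", frozenset({"Social Sciences"})),
]

kept = [record.title for record in records if meets_criteria(record)]
print(kept)
```

Journal titles are normalised before comparison so that differences in capitalisation or surrounding whitespace do not exclude a listed journal; the manual check of journal titles described above would still be needed to catch other mismatches.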

The list was reduced further by reviewing the abstracts of the articles returned in stage 2 above (Di Vaio et al. 2020). Articles had to satisfy thematic requirements to be included in the review. Articles that addressed the relationship between artificial intelligence and innovative work behaviour were retained for analysis. A careful reading of the articles’ abstracts helped create an Excel table highlighting the relevance of each article to the topic (Di Vaio et al. 2020).

Then, in the third stage, to identify articles not returned by the Scopus search string in the second stage, a review of the reference lists of the recent articles, among the articles returned by the Scopus database, was performed (Di Vaio et al. 2020). Following the exclusion and inclusion criteria, 65 studies matching the criteria were combined for this analysis.

The objective of the data analysis stage was to understand the selected list of articles (Tranfield et al. 2003). The data were organised into themes in an Excel spreadsheet (Braun and Clarke 2006).

The analysis adopted ‘Reflexive Thematic Analysis’ (Braun and Clarke 2019, 2022). ‘Reflexive’ in this context highlights the role of the researcher in generating the themes (Braun and Clarke 2022; Terry and Hayfield 2020). This analysis method relies on the researcher’s interpretation and active engagement with the data considering the research question (Braun and Clarke 2022; Byrne 2022; Terry and Hayfield 2020).

Using reflexive thematic analysis, a researcher can offer deeper levels of meaning and significance, analyse hidden interpretations and assumptions, and explore the implications of meaning (Braun and Clarke 2022; Byrne 2022). In the reflexive thematic analysis, themes are hence patterns of meaning anchored by a shared idea or concept (Terry and Hayfield 2020). They are generated, explored, and refined throughout iterative rounds rather than simply emerging from the data (Braun and Clarke 2022; Byrne 2022; Terry and Hayfield 2020). They are meaningful entities from codes that capture the essence of meanings from data rather than clusters of data, classifications, or summaries (Braun and Clarke 2022; Terry and Hayfield 2020).

When reviewing the selected articles, the researcher allowed the six phases of thematic analysis (Braun et al. 2019; Braun and Clarke 2006) to guide theming and anchoring data to themes. First, the researcher immersed himself in the selected articles by re-reading them to familiarise himself with the data. During this phase, by being curious, the researcher made casual notes of interesting statements, such as “tasks robots will dominate” (Wirtz et al. 2018), “robot-related up-skilling” (Lu et al. 2020), and “robots often make mistakes” (Yam et al. 2020). In the second phase, the researcher started anchoring statements from the selected articles to interesting codes such as “robot mistake” and “replacement fear”. In the third phase, constructing themes, themes were “built, molded, and given meaning” (Braun and Clarke 2019, p. 854), and they were the analytic output of an immersion process and deep engagement (Braun and Clarke 2022). The researcher explored latent meanings, connections, and possible interpretations, such as “tasks robots will dominate” (Wirtz et al. 2018) and the uncertainties around robot-human task distribution, “robot-related up-skilling” (Lu et al. 2020) and the perpetual race of upskilling and reskilling, or “robots often make mistakes” (Yam et al. 2020) and the idea of workers finding workarounds. The researcher reviewed the candidate themes in the fourth and fifth phases and revised and defined them. Themes that were substantiated using the reviewed articles and were related to one another and the research question were kept (Braun and Clarke 2022). The researcher also shared the themes in research circles to enhance reflexivity and interpretative depth (Braun and Clarke 2022; Dwivedi et al. 2019). After that, in the sixth phase, the researcher used an iterative approach to report the themes with supporting references from the list of selected articles and relate the analysis to the research question (Braun and Clarke 2022). The researcher also presented the themes at the 37th EGOS Colloquium 2021 and benefited from the participants’ feedback to further enhance reflexivity and interpretative depth (Braun and Clarke 2022; Dwivedi et al. 2019).

The development of a coding book, intercoder reliability, and reproducibility are inconsistent with reflexive thematic analysis (Braun and Clarke 2022). Furthermore, multiple analyses are possible; however, the researcher selected and developed the themes most pertinent to the research question (Terry and Hayfield 2020).

Therefore, the process of thematic analysis was interpretive, intending to identify themes and highlight links (Tranfield et al. 2003). The analysis began with a manual text study. Themes were developed inductively (Braun and Clarke 2006). They were linked iteratively, meaning that the analysis process entailed continual forward and backward movement in terms of constructing and refining themes (Braun and Clarke 2006).

4 Analysis and interpretation

The analysis suggested several themes in terms of how AI might drive IWB. A tabular presentation of the themes and supporting references is shown in Table 2.

These themes are discussed in this section. Propositions are also included after each theme to encourage future research into the role of AI in driving IWB.

4.1 Robots make mistakes, and such mistakes stimulate workers’ IWB

In this context, ‘robots’ refers to any intelligent systems in a workplace that emulate workers’ intelligence and abilities (Yam et al. 2020). To increase efficiency, organisations rely on robots (Yam et al. 2020). For instance, robots generated non-structured agreements in greater numbers than humans (Druckman et al. 2021). Negotiators working with a robotic platform were more pleased with the results and had more favourable views of the mediation experience (Druckman et al. 2021). These algorithmic approaches reduce human knowledge and meaning in the workplace (Holford 2019). However, different cultures can have different perspectives on robots. Americans, for example, were more critical of AI-generated content than the Chinese (Wu et al. 2020).

One might argue that robots in a workplace interact with workers who may not be adequately trained to interact with them. Therefore, robots need to respond to instructions from inexperienced workers and feel natural to workers even in unstructured interactions (Scheutz and Malle 2018). However, autonomy and flexibility expose robots to a plethora of ways in which they can make mistakes, disregard a worker’s expectations and the ethical code of a workplace, or create physical or psychological harm to a worker (Scheutz and Malle 2018).

A robot in an Amazon warehouse mistakenly punctured a can of bear repellent spray, resulting in the hospitalisation of 24 workers (Parker 2018). Microsoft’s robot editor used a photo of the wrong mixed-race member of a band to illustrate a news article about racism (Waterson 2020b). An AI robot camera, which was meant to track the football during a game, instead tracked the assistant referee’s head, resulting in sudden camera movements towards the referee and repeated switching between the referee’s head and the actual football (HT Tech 2020). Although organisations increasingly depend on robots to improve efficiency, robots frequently make mistakes, adversely affecting workers and their organisations (Yam et al. 2020). Also, algorithmic approaches fail to understand the distinct characteristics of human creativity and the tacit knowledge that goes with it (Chatterjee et al. 2021; Holford 2019).

Therefore, the literature (Li et al. 2019; Wilson and Daugherty 2019) suggests that workers shape an organisation’s service innovation performance more than robots do. Although this can come as a surprise, since robots are supposed to make fewer errors, workers’ IWB may benefit from robots’ mistakes (Choi et al. 2019; Yam et al. 2020). Delivery drivers, for example, can drive in real traffic while predicting events for a robotic autopilot (Grahn et al. 2020). Although a robotic autopilot can manage predicted events in this scenario, workers can deal with uncertainty and related adaptive and social behaviours in specific, highly congested traffic conditions and environments (Grahn et al. 2020).

If robots frequently fail to provide the expected service, workers would be pushed to think outside the box to eliminate robot mistakes. In this case, the role of robots in the workplace stimulates workers’ ability to think and innovate to overcome robot limitations (Klotz 2018; Wilson and Daugherty 2019). Manual assembly, for example, would place high demands on workers’ cognitive processing in a robotic-enabled workplace (Van Acker et al. 2021).

If robots make mistakes (or due to the diverse nature of services), they will likely continue to stimulate workers’ IWB. Therefore, robots in the workplace (particularly in knowledge work) must prioritise collaborative approaches in which workers and robots collaborate closely (Fügener et al. 2022; Sowa et al. 2021). The interpretation of this theme is summed up in Proposition 1. Table 3 proposes relevant questions to investigate this proposition further.

Proposition 1

Robots make mistakes, and such mistakes stimulate workers’ IWB.

4.2 AI triggers ‘fear’ in workers, and this ‘fear’ stimulates workers’ IWB

AI technologies in the workplace can reshape tasks and the definition of work across businesses (Braganza et al. 2020). This reshaping eliminates certain jobs or parts of an automated job (Braganza et al. 2020). As a result, algorithmic approaches aim to reduce various forms of human involvement and interpretation in the workplace (Holford 2019). Self-service technology (SST) and the Wizard-of-Oz (WOZ) experiment, for example, demonstrate that the contribution of workers to services that incorporate these technologies has been reduced (Costello and Donnellan 2007).

Moreover, rather than directly influencing worker productivity, AI technologies indirectly influence the development of new, modified, or unmodified worker routines (Giudice et al. 2021). Therefore, it is generally accepted that workplace AI threatens the continuity and security of workers’ jobs (Rampersad 2020). It is also projected that AI applications will take over full-time and permanent jobs while workers will be hired for short-term assignments (Braganza et al. 2020). Therefore, considering the adoption of technological transformation, uncertainty about the employment of workers appears to be an integrated feature of AI systems (Costello and Donnellan 2007). This threat is genuine for jobs requiring repetitive motion, data management and analysis, repeated physical equipment control, and individual evaluative interaction (Chuang 2020; Lloyd and Payne 2022).

This argument, however, does have limitations. Although there is a persistent fear of job loss (Sousa and Wilks 2018), the academic literature (e.g., Jaiswal et al. 2021; Wilson and Daugherty 2019) suggests a drive toward a more symbiotic synthesis of human-machine competencies. This drive takes a more proactive approach to AI adoption in the workplace, encouraging businesses to be cautious in treating their workers (Li et al. 2019). This body of literature also argues that businesses should actively protect workers’ interests and cautiously implement technology that supports rather than replaces them (Li et al. 2019).

What can be suggested is that this line of thinking implies uncertainty about future employment for workers (Li et al. 2019). This uncertainty, however, may encourage workers to actively engage in service innovation (Li et al. 2019). And if an organisation can afford AI solutions, it is suggested that their workers’ IWB be enhanced by training (Klotz 2018; Wilson and Daugherty 2019). While AI systems help workers in innovation (Candi and Beltagui 2019; Verganti et al. 2020), the ‘fear’ factor may trigger IWB in workers. This factor theoretically benefits workers in their quest for a long-term career and assists organisations in surviving through the transition time by safeguarding the interests of their workers (Haefner et al. 2021; Li et al. 2019).

To stay relevant in a workplace where AI is reshaping human employment, workers must enhance their IWB (Klotz 2018; Rampersad 2020; Sousa and Wilks 2018). To coexist with intelligent systems, workers can capitalise on critical thinking, problem-solving, communication and teamwork (Rampersad 2020; Sousa and Wilks 2018). Therefore, workplace AI can stimulate IWB in workers because of improved workplace technology and the uncertainty and insecurity of jobs it brings to workers (Jiang et al. 2020; Nam 2019). ‘Fear’ of being replaced by robots or artificial intelligence, or ‘alienational psychological contracts’, may trigger IWB in workers rather than the actual technology (Braganza et al. 2020; Kim et al. 2017). This discussion may also suggest that developments in AI systems, regardless of industry or context, stimulate IWB in workers if workers perceive a threat from these systems.

However, when discussing the ‘fear’ of being replaced, the human culture’s underlying position on workplace technology should be considered (Shujahat et al. 2019). How workers perceive AI in the workplace through a cultural lens could indicate how much the ‘fear’ factor is perceived (Wu et al. 2020). For example, perceived wider family support might help alleviate the ‘fear’ factor (Tu et al. 2021).

Though tailored approaches to mitigate the ‘fear’ factor of AI technologies and to put workers at the centre of workplace technologies are advised (Kim et al. 2017; Palumbo 2021), artificial intelligence is increasingly being adopted by organisations without careful consideration of the workers who will be working alongside it. Uncertainty regarding the employment of workers continues to be an inherent feature of AI systems being adopted (Costello and Donnellan 2007). Proposition 2 summarises this understanding. Table 3 proposes relevant research questions to investigate this proposition further.

Proposition 2

Workplace AI triggers ‘fear’ in workers, and this ‘fear’ stimulates IWB in workers as a means of staying relevant in the workplace.

4.3 Workers are reskilled and upskilled to compensate for AI shortcomings

By rendering processes more scalable, broadening the scope of operations across boundaries, and enhancing workers’ learning abilities and flexibility, AI technologies enable organisations to transcend the limitations of human-intensive processes (Verganti et al. 2020). As per this interpretation, AI technologies encourage a people-centred and iterative approach (Henkel et al. 2020; Verganti et al. 2020). However, one might argue that AI supports human-based approaches to user-centred solutions by requiring workers to reskill and upskill (Verganti et al. 2020; Wilson and Daugherty 2019). Therefore, while AI technologies can potentially reshape working arrangements, they can also facilitate workers’ reskilling and upskilling (Kim et al. 2017; Palumbo 2021).

Further, human oversight of AI applications can encourage reskilling and upskilling of workers (Brunetti et al. 2020; Xu and Wang 2019). Human supervision is often needed with AI applications to ensure that biases are not propagated (Sowa et al. 2021). For instance, although AI robot lawyers can perform certain tasks, such as answering legal questions, it has been argued that working with human lawyers ensures nuanced issues are adequately handled (Xu and Wang 2019). As a result, the human-AI collaboration focuses on situations in which humans and AI collaborate closely (Sowa et al. 2021; Wilson and Daugherty 2019). This interpretation explains that “algorithmic approaches,” which aim to reduce human intervention in processes, could mean that while mundane and manual skills are delegated to robots, workers hone skills through reskilling and upskilling that robots still lack (Holford 2019; Kim et al. 2017).

Workers, therefore, can reskill and upskill themselves because of the introduction of AI in the workplace (Jaiswal et al. 2021). When upskilling and reskilling combine data with human intuition to make the most of AI technological advances, algorithms can positively impact workers’ work and discretionary power (Criadoa et al. 2020; Jaiswal et al. 2021). However, this requires not only practical and technical knowledge and skills but also creativity-focused skills such as managing uncertainty, critical thinking, problem-solving, exploring possibilities, tolerating ambiguity, exhibiting self-efficacy, teamwork, and effective communication (Cropley 2020; Rampersad 2020). Recent literature (Jaiswal et al. 2021) also includes data analysis, digital, complex cognitive, decision-making, and continuous learning skills alongside creativity-focused skills.

The necessary skill set for upskilling and reskilling can fall into three categories: technical, human, and conceptual skills (Northouse 2018). Also, one might argue that upskilling and reskilling might mean workers are given AI-related short-term assignments when full-time and permanent assignments transfer to AI applications (Braganza et al. 2020). This discussion may also mean that to stay relevant, workers would go through a cycle of upskilling and reskilling, with the required skills evolving (Gratton 2020; Ransbotham 2020).

Workers must also be challenged and empowered to generate and implement new ideas (Auernhammer and Hall 2014). Therefore, though workplace AI requires upskilling and reskilling (Braganza et al. 2020), workplace AI places high demands on workers’ cognitive processing (Van Acker et al. 2021). Gamification and simulations help workers understand the feasibility of ideas by collaborating in cross-functional teams and being involved in the active development and auditing processes of AI applications (Anjali and Priyanka 2020; Criadoa et al. 2020). Consequently, human-AI collaboration is needed to overcome AI limitations in the workplace. For human workers to stay relevant in such human-AI collaboration, they need to upskill and reskill. Proposition 3 summarises this understanding. Table 3 proposes relevant research questions to investigate this proposition further.

Proposition 3

Workers can be reskilled and upskilled because of human-AI shortcomings in the workplace.

4.4 AI interface stimulates worker engagement

When AI technologies take a people-centred approach, there are indications in the literature (e.g., Wilson and Daugherty 2019) that the interface of AI systems can stimulate IWB in workers rather than the frequent errors of service robots (Yam et al. 2020). Earlier scholars (e.g., Davis 1998) agree that the interface design of technologies provides users with a means to interact with such technologies. For instance, Wilson and Daugherty (2019) report that medical professionals became medical coders in training workplace AI when the interface was user-friendly. The software interface, in this example, allowed medical coders to work with workplace AI and be involved in the AI solution’s much-needed training (Wilson and Daugherty 2019).

However, it is essential to mention that usability and interface design requirements may change from one worker to another (Massey et al. 2007). It is also possible to infer that high-skilled workers are better at interacting with AI systems (Shute and Rahimi 2021). Therefore, a high-skilled worker may have different expectations from an AI system’s interface design regarding usability and responsiveness than a low-skilled worker (Garnett 2018; Krzywdzinski 2017). While a ‘responsive’ and ‘usable’ interface is proposed to stimulate IWB in workers, the judgement of a ‘responsive’ and ‘usable’ interface is impacted by complex interactions (Garnett 2018; Massey et al. 2007).

Current research (Massey et al. 2007; Wilson and Daugherty 2019) suggests that a ‘responsive’ and ‘usable’ interface, for example, to enter data into AI systems, requires workers’ perspective on interface design. In the workplace, recent research (Beane and Brynjolfsson 2020; Yam et al. 2020) also suggests that when robots are human-like (capable of thinking and feeling), workers evaluate them more favourably. This favourable perception can be explained by the fact that workers like assisting others and sharing their knowledge (Lo and Tian 2020; Yam et al. 2020). Human-like robots may offer humans such feelings (Giudice et al. 2021; Lo and Tian 2020).

However, the interface must meet workers’ sensory and functional needs (Massey et al. 2007). Therefore, designing such interfaces for workplace AI requires workers’ perspectives and understanding (Massey et al. 2007). While this extends beyond the interface design of an AI system (Massey et al. 2007), it suggests that intelligent systems can only encourage innovative behaviour if workers feel linked to them (Wilson and Daugherty 2019; Yam et al. 2020). This line of argument is extended further by proposing that a ‘responsive’ and ‘usable’ interface allows workers to conveniently enter data into an AI system and make sense of the systems’ outputs through such interface design (Verganti et al. 2020).

The interface should clarify which issues need to be addressed and how the outputs should be used (Verganti et al. 2020). If workers can make informed decisions using such an interface, the interface may encourage IWB (Verganti et al. 2020). If AI systems have ‘friendly’ interface designs, responsive and usable, one may conclude that workplace AI stimulates IWB in workers. This understanding is summed up in Proposition 4. Table 3 proposes relevant research questions to investigate this proposition further.

Proposition 4

When workplace AI has a user-friendly interface design, it encourages workers to generate and implement ideas.

4.5 Algorithmic bias requires innovative behaviour

Algorithmic bias may come from institutionalising existing human biases or introducing new ones (Albrecht et al. 2021). Implementing a machine learning model, for example, might be pitched as a way to improve the satisfaction of employees and consumers. However, how ‘satisfaction’ is defined determines the algorithm’s inner workings and the desired output (Akter et al. 2021; Stahl et al. 2020). And when AI technologies inform (or make) a decision that affects a human being (i.e. a worker), such bias has a detrimental effect, resulting in discrimination and unfairness (Akter et al. 2021).

For instance, based on data obtained from male CVs, Amazon’s AI recruitment system did not rate candidates gender-neutrally (BBC, 2018). Facebook’s Ad algorithm allowed advertisers to target users based on gender, race, and religion, all of which are protected classes (Hern 2018). An algorithm the UK’s Home Office used in visa decisions was dubbed “racist”; the algorithm focused on an applicant’s nationality (BBC, 2020).

Hence, although organisations are increasingly using AI systems and algorithms, the accessibility and interpretability of algorithms (algorithmic transparency) have drawn attention to the organisation’s algorithmic footprint (Criadoa et al. 2020). As a result, innovative behaviour in the workplace entails addressing the ethical, social, economic, and legal aspects of AI applications (Di Vaio et al. 2020).

While workers may still use discretion to override AI systems, biases in datasets and ambiguous algorithms force workers to rely on IWB to help AI systems make choices (Criadoa et al. 2020). Therefore, targeted human interventions are required to resolve the limitations of biases in a dataset and ambiguous algorithms (Palumbo 2021). Proposition 5 summarises this theme. Table 3 proposes relevant research questions to investigate this proposition further.

Proposition 5

Algorithmic bias necessitates innovative behaviour to overcome the limitations of biases in a dataset and ambiguous algorithms.

4.6 AI as a general-purpose tool for innovative behaviour

AI-assisted technologies can encourage curiosity, questioning, systematic thinking, trial and error, reasoning, and elaboration (Güss et al. 2021). A recently published preprint (Wang et al. 2022) shares how curiosity might encourage humans to use AI’s ‘blind spots.’ The underlying argument is that AI-enabled systems encourage workers to reach wide and deep into their knowledge bases, resulting in novel ideas (Althuizen and Reichel 2016). Therefore, AI acts as a tool to transcend the limitations of human-intensive processes by allowing workers to generate ideas and assess their feasibility, thereby entering a cycle of learning iterations that is constantly updated (Verganti et al. 2020).

Even if AI-enabled solutions act as a general tool for innovation, workers still need to share expertise (Chatterjee et al. 2021). However, knowledge sharing appears to be challenging in this context as digital technologies reshape working arrangements and the organisational social climate (Palumbo 2021). Inadvertently, these technologies may create high-skilled vs. low-skilled groupings of workplace workers (Garnett 2018; Krzywdzinski 2017). Workplace design, training, and organisational culture, on the other hand, may also impact knowledge sharing in this context and, as a result, help AI-assisted technologies enable innovative behaviour (Aureli et al. 2019).

Further, rather than serving as a general-purpose tool for innovation, AI-enabled solutions may create time and space for innovative behaviour (Beltagui et al. 2021; Candi and Beltagui 2019). Intelligent systems perform routine tasks, relieving workers to be more innovative (Jaiswal et al. 2021). Instead of 3D printing being a tool for innovation (Beltagui et al. 2021; Candi and Beltagui 2019), it will relieve workers from work they have traditionally done (Greenhalgh 2016). Workers will have more opportunities to plan on innovative aspects of their jobs.

While AI technologies can create time and space for idea generation, workers react to these opportunities differently (Kronblad 2020). It is also interesting that workers’ perceptions differ across cultures regarding AI-generated content (Wu et al. 2020). Besides, procedural and distributive justice and the manager-employee relationship may impact how innovative work behaviour can be improved in a workplace (Perry-Smith and Mannucci 2017; Potter 2021).

Furthermore, intuition commonly guides innovative behaviour and relies on workers’ perception, knowledge, and value judgment (Stierand et al. 2014). Even though AI technologies can create additional time and space for workers to engage in idea generation and deliberation (Nevo et al. 2020), ‘willingness to innovate,’ as exhibited by experimenting with ideas, plays a critical role in innovative behaviour (Auernhammer and Hall 2014).

AI technologies can overcome design process limitations and improve the ability of workers to learn and adapt (Verganti et al. 2020). These technologies provide patterns and products to workers critical for innovative behaviour: idea conception through completion (Nevo et al. 2020; Perry-Smith and Mannucci 2017). AI systems open new avenues for innovative thinking (Townsend and Hunt 2019). Proposition 6 summarises this theme, and Table 3 proposes relevant research questions to investigate this proposition further.

Proposition 6

AI facilitates innovative behaviour by serving as a general-purpose tool for innovation, providing space and time for innovation, and providing patterns and products that workers can engage with.

5 Concluding remarks

This section discusses the themes, concludes the review, and highlights the study’s contributions and limitations.

5.1 Discussion and conclusion

The relationship between artificial intelligence and innovative work behaviour is explored in this review, and several themes are developed. Per the analysis, artificial intelligence’s flaws in the workplace can stimulate innovative work behaviour. However, this review does not dispute the advancement of artificial intelligence and how it helps workers, workplaces, and organisations (Shrestha et al. 2021; Sowa et al. 2021). It does, however, attempt to draw attention to artificial intelligence’s limits and how these limitations may drive innovative behaviour (Braganza et al. 2020; Stahl et al. 2020).

The first theme suggested that intelligent robots in the workplace make mistakes (Yam et al. 2020). While developers attempt to consider multiple scenarios, they cannot anticipate all scenarios when an intelligent robot interacts with an untrained human (Scheutz and Malle 2018). Intelligent robots may make mistakes even when not interacting with untrained humans since they cannot make sense of specific information or situations (Yam et al. 2020). Humans in the workplace should be innovative to compensate for such flaws in intelligent robots. However, rather than the technology’s ability to drive innovative behaviour, it appears that the technology’s shortcomings are doing so.

A worker may feel at ease delegating simple tasks to robots while keeping social and creative tasks (Van Looy 2022). The nature of the social and creative tasks that human workers reserve for themselves, as opposed to the mistakes made by robots, might drive innovative work behaviour. Although this is reasonable, it does not explain why workers are sceptical about robots performing social and creative tasks (Van Looy 2022). Therefore, the idea that robots make mistakes may explain why human workers delegate simple tasks to robots (Van Looy 2022). In this process, human workers do social and creative tasks which require innovative work behaviour.

The second theme focuses on the ‘fear’ factor associated with integrating intelligent systems in the workplace (Li et al. 2019; Rampersad 2020). AI will take over routine and manual tasks from humans, allowing humans to focus on tasks, for instance, that involve critical thinking and creativity (Rampersad 2020; Sousa and Wilks 2018). While this insight appears to be positive, it also brings a fear factor into the workplace (Braganza et al. 2020). Workers will eventually worry if such intelligent systems will replace them. Therefore, while AI provides narrow intelligence that exceeds human abilities, one may argue that it does not create jobs for itself but instead takes human jobs (Chuang 2020). The “fear” factor drives innovative behaviour as individuals attempt to remain relevant in a workplace where intelligent technologies are expected to take over existing tasks.

Recent studies (Chowdhury et al. 2022; Shin 2021) suggest that there may be another explanation for what drives innovative work behaviour other than the fear of intelligent systems. According to this line of research, when humans and AI collaborate, they can outperform an AI system alone (Fügener et al. 2022). Therefore, rather than the “fear” factor, a worker’s understanding of how an AI system decides, predicts, and performs tasks, as well as the limitations of an AI decision and its rationale, could drive innovative work behaviour (Chowdhury et al. 2022; Shin 2021). This course of research is still evolving; therefore, it is unclear whether workers’ understanding of how an AI system decides, predicts, and fulfils tasks reduces the likely fear that workers will be replaced by the system (Plumwongrot and Pholphirul 2022).

The third theme suggests that workers would need to be reskilled and upskilled to compensate for AI’s shortcomings (Cropley 2020; Rampersad 2020). Consequently, existing worker skills may become obsolete as these systems advance (Sousa and Wilks 2018). Whether workers enter a loop of becoming low-skilled and needing reskilling and upskilling as the weaknesses of AI systems are addressed is an interesting issue to explore. However, it is essential to highlight that such reskilling and upskilling do not appear to be motivated by the need for workers to remain relevant - instead, they seem to be driven by the desire to improve workers’ abilities to help AI systems in the workplace (Choi et al. 2019; Kim et al. 2017). It appears to be about exploiting humans to assist AI systems with existing flaws (Choi et al. 2019; Sousa and Wilks 2018). It is, therefore, reasonable to imagine that once AI flaws in an area are addressed, reskilling and upskilling of required skills to address flaws will cease. While AI systems would be predicted to replace human-only skills like creativity (Holford 2019), such predictions have yet to be realised. Furthermore, this interpretation does not consider how different personality traits among workers may drive innovative work in different ways (Jiang et al. 2022), or how using AI would lower the quality of work even after workers have been retrained and upskilled (Charlwood and Guenole 2021).

The fourth theme suggests that the interface of AI systems drives innovative behaviour. Workers will engage with AI systems in the workplace if the interface is designed to be human-friendly (Wilson and Daugherty 2019). However, it is worth noting that it is AI’s weakness that makes such an interface necessary for operating with humans (Sowa et al. 2021; Wilson and Daugherty 2019). Also, this theme has yet to clarify whether worker personality traits would affect how innovative they are when interacting with a user-friendly AI interface or whether doing so requires technical expertise above and beyond basic digital skills (Jiang et al. 2022; Lloyd and Payne 2022). Alternatively, if AI systems had progressed to general intelligence (human level), no such interface would have been required (Holford 2019). While creating such an interface implies that humans can collaborate with AI systems (Fügener et al. 2022), this collaboration is primarily about providing AI systems with additional data to work with or reinforcing AI learnings (Sowa et al. 2021; Wilson and Daugherty 2019). Therefore, AI’s flaws necessitate the development of a friendly interface that allows humans to improve such systems.

The fifth theme argues that AI bias necessitates innovative work behaviour (Criadoa et al. 2020). AI systems use the data that has been fed to them (Albrecht et al. 2021). While humans give data and models that aid AI systems in learning from the data, the data and models may contain algorithmic bias (Vereycken et al. 2021). AI systems have yet to make sense of data beyond applying models. Human ingenuity is essential to assist AI systems in identifying and correcting bias in data or algorithms. Workers are, therefore, more likely to use their knowledge and training through innovative work behaviour to improve an AI system when moral violations are not involved (Wilson and Daugherty 2019).

The sixth theme suggests that AI can be used as a general-purpose innovation tool (Chan et al. 2018). AI applications in the workplace encourage curiosity, questioning, thinking, trial and error, reasoning, and elaboration (Güss et al. 2021). Thus, AI in the workplace creates new tools, time and space for innovation and new patterns for workers to engage with (Cebollada et al. 2021). But this might be because workers are still hesitant about how well AI systems can perform creative and social tasks (Castañé et al. 2022; Van Looy 2022). It can be argued that AI is a general-purpose tool for innovative behaviour because the technology has yet to innovate without humans’ help (Wilson and Daugherty 2019). While it is predicted that the technology will have this capability in the distant future (Holford 2019), workers with innovative behaviour will be required to make sense of the AI systems’ elements or use the system ingeniously.

5.2 Contributions

Recent reviews have delved into the use of AI in the workplace. These include AI to reshape innovation management (Haefner et al. 2021), artificial intelligence and business models (Di Vaio et al. 2020), electronic brainstorming for idea generation (Maaravi et al. 2021), AI to transform human existence (Matthews et al. 2021), AI to combat the COVID-19 pandemic (Khan et al. 2021), and AI to solve tasks autonomously (Cebollada et al. 2021). These reviews, however, focus on the apparent transformational strengths of new technologies such as AI. Such strengths are not the whole picture, since AI also has limitations and weaknesses. For example, skilled personnel must fix errors made by service robots so that customers are not dissatisfied and their organisation is not adversely affected (Yam et al. 2020).

In contrast to prior reviews, the present one identifies AI weaknesses before suggesting that these flaws, rather than AI’s strengths, could also drive innovative work behaviour. This positioning suggests that AI flaws should be embraced in the workplace, enabling worker ingenuity. Innovative work behaviour, therefore, compensates for AI flaws. However, this positioning raises a further question: whether AI adoption in the workplace would reduce innovative behaviour once AI advances or worker ingenuity overcomes existing AI limitations (Chuang 2020; Rampersad 2020). This implication does not appear favourable to workers, let alone a motivation to adopt AI in the workplace (Chuang 2020; Rampersad 2020). Is it a reason to avoid adopting AI in the workplace? Could AI be programmed to stimulate innovative behaviour? Or should we appreciate AI’s flaws since they allow workers to exhibit their ingenuity? Holford (2019, p. 143) argues that future AI advancement that minimises the human element will fail to recognise “the unique and inimitable characteristics of human creativity and its associated tacit knowledge.” Accordingly, advances in AI and minimising AI limitations are less likely to reduce the demand for innovative work behaviour. While intelligent robots may be less likely to make mistakes in the future, innovative behaviour will still be required, for example, to understand AI system outputs.

Further, although Rogers’ innovation theory (2003) groups adopters into five groups based on their adoption rate, the theory does not explain how AI applications in the workplace might help adopters realise their IWB. In conclusion, this review suggests several potential research lines regarding AI and workers’ IWB, hoping to extend this theory.

There are also implications for practice. AI technologies stimulate innovative work behaviour (Liu et al. 2022; Odugbesan et al. 2022). Therefore, organisations should foster an environment where workers and AI technologies can coexist (Sowa et al. 2021; Wilson and Daugherty 2019). However, how an organisation fosters such an environment is critical. While integrating intelligent robots and systems into a workplace helps workers to seek workarounds and enhance areas where such intelligence systems have limitations, such integration should not create an environment of fear (Chuang 2020; Hasija and Esper 2022).

The fear of losing one’s job to intelligent systems may drive innovative work behaviour, but the existing intelligent systems cannot thrive without human involvement (Grimpe et al. 2022; Plumwongrot and Pholphirul 2022). Therefore, the fear factor may drive workers to restrict such systems from reaching their full potential.

But how can an organisation minimise this fear factor? One way is by outlining the “real” reason for incorporating intelligent systems at work (Liu et al. 2022). Another way is carefully contemplating how humans and AI systems can coexist (Berkers et al. 2022; Plumwongrot and Pholphirul 2022). While organisations’ understanding of manual jobs and those that require human elements is evolving, it seems that even if AI technologies can perform certain tasks like providing warmth and empathy, consumers prefer to interact with real people for that purpose (Beeler et al. 2022; Modliński et al. 2022; Peng et al. 2022).

Organisations might look at training options that help workers get along with AI systems. By retraining and upskilling workers, such training programmes can enable workers to overcome the limitations of intelligent systems. Such retraining and upskilling can enable workers to lessen algorithmic bias in workplace intelligent systems (Tilmes 2022). Additionally, such retraining and upskilling can allow employees to use intelligent systems to augment their innovative work behaviour (Odugbesan et al. 2022; Peng et al. 2022). Therefore, while AI’s limitations may drive innovative work behaviour, a meaningful coexistence that involves retraining and upskilling workers allows AI and humans to augment one another’s strengths.

This review has policy implications too. Suppose workers’ interaction with AI is intended to compensate for AI flaws. In that case, such interaction may be inconsistent with the OECD AI Principles (OECD, 2021) and the United Nations’ Sustainable Development Goal 8 (SDG 8), promoting productive employment and decent work (Braganza et al. 2020), which state that AI should benefit workers. In this context, AI benefits primarily from interactions with workers rather than workers directly benefiting from such interactions. Therefore, policymakers should explore how AI may continue to be a human partner (Sowa et al. 2021; Wilson and Daugherty 2019) rather than a rival (Chuang 2020; Rampersad 2020), as AI advancements may overcome the weaknesses highlighted in this review.

5.3 Limitations

The analysis is limited to ABS-ranked peer-reviewed journal articles, excluding non-peer-reviewed or non-ABS-ranked papers, books and book chapters, and practitioner research.

Also, the search string may not have located relevant scholarly publications outside the Scopus database. The articles were retrieved from the Scopus database because of the breadth of publications it offers. The systematic search employed the ABS ranking to limit the returned articles to ABS-ranked journals. However, as one of the reviewers advised, the WoS database only indexes high-quality journals in each academic discipline. Future reviews on AI and innovative work behaviour can, therefore, source articles from the WoS database considering the quality aspect of the database.

Further, while the keywords used were derived from related papers, they may not have returned all relevant articles. When limiting the scope of the study, the researcher used the concept of ‘artificial intelligence’ as a keyword to mean “a collection of technologies” rather than referring to subset fields or other contributing concepts. Therefore, the search string did not include keywords such as “big data”, “machine learning”, “deep learning”, “digital transformation”, “recommender system”, or “natural language processing”. These keywords can be used in a future bibliometric analysis, along with the ‘artificial intelligence’ keyword, to explore further how AI limitations drive innovative work behaviour in the workplace.

Furthermore, later revisions excluded two themes from the manuscript: “AI accelerates knowledge creation and sharing” and “AI fosters open innovation, which involves workers’ IWB in a supply chain.” The researcher concluded that other themes had already covered these two themes. The researcher also recognised the need for additional research in the supply chain context about ‘AI fosters open innovation, which involves workers’ IWB in a supply chain’. This decision was taken following Braun & Clarke’s guideline that “[…] the researcher needs to decide on and develop the particular themes that work best for their project—recognizing that the aims and purpose of the analysis […]” (2022, p. 10). Future studies utilising different analytical techniques can explore whether such themes can be developed independently.

In addition, while acknowledging the choices made in the study’s research design to limit the scope, the researcher understands that documents outside the inclusion criteria and alternative analytical methods may have added additional themes about AI and innovative work behaviour. Perhaps “AI’s explainability and causality to encourage innovative work behaviour” was one of these themes. Explainable AI describes how an AI system decides, predicts, and performs tasks (Rai 2020), enabling a worker to understand the limitations of an AI decision, its rationale, and preferred patterns of action (Chowdhury et al. 2022; Shin 2021). Therefore, it makes sense to infer that such themes might exist. According to recent research, explainable AI increases user trust and emotional confidence in the technology (Shin 2021). When workers know how AI makes decisions, they can, working together with the AI system, outperform an AI system on its own (Fügener et al. 2022). Therefore, it is intriguing to explore explainable AI with innovative work behaviour in future studies, for instance, to determine whether user trust and emotional confidence in AI would lead to innovative work behaviour among workers.

Finally, the researcher’s active role in theme generation is also acknowledged. The researcher adopted the reflexive thematic analysis method (Braun and Clarke 2022). The analysis adhered to “Big Q qualitative paradigms” (Braun and Clarke 2022; Kidder and Fine 1987). Therefore, the analysis and the themes need to be viewed through the 10-point core assumptions of reflexive thematic analysis (Braun and Clarke 2022, pp. 8–9). With this approach, a researcher can generate intriguing and unique themes by blending the data, subjectivity, theoretical and conceptual understanding, and training and experience (Braun and Clarke 2022). The researcher chose this form of analysis to engage with compelling, insightful, thoughtful, complex, and deep meanings from the texts reviewed for this study (Braun and Clarke 2022) and in line with the existing literature that suggests subjectivity is a resource for research rather than an issue to be managed (Gough & Madill, 2012, as cited in Braun and Clarke 2022). However, this analysis method is inconsistent with the objectivity and reproducibility of themes (Braun and Clarke 2022; Byrne 2022; Terry and Hayfield 2020). The researcher understands that the inherent subjectivity in reflexive thematic analysis and the researcher’s active role did not adhere to what scholars refer to as “Small q qualitative paradigms” (Braun and Clarke 2022; Kidder and Fine 1987). Future studies can adopt analytic approaches of “Small q qualitative paradigms” to control for subjectivity and the researcher’s active role. Future research can use other analytical methods like content analysis (Seuring 2012) and bibliometric analysis (Ellegaard and Wallin 2015). While generating reproducible research, such alternative analytical methods can also advance our understanding of other themes and the recurrent nature of the themes identified in this reflexive thematic analysis.

While acknowledging these limitations, the purpose of this work is to encourage further research into exploring and expanding our understanding of AI-driven innovative work behaviour.

Data availability

The Scopus string, the list of articles, and the Excel spreadsheet can be provided.

Code availability

Not applicable.

References

Abubakar AM, Behravesh E, Rezapouraghdam H, Yildiz SB (2019) Applying artificial intelligence technique to predict knowledge hiding behavior. Int J Inf Manag 49:45–57. https://doi.org/10.1016/j.ijinfomgt.2019.02.006

Afsar B, Badir YF, Bin Saeed B (2014) Transformational leadership and innovative work behavior. Industrial Manage Data Syst 114(8):1270–1300. https://doi.org/10.1108/IMDS-05-2014-0152

Akhavan P, Shahabipour A, Hosnavi R (2018) A model for assessment of uncertainty in tacit knowledge acquisition. J Knowl Manag 22:413–431. https://doi.org/10.1108/JKM-06-2017-0242

Akter S, McCarthy G, Sajib S, Michael K, Dwivedi YK, D’Ambra J, Shen KN (2021) Algorithmic bias in data-driven innovation in the age of AI. Int J Inf Manag 60:102387. https://doi.org/10.1016/j.ijinfomgt.2021.102387

Albrecht T, Rausch TM, Derra ND (2021) Call me maybe: methods and practical implementation of artificial intelligence in call center arrivals’ forecasting. J Bus Res 123:267–278. https://doi.org/10.1016/j.jbusres.2020.09.033

Alter S (2014) Theory of Workarounds. Commun Association Inform Syst 34(1). https://doi.org/10.17705/1CAIS.03455

Althuizen N, Reichel A, Wierenga B (2012) Help that is not recognized: Harmful neglect of decision support systems. Decis Support Syst 54:719–728. https://doi.org/10.1016/j.dss.2012.08.016

Althuizen N, Wierenga B (2014) Supporting Creative Problem Solving with a Case–Based Reasoning System. J Manag Inf Syst 31:309–340. https://doi.org/10.2753/MIS0742-1222310112

Althuizen N, Reichel A (2016) The Effects of IT-Enabled cognitive stimulation tools on creative problem solving: a dual pathway to Creativity. J Manage Inform Syst 33(1):11–44. https://doi.org/10.1080/07421222.2016.1172439

Anantrasirichai N, Bull D (2021) Artificial intelligence in the creative industries: a review. Artif Intell Rev. https://doi.org/10.1007/s10462-021-10039-7

Anjali C, Priyanka B (2020) Future of work: an empirical study to Understand Expectations of the Millennials from Organizations. Bus Perspect Res 8(2):272–288. https://doi.org/10.1177/2278533719887457

Auernhammer J, Hall H (2014) Organizational culture in knowledge creation, creativity and innovation: towards the Freiraum model. J Inform Sci 40(2):154–166. https://doi.org/10.1177/0165551513508356

Aureli S, Giampaoli D, Ciambotti M, Bontis N (2019) Key factors that improve knowledge-intensive business processes which lead to competitive advantage. Bus Process Manage J 25(1):126–143. https://doi.org/10.1108/BPMJ-06-2017-0168

Austin RD (2016) Unleashing creativity with digital technology. MIT Sloan Manag Rev 58:22

Baer M (2012) Putting creativity to work: the implementation of creative ideas in organizations. Acad Manag J 55(5):1102–1119. https://doi.org/10.5465/amj.2009.0470

Baer M, Frese M (2003) Innovation is not enough: climates for Initiative and Psychological Safety, process innovations, and firm performance. J Organizational Behav 24(1):45–68

Ballew BS (2009) Elsevier’s Scopus® Database. J Electron Resour Med Libr 6(3):245–252. https://doi.org/10.1080/15424060903167252

Balsmeier B, Woerter M (2019) Is this time different? How digitalization influences job creation and destruction. Res Policy 48(8):103765. https://doi.org/10.1016/j.respol.2019.03.010

Bawack RE, Fosso Wamba S, Carillo KDA (2021) A framework for understanding artificial intelligence research: insights from practice. J Enterp Inf Manag 34:645–678. https://doi.org/10.1108/JEIM-07-2020-0284

BBC. Amazon scrapped “sexist AI” tool. BBC News. https://www.bbc.com/news/technology-45809919

BBC. Home Office drops “racist” algorithm from visa decisions. BBC News. https://www.bbc.com/news/technology-53650758

Beane M, Brynjolfsson E (2020) Working with Robots in a Post-Pandemic World. MIT Sloan Management Review 62(1):1–5

Beeler L, Zablah AR, Rapp A (2022) Ability is in the eye of the beholder: how context and individual factors shape consumer perceptions of digital assistant ability. J Bus Res 148:33–46. https://doi.org/10.1016/j.jbusres.2022.04.045

Beltagui A, Sesis A, Stylos N (2021) A bricolage perspective on democratising innovation: The case of 3D printing in makerspaces. Technol Forecast Soc Change 163. https://doi.org/10.1016/j.techfore.2020.120453

Berkers HA, Rispens S, Le Blanc PM (2022) The role of robotization in work design: a comparative case study among logistic warehouses. Int J Hum Resource Manage 0(0):1–24. https://doi.org/10.1080/09585192.2022.2043925

Bos-Nehles A, Renkema M, Janssen M (2017) HRM and innovative work behaviour: a systematic literature review. Personnel Rev 46(7):1228–1253. https://doi.org/10.1108/PR-09-2016-0257

Botega LF de C, da Silva JC (2020) An artificial intelligence approach to support knowledge management on the selection of creativity and innovation techniques. J Knowl Manag 24:1107–1130. https://doi.org/10.1108/JKM-10-2019-0559

Braganza A, Chen W, Canhoto A, Sap S (2020) Productive employment and decent work: the impact of AI adoption on psychological contracts, job engagement and employee trust. J Bus Res. https://doi.org/10.1016/j.jbusres.2020.08.018

Braun V, Clarke V (2006) Using thematic analysis in psychology. Qualitative Res Psychol 3(2):77–101. https://doi.org/10.1191/1478088706qp063oa

Braun V, Clarke V (2019) Reflecting on reflexive thematic analysis. Qualitative Res Sport Exerc Health 11(4):589–597. https://doi.org/10.1080/2159676X.2019.1628806

Braun V, Clarke V (2022) Conceptual and design thinking for thematic analysis. Qualitative Psychol 9(1):3–26. https://doi.org/10.1037/qup0000196

Braun V, Clarke V, Hayfield N, Terry G (2019) Thematic analysis. In: Liamputtong P (ed) Handbook of Research Methods in Health Social Sciences. Springer, pp 844–858

Brunetti F, Matt DT, Bonfanti A, De Longhi A, Pedrini G, Orzes G (2020) Digital transformation challenges: strategies emerging from a multi-stakeholder approach. TQM J 32(4):697–724. https://doi.org/10.1108/TQM-12-2019-0309

Burnham JF (2006) Scopus database: a review. Biomedical Digit Libr 3:1. https://doi.org/10.1186/1742-5581-3-1

Byrne D (2022) A worked example of Braun and Clarke’s approach to reflexive thematic analysis. Qual Quant 56(3):1391–1412. https://doi.org/10.1007/s11135-021-01182-y

Candi M, Beltagui A (2019) Effective use of 3D printing in the innovation process. Technovation 80–81:63–73. https://doi.org/10.1016/j.technovation.2018.05.002

Castañé G, Dolgui A, Kousi N, Meyers B, Thevenin S, Vyhmeister E, Östberg P-O (2022) The ASSISTANT project: AI for high level decisions in manufacturing. Int J Prod Res 0(0):1–19. https://doi.org/10.1080/00207543.2022.2069525

Casazza M, Gioppo L (2020) A playwriting technique to engage on a shared reflective enquiry about the social sustainability of robotization and artificial intelligence. J Clean Prod 248. https://doi.org/10.1016/j.jclepro.2019.119201

Cebollada S, Payá L, Flores M, Peidró A, Reinoso O (2021) A state-of-the-art review on mobile robotics tasks using artificial intelligence and visual data. Expert Syst Appl 167. https://doi.org/10.1016/j.eswa.2020.114195

Chan J, Chang JC, Hope T, Shahaf D, Kittur A (2018) Solvent: A mixed initiative system for finding analogies between research papers. Proceedings of the ACM on Human-Computer Interaction, 2(CSCW). https://doi.org/10.1145/3274300

Charlwood A, Guenole N (2021) Can HR adapt to the paradoxes of artificial intelligence? Hum Resource Manage J. https://doi.org/10.1111/1748-8583.12433

Chatterjee S, Chaudhuri R, Thrassou A, Vrontis D (2021) Antecedents and consequences of knowledge hiding: the moderating role of knowledge hiders and knowledge seekers in organizations. J Bus Res 128:303–313. https://doi.org/10.1016/j.jbusres.2021.02.033

Chiru C–G, Rebedea T (2017) Profiling of Participants in Chat Conversations Using Creativity–Based Heuristics. Creat Res J 29:43–55. https://doi.org/10.1080/10400419.2017.1267464

Choi Y, Choi M, Oh M, Kim S (2019) Service robots in hotels: understanding the service quality perceptions of human-robot interaction. J Hospitality Mark Manage 1–23. https://doi.org/10.1080/19368623.2020.1703871

Chowdhury S, Joel-Edgar S, Dey PK, Bhattacharya S, Kharlamov A (2022) Embedding transparency in artificial intelligence machine learning models: managerial implications on predicting and explaining employee turnover. Int J Hum Resource Manage 0(0):1–32. https://doi.org/10.1080/09585192.2022.2066981

Chuang S (2020) An empirical study of displaceable job skills in the age of robots. Eur J Train Dev. https://doi.org/10.1108/EJTD-10-2019-0183

Colin TR, Belpaeme T, Cangelosi A, Hemion N (2016) Hierarchical reinforcement learning as creative problem solving. Robot Auton Syst 86:196–206. https://doi.org/10.1016/j.robot.2016.08.021

Costello GJ, Donnellan B (2007) The diffusion of WOZ: expanding the topology of IS innovations. J Inform Technol 22(1):79. https://doi.org/10.1057/palgrave.jit.2000085

Criado JI, Valero J, Villodre J (2020) Algorithmic transparency and bureaucratic discretion: the case of SALER early warning system. Inform Polity 25(4):449. https://doi.org/10.3233/IP-200260

Cropley A (2020) Creativity-focused Technology Education in the age of industry 4.0. Creativity Res J. https://doi.org/10.1080/10400419.2020.1751546

Davenport TH (2019) Can we solve AI’s “Trust Problem”? MIT Sloan Management Review 60(2):1

Davis M (1998) Making a case for design-based learning. Arts Educ Policy Rev 100(2):7–15

Desouza KC, Dawson GS, Chenok D (2020) Designing, developing, and deploying artificial intelligence systems: Lessons from and for the public sector. Bus Horiz 63(2):205–213. https://doi.org/10.1016/j.bushor.2019.11.004

Di Vaio A, Palladino R, Hassan R, Escobar O (2020) Artificial intelligence and business models in the sustainable development goals perspective: a systematic literature review. J Bus Res 121:283–314. https://doi.org/10.1016/j.jbusres.2020.08.019

Druckman D, Adrian L, Damholdt MF, Filzmoser M, Koszegi ST, Seibt J, Vestergaard C (2021) Who is best at mediating a Social Conflict? Comparing Robots, Screens and humans. Group Decis Negot 30(2):395–426. https://doi.org/10.1007/s10726-020-09716-9

Dwivedi YK, Hughes L, Ismagilova E, Aarts G, Coombs C, Crick T, Duan Y, Dwivedi R, Edwards J, Eirug A, Galanos V, Ilavarasan PV, Janssen M, Jones P, Kar AK, Kizgin H, Kronemann B, Lal B, Lucini B, …, Williams MD (2019) Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. International Journal of Information Management, 101994. https://doi.org/10.1016/j.ijinfomgt.2019.08.002

Ellegaard O, Wallin JA (2015) The bibliometric analysis of scholarly production: how great is the impact? Scientometrics 105(3). https://doi.org/10.1007/s11192-015-1645-z

Farrow E (2019) To augment human capacity—Artificial intelligence evolution through causal layered analysis. Futures 108:61–71. https://doi.org/10.1016/j.futures.2019.02.022

Fügener A, Grahl J, Gupta A, Ketter W (2022) Cognitive Challenges in Human–Artificial intelligence collaboration: investigating the path toward productive delegation. Inform Syst Res 33(2):678–696. https://doi.org/10.1287/isre.2021.1079

Flavián C, Pérez-Rueda A, Belanche D, Casaló LV (2021) Intention to use analytical artificial intelligence (AI) in services – the effect of technology readiness and awareness. J Serv Manag. https://doi.org/10.1108/JOSM-10-2020-0378

Garnett A (2018) The Changes and Challenges facing Regional Labour Markets. Aust J Labour Econ 21(2):99–123

Giudice MD, Scuotto V, Ballestra LV, Pironti M (2021) Humanoid robot adoption and labour productivity: a perspective on ambidextrous product innovation routines. Int J Hum Resource Manage 1–27. https://doi.org/10.1080/09585192.2021.1897643

Gligor DM, Pillai KG, Golgeci I (2021) Theorizing the dark side of business-to-business relationships in the era of AI, big data, and blockchain. J Bus Res 133:79–88. https://doi.org/10.1016/j.jbusres.2021.04.043

Gough B, Madill A (2012) Subjectivity in psychological research: from problem to prospect. Psychol Methods 17(3):374–384. https://doi.org/10.1037/a0029313

Grahn H, Kujala T, Silvennoinen J, Leppänen A, Saariluoma P (2020) Expert Drivers’ Prospective Thinking-Aloud to Enhance Automated Driving Technologies – Investigating Uncertainty and Anticipation in Traffic. Accid Anal Prev 146. https://doi.org/10.1016/j.aap.2020.105717

Gratton L (2020) Pioneering approaches to re-skilling and upskilling. In: A manager’s guide to the New World of Work: the most effective strategies for managing people, teams, and Organizations. The MIT Press, MIT Sloan Management Review

Greenhalgh S (2016) The effects of 3D printing in design thinking and design education. J Eng Des Technol 14(4):752–769. https://doi.org/10.1108/JEDT-02-2014-0005

Grimpe C, Sofka W, Kaiser U (2022) Competing for digital human capital: the retention effect of digital expertise in MNC subsidiaries. J Int Bus Stud. https://doi.org/10.1057/s41267-021-00493-4

Güss CD, Ahmed S, Dörner D (2021) From da Vinci’s Flying Machines to a theory of the creative process. Perspect Psychol Sci. https://doi.org/10.1177/1745691620966790

Haefner N, Wincent J, Parida V, Gassmann O (2021) Artificial intelligence and innovation management: A review, framework, and research agenda. Technol Forecast Soc Change 162. https://doi.org/10.1016/j.techfore.2020.120392

Hasija A, Esper TL (2022) In artificial intelligence (AI) we trust: a qualitative investigation of AI technology acceptance. J Bus Logistics. https://doi.org/10.1111/jbl.12301

Henkel AP, Bromuri S, Iren D, Urovi V (2020) Half human, half machine – augmenting service employees with AI for interpersonal emotion regulation. J Service Manage 31(2):247–265. https://doi.org/10.1108/JOSM-05-2019-0160

Hern A (2018, May 16) Facebook lets advertisers target users based on sensitive interests. The Guardian. https://www.theguardian.com/technology/2018/may/16/facebook-lets-advertisers-target-users-based-on-sensitive-interests

Holford WD (2019) The future of human creative knowledge work within the digital economy. Futures 105:143–154. https://doi.org/10.1016/j.futures.2018.10.002

HT Tech (2020, November 4) AI robot mistakes referee’s bald head with a football, tracks it throughout the match. HT Tech. https://tech.hindustantimes.com/tech/news/ai-robot-mistakes-referee-s-bald-head-with-a-football-tracks-it-throughout-the-match-71604487038101.html

Jaiswal A, Arun CJ, Varma A (2021) Rebooting employees: Upskilling for artificial intelligence in multinational corporations. Int J Hum Resource Manage 1–30. https://doi.org/10.1080/09585192.2021.1891114

Jiang L, Xu X, Wang H-J (2020) A resources–demands approach to sources of job insecurity: a multilevel meta-analytic investigation. J Occup Health Psychol. https://doi.org/10.1037/ocp0000267

Jiang X, Lin J, Zhou L, Wang C (2022) How to select employees to participate in interactive innovation: Analysis of the relationship between personality, social networks and innovation behavior. Kybernetes, ahead-of-print. https://doi.org/10.1108/K-09-2021-0884

Jong JD, Hartog DD (2010) Measuring innovative work Behaviour. Creativity and Innovation Management 19(1):23–36. https://doi.org/10.1111/j.1467-8691.2010.00547.x

Keeler LW, Bernstein MJ (2021) The future of aging in smart environments: Four scenarios of the United States in 2050. Futures 133. https://doi.org/10.1016/j.futures.2021.102830

Khan M, Mehran MT, Haq ZU, Ullah Z, Naqvi SR, Ihsan M, Abbass H (2021) Applications of artificial intelligence in COVID-19 pandemic: A comprehensive review. Expert Syst Appl 185. https://doi.org/10.1016/j.eswa.2021.115695

Kidder LH, Fine M (1987) Qualitative and quantitative methods: when stories converge. New Dir Program Evaluation 1987(35):57–75. https://doi.org/10.1002/ev.1459

Kim YJ, Kim K, Lee S (2017) The rise of technological unemployment and its implications on the future macroeconomic landscape. Futures 87:1–9. https://doi.org/10.1016/j.futures.2017.01.003

Klein HJ, Potosky D (2019) Making a conceptual contribution at Human Resource Management Review. Hum Resource Manage Rev 29(3):299–304. https://doi.org/10.1016/j.hrmr.2019.04.003

Klotz F (2018) How AI can amplify human competencies. MIT Sloan Management Review 60(1):14–15

Köbis N, Mossink LD (2021) Artificial intelligence versus Maya Angelou: Experimental evidence that people cannot differentiate AI–generated from human–written poetry. Comput Hum Behav 114. https://doi.org/10.1016/j.chb.2020.106553

Kronblad C (2020) Digital innovation in law firms: the dominant logic under threat. Creativity and Innovation Management 29(3):512–527. https://doi.org/10.1111/caim.12395

Krzywdzinski M (2017) Automation, skill requirements and labour-use strategies: high-wage and low-wage approaches to high-tech manufacturing in the automotive industry. New Technol Work Employ 32(3):247–267. https://doi.org/10.1111/ntwe.12100

Li L, Li G, Chan SF (2019) Corporate responsibility for employees and service innovation performance in manufacturing transformation. Career Dev Int 24(6):580–595. https://doi.org/10.1108/CDI-04-2018-0109

Liu L, Schoen AJ, Henrichs C, Li J, Mutlu B, Zhang Y, Radwin RG (2022) Human Robot collaboration for enhancing work activities. Hum Factors 00187208221077722. https://doi.org/10.1177/00187208221077722

Lloyd C, Payne J (2022) Digital skills in context: working with robots in lower-skilled jobs. Econ Ind Democr 0143831X:221111416. https://doi.org/10.1177/0143831X221111416