Abstract

Companies increasingly use artificial intelligence (AI) and algorithmic decision-making (ADM) in their recruitment and selection processes for cost and efficiency reasons. However, there are concerns about applicants’ affective responses to AI systems in recruitment, and knowledge about affective responses to the selection process is still limited, especially when AI supports different stages of the selection process (i.e., preselection, telephone interview, and video interview). Drawing on the affective response model, we propose that affective responses (i.e., opportunity to perform, emotional creepiness) mediate the relationship between an increasingly AI-based selection process and organizational attractiveness. In particular, using a scenario-based between-subject design with German employees (N = 160), we investigate whether and how AI-support during a complete recruitment process diminishes the opportunity to perform and increases emotional creepiness during the process. Moreover, we examine the influence of opportunity to perform and emotional creepiness on organizational attractiveness. We found that AI-support at later stages of the selection process (i.e., telephone and video interview) decreased the opportunity to perform and increased emotional creepiness. In turn, the opportunity to perform and emotional creepiness mediated the association between AI-support in telephone/video interviews and organizational attractiveness. However, we did not find negative affective responses to AI-support at an earlier stage of the selection process (i.e., during preselection). By offering evidence of possible adverse reactions to the use of AI in selection processes, this study provides important practical and theoretical implications.



1 Introduction

Although the human element remains crucial in recruitment, firms increasingly digitize parts of the recruitment process and implement artificial intelligence (AI) and algorithmic decision-making (ADM) in their selection procedures. Generally, algorithms are the basis for several AI decision tools. AI can be understood as a new technology in which algorithms interact with the environment by collecting information (e.g., through written text or speech and image recognition), interpreting data, recognizing patterns, inducing rules or predicting events, generating results, answering questions or giving instructions to other systems, and evaluating the results of their actions to improve decision-making (Ferràs-Hernández 2018). Recent surveys from Bullhorn and Staffing Industry Analysts reveal that firms have already automated several functions for candidates (e.g., applications, assessment tests) and for client organizations (e.g., search profiles/resumes, video interviews) (Kelly 2019). The advantages of ADM include higher efficiency in handling and screening applicants as well as reduced time-to-hire and total recruitment costs (McColl and Michelotti 2019; Suen et al. 2019; Woods et al. 2020). For example, Unilever saved 100,000 h of human recruitment time using algorithmic video analysis (Devlin 2020). Furthermore, algorithmic decision tools offer additional analytical possibilities, such as extracting and analyzing candidates’ personality traits from the application and predicting their potential job performance (Suen et al. 2019). Despite firms’ enthusiasm for ADM, concerns remain regarding applicant acceptance of algorithmic selection procedures (e.g., Acikgoz et al. 2020; Langer et al. 2019). For example, in hiring situations, human decisions are perceived as fairer, more trustworthy, and more interactionally just than algorithmic decisions, and they evoke more positive emotions (Acikgoz et al. 2020; Lee 2018).

However, little is known about applicants’ affective responses to ADM during the entire recruitment process (e.g., screening and preselection, telephone or video interview), especially when an algorithm or AI supports humans in the selection process while the final decision remains with them. First, past research has focused on applicant reactions towards the decision agent (i.e., human or algorithm; e.g., Lee 2018), whereas companies might be reluctant to delegate their decisions about candidates completely to an algorithm or AI. Second, research has focused on specific applications of ADM in recruitment and selection, such as applicant screening (Bauer et al. 2006) or video interviews (e.g., Acikgoz et al. 2020; Langer and König 2017; Langer et al. 2019; McColl and Michelotti 2019; Mirowska 2020; Nørskov et al. 2020), while knowledge about applicant acceptance of AI systems in the different steps of the recruitment process is scarce (Stone et al. 2013). From a company's perspective, it is important both to impress candidates and to retain them throughout the different steps of the recruitment process. Third, from a theoretical perspective, procedural justice and fairness perceptions have been the central frameworks for explaining applicant reactions towards these new technologies in recruitment and selection (e.g., Acikgoz et al. 2020; Bauer et al. 2006; Newman et al. 2020). However, affective responses towards AI systems in recruitment also need to be examined (e.g., Langer and König 2017; Langer et al. 2019; Lukacik et al. 2020) because emotions and subjective impressions are similarly important for recruitment success (Chapman et al. 2005).

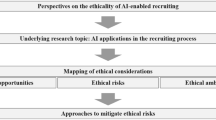

To fill this void, this study makes two contributions to the literature. First, it examines applicant acceptance of ADM at different stages of the selection process while leaving the final decision about candidates with humans. In particular, we argue that the more stages of the recruitment process an AI supports, the more negative the affective responses to the recruitment process are in terms of the opportunity to perform, emotional creepiness, and organizational attractiveness (see Fig. 1 for the research model).

Second, by focusing on candidates’ affective responses to the use of AI technology, we shed light on the emotional mechanisms that help explain applicants’ acceptance of ADM over and above other prominent theories in recruitment, such as signaling theory (e.g., Rynes et al. 1991; Spence 1978).

Finally, this study has important practical implications, because practice is far ahead of research and scientific scrutiny is necessary (Gonzalez et al. 2019; Lukacik et al. 2020; Nikolaou 2021). Our findings can therefore inform the development and implementation of algorithmic decision tools.

2 Theoretical background and hypothesis development

2.1 Theoretical framework

In the information and communication technology context, affective responses (or affective reactions) to a stimulus caused by a computer device, software system, or other information technology play a vital role in human interaction with these technologies (Zhang 2013). Affective response is an umbrella term covering affective evaluations, emotions, and attitudes, which result from interactions between humans and a stimulus, in this case technology (Zhang 2013). In particular, affective evaluations capture a person’s assessment of the affective quality of the stimulus (e.g., satisfaction with a situation), which is not necessarily accompanied by an emotion (Russell 2003; Zhang 2013). Emotions and attitudes likewise reside between the person and the stimulus; the only difference is that emotions are relatively short-lived, whereas attitudes (and affective evaluations) last longer (Clore and Schnall 2005).

Given that affective responses to selection processes are important for organizational attractiveness and job acceptance intentions (Hausknecht et al. 2004; Ryan and Ployhart 2000), we propose that perceived opportunity to perform, emotional creepiness, and organizational attractiveness are three major affective responses in the context of AI usage during recruitment and selection procedures. Opportunity to perform is a justice and fairness perception that corresponds to an affective evaluation of the decision support system (cf. Zhang 2013). Emotional creepiness covers the (negative) emotions and feelings (e.g., anxiety, ambiguity, uncertainty) in response to a specific situation. Finally, organizational attractiveness has a long history in the recruitment literature and reflects applicants’ attitude towards the organization (e.g., Chapman et al. 2005), which, according to the theory of planned behavior (Ajzen 1991), is one of the most important antecedents of acceptance intentions and job choice. Thus, we propose that AI support during the different stages of the selection process influences potential applicants’ affective responses in terms of organizational attractiveness, mediated by the opportunity to perform and emotional creepiness. Figure 1 depicts our research model.

2.2 Hypotheses development

In research on applicant reactions, the rules of justice (Gilliland 1993) play an essential role in understanding which aspects influence responses to an application process (Langer et al. 2018). Accordingly, fairness perceptions comprise, among other things, the opportunity to perform, equality of conduct, the treatment of applicants, the appropriateness of questions, and the overall fairness of the selection process (Bauer et al. 2001; Gilliland 1993). From a firm’s perspective, the use of AI systems should increase the standardization of procedures and make decisions more objective and less biased than human decisions (Kaibel et al. 2019; Woods et al. 2020). However, especially in the context of an application, how applicants perceive the recruitment process plays a decisive role.

Research on algorithms has shown that humanity is an essential aspect of a positive perception (Lee 2018), and several studies found that algorithms are perceived to lack humanity (Lee 2018; Suen et al. 2019). Lee (2018) showed that people accept algorithmic systems that perform mechanical tasks (e.g., work scheduling), whereas tasks that require human intuition (e.g., hiring) should be performed by humans. Additionally, Lee and Baykal (2017) showed that algorithmic decisions are perceived as less fair than decisions made in a group discussion. Meta-analytical results also corroborate that candidates respond more positively to face-to-face interviews than to technology-mediated job interviews (Blacksmith et al. 2016). Moreover, asynchronous video interviews are less favored than synchronous video interviews (Acikgoz et al. 2020; Suen et al. 2019), and actual applicants perceive asynchronous video interviews as rather unfair (Hiemstra et al. 2019; Nørskov et al. 2020).

During the preselection stage, AI support in screening applications and recommending them to employees of the HR department might negatively influence applicants’ fairness perceptions. On the one hand, AI-support in preselection might convey an image of objectivity because a computer system evaluates each application consistently based on predefined rules and formulas, which should reduce human biases and prejudices (Woods et al. 2020). On the other hand, these rules and formulas lack transparency; thus, candidates do not know whether and how AI weighs certain information, such as personal characteristics, facial expressions, or work experience. Moreover, people may think that AI lacks the ability to discern suitable candidates because it makes judgments based on key figures and does not take into account qualitative information that is difficult to quantify (Lee 2018). This lack of knowledge and transparency should reduce applicants’ perceived opportunity to perform.

During the interview stage, applicants prefer situations that give them the opportunity to perform, that is, to present their knowledge, skills, and abilities appropriately (Gilliland 1993; Gonzalez et al. 2019). If AI technology supports the telephone or video interview, applicants could perceive a lack of personal interaction and interpersonal treatment because AI may not recognize individual strengths or characteristics (Kaibel et al. 2019). Notably, the lack of verbal and nonverbal feedback from an interviewer deprives applicants of the opportunity to explain unclear issues more precisely or to ask questions, which decreases the perceived opportunity to perform (Langer and König 2017; Langer et al. 2020). In AI-based video interviews, candidates lack eye contact with a recruiter and show inappropriate hand gestures because they only see themselves (McColl and Michelotti 2019). Moreover, when candidates know that an AI evaluates the video, they give shorter answers and feel that they do not have a good chance to perform (Langer et al. 2020). In summary, we hypothesize that, compared to a pure human evaluation, AI-support in the preselection of candidates and during telephone or video interviews diminishes the perceived opportunity to perform.

H1

Compared to a pure human evaluation, (a) AI-support in preselection, (b) AI-support in telephone interview, and (c) AI-support in video interview are negatively associated with the opportunity to perform.

Feelings and emotions also play an important role in explaining applicants’ acceptance of recruitment methods (Langer et al. 2018). For example, Hiemstra et al. (2019) call for studying creepiness to better explain adverse emotional reactions of applicants. Creepiness is defined as a potentially harmful emotional impression combined with a feeling of ambiguity (Langer and König 2017; Langer et al. 2018). Creepiness occurs when people feel uncomfortable and insecure and do not know how to react in a new situation (Langer and König 2018). Consequently, new technologies, or new applications of familiar technologies, can also lead to situations that are perceived as creepy (Langer et al. 2018; Tene and Polonetsky 2013). If ADM tools are not transparent and are perceived as uncontrollable, they evoke an emotional response of creepiness (Tene and Polonetsky 2013). Moreover, most candidates are still not familiar with AI technology (Langer et al. 2018). When AI makes decisions about a person based on rules and formulas that are unknown to that person, this raises a feeling of ambiguity and emotional creepiness (Langer et al. 2018, 2019). Similarly, candidates might not know how to react appropriately during an AI-supported telephone or video interview or how to handle this unfamiliar circumstance (Langer et al. 2018, 2019). Meta-analytical evidence from before the rise of AI technology in recruitment and selection showed that applicants react positively towards commonly used selection instruments, such as assessment centers or employment interviews (Anderson 2003; Hausknecht et al. 2004). Thus, candidates have expectations and presumptions about the recruitment process and about interview methods conducted by humans. AI support during interviews violates these presumptions, and this violation of presumptions and expectations should increase negative affective responses in terms of emotional creepiness.
Moreover, the lack of interpersonal treatment or social interaction and the lack of transparency about how AI assesses gestures, language, and answers should also increase the feeling of creepiness (Langer and König 2017; Langer et al. 2019). Thus, we propose that:

H2

Compared to a pure human evaluation, (a) AI-support in preselection, (b) AI-support in telephone interview, and (c) AI-support in video interview are positively associated with emotional creepiness.

One crucial outcome of applicant reactions in recruitment is overall organizational attractiveness (Bauer et al. 2001; Gilliland 1993). Ryan and Ployhart (2000) define applicant reactions as the attitudes, affect, or cognitions a person might have about the recruitment process and the potential employer. In turn, candidate reactions are associated with the perceived attractiveness of the organization (Bauer et al. 2001). Thus, organizational attractiveness is one of the most relevant outcomes of applicant reactions to selection procedures and reflects the overall assessment of the recruitment process and the organization (Bauer et al. 2001; Chapman et al. 2005; Gilliland 1993). Among other things, organizational attractiveness depends on how individualized and personalized applicants perceive the complete application process to be (Lievens and Highhouse 2003). Consequently, if applicants evaluate the selection process positively, consider the selection methods fair, and have positive affective responses throughout the process, they develop a positive attitude towards the company (Bauer et al. 2006). Conversely, if candidates are dissatisfied with the selection process, they might self-select out of it (Hausknecht et al. 2004). Thus, we hypothesize that opportunity to perform and emotional creepiness influence organizational attractiveness and therefore mediate the relationship between the use of AI-support during the selection process and organizational attractiveness.

H3a

Opportunity to perform is positively related to organizational attractiveness.

H3b

Emotional creepiness is negatively related to organizational attractiveness.

H4

(a) Opportunity to perform and (b) emotional creepiness mediate the relationship between AI-support in the selection process and organizational attractiveness.

3 Methodology

3.1 Sample

Using an online panel of an ISO 20252:19 certified online sample provider, we recruited a quota-based sample of 160 participants (40 per condition) from the working population, whose gender and age distributions approximated those of the German general population. Of these participants, 48.8% (n = 78) were female, and the mean age was 45.6 years. All participants were currently employed, with an average work experience of 22.6 years. On average, they had already participated in 16 recruitment processes, and 18.1% (n = 29) were currently searching for a new job.

3.2 Scenarios

We applied a between-subject design using four hypothetical scenarios as our treatments (Aguinis and Bradley 2014). Participants were randomly assigned to one of the four scenarios and asked to read a description of an application process at the fictitious company Marzeo (Footnote 1). The company name and URL (www.marzeo.de) were developed in prior research (Evertz et al. 2019) to ensure that participants could not find any additional information about the company. Similarly, we adopted an ideal application process from previous research (Wehner et al. 2015) and manipulated the degree of AI-support during the recruitment process. We made sure that every final decision remained with the employees of the HR department. Several studies have shown that people react negatively if decisions are made solely by a computer system or AI (Hiemstra et al. 2019; Langer et al. 2019). Since it is quite plausible that companies use AI to support their decisions during recruitment processes, we decided not to manipulate the decision agent in our scenarios.

After a short introduction, all scenarios contained the following parts of an ideal application and recruitment process: (1) confirmation of receipt and preselection, (2) a telephone interview, and (3) an in-person job interview. In scenario 1, only humans performed the recruitment activities mentioned above and made the final decisions. In scenario 2, we changed the preselection in step 1. Participants were told the following: “To guarantee a fair selection process, all applications are compared and evaluated after the application deadline by a software system based on artificial intelligence methods.” However, the final decision about all applications remained with employees of the HR department.

In scenario 3, the preselection (step 1, the same manipulation as in scenario 2) and the telephone interview (step 2) were conducted by an AI. Participants were told that they had to log in to the application on the company’s website and that a software system using AI would ask the questions. Moreover, the AI would create a personality profile based on their answers. Again, the final decision about all applications remained with employees of the HR department.

Scenario 4 is identical to scenario 3, with an AI supporting the same steps. However, instead of a telephone interview, participants were told that the company conducted an automated video interview evaluated by an AI. Again, the final decision about all applications remained with employees of the HR department (see the “Appendix” for the scenarios).

3.3 Pre-test, implementation checks, common method bias, and sample size

We took great care to design the study in a way that minimizes the risk of potential biases (Podsakoff et al. 2012). Therefore, we conducted several procedures and checks to ensure high data quality and to avoid common method bias. First, we pretested the wording of our scenarios and questionnaire on a sample of 168 students at a German university to examine whether our treatments worked as intended. Afterward, we pre-registered our main study at aspredicted.org before data collection (Footnote 2). Second, we included two attention checks (e.g., “For this item, please select ‘strongly disagree’”) to enhance data quality (Barber et al. 2013; Kung et al. 2018; Ward and Pond III 2015); all participants in our sample passed them. Third, we tested whether fast and slow respondents differed significantly in our main variables. For this purpose, we split the sample at the median processing time; t-tests revealed no significant differences.
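The fast/slow comparison can be sketched as follows. This is a minimal sketch under stated assumptions: the text only says that t-tests were used, so Welch's unequal-variance t statistic is our choice rather than necessarily the authors', the helper names are hypothetical, and the processing times and scores are invented. A p-value would then be read from a t distribution with the returned degrees of freedom (not computed here).

```python
import statistics


def median_split(times):
    """Flag respondents whose processing time lies above the sample median (hypothetical helper)."""
    cutoff = statistics.median(times)
    return [t > cutoff for t in times]


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two independent groups."""
    va, vb = statistics.variance(a), statistics.variance(b)  # sample variances (n - 1 denominator)
    na, nb = len(a), len(b)
    se2 = va / na + vb / nb
    t = (statistics.mean(a) - statistics.mean(b)) / se2 ** 0.5
    df = se2 ** 2 / ((va / na) ** 2 / (na - 1) + (vb / nb) ** 2 / (nb - 1))
    return t, df


# Invented data: processing time in seconds and one scale score per respondent
times = [310, 455, 280, 600, 390, 520, 350, 700]
scores = [4.3, 5.0, 4.7, 4.0, 5.3, 4.3, 4.7, 5.0]

is_slow = median_split(times)
fast_scores = [s for s, slow in zip(scores, is_slow) if not slow]
slow_scores = [s for s, slow in zip(scores, is_slow) if slow]
t_stat, df = welch_t(fast_scores, slow_scores)
print(f"t = {t_stat:.2f}, df = {df:.1f}")
```

In practice, the same comparison would be repeated for each main variable.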

Furthermore, we included implementation checks for our treatments at the end of the questionnaire to ensure that participants understood the scenarios as intended (Shadish et al. 2002). Between zero and six participants per scenario did not pass this implementation check (16 participants in total). A t-test revealed no significant differences in our dependent variables between participants who answered the implementation check correctly and those who did not. In addition, we included a dummy variable indicating whether participants passed the implementation check in our structural equation model and correlated it with our treatment variables, which did not change the results. Moreover, estimating our final structural equation model only with participants who passed the implementation check (n = 144) did not change our results either. Since even participants who failed the implementation check might have formed an impression of AI-support during the recruitment process, which is central to our research question, we kept them in our final sample. Finally, we measured whether our scenarios were realistic to participants (Maute and Dubés 1999). Respondents rated on 7-point Likert scales how realistic the description was to them (1 = very unrealistic to 7 = very realistic) and how well they were able to put themselves into the described situation (i.e., valence; 1 = very bad to 7 = very good). Overall, the results indicated sufficient realism (mean = 4.87) and showed that participants were able to put themselves into the situation (mean = 5.64).

We used several procedures to avoid and assess common method bias arising from, for example, social desirability, negative affectivity, and the measurement instrument (Podsakoff et al. 2012). First, we chose an online experiment so that each participant could complete the study in their free time; participants were thus alone and unobserved by the researchers, and we assured their anonymity, which minimizes socially desirable behavior (Steenkamp et al. 2010; Weiber and Mühlhaus 2014). Second, the between-subject design (i.e., each participant saw only one scenario) and the random assignment of participants to the treatment scenarios reduce the influence of learning effects, socially desirable behavior, and common method bias. Third, since a person’s general state of mind at the time of the study might also affect responses (Podsakoff et al. 2003; Weiber and Mühlhaus 2014), we tested the potential effect of negative affectivity (Emons et al. 2007) on our dependent variables. However, negative affectivity did not substantially affect our results.

Finally, because Harman’s one-factor test and the unmeasured latent method factor technique have been criticized (Fuller et al. 2016; Podsakoff et al. 2012), we used the marker variable technique to assess the degree of common method bias by adding an uncorrelated latent variable with the same item response format to our final CFA and SEM (Podsakoff et al. 2012; Siemsen et al. 2010; Williams and McGonagle 2016). As this marker variable, we used two agreeableness items from the short Big-Five personality inventory (Gosling et al. 2003). The latent marker variable was allowed to influence all nine items of our dependent variables (i.e., opportunity to perform, emotional creepiness, organizational attractiveness) simultaneously. The fit of the SEM including the marker variable was worse (χ2 = 176.22, df = 135, p = 0.01; CFI = 0.98; RMSEA = 0.04) than that of the model without it (χ2 = 121.10, df = 109, p = 0.20; CFI = 0.99; RMSEA = 0.03; see results section). Moreover, the standardized regression weights of the final SEM and of the model including the latent marker variable did not differ substantially (threshold: a difference in β greater than 0.10). Therefore, the threat of common method bias to our results was limited.

We approximated the required sample size with the power analysis program G*Power (Faul et al. 2009). The sample size was calculated a priori based on a significance level of α = 0.01 and a power of 1 − β = 0.99, both of which yield a conservative estimate of the necessary sample size. Following Cohen (1988), we assumed a medium effect size of 0.15. For a global-effects MANOVA across the groups and variables, this calculation yielded a required sample of 124 participants. Based on this power analysis, we slightly oversampled and recruited 160 participants (40 per condition). For our structural equation model, we also applied Swain’s correction to account for potential bias due to our small sample size (Herzog and Boomsma 2009; Swain 1975).
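Swain's correction rescales the maximum-likelihood χ2 by a factor that depends on the number of observed variables p, the model degrees of freedom, and N. The sketch below uses the formula as presented by Herzog and Boomsma (2009) and applies it to the final model (model 3: χ2 = 121.10, df = 109, N = 160). The value p = 18 is an assumption on our part (nine outcome items, three technological-affinity items, three treatment dummies, and gender, age, and education); the text does not state it. Under that assumption, the sketch approximately reproduces the Swain-corrected χ2 of 115.34 and RMSEA of 0.02 reported for model 3.

```python
import math


def swain_factor(p, df, n_obs):
    """Swain (1975) scaling factor for the ML chi-square, as given by Herzog and Boomsma (2009).

    p: number of observed variables, df: model degrees of freedom, n_obs: sample size N.
    """
    n = n_obs - 1
    # q follows from the number of free parameters t = p(p + 1)/2 - df
    q = (math.sqrt(1 + 4 * p * (p + 1) - 8 * df) - 1) / 2
    numerator = p * (2 * p**2 + 3 * p - 1) - q * (2 * q**2 + 3 * q - 1)
    return 1 - numerator / (12 * df * n)


def rmsea(chi2, df, n_obs):
    """Point estimate of RMSEA using the N - 1 convention."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n_obs - 1)))


# Final model (model 3): chi2 = 121.10, df = 109, N = 160.
# p = 18 observed variables is an assumption, not a value stated in the text.
s = swain_factor(p=18, df=109, n_obs=160)
chi2_swain = s * 121.10
print(f"Swain factor = {s:.4f}, corrected chi2 = {chi2_swain:.2f}, "
      f"RMSEA = {rmsea(chi2_swain, 109, 160):.2f}")
```

Because the factor shrinks toward 1 as N grows, the correction matters mainly for small samples such as this one.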

3.4 Measures

All items were measured on scales ranging from 1 (strongly disagree) to 7 (strongly agree), except for the treatments. To reduce common method bias, we randomized the order of the items within each scale to rule out response patterns caused by the item sequence. All scales were adopted from previous research to ensure the reliability and validity of our measures.

Treatment variables Since we used four scenarios, we built three dummy variables that reflect our treatments. The first dummy variable is AI-support in preselection (0 = only human; 1 = AI-support in preselection; scenario 2). The second dummy variable is AI-support in telephone interview (0 = only human; 1 = AI-support in preselection and telephone interview; scenario 3). The third dummy variable is AI-support in video interview (0 = only human; 1 = AI-support in preselection and video interview; scenario 4). Scenario 1 (only human) thus served as the reference category and was coded 0 on all three dummy variables.
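The dummy coding described above can be written out as a small mapping. This is an illustrative sketch only; the variable names are hypothetical and the function simply restates the coding in the text. Because each scenario switches on at most one dummy, each coefficient compares one AI scenario with the human-only reference scenario.

```python
def dummy_code(scenario):
    """Map a scenario number (1-4) to the three treatment dummies; scenario 1 (only human) is the reference."""
    if scenario not in (1, 2, 3, 4):
        raise ValueError(f"unknown scenario: {scenario}")
    return {
        "ai_preselection": int(scenario == 2),  # AI-support in preselection
        "ai_telephone": int(scenario == 3),     # AI-support in preselection and telephone interview
        "ai_video": int(scenario == 4),         # AI-support in preselection and video interview
    }


for scenario in (1, 2, 3, 4):
    print(scenario, dummy_code(scenario))
```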

Opportunity to perform This variable was measured with three items from Bauer et al. (2001), e.g., “This application process gives applicants the opportunity to show what they can really do.” Cronbach’s alpha of the scale was 0.95.

Emotional creepiness This variable was measured with three items from Langer and König (2017), e.g., “During the shown situation I had a queasy feeling.” Cronbach’s alpha of the scale was 0.95.

Organizational attractiveness This variable was measured with three items from Aiman-Smith et al. (2001), e.g., “The company Marzeo is a good company to work for.” Cronbach’s alpha of the scale was 0.95.

Controls It seems reasonable that participants who are interested in new technologies are more inclined to evaluate and perceive the use of AI positively. Therefore, as a control variable, we measured technological affinity with three items from Agarwal and Prasad (1998), e.g., “If I heard about a new information technology, I would look for ways to experiment with it.” Cronbach’s alpha of the scale was 0.86. Additionally, we controlled for gender, age, and highest educational qualification.
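Cronbach's alpha, reported for each scale above, follows the standard formula alpha = k / (k - 1) * (1 - sum of item variances / variance of the scale total), where k is the number of items. The sketch below implements that formula; the function name and the six invented respondents are hypothetical and do not come from the study.

```python
import statistics


def cronbach_alpha(items):
    """Cronbach's alpha for k items; `items` holds one list of respondent scores per item."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(responses) for responses in zip(*items)]  # scale sum per respondent
    item_variance = sum(statistics.variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance / statistics.variance(totals))


# Invented responses of six respondents to a three-item, 7-point scale
item_1 = [6, 5, 2, 7, 3, 4]
item_2 = [6, 4, 2, 7, 3, 5]
item_3 = [5, 5, 1, 6, 3, 4]
print(round(cronbach_alpha([item_1, item_2, item_3]), 2))
```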

3.5 Analytical procedures

Using structural equation modeling (SEM) in IBM SPSS Amos 26 (Arbuckle 2014), we applied a two-stage approach to test our hypothesized research model (Anderson and Gerbing 1988). First, we estimated the measurement model using confirmatory factor analysis (CFA), including our treatment variables and all control variables as covariates. Second, we estimated the SEM with our hypothesized direct effects and all control variables as covariates. We also estimated the error covariance between the two latent mediators (i.e., opportunity to perform and emotional creepiness) to control for unmeasured or omitted common causes (Kline 2015). We used the chi-square statistic and common fit indices to evaluate model fit (Bollen 1989). A well-fitting model should have a nonsignificant chi-square test (Bollen 1989), a Comparative Fit Index (CFI) above 0.95 (Hu and Bentler 1998), and a Root Mean Square Error of Approximation (RMSEA) below 0.06 (Browne and Cudeck 1993).
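The RMSEA can be recomputed from χ2, df, and N, and the three cut-offs above can be applied mechanically. The sketch below assumes the common N − 1 convention for RMSEA (some programs use N instead); with that convention, the RMSEA values reported in this section are reproduced after rounding. The function names are hypothetical.

```python
import math


def rmsea(chi2, df, n_obs):
    """Point estimate of RMSEA using the N - 1 convention (some programs use N instead)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n_obs - 1)))


def fit_checks(p_value, cfi, rmsea_value, alpha=0.05):
    """Apply the three cut-offs named in the text: nonsignificant chi-square, CFI > 0.95, RMSEA < 0.06."""
    return {
        "chi2 nonsignificant": p_value > alpha,
        "CFI > 0.95": cfi > 0.95,
        "RMSEA < 0.06": rmsea_value < 0.06,
    }


# Fit statistics reported for the final SEM (chi2 = 121.10, df = 109, p = 0.20, CFI = 0.99), N = 160
r = rmsea(121.10, 109, 160)
print(f"RMSEA = {r:.3f}", fit_checks(p_value=0.20, cfi=0.99, rmsea_value=r))
```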

The measurement model shows a satisfactory fit to our data (χ2 = 114.90, df = 96, p = 0.09; CFI = 0.99; RMSEA = 0.04). We used this measurement model (CFA) to test convergent and discriminant validity. First, all standardized factor loadings and Cronbach’s alpha values of the latent constructs were above 0.80, indicating high reliability of our measurements. Second, we calculated the Average Variance Extracted (AVE) and Composite Reliability (CR) of the latent constructs (Bagozzi and Yi 1988; Fornell and Larcker 1981). The recommended thresholds are 0.50 for AVE and 0.60 for CR (Fornell and Larcker 1981). All latent constructs exceeded these values (opportunity to perform: AVE = 0.86, CR = 0.95; emotional creepiness: AVE = 0.86, CR = 0.95; organizational attractiveness: AVE = 0.87, CR = 0.95; technological affinity: AVE = 0.68, CR = 0.87). Additionally, to test for discriminant validity, we checked whether the square root of each construct’s AVE was greater than its correlations with the other variables (Chin 1998). In summary, the high factor loadings, reliabilities, and AVE values support the reliability of our measurements as well as their convergent and discriminant validity.
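AVE, CR, and the Fornell-Larcker comparison can be computed directly from standardized loadings and construct correlations. In the sketch below, the loadings are hypothetical; the AVEs are those reported above, and the correlations are the observed values given in Section 3.6, used only for illustration (the criterion is usually applied to latent correlations). The function names are hypothetical.

```python
import math


def ave(loadings):
    """Average Variance Extracted: mean of the squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - lam ** 2 for lam in loadings)
    return squared_sum / (squared_sum + error_variance)


def fornell_larcker_ok(ave_by_construct, correlations):
    """True if sqrt(AVE) of both constructs exceeds |r| for every construct pair."""
    return all(
        math.sqrt(ave_by_construct[a]) > abs(r) and math.sqrt(ave_by_construct[b]) > abs(r)
        for (a, b), r in correlations.items()
    )


loadings = [0.90, 0.93, 0.95]  # hypothetical standardized loadings of a three-item scale
print(round(ave(loadings), 2), round(composite_reliability(loadings), 2))

# Reported AVEs and the observed correlations from Section 3.6 (illustration only)
aves = {"OTP": 0.86, "EC": 0.86, "OA": 0.87}
corrs = {("OTP", "OA"): 0.75, ("EC", "OA"): -0.67, ("EC", "OTP"): -0.57}
print(fornell_larcker_ok(aves, corrs))
```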

3.6 Descriptive statistics

Table 1 shows the means, standard deviations, and correlations of our variables.

AI-support in video interviews was negatively correlated with organizational attractiveness and positively correlated with emotional creepiness, whereas AI-support in preselection and in telephone interviews was uncorrelated with these variables. Furthermore, the highest positive correlation was between the opportunity to perform and organizational attractiveness (r = 0.75), and emotional creepiness was negatively correlated with both organizational attractiveness (r = −0.67) and opportunity to perform (r = −0.57). A possible explanation for these high correlations is the fictional, written description of the recruitment process. We expected high correlations because participants had to infer from their perceptions of the recruitment process how attractive the company was to them. Without any additional information, the perceptions and evaluation of the recruitment process become the most important predictors of organizational attractiveness. Our inspection of discriminant validity and the low VIF values limit the threat of multicollinearity and indicate that participants did not treat the constructs as identical.
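The text does not say how the VIF values were obtained. One standard route, sketched here, reads them off the diagonal of the inverted correlation matrix of the predictors. The example treats opportunity to perform and emotional creepiness as joint predictors of organizational attractiveness and uses their observed correlation of r = −0.57 from above; it is an illustration, not the authors' computation, and the helper names are hypothetical. For two predictors, the result reduces to 1 / (1 − r²), about 1.48.

```python
def invert(matrix):
    """Invert a square matrix with Gauss-Jordan elimination and partial pivoting."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [float(i == j) for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vifs(corr):
    """VIF of each predictor = corresponding diagonal element of the inverse correlation matrix."""
    inv = invert(corr)
    return [inv[i][i] for i in range(len(corr))]


# Opportunity to perform and emotional creepiness as joint predictors (r = -0.57)
print([round(v, 2) for v in vifs([[1.0, -0.57], [-0.57, 1.0]])])
```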

3.7 Modeling procedure

Before we tested our hypothesized research model, we estimated three different SEM models to evaluate and specify the influence of our control variables. Besides our hypothesized relationships and the error covariance between the two mediators, model 1 contained correlations between all exogenous variables (χ2 = 138.64, df = 111, p = 0.04; CFI = 0.99; RMSEA = 0.04). Model 2 additionally contained direct paths from all control variables to the opportunity to perform and emotional creepiness (χ2 = 118.71, df = 103, p = 0.14; CFI = 0.99; RMSEA = 0.03). Model 3 is a reduction of model 2: it kept the direct paths from technological affinity to the opportunity to perform and emotional creepiness but fixed all paths from gender, age, and educational qualifications to the mediators to zero (χ2 = 121.10, df = 109, p = 0.20; CFI = 0.99; RMSEA = 0.03). Because all estimates in model 3 were equivalent to those in model 2, we designated model 3 as the final SEM: its fit was slightly better, and it was more restrictive concerning the relations between personal characteristics (age, gender, and educational background) and the mediators. Finally, Swain-corrected estimators of the fit measures of model 3 supported our choice of model 3 as the final SEM despite the small sample size (Swain-corrected: χ2 = 115.34, p = 0.32; RMSEA = 0.02).
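Because model 3 is nested in model 2 (it fixes six paths to zero, so Δdf = 109 − 103 = 6), the two models can also be compared with a chi-square difference (likelihood-ratio) test. The following is a minimal sketch using the fit statistics reported above, assuming maximum-likelihood χ2 values; it is an illustration, not an analysis reported in this study. The closed-form tail probability used here is valid only for even degrees of freedom, which holds for this comparison.

```python
import math


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) of a chi-square variable with even df,
    via the closed form exp(-x/2) * sum_{j=0}^{df/2 - 1} (x/2)^j / j!.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form requires a positive, even df")
    half = x / 2.0
    term, total = 1.0, 1.0  # j = 0 term
    for j in range(1, df // 2):
        term *= half / j  # (x/2)^j / j!, built up incrementally
        total += term
    return math.exp(-half) * total


def chi2_difference_test(chi2_restricted: float, df_restricted: int,
                         chi2_full: float, df_full: int):
    """Compare nested models; the restricted model has more df.

    Returns (delta_chi2, delta_df, p).
    """
    delta_chi2 = chi2_restricted - chi2_full
    delta_df = df_restricted - df_full
    return delta_chi2, delta_df, chi2_sf_even_df(delta_chi2, delta_df)


# Reported fit of model 3 (restricted) and model 2 (full).
d_chi2, d_df, p = chi2_difference_test(121.10, 109, 118.71, 103)
print(f"delta chi2 = {d_chi2:.2f}, delta df = {d_df}, p = {p:.2f}")
```

Here Δχ2 = 2.39 with 6 df gives p ≈ 0.88: fixing the paths from gender, age, and educational qualifications to zero does not significantly worsen fit, which is consistent with retaining the more restrictive model 3.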

3.8 Results of the SEM

The SEM shows a satisfactory model fit to our data (χ2 = 121.10, df = 109, p = 0.20; CFI = 0.99; RMSEA = 0.03). Figure 2 and Table 2 show the results of our experimental SEM. First, while AI-support in preselection did not influence the opportunity to perform (p > 0.05), AI-support in both the telephone interview and the video interview diminished the opportunity to perform (p < 0.05). Thus, AI-support in preselection seems equivalent to a purely human evaluation in terms of the opportunity to perform, whereas AI-support in later stages of the recruitment process decreases the opportunity to perform, which partially supports our hypothesis 1. Second, AI-support in preselection also did not influence emotional creepiness (p > 0.05), whereas AI-support in both telephone interviews and video interviews was positively associated with emotional creepiness (p < 0.01). Again, AI-support in later stages of the recruitment process increases emotional creepiness, while AI-support in preselection is similar to a purely human evaluation concerning emotional creepiness, which only partially supports our hypothesis 2. Third, the opportunity to perform increased organizational attractiveness (p < 0.01), while emotional creepiness was negatively associated with organizational attractiveness (p < 0.01). These findings support our hypotheses 3a and 3b. Fourth, AI-support in later stages of the recruitment process negatively influenced organizational attractiveness via the two mediators, whereas AI-support in preselection did not indirectly affect organizational attractiveness. These findings partially support hypotheses 4a and 4b.
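The RMSEA values reported in this section can be reproduced from χ2, df, and N with the common point-estimate formula RMSEA = √(max(χ2 − df, 0) / (df · (N − 1))). The following is a minimal sketch, assuming N = 160 (the full sample) for every model and the N − 1 variant of the formula; some software divides by N instead.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of the root mean square error of approximation."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# (chi2, df) pairs as reported for the measurement model and SEM variants.
reported = {
    "measurement model": (114.90, 96),
    "model 1": (138.64, 111),
    "model 2": (118.71, 103),
    "model 3 (final SEM)": (121.10, 109),
    "model 3, Swain-corrected": (115.34, 109),
}
for name, (chi2, df) in reported.items():
    print(f"{name}: RMSEA = {rmsea(chi2, df, n=160):.2f}")
```

All five estimates round to the reported values (0.04, 0.04, 0.03, 0.03, and 0.02). Note that the Swain correction enters only through the corrected χ2; the formula itself is unchanged.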

In addition to our hypothesized relationships, we also tested (a) the direct influence of technological affinity and (b) possible moderating effects of technological affinity on the relationships between our treatments and the mediators. First, technological affinity was positively associated with the opportunity to perform (p < 0.01) and negatively associated with emotional creepiness (p < 0.01). Second, given its influence on the mediators, technological affinity could also moderate the relationships between the treatments and the mediator variables. Thus, we ran OLS regression analyses with the opportunity to perform and emotional creepiness as dependent variables, including interaction terms between technological affinity and our treatment variables (Cohen et al. 2013). However, the results showed that technological affinity did not moderate any of the relationships between our treatment variables and the proposed mediators.
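The moderation test described above amounts to adding treatment × technological-affinity product terms to an OLS regression of each mediator and inspecting the t-values of those terms. The following is a minimal NumPy sketch on simulated data. Its assumptions are labeled: the dummy coding of scenarios 2 to 4 against the human-only scenario, the 40 participants per scenario, the effect sizes, and the mean-centering of the moderator are illustrative choices, not details taken from this study.

```python
import numpy as np


def ols(X: np.ndarray, y: np.ndarray):
    """OLS coefficients, standard errors, and t-values (X includes an intercept)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ beta
    dof = X.shape[0] - X.shape[1]
    sigma2 = residuals @ residuals / dof
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
    return beta, se, beta / se


rng = np.random.default_rng(seed=1)
n_per_group = 40  # hypothetical balanced design
scenario = np.repeat(np.arange(4), n_per_group)  # 0 = human only (reference)
affinity = rng.normal(4.0, 1.0, size=scenario.size)  # hypothetical scale scores
affinity_c = affinity - affinity.mean()  # mean-centered moderator

# Dummy codes for scenarios 2-4 (AI in preselection, telephone, video).
dummies = np.column_stack([(scenario == k).astype(float) for k in (1, 2, 3)])
interactions = dummies * affinity_c[:, None]  # treatment x affinity products

# Simulated mediator with main effects only (no true moderation).
y = (5.0 - 0.8 * dummies[:, 1] - 0.9 * dummies[:, 2]
     + 0.4 * affinity_c + rng.normal(0.0, 1.0, size=scenario.size))

X = np.column_stack([np.ones(scenario.size), dummies, affinity_c, interactions])
names = ["intercept", "AI_pre", "AI_tel", "AI_video", "affinity",
         "AI_pre x affinity", "AI_tel x affinity", "AI_video x affinity"]
beta, se, t = ols(X, y)
for name, b, tv in zip(names, beta, t):
    print(f"{name:>20}: b = {b:6.2f}, t = {tv:6.2f}")
```

Mean-centering the moderator makes each treatment coefficient interpretable as the effect at average technological affinity; it does not change the interaction estimates or their t-values.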

3.9 Robustness check

Whereas candidates immediately notice the computer system during a telephone or video interview, companies might choose not to communicate their use of AI-support when screening applications. In that case, AI-support in preselection remains completely invisible to candidates. This raises the question of how candidates react to AI-support in preselection if they do not know about it during the recruitment process but learn about it later from other sources, in our case from a newspaper. To answer this question, we conducted a robustness check.

3.9.1 Sample and procedure

Participants in the pre-post design of the robustness check were a sub-sample of the initial study; their demographic characteristics therefore correspond to those of the initial sample. The N = 40 participants had been assigned to the first scenario, which did not contain any AI-support during the recruitment process. To ensure that the treatment in the robustness check did not compromise the results of the initial study, participants received it only after they had completed the full questionnaire. Immediately after the last item, we gave them a newspaper article about the company Marzeo (i.e., the post-stimulus).

3.9.2 Newspaper article

We designed the newspaper article to resemble a real article in a daily newspaper (see the “Appendix” for the newspaper article). In this article, journalists uncover that Marzeo uses AI-support to evaluate and rank all applications and to analyze applicants’ voices and spoken words during the telephone interview. Finally, a Marzeo spokesperson admits that the company uses AI-support during recruitment but states that humans make the final decision about each applicant. After reading the article, participants of the robustness check again answered questions about the company concerning the fairness of the recruitment process, trust towards the company, and organizational attractiveness.

3.9.3 Measures

Given the pre-post design, we measured all items both before participants read the newspaper article (t1) and after they had read it (t2).

Procedural justice This variable was measured with three items from Bauer et al. (2001), e.g., “I think that the application process is a fair way to select applicants for the job.” Cronbach’s alpha of the scale was 0.98.

Trust We measured trust with four items from Walsh and Beatty (2007), originally used in Morgan and Hunt (1994), e.g., “The company Marzeo can generally be trusted.” Cronbach’s alpha of the scale was 0.97.

Organizational attractiveness This variable was measured as described in Sect. 3.4. Cronbach’s alpha of the scale was 0.96.
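The Cronbach’s alpha values reported for these scales follow the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the sum score), where k is the number of items. The following is a minimal standard-library sketch; the five respondents’ answers to a three-item, 7-point scale are hypothetical and serve only to show the computation.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for k items.

    `items` holds one sequence of responses per item, all of equal length
    (one entry per respondent):
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(sum scores)).
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(responses) for responses in zip(*items)]  # per-respondent sum
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical responses of five participants to a three-item scale.
procedural_justice_items = [
    [6, 5, 7, 3, 4],
    [6, 4, 7, 3, 5],
    [5, 5, 6, 2, 4],
]
print(round(cronbach_alpha(procedural_justice_items), 2))
```

Because the sum-score variance grows with the covariances among items, values close to 1, such as those reported here, indicate that the items move almost in lockstep.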

3.9.4 Results

The results of the pre-post design are depicted in Fig. 3. We used paired-samples t-tests to examine whether the differences in the means of the three variables were significant. First, procedural justice was significantly lower [t(39) = 4.62, p < 0.01] after the newspaper article (M = 4.08, SD = 1.50) than before it (M = 5.25, SD = 1.31). Second, trust was significantly lower [t(39) = 4.36, p < 0.01] after the article (M = 3.80, SD = 1.31) than before it (M = 4.71, SD = 1.14). Third, organizational attractiveness was also significantly lower [t(39) = 4.89, p < 0.01] after the article (M = 3.94, SD = 1.21) than before it (M = 4.63, SD = 1.08).
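A paired-samples t-test operates on the within-person differences d = t1 − t2: t = mean(d) / (sd(d) / √n), with n − 1 degrees of freedom. For this reason, the t-values above cannot be recomputed from the reported pre and post means and SDs alone, because that would also require the correlation between the two measurement points. The following is a minimal standard-library sketch; the four participants’ ratings are hypothetical.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(before: Sequence[float], after: Sequence[float]) -> Tuple[float, int]:
    """Return (t, df) for a paired-samples t-test on before - after."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [b - a for b, a in zip(before, after)]  # within-person change
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical trust ratings of four participants at t1 and t2.
t1 = [5, 6, 7, 8]
t2 = [4, 5, 5, 7]
t_value, df = paired_t(t1, t2)
print(f"t({df}) = {t_value:.2f}")
```

With n = 40, as in the robustness check, the test has 39 degrees of freedom, matching the t(39) values reported above.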

4 Discussion and conclusion

4.1 Discussion

This study aimed to investigate a combination of selection tools with AI-support in preselection as well as in telephone and video interviews in later stages of the selection process. The findings showed that AI-support in later stages of the selection process reduced the opportunity to perform and increased emotional creepiness. Furthermore, we found support for our hypothesized mediation of the relationship between AI-support and organizational attractiveness via the opportunity to perform and emotional creepiness. These results corroborate previous findings that link the use of AI in asynchronous telephone and video interviews to negative fairness perceptions (Acikgoz et al. 2020; Langer et al. 2020; Nørskov et al. 2020).

However, when the potential employer openly communicated the use of AI-support during screening and preselection, we found no negative influence of AI-support in preselection on the opportunity to perform, emotional creepiness, or organizational attractiveness. Thus, applicants seem to accept a certain degree of AI-support during the selection process. One possible reason for this finding is that candidates do not expect a high degree of interpersonal treatment at the beginning of the selection process. Moreover, although candidates did not know how the AI weighs certain personal characteristics, this did not yield negative affective responses to AI-support. Nevertheless, the robustness check showed that hiding the use of AI during preselection can lead to negative applicant reactions if candidates find out about AI-support from other sources, even after the recruitment process has ended. Hiding AI-support during preselection can therefore harm the organization if well-fitting candidates withdraw from the recruitment process or decline a job offer.

In later stages of the recruitment process, AI-based interviews in particular seem to give applicants the feeling that they cannot present themselves sufficiently. Impression management is an important aspect of interviews (Blacksmith et al. 2016), and candidates’ expectations are not met if they cannot present themselves as they would in synchronous situations. Unlike in an AI-based telephone or video interview, which is only recorded, applicants in in-person interviews can perceive the interviewer’s (unintended) feedback and react to it immediately (Langer et al. 2017). Moreover, our results corroborate previous findings that AI triggers a feeling of creepiness during the selection process (Langer et al. 2017) and that applicants seem to feel uncomfortable being evaluated by AI and may even find the technology frightening (Tene and Polonetsky 2013).

4.2 Theoretical implications

Our study makes important contributions to the literature. First, and most importantly, it advances our understanding of candidate acceptance of AI systems at different stages of the selection process when the final decision about candidates remains with humans. Most previous studies focused on a single selection instrument (e.g., video analysis), so the literature has neglected affective responses to the different stages of the recruitment process.

Second, we shed light on the emotional mechanisms that help explain candidate acceptance of AI-based selection tools. This study shows that not only general fairness but also the opportunity to perform and emotional creepiness play an important role in explaining candidate reactions. By examining applicants’ emotional responses, we answer several calls for research that better explains these responses (e.g., Hiemstra et al. 2019; Lukacik et al. 2020; Noble et al. 2021).

4.3 Practical implications

Companies that decide to conduct parts of their recruitment process with the help of AI should consider the potential adverse effects of AI-support, especially for telephone or video analysis. We found that AI-support in screening seems acceptable to applicants, but that acceptance decreases when AI is used for telephone or video analysis. More specifically, our results show that application processes with AI-support in later stages of the recruitment process do not give applicants sufficient opportunity to present themselves and demonstrate their particular abilities. Companies should avoid using AI-based video and telephone interviews without explaining and communicating the new situation to their applicants (Langer et al. 2020; van Esch et al. 2019). By contrast, our results suggest that companies can use ADM-based CV screening without provoking negative applicant reactions, provided that they disclose its use.

Moreover, a lack of transparency might further reduce the opportunity to perform and heighten emotional creepiness; companies using these technologies could therefore take care to explain how they work and how they support human decisions. However, it remains an open question to what extent emotional creepiness will decrease as applicants become more accustomed to these technologies in the application process. As an interim solution, companies could combine AI-based selection tools with human-assisted process steps. Furthermore, companies using AI-based selection tools should monitor whether their use leads candidates to withdraw from the selection process.

Finally, companies should not try to hide their use of AI-based selection methods, since applicants' perceptions of justice, trust towards the company, and organizational attractiveness decrease if applicants learn about it afterward. Moreover, van Esch et al. (2019) showed that companies do not need to hide their use of AI for fear of losing potential applicants.

4.4 Limitations and future research

First, scenarios offer an excellent way to investigate perceptions, feelings, attitudes, and behaviors in real-life situations (Taylor 2005). However, the experimental design did not give participants first-hand experience of actual application contexts. Thus, future studies could examine applicants’ reactions in real application contexts.

Second, due to the increasing use of AI systems in HRM, applicants will become more familiar with these new selection tools, which in turn might increase the general acceptance of AI in the future. Future studies could repeat the experiment to examine whether the negative affective responses diminish over time.

Third, the data were collected in Germany. Germany has specific characteristics concerning its culture (see Hofstede and Minkov (2010) for the cultural profile), labor market (employee organization; high industrial productivity; strict labor legislation), data protection regulations, and degree of digitization that might limit the generalizability of the results to other cultural environments and labor markets. In countries with a higher degree of digitization than Germany (Cámara and Tuesta 2017), the influence of AI-support on affective responses during the selection process could be weaker. However, several studies conducted in countries with a higher degree of digitization than Germany (e.g., the United States or Denmark) showed similar results regarding the perception of asynchronous video interviews (e.g., Acikgoz et al. 2020; Nørskov et al. 2020). Moreover, a recent study by Griswold et al. (2021) used a large international sample to investigate whether there are cultural differences in reactions to asynchronous video interviews. The results show that only three dimensions of national culture (Hofstede and Minkov 2010), namely uncertainty avoidance, long-term orientation, and indulgence, have small to moderate moderating effects on reactions to asynchronous video interviews. Nevertheless, future research could test our research model in other countries to establish its generalizability to other cultures and institutional environments.

Fourth, we do not have information about the ethnicity of the participants, because asking about ethnicity is neither common nor considered appropriate in Germany. However, it would be interesting to know whether, for example, people of color perceive AI-support differently, since AI is often expected to eliminate human bias. Yet several studies have shown that human prejudices can be embedded in algorithmic systems (e.g., Buolamwini and Gebru 2018; Köchling et al. 2020; Persson 2016; Yarger et al. 2019). It would therefore be interesting to learn how applicants perceive AI in this respect and whether they are aware that algorithms and AI can also discriminate on the basis of ethnicity.

4.5 Conclusion

This paper aimed to raise awareness of possible adverse affective responses to AI-support at different stages of recruitment and selection. Since research on applicants’ acceptance of AI tools in selection is still in its infancy, this study examined at which steps of the selection process candidates accept AI-support, provided that the final decision remains with humans. We find that applicants accept AI-support for CV and résumé screening as long as they know about the use of AI upfront, but that their acceptance decreases for AI-support in telephone and video interviews. Our results emphasize the importance of considering affective responses to these new technologies and warn companies against applying AI tools without considering the perceptions of their candidates. Our results also open several avenues for future research in this area.

Availability of data and material

All material is available upon request.

Code availability

The code is available upon request.

Notes

We would like to thank Lena Evertz, Rouven Kollitz, and Stefan Süß for allowing us to use Marzeo as a fictitious company.

References

Acikgoz Y, Davison KH, Compagnone M, Laske M (2020) Justice perceptions of artificial intelligence in selection. Int J Sel Assess 28(4):399–416. https://doi.org/10.1111/ijsa.12306

Agarwal R, Prasad J (1998) A conceptual and operational definition of personal innovativeness in the domain of information technology. Inf Syst Res 9(2):204–215. https://doi.org/10.1287/isre.9.2.204

Aguinis H, Bradley KJ (2014) Best practice recommendations for designing and implementing experimental vignette methodology studies. Org Res Methods 17(4):351–371. https://doi.org/10.1177/1094428114547952

Aiman-Smith L, Bauer TN, Cable DM (2001) Are you attracted? Do you intend to pursue? A recruiting policy-capturing study. J Bus Psychol 16(2):219–237. https://doi.org/10.1023/A:1011157116322

Ajzen I (1991) The theory of planned behavior. Organ Behav Hum Decis Process 50(2):179–211. https://doi.org/10.1016/0749-5978(91)90020-T

Anderson N (2003) Applicant and recruiter reactions to new technology in selection: a critical review and agenda for future research. Int J Sel Assess 11(2–3):121–136. https://doi.org/10.1111/1468-2389.00235

Anderson JC, Gerbing DW (1988) Structural equation modeling in practice: a review and recommended two-step approach. Psychol Bull 103(3):411

Arbuckle JL (2014) Amos (Version 23.0). In: IBM SPSS.

Bagozzi RP, Yi Y (1988) On the evaluation of structural equation models. J Acad Mark Sci 16(1):74–94. https://doi.org/10.1007/BF02723327

Barber LK, Barnes CM, Carlson KD (2013) Random and systematic error effects of insomnia on survey behavior. Org Res Methods 16(4):616–649. https://doi.org/10.1177/1094428113493120

Bauer TN, Truxillo DM, Sanchez RJ, Craig JM, Ferrara P, Campion MA (2001) Applicant reactions to selection: development of the selection procedural justice scale (SPJS). Pers Psychol 54(2):387–419. https://doi.org/10.1111/j.1744-6570.2001.tb00097.x

Bauer TN, Truxillo DM, Tucker JS, Weathers V, Bertolino M, Erdogan B, Campion MA (2006) Selection in the information age: The impact of privacy concerns and computer experience on applicant reactions. J Manag 32(5):601–621. https://doi.org/10.1177/0149206306289829

Blacksmith N, Willford JC, Behrend TS (2016) Technology in the employment interview: a meta-analysis and future research agenda. Person Assess Decis 2(1):2. https://doi.org/10.25035/pad.2016.002

Bollen KA (1989) A new incremental fit index for general structural equation models. Sociol Methods Res 17(3):303–316. https://doi.org/10.1177/0049124189017003004

Browne MW, Cudeck R (1993) Alternative ways of assessing model fit. In: Bollen KA, Long JS (eds) Testing structural equation models. Sage, Newbury Park

Buolamwini J, Gebru T (2018) Gender shades: Intersectional accuracy disparities in commercial gender classification. In: Conference on fairness, accountability and transparency

Cámara N, Tuesta D (2017) DiGiX: the digitization index. BBVA Bank, Economic Research Department

Chapman DS, Uggerslev KL, Carroll SA, Piasentin KA, Jones DA (2005) Applicant attraction to organizations and job choice: a meta-analytic review of the correlates of recruiting outcomes. J Appl Psychol 90(5):928. https://doi.org/10.1037/0021-9010.90.5.928

Chin WW (1998) The partial least squares approach to structural equation modeling. In: Marcoulides GA (ed) Modern methods for business research. Lawrence Erlbaum Associates, Mahwah, pp 295–336

Clore GL, Schnall S (2005) The influence of affect on attitude. In: The handbook of attitudes. Lawrence Erlbaum Associates Publishers, pp 437–489

Cohen J (1988) Statistical power analysis for the behavioral sciences, 2nd edn. Lawrence Erlbaum Associates, Hillsdale

Cohen J, Cohen P, West SG, Aiken LS (2013) Applied multiple regression/correlation analysis for the behavioral sciences. Routledge, New York

Devlin H (2020) AI systems claiming to ‘read’ emotions pose discrimination risks. The Guardian. https://amp.theguardian.com/technology/2020/feb/16/ai-systems-claiming-to-read-emotions-pose-discrimination-risks

Emons WHM, Meijer RR, Denollet J (2007) Negative affectivity and social inhibition in cardiovascular disease: Evaluating type-D personality and its assessment using item response theory. J Psychosom Res 63(1):27–39. https://doi.org/10.1016/j.jpsychores.2007.03.010

Evertz L, Kollitz R, Süß S (2019) Electronic word-of-mouth via employer review sites–the effects on organizational attraction. Int J Hum Resour Manag. https://doi.org/10.1080/09585192.2019.1640268

Faul F, Erdfelder E, Buchner A, Lang A-G (2009) Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behav Res Methods 41(4):1149–1160. https://doi.org/10.3758/BF03193146

Ferràs-Hernández X (2018) The future of management in a world of electronic brains. J Manag Inq 27(2):260–263. https://doi.org/10.1177/1056492617724973

Fornell C, Larcker DF (1981) Structural equation models with unobservable variables and measurement error: algebra and statistics. J Mark Res 18(3):382–388

Fuller CM, Simmering MJ, Atinc G, Atinc Y, Babin BJ (2016) Common methods variance detection in business research. J Bus Res 69(8):3192–3198. https://doi.org/10.1016/j.jbusres.2015.12.008

Gilliland SW (1993) The perceived fairness of selection systems: an organizational justice perspective. Acad Manag Rev 18(4):694–734. https://doi.org/10.5465/amr.1993.9402210155

Gonzalez MF, Capman JF, Oswald FL, Theys ER, Tomczak DL (2019) “Where’s the I-O?” Artificial intelligence and machine learning in talent management systems. Person Assess Decis 5(3):5. https://doi.org/10.25035/pad.2019.03.005

Gosling SD, Rentfrow PJ, Swann WB (2003) A very brief measure of the Big-Five personality domains. J Res Person 37(6):504–528. https://doi.org/10.1016/S0092-6566(03)00046-1

Griswold KR, Phillips JM, Kim MS, Mondragon N, Liff J, Gully SM (2021) Global differences in applicant reactions to virtual interview synchronicity. Int J Hum Resour Manag. https://doi.org/10.1080/09585192.2021.1917641

Hausknecht JP, Day DV, Thomas SC (2004) Applicant reactions to selection procedures: an updated model and meta-analysis. Pers Psychol 57(3):639–683. https://doi.org/10.1111/j.1744-6570.2004.00003.x

Herzog W, Boomsma A (2009) Small-sample robust estimators of noncentrality-based and incremental model fit. Struct Equ Model Multi J 16(1):1–27

Hiemstra AM, Oostrom JK, Derous E, Serlie AW, Born MP (2019) Applicant perceptions of initial job candidate screening with asynchronous job interviews: Does personality matter? J Pers Psychol 18(3):138. https://doi.org/10.1027/1866-5888/a000230

Hofstede G, Minkov M (2010) Cultures and organizations: software of the mind. McGraw-Hill, New York

Hu L-T, Bentler PM (1998) Fit indices in covariance structure modeling: sensitivity to underparameterized model misspecification. Psychol Methods 3(4):424. https://doi.org/10.1037/1082-989X.3.4.424

Kaibel C, Mühlenbock M, Koch-Bayram I, Biemann T (2019) Wahrnehmung von KI – Was denken Mitarbeiter über ihre Anwendung und Fairness? Person Q 71(3):16–21

Kelly O (2019) Global talent market quarterly

Kline RB (2015) Principles and practice of structural equation modeling. Guilford publications, New York

Köchling A, Riazy S, Wehner MC, Simbeck K (2020) Highly accurate, but still discriminatory. Bus Inf Syst Eng. https://doi.org/10.1007/s12599-020-00673-w

Kung FYH, Kwok N, Brown DJ (2018) Are attention check questions a threat to scale validity? Appl Psychol 67(2):264–283. https://doi.org/10.1111/apps.12108

Langer M, König CJ (2018) Introducing and testing the creepiness of situation scale (CRoSS). Front Psychol 9:2220. https://doi.org/10.3389/fpsyg.2018.02220

Langer M, König CJ, Krause K (2017) Examining digital interviews for personnel selection: applicant reactions and interviewer ratings. Int J Sel Assess 25(4):371–382. https://doi.org/10.1111/ijsa.12191

Langer M, König CJ, Fitili A (2018) Information as a double-edged sword: the role of computer experience and information on applicant reactions towards novel technologies for personnel selection. Comput Hum Behav 81:19–30. https://doi.org/10.1016/j.chb.2017.11.036

Langer M, König CJ, Papathanasiou M (2019) Highly automated job interviews: acceptance under the influence of stakes. Int J Sel Assess. https://doi.org/10.1111/ijsa.12246

Langer M, König CJ, Hemsing V (2020) Is anybody listening? The impact of automatically evaluated job interviews on impression management and applicant reactions. J Manag Psychol. https://doi.org/10.1108/JMP-03-2019-0156

Langer M, König CJ (2017) Development of the Creepiness of Situation Scale – Study 3 convergent and divergent validity. Retrieved from https://osf.io/x4umb

Lee MK (2018) Understanding perception of algorithmic decisions: fairness, trust, and emotion in response to algorithmic management. Big Data Soc 5(1):2053951718756684. https://doi.org/10.1177/2053951718756684

Lee MK, Baykal S (2017) Algorithmic mediation in group decisions: fairness perceptions of algorithmically mediated vs. discussion-based social division. In: Proceedings of the 2017 ACM conference on computer supported cooperative work and social computing

Lievens F, Highhouse S (2003) The relation of instrumental and symbolic attributes to a company’s attractiveness as an employer. Pers Psychol 56(1):75–102. https://doi.org/10.1111/j.1744-6570.2003.tb00144.x

Lukacik E-R, Bourdage JS, Roulin N (2020) Into the void: a conceptual model and research agenda for the design and use of asynchronous video interviews. Hum Resour Manag Rev. https://doi.org/10.1016/j.hrmr.2020.100789

Maute MF, Dubés L (1999) Patterns of emotional responses and behavioural consequences of dissatisfaction. Appl Psychol 48(3):349–366. https://doi.org/10.1111/j.1464-0597.1999.tb00006.x

McColl R, Michelotti M (2019) Sorry, could you repeat the question? Exploring video-interview recruitment practice in HRM. Hum Resour Manag J 29(4):637–656. https://doi.org/10.1111/1748-8583.12249

Mirowska A (2020) AI evaluation in selection. J Pers Psychol 19(3):142–149. https://doi.org/10.1027/1866-5888/a000258

Morgan RM, Hunt SD (1994) The commitment-trust theory of relationship marketing. J Mark 58(3):20–38. https://doi.org/10.1177/002224299405800302

Newman DT, Fast NJ, Harmon DJ (2020) When eliminating bias isn’t fair: algorithmic reductionism and procedural justice in human resource decisions. Org Behav Hum Decis Process 160:149–167. https://doi.org/10.1016/j.obhdp.2020.03.008

Nikolaou I (2021) What is the role of technology in recruitment and selection? Span J Psychol. https://doi.org/10.1017/SJP.2021.6

Noble SM, Foster LL, Craig SB (2021) The procedural and interpersonal justice of automated application and resume screening. Int J Sel Assess. https://doi.org/10.1111/ijsa.12320

Nørskov S, Damholdt MF, Ulhøi JP, Jensen MB, Ess C, Seibt J (2020) Applicant fairness perceptions of a robot-mediated job interview: a video vignette-based experimental survey. Front Robot AI 7:163. https://doi.org/10.3389/frobt.2020.586263

Persson A (2016) Implicit bias in predictive data profiling within recruitments. In: IFIP international summer school on privacy and identity management

Podsakoff PM, MacKenzie SB, Lee J-Y, Podsakoff NP (2003) Common method biases in behavioral research: a critical review of the literature and recommended remedies. J Appl Psychol 88(5):879

Podsakoff PM, MacKenzie SB, Podsakoff NP (2012) Sources of method bias in social science research and recommendations on how to control it. Ann Rev Psychol 63:539–569. https://doi.org/10.1146/annurev-psych-120710-100452

Russell JA (2003) Core affect and the psychological construction of emotion. Psychol Rev 110(1):145–172. https://doi.org/10.1037/0033-295X.110.1.145

Ryan AM, Ployhart RE (2000) Applicants’ perceptions of selection procedures and decisions: a critical review and agenda for the future. J Manag 26(3):565–606. https://doi.org/10.1016/S0149-2063(00)00041-6

Rynes SL, Bretz RD Jr, Gerhart B (1991) The importance of recruitment in job choice: a different way of looking. Pers Psychol 44(3):487–521. https://doi.org/10.1111/j.1744-6570.1991.tb02402.x

Shadish WR, Cook TD, Campbell DT (2002) Experimental and quasi-experimental designs for generalized causal inference. Houghton Mifflin, Boston

Siemsen E, Roth A, Oliveira P (2010) Common Method bias in regression models with linear, quadratic, and interaction effects. Org Res Methods 13(3):456–476. https://doi.org/10.1177/1094428109351241

Spence M (1978) Job market signaling. In: Uncertainty in economics. Elsevier, pp 281–306. https://doi.org/10.1016/B978-0-12-214850-7.50025-5

Steenkamp J-BEM, De Jong MG, Baumgartner H (2010) Socially desirable response tendencies in survey research. J Mark Res 47(2):199–214. https://doi.org/10.1509/jmkr.47.2.199

Stone DL, Lukaszewski KM, Stone-Romero EF, Johnson TL (2013) Factors affecting the effectiveness and acceptance of electronic selection systems. Hum Resour Manag Rev 23(1):50–70. https://doi.org/10.1016/j.hrmr.2012.06.006

Suen H-Y, Chen MY-C, Lu S-H (2019) Does the use of synchrony and artificial intelligence in video interviews affect interview ratings and applicant attitudes? Comput Hum Behav 98:93–101. https://doi.org/10.1016/j.chb.2019.04.012

Swain AJ (1975) Analysis of parametric structures for variance matrices. Unpublished doctoral dissertation, Department of Statistics, University of Adelaide, Australia

Taylor BJ (2005) Factorial surveys: using vignettes to study professional judgement. Br J Soc Work 36(7):1187–1207. https://doi.org/10.1093/bjsw/bch345

Tene O, Polonetsky J (2013) A theory of creepy: technology, privacy and shifting social norms. Yale JL Tech 16:59

van Esch P, Black JS, Ferolie J (2019) Marketing AI recruitment: the next phase in job application and selection. Comput Hum Behav 90:215–222. https://doi.org/10.1016/j.chb.2018.09.009

Walsh G, Beatty SE (2007) Customer-based corporate reputation of a service firm: scale development and validation. J Acad Mark Sci 35(1):127–143. https://doi.org/10.1007/s11747-007-0015-7

Ward MK, Pond SB III (2015) Using virtual presence and survey instructions to minimize careless responding on Internet-based surveys. Comput Hum Behav 48:554–568. https://doi.org/10.1016/j.chb.2015.01.070

Wehner M, Giardini A, Kabst R (2015) Recruitment process outsourcing and applicant reactions: When does image make a difference? Hum Resour Manag 54(6):851–875. https://doi.org/10.1002/hrm.21640

Weiber R, Mühlhaus D (2014) Mehrgruppen-Kausalanalyse (MGKA). In: Strukturgleichungsmodellierung. Springer, pp 285–322

Williams LJ, McGonagle AK (2016) Four research designs and a comprehensive analysis strategy for investigating common method variance with self-report measures using latent variables. J Bus Psychol 31(3):339–359. https://doi.org/10.1007/s10869-015-9422-9

Woods SA, Ahmed S, Nikolaou I, Costa AC, Anderson NR (2020) Personnel selection in the digital age: a review of validity and applicant reactions, and future research challenges. Eur J Work Org Psychol 29(1):64–77. https://doi.org/10.1080/1359432X.2019.1681401

Yarger L, Payton FC, Neupane B (2019) Algorithmic equity in the hiring of underrepresented IT job candidates. Online Information Review

Zhang P (2013) The affective response model: a theoretical framework of affective concepts and their relationships in the ICT context. MIS Q 37(1):247–274

Funding

Open Access funding enabled and organized by Projekt DEAL. No funding was received for conducting this study.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest relevant to the content of this article to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

1.1 Hypothetical scenario

You find out that Marzeo AG has posted a job that exactly matches your skills and expectations for a position in your desired industry. You decide to apply for this position.

The job advertisement asks you to upload your application and contact details to the company's application portal. Shortly after you have uploaded your application, you receive an e-mail confirming its receipt:

| Scenario 1: Human-based selection process, no AI involvement | Scenario 2: AI in preselection | Scenario 3: AI in telephone interview | Scenario 4: AI in video interview |
|---|---|---|---|
| Subject: Acknowledgement of receipt of your application | Subject: Acknowledgement of receipt of your application | Subject: Acknowledgement of receipt of your application | Subject: Acknowledgement of receipt of your application |
| Dear Mr. / Mrs. (your name), | Dear Mr. / Mrs. (your name), | Dear Mr. / Mrs. (your name), | Dear Mr. / Mrs. (your name), |
| Thank you for your application and for the trust and interest you have shown in working for Marzeo AG. | Thank you for your application and for the trust and interest you have shown in working for Marzeo AG. | Thank you for your application and for the trust and interest you have shown in working for Marzeo AG. | Thank you for your application and for the trust and interest you have shown in working for Marzeo AG. |
| All applications received will be collected until the end of the application period. To guarantee a fair selection process, all applications will be reviewed and compared by our Human Resources staff after the application deadline. Afterward, our HR department will discuss the results together and make a final decision. | All applications are analyzed very precisely and in detail by an artificial intelligence. To guarantee a fair selection process, all applications are compared and evaluated by a software system based on artificial intelligence methods. Afterward, our HR department will discuss the results together and make a final decision. | All applications are analyzed very precisely and in detail by an artificial intelligence. To guarantee a fair selection process, all applications are compared and evaluated by a software system based on artificial intelligence methods. Afterward, our HR department will discuss the results together and make a final decision. | All applications are analyzed very precisely and in detail by an artificial intelligence. To guarantee a fair selection process, all applications are compared and evaluated by a software system based on artificial intelligence methods. Afterward, our HR department will discuss the results together and make a final decision. |
| We therefore ask for your patience and will get back to you as soon as possible. | We therefore ask for your patience and will get back to you as soon as possible. | We therefore ask for your patience and will get back to you as soon as possible. | We therefore ask for your patience and will get back to you as soon as possible. |
| With kind regards | With kind regards | With kind regards | With kind regards |
| Your Marzeo AG | Your Marzeo AG | Your Marzeo AG | Your Marzeo AG |
| Two weeks later, you receive the following e-mail: | Two weeks later, you receive the following e-mail: | Two weeks later, you receive the following e-mail: | Two weeks later, you receive the following e-mail: |
| Dear Mr. / Mrs. (your name), | Dear Mr. / Mrs. (your name), | Dear Mr. / Mrs. (your name), | Dear Mr. / Mrs. (your name), |
| Thank you for your application and the trust you have placed in our company. Your application made a positive impression on us. | Thank you for your application and the trust you have placed in our company. Your application made a positive impression on us. | Thank you once again for your application and the trust you have placed in our company. Your application made a positive impression on us. | Thank you once again for your application and the trust you have placed in our company. Your application made a positive impression on us. |
| We would be pleased to get to know you better. We would therefore like to invite you to a personal telephone interview with a member of the HR department next Wednesday at 10:30 a.m. Please briefly confirm whether you can keep this appointment. | We would be pleased to get to know you better. We would therefore like to invite you to a personal telephone interview with a member of the HR department next Wednesday at 10:30 a.m. Please briefly confirm whether you can keep this appointment. | We would be pleased to get to know you better, which is why we would like to invite you to an automated telephone interview based on artificial intelligence. | We would be pleased to get to know you better, which is why we would like to invite you to an automated video interview based on artificial intelligence. |
| | | To do this, simply log in to our application portal and click on "Link to the telephone interview" under the heading "My application process". This link provides an introduction and all the information you need to prepare. You can start the automated interview at any time within the next 7 days. | To do this, simply log in to our application portal and click on "Link to the video interview" under the heading "My application process". This link provides an introduction and all the information you need to prepare. You can start the automated interview at any time within the next 7 days. |
| | | We wish you good luck! | We wish you good luck! |
| With kind regards | With kind regards | With kind regards | With kind regards |
| Your Marzeo AG | Your Marzeo AG | Your Marzeo AG | Your Marzeo AG |
| On the same day, you confirm the proposed date for the telephone interview by e-mail. | On the same day, you confirm the proposed date for the telephone interview by e-mail. | The next day, you log in to the application portal and open the "Link to the telephone interview". It is explained to you once again that a software system using artificial intelligence will ask you questions, to which you respond individually. It is also explained that the artificial intelligence will create a personality profile based on your answers. This profile is then compared with the predefined requirements of the job and serves as a basis for decision-making by the employees in the HR department. To start the automated interview, all you have to do is dial the telephone number provided there. | The next day, you log in to the application portal and open the "Link to the video interview". It is explained to you once again that a software system using artificial intelligence will ask you questions, to which you respond individually by video. It is also explained that the artificial intelligence will create a personality profile based on your answers. This profile is then compared with the predefined requirements of the job and serves as a basis for decision-making by the employees in the HR department. To begin the automated video interview, all you have to do is click on "Start video interview". |
| During the telephone interview, the HR representative mainly asks you about your previous professional and academic history and your motivation for applying for this position. You are given enough time to answer the questions so that you can describe everything accurately from your point of view. After about 20 min, the interview is over and the HR representative promises to get back to you within the next week. | During the telephone interview, the HR representative mainly asks you about your previous professional and academic history and your motivation for applying for this position. You are given enough time to answer the questions so that you can describe everything accurately from your point of view. After about 20 min, the interview is over and the HR representative promises to get back to you within the next week. | | |
| | | In the automated telephone interview, the system mainly asks you about your previous professional and academic history and your motivation for applying for the job. You are given enough time to answer the questions so that you can describe everything accurately from your point of view. After about 20 min, the automated telephone interview is over and the system promises that someone will get back to you shortly. | In the automated video interview, the system mainly asks you about your previous professional and academic history and your motivation for applying for this job. You are given enough time to answer the questions so that you can describe everything accurately from your point of view. After about 20 min, the automated video interview is over and the system promises that someone will get back to you shortly. |
| After a week, you receive a call from Marzeo AG. You are told that the telephone interviews of all potential applicants for the advertised position have now been evaluated and that you have indeed been shortlisted for the position. | After a week, you receive a call from Marzeo AG. You are told that the telephone interviews of all potential applicants for the advertised position have now been evaluated and that you have indeed been shortlisted for the position. | After a week, you receive a call from Marzeo AG. You are told that the telephone interviews of all potential applicants for the advertised position have now been evaluated and that you have indeed been shortlisted for the position. | After a week, you receive a call from Marzeo AG. You are told that the video interviews of all potential applicants for the advertised position have now been evaluated and that you have indeed been shortlisted for the position. |
| For this reason, you are invited to a personal interview in the department to which you have applied. The interview takes place with two members of staff from the department and a member of the HR department. Among other things, you are asked why you are particularly suited for this position and what you see as your strengths and weaknesses. Afterward, you are asked a few technical questions and given ample opportunity to ask questions yourself. After just under an hour, the interview is over and you say goodbye to everyone involved. | For this reason, you are invited to a personal interview in the department to which you have applied. The interview takes place with two members of staff from the department and a member of the HR department. Among other things, you are asked why you are particularly suited for this position and what you see as your strengths and weaknesses. Afterward, you are asked a few technical questions and given ample opportunity to ask questions yourself. After just under an hour, the interview is over and you say goodbye to everyone involved. | For this reason, you are invited to a personal interview in the department to which you have applied. The interview takes place with two members of staff from the department and a member of the HR department. Among other things, you are asked why you are particularly suited for this position and what you see as your strengths and weaknesses. Afterward, you are asked a few technical questions and given ample opportunity to ask questions yourself. After just under an hour, the interview is over and you say goodbye to everyone involved. | For this reason, you are invited to a personal interview in the department to which you have applied. The interview takes place with two members of staff from the department and a member of the HR department. Among other things, you are asked why you are particularly suited for this position and what you see as your strengths and weaknesses. Afterward, you are asked a few technical questions and given ample opportunity to ask questions yourself. After just under an hour, the interview is over and you say goodbye to everyone involved. |
| On your way home, you reflect once again on all the impressions you have gathered about Marzeo AG throughout the application process and consider whether you could imagine working for this company. | On your way home, you reflect once again on all the impressions you have gathered about Marzeo AG throughout the application process and consider whether you could imagine working for this company. | On your way home, you reflect once again on all the impressions you have gathered about Marzeo AG throughout the application process and consider whether you could imagine working for this company. | On your way home, you reflect once again on all the impressions you have gathered about Marzeo AG throughout the application process and consider whether you could imagine working for this company. |

1.2 Newspaper article at the end of the questionnaire for participants in scenario 1 (no AI involvement)

Marzeo AG uses artificial intelligence for the selection of applicants