Abstract

This review paper discusses the developments in immersed or unfitted finite element methods over the past decade. The main focus is the analysis and the treatment of the adverse effects of small cut elements. We distinguish between adverse effects regarding the stability and adverse effects regarding the conditioning of the system, and we present an overview of the developed remedies. In particular, we provide a detailed explanation of Schwarz preconditioning, element aggregation, and the ghost penalty formulation. Furthermore, we outline the methodologies developed for quadrature and weak enforcement of Dirichlet conditions, and we discuss open questions and future research directions.

1 Introduction

Over the past decades, the finite element method (FEM) has become an essential tool in scientific research and engineering. In its standard form, the finite element method requires the construction of a mesh that fits the boundary of the considered geometry. For problems of practical interest, such a boundary-fitting mesh is constructed using mesh generators. Automatically generating boundary-fitting meshes can lack robustness, however, in the sense that manual intervention is often required to, for example, repair distorted elements or non-matching surfaces. This is particularly the case for very complex geometries, such as (possibly non-watertight) CAD objects with many patches and trimming curves, geometries presented in the form of scan data, or settings in which frequent remeshing is required (for example in fluid–structure interaction). For such problems, immersed methods have been shown to enable a more efficient analysis pipeline, as illustrated by the examples in Fig. 1.

The pivotal idea of immersed finite element methods is to embed a complex geometry into a geometrically simple ambient domain, on which a regular mesh can be built easily. Basis functions defined on this ambient-domain mesh are then restricted to the problem geometry, after which the solution is approximated by a linear combination of these restricted basis functions. Although this discretization is conceptually straightforward, various aspects (which are discussed below) require non-standard treatment because the mesh does not fit the boundaries of the geometry. Using a wide variety of techniques to treat these non-standard aspects, the concept of immersed finite element methods has successfully been applied in a broad range of fields, such as solid mechanics [2,3,4,5,6,7,8,9,10]; shell analysis [9,10,11,12,13,14,15]; interface problems [16,17,18,19,20,21,22,23,24,25]; fluid mechanics [26,27,28,29,30,31,32,33,34]; fluid–structure interaction [35,36,37,38,39,40,41,42,43], in particular fluid–structure interaction for biomedical applications [44,45,46,47]; scan-based analysis of both man-made and biological materials [48,49,50,51,52,53,54,55]; shape and topology optimization [56,57,58,59,60,61,62]; and many more.

Fig. 1 Examples of applications of immersed FEM. (a) Stress in an aluminum die-cast gearbox housing [63]; the geometry is implicitly defined from CT data of a product to investigate stress concentrations around pores. (b) Stress in a specimen of trabecular bone, rendered from CT data [64]

The immersed analysis concept was originally proposed in the context of the finite difference method by Peskin [65] in 1972. This immersed boundary method (IBM) and its enhancements have been employed in a wide range of applications ever since (see, e.g., Ref. [66] for a contemporary review). The application of the immersed element concept in the finite element setting can be traced back to the work on the partition of unity method [67, 68], generally referred to as the generalized or extended finite element method (GFEM [69, 70] or XFEM [71, 72]), where elements are cut in order to construct enrichment functions. The concept of cutting finite elements as an unfitted meshing technique was pioneered by Hansbo [73]. This work can be considered the first instance of an immersed finite element method. The pace of development and the impact of immersed finite element methods increased significantly with the introduction of the finite cell method (FCM) [2, 9, 74, 75], which combines the cut element concept with higher-order basis functions, and CutFEM [20, 26, 76,77,78], which generally employs the ghost penalty to enhance numerical stability [76, 79]. Besides these prominent immersed FEM techniques, other notable examples are the aggregated finite element method (AgFEM) [80, 81], the Cartesian grid finite element method (cgFEM) [57, 82], weighted extended B-splines (WEB-splines) [83, 84] and immersed B-splines (i-splines) [85]. Discontinuous Galerkin methods can readily be used on cut meshes after aggregation of cells, since they can be posed on polytopal meshes [86]. In recent years, the immersed analysis concept has been considered in conjunction with isogeometric analysis [87, 88] (often referred to as iga-FCM [9] or immersogeometric analysis [45]).
In this setting, immersed methods have been demonstrated to be capable of leveraging the advantageous properties of the spline basis functions used in isogeometric analysis, while enhancing the versatility of the simulation workflow for cases where boundary-fitting spline geometries are not readily available, e.g., in scan-based analyses.

Immersed finite element methods are typically confronted by three computational challenges in comparison to standard mesh-fitting finite elements, viz: (i) the numerical evaluation of integrals over cut elements; (ii) the imposition of (essential) boundary conditions over the immersed or unfitted boundaries; and (iii) the stability of the formulation in relation to small cut elements. A myriad of advanced techniques has been developed over the past decades to resolve these challenges, which has made immersed finite element techniques a competitive simulation strategy for a wide range of problems. This review focuses on the third challenge, i.e., the effects of small cut elements on the performance of immersed finite element methods. We restrict ourselves to a high-level consideration of the first two of these challenges, and we refer the reader to, e.g., Ref. [75] for a detailed review on these topics.

Small cut elements typically give rise to stability and conditioning problems. In this article we review three prominent methods to resolve these problems, which have been developed in recent years, viz: (Schwarz) preconditioning, ghost-penalty stabilization (commonly used in CutFEM), and aggregation of cut elements (commonly used in AgFEM). In the discussion of these techniques, it is important to note that small cut elements affect not only the conditioning of the problem, but also the accuracy of the solution. More specifically, direct application of Nitsche’s method for the weak imposition of essential boundary conditions requires a Nitsche parameter that scales inversely with the size (in particular the thickness) of the cut element. As observed in, e.g., [1], the unboundedness of this parameter can deteriorate the accuracy of the solution. When preconditioning techniques are used to resolve the small-cut-element problem, it is important to realize that they do not resolve this potential accuracy issue. In contrast, ghost penalty stabilization and aggregation ensure well-posedness with a Nitsche parameter inversely proportional to the ambient-domain mesh size, in addition to controlling the condition number. This makes immersed finite element approximations with these stabilization techniques robust with respect to the cut element configurations, and preserves the error estimates of boundary-fitted finite element methods. Besides ghost penalty stabilization and aggregation, several other techniques have been developed to resolve the stability problems on small cut elements. In general, however, these do not simultaneously resolve the conditioning problem. An overview of these techniques is also presented in this review.

This review article has three objectives, viz: (i) to clarify that the small-cut-element problem is multi-faceted; (ii) to present the different techniques in an accessible theoretical framework, so that the implications of practical choices become apparent to a non-expert audience; and (iii) to provide a comparison of the techniques for conditioning and stabilization of immersed finite element methods. With the large number of immersed finite element techniques available comes the luxury problem of choosing which technique is most suitable in a particular situation. The rigorous theoretical underpinning of the methods reviewed in this article is instrumental in this selection, as it provides a fundamental understanding of the relation between small cut elements, conditioning, stability, and accuracy.

It should be noted that immersed methods are not the only techniques to create a robust workflow to deal with complex or implicitly defined geometries. One alternative is the shifted boundary method [89,90,91,92]. The shifted boundary method aims to replace an immersed problem with a similar boundary-fitted problem on the interior element mesh, by projecting boundary conditions from the real (unfitted) boundary to the interior element boundaries. This concept was already introduced in [93], and is also applied in [62]. This method bypasses the aforementioned three computational challenges of immersed finite element methods, but instead introduces other challenges, such as a non-trivial treatment of boundary conditions (including the projection of the boundary data), and a non-obvious geometrical treatment. Another approach is to use hybridizable techniques on unfitted meshes [94, 95]. Hybridizable methods can naturally be posed on polytopal meshes, giving additional geometrical flexibility compared to standard finite element methods. As a result, these methods can readily be used on the meshes obtained after the intersection of the boundary representation and background mesh and possibly after aggregation of elements. The impact of small cut elements and small cut faces (these schemes add unknowns on the mesh skeleton) on stability and condition numbers has only been studied very recently in the context of hybrid high-order methods, see [95]. A detailed discussion of these alternative techniques is beyond the scope of this work.

This article is structured as follows. In Sect. 2 we introduce the basic formulation based on a model problem and discuss the developments regarding the three computational challenges associated with immersed finite element methods. In Sect. 3 a compact analysis of the ill-conditioning problem is presented, and Schwarz preconditioning is discussed as a natural technique to resolve this problem. Section 4 then considers stabilization techniques, specifically the ghost-penalty method and aggregation technique, and presents a theoretical framework required to analyze the stability and conditioning properties of these techniques. A discussion on the current state of the field and concluding remarks are finally presented in Sect. 5. Note that the results presented in this manuscript are reproduced from previous publications by the authors, which are referenced in the text or in the captions.

2 Immersed Finite Element Methods

In this section we introduce the immersed finite element framework. Section 2.1 presents the concept of immersed finite element methods and specifies the formulations based on a model problem. In Sect. 2.2 the most prominent challenges of immersed finite element methods, compared to standard boundary-fitted finite element methods, are discussed.

2.1 Formulations

As a model problem, we consider the Poisson equation on the domain \(\Omega \subset \mathbb {R}^{d}\), with \(d\in \{2,3\}\) being the number of spatial dimensions (Fig. 2). The domain \(\Omega \) has a boundary \(\partial \Omega \) with outward-pointing unit normal vector n. The boundary consists of complementary parts \(\partial \Omega _D\) and \(\partial \Omega _N\) on which Dirichlet (or essential) and Neumann (or natural) conditions are prescribed with boundary data \(g_D\) and \(g_N\), respectively. The field variable \(u:\Omega \rightarrow \mathbb {R}\) is subject to the strong formulation

where \(\partial _n= n \cdot \nabla \) denotes the normal gradient operator.

In boundary-fitted finite elements, the domain \(\Omega \) is subdivided into elements T (generally at the expense of a geometrical error), which together comprise the mesh \(\mathcal {T}_h\) (Fig. 2b). The size of element T is denoted by \(h_T\) and a global mesh size parameter is defined as \(h = \textrm{max}_{T\in \mathcal {T}_h} h_T\). This manuscript only considers quasi-uniform discretizations, that is \(h_T \approx h\) \(\forall T \in \mathcal {T}_h\). On the mesh, shape functions are introduced, which form the basis of the approximate solution \(u_h\). Integration is performed via standard quadrature rules for polynomials on simplices or hypercubes. Homogeneous Dirichlet conditions are usually imposed strongly by removing the basis functions with support on \(\partial \Omega _D\). The span of the remaining basis functions is referred to as \(V_{h,0} \subset H^1_0(\Omega )\). The Bubnov-Galerkin finite element method imposes inhomogeneous boundary conditions through a so-called lifting function, \(\ell (g_D) \in H^1(\Omega )\), which is generally constructed using the removed basis functions. The Bubnov-Galerkin finite element formulation corresponding to the strong form (2.1) can then be condensed into

\[ \text{find } w_h \in V_{h,0} \text{ such that } a(w_h, v_h) = l(v_h) \quad \forall v_h \in V_{h,0}, \qquad u_h = w_h + \ell (g_D), \tag{2.2} \]

where the symmetric bilinear form \(a(w_h,v_h)=\int _\Omega \nabla w_h \cdot \nabla v_h \, \text {d}V\) is continuous and coercive on \(H^1_0(\Omega )\), and the linear form \(l(v_h)=\int _{\partial \Omega _N} g_N v_h \, \text {d}S-\int _\Omega \nabla \ell (g_D) \cdot \nabla v_h \, \text {d}V\) is also continuous on \(H^1_0(\Omega )\). These conditions are sufficient to guarantee well-posedness of problem (2.2), i.e., to find \(w_h \in V_{h,0}\) and hence \(u_h = w_h + \ell (g_D) \in H^1(\Omega )\), for sufficiently smooth boundary data [96, 97]. Since the bilinear form is symmetric and coercive, it induces the operator norm \(\Vert v_h \Vert ^2_{a} = a(v_h, v_h)\), which is identical to the \(H^1_0(\Omega )\)-seminorm for the boundary-fitted finite element formulation of the Poisson problem.

In immersed finite element methods, the domain \(\Omega \) is embedded into an ambient domain \(\mathcal {A}\) (Fig. 2c). Instead of generating a boundary-fitted partitioning of the domain \(\Omega \), the ambient domain is partitioned by the background mesh \(\mathcal {T}_h^\mathcal {A}\) (Fig. 2d). Since the ambient domain is geometrically simple, mesh generation is trivial. However, as the element boundaries do not coincide with the boundaries of the domain \(\Omega \), evaluating integrals requires dedicated procedures (see Sect. 2.2.1). Hence, the complexity of the geometry is essentially captured by the integration procedure, instead of by the mesh generation. This geometrical simplification also has implications for the Galerkin formulation.

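To specify the immersed finite element formulation, we define the active mesh \(\mathcal {T}_h\) as the set of elements T of the background mesh \(\mathcal {T}_h^\mathcal {A}\) which intersect the domain \(\Omega \), i.e.,

\[ \mathcal {T}_h = \{ T \in \mathcal {T}_h^\mathcal {A} : T_\Omega \neq \emptyset \}, \tag{2.3} \]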

where \(T_\Omega = T \cap \Omega \). Furthermore, the active domain is defined as the union of all the active elements \(\Omega _h = \cup _{T \in \mathcal {T}_h} T \supseteq \Omega \). The set of active elements that are cut by (and therefore intersect) the boundary is defined as

and the set of internal elements that are fully supported on the domain \(\Omega \) is defined as

Analogous to the boundary-fitted case, shape functions are introduced on the active mesh \(\mathcal {T}_h\) and an approximate solution \(u_h\) is formed as a linear combination of the restrictions of the shape functions to \(\Omega \). The space spanned by these functions is referred to as \(V_h\). In contrast to the boundary-fitted finite element formulation, in the unfitted setting it is generally not feasible to construct the finite-dimensional subspace \(V_{h,0} \subset H^1_0(\Omega )\) that is needed to strongly impose Dirichlet conditions. Instead, Dirichlet conditions are generally imposed weakly. The most common approach for weakly enforcing Dirichlet boundary conditions is Nitsche’s method [98], which gives rise to the immersed finite element formulation

\[ \text{find } u_h \in V_h \text{ such that } a_h(u_h, v_h) = l_h(v_h) \quad \forall v_h \in V_h, \tag{2.6} \]

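where the bilinear and linear forms are defined as

\[ \begin{aligned} a_h(w_h, v_h) &= \int _\Omega \nabla w_h \cdot \nabla v_h \, \text {d}V - \int _{\partial \Omega _D} \partial _n w_h \, v_h \, \text {d}S - \int _{\partial \Omega _D} w_h \, \partial _n v_h \, \text {d}S + \int _{\partial \Omega _D} \beta \, w_h v_h \, \text {d}S, \\ l_h(v_h) &= \int _{\partial \Omega _N} g_N v_h \, \text {d}S - \int _{\partial \Omega _D} g_D \, \partial _n v_h \, \text {d}S + \int _{\partial \Omega _D} \beta \, g_D v_h \, \text {d}S. \end{aligned} \tag{2.7} \]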

The parameter \(\beta \), commonly referred to as the Nitsche or penalty parameter, must be chosen large enough to ensure coercivity of the bilinear form \(a_h(\cdot ,\cdot )\) in the discrete space \(V_h\). A sufficient condition is \(\beta > \max _{v_h \in V_{h}} \Vert \partial _n v_h \Vert _{\partial \Omega _D}^2/\Vert \nabla v_h \Vert _{\Omega }^2\), which can be computed by solving a generalized eigenvalue problem [99]. Because this maximum is governed by the worst cut element, small cut elements can result in arbitrarily high values of \(\beta \) over the entire boundary \(\partial \Omega _D\). It is therefore common to select a local, element-wise, Nitsche parameter satisfying \(\beta |_T > \max _{v_h \in V_{h}|_T} \Vert \partial _n v_h \Vert _{T\cap \partial \Omega _D}^2/\Vert \nabla v_h \Vert _{T_\Omega }^2\) by solving a local generalized eigenvalue problem for each element. Based on dimensional considerations, one can infer that \(\beta |_T\) should be inversely proportional to a generalized thickness, \(h_{T_\Omega }\), of \(T_\Omega \) normal to \(T\cap \partial \Omega _D\) [100, 101]. We refer to \(h_{T_\Omega }\) as a generalized thickness, because \(T_\Omega \) can be irregularly shaped and, consequently, a well-defined length scale cannot generally be provided. With an element-wise parameter, a single small cut element no longer results in a high value of \(\beta \) over the entire boundary, but the parameter is still locally unbounded, which can lead to numerical issues [1]. With appropriate stabilization (see Sect. 4), the properties of the immersed formulation revert to those of the boundary-fitted setting considered in the original paper by Nitsche [98], and a global parameter inversely proportional to the mesh width of the background mesh suffices.
In the remainder of this manuscript, both element-wise and global Nitsche parameters will be indicated by \(\beta \) and the notation \(a_h(\cdot ,\cdot )\) and \(l_h(\cdot )\) will be used in both stabilized and unstabilized formulations, with the choice of the Nitsche parameter following from the context. Similar to the boundary-fitted setting, the coercive and bounded bilinear form \(a_h\) induces an equivalent operator norm \(\Vert v_h \Vert ^2_{a_h} = a_h(v_h, v_h)\) in the discrete space \(V_h\). This operator norm coincides with the matrix energy norm of a corresponding coefficient vector, which renders it useful in the analysis of condition numbers of linear-algebraic systems emerging from immersed formulations.
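The element-wise generalized eigenvalue problem for \(\beta |_T\) described above can be made concrete in a one-dimensional analogue, which is an assumption made here for brevity: the cut part \(T_\Omega \) is the interval \([0, L]\) and \(T\cap \partial \Omega _D\) is the single point \(x = L\), so the boundary norm reduces to a point evaluation. The gradients of degree-p polynomials span the polynomials of degree \(p-1\), parameterized here by the monomials \((x/L)^k\); the eigenvalue does not depend on this choice of basis. The function name `local_nitsche_bound` is hypothetical, and the sketch is not an implementation from the cited works.

```python
import numpy as np


def local_nitsche_bound(p: int, cut_length: float) -> float:
    """Largest generalized eigenvalue of B c = lam K c on a 1D cut element.

    Hypothetical helper. The cut part of the element is [0, cut_length] and the
    Dirichlet boundary is the point x = cut_length. Gradients of degree-p
    polynomials are expanded in the monomials (x / cut_length)**k, k = 0..p-1.
    """
    k = np.arange(p)
    # K_kl = int_0^L (x/L)**(k+l) dx = L / (k + l + 1): Gram matrix of the gradients on T_Omega
    K = cut_length / (k[:, None] + k[None, :] + 1.0)
    # B_kl = w_k(L) * w_l(L); every monomial (x/L)**k equals 1 at x = L
    w_at_boundary = np.ones(p)
    B = np.outer(w_at_boundary, w_at_boundary)
    # Reduce to a standard symmetric eigenproblem using the Cholesky factor K = C C^T
    C = np.linalg.cholesky(K)
    C_inv = np.linalg.inv(C)
    return float(np.linalg.eigvalsh(C_inv @ B @ C_inv.T).max())


for eta in (1.0, 0.1, 0.01):  # cut fraction of an element of size h = 1
    print(eta, local_nitsche_bound(2, eta))
```

In this setting the bound equals \(p^2/L\), so it grows without bound as the cut length shrinks, in line with the inverse dependence on the generalized thickness \(h_{T_\Omega }\). In two or three dimensions, K and B would instead be assembled with cut-element and boundary quadrature.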

Multiple variants of the immersed finite element formulation in (2.6)–(2.7) exist, the most prominent of which will be discussed in Sect. 2.2.2. A particularly noteworthy variation of (2.7) is the penalty method, which corresponds to the case where the terms that involve normal gradients in the above-mentioned operators are left out. This makes the method formally inconsistent with the strong form (2.1), but simplifies the implementation and ensures coercivity for any positive value of \(\beta \). In this review, we do not discuss the penalty method in detail and focus on the application of Nitsche’s method. In general, using the penalty method instead of Nitsche’s method has a negligible impact on the conditioning, but it does affect the accuracy of the solution.
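The effect of dropping the normal-gradient terms can be observed in a one-dimensional sketch, set up here purely for illustration: \(-u'' = 0\) on \(\Omega = [0, b]\), a Neumann condition \(-u'(0) = g_N\), and \(u(b) = g_D\) imposed weakly at a point b that cuts the last element of a uniform background mesh of linear elements. The exact solution \(u = g_D + g_N (b - x)\) is linear and therefore contained in \(V_h\), so the consistent Nitsche formulation reproduces it up to round-off, whereas the penalty solution is shifted by exactly \(g_N/\beta \). The function `solve_1d` and its arguments are hypothetical.

```python
import numpy as np


def solve_1d(n_el, eta, beta, nitsche, g_N=1.0, g_D=0.0):
    """Hypothetical 1D immersed solver for -u'' = 0 on Omega = [0, b].

    Background mesh: n_el linear elements of size h = 1/n_el on [0, 1].
    b = (n_el - 1 + eta) * h cuts the last element, leaving a fraction eta of it
    inside Omega. Neumann: -u'(0) = g_N. Dirichlet: u(b) = g_D, imposed weakly
    with Nitsche's method (nitsche=True) or the penalty method (nitsche=False).
    """
    h = 1.0 / n_el
    b = (n_el - 1 + eta) * h
    N = n_el + 1
    A = np.zeros((N, N))
    rhs = np.zeros(N)
    for e in range(n_el):
        length = h if e < n_el - 1 else eta * h          # |T_e ∩ Omega|
        A[e:e + 2, e:e + 2] += length / h**2 * np.array([[1.0, -1.0], [-1.0, 1.0]])
    rhs[0] += g_N                                       # Neumann load at x = 0
    val = np.zeros(N)                                   # phi_i(b)
    der = np.zeros(N)                                   # phi_i'(b); outward normal is +1
    val[-2:] = [1.0 - eta, eta]
    der[-2:] = [-1.0 / h, 1.0 / h]
    A += beta * np.outer(val, val)                      # penalty term, shared by both methods
    rhs += beta * g_D * val
    if nitsche:                                         # consistency and symmetry terms
        A -= np.outer(val, der) + np.outer(der, val)
        rhs -= g_D * der
    x = np.linalg.solve(A, rhs)
    nodes = np.linspace(0.0, 1.0, N)
    return x, g_D + g_N * (b - nodes)                   # discrete and exact nodal values


for beta in (1e2, 1e4):
    for nitsche in (True, False):
        x, u_exact = solve_1d(10, 0.5, beta, nitsche)
        print(beta, "Nitsche" if nitsche else "penalty", np.abs(x - u_exact).max())
```

The penalty error vanishes only as \(\beta \rightarrow \infty \), which is why large penalty parameters are used in practice, at the price of the conditioning problems discussed in Sect. 2.2.2.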

To solve the immersed finite element formulation (2.6) numerically, it is recast as the linear system

\[ \textbf{A} \textbf{x} = \textbf{b}, \tag{2.8} \]

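where the components of the matrix \(\textbf{A} \in \mathbb {R}^{N \times N}\) and right-hand-side vector \(\textbf{b} \in \mathbb {R}^N\) correspond to

\[ A_{ij} = a_h(\phi _j, \phi _i), \qquad b_i = l_h(\phi _i), \qquad i,j = 1,\ldots ,N, \tag{2.9} \]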

with \(\phi _i\) the i-th basis function and N the dimension of the finite-dimensional function space \(V_h\). The approximate solution \(u_h\) is given by

\[ u_h = \sum _{i=1}^{N} x_i \phi _i, \tag{2.10} \]

with \(x_i\) the components of the coefficient vector \(\textbf{x} \in \ell ^2(N)\) in equation (2.8). In the remainder we employ two norms for this coefficient vector, viz the \(\ell ^2\) vector norm \(\Vert \textbf{x} \Vert _2^2 = \textbf{x}^T \textbf{x}\) and, as \(\textbf{A}\) is symmetric positive definite (SPD), the matrix energy norm

\[ \Vert \textbf{x} \Vert _{\textbf{A}}^2 = \textbf{x}^T \textbf{A} \textbf{x}. \tag{2.11} \]

Note that, on account of (2.9), this matrix energy norm is equal to the operator norm, i.e., \(\Vert \textbf{x} \Vert _{\textbf{A}} = \Vert u_h \Vert _{a_h}\).
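The identity \(\Vert \textbf{x} \Vert _{\textbf{A}} = \Vert u_h \Vert _{a_h}\) and the effect of a small cut element on \(\textbf{A}\) can be checked in a compact one-dimensional sketch. The setup is an illustrative assumption, not one from the cited works: linear elements of size h on \([0, 1]\), a domain \(\Omega = [0, b]\) whose boundary b leaves a fraction \(\eta \) of the last element inside \(\Omega \), homogeneous Neumann conditions at \(x = 0\), and Nitsche's method at \(x = b\) with the element-wise parameter \(\beta = 2/(\eta h)\). This exceeds the value \(1/(\eta h)\) that the local generalized eigenvalue problem yields for a linear element whose cut part has length \(\eta h\); the factor 2 is an arbitrary safety margin. The helper names are hypothetical.

```python
import numpy as np


def assemble_nitsche_1d(n_el, eta, safety=2.0):
    """Assemble A_ij = a_h(phi_j, phi_i) for -u'' on Omega = [0, b] (hypothetical setup).

    Linear elements of size h = 1/n_el; b = (n_el - 1 + eta) * h cuts the last
    element. Homogeneous Neumann at x = 0, Nitsche at x = b with the
    element-wise parameter beta = safety / (eta * h).
    """
    h = 1.0 / n_el
    N = n_el + 1
    lengths = np.full(n_el, h)
    lengths[-1] = eta * h                           # |T ∩ Omega| of the cut element
    A = np.zeros((N, N))
    for e, length in enumerate(lengths):
        A[e:e + 2, e:e + 2] += length / h**2 * np.array([[1.0, -1.0], [-1.0, 1.0]])
    val = np.zeros(N)
    der = np.zeros(N)
    val[-2:] = [1.0 - eta, eta]                     # phi_i(b)
    der[-2:] = [-1.0 / h, 1.0 / h]                  # normal derivative of phi_i at b
    beta = safety / (eta * h)
    A += beta * np.outer(val, val) - np.outer(val, der) - np.outer(der, val)
    return A, lengths, beta, h


def energy_of_function(x, eta, lengths, beta, h):
    """Evaluate a_h(u_h, u_h) directly from u_h = sum_i x_i phi_i, without using A."""
    slopes = np.diff(x) / h                         # u_h' on each element
    u_b = (1.0 - eta) * x[-2] + eta * x[-1]         # u_h(b)
    return np.sum(lengths * slopes**2) - 2.0 * slopes[-1] * u_b + beta * u_b**2


rng = np.random.default_rng(0)
for eta in (0.5, 1e-2, 1e-4):
    A, lengths, beta, h = assemble_nitsche_1d(10, eta)
    x = rng.standard_normal(A.shape[0])
    gap = abs(x @ A @ x - energy_of_function(x, eta, lengths, beta, h))
    print(f"eta={eta:g}  cond(A)={np.linalg.cond(A):.2e}  energy mismatch={gap:.1e}")
```

Shrinking \(\eta \) leaves the energy identity intact but makes the condition number of \(\textbf{A}\) grow rapidly, both through the small contribution of the last basis function and through the unbounded element-wise \(\beta \); Sect. 3 analyzes this behavior.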

In the remainder of this manuscript we focus our presentation on the single field Poisson problem (2.1), discretized by quasi-uniform meshes with \(C^0\)-continuous piecewise polynomial basis functions of order p. Unless otherwise specified, results apply to both linear and higher-order discretizations. The presented analyses and methods naturally extend to vector-valued problems that can be expressed as a Cartesian product of scalar fields (i.e., one field per space dimension). Mixed formulations and discretizations with local refinements are not discussed in detail, but, if not stated otherwise, the provided insights also carry over mutatis mutandis to these cases. Particular aspects of interface problems and transient problems are discussed, respectively, in Remarks 2.2 and 2.3 in Sect. 2.2.3. With respect to conditioning, maximum continuity splines as commonly used in isogeometric analysis [87, 102] can behave differently from \(C^0\)-continuous bases. Therefore, Sect. 3 also considers B-spline bases as a special case.

2.2 Challenges in Immersed Finite Elements

Although the immersed finite element formulation introduced above fits within the general framework of the conventional finite element method, the application of immersed finite elements involves the consideration of various specific challenges. In this section we discuss the most prominent of these, viz: (i) numerical evaluation of integrals over cut elements, (ii) imposition of Dirichlet boundary conditions, and (iii) stability and conditioning of the formulation.

2.2.1 Cut-Element Integration

In boundary-fitted FEM, integrals over the domain \(\Omega \) are split into element-wise integrals. The elements generally correspond to polytopes such as simplices or hypercubes, and the integrands are usually element-wise polynomials. For this reason, standard quadrature rules can readily be used. Integration in immersed methods is more involved, as the integration procedure should adequately approximate integrals on, in principle, arbitrarily shaped cut elements. This problem closely relates to the special treatment of discontinuous integrands in enriched finite element methods such as XFEM and GFEM.

In immersed finite element methods the geometry representation is independent of the mesh. In general, there are two ways to represent the geometry, viz implicit representations (e.g., voxel data [21, 103] or a level set function [51]) and explicit boundary representations (e.g., spline surfaces [3], B-rep objects [9], or isogeometric analysis on trimmed CAD objects [8, 12]). For all geometry representations, dedicated techniques are required to evaluate volume integrals over the intersection of active elements with the domain. A myriad of such integration procedures has been developed over the years in the context of immersed FEM (see [75, 104] for reviews/comparisons) and enriched FEM (see [105]), an overview of which is presented below. Techniques to integrate over trimmed boundaries are strongly dependent on the geometry description. In explicit representations, the geometry description itself can sometimes be leveraged, whereas a boundary-reconstruction procedure is generally required in the case of implicit boundary representations. The reader is referred to, e.g., Ref. [75] for a discussion regarding the various techniques to handle different geometry representations, and the related problem of integrating over unfitted boundaries.

The cut element volume integration techniques can be categorized as:

-

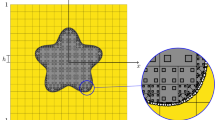

Octree subdivision: The general idea of octree (or quadtree in two dimensions) integration is to capture the geometry of a cut element by recursively bisecting sub-cells that intersect with the boundary of the domain, as illustrated in Fig. 3. At every level of this recursion, sub-cells that are completely inside the domain are preserved, while sub-cells that are completely outside of the domain are discarded. This cut element subdivision strategy was initially proposed in the context of the finite cell method (FCM) in [2] and is generally valued for its simplicity and robustness with respect to cut element configurations. Octree integration has been widely adopted in immersed FEM, see, e.g., [45, 51, 75, 106, 107]. Various generalizations and improvements to the original octree procedure have been proposed, of which the consideration of tetrahedral cells [52, 108], the reconstruction of the unfitted boundary by tessellation at the lowest level of bisection [51], and the consideration of variable integration schemes for the sub-cells [104], are particularly noteworthy. Despite these improvements to the original octree strategy, a downside of the technique remains the number of integration sub-cells (and consequently the number of integration points) that result from the procedure, especially in three dimensions and with high-order bases, where the refinement depth needs to be increased under mesh refinement to reduce the integration error at the same rate as the approximation error [109].

-

Cut element reparameterization: Accurate cut element integration schemes can be obtained by modifying the geometry parameterization of cut elements in such a way that the immersed boundary is fitted. This strategy was originally developed in the context of XFEM by decomposing cut elements into various sub-cells with only one curved side and then altering the geometry mapping of the curved sub-cells to obtain a higher-order accurate integration scheme [105]. This concept has been considered in the context of implicitly defined geometries (level sets) [110,111,112,113], the NURBS-enhanced finite element method (NEFEM) [114, 115], the Cartesian grid finite element method (cgFEM) [57, 82], and the mesh-transformation methodology presented in [116]. In the context of the finite cell method, the idea of cut element reparameterization has been adopted as part of the smart octree integration strategy [117,118,119], where a boundary-fitting procedure is employed at the lowest level of octree bisection in order to obtain higher-order integration schemes for cut elements with curved boundaries. Reparameterization procedures have the potential to yield accurate integration schemes at a significantly lower computational cost than octree procedures, but generally compromise in terms of robustness with respect to cut element configurations.

-

Polynomial integration: Provided that one can accurately evaluate integrals over cut elements (for example using octree integration), it is possible to construct computationally efficient integration rules for specific classes of integrands. In the context of immersed finite element methods, it is of particular interest to derive efficient cut-element integration rules for polynomial functions. The two most prominent methods to integrate polynomial functions over cut elements are moment fitting techniques [119,120,121,122,123,124,125,126], in which integration point weights and (possibly) positions are determined in order to yield exact quadrature rules, and equivalent polynomial methods [127, 128], in which a non-polynomial (e.g., discontinuous) integrand is represented by an equivalent polynomial which can then be treated using standard integration procedures. Such methods have been demonstrated to yield efficient quadrature rules for a range of scenarios. A downside of such techniques is the need for the evaluation of the exact integrals (using an adequate cut-element integration procedure) in order to determine the optimized integration rules. This can make the construction of such quadrature rules computationally expensive, which makes them more suitable in the context of time-dependent and non-linear problems (with fixed boundaries and interfaces), for which the construction of the integration rule is only considered as a pre-processing operation (for each cut element) and the optimized integration rule can then be used throughout the simulation. Another downside is that some of these methods can result in negative quadrature weights, which can cause instabilities as discussed in [126]. In the group of Dominik Schillinger at TU Darmstadt, work is currently being done to use neural networks for the computation of integration point weights to accelerate this process.

-

Dimension-reduction of integrals: Depending on the problem under consideration, it can be possible to reformulate volumetric integrals over cut elements by equivalent lower-dimensional integrals. This approach is advantageous from a computational effort point of view, as the equivalent integrals are generally less costly to evaluate. A reformulation of volume integrals in terms of boundary integrals has been proposed in the context of XFEM in [129] and in the immersed FEM setting in [130]. Dimension-reduction approaches for high-order quadrature with implicitly defined surfaces are presented for hexahedral and tetrahedral background elements in, respectively, [131] and [132]. Both these techniques rely on a reduction of integrals to one-dimensional integrations and provide strictly positive weights. The methodology proposed in [133] (and references therein) provides closed-form formulas for the integration of monomials on convex and nonconvex polyhedra and the extension to curved domains, relying on a reduction of integrals up to vertex evaluations. A downside of dimension-reduction techniques is that they are less general than standard quadrature rules.

-

Parameter optimization: Various strategies have been proposed to optimize the parameters of the cut element integration techniques discussed above, most notably for octree subdivision techniques. In [104, 109] algorithms are proposed to select the integration order on the different levels of sub-cells. Ref. [126] presents a methodology to reduce the number of integration points in a manner similar to moment fitting techniques. These optimization techniques have demonstrated that reducing the number of integration points does not necessarily compromise the accuracy of the simulations. This is theoretically supported by Strang’s first lemma [96, 134, 135], which indicates that integration does not need to be exact in order to attain (optimal) convergence [134], provided that the integration error decreases at least as fast as the approximation error under mesh refinement. It should be mentioned that this lemma is also considered in the context of CutFEM in, e.g., [109, 137].
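The octree subdivision strategy listed above can be sketched in its two-dimensional (quadtree) form. For the sketch we assume a disk-shaped domain, so that a square cell can be classified exactly as inside, outside, or cut; with general level sets or voxel data these tests are approximate. Cut cells that remain at the maximum depth are kept or discarded based on their midpoint, which is only one possible choice (FCM-type implementations, for example, can instead evaluate the domain indicator at every integration point of the leaf cells), and every retained sub-cell receives a 2×2 Gauss rule. All function names are hypothetical.

```python
import math

GAUSS_1D = (0.5 - 0.5 / math.sqrt(3.0), 0.5 + 0.5 / math.sqrt(3.0))  # 2-point rule on [0, 1]


def classify(x0, y0, s, cx, cy, r):
    """Exact position of the square [x0, x0+s] x [y0, y0+s] relative to a disk."""
    nx = min(max(cx, x0), x0 + s)               # point of the cell closest to the centre
    ny = min(max(cy, y0), y0 + s)
    if math.hypot(nx - cx, ny - cy) >= r:
        return "outside"
    fx = max(abs(x0 - cx), abs(x0 + s - cx))    # offsets of the farthest corner
    fy = max(abs(y0 - cy), abs(y0 + s - cy))
    if math.hypot(fx, fy) <= r:
        return "inside"
    return "cut"


def quadtree_cells(x0, y0, s, depth, disk):
    """Sub-cells kept for integration after recursive bisection of cut cells."""
    status = classify(x0, y0, s, *disk)
    if status == "outside":
        return []                               # discard cells outside the domain
    if status == "inside":
        return [(x0, y0, s)]                    # keep cells inside the domain
    if depth == 0:                              # leaf cut cell: its midpoint decides
        cx, cy, r = disk
        keep = math.hypot(x0 + s / 2 - cx, y0 + s / 2 - cy) < r
        return [(x0, y0, s)] if keep else []
    half = s / 2
    cells = []
    for dx in (0.0, half):
        for dy in (0.0, half):
            cells += quadtree_cells(x0 + dx, y0 + dy, half, depth - 1, disk)
    return cells


def integrate(f, cells):
    """Apply a 2x2 Gauss rule on every retained sub-cell."""
    total = 0.0
    for x0, y0, s in cells:
        for gx in GAUSS_1D:
            for gy in GAUSS_1D:
                total += 0.25 * s * s * f(x0 + gx * s, y0 + gy * s)
    return total


# Unit-square element cut by the unit disk centred at the origin;
# the exact integral of x^2 + y^2 over the quarter disk is pi / 8.
disk = (0.0, 0.0, 1.0)
for depth in (2, 4, 6, 8):
    cells = quadtree_cells(0.0, 0.0, 1.0, depth, disk)
    value = integrate(lambda x, y: x * x + y * y, cells)
    print(depth, 4 * len(cells), abs(value - math.pi / 8))
```

The printed number of integration points grows steadily with the depth while the error decreases, which reflects the cost issue of octree integration noted above; in three dimensions this growth is more pronounced.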

In the selection of an appropriate cut element integration scheme one balances robustness (with respect to cut element configurations), accuracy, and expense. If one requires a method that automatically treats a wide range of cut element configurations and is willing to pay the price in terms of accuracy and computational effort, octree integration is the compelling option. For moderate accuracy, quadratures that rely on exact monomial integrations and only involve vertex evaluations [136] are appealing in terms of accuracy and robustness. If the accuracy and computational-expense requirements are more stringent and the range of configurations is suitably restricted, alternative techniques such as cut element reparameterization are attractive. In the case of implicit boundary representations, an additional consideration in the selection of the cut element integration scheme is whether or not it is required to obtain a parameterization of (or integration scheme on) the unfitted boundary. Parameter optimization procedures can aid in fine-tuning the balance between robustness, accuracy and expense. The appropriateness of the various techniques also depends on the way in which the geometry is represented (implicit vs explicit), as this can have a substantial impact on the implementation of a particular technique.
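The moment fitting idea from the list above can be illustrated in one dimension, which is a simplification made for this sketch: the positions of an n-point Gauss–Legendre rule on the reference element \([0, 1]\) are kept fixed, and the weights are chosen such that the monomials \(x^k\), \(k = 0, \ldots , n-1\), are integrated exactly over the cut part \([0, \eta ]\). Here the exact moments \(\eta ^{k+1}/(k+1)\) are known in closed form; in practice they would come from an accurate cut-element integration such as octree subdivision, as noted above. The function name is hypothetical.

```python
import numpy as np


def moment_fitted_weights(n, eta):
    """Weights for fixed Gauss points on [0, 1] that integrate x**k, k < n, exactly over [0, eta]."""
    xi, _ = np.polynomial.legendre.leggauss(n)     # Gauss points on [-1, 1]
    points = 0.5 * (xi + 1.0)                      # mapped to the reference element [0, 1]
    k = np.arange(n)
    V = np.vander(points, n, increasing=True).T    # moment matrix: V[k, j] = points[j]**k
    moments = eta ** (k + 1) / (k + 1)             # exact integrals of x**k over [0, eta]
    return points, np.linalg.solve(V, moments)


points, w = moment_fitted_weights(3, 0.1)
print(w)                                           # at least one weight is negative here
print(w @ (1 + 2 * points + 3 * points**2), 0.1 + 0.1**2 + 0.1**3)
```

The fitted rule integrates every polynomial of degree below n exactly over the cut part, but its weights need not be positive; this is the mechanism behind the negative-weight instabilities mentioned above in connection with [126].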

2.2.2 Dirichlet Boundary Condition Imposition

Since the (immersed) boundary of the physical domain does not coincide with the background mesh, the imposition of boundary conditions in immersed methods requires special consideration. Provided that a parameterized boundary representation suitable for integration over the boundary is available, Neumann (or natural) boundary conditions can be imposed weakly, in the same way as in boundary-fitted FEM. The imposition of Dirichlet (or essential) boundary conditions in immersed FEM is not as straightforward, however. Because of the disparity between the background grid and the physical domain, basis functions defined on the background mesh are generally not interpolatory on the unfitted boundary. This precludes the strong imposition of Dirichlet conditions as in boundary-fitted FEM. Therefore, boundary conditions in immersed FEM are usually imposed weakly. Various techniques for the imposition of Dirichlet boundary conditions on unfitted boundaries exist, the most prominent of which are:

-

Penalty method: The penalty method supplements the weak form of boundary-fitted FEM with a penalty term that penalizes differences between the approximate solution and the prescribed Dirichlet data. This approach, which was applied in the pioneering work on the finite cell method [2], is generally considered the most straightforward technique to impose Dirichlet conditions on unfitted boundaries. The formulation omits the boundary terms that arise from integration by parts in the derivation of the weak form (in boundary-fitted FEM these terms drop out because the test functions vanish on Dirichlet boundaries). This introduces a modeling error, which renders the formulation inconsistent. For appropriately selected penalty parameters the inconsistency can be acceptable [138], making the penalty method effective for a broad class of immersed problems with complex geometries, see, e.g., [21, 103]. Nevertheless, the choice of an appropriate penalty parameter is challenging. Too small a value does not adequately enforce the prescribed boundary conditions, while too large a value exacerbates the conditioning problems [8] and can lead to large, nonphysical gradients on cut elements [1, 139, 140].

-

Nitsche’s method: Nitsche’s method [98] can be considered the consistent counterpart of the penalty method, as it retains the boundary gradients (sometimes referred to as the flux terms) in the weak formulation. Through appropriate scaling of the Nitsche (or penalty) parameter, a stable formulation is obtained, see, e.g., [141]. Nitsche’s method is a widely used technique for the weak imposition of boundary conditions in immersed finite element methods. An elegant aspect of Nitsche’s method is that the parameter can be computed per element [99], avoiding potential difficulties in the selection of a single global Nitsche parameter, see, e.g., [10]. The value of the Nitsche parameter should be inversely proportional to the thickness of the element (Footnote 2), and it can become arbitrarily large for small cut elements [1]. This problem can be remedied by means of additional stabilization terms such as the ghost penalty, or by the aggregation of basis functions; see Sect. 4. Alternatively, a nonsymmetric Nitsche method can be applied to avoid the need for stabilization [142,143,144], although the resulting nonsymmetric system matrix affects the choice of linear solver. Additionally, nonsymmetric Nitsche methods are not adjoint consistent, which means that these formulations result in suboptimal approximation properties in the \(L^2(\Omega )\)-norm [142]. Some other variations of Nitsche’s method are presented in [145].

-

Lagrange multiplier techniques: Dirichlet conditions on immersed boundaries can be enforced by supplementing the weak formulation with additional constraint terms, see, e.g., [141, 146]. In contrast to the penalty method and Nitsche’s method, in Lagrange multiplier techniques these constraint terms are associated with an auxiliary field variable defined over the unfitted boundary. This auxiliary field is referred to as the Lagrange multiplier field. Lagrange multiplier techniques result in a saddle point problem, which implies that the discrete Lagrange multiplier field needs to be selected such that a stable system is obtained [147]. Examples of Lagrange multiplier type techniques for immersed FEM are presented in [39, 148,149,150,151]. An advantage of Lagrange multiplier techniques over Nitsche’s method and the penalty method is that they do not require the selection of a parameter; the downsides are that additional degrees of freedom are introduced through the Lagrange multiplier field, and that an (inf-sup) stable discretization of the Lagrange multiplier field is generally non-trivial. Additionally, for many problems, the introduction of Lagrange multipliers changes the nature of the linear system from positive (semi-)definite to indefinite and breaks the diagonal dominance. This affects the applicability of iterative solvers (in particular, it precludes the conjugate gradient method) as well as the factorization in sparse direct solvers.

-

Basis function redefinition: An alternative class of techniques to impose Dirichlet conditions on immersed boundaries is based on redefining the basis functions such that the modified (non-vanishing) basis functions are interpolatory on the unfitted boundary. This enables traditional strong imposition of boundary conditions, as is standard in boundary-fitted finite element methods. Prominent examples of methods based on this concept are WEB-splines [83, 84] and, more recently, i-splines [85]. The main advantage of these techniques is that they do not require modifications to the weak formulation in comparison to boundary-fitted FEM, but the algorithms to redefine the basis functions constitute an additional non-trivial component in the implementation and analysis.
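The change in the nature of the linear system described for Lagrange multiplier techniques can be checked on a small example. The sketch below assumes a hypothetical 1D model: linear elements on a background grid with element size `h`, a physical domain (0, `L`) whose end point x = `L` lies inside the last active element, natural conditions elsewhere, and a Dirichlet condition at x = `L` enforced by a single Lagrange multiplier. In 1D the unfitted boundary is a point, so the multiplier field reduces to one scalar and inf-sup issues do not arise. The stiffness matrix `A` is only positive semi-definite (its null space contains the constants), whereas the saddle-point matrix `K` is nonsingular but indefinite.

```python
import numpy as np

# Hypothetical 1D setting: background elements of size h, physical domain (0, L).
h, L = 0.1, 0.73
n_active = int(np.ceil(L / h))   # elements intersecting (0, L); the last one is cut
N = n_active + 1                 # number of active nodes

# Stiffness matrix of -u'' with natural conditions: each background element only
# contributes the integral over its part inside (0, L).
A = np.zeros((N, N))
for e in range(n_active):
    length = min(h, L - e * h)   # length of the physical part of element e
    A[e:e + 2, e:e + 2] += (length / h**2) * np.array([[1.0, -1.0], [-1.0, 1.0]])

# Constraint row: values of the two basis functions that are non-zero at x = L.
e_cut = n_active - 1
xi = (L - e_cut * h) / h         # local coordinate of x = L in the cut element
B = np.zeros((1, N))
B[0, e_cut:e_cut + 2] = [1.0 - xi, xi]

# Saddle-point matrix of the Lagrange multiplier formulation.
K = np.block([[A, B.T], [B, np.zeros((1, 1))]])

eig_A = np.linalg.eigvalsh(A)
eig_K = np.linalg.eigvalsh(K)
print("smallest eigenvalue of A :", eig_A.min())         # ~0 up to round-off
print("negative eigenvalues of K:", int(np.sum(eig_K < 0)))
```

The single negative eigenvalue is what rules out the conjugate gradient method for the coupled system, even though `A` itself is symmetric positive semi-definite.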

In selecting an appropriate technique for imposing Dirichlet conditions in immersed finite elements, consistency and accuracy requirements are a prominent consideration. If there are no stringent accuracy requirements, the penalty method is an attractive option on account of its simplicity. If this method does not meet the accuracy requirements, one can resort to consistent weak formulations, where particularly Nitsche’s method strikes a suitable balance between accuracy and ease of implementation. Basis function redefinition strategies are an attractive alternative when there are reasons to enforce Dirichlet conditions in a strong manner, as in boundary-fitted finite element formulations.
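The consistency difference between the penalty method and Nitsche’s method can be illustrated with a hypothetical 1D model problem whose exact solution lies in the discrete space. The sketch assumes \(-u'' = 0\) on \((0, L)\), the natural condition \(u'(0) = 1\), and \(u(L) = L\) imposed weakly at an unfitted point, so that \(u(x) = x\). The symmetric Nitsche form adds \(-u'(L)v(L) - v'(L)u(L) + \beta u(L)v(L)\) (with the corresponding right-hand-side terms), whereas the penalty form only adds \(\beta u(L)v(L)\). The choice \(\beta = 4/\ell\), with \(\ell\) the length of the physical part of the cut element, is an illustrative assumption.

```python
import numpy as np

# Hypothetical 1D model: -u'' = 0 on (0, L), u'(0) = 1 (natural), u(L) = L (unfitted
# Dirichlet). The exact solution u(x) = x lies in the linear finite element space.
h, L = 0.1, 0.73
n_active = int(np.ceil(L / h))
N = n_active + 1
e_cut = n_active - 1
ell = L - e_cut * h                   # length of the physical part of the cut element
xi = ell / h                          # local coordinate of x = L in the cut element
idx = np.array([e_cut, e_cut + 1])    # basis functions that are non-zero at x = L
N_L = np.array([1.0 - xi, xi])        # their values at x = L
dN_L = np.array([-1.0, 1.0]) / h      # their derivatives (outward normal n = +1)
g = L                                 # Dirichlet datum u(L)
beta = 4.0 / ell                      # 4 * C with C = 1/ell (illustrative choice)

def stiffness():
    A = np.zeros((N, N))
    for e in range(n_active):
        length = min(h, L - e * h)
        A[e:e + 2, e:e + 2] += (length / h**2) * np.array([[1.0, -1.0], [-1.0, 1.0]])
    return A

def solve(method):
    A = stiffness()
    b = np.zeros(N)
    b[0] = -1.0                       # natural term -u'(0) v(0) with u'(0) = 1
    P = np.ix_(idx, idx)
    A[P] += beta * np.outer(N_L, N_L) # penalty term beta u(L) v(L)
    b[idx] += beta * g * N_L
    if method == "nitsche":
        # consistency term -u'(L) v(L) and symmetry term -v'(L) (u(L) - g)
        A[P] -= np.outer(N_L, dN_L) + np.outer(dN_L, N_L)
        b[idx] -= g * dN_L
    return np.linalg.solve(A, b)

x_nodes = h * np.arange(N)            # nodal coordinates (the last node lies outside)
u_pen = solve("penalty")
u_nit = solve("nitsche")
print("penalty max nodal error:", np.abs(u_pen - x_nodes).max(), " 1/beta =", 1.0 / beta)
print("Nitsche max nodal error:", np.abs(u_nit - x_nodes).max())
```

With these choices the Nitsche solution reproduces the exact nodal values up to round-off, whereas the penalty solution is shifted by exactly \(1/\beta\): it solves the Robin problem \(u'(L) + \beta (u(L) - L) = 0\). Increasing \(\beta\) reduces this error but, as discussed above, worsens the conditioning.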

2.2.3 Stability and Conditioning

Immersed discretizations that make use of finite element spaces defined on a background mesh generally suffer from the so-called small-cut-element problem. Conventional boundary-fitted finite element methods impose conditions on the shape and the size of the elements in the considered (family of) meshes. Such conditions can be (more or less) directly controlled by the mesh-generation algorithm. In immersed finite element methods, on the other hand, one has no control over the shape and size of cut elements. Consequently, cut elements can have arbitrarily small intersections with the physical domain, which is commonly referred to as the small-cut-element problem. This loss of control in the immersed or unfitted setting can have extensive implications for the well-posedness and conditioning of the resulting discrete problem, unless the immersed method is judiciously formulated.

The challenge of stability and conditioning in immersed finite elements with respect to small cut elements is the main focus of this review. Frequently, this small-cut-element problem is treated as a single-faceted problem. In our opinion, however, there are two distinct (albeit strongly related) facets to the small-cut-element problem, viz., stability and conditioning:

-

Stability is related to the fact that the numerical formulation itself can be ill-posed in the immersed setting. The clearest example of this is Nitsche’s method in combination with standard unfitted finite element spaces. Since arbitrarily small cut elements can occur, the Nitsche parameter required for coercivity can grow without bound under mesh refinement, which can lead to unbounded gradients on immersed or unfitted boundaries. It should be mentioned that the stability of immersed finite elements is closely related to the way in which essential boundary conditions are enforced, and, in the case of Nitsche’s method, to the value of the Nitsche parameter.

-

Conditioning is related to the linear algebraic problem of obtaining the solution of the discrete system that arises from an immersed finite element formulation. Even if the problem is properly defined from a stability perspective, e.g., with only Neumann conditions on unfitted boundaries, the resulting system can be arbitrarily ill-conditioned, which impedes the solution of the system. This is caused by functions that are only supported on small cut elements, for which the operator norm (which equals the matrix energy norm of the corresponding coefficient vector) is affected by the cut-element size, while the norm of the coefficient vector itself is not. This implies that the eigenvalues of the system matrix can be arbitrarily close to zero, depending on the cut-element configuration.
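The growth of the Nitsche parameter mentioned under stability can be quantified with an element-wise estimate of the kind referred to for [99]. Assume the local constant \(C_T\) is taken as the smallest value with \(\Vert \partial _n v \Vert ^2_{\Gamma \cap T} \le C_T \Vert \nabla v \Vert ^2_{T \cap \Omega }\) for all polynomials \(v\) of degree \(p\) on the cut element \(T\). Young’s inequality then shows that \(\beta _T > 2 C_T\) suffices for coercivity of the symmetric Nitsche form. The sketch below evaluates \(C_T\) for a hypothetical 1D element \([0, h]\) whose physical part is \([0, \eta h]\), with the boundary at \(x = \eta h\); the helper `local_nitsche_constant` is an illustrative name. In this 1D setting the result is \(C_T = p^2/(\eta h)\), so the required parameter grows like \(1/\eta\) for small cut elements.

```python
import numpy as np
from numpy.polynomial import legendre

def local_nitsche_constant(p, eta, h=1.0):
    """Smallest C_T with |v'(x_b)|^2 <= C_T * int_0^{x_b} |v'|^2 dx for all v in P_p.

    Hypothetical 1D cut element [0, h] with physical part [0, x_b], x_b = eta * h,
    and the unfitted boundary at x = x_b."""
    xb = eta * h
    s, w = legendre.leggauss(p + 1)            # exact for the degree-(2p-2) integrands
    x = 0.5 * xb * (s + 1.0)                   # quadrature points on [0, x_b]
    w = 0.5 * xb * w
    # v' lies in P_{p-1}: use Legendre polynomials mapped to [0, x_b] as a basis.
    V = legendre.legvander(2.0 * x / xb - 1.0, p - 1)   # basis values at quadrature points
    vb = legendre.legvander(np.array([1.0]), p - 1)[0]  # basis values at x = x_b
    M = V.T @ (w[:, None] * V)                 # local Gram matrix int q_i q_j dx
    # The rank-one generalized eigenproblem (vb vb^T) c = C M c has the single
    # non-zero eigenvalue vb^T M^{-1} vb.
    return vb @ np.linalg.solve(M, vb)

h = 0.1
for p in (1, 2, 3):
    for eta in (1.0, 1e-1, 1e-2, 1e-3):
        C = local_nitsche_constant(p, eta, h)
        print(f"p={p}  eta={eta:.0e}  C_T={C:.3e}  C_T*eta*h/p^2={C * eta * h / p**2:.6f}")
```

Because \(C_T\) does not depend on the chosen basis, the sketch uses Legendre polynomials mapped to the physical part of the element, which keeps the local Gram matrix well conditioned even for very small \(\eta\).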

Several techniques have been developed to counteract the problems associated with small cut elements, encompassing treatments for both stability and conditioning issues. The effects of cut elements on the conditioning of the linear system are discussed in detail in Sect. 3.1. Section 3.2 provides an overview of tailored preconditioners that address this issue. Specific attention is devoted to Schwarz preconditioners, which form a natural resolution to the conditioning problem. The stability of immersed discretizations is treated in detail in Sect. 4, which considers both the stability problem itself in Sect. 4.1 and the methodologies that have been developed to resolve it in Sect. 4.2. Two particular approaches, viz., element aggregation and the ghost-penalty method, resolve the stability problems in a manner that yields enhanced coercivity in \(H^1(\Omega _h)\) (i.e., on the union of all active elements) instead of just in \(H^1(\Omega )\). Consequently, these approaches not only guarantee stability, but also preclude conditioning problems. Element aggregation and the ghost-penalty method are discussed in detail in Sects. 4.3 and 4.4, respectively, and Sect. 4.5 presents a unified mathematical approach to analyze these techniques.

Remark 2.1

Interpretation from the perspective of norm equivalences In terms of the problem definitions presented above, stability and conditioning can be distinguished as different norm equivalences in the discrete space. Stability pertains to the strength of the norm equivalence between the \(H^1(\Omega )\)-norm, which is a common measure for establishing the quality of a solution, and the operator norm, \(\Vert \cdot \Vert _{a_h}\) (or the equivalent \(\beta \)-norm or energy norm, which will be defined in Sect. 4). Conditioning, on the other hand, pertains to the strength of the norm equivalence between the matrix energy norm, \(\Vert \cdot \Vert _{\textbf{A}}\) (which is equal to the operator norm of the corresponding discrete function), and the \(\ell ^2\) vector norm, \(\Vert \cdot \Vert _2\). In an unfitted discretization that contains small cut elements, the equivalences between these norms can become very weak, which indicates potential difficulties with respect to stability and/or conditioning. To ensure coercivity of the weak formulation when Nitsche’s method is employed in an immersed setting without a dedicated stabilization technique, a (locally) very large Nitsche parameter is required. Such a very large Nitsche parameter, however, also causes the operator norm to be only very weakly bounded by the \(H^1(\Omega )\)-norm [1]. Similarly, functions that are only supported on small cut elements have a small operator norm, while the size of the cut elements does not affect the \(\ell ^2\)-norm of the coefficient vector. For this reason, the bound on the \(\ell ^2\)-norm of the coefficient vector in terms of the matrix energy norm can be arbitrarily weak [152].

Remark 2.2

Interface problems Immersed formulations of interface problems such as [16] form a special class when considering the stability of cut elements. In such problems, an interface that is not aligned with the mesh separates parts of the domain, instigating a jump in the (material) properties (e.g., stiffness or conductivity) and thereby in the coefficients of the equation. Transmission conditions at the interface generally specify continuity of the solution and the fluxes, resulting in a jump in the gradients over the interface. The solution on the different sides of the interface is therefore approximated by separate function spaces (defined on the same mesh covering the entire domain), where basis functions that are intersected by the interface contribute separately to both sides of the interface. This constitutes a special case of immersed methods, because every cut element has a substantial intersection with at least one side of the interface. This property can be exploited by means of a weighted average flux in the bilinear form, as presented in [18, 19, 73], resulting in a stable formulation with a bounded Nitsche parameter without the need for additional stabilization. Note that this formulation does not repair the conditioning problems and that the weighting of the fluxes is not effective for high-contrast problems. Besides formulations with weighted average fluxes, stable immersed interface problems can also be obtained by the techniques presented in Sect. 4, which additionally repair the conditioning. Particular examples of stable formulations with aggregation and ghost penalty are presented in [23, 24, 153] and [20, 25], respectively.

Remark 2.3

Stable explicit time integration While not considered in detail in this review, a related challenge is the stability of explicit time integrators on unfitted grids containing small cut elements. Analogous to the system matrix, the eigenvalues of the (consistent) mass matrix cannot be bounded from below in immersed formulations, such that the stable time step can become arbitrarily small. Similar to the stability of the solution on small cut elements discussed above, this can generally be resolved by the function space manipulations discussed in Sect. 4.3 or by adding the weak stabilization terms discussed in Sect. 4.4 to the mass matrix, see, e.g., [154]. Regarding the stability of explicit time integrators, the investigations into the spectral behavior of Nitsche’s method in both boundary-fitted and immersed settings presented in [155,156,157] are also of particular interest. It should be mentioned that systems with lumped mass matrices and smooth (isogeometric) discretizations form an exception to the dependence of the stable time-step size on cut elements. For such systems, the eigenvalues of the lumped mass matrix on small cut elements scale more favorably with the size of small cut elements than the eigenvalues of the stiffness matrix. Therefore, stable explicit time integration can be performed without additional stabilization and with time steps dependent on the background element size, see [158, 159] for details.
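The reduction of the stable time step can be reproduced with a small experiment. The sketch below assumes a hypothetical 1D wave-type problem discretized with linear elements and a consistent mass matrix: `n_full` uncut elements of size `h` plus one element whose physical part has volume fraction `eta`, with both matrices integrated over the physical part only. For the undamped central-difference scheme, the critical time step is \(2/\sqrt{\lambda _{\max }}\), with \(\lambda _{\max }\) the largest eigenvalue of the generalized problem \(\textbf{K}\textbf{u} = \lambda \textbf{M}\textbf{u}\). The lumped-mass, smooth-spline exception mentioned above is not covered by this sketch.

```python
import numpy as np
from scipy.linalg import eigh

def cut_matrices(eta, n_full=9, h=0.1):
    """Stiffness and consistent mass matrices of linear elements on (0, (n_full + eta) h).

    The last background element is cut; its background basis functions
    N0 = 1 - t and N1 = t (t = local coordinate x/h) are integrated over t in [0, eta]."""
    n_el = n_full + 1
    K = np.zeros((n_el + 1, n_el + 1))
    M = np.zeros_like(K)
    for e in range(n_el):
        a = 1.0 if e < n_full else eta        # volume fraction of element e
        K[e:e + 2, e:e + 2] += (a / h) * np.array([[1.0, -1.0], [-1.0, 1.0]])
        # int_0^a N_i N_j dt, multiplied by the Jacobian h
        m00 = a - a**2 + a**3 / 3.0
        m01 = a**2 / 2.0 - a**3 / 3.0
        m11 = a**3 / 3.0
        M[e:e + 2, e:e + 2] += h * np.array([[m00, m01], [m01, m11]])
    return K, M

def critical_time_step(eta, **kwargs):
    K, M = cut_matrices(eta, **kwargs)
    lam_max = eigh(K, M, eigvals_only=True).max()
    return 2.0 / np.sqrt(lam_max)

for eta in (1.0, 1e-1, 1e-2, 1e-3):
    print(f"eta={eta:.0e}  dt_crit={critical_time_step(eta):.3e}")
```

For this setting, the cut element alone has the largest generalized eigenvalue \(12/(\eta h)^2\), so the critical time step decreases roughly in proportion to the volume fraction of the smallest cut element.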

3 Ill-Conditioning and Preconditioning

An integral aspect of finite element methods is to find the solution of the corresponding linear system of equations. For small systems this is generally done by a direct solver, which factorizes the linear system and directly computes the solution up to machine precision. The computational cost of direct solvers scales poorly with the size of the system, however, resulting in computation time and memory requirements that become prohibitive for large systems [160]. For this reason, large systems are generally solved by iterative solvers, the computational cost of which generally scales better with the size of the system [161]. The convergence of iterative solvers is strongly correlated with the conditioning of the system. Without tailored stabilization or preconditioning, systems derived from immersed finite element formulations are generally severely ill-conditioned, impeding the application of iterative solvers [152]. In Sect. 3.1, an analysis of the causes of ill-conditioning in immersed finite element systems is presented. Preconditioners which effectively resolve these conditioning problems are discussed in Sect. 3.2. Section 3.3 provides a detailed discussion about Schwarz preconditioners, which have commonly been applied to immersed finite element systems in recent years.

3.1 Conditioning Analysis

3.1.1 Condition Number

The conditioning of a linear system is often used as an indication of the difficulty of solving that linear system by means of an iterative solver. A common measure of the conditioning of a system matrix is the (Euclidean) condition number, \(\kappa \left( \textbf{A}\right) \), which is defined as the product of the norm of the system matrix and the norm of its inverse:

\[ \kappa \left( \textbf{A}\right) = \Vert \textbf{A} \Vert _2 \, \Vert \textbf{A}^{-1} \Vert _2 . \tag{3.1} \]

While the condition number formally quantifies the sensitivity of the solution to perturbations in the right-hand side, it is also related to the convergence of iterative solvers. It is noted that the convergence of these solvers depends on more factors than the condition number alone, such as the clustering of eigenvalues and the orthogonality of eigenvectors [161, 162].

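Based on the equality between the matrix energy norm \(\Vert \textbf{y} \Vert _{\textbf{A}}\) of a vector \(\textbf{y}\) in the vector space \(\ell ^2(N)\) and the operator norm \(\Vert v_h \Vert _{a_h}\) of the corresponding function \(v_h = \sum _i y_i \phi _i\) in the isomorphic function space \(V_h\), the norm of the (symmetric) system matrix \(\textbf{A}\) can be written as

\[ \Vert \textbf{A} \Vert _2 = \sup _{\textbf{y},\textbf{z} \in \ell ^2(N)} \frac{\textbf{z}^{T} \textbf{A} \textbf{y}}{\Vert \textbf{z} \Vert _2 \Vert \textbf{y} \Vert _2} = \sup _{v_h, w_h \in V_h} \frac{a_h(v_h, w_h)}{\Vert \textbf{z} \Vert _2 \Vert \textbf{y} \Vert _2} = \sup _{v_h \in V_h} \frac{\Vert v_h \Vert _{a_h}^2}{\Vert \textbf{y} \Vert _2^2}, \tag{3.2} \]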

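with \(w_h = \sum _i z_i \phi _i \in V_h\) and \(\textbf{z} \in \ell ^2(N)\). Similarly, for the norm of the inverse of \(\textbf{A}\) it can be written that

\[ \Vert \textbf{A}^{-1} \Vert _2 = \sup _{\textbf{y} \in \ell ^2(N)} \frac{\Vert \textbf{y} \Vert _2^2}{\Vert \textbf{y} \Vert _{\textbf{A}}^2} = \sup _{v_h \in V_h} \frac{\Vert \textbf{y} \Vert _2^2}{\Vert v_h \Vert _{a_h}^2}. \tag{3.3} \]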

The identities in (3.2) and (3.3) convey that the condition number of a linear system emanating from an (immersed) finite element formulation is determined by the tightness of the equivalence between, on one side, the vector norm \(\Vert \textbf{y}\Vert _2\) of the coefficient vector, and, on the other side, the operator norm \(\Vert v_h \Vert _{a_h}\) of the corresponding function, on the isomorphic spaces \(\ell ^2(N)\) and \(V_h\). The weakness of this norm equivalence for systems that contain small cut elements is the root cause of ill-conditioning in immersed finite element methods.

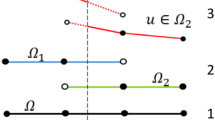

The norms defined above can be employed to provide an estimate, or lower bound, for the condition number of a system with a small cut element. To derive this estimate, we consider a two-dimensional cut-element scenario as indicated in Fig. 4. First, we define the volume fraction \(\eta _i\) of the cut element \(T_i\in \mathcal {T}_h^{\text {cut}}\) as the ratio between the volume of the intersection of the element with the domain \(\Omega \) and the volume of the full element in the background grid:

\[ \eta _i = \frac{\vert T_i \cap \Omega \vert }{\vert T_i \vert }. \tag{3.4} \]

Second, we note that in a polynomial basis of order p it is generally possible to construct a function \(v_h \in V_h\) of the form

\[ v_h(\varvec{\xi }) = \xi _1^p \, \xi _2^p \quad \text {on } T_i, \tag{3.5} \]

with \(\varvec{\xi }=(\xi _1,\xi _2)\) a local coordinate that has its origin \(\varvec{\xi } = \left( 0,0 \right) \) at the (not-cut) vertex of \(T_i\), as indicated in Fig. 4, and where the corresponding coefficient vector \(\textbf{y}\) in the vector space \(\ell ^2(N)\) has a norm \(\Vert \textbf{y} \Vert _2 \approx 1\), depending on the employed basis (e.g., B-splines, Lagrange, or integrated Legendre). Under the assumption that element \(T_i\) is cut by a natural boundary such that the weak form does not contain boundary terms, the operator norm of \(v_h\) scales as

\[ \Vert v_h \Vert _{a_h}^2 \sim \eta _i^{2p}. \tag{3.6} \]

This shows that while the \(\ell ^2\) vector norm \(\Vert \textbf{y} \Vert _2\) is not affected by the volume fraction \(\eta _i\), the operator norm \(\Vert v_h \Vert _{a_h}\) and, by equivalence, the matrix energy norm scale with the volume fraction \(\eta _i\). It therefore follows from (3.3) that

\[ \Vert \textbf{A}^{-1} \Vert _2 \ge \frac{\Vert \textbf{y} \Vert _2^2}{\Vert v_h \Vert _{a_h}^2} \gtrsim \eta _i^{-2p}, \tag{3.7} \]

with \(\gtrsim \) denoting an inequality up to a constant (i.e., \(a \gtrsim b\) indicates \(a \ge C b\) for some constant \(C > 0\) independent of \(\eta _i\)). As the norm \(\Vert \textbf{A}\Vert _2\) does not depend on the volume fraction \(\eta _i\), this results in a condition number \(\kappa (\textbf{A}) \gtrsim \eta _i^{-2p}\). In [152], this derivation is performed in an abstract setting for more general cut scenarios (under certain shape assumptions), for different numbers of dimensions, and with different boundary conditions. This results in the following estimate of the condition number of linear systems derived from immersed finite element formulations of second order problems in \(d\) spatial dimensions:

\[ \kappa (\textbf{A}) \gtrsim \eta ^{-(2p+1-2/d)}, \tag{3.8} \]

with \(\eta \) denoting the smallest volume fraction in the system, i.e., \(\eta = \min _{i} \eta _i\). The scaling relation (3.8) is numerically verified in [152] for different grid sizes, discretization orders, and for both \(C^0\)-continuous and maximum continuity spline bases.
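The dependence of the condition number on the volume fraction can be observed in a minimal setting. The sketch below assumes a hypothetical 1D Poisson problem with linear elements, a strongly imposed Dirichlet condition at \(x = 0\) and a natural condition at the unfitted end, where the last background element has volume fraction `eta`. The basis function of the node just outside the domain has energy \(\eta /h\) while its coefficient vector is a unit vector, so \(\kappa (\textbf{A})\) grows like \(\eta ^{-1}\). Because this small eigenvalue belongs to a single basis function, symmetric Jacobi scaling removes the \(\eta \)-dependence, which anticipates the distinction made in Sect. 3.1.2.

```python
import numpy as np

def cut_stiffness(eta, n_full=9, h=0.1):
    """Stiffness matrix of -u'' with linear elements on (0, (n_full + eta) h).

    The last of the n_full + 1 background elements is cut with volume fraction eta;
    its basis functions are integrated over the physical part only. The Dirichlet
    node at x = 0 is eliminated, so the returned matrix is SPD."""
    n_el = n_full + 1
    A = np.zeros((n_el + 1, n_el + 1))
    for e in range(n_el):
        a = 1.0 if e < n_full else eta           # volume fraction of element e
        A[e:e + 2, e:e + 2] += (a / h) * np.array([[1.0, -1.0], [-1.0, 1.0]])
    return A[1:, 1:]                             # strong condition u(0) = 0

def jacobi_scaled(A):
    """Symmetric diagonal scaling D^{-1/2} A D^{-1/2}."""
    d = 1.0 / np.sqrt(np.diag(A))
    return d[:, None] * A * d[None, :]

for eta in (1e-3, 1e-4, 1e-5):
    A = cut_stiffness(eta)
    print(f"eta={eta:.0e}  cond(A)={np.linalg.cond(A):.3e}  "
          f"cond(D^-1/2 A D^-1/2)={np.linalg.cond(jacobi_scaled(A)):.3e}")
```

Each reduction of \(\eta \) by a factor of ten increases the unscaled condition number by roughly the same factor, while the scaled condition number stays essentially constant.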

Note that the shape of the cut element does not appear in the condition number estimate. This is because, within certain shape-regularity assumptions (see [152] for details), the shape of the cut element is not a dominant factor in the order of magnitude of the smallest eigenvalue of the system matrix. When the system matrix is (diagonally) scaled, as will be discussed below, the shape of the cut element does play a role. It should be mentioned that, under the same shape-regularity assumptions, the derivation in [152] also establishes that when a local, element-wise Nitsche parameter is employed, the condition number estimate is not affected by the type of boundary condition imposed on the (cut) boundary of the smallest cut element. When a global Nitsche parameter is employed, its value is determined by the smallest cut element. While this does not affect the smallest eigenvalue of the system, it makes the largest eigenvalue dependent on the smallest cut element as well, resulting in even larger condition numbers.

3.1.2 Effect of Diagonal Scaling, Smoothness and the Cut-Element Shape

The previous subsection considered the condition number of the system matrix \(\textbf{A}\) as is, without any treatment. It is noted, however, that linear algebra solvers typically perform basic rescaling procedures, most notably diagonal scaling operations such as Jacobi, Gauss-Seidel or SSOR preconditioning [163]. Consequently, it is important not only to consider the condition number itself, but also to examine the coefficient vector \(\textbf{y}\) corresponding to a function with a very small operator norm \(\Vert v_h \Vert _{a_h}\). As indicated in [152], there exist two mechanisms by which the operator norm of a function can be much smaller than the norm of the corresponding coefficient vector. First, a basis function itself can be very small. In this case, the unit vector corresponding to that specific basis function already causes a large condition number through (3.3) (i.e., with this unit vector taken as \(\textbf{y}\), the quotient in this equation is very large). Second, on small cut elements the dependence of certain basis functions on a specific parametric coordinate or on higher-order terms can diminish. This essentially reduces the dimension of the space spanned by the basis functions on the small cut element, such that these basis functions become almost linearly dependent (see Fig. 5). In this case, the small function \(v_h\) as in (3.5) cannot be represented by a unit vector, and the coefficient vector \(\textbf{y}\) corresponding to the small function requires multiple nonzero entries. This is an important nuance in relation to the scaling of the system, as small eigenvalues that correspond to an (almost) unit vector are resolved by diagonal scaling, while small eigenvalues caused by almost linear dependencies are not.

Fig. 5 Illustration of an unfitted boundary with different types of cut elements. Element \(T_{1,\Omega }\) contains a vertex. With maximal continuity splines, only one basis function is supported exclusively on this element (for a linear basis, the node corresponding to this function is indicated by the blue dot). Rescaling the basis functions resolves the conditioning problems for this type of cut element. Element \(T_{2,\Omega }\) does not contain a vertex, and multiple basis functions are supported exclusively on this element (with a linear basis, these are the basis functions corresponding to the nodes indicated with the green dots). If the volume fraction of this element is very small, the dependence of the basis functions on the horizontal coordinate (relative to the dependence on the vertical coordinate) diminishes, such that the basis functions describe essentially the same degree of freedom and become almost linearly dependent. Therefore, rescaling the basis functions does not resolve the conditioning problems on this type of cut element. In a similar manner, the contribution of higher-order terms diminishes relative to the contribution of linear terms on small cut elements. As a result, with higher-order discretizations that are not of maximal continuity, almost linear dependencies generally occur on small cut elements of any shape, whereas with discretizations of maximal continuity they only occur on elements that are cut in a specific manner.

Because of the above-discussed distinction between the classes of small eigenvalues in immersed finite element systems, there is a significant difference in conditioning between, on the one hand, higher-order (\(p \ge 2\)) \(C^0\)-polynomials, and, on the other hand, maximal continuity splines and linears (note that linears are a subclass of maximal continuity splines). This is because in the former case, almost linear dependencies occur on all small cut elements. For such systems, Jacobi preconditioning lowers the condition number, but the resulting systems are still ill-conditioned and the condition number still depends on the smallest cut element. For maximum continuity splines, the shape of small cut elements starts to play a role. On elements in which a vertex is contained in \(T_{\Omega }\), as indicated by element \(T_{1,\Omega }\) in Fig. 5, a discretization with maximum continuity splines contains only a single basis function whose support is restricted to the small cut element. Therefore, a Jacobi preconditioner suffices to repair the conditioning with regard to that element. On cut elements where \(T_{\Omega }\) does not contain a vertex, as indicated by element \(T_{2,\Omega }\) in Fig. 5, the diminished dependence on the (in this case horizontal) parametric coordinate causes almost linear dependencies. Consequently, such elements still cause ill-conditioning even after diagonal rescaling of immersed systems with maximum continuity splines. Because of this dependence on the continuity of the basis, a scaled linear system derived from an unfitted discretization with maximum continuity splines will contain far fewer problematically small eigenvalues than a scaled linear system derived from an unfitted discretization with \(C^0\)-continuous basis functions of the same degree \(p \ge 2\).
Depending on the size of the system and the smoothness of the boundary, the number of problematic eigenvalues in an immersed isogeometric discretization in conjunction with diagonal scaling can even be small enough to render direct application of a Krylov-subspace based iterative solver feasible. Because only cut elements of a specific shape cause small eigenvalues for systems based on maximum continuity splines in conjunction with diagonal scaling, the overall smallest volume fraction and the scaled condition number are in general only weakly correlated in such systems [152].
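The second mechanism can be made concrete with a hypothetical configuration resembling element \(T_{2,\Omega }\): a unit bilinear element whose intersection with \(\Omega \) is the small rectangle \([0.5-\varepsilon , 0.5+\varepsilon ] \times [0, \varepsilon ]\) at the middle of its bottom edge. The sketch assumes that \(\Omega \) enters the element only through this part of its bottom edge, so that the neighbors sharing the two top vertices do not intersect \(\Omega \) and the corresponding basis functions are supported only on this intersection. It computes their energy Gram matrix (the corresponding 2x2 block of the system matrix) by Gauss quadrature. On the intersection both gradients are dominated by the same vertical component, and the condition number after diagonal scaling grows like \(\varepsilon ^{-2}\) (for this geometry it equals \(3/(8\varepsilon ^2)\)). The small eigenvalue corresponds to the difference of the two functions, i.e., to a coefficient vector with two nonzero entries.

```python
import numpy as np

def top_vertex_gram(eps, n_gauss=3):
    """Energy Gram matrix int_R grad(phi_i) . grad(phi_j) dx of the bilinear basis
    functions phi_1 = x*y and phi_2 = (1-x)*y of the unit element [0, 1]^2, integrated
    over the hypothetical intersection R = [0.5-eps, 0.5+eps] x [0, eps]."""
    s, w = np.polynomial.legendre.leggauss(n_gauss)  # exact for these quadratic integrands
    x, wx = 0.5 + eps * s, eps * w                   # map [-1, 1] -> [0.5-eps, 0.5+eps]
    y, wy = 0.5 * eps * (s + 1.0), 0.5 * eps * w     # map [-1, 1] -> [0, eps]
    X, Y = np.meshgrid(x, y, indexing="ij")
    W = np.outer(wx, wy)
    grads = [(Y, X), (-Y, 1.0 - X)]                  # gradients of phi_1 and phi_2
    G = np.empty((2, 2))
    for i, (gix, giy) in enumerate(grads):
        for j, (gjx, gjy) in enumerate(grads):
            G[i, j] = np.sum(W * (gix * gjx + giy * gjy))
    return G

def cond_after_jacobi(G):
    """Condition number after symmetric diagonal scaling D^{-1/2} G D^{-1/2}."""
    d = 1.0 / np.sqrt(np.diag(G))
    return np.linalg.cond(d[:, None] * G * d[None, :])

for eps in (1e-1, 1e-2, 1e-3):
    G = top_vertex_gram(eps)
    print(f"eps={eps:.0e}  cond(G)={np.linalg.cond(G):.3e}  "
          f"after Jacobi={cond_after_jacobi(G):.3e}  3/(8 eps^2)={3 / (8 * eps**2):.3e}")
```

Since both diagonal entries are equal here, diagonal scaling does not change the condition number at all; only a local change of basis that separates the two almost parallel functions can remove the small eigenvalue.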

3.2 Preconditioning and Literature Overview

In view of the conditioning analysis presented above, the ill-conditioning of immersed FEM systems can be effectively counteracted by dedicated preconditioning techniques. The general idea of preconditioning is to construct a preconditioning matrix, \(\textbf{B}\), which approximates the inverse of the system matrix \(\textbf{A}\), and then to solve the preconditioned system

Although the original system matrix \(\textbf{A}\) in equation (2.8) can be ill-conditioned, i.e., \(\kappa (\textbf{A}) \gg 1\), a properly formed preconditioner results in a well-conditioned preconditioned system matrix, i.e., \(\kappa ( \textbf{B} \textbf{A} )\approx \kappa ( \textbf{A}^{-1} \textbf{A} ) = \kappa ( \textbf{I} ) = 1\). In constructing the preconditioner \(\textbf{B}\), one balances the computational effort required to compute and apply the preconditioner with the extent to which the inverse of the original system matrix is approximated.

Various dedicated preconditioning techniques to resolve ill-conditioning problems caused by small cut elements have been developed in the context of GFEM and XFEM, such as: a preconditioner based on local Cholesky decompositions [164], a FETI-type preconditioner tailored to XFEM [165], an algebraic multigrid preconditioner that is based on the Schur complement of the enriched basis functions [166], and domain decomposition preconditioners based on additive Schwarz [167, 168].

In recent years, dedicated preconditioners have been developed for immersed FEM. It is demonstrated in [169] that systems with linear bases can effectively be treated by a diagonal preconditioner in combination with the removal of very small basis functions. Under certain restrictions on the cut-element geometry, it is derived in [170] that a scalable preconditioner for linear bases is obtained by combining a Jacobi preconditioner for basis functions on cut elements with a standard multigrid preconditioner for interior basis functions that do not intersect the boundary. In [152], a preconditioner is developed that combines diagonal scaling with local Cholesky factorizations. This technique is motivated by the analysis of the conditioning problems of immersed methods in the previous section, and can be interpreted as a local change of basis on small cut elements, where the Cholesky factorizations correspond to local orthonormalization procedures for almost linearly dependent basis functions, which are identified by a tailored algorithm. The resulting preconditioner effectively resolves ill-conditioning for immersed methods discretized with higher-order (continuous) bases.

Recently, Schwarz preconditioners, which were already mentioned above in the context of GFEM and XFEM [167, 168], have also gained momentum in immersed finite elements. The concept of Schwarz preconditioning to overcome the problem of linear dependencies on small cut elements was considered in [64, 171] for both linear and higher-order discretizations. This concept was generalized to multilevel hp-finite element bases in [63], where it was also demonstrated to be effective in parallel computing frameworks. In [172], Schwarz preconditioning of unfitted systems was applied as a smoother in a multigrid solver, making it suitable for large scale computations. The methodology was applied in high-performance parallel-computing settings in [173, 174], with [174] demonstrating excellent scalability for problems with multi-billion degrees of freedom distributed over close to \(10^5\) cores. A scalable Balancing Domain Decomposition by Constraints (BDDC) preconditioner, tailored to immersed FEM by choosing appropriate weighting coefficients for cut basis functions, has been proposed in [175]. BDDC methods are multilevel additive domain decomposition algorithms that can scale up to millions of cores/subdomains [176]. The preconditioner in [175] is effective for linear basis functions and exhibits the same parallelism potential as standard BDDC, which makes it well suited for large-scale systems on distributed-memory machines.
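A minimal dense sketch of an additive Schwarz preconditioner in the standard form \(\textbf{B} = \sum _i \textbf{R}_i^T (\textbf{R}_i \textbf{A} \textbf{R}_i^T)^{-1} \textbf{R}_i\), with Boolean restriction matrices \(\textbf{R}_i\) selecting (possibly overlapping) index blocks, is given below. To isolate the effect of almost linear dependencies, it uses a hypothetical synthetic system rather than an actual immersed discretization: a well-conditioned SPD matrix \(\textbf{A}_0\) is expressed in a basis in which the last basis vector is \(\textbf{e}_{n-1} + \delta \textbf{e}_{n}\) (1-based), which is almost parallel to the preceding basis vector \(\textbf{e}_{n-1}\) for small \(\delta \). Jacobi scaling (blocks of size one) leaves a condition number that grows like \(\delta ^{-2}\), whereas a block containing both indices undoes the local change of basis, in the spirit of the local orthonormalization interpretation of [152] given above, so that the spectrum of \(\textbf{B}\textbf{A}\) no longer depends on \(\delta \).

```python
import numpy as np

def additive_schwarz(A, blocks):
    """Dense additive Schwarz preconditioner B = sum_i R_i^T (R_i A R_i^T)^{-1} R_i."""
    B = np.zeros_like(A)
    for block in blocks:
        ix = np.ix_(block, block)
        B[ix] += np.linalg.inv(A[ix])         # local solve on index block i
    return B

def preconditioned_condition(B, A):
    """Spectral condition number of BA for SPD B and A, computed via B = L L^T."""
    L = np.linalg.cholesky(B)
    lam = np.linalg.eigvalsh(L.T @ A @ L)     # same eigenvalues as BA
    return lam.max() / lam.min()

def condition_numbers(delta, n=8):
    # Well-conditioned SPD reference matrix: 1D Laplacian with Dirichlet ends.
    A0 = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
    # Change of basis: the last column becomes e_{n-2} + delta * e_{n-1} (0-based),
    # which is almost parallel to the preceding column e_{n-2} for small delta.
    T = np.eye(n)
    T[n - 2, n - 1], T[n - 1, n - 1] = 1.0, delta
    A = T.T @ A0 @ T
    jacobi = [[i] for i in range(n)]
    schwarz = [[i] for i in range(n - 2)] + [[n - 2, n - 1]]
    return (preconditioned_condition(additive_schwarz(A, jacobi), A),
            preconditioned_condition(additive_schwarz(A, schwarz), A))

for delta in (1e-1, 1e-2, 1e-3):
    k_jac, k_asm = condition_numbers(delta)
    print(f"delta={delta:.0e}  kappa(Jacobi)={k_jac:.3e}  kappa(Schwarz)={k_asm:.3e}")
```

Because the change of basis acts only within the two-index block, the block inverse reproduces it exactly, and \(\textbf{B}\textbf{A}\) is similar to the block-Jacobi-preconditioned reference matrix; in an immersed discretization, the blocks would instead be chosen around (small) cut elements.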

It is worth mentioning that dedicated preconditioners have also been developed and investigated for ghost-penalty stabilized discretizations. As will be demonstrated in Sect. 4, such stabilized systems do not suffer from the typical conditioning problems related to small cut elements discussed in Sect. 3.1. Nevertheless, the different problem setting and the additional terms warrant an investigation into the applicability of efficient solvers, in particular multigrid techniques. Dedicated multigrid routines for Nitsche-based ghost-penalty stabilized methods are presented in [177,178,179]. Notably, [179] also presents multigrid routines for Lagrange multiplier based methods; see also [180, 181]. Furthermore, [182] and [183] follow an approach similar to that of [170] (discussed above). These references demonstrate that a stable splitting of the degrees of freedom exists. A scalable solver is then obtained by combining a standard multigrid technique for a well-defined set of internal degrees of freedom with a diagonal preconditioner for the degrees of freedom along the boundary. This technique is only applicable to ghost-penalty stabilized systems, but, in contrast to [170], it can also be applied to higher-order discretizations. A final noteworthy contribution is [184], which presents a preconditioner for ghost-penalty stabilized immersed interface problems with high contrast. There, a different splitting of the degrees of freedom is applied to define a Schur complement. Based on this Schur complement, preconditioners are constructed that are robust with respect to high contrast ratios.

The remainder of this section focuses on Schwarz preconditioning and the considerations regarding its application to systems derived from immersed finite element methods.

3.3 Schwarz Preconditioning

3.3.1 Concept of Schwarz Preconditioning

The concept of Schwarz preconditioning is to invert (restrictions of) local blocks of the system matrix \(\textbf{A}\in \mathbb {R}^{N \times N}\) and then sum these contributions to form the preconditioner. To provide a definition, we consider a set of \(n\) (potentially overlapping) index blocks, where the i-th index block contains \(M_i \ge 1\) indices. The additive Schwarz preconditioner is then defined as

\[\textbf{B}_{\textsc {AS}} = \sum _{i=1}^{n} \textbf{B}_i = \sum _{i=1}^{n} \textbf{P}_i \textbf{A}_i^{-1} \textbf{P}_i^T, \qquad \textbf{A}_i = \textbf{P}_i^T \textbf{A} \textbf{P}_i,\]

and the multiplicative Schwarz preconditioner as

\[\textbf{B}_{\textsc {MS}} = \left[ \textbf{I} - (\textbf{I} - \textbf{B}_n \textbf{A}) (\textbf{I} - \textbf{B}_{n-1} \textbf{A}) \cdots (\textbf{I} - \textbf{B}_1 \textbf{A}) \right] \textbf{A}^{-1}.\]

The prolongation operator \(\textbf{P}_i \in \mathbb {R}^{N \times M_i}\) consists of the unit vectors corresponding to the \(M_i\) indices in the i-th index block. Pre- and post-multiplying the system matrix \(\textbf{A}\in \mathbb {R}^{N \times N}\) with the transpose of this prolongation operator and the operator itself, respectively, restricts it to the submatrix \(\textbf{A}_i\in \mathbb {R}^{M_i \times M_i}\). This submatrix consists of only the indices in the i-th index block. Conversely, pre- and post-multiplying the local inverse \(\textbf{A}_i^{-1}\in \mathbb {R}^{M_i \times M_i}\) with \(\textbf{P}_i\) and \(\textbf{P}_i^T\) injects it into the matrix \(\textbf{B}_i\in \mathbb {R}^{N \times N}\). Since the index blocks may overlap, several local inverses can contribute to the same entry. In additive Schwarz these contributions are summed in \(\textbf{B}_{\textsc {AS}}\), and all blocks are treated simultaneously. In multiplicative Schwarz, the blocks are treated sequentially, such that each local correction acts on the residual updated by the preceding blocks. Application of the multiplicative Schwarz preconditioner can be expressed by recursive relations; see, e.g., [172]. For index blocks consisting of a single index, additive Schwarz reduces to Jacobi preconditioning, and multiplicative Schwarz reduces to Gauss-Seidel preconditioning. The formal definition and details about the construction of Schwarz preconditioners are presented in [64] and [172].
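The definitions above can be illustrated with a small dense sketch in Python/NumPy. It assumes that index blocks are given as lists of integer indices. It also uses plain dense inverses of the submatrices \(\textbf{A}_i\), which is only appropriate for small, well-conditioned illustrations; stabilized inverses are discussed in Sect. 3.3.5. The helper names are hypothetical and do not correspond to the implementations in [64] or [172]. For clarity, the multiplicative sweep also recomputes the full residual for every block.

```python
import numpy as np

def local_inverses(A, blocks):
    """Yield (indices, A_i^{-1}) with A_i = P_i^T A P_i for each index block."""
    for idx in blocks:
        idx = np.asarray(idx)
        A_i = A[np.ix_(idx, idx)]          # restriction P_i^T A P_i
        yield idx, np.linalg.inv(A_i)      # plain inverse, adequate for well-conditioned blocks only

def additive_schwarz_matrix(A, blocks):
    """Assemble B_AS = sum_i P_i A_i^{-1} P_i^T as a dense matrix."""
    B = np.zeros(A.shape)
    for idx, A_i_inv in local_inverses(A, blocks):
        B[np.ix_(idx, idx)] += A_i_inv     # injection P_i A_i^{-1} P_i^T; overlapping entries are summed
    return B

def multiplicative_schwarz_apply(A, blocks, r):
    """Return B_MS r: one sequential sweep over the blocks, starting from x = 0."""
    x = np.zeros(A.shape[0])
    for idx, A_i_inv in local_inverses(A, blocks):
        residual = r - A @ x               # residual updated by all preceding blocks
        x[idx] += A_i_inv @ residual[idx]  # local correction P_i A_i^{-1} P_i^T residual
    return x
```

With single-index blocks, `additive_schwarz_matrix` returns \(\mathrm{diag}(\textbf{A})^{-1}\) (Jacobi), and `multiplicative_schwarz_apply` performs one forward Gauss-Seidel sweep. One sweep from a zero initial guess reproduces \(\textbf{B}_{\textsc {MS}}\) applied to the residual, with the blocks processed in the order 1 to \(n\).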

3.3.2 Application to Immersed Systems

Schwarz preconditioners can be conceived of as locally orthonormalizing the basis functions corresponding to the indices in a block. To employ Schwarz preconditioning effectively as a tailored preconditioner for unfitted systems, it is therefore essential that every set of almost linearly dependent functions is contained in some index block. Almost linear dependencies occur between basis functions that are supported on a small cut element. The index blocks are therefore generally chosen by selection procedures based on the overlapping supports of basis functions, either for all active elements in \(\mathcal {T}_h\) or only for the cut elements in \(\mathcal {T}_h^{\text {cut}}\). The selection of index blocks is discussed in more detail later in this section.

A further interpretation of Schwarz preconditioning in relation to immersed finite element methods can be obtained from the additive Schwarz lemma. This lemma states that, for a symmetric positive definite (SPD) matrix \(\textbf{A}\), the \(\textbf{B}_{\textsc {AS}}^{-1}\)-inner product of an arbitrary vector \(\textbf{y}\) with itself satisfies [185, 186] (see [187, 188] for this specific form)

\[\textbf{y}^T \textbf{B}_{\textsc {AS}}^{-1} \textbf{y} = \min _{\textbf{y} = \sum _j \textbf{P}_j \tilde{\textbf{y}}_j} \sum _i \tilde{\textbf{y}}_i^T \textbf{A}_i \tilde{\textbf{y}}_i = \min _{\textbf{y} = \sum _j \textbf{P}_j \tilde{\textbf{y}}_j} \sum _i \Vert \tilde{\textbf{y}}_i \Vert _{\textbf{A}_i}^2 . \tag{3.12}\]

In these identities, \(\tilde{\textbf{y}}_i\in \mathbb {R}^{M_i}\) denotes a block vector corresponding to the i-th index block. The constraint \(\textbf{y} = \sum _j \textbf{P}_j \tilde{\textbf{y}}_j\) indicates that the sum of the prolongations of these block vectors forms a partition of the vector \(\textbf{y}\in \mathbb {R}^{N}\). A set of block vectors with this property exists, provided that every index is contained in at least one index block. If the blocks do not overlap, this set of block vectors is unique. If the index blocks do overlap, multiple sets of block vectors have the partition property. Accordingly, the lemma states the following. The \(\textbf{B}_{\textsc {AS}}^{-1}\)-inner product of \(\textbf{y}\) equals the minimum of the sum of the \(\textbf{A}_i\)-inner products of the block vectors \(\tilde{\textbf{y}}_i\). The minimum is taken over all sets of block vectors with the partition property.
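The additive Schwarz lemma can be checked numerically on a small example. The sketch below uses dense NumPy with hypothetical helper names. A 1D Laplacian stands in for an SPD system matrix, and two blocks overlap in one index. The block vectors \(\tilde{\textbf{y}}_i = \textbf{A}_i^{-1}\textbf{P}_i^T\textbf{B}_{\textsc {AS}}^{-1}\textbf{y}\) form a partition of \(\textbf{y}\) and attain the minimum in (3.12). Any other partition gives a sum that is at least as large, for instance one that assigns the shared index entirely to the first block.

```python
import numpy as np

def additive_schwarz_matrix(A, blocks):
    """B_AS = sum_i P_i A_i^{-1} P_i^T (dense, for illustration only)."""
    B = np.zeros(A.shape)
    for idx in blocks:
        B[np.ix_(idx, idx)] += np.linalg.inv(A[np.ix_(idx, idx)])
    return B

def minimizing_block_vectors(A, blocks, y):
    """Block vectors y_i = A_i^{-1} P_i^T B_AS^{-1} y, which attain the minimum of the lemma."""
    z = np.linalg.solve(additive_schwarz_matrix(A, blocks), y)
    return [np.linalg.solve(A[np.ix_(idx, idx)], z[idx]) for idx in blocks]

def prolongate(n, blocks, parts):
    """Sum of the prolongations P_i y_i."""
    y = np.zeros(n)
    for idx, part in zip(blocks, parts):
        y[idx] += part
    return y

def block_energy(A, blocks, parts):
    """Sum of the A_i-inner products y_i^T A_i y_i."""
    return sum(part @ A[np.ix_(idx, idx)] @ part for idx, part in zip(blocks, parts))

n = 5
A = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)   # 1D Laplacian (SPD)
blocks = [[0, 1, 2], [2, 3, 4]]                        # index 2 is shared by both blocks
y = np.random.default_rng(0).standard_normal(n)

lhs = y @ np.linalg.solve(additive_schwarz_matrix(A, blocks), y)   # y^T B_AS^{-1} y
parts = minimizing_block_vectors(A, blocks, y)
alt = [y[[0, 1, 2]], np.array([0.0, y[3], y[4]])]      # another partition: index 2 only in block 0
```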

To relate this to the specific conditioning problems of immersed finite element methods, recall that \(\textbf{B}_{\textsc {AS}}\) can be considered as a sparse approximation of \(\textbf{A}^{-1}\). Efficient preconditioning requires \(\textbf{B}_{\textsc {AS}}\) to have properties similar to those of \(\textbf{A}^{-1}\). Equivalently, \(\textbf{B}_{\textsc {AS}}^{-1}\) must have properties similar to those of \(\textbf{A}\). The analysis in Sect. 3.1 conveys the principal cause of the conditioning problems associated with immersed finite element methods. Potentially, \(\Vert \textbf{y} \Vert _2 \gg \Vert \textbf{y} \Vert _{\textbf{A}}\) when \(\textbf{y}\) corresponds to a function composed of (i) a very small basis function or (ii) almost linearly dependent basis functions. Suppose that in either case \(\textbf{y}\) can be written as the prolongation \(\textbf{y} = \textbf{P}_i \tilde{\textbf{y}}_i\) of a single block vector. Choosing this block vector, and zero block vectors for all other blocks, in (3.12) then yields \(\textbf{y}^T \textbf{B}_{\textsc {AS}}^{-1} \textbf{y} \le \tilde{\textbf{y}}_i^T \textbf{A}_i \tilde{\textbf{y}}_i = \textbf{y}^T \textbf{A} \textbf{y}\). Hence \(\textbf{y}\) also has a small \(\textbf{B}_{\textsc {AS}}^{-1}\)-inner product. In the first case, \(\textbf{y}\) involves a single index, which is contained in at least one index block. This property is therefore captured by the additive Schwarz preconditioner, independent of the choice of index blocks. For the second case, assume that every set of almost linearly dependent functions is contained in some index block, in accordance with the requirement stated above. Then \(\textbf{y}\) can again be written as the prolongation of a single block vector, so this property is also captured by the additive Schwarz preconditioner. As a result, with a proper choice of the index blocks, the modes associated with both causes of very small eigenvalues of \(\textbf{A}\) are also present in \(\textbf{B}_{\textsc {AS}}^{-1}\). The Schwarz preconditioner thus specifically targets the small eigenvalues caused by small cut elements (for both linear and higher-order discretizations). It thereby effectively resolves the ill-conditioning of systems derived from immersed formulations.
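A synthetic experiment can illustrate case (ii). It starts from a well-conditioned SPD matrix, a 1D Laplacian plus identity, which stands in for a boundary-fitted system. A hypothetical change of basis then replaces basis function \(j+1\) by \(\phi_j + \varepsilon \phi_{j+1}\). As a result, functions \(j\) and \(j+1\) become almost linearly dependent, as they would on a small cut element. This is a constructed example, not an immersed discretization. In this setting, diagonal (Jacobi) scaling leaves a condition number of order \(\varepsilon^{-2}\). An additive Schwarz preconditioner with one block containing both functions restores a moderate condition number.

```python
import numpy as np

def additive_schwarz_matrix(A, blocks):
    """B_AS = sum_i P_i A_i^{-1} P_i^T (dense, for illustration only)."""
    B = np.zeros(A.shape)
    for idx in blocks:
        B[np.ix_(idx, idx)] += np.linalg.inv(A[np.ix_(idx, idx)])
    return B

def spectral_condition(M):
    """Ratio of extreme eigenvalues of a matrix with real positive spectrum (e.g. B A)."""
    lam = np.linalg.eigvals(M).real
    return lam.max() / lam.min()

n, j, eps = 8, 3, 1e-4
A0 = 3 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)   # eigenvalues in (1, 5)

# Hypothetical basis change: column j+1 of T is e_j + eps * e_{j+1}
T = np.eye(n)
T[j, j + 1] = 1.0
T[j + 1, j + 1] = eps
A = T.T @ A0 @ T                                        # pair (j, j+1) almost linearly dependent

d = 1.0 / np.sqrt(np.diag(A))
cond_plain = np.linalg.cond(A)
cond_jacobi = np.linalg.cond(d[:, None] * A * d[None, :])          # diagonal scaling only

blocks = [[k] for k in range(n) if k not in (j, j + 1)] + [[j, j + 1]]
cond_schwarz = spectral_condition(additive_schwarz_matrix(A, blocks) @ A)
print(f"plain {cond_plain:.1e}, Jacobi {cond_jacobi:.1e}, Schwarz {cond_schwarz:.1f}")
```

The Schwarz result is consistent with the argument above. The vector with entries \(+1\) and \(-1\) at positions \(j\) and \(j+1\) is the prolongation of a single block vector. Its tiny \(\textbf{A}\)-energy is therefore reproduced by \(\textbf{B}_{\textsc {AS}}^{-1}\), whereas Jacobi scaling cannot detect it.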

3.3.3 Effectivity and Multigrid Preconditioning

The effectivity of Schwarz preconditioning for immersed problems can be explained by the additive Schwarz lemma in combination with a suitable selection of the blocks. However, a formal mathematical bound on the eigenvalues of a Schwarz-preconditioned unfitted system has not yet been established. Numerical results nevertheless consistently show that the preconditioned systems behave like boundary-fitted systems. For second-order problems such as the Poisson equation, the condition number scales as \(h^{-2}\). Likewise, the number of iterations required to solve the linear system up to a prescribed tolerance is proportional to \(h^{-1}\) [64, 172].
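The two observed scalings are linked through the standard convergence bound of the (preconditioned) conjugate gradient method. Here \(\kappa\) denotes the condition number of the preconditioned system, \(\textbf{e}_k\) the error after \(k\) iterations, and \(\tau\) the prescribed relative tolerance. These symbols are introduced only for this derivation. The bound is an upper estimate, so it explains, rather than proves, the observed proportionality.

```latex
\Vert \textbf{e}_k \Vert _{\textbf{A}} \le 2 \left( \frac{\sqrt{\kappa}-1}{\sqrt{\kappa}+1} \right)^{k} \Vert \textbf{e}_0 \Vert _{\textbf{A}},
\qquad
\ln \frac{\sqrt{\kappa}+1}{\sqrt{\kappa}-1} \ge \frac{2}{\sqrt{\kappa}}
\quad\Longrightarrow\quad
k \ge \frac{\sqrt{\kappa}}{2} \ln \frac{2}{\tau}
\ \text{ suffices for } \
\frac{\Vert \textbf{e}_k \Vert _{\textbf{A}}}{\Vert \textbf{e}_0 \Vert _{\textbf{A}}} \le \tau ,
\qquad
\kappa \propto h^{-2} \ \Longrightarrow \ k \propto h^{-1}.
```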

To resolve the remaining grid dependence after Schwarz preconditioning, the Schwarz preconditioner can be applied as a smoother in a geometric multigrid framework. In [172,173,174] this is demonstrated to result in a methodology that is robust to cut elements. It solves linear systems with quasi-optimal complexity, i.e., at a computational cost that scales linearly with the number of degrees of freedom (DOFs). A delicate consideration in a multigrid framework is the choice between additive and multiplicative Schwarz. As demonstrated in [172], a multigrid solver with an additive Schwarz smoother requires a considerable amount of relaxation to be stable, which is why [172] employs a multiplicative implementation. As discussed later in this section, multiplicative Schwarz is less suited for parallelization. Parallel implementations therefore employ either additive Schwarz [174] or a hybrid variant [173]. Another important aspect of multigrid solvers with Schwarz smoothers is the dependence of their effectivity on the discretization order, which is investigated and discussed in [172].

3.3.4 Block Selection

As previously mentioned, Schwarz preconditioning is only effective if every combination of basis functions that can become almost linearly dependent is contained in an index block. This is generally achieved by selecting the blocks based on the overlap in the supports of basis functions. In [64], an index block is devised for every cut element, containing all basis functions supported on it. For basis functions that are not supported on any cut element, a simple diagonal scaling is performed. A tailored block selection procedure for multilevel hp-adaptive discretizations is developed in [63]. In this procedure, the set of basis functions supported on a refined (leaf) element is further restricted to only the necessary DOFs. Additionally, the procedure is optimized by devising blocks only for cut elements whose volume fraction is smaller than a certain threshold \(\eta ^* \in [0,1]\). For locally refined discretizations based on truncated hierarchical B-splines, a block selection strategy is presented in [172]. In [64] it is noted that, for vector-valued problems, degrees of freedom describing the solution in one geometrical dimension generally cannot form a linear dependency with degrees of freedom describing the solution in another geometrical dimension. Hence, it can be beneficial to generate separate index blocks for each geometrical dimension.
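The following is a minimal sketch of element-based block selection in the spirit of [64] and [63]. It creates one block per cut element whose volume fraction is below \(\eta^*\), with optional separate blocks per vector component. Single-index (diagonal) blocks cover all remaining DOFs. Several choices are assumptions for illustration, not the data structures of the cited works. These are the input layout (dictionaries mapping element ids to supported scalar basis functions and cut elements to volume fractions) and the interleaved numbering of vector components. Also assumed is that DOFs excluded by the threshold fall back to diagonal scaling.

```python
def select_blocks(elem_dofs, volume_fraction, n_dofs, eta_star=1.0, n_components=1):
    """
    Hypothetical element-based index-block selection.

    elem_dofs:       dict, element id -> scalar basis functions supported on that element
    volume_fraction: dict, cut element id -> |K ∩ Omega| / |K|
    eta_star:        only cut elements with a volume fraction below eta_star get a block
    n_components:    number of vector components; assumed interleaved numbering,
                     i.e. component c of scalar function a has DOF index a * n_components + c
    """
    blocks, covered = [], set()
    for elem, fraction in volume_fraction.items():
        if fraction >= eta_star:
            continue  # mildly cut element: no dedicated block
        scalar_dofs = sorted(elem_dofs[elem])
        for c in range(n_components):
            # separate block per component: DOFs of different components
            # generally do not form (almost) linear dependencies [64]
            block = [a * n_components + c for a in scalar_dofs]
            blocks.append(block)
            covered.update(block)
    # every remaining DOF gets a single-index block, i.e. plain diagonal scaling
    blocks.extend([k] for k in range(n_dofs) if k not in covered)
    return blocks
```

For example, consider three linear 1D elements with DOFs `[0, 1]`, `[1, 2]` and `[2, 3]`, where element 2 is cut with volume fraction 0.05. This yields the blocks `[[2, 3], [0], [1]]`. With `eta_star=0.01`, the procedure falls back to pure diagonal scaling.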

The most important consideration in the selection of index blocks is the size of the blocks. Small blocks are computationally inexpensive, but can miss almost linear dependencies on pathologically cut elements. Large blocks are more robust, but are computationally more expensive. With the Schwarz preconditioner directly employed in an iterative solver, the number of iterations is generally large enough for small blocks to be more efficient. When the Schwarz procedure is employed as a smoother in a multigrid method, the small number of iterations generally renders larger blocks more appropriate. For this reason, [172] and [174] consider block selection procedures based on multiple elements. In [172] an index block is created for every basis function, containing all the basis functions with a support that is encapsulated by the support of the function for which the block is created. Ref. [174] employs index blocks containing all basis functions supported on a cluster of \(2^d\) elements, with d the number of dimensions.
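The multi-element rule attributed above to [172] can be sketched with supports represented as sets of element indices. Two assumptions are made here. First, supports are restricted to the active elements of the immersed domain. Second, functions without any active element have already been removed. The 1D quadratic B-spline data are hypothetical, and the cluster-based blocks of [174] are not shown.

```python
def support_containment_blocks(supports):
    """
    One block per basis function i, containing every function j whose
    (restricted) support is a subset of the support of function i.
    """
    return [[j for j, s_j in enumerate(supports) if s_j <= s_i] for s_i in supports]

# Hypothetical 1D example: quadratic B-splines, each spanning three elements,
# restricted to the active elements {0, 1, 2, 3} (element 3 is cut).
full_supports = [{0, 1, 2}, {1, 2, 3}, {2, 3, 4}, {3, 4, 5}]
active = {0, 1, 2, 3}
supports = [frozenset(s & active) for s in full_supports]
blocks = support_containment_blocks(supports)
```

Here the block created for function 1 collects functions 1, 2 and 3, whose restricted supports \(\{1,2,3\}\), \(\{2,3\}\) and \(\{3\}\) are nested. These are exactly the functions near the cut element that may become almost linearly dependent. With the unrestricted supports of this uniform example, no such nesting would occur.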

Given the lack of consensus on block selection procedures across contributions, the optimal choice of index blocks remains an open question.

3.3.5 Implementation Aspects

A specific operation in the construction of a Schwarz preconditioner is the computation of stable inverses of the submatrices \(\textbf{A}_i\). If a submatrix has eigenvalues that are too close to machine precision, round-off errors can severely corrupt its inverse. In the worst case, this can lead to negative eigenvalues in the preconditioned system, resulting in failure of the iterative solver. For that reason, the inverses are generally not computed by a direct inversion, but via an eigenvalue decomposition, after which eigenvalues smaller than a prescribed threshold are discarded. Details about this procedure are described in [63, 64].
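A minimal sketch of the eigenvalue-based local inverse is given below. The threshold is taken relative to the largest eigenvalue of the block, and its default value is arbitrary. Both are assumptions for illustration; [63, 64] should be consulted for the choices actually made. Discarding eigenvalues turns the local inverse into a pseudo-inverse that is symmetric positive semi-definite by construction, so no negative contributions can enter \(\textbf{B}_{\textsc {AS}}\).

```python
import numpy as np

def stable_local_inverse(A_i, rel_tol=1e-12):
    """
    Pseudo-inverse of a symmetric block A_i via its eigenvalue decomposition.
    Eigenvalues below rel_tol * (largest eigenvalue) are discarded (assumed
    relative threshold); the remaining ones are inverted.
    """
    lam, Q = np.linalg.eigh(A_i)             # A_i = Q diag(lam) Q^T
    keep = lam > rel_tol * lam.max()         # discard (near-)zero and round-off negative modes
    return (Q[:, keep] / lam[keep]) @ Q[:, keep].T   # Q_k diag(1/lam_k) Q_k^T
```

For an exactly singular block such as \(\begin{bmatrix}1 & 1\\ 1 & 1\end{bmatrix}\), only the eigenvalue 2 is retained, and the result is the pseudo-inverse with all entries equal to 0.25. For a well-conditioned block, the result coincides with the ordinary inverse.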

The Schwarz preconditioner is suitable for parallel implementations [63]. A particular facet of its parallelization is that each submatrix \(\textbf{A}_i\) has to be available within a single process for inversion. As this is generally not the case in parallel (boundary-fitted) finite element codes, special care is required in the implementation, e.g., by means of ghost elements [63]. As previously mentioned, a specific consideration for parallel multigrid implementations is the choice between additive and multiplicative Schwarz. Multiplicative Schwarz does not require relaxation. It does, however, require extensive communication and synchronization between parallel processes, particularly on distributed-memory systems. For that reason, the parallel multigrid implementation in [174] employs an additive Schwarz smoother. In contrast, [173] applies a hybrid variant, with an additive treatment of DOFs that are shared between processes and a multiplicative treatment of DOFs that belong to a single process.
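The following sketch makes the distinction behind such hybrid schemes concrete by classifying index blocks according to the ownership of their DOFs. Blocks whose DOFs all belong to one process can be handled locally. Blocks spanning several processes require ghost data and, in a hybrid variant, would receive the additive treatment. The ownership array and the block-level (rather than DOF-level) classification are simplifying assumptions. This is not the implementation of [173] or [174].

```python
def classify_blocks(blocks, owner):
    """
    Split index blocks into process-local blocks and blocks spanning several processes.
    owner[k] is the (hypothetical) rank that owns DOF k.
    """
    local, shared = [], []
    for block in blocks:
        ranks = {owner[k] for k in block}
        (local if len(ranks) == 1 else shared).append(block)
    return local, shared
```

With DOFs 0 to 2 owned by rank 0 and DOFs 3 to 5 owned by rank 1, the blocks `[0, 1]` and `[4, 5]` are local, while `[2, 3]` spans both processes.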

3.3.6 Extension to Other Problems