Abstract

Fluid–structure interaction simulations can be performed in a partitioned way, by coupling a flow solver with a structural solver. However, Gauss–Seidel iterations between these solvers without additional stabilization efforts will converge slowly or not at all under common conditions such as an incompressible fluid and a high added mass. Quasi-Newton methods can then stabilize and accelerate the coupling iterations, while still using the solvers as black boxes and only accessing data at the fluid–structure interface. In this review, the IQN-ILS, IQN-MVJ, IBQN-LS, MVQN, IQN-IMVLS and IQN-ILSM methods are reformulated in the generalized Broyden framework to illustrate their similarities and differences. Also related coupling techniques are reviewed and a performance comparison is provided where available.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Fluid–Structure Interaction (FSI) is the interaction of a fluid with a moving or deforming structure and occurs in many different branches of engineering. In mechanical engineering, the blades of a wind turbine deform due to their interaction with the wind [1, 2]. Also Flow-Induced Vibration (FIV) can occur, for example in tube bundles with external flow, leading to leakage or even rupture of the tubes [3]. In civil engineering, there are interactions between wind flow and bridges [4], silos [5], tents [6] and many other structures. In the biomedical field, heart valves and arteries are flexible structures that interact with the blood flow [7, 8].

As fluid–structure interaction is a multi-physics problem, complex phenomena can occur and numerical simulations are frequently used for the analysis. These numerical simulations of FSI can be performed in a monolithic or partitioned way. Monolithic codes solve the equations for the fluid and for the structure simultaneously, for example with a Newton–Raphson procedure [9] or multigrid method [10]. In this review, the focus is on the partitioned approach, as it allows to reuse mature and optimized codes to solve the subproblems.

Among the partitioned approaches, one can distinguish between the weakly and strongly coupled techniques. Weakly coupled techniques (also called explicit or loose coupling) solve the flow equations and the structure equations only once per time step [11, 12]. Consequently, the equilibrium between the fluid and the structure is not satisfied exactly. In this context, the term equilibrium refers to the equality of velocities and forces on the fluid–structure interface. These weakly coupled techniques are typically suitable for aeroelastic simulations with light and compressible fluids [13], but specific schemes can also be applied with dense and incompressible fluids [14].

By contrast, strongly coupled techniques (or implicit coupling) use coupling iterations between the flow solver and structure solver to enforce the equilibrium at the fluid–structure interface up to a convergence tolerance in a steady simulation or in each time step of an unsteady one [15,16,17]. As a result, the flow problem and structure problem are solved multiple times per (time) step. Obviously, these coupling iterations increase the computational cost, but the cost per coupling iteration normally decreases during the iterations within a (time) step as the change per iteration decreases. In the remainder of the paper an unsteady simulation will be assumed; a steady simulation is then a special case with one large time step.

An important parameter for the choice between weakly and strongly coupled techniques, but also for the stability of the coupling iterations in several strongly coupled techniques, is the ratio of the added mass to the mass of the structure [18]. The added mass is the apparent increase in mass of the structure due to the fluid that is displaced by the motion of this structure. Physically, it is influenced by the shape of the fluid domain and the density of the fluid. Numerically, also the time step size determines its effect, but this effect depends on whether the fluid is compressible or not. FSI problems with a compressible fluid can always be stabilized as long as the time step is sufficiently small, regardless of the ratio of the apparent added mass to the structural mass. However, for an incompressible fluid, stability cannot be obtained by decreasing the time step size [13, 15]. On the contrary, for incompressible flows in combination with flexible structures, decreasing the time step size may even increase the instability [15, 19,20,21]. For example, for the simulation of an elastic panel clamped at both ends and adjacent to a semi-infinite fluid domain, the added mass effect of a compressible fluid is proportional to the time step size, while for incompressible fluids it approaches a constant as the time step size decreases [13].

Especially for an incompressible fluid, many cases have a high added mass, e.g., blood flow in a vascular system [22], vibrations of tube bundles in lead-bismuth eutectic [23] or flutter of a slender cylinder in axial flow [24]. For these cases, the straightforward iteration between flow and structure solver within a time step will typically converge very slowly, or not at all, if no additional stabilization efforts are implemented. In this work, the focus is on techniques which consider the solvers as black boxes, as this is typically the case in a partitioned approach. Then, stabilization methods that alter one of the solvers, e.g., including an approximate added mass operator in the structural solver as in [25], are not possible. To stabilize and accelerate the convergence of coupling iterations with black box solvers, quasi-Newton methods have been developed in the fluid–structure interaction community. These methods will be reviewed in this work using the generalized Broyden framework.

The remainder of this review paper is structured as follows. First the FSI problem is posed and the necessary notation is introduced in Sect. 2. Then, the most basic solution approach is discussed in Sect. 3, with focus on its shortcomings in terms of stability and convergence speed, and how they can be overcome by introducing Jacobian information. In Sect. 4, a general method to obtain these Jacobians is discussed, called generalized Broyden. Thereafter, in Sect. 5, different quasi-Newton techniques are discussed in detail, including the Interface Quasi-Newton technique with an approximation for the Inverse of the Jacobian from a Least-Squares model (IQN-ILS), the Interface Quasi-Newton technique with Multi-Vector Jacobian (IQN-MVJ), the Interface Block Quasi-Newton technique with approximations from Least-Squares models (IBQN-LS), the Multi-Vector update Quasi-Newton technique (MVQN), the Interface Quasi-Newton Implicit Multi-Vector Least-Squares (IQN-IMVLS) and the Interface Quasi-Newton algorithm with an approximation for the Inverse of the Jacobian from a Least-Squares model and additional Surrogate Model (IQN-ILSM). This section ends with further notes and extensions on these methods. Finally, some numerical results to compare the different techniques are provided in Sect. 6, followed by the conclusions in Sect. 7.

2 Formulation of the FSI Problem

An abstract fluid–structure interaction problem, as shown in Fig. 1, consists of the subdomains \(\Omega _f\) and \(\Omega _s\), with the subscripts f and s denoting fluid and structure, respectively. The boundaries of the subdomains are denoted as \(\Gamma _f=\partial \Omega _f\) and \(\Gamma _s=\partial \Omega _s\) and the fluid–structure interface \(\Gamma _i=\Gamma _f\cap \Gamma _s\) is their common boundary.

Besides having to satisfy the flow and structure equations in the respective subdomains while taking into account the appropriate boundary conditions on \(\Gamma _f\setminus \Gamma _i\) and on \(\Gamma _s\setminus \Gamma _i\), the solution of the FSI problem is also required to fulfill the equilibrium conditions on the fluid–structure interface \(\Gamma _i\). The equilibrium conditions on a no-slip fluid–structure interface are twofold. First, the equality of fluid and solid velocity on \(\Gamma _i\) is needed (kinematic condition)

where \(\vec {v}\) is the velocity vector in the fluid domain and \(\vec {u}\) the displacement vector in the structure domain. Remark that this equality also implies equal accelerations on the interface. Second, equal magnitude but opposite sense of traction on \(\Gamma _i\) is required (dynamic condition)

where \(\bar{\sigma }_{f,s}\) is the stress tensor in \(\Omega _{f,s}\) and \(\vec {n}_{f,s}\) the unit normal vector that points outwards from the corresponding subdomain.

As this work discusses coupling techniques that consider the solvers as black boxes, only the variables on the fluid–structure interface \(\Gamma _i\) are of interest. However, the discretization of this interface is often different in the flow and structure subdomains. Given the focus of this review on coupling techniques, it is assumed that an interpolation layer is wrapped around or included in one (or both) of the solvers, invisible to the implementation of the coupling technique. As a consequence, the discretized displacement on either side of the fluid–structure interface can be represented as a column array \(\varvec{\lowercase {x}}\in \mathbb {R}^{n_x\times 1}\) containing all components of the displacement vector \(\vec {u}\) in each of the \(n_p\) grid points on the interface.

with the first subscript referring to the grid point (1 to \(n_p\)) and the second one to the component (1 to d, with d the dimension).

Similarly, the pressure p and all components of the viscous traction vector \(\vec {t}\) in each load point (1 to \(n_l\)) on either side of the fluid–structure interface are grouped in a column array \(\varvec{\lowercase {y}}\in \mathbb {R}^{n_y\times 1}\)

also called load vector, with the same meaning of the subscripts as above. Note that the \(n_l\) load points do not need to coincide with the discretization of the displacement. It is important that the pressure load \(p\cdot \vec {n}\) and viscous traction \(\vec {t}\) are not added, but included individually into \(\varvec{\lowercase {y}}\), because the pressure is typically dominant and has to stay perpendicular to the surface, also when interpolation is performed. If pressure and viscous traction were added, the resulting interpolated vector would have a pressure contribution that is not necessarily perpendicular to the surface after interpolation, resulting in an artificial shear component that can be much larger than the physical shear component.

With the typical Dirichlet–Neumann decomposition of the FSI problem, the displacement (linked to the velocity through the time discretization in time-dependent problems) is imposed at the interface in the flow solver and a pressure and viscous traction distribution is applied on the interface in the structure solver. A flow solver with a deforming grid using the Arbitrary Lagrangian–Eulerian (ALE) frame of reference will be assumed for the explanation, but this can be replaced by other techniques, for example the combination of the ALE approach and the Chimera technique [26] to handle large body motions or non-conforming alternatives, such as Immersed Boundary Methods (IBM) [27] and Embedded Boundary Methods (EBM) [28], which can handle large deformations and even topology changes. The flow calculation in a coupling iteration within a time step can be written as

This notation concisely represents several operations and hides the dependence on previous time steps and the variables in the fluid domain next to the interface, while emphasizing the dependence on the discretized displacement \(\varvec{\lowercase {x}}\) of the fluid–structure interface. It represents the following actions. First, the discretized displacement is given to the flow solver and the fluid domain adjacent to the interface is adapted accordingly. Then, the flow equations are solved in the entire fluid domain, resulting in a new load distribution \(\varvec{\lowercase {y}}\) on the interface.

Similarly, the calculation of the structure is represented by the function

As before, this expression hides the dependence on both the previous time steps and the variables in the structure domain next to the interface. It indicates that the fluid pressure and viscous traction distribution on the interface \(\varvec{\lowercase {y}}\) is given to the structure code. Subsequently, that code calculates the displacement of the entire structure and thus also the new displacement \(\varvec{\lowercase {x}}\) of the fluid–structure interface.

With these notations, the FSI problem is formulated as the system

that has to be solved for \(\varvec{\lowercase {x}}\) and \(\varvec{\lowercase {y}}\). This problem can be rewritten as the root-finding problem

with unknowns \(\varvec{\lowercase {x}}\) and \(\varvec{\lowercase {y}}\).

Moreover, the system in Eq. (7) can be reduced by substituting one equation in the other. Commonly, the first line is substituted in the second, but the other way around is equally possible. In this way, the FSI problem is simplified to a smaller system of equations

which has to be solved for \(\varvec{\lowercase {x}}\). The notation \(\circ\) refers to function composition, so \(\varvec{\mathcal {S}}\,\circ\, \varvec{\mathcal {F}}(\varvec{\lowercase {x}})\) is equivalent with \(\varvec{\mathcal {S}}(\varvec{\mathcal {F}}(\varvec{\lowercase {x}}))\). This looks like a fixed-point equation for \(\varvec{\lowercase {x}}\), but can also be written as a root-finding problem with unknown \(\varvec{\lowercase {x}}\)

To write this more compactly, the residual operator \({\varvec{\mathcal {R}}}(\cdot )\) is defined as

with output \(\varvec{\lowercase {r}}={\varvec{\mathcal {R}}}(\varvec{\lowercase {x}})\). The FSI problem thus reduces to finding the \(\varvec{\lowercase {x}}\) that fulfills

In this section, we have presented two formulations of the FSI problem. The first is the complete system Eq. (7) with \(n_x+n_y\) unknowns. The second is the reduced system Eq. (9), which has the benefit of having only \(n_x\) unknowns. This system has been written more compactly using the residual operator \({\varvec{\mathcal {R}}}\) resulting in Eq. (12). In the next sections, several methods are discussed to solve the FSI problem presented here, in one of both formulations.

Both the solver operators as well as the residual operator are typically nonlinear. Therefore, the FSI problem exhibits similarities with nonlinear root-finding problems. The main difference is that an FSI problem usually involves time stepping (except for steady cases), which means that a nonlinear system has to be solved in each time step. Therefore, within each time step, coupling iterations are performed until the solution is reached. The nonlinear systems in subsequent time steps are somehow related to each other, because the solver operators change only gradually in time. As the solution is typically continuous, the initial guess for \(\varvec{\lowercase {x}}\) at the start of each time step can be obtained by extrapolating the solution from previous time steps [29].

3 Solving the FSI Problem

3.1 Gauss–Seidel Scheme

In order to solve the FSI problem, Eq. (8) has to be solved in each time step. One of the basic methods to solve such a system of nonlinear equations is the block Gauss–Seidel scheme. In this block-iterate scheme, each of the nonlinear equations is solved for one of the unknowns consecutively, and each unknown is updated to its new value as soon as it becomes available.

Because, further on, it will become necessary to make a distinction between the output of one solver and the input of the next, a tilde symbol is introduced to indicate the output of a solver:

Using the superscript \(k+1\) to indicate the current iteration, the block Gauss–Seidel scheme takes the following form

The lastly calculated displacement vector \(\tilde{\varvec{\lowercase {x}}}{}^k\) is used as \(\varvec{\lowercase {x}}^{k+1}\), the input of the flow solver in the following iteration. Subsequently, this vector is used to calculate a new load vector \(\tilde{\varvec{\lowercase {y}}}{}^{k+1}\) which is thereafter used as input of the structure solver \(\varvec{\lowercase {y}}^{k+1}\), to calculate a new displacement vector \(\tilde{\varvec{\lowercase {x}}}{}^{k+1}\). This iteration scheme, in which the output of the flow and structure solver is passed unchanged to the structure and flow solver, respectively, is the most basic way to find an equilibrium and is also called Gauss–Seidel scheme or fixed point iteration scheme.

The final solution of the FSI problem Eq. (7) has to fulfill the kinematic Eq. (1) and dynamic equilibrium condition Eq. (2) up to a certain tolerance. This means that \(\tilde{\varvec{\lowercase {x}}}{}\) and \(\tilde{\varvec{\lowercase {y}}}{}\) have to approach \(\varvec{\lowercase {x}}\) and \(\varvec{\lowercase {y}}\), respectively, which is expressed by the convergence conditions

Because the output of each of the solvers is passed unchanged to the other, this can also be written as

which relates to the fixed point formulation in Eq. (9).

By eliminating the occurrence of the load vector \(\varvec{\lowercase {y}}\), the procedure can be simplified to

Furthermore, with the use of the residual operator introduced in Eq. (11), the iteration scheme becomes

which is considered converged once

3.2 Motivation for Using Quasi-Newton Methods

Unfortunately, the Gauss–Seidel scheme explained above is not unconditionally stable due to among others the added mass effect.

Many researchers have investigated the stability of Gauss–Seidel iterations. For example, its convergence behaviour has been studied based on a simple model problem with a single degree of freedom on the interface [30]. Some investigated the added-mass effect [31]. Many others have explored the case of blood flow through a simplified artery [15, 32, 33]. They observed that, besides an apparent fluid mass of the similar order of magnitude as the actual structural mass, also a decrease in the stiffness of the structure or increase in domain length has a destabilizing effect. A first attempt to mathematically analyze the stability was done through the determination of the maximum relaxation factor to obtain convergence [15, 25].

Instead of looking at a single number, the stability of a Gauss–Seidel scheme for a simplified flexible tube model with Dirichlet–Neumann decomposition can be examined by splitting the error on the interface into Fourier modes [34]. The mentioned error is the difference between the correct interface displacement and the one in a Gauss–Seidel iteration, based on linearized equations and without taking the boundary conditions into account. In this way, the authors were able to identify which frequency components become unstable. The analysis was first performed for a tube wall without inertia [34] and thereafter repeated including inertia [35], which proved to stabilize the convergence behaviour.

From this analysis it can be deduced that only a limited number of modes of the interface displacement are unstable and that the lowest wave numbers have the highest amplification factor and are hence the most unstable ones. This observation is true for different combinations of parameter values. In other words, the divergence or slow convergence of Gauss–Seidel iterations are caused by a limited number of unstable and slowly converging modes corresponding to the lowest wave numbers.

The physical explanation for this observation is shown in Fig. 2. The figure shows an axisymmetric tube, the wall of which is perturbed with two different wave numbers, while on the in- and outlet a zero pressure boundary condition is imposed. Initially, its cross section is constant and the incompressible fluid is at rest. In the upper part of Fig. 2, a low wave number perturbation is applied and, because the fluid is incompressible, it is accelerated globally resulting in large pressure variations. In the lower part, a higher wave number perturbation is applied and the fluid acceleration is confined to more local regions. As a consequence, the pressure variations are much smaller for higher wave numbers. The pressure variations in the lower part of Fig. 2 are even barely visible, because the same scale is used for both cases.

The pressure contours (in Pa) in an axisymmetric tube due to two displacements of the tube’s wall with the same amplitude but a different wave number. Initially, the fluid is at rest and the tube has a constant cross-section and zero pressure at both ends. A displacement of the tube’s wall with a low wave number (top) creates much larger pressure variations than a displacement with a high wave number (bottom). Only the difference between the two calculations and not the values as such are important [36]

Although the above analysis was performed on a flexible tube, the results are more widely applicable to incompressible fluids with a fluid–structure density ratio around one. For example, [37] arrived at the same conclusions by examining the stability of Gauss–Seidel iterations for a semi-infinite open fluid domain bounded by a string or a beam.

In summary, Gauss–Seidel iterations are not suitable for incompressible fluid cases with high added mass, because there is a limited number of error modes that are unstable. In order to obtain a solution for these cases, the unstable modes have to be removed by another technique to (efficiently) achieve convergence. Based on the results from the Fourier decomposition, it follows that only the low wave number modes have to be stabilized, while the others can still be treated using Gauss–Seidel iteration. The next section explains that the stabilization of these modes is achieved by including derivative information, which is the basic principle behind quasi-Newton techniques.

3.3 Quasi-Newton Schemes

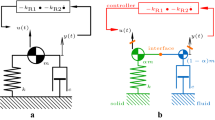

In order to overcome this limitation of the Gauss–Seidel iterations for problems with high added mass and an incompressible fluid, the quasi-Newton iteration scheme is adopted. To improve stability, one or both of the vectors \(\tilde{\varvec{\lowercase {x}}}{}\) and \(\tilde{\varvec{\lowercase {y}}}{}\) are modified before passing them to the other solver. If only one solver output is adapted, it is usually the output of the structural solver \(\varvec{\mathcal {S}}\) as in Fig. 3b, but the opposite is equally possible. Figure 3c shows a schematic representation of adapting both solver outputs.

In the remainder of this section we will first introduce the adaptation of one output where we will use the modification of the structural output as example. This scheme will be referred to as the residual formulation scheme. Thereafter, the adaptation of both solver outputs will be introduced. The corresponding scheme is called the block iteration scheme.

3.3.1 Residual Formulation Quasi-Newton Scheme

In this scheme, the output \(\tilde{\varvec{\lowercase {x}}}{}^k\) of the structural solver is modified to \(\varvec{\lowercase {x}}^{k+1}\) which is subsequently used as input for the flow solver. The output \(\tilde{\varvec{\lowercase {y}}}{}^k\) of the flow solver is passed unchanged to the structural solver. Therefore, the load vector \(\varvec{\lowercase {y}}\) can be left out altogether, as was the case for Gauss–Seidel iterations. With the use of the residual operator, defined in Eq. (11), the residual \(\varvec{\lowercase {r}}^{k+1}\) in iteration \(k+1\) is written as

and as before convergence is reached when Eq. (19) is satisfied. The difference with the Gauss–Seidel scheme is that \(\varvec{\lowercase {x}}^{k+1}\) is no longer equal to \(\tilde{\varvec{\lowercase {x}}}^{k}\). The adaption of the displacement vector follows from the use of a Newton–Raphson approach to solve the root-finding problem Eq. (12). This method uses the Jacobian of the nonlinear equation, which is denoted here by \({\varvec{\mathcal {R}}}'\), to estimate the input \(\varvec{\lowercase {x}}^{k+1}\) that will direct the residual to \(\varvec{\lowercase {0}}\) by solving

for \(\varvec{\lowercase {x}}^{k+1}\). Note that Gauss–Seidel iteration Eq. (18) is retrieved if \({\varvec{\mathcal {R}}}'(\varvec{\lowercase {x}}^k)=-\varvec{\uppercase {I}}\). Likewise, relaxed Gauss–Seidel iteration is obtained if the Jacobian is \(-\omega \varvec{\uppercase {I}}\).

Because both the flow and structure solvers are considered black box solvers, the Jacobians of \(\varvec{\mathcal {F}}\) and \(\varvec{\mathcal {S}}\) are not accessible and hence, neither is \({\varvec{\mathcal {R}}}'\). Therefore, the Jacobian of the residual operator is approximated, resulting in a quasi-Newton method

where \({\varvec{\widehat{{{{{\mathcal {R}}}}}'}}}(\varvec{\lowercase {x}}^k)\) is the approximated Jacobian.

As explained in the previous section, the instability of Gauss–Seidel iterations is caused by a limited set of modes, i.e., for the vectors \(\varvec{\lowercase {x}}\) in a small subspace of \(\mathbb {R}^{n_x\times 1}\). Consequently, an approximation of the complete Jacobian of the residual operator \({\varvec{\mathcal {R}}}\) is not required. An approximated Jacobian which takes care of these unstable modes and leaves the other modes unchanged is sufficient. Leaving some modes unchanged means that the quasi-Newton method will actually perform Gauss–Seidel iterations for those modes.

Solving the linear system in the equation above can be avoided by approximating the inverse of the Jacobian directly and calculating the update of the displacement vector as

To conclude this section, a new short-hand notation is introduced for the approximate (inverse) Jacobians. For a nonlinear function \(\varvec{\lowercase {r}}= {\varvec{\mathcal {R}}}(\varvec{\lowercase {x}})\), the approximate Jacobian and inverse Jacobian are written as

3.3.2 Block Iteration Quasi-Newton Scheme

Instead of only adapting the output of one solver, it is also possible to adapt the output of both the flow and structure solver. Now both \(\varvec{\lowercase {x}}^{k+1}\) and \(\varvec{\lowercase {y}}^{k+1}\) are different from \(\tilde{\varvec{\lowercase {x}}}^{k}\) and \(\tilde{\varvec{\lowercase {y}}}^{k+1}\), and, because the load vector is no longer passed unchanged, it is not possible to use the residual operator. The convergence conditions are again given by Eq. (15).

The modification of the output of the solvers is determined by applying block Newton–Raphson iterations to the root-finding problem Eq. (8) with unknowns \(\varvec{\lowercase {x}}\) and \(\varvec{\lowercase {y}}\)

where \(\Delta \varvec{\lowercase {x}}\) and \(\Delta \varvec{\lowercase {y}}\) are the updates for the input \(\varvec{\lowercase {x}}\) and \(\varvec{\lowercase {y}}\) of the flow and structure solvers, respectively. Further, \(\varvec{\uppercase {I}}\) is the identity matrix and \(\varvec{\mathcal {F}}'\) and \(\varvec{\mathcal {S}}'\) are the Jacobians of the flow and structure equations. Note that the two identity matrices will have different dimensions if the size of \(\varvec{\lowercase {x}}\) and \(\varvec{\lowercase {y}}\) differ.

Starting from the displacement \(\varvec{\lowercase {x}}^k\) that was given as input to the flow solver in the previous coupling iteration, the displacement \(\varvec{\lowercase {x}}^{k+1}=\varvec{\lowercase {x}}^k+\Delta \varvec{\lowercase {x}}^k\) is calculated by solving the system

for \(\Delta \varvec{\lowercase {x}}^k\).

Using the updated value \(\varvec{\lowercase {x}}^{k+1}\) and after calling the flow solver to determine \(\tilde{\varvec{\lowercase {y}}}^{k+1}=\varvec{\mathcal {F}}(\varvec{\lowercase {x}}^{k+1})\), the pressure and viscous traction distribution \(\varvec{\lowercase {y}}^{k+1}=\varvec{\lowercase {y}}^k+\Delta \varvec{\lowercase {y}}^k\) is calculated by solving the analogous system

for \(\Delta \varvec{\lowercase {y}}^k\). Subsequently, the structure solver is called to determine \(\tilde{\varvec{\lowercase {x}}}^{k+1}=\varvec{\mathcal {S}}(\varvec{\lowercase {y}}^{k+1})\).

Similar to the previous section, the Jacobians are not accessible, because the solvers are considered black boxes. Therefore, approximations denoted by \({\widehat{\varvec{{\mathcal {F}}}'}}\) and \({\widehat{\varvec{{\mathcal {S}}}'}}\) are used instead. Note that here two normal Jacobians are required, one for each solver, whereas in the previous section only one inverse Jacobian was required, namely the inverse Jacobian of the residual operator.

Adopting the same short-hand for the approximated Jacobians as in the previous section results in the following notations

4 Approximating Jacobians

The previous section introduced quasi-Newton approaches to stabilize and at the same time accelerate the convergence of coupling iterations. These schemes adapt either one or both of the solver outputs before passing them on, resulting in respectively, a quasi-Newton system for the residual formulation of the FSI problem, or a block iteration quasi-Newton system. These systems each contain one or more approximate Jacobians. The residual formulation scheme requires an approximation for the inverse of the Jacobian \({\varvec{\mathcal {R}}}'(\varvec{\lowercase {x}}^k)\), which is denoted as \(\partial ^{k}_{\varvec{\lowercase {r}}} \varvec{\lowercase {x}}\). The block iteration quasi-Newton scheme requires approximations for the Jacobians of the flow solver and structure solver, so \(\partial ^{k}_{\varvec{\lowercase {x}}} \tilde{\varvec{\lowercase {y}}}\) approximates \(\varvec{\mathcal {F}}'(\varvec{\lowercase {x}}^k)\) and \(\partial ^{k}_{\varvec{\lowercase {y}}} \tilde{\varvec{\lowercase {x}}}\) approximates \(\varvec{\mathcal {S}}'(\varvec{\lowercase {y}}^k)\). In these notations the superscript k refers to the iteration in which the Jacobian has been approximated.

All of these approximate Jacobians can be created using the generalized Broyden method. In this section, we will explain this method for the construction of an approximate Jacobian of an arbitrary nonlinear function \(\varvec{\lowercase {b}}= \varvec{\mathcal {B}}(\varvec{\lowercase {a}})\). For now, we leave out the added complexity of FSI problems, for which an approximate Jacobian has to be constructed in each time step. This will be explained in the next section. Here, we just have an iterative method, where in each iteration k the Jacobian \(\varvec{\mathcal {B}}'(\varvec{\lowercase {a}}^k)\) is approximated by \(\partial ^{k}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}}\). The same technique can also be used to approximate the inverse Jacobian \({\varvec{\mathcal {B}}'(\varvec{\lowercase {a}}^k)}^{-1}\) by \(\partial ^{k}_{\varvec{\lowercase {b}}} \varvec{\lowercase {a}}\).

Instead of immediately presenting the rather complex generalized Broyden equation, it is introduced step by step, in a way that better fits the quasi-Newton FSI explanations found in literature.

4.1 Satisfying the Secant Conditions

The core idea of any quasi-Newton Jacobian approximation is to use the nonlinear function input–output information from previous iterations. Indeed, an input \(\varvec{\lowercase {a}}^i\) resulting in a certain output \(\varvec{\lowercase {b}}^i\) is a piece of valuable information about the behavior of the black box function \(\varvec{\mathcal {B}}\), which can be used to approximate the Jacobian \(\varvec{\mathcal {B}}' (\varvec{\lowercase {a}}^k)\) by \(\partial ^{k}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}}\). In the current iteration \(k+1\), the inputs of all previous iterations

are available, as well as the corresponding outputs

The input–output info is stored and used in the form of differences between consecutive iterations, defined as

for \(0 \le i \le k-1\). The \(\delta\) notation refers to the difference between previous iterations, in contrast to the \(\Delta\) notation, which refers to the desired change or update that needs to be performed.

Each pair \((\delta \varvec{\lowercase {a}}^i, \delta \varvec{\lowercase {b}}^i)\) is called the secant information at iterations i and is related to a secant line to the nonlinear function \(\varvec{\mathcal {B}}\). Therefore, it can be interpreted as a finite difference approximation for the Jacobian in the direction \(\delta \varvec{\lowercase {a}}^i\).

Furthermore, each secant information pair has a corresponding secant equation:

If the approximated Jacobian \(\partial ^{k}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}}\) meets this secant condition, it uses a finite difference approximation for the actual Jacobian in the direction of \(\delta \varvec{\lowercase {a}}^i\), with the input–output information of iterations i and \(i+1\). This secant information is relevant only if the Jacobian stays more or less the same during the k iterations, which means that \(\varvec{\mathcal {B}}(\varvec{\lowercase {a}})\) has to behave close to linearly in the neighbourhood of \(\varvec{\lowercase {a}}^k\).

The idea is to construct \(\partial ^{k}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}}\), so that it fulfills all the k secant equations. To write this compactly, the differences defined previously in Eq. (31) are stored in the matrices \(\varvec{\uppercase {A}}^k\) and \(\varvec{\uppercase {B}}^k\) as follows

Now, the k secant conditions can be collected in the matrix equation

With \(n_a\) and \(n_b\) being the length of the input and output vectors, this is a system of \(n_b k\) scalar equations for \(n_b n_a\) unknowns (the elements of the matrix \(\partial ^{k}_{\varvec{\lowercase {b}}} \varvec{\lowercase {a}}\)). The system is thus typically underdetermined (\(k<n_a\)). In order to find a unique solution, the least-norm solution is sought, which is in this case defined as the smallest matrix in the Frobenius norm that satisfies all secant conditions. The solution is given as

where \({\varvec{\uppercase {A}}^k}^+\) is the pseudo-inverseFootnote 1 (or Moore–Penrose inverse) of the rectangular matrix \(\varvec{\uppercase {A}}^k\), defined as

To calculate the pseudo-inverse, it is necessary that the columns of \(\varvec{\uppercase {A}}^k\) are linearly independent. For now, we will assume this is always the case and the issue of linear dependence of the secant information is addressed in detail in the discussion on filtering in Sect. 5.1.

The expression for the approximate Jacobian presented above is elegant and short, but not very intuitive. Therefore, a different approach to obtain the same expression is given below.

The purpose of the approximate Jacobian is to determine an estimated change in output \(\Delta \varvec{\lowercase {b}}\) that corresponds to an arbitrary change in input \(\Delta \varvec{\lowercase {a}}\), by evaluating

For this purpose, the secant information from the previous iterations is utilised in the following approach.

First, the arbitrary vector \(\Delta \varvec{\lowercase {a}}\) is approximated as a linear combination of vectors \(\delta \varvec{\lowercase {a}}^i\), i.e.

with \(\varvec{\lowercase {c}} \in \mathbb {R}^{k \times 1}\) a coefficient vector.

It follows from the secant information that an input difference \(\delta \varvec{\lowercase {a}}^i\) corresponds to an output difference \(\delta \varvec{\lowercase {b}}^i\), for \(0 \le i \le k-1\). Therefore, and under the assumption that the linear behavior of \(\varvec{\mathcal {B}}\) is locally dominant, it can be stated that a linear combination of vectors \(\delta \varvec{\lowercase {a}}^i\) will correspond to the same linear combination of vectors \(\delta \varvec{\lowercase {b}}^i\). This principle allows to determine \(\Delta \varvec{\lowercase {b}}\) as

Finally, it remains to determine the coefficients \(\varvec{\lowercase {c}}\). The system in Eq. (38) is typically overdetermined. Hence, the least-squares solution for \(\varvec{\lowercase {c}}\) will be used, which can be obtained by solving the square system of normal equations

Therefore, the coefficient vector is given as

Using this to calculate \(\Delta \varvec{\lowercase {b}}\) results in

Comparison with Eq. (37) reveals the same Jacobian as determined before in Eq. (35).

Matrix-free implementation Some of the algorithms explained in Sect. 5 require the explicit construction of the Jacobian matrix, while for others only its product with a vector, e.g., \(\partial ^{k}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}} \ \Delta \varvec{\lowercase {a}}\), is required. This last set of algorithms allows matrix-free implementation, for which the Jacobian matrix Eq. (35) never has to be calculated explicitly in practice, nor is the explicit calculation of the pseudo-inverse defined in Eq. (36) needed. How this is achieved is explained here.

Equations (40) and (41) show that the product of the pseudo-inverse with a vector is in fact the solution of the normal equations, but solving the normal equations Eq. (40) becomes unstable if the number of columns in the matrix \(\varvec{\uppercase {A}}^k\) is rather high. A more robust method to calculate the pseudo-inverse uses the reduced or economy-size QR decomposition [38] of \(\varvec{\uppercase {A}}^k\)

where \(\varvec{\uppercase {Q}}_A^k \in \mathbb {R}^{n_a \times k}\) is a matrix with orthonormal columns and \(\varvec{\uppercase {R}}_A^k \in \mathbb {R}^{k \times k}\) is an upper triangular matrix.Footnote 2 Applying this to the normal equations Eq. (40) and using the fact that the inverse of \(\varvec{\uppercase {R}}_A^k\) exists because the columns of \(\varvec{\uppercase {A}}^k\) are linearly independent, results in

Symbolically, this means that the pseudo-inverse can be written as \({\varvec{\uppercase {R}}_A^k}^{-1}{\varvec{\uppercase {Q}}_A^k}^\textrm{T}\), but it should never be constructed or stored. Instead, the product of the pseudo-inverse with a vector can be calculated by first evaluating the right hand side of Eq. (44) and subsequently solving the system using back-substitution, as \(\varvec{\uppercase {R}}_A^k\) is an upper triangular matrix. The complete procedure to efficiently determine \(\Delta \varvec{\lowercase {b}}\) given \(\Delta \varvec{\lowercase {a}}\) is summarized in Algorithm 1.

In the following, the notation with the pseudo-inverse will still be used. Nonetheless, it should be kept in mind that the actual calculation has to be done using QR decomposition and back-substitution, avoiding the calculation of the inverse of matrices as well as the construction of large dense square matrices.

4.2 Adding an Initial Estimate for the Jacobian

Assuming the columns \(\varvec{\uppercase {A}}^k\) are linearly independent, the above obtained approximated Jacobian \(\varvec{\uppercase {B}}^k {\varvec{\uppercase {A}}^k}^+\) is of rank k. For the current discussion, it is assumed that \(k \ll n_a\). Therefore, the matrix is a low-rank Jacobian approximation, and has an image or range of dimension k and a nullspace of dimension \(n_a-k\). As a result, with regard to its product with an arbitrary \(\Delta \varvec{\lowercase {a}}\), only the part of \(\Delta \varvec{\lowercase {a}}\in \text {range}(\varvec{\uppercase {A}}^k)\) will have a non-zero result. This becomes clear from the definition of the pseudo inverse in Eq. (36). The part of \(\Delta \varvec{\lowercase {a}}\perp \text {range}(\varvec{\uppercase {A}}^k)\) falls in the nullspace of the approximated Jacobian and the product of this part with the Jacobian is therefore zero. In other words, the approximated Jacobian is zero in every direction that is not a linear combination of the directions \(\delta \varvec{\lowercase {a}}^i\), for \(0 \le i \le k-1\), encountered in the previous iterations.

Nonetheless, a full rank approximation of the Jacobian may be required, e.g., when it is used in a quasi-Newton method according to the residual formulation scheme. If this is the case, the current Jacobian approximation, using the approximation based on secant conditions for the part of \(\Delta \varvec{\lowercase {a}}\in \text {range}(\varvec{\uppercase {A}}^k)\), can be expanded with an initial estimate of the approximate Jacobian \(\partial ^{0}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}}\) for the remaining part \(\Delta \varvec{\lowercase {a}}\perp \text {range}(\varvec{\uppercase {A}}^k)\).

The splitting of \(\Delta \varvec{\lowercase {a}}\) in these two parts is based on orthogonal projection and visualized in Fig. 4. The orthogonal projection of a vector \(\Delta \varvec{\lowercase {a}}\) onto the range of \(\text {range}(\varvec{\uppercase {A}}^k)\) is given by

This is the part of \(\Delta \varvec{\lowercase {a}}\in \text {range}(\varvec{\uppercase {A}}^k)\). Using the complementary projector or just calculating the difference of \(\Delta \varvec{\lowercase {a}}\) and its orthogonal projection

gives the part of \(\Delta \varvec{\lowercase {a}}\perp \text {range}(\varvec{\uppercase {A}}^k)\). Refer to [39] for a more complete discussion of projectors. Moreover, note that, using the QR decomposition these two parts are given by \(\varvec{\uppercase {Q}}_A^k {\varvec{\uppercase {Q}}_A^k}^\textrm{T}\Delta \varvec{\lowercase {a}}\) and \((\varvec{\uppercase {I}} - \varvec{\uppercase {Q}}_A^k {\varvec{\uppercase {Q}}_A^k}^\textrm{T}) \Delta \varvec{\lowercase {a}}\).

Now, the Jacobian approximation based on secant information can be extended with an initial Jacobian \(\partial ^{0}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}}\):

The questions why and how an initial Jacobian can be added have been answered. What remains is the choice of its value. Often, the identity matrix is used, scaled with a factor, typically \(-1\) or \(-\omega\), which corresponds to (relaxed) Gauss–Seidel iteration, as explained below Eq. (21). This is the simplest approach to obtaining a full rank Jacobian approximation and will also be used in Sect. 5. In the case a low rank approximation suffices, e.g., the Jacobians for block iteration quasi-Newton, \(\partial ^{0}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}} = \varvec{\uppercase {0}}\) can be used, which means the second term disappears completely. In still other situations, a physics-based surrogate may be available to use as initial Jacobian. This approach may accelerate convergence, but is application-specific and will be discussed further in Sect. 5.6.

4.3 Generalized Broyden Method

Up to now, the approximation of the Jacobian \(\partial ^{k}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}}\) was determined such that it met all secant conditions. However, this is not the only way to use the secant information. Another often used method (although not in FSI) is to only require the approximated Jacobian to fulfill the latest secant equation. Therefore, the matrices \(\varvec{\uppercase {A}}^k\) and \(\varvec{\uppercase {B}}^k\) only contain the latest piece of secant information:

For all vectors \(\Delta \varvec{\lowercase {a}}\perp \text {range}(\varvec{\uppercase {A}}^k)\) (i.e., \(\Delta \varvec{\lowercase {a}}\perp \delta \varvec{\lowercase {a}}^{k-1}\)), we want to use the previous Jacobian \(\partial ^{k-1}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}}\). In other words, the effect of the approximated Jacobian remains unchanged in all directions orthogonal to \(\delta \varvec{\lowercase {a}}^{k-1}\). This is called the no-change condition, which can be written formally as

To obtain a Jacobian approximation with these specifications, \(\partial ^{0}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}}\) is replaced by \(\partial ^{k-1}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}}\):

where \(\varvec{\uppercase {A}}^k\) and \(\varvec{\uppercase {B}}^k\) now contain only one column. This is a recursive expression for the approximated Jacobian. In fact, this is Broyden’s original method,Footnote 3 to construct the approximate Jacobian [40]. It was developed in the sixties, to solve systems of nonlinear equations.

Furthermore, Broyden’s method can be generalized. Instead of using only one secant condition and the approximate Jacobian from the previous iteration, m secant conditions can be used in combination with the approximate Jacobian from m iterations ago. This gives rise to the generalized Broyden method

with

This equation for the approximate Jacobian in generalized Broyden, however, can also be obtained in a more formal way, namely as the unique matrix that satisfies a number of conditions. Two equivalent ways are described in [41].

First, the approximated Jacobian can be obtained as the only matrix that simultaneously satisfies the m secant conditions in Eq. (34) and the \(n_a - m\) no-change conditions

Secondly, it can be obtained as the unique matrix that satisfies the m secant conditions Eq. (34) and minimizes the difference with the approximate Jacobian from m iterations ago, i.e.

where the subscript F denotes the Frobenius norm.

Furthermore, previously discussed methods are retrieved by choosing certain values for the parameter m in the generalized Broyden method. For \(m=1\), Broyden’s original method is recovered, while for \(m = k\) the pure secant method from Sects. 4.1 and 4.2 is obtained.

The generalized Broyden method was established much later than Broyden’s original method. The first extension to the original one in the eighties led to a rather complex modified Broyden method [42, 43]. In the nineties, Eyert [44] simplified this method by removing some nonessential parameters, resulting in the generalized Broyden method presented here.

Around that same time, the connection between the generalized Broyden method and Anderson acceleration (or Anderson mixing) was discovered. Anderson acceleration [45] was introduced in the sixties to accelerate fixed-point iterations. Based on the work by Van Leuken [46], Eyert showed that Anderson acceleration is mathematically equivalent to generalized Broyden with \(m = k\), i.e., the pure secant method introduced in Sects. 4.1 and 4.2. This is not immediately apparent due to the very different ideas on which Anderson and Broyden originally based their methods.

In partitioned FSI simulations, several variants of the generalized Broyden method are used to approximate Jacobians in quasi-Newton iterations. These techniques were developed independently from the older methods (Anderson, Broyden and generalized Broyden) and the correspondence to those methods was only discovered recently [47, 48].

Because a nonlinear system of equations has to be solved in every time step of an FSI simulation, there are some particularities with respect to Jacobian approximation, such as the reuse of secant information from previous time steps, as well as the removal of old and irrelevant secant information. These topics are discussed in the next section.

Computational complexity and storage This section ends with a first look into the computational complexity to obtain and use these approximate Jacobians. For simplicity, it is assumed that \(\varvec{\lowercase {a}}\) and \(\varvec{\lowercase {b}}\) are both vectors of length \(n_a\). This is usually not true for the block methods, but \(n_b\) is typically proportional to \(n_a\). Further, it is assumed that \(n_a\gg k\), i.e., the length of the vectors are much larger than the number of secant pairs available. More details will be provided later on for the different FSI methods. No details about the number of operations will be given, only the complexity of the leading-order term will be discussed.

At the basis of the generalized Broyden method is the economy-size QR decomposition of \(\varvec{\uppercase {A}}^k\), which is used for determination of the pseudo-inverse of \(\varvec{\uppercase {A}}^k\). This QR decomposition is typically done with Householder transformations, resulting in a complexity of \(\mathcal {O}(n_a m^2)\), which is also the total complexity of the evaluation of the product of this pseudo-inverse with a vector. Already, it can be noted that, in the case that \(m = k\), the computational cost quickly rises relative to a low fixed value for m.

If the approximate Jacobian is only needed to calculate its product with a vector, its explicit construction can be avoided. In some algorithms of the next section, however, the approximate Jacobian is used explicitly. Then, the construction of this \(n_a \times n_a\) matrix has a complexity of \(\mathcal {O}(n_a^2 m)\). In addition, the \(n_a \times n_a\) matrix requires a storage capacity \(\mathcal {O}(n_a^2)\), which is a strong disadvantage of these select algorithms.

In other algorithms, it is possible to avoid this expensive construction and use a matrix-free method to multiply the approximate Jacobian with a vector, i.e., without large dense square matrices. In practice, this is done by evaluating the product using Eq. (47) and multiplying the factors within each term from right to left. Then the complexity of this evaluation is only \(\mathcal {O}(n_a m^2)\), which is the complexity of performing the QR decomposition needed to evaluate the product of the pseudo-inverse of \(\varvec{\uppercase {A}}^k\) with a vector. Because only the secant information has to be stored, the storage requirements \(\mathcal {O}(n_a m)\) are lower as well.

4.4 Difference Between the Anderson and Broyden Approach

The previous part formulated the generalized Broyden method, in which the parameter m determines how the secant information from previous iterations is included. Setting \(m=k\) corresponds to the Anderson method, in which the approximated Jacobian is determined by imposing all secant equations directly. For \(m=1\), Broyden’s original method is retrieved, here simply referred to as the Broyden method, in which the approximated Jacobian only fulfills the latest secant equation and the secant information from the previous iterations is included indirectly by imposing no-change conditions. In this section, the difference in behaviour between these two extreme versions of the generalized Broyden method will be clarified.

Consider for example the approximation of the Jacobian \(\varvec{\mathcal {B}}' \! (\varvec{\lowercase {a}}^k)\) by \(\partial ^{k}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}}\), when previously three iterations have been performed (\(k=2\)). The matrices containing the differences between consecutive iterations are

Without loss of generality, it is stated that

where \(\varvec{\lowercase {p}}\) and \(\varvec{\lowercase {q}}\) are orthonormal vectors, and x and y real scalars.

In the Anderson approach (\(m=k\)), the approximated Jacobian is

The QR decomposition of \(\varvec{\uppercase {A}}^2\) is given by

With this decomposition, the pseudo-inverse is calculated

Finally, the approximate Jacobian is given by

In the Broyden approach (\(m=1\)), the approximated Jacobian is

Note that the pseudo-inverse of a single column equals its transpose divided by its norm squared, such that

The resulting approximated Jacobian is given by

Comparing Eq. (61) with Eq. (64), it is clear that the Jacobian approximations are different. Their inequality is analyzed in Table 1 by looking at their product with particular vectors \(\Delta \varvec{\lowercase {a}}\).

For a vector \(\Delta \varvec{\lowercase {a}}\) equal to the lastly added difference \(\delta \varvec{\lowercase {a}}^{1}\), both approaches return the corresponding difference \(\delta \varvec{\lowercase {b}}^{1}\), as expected. If the one before last vector \(\delta \varvec{\lowercase {a}}^{0}\) is supplied, the results are different. The Anderson method simply returns \(\delta \varvec{\lowercase {b}}^{0}\), as this method attempts to approximate \(\Delta \varvec{\lowercase {a}}\) as closely as possible using the already available differences. In contrast, the Broyden method does not and returns a linear combination of \(\delta \varvec{\lowercase {b}}^{1}\) and \(\delta \varvec{\lowercase {b}}^{0}\). This approach gives priority to the lastly determined difference \(\delta \varvec{\lowercase {a}}^{1}\) and uses the corresponding \(\delta \varvec{\lowercase {b}}^{1}\) for the orthogonal projection of \(\Delta \varvec{\lowercase {a}}\) on that difference \(\delta \varvec{\lowercase {a}}^{1}\). For a result of the Broyden approach that lies along \(\delta \varvec{\lowercase {b}}^{0}\), a difference orthogonal to the last difference \(\delta \varvec{\lowercase {a}}^{1}\) needs to be supplied. Finally, the result for a general vector is given, where u, v and w are arbitrary scalars and \(\varvec{\lowercase {s}}\) a unit vector orthogonal to \(\varvec{\lowercase {p}}\) and \(\varvec{\lowercase {q}}\).

Both methods decompose \(\Delta \varvec{\lowercase {a}}\) in components along the previously determined vectors \(\delta \varvec{\lowercase {a}}^i\), \(0< i < k-1\), and multiply the respective components with the corresponding vectors \(\delta \varvec{\lowercase {b}}^i\). This is shown graphically in Fig. 5.

The vector \(\Delta \varvec{\lowercase {a}}\) is decomposed along the directions of the previously determined differences. The decomposition is different for the Anderson and Broyden approach. The green line is the direction of the lastly determined difference vector \(\delta \varvec{\lowercase {a}}^1\), the red line corresponds to the one before last \(\delta \varvec{\lowercase {a}}^0\). The red dotted vector is the remaining part after decomposition. The addition of the parts along \(\delta \varvec{\lowercase {a}}^1\) and \(\delta \varvec{\lowercase {a}}^0\) and the remaining part gives the original \(\Delta \varvec{\lowercase {a}}\). (Color figure online)

The Anderson method projects \(\Delta \varvec{\lowercase {a}}\) on all previously determined differences. Therefore, the remaining part

is orthogonal to these differences. The Broyden method projects \(\Delta \varvec{\lowercase {a}}\) first on the lastly obtained difference, and the leftover part on the one before last, and so on. Therefore, the remaining part

is not necessarily orthogonal to these differences. It will, however, always be orthogonal to the last difference onto which the projection was made, i.e., the oldest difference.

The difference between the two methods is essential to how nonlinearities in the secant information are dealt with. In general \(\varvec{\mathcal {B}}(\varvec{\lowercase {a}}^k)\) is nonlinear and its Jacobian is not constant, therefore the secant information will also contain nonlinear effects, especially when the step \(\delta \varvec{\lowercase {a}}^i\) is large. Because the Broyden method prioritizes more recent secant information, it effectively ignores these nonlinearities, while the Anderson method does not, as it wants to approximate \(\Delta \varvec{\lowercase {a}}\) as closely as possible using all available differences. This can lead to instabilities in the Anderson method. However, the Broyden method will also neglect small linear information, slowing down the convergence speed. More details and a method to remove nonlinearities from the secant information to stabilize Anderson are found in [49].

In the FSI community, the Anderson method is referred to as the least-squares approach and the Broyden method can be linked to the multi-vector approach. However, in FSI, fulfilling only the most recent secant equation as in the original Broyden method is typically not done and the multi-vector algorithms fulfill the secant equations in the most recent time step, using no-change conditions for older time steps. So in fact, the multi-vector approach is a generalized Broyden method, as will be explained in the following section.

5 Quasi-Newton Methods for FSI

In Sect. 3, different quasi-Newton schemes have been introduced. They required approximate Jacobians, which could be determined in different ways using information from previous iterations, as explained in Sect. 4. Up to this point, the focus was on solving the nonlinear equations in each time step separately. From here on, the distinction between different time steps will be necessary. Therefore, the superscript \(n+1\) will be used to indicate the values from the current time step, meaning that these are the values that are currently calculated. This notation is similar to the superscript \(k+1\), which indicates the current iteration.

In this section, the IQN-ILS, IBQN-LS, IQN-MVJ, MVQN, IQN-IMVLS and IQN-ILSM techniques will be derived and analyzed in the generalized Broyden framework. These techniques for partitioned FSI simulation have several differences, as summarized in Table 2.

The first difference is whether they use only the interface displacement as variables (IQN-ILS, IQN-MVJ, IQN-IMVLS, IQN-ILSM) or whether they are block iteration quasi-Newton methods using both interface displacement and load (IBQN-LS, MVQN). In the former case, they solve Eq. (12), in the latter they use Eq. (8), as explained in Sect. 3.

The second difference is related to how time stepping is handled, as most FSI simulations are time-dependent to capture a vibration or other dynamic behaviour. Assuming the inputs and outputs of the q previous time steps are stored, one can either impose the secant conditions from all time steps (IQN-ILS, IBQN-LS) or only for the latest time step, combined with no-change conditions for previous time steps (IQN-MVJ, MVQN, IQN-IMVLS, IQN-ILSM). This second difference is thus related to the choice of the parameter m of generalized Broyden, as explained in Sect. 4. On the one hand, m can be set to \(\infty\) (actually limited to q time steps), so all the info from q previous time steps is used together, without an old Jacobian, but only with an initial one to start the procedure. In fact, this corresponds with the Anderson approach and is typically termed least-squares approach in FSI. On the other hand, only the secant info from the current time step can be used, with an old approximate Jacobian that is the final one from the previous time step. This corresponds to \(m=k\), called multi-vector, and is really generalized Broyden, and not one of the limiting cases (Anderson or Broyden).

The third difference is the amount of memory required for the storage of the approximate Jacobian(s) and the computational time required for the calculations related to the quasi-Newton steps. This will be explained more in detail for each method below and will be summarized in Table 3.

5.1 IQN-ILS

IQN-ILS is the abbreviation for Interface Quasi-Newton technique with an approximation for the Inverse of the Jacobian from a Least-Squares model [50]. The IQN-ILS technique performs an update of the input for the flow solver in each coupling iteration, using an approximation for the inverse of the Jacobian of the residual operator, so

and \(\Delta \varvec{\lowercase {r}}^k=\varvec{\lowercase {0}}-\varvec{\lowercase {r}}^k\). The approximation for the inverse Jacobian \({\widehat{{\varvec{{\mathcal {R}}}}'^{-\hbox {1}}}}(\varvec{\lowercase {x}}^k)\equiv \partial ^{k}_{\varvec{\lowercase {r}}} \varvec{\lowercase {x}}\) can be obtained directly by following the method explained in Sect. 4.3 for \(\partial ^{k}_{\varvec{\lowercase {a}}} \varvec{\lowercase {b}}\), with \(\varvec{\lowercase {a}}=\varvec{\lowercase {r}}\) and \(\varvec{\lowercase {b}}=\varvec{\lowercase {x}}\). Because the approximation needs to be full rank for a working quasi-Newton method, an initial Jacobian \(\partial ^{k-m}_{\varvec{\lowercase {r}}} \varvec{\lowercase {x}}=-\varvec{\uppercase {I}}\) is used, which is the Jacobian of Gauss–Seidel iteration, as explained below Eq. (21). In the literature, however, the approximation for the inverse of the Jacobian \({\widehat{{\varvec{{\mathcal {R}}}}'^{-\hbox {1}}}}(\varvec{\lowercase {x}}^k)\) is usually rewritten using the identity \(\varvec{\lowercase {r}}=\tilde{\varvec{\lowercase {x}}}-\varvec{\lowercase {x}}\), giving

where the operator \(\partial _{\varvec{\lowercase {r}}}^k\tilde{\varvec{\lowercase {x}}}\) is constructed as explained in Sect. 4.3, with \(\varvec{\lowercase {a}}=\varvec{\lowercase {r}}\) and \(\varvec{\lowercase {b}}=\tilde{\varvec{\lowercase {x}}}\). In this way of explaining, no initial Jacobian is used, so \(\partial ^{k-m}_{\varvec{\lowercase {r}}} \tilde{\varvec{\lowercase {x}}}=\varvec{\uppercase {0}}\). It is worth mentioning that if instead of the inverse, the Jacobian \({\varvec{\mathcal {R}}}'(\varvec{\lowercase {x}}^k)\) is approximated, the Interface Quasi-Newton Least-Squares method (IQN-LS) is retrieved [51].

For an FSI simulation with a single step (e.g., a steady simulation), the generalized Broyden formula in Eq. (52) is used with \(m=k\) and without initial guess \(\partial ^{0}_{\varvec{\lowercase {r}}} \tilde{\varvec{\lowercase {x}}}=\varvec{\uppercase {0}}\), so

with

Note that \(\partial ^{k}_{\varvec{\lowercase {r}}} \tilde{\varvec{\lowercase {x}}}\) has at most rank k, while \({\widehat{{\varvec{{\mathcal {R}}}}'^{-\hbox {1}}}}(\varvec{\lowercase {x}}^k)\equiv \partial ^{k}_{\varvec{\lowercase {r}}} \varvec{\lowercase {x}}\) is full-rank.Footnote 4

In a time-dependent simulation, the secant information from the q previous time steps can be reused. As notation, the previous time steps are indicated with n, \(n-1\), \(\ldots\), \(n+1-q\), for the time step that is being calculated the superscript \(n+1\) is omitted and only the superscript k is used. The matrices \(\varvec{\uppercase {R}}^{\![\!k\!]\!}\) and \(\widetilde{\varvec{\uppercase {X}}}^{\![\!k\!]\!}\) are a concatenation of the matrices from the different time steps, giving

In this way, the information from each time step is treated equally, except when linear dependencies occur, because these are then removed by filtering, as will be explained below. The method thus satisfies all available secant conditions, i.e., from time step \(n+1\) and the q previous time steps. Consequently, m is equal to the number of columns in \(\varvec{\uppercase {R}}^{\![\!k\!]\!}\) and \(\widetilde{\varvec{\uppercase {X}}}^{\![\!k\!]\!}\), which is

if no filtering is applied. As no initial Jacobian \(\partial ^{k-m}_{\varvec{\lowercase {r}}} \tilde{\varvec{\lowercase {x}}}\) is used, the information from earlier time steps \(n < n+1-q\) is not considered. It is important to remark that the difference between the first \(\varvec{\lowercase {r}}\) or \(\tilde{\varvec{\lowercase {x}}}\) of a time step and the last one from the previous time step is not used. Only differences between vectors of the same time steps are taken into account. This approach ensures that the secant information matches with the meaning of the Jacobian that is being approximated, which is the derivative within a time step, and not between them.

The reuse parameter q has to be defined by the user. Reuse typically improves the performance, but too old data is no longer helping the convergence, and therefore an optimal value exists. In the literature, the existence of this parameter is often cited as a drawback of IQN-ILS, because the performance of the method would be sensitive to this parameter. However, by using filtering, the performance of the method is rendered rather insensitive to this parameter around the optimum, as is shown by numerical tests in Sect. 6 and in other work [52, 53]. Moreover, the parameter q allows the user to control how many time steps can be considered relevant, which is important in cases with rapid changes from one time step to the next, e.g., with multi-phase flows [54].

Matrix-free implementation Equation (69) is a symbolic notation to write IQN-ILS in the generalized Broyden framework, but this matrix should never be constructed or stored in the computer’s memory. One of the main benefits of IQN-ILS is its so-called matrix-free character, which means that no large square matrices need to be constructed or stored. The product of the approximation of the inverse of the Jacobian with \(\Delta \varvec{\lowercase {r}}^k=-\varvec{\lowercase {r}}^k\) in Eq. (67) is symbolically calculated as

In practice, the product \(\varvec{\lowercase {c}}^k={\varvec{\uppercase {R}}^{\![\!k\!]\!}}^+ \ \Delta \varvec{\lowercase {r}}^k\) of the pseudo-inverse of \(\varvec{\uppercase {R}}^{\![\!k\!]\!}\) with \(\Delta \varvec{\lowercase {r}}^k\) is calculated using the economy-size QR decomposition and back-substitution, resulting in

as explained in Algorithm 1. The vector \(\Delta \varvec{\lowercase {r}}^k\) is thus written as a linear combination of the \(\delta \varvec{\lowercase {r}}^i\), resulting in coefficients \(\varvec{\lowercase {c}}^k\). As each \(\delta \varvec{\lowercase {r}}^i\) has a corresponding \(\delta \tilde{\varvec{\lowercase {x}}}^i\), the change in \(\tilde{\varvec{\lowercase {x}}}\) corresponding with \(\Delta \varvec{\lowercase {r}}^k\) can be obtained by calculating \(\widetilde{\varvec{\uppercase {X}}}^{\![\!k\!]\!}\varvec{\lowercase {c}}^k\).

The complete procedure can be found in Algorithm 2, with a relaxation step with factor \(\omega\) on line 7, for the case in which \(\varvec{\uppercase {R}}^{\![\!k\!]\!}\) and \(\widetilde{\varvec{\uppercase {X}}}^{\![\!k\!]\!}\) do not have any columns, e.g., at the beginning of the simulation.

Using \(\varvec{\uppercase {R}}^{\![\!k\!]\!}=\widetilde{\varvec{\uppercase {X}}}^{\![\!k\!]\!}-\varvec{\uppercase {X}}^{\![\!k\!]\!}\), where \(\varvec{\uppercase {X}}^{\![\!k\!]\!}\) is defined analogous to Eq. (71), Eq. (73) can be rewritten as

This shows that \(\Delta \varvec{\lowercase {r}}^k\) is split into a part \(\varvec{\uppercase {R}}^{\![\!k\!]\!}{\varvec{\uppercase {R}}^{\![\!k\!]\!}}^+\Delta \varvec{\lowercase {r}}^k\) in the column span of \(\varvec{\uppercase {R}}^{\![\!k\!]\!}\) and a part \((\varvec{\uppercase {I}}-\varvec{\uppercase {R}}^{\![\!k\!]\!}{\varvec{\uppercase {R}}^{\![\!k\!]\!}}^+)\Delta \varvec{\lowercase {r}}^k\) perpendicular to it. The secant-based approximate Jacobian \(\varvec{\uppercase {X}}^{\![\!k\!]\!}{\varvec{\uppercase {R}}^{\![\!k\!]\!}}^+\) is applied to the former, while Gauss–Seidel iteration with Jacobian \(-\varvec{\uppercase {I}}\) is used for the latter.

Filtering When columns of \(\varvec{\uppercase {R}}^{\![\!k\!]\!}\) are linearly dependent up to a tolerance \(\epsilon _f\), the diagonal elements of \(\varvec{\uppercase {R}}_R^{\![\!k\!]\!}\) in Eq. (74) become small and this system can no longer be solved accurately. Hence, an essential component of IQN-ILS is filtering, especially when data from previous time steps is reused [50]. Columns of \(\varvec{\uppercase {R}}^{\![\!k\!]\!}\) that are linearly dependent up to the tolerance \(\epsilon _f\) need to be removed together with the matching columns in \(\widetilde{\varvec{\uppercase {X}}}^{\![\!k\!]\!}\). As the newest information is stored on the left-hand side in \(\varvec{\uppercase {R}}^{\![\!k\!]\!}\), a \(\delta \varvec{\lowercase {r}}^i\) that is a linear combination of newer \(\delta \varvec{\lowercase {r}}^j\) (j > i) is removed. Columns can be removed if \(\left|R^{\![\!k\!]\!}_{R,ii}\right|<\epsilon _f\) (QR0) or \(\left|R^{\![\!k\!]\!}_{R,ii}\right|<\epsilon _f\left\Vert \varvec{\uppercase {R}}^{\![\!k\!]\!}_R\right\Vert _2\) (QR1), with \(R^{\![\!k\!]\!}_{R,ii}\) referring to a diagonal element of \(\varvec{\uppercase {R}}^{\![\!k\!]\!}_R\) [55]. The advantage of the first approach is that the tolerance \(\epsilon _f\) can be set by perturbing \(\varvec{\lowercase {x}}\) with smaller and smaller changes until the change in \(\tilde{\varvec{\lowercase {x}}}\) is no longer smooth, but numerical noise. In this case, the tolerance \(\epsilon _f\) can be considered as a measure of how accurate the flow solver and structural solver are calculating their solution. This filtering procedure is shown step by step in Algorithm 3. Obviously, it will be difficult to obtain convergence of the coupling iterations to a level that is lower than \(\epsilon _f\).

Alternative filtering approaches are algebraic QR filtering and POD filtering [55]. In the algebraic filtering method (QR2), a column is removed if the diagonal element \(\left|R^{\![\!k\!]\!}_{R,ii}\right|<\epsilon _f\left\Vert \varvec{\uppercase {R}}^{\![\!k\!]\!}_{R,i}\right\Vert _2\), with \(\varvec{\uppercase {R}}^{\![\!k\!]\!}_{R,i}\) referring to column i of matrix \(\varvec{\uppercase {R}}^{\![\!k\!]\!}_R\). In the POD filtering, the eigenvalues of the autocorrelation matrix of \(\varvec{\uppercase {R}}^{\![\!k\!]\!}\) are used to truncate old data. The numerical tests in [55] showed that algebraic QR filtering worked better than POD filtering or filtering using \(\left|R^{\![\!k\!]\!}_{R,ii}\right|<\epsilon _f\left\Vert \varvec{\uppercase {R}}^{\![\!k\!]\!}_R\right\Vert _2\). However, the comparison with \(\left|R^{\![\!k\!]\!}_{R,ii}\right|<\epsilon _f\) was not performed and remains as an interesting future work. Because the latter is directly related to the solver tolerances themselves, as explained above, it has been chosen for this work.

Another reason to do filtering is to limit the number of secant conditions in cases with few degrees of freedom on the interface. Typically, \(m \ll n_x\), but with only few degrees of freedom on the interface, the oldest columns of \(\varvec{\uppercase {R}}^{\![\!k\!]\!}\) and \(\widetilde{\varvec{\uppercase {X}}}^{\![\!k\!]\!}\) need to be removed such that there are at most \(n_x\) columns, to avoid an overdetermined Jacobian.

Computational complexity and storage The additional storage required for the IQN-ILS method is the matrices \(\varvec{\uppercase {R}}^{\![\!k\!]\!}\) and \(\widetilde{\varvec{\uppercase {X}}}^{\![\!k\!]\!}\), both \(\in \mathbb {R}^{n_x\times m}\). Temporary storage is necessary for \(\varvec{\uppercase {Q}}_R^{\![\!k\!]\!}\in \mathbb {R}^{n_x\times m}\) and \(\varvec{\uppercase {R}}^{\![\!k\!]\!}_R\in \mathbb {R}^{m\times m}\), and the small vector \(\varvec{\lowercase {c}}^k\in \mathbb {R}^{m}\). The storage thus scales linearly with the number of degrees of freedom in the interface’s discretization. Furthermore, m can be reduced compared to Eq. (72) due to filtering. A rule of thumb is that it is typically not beneficial to include more than 50 columns.

The economy-size QR decomposition of \(\varvec{\uppercase {R}}^{\![\!k\!]\!}\) has at most a complexity of \(\mathcal {O}(n_x m^2)\) if the fast Givens method or the Householder method is used [38]. The matrix–vector product in the right-hand side of Eq. (74) has a computational complexity of \(\mathcal {O}(n_x m)\) and solving the triangular system a complexity of \(\mathcal {O}(m^2)\). Consequently, also the computational complexity scales linearly with \(n_x\) and is limited. In numerical tests, the IQN-ILS algorithm normally accounts for less than 1% of the total CPU time.

5.2 IBQN-LS

IBQN-LS stands for Interface Block Quasi-Newton method with approximation of the Jacobians using Least-Squares models (initially called reduced-order models) [21]. It uses the formulation in Eq. (25) and solves Eq. (26) and Eq. (27) in turn for \(\Delta \varvec{\lowercase {x}}^k\) and \(\Delta \varvec{\lowercase {y}}^k\). Low-rank approximations for \(\varvec{\mathcal {F}}'(\varvec{\lowercase {x}}^k)\) and \(\varvec{\mathcal {S}}'(\varvec{\lowercase {y}}^k)\) are constructed using the generalized Broyden method with m as in Eq. (72) and without initial value for the Jacobian, so like in IQN-ILS. The reuse of previous time steps and the filtering are also applied in the same way as explained above. Consequently, this technique enforces all secant conditions from the current and q previous time steps, for both the flow solver and the structural solver.

For the approximate Jacobian of the flow solver \({\widehat{\varvec{{\mathcal {F}}}'}}(\varvec{\lowercase {x}}^k)\), the generalized Broyden framework is applied using \(\varvec{\lowercase {a}}=\varvec{\lowercase {x}}\) and \(\varvec{\lowercase {b}}=\tilde{\varvec{\lowercase {y}}}\). For m as in Eq. (72) and without initial value for the Jacobian this can be written symbolically as

with

where the secant information from the q previous time steps is combined with that from the current time step

A symbolic formulation of the approximation \({\widehat{\varvec{{\mathcal {S}}}'}}\) in the generalized Broyden framework can be obtained in a similar way, using \(\varvec{\lowercase {a}}=\varvec{\lowercase {y}}\) and \(\varvec{\lowercase {b}}=\tilde{\varvec{\lowercase {x}}}\).

A disadvantage of this technique is that two linear systems need to be solved in each coupling iteration. In the original version, the \(n_x\times n_x\) and \(n_y\times n_y\) matrices corresponding with these systems were explicitly constructed using the symbolic notations as in Eq. (76) and they were solved with a direct linear solver [21]. However, by adopting an iterative linear solver like GMRES, only a procedure to calculate the product of the approximate Jacobians with a vector is required [56]. In practice, the number of iterations for the iterative solver is close to the number of columns used for the approximate Jacobians. Alternatively, the Woodbury matrix identity can be used to obtain a closed expression for the update [57].

Matrix-free implementation This matrix-free procedure will be explained here for the flow solver. When the product of \({\widehat{\varvec{{\mathcal {F}}}'}}(\varvec{\lowercase {x}}^k)\equiv \partial ^{k}_{\varvec{\lowercase {x}}} \varvec{\lowercase {y}}\) with a vector \(\Delta \varvec{\lowercase {x}}^k\) needs to be calculated during the iterative solution of Eqs. (26) or (27), this can symbolically be written as

For the practical implementation, Algorithm 1 is followed and this computation is split in two parts by the introduction of a coefficient vector \(\varvec{\lowercase {c}}^k\), giving

with \(\varvec{\lowercase {c}}^k\) the solution of

The last part is the least-squares solution to an overdetermined system that can be solved efficiently by calculating the economy-size QR decomposition, followed by using back-substitution.

To summarize this procedure, the \(\Delta \varvec{\lowercase {x}}^k\) is decomposed as a linear combination of the columns in \(\varvec{\uppercase {X}}^{\![\!k\!]\!}\), then the observation is made that columns in \(\varvec{\uppercase {X}}^{\![\!k\!]\!}\) and \(\widetilde{\varvec{\uppercase {Y}}}^{\![\!k\!]\!}\) with the same index form a secant pair, such that the result can be approximated as the same linear combination of the columns in \(\widetilde{\varvec{\uppercase {Y}}}^{\![\!k\!]\!}\), as shown in Eq. (80). The complete procedure can be found in Algorithm 4.

Computational complexity and storage Compared to IQN-ILS, IBQN-LS requires approximately twice the memory, as the data for two approximate Jacobians needs to be stored. Furthermore, even though the matrix-free procedure with the iterative linear solver is faster than explicit matrix construction and direct linear solver, the computing time is higher than for IQN-ILS, where none of this is required. Nevertheless, the time required for the coupling algorithm scales linearly with \(n_x\) and \(n_y\) and thus remains small compared to that of the actual solvers. The solution of the linear systems could be avoided by writing the solution to Eqs. (26) and (27) symbolically, using matrix inverses and applying the Woodbury matrix identity as in [57]. In this way, the size of the matrices that have to be inverted is reduced from \(n_x\) and \(n_y\) to m.

5.3 MVQN

MVQN is the abbreviation for Multi-Vector update Quasi-Newton [58]. This method is based on the IBQN-LS method and is even identical to it in the first time step. However, the differences appear when data from previous time steps is included. MVQN considers the current Jacobian as the sum of the Jacobian from the previous time step plus a rank-k update. This update is then determined by enforcing the secant conditions from the current time step and minimizing the Frobenius norm of the update. This coincides with the generalized Broyden method with \(m=k^{n+1}\) and the initial Jacobian equal to the one from the previous time step.

Considering again the approximate Jacobian of the flow solver \({\widehat{\varvec{{\mathcal {F}}}'}}\), the generalized Broyden framework is applied using \(\varvec{\lowercase {a}}=\varvec{\lowercase {x}}\) and \(\varvec{\lowercase {b}}=\tilde{\varvec{\lowercase {y}}}\). The value m is now \(k=k^{n+1}\) and the initial value for the Jacobian is the one for the previous time step, giving

Using the definition of the pseudo-inverse in Eq. (36), this can be reformulated as

which corresponds with the original formulation in [58]. However, analyzing this method is most straightforward when considering Eq. (81). If this approximate Jacobian is multiplied with a vector \(\Delta \varvec{\lowercase {x}}\), then the secant information from the most recent time step is used for the part for which it is available, i.e., within the column span of the matrix \(\varvec{\uppercase {X}}^{k}\). The previous approximate Jacobian is only multiplied with the leftover part of \(\Delta \varvec{\lowercase {x}}\), i.e., the part orthogonal to the column span of \(\varvec{\uppercase {X}}^{k}\). So, even if secant information from previous time steps is available for the first part of \(\Delta \varvec{\lowercase {x}}\), it will not be used. This relates to the difference between the least-squares and multi-vector approach explained in Sect. 4.4. The matrix \({\widehat{\varvec{{\mathcal {S}}}'}}\) is constructed in a similar way

Computational complexity and storage The main benefits of this method are that no parameter q is required and that filtering with a tolerance \(\epsilon _f\) is typically less essential as only a relatively small number of secant conditions from the most recent time step are considered. However, this comes at a significant cost, as the matrices \({\widehat{\varvec{{\mathcal {F}}}'}}\) and \({\widehat{\varvec{{\mathcal {S}}}'}}\) are constructed and stored in memory, such that the algorithm scales with \(n_x^2\) and \(n_y^2\), both in terms of memory use as in computational complexity. Combined with the linear systems that have to be solved in each coupling iteration, this becomes expensive compared to the actual solver for a reasonably large number of degrees of freedom on the interface (e.g., more than \(10^4\)). A linearly scaling adaptation is the RandomiZed Multi-Vector Quasi-Newton method (MVQN-RZ) [57], which will be explained in more detail with the other linearly scaling multi-vector methods at the end of Sect. 5.4.

5.4 IQN-MVJ