Abstract

In the developing world, parasites cause several serious health problems and infect humans at relatively high rates. Manual light microscopy remains the gold standard for diagnosing parasitic species, but it is time-consuming, tedious, and difficult to perform consistently, even though consistency is essential in parasitological classification. It is therefore worthwhile to apply deep learning to these challenges. Convolutional Neural Networks and digital slide scanning show promising results that could transform the clinical parasitology laboratory by automating the classification and detection of parasites. Image analysis using deep learning methods has the potential to achieve high efficiency and accuracy. For this review, we conducted a thorough investigation of deep learning based image detection and classification of various parasites. Online databases and digital libraries such as ACM, IEEE, ScienceDirect, Springer, and Wiley Online Library were searched to identify related papers. After screening 200 research papers, 70 met our filtering criteria and were included in this study. This paper presents a comprehensive review of existing parasite classification and detection methods and models in chronological order, from traditional machine learning based techniques to deep learning based techniques. We also summarize machine learning and deep learning methods along with dataset details, evaluation metrics, method limitations, and future scope over the past decade. The majority of the technical publications from 2012 to the present have been examined and summarized.
In addition, we discuss the future directions and challenges of parasite classification and detection to help researchers understand the existing research gaps. Further, this review supports researchers who require an effective and comprehensive understanding of deep learning techniques, research, and future trends in the field of parasite detection and classification.


1 Introduction

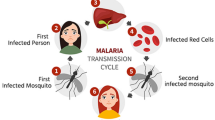

Parasites are organisms that grow in or on a host and obtain food from the host. Most parasites are too small to be seen clearly with the naked eye; however, they can be observed under a microscope. The parasites that cause disease in humans include protozoa, helminths and ectoparasites. It is estimated that 357 million cases of morbidity, mostly caused by the protozoa Cryptosporidium, Entamoeba, and Giardia, resulted in 33,900 deaths and the loss of 2.94 million disability-adjusted life years per year [1], and more than 1.4 billion people are infected with helminths [2]. Various other parasites are pathogenic and also cause diseases in plants and other living organisms. They are responsible for various deadly diseases such as giardiasis (spread through contaminated water), toxoplasmosis (spread by cats), and malaria [3]. One helminth parasite alone infects more than 800 million people globally [4]. In developing regions, including East Asia, South America, and Saharan Africa, there are more than 100 human intestinal parasite species that produce 200,000 eggs per day, and 41,500 human deaths are reported annually due to parasitic infections [2, 5]. Infections caused by these parasites display a wide range of clinical manifestations, ranging from malnutrition to asymptomatic anaemia, and can even cause cancer [6, 7]. Conventional light microscopic examination remains the gold standard method for the diagnosis of several parasitic diseases, such as malaria [8].

These methods have numerous shortcomings that can seriously sway the results of clinical examinations, such as variable sensitivity and high resource and time consumption. Another drawback of traditional clinical parasitology classification and detection is the difficulty of maintaining staff competency and engagement. The clinical parasitology laboratory also suffers from two major staffing problems: first, educated technologists increasingly gravitate toward technology-driven and automated laboratory disciplines, and second, there is a shortage of adequately trained personnel [9]. Parasite diagnoses are often based on clinical signs and symptoms, which are susceptible to human error; this may lead to higher mortality and to the purchase of unnecessary drugs, causing economic burden [10] and despondency. Consequently, alternative methods are required to generate quality diagnostic results. To date, to the best of our knowledge, there have been no significant technological advancements for the detection of protozoa in human stool specimens using permanently stained slides (e.g., trichrome, modified acid-fast, and modified safranin).

The objective of this review is to establish the vital role of deep learning in parasite microscopic image classification and detection, so that researchers can gain a clear picture of deep learning for parasitology from this survey. Further, this systematic review of deep learning models for microscopic images helps readers discover more about recent growth in this field. In this review, we analysed deep learning techniques applied to parasitological images and made the following contributions:

- We demonstrate how the performance of a pre-trained Convolutional Neural Network (CNN) improves by fine-tuning different layers.

- We demonstrate the potential of CNNs and transfer learning, along with state-of-the-art architectures, to detect and classify human parasites from images.

- We cover challenges faced in deep learning, including small-scale training data, noisy images, data interpretability, model compression, uncertainty scaling, over-fitting and vanishing gradient problems.

This review provides a broad survey of the most important aspects of parasite microscopic image analysis using deep learning. The organization of this review is depicted in Fig. 1. Section 2 describes the fundamental concepts of deep learning, which include convolution layer components, methods and models, and evaluation metrics. In Sect. 3, we discuss the methodology used to conduct this review, including the research questions and the paper retrieval and filtering process. Sections 4 and 5 provide a detailed gist of each machine learning and deep learning method and model, respectively, as elaborated in the selected research papers. A summary of each research paper and its future scope is presented in the form of tables. In Sect. 6, various results are discussed that provide research directions in parasite image detection and classification. Finally, Sect. 7 presents the conclusion and future scope.

2 Background

The following subsections provide a succinct explanation of various concepts and technologies associated with the work presented in this paper. Section 2.1 provides an overview of deep learning, Sect. 2.2 discusses CNNs, and Sect. 2.3 provides a gist of CNN models and parasite datasets.

2.1 Deep Learning Overview

In this section, we present the fundamentals of deep learning, which addresses a wide range of issues including medical image detection, segmentation and classification. The section begins with an introduction to deep learning, goes on to cover various techniques, and concludes with transfer learning techniques and models built on CNNs to increase the effectiveness of automatic parasite identification and categorization. Deep learning is a subfield of machine learning inspired by the structure and function of the neural networks of the human brain. In medical diagnosis, deep learning methods and models operate on large amounts of medical image data to map the given dataset to specific labels (Fig. 2).

Comparison between two techniques: a traditional computer vision workflow vs. b deep learning workflow. Traditional computer vision algorithms employ pre-processing, feature extraction, and feature selection to achieve classification, whereas deep learning techniques automate several of these tasks

Deep learning models are built from a large number of artificial neural network layers, with each layer carrying out a different evaluation based on the information it receives [11, 12]. Conventional machine learning algorithms employ several steps to achieve classification, such as pre-processing, feature extraction, feature selection and learning. Conversely, deep learning techniques automate several of these tasks [12, 13], as shown in Fig. 2. Deep learning has gained popularity in recent years due to the exponential growth of data [14]. Deep learning methods and models have the potential to enhance human lives through accurate diagnosis, including pathogen detection and classification for malaria, intestinal parasites, tuberculosis, etc. Since the onset of the COVID-19 pandemic, deep learning (DL) has also played a vital role in the automatic diagnosis of the novel coronavirus [15]. DL methods and models include the Deep Feedforward Neural Network (DFNN), Convolutional Neural Network (CNN) [16], Recurrent Neural Network (RNN) and auto-encoder. An overview of prominent DL model architectures, which can be used to enhance the efficiency of automatic parasite classification and detection, is depicted in Fig. 3. Due to its high accuracy, speed and flexibility, deep learning is being applied in the microscopic examination of parasite species. The end results may include classification, detection and segmentation. Classification is applied to recognize multiple parasite species where microscopic images contain mixed infections. Detection is used to locate individual parasites or groups of similar parasites. Segmentation groups similar regions or segments of a parasitic image under their respective classes. Classification and detection of microscopic parasites are usually performed by CNN and R-CNN series models [48, 70].

2.2 Convolutional Neural Networks (CNN)

The CNN structure performs computations modelled on the structure of the human visual cortex and is a popular choice for automatic extraction of relevant features from large amounts of data [17]. A CNN is designed as a sequence of convolution layers and sampling layers followed by a fully connected layer, as shown in Fig. 3a. The input to a CNN model is arranged in three dimensions (height, width, and depth), m × m × r, where the depth (r) represents the number of channels [14]. In each convolutional layer, several filters are applied; these filters are also organized in 3D (n × n × q), where n and q are smaller than m and r, respectively. The convolutional layer computes the dot product of its inputs and weights, as shown in Eq. 1.

To accelerate the training process and to reduce overfitting, down-sampling layers are applied to the extracted feature maps. Finally, mid- and low-level features are fed to fully connected layers to obtain a high level of abstraction.

2.2.1 CNN Layers

This subsection discusses each layer of the basic CNN architecture and its function in parasitic image classification and detection.

a. Convolution Layer: The convolution layer is an essential component of every CNN architecture, and each convolution layer comprises several filters (kernels). Convolution operations are performed with these kernels over the N-dimensional matrices of an input image to obtain output features. During the forward pass, the filters are first convolved over the entire input image horizontally and vertically; then, the product of the input image and the filters is determined. This generates a two-dimensional feature map that shows the response of each filter at every spatial location of the image. The process is repeated across the input image until the filter can slide no further.

Stride is a CNN filter parameter that regulates how far the filter moves across the image at each step. For instance, if the stride is set to 1, the filter moves one pixel at a time. Since the filter size and stride together determine the encoded output volume, the stride is set to a whole integer rather than a fraction or decimal. Padding adds a border to the input image so that information at the image border is retained; it is a technique to maintain the spatial size of the image, which would otherwise shrink as a result of convolution and strides. Consider an input parasitic image of size H×H×C, and let F denote the filter size, S the stride, and P the padding. The following formula can be used to determine the output image size:

$$I_{out} = \frac{H - F + 2P}{S} + 1$$ (2)

The output size of the parasitic image will be \(I_{out} \times I_{out} \times C_{out}\), where \(C_{out}\) is the number of filters.

b. Pooling Layer: Pooling is another important component of the CNN architecture and reduces the dimensionality of the feature map. This approach shrinks a large joint feature representation into a smaller one that retains the valuable information. As in the convolution layer, a window slides over the feature map. Different pooling methods can be applied in different pooling layers, including min pooling, max pooling, average pooling, global average pooling, global max pooling and tree pooling. Three familiar pooling operations are shown in Fig. 4.

c. Activation Function: Activation functions are nonlinear functions, and neural networks use different activation functions to map input values to outputs. The input to an activation function is computed as the dot product of the weights and inputs plus a bias.

d. Loss Function: In a CNN architecture, the final classification is obtained from the output layer. A CNN model uses a loss function in the output layer to evaluate the prediction error during training. This error is then used to optimize the CNN learning process. The loss function is computed from two quantities: the predicted output of the CNN model and the actual output.
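The layer operations described in this subsection can be sketched in plain Python. The following is a minimal illustration rather than an implementation from any of the reviewed papers: it assumes a single-channel square input, a single square kernel, and non-overlapping max pooling, and all function names are hypothetical. `conv_output_size` follows Eq. 2 with F interpreted as the filter side length.

```python
import math


def conv_output_size(h, f, p, s):
    """Output side length per Eq. 2; floor division mirrors common library behaviour."""
    return (h - f + 2 * p) // s + 1


def conv2d(image, kernel, stride=1, padding=0):
    """Slide one square kernel over one square, single-channel image."""
    h, f = len(image), len(kernel)
    size = h + 2 * padding
    # Zero-pad the input on every side.
    padded = [[0.0] * size for _ in range(size)]
    for i in range(h):
        for j in range(h):
            padded[i + padding][j + padding] = float(image[i][j])
    out = conv_output_size(h, f, padding, stride)
    # Each output value is the sum of element-wise products under the kernel.
    return [
        [
            sum(
                padded[i * stride + a][j * stride + b] * kernel[a][b]
                for a in range(f)
                for b in range(f)
            )
            for j in range(out)
        ]
        for i in range(out)
    ]


def relu(feature_map):
    """Activation function: keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window."""
    n = len(feature_map) // size
    return [
        [
            max(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(n)
        ]
        for i in range(n)
    ]


def softmax(logits):
    """Turn output-layer scores into class probabilities."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, true_index):
    """Loss computed from the predicted probabilities and the actual class."""
    return -math.log(probs[true_index])
```

As a worked instance of Eq. 2, a 32 × 32 input with F = 5, P = 0 and S = 1 gives (32 − 5 + 0)/1 + 1 = 28, so the feature map is 28 × 28 per filter. Cross-entropy is used here only as one common choice of loss function.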

2.2.2 Evaluation Metrics

Evaluation metrics play a major role in obtaining an optimized classifier to detect and classify parasite species using deep learning. The performance metrics for parasitic image classification generally include Accuracy, Sensitivity or Recall, Specificity, Precision, F1-Score, J Score, False Positive Rate (FPR) and Area Under the ROC Curve (AUC). The evaluation metrics for parasitic egg detection include average precision (AP), the precision-recall curve, mean average precision (mAP) and area under the curve (AUC). Accuracy evaluates how often the predicted value matches the target value. Sensitivity is the ratio of actual positives that are correctly identified, whereas specificity is the ratio of actual negatives that are correctly identified. TP and TN are the numbers of positive and negative instances, respectively, that are correctly classified. FN is the number of positive instances misclassified as negative, and FP is the number of negative instances misclassified as positive. The performance of deep learning based parasitic image classification and detection is measured with the following formulas:

a. Accuracy measures the ratio of correct predictions to the total number of instances evaluated.

$$\text{Accuracy} = \frac{\text{TP} + \text{TN}}{\text{TP} + \text{TN} + \text{FP} + \text{FN}}$$ (3)

b. Sensitivity or Recall measures the fraction of positive instances that are correctly classified.

$$\text{Sensitivity} = \frac{\text{TP}}{\text{TP} + \text{FN}}$$ (4)

c. Specificity measures the fraction of negative instances that are correctly classified.

$$\text{Specificity} = \frac{\text{TN}}{\text{FP} + \text{TN}}$$ (5)

d. Precision measures the fraction of instances predicted as positive that are actually positive.

$$\text{Precision} = \frac{\text{TP}}{\text{TP} + \text{FP}}$$ (6)

e. F1-Score measures the harmonic mean of recall and precision.

$$\text{F1-Score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$ (7)

f. J Score is also called Youden's J statistic.

$$\text{J-Score} = \text{Sensitivity} + \text{Specificity} - 1$$ (8)

g. False Positive Rate (FPR) measures the probability of a false alarm.

$$\text{FPR} = 1 - \text{Specificity}$$ (9)

h. Area Under the ROC Curve (AUC) is used to compare learning algorithms and to construct an optimal learning model. The following equation is used to compute the AUC value for a two-class problem, where \(S_{p}\) is the sum of the ranks of all positive examples, and \(n_{p}\) and \(n_{n}\) are the numbers of positive and negative examples, respectively.

$$\text{AUC} = \frac{S_{p} - n_{p}(n_{p}+1)/2}{n_{p}\, n_{n}}$$ (10)
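The metrics in Eqs. 3 to 10 can be computed directly from the four confusion-matrix counts and, for AUC, from the ranks of the classifier scores. The sketch below is illustrative only: the function names are hypothetical, labels are assumed to be 1 for positive and 0 for negative, and tied scores are given their average rank (an assumption not stated in the text).

```python
def classification_metrics(tp, tn, fp, fn):
    """Eqs. 3-9 computed from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (fp + tn)
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
        "j_score": sensitivity + specificity - 1,
        "fpr": 1 - specificity,
    }


def auc_from_scores(scores, labels):
    """Eq. 10: rank-based AUC for a two-class problem (labels 1 = positive, 0 = negative)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        # Find the run of tied scores and give each its average 1-based rank.
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    n_p = sum(1 for y in labels if y == 1)
    n_n = len(labels) - n_p
    s_p = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (s_p - n_p * (n_p + 1) / 2) / (n_p * n_n)
```

For example, with TP = 40, TN = 45, FP = 5 and FN = 10, accuracy is 85/100 = 0.85, sensitivity is 40/50 = 0.8 and specificity is 45/50 = 0.9.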

2.3 CNN Models and Parasite Datasets

Over the last two decades, numerous CNN models have been proposed to perform different tasks [35]. CNN architectures have been updated continually from 1989 to the present day. These updates include regularization, structural reformulation, parameter optimization and more. In this subsection, we discuss the most popular CNN models, from AlexNet to EfficientNet, as shown in Fig. 5. An overview of popular CNN architectures, along with model depth, dataset used, parameters, error rate and input size, is presented in Table 1.

2.3.1 Parasite Datasets

Various parasite datasets have been used to improve deep learning methods and models for diagnosing parasitic diseases from microscopic images; these are summarized and described in Table 2. The table includes datasets of Plasmodium, Toxoplasma and intestinal parasites. This review also covers some important datasets of other parasites, such as Leishmania, Babesia and Trichomonad. These datasets are mostly used for classification and detection tasks. Some publicly available microscopic images of parasites from representative datasets for deep learning are shown in Fig. 6.

Three publicly available protozoan parasite datasets for deep learning. a Intestinal parasitic dataset [29]. The dataset contains 11 types of parasitic eggs from faecal smear samples, with 1000 images per category. b Parasite images from the dataset in [38]. This dataset includes images of six parasite species (Toxoplasma, Leishmania, Babesia, Plasmodium, Trichomonad, Trypanosome) together with RBC and leukocyte host cells in cropped patches. c Dataset of cropped image patches of Plasmodium falciparum parasitized and uninfected RBCs [17]

3 Survey Methodology

To organize a systematic review, first, we frame the research questions for conducting this research and then present our analysis of the relevant deep learning-based research papers in the concerned domain, in chronological order. Next, we demonstrate the summary of existing methods and models along with the results based on the review of some potential techniques. Finally, we introduce the future directions and outline prominent challenges in parasites detection and classification. We reviewed 70 related technical papers from 2012 to the present. This work will act as a guide to researchers for a comprehensive understanding of the present state-of-the-art, future challenges and trends in the research area of parasites detection and classification.

3.1 Research Questions

This review aims to provide insights into cutting-edge machine learning and deep learning methods for parasite detection and classification based on microscopic images, as well as an analysis and summarization of previous work on deep computer vision. From that perspective, the authors develop the research questions to be addressed through the research methodologies implemented by researchers. These research questions are shown in Table 3. In the end, this systematic review paper also responds to these research questions posed here.

3.2 Paper Retrieval and Filtering Process

We conducted an investigation of deep learning applied to image detection and classification of various parasites. As shown in Fig. 7, online databases and digital libraries were searched in order to collect sufficient relevant papers. The focus was on collecting papers from the most reputed publishers and libraries, such as ACM, IEEE, ScienceDirect, Springer, and Wiley Online Library.

The search and filtering process for the papers in this review is shown in Fig. 8. In the retrieval stage, we first searched ACM, IEEE, ScienceDirect, Springer, and Wiley Online Library using combinations of the following words and phrases: “machine learning or parasites”, “deep learning or parasites”, and “CNN or microscopic images”, along with detection and classification; 298 papers were retrieved. We gathered an additional 50 papers from the references of the retrieved papers. In total, we collected 348 papers in the searching stage. In the filtering stage, we removed papers in two steps. In the first step, we checked whether each paper was duplicated, and 21 papers were excluded on this account. In the second step, 54 papers were selected for traditional machine learning, 48 papers for deep learning, 15 for both classical image processing and traditional machine learning, and 10 papers related to potential methods, which include visual transformer-based methods.

4 Traditional Machine Learning Based Methods and Models

In recent decades, traditional ML methods have gained popularity as a research area and have been applied in a variety of fields, including natural language processing (spam detection, text mining) and computer vision (face recognition, image detection and classification). The majority of ML-based techniques and models used today are for object recognition. Related efforts on parasite identification and classification using ML are discussed chronologically in Sect. 4.1.

4.1 Machine Learning Related Works in Proposed Field

This section demonstrates related works on parasite detection and classification based on ML, including methods, models, results, and experimental data collection.

In 2001, Yang et al. [31] proposed a framework based on an artificial neural network (ANN) classifier and digital image processing techniques for automatic detection of human helminth eggs in microscopic faecal specimens. Digital image processing techniques were applied to extract the morphometric characteristics of human parasite eggs from microscopic images of faecal specimens. The dataset contained 82 microscopic images of seven common human helminth eggs, which were used to train the proposed model. The proposed ANN model identified human helminth eggs in two stages. In the first stage, ANN-1 isolated eggs from confusing artefacts, and in the second stage, ANN-2 classified eggs by species, as shown in Fig. 9.

Overall processing steps of the proposed ANN-based models for isolation and classification of helminth eggs from the obtained images. a Digital image processing methods are applied to obtain meaningful entities and extract their features. b A two-stage ANN is applied to classify them based on their features

In the same year, Tchinda et al. [32] presented a machine learning technique to recognize intestinal parasite cysts from microscopic images. A probabilistic neural network, an effective machine learning approach for classification problems, was trained on image pixel features. In this approach, parasites were separated from the microscopic images and then resized to 12 × 12 pixel images. Projection onto a principal component analysis basis was used to reduce the dimensionality. The proposed model was trained on 540 human parasite cyst images and successfully classified intestinal parasites into 9 different kinds.
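A probabilistic neural network can be read as a Parzen-window classifier: each class scores a query by the average Gaussian kernel response over its training vectors, and the highest-scoring class wins. The sketch below illustrates only that general idea and is not the implementation of [32]; the function name, the smoothing parameter `sigma`, and the two-dimensional toy features (standing in for PCA-projected pixel vectors) are all assumptions.

```python
import math


def pnn_classify(x, train_vectors, train_labels, sigma=0.5):
    """Return the class whose training vectors give the highest mean Gaussian response."""
    totals = {}
    counts = {}
    for vector, label in zip(train_vectors, train_labels):
        squared_distance = sum((a - b) ** 2 for a, b in zip(x, vector))
        response = math.exp(-squared_distance / (2 * sigma ** 2))
        totals[label] = totals.get(label, 0.0) + response
        counts[label] = counts.get(label, 0) + 1
    # Average per class so that larger classes do not win by size alone.
    return max(totals, key=lambda label: totals[label] / counts[label])


# Hypothetical projected feature vectors for two cyst classes.
train_vectors = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (6.0, 5.0)]
train_labels = ["cyst_a", "cyst_a", "cyst_b", "cyst_b"]
print(pnn_classify((0.2, 0.3), train_vectors, train_labels))
```

The smoothing parameter controls how far each training example's influence reaches; in practice it would be tuned on held-out data.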

In 2002, K. W. Widmer et al. [33] proposed an ANN-based system for Cryptosporidium parvum oocyst detection to reduce analysis time and achieve high diagnostic accuracy. A total of 525 images of labelled oocysts, fluorescent microspheres, and other miscellaneous non-oocyst objects were collected and employed in the training of the ANN. Each type of digital image was split into 20% for training datasets and 80% for test datasets. On the test dataset, the correct identification of authentic oocyst images ranged from 80 to 97%, and the correct identification of non-oocyst images ranged from 77 to 82%.

Widmer et al. [34] developed an ANN-based model for automatic identification of Cryptosporidium oocyst and Giardia cyst digital images. The digital images were captured using a camera at ×400 magnification and converted into a binary array. The ANN for Cryptosporidium oocysts was trained with 1,586 images, whereas the ANN for Giardia cysts was trained with 2,431 images. After training, these networks were validated with 500 unseen images (250 positives, 250 negatives) of Cryptosporidium oocysts and 282 unseen images (232 positives, 50 negatives) of Giardia cysts. Experimental results show that the ANNs correctly identified the Cryptosporidium oocyst and Giardia cyst images with accuracies of 91.8% and 99.6%, respectively.

Chen et al. [35] proposed a machine learning model that classified and counted bacterial colonies in Petri dish images. The model recognized both achromatic and chromatic images effectively. A Support Vector Machine was used for classification based on morphological features. Two types of Petri dish were used in the experiment. The proposed model achieved comparable performance, supporting automation of bacterial colony counting. It achieved an overall accuracy of 96%: 97% on 75 achromatic images and 95% on 25 chromatic images.

Kumar et al. [36] developed an automatic and rapid neural network based detection model for pathogens in foods. The proposed model performs identification in two stages. In the first stage, background correction is applied to separate the treated image from the image background. In the second stage, images of the local region are collected. Thereafter, textural, optical and geometrical features of the processed pathogen images are extracted. Finally, a Probabilistic Neural Network classifies the microorganisms from the collected features.

Osman et al. [37] developed a model based on image processing and genetic neural network (GANN) techniques for automatic detection of Mycobacterium tuberculosis in tissues. The proposed model is divided into two steps: the first step uses K-means clustering for image segmentation, and the second step uses the GANN method for feature extraction, feature selection and classification. After a genetic algorithm selects features, a multilayer perceptron is trained for the final classification of bacteria (true TB and possible TB). The dataset includes 960 object images, of which 360 are true TB and 600 are possible TB. The proposed model was trained on 400 images and the remaining 280 images were used for testing. Experimental results demonstrated that the proposed approach was able to produce 84.9% accuracy with fewer input features.

Hiremath et al. [38] presented a machine learning technique for the identification and classification of cocci bacterial cells. The proposed model was developed using 3α and KNN classifiers and a selected neural network to recognize the patterns of cocci bacterial cells. The data were processed by a neural network pipeline that includes input layers, output layers, gradient descent and backpropagation. In this experiment, 500 digital bacterial images of different types (sarcinae, streptococci, diplococci, cocci and tetrad) were used. The proposed model achieved up to 94% accuracy with the 3α classifier, 75% to 100% accuracy with the KNN classifier (k = 1), and up to 100% accuracy with the neural network classifier.

Ghazali et al. [39] implemented an ML-based approach for human fecal parasite detection based on computerized image analysis. The presented model contains three stages, as shown in Fig. 10. In the first stage, pre-processing techniques were applied to enhance features. In the second stage, a feature extraction mechanism was used with three characteristics (shape, shell smoothness and size). In the third stage, filtration with the Steady Determinations Thresholds System method was used to identify and classify the types of parasites based on feature values. The final results showed success rates of almost 93% and 94% for Ascaris lumbricoides and Trichuris trichiura, respectively.

The authors of [40] developed a machine learning model for automatic segmentation and classification of human intestinal parasites. The proposed model works in two stages. In the first stage, segmentation was performed using image transformation, quantization, border enhancement and ellipse matching, as seen in Fig. 11. In the second stage, classification was performed with different ML algorithms such as ANN-MLP with AdaBoost, the optimum path forest classifier, SVM, and SVM with AdaBoost. After investigating the performance of the different ML algorithms, the optimum path forest classifier gave good classification results. The experimental results show that the proposed ANN-based model classified 155 images from the test dataset with 98.22% accuracy.

Nugroho et al. [41] developed a model based on image processing techniques to recognize three stages of the malaria parasite Plasmodium falciparum in microscopic images, i.e. schizont, trophozoite and gametocyte. The proposed model was developed in two phases. In the first phase, image pre-processing was implemented with a median filter and contrast stretching. In the second phase, the k-means method was applied for image segmentation. Finally, a multilayer perceptron trained with backpropagation was employed for classification. The data collection contained 60 images of trophozoites, gametocytes and schizonts. The proposed model achieved an accuracy of 87% with a specificity of 90% for detection.
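K-means segmentation of the kind mentioned here groups pixels by intensity so that, for example, darker stained parasite regions separate from the brighter background. The following is a minimal one-dimensional sketch on grey-level values, not the pipeline of [41]; the function name, the evenly spaced centroid initialization, and the toy pixel values are assumptions made for illustration.

```python
def kmeans_1d(values, k=2, iterations=20):
    """Cluster scalar pixel intensities into k groups with Lloyd's algorithm."""
    lo, hi = min(values), max(values)
    # Deterministic start: spread the initial centroids evenly over the intensity range.
    centroids = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centroids[c]))
            clusters[nearest].append(v)
        # Move each centroid to the mean of its cluster; keep it if the cluster is empty.
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    labels = [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]
    return centroids, labels


# Hypothetical flattened grey levels: three dark (stained) and three bright pixels.
pixels = [10, 12, 11, 200, 205, 198]
centroids, labels = kmeans_1d(pixels, k=2)
print(centroids, labels)
```

Reshaping `labels` back to the image dimensions yields a segmentation mask; real pipelines usually cluster colour vectors rather than single grey levels.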

In 2016, Seo et al. [42] developed a machine learning model for the classification of Staphylococcus species. In this experiment, the authors used five different species of Staphylococcus bacteria, namely aureus, haemolyticus, hyicus, sciuri and simulans. The Mahalanobis distance method was applied to eliminate outliers, after which wavelength selection was performed using the correlation coefficient. The proposed model classified the Staphylococcus species using partial least squares discriminant analysis and a support vector machine. The proposed model achieved 89.8% accuracy using the support vector machine and 97.8% accuracy using partial least squares discriminant analysis.
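Outlier elimination with the Mahalanobis distance measures how far each sample lies from the data mean in units that account for feature variance and correlation, and then discards samples beyond a threshold. The sketch below is a two-feature toy version written for illustration; it is not the procedure of [42], which works on spectral data with many wavelengths. The function names, the threshold value and the sample points are assumptions.

```python
import math


def mahalanobis_2d(points):
    """Mahalanobis distance of each 2-D point from the sample mean."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    # Sample covariance matrix entries (divisor n - 1).
    sxx = sum((p[0] - mean_x) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - mean_y) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mean_x) * (p[1] - mean_y) for p in points) / (n - 1)
    det = sxx * syy - sxy ** 2
    distances = []
    for x, y in points:
        dx, dy = x - mean_x, y - mean_y
        # d^2 = [dx dy] S^-1 [dx dy]^T using the closed-form 2x2 inverse.
        d2 = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
        distances.append(math.sqrt(d2))
    return distances


def remove_outliers(points, threshold=1.8):
    """Keep only the points whose Mahalanobis distance is within the threshold."""
    distances = mahalanobis_2d(points)
    return [p for p, d in zip(points, distances) if d <= threshold]


# Hypothetical measurements: a tight cluster plus one distant sample.
samples = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, 0.5), (10.0, 10.0)]
print(remove_outliers(samples))
```

Because the outlier itself inflates the estimated covariance, distances in very small samples are bounded and thresholds have to be chosen with that in mind; robust covariance estimates are a common alternative.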

The authors of [43] proposed an approach that uses multi-scale wavelet contour detection to detect parasites. Active contours and the Hough transform were used jointly to perform detection and segmentation of parasite images. The proposed model involved Principal Component Analysis and a Probabilistic Neural Network. Principal Component Analysis was used to extract and reduce the features acquired from the pixels of parasite images, and a probabilistic neural network was used for the classification task. The model was tested on 15 intestinal parasite species with 900 microscopy images. Using this approach, the correct classification rate obtained was 100%.

Nkamgang et al. [44] proposed a neuro-fuzzy approach for the automatic detection and classification of human intestinal parasites. The model is based on segmentation and the training of a classifier. In this approach, parasites were localized using the circular Hough transform, after which a distance regularized level set was initialized for segmentation. Finally, classification was performed by applying a trained neuro-fuzzy classifier. The proposed model was applied to the identification and classification of 20 types of human intestinal parasites. A satisfactory classification result was obtained for each of the 20 classes, and a 100% recognition rate was achieved.

Vakilian et al. [45] developed a model based on image processing techniques and an artificial neural network (ANN) for recognizing two types of fungi that are responsible for spreading infection in cucumber plant leaves. The dataset contained a total of 300 images of healthy and infected plants. Among these, 250 images were used for training the ANN model and the remaining images were used for inspection. In this experiment, the training dataset indicated a good fit. The relationship between the outputs and the inputs for the validation and test datasets was 0.9.

Liu et al. [46] used a KNN classifier to classify bacterial morphotypes based on morphological features. A total of 1937 digital images were collected for the proposed system. Among these, 1271 cell images were used to train the classifier, which exhibited 96% accuracy, and 466 test cell images yielded 97% accuracy.

Inayah et al. [47] worked on a Randomly Wired Neural Network to recognize parasites in red blood cells. The model used a secondary dataset collected from the National Library of Medicine (NLM), comprising a total of 27,558 images of red blood cells. The proposed model relied on feedforward and backpropagation techniques. Using this model, an average accuracy of 95.08% was achieved in fivefold cross-validation.
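To make the fivefold protocol concrete, the sketch below partitions 27,558 sample indices into five folds and averages per-fold accuracies. It is a generic illustration under assumptions: the function names are hypothetical, the per-fold accuracies of [47] are not given in this review, and in practice the indices would normally be shuffled (and often stratified by class) before splitting.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_idx, test_idx) pairs; fold sizes differ by at most one."""
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        stop = start + size
        test_idx = list(range(start, stop))
        # Everything outside the current test fold is used for training.
        train_idx = list(range(0, start)) + list(range(stop, n_samples))
        yield train_idx, test_idx
        start = stop


def mean_accuracy(fold_accuracies):
    """Average accuracy over folds, as reported in k-fold cross-validation."""
    return sum(fold_accuracies) / len(fold_accuracies)


folds = list(k_fold_indices(27558, k=5))
fold_sizes = [len(test) for _, test in folds]
print(fold_sizes)
```

Each image appears in exactly one test fold, so the averaged accuracy reflects performance on every image while it was held out from training.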

4.2 Summary

In the past two decades, traditional ML methods for parasite detection and classification have been updated from time to time. Table 4 displays the related research works, including references, publication date, methods, objectives, species categories, data details, evaluation metrics, limitations, and future scope.

5 DL Based Methods and Models

In recent years, high-stakes applications have been implemented using deep learning methods and models for microscopic image diagnosis. An extensive literature review was carried out on convolutional neural network techniques based on deep learning for detection and interpretation using clinical microscopic images of intestinal protozoa. This section summarizes the reviewed methods and models on related subjects.

5.1 DL Related Works in Proposed Field

Hung et al. [48] addressed the task of detecting individual cells and their respective classes using the Faster Region-based Convolutional Neural Network (Faster R-CNN). The proposed model contains two sub-modules, as seen in Fig. 12. The first sub-module applies Faster R-CNN to detect individual cells in the image by generating bounding boxes around cells and labelling them as red blood cells or non-red blood cells; in the second sub-module, an AlexNet model is used for further classification of the cells. The data collection contains 1300 images, which after pre-processing contain 100,000 labelled cells. Experimental results showed an accuracy of 98% for the proposed model.

Details of how the proposed two-stage deep learning model for recognition and classification is applied to images during the test phase. (a) An original image is fed into the Faster R-CNN model to recognize objects and label them as RBC or other. (b) The objects labelled as other are sent to the AlexNet model for more fine-grained classification

M. Górriz et al. [49] used U-Net, a deep convolutional neural network, to segment and classify the Leishmaniosis parasite, which causes thousands of deaths annually in some undeveloped countries, as shown in Fig. 13. The dataset comprises 45 images of size 1500 × 1300 pixels, captured with a light microscope at ×50 magnification to facilitate image analysis. The F1-scores based on pixel-wise classification of the classes Background, Cytoplasm, Nucleus, Promastigote, Adhered, and Amastigote are 0.980, 0.896, 0.950, 0.491, 0.457, and 0.777, respectively. The Jaccard index (j) is used for the automatic detection of the classes Promastigote, Adhered, and Amastigote; their evaluation when j > 0.75 is 0.50, 0.12, and 0.55, respectively.
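The two segmentation metrics named here can be computed per class from a predicted and a ground-truth label map. The sketch below is a generic illustration, not the evaluation code of [49]; the function name and the eight-pixel toy label maps are assumptions.

```python
def f1_and_jaccard(pred, truth, cls):
    """Pixel-wise F1-score and Jaccard index for one class.

    pred and truth are equally long sequences of per-pixel class labels.
    """
    tp = sum(1 for p, t in zip(pred, truth) if p == cls and t == cls)
    fp = sum(1 for p, t in zip(pred, truth) if p == cls and t != cls)
    fn = sum(1 for p, t in zip(pred, truth) if p != cls and t == cls)
    if tp + fp + fn == 0:  # class absent from both maps
        return 1.0, 1.0
    f1 = 2 * tp / (2 * tp + fp + fn)
    jaccard = tp / (tp + fp + fn)
    return f1, jaccard


# Hypothetical flattened label maps.
truth = ["bg", "bg", "nucleus", "nucleus", "nucleus", "amastigote", "amastigote", "bg"]
pred = ["bg", "nucleus", "nucleus", "nucleus", "bg", "amastigote", "bg", "bg"]

f1_nuc, j_nuc = f1_and_jaccard(pred, truth, "nucleus")
print(round(f1_nuc, 3), round(j_nuc, 3))
```

The two quantities are related by J = F1 / (2 - F1), so the Jaccard index is never larger than the F1-score for the same prediction, which is one reason a threshold such as j > 0.75 is a demanding detection criterion.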

Viet et al. [50] developed an automatic parasite worm egg detection and classification model by applying the deep learning Faster R-CNN method to microscopy stool images. Faster R-CNN uses a Region Proposal Network (RPN), a fully convolutional network, to generate region proposals by simultaneously predicting object bounds and objectness scores at each position. The RoI pooling layer takes the regions of interest along with the convolutional features as input to generate the bounding boxes around objects. In the experiments, the Faster R-CNN model achieved an accuracy of 97.67%.
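Bounding-box detectors of this kind are commonly assessed by comparing each predicted box with a ground-truth box through their intersection over union (IoU). The sketch below is a generic illustration rather than code from [50]; the box format (x1, y1, x2, y2), the function name, and the example coordinates are assumptions.

```python
def box_iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    # Corners of the overlapping rectangle, if any.
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


predicted = (0, 0, 10, 10)      # hypothetical proposal
ground_truth = (5, 5, 15, 15)   # hypothetical annotated egg
print(box_iou(predicted, ground_truth))
```

A proposal is typically counted as a correct detection when its IoU with an annotated box exceeds a chosen threshold; the threshold used in [50] is not stated in this review.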

Yang et al. [51] worked on a model that recognizes parasites in microscopic images of blood smears. The authors divided the model into two parts: first, the Iterative Global Minimum Screening technique is used to detect parasite candidates; second, a customized Convolutional Neural Network classifies each candidate as either parasite or background. For this model, the authors collected 1819 thick smear digital images from 150 patients. The proposed model gave an accuracy of 93.46% and an AUC of 98.39%.

Khoa Pho et al. [52] proposed a model based on transfer learning and data augmentation techniques to detect and identify images of cysts and oocysts of various species, such as Iodamoeba butschlii, Toxoplasma gondii, Giardia lamblia, Cyclospora cayetanensis, Balantidium coli, Sarcocystis, Cystoisospora belli, and Acanthamoeba, which have round shapes in common and seriously affect human and animal health. The proposed model, RetinaNet, automatically detects and identifies the protozoa. Even though the training data were limited, the proposed model still achieved good accuracy.

Mathison et al. [53] used a deep convolutional neural network for the detection of intestinal protozoa in trichrome-stained stool specimens from scanned digital microscopic images. Traditionally, the ova-and-parasite (O&P) examination is used for manual microscopic evaluation of stool, which is a resource-intensive and time-consuming method. The purpose of this research was to develop a novel CNN model that uses scanned high-resolution digital slide images to recognize intestinal parasites in stool, as shown in Fig. 14. The task was divided into three parts. In Part I, scanned digital microscopic images of intestinal protozoa containing the target classes were collected. In Part II, the collected images were fed into the CNN model for training so that it could detect the defined classes. In Part III, the trained model was validated and used for prediction. The authors collected and prepared 127 slides covering 11 categories of protozoa to train the model. During model development, various training steps were executed, and model performance was evaluated with the resulting metrics. All images were resized to 250 × 250 pixels, and 10% of the labelled images of all classes were used for validation of the CNN after training. The proposed detection architecture is an RGB CNN based on the SSD Inception V2 transfer learning model, with the base model pretrained on the COCO image dataset. The trained model was shown collections of 250 × 250-pixel image scenes in which to recognize parasites, and it drew a labelled box around each detected parasite. Precision-recall plots were used to view the model performance per labelled parasite box. Slide-level agreement was used to calculate the accuracy of the model; the positive agreement achieved was 98% and the negative agreement was 98.11%.

Baek et al. [54] developed a deep learning model with the Fast Region-based Convolutional Neural Network (Fast R-CNN) to quantify and classify five cyanobacteria. The proposed model includes two stages: in the first stage, cyanobacteria species in microscopic images are classified using the Fast R-CNN method, and in the second stage, a CNN is used to quantify the cyanobacteria cells. The dataset covered 200 images of five cyanobacteria (Microcystis wesenbergii, Microcystis aeruginosa, Dolichospermum, Aphanizomenon, and Oscillatoria). Experimental results show that the Fast R-CNN based model achieved reasonable classification accuracy, yielding average precision (AP) values of 0.929, 0.973, 0.829, 0.890, and 0.890 for the respective species.

Kang et al. [55] proposed an extensive deep learning network that utilized a 1D CNN, a Long Short-Term Memory (LSTM) network, and a Deep Residual Network (ResNet). The proposed hybrid deep learning model, named Fusion-Net, performs the classification of foodborne bacteria at the single-cell level. Fusion-Net was built in three parts comprising hyperparameter optimization, selection of multiple deep learning architectures, and Fusion-Net construction. The dataset was prepared by collecting 5000 bacterial cell images from five foodborne bacterial cultures, of which 72% were used for training, 18% for validation, and 10% for testing. Results show that the proposed model yields a classification accuracy of up to 98.4%.

Luo et al. [56] reported a deep learning-based model for predicting Cryptosporidium and Giardia in drinking water. The proposed system merged imaging flow cytometry with MCellNet, an efficient neural network. Figure 15 shows the architecture of MCellNet. The dataset collection included millions of raw images, of which 80,146 images were selected for the final image database, and each image from the database was patched to 120 × 120 pixels. The dataset included 13 classes, among them Cryptosporidium (2082 images) and Giardia (3569 images). The image dataset was randomly split into a training set (38,469 images), a validation set (9618 images), and a test set (32,059 images), containing 48%, 12%, and 40% of the images, respectively. The proposed model achieved accuracies of 99.69% and 99.7% for multiclass and binary classification, respectively.
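The reported split can be checked with a few lines of arithmetic. The snippet below only re-derives the percentages from the image counts quoted above; the variable names are illustrative.

```python
# Image counts as quoted in the text for the MCellNet dataset.
splits = {"training": 38469, "validation": 9618, "test": 32059}

total = sum(splits.values())
# Share of each subset, rounded to the nearest whole percent.
shares = {name: round(100 * count / total) for name, count in splits.items()}

print(total)
print(shares)
```

The three subsets add up to the 80,146 images of the final database, and the rounded shares match the stated 48%, 12%, and 40%.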

Nakasi et al. [57] evaluated the performance of the AlexNet and GoogleNet models based on transfer learning for the diagnosis of intestinal parasites in scanned digital microscopic stool images. The authors compared these two models with a custom-trained Convolutional Neural Network on the same task. The models were evaluated on a low-specification system, which shows that they can be deployed to tackle real-world diagnostic problems. A total of 6500 image patches (10.9% positive) were used with AlexNet, 6461 image patches (11% positive) with GoogleNet, and 2071 image patches (30.5% positive) with the custom CNN. Of the proposed models, AlexNet attained an ROC AUC of 1.00 and GoogleNet attained an ROC AUC of 0.99.

Lee et al. [58] developed the Helminth Egg Analysis Platform (HEAP), which helps medical technicians diagnose parasite infections. The authors integrated several deep learning techniques (Single Shot MultiBox Detector (SSD), U-Net, and Faster Region-based Convolutional Neural Network (Faster R-CNN)) to recognize helminth egg specimens, as shown in Fig. 16. The proposed model also includes pixel-level methods, namely image binning and an egg-in-edge algorithm, to improve performance. HEAP exhibits effective performance in counting and recognizing helminth eggs in digital images.

Functionality of HEAP-assisted parasite egg investigation. In the first stage, all specimen preparation measures are applied. Then, an automatic microscope imaging system is used to digitize the specimen slides; multiple focal planes are required to gather all the images. HEAP carries out egg recognition and egg counting using cloud computing. Finally, a medical expert verifies the model prediction results using a computer client over the internet

Litjens et al. [59] investigated a deep convolutional neural network to improve the efficiency and accuracy of cancer diagnosis in H&E images. The model is used to perform two different tasks: prostate cancer recognition in biopsy samples and breast cancer recognition in sentinel lymph nodes. The pipeline of the model consists of 4 convolution layers for feature extraction, 3 max-pooling layers to reduce dimensionality, and a dense layer for classification. The prostate cancer dataset includes 225 glass slides, of which 100 were selected for training, 75 for testing, and the remainder for validation. The breast cancer sentinel lymph node dataset includes 271 slides, of which 98 were used for training, 33 for validation, and the remainder for testing. Optimal percentiles were obtained using the validation set for both the ROC curves and the highest specificity; these were the median and the 90th percentile for both.

Panicker et al. [60] worked on an end-to-end selective autoencoder approach based on a convolutional neural network to recognize complex soybean cyst nematode eggs in microscopic images. Training patches of soybean cyst nematode eggs were used to train the proposed convolutional neural network, and the trained model was validated with validation images. Figure 17 depicts the architecture of the proposed convolutional autoencoder model. The dataset included 644 nematode microscopic images that were used to investigate soybean cyst nematode eggs; 80% of the images were used to train the model and the rest were used for validation. Experimental results showed an accuracy of 94.33% for the proposed model.

Tahir et al. [61] developed a deep learning-based Convolutional Neural Network for the detection and classification of five different types of fungus spores and dirt. Around 40,800 annotated RGB images of 6 classes were prepared for fungus detection and classification. The model was trained on 30,000 fungus images, with each class containing 5000 images. The test set comprises 10,800 fungus images with 800 images per class. The accuracy achieved by the proposed model is 94.8%.

Oomman et al. [62] developed an automatic approach based on a deep convolutional neural network for the detection of tuberculosis bacilli in microscopic images. The proposed model was developed in two stages: the first stage performs image binarisation with the Otsu threshold algorithm, and the second stage classifies the detected regions using a convolutional neural network. The dataset included 120 images along with ground truth, each with a resolution of 2816 × 2112 pixels. For CNN training and testing, the images were cropped into 900 negative patches and 900 positive patches. Of the total of 1800 patches, 80% were used for training and 20% for testing. Experimental results show that the proposed model achieved a recall of 97.13%, a precision of 78.4%, and an F-score of 86.76%.
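Two ingredients of this study lend themselves to a short sketch: Otsu's threshold, which picks the grey level that maximizes the between-class variance, and the F-score, which is the harmonic mean of precision and recall. The code below is a generic illustration under assumptions, not the implementation of [62]; the function names and the toy pixel values are hypothetical, and pixels are assumed to be integers in the range 0 to 255.

```python
def otsu_threshold(pixels, levels=256):
    """Return the grey level t that maximizes between-class variance.

    Pixels with value <= t form one class, pixels > t the other.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(levels):
        w0 += hist[t]            # number of pixels in the lower class
        sum0 += t * hist[t]      # intensity sum of the lower class
        w1 = total - w0
        if w0 == 0 or w1 == 0:   # one class empty: variance undefined
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_var:
            best_var, best_t = between, t
    return best_t


def f_score(precision, recall):
    """Harmonic mean of precision and recall (F1)."""
    return 2 * precision * recall / (precision + recall)


toy_pixels = [10, 12, 11, 13, 200, 202, 198, 201]  # hypothetical values
t = otsu_threshold(toy_pixels)
mask = [p > t for p in toy_pixels]

f = f_score(precision=0.784, recall=0.9713)
print(t, round(f, 4))
```

With the precision and recall quoted above, the harmonic mean comes out at about 0.868, which is close to the reported F-score of 86.76%.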

Treebupachatsaku et al. [63] proposed a deep learning method for detecting the genus of a bacterium from microscopic bacterial images using the TensorFlow framework. More than 800 sample images of S. aureus and L. delbrueckii were collected. Eighty percent of the images from both datasets were used to train the proposed model and the remaining images were used for testing. The proposed model achieved a validation accuracy of 96%.

Pedraza et al. [64] worked on deep learning-based neural networks for detecting diatoms in water. The authors evaluated diatom detection with two popular techniques based on transfer learning, i.e., R-CNN (Region-based Convolutional Neural Network), which applies convolution operations to candidate regions, and YOLO (You Only Look Once), which applies a neural network over the whole image. These two methods were trained on 11,000 microscopic images of diatoms from 10 species. Diatom detection results of R-CNN and YOLO are depicted in Fig. 18. The experimental results show that the YOLO model, with an F-measure of 84%, performs better than R-CNN.

Sajedi et al. [65] proposed a model to recognize bacterial species from solid culture plates. Two methods based on deep learning were applied to detect actinobacterial strains. In the first method, a two-level wavelet transform was applied to images of actinobacterial strains. In the second method, two operations were performed: data augmentation by blurring, cropping, and horizontal rotation, and classification using the transfer learning technique ResNet. The dataset was prepared from the UTMC.V1.DB and UTMC.V2.DB databases, containing 703 images from 55 different classes and 1303 images from 97 different classes, respectively. The experimental results show that the first method achieved accuracies of 80.81% and 84.81% on the two datasets, and the second method achieved accuracies of 90.24% and 85.96%.

Zhou et al. [66] implemented a model that applies a transfer learning approach based on a convolutional neural network to automatically analyse diatoms in digital whole-slide images. The proposed model applied the GoogLeNet Inception-V3 transfer learning technique for training to recognize diatoms, as shown in Fig. 19. The dataset comprised 53 digital whole-slide images, of which 43 slides were selected for training and 10 slides for validation. Experimental results show that the transfer learning model using the augmented database achieved an accuracy of 97.67% and an AUC of 99.51%.

Qian et al. [67] demonstrated a novel multi-target deep learning framework built on Faster R-CNN for algae detection and classification. The proposed model was trained on a large-scale coloured microscopic algae dataset, as depicted in Fig. 20. The dataset was prepared with 1859 images of 37 algae, together with annotations of genera and classes. In this experiment, 80% of the images of the genera were placed in the training set and the remainder in the testing set. The experimental results show that the proposed model achieved a successful identification rate of 74.64% at the genus level and 81.1% at the class level.

The model architecture has three branches that simultaneously output the genus, the bounding box, and the biological class of the algae; the orange component is the extended classification branch. Branch 1 predicts the genus of the algae. Branch 2 performs algal detection and localization. Branch 3 predicts the class of the algae

Salido et al. [68] focused on diatom detection for the challenges of specimen counting and sample classification, using the YOLO and SegNet deep learning networks. The dataset comprises microscopic images of 80 diatom species, with hundreds of images per species. Using YOLO for on-time inference to speed up diatom counting, detection reached an average sensitivity of 84.6%, a specificity of 96.2%, and a precision of 72.7%.
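The three figures quoted here are all derived from the same confusion counts. The following minimal sketch shows the definitions; the function name and the counts are hypothetical and are not taken from [68].

```python
def detection_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, and precision from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),  # share of true objects that were found
        "specificity": tn / (tn + fp),  # share of negatives correctly rejected
        "precision": tp / (tp + fp),    # share of detections that are correct
    }


# Hypothetical counts with many more negatives than positives.
metrics = detection_metrics(tp=80, fp=30, tn=870, fn=20)
print({name: round(value, 3) for name, value in metrics.items()})
```

When negatives greatly outnumber positives, as in this toy example, a high specificity can coexist with a noticeably lower precision, which is the pattern seen in the values reported above.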

Holmström et al. [69] focused on deep learning methods for accurate and fast detection of helminths and Schistosoma haematobium. The authors used 8,342,769 echocardiograph images of 276 patients. 80 images were used to train the model and the remaining images were used for testing and validation. The trained model showed 97% accuracy without any over-fitting and achieved the same test accuracy on low-resolution images among 15 views. The evaluation, presented using confusion matrices, also revealed similarities and classification based on relevant image features.

Peixinho et al. [70] proposed a deep learning-based ConvNet approach that effectively recognizes image features in human intestinal parasite images. Random kernels are defined for hyperparameter optimization of the CNN architecture. For the experiments, the dataset contained 16,437 objects, including the 15 most common species of human intestinal parasites. Using the proposed approach, effective accuracy was obtained for the classification of human intestinal protozoa and eggs.

López et al. [71] implemented a CNN-based model to detect Mycobacterium tuberculosis (MT). The dataset consists of 9770 positive and negative smear patches prepared from 492 digital images. Three types of patches (grey-scale, RGB, R-G) were used to train the proposed model. The model includes three layers to classify patches into two classes, i.e., positive or negative for MT. The proposed model reached an accuracy of 96%.

Zieliński et al. [72] developed a hybrid deep learning model for the classification of bacterial species from digital images. In the proposed model, a deep convolutional neural network is used to obtain image descriptors, feature vectors are then generated with the pooling encoder method, and finally a Support Vector Machine is used for classification. The dataset includes 33 bacteria species with 20 digital images of each. The proposed model used 50% of the dataset for training and 50% for testing. The results show that the proposed DL-based model is 96.82% accurate in the classification of bacterial species from digital images.

Wahid et al. [73] implemented a model that uses a transfer learning technique to automate the classification and recognition task with a deep convolutional neural network (DCNN). The proposed DCNN model was trained using 500 digital microscopic images of five bacterial species. The dataset was split into training (80% of the images) and testing (20% of the images) parts. The detection and classification rate of the proposed model is 85%.

Ahmed et al. [74] implemented a hybrid approach to classify microscopic bacterial images using the SVM and Inception-V3 models. The proposed model used image processing techniques such as image cropping, conversion of images from grayscale to RGB, image flipping, and image translation, together with feature extraction by the Inception-V3 deep CNN. The SVM was used to classify the microscopic bacterial images into the defined classes. The authors used 800 bacterial images to train the proposed model and 200 images for testing. The proposed model achieved 75% accuracy in the classification of bacterial species from digital images.

The authors of [75] performed an experiment on datasets of in situ plankton images using deep learning techniques. The proposed model extracts features from various planktonic image datasets, i.e., Imaging Flow Cytobot (IFCB), Scripps Plankton Camera System (SPC), and In Situ Ichthyoplankton Imaging System (ISIIS). The authors trained the CNN model using the IFCB and ISIIS plankton image datasets. The SPC dataset was small and was therefore used for testing. To train the proposed CNN model, the plankton images were resized to 256 × 256. Experimental results indicate that the proposed model works well for feature extraction from planktonic images using a CNN.

The authors of [76] determined the classes of microalgae using the convolutional neural network technique of deep learning. A FlowCam particle analyser was used to capture microalgae images from water sampled from the South Atlantic Ocean. The dataset contained 29,449 microalgae images classified into 19 classes. Data augmentation was used to increase the size of the dataset. From this dataset, 70% of the images were used to train the model and 30% for validation. The proposed model obtained an accuracy of 88.59%.

The authors of [77] presented a deep learning-based approach to classify bacterial colonies. The authors used a deep convolutional neural network (CNN) to obtain image descriptors and a support vector machine (SVM) and random forest for classification, as shown in Fig. 21. Shape-based features, namely spiral, cylindrical, and spherical, were extracted. The dataset consisted of 660 images of 33 different genera and species of bacteria. The experimental results showed a recognition accuracy of 97.24%.

Hay et al. [30] presented a convolutional neural network-based tool for differentiating bacteria images from non-bacterial images using three-dimensional microscopy data of gut bacteria found in larval Zebrafish. The authors used TensorFlow framework to implement 3D convolutional neural network and compared the performance of the model with support vector machine and random forest classifiers. The proposed model performed better with 89.3% accuracy whereas random forest classifier and support vector machine classifiers achieved accuracy of around 78.5% and 83.1% respectively.

The authors of [78] developed a CNN-based model using transfer learning approaches for the automatic classification of parasites in low-quality microscopic scanned images, as shown in Fig. 22. A patch-based technique was used to locate eggs in the images. The dataset contained ×10 magnification microscopic images of four different types of parasites, i.e., Ascaris lumbricoides (67 images), Hymenolepis diminuta (27 images), Fasciolopsis buski (32 images), and Taenia spp. (36 images). Before data augmentation and patch overlapping are applied, grayscale conversion and contrast enhancement are performed on the parasite egg images. The grayscale conversion decreases the depth of the input image from the three RGB channels to a single grayscale channel, and contrast enhancement improves the visibility of the low-magnification images. Each parasite image was split into small patches, which allowed the model to extract features by examining local areas. In order to encapsulate the mentioned parasites, the patch size was set to 100 × 100 pixels. Data augmentation was applied to increase the size of the dataset to approximately 10,000 patches per egg type. To implement the proposed framework, transfer learning was employed by fine-tuning pretrained models. These models had been trained on large datasets of images collected from different specific applications. The last two layers of these models were replaced with a fully connected layer and a softmax layer to classify images into five classes. For object detection, AlexNet is a cutting-edge model that improved CNN execution performance, whereas ResNet50 is a more sophisticated architecture that performs better for image classification tasks. The dataset of parasite egg images was split into two parts: 60% of the images for training and 40% for testing. Based on the proposed framework, the experimental results represented state-of-the-art parasitic egg detection and classification.
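The pre-processing described for [78], grayscale conversion followed by splitting each image into overlapping 100 × 100 patches, can be sketched as follows. This is an illustration under assumptions rather than the authors' code: the function names are hypothetical, the luma weights are the common ITU-R BT.601 coefficients, and the stride of 50 pixels is an assumed value because the amount of overlap is not stated in this review.

```python
def to_grayscale(rgb_image):
    """Convert rows of (r, g, b) tuples to rows of single intensities.

    Uses ITU-R BT.601 luma weights (an assumption; other weightings exist).
    """
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]


def patch_origins(height, width, patch=100, stride=50):
    """Top-left corners of patch x patch windows lying fully inside the image.

    A stride smaller than the patch size makes neighbouring patches overlap.
    """
    ys = range(0, height - patch + 1, stride)
    xs = range(0, width - patch + 1, stride)
    return [(y, x) for y in ys for x in xs]


def extract_patch(image, y, x, patch=100):
    """Cut one patch out of a 2D image stored as a list of rows."""
    return [row[x:x + patch] for row in image[y:y + patch]]


# Hypothetical 300 x 400 white RGB image.
rgb = [[(255, 255, 255)] * 400 for _ in range(300)]
gray = to_grayscale(rgb)
origins = patch_origins(300, 400, patch=100, stride=50)
first = extract_patch(gray, *origins[0])
print(len(origins), len(first), len(first[0]))
```

Overlapping windows make it more likely that at least one patch contains a whole egg, at the cost of producing more patches per image.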

Quinn et al. [27] proposed a framework based on a deep CNN and evaluated its performance on different microscopy tasks, i.e., diagnosis of malaria in thick blood smears, tuberculosis in sputum samples, and intestinal parasite eggs in stool samples. Experts marked bounding boxes around each object of interest in all images. In the prepared datasets, plasmodium was annotated in thick blood smear images (7245 objects in 1182 images), tuberculosis bacilli were annotated in sputum samples (3734 objects in 928 images), and the eggs of hookworm, Taenia, and Hymenolepis nana were annotated in stool samples (162 objects in 1217 images). The proposed model was trained on the collected images. After training, the resulting model was applied to the test sets: the plasmodium detection set contains 261,345 test patches, the tuberculosis set contains 315,142 test patches, and the hookworm set contains 253,503 patches. In all cases, the experimental results show that accuracy was higher than that of traditional medical imaging techniques.

Butpoly et al. [75] proposed a DL-based method for the classification of Ascaris lumbricoides parasite images. The proposed model recognizes three types of Ascaris lumbricoides eggs with an effective deep learning approach. The dataset comprised training and testing data, both of which included the three egg types, namely infertile, fertile, and decorticated eggs. For this experiment, the training dataset consisted of 200 images of each type (600 images in total). Experimental results showed a classification accuracy of 93.33% for the parasite eggs.

Avic et al. [76] implemented a methodology based on a multi-class support vector machine for the classification of human parasite eggs in digital microscopic images. The proposed model consists of four steps: pre-processing, feature extraction, classification, and testing. In the pre-processing step, image processing methods such as contrast enhancement, thresholding, and noise reduction are applied. In the second step, feature extraction, the invariant moments of the pre-processed parasite images are computed. In the classification step, the multi-class support vector machine is applied to classify the features collected in the feature extraction step. Finally, the proposed model was tested with test data. The proposed approach achieved an average accuracy of 97.70% for the classification of human parasites.

5.2 Summary

In the past two decades, deep learning methods for parasite detection and classification have been updated from time to time. Table 5 displays the related research works, including references, publication date, methods, objectives, species categories, data details, evaluation metrics, limitations, and future scope.

6 Discussion

We compiled traditional machine learning and deep learning-based methods for microscopic image classification and detection of various parasites in this paper. All the research studies discussed here show that both ML and DL methods and models are effectively used by researchers. As stated in the review, traditional machine learning methods and models include k-NN, SVM, ANN, GA-NN, and the neuro-fuzzy classifier. An overview of the processing flow of traditional machine learning detection and classification algorithms is shown in Fig. 23. In this review, we found that the most promising machine learning model for microscopic parasite image classification and detection is the Support Vector Machine. SVM is a supervised algorithm used for both linearly and non-linearly separable data. It performs classification by constructing a set of hyperplanes in feature space [79]. In SVM, kernel functions implicitly map the data into a space in which a separating hyperplane can be found. The most commonly used kernel functions are linear, polynomial, sigmoid, and radial basis functions. Better classification is achieved by the hyperplane that has the maximum distance to the nearest training data points of the classes [80]. However, this model is not effective for multiple species classification and detection.
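The four kernel families named above have simple closed forms. The sketch below writes them out for plain Python lists; the function names and the default values of gamma, coef0, and degree are illustrative assumptions, not settings taken from any of the reviewed papers.

```python
import math


def dot(x, y):
    """Inner product of two equally long vectors."""
    return sum(a * b for a, b in zip(x, y))


def linear_kernel(x, y):
    return dot(x, y)


def polynomial_kernel(x, y, degree=3, gamma=1.0, coef0=1.0):
    return (gamma * dot(x, y) + coef0) ** degree


def rbf_kernel(x, y, gamma=0.5):
    """Radial basis function: depends only on the squared distance."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def sigmoid_kernel(x, y, gamma=0.5, coef0=0.0):
    return math.tanh(gamma * dot(x, y) + coef0)


x, y = [1.0, 2.0], [3.0, 4.0]
print(linear_kernel(x, y), polynomial_kernel(x, y),
      rbf_kernel(x, y), sigmoid_kernel(x, y))
```

Each function returns a similarity score between two feature vectors; an SVM uses these scores in place of explicit coordinates in the transformed space, which is why a non-linear boundary can be learned without computing the mapping itself.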

The deep learning methods and models surveyed in this paper play a major role in parasitological research and also help to tackle similar problems in several other subdomains. Deep learning models handle more complex tasks than traditional machine learning, such as object detection, image segmentation, image recognition, and classification. Figure 24 shows the CNN-based transfer learning models used in parasite classification and detection. Popular classical deep learning models, such as CNN and its derivatives, are used consistently for parasite classification and detection: CNN in [60, 61, 70], AlexNet and GoogleNet in [57], AlexNet and ResNet50 in [78], Faster R-CNN in [50, 51], and R-CNN in [54]. According to our survey of deep learning methods and models for parasites, the most popular detection and classification model is Faster R-CNN. Compared with R-CNN, Faster R-CNN performs better because its Region Proposal Network (RPN) generates candidate object regions within the network itself, which improves the performance and accuracy of detection and enables end-to-end detection [81]. The main limitations of Faster R-CNN are that it cannot detect objects in real time and that it requires a large amount of computation because of the RPN-based region extraction.
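To make the role of the RPN more concrete, the following sketch shows two of its building blocks: generating anchor boxes of several scales and aspect ratios at one location, and labelling each anchor by its intersection-over-union (IoU) with ground-truth boxes. The IoU thresholds of 0.7 and 0.3 follow the original Faster R-CNN formulation. The anchor scales, the example boxes, and all function names are hypothetical. The sketch omits the learned network and the additional rule that the best-matching anchor for each ground-truth box is also marked positive.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def make_anchors(cx, cy, scales=(16, 32, 64), ratios=(0.5, 1.0, 2.0)):
    """Anchors centred at (cx, cy); each has area scale**2 and h/w = ratio."""
    anchors = []
    for s in scales:
        for r in ratios:
            w = s / r ** 0.5
            h = s * r ** 0.5
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


def label_anchor(anchor, gt_boxes, pos_thr=0.7, neg_thr=0.3):
    """Return 1 (object), 0 (background), or -1 (ignored during training)."""
    best = max((iou(anchor, g) for g in gt_boxes), default=0.0)
    if best >= pos_thr:
        return 1
    if best < neg_thr:
        return 0
    return -1


if __name__ == "__main__":
    # Hypothetical ground-truth box around one parasite egg.
    gt = [(84.0, 84.0, 116.0, 116.0)]
    for anchor in make_anchors(100, 100):
        print([round(v, 1) for v in anchor], label_anchor(anchor, gt))
```

Only the square anchor of scale 32 coincides with the hypothetical ground-truth box and is labelled positive. The remaining anchors fall into the background or ignored categories. Evaluating many anchors at every feature-map location contributes to the computational cost noted above.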

7 Conclusion and Research Directions

In this systematic review, we presented a thorough investigation of methods and models for the detection and classification of parasites in microscopic images, based on both traditional machine learning and modern deep learning technologies. In Sect. 1, we discussed the fundamentals needed to understand parasites, the diseases they cause, and diagnostic approaches, along with our motivation and research position. In Sect. 2, we outlined the basic concepts of deep learning, including CNN models for visual recognition. In Sect. 3, we outlined the research progress of traditional and modern methods and models of parasite detection and classification in chronological order. In Sects. 4 and 5, we organized the various methods and models of traditional machine learning and deep learning, respectively. Finally, in Sect. 6, the discussion, we analysed the two categories of methods and models and presented the performance of each method. Across the reviewed studies, the reported experimental results indicate that deep learning is a better and less labour-intensive approach than traditional machine learning, which relies on hand-engineered features whose design is challenging and time-consuming. Several deep learning methods and models have been used extensively for the detection and classification of parasite microscopic images. Some of the state-of-the-art techniques in the deep learning framework are CNN, F-RCNN, and VGG-Net. The authors of the surveyed papers examined only a few species of parasites because of the limited size of the available data collections, and the performance of methods and models falls short of expectations because of this scarcity of datasets. Nevertheless, deep learning methods and models have developed rapidly and produce better results than traditional machine learning methods on various benchmarks.
In spite of this, deep learning techniques are still immature in this area of visual recognition. Based on the analysis of deep learning techniques in Sect. 5, we identified some major gaps, which are outlined as future directions for the further improvement of parasite detection and classification based on microscopic images. A summary of all CNN-based methods and models, along with their best scores and datasets, is given in Table 5. The AlexNet and Fast R-CNN models yield the best results for the detection and classification of parasites in digital images. They are followed by the R-CNN, GoogleNet, LeNet, and U-Net models. Moreover, fine-tuning improves performance regardless of the network model used, and it provides a significant improvement on multiclass datasets.

The future development trends and challenges in deep learning are predicted on the basis of the development of parasite classification and detection methods and models. For detecting and classifying parasitic species with deep learning, the most promising development could be to combine existing transfer learning techniques. Owing to the successful application of transfer learning-based models in computer vision, many researchers are now applying transfer learning to detect and classify objects in microscopic images. Combining transfer learning models with other models can improve parasite detection and classification performance. The most challenging task in this domain is real-time detection and classification, although recent developments in related methods and models are improving detection and classification performance significantly. Two factors that work against experimental results and remain major challenges for parasite detection and classification with deep learning are the difficulty of collecting good-quality parasite image data and high processing costs, both of which hinder the creation of good-quality datasets.

References

Torgerson PR et al (2015) World Health Organization estimates of the global and regional disease burden of 11 foodborne parasitic diseases, 2010: a data synthesis. PLoS Med. https://doi.org/10.1371/JOURNAL.PMED.1001920

Hotez PJ, Brown AS (2009) Neglected tropical disease vaccines. Biologicals 37(3):160–164. https://doi.org/10.1016/j.biologicals.2009.02.008

CDC - DPDx - Parasites A-Z Index. https://www.cdc.gov/dpdx/az.html. Accessed 20 Nov 2021

Schistosomiasis and soil-transmitted helminthiases: numbers of people treated in 2017. https://www.who.int/publications/i/item/who-wer9350. Accessed 20 Nov 2021

Pullan RL, Smith JL, Jasrasaria R, Brooker SJ (2014) Global numbers of infection and disease burden of soil transmitted helminth infections in 2010. Parasites Vectors. https://doi.org/10.1186/1756-3305-7-37

Marcilla A et al (2012) Extracellular vesicles from parasitic helminths contain specific excretory/secretory proteins and are internalized in intestinal host cells. PLoS ONE. https://doi.org/10.1371/JOURNAL.PONE.0045974

Dematei A, Fernandes R, Soares R, Alves H, Richter J, Botelho MC (2017) Angiogenesis in Schistosoma haematobium-associated urinary bladder cancer. APMIS 125(12):1056–1062. https://doi.org/10.1111/APM.12756

Hart BL, Hart LA (2018) How mammals stay healthy in nature: the evolution of behaviours to avoid parasites and pathogens. Philos Trans R Soc B Biol Sci. https://doi.org/10.1098/RSTB.2017.0205

Bennett A et al (2014) Building a laboratory workforce to meet the future: ASCP task force on the laboratory professionals workforce. Am J Clin Pathol 141(2):154–167. https://doi.org/10.1309/AJCPIV2OG8TEGHHZ

Petti CA, Polage CR, Quinn TC, Ronald AR, Sande MA (2006) Laboratory medicine in Africa: a barrier to effective health care. Clin Infect Dis 42(3):377–382. https://doi.org/10.1086/499363

Zhang Z, Cui P, Zhu W (2020) Deep learning on graphs: a survey. IEEE Trans Knowl Data Eng 14(8):1–1. https://doi.org/10.1109/tkde.2020.2981333

Lecun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444. https://doi.org/10.1038/nature14539

Shrestha A (2019) Review of deep learning algorithms and architectures. IEEE Access 7:53040–53065. https://doi.org/10.1109/ACCESS.2019.2912200

Alzubaidi L et al (2021) Review of deep learning: concepts, CNN architectures, challenges, applications, future directions. Springer, Cham

Jamshidi M et al (2020) Artificial Intelligence and COVID-19: deep learning approaches for diagnosis and treatment. IEEE Access 8:109581–109595. https://doi.org/10.1109/ACCESS.2020.3001973

Prashar N, Sangal AL (2022) Plant disease detection using deep learning (convolutional neural networks). Lect Notes Netw Syst 300 LNNS:635–649. https://doi.org/10.1007/978-3-030-84760-9_54

Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. Commun ACM 60(6):84–90

Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition. The 3rd international conference on learning representations (ICLR2015). https://arxiv.org/abs/1409.1556

Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. Proc IEEE Comput Soc Conf Comput Vis Pattern Recognit 2016:2818–2826. https://doi.org/10.1109/CVPR.2016.308

Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 2818–2826

Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR). pp 2818–2826

He K, Zhang X, Ren S, Sun J (2015) Deep residual learning for image recognition. arXiv. https://doi.org/10.48550/arXiv.1512.03385

Chollet F (2016) Xception: deep learning with depthwise separable convolutions. arXiv. https://doi.org/10.48550/arXiv.1610.02357

Huang B, Thorne PW et al (2017) Extended Reconstructed Sea Surface Temperature version 5 (ERSSTv5): upgrades, validations, and intercomparisons. J Climate. https://doi.org/10.1175/JCLI-D-16-0836.1

Sandler M, Howard A, Zhu M, Zhmoginov A, Chen L (2018) MobileNetV2: inverted residuals and linear bottlenecks. arXiv. https://doi.org/10.48550/arXiv.1801.04381

Sun K, Zhao Y, Jiang B, Cheng T, Xiao B, Liu D, Mu Y, Wang X, Liu W, Wang J (2019) High-Resolution representations for labeling pixels and regions. arXiv. https://doi.org/10.48550/arXiv.1904.04514

Quinn JA, Nakasi R, Mugagga PK, Byanyima P, Lubega W, Andama A (2016) Deep convolutional neural networks for microscopy-based point of care diagnostics, pp 1–12, [Online]. Available from http://arxiv.org/abs/1608.02989

Li S, Du Z, Meng X, Zhang Y (2021) Multi-stage malaria parasite recognition by deep learning. Gigascience 10(6):1–11. https://doi.org/10.1093/gigascience/giab040

Li S, Li A, Molina Lara DA, Gómez Marín JE, Juhas M, Zhang Y (2020) Transfer learning for Toxoplasma gondii recognition. mSystems 5(1):1–12. https://doi.org/10.1128/msystems.00445-19

Parasitic Egg Detection and Classification in Microscopic Images | IEEE DataPort. https://ieee-dataport.org/competitions/parasitic-egg-detection-and-classification-microscopic-images. Accessed 19 May 2022

Yang YS, Park DK, Kim HC, Choi MH, Chai JY (2001) Automatic identification of human helminth eggs on microscopic fecal specimens using digital image processing and an artificial neural network. IEEE Trans Biomed Eng 48(6):718–730. https://doi.org/10.1109/10.923789

Saha B, Tchiotsop D, Tchinda R, Wolf D, Noubom M (2015) Automatic recognition of human parasite cysts on microscopic stools images using principal component analysis and probabilistic neural network. Int J Adv Res Artif Intell 4(9):26–33. https://doi.org/10.14569/ijarai.2015.040906

Widmer KW, Oshima KH, Pillai SD (2002) Identification of Cryptosporidium parvum oocysts by an artificial neural network approach. Appl Environ Microbiol 68(3):1115–1121. https://doi.org/10.1128/AEM.68.3.1115-1121.2002

Widmer KW, Srikumar D, Pillai SD (2005) Use of artificial neural networks to accurately identify Cryptosporidium oocyst and Giardia cyst images. Appl Environ Microbiol 71(1):80–84. https://doi.org/10.1128/AEM.71.1.80-84.2005

Chen WB, Zhang C (2009) An automated bacterial colony counting and classification system. Inf Syst Front 11(4):349–368. https://doi.org/10.1007/S10796-009-9149-0

Kumar S, Mittal GS (2010) Rapid detection of microorganisms using image processing parameters and neural network. Food Bioprocess Technol 3(5):741–751. https://doi.org/10.1007/s11947-008-0122-6

Osman MK, Ahmad F, Saad Z, Mashor MY, Jaafar H (2010) A genetic algorithm-neural network approach for mycobacterium tuberculosis detection in Ziehl-Neelsen stained tissue slide images. In: 2010 10th international conference on intelligent systems design and applications, pp 1229–1234. https://doi.org/10.1109/ISDA.2010.5687018

Hiremath PS, Bannigidad P (2011) Identification and classification of cocci bacterial cells in digital microscopic images. Int J Comput Biol Drug Des 4(3):262–273. https://doi.org/10.1504/IJCBDD.2011.041414

Ghazali KH, Hadi RS, Mohamed Z (2013) Automated system for diagnosis intestinal parasites by computerized image analysis. Mod Appl Sci 7(5):98–114. https://doi.org/10.5539/mas.v7n5p98

Suzuki CTN, Gomes JF, Falcão AX, Papa JP, Hoshino-Shimizu S (2013) Automatic segmentation and classification of human intestinal parasites from microscopy images. IEEE Trans Biomed Eng 60(3):803–812. https://doi.org/10.1109/TBME.2012.2187204

Nugroho HA, Akbar SA, Murhandarwati EE (2016) Feature extraction and classification for detection malaria parasites in thin blood smear. In: 2015 2nd international conference on information technology, computer, and electrical engineering (ICITACEE), 1(c):197–201. https://doi.org/10.1109/ICITACEE.2015.7437798

Seo Y, Park B, Hinton A, Yoon SC, Lawrence KC (2016) Identification of Staphylococcus species with hyperspectral microscope imaging and classification algorithms. J Food Meas Charact 10(2):253–263. https://doi.org/10.1007/S11694-015-9301-0

Tchinda BS, Noubom M, Tchiotsop D, Louis-Dorr V, Wolf D (2018) Towards an automated medical diagnosis system for intestinal parasitosis. Inform Med Unlocked 13(September):101–111. https://doi.org/10.1016/j.imu.2018.09.004

Nkamgang OT, Tchiotsop D, Tchinda BS, Fotsin HB (2018) A neuro-fuzzy system for automated detection and classification of human intestinal parasites. Inform Med Unlocked 13(June):81–91. https://doi.org/10.1016/j.imu.2018.10.007

Asefpour Vakilian K, Massah J (2013) An artificial neural network approach to identify fungal diseases of cucumber (Cucumis sativus L.) plants using digital image processing. Arch Phytopathol Plant Prot 46(13):1580–1588. https://doi.org/10.1080/03235408.2013.772321

Liu J, Dazzo FB, Glagoleva O, Yu B, Jain AK (2001) CMEIAS: a computer-aided system for the image analysis of bacterial morphotypes in microbial communities. Microb Ecol 41(3):173–194. https://doi.org/10.1007/S002480000004

Inayah N, Liebenlito M, Fitriyati N, Monardo K (2020) Classification of falciparum parasite in human red blood cells using randomly wired neural network. In: 2018 international conference on computer, information and telecommunication systems (CITS), pp 2018–2021. https://doi.org/10.1109/CITSM50537.2020.9268806

Hung J et al. Applying faster R-CNN for object detection on malaria images, pp 1–7