Abstract

This study reviews the technical papers on structural condition assessment of aged fixed-type offshore platforms reported over the past few decades. Other related ancillary works are also discussed. A large body of research is available on requalification for life extension of offshore jacket platforms. Many of these studies reassess existing platforms by means of pushover analysis, a static nonlinear collapse analysis method that evaluates the nonlinear behaviour and capacity of the structure beyond the elastic limit. From this analysis, the failure mechanism and inherent reserve strength/capacity of the overall truss structure are determined. This reassessment method is described clearly in industry-adopted codes and standards such as the API, ISO, PTS and NORSOK codes. The review may help in understanding the structural behaviour of aged fixed offshore jacket structures for maintenance or decommissioning.

1 Introduction

1.1 Offshore Jacket Platform

In 1947, the first offshore oil and gas platform was installed off the coast of Louisiana in the Gulf of Mexico (GOM), in about 5 m of water depth. Since then, offshore platforms have been used actively in the oil and gas industry. The offshore oil and gas industry grew steeply thereafter, and within 30 years it contributed 14% of global production. By 2010, this had increased to about 33% of global production (Kurian et al. 2012). To date, about 10,000 offshore platforms have been designed and installed throughout the world. The exponential growth of the oil and gas industry was triggered by development in the energy-hungry post-war countries, the massive industrialisation of the 1970s, and the liberalisation and phenomenal economic growth of the BRICS countries (Brazil, Russia, India, China and South Africa) by the 1990s.

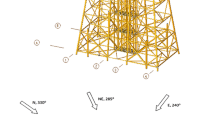

These factors contributed to the fast expansion and steady growth of the offshore oil and gas industry as well as the utilisation of offshore platforms. An offshore platform is defined as a structure which has no fixed access to land and is required to stay safe in all weather conditions. Offshore platforms may be fixed to the seabed or floating. Almost all early offshore rigs were fixed-type platforms, commonly known as ‘jackets’, mostly in shallow to intermediate waters with depths of not more than 500 m. They are piled to the seabed and support decks and/or functional platform facilities as shown in Figure 1. The design life of a typical jacket platform is between 20 and 30 years, except for minimal production platforms with a 10-year design life. They are designed to support the exploration and production facilities above the wave elevations. They need to perform under service conditions which consist of extreme and operational environmental conditions, where the loads are generated by wind, wave and current.

Figure 1 Typical shape of an offshore jacket platform (Fadly 2011)

Although the offshore oil and gas industry has been active and has grown rapidly since 1947, design standards and guidelines for offshore jacket platforms only became available in 1969 with the publication of the first edition of API RP 2A (American Petroleum Institute Recommended Practice). Before 1969, no common design standards or guidelines were available, and the early platforms were designed based on industry experience from onshore steel structure design. Since the introduction of the first edition of API RP 2A, the code has been revised many times over the past decades. As a result, the design basis and criteria of platforms designed using the early editions are now obsolete (Mortazavi and Bea 1995).

In the South-East Asian region, the oil and gas industry blossomed in the mid-1970s with the establishment of many national oil companies (NOCs). Today, the region is very active in offshore oil and gas exploration and production.

Profit sharing contract (PSC) is the term typically used for the agreement between global players and NOCs for the development of fields and facilities. Under the contract, NOCs own the assets, and the PSC partners develop and manage the fields and facilities throughout the contract duration. Profit is shared between the parties at a predetermined ratio. The contract period varies from 20 to 25 years. After completion of the contract, the platform assets are returned to the owner, i.e. the NOC. At handover, the assets are typically aged, with high wear and tear, yet acceptably maintained.

In this technical review, the documented research is grouped into categories that follow the sequence of the current research direction. A large body of research is available on requalification for life extension of offshore jacket platforms. Many of these studies reassess existing platforms by means of pushover analysis, a static nonlinear collapse analysis method that evaluates the nonlinear behaviour and capacity of the structure beyond the elastic limit. From this analysis, the failure mechanism and inherent reserve strength/capacity of the overall truss structure are determined. This reassessment method is described clearly in industry-adopted codes and standards such as the API, ISO, PTS and NORSOK codes.

1.2 Condition Assessment

Condition assessment or reassessment is defined by Banon et al. (1994) as “the process of monitoring the integrity of a platform and assessing its fitness for purpose. In the process, changes in the platform function, estimates of environmental conditions, and physical condition through inspection are evaluated to better quantify the risk associated with its continued operation”. The remaining structural strength and fatigue performance should be checked over time using a well-planned condition assessment procedure (Wong and Kim 2018; Mourão et al. 2019, 2020). Typically, condition assessment is triggered by:

1) Changes from original design (e.g. change of platform exposure level such as from unmanned to manned, modification to the facilities, subsidence due to extraction of hydrocarbon and increase of environmental conditions).
2) Damage of structural members due to major events such as dropped objects, small fire and/or blast, boat impact.
3) Deterioration of primary structural components due to ageing effect such as corrosion and fatigue crack.
4) Exceeding original design service life.

From the nonlinear collapse analysis, the work progresses to structural reliability analysis focusing on system safety, which evaluates the probability of failure of ageing platforms. Very few studies have reported on component reliability and component-to-system reliability, despite the fact that offshore platforms are designed on a component basis rather than a system basis. It has also been noticed that, depending on the degree of indeterminacy of the platform, several components would have yielded prior to platform failure.

This section describes the reassessment procedure outlined in the codes, as practised by the industry, and the work of researchers in this area. Most of the available codes and standards pay more attention to the design of new platforms. Hence, as mentioned by Nichols et al. (2006), the reassessment guidelines recommended by current codes and standards are still immature in terms of their applicability and their robustness to the variety of design, operation and environment of the platforms. The API RP 2A-WSD code (API 2007), the most widely used recommended practice (RP) among industry players, prescribes a common guideline for the reassessment of offshore jacket platforms in its Section 17, while the ISO code (ISO 2007) does so in its Section 24. The guideline is drawn from the collective industry experience gained to date as well as from the research that has been conducted.

Topside and underwater surveys as well as the soil foundation data are integral to the reassessment procedure. The recommended reassessment procedure starts with platform risk screening, followed by design level analysis and finally ultimate strength analysis. The risk screening level comprises platform selection, categorisation and condition assessment. A platform that fails to satisfy this level proceeds to the design level and ultimate strength level analyses. The design level analysis is simpler than the complex ultimate strength analysis. Within the ultimate strength level, the analysis begins with linear global analysis, followed by global inelastic analysis. For requalification, an ageing platform must demonstrate adequate strength and stability to survive the ultimate strength loading criteria prescribed by the codes.

Many NOCs typically follow their own technical standards, which adopt the API guide for reassessment. The primary objective of the reassessment, according to these standards, is to predict the strength performance against excessive load and insufficient strength. The reassessment procedure is structured so that the assessment difficulty increases as it moves from the risk level to the global ultimate strength analysis (GUSA) level, i.e. from a qualitative to a quantitative approach. Risk-level assessment uses factors influencing the likelihood of failure (LOF) and the consequence of failure (COF) of the platform to establish the risk level and map it onto a 5 × 5 risk matrix. This is followed by design level assessment, i.e. the static in-place analysis. When a platform fails to comply with the acceptance criteria, load and resistance rationalisation, which optimises the inherent conservatism in the initial design, is applied. This is followed by reassessment in the form of GUSA (Ayob et al. 2014a). The target reliability typically used in Malaysian industry practice is adopted from API RP 2A-WSD, with an RSR value of 1.32 for unmanned platforms and 1.6 for manned platforms. Over the last decade, however, the Malaysian industry has been looking to adopt ISO 19902 with a regional annex for Malaysia. The ISO code suggests that platforms are designed for a 100-year return period load but reassessed for a 10,000-year return period, or 1 × 10⁻⁴ probability of failure, for manned platforms and a 1000-year return period, or 1 × 10⁻³ probability of failure, for unmanned platforms. In terms of RSR, the ISO code suggests a value of 1.85 for high-consequence platforms, i.e. manned platforms, and 1.5 for low-consequence platforms, i.e. unmanned platforms. Since the ISO code is based on probabilistic design, the environmental load factor adopted is 1.35, as prescribed by the ISO code and derived from studies performed in the Gulf of Mexico and North Sea. However, metocean studies performed near Malaysian waters have shown that the environmental conditions are more benign than in the Gulf of Mexico and North Sea. Hence, in a recent study, an environmental load factor ranging from 1.255 to 1.295 was developed, derived from a similar target reliability between the ISO and API RP 2A-WSD codes.
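The acceptance values and return periods quoted above can be collected into a small lookup for screening purposes. The Python sketch below is illustrative only: the function names, the dictionary layout and the `code`/`manning` keys are assumptions made here, and the conversion between return period and annual probability is the usual reciprocal approximation rather than a rule taken from the codes.

```python
# Target RSR values as quoted in the text; the data layout is an assumption.
TARGET_RSR = {
    ("API RP 2A-WSD", "manned"): 1.6,
    ("API RP 2A-WSD", "unmanned"): 1.32,
    ("ISO", "manned"): 1.85,      # high-consequence platforms
    ("ISO", "unmanned"): 1.5,     # low-consequence platforms
}


def annual_exceedance_probability(return_period_years):
    """Approximate annual probability of an event with the given return period."""
    if return_period_years <= 0:
        raise ValueError("return period must be positive")
    return 1.0 / return_period_years


def meets_target_rsr(rsr, code, manning):
    """True if the computed RSR reaches the target quoted for this code and manning."""
    return rsr >= TARGET_RSR[(code, manning)]


if __name__ == "__main__":
    # Hypothetical platform with a computed RSR of 1.7.
    for key in TARGET_RSR:
        print(key, meets_target_rsr(1.7, *key))
    print(annual_exceedance_probability(10_000))
```

A platform with a hypothetical RSR of 1.7 would pass both API targets and the ISO low-consequence target, but not the ISO high-consequence target, which shows how the choice of code can change the outcome of a reassessment.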

Since the first offshore jacket platform was installed in 1947 off the coast of Louisiana in the Gulf of Mexico (GOM), platform installation around the world has grown steadily to an estimated 10,000 units to date. By industry standard, the design life of a jacket platform is around 20 to 25 years. Hence, between the 1970s and the 1990s, many offshore platforms in the North Sea and Gulf of Mexico were nearing the end of their design life. This prompted industry-wide attention to the integrity of existing ageing jacket platforms, and the industry looked into developing procedures for reassessing them. Following that, many researchers have studied the reassessment of jacket platforms for life extension. Over the past few decades, they have contributed to the theoretical background of industry-wide accepted procedures and guidelines. Risk assessment and mitigation were the main focus of most of the work, apart from enabling the procedure to be scrutinised, refined and updated further. When those ageing platforms were designed, insufficient location-specific environmental data and a lack of computing tools for efficient structural analysis led to conservative designs in terms of metocean design data and a component-based design approach. This has implicitly helped the reassessment of ageing platforms for life extension: the inherent conservatism provided redundant and robust platforms which weathered the degradation that came with age.

Bea (1974) is among the pioneers in this area. He divided reliability analysis in the reassessment process into five major parts, namely loading probabilities, resistance probabilities, reliability estimates, value analysis and design criteria. By the 1990s, the number of ageing jacket platforms worldwide had increased, and reassessment to determine their fitness for purpose had gained considerable attention from the oil and gas industry and regulatory agencies worldwide. The state of the art in jacket platform reassessment in the GOM was therefore reviewed by Banon et al. (1994). The reassessment process was found to be time consuming and costly relative to the design of a new platform, as it requires multiple steps such as gathering information on the design and actual physical condition of the platform, modelling all the significant damage found, structural analysis of the platform, calculation of the safety indices and identification of the necessary mitigation and repair plan. It is also associated with a high degree of uncertainty.

Meanwhile, Mobil E&P considered the reassessment exercise in the GOM in the 1990s timely, as one third of its 250 platforms were aged 20 years or older (Day 1994). Reassessment was necessary as many of those platforms still had significant economic value at that time owing to improved reservoir engineering techniques and advanced secondary and tertiary oil recovery methods. The necessary tools, procedures and expertise to conduct reassessment for fitness for purpose were acknowledged to be available, which conflicts with the earlier conclusion by Banon et al. (1994). The difference arises because, in 1992, API set up a task force to develop guidelines for the reassessment of existing platforms, and the task force members published their work for adoption by industry (Krieger et al. 1994). The motivation for developing this reassessment guideline was the evolution of jacket platform design practice over the past couple of decades, which resulted in new platform design standards being more stringent than older ones. The concerns raised by regulating agencies in the USA included the adequacy of older platforms to withstand extreme environmental events such as Hurricane Juan in 1985, the Loma Prieta earthquake in 1989 and Hurricane Andrew in 1992. Case studies using the developed procedure were carried out successfully by Craig and Digre (1994) on three US West Coast production platforms. Following that, API officially issued the guideline in 1995 as draft Supplement 1 to both the WSD and LRFD (API 1993) versions of API RP 2A, and subsequently included it in the new edition of API RP 2A in 1997, which also forms the basis for the analogous guidance in ISO 13819-2 for fixed steel platforms. Details may be found in Moan (2000). In addition, damage assessment techniques for fixed offshore platforms subjected to hurricanes may be found in Energo (2007, 2010).

From then onwards, many researchers, including Tromans and van de Graaf (1994), Visser (1995), Sturm et al. (1997), Bea (2000), Hansen and Gudmestad (2001), Capanoglu and Coombs (2009), Bao et al. (2009), Gening and Ruiguang (2010), Håbrekke et al. (2011), Solland et al. (2011), Ayob et al. (2014b), Kurian et al. (2014a, b), George et al. (2016), Kim et al. (2017b) and Mohd Hairil et al. (2019), have utilised the reassessment guidelines from the standards to demonstrate the safety of platforms by considering ageing effects as well as the low risk of environmental damage from the operation of those platforms. A study on converting decommissioned offshore platforms into offshore wind turbines was also recently introduced by Alessi et al. (2019).

Petruska (1994) applied the newly launched code reassessment guide to a 1950-vintage shallow water platform in the GOM to assess its fitness for purpose for life extension. Visser (1995) studied the reassessment of jacket structures in Cook Inlet, Alaska, using the newly released API reassessment guideline. A condition survey and reassessment audit were conducted on several ageing high-consequence platforms which were designed and installed in the mid-1960s.

Following Hurricane Roxanne, which struck the Bay of Campeche in 1995, PEMEX initiated a programme to reassess and requalify the affected platforms according to API guidelines. The findings showed that the majority of the platforms would not qualify for continued service without very expensive and extensive remedial work. Bea (2000) therefore studied how risk assessment and management (RAM), a process developed in the 1970s, could be applied to the requalification of PEMEX platforms. Bea used results from field tests to verify the analytical loading and capacity models and to characterise the bias and uncertainty in the models. The biases identified include wave attenuation due to the soft seafloor soils and extreme-condition wave-current directionality.

Banon et al. (1994) reviewed the state-of-the-art jacket reassessment issues in the GOM as part of an effort by the ASCE Committee on Offshore Structural Reliability. With increased use of and confidence in the reassessment method, many researchers studied the effect of controlling variables on the requalification of existing platforms. Dalane and Haver (1995) studied the reliability of an existing jacket platform in 70-m water depth in the North Sea exposed to reservoir subsidence due to hydrocarbon extraction. The safety of the platform was quantified in terms of wave-in-deck loading caused by the reduced air gap. Environmental loading, statistical and modelling uncertainties were quantified and accounted for in the reliability analysis. Hansen and Gudmestad (2001) also looked into the reassessment of existing jacket platforms in the North Sea subjected to wave-in-deck loading due to subsidence as well as revised environmental criteria.

Moan (2000) and Onoufriou and Forbes (2001) reviewed the development of available methods for safety requalification of jacket platforms. They gave a complete picture of the methods, both deterministic and probabilistic, under extreme loading and also of the treatment of resistance. Key issues such as behaviour uncertainties and sensitivities, and validation and benchmarking of the methods, were examined. They also highlighted a number of technical and philosophical issues which need to be addressed to increase the benefits from system reliability applications in the design and reassessment of offshore jacket platforms.

Nichols et al. (2006) addressed the issue that the continued use of ageing jacket platforms requires reassessment, yet the guides recommended by the codes are inadequate. Hence, improvements in reassessment procedures, tools and technology are imperative. In that work, the challenges and probable solutions in managing the long-term integrity of ageing platforms were described, together with details of how those solutions are assisting the ongoing development of the Carigali Structural Integrity Management System (CSIMS). The adopted process is in line with the recent development of the standalone API RP 2SIM for the structural integrity management (SIM) of existing structures (Westlake et al. 2006).

Further contributions to the theoretical background of the procedures for life extension of existing jacket platforms have been made recently. These include work on system strength parameters and the use of probabilistic methods in reliability analysis. Criteria for reserve strength ratio, damaged strength ratio and reserve freeboard ratio have been suggested. A probabilistic predictive Bayesian method has been proposed for evaluating structural uncertainty for better decision making, with the objective of re-evaluating the risk in order to decrease the failure probability (Ersdal 2005). In the Gulf of China, Bao et al. (2009) used ultimate strength system reliability analyses and parameter sensitivity to study the effect of parametric variables on platform reliability. Elsewhere, a stochastic response surface was adopted by Kolios (2010) in the reassessment for better representation of parametric uncertainty. The developed method is simple and efficient, as it allows reassessment in the form of individual design blocks, in contrast to available methods which require a deep understanding of mathematical expressions. Global ultimate strength assessment (GUSA) is performed to help identify and understand the failure mechanism and safety indices of platforms that undergo reassessment, and thus to define correctly the risk level and the type of mitigation actions required, if any, for continued use (Ayob et al. 2014a, b).

2 Static Nonlinear Collapse Analysis

This section presents the finite element static nonlinear collapse analysis used to obtain the ultimate strength of a jacket platform. Almost all the reported works on the reassessment of ageing jacket platforms utilised this method, which is also called pushover analysis. There are two general approaches. The majority of researchers adopted the first approach, in which an incremental load factor is applied to the horizontal environmental load. The analysis is used in system-based structural reliability analysis (SRA) to determine the excess strength capacity of the platform, known as the reserve strength ratio (RSR). RSR is regarded as a measure of ductility and indicates the ultimate strength of a platform relative to its design load. Since all jacket platforms are designed according to the linear elastic behaviour of their components, the overall reserve strength available in a structural system is made up of the combined contribution of all the components.

RSR compares the collapse capacity with the design capacity to establish the excess strength beyond the design strength. According to API WSD, the design strength value is determined from the 100-year return period metocean environmental loading. RSR is typically obtained for all loading directions, and the lowest value, from the critical direction, is designated as the most critical value. That lowest value is taken as the platform reserve strength ratio (ISO 2007). The reserve strength ratio (RSR) is defined as in Eq. (1) (Bolt et al. 1996).
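Consistent with the description above, RSR can be written as the ratio of the ultimate lateral load at collapse to the design (100-year) lateral load. The minimal Python sketch below computes that ratio per loading direction and picks the governing one. Using base shear as the load measure, the function names and all numbers are assumptions for illustration and do not come from any platform or from the cited references.

```python
def reserve_strength_ratio(collapse_base_shear, design_base_shear):
    """Ratio of collapse capacity to design load (both in the same units)."""
    if design_base_shear <= 0:
        raise ValueError("design base shear must be positive")
    return collapse_base_shear / design_base_shear


def governing_rsr(results_by_direction):
    """Return (critical direction, lowest RSR) from {direction: (collapse, design)}."""
    rsr = {
        direction: reserve_strength_ratio(collapse, design)
        for direction, (collapse, design) in results_by_direction.items()
    }
    critical = min(rsr, key=rsr.get)
    return critical, rsr[critical]


if __name__ == "__main__":
    # Hypothetical pushover results in MN for three wave approach directions (degrees).
    results = {0: (42.0, 20.0), 45: (36.0, 20.0), 90: (45.0, 18.0)}
    print(governing_rsr(results))
```

In this hypothetical case the 45-degree direction governs, because it gives the lowest ratio even though it does not have the lowest collapse load in absolute terms relative to the 90-degree case's design load.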

According to Krawinkler and Seneviratna (1998), prior to the analysis, a finite element structural stick model in two or three dimensions is developed. All the structural members, joints and hinges, together with their linear and nonlinear response characteristics, are appropriately modelled. Gravitational vertical loads are applied as the initial step. The concurrent environmental lateral loads in the chosen direction are then applied to the model. The lateral loads are factored incrementally until the platform has been pushed to a specific target displacement or until collapse. The analysis uses the stiffness matrix approach to analyse member stresses and strains. During the analysis, the program selects the load sub-step sizes by determining when the next stiffness change occurs and ending the sub-step at that event. The structure stiffness is then modified and the structure is reanalysed in the next step, until the entire load has been applied (Asgarian and Lesani 2009). Further detail on the specific workflow of the analysis can be found in the application manuals of the software used, such as USFOS and SACS.
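The event-to-event stepping described above can be illustrated on a deliberately simple system. The Python sketch below pushes a set of parallel elastic-perfectly plastic members that share one lateral displacement, ending each sub-step exactly when the next member yields and then reducing the stiffness. This toy idealisation, the function name and the member values are assumptions made here; it omits the gravity load step, buckling and joint behaviour, and it is not the algorithm of USFOS, SACS or any other program.

```python
def pushover_parallel_members(members):
    """Event-to-event pushover of parallel elastic-perfectly plastic members.

    members: list of (stiffness, yield_force) pairs sharing one displacement.
    Returns the load-displacement curve as a list of (displacement, load)
    points, one per stiffness-change event, starting from the origin.
    """
    # Each member yields when the common displacement reaches yield_force / stiffness.
    events = sorted((fy / k, k) for k, fy in members)
    active_stiffness = sum(k for k, _ in members)
    displacement, load = 0.0, 0.0
    curve = [(displacement, load)]
    for yield_displacement, k in events:
        # The sub-step ends exactly at the next stiffness change (member yield).
        load += active_stiffness * (yield_displacement - displacement)
        displacement = yield_displacement
        curve.append((displacement, load))
        # A yielded member keeps its force but contributes no further stiffness.
        active_stiffness -= k
    return curve


if __name__ == "__main__":
    # Hypothetical members: (stiffness in MN/m, yield force in MN).
    curve = pushover_parallel_members([(100.0, 50.0), (50.0, 50.0), (25.0, 50.0)])
    first_yield_load = curve[1][1]
    collapse_load = curve[-1][1]
    print(curve)                             # [(0.0, 0.0), (0.5, 87.5), (1.0, 125.0), (2.0, 150.0)]
    print(collapse_load / first_yield_load)  # reserve beyond first member failure
```

For these hypothetical members the first member yields at a load of 87.5 MN while the system collapses at 150 MN, so a check based on first member failure would understate the system capacity by a factor of about 1.7.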

Conventional design of jacket platforms presumes linear elastic behaviour for all relevant analysis limit states as well as perfectly rigid joints. Consequently, members are checked based on linear-elastic theory, and no yield or buckling is permitted. As a result of this approach, conservatively, the jacket collapse is considered equal to first member failure (Golafshani et al. 2011). Figure 2 presents typical static nonlinear collapse analysis procedure. Here, with the gravitational loads already applied, the environmental load is applied horizontally on the jacket structure until it collapses.

The second approach, as reported by Golafshani et al. (2011), is called incremental wave analysis (IWA), in which the wave height is increased instead of the environmental load factor. This method has also been reported by Nichols et al. (2006). It is claimed that the typical practice is imperfect, as incrementally increasing the wave load factor alone does not depict the real situation. The conventional nonlinear collapse analysis does not account for the wave-in-deck loading condition, whereas in reality a higher wave load comes from a higher wave height, which eventually induces a wave-in-deck scenario.

Traditionally, offshore platforms, like land-based structures, are designed by component-based linear elastic analysis, with limit state design checks formed using empirical expressions. However, following accidents and natural disasters such as Piper Alpha in the North Sea and Hurricane Andrew in the GOM, much effort was made to understand the nonlinear behaviour of platforms. This prompted the codes in the 1970s to 1980s to include requirements for design and reassessment for accidental and nonlinear loads. The design checks allow for large plastic straining, buckling, plastic mechanisms, etc., but not the collapse or overturning of the platform. Hence, as Skallerud and Amdahl (2002) mentioned, several efforts were made, with the support of major oil and engineering companies, to develop nonlinear analysis programs capable of demonstrating the strength reserves offered by ductile steel material and the inherent load redistribution capability of statically indeterminate structures.

Hellan (1995) studied nonlinear collapse analyses and nonlinear cyclic analyses in ultimate limit state design and reassessment of offshore jacket platforms. The aim was to develop an assessment methodology based on system strength rather than component strength and to demonstrate the feasibility of the method. As part of the work, a computer program called USFOS was developed. Six North Sea jacket platforms were analysed according to the developed procedure. Cases where system collapse coincided with first member failure and cases where system strength exceeded first member failure were identified. A probabilistic Monte Carlo simulation was performed as a parametric study on yield strength and initial imperfections, with Latin hypercube sampling used to generate the random sample vectors. Only small variation in collapse strength was found with respect to these variables. PROBAN software was used to generate statistical inputs and to process the results of the statistical study.
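The sampling idea mentioned above can be sketched with the Python standard library alone. Latin hypercube sampling splits each variable's probability range into equally likely strata and draws one value per stratum, so a small sample still covers the tails. Everything specific in the sketch below is an assumption for illustration: the distributions and their parameters, the function names, and in particular `toy_collapse_strength`, which is a placeholder response and not a structural model or the procedure used by Hellan (1995).

```python
import random
from statistics import NormalDist, mean


def latin_hypercube(n_samples, n_vars, rng):
    """Return n_samples points in the unit hypercube, one per stratum per variable."""
    columns = []
    for _ in range(n_vars):
        # One uniform draw inside each of n_samples equal-probability strata.
        column = [(i + rng.random()) / n_samples for i in range(n_samples)]
        rng.shuffle(column)  # random pairing of strata across variables
        columns.append(column)
    return list(zip(*columns))


def toy_collapse_strength(yield_mpa, imperfection):
    # Placeholder response, NOT a structural model: strength scales with yield
    # stress and drops mildly with initial out-of-straightness.
    return 0.05 * yield_mpa * (1.0 - 20.0 * imperfection)


def sample_collapse_strength(n_samples=200, seed=1):
    rng = random.Random(seed)
    yield_dist = NormalDist(mu=355.0, sigma=25.0)  # hypothetical yield strength, MPa
    strengths = []
    for u_yield, u_imp in latin_hypercube(n_samples, 2, rng):
        u_yield = min(max(u_yield, 1e-12), 1.0 - 1e-12)  # inv_cdf needs 0 < p < 1
        fy = yield_dist.inv_cdf(u_yield)
        imperfection = 0.0005 + u_imp * (0.0025 - 0.0005)  # hypothetical uniform range
        strengths.append(toy_collapse_strength(fy, imperfection))
    return strengths


if __name__ == "__main__":
    strengths = sample_collapse_strength()
    print(mean(strengths), min(strengths), max(strengths))
```

In a real study each sample vector would drive one nonlinear collapse analysis instead of the placeholder function, which is why an efficient sampling scheme matters.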

Prior to that, an extensive study on the development and application of numerical finite element methods in the collapse analysis of plates and truss-shaped tubular structures was conducted by Mreide et al. (1986). This work built on several previous studies, i.e. Ueda and Rashed (1975), Rashed (1980) and Aanhold (1983), which paved the way for progressive collapse analysis.

In the early 1990s, several researchers statistically verified the predicted ultimate strength against observed storm damage in the GOM by conducting pushover analysis on partially damaged jacket platforms to identify the failure sequence and ultimate collapse load. This was one of the first attempts to rationally evaluate hurricane damage. The extreme hurricane load was predicted probabilistically by adopting the new wave theory for extreme random wave kinematics and the effect of current blockage, both introduced in the 1980s. A parametric study was also conducted by van de Graaf and Tromans (1991) to identify the magnitude and direction of the load that caused the damage.

To evaluate and validate the performance of collapse analysis against reality, the Frames project was initiated in 1987. Four 2-bay X-braced large-scale tubular frames were tested to collapse. The test set-up was designed to exhibit different modes of failure, allowing various aspects of reserve and post-failure residual strength to be examined. The project gave an insight into the role of redundancy and the failure of tubular joints in a framed structure. Work of this nature and scale had not been undertaken before. A computer program called SAFJAC was developed for the nonlinear pushover analysis of framed structures with the aim of accurately representing both material and geometric nonlinearities. In the study by Bolt et al. (1994), the frame test data from the project correlated well with the results predicted by the developed program. Following this, during the 1990s, many computer programs were developed by several researchers with the aim of improving computational efficiency and reducing cost. Billington et al. (1993) mentioned that although structural analysis methodology has advanced considerably over the years, the capabilities of nonlinear software and their validation against physical tests still vary. Yet the results from those analyses are well accepted without reservation.

Bea and Mortazavi (1996) developed a simplified assessment method for system strength called ultimate limit state limit equilibrium analysis (ULSLEA). ULSLEA generates a shear capacity depth profile based on simplified assumptions and compares it with the storm loading profile. Vannan et al. (1994) presented a simplified ultimate strength assessment procedure, called simplified ultimate strength analysis (SUS), which provides a lower bound estimate of the actual strength using linear analysis instead of nonlinear analysis. The method reasonably predicts the actual failure modes but has some limitations.
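The comparison of a capacity profile with a loading profile can be sketched as follows. The Python snippet assumes that both profiles are already available as one shear value per bay, from the deck bay down to the foundation, and finds the bay where a uniformly scaled storm shear would first reach capacity. The function name and the numbers are hypothetical, and the simplified formulas that ULSLEA uses to derive the capacities are not reproduced here.

```python
def critical_bay(shear_capacity, storm_shear):
    """Return (bay index, load factor) at which scaled storm shear first meets capacity.

    Both inputs are sequences with one value per bay, ordered from the top of
    the structure down to the foundation, in consistent units.
    """
    if len(shear_capacity) != len(storm_shear) or not shear_capacity:
        raise ValueError("profiles must be non-empty and of equal length")
    ratios = [cap / demand for cap, demand in zip(shear_capacity, storm_shear)]
    index = min(range(len(ratios)), key=ratios.__getitem__)
    return index, ratios[index]


if __name__ == "__main__":
    # Hypothetical profiles in MN: deck bay, two jacket bays, foundation.
    capacity = [30.0, 36.0, 44.0, 60.0]
    storm = [10.0, 16.0, 22.0, 25.0]
    print(critical_bay(capacity, storm))
```

With these hypothetical profiles the third entry governs, since its capacity is only twice the storm shear while the other bays have larger margins.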

Among studies of parametric effects in collapse analysis, soil resistance has been analysed for wave and current loading (Mostafa et al. 2004). The system reserve strength ratio has been shown to be significantly affected by variations in water depth, topside loading and metocean loading (Pueksap-anan 2010). A study on the sensitivity of the reliability of existing platforms to metocean values suggested that pile-soil interaction should be considered in the pushover analysis to capture the foundation failure mechanism (Zaghloul 2008).

Ersdal (2005) performed pushover analysis to assess the system strength of existing jacket platforms in the North Sea. Multiple safety ratios such as RSR, structural redundancy (SR), damaged strength ratio (DSR) and residual strength factor (RIF) were presented as indicators of the level of excess capacity of existing platforms. Nevertheless, RSR from the static nonlinear collapse analysis is widely adopted to demonstrate that existing platforms have adequate excess strength to withstand specified loading conditions. Apart from RSR, the base shear at the bottom of the platform at collapse can be used to demonstrate the strength of existing platforms. Figure 3 shows the computation of base shear at different levels of static nonlinear collapse analysis.

3 Structural Reliability Analysis

Until the 1970s, structural engineering was dominated by the deterministic approach, in which design codes stipulated characteristic resistance and load properties based on past experience. The inherent high safety factors were believed to produce absolute safety, but this belief was revisited after a string of accidents with serious consequences. Hence, work on the probabilistic approach, with structural reliability analysis, gained traction as a way to quantify and control the risks within a structural system. Offshore jacket platforms demand particular attention from a structural reliability perspective because they are placed in a random environment and their failure has serious consequences. Therefore, the probabilistic approach became a necessity (Guenard et al. 1984; Meng et al. 2020). The probabilistic approach was first introduced into design codes by adopting multiple safety factors that account for the various load and resistance uncertainties encountered in structural design. Each factor is determined according to the relative importance, for the reliability of the structure, of the uncertainty it accounts for, and according to the overall reliability level. The probabilistic approach is also applicable to the reassessment of existing platforms.

The ability of a structure to remain fit for purpose under different conditions (operational, extreme, accidental, fatigue, etc.) for a specific time period is, in principle, called the structural reliability. The performance of the structure can be expressed mathematically through the probability of failure, where failure occurs when the limit state function portraying the evaluated condition is violated. The violation of the performance function is measured in terms of probability of failure, reliability index and/or return period of the event.

Reliability analysis is categorised into four levels. Use of characteristic values alone places the analysis at level I, while inclusion of the standard deviation, σ, and coefficient of variation (COV) places it at level II. Addition of the cumulative distribution function (CDF) puts it at level III. Finally, level IV consists of analysis of engineering economics with uncertainty (Fadly 2011).

Figure 4 shows a typical representation of the probability density functions (PDFs) of the resistance, R, and load, L, variables in reliability computation. The central safety margin depicts the reliability of a structural system considering both the load and resistance parameters. The beginning and end points of the safety margin are the means, μ, of the load and resistance density functions respectively. The probability of failure is associated with the region where the two density functions overlap, shown shaded in the figure.

Theoretical representation of probability of failure (Moses and Stahl 1979)

In mathematical terms, the probability of failure is the probability that the limit state function is violated. A typical limit state function and the corresponding probability of failure are shown in Eqs. (2.1) and (2.2) respectively.
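Equations (2.1) and (2.2) are not reproduced in this version of the text. A reconstruction consistent with the definitions that follow, assuming the basic resistance-minus-load form of the limit state, would read:

```latex
\begin{align}
G(R, L) &= R - L \tag{2.1} \\
P_f &= P\left[ G(R, L) < 0 \right] \tag{2.2}
\end{align}
```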

where R is the resistance, L is the load, G is the limit state function and Pf is the probability of failure. Here, G < 0 indicates the failure region, G > 0 indicates the safe region and G = 0 defines the failure surface. Hence, Pf is taken as the probability that the limit state function is less than zero, i.e. an event where the load exceeds the resistance.

For the statistical representation of extreme variables in reliability computation, extreme value distributions such as Gumbel, Fréchet and Weibull are the theoretical distributions commonly adopted to model the uncertain parameters. These distributions describe the maximum of a large (in the limit, infinite) number of events. They represent the maximum intensity by capturing the upper tail characteristics of the distribution. In offshore engineering, the type 2 Weibull cumulative distribution function (CDF) is widely adopted to represent the environmental load uncertainty. The required statistical parameters are the scale and shape parameters of the distribution. This distribution is suitable for rare events of interest such as maximum wave height, wind velocity and current velocity. Typically, wave load is treated as probabilistic, while current and wind loads are treated as deterministic. This is supported by many works in the literature. A recent study on the joint density characteristics of measured and hindcast metocean data in Malaysian waters, which used the Weibull distribution for its extreme event analysis, has also verified this (Mayeetae et al. 2012).
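As an illustration of how the scale and shape parameters are used, the following minimal sketch evaluates a Weibull CDF and the value associated with a given return period. It assumes the two-parameter form of the distribution and assumes that the fitted model describes the annual maximum; the parameter values and function names are hypothetical and are not taken from any cited study.

```python
import math


def weibull_cdf(x: float, scale: float, shape: float) -> float:
    """Two-parameter Weibull CDF, F(x) = 1 - exp(-(x / scale) ** shape)."""
    if x <= 0.0:
        return 0.0
    return 1.0 - math.exp(-((x / scale) ** shape))


def return_value(return_period_years: float, scale: float, shape: float) -> float:
    """Value exceeded on average once per return period.

    Assumes the Weibull model describes the annual maximum, so the annual
    exceedance probability is 1 / return_period_years.
    """
    annual_exceedance = 1.0 / return_period_years
    return scale * (-math.log(annual_exceedance)) ** (1.0 / shape)


# Hypothetical parameters for the annual maximum wave height (metres).
SCALE, SHAPE = 4.0, 2.5
h100 = return_value(100.0, SCALE, SHAPE)
non_exceedance = weibull_cdf(h100, SCALE, SHAPE)  # equals 1 - 1/100 by construction
```

Inverting the CDF in closed form, as done here, is one convenient property of the Weibull model; other extreme value distributions would need their own inverse.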

Preliminary work on structural reliability was started in the 1940s and 1950s by Freudenthal, Pugsley and others, and was followed by work establishing the basic philosophy and some simple calculation procedures; since then, there have been extensive and far-reaching developments (Baker 1998). In the offshore industry, the early works by Bea (1974), Marshall (1979) and Fjeld (1978) demonstrated the applicability and possible benefits of using reliability analysis as a tool for design and reassessment decision making. Following that, several methods for conducting reliability analysis were outlined. The methods could be used at the pre-design stage to perform comparative parametric analysis as well as to perform overall reliability analysis under extreme conditions (Guenard et al. 1984). That was in accordance with the National Research Council’s DIRT (Design, Inspection and Redundancy Triangle) conference, held in 1984, which called for the expansion of new reliability analysis techniques. Following that, many studies were undertaken to meet the oil industry's needs at that time. The gap between the probabilistic representation of loads, materials and design criteria on the one hand and full structural system-level interactions and implications on the other was identified and addressed by Cornell (1987). In addition, extensive study of new methods for reliability analysis was undertaken.

Karamchandani (1990) studied more realistic modelling of nonlinear behaviour of truss and frame-type structures. Member failure sequence leading to system failure was looked into by analysing the union of intersections of member failure events to obtain structural failure event. Branch and Bound method and FORM were utilised in this study. Sensitivity of failure probability due to distribution parameters such as mean and variance of random variables was studied. A new method which could be used with wide range of simulation techniques such as Monte Carlo simulation, latin hypercube sampling and importance sampling was developed for estimating the sensitivity. A different approach using FORM and SORM to estimate the sensitivities was also developed. This work garnered the attention of many researchers till today with regard to the methods to compute structural reliability in component and system levels.

Procedurally, Tromans and van de Graaf (1994) devised a reliability assessment practice based on hindcast data and pushover analysis with the help of a probabilistic method. This was in the aftermath of Hurricane Camille in the GOM, to obtain the comparative reliability index and probability of failure of jacket platforms affected by the hurricane. They concluded that the reliability technique can be confidently applied to the problem of optimisation of design and reassessment. Following that, a method that uses hindcast data to generate the extreme load distribution function in the load model and resistance model was derived by Efthymiou et al. (1997).

From the mid 1990s, many researchers have looked into more diversified procedures and methods to conduct reliability analysis, mainly for calibration works of limit state design codes. BOMEL Ltd. (2002) has utilised response surface method in performing reliability analysis for calibration of ISO code for North Sea region. Similar methodology was also utilised by many other researchers in many different regions in the development of regional specific environmental load factors (Zafarullah 2013). These works have referred mainly to the works of Moses (1987) who was involved in the development of calibration of API LRFD design code for jacket platforms in the GOM.

Frieze et al. (1997) also referred to the works of Moses for their reliability analysis method, which used a response surface to compare jacket and jack-up platform performance in the North Sea. Onoufriou and Forbes (2001) summarised the available methods for reliability analysis, both deterministic and probabilistic, under extreme loading conditions for design and reassessment cases. Ersdal (2005) referred to the work of Heideman (1980), who provided a simplified expression for the horizontal wave load used in the load term of the reliability limit state function. Ersdal developed a probabilistic model to establish the annual failure rate of any platform undergoing reassessment. Kolios (2010), with the objective of providing an alternative procedure for reliability analysis, developed normal and adaptive response surface algorithms that combine FORM/SORM with linear and quadratic stochastic response surface approximation. He used simulation results from finite element collapse analysis, combined with a numerical reliability procedure via multivariate (quadratic) polynomial regression, to compute reliability indices at component level. Lee (2012) developed a finite element-based system reliability analysis framework and method, named FE-SRA and B3 respectively.

The proposed framework allows computation of failure probabilities of general system events with respect to design parameters based on component-level finite element reliability analysis. Meanwhile, the developed method helps in identifying critical system failure sequences efficiently and accurately. Thus, it brings significant reduction in computing time and simplicity in the procedure.

In Malaysian waters, platforms designed and installed in the early years of operation followed the API WSD code with very minimal site-specific data and statistics on load and resistance parameters, and they often have issues ranging from redundancy to overdesigned components. Currently, in managing the integrity issues related to these ageing platforms, the industry uses pushover analysis coupled with the simplified structural reliability analysis (SSRA) methodology. The SSRA method was jointly developed with DNV in 1999 using 13 jacket platforms in the Baram and Balingian fields in the Sarawak region. It is a simplified and quick alternative to the typical comprehensive SRA. The analysis uses predetermined covariance and bias values adopted from SRA for a particular type of structure from the same operating region. From the analysis, reliability indices are obtained and subsequently compared with code-specified target reliability indices to support decisions. As an alternative to the typical practice of using SSRA, a recent study attempted to establish a statistical relationship between reliability index and return period from Malaysian region-specific data (Fadly 2011). From that work, correlation graphs among the reliability parameters, with high correlation coefficients, were established for the region. The SSRA-computed and statistically analysed reliability parameters were compared. Table 1 presents the adopted bias and covariance values.

In theory, two types of reliability analysis methods are available, namely simulation-based and analytical methods (Melchers 1999). Monte Carlo simulation (MCS) is the main example of a simulation-based method, while the analytical methods are moment-based, such as the first-order reliability method (FORM). Monte Carlo simulation has been applied in many fields of science to generate random sampling sets of uncertain variables. The limit state functions typically used in reliability analysis are nonlinear and consist of both normal and nonnormal basic variables (Cossa 2012). Hence, the literature typically suggests using FORM for evaluation of the reliability indices. FORM has also been applied in the development and calibration of load and resistance factor design codes such as API RP2A LRFD and ISO 19902 (Zafarullah 2013; Moses 1987). It is typically used in structural optimisation practices to address uncertainties in reassessment parameters, which leads to a cost-effective structural integrity management practice (Onoufriou and Frangopol 2002; Moses 1997; Toğan et al. 2010).

Monte Carlo simulation is widely used, robust and easy to use, and it produces high accuracy provided that a large number of samples is used. However, it requires a significant number of analyses to achieve a good approximation. It helps to approximate the probability of an event from a stochastic process. Many researchers have used MCS in offshore engineering as an alternative way to evaluate the safety of structures (Kolios 2010; Cossa et al. 2012; Kurian et al. 2013a). Although it is believed to give crude values, it is well adopted due to its simplicity (Veritas 1992). The method generates a large number of random realisations of the design variables based on their statistical distributions. Each realisation is then checked against the limit state function. If the limit state function evaluates to a negative value, the structure is considered to have failed. This process is repeated a large number of times, and the probability of failure is estimated as the number of failed samples divided by the total number of simulations.
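The counting procedure described above can be sketched in a few lines. This is a minimal illustration only: it assumes the simplest limit state, G = R − L, with independent and normally distributed resistance and load, chosen so that the estimate can be compared with the closed-form result. All numerical values and names are hypothetical.

```python
import random
from statistics import NormalDist


def mc_failure_probability(mu_r: float, sigma_r: float,
                           mu_l: float, sigma_l: float,
                           n_samples: int, seed: int = 0) -> float:
    """Crude Monte Carlo estimate of Pf = P[R - L < 0]."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    failures = 0
    for _ in range(n_samples):
        resistance = rng.gauss(mu_r, sigma_r)
        load = rng.gauss(mu_l, sigma_l)
        if resistance - load < 0.0:  # limit state violated
            failures += 1
    return failures / n_samples


# Hypothetical normalised resistance and load statistics.
MU_R, SIGMA_R, MU_L, SIGMA_L = 2.0, 0.3, 1.0, 0.3

pf_mc = mc_failure_probability(MU_R, SIGMA_R, MU_L, SIGMA_L, n_samples=200_000)

# Closed-form check, valid only for this linear, normal case.
beta_exact = (MU_R - MU_L) / (SIGMA_R**2 + SIGMA_L**2) ** 0.5
pf_exact = NormalDist().cdf(-beta_exact)
```

For a real platform each sample would require a structural analysis (or a response surface standing in for one), which is where the computational cost of the method comes from.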

The number of random simulations generated strongly affects the accuracy of this method. Furthermore, the accuracy declines when the probability of failure is very small, and the computation time needed to compensate increases accordingly (Veritas 1992). Frieze et al. (1997) mentioned that Monte Carlo simulation produces a noisy approximation of the probability, which makes it more difficult to use for gradient-based optimisation than FORM. For cases with a large number of random variables or a very low probability of failure, the analysis requires a large number of sampling sets, which increases the computational time and effort (Kolios 2010). Nevertheless, the method has been widely used in the literature to compute the complicated probability of failure integral using the outputs from multiple computational experiments.
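The dependence of accuracy on sample size can be made concrete with the standard binomial result for a crude Monte Carlo estimator, whose coefficient of variation is approximately sqrt((1 − Pf) / (N · Pf)). The sketch below uses that result to estimate how many samples a target accuracy would need; the function names and the 10% target are illustrative assumptions.

```python
import math


def mc_estimator_cov(pf: float, n_samples: int) -> float:
    """Coefficient of variation of the crude Monte Carlo estimate of pf."""
    return math.sqrt((1.0 - pf) / (n_samples * pf))


def samples_for_target_cov(pf: float, target_cov: float) -> int:
    """Number of samples needed for the estimator to reach target_cov."""
    return math.ceil((1.0 - pf) / (pf * target_cov**2))


# Samples needed for a 10% coefficient of variation at two failure probabilities.
n_for_1e2 = samples_for_target_cov(1e-2, 0.10)
n_for_1e4 = samples_for_target_cov(1e-4, 0.10)
```

The required sample size grows roughly in inverse proportion to the probability of failure, which is why small failure probabilities are expensive to estimate by direct sampling.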

Meanwhile, FORM has evolved since Cornell proposed the mean value first-order second moment (MVFOSM) reliability method in 1969. The MVFOSM method is applicable to linear and nonlinear limit state functions, but it is not suitable in cases of high nonlinearity. The method also lacks invariance: mathematically equivalent formulations of the same limit state can give different reliability indices (Yu 1996). This method is not further explained as it has not been utilised in this work.
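The lack of invariance can be seen with a small numerical sketch. It assumes the usual MVFOSM estimate, in which the reliability index is the limit state function evaluated at the means divided by its first-order standard deviation, and it compares two equivalent ways of writing the same failure condition, R − L < 0 and R/L − 1 < 0. The statistics and function names are hypothetical.

```python
import math
from typing import Callable, Sequence


def mvfosm_beta(g: Callable[[Sequence[float]], float],
                means: Sequence[float], stds: Sequence[float],
                step: float = 1e-6) -> float:
    """MVFOSM reliability index for independent variables.

    beta = g(mu) / sqrt(sum((dg/dx_i * sigma_i) ** 2)), with the gradient
    taken at the mean point by central finite differences.
    """
    variance = 0.0
    for i, sigma in enumerate(stds):
        up = list(means)
        down = list(means)
        up[i] += step
        down[i] -= step
        dg_dxi = (g(up) - g(down)) / (2.0 * step)
        variance += (dg_dxi * sigma) ** 2
    return g(means) / math.sqrt(variance)


# Hypothetical statistics: x[0] is resistance R, x[1] is load L.
MEANS, STDS = [2.0, 1.0], [0.3, 0.3]

beta_difference = mvfosm_beta(lambda x: x[0] - x[1], MEANS, STDS)
beta_ratio = mvfosm_beta(lambda x: x[0] / x[1] - 1.0, MEANS, STDS)
```

Both functions change sign on the same surface, R = L, yet the two indices differ noticeably, because the linearisation is made at the mean point rather than on the failure surface.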

Hasofer and Lind (1974) proposed an improvement to the Cornell approach, known as the HL method. This method produces better results for nonlinear functions because it approximates the limit state function at the design point, taken as the most probable point (MPP), instead of at the mean value. Multiple iterations can be run to converge the results. Distributions of the variables are used in obtaining reliability indices. This method suffers from nonconvergence in certain cases, and it only caters for normally distributed random variables. To overcome these limitations, the Hasofer Lind-Rackwitz Fiessler (HL-RF) method was adopted. This is an extension of the HL method, in which reliability indices can be obtained for nonnormally distributed variables and the nonconvergence problem in the iteration was solved. Detailed analytical descriptions of all these methods are found in Choi et al. (2007).

In summary, the building block of FORM is the HL-RF iteration method, which enables the transformation of random variables. The limit state function and its gradients are evaluated in the standard normal space. A new estimate of the design point (MPP) is obtained at each iteration, convergence is checked, and the process is repeated until convergence is achieved. Finally, the reliability index is calculated as the distance from the origin to the MPP, which is the shortest distance from the origin to the failure surface.
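A minimal sketch of the iteration summarised above follows. As a simplifying assumption it is restricted to independent, normally distributed variables, so that the transformation to standard normal space is simply u = (x − μ)/σ; the equivalent-normal step that HL-RF adds for nonnormal variables is omitted. The function names, the finite-difference gradient and the numerical values are illustrative and not taken from the cited works.

```python
import math
from typing import Callable, List, Sequence, Tuple


def hlrf_beta(g: Callable[[Sequence[float]], float],
              means: Sequence[float], stds: Sequence[float],
              tol: float = 1e-6, max_iter: int = 100,
              step: float = 1e-6) -> Tuple[float, List[float]]:
    """Reliability index and MPP (in u-space) for independent normal variables."""

    def g_u(u: Sequence[float]) -> float:
        # Map from standard normal space back to physical space.
        return g([m + s * ui for m, s, ui in zip(means, stds, u)])

    u = [0.0] * len(means)  # start at the mean point (origin of u-space)
    for _ in range(max_iter):
        value = g_u(u)
        grad = []
        for i in range(len(u)):
            up, down = u.copy(), u.copy()
            up[i] += step
            down[i] -= step
            grad.append((g_u(up) - g_u(down)) / (2.0 * step))
        grad_norm_sq = sum(gi * gi for gi in grad)
        # HL-RF update: project onto the linearised failure surface.
        scale = (sum(gi * ui for gi, ui in zip(grad, u)) - value) / grad_norm_sq
        u_new = [scale * gi for gi in grad]
        change = math.sqrt(sum((a - b) ** 2 for a, b in zip(u_new, u)))
        u = u_new
        if change < tol:  # design point has stopped moving
            break
    return math.sqrt(sum(ui * ui for ui in u)), u


# Hypothetical statistics: x[0] is resistance R, x[1] is load L.
MEANS, STDS = [2.0, 1.0], [0.3, 0.3]
beta_difference, mpp_difference = hlrf_beta(lambda x: x[0] - x[1], MEANS, STDS)
beta_ratio, mpp_ratio = hlrf_beta(lambda x: x[0] / x[1] - 1.0, MEANS, STDS)
```

Because the linearisation point moves onto the failure surface, the difference form and the ratio form of the same limit state converge to the same reliability index here.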

The HL-RF method also originates from the MVFOSM method. In the MVFOSM method, the main variables are the means and standard deviations; the distributions are not needed. A Taylor series expansion about the mean value is used to linearise the limit state function. Using the means and standard deviations, and considering the variables as independently distributed, the approximate limit state function at the mean value is given by Eq. (3.1).

where \( {\mu}_x={\left({\mu}_{x_1},{\mu}_{x_2},\dots, {\mu}_{x_n}\right)}^T \) and \( \nabla g\left({\mu}_x\right) \) is the gradient of g evaluated at \( {\mu}_x \), as shown in Eq. (3.2).

The mean value, \( {\mu}_{\overset{\sim }{g}} \), of the approximate limit state function, \( \overset{\sim }{g}(X) \), is shown in Eq. (3.3).

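where \( \mathrm{Var}\left[g\left({\mu}_x\right)\right] \) and \( \mathrm{Var}\left[\nabla g\left({\mu}_x\right)\right] \) are zero, since both quantities are evaluated at the fixed mean point. Hence, the standard deviation, \( {\sigma}_{\overset{\sim }{g}} \), of the limit state function \( \overset{\sim }{g}(X) \) is shown in Eq. (3.4).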

Thus, the reliability index, β, is given by Eq. (3.5),
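Equations (3.1) to (3.5) are not reproduced in this version of the text. A reconstruction consistent with the surrounding definitions, assuming the standard first-order Taylor expansion about the mean with independent variables (as presented in texts such as Choi et al. 2007), would read:

```latex
\begin{align}
\tilde{g}(X) &\approx g(\mu_x) + \nabla g(\mu_x)^T \left( X - \mu_x \right) \tag{3.1} \\
\nabla g(\mu_x) &= \left\{ \frac{\partial g(\mu_x)}{\partial x_1}, \frac{\partial g(\mu_x)}{\partial x_2}, \ldots, \frac{\partial g(\mu_x)}{\partial x_n} \right\}^T \tag{3.2} \\
\mu_{\tilde{g}} &\approx E\left[ g(\mu_x) \right] = g(\mu_x) \tag{3.3} \\
\sigma_{\tilde{g}} &= \sqrt{\operatorname{Var}\left[ \tilde{g}(X) \right]} = \left[ \sum_{i=1}^{n} \left( \frac{\partial g(\mu_x)}{\partial x_i} \right)^2 \sigma_{x_i}^2 \right]^{1/2} \tag{3.4} \\
\beta &= \frac{\mu_{\tilde{g}}}{\sigma_{\tilde{g}}} \tag{3.5}
\end{align}
```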

The formulation for reliability index above is similar to the linear limit state function where reliability index is defined geometrically as the distance from the failure surface depicting mean margin of safety. Typically, considering R and L as the independent (noncorrelated) and normally distributed resistance and load variables respectively, with central tendency, i.e. means, μR and μL, and standard deviations (dispersion about the mean), σR and σL, reliability index, β, is defined as in Eq. (3.6). Meanwhile, the relationship between reliability index and probability of failure, Pf, is shown in Eq. (3.7) (Choi et al. 2007).

where Φ() is the standard normal cumulative distribution function (CDF), μG is mean of the limit state function and σG is the standard deviation of limit state function. Here, the correlation coefficient between R and L is zero, i.e. non correlated variables. If the variables are correlated, the standard deviation of the limit state function would be as in Eq. (3.8).
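Equations (3.6) to (3.8) are likewise not reproduced here. Assuming the linear limit state G = R − L, a reconstruction consistent with the definitions above is given below; the symbol \( \rho_{RL} \) for the correlation coefficient between R and L is introduced for illustration.

```latex
\begin{align}
\beta &= \frac{\mu_G}{\sigma_G} = \frac{\mu_R - \mu_L}{\sqrt{\sigma_R^2 + \sigma_L^2}} \tag{3.6} \\
P_f &= \Phi(-\beta) \tag{3.7} \\
\sigma_G &= \sqrt{\sigma_R^2 + \sigma_L^2 - 2 \rho_{RL} \sigma_R \sigma_L} \tag{3.8}
\end{align}
```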

In some instances, time-independent joint probability density functions have to be considered in probabilistic models for reliability analysis. For that purpose, in simplistic manner, the probability of failure, Pf, can be written as in Eq. (3.9).
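Equation (3.9) is not reproduced in this version of the text. Based on the definitions that follow, it would take the usual form of a multiple integral of the joint density over the failure region:

```latex
\begin{equation}
P_f = P\left[ g(X) < 0 \right] = \int \cdots \int_{g(X) < 0} f_x(x_1, x_2, \ldots, x_n) \, dx_1 \, dx_2 \cdots dx_n \tag{3.9}
\end{equation}
```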

where fx(x1, x2, …, xn) is the joint probability density function of the basic random variables x1, x2, …, xn and g() < 0 is the failure region. Direct integration of the above is complicated; hence, moment-based approximate methods such as FORM are used to evaluate the probability of failure. Typically, FORM is adopted in cases where the limit state function is linear, where the load and resistance variables are uncorrelated and normal, or where the nonlinear limit state function is represented by a first-order (linear) approximation with equivalent normal variables.

In cases of high nonlinearity in the limit state function, approximation techniques above contain drawbacks in terms of the accuracy of the reliability index. Thus, linear mapping of basic variables into a set of normalised and independent variables is adopted. This approach utilises the design point, i.e. the MPP as the approximation of the limit state function replacing the mean and variance values. The standard normalised random variables for resistance and load are given in Eq. (3.10):

where μR and μL are mean values of random variables of resistance and load respectively, and σR and σL are standard deviation of random variables of resistance and load respectively.

The transformation of the limit state surface g(R, L) from original coordinate system into standard normal coordinate system \( \left(\hat{R},\hat{L}\right) \) is given in Eq. (3.11).
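Equations (3.10) and (3.11) are not reproduced in this version of the text. A reconstruction consistent with the definitions above, assuming the limit state g(R, L) = R − L, is:

```latex
\begin{align}
\hat{R} &= \frac{R - \mu_R}{\sigma_R}, \qquad \hat{L} = \frac{L - \mu_L}{\sigma_L} \tag{3.10} \\
\hat{g}\left( \hat{R}, \hat{L} \right) &= \sigma_R \hat{R} - \sigma_L \hat{L} + \left( \mu_R - \mu_L \right) = 0 \tag{3.11}
\end{align}
```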

In geometrical form, the shortest distance from the origin of the \( \left(\hat{R},\hat{L}\right) \) standard normal coordinate system to the failure surface \( \hat{g}\left(\hat{R},\hat{L}\right) = 0 \) is equal to the reliability index, \( \beta =\hat{O}{P}^{\ast }=\left({\mu}_R-{\mu}_L\right)/\sqrt{\sigma_R^2+{\sigma}_L^2} \), as shown in Figure 5. Here, the point \( {P}^{\ast } \) is the MPP.

Geometrical illustration of reliability index (Choi et al. 2007)
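The geometric definition can be checked numerically. The sketch below, which assumes the linear limit state G = R − L with normally distributed variables, computes the reliability index, the corresponding probability of failure from Pf = Φ(−β), and the coordinates of the MPP in the standardised space. The numerical values and function names are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def reliability_index(mu_r: float, sigma_r: float,
                      mu_l: float, sigma_l: float, rho: float = 0.0) -> float:
    """beta = mu_G / sigma_G for G = R - L; rho is the R-L correlation."""
    sigma_g = math.sqrt(sigma_r**2 + sigma_l**2 - 2.0 * rho * sigma_r * sigma_l)
    return (mu_r - mu_l) / sigma_g


def failure_probability(beta: float) -> float:
    """Pf = Phi(-beta), with Phi the standard normal CDF."""
    return NormalDist().cdf(-beta)


def most_probable_point(mu_r: float, sigma_r: float,
                        mu_l: float, sigma_l: float) -> Tuple[float, float]:
    """MPP in standardised coordinates for uncorrelated R and L."""
    s = math.hypot(sigma_r, sigma_l)
    beta = (mu_r - mu_l) / s
    # Closest point on the line sigma_r*r - sigma_l*l + (mu_r - mu_l) = 0.
    return (-beta * sigma_r / s, beta * sigma_l / s)


# Hypothetical normalised statistics.
MU_R, SIGMA_R, MU_L, SIGMA_L = 2.0, 0.3, 1.0, 0.3
beta = reliability_index(MU_R, SIGMA_R, MU_L, SIGMA_L)
pf = failure_probability(beta)
r_hat, l_hat = most_probable_point(MU_R, SIGMA_R, MU_L, SIGMA_L)
```

The MPP returned here lies on the transformed failure surface, and its distance from the origin equals the reliability index computed directly from the means and standard deviations.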

Typically for independent and normally distributed variables, the failure surface is a nonlinear function as given in Eq. (3.12)

Hence, the variables are transformed into standardised forms by adopting Eq. (3.13),

where μxi and σxi are the mean and standard deviation of xi respectively. The values of mean and standard deviation of standard normal distribution are 0 and 1 respectively. Thus, the reliability index is taken as the shortest distance from the origin to the failure surface as given in Eq. (3.14) and illustrated in Figure 6.
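Equations (3.12) to (3.14) are not reproduced in this version of the text. A reconstruction in line with the Hasofer-Lind formulation described here is given below, where \( u_i \) and the vector \( U \) denote the standardised variables (notation assumed for illustration):

```latex
\begin{align}
g(X) &= g(x_1, x_2, \ldots, x_n) = 0 \tag{3.12} \\
u_i &= \frac{x_i - \mu_{x_i}}{\sigma_{x_i}} \tag{3.13} \\
\beta &= \min_{U \,:\, g(U) = 0} \left( U^T U \right)^{1/2} \tag{3.14}
\end{align}
```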

Mapping of failure surface from X space to U space using HL method (Choi et al. 2007)

Each of the above methods for finding the reliability index amounts to solving a constrained optimisation problem in the standard normal U space. Several algorithms have been developed to perform this optimisation. Finite Element Reliability Using MATLAB (FERUM), an open source shell program originating from the University of California, Berkeley (Bourinet 2010), is used by many to develop reliability algorithms. The shell program contains probability density function resources which enable random variables to be transformed from their original physical space (X space) to an equivalent standard normal space (U space). It eases the iteration/optimisation process of transforming the random variables and searching for the design point, i.e. the MPP, until convergence is reached and the reliability indices are calculated. In analysing time-independent joint probability density functions, the joint density is replaced with its Nataf counterpart, which is specified by the marginal distributions and a Gaussian correlation structure among the random variables. This in turn gives a library rich in probability density models.

3.1 Component Reliability Analysis

In offshore jacket platform reassessment, violation of the limit state function occurs when a structure exceeds a particular limit state; hence, the jacket is unable to perform as desired. The limit state of main concern is the ultimate limit state, which relates to collapse of the structure or failure of a component. Jacket platforms typically have high redundancy and numerous different load paths, such that failure of one member or component is unlikely to lead to catastrophic structural collapse. Yet, in minimally braced structures and/or for critical members (leg members especially), if a component is damaged or the platform experiences extreme loads, there may not be sufficient alternative load paths to redistribute the loads. Hence, for component reliability, member internal stresses are obtained from structural analysis.

A handful of studies have been reported on component reliability of jacket platforms (Baker 1998; Karamchandani and Cornell 1992; Shabakhty 2004; Chin 2005; Kolios 2010; Lee 2012; Morandi et al. 2015). The aim of most of the works was to evaluate the stresses in each component and compare them against the code-determined functions. Most works used the WSD code, as it generally produces more conservative values than the limit state-based codes. It produces less conservative design values, however, when the stresses due to environmental loading are significantly higher than those due to gravitational loading (Zafarullah 2013). The magnitude of the stresses and the corresponding strengths determine the failure probability.

Typically, the material yield strength and dimensions of tubular members govern the strength characteristics while the gravitational and environmental loads control the load characteristics of tubular members. In a jacket platform member stress evaluation, typically the gravity load dominates the leg members while the environmental load dominates the brace members (Frieze et al. 1997). The gravitational load consists of the self-weight of the platform and its appurtenances as the dead load and live loads. As for the strength, the yield strength uncertainty dominates the failure probabilities of tubular legs and brace members. Axial tension or compression and bending stresses due to yielding of material (from local or global buckling) and hydrostatic stresses are the common types of stresses found affecting any members.

Typical jacket platform tubular members mostly experience combined compression and bending stresses. The resistance model for tubular members consists of member geometry variables, such as diameter and wall thickness, and material properties, such as the yield strength (Zafarullah 2013). The adopted acceptance criterion is the nonviolation of the code-prescribed limit state functions. Following that, the failure probability of each component is determined. The statistical parameters from a recent local study are given in Table 2. The study was conducted for the purpose of establishing environmental load factors for the regional annex of the ISO limit state code calibration.

In modelling the load and resistance uncertainty for component reliability, the approach adopted for the ISO code calibration has been widely used. The uncertainty coefficients depend on the statistical parameters of the basic variables and the applied stresses. Their distributions also need to be identified (Zafarullah 2013). The typical model uncertainty (i.e. bias) coefficient definition is shown by Eq. (4).

In performing component reliability analysis, the load model consists of gravitational and environmental loads. For the gravitational load, the statistical values are obtained from the operator, based on the available structural model, design reports and drawings. The statistical parameters for the environmental load model are determined from platform-specific metocean data obtained from the operator. These data are then analysed statistically using the type 2 Weibull CDF, adopted from the literature. For the resistance model, the typical basic variables for the resistance of a jacket platform, such as member geometry and material properties, are required. Recently, a study on extensive data from a leading local fabrication yard has established the resistance model and resistance model uncertainty values to be used locally for component reliability (Kurian et al. 2013b). In a separate work, the load model uncertainty values have been established (Zafarullah 2013).

3.2 System Reliability Analysis

Different than component reliability, system reliability manages the uncertainties affecting the overall reliability of a structural system. System reliability of jacket platforms has been studied by many researchers with multiple objectives (Bea 1974; Guenard et al. 1984; Cornell 1987; De 1990; Karamchandani 1990; Tromans and van de Graaf 1994; Onoufriou and Forbes 2001; Chin 2005; Ersdal 2005; Melchers 2005; Kolios 2010).

The analysis is commonly utilised for reassessment, code calibration and optimisation purposes. There are few studies reported on component to system reliability using techniques such as Branch and Bound method and enumeration method.

Offshore jacket platforms are generally comprising of highly redundant structural members; hence, failure of any single member, in principle, would not cause the failure of the overall structure. The reserve capacity between the first member failure to the overall structural failure is, generally, significant (De 1990) except for structures with minimally braced members or having critical members. In structural reliability, redundancy is often defined as the conditional probability of system failure, given the first failure of any member. Meanwhile, the ability of a structure to survive the extreme load demand in different damaged conditions is defined as robustness (Cornell 1987). Robustness and redundancy are inherent in any offshore jacket platforms due to multiple design demand such as loading conditions during load-out, transportation and installation. These provided system effects which bring inherent reserve in the strength of typical offshore jacket platforms.

Guenard et al. (1984) studied the application of developed reliability methods in the early 1980s on system reliability of offshore platforms under extreme metocean condition. Similar study has been conducted elsewhere (Nordal et al. 1987). Improvements were made to the available methodology by including more realistic behaviour of softening/hardening effects instead of plainly assuming strain independent post-limit behaviour of the tubular members (Gierlinski et al. 1993). Later, new methods were developed by applying more realistic modelling of nonlinear behaviour on truss and frame type of structures. Member failure sequence leading to system failure by analysing the union of intersections of member failure events to obtain structural failure event was developed utilizing Branch and Bound method and FORM (Karamchandani 1990). These were referred by many to be adopted with other possible simpler techniques to identify system reliability utilizing component reliability analysis of jacket platforms (Kolios 2010). Many also have studied comprehensively on the available reliability approaches for integrity management of ageing jacket platforms (Onoufriou 1999; Onoufriou and Forbes 2001; Onoufriou and Frangopol 2002). System reliability in the form of SSRA is adopted (Fadly 2011).

Generally, from the nonlinear collapse analysis, RSRs are obtained for 8 or 12 directions of a platform, depending on its number of legs. That is followed by system reliability analysis to evaluate the system reliability indices. RSR helps to demonstrate that the structure has sufficient capacity and stability against overloading (ISO 2007). Reliability indices obtained from system-level analysis are compared with target reliability indices from the codes. The minimum requirement for probability of failure outside of the GOM region is \( 1\times {10}^{-4} \) for high-consequence platforms and \( 1\times {10}^{-3} \) for low-consequence platforms, while the minimum value of RSR is 1.60 for high-consequence platforms and 0.80 for low-consequence platforms (API 2007).
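Comparing a computed reliability index with a probability-of-failure limit requires converting between the two. A minimal sketch follows, assuming the standard normal relationship β = −Φ⁻¹(Pf); the function and dictionary names are hypothetical.

```python
from statistics import NormalDist


def beta_from_pf(pf: float) -> float:
    """Reliability index equivalent to a probability of failure."""
    return -NormalDist().inv_cdf(pf)


def pf_from_beta(beta: float) -> float:
    """Probability of failure equivalent to a reliability index."""
    return NormalDist().cdf(-beta)


# Probability-of-failure limits quoted in the text (outside the GOM region).
TARGET_PF = {"high-consequence": 1e-4, "low-consequence": 1e-3}
TARGET_BETA = {category: beta_from_pf(pf) for category, pf in TARGET_PF.items()}


def meets_target(beta_computed: float, category: str) -> bool:
    """True if a computed reliability index satisfies the target."""
    return beta_computed >= TARGET_BETA[category]
```

On this basis the two limits correspond to reliability indices of roughly 3.7 and 3.1 respectively.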

According to PTS (2010), the minimum value for RSR is 1.50 for high-consequence platforms and 1.32 for low-consequence platforms. These limits are conservative compared with the API prescribed limits. They were established from the risk assessment and acceptable consequence of failure (Ayob et al. 2014a, b). For further detail on the definition of the platform consequences, reference should be made to the platform exposure categories in the appropriate codes. The details of the procedure and methodology for the development of acceptance criteria and target reliability for an example region, the Arabian Gulf, may be found in Amer (2010).

In a recent work on the development of system reliability analysis procedure, Ersdal (2005) has provided a simple probabilistic model function between RSR and reliability indices. The system limit state function, G, is given in Eq. (5).

where the Load is the design environmental load for 100-year extreme condition that consists of wave height, current velocity, wind speed or combination of any of these. In the above function, a simplified expression of the wave load model (F) following the earlier work by Heideman (1980) is presented. The expression, as given in Eq. (6), utilises wave height (H) and current velocity (u) as the significant environmental parameters.

Here, C1, C2 and C3 are the curve fitted parameters based on the calculated platform specific loadings. Similar expressions also have been presented elsewhere which will be further explained later.
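Equations (5) and (6) are not reproduced in this version of the text. The sketch below assumes the power-law form commonly attributed to Heideman, F = C1 (H + C2 u)^C3, for the load model. As a further assumption, it writes the limit state as a capacity, taken as RSR times the 100-year design load, minus the load for a given sea state; this may differ in detail from the expression in Ersdal (2005). All coefficient values and names are hypothetical.

```python
def wave_load(wave_height: float, current_velocity: float,
              c1: float, c2: float, c3: float) -> float:
    """Simplified load model, F = c1 * (H + c2 * u) ** c3 (assumed form)."""
    return c1 * (wave_height + c2 * current_velocity) ** c3


def system_limit_state(wave_height: float, current_velocity: float,
                       rsr: float, design_load: float,
                       c1: float, c2: float, c3: float) -> float:
    """G = capacity - load, with capacity = RSR * design load (assumption)."""
    capacity = rsr * design_load
    return capacity - wave_load(wave_height, current_velocity, c1, c2, c3)


# Hypothetical curve-fit coefficients and 100-year design conditions.
C1, C2, C3 = 0.05, 3.0, 2.0
H100, U100 = 12.0, 1.0  # wave height (m) and current velocity (m/s)

DESIGN_LOAD = wave_load(H100, U100, C1, C2, C3)
margin_at_design = system_limit_state(H100, U100, 1.6, DESIGN_LOAD, C1, C2, C3)
```

In a reliability calculation the wave height (and, where relevant, the current velocity and the capacity) would be treated as random variables, and the probability that this margin becomes negative would be evaluated with a method such as FORM or Monte Carlo simulation.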

4 Uncertainty Analysis

Structural reliability analysis is based on probability theory and its treatment of the different uncertainties involved. Probability theory treats the likelihood of occurrence of an event and thereby quantifies the uncertainties of random events. Structural reliability analysis is sensitive to uncertainty modelling (Mark et al. 2001). In offshore jacket platform reassessment, probability theory is used to manage the risks arising from uncertainties in the design parameters, because offshore jacket platforms are placed in a random environment and have high failure consequences. Uncertainties are dealt with by treating the load and resistance parameters as random variables. Uncertainty modelling is the first important step in the reliability analysis of jacket platforms.

Load and resistance are random variables. Uncertainties due to randomness in applied loads, structural material resistances and errors in computer models are always present and must be considered in structural reliability computation. Load and resistance uncertainty analysis is carried out using the available basic information about the random load and resistance variables. Modelling uncertainties, meanwhile, are analysed using the physical models that predict the load effects and the structural responses. Structural analysis of offshore platforms is also subject to uncertainties. Strength evaluations are not exact, because the true values deviate from those of the idealised computer model (Baker 1998).

Uncertainty reflects a lack of information. The randomness is therefore typically represented by a probability density function (PDF), also called a frequency function, characterised by its mean (central tendency), standard deviation and lower and upper tail values. A PDF represents the relative frequency of occurrence of events for a random variable. Randomness in the load and structural resistance variables is identified through field data collection or a survey of the literature, and probability distribution functions are fitted to the data to generate statistical parameters such as the mean, standard deviation and coefficient of variation. Figure 7 shows a typical density function, in which the centre of the curve is the most probable point and the tail regions are the less probable points for the occurrence of an event. Interval information is used where the available data are incomplete or imperfect, and is defined within an interval of known lower and upper bounds (Choi et al. 2007).

Fig. 7 Probability density function and interval information (Choi et al. 2007)

Statistical uncertainty is considered in structural reliability analysis by assessing the mean, the variance, standard deviation or coefficient of variation (COV), and the probability distribution of the available random variables. Bias factors are used to represent modelling error in reliability analysis, based on the ratio between model predictions and actual test results. Bias is defined as the mean over the nominal value. Design parameter variation due to uncertainty can be modelled with the available probabilistic methods, provided reliable statistical parameters can be determined from statistical data. Several analytical and simulation methods are available to address the uncertainty in structural analysis using probability theory.
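The statistical parameters named above can be computed directly from a data sample. The following sketch uses the definitions given in the text (COV as standard deviation over mean, bias as mean over nominal value); the yield strength measurements and the nominal value are hypothetical and serve only as an example.

```python
from statistics import mean, stdev


def summarise(samples: list, nominal: float) -> dict:
    """Mean, sample standard deviation, COV and bias of a measured variable."""
    m = mean(samples)
    s = stdev(samples)              # sample (n - 1) standard deviation
    return {
        "mean": m,
        "std": s,
        "cov": s / m,               # coefficient of variation
        "bias": m / nominal,        # mean over nominal value
    }


# Hypothetical measured yield strengths (MPa) for a nominal 345 MPa steel.
yield_strength = [362.0, 371.5, 355.2, 380.1, 366.8, 359.4, 374.0, 368.3]
stats = summarise(yield_strength, nominal=345.0)
for name, value in stats.items():
    print(f"{name}: {value:.3f}")
```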

In structural reliability analysis, the commonly adopted probability distribution functions for random variables are the extreme, normal and lognormal types. Rare events such as extreme load variables require extreme-type distributions such as Weibull and Gumbel, whereas the normal and lognormal distributions are typically fitted to structural resistance variables in the literature. Wind load, which has an insignificant effect on the responses of offshore structures, is treated deterministically in most instances, except for very wind-sensitive structures.

In the system reliability load model computation, an uncertainty factor, α, is adopted to capture the wave loading uncertainty probabilistically (Dalane and Haver 1995; Ersdal 2005). The uncertainty factor is normally distributed with mean value 1.0 and COV 0.15. The type 2 Weibull distribution is mostly adopted in the literature for the environmental load model computation. The maximum wave height, Hmax, is typically used for the load model computation. Hmax is the design wave for a particular platform site for the 100-year extreme condition. This maximum load is the most critical variable that could occur during the entire service life of a platform. The ISO and API codes require the 100-year extreme condition of the wave parameter to be used for the design and assessment of jacket platforms.

The resistance model in the system reliability limit state function, meanwhile, is described as the platform-specific design loading multiplied by the platform RSR. Similar to the wave loading uncertainty factor, α, the resistance model uncertainty factor, β, is adopted from the literature. The uncertainty factor for the resistance model is normally distributed with mean value 1.0 and COV 0.10 (Efthymiou et al. 1997; Ersdal 2005).
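The load and resistance models described above can be combined in a crude Monte Carlo estimate of the failure probability. The sketch below is illustrative only. It assumes a limit state of the form G = β · RSR · F(H100) − α · F(H), a load model F = C1 (H + C2 u)^C3, and a two-parameter Weibull distribution for the annual maximum wave height; these forms, and every numerical value other than the means and COVs of the two uncertainty factors, are assumptions rather than values from the cited works.

```python
import math
import random


def wave_load(h, u, c1, c2, c3):
    """Assumed load model F = C1 * (H + C2 * u) ** C3."""
    return c1 * (h + c2 * u) ** c3


def simulate_pf(rsr, n_samples=200_000, seed=1):
    """Crude Monte Carlo estimate of P(G < 0) for the assumed limit state."""
    rng = random.Random(seed)
    scale, shape = 6.0, 2.5        # hypothetical Weibull parameters for Hmax (m)
    u = 1.0                        # hypothetical current velocity (m/s), held fixed
    coeffs = (0.05, 2.0, 2.0)      # hypothetical C1, C2, C3
    # 100-year wave height: annual exceedance probability of 1/100.
    h_100 = scale * math.log(100.0) ** (1.0 / shape)
    design_load = wave_load(h_100, u, *coeffs)
    failures = 0
    for _ in range(n_samples):
        alpha = rng.gauss(1.0, 0.15)     # load uncertainty factor (mean 1.0, COV 0.15)
        beta_r = rng.gauss(1.0, 0.10)    # resistance uncertainty factor (mean 1.0, COV 0.10)
        h = rng.weibullvariate(scale, shape)
        g = beta_r * rsr * design_load - alpha * wave_load(h, u, *coeffs)
        if g < 0.0:
            failures += 1
    return failures / n_samples


for rsr in (1.6, 2.0):
    print(f"RSR = {rsr}: estimated Pf = {simulate_pf(rsr):.2e}")
```

Crude sampling of this kind needs many samples when the failure probability is small, which is one motivation for the analytical and variance-reduction methods discussed in the literature.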

In the limit state code calibration exercise, researchers from many regions have undertaken load and material resistance uncertainty studies for the component reliability analysis. The randomness in material comes from geometry and properties such as diameter, thickness, length, yield strength and tensile strength. Zafarullah (2013) conducted an environmental load calibration study for Malaysian waters, collecting material data from material test reports and performing field measurements at a leading ISO-certified local fabrication yard. The collected data were used to compute the statistical parameters for the random resistance variables. Similarly, to model the loading uncertainty, the ISO code equations were used to obtain member internal stresses, and environmental load parameters randomly generated from sample platform data using the type 2 Weibull distribution function were used to obtain extrapolated return period values of the environmental parameters.

Zhang et al. (2010) studied several types of uncertainty models for the reliability analysis of an existing offshore jacket structure affected by marine corrosion. They compared the numerical efficiency of pure probabilistic, interval modelling, fuzzy and imprecise probability models. The models were used to find an upper bound for the failure probability due to the corrosion effect. Kolios (2010) developed a probabilistic component reliability analysis procedure in which stochastic variables selected arbitrarily from the literature were used to validate and compute the reliability indices.

5 Response Surface

This section presents the response surface method, which has been adopted by many to capture the inherent uncertainty in the load and resistance variables represented in the limit state function of reliability analysis. It is considered one of the nonintrusive stochastic approaches deployed in the domain of reliability analysis (BOMEL Ltd. 2002; Chin 2005; Kolios 2010). Other stochastic approaches include Importance Sampling, Latin Hypercube Sampling, Adaptive Response Surface and so on (Fjeld 1978; Olufsen et al. 1992; van de Graaf et al. 1996; Zhang et al. 2010; Mousavi and Gardoni 2014). In formulating limit state functions from the load and resistance models, the response surface method is helpful in generating the respective load and resistance coefficients, which are then used to produce response surface equations. These equations are later integrated into the limit state functions used in the reliability analyses. According to Shabakhty (2004), in offshore structure reliability analysis the uncertainty in random variables may have a significant effect on the response of the platform and therefore affect the probability of failure. Hence, the response surface is suitable for incorporating the random variables implicitly in the limit state function. The literature claims that the response surface method is useful in obtaining a parametric representation of environmental loads on jackets. The method evaluates the implicit limit states at a predefined number of points and fits a function to those points. This function is then used in reliability analysis (Petrauskas and Botelho 1994). The method has been used as an alternative to a physical transfer function, using random variables in explicit multivariate functions. It is claimed that the approach is able to reduce the number of structural analyses required for probabilistic analysis (Chin 2005).

The effective use of the response surface depends on the proper selection of sampling points (Shabakhty et al. 2003). In the method, the mean and standard deviation of the basic random variables are used to enhance the efficiency and accuracy of the simulations (Bucher and Bourgund 1990). A modified procedure based on a vector projection approach, reported to give better results, is given by Kim and Na (1997).
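One way to use the means and standard deviations in choosing sampling points is an axial design: one point at the mean values plus two points per variable, offset by a multiple of that variable's standard deviation. The sketch below follows this scheme as it is commonly described for Bucher and Bourgund (1990); the offset factor f and the example variables are assumptions made for illustration.

```python
def axial_design(means: list, stds: list, f: float = 3.0) -> list:
    """Return 2n + 1 sampling points: the mean point and mean +/- f*std per axis."""
    points = [list(means)]                    # centre point at the mean values
    for i, (m, s) in enumerate(zip(means, stds)):
        for sign in (1.0, -1.0):
            point = list(means)
            point[i] = m + sign * f * s       # move along one variable only
            points.append(point)
    return points


# Hypothetical basic variables: wave height (m) and current velocity (m/s).
means = [10.0, 1.0]
stds = [1.5, 0.2]
for point in axial_design(means, stds):
    print(point)
```

Each point would then be passed to a structural analysis, and the resulting responses used to fit the response surface coefficients.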

Many researchers agree that the response surface approximates the limit state functions well using simple and explicit polynomial functions of the random variables (Shabakhty 2004; Kolios and Brennan 2009). Their works give a detailed explanation of the selection and justification of the quadratic polynomial function. The coefficients of the functions are obtained by fitting the functions to multiple arbitrary member and system responses from the structural analyses conducted. Random variables are simulated using a simple algorithm, and the response surface function is fitted to the simulation results. Chin (2005) categorised the response surface into a two-level model, i.e. global and local responses, prior to computing structural reliability indices in component and system reliability analyses. This approach is able to relate the environmental parameters acting on the structural system, such as wave height and current velocity, to the member internal stresses. It has been adopted by many in performing response surface analysis to obtain the system and component responses from the applied environmental loading (Lebas et al. 1992; Karunakaran et al. 1993; Cassidy et al. 2001). Lebas et al. (1992) compared the results from the response surface with results from direct simulations and verified the results.

The global response surface relates the environmental load to the global responses of the structure. Wave height and associated period, current velocity and wind speed are among the typical environmental parameters. The structure base shear and overturning moment are the typical global responses. The local response surface relates the global responses to the local responses, namely member internal stresses such as the axial and bending stresses of each component. The general limit state functions for the global and local responses are given in Eqs. (7.1) to (7.4), respectively.

where BS = base shear and OTM = overturning moment

where G and g = global and local limit state functions respectively, BS = base shear, OTM = overturning moment and a, b and c are the response surface coefficients. For the internal stresses here, base shear (BS) is used arbitrarily.
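The two-level idea can be sketched with a pair of least-squares fits: a global surface from wave height to base shear, and a local surface from base shear to a member stress. Eqs. (7.1) to (7.4) are not reproduced here, so the quadratic form y = a x² + b x + c used below is an assumption based on the quadratic polynomials mentioned earlier, and all data values are hypothetical.

```python
def solve(a: list, b: list) -> list:
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][k] * x[k] for k in range(i + 1, n))) / m[i][i]
    return x


def fit_quadratic(xs: list, ys: list) -> tuple:
    """Least-squares coefficients (a, b, c) of y = a*x**2 + b*x + c."""
    rows = [[x * x, x, 1.0] for x in xs]
    # Normal equations: (X^T X) coeffs = X^T y.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(3)]
    return tuple(solve(xtx, xty))


def evaluate(coeffs: tuple, x: float) -> float:
    a, b, c = coeffs
    return a * x * x + b * x + c


# Hypothetical analysis results: wave height (m), base shear (MN), member stress (MPa).
wave_height = [4.0, 6.0, 8.0, 10.0, 12.0]
base_shear = [3.1, 6.4, 11.2, 17.5, 25.3]
axial_stress = [21.0, 44.0, 78.0, 121.0, 176.0]

global_surface = fit_quadratic(wave_height, base_shear)    # H -> BS
local_surface = fit_quadratic(base_shear, axial_stress)    # BS -> stress

bs = evaluate(global_surface, 9.0)
print(f"Predicted base shear at H = 9 m: {bs:.2f} MN")
print(f"Predicted member stress: {evaluate(local_surface, bs):.1f} MPa")
```

Chaining the two surfaces in this way is what allows an environmental parameter to be related to a member internal stress without rerunning the structural analysis.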

In the development of the LRFD limit state codes for API, the response surface method was used to fit the response surface coefficients from multiple simulations of the wave height random variable (Moses 1987). Equation (8) was suggested to obtain the environmental load effect from the multiple wave height simulations.

where W = environmental load, H = wave height and A and α are the response surface coefficients.
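The definitions above suggest a power-law relation between load and wave height. Assuming the form W = A · H^α (Eq. (8) is not reproduced here, so this form is an assumption), the two coefficients can be estimated by a straight-line fit in log-log space, as in the sketch below. The sample data are hypothetical.

```python
import math


def fit_power_law(heights: list, loads: list) -> tuple:
    """Estimate (A, alpha) in W = A * H**alpha by linear regression of ln W on ln H."""
    xs = [math.log(h) for h in heights]
    ys = [math.log(w) for w in loads]
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    alpha = sxy / sxx                         # slope in log-log space
    big_a = math.exp(y_bar - alpha * x_bar)   # intercept transformed back
    return big_a, alpha


# Hypothetical simulated wave heights (m) and resulting loads (MN).
heights = [4.0, 6.0, 8.0, 10.0, 12.0]
loads = [2.9, 6.8, 12.4, 19.9, 29.5]

A, alpha = fit_power_law(heights, loads)
print(f"A = {A:.4f}, alpha = {alpha:.3f}")
```

Note that fitting in log space minimises relative rather than absolute errors, which differs from a direct nonlinear least-squares fit.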

A similar approach has been adopted in the ISO limit state code calibration study for the North Sea and in a typical jacket structure reliability analysis (Efthymiou et al. 1997). Owing to its simplicity, a similar approach has also been adopted locally in the environmental load factor calibration exercise for the adaptation of the limit state code (Cossa 2012). Building on the study by Heideman (1980), Petrauskas and Botelho (1994) proposed including an additional environmental parameter, i.e. current velocity, together with the wave height. They believed that the addition of current would better represent the inherent uncertainty in the environmental loading criteria. The suggested response surface function is as in Eq. (6).

Tromans and Vanderschuren (1995) suggested a somewhat more complicated response surface analysis method. They studied the extreme base shear and mud-line overturning moment responses due to multiple environmental criteria. Hence, the function is complicated and contains many unknown coefficients to be fitted from response surface analysis.

If any additional environmental parameter is to be included in the response surface function, the approach by Cossa (2012), as in Eq. (9), could be adopted.

where W = environmental load, H = wave height, Vc = current velocity, Vw = wind speed and a, b, c, d, e, f and g are the response surface coefficients obtained from the nonlinear least mean square fitting method with a 95% confidence level and an R² value above 0.90.
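The goodness-of-fit criterion mentioned above can be checked with the usual coefficient of determination. The sketch below is independent of the particular response surface form; the 0.90 threshold is the one quoted in the text, and the observed and predicted loads are hypothetical.

```python
def r_squared(observed: list, predicted: list) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def acceptable_fit(observed: list, predicted: list, threshold: float = 0.90) -> bool:
    """True if the fitted surface exceeds the R-squared threshold."""
    return r_squared(observed, predicted) > threshold


# Hypothetical loads from structural analysis and from a fitted response surface (MN).
observed = [2.9, 6.8, 12.4, 19.9, 29.5]
predicted = [3.1, 6.6, 12.1, 20.3, 29.2]
print(f"R^2 = {r_squared(observed, predicted):.4f}")
print("Fit accepted:", acceptable_fit(observed, predicted))
```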

Kolios (2010) gives a detailed review of the available stochastic methods. He advocated stochastic expansion tools such as the Karhunen-Loeve (K-L) expansion and polynomial chaos expansion (PCE) as an efficient approach to treating uncertainties through series of polynomials in computing reliability. However, for the analysis of complex systems such as jacket platforms, simulation techniques such as the stochastic response surface method (SRSM) are much preferred. SRSM approximates the limit state function using simple and explicit mathematical functions of the relevant random variables. The functions are typically simple polynomials, with coefficients obtained by fitting the response surface function to a number of sample points from the computed responses of the system and its components. Later, algorithms that combine FORM with linear and quadratic response surface approximation techniques were developed to estimate the reliability indices.
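To illustrate how FORM operates on an explicit response surface, the sketch below applies the classical Hasofer-Lind/Rackwitz-Fiessler (HL-RF) iteration to a limit state expressed in standard normal variables. This is a generic textbook scheme, not necessarily the algorithm used in the works cited above, and the linear response surface and its parameters are hypothetical.

```python
import math


def form_beta(g, n: int, tol: float = 1e-6, max_iter: int = 100, h: float = 1e-6):
    """HL-RF iteration in standard normal space; returns (beta, design point).

    g takes a list of n standard normal variables and returns the limit state
    value (failure when g < 0). Gradients use forward finite differences.
    The returned beta is the distance to the design point, which equals the
    reliability index when g is positive at the origin.
    """
    u = [0.0] * n
    for _ in range(max_iter):
        g0 = g(u)
        grad = []
        for i in range(n):
            shifted = u[:]
            shifted[i] += h
            grad.append((g(shifted) - g0) / h)
        norm2 = sum(d * d for d in grad)
        if norm2 == 0.0:
            raise ValueError("zero gradient; HL-RF step undefined")
        scale = (sum(d * ui for d, ui in zip(grad, u)) - g0) / norm2
        u_new = [scale * d for d in grad]
        converged = math.dist(u_new, u) < tol
        u = u_new
        if converged:
            break
    return math.sqrt(sum(ui * ui for ui in u)), u


# Hypothetical linear response surface: resistance R ~ N(1.6, 0.16), load S ~ N(1.0, 0.15).
def g_linear(u):
    resistance = 1.6 + 0.16 * u[0]
    load = 1.0 + 0.15 * u[1]
    return resistance - load


beta, design_point = form_beta(g_linear, n=2)
print(f"beta = {beta:.3f}")
```

For a linear limit state with normal variables the iteration converges in one or two steps and reproduces the closed-form index (μR − μS)/√(σR² + σS²); for a quadratic response surface the same loop is repeated until the design point stabilises.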

6 Failure Path

In some instances, for statically determinate structures, the reliability of individual members represents the reliability of the whole structure, as the failure of one member will lead to failure of the structure. However, this is not the case for highly redundant structures. The failure of one or a few members would not necessarily result in the collapse of the structural system, as the system will contain numerous failure paths. Because of the random nature of the loads and resistances, some failure paths are more likely to occur than others. The probabilities of occurrence of those failure modes, and the methods of determining them, are the basis for system reliability through failure path analysis.
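The distinction between the two cases can be sketched numerically. For a statically determinate structure, any member failure is a system failure (a series system); for a redundant structure, every member along a failure path must fail, and the system fails if any one path completes. The sketch below uses the simple series-system bounds (fully dependent and independent events) and treats the probabilities along a path as conditional probabilities; all values are hypothetical.

```python
import math


def series_bounds(event_pfs: list) -> tuple:
    """Simple bounds on the probability that at least one event occurs.

    Lower bound: fully dependent events; upper bound: independent events.
    """
    lower = max(event_pfs)
    upper = 1.0 - math.prod(1.0 - p for p in event_pfs)
    return lower, upper


def path_probability(conditional_pfs: list) -> float:
    """Probability of completing a failure path, given conditional member failures."""
    return math.prod(conditional_pfs)


# Statically determinate case: hypothetical member failure probabilities.
members = [1e-3, 5e-4, 2e-3]
print("Series system bounds:", series_bounds(members))

# Redundant case: hypothetical failure paths, each a sequence of conditional
# member failure probabilities (first failure, then next given the previous, ...).
paths = [
    [1e-3, 0.20, 0.50],
    [5e-4, 0.30, 0.60],
    [2e-3, 0.05, 0.40],
]
path_pfs = [path_probability(p) for p in paths]
print("Path probabilities:", path_pfs)
print("System bounds from paths:", series_bounds(path_pfs))
```

In this hypothetical example the system failure probability from the failure paths is well below that of the weakest individual member, which reflects the benefit of redundancy described above.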

6.1 System Reliability