Abstract

Polynomial approximation of smooth functions is becoming increasingly important in fields like numerical analysis and scientific computing. These approximations are vital in models that rely on spectral methods. To reduce the memory costs for large dimensional problems, various methods to provide data-sparse representations have been proposed, including methods based on singular value decomposition, adaptive cross approximation, and matrices with hierarchical low-rank structures, to mention a few. This work presents implementation details on the polynomial approximation of univariate smooth functions through the Polynomial1 class, and of bivariate smooth functions by low-rank matrix representation via the Polynomial2 class. These approaches are explained within Tau Toolbox, a mathematical software library for solving integro-differential problems by the spectral Tau method.

1 Introduction

The importance of approximating smooth functions by polynomials lies in the simplicity, computational efficiency, analytical tractability, and applicability to various mathematical and scientific problems. It provides a versatile tool for numerical computation, modeling, analysis, and understanding complex phenomena. These approximations are vital in models that rely on spectral methods [1].

To reduce the memory costs for large dimensional problems, various methods to provide data-sparse representations have been proposed, including methods based on singular value decomposition (SVD) [2], hierarchical matrices [3] and related techniques such as adaptive cross approximation (ACA) [4] and hybrid cross approximation (HCA) [5], rank-revealing QR factorization, and randomized algorithms like the randomized SVD. All the cited methods are low-rank approximation methods, meaning they approximate the initial matrix by one of low rank in the outer product form.

Choosing among such methods is not straightforward and most often involves a trade-off between efficiency and accuracy. It can be proved that methods based on the SVD are the most accurate, but their computational costs are usually high [2], while the others, although less accurate, are computationally much more efficient [4]. The most convenient approach is therefore problem dependent.

In this work we show how to approximate smooth functions of two variables defined on rectangles by a polynomial using low-rank matrix representation, in line with [6]. The methodology is explained within the context of the Tau Toolbox software library, with a focus on its accuracy and efficiency. Furthermore, we describe the implementation specifics of the Polynomial2 class, which handles all the required operations. The Polynomial2 class implements a low-rank approximation as an outer product form of univariate polynomial representations. These are implemented in the Polynomial1 class, which is detailed first. For numerical stability reasons, the Tau Toolbox library implements the polynomials in terms of several orthogonal polynomial families.

The remainder of this paper is organized as follows. In Sect. 2 we present the Polynomial1 class, exploring the use of the whole family of classical polynomials. The functionality, efficiency and ease of working with this class will be highlighted. The low-rank approximation implemented in Tau Toolbox for bivariate smooth functions will be addressed in Sect. 3, together with several illustrations. Finally, Sect. 4 contains our conclusions.

2 Polynomial Approximation for Univariate Functions

The need for the different polynomial classes (data types) came from the Tau Toolbox project, where these polynomials are used as approximations of smooth, or at least continuous, functions that are solutions of integro-differential problems.

All the code was migrated to a module called polynomial, which can be used outside of Tau Toolbox as a standalone package. The development of polynomial is thus mainly driven by the needs of the Tau Toolbox project, while the package remains self-contained.

Just like Tau Toolbox, polynomial is a mathematical software library available in both Python and MATLAB (R)/GNU Octave, which uses an object-oriented implementation to represent an approximation to a real function as a finite series of orthogonal polynomials. For functions of one variable we use the class Polynomial1 to approximate the function

\[f(x)\approx \sum _{i=0}^{n}a_{i}P_{i}(x),\]

where \([a_{0},a_{1},\ldots ,a_{n}]\) are the coefficients associated with the elements in the polynomial basis \([P_{0},P_{1},\ldots ,P_{n}]\). In terms of widely available software packages that implement operations on polynomials, there are essentially two options: one in MATLAB and another in Python. MATLAB has a set of functions that only applies to the standard power basis (functions polyval, polyfit and others that have the poly prefix). In Python, using a more object-oriented approach, NumPy [7] has the module numpy.polynomial that implements several orthogonal polynomial families.

By comparison, the implementation in the polynomial package is more general, since it handles any family of polynomials that follows a three-term recurrence relation. It extends numpy.polynomial to other orthogonal families not available there, while also supporting vector-valued and/or piecewise polynomials.
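For readers unfamiliar with numpy.polynomial, the following minimal sketch shows the kind of series object it offers. It uses NumPy only and says nothing about the API of the polynomial package; the coefficient values are arbitrary.

```python
import numpy as np
from numpy.polynomial import Chebyshev, Legendre

# Coefficients [a_0, a_1, a_2] interpreted in two different bases.
coeffs = [1.0, 2.0, 3.0]
p_cheb = Chebyshev(coeffs)   # 1*T_0 + 2*T_1 + 3*T_2
p_leg = Legendre(coeffs)     # 1*P_0 + 2*P_1 + 3*P_2

x = 0.5
# T_2(x) = 2x^2 - 1 and P_2(x) = (3x^2 - 1)/2, so the same coefficients
# describe different functions depending on the basis.
val_cheb = p_cheb(x)
val_leg = p_leg(x)

# Converting between bases keeps the function and changes the coefficients.
p_cheb_as_leg = p_cheb.convert(kind=Legendre)
```

The basis is part of the object, so evaluation and conversion do not need to be told which family the coefficients refer to.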

The polynomial package implements several classes. In this article, the focus is on Polynomial1, Polynomial2, and the related classes required for their design.

The implementation of the Polynomial1 class is done in two steps:

- The polynomial.bases.T3Basis abstract base class and corresponding derived classes. These classes implement the low-level details of the polynomial bases defined by a three-term recurrence relation. Almost all those bases are orthogonal.
- The polynomial.Polynomial1 base class. This class implements the details related to the approximation of functions of one variable in terms of polynomials.

The reason for separating the implementation into two parts is that all the code that deals with an orthogonal polynomial basis, of a single variable and a given orthogonality domain, is kept in a single place. The polynomial.Polynomial1 class then uses these classes to implement an orthogonal polynomial class with the methods that are common to all orthogonal bases.

In this context we say that polynomial.Polynomial1 has a polynomial.bases.T3Basis. In object-oriented language, by using a has-a instead of an is-a relation, the relation between both classes is a composition instead of an inheritance. The advantage of this design is that it allows us to add other bases without changing any code in the polynomial class.
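The has-a relation can be pictured with a deliberately small sketch. The class names MonomialBasis and Poly, and the method vander, are hypothetical and chosen only for illustration; they are not the classes of the polynomial package.

```python
import numpy as np


class MonomialBasis:
    """Hypothetical stand-in for a basis class (not polynomial.bases.T3Basis)."""

    name = "Monomial"
    domain = (-1.0, 1.0)

    def vander(self, x, degree):
        # Columns are x^0, x^1, ..., x^degree evaluated at the points x.
        return np.vander(np.atleast_1d(x), degree + 1, increasing=True)


class Poly:
    """Hypothetical polynomial that *has a* basis instead of inheriting from one."""

    def __init__(self, coeffs, basis):
        self.coeffs = np.asarray(coeffs, dtype=float)  # owned by the polynomial
        self.basis = basis                             # composition: "has a"

    @property
    def degree(self):
        return len(self.coeffs) - 1

    def __call__(self, x):
        # All basis-specific work is delegated to the basis object.
        return self.basis.vander(x, self.degree) @ self.coeffs


p = Poly([1.0, 0.0, 2.0], MonomialBasis())  # 1 + 2 x^2
values = p([0.0, 1.0, 2.0])
```

Swapping in another basis object with the same vander method would change the meaning of the coefficients without touching Poly, which is the point of the composition.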

If this distinction seems arbitrary for polynomials of one variable, it becomes very convenient when we extend the classes to deal with vector-valued polynomials and/or piecewise polynomials.

This distinction also allows the degree and the coefficients to be part of Polynomial1 but not of T3Basis.

In the case of a vector-valued polynomial, we consider a function \(f\left( x\right) =\left( f_{1}\left( x\right) ,\dots ,f_{m}\left( x\right) \right) .\) For implementation simplicity and efficiency reasons, we consider that the degree of P is given by the maximum of the degrees of the vectorial components. We have then that

\[f_{i}\left( x\right) =\sum _{k=0}^{n}a_{ki}P_{k}\left( x\right) ,\]

where \(P_{k}\left( x\right) \) are the basis polynomials of a given orthogonal polynomial family, and the coefficients of the vector-valued polynomial are given by the matrix \([a_{ki}].\)

2.1 The Three-Term Recurrence Relation Class

The implementation of the polynomial bases follows an object-oriented approach based on an abstract base class, i.e., a class that has no concrete realization, which implements all the operations in a general way for all polynomial families that follow a three-term recurrence relation. For code management reasons all the basis classes are placed in polynomial.bases. The abstract base class that is the root for all the other bases is T3Basis, a name that abbreviates three-term (recurrence relation).

The concrete classes then inherit from the base class (they are called derived classes in object-oriented language) and implement only those methods for which there are more efficient formulas.

These classes aim to implement numerically well-behaved methods that minimize the number of operations and, thus, are numerically stable even for high-order approximations. Almost all the implementation is closely based on [8]. From there, we use the next propositions without proof.

In what follows, \({{{\mathcal {P}}}}=\left( P_{0},P_{1},\dots \right) \) is the orthogonal polynomial sequence defined by an inner product

\[\langle P_{i},P_{j}\rangle =\int _{a}^{b}P_{i}(x)P_{j}(x)w(x)\,\text {d}x=\Vert P_{i}\Vert ^{2}\delta _{ij}, \tag{1}\]

where \(\delta _{ij}\) is the Kronecker symbol and w is the weight function associated with the orthogonal polynomial sequence. \({{{\mathcal {P}}}}\) constitutes a basis for \({\mathbb {P}},\) the space of polynomials of any degree. Another property of \({{{\mathcal {P}}}}\) is related to the coefficients of formal series.

Proposition 1

Let f be a function represented by an expansion over a basis of orthogonal polynomials \({{{\mathcal {P}}}}=\left( P_{0},P_{1},\dots \right) \) satisfying (1),

then

where the equality only holds when the infinite series converges to f.

This is relevant to show the following Proposition.

Proposition 2

If \(\ell \) is a linear operator acting on \({\mathbb {P}}\) and L is the infinite matrix defined by

then formally \(\ell {{{\mathcal {P}}}}={{{\mathcal {P}}}}L.\)

Orthogonal polynomials satisfy a three-term recurrence relation. The values of the recurrence relation coefficients depend on a normalization choice. We consider

\[xP_{j}=\alpha _{j}P_{j+1}+\beta _{j}P_{j}+\gamma _{j}P_{j-1},\quad j\ge 0,\quad P_{-1}=0, \tag{2}\]

and can then describe the integro-differential operations as matrix products.

One example of this is the evaluation of the product of a given polynomial by x:

Proposition 3

Let \({{{\mathcal {P}}}}\) be a basis satisfying (2), defining

then

and \(x{{{\mathcal {P}}}}={{{\mathcal {P}}}}M.\)

From this description, we can see that this matrix has a tridiagonal structure.
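The tridiagonal structure can be checked numerically. The sketch below assumes the recurrence is normalized as \(xP_{j}=\alpha _{j}P_{j+1}+\beta _{j}P_{j}+\gamma _{j}P_{j-1}\) and builds a truncated M for Chebyshev polynomials of the first kind. The helper multiplication_matrix is hypothetical and written with NumPy only; it is not the Tau Toolbox routine.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def multiplication_matrix(alpha, beta, gamma, n):
    """Return the (n+2) x (n+1) matrix M with x*[P_0..P_n] = [P_0..P_{n+1}] M.

    Assumes x*P_j = alpha(j)*P_{j+1} + beta(j)*P_j + gamma(j)*P_{j-1}.
    """
    M = np.zeros((n + 2, n + 1))
    for j in range(n + 1):
        M[j + 1, j] = alpha(j)        # subdiagonal
        M[j, j] = beta(j)             # diagonal
        if j > 0:
            M[j - 1, j] = gamma(j)    # superdiagonal
    return M


# Chebyshev polynomials of the first kind: x*T_0 = T_1 and
# x*T_j = (T_{j+1} + T_{j-1})/2 for j >= 1.
def alpha(j):
    return 1.0 if j == 0 else 0.5


def beta(j):
    return 0.0


def gamma(j):
    return 0.5


n = 5
M = multiplication_matrix(alpha, beta, gamma, n)

a = np.array([1.0, -2.0, 0.5, 3.0, 0.0, 4.0])  # coefficients of p in T_0..T_5
xa = M @ a                                      # coefficients of x*p in T_0..T_6
```

Multiplying the coefficient vector by M gives the coefficients of x p(x) in the same basis, with one extra row for the degree that is gained.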

Also, combining (1) with Proposition 3, we have an iterative way to evaluate the product between two polynomials of the same basis only by knowing the coefficients of each polynomial in that basis.

Other operations that can be described similarly are differentiation and integration.

Proposition 4

Let \({{{\mathcal {P}}}}\) be a basis satisfying (2), defining

then \(\eta _{i,0}=0\text { for }i\ge 0,\,\eta _{i,1}=\frac{1}{\alpha _{0}}\) and for \(j=1,2,\dots \)

and \(\frac{\text {d}}{\text {d}x}{{{\mathcal {P}}}}={{{\mathcal {P}}}}N.\)

In a similar vein, there is a representation for the primitive operator.

Proposition 5

Let \({{{\mathcal {P}}}}\) be a basis satisfying (2), defining

then for \(j=1,2,\dots \)

and \(\int {{{\mathcal {P}}}}={{{\mathcal {P}}}}O.\)

The way to implement these results computationally is then to define an abstract base class that specifies the interface that each derived class should provide. A class in object-oriented programming has two types of members: data and methods (functions). Some of the most important members of the abstract base class T3Basis are defined in Table 1.

The data members are: name; domain \(\left( \left[ a,b\right] \right) ,\) since this is an essential property of a basis; p1 and x1. The last two data members are constant and are provided for convenience: p1 corresponds to the coefficients of \(P_{1}\) in the power basis; x1 is the converse, it corresponds to the coefficients of the identity polynomial in the orthogonal polynomial family. For each class we also need to define the domain we are using. By default, for most orthogonal families, this is the interval \(\left[ -1,1\right] .\) If the domain is different, we convert the interval through an appropriate shift and scale transformation.

The methods of the abstract base class T3Basis are alpha, beta and gamma that correspond to the three-term recurrence relation defined in Equation (2) (\(\alpha \left( j\right) ,\) \(\beta \left( j\right) \) and \(\gamma \left( j\right) \) for \(j\ge 0\)). These methods are required to be always defined in the derived class, in the object-oriented sense. The methods multiplication, derivation (closely related with eta) and integration (closely related with theta) of polynomials are automatically defined using Propositions 3 to 5. So, even if the derived class does not define them, they are already implemented generically.
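A minimal sketch of this design, assuming \(P_{0}=1\) and the normalization \(xP_{j}=\alpha _{j}P_{j+1}+\beta _{j}P_{j}+\gamma _{j}P_{j-1}\), is given below. The classes ThreeTermBasis and LegendreBasis and the method evaluate are hypothetical names used for illustration, not the actual T3Basis interface.

```python
from abc import ABC, abstractmethod

import numpy as np
from numpy.polynomial import legendre as L


class ThreeTermBasis(ABC):
    """Hypothetical sketch of a recurrence-driven basis (not the real T3Basis)."""

    @abstractmethod
    def alpha(self, j): ...

    @abstractmethod
    def beta(self, j): ...

    @abstractmethod
    def gamma(self, j): ...

    def evaluate(self, coeffs, x):
        """Evaluate sum_j coeffs[j]*P_j(x) with the forward recurrence.

        Assumes P_0 = 1 and
        x*P_j = alpha(j)*P_{j+1} + beta(j)*P_j + gamma(j)*P_{j-1}.
        """
        x = np.asarray(x, dtype=float)
        p_prev = np.zeros_like(x)   # P_{-1}
        p_curr = np.ones_like(x)    # P_0
        total = coeffs[0] * p_curr
        for j in range(len(coeffs) - 1):
            p_next = ((x - self.beta(j)) * p_curr
                      - self.gamma(j) * p_prev) / self.alpha(j)
            total = total + coeffs[j + 1] * p_next
            p_prev, p_curr = p_curr, p_next
        return total


class LegendreBasis(ThreeTermBasis):
    """Only the recurrence is supplied; evaluate() is inherited unchanged."""

    def alpha(self, j):
        return (j + 1) / (2 * j + 1)

    def beta(self, j):
        return 0.0

    def gamma(self, j):
        return j / (2 * j + 1)


coeffs = np.array([0.5, -1.0, 2.0, 0.25])
x = np.linspace(-1.0, 1.0, 7)
values = LegendreBasis().evaluate(coeffs, x)
```

The derived class supplies three short methods and receives evaluation for free, which mirrors how the generic multiplication, differentiation and integration are described as being inherited.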

On the other hand, the methods corresponding to sum, subtraction, and division by a scalar are trivially defined. Since their implementation is immediate and simple, redefining them in the derived class is never necessary.

Other operations defined are the evaluation of the polynomial at a given scalar value, element-wise on the entries of a matrix, or at a given square matrix. Another important method fits a polynomial of fixed degree to a function, including the choice of the points where the function is evaluated.

There are other methods that have a more complex implementation in the abstract base class. Examples are the conversion of coefficients from the orthogonal basis to the power basis, and the inverse conversion (from the power basis to the orthogonal family). These methods are provided for convenience.

The generic implementations are convenient in the sense that we only need to define the recurrence relation and the domain to get the basis fully implemented. Yet, for efficiency’s sake, we can do better using more specific relations for each orthogonal polynomial family.

Some of the functions above are defined iteratively, building the matrices row by row (see for example Propositions 4 and 5, where the entries are obtained through a recurrence relation). Using the specific properties of each orthogonal basis, we can sometimes use explicit formulas. This has two related advantages: it reduces the number of operations necessary and thus improves the numerical stability of the methods.

One example of profiting from the particular properties of a given family of orthogonal polynomials is the polynomial.bases.ChebyshevT class, corresponding to Chebyshev polynomials of the first kind, which redefines the product method by taking advantage of the fact that for this basis

\[T_{m}(x)T_{n}(x)=\frac{1}{2}\left( T_{m+n}(x)+T_{|m-n|}(x)\right) .\]

If we consider, for simplicity, that both polynomials have degree n, then the algorithmic complexity of the product goes from \({{{\mathcal {O}}}}\left( n^{3}\right) \) to \({{{\mathcal {O}}}}\left( n^{2}\right) .\)
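The quadratic cost can be seen in a direct sketch that relies on the identity \(T_{m}T_{n}=\frac{1}{2}\left( T_{m+n}+T_{|m-n|}\right) \). The function cheb_product is a hypothetical helper written for illustration, not the ChebyshevT method.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def cheb_product(a, b):
    """Coefficients of the product of two Chebyshev series.

    Uses T_m * T_n = (T_{m+n} + T_{|m-n|}) / 2, so the cost is one pass
    over all coefficient pairs, i.e. O(n^2) for two series of degree n.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    out = np.zeros(len(a) + len(b) - 1)
    for m, am in enumerate(a):
        for n, bn in enumerate(b):
            out[m + n] += 0.5 * am * bn
            out[abs(m - n)] += 0.5 * am * bn
    return out


a = np.array([1.0, 2.0, 3.0])
b = np.array([0.5, -1.0, 0.0, 4.0])
prod = cheb_product(a, b)
```

Each pair of coefficients contributes to exactly two output entries, so no matrix needs to be built.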

The advantage of defining the derived classes based on the abstract base class is that we re-implement only those methods that are more efficient or more accurate than the general version implemented in the abstract base class. If neither of these criteria applies, the general implementation is deemed good enough and is automatically inherited from the base class.

For example, by default, the polynomial fitting is done using a Lagrange interpolation evaluated at the zeros of a Legendre polynomial with the same degree as the approximation that we want to get. Again, we can override this in the derived class by taking advantage of the properties of that basis, e.g., using the Chebyshev orthogonal polynomials of the first kind, we can very efficiently evaluate the coefficients of the polynomial that best fits the function through the discrete cosine transform.
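The link between Chebyshev interpolation and the discrete cosine transform can be sketched as follows. The helper cheb_coeffs_dct is hypothetical; it writes the type-II cosine sum as a dense matrix product for readability, whereas an actual implementation would presumably call a fast DCT routine.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def cheb_coeffs_dct(f, degree):
    """Chebyshev coefficients of the degree-`degree` interpolant of f on [-1, 1].

    The sum below is a type-II discrete cosine transform of the samples
    f(x_k); it is written as a dense matrix product for clarity, and a fast
    DCT routine would compute the same numbers in O(N log N).
    """
    N = degree + 1
    theta = np.pi * (np.arange(N) + 0.5) / N   # angles of the Chebyshev points
    samples = f(np.cos(theta))                 # f at x_k = cos(theta_k)
    j = np.arange(N)[:, None]
    coeffs = (2.0 / N) * (np.cos(j * theta[None, :]) @ samples)
    coeffs[0] *= 0.5                           # T_0 term carries half weight
    return coeffs


coeffs = cheb_coeffs_dct(np.exp, 12)
```

The result can be compared with numpy.polynomial.chebyshev.chebinterpolate, which interpolates at the same points.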

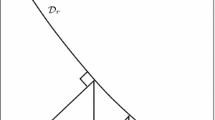

The extensibility of this scheme is best illustrated by the fact that derived classes can have additional parameters that are not present in the abstract base class. One example of this approach is the implementation of the Gegenbauer polynomials, which depend on the \(\alpha \) parameter (not to be confused with the \(\alpha \) from the three-term recurrence relation). The parameter is stored as a property/data member of the derived class and used in the definition of the functions that are overridden, so after the initialization of the object all the properties are immediately inherited from the base class (Fig. 1).

Using the results in [8] we were able to use explicit formulas for eta and theta for several orthogonal bases. The implemented classes are Bessel, Chebyshev from the first to the fourth kind, Gegenbauer, Hermite, Laguerre, and Legendre.

A particular case implemented is the power basis, which also follows a three-term recurrence relation without the orthogonality property. Although it behaves badly in numerical terms, it is useful for debugging. Here it is enough to define \(\alpha \left( k\right) =1\) and \(\beta \left( k\right) =\gamma \left( k\right) =0.\) A useful optimization is to define the product as the convolution of the two series of coefficients.
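The convolution shortcut for the power basis is easy to illustrate with NumPy. The coefficient values are arbitrary and the snippet is not taken from the package.

```python
import numpy as np
from numpy.polynomial import polynomial as P

# Power-basis coefficients, lowest degree first.
a = np.array([1.0, 2.0])        # 1 + 2x
b = np.array([3.0, 0.0, -1.0])  # 3 - x^2

# The coefficient of x^k in the product is sum_i a[i]*b[k-i]: a convolution.
prod = np.convolve(a, b)
```

Here prod holds the coefficients of \(3+6x-x^{2}-2x^{3}\), the expansion of \((1+2x)(3-x^{2})\).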

2.2 Polynomial Implementation: Polynomial1

The Polynomial1 class has one associated orthogonal basis. As remarked before, this allows the orthogonal polynomial to be extended to vector-valued polynomials, where we consider a function \(f\left( x\right) =\left( f_{1}\left( x\right) ,\dots ,f_{m}\left( x\right) \right) .\) For implementation and efficiency reasons, we consider that the degree of P is given by the maximum degree of the vectorial components. We have then that \(f_{i}\left( x\right) =\sum _{k=0}^{n}a_{ki}P_{k}\left( x\right) ,\) where \(P_{k}\left( x\right) \) are the basis polynomials of a given orthogonal polynomial family and the coefficients of the vector-valued polynomial are given by the matrix \([a_{ki}].\)

This means that the degree of a polynomial, which can change with the operations, is stored only in Polynomial1 and not in T3Basis. The list of data members of the Polynomial1 class is given in Table 2.

Since the tasks are split in two steps, the validity of the operations is verified at the Polynomial1 level. Also, for some operations, the leading coefficients that are zero are automatically trimmed. This means, in particular, that if we differentiate a polynomial of degree n the output will always be a polynomial of degree \(n-1,\) except, naturally, if the degree is zero, in which case it stays the same.

The final property/data member that is available holds the coefficients of the polynomial in the orthogonal basis. If we are considering a real polynomial, then the coefficients are given by a column matrix with \(n+1\) rows for a polynomial of degree n. If we are considering a vector-valued polynomial with m coordinates, then the coefficient data member will be a \(\left( n+1\right) \times m\) matrix.
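The \(\left( n+1\right) \times m\) layout can be illustrated with NumPy, whose chebval routine accepts a two-dimensional coefficient array with one column per component. This is only an analogy for the storage scheme, not the Polynomial1 data member itself.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

# Vector-valued f(x) = (f_1(x), f_2(x)) with components of degree 2 and 1.
# Column i holds the Chebyshev coefficients of f_i; the shorter component is
# padded with zeros so that both share the maximum degree n = 2.
coeffs = np.array([
    [1.0, 0.0],   # T_0
    [0.0, 3.0],   # T_1
    [2.0, 0.0],   # T_2 (zero padding for f_2)
])                # shape (n + 1, m) = (3, 2)

x = np.array([-1.0, 0.0, 0.5])
values = C.chebval(x, coeffs)   # shape (m, len(x)): one row per component
```

Padding with zeros is what makes a single degree, the maximum over the components, sufficient for the whole object.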

Among the auxiliary methods, fit takes a function and returns the coefficients of an orthogonal polynomial that best fits the data for a given degree.

One of the design purposes for the polynomial class was to use a notation as close as possible to the usual mathematical formulation, i.e., to be intuitive. The evaluation method is called polyval; a better name would have been eval, but that name is already used by a built-in function in both languages. The method is not exported; instead, the polynomial is evaluated as we would do using a functional notation. So if p is a Polynomial1, we evaluate the polynomial at a given point x by calling p(x).

2.3 Working with Polynomial1

To illustrate how the code works we will select some elementary examples to compare the actual results with the known results while at the same time showing how the user interfaces with the package.

We will show the code in Python, noting the calls are similar in MATLAB/GNU Octave, with the small differences being related to the proper idioms for each language.

In the next code we will create two instances of Polynomial1, \(c\approx \cos (x)\) and \(s\approx \sin (x)\), in the interval \(\left[ -\pi ,\pi \right] \).

The result, s, is an object

In a similar way c has degree 20.

Internally, both the \(\sin \) and \(\cos \) functions were interpolated in the interval \(\left[ -\pi ,\pi \right] \) with increasing degree until the quotient between the highest-order coefficient and the largest coefficient became smaller than the double precision \(\epsilon \approx 2\times 10^{-16}\). Since we did not specify the basis, Chebyshev polynomials of the first kind are used.
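A stopping rule of this kind can be sketched with NumPy. The function adaptive_cheb, its default tolerance and the choice of testing the last two coefficients are assumptions made for this illustration; the actual criterion used by Polynomial1 may differ in its details.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def adaptive_cheb(f, a, b, tol=1e-13, max_degree=128):
    """Raise the degree until the trailing coefficients are negligible.

    f is sampled on [a, b] through the affine map from [-1, 1]. The last two
    coefficients are tested together because odd or even functions have
    every other coefficient equal to zero. The default tolerance is slightly
    looser than machine epsilon to stay clear of rounding noise.
    """
    def g(t):
        return f(0.5 * (b - a) * t + 0.5 * (b + a))

    for degree in range(2, max_degree + 1):
        coeffs = C.chebinterpolate(g, degree)
        if np.max(np.abs(coeffs[-2:])) <= tol * np.max(np.abs(coeffs)):
            break
    return coeffs, degree


coeffs, degree = adaptive_cheb(np.sin, -np.pi, np.pi)

# Compare with sin on a fine grid (t is the reference variable in [-1, 1]).
t = np.linspace(-1.0, 1.0, 201)
max_err = np.max(np.abs(C.chebval(t, coeffs) - np.sin(np.pi * t)))
```

Recomputing the interpolant at every degree is wasteful but keeps the sketch short; doubling the degree or reusing samples would be natural refinements.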

Since we are using floating point arithmetic, errors are to be expected. For example, if we evaluate the approximation of the sine at \(x \in \{-\pi ,0,\pi \}\) the results should be approximately zero. To do that, in the code, we do s([-np.pi, 0, np.pi]) and the outcome is array([-1.45511322e-16, 1.80892296e-16, 1.30383903e-16]), results within the double precision \(\epsilon \). Notice that this example also shows that the Polynomial1 instances act like a function that evaluates multiple values at once in a vectorized way.

Another example is that we can operate on polynomials using the usual syntax. We can see that in Fig. 2 where we plot the result of \(s*s+c*c-1\). We know the exact result \(\sin ^2(x)+\cos ^2(x) -1=0\), which allows us to compare the result with the expected value. Notice that in the code we are multiplying polynomials, adding them and subtracting a scalar (that we treat like a constant function) from the result. Other operations behave similarly using the usual notation.

Finally, we sum s with the derivative of c. In terms of code that is s+diff(c), and we know that \(\sin (x) + \cos ^\prime (x)=0\). Notice that the result is worse because we are approximating a function by a polynomial of finite degree: each time we differentiate, we decrease the degree of the polynomial and thus the quality of the approximation. This example also shows that diff represents the derivative of the polynomial; an alternative that does not require importing diff from tautoolbox.functions is to call c.diff() directly instead of diff(c).
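The same three checks can be mimicked with numpy.polynomial as a stand-in. The objects below are NumPy Chebyshev series of fixed degree 20, not Polynomial1 instances, and deriv is NumPy's method name rather than the diff used by Tau Toolbox.

```python
import numpy as np
from numpy.polynomial import Chebyshev

dom = [-np.pi, np.pi]
# Degree-20 Chebyshev interpolants standing in for the Polynomial1 objects.
s = Chebyshev.interpolate(np.sin, 20, domain=dom)
c = Chebyshev.interpolate(np.cos, 20, domain=dom)

x = np.linspace(-np.pi, np.pi, 401)

# Arithmetic on the series objects mirrors the mathematical notation.
identity_err = np.max(np.abs((s * s + c * c - 1)(x)))

# sin(x) + cos'(x) = 0; differentiation lowers the degree by one, so this
# residual is typically larger than the one above.
derivative_err = np.max(np.abs((s + c.deriv())(x)))
```

Both residuals stay small, with the derivative check expected to be the less accurate of the two for the reason given in the text.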

3 Polynomial Approximation for Bivariate Functions

Working with bivariate functions brings new challenges, especially with regard to storage cost and computational complexity. The use of low-rank approximations to smooth functions enables a cost-effective and quick solution to this problem. All this comes, however, with the need to develop sophisticated numerical algorithms as well as their implementations. An extensive literature on the subject provides a rich source of solutions to attack the problem. We closely follow the work of [4] and [6, 9], referring to these articles and the references contained therein for most of the justifications and evidence for the proposed numerical procedures (Fig. 3).

3.1 Polynomial Implementation: Polynomial2

Given a continuous bivariate function \(f: [a, b]\times [c,d] \mapsto {\mathbb {R}}\), the optimal rank k approximation in the \(L^2\)-norm is given by the truncated SVD

\[f(x,y)\approx f_k(x,y)=\sum _{i=1}^{k}\sigma _i\phi _i(y)\psi _i(x),\]

where \(\sigma _1,\sigma _2,\cdots ,\sigma _k\) are the first k singular values, and \(\phi _i(y)\) and \(\psi _i(x)\), \(i=1,\cdots ,k\), are orthonormal functions in \(L^2([c, d])\) and \(L^2([a, b])\), respectively. As a low-rank approximation method, the SVD is expensive since it must first be computed and only afterwards truncated, which is particularly relevant if the dimension of the matrix is large. Furthermore, for many applications there is no need for orthonormal bases, only natural bases that consist of the rows or columns of the discretized matrix [10]. Cheaper alternatives to get a quasi-optimal rank k approximation can be used, such as the Adaptive Cross Approximation (ACA), introduced in [4], where the rows and columns are chosen adaptively such that in each step a rank one approximation is added to the approximant.

In the implementation of the Polynomial2 class in Tau Toolbox, we use Gaussian Elimination with complete pivoting (Algorithm 1), an approach mathematically equivalent to ACA that was first proposed and developed in [9]:

\[f(x,y)\approx f_k(x,y)=\sum _{j=1}^{k}d_jc_j(y)r_j(x),\]

where \(C(y)=[c_1(y),c_2(y),\cdots ,c_k(y)]\) and \(R(x)=[r_1(x),r_2(x),\cdots ,r_k(x)]^{T}\). In matrix form, \(f(x,y)\approx f_k(x,y) = C(y)DR(x)\), with \(D=\text {diag}(d_1,\cdots ,d_k)\).

Basically, the smooth function is evaluated on a rectangular grid, \(e_0(x,y)=f(x,y)\), and the entry with maximal magnitude, \(|e_0(x_1,y_1)|=\max (|e_0(x,y)|)\), is found together with its location \((x_1,y_1)\). A rank 1 function is formed, \(f_1(x,y)=d_1c_1(y)r_1(x)\), with \(d_1=\frac{1}{e_0(x_1,y_1)}\), \(c_1(y)=e_0(x_1,y)\) and \(r_1(x)=e_0(x,y_1)\), that is, from the column \(c_1(y)\) and the row \(r_1(x)\) through the pivot. The error \(e_1(x,y)=f(x,y)-f_1(x,y)\) is computed and the process is repeated from \(|e_1(x_2,y_2)|=\max (|e_1(x,y)|)\) to form the approximation of rank 2, \(f_2(x,y)=f_1(x,y)+d_2c_2(y)r_2(x)\), with \(d_2=\frac{1}{e_1(x_2,y_2)}\), \(c_2(y)=e_1(x_2,y)\) and \(r_2(x)=e_1(x,y_2)\). This iterative process proceeds until a stopping criterion is satisfied.

The rank k approximation of a continuous function f, \(f_k\), computed after k steps of Algorithm 1, coincides with f in 2k lines (columns and rows). The factorization \(f_k(x,y)=C(y)DR(x)\) highlights the separation of variables: columns of C and rows of R are coefficients of univariate polynomials in the variables y and x. A Polynomial2 is thus built as a combination of Polynomial1 objects.
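On a sampled matrix, the pivoting process described above can be sketched in a few lines of NumPy. The function gecp_low_rank, the relative tolerance and the 60-point Chebyshev grid are assumptions made for this illustration; Algorithm 1 in Tau Toolbox works with polynomial objects and may use a different stopping rule.

```python
import numpy as np


def gecp_low_rank(F, tol=1e-12, max_rank=None):
    """Greedy rank-one updates with complete pivoting on a sampled matrix F.

    F[i, j] = f(x_j, y_i). Returns C (columns), d (inverse pivots) and
    R (rows) such that F is approximately C @ np.diag(d) @ R.
    """
    E = np.array(F, dtype=float)             # residual e_0 = f
    scale = np.max(np.abs(E))
    max_rank = min(E.shape) if max_rank is None else max_rank
    cols, rows, d = [], [], []
    for _ in range(max_rank):
        i, j = np.unravel_index(np.argmax(np.abs(E)), E.shape)
        pivot = E[i, j]
        if abs(pivot) <= tol * scale:         # residual is negligible: stop
            break
        cols.append(E[:, j].copy())           # c_k(y) = e_{k-1}(x_k, y)
        rows.append(E[i, :].copy())           # r_k(x) = e_{k-1}(x, y_k)
        d.append(1.0 / pivot)                 # d_k = 1 / e_{k-1}(x_k, y_k)
        E -= np.outer(cols[-1], rows[-1]) / pivot
    return np.array(cols).T, np.array(d), np.array(rows)


x = np.cos(np.pi * (np.arange(60) + 0.5) / 60)   # Chebyshev points in [-1, 1]
y = x.copy()
X, Y = np.meshgrid(x, y)                          # X varies along columns
F = np.tanh(10 * X) * np.tanh(10 * Y) / np.tanh(10) ** 2 + np.cos(5 * X)

C, d, R = gecp_low_rank(F)
rank = len(d)
err = np.max(np.abs(F - C @ np.diag(d) @ R))
```

Each step zeroes the residual on the pivot row and pivot column, which is the discrete counterpart of the statement that \(f_k\) coincides with f on 2k lines.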

As with the Polynomial1 class, a set of methods has to be implemented; the list of data members is shown in Table 3.

3.2 Working with Polynomial2

The numerical experiments are designed to demonstrate the ease of use and the precision offered by the Polynomial2 class.

Considering \(f(x,y)=\tanh (10x)\tanh (10y)/\tanh (10)^2+\cos (5x)\), with the Chebyshev basis of the first kind in the domain \([-1,1]\) (the basis and domain set by default), just run the following code:

The result is an object p

that can be well represented by a low-rank approximation of rank only 2. Yet, the accuracy of this approximation is close to machine precision as can be computed with Tau Toolbox via

which delivers an error of 3.552713678800501e-15.

The Polynomial2 p has a CDR factorization given by c,d,r = p.cdr(), where c is a matrix with two Polynomials

d is an array

and r is a matrix with two Polynomials

We further highlight the use of the class. It is easy to compute the definite integral of f(x, y) over the domain with p.definite_integral(), and to compute the definite integral over the y-axis, which is a univariate polynomial in x, with p.sum(axis=0):
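The reason these integrals are cheap is that a separable sum integrates factor by factor. The sketch below uses a hand-written rank-2 form of the example function and Gauss-Legendre quadrature from NumPy; it illustrates the idea only and does not call definite_integral or sum from Tau Toolbox.

```python
import numpy as np

# Gauss-Legendre nodes and weights on [-1, 1] for the one-dimensional integrals.
nodes, weights = np.polynomial.legendre.leggauss(64)

# A hand-written rank-2 separable form f(x, y) = sum_j d_j * c_j(y) * r_j(x)
# of f(x, y) = tanh(10x) tanh(10y) / tanh(10)^2 + cos(5x).
c_funcs = [lambda y: np.tanh(10 * y) / np.tanh(10), lambda y: np.ones_like(y)]
r_funcs = [lambda x: np.tanh(10 * x) / np.tanh(10), lambda x: np.cos(5 * x)]
d = np.array([1.0, 1.0])

# Integrate each univariate factor once, then combine: the double integral
# costs 2k one-dimensional quadratures instead of one two-dimensional one.
int_c = np.array([weights @ c(nodes) for c in c_funcs])
int_r = np.array([weights @ r(nodes) for r in r_funcs])
double_integral = np.sum(d * int_c * int_r)

# Integrating only over y leaves a function of x (here sampled at the nodes).
partial_over_y = sum(dj * ic * r(nodes) for dj, ic, r in zip(d, int_c, r_funcs))

exact = 4.0 * np.sin(5.0) / 5.0   # analytic value of the double integral
```

The odd tanh factors integrate to zero over the symmetric interval, so only the \(\cos (5x)\) term contributes to the double integral.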

This class works with many orthogonal bases, such as Chebyshev of the first, second, third and fourth kind, Legendre, Gegenbauer, Laguerre and Hermite. One can choose the same basis for both variables or any combination of them. For instance, setting Legendre and Chebyshev of the second kind in x and y, respectively, is straightforward with Tau Toolbox:

The new polynomial approximation of function g1, done in two different bases, is shown as:

Tau Toolbox overloads the usual operators so that Polynomial2 objects can be handled like numbers and vectors. In the following, we illustrate the use of arithmetic operations:

with err_sum =  .

In what follows, we reproduce with Tau Toolbox the examples provided in [9], approximating the functions shown in Table 4. There, we report on the polynomial approximation achieved, providing the polynomial degrees required, the necessary rank and the exact error. For all the functions, the polynomial degrees required for the approximation are moderate and further compression is achieved by taking only factor matrices with small rank.

In Figs. 4, 5, 6, 7, 8, and 9, we plot these functions, drawing the surface and the level curves together with the location of the intersections between the selected rows and columns (pivot points) chosen in Algorithm 1 (red points).

4 Conclusion

With low-rank matrix representations, fully populated matrices can be treated with almost linear complexity. Together with the efficient storage, they also allow for tuned matrix operations with almost linear complexity.

In this work, within the scope of Tau Toolbox, a MATLAB/Python numerical library for the solution of integro-differential problems, we show how one variable and two variable smooth functions are approximated and tackled by polynomials. An object oriented approach is offered and implementation details on the Polynomial1 and Polynomial2 classes are addressed. Data-sparse matrix representations are explored for the latter class. Numerical illustrations on computing efficiency and simplicity of use are provided.

References

1. Shen, J., Tang, T., Wang, L.-L.: Spectral Methods: Algorithms, Analysis and Applications. Springer, Berlin (2011)

2. Van Loan, C.F., Golub, G.: Matrix Computations (Johns Hopkins Studies in Mathematical Sciences). Matrix Comput. 5 (1996)

3. Börm, S., Grasedyck, L., Hackbusch, W.: Introduction to hierarchical matrices with applications. Eng. Anal. Bound. Elem. 27(5), 405–422 (2003)

4. Bebendorf, M.: Hierarchical Matrices. Springer, Berlin (2008)

5. Börm, S., Grasedyck, L.: Hybrid cross approximation of integral operators. Numer. Math. 101, 221–249 (2005)

6. Townsend, A., Trefethen, L.N.: Continuous analogues of matrix factorizations. Proc. R. Soc. A: Math. Phys. Eng. Sci. 471(2173), 20140585 (2015)

7. Harris, C.R., Millman, K.J., Walt, S.J., Gommers, R., Virtanen, P., Cournapeau, D., Wieser, E., Taylor, J., Berg, S., Smith, N.J., Kern, R., Picus, M., Hoyer, S., Kerkwijk, M.H., Brett, M., Haldane, A., Río, J., Wiebe, M., Peterson, P., Gérard-Marchant, P., Sheppard, K., Reddy, T., Weckesser, W., Abbasi, H., Gohlke, C., Oliphant, T.E.: Array programming with NumPy. Nature 585, 357–362 (2020)

8. Matos, J.C., Matos, J.M.A., Rodrigues, M.J.: Solving differential and integral equations with tau method. Math. Comput. Sci. 12, 197–204 (2018)

9. Townsend, A., Trefethen, L.N.: An extension of chebfun to two dimensions. SIAM J. Sci. Comput. 35(6), 495–518 (2013)

10. Kishore Kumar, N., Schneider, J.: Literature survey on low rank approximation of matrices. Linear Multilinear Algebra 65(11), 2212–2244 (2017)

Acknowledgements

The authors were partially supported by CMUP, member of LASI, which is financed by national funds through FCT–Fundação para a Ciência e a Tecnologia, I.P., under the projects with reference UIDB/00144/2020 and UIDP/00144/2020.

Funding

Open access funding provided by FCT|FCCN (b-on).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lima, N.J., Matos, J.A.O. & Vasconcelos, P.B. A Low-Rank Matrix Approach to Compute Polynomial Approximations of Smooth Two-Dimensional Functions. Math.Comput.Sci. 18, 9 (2024). https://doi.org/10.1007/s11786-024-00581-2

DOI: https://doi.org/10.1007/s11786-024-00581-2