Abstract

This paper briefly examines how the literature addresses the numerical solution of partial differential equations by the spectral Tau method. It discusses the implementation of such a numerical solution for PDEs, presenting the construction of the problem’s algebraic representation and exploring solution mechanisms with different orthogonal polynomial bases. It highlights the opportunities and advantages of exploiting low-rank approximations and well-conditioned linear systems, even though spectral methods usually give rise to dense and ill-conditioned matrices. It presents Tau Toolbox, a Python numerical library for the solution of integro-differential problems, and reports numerical experiments illustrating the accuracy and computational cost of the implementations. Finally, it shows how easy it is to use Tau Toolbox to obtain approximate solutions to partial differential problems.

1 Introduction

Solving partial differential equations is challenging due to the nonlinear, high-dimensional, and often non-analytical nature of the equations involved. Various numerical and analytical methods have been developed in recent years to address these challenges. Among them is the spectral Tau method, which produces an approximate polynomial solution of a differential problem from a truncated series expansion in a complete set of orthogonal functions. Although powerful, the method is not widely used, owing to the lack of automatic mechanisms to cast the analytical problem in a numerically treatable form.

Previous numerical implementations are usually dedicated to the solution of a particular problem, and there is no mathematical library that solves differential problems in a general and automatic way. There are, however, a few mathematical libraries implementing the spectral Galerkin method, such as Shenfun [1] and, in particular, the Chebfun project [2, 3], but none implementing the spectral Tau method. Unlike the more common spectral Galerkin method, where the expansion functions must satisfy the boundary conditions, in the Tau method the coefficients of the series are computed by forcing the differential problem to be exact in its spectral representation as far as possible. Additional conditions are imposed so that the initial/boundary conditions are satisfied exactly.

The spectral Tau method for approximating the solution of partial differential problems is implemented as part of Tau Toolbox, a numerical library for the solution of integro-differential problems. This mathematical software provides a general framework for solving such problems, delivering accurate and fast approximate solutions. Its symbolic syntax applies to objects, so that differential problems can be manipulated and solved with ease, high accuracy, and efficiency.

In this paper we discuss the implementation of such a numerical solution for partial differential equations, presenting the construction of the problem’s algebraic representation, specifying different procedure possibilities to tackle the linear systems involved, and exploring solution mechanisms with different orthogonal polynomial bases.

The paper is organized as follows. After the preliminaries in Sect. 2, in Sect. 3 we present an implementation of the spectral Tau method that follows the traditional operational approach [4] to solve partial differential problems, and we use examples to illustrate its efficiency. A tuned direct method is devised, following [5], to ensure that the solution is faithful to Lanczos’ original idea. It can be used on problems set in n-dimensional spaces, but it can be demanding in both memory and time. In Sect. 4, we incorporate low-rank techniques [6, 7] into the operational spectral Tau approach and devise an implementation that produces approximate solutions quickly and with good accuracy for smooth functions [8, 9]. For now, this approach is only available for two-dimensional problems; examples of its use to numerically solve partial differential problems are given at the end of that section. We summarize our results in Sect. 5.

2 Preliminaries

Let \(\Omega \subset \mathbb {R}^n\) be a compact domain, \(\mathbb {F}(\Omega )\) and \(\mathbb {G}(\Omega )\) spaces of functions defined on \(\Omega \), and \(u(x_1, \dots , x_n) \in \mathbb {F}(\Omega )\) an unknown function, with independent variables \((x_1, \dots , x_n) \in \Omega \). The problem can be formulated as

\[ \begin{cases} \mathcal{D} u = f & \text{in } \Omega, \\ \mathcal{C}_i u = s_i, & i = 1, \dots, \nu, \end{cases} \tag{1} \]

where \(\mathcal {D}\) and \(\mathcal {C}_i\), \(i=1, \dots ,\nu \), are given differential operators mapping \(\mathbb {F}(\Omega )\) into \(\mathbb {G}(\Omega )\), and f and \(s_i\) are given functions belonging to \(\mathbb {G}(\Omega )\).

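Considering inner products \(\langle \cdot ,\cdot \rangle _i : \mathbb {P}_i^2([a_i, b_i])\rightarrow \mathbb {R}\), \(i=1,\dots ,n\), on the univariate polynomial spaces, the following sets of orthogonal polynomials can be built:

\[ \mathcal{P}_i = [P_{i,0}, P_{i,1}, \dots]^T, \qquad \deg P_{i,j} = j, \qquad \langle P_{i,j}, P_{i,m} \rangle_i = 0 \ \text{for } j \ne m, \qquad i = 1, \dots, n. \]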

A polynomial basis for \(\mathbb {P}(\Omega )\) is then constructed, \(\mathcal {P} = \mathcal {P}_1\otimes \mathcal {P}_2 \otimes \dots \otimes \mathcal {P}_n =[P_0, P_1,\dots ]^T\), where \(\otimes \) is the Kronecker product. The sought solution u can be expressed as a polynomial in this basis as

\[ u = \sum_{j \in \mathbb{N}_0} a_j P_j = \textsf{a}\,\mathcal{P}, \qquad \textsf{a} = [a_0, a_1, \dots], \]

where \(\mathbb {N}_0\) is the set of nonnegative integers.
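As a small illustration of the tensor-product ordering, the sketch below assumes n = 2 and takes Chebyshev polynomials of the first kind for both univariate bases (an assumption; any orthogonal family would do), truncated at degrees k1 and k2. The helper `tensor_basis` is hypothetical; it forms the truncated \(\mathcal{P}\) with `np.kron` and checks that \(\textsf{a}\,\mathcal{P}\) matches NumPy's own two-dimensional Chebyshev evaluation when the coefficients are stored row by row.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def tensor_basis(x, y, k1, k2):
    """Truncated basis P = P_1 (kron) P_2 evaluated at the point (x, y).

    Assumes Chebyshev T for both variables; entry i*(k2+1) + j is T_i(x) T_j(y).
    """
    p1 = C.chebvander([x], k1)[0]   # [T_0(x), ..., T_k1(x)]
    p2 = C.chebvander([y], k2)[0]   # [T_0(y), ..., T_k2(y)]
    return np.kron(p1, p2)

k1, k2 = 3, 2
# a[i, j] multiplies T_i(x) T_j(y)
a = np.random.default_rng(0).standard_normal((k1 + 1, k2 + 1))

x0, y0 = 0.3, -0.7
u_kron = a.ravel() @ tensor_basis(x0, y0, k1, k2)   # u = a P, row-major ordering
u_ref = C.chebval2d(x0, y0, a)                      # NumPy's own 2D evaluation
print(abs(u_kron - u_ref))
```

The row-major flattening of `a` is what makes the single-index basis \([P_0, P_1, \dots]\) line up with the Kronecker product; another ordering would require permuting the coefficient vector accordingly.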

3 Traditional Tau Approach

3.1 Tau Method

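The Tau method consists in truncating u at the multi-order \(k=(k_1,\dots , k_n) \in \mathbb {N}^n_0\), giving rise to

\[ u_k = \sum_{0 \le j \le k} a_j P_j, \]

where \(j \le k\) is understood componentwise and \(P_j\) is the element of \(\mathcal{P}\) of multi-degree j; \(u_k\) is the solution of the perturbed problem

\[ \begin{cases} \mathcal{D} u_k = f + \sum_{j \in \mathbb{J}} \tau_j P_j & \text{in } \Omega, \\ \mathcal{C}_i u_k = s_i, & i = 1, \dots, \nu, \end{cases} \]

with \(\mathbb {J} \subset \mathbb {N}^n_0\) the set of multi-indices of the perturbation (tau) terms.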

The differential operator, being linear and of the form

with coefficients \( f_j\approx \sum _{\ell =0}^{d_j} f_{j,\ell }x^{\ell }, \; x\in \Omega ,\; \ell ,d_j\in \mathbb {N}^n_0\), can be cast in matrix form \(\textsf{D}\) by

with

Likewise, the boundary conditions

are translated in terms of the coefficients of \(u_k\) by \(\textsf{C}=\left[ \textsf{C}_i \right] _{i=1}^{\nu }\):

Finally, the Tau coefficient matrix is built

where the number of boundary rows is \(nbr=\sum _{j=1}^{\nu }\prod _{i=1,\, i\ne j}^{n} (k_i+1)\), the number of rows is \(nr = nbr+\prod _{i=1}^{n} (k_i+h_i+1)\) and the number of columns is \(nc=\prod _{i=1}^{n} (k_i+1)\), with \(nr>nc\). Here \(h_i =\max _j h_{ij}\), where \(h_{i0}\) is the height of \(\mathcal {D}\) in \(x_i\) and \(h_{ij}\), \(j=1,\dots ,\nu \), is the height of \(\mathcal {C}_j\) in \(x_i\).
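To make the operational idea behind the matrix \(\textsf{D}\) concrete in the simplest setting, the following sketch builds, for a single variable and the Chebyshev basis, the matrices that represent multiplication by x (M) and differentiation (N) acting on a row coefficient vector, so that \(x\,u = \textsf{a}\textsf{M}\mathcal{P}\) and \(u' = \textsf{a}\textsf{N}\mathcal{P}\). The row-vector convention, the Chebyshev basis, the helper name `operational_matrices` and the example operator \(u'' + x\,u'\) are our assumptions; the paper's n-dimensional matrices are not reproduced here, although univariate blocks of this kind would presumably be combined through Kronecker products.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def operational_matrices(K):
    """Row-convention matrices for u = a T with T = [T_0, ..., T_{K-1}]^T.

    Row j of M holds the Chebyshev coefficients of x*T_j and row j of N those
    of T_j'. Terms of degree >= K are truncated, so inputs need trailing zeros.
    """
    I = np.eye(K)
    M = np.zeros((K, K))
    N = np.zeros((K, K))
    for j in range(K):
        xm = C.chebmulx(I[j])[:K]      # x * T_j (NumPy trims trailing zeros first)
        M[j, : len(xm)] = xm
        d = C.chebder(I[j])            # T_j'
        N[j, : len(d)] = d
    return M, N

K = 12
M, N = operational_matrices(K)
D = N @ N + N @ M          # u'' + x u': differentiate first, then apply N or M

a = np.zeros(K)
a[:6] = [1.0, -2.0, 0.5, 3.0, 0.0, 1.5]      # a degree-5 polynomial u = a T
lhs = a @ D                                   # coefficients of u'' + x u'

# Reference computed directly with NumPy's Chebyshev arithmetic
du = C.chebder(a)
ref = C.chebadd(C.chebder(du), C.chebmulx(du))
ref = np.pad(ref, (0, K - len(ref)))
print(np.max(np.abs(lhs - ref)))
```

With row vectors, operators compose from left to right, which is why \(\textsf{N}\) appears first in each product; a polynomial coefficient \(\sum_\ell f_\ell x^\ell\) would contribute \(\sum_\ell f_\ell \textsf{M}^\ell\) in the same way.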

Given f and \(s_i,\ i=1,\ldots ,\nu \), the problem’s right-hand side, we define the coefficient vectors of their polynomial approximations

from which the Tau right-hand side vector

can be constructed.

The system \(\textsf{T}\textsf{a}=\textsf{b}\) is overdetermined and of large dimension. Different solvers may produce the usual Tau solution or a weak form of it (e.g. a linear least squares solution).

We provide an algorithm that solves \(\textsf{T}\textsf{a}=\textsf{b}\) with a direct method, ensuring a solution that satisfies the initial/boundary conditions exactly and minimizes the error on the operator terms, that is, the Tau solution. This approach, outlined in Algorithm 1, is based on an adaptation of the LU factorization: a two-level approach. The pivoting process prioritizes two types of rows: (i) those that define boundary conditions, subject to a threshold on the growth factor, and (ii) those that specify lower-degree terms of the operator.
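The difference between the Tau solution and a least squares solution of the overdetermined system can be seen on a toy problem. The sketch below is not Algorithm 1 (no two-level pivoting is attempted); it assumes the one-dimensional problem \(u' = u\) on \([-1,1]\) with \(u(-1) = e^{-1}\), a Chebyshev basis, and a hypothetical helper `tau_system`. It keeps the boundary row and the lowest-degree operator rows to form a square system, which is the classical way of discarding the tau term, and compares the result with `numpy.linalg.lstsq` on the full system.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def tau_system(k):
    """Overdetermined system T a = b for u' - u = 0, u(-1) = exp(-1), degree k."""
    n = k + 1
    D = np.zeros((n, n))
    for j in range(n):
        e = np.zeros(n)
        e[j] = 1.0
        d = C.chebder(e)             # Chebyshev coefficients of T_j'
        D[: len(d), j] += d
        D[:, j] -= e                 # column j: coefficients of T_j' - T_j
    bc = (-1.0) ** np.arange(n)      # T_j(-1) = (-1)^j
    T = np.vstack([bc, D])           # boundary row first, then operator rows
    b = np.concatenate([[np.exp(-1.0)], np.zeros(n)])
    return T, b

k = 16
T, b = tau_system(k)
n = k + 1

# Tau solution: boundary row + the k lowest-degree operator rows (drop the tau row)
a_tau = np.linalg.solve(T[:n], b[:n])
# Weak alternative: least squares on the full overdetermined system
a_ls = np.linalg.lstsq(T, b, rcond=None)[0]

x = np.linspace(-1, 1, 201)
err_tau = np.max(np.abs(C.chebval(x, a_tau) - np.exp(x)))
err_ls = np.max(np.abs(C.chebval(x, a_ls) - np.exp(x)))
bc_tau = abs(C.chebval(-1.0, a_tau) - np.exp(-1.0))
print(err_tau, err_ls, bc_tau)
```

Both solutions are accurate here, but only the Tau solution satisfies the boundary condition by construction; the least squares solution balances it against the operator rows.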

3.2 Numerical Illustration

To illustrate the use of this approach, we solve Saint-Venant’s torsion problem for a prismatic bar with a square section

The analytic solution can be developed as

and we use a truncation of the series to evaluate the quality of the Tau approximate solution provided by Tau Toolbox using the traditional approach. Figure 1 shows, for problem (2), the difference surface between the truncated solution, using 99 terms, and the Tau approximation, for small polynomial degrees.
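For readers who want to reproduce this kind of reference solution, the sketch below evaluates the classical series for the torsion problem under an assumption about the scaling: it takes \(\Delta u = -2\) in \((-1,1)^2\) with \(u = 0\) on the boundary, which may differ from the normalization used in (2). The function name `saint_venant_series` is hypothetical, and the default of 99 terms simply mirrors the truncation mentioned above.

```python
import numpy as np

def saint_venant_series(x, y, n_terms=99):
    """Truncated series solution of Δu = -2 in (-1,1)^2 with u = 0 on the boundary.

    u = 1 - x^2 - (32/π^3) Σ_{m=0}^{n_terms-1} (-1)^m cos(λ x) cosh(λ y) / (k^3 cosh λ),
    with k = 2m+1 and λ = kπ/2.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    u = 1.0 - x**2
    for m in range(n_terms):
        k = 2 * m + 1
        lam = k * np.pi / 2
        # cosh(lam*y)/cosh(lam) rewritten with decaying exponentials (no overflow)
        ratio = (np.exp(lam * (y - 1)) + np.exp(-lam * (y + 1))) / (1 + np.exp(-2 * lam))
        u = u - (32 / np.pi**3) * (-1) ** m * np.cos(lam * x) * ratio / k**3
    return u

print(saint_venant_series(0.0, 0.0))
```

The term \(1 - x^2\) already satisfies the equation and the conditions at \(x = \pm 1\); the series is the harmonic correction that enforces \(u = 0\) at \(y = \pm 1\).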

The results clearly show the quality of the approximation, given the low polynomial degrees involved. Furthermore, the error exhibits a remarkable equioscillatory behavior [4].

On the other hand, the elapsed time and the memory needed to compute the approximation become large whenever moderate or high polynomial degrees are required. In Fig. 2 we depict the elapsed time required to compute a Tau approximate solution for the Saint-Venant problem using Algorithm 1 and a linear least squares approach. We also report the time required to unpack the problem and build the linear system. Clearly, the time grows rapidly with the polynomial degree.
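A rough count helps explain this growth. Assuming, for illustration, a two-dimensional problem with \(k_1 = k_2 = k\) and a dense factorization of the \(nr \times nc\) Tau matrix (Algorithm 1 may exploit more structure than this), the row and column counts of Sect. 3.1 give

\[ nc = (k+1)^2, \qquad nr = \mathcal{O}(k^2), \qquad \text{flops} = \mathcal{O}(nr\, nc^2) = \mathcal{O}(k^6), \qquad \text{memory} = \mathcal{O}(nr\, nc) = \mathcal{O}(k^4), \]

so doubling the degree k multiplies the factorization cost by roughly \(2^6 = 64\) and the storage by roughly \(2^4 = 16\).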

To overcome this limitation, a Gegenbauer-Tau implementation together with low-rank approximation techniques is used, as explained in the next section.

4 A Gegenbauer-Tau Approach with Low-Rank Approximation

4.1 Gegenbauer-Tau Approach

Following the ideas in [9], formulating the Tau method in terms of Gegenbauer polynomials and their associated sparse matrices can bring additional benefits in terms of computational efficiency and numerical stability.

First recall that Gegenbauer (also known as ultraspherical) polynomials \(C_j^{(\alpha )}\), \(j\ge 0\), belong to the more general class of Jacobi polynomials and are orthogonal on \([-1,1]\) with respect to the weight function \((1-x^2)^{\alpha -\frac{1}{2}}\). Although they can be used with any real parameter \(\alpha > -\frac{1}{2}\), \(\alpha \ne 0\), three particular cases are especially prominent in applications: the Legendre polynomials \(P_j(x)=C_j^{(\frac{1}{2})}(x)\); the Chebyshev polynomials of the second kind \(U_j(x)=C_j^{(1)}(x)\); and, as a limit case, the Chebyshev polynomials of the first kind \(T_j(x)=\lim _{\alpha \to 0^+} \frac{j}{2\alpha }C_{j}^{(\alpha )}(x)\), \(j\ge 1\).
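The three special cases can be checked numerically. The sketch below evaluates Gegenbauer polynomials through their standard three-term recurrence (the hypothetical helper `gegenbauer` returns one row per degree) and compares them with NumPy's Legendre and Chebyshev routines and with the trigonometric formula \(U_j(\cos t) = \sin((j+1)t)/\sin t\). The first-kind case is checked with a small but finite \(\alpha\), so it only agrees up to \(\mathcal{O}(\alpha)\).

```python
import numpy as np
from numpy.polynomial import chebyshev, legendre

def gegenbauer(j_max, alpha, x):
    """Values C_0^(alpha)(x), ..., C_{j_max}^(alpha)(x), one row per degree.

    Uses the standard three-term recurrence
    (j+1) C_{j+1} = 2 (j+alpha) x C_j - (j+2 alpha-1) C_{j-1},  C_{-1}=0, C_0=1.
    """
    x = np.asarray(x, dtype=float)
    vals = np.zeros((j_max + 1,) + x.shape)
    vals[0] = 1.0
    if j_max >= 1:
        vals[1] = 2 * alpha * x
    for j in range(1, j_max):
        vals[j + 1] = (2 * (j + alpha) * x * vals[j]
                       - (j + 2 * alpha - 1) * vals[j - 1]) / (j + 1)
    return vals

J = 8
x = np.linspace(-0.99, 0.99, 7)
theta = np.arccos(x)
eye = np.eye(J + 1)

# Legendre: P_j = C_j^(1/2)
P = np.array([legendre.legval(x, eye[j]) for j in range(J + 1)])
err_leg = np.max(np.abs(gegenbauer(J, 0.5, x) - P))

# Chebyshev, second kind: U_j = C_j^(1)
U = np.array([np.sin((j + 1) * theta) / np.sin(theta) for j in range(J + 1)])
err_U = np.max(np.abs(gegenbauer(J, 1.0, x) - U))

# Chebyshev, first kind as a limit: T_j ≈ j/(2 alpha) C_j^(alpha), j >= 1
alpha = 1e-8
j = np.arange(1, J + 1)[:, None]
T_lim = j / (2 * alpha) * gegenbauer(J, alpha, x)[1:]
T = np.array([chebyshev.chebval(x, eye[m]) for m in range(1, J + 1)])
err_T = np.max(np.abs(T_lim - T))
print(err_leg, err_U, err_T)
```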

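Of great relevance is the fact that Gegenbauer polynomials satisfy the derivative property [10]

\[ \frac{d}{dx} C_j^{(\alpha)}(x) = 2\alpha\, C_{j-1}^{(\alpha+1)}(x), \qquad j \ge 1. \]

Applying this relation \(\ell \) times, one gets

\[ \frac{d^\ell}{dx^\ell} C_j^{(\alpha)}(x) = 2^\ell (\alpha)_\ell\, C_{j-\ell}^{(\alpha+\ell)}(x), \qquad j \ge \ell, \]

where \((\alpha )_\ell =\alpha (\alpha +1)\cdots (\alpha +\ell -1)\) is the rising factorial (or Pochhammer symbol).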
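The \(\ell\)-fold derivative formula is easy to verify numerically. This sketch builds each \(C_j^{(\alpha)}\) as a NumPy power-basis `Polynomial` from the three-term recurrence (adequate for the modest degrees used here, since the power basis becomes ill-conditioned for large degrees); `gegenbauer_poly` and `pochhammer` are hypothetical helpers. It compares the third derivative of \(C_7^{(0.75)}\) with \(2^3 (0.75)_3\, C_4^{(3.75)}\) at a few points.

```python
import numpy as np
from numpy.polynomial import Polynomial

def gegenbauer_poly(j, alpha):
    """C_j^(alpha) in the power basis, from the three-term recurrence."""
    x = Polynomial([0.0, 1.0])
    prev, curr = Polynomial([0.0]), Polynomial([1.0])        # C_{-1}, C_0
    for n in range(j):
        nxt = (2 * (n + alpha) * x * curr - (n + 2 * alpha - 1) * prev) * (1.0 / (n + 1))
        prev, curr = curr, nxt
    return curr

def pochhammer(a, l):
    """Rising factorial (a)_l = a (a+1) ... (a+l-1); equals 1 when l = 0."""
    out = 1.0
    for i in range(l):
        out *= a + i
    return out

alpha, j, l = 0.75, 7, 3
lhs = gegenbauer_poly(j, alpha).deriv(l)
rhs = 2**l * pochhammer(alpha, l) * gegenbauer_poly(j - l, alpha + l)

xs = np.linspace(-1, 1, 11)
err = np.max(np.abs(lhs(xs) - rhs(xs))) / np.max(np.abs(rhs(xs)))   # relative error
print(err)
```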

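Let, for \(\alpha >-\frac{1}{2}\), \(\mathcal {C}^{(\alpha )}=[C_0^{(\alpha )}, C_1^{(\alpha )}, \ldots ]^T\) be a Gegenbauer polynomial basis, and

\[ u = \sum_{j=0}^{\infty} u_j\, C_j^{(\alpha)} = \textsf{u}\,\mathcal{C}^{(\alpha)} \tag{3} \]

be a formal series represented by the coefficient vector \(\textsf{u}=[u_0,u_1,\ldots ]\). Then the \(\ell \)th derivative

\[ \frac{d^\ell u}{dx^\ell} = \sum_{j=\ell}^{\infty} 2^\ell (\alpha)_\ell\, u_j\, C_{j-\ell}^{(\alpha+\ell)} = \textsf{u}\,\textsf{N}_\ell\,\mathcal{C}^{(\alpha+\ell)} \]

is represented by the sparse matrix operator

\[ \textsf{N}_\ell = 2^\ell (\alpha)_\ell\, \textsf{J}^\ell, \]

where

\[ \textsf{J} = \begin{bmatrix} 0 & & & \\ 1 & 0 & & \\ & 1 & 0 & \\ & & \ddots & \ddots \end{bmatrix} \]

is the shift operator.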
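The sketch below assembles \(\textsf{N}_\ell\) as a truncated \(n \times n\) matrix in the row-vector convention of (3), where \((\textsf{u}\textsf{N}_\ell)_m = 2^\ell(\alpha)_\ell\, u_{m+\ell}\), and checks it against an independent derivative obtained by interpolating u in Chebyshev form. The helper names are hypothetical, and a dense array is used for brevity where a real implementation would store the matrix in a sparse format.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def gegenbauer_vals(n, alpha, x):
    """Rows C_0^(alpha)(x), ..., C_{n-1}^(alpha)(x) via the three-term recurrence."""
    x = np.asarray(x, dtype=float)
    vals = np.zeros((n,) + x.shape)
    vals[0] = 1.0
    if n > 1:
        vals[1] = 2 * alpha * x
    for j in range(1, n - 1):
        vals[j + 1] = (2 * (j + alpha) * x * vals[j]
                       - (j + 2 * alpha - 1) * vals[j - 1]) / (j + 1)
    return vals

def derivative_matrix(n, alpha, l):
    """N_l = 2^l (alpha)_l J^l, truncated to n x n (row-vector convention)."""
    poch = np.prod([alpha + i for i in range(l)])   # rising factorial (alpha)_l
    J = np.eye(n, k=-1)                              # ones on the first subdiagonal
    return 2**l * poch * np.linalg.matrix_power(J, l)

n, alpha, l = 10, 1.0, 2
u = np.random.default_rng(1).standard_normal(n)     # coefficients in C^(alpha)

xt = np.linspace(-0.9, 0.9, 7)
du = u @ derivative_matrix(n, alpha, l)             # coefficients in C^(alpha+l)
approx = du @ gegenbauer_vals(n, alpha + l, xt)

# Independent check: interpolate u in Chebyshev form, then differentiate l times
xc = np.cos(np.pi * (np.arange(n) + 0.5) / n)
c = C.chebfit(xc, u @ gegenbauer_vals(n, alpha, xc), n - 1)
ref = C.chebval(xt, C.chebder(c, l))
err = np.max(np.abs(approx - ref)) / np.max(np.abs(ref))
print(err)
```

Only one diagonal of \(\textsf{N}_\ell\) is nonzero, so applying it costs \(\mathcal{O}(n)\) operations, in contrast with the dense differentiation matrices of a fixed basis.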

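However, since \(\textsf{N}_\ell \) takes coefficients in \(\mathcal {C}^{(\alpha )}\) into coefficients in \(\mathcal {C}^{(\ell +\alpha )}\), all terms of the differential problem must also be translated into coefficients in \(\mathcal {C}^{(\ell +\alpha )}\). This can be accomplished using the relation [10]

\[ C_j^{(\alpha)} = \frac{\alpha}{j+\alpha}\left( C_j^{(\alpha+1)} - C_{j-2}^{(\alpha+1)} \right), \qquad j \ge 0, \qquad C_{-2}^{(\alpha+1)} = C_{-1}^{(\alpha+1)} = 0, \]

which can be applied via the operator matrices \(\textsf{S}_\alpha \), defined by \(\mathcal{C}^{(\alpha)} = \textsf{S}_\alpha\, \mathcal{C}^{(\alpha+1)}\), whose only nonzero entries are \((\textsf{S}_\alpha)_{j,j} = \frac{\alpha}{j+\alpha}\) and \((\textsf{S}_\alpha)_{j,j-2} = -\frac{\alpha}{j+\alpha}\), so that \(u = \textsf{u}\,\textsf{S}_\alpha \textsf{S}_{\alpha+1}\cdots \textsf{S}_{\alpha+\ell-1}\,\mathcal{C}^{(\alpha+\ell)}\).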

This is worth exploiting: spectral methods usually represent differential operators by dense matrices, whereas here both \(\textsf{N}_\ell \) and the conversion matrices are sparse, at the cost of a change of polynomial basis.
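The conversion relation translates directly into a sparse matrix with two nonzero diagonals. The sketch below assumes the row-vector convention of (3), i.e. \(u = \textsf{u}\,\mathcal{C}^{(\alpha)} = \textsf{u}\,\textsf{S}_\alpha\,\mathcal{C}^{(\alpha+1)}\); the name `conversion_matrix` and its explicit entries are our illustration rather than Tau Toolbox code. It checks that the converted coefficients represent the same function and counts the nonzeros.

```python
import numpy as np

def gegenbauer_vals(n, alpha, x):
    """Rows C_0^(alpha)(x), ..., C_{n-1}^(alpha)(x) via the three-term recurrence."""
    x = np.asarray(x, dtype=float)
    vals = np.zeros((n,) + x.shape)
    vals[0] = 1.0
    if n > 1:
        vals[1] = 2 * alpha * x
    for j in range(1, n - 1):
        vals[j + 1] = (2 * (j + alpha) * x * vals[j]
                       - (j + 2 * alpha - 1) * vals[j - 1]) / (j + 1)
    return vals

def conversion_matrix(n, alpha):
    """S_alpha, n x n, row-vector convention: C^(alpha) = S_alpha C^(alpha+1).

    Row j encodes C_j^(alpha) = alpha/(j+alpha) (C_j^(alpha+1) - C_{j-2}^(alpha+1)).
    """
    S = np.zeros((n, n))
    for j in range(n):
        S[j, j] = alpha / (j + alpha)
        if j >= 2:
            S[j, j - 2] = -alpha / (j + alpha)
    return S

n, alpha = 12, 0.5
u = np.random.default_rng(2).standard_normal(n)   # coefficients in C^(alpha)
x = np.linspace(-1, 1, 9)

v = u @ conversion_matrix(n, alpha)               # same function, coefficients in C^(alpha+1)
err = np.max(np.abs(u @ gegenbauer_vals(n, alpha, x)
                     - v @ gegenbauer_vals(n, alpha + 1, x)))
nnz = np.count_nonzero(conversion_matrix(n, alpha))
print(err, nnz)
```

Because \(\textsf{S}_\alpha\) never raises the degree, truncating it to \(n \times n\) loses nothing, so the two coefficient vectors describe exactly the same polynomial.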

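Finally, to have an operational formulation of the Tau method in terms of Gegenbauer polynomials, we have to represent multiplication by x by an algebraic operator. This can be done from the characteristic three-term recurrence relation [10]

\[ x\, C_j^{(\alpha)}(x) = \frac{j+1}{2(j+\alpha)}\, C_{j+1}^{(\alpha)}(x) + \frac{j+2\alpha-1}{2(j+\alpha)}\, C_{j-1}^{(\alpha)}(x), \qquad j \ge 0, \]

with \(C_{-1}^{(\alpha )}=0\) and \(C_{0}^{(\alpha )}=1\). This can be written in matrix form as \(x\,\mathcal{C}^{(\alpha)} = \textsf{M}_\alpha\,\mathcal{C}^{(\alpha)}\), where \(\textsf{M}_\alpha \) is the tridiagonal matrix holding these coefficients.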

So, if \(u=\textsf{u}\mathcal {C}^{(\alpha )}\) as in (3), and \(D=\sum _{\ell =0}^{\nu } f_\ell \dfrac{d^\ell }{dx^\ell }\) is a differential operator with coefficients \(f_\ell =\sum _{i=0}^{n_\ell } f_{\ell ,i} x^i\), then

with

and

The resulting linear system \(\textsf{T}\textsf{a}=\textsf{b}\) has the usual trapezoidal shape and can be solved by LU factorization, where

With Gegenbauer polynomials, the matrix \(\textsf{T}\) is well-conditioned [11].

Another possibility is to use QR factorization, following Algorithm 2, which works on a triangular matrix similar to \(\textsf{T}\).
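A one-dimensional sketch shows how these pieces fit together. It assumes the model problem \(u'' = f\) on \([-1,1]\) with \(u(-1) = u(1) = 0\), starts from the Legendre basis (\(\alpha = 1/2\), chosen to sidestep the special Chebyshev limit case), and uses column coefficient vectors, i.e. the transposes of row-convention operators such as \(\textsf{N}_\ell\). The operator rows live in \(\mathcal{C}^{(5/2)}\), the right-hand side is converted there with two conversion matrices, and the square Tau system keeps the two boundary rows plus the lowest-degree operator rows. It is solved with `numpy.linalg.solve` (an LU factorization) rather than with Algorithm 2, and the helpers `conversion` and `tau_solve` are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as L

def conversion(n, alpha):
    """Column convention: coefficients in C^(alpha) -> coefficients in C^(alpha+1)."""
    S = np.zeros((n, n))
    for m in range(n):
        S[m, m] = alpha / (m + alpha)
        if m + 2 < n:
            S[m, m + 2] = -alpha / (m + 2 + alpha)
    return S

def tau_solve(n, f_leg):
    """Tau solution of u'' = f, u(-1) = u(1) = 0, with u in the Legendre basis C^(1/2)."""
    alpha = 0.5
    D2 = 4 * alpha * (alpha + 1) * np.eye(n, k=2)          # d^2/dx^2: C^(1/2) -> C^(5/2)
    S = conversion(n, alpha + 1) @ conversion(n, alpha)     # C^(1/2) -> C^(5/2)
    f = np.zeros(n)
    f[: len(f_leg)] = f_leg
    bc = np.vstack([(-1.0) ** np.arange(n), np.ones(n)])   # P_j(-1), P_j(1)
    T = np.vstack([bc, D2[: n - 2]])                        # drop the 2 highest operator rows
    b = np.concatenate([[0.0, 0.0], (S @ f)[: n - 2]])
    return np.linalg.solve(T, b)

# Manufactured problem: u = x^3 - x^5, so f = u'' = 6x - 20x^3
f_leg = L.poly2leg([0.0, 6.0, 0.0, -20.0])
a = tau_solve(12, f_leg)
x = np.linspace(-1, 1, 101)
err = np.max(np.abs(L.legval(x, a) - (x**3 - x**5)))
print(err)
```

Since the exact solution is a polynomial of degree 5, the Tau solution reproduces it up to rounding; the system matrix is banded apart from the two boundary rows, which is the structure that makes this formulation cheap to solve.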

4.2 Low-Rank Implementation

In this subsection, we briefly summarize how low-rank approximations are incorporated into the solution of partial differential problems. Partial differential operators lend themselves to low-rank approximation because they can be represented as sums of tensor products of univariate differential operators. For the moment, only the two-dimensional case is treated.

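Let us consider the two-dimensional version of problem (1)

\[ \begin{cases} \mathcal{D} u = f & \text{in } \Omega, \\ \mathcal{C}_i u = s_i, & i = 1, \dots, \nu, \end{cases} \]

for \(\Omega \subset \mathbb {R}^2\), \(u(x, y) \in \mathbb {F}(\Omega )\), and

\[ \mathcal{D} = \sum_{i=0}^{\nu_2} \sum_{j=0}^{\nu_1} p_{i,j}(x,y)\, \frac{\partial^{i+j}}{\partial y^{i}\, \partial x^{j}} \]
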
a linear operator, where \(\nu _1\) is the differential order of \(\mathcal {D}\) in the x variable and \(\nu _2\) in the y variable, and \(p_{i,j}(x,y)\) are functions defined on \(\Omega \). A low-rank approximation for a bivariate function \(p_{i,j}\) (tau.Polynomial2) can be expressed by a sum of outer products of univariate polynomials (tau.Polynomial1):

with \(0\le i \le \nu _2\) and \(0 \le j \le \nu _1\). As a consequence, the partial differential operator \(\mathcal {D}\) can be expressed by a low-rank representation of rank k as

where \(c_j(y)\) and \(r_j(x)\) belong to the tau.Polynomial1 class.
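The separable representation can be illustrated with a generic rank-revealing procedure. The sketch below assumes samples of a coefficient function on a tensor Chebyshev grid and uses Gaussian elimination with complete pivoting, a fully pivoted variant of the cross approximation of [6] and a strategy related to the one used in Chebfun2 [8]; we do not claim that tau.Polynomial2 uses exactly this procedure, and `cross_approximation` is a hypothetical name. Columns of the first factor sample the \(c_s(y)\) and rows of the second sample the \(r_s(x)\).

```python
import numpy as np

def cross_approximation(F, tol=1e-13):
    """Greedy low-rank factorization F ≈ cols @ rows by Gaussian elimination
    with complete pivoting (a fully pivoted cross approximation)."""
    E = np.array(F, dtype=float)
    scale = np.max(np.abs(E))
    cols, rows = [], []
    for _ in range(min(E.shape)):
        i, j = np.unravel_index(np.argmax(np.abs(E)), E.shape)
        pivot = E[i, j]
        if abs(pivot) <= tol * scale:          # residual is negligible: stop
            break
        cols.append(E[:, j] / pivot)          # samples of c_s(y)
        rows.append(E[i, :].copy())           # samples of r_s(x)
        E -= np.outer(cols[-1], rows[-1])     # rank-one update of the residual
    m, n = E.shape
    Cf = np.column_stack(cols) if cols else np.zeros((m, 0))
    Rf = np.vstack(rows) if rows else np.zeros((0, n))
    return Cf, Rf

# Sample p(x, y) on a Chebyshev grid: rows indexed by y, columns by x
pts = np.cos(np.pi * (np.arange(64) + 0.5) / 64)
X, Y = np.meshgrid(pts, pts)            # X[a, b] = pts[b], Y[a, b] = pts[a]

C1, R1 = cross_approximation(np.cos(X + Y))   # cos(x+y) = cos x cos y - sin x sin y
G = np.exp(-(X - Y) ** 2)
C2, R2 = cross_approximation(G)
rank_cos, rank_gauss = C1.shape[1], C2.shape[1]
err_gauss = np.max(np.abs(G - C2 @ R2))
print(rank_cos, rank_gauss, err_gauss)
```

The stopping test on the largest residual entry means the returned rank adapts to the smoothness of the coefficient: exactly 2 for \(\cos(x+y)\), and a modest number for the Gaussian.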

Thus, a sum of tensor products of linear ordinary differential operators is built from a separable representation of the partial differential operator. Each ordinary differential operator is then discretized with the Gegenbauer-Tau spectral approach, and finally a \(\nu _2 \times \nu _1\) discretized generalized Sylvester matrix equation is solved to provide an approximate solution to the problem. A detailed explanation of these steps can be found in [9, 11]. This procedure brings benefits in terms of computational efficiency and numerical stability.
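The final linear-algebra step can be illustrated with a deliberately naive solver. Assuming the discretized operator has the form \(\sum_k \textsf{A}_k \textsf{X} \textsf{B}_k^T = \textsf{F}\), with \(\textsf{X}\) the matrix of bivariate coefficients, the sketch below vectorizes the equation through Kronecker products. The helper `solve_generalized_sylvester` is hypothetical and costs \(\mathcal{O}((mn)^3)\); it only shows the structure, whereas practical solvers use Bartels-Stewart-type algorithms (for the two-term case \(AX + XB = Q\), SciPy offers `scipy.linalg.solve_sylvester`).

```python
import numpy as np

def solve_generalized_sylvester(terms, F):
    """Solve sum_k A_k X B_k^T = F by vectorization (dense; small problems only).

    Uses vec(A X B^T) = (B kron A) vec(X), with column-major (Fortran) vec.
    """
    K = sum(np.kron(B, A) for A, B in terms)
    x = np.linalg.solve(K, F.reshape(-1, order="F"))
    return x.reshape(F.shape, order="F")

rng = np.random.default_rng(3)
m, n = 6, 5
A1 = rng.standard_normal((m, m)) + m * np.eye(m)
B2 = rng.standard_normal((n, n)) + n * np.eye(n)
A3 = rng.standard_normal((m, m))
B3 = rng.standard_normal((n, n))
terms = [(A1, np.eye(n)), (np.eye(m), B2), (0.1 * A3, B3)]   # a rank-3 operator
F = rng.standard_normal((m, n))

X = solve_generalized_sylvester(terms, F)
residual = np.max(np.abs(sum(A @ X @ B.T for A, B in terms) - F))
print(residual)
```

Each pair \((\textsf{A}_k, \textsf{B}_k)\) plays the role of one tensor-product term of the separable operator, so the number of terms equals the rank of the operator approximation.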

4.3 Numerical Illustration

We begin by solving the Saint-Venant problem with Tau Toolbox:

The code is intuitive and self-explanatory. The problem is set up in the library by calling `tau.Problem2`. By default, the domain is \([-1,1]\) in each variable and ChebyshevT is the basis for all variables. The result is a Tau object `u` computed with the `solve` functionality of Tau Toolbox:

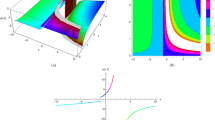

The computations were carried out using a low-rank approximation of rank 33, even though the discretization involved a \(257\times 257\) matrix. The approximate solution and the error are depicted in Fig. 3.
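A back-of-the-envelope count shows why this matters. Assuming the rank-33 approximation is stored as two \(257 \times 33\) factor matrices (any diagonal scaling ignored),

\[ 257^2 = 66\,049 \quad \text{versus} \quad 2 \times 257 \times 33 = 16\,962, \qquad \frac{16\,962}{66\,049} \approx 0.26, \]

so the factored form needs about a quarter of the storage of the full matrix, and a matrix-vector product with it is cheaper by a similar factor.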

In Fig. 4 we show the performance achieved in computing the low-rank approximate solution with the Gegenbauer-Tau approach. For very high polynomial degrees there is no further gain, since the rank of the problem remains unchanged. As expected, the computation time increases with the degree of the approximation, but it is much lower than that of the traditional approach.

Another example is the partial differential problem

with exact solution \(u(x,t)=e^{tx} \cos (x + t)\). An excerpt of the code that solves the problem follows:

The solution of problem (5) is computed to almost machine precision (see Fig. 5), working on matrix structures with rank 12 to approximate the operators:

5 Conclusion

In this work we have introduced Tau Toolbox, a MATLAB/Python toolbox for the solution of integro-differential problems; presented implementation details on how to solve two-dimensional PDEs using low-rank approximations and Gegenbauer polynomials; and illustrated how simple Tau Toolbox is to use in the solution of PDEs, as well as the efficiency of the implemented approach.

References

[1] Mortensen, M.: Shenfun: high performance spectral Galerkin computing platform. J. Open Source Softw. 3(31), 1071 (2018)

[2] Trefethen, L.N.: Computing numerically with functions instead of numbers. Math. Comput. Sci. 1, 9–19 (2007)

[3] Driscoll, T.A., Hale, N., Trefethen, L.N.: Chebfun Guide. Pafnuty Publications, Oxford (2014)

[4] Ortiz, E.L., Samara, H.: Numerical solution of partial differential equations with variable coefficients with an operational approach to the tau method. Comput. Math. Appl. 10(1), 5–13 (1984)

[5] Matos, J., Rodrigues, M.J., Vasconcelos, P.B.: New implementation of the Tau method for PDEs. J. Comput. Appl. Math. 164, 555–567 (2004)

[6] Bebendorf, M.: Adaptive cross approximation of multivariate functions. Constr. Approx. 34, 149–179 (2011)

[7] Hackbusch, W.: Hierarchical Matrices: Algorithms and Analysis. Springer, Berlin (2015)

[8] Townsend, A., Trefethen, L.N.: An extension of Chebfun to two dimensions. SIAM J. Sci. Comput. 35(6), 495–518 (2013)

[9] Olver, S., Townsend, A.: A fast and well-conditioned spectral method. SIAM Rev. 55(3), 462–489 (2013)

[10] Olver, F.W.: NIST Handbook of Mathematical Functions Hardback and CD-ROM. Cambridge University Press, Cambridge (2010)

[11] Townsend, A., Olver, S.: The automatic solution of partial differential equations using a global spectral method. J. Comput. Phys. 299, 106–123 (2015)

Acknowledgements

The authors were partially supported by CMUP, member of LASI, which is financed by national funds through FCT – Fundação para a Ciência e a Tecnologia, I.P., under the projects with reference UIDB/00144/2020 and UIDP/00144/2020.

Funding

Open access funding provided by FCT|FCCN (b-on).


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lima, N.J., Matos, J.M.A. & Vasconcelos, P.B. Solving Partial Differential Problems with Tau Toolbox. Math.Comput.Sci. 18, 8 (2024). https://doi.org/10.1007/s11786-024-00580-3
