Abstract

In this article we study the bivariate truncated moment problem (TMP) of degree 2k on reducible cubic curves. First, we show that, after applying an affine linear transformation, every such TMP is equivalent to a TMP on one of 8 canonical forms of the curve. The case of the union of three parallel lines was solved in Zalar (Linear Algebra Appl 649:186–239, 2022. https://doi.org/10.1016/j.laa.2022.05.008), while the degree 6 cases were solved in Yoo (Integral Equ Oper Theory 88:45–63, 2017). Second, we characterize, in terms of concrete numerical conditions, the existence of a solution to the TMP in two of the remaining cases, namely a union of a line and a circle \(y(ay+x^2+y^2)=0, a\in {\mathbb {R}}{\setminus } \{0\}\), and a union of a line and a parabola \(y(x-y^2)=0\). In both cases we also determine the number of atoms in a minimal representing measure.

1 Introduction

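Given a real 2-dimensional sequence

$$\begin{aligned} \beta \equiv \beta ^{(2k)}=\{\beta _{i,j}\}_{i,j\in {\mathbb {Z}}_+,\; 0\le i+j\le 2k} \end{aligned}$$

of degree 2k and a closed subset K of \({\mathbb {R}}^2\), the truncated moment problem (K-TMP) supported on K for \(\beta ^{(2k)}\) asks to characterize the existence of a positive Borel measure \(\mu \) on \({\mathbb {R}}^2\) with support in K, such that

$$\begin{aligned} \beta _{i,j}=\int _{K}x^iy^j\, d\mu \quad \text {for}\quad i,j\in {\mathbb {Z}}_+,\; 0\le i+j\le 2k. \end{aligned}$$(1.1)

If such a measure exists, we say that \(\beta ^{(2k)}\) has a representing measure supported on K and \(\mu \) is its K-representing measure (K-rm).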

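In the degree-lexicographic order

$$\begin{aligned} 1,X,Y,X^2,XY,Y^2,\ldots ,X^k,X^{k-1}Y,\ldots ,Y^k \end{aligned}$$

of rows and columns, the moment matrix corresponding to \(\beta \) is equal to

$$\begin{aligned} {\mathcal {M}}(k)\equiv {\mathcal {M}}(k;\beta ):= \begin{pmatrix} {\mathcal {M}}[0,0] &{} {\mathcal {M}}[0,1] &{} \cdots &{} {\mathcal {M}}[0,k]\\ {\mathcal {M}}[1,0] &{} {\mathcal {M}}[1,1] &{} \cdots &{} {\mathcal {M}}[1,k]\\ \vdots &{} \vdots &{} \ddots &{} \vdots \\ {\mathcal {M}}[k,0] &{} {\mathcal {M}}[k,1] &{} \cdots &{} {\mathcal {M}}[k,k] \end{pmatrix}, \end{aligned}$$(1.2)

where

$$\begin{aligned} {\mathcal {M}}[i,j]:= \begin{pmatrix} \beta _{i+j,0} &{} \beta _{i+j-1,1} &{} \cdots &{} \beta _{i,j}\\ \beta _{i+j-1,1} &{} \beta _{i+j-2,2} &{} \cdots &{} \beta _{i-1,j+1}\\ \vdots &{} \vdots &{} \ddots &{} \vdots \\ \beta _{j,i} &{} \beta _{j-1,i+1} &{} \cdots &{} \beta _{0,i+j} \end{pmatrix}. \end{aligned}$$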

Let \({\mathbb {R}}[x,y]_{\le k}:=\{p\in {\mathbb {R}}[x,y]:\deg p\le k\}\) stand for the set of real polynomials in variables x, y of total degree at most k. For every \(p(x,y)=\sum _{i,j} a_{i,j}x^iy^j\in {\mathbb {R}}[x,y]_{\le k}\) we define its evaluation p(X, Y) on the columns of the matrix \({\mathcal {M}}(k)\) by replacing each capitalized monomial \(X^iY^j\) in \(p(X,Y)=\sum _{i,j} a_{i,j}X^iY^j\) by the column of \({\mathcal {M}}(k)\), indexed by this monomial. Then p(X, Y) is a vector from the linear span of the columns of \({\mathcal {M}}(k)\). If this vector is the zero one, i.e., all coordinates are equal to 0, then we say p is a column relation of \({\mathcal {M}}(k)\). A column relation p is nontrivial, if \(p\not \equiv 0\). We denote by \({{\mathcal {Z}}}(p):=\{(x,y)\in {\mathbb {R}}^2:p(x,y)=0\}\), the zero set of p. We say that the matrix \({\mathcal {M}}(k)\) is recursively generated (rg) if for \(p,q,pq\in {\mathbb {R}}[x,y]_{\le k}\) such that p is a column relation of \({\mathcal {M}}(k)\), it follows that pq is also a column relation of \({\mathcal {M}}(k)\). The matrix \({\mathcal {M}}(k)\) is p-pure, if the only column relations of \({\mathcal {M}}(k)\) are those determined recursively by p. We call a sequence \(\beta \) p-pure, if \(\mathcal M(k)\) is p-pure.
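The notions of moment matrix and column relation can be illustrated on a small atomic measure. The following is a minimal sketch, not taken from the paper: the atoms, the helper names (`monomials`, `moment_matrix`, `evaluate_on_columns`) and the choice \(k=3\) are all assumptions made for illustration. It builds \({\mathcal {M}}(3)\) in the degree-lexicographic order and checks that a cubic vanishing on the atoms gives a column relation, while the column Y itself is nonzero.

```python
from fractions import Fraction

def monomials(k):
    """Exponent pairs (i, j) of X^i Y^j with i + j <= k, degree-lexicographic order."""
    return [(d - j, j) for d in range(k + 1) for j in range(d + 1)]

def moment(atoms, i, j):
    """beta_{i,j} for the atomic measure with atoms (x, y, weight)."""
    return sum(w * x**i * y**j for (x, y, w) in atoms)

def moment_matrix(atoms, k):
    """M(k): the entry in row X^a Y^b and column X^c Y^d is beta_{a+c, b+d}."""
    idx = monomials(k)
    rows = [[moment(atoms, r[0] + c[0], r[1] + c[1]) for c in idx] for r in idx]
    return idx, rows

def evaluate_on_columns(rows, idx, poly):
    """p(X, Y): the combination of columns of M(k) given by {(i, j): coefficient}."""
    pos = {m: n for n, m in enumerate(idx)}
    return [sum(coef * row[pos[m]] for m, coef in poly.items()) for row in rows]

# Hypothetical atoms (x, y, weight), all lying on y(x - y^2) = 0.
atoms = [(Fraction(1), Fraction(1), Fraction(1)),
         (Fraction(4), Fraction(2), Fraction(1, 2)),
         (Fraction(-3), Fraction(0), Fraction(2)),
         (Fraction(5), Fraction(0), Fraction(1))]
idx, M3 = moment_matrix(atoms, 3)

cubic = {(1, 1): 1, (0, 3): -1}   # p(x, y) = xy - y^3 = y(x - y^2)
cubic_column = evaluate_on_columns(M3, idx, cubic)   # zero vector: column relation
y_column = evaluate_on_columns(M3, idx, {(0, 1): 1})  # not a column relation
```

Exact rational arithmetic is used so that "the zero vector" can be tested with equality rather than a numerical tolerance.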

A concrete solution to the TMP is a set of necessary and sufficient conditions for the existence of a K-representing measure \(\mu \), that can be tested in numerical examples. Among necessary conditions, \({\mathcal {M}}(k)\) must be positive semidefinite (psd) and rg [14, 25], and by [12], if the support \(\textrm{supp}(\mu )\) of \(\mu \) is a subset of \({{\mathcal {Z}}}(p)\) for a polynomial \(p\in {\mathbb {R}}[x,y]_{\le k}\), then p is a column relation of \({\mathcal {M}}(k)\). The bivariate K-TMP is concretely solved in the following cases:

(1) \(K={{\mathcal {Z}}}(p)\) for a polynomial p with \(1\le \deg p\le 2\). Assume that \(\deg p=2\). By applying an affine linear transformation it suffices to consider one of the canonical cases: \(x^2+y^2=1\), \(y=x^2\), \(xy=1\), \(xy=0\), \(y^2=y\). The case \(x^2+y^2=1\) is equivalent to the univariate trigonometric moment problem, solved in [13]. The other four cases were tackled in [13, 14, 15, 27] by applying the far-reaching flat extension theorem (FET) [12, Theorem 7.10] (see also [16, Theorem 2.19] and [34] for an alternative proof), which states that \(\beta ^{(2k)}\) admits a \(({{\,\textrm{rank}\,}}{\mathcal {M}}(k))\)-atomic rm if and only if \({\mathcal {M}}(k)\) is psd and admits a rank-preserving extension to a moment matrix \({\mathcal {M}}(k+1)\). For an alternative approach, with shorter proofs than the original ones, which reduces the problem to the univariate setting, see [4, Section 6] (for \(xy=0\)), [42] (for \(y^2=y\)), [43] (for \(xy=1\)) and [44] (for \(y=x^2\)). For \(\deg p=1\) the solution is [17, Proposition 3.11] and uses the FET, but it can also be derived in the univariate setting (see [44, Remark 3.3.(4)]).

(2) \(K={\mathbb {R}}^2\), \(k=2\) and \({\mathcal {M}}(2)\) is invertible. This case was first solved nonconstructively using convex geometry techniques in [29] and later on constructively in [22] by a novel rank reduction technique.

(3) K is one of \({{\mathcal {Z}}}(y-x^3)\) [26, 41], \({{\mathcal {Z}}}(y^2-x^3)\) [41], \({{\mathcal {Z}}}(y(y-a)(y-b))\) [38, 42], \(a,b\in {\mathbb {R}}{\setminus }\{0\}\), \(a\ne b\), or \({{\mathcal {Z}}}(xy^2-1)\) [43]. The main technique in [26] is the FET, while in [41,42,43] the reduction to the univariate TMP is applied.

(4) \({\mathcal {M}}(k)\) has a special feature called recursive determinateness [18] or extremality [19].

(5) \({\mathcal {M}}(3)\) satisfies symmetric cubic column relations which can only cause extremal moment problems. In order to satisfy the variety condition, another symmetric column relation must exist, and the solution was obtained by checking consistency [20].

(6) Non-extremal sextic TMPs with \({{\,\textrm{rank}\,}}{\mathcal {M}}(3)\le 8\) and with finite or infinite algebraic varieties [21].

(7) \({\mathcal {M}}(3)\) with reducible cubic column relations [39].

The solutions to the K-TMP, which are not concrete in the sense of definition from the previous paragraph, are known in the cases \(K={{\mathcal {Z}}}(y-q(x))\) and \(K={{\mathcal {Z}}}(yq(x)-1)\), where \(q\in {\mathbb {R}}[x]\). [26, Section 6] gives a solution in terms of the bound on the degree m for which the existence of a positive extension \(\mathcal M(k+m)\) of \({\mathcal {M}}(k)\) is equivalent to the existence of a rm. In [44] the bound on m is improved to \(m=\deg q-1\) for curves of the form \(y=q(x)\), \(\deg q\ge 3\), and to \(m=\ell +1\) for curves of the form \(yx^\ell =1\), \(\ell \in {\mathbb {N}}{\setminus }\{1\}\).

Classical works on the TMP include the monographs [2, 3, 33], while for recent developments in the area we refer the reader to [36]. Special cases of the TMP have also been considered in [6, 7, 24, 28, 31, 32], while [35] considers subspaces of the polynomial algebra and [8] the TMP for commutative \({\mathbb {R}}\)-algebras.

The motivation for this paper was to solve the TMP concretely on some reducible cubic curves, other than the case of three parallel lines solved in [42]. Applying an affine linear transformation we show that every such TMP is equivalent to the TMP on one of 8 canonical cases of reducible cubics of the form \(yc(x,y)=0\), where \(c\in {\mathbb {R}}[x,y]\), \(\deg c=2\). In this article we solve the TMP for the cases \(c(x,y)=ay+x^2+y^2\), \(a\in {\mathbb {R}}{\setminus } \{0\}\), and \(c(x,y)=x-y^2\), which we call the circular and the parabolic type, respectively. The main idea is to characterize the existence of a decomposition of \(\beta \) into the sum \(\beta ^{(\ell )}+\beta ^{(c)}\), where \(\beta ^{(\ell )}=\{\beta _{i,j}^{(\ell )}\}_{i,j\in {\mathbb {Z}}_+,\; 0\le i+j\le 2k}\) and \(\beta ^{(c)}=\{\beta _{i,j}^{(c)}\}_{i,j\in {\mathbb {Z}}_+,\; 0\le i+j\le 2k}\) admit an \({\mathbb {R}}\)-rm and a \({{\mathcal {Z}}}(c)\)-rm, respectively. Due to the form of the cubic \(yc(x,y)=0\), all but two moments of each of \(\beta ^{(\ell )}\) and \(\beta ^{(c)}\) are already fixed by the original sequence. The moments that are not fixed are \(\beta _{0,0}^{(\ell )}\), \(\beta _{1,0}^{(\ell )}\), \(\beta _{0,0}^{(c)}\), \(\beta _{1,0}^{(c)}\) in the circular type case and \(\beta _{0,0}^{(\ell )}\), \(\beta _{2k,0}^{(\ell )}\), \(\beta _{0,0}^{(c)}\), \(\beta _{2k,0}^{(c)}\) in the parabolic type case. Then, by an involved analysis, the existence of a decomposition \(\beta =\beta ^{(\ell )}+\beta ^{(c)}\) can be characterized in both cases. We also characterize the number of atoms in a minimal representing measure, i.e., a measure with the minimal number of atoms in the support.

1.1 Reader's Guide

The paper is organized as follows. In Sect. 2 we present some preliminary results needed to establish the main results of the paper. In Sect. 3 we show that to solve the TMP on every reducible cubic curve it is enough to consider 8 canonical type relations (see Proposition 3.1). In Sect. 4 we present the general procedure for solving the TMP on all but one of the canonical types and prove some results that apply to them. Then in Sects. 5 and 6 we specialize to the circular and the parabolic type relations and solve them concretely (see Theorems 5.1 and 6.1). In both cases we show, by numerical examples, that there are pure sequences \(\beta ^{(6)}\) with a psd \({\mathcal {M}}(3)\) but without a rm (see Examples 5.3 and 6.3).

2 Preliminaries

We write \({\mathbb {R}}^{n\times m}\) for the set of \(n\times m\) real matrices. For a matrix M we call the linear span of its columns a column space and denote it by \({{\mathcal {C}}}(M)\). The set of real symmetric matrices of size n will be denoted by \(S_n\). For a matrix \(A\in S_n\) the notation \(A\succ 0\) (resp. \(A\succeq 0\)) means A is positive definite (pd) (resp. positive semidefinite (psd)). We write \(\textbf{0}_{t_1,t_2}\) for a \(t_1\times t_2\) matrix with only zero entries and \(\textbf{0}_{t}=\textbf{0}_{t,t}\) for short, where \(t_1,t_2,t\in {\mathbb {N}}\). The notation \(E^{(\ell )}_{i,j}\), \(\ell \in {\mathbb {N}}\), stands for the usual \(\ell \times \ell \) coordinate matrix with the only nonzero entry at the position (i, j), which is equal to 1.

In the rest of this section let \(k\in {\mathbb {N}}\) and \(\beta \equiv \beta ^{ (2k)}=\{\beta _{i,j}\}_{i,j\in {\mathbb {Z}}_+,\; 0\le i+j\le 2k}\) be a bivariate sequence of degree 2k.

2.1 Moment Matrix

Let \({\mathcal {M}}(k)\) be the moment matrix of \(\beta \) (see (1.2)). Let \(Q_1, Q_2\) be subsets of the set \(\{X^iY^j:i,j \in {\mathbb {Z}}_+,\; 0\le i+j\le k\}\). We denote by \(({\mathcal {M}}(k))_{Q_1,Q_2}\) the submatrix of \({\mathcal {M}}(k)\) consisting of the rows indexed by the elements of \(Q_1\) and the columns indexed by the elements of \(Q_2\). In case \(Q:=Q_1=Q_2\), we write \((\mathcal M(k))_{Q}:=({\mathcal {M}}(k))_{Q,Q}\) for short.

2.2 Affine Linear Transformations

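The existence of representing measures is invariant under invertible affine linear transformations of the form

$$\begin{aligned} \phi (x,y):=(a+bx+cy,\; d+ex+fy),\quad (x,y)\in {\mathbb {R}}^2, \end{aligned}$$(2.1)

\(a,b,c,d,e,f\in {\mathbb {R}}\) with \(bf-ce \ne 0\). Namely, let \(L_{\beta }:\mathbb {R}[x,y]_{\le 2k}\rightarrow {\mathbb {R}}\) be the Riesz functional of the sequence \(\beta \) defined by

$$\begin{aligned} L_{\beta }(p):=\sum _{i,j} a_{i,j}\beta _{i,j},\quad \text {where}\quad p(x,y)=\sum _{i,j} a_{i,j}x^iy^j. \end{aligned}$$

We define \({\widetilde{\beta }}=\{{\widetilde{\beta }}_{i,j}\}_{i,j\in {\mathbb {Z}}_+,\; 0\le i+j\le 2k}\) by

$$\begin{aligned} {\widetilde{\beta }}_{i,j}=L_{\beta }\big ((a+bx+cy)^i\cdot (d+ex+fy)^j\big ). \end{aligned}$$

By [14, Proposition 1.9], \(\beta \) admits an (r-atomic) K-rm if and only if \({\widetilde{\beta }}\) admits an (r-atomic) \(\phi (K)\)-rm. We write \({\widetilde{\beta }}=\phi (\beta )\) and \(\mathcal M(k;{\widetilde{\beta }})=\phi ({\mathcal {M}}(k;\beta ))\).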
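The invariance can be checked directly on an atomic measure: applying the Riesz functional to the powers of the two affine forms should give the moments of the measure with atoms moved by \(\phi \). The sketch below is illustrative only; the atoms, the map `phi` and all function names are assumptions, and polynomials are stored as dictionaries from exponent pairs to coefficients.

```python
from fractions import Fraction

def moment(atoms, i, j):
    """beta_{i,j} for the atomic measure with atoms (x, y, weight)."""
    return sum(w * x**i * y**j for (x, y, w) in atoms)

def poly_mul(p, q):
    """Multiply polynomials stored as {(i, j): coefficient}."""
    out = {}
    for (i1, j1), c1 in p.items():
        for (i2, j2), c2 in q.items():
            key = (i1 + i2, j1 + j2)
            out[key] = out.get(key, 0) + c1 * c2
    return out

def poly_pow(p, n):
    out = {(0, 0): Fraction(1)}
    for _ in range(n):
        out = poly_mul(out, p)
    return out

def transformed_moment(beta, phi, i, j):
    """Riesz functional of beta applied to (a + bx + cy)^i (d + ex + fy)^j."""
    a, b, c, d, e, f = phi
    u = {(0, 0): a, (1, 0): b, (0, 1): c}
    v = {(0, 0): d, (1, 0): e, (0, 1): f}
    poly = poly_mul(poly_pow(u, i), poly_pow(v, j))
    return sum(coef * beta[m] for m, coef in poly.items())

# Hypothetical data: three atoms, k = 2, and phi(x, y) = (1 + 2x, x + y).
atoms = [(Fraction(1), Fraction(2), Fraction(1)),
         (Fraction(-1), Fraction(0), Fraction(3)),
         (Fraction(1, 2), Fraction(1), Fraction(2))]
k = 2
beta = {(i, j): moment(atoms, i, j)
        for i in range(2 * k + 1) for j in range(2 * k + 1 - i)}
phi = (1, 2, 0, 0, 1, 1)          # b*f - c*e = 2, so phi is invertible
a, b, c, d, e, f = phi
pushed = [(a + b * x + c * y, d + e * x + f * y, w) for (x, y, w) in atoms]
matches = all(transformed_moment(beta, phi, i, j) == moment(pushed, i, j)
              for (i, j) in beta)
```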

2.3 Generalized Schur Complements

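Let

$$\begin{aligned} M=\begin{pmatrix} A &{} B\\ C &{} D \end{pmatrix}\in {\mathbb {R}}^{(n+m)\times (n+m)} \end{aligned}$$

be a real matrix where \(A\in {\mathbb {R}}^{n\times n}\), \(B\in {\mathbb {R}}^{n\times m}\), \(C\in {\mathbb {R}}^{m\times n}\) and \(D\in {\mathbb {R}}^{m\times m}\). The generalized Schur complement [45] of A (resp. D) in M is defined by

$$\begin{aligned} M/A=D-CA^\dagger B\quad (\text {resp.}\; M/D=A-BD^\dagger C), \end{aligned}$$

where \(A^\dagger \) (resp. \(D^\dagger \)) stands for the Moore–Penrose inverse of A (resp. D).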

The following lemma will be frequently used in the proofs.

Lemma 2.1

Let \(n,m\in {\mathbb {N}}\) and

$$\begin{aligned} M=\begin{pmatrix} A &{} B\\ B^T &{} C \end{pmatrix}\in S_{n+m}, \end{aligned}$$

where \(A\in S_n\), \(B\in {\mathbb {R}}^{n\times m}\) and \(C\in S_m\). If \({{\,\textrm{rank}\,}}M={{\,\textrm{rank}\,}}A\), then the matrix equation

$$\begin{aligned} \begin{pmatrix} A\\ B^T \end{pmatrix}W=\begin{pmatrix} B\\ C \end{pmatrix}, \end{aligned}$$(2.2)

where \(W\in {\mathbb {R}}^{n\times m}\), is solvable and the solutions are precisely the solutions of the matrix equation \(AW=B\). In particular, \(W=A^{\dagger }B\) satisfies (2.2).

Proof

The assumption \({{\,\textrm{rank}\,}}M={{\,\textrm{rank}\,}}A\) implies that

$$\begin{aligned} \begin{pmatrix} A\\ B^T \end{pmatrix}W=\begin{pmatrix} B\\ C \end{pmatrix} \end{aligned}$$(2.3)

for some \(W\in {\mathbb {R}}^{n\times m}\). So the Eq. (2.2) is solvable. In particular, \(AW=B\). It remains to prove that any solution W to \(AW=B\) is also a solution to (2.3). Note that all the solutions of the equation \(A{\widetilde{W}}=B\) are

$$\begin{aligned} {\widetilde{W}}=A^\dagger B+Z, \end{aligned}$$(2.4)

where each column of \(Z\in {\mathbb {R}}^{n\times m}\) is an arbitrary vector from \(\ker A\). So W satisfying (2.3) is also of the form \(A^\dagger B+Z_0\) for some \(Z_0\in {\mathbb {R}}^{n\times m}\) with columns belonging to \(\ker A\). We have that

$$\begin{aligned} C=B^TW=B^T(A^\dagger B+Z_0)=B^TA^\dagger B+B^TZ_0=B^TA^\dagger B, \end{aligned}$$(2.5)

where we used the fact that each column of B belongs to \({{\mathcal {C}}}(A)\) and \(\ker (A)^\perp = {{\mathcal {C}}}(A).\) Replacing W with any \({\widetilde{W}}\) of the form (2.4) in the calculation (2.5) gives the same result, which proves the statement of the lemma. \(\square \)

The following theorem is a characterization of psd \(2\times 2\) block matrices.

Theorem 2.2

[1] Let

$$\begin{aligned} M=\begin{pmatrix} A &{} B\\ B^T &{} C \end{pmatrix}\in S_{n+m} \end{aligned}$$

be a real symmetric matrix where \(A\in S_n\), \(B\in {\mathbb {R}}^{n\times m}\) and \(C\in S_m\). Then:

(1) The following conditions are equivalent:

(a) \(M\succeq 0\).

(b) \(C\succeq 0\), \({{\mathcal {C}}}(B^T)\subseteq {{\mathcal {C}}}(C)\) and \(M/C\succeq 0\).

(c) \(A\succeq 0\), \({{\mathcal {C}}}(B)\subseteq {{\mathcal {C}}}(A)\) and \(M/A\succeq 0\).

(2) If \(M\succeq 0\), then

$$\begin{aligned} {{\,\textrm{rank}\,}}M= {{\,\textrm{rank}\,}}A+{{\,\textrm{rank}\,}}M/A={{\,\textrm{rank}\,}}C+{{\,\textrm{rank}\,}}M/C. \end{aligned}$$
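As a small worked instance of Theorem 2.2 with a singular block A (the matrix is chosen here for illustration and does not come from the paper), take \(n=2\), \(m=1\) and

$$\begin{aligned} M=\begin{pmatrix} 1 &{} 0 &{} 1\\ 0 &{} 0 &{} 0\\ 1 &{} 0 &{} 2 \end{pmatrix},\quad A=\begin{pmatrix} 1 &{} 0\\ 0 &{} 0 \end{pmatrix},\quad B=\begin{pmatrix} 1\\ 0 \end{pmatrix},\quad C=2. \end{aligned}$$

Here \(A^\dagger =A\), the column B lies in \({{\mathcal {C}}}(A)\), and, with \(M/A=C-B^TA^\dagger B\) and \(M/C=A-BC^{-1}B^T\) for this symmetric block form,

$$\begin{aligned} M/A=2-1=1\succeq 0,\qquad M/C=\begin{pmatrix} 1 &{} 0\\ 0 &{} 0 \end{pmatrix}-\frac{1}{2}\begin{pmatrix} 1 &{} 0\\ 0 &{} 0 \end{pmatrix}=\begin{pmatrix} \frac{1}{2} &{} 0\\ 0 &{} 0 \end{pmatrix}\succeq 0. \end{aligned}$$

So both (b) and (c) hold, \(M\succeq 0\), and \({{\,\textrm{rank}\,}}M=1+1=2\) whichever of the two decompositions in (2) is used.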

2.4 Extension Principle

Proposition 2.3

Let \({{\mathcal {A}}}\in S_n\) be positive semidefinite, Q a subset of the set \(\{1,\ldots ,n\}\) and \({{\mathcal {A}}}|_Q\) the restriction of \({{\mathcal {A}}}\) to the rows and columns from the set Q. If \({{\mathcal {A}}}|_Qv=0\) for a nonzero vector v, then \({{\mathcal {A}}}{\widehat{v}}=0\), where \(\widehat{v}\) is the vector whose only nonzero entries are in the rows from Q and whose restriction \(\widehat{v}|_Q\) to the rows from Q equals v.

Proof

See [25, Proposition 2.4] or [42, Lemma 2.4] for an alternative proof. \(\square \)
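A small numerical illustration of the extension principle, and of why positive semidefiniteness is needed, is sketched below. Both matrices and the helper names are hypothetical choices made for this example; indices are 0-based in the code.

```python
def restrict(A, Q):
    """Principal submatrix of A on the rows and columns listed in Q."""
    return [[A[r][c] for c in Q] for r in Q]

def mat_vec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def extend(v, Q, n):
    """Vector of length n that equals v on the rows from Q and 0 elsewhere."""
    v_hat = [0] * n
    for q, entry in zip(Q, v):
        v_hat[q] = entry
    return v_hat

# Gram matrix of the vectors (1, 0), (1, 0), (1, 1), hence psd.
A_psd = [[1, 1, 1], [1, 1, 1], [1, 1, 2]]
# Not psd: the principal 2x2 block on rows 1, 2 has determinant -1.
A_not_psd = [[1, 1, 0], [1, 1, 1], [0, 1, 0]]

Q, v = [0, 1], [1, -1]
v_hat = extend(v, Q, 3)

kernel_on_Q_psd = mat_vec(restrict(A_psd, Q), v)          # zero vector
extended_psd = mat_vec(A_psd, v_hat)                      # still the zero vector
kernel_on_Q_not_psd = mat_vec(restrict(A_not_psd, Q), v)  # zero vector
extended_not_psd = mat_vec(A_not_psd, v_hat)              # no longer zero
```

In the second matrix the restricted kernel vector does not extend, which is consistent with the proposition because that matrix is not positive semidefinite.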

2.5 Partially Positive Semidefinite Matrices and Their Completions

A partial matrix \(A=(a_{i,j})_{i,j=1}^n\) is a matrix of real numbers \(a_{i,j}\in {\mathbb {R}}\), where some of the entries are not specified.

A partial symmetric matrix \(A=(a_{i,j})_{i,j=1}^n\) is partially positive semidefinite (ppsd) (resp. partially positive definite (ppd)) if the following two conditions hold:

(1) \(a_{i,j}\) is specified if and only if \(a_{j,i}\) is specified and \(a_{i,j}=a_{j,i}\).

(2) All fully specified principal submatrices of A are psd (resp. pd).

For \(n\in {\mathbb {N}}\) write \([n]:=\{1,2,\ldots ,n\}\). We denote by \(A_{Q_1,Q_2}\) the submatrix of \(A\in {\mathbb {R}}^{n\times n}\) consisting of the rows indexed by the elements of \(Q_1\subseteq [n]\) and the columns indexed by the elements of \(Q_2\subseteq [n]\). In case \(Q:=Q_1=Q_2\), we write \(A_{Q}:=A_{Q,Q}\) for short.

It is well-known that a ppsd matrix \(A(\textbf{x})\) of the form as in Lemma 2.4 below admits a psd completion (this follows from the fact that the corresponding graph is chordal; see e.g., [5, 23, 30]). Since our results require additional information about the rank of the completion \(A(x_0)\) and the explicit interval of all admissible \(x_0\), we give a proof of Lemma 2.4 based on generalized Schur complements.

Lemma 2.4

Let \(A(\textbf{x})\) be a partially positive semidefinite symmetric matrix of size \(n\times n\) with the missing entries in the positions (i, j) and (j, i), \(1\le i<j\le n\). Let

Let

and

Then:

(i) \(A(x_{0})\) is positive semidefinite if and only if \(x_0\in [x_-,x_+]\).

(ii)

$$\begin{aligned} {{\,\textrm{rank}\,}}A(x_0)= \left\{ \begin{array}{rl} \max \big \{{{\,\textrm{rank}\,}}A_2, {{\,\textrm{rank}\,}}A_3\big \},&{} \text {for}\;x_0\in \{x_-,x_+\},\\[0.5em] \max \big \{{{\,\textrm{rank}\,}}A_2, {{\,\textrm{rank}\,}}A_3\big \}+1,&{} \text {for}\;x_0\in (x_-,x_+). \end{array}\right. \end{aligned}$$

(iii) The following statements are equivalent:

(a) \(x_-=x_+\).

(b) \(A_2/A_1=0\) or \(A_3/A_1=0\).

(c) \({{\,\textrm{rank}\,}}A_2={{\,\textrm{rank}\,}}A_1\) or \({{\,\textrm{rank}\,}}A_3={{\,\textrm{rank}\,}}A_1\).

Proof

We write

Let P be a permutation matrix, which changes the order of columns to

Then

Note that

Lemma 2.4 with the missing entries in the positions \((n-1,n)\) and \((n,n-1)\) was proved in [41, Lemma 2.11] using computations with generalized Schur complements under one additional assumption:

Here we explain why the assumption (2.7) can be removed from [41, Lemma 2.11]. The proof of [41, Lemma 2.11] is separated into two cases: \(A_2/A_1>0\) and \(A_2/A_1=0\). The case \(A_2/A_1=0\) does not use (2.7). Assume now that \(A_2/A_1>0\) or equivalently \({{\,\textrm{rank}\,}}A_2>{{\,\textrm{rank}\,}}A_1\). Invertibility of \(A_1\) (and by \(A_2/A_1>0\) also \(A_2\) is invertible) is used in the proof of [41, Lemma 2.11] for the application of the quotient formula ( [10])

where

However, the formula (2.8) has been generalized [9, Theorem 4] to noninvertible \(A_1\), \(A_2\), where all Schur complements are the generalized ones, under the conditions:

So if we show that the conditions (2.9) hold, the same proof as in [41, Lemma 2.11] can be applied in the case \(A_1\) is singular. From \(A_2\) (resp. \(A_3\)) being psd, \(a \in {{\mathcal {C}}}(A_1)\) (resp. \(b\in {{\mathcal {C}}}(A_1)\)) follows by Theorem 2.2, used for \((M,A):=(A_2,A_1)\) (resp. \((M,A):=(A_3,A_1)\)). The assumption \(A_2/A_1>0\) implies that \(\begin{pmatrix}a&\alpha \end{pmatrix}^T \notin {{\mathcal {C}}}(\begin{pmatrix} A_1&a^T \end{pmatrix}^T)\). Since \(a \in {{\mathcal {C}}}(A_1)\), it follows that \(\begin{pmatrix}0&1\end{pmatrix}^T \in {{\mathcal {C}}}(A_2)\). Hence, \(\begin{pmatrix}b&x\end{pmatrix}^T \in {{\mathcal {C}}}(A_2)\) for every \(x\in {\mathbb {R}}\), which concludes the proof of (2.9). \(\square \)
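To see the statement of Lemma 2.4 at work on a small case, the sketch below takes a hypothetical \(3\times 3\) partial matrix with unit diagonal, specified off-diagonal entries \(\frac{1}{2}\), and the entries (1, 3) and (3, 1) missing. The helper `psd_rank` is an illustrative routine, not the paper's method: it tests positive semidefiniteness exactly by repeated \(1\times 1\) Schur complements, in the spirit of Theorem 2.2. For this matrix \(\det A(x)=-(x-1)(x+\frac{1}{2})\), so psd completions are expected exactly for \(x\in [-\frac{1}{2},1]\), with rank 2 at the endpoints and rank 3 in the interior.

```python
from fractions import Fraction

def psd_rank(M):
    """Return (is_psd, rank) for a symmetric matrix with exact entries,
    using successive Schur complements of the leading 1x1 block."""
    S = [[Fraction(x) for x in row] for row in M]
    n, rank = len(S), 0
    for i in range(n):
        pivot = S[i][i]
        if pivot < 0:
            return False, None
        if pivot == 0:
            # A zero pivot forces the rest of its row to vanish.
            if any(S[i][j] != 0 for j in range(i + 1, n)):
                return False, None
            continue
        rank += 1
        for r in range(i + 1, n):
            factor = S[r][i] / pivot
            for c in range(i + 1, n):
                S[r][c] -= factor * S[i][c]
    return True, rank

def completion(x):
    """The partial matrix with the missing entries (1, 3), (3, 1) set to x."""
    half = Fraction(1, 2)
    return [[1, half, x], [half, 1, half], [x, half, 1]]

results = {x: psd_rank(completion(x))
           for x in (Fraction(-1), Fraction(-1, 2), Fraction(0), Fraction(1), Fraction(2))}
```

Both fully specified principal \(2\times 2\) blocks have rank 2 here, so the observed ranks 2 and 3 agree with part (ii) of the lemma.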

2.6 Hamburger TMP

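Let \(k\in {\mathbb {N}}\). For \(v=(v_0,\ldots ,v_{2k} )\in {\mathbb {R}}^{2k+1}\) we define the corresponding Hankel matrix as

$$\begin{aligned} A_{v}:=\left( v_{i+j} \right) _{i,j=0}^k= \begin{pmatrix} v_0 &{} v_1 &{} \cdots &{} v_k\\ v_1 &{} v_2 &{} \cdots &{} v_{k+1}\\ \vdots &{} \vdots &{} \ddots &{} \vdots \\ v_k &{} v_{k+1} &{} \cdots &{} v_{2k} \end{pmatrix}\in S_{k+1}. \end{aligned}$$

We denote by \(\mathbf {v_j}:=\left( v_{j+\ell } \right) _{\ell =0}^k\) the \((j+1)\)-th column of \(A_{v}\), \(0\le j\le k\), i.e.,

$$\begin{aligned} A_{v}=\begin{pmatrix} \mathbf {v_0}&\cdots&\mathbf {v_k} \end{pmatrix}. \end{aligned}$$

As in [11], the rank of v, denoted by \({{\,\textrm{rank}\,}}v\), is defined by

$$\begin{aligned} {{\,\textrm{rank}\,}}v= \left\{ \begin{array}{rl} k+1,&{} \text {if}\; A_{v}\; \text {is nonsingular},\\[0.5em] \min \big \{i:\mathbf {v_i}\in \textrm{span}\{\mathbf {v_0},\ldots ,\mathbf {v_{i-1}}\}\big \},&{} \text {if}\; A_{v}\; \text {is singular}. \end{array}\right. \end{aligned}$$

For \(m\le k\) we denote the upper left-hand corner \(\left( v_{i+j} \right) _{i,j=0}^m\in S_{m+1}\) of \(A_{v}\) of size \(m+1\) by \(A_{v}(m)\). A sequence v is called positively recursively generated (prg) if for \(r={{\,\textrm{rank}\,}}v\) the following two conditions hold:

- \(A_v(r-1)\succ 0\).

- If \(r<k+1\), denoting

$$\begin{aligned} (\varphi _0,\ldots ,\varphi _{r-1}):=A_{v}(r-1)^{-1}(v_r,\ldots ,v_{2r-1})^{T}, \end{aligned}$$(2.11)

the equality

$$\begin{aligned} v_j=\varphi _0v_{j-r}+\cdots +\varphi _{r-1}v_{j-1} \end{aligned}$$(2.12)

holds for \(j=r,\ldots ,2k\).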

The solution to the \({\mathbb {R}}\)-TMP is the following.

Theorem 2.5

[11, Theorems 3.9–3.10] For \(k\in {\mathbb {N}}\) and \(v=(v_0,\ldots ,v_{2k})\in {\mathbb {R}}^{2k+1}\) with \(v_0>0\), the following statements are equivalent:

(1) There exists an \({\mathbb {R}}\)-representing measure for v.

(2) There exists a \(({{\,\textrm{rank}\,}}A_v)\)-atomic \({\mathbb {R}}\)-representing measure for v.

(3) \(A_v\) is positive semidefinite and one of the following holds:

(a) \(A_v(k-1)\) is positive definite.

(b) \({{\,\textrm{rank}\,}}A_v(k-1)={{\,\textrm{rank}\,}}A_v\).

(4) v is positively recursively generated.
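Condition (3) of Theorem 2.5 is easy to test in exact arithmetic. The following minimal sketch does so for two hypothetical sequences with \(k=2\); the function names are illustrative, and `psd_rank` is an assumed helper that checks positive semidefiniteness by repeated \(1\times 1\) Schur complements rather than a routine from the cited works.

```python
from fractions import Fraction

def psd_rank(M):
    """Return (is_psd, rank) for a symmetric matrix with exact entries."""
    S = [[Fraction(x) for x in row] for row in M]
    n, rank = len(S), 0
    for i in range(n):
        pivot = S[i][i]
        if pivot < 0:
            return False, None
        if pivot == 0:
            if any(S[i][j] != 0 for j in range(i + 1, n)):
                return False, None
            continue
        rank += 1
        for r in range(i + 1, n):
            factor = S[r][i] / pivot
            for c in range(i + 1, n):
                S[r][c] -= factor * S[i][c]
    return True, rank

def hankel(v, m):
    """A_v(m): the upper left-hand corner of the Hankel matrix of size m + 1."""
    return [[v[i + j] for j in range(m + 1)] for i in range(m + 1)]

def has_real_line_measure(v):
    """Condition (3) of Theorem 2.5 for v = (v_0, ..., v_{2k}) with v_0 > 0."""
    k = (len(v) - 1) // 2
    is_psd, rank_full = psd_rank(hankel(v, k))
    if not is_psd:
        return False
    _, rank_sub = psd_rank(hankel(v, k - 1))
    # (a): A_v(k-1) has full rank k, or (b): the two ranks coincide.
    return rank_sub == k or rank_sub == rank_full

moments_of_two_atoms = [2, 1, 1, 1, 1]   # v_j = 0^j + 1^j, atoms at 0 and 1
perturbed = [1, 1, 1, 1, 2]              # psd Hankel matrix, but no measure
```

The second sequence has a psd Hankel matrix of rank 2 while \(A_v(1)\) has rank 1, so neither (a) nor (b) holds.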

2.7 TMP on the Unit Circle

The solution to the \({{\mathcal {Z}}}(x^2+y^2-1)\)-TMP is the following.

Theorem 2.6

[13, Theorem 2.1] Let \(p(x,y)=x^2+y^2-1\) and \(\beta :=\beta ^{(2k)}=(\beta _{i,j})_{i,j\in {\mathbb {Z}}_+,i+j\le 2k}\), where \(k\ge 2\). Then the following statements are equivalent:

(1) \(\beta \) has a \({\mathcal {Z}}(p)\)-representing measure.

(2) \(\beta \) has a \(({{\,\textrm{rank}\,}}{\mathcal {M}}(k))\)-atomic \({\mathcal {Z}}(p)\)-representing measure.

(3) \({\mathcal {M}}(k)\) is positive semidefinite and the relations \(\beta _{2+i,j}+\beta _{i,2+j}=\beta _{i,j}\) hold for every \(i,j\in {\mathbb {Z}}_+\) with \(i+j\le 2k-2\).
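The moment relations in condition (3) can be checked mechanically. The sketch below, with hypothetical rational atoms and illustrative function names, verifies them for a measure supported on the unit circle and shows that they fail once an atom off the circle is added. It only tests the relations; positive semidefiniteness of \({\mathcal {M}}(k)\) is automatic here because the moments come from a positive measure.

```python
from fractions import Fraction as F

def moments(atoms, k):
    """All beta_{i,j} with i + j <= 2k for atoms given as (x, y, weight)."""
    return {(i, j): sum(w * x**i * y**j for (x, y, w) in atoms)
            for i in range(2 * k + 1) for j in range(2 * k + 1 - i)}

def circle_relations_hold(beta, k):
    """beta_{2+i,j} + beta_{i,2+j} = beta_{i,j} for all i + j <= 2k - 2."""
    return all(beta[(i + 2, j)] + beta[(i, j + 2)] == beta[(i, j)]
               for i in range(2 * k - 1) for j in range(2 * k - 1 - i))

k = 3
on_circle = [(F(3, 5), F(4, 5), F(1)), (F(-4, 5), F(3, 5), F(2)),
             (F(0), F(1), F(1, 3)), (F(5, 13), F(-12, 13), F(1))]
off_circle = on_circle + [(F(1), F(1), F(1))]   # (1, 1) is not on the circle

relations_on = circle_relations_hold(moments(on_circle, k), k)
relations_off = circle_relations_hold(moments(off_circle, k), k)
```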

2.8 Parabolic TMP

We will need the following solution to the parabolic TMP (see [44, Theorem 3.7]).

Theorem 2.7

Let \(p(x,y)=x-y^2\) and \(\beta :=\beta ^{(2k)}=(\beta _{i,j})_{i,j\in {\mathbb {Z}}_+,i+j\le 2k}\), where \(k\ge 2\). Let

Then the following statements are equivalent:

(1) \(\beta \) has a \({\mathcal {Z}}(p)\)-representing measure.

(2) \(\beta \) has a \(({{\,\textrm{rank}\,}}{\mathcal {M}}(k))\)-atomic \({\mathcal {Z}}(p)\)-representing measure.

(3) \({\mathcal {M}}(k)\) is positive semidefinite, the relations \(\beta _{1+i,j}=\beta _{i,2+j}\) hold for every \(i,j\in {\mathbb {Z}}_+\) with \(i+j\le 2k-2\) and one of the following statements holds:

(a) \(\big ({\mathcal {M}}(k)\big )_{{{\mathcal {B}}}\setminus \{X^k\}}\) is positive definite.

(b) \({{\,\textrm{rank}\,}}\big ({\mathcal {M}}(k)\big )_{{{\mathcal {B}}}{\setminus } \{X^k\}} = {{\,\textrm{rank}\,}}{\mathcal {M}}(k).\)

(4) The relations \(\beta _{1+i,j}=\beta _{i,2+j}\) hold for every \(i,j\in {\mathbb {Z}}_+\) with \(i+j\le 2k-2\) and \(\gamma =(\gamma _0,\ldots ,\gamma _{4k})\), defined by \(\gamma _i=\beta _{\lfloor \frac{i}{2}\rfloor ,i\; \textrm{mod}\; 2}\), admits an \({\mathbb {R}}\)-representing measure.

Remark 2.8

The equivalence (3)\(\Leftrightarrow \)(4) is part of the proof of [44, Theorem 3.7].
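The univariate sequence \(\gamma \) in statement (4) has a simple interpretation: on the parabola \(x=y^2\) every monomial \(x^iy^j\) equals \(y^{2i+j}\), so \(\gamma _i\) is the i-th moment of the measure in the variable y. The following sketch checks this on hypothetical atoms; the names and data are assumptions made for illustration.

```python
from fractions import Fraction as F

def moments(atoms, k):
    """All beta_{i,j} with i + j <= 2k for atoms given as (x, y, weight)."""
    return {(i, j): sum(w * x**i * y**j for (x, y, w) in atoms)
            for i in range(2 * k + 1) for j in range(2 * k + 1 - i)}

def gamma_from_beta(beta, k):
    """gamma_i = beta_{floor(i/2), i mod 2} for i = 0, ..., 4k."""
    return [beta[(i // 2, i % 2)] for i in range(4 * k + 1)]

k = 2
# Hypothetical (y, weight) pairs; the atoms (y^2, y) lie on x = y^2.
y_atoms = [(F(1), F(1)), (F(-2), F(1, 2)), (F(1, 3), F(3))]
atoms = [(y * y, y, w) for (y, w) in y_atoms]

beta = moments(atoms, k)
gamma = gamma_from_beta(beta, k)
univariate = [sum(w * y**i for (y, w) in y_atoms) for i in range(4 * k + 1)]
parabola_relations = all(beta[(i + 1, j)] == beta[(i, j + 2)]
                         for i in range(2 * k - 1) for j in range(2 * k - 1 - i))
```

Note that \(\gamma \) has degree 4k, twice the degree of \(\beta \), which is why the bivariate problem of degree 2k reduces to a univariate one of degree 4k.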

3 TMP on Reducible Cubics: Case Reduction

In this section we show that to solve the TMP on reducible cubic curves it suffices, after applying an affine linear transformation, to solve the TMP on 8 canonical forms of curves.

Proposition 3.1

Let \(k\in {\mathbb {N}}\) and \(\beta := \beta ^{(2k)}= (\beta _{i,j})_{i,j\in {\mathbb {Z}}_+,i+j\le 2k}\). Assume \({\mathcal {M}}(k;\beta )\) does not satisfy any nontrivial column relation between columns indexed by monomials of degree at most 2, but it satisfies a column relation \(p(X,Y)=\textbf{0}\), where \(p\in {\mathbb {R}}[x,y]\) is a reducible polynomial with \(\deg p=3\). If \(\beta \) admits a representing measure, then there exists an invertible affine linear transformation \(\phi \) of the form (2.1) such that the moment matrix \(\phi \big (\mathcal M(k;\beta )\big )\) satisfies a column relation \(q(X,Y)=\textbf{0}\), where q has one of the following forms:

Parallel lines type: \(q(x,y)=y(a+y)(b+y)\), \(a,b\in {\mathbb {R}}\setminus \{0\}\), \(a\ne b\).

Circular type: \(q(x,y)=y(ay+x^2+y^2)\), \(a\in {\mathbb {R}}\setminus \{0\}\).

Parabolic type: \(q(x,y)=y(x-y^2).\)

Hyperbolic type 1: \(q(x,y)=y(1-xy)\).

Hyperbolic type 2: \(q(x,y)=y(x+y+axy)\), \(a\in {\mathbb {R}}\setminus \{0\}\).

Hyperbolic type 3: \(q(x,y)=y(ay+x^2-y^2)\), \(a\in {\mathbb {R}}\).

Intersecting lines type: \(q(x,y)=yx(y+1)\).

Mixed type: \(q(x,y)=y(1+ay+bx^2+cy^2)\), \(a,b,c\in {\mathbb {R}}\), \(b\ne 0\).

Remark 3.2

The names of the types of q in Proposition 3.1 come from the type of the conic \(\frac{q(x,y)}{y}=0\). The conic \(x+y+axy=0\), \(a\in {\mathbb {R}}{\setminus } \{0\}\), is a hyperbola, since the discriminant \(a^2\) is positive. Similarly, the conic \(ay+x^2-y^2=0\), \(a\in {\mathbb {R}}\), is a hyperbola, since its discriminant is equal to 4. Clearly, the conic \(ay+x^2+y^2=0\), \(a\in {\mathbb {R}}{\setminus } \{0\}\), is a circle with the center \((0,- \frac{a}{2})\) and radius \(\frac{|a|}{2}\).
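The discriminant computations in this remark can be reproduced with a few lines of code. This is a minimal sketch with illustrative function names: it classifies a conic \(Ax^2+Bxy+Cy^2+\cdots =0\) by the sign of \(B^2-4AC\) (ignoring degenerate cases such as pairs of lines) and completes the square for the circular type.

```python
from fractions import Fraction

def conic_kind(A, B, C):
    """Kind of A x^2 + B xy + C y^2 + (lower-order terms) = 0,
    decided only by the sign of the discriminant B^2 - 4AC."""
    disc = B * B - 4 * A * C
    if disc > 0:
        return "hyperbola"
    if disc == 0:
        return "parabola"
    return "ellipse"

def circle_centre_radius(a):
    """x^2 + y^2 + a y = 0  <=>  x^2 + (y + a/2)^2 = (a/2)^2, for a != 0."""
    a = Fraction(a)
    return (Fraction(0), -a / 2), abs(a) / 2

hyperbolic_2 = conic_kind(0, 3, 0)     # x + y + 3xy = 0, discriminant 9
hyperbolic_3 = conic_kind(1, 0, -1)    # a y + x^2 - y^2 = 0, discriminant 4
parabolic = conic_kind(0, 0, -1)       # x - y^2 = 0, discriminant 0
circular = conic_kind(1, 0, 1)         # a y + x^2 + y^2 = 0, discriminant -4
centre, radius = circle_centre_radius(-2)
```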

Now we prove Proposition 3.1.

Proof of Proposition 3.1

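Since p(x, y) is reducible, it is of the form \(p=p_1p_2\), where

$$\begin{aligned} p_1(x,y)=a_0+a_1x+a_2y\quad \text {with}\quad a_0,a_1,a_2\in {\mathbb {R}},\; (a_1,a_2)\ne (0,0),\quad \text {and}\quad p_2\in {\mathbb {R}}[x,y],\; \deg p_2=2. \end{aligned}$$

Without loss of generality we can assume that \(a_2\ne 0\), since otherwise we apply the affine linear transformation (alt) \((x,y)\mapsto (y,x)\) to exchange the roles of x and y. Since \(a_2\ne 0\), the alt

$$\begin{aligned} \phi _1(x,y)=(x,\; a_0+a_1x+a_2y) \end{aligned}$$

is invertible and hence \(\phi _1\big ({\mathcal {M}}(k;\beta )\big )\) satisfies a column relation of the form

$$\begin{aligned} Y(c_0+c_1X+c_2Y+c_3X^2+c_4XY+c_5Y^2)=\textbf{0}\quad \text {with}\quad c_0,\ldots ,c_5\in {\mathbb {R}}. \end{aligned}$$(3.1)

We separate two cases according to the value of \(c_3\).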

Case 1: \(c_3=0\). In this case (3.1) is equal to

$$\begin{aligned} Y(c_0+c_1X+c_2Y+c_4XY+c_5Y^2)=\textbf{0}. \end{aligned}$$(3.2)

If \(c_0=c_1=c_2=0\), then (3.2) is equal to \(c_4XY^2+c_5Y^3=\textbf{0}\). Since by assumption \(\beta \) and hence \(\phi _1(\beta )\) admit a rm, supported on

$$\begin{aligned} {{\mathcal {Z}}}(c_4xy^2+c_5y^3)={{\mathcal {Z}}}(y(c_4x+c_5y)), \end{aligned}$$

it follows by [12] that \(c_4 XY+c_5 Y^2=\textbf{0}\) is a nontrivial column relation in \(\phi _1\big ({\mathcal {M}}(k;\beta )\big )\). Hence, also \({\mathcal {M}}(k;\beta )\) satisfies a nontrivial column relation between columns indexed by monomials of degree at most 2, which is a contradiction with the assumption of the proposition. Therefore \((c_0,c_1,c_2)\ne (0,0,0).\)

Case 1.1: \(c_0\ne 0\). Dividing the relation in (3.2) by \(c_0\), we get:

$$\begin{aligned} Y(1+{\widetilde{c}}_1X+{\widetilde{c}}_2Y+{\widetilde{c}}_4XY+{\widetilde{c}}_5Y^2)=\textbf{0}. \end{aligned}$$(3.3)

Case 1.1.1: \({\widetilde{c}}_1=0\). In this case (3.3) is equivalent to:

$$\begin{aligned} Y(1+{\widetilde{c}}_2Y+{\widetilde{c}}_4XY+{\widetilde{c}}_5Y^2)=\textbf{0}. \end{aligned}$$(3.4)

Case 1.1.1.1: \({\widetilde{c}}_4=0\). In this case (3.4) is equivalent to

$$\begin{aligned} Y(1+{\widetilde{c}}_2Y+{\widetilde{c}}_5Y^2)=\textbf{0}. \end{aligned}$$

The quadratic equation \(1+{\widetilde{c}}_2 y+ {\widetilde{c}}_5 y^2=0\) must have two different real nonzero solutions. Otherwise \({{\mathcal {Z}}}(y(1+{\widetilde{c}}_2y+ {\widetilde{c}}_5y^2))\) is a union of at most two parallel lines, and it follows by [12] that there is a nontrivial column relation in \({\mathcal {M}}(k;\beta )\) between columns indexed by monomials of degree at most 2, which is a contradiction with the assumption of the proposition. So we have the parallel lines type relation from the proposition.

Case 1.1.1.2: \({\widetilde{c}}_4\ne 0\). In this case the alt

is invertible and applying it to \(\phi _1(\beta )\), we obtain:

Case 1.1.2: \({\widetilde{c}}_1\ne 0\). We apply the alt

to \(\phi _1(\beta )\) and obtain:

Case 1.1.2.1: \({\widehat{c}}_4\ne 0\). We apply the alt

to \((\phi _3\circ \phi _1)(\beta )\) and obtain:

Case 1.1.2.1.1: \(\breve{c}_2= 0\). In this case the relation in (3.7) is of the form

Applying the alt

to \((\phi _4\circ \phi _3\circ \phi _1)(\beta )\) we obtain:

Case 1.1.2.1.2: \(\breve{c}_2\ne 0\). We apply the alt

to \((\phi _4\circ \phi _3\circ \phi _1)(\beta )\) and obtain:

Case 1.1.2.2: \({\widehat{c}}_4= 0\). In this case (3.6) is equivalent to:

Case 1.1.2.2.1: \({\widetilde{c}}_2=0\). Applying the alt

to \((\phi _3\circ \phi _1)(\beta )\) we obtain:

Case 1.1.2.2.2: \({\widetilde{c}}_2\ne 0\). Applying the alt

to \((\phi _3\circ \phi _1)(\beta )\), we obtain:

Further on, the relation in (3.9) is equivalent to

Finally, applying the alt

to \((\phi _8\circ \phi _3\circ \phi _1)(\beta )\), we obtain:

Case 1.2: \(c_0= 0\). In this case (3.2) is equivalent to:

Assume that \(c_1=0\). Since by assumption \(\beta \) and hence \(\phi _1(\beta )\) admits a rm, supported on

it follows by [12] that \(c_2Y+c_4 XY+c_5 Y^2=\textbf{0}\) is a nontrivial column relation in \(\phi _1\big ({\mathcal {M}}(k;\beta )\big )\). Hence, also \({\mathcal {M}}(k;\beta )\) satisfies a nontrivial column relation between columns indexed by monomials of degree at most 2, which is a contradiction with the assumption of the proposition. Hence, \(c_1\ne 0.\) Applying the alt \((x,y)\mapsto (c_1x,y)\) to \(\phi _1(\beta )\), we obtain a sequence with the moment matrix satisfying the column relation of the form (3.6) and we can proceed as in the Case 1.1.2 above.

Case 2: \(c_3\ne 0\). Applying the alt

to \(\phi _1(\beta )\), we obtain:

Case 2.1: \({\widetilde{c}}_1= 0\). In this case (3.12) is equivalent to:

Case 2.1.1: \(c_0= 0\). Dividing the relation in (3.13) by \(\frac{|c_3|}{c_3}\), (3.13) is equivalent to:

Applying the alt

to \((\phi _{10}\circ \phi _1)(\beta )\), we obtain:

Case 2.1.1.1: \(\breve{c}_5=0\). Since by assumption of the proposition, \((\phi _{11}\circ \phi _{10}\circ \phi _1)(\beta )\) admits a rm, supported on \({{\mathcal {Z}}}(y(\breve{c}_2y+x^2))\), \(\breve{c}_2\) in (3.15) cannot be equal to 0. Indeed, \(\breve{c}_2=0\) would imply that \({{\mathcal {Z}}}(y(\breve{c}_2y+x^2))= {{\mathcal {Z}}}(yx^2)={{\mathcal {Z}}}(yx)\) and by [12], \(XY=\textbf{0}\) would be a nontrivial column relation in \((\phi _{11}\circ \phi _{10}\circ \phi _1)\big (\mathcal M(k;\beta )\big )\). Hence, also \({\mathcal {M}}(k;\beta )\) would satisfy a nontrivial column relation between columns indexed by monomials of degree at most 2, which is a contradiction with the assumption of the proposition. Since \(\breve{c}_2\ne 0\), after applying the alt

to \((\phi _{11}\circ \phi _{10}\circ \phi _1)(\beta )\), we obtain:

Case 2.1.1.2: \(\breve{c}_5>0\). Applying the alt

to \((\phi _{11}\circ \phi _{10}\circ \phi _1)(\beta )\) we obtain:

Case 2.1.1.3: \(\breve{c}_5<0\). Applying the alt

to \((\phi _{11}\circ \phi _{10}\circ \phi _1)(\beta )\), we obtain:

Case 2.1.2: \(c_0\ne 0\). Dividing the relation in (3.13) by \(c_0\), (3.13) is equivalent to:

Applying the alt

to \((\phi _{10}\circ \phi _1)(\beta ),\) we obtain:

Case 2.2: \({\widetilde{c}}_1\ne 0\). Dividing the relation in (3.12) by \(\frac{|c_3|}{c_3}\), (3.12) is equivalent to:

Now we apply the alt

to \((\phi _{10}\circ \phi _1)(\beta )\) and obtain:

Case 2.2.1: \(\breve{c}_0= 0\). In this case the relation in (3.18) becomes equal to the relation in (3.14) from the Case 2.1.1, so we can proceed as above.

Case 2.2.2: \(\breve{c}_0\ne 0\). Dividing the relation in (3.18) by \(\breve{c}_0\), it becomes equal to the relation in (3.16) from the Case 2.1.2, so we can proceed as above. \(\square \)

4 Solving the TMP on Canonical Reducible Cubic Curves

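Let \(\beta =\{\beta _i\}_{i\in {\mathbb {Z}}_+^2,|i|\le 2k}\) be a sequence of degree 2k, \(k\in {\mathbb {N}}\), and

$$\begin{aligned} {{\mathcal {C}}}=\{1,X,Y,X^2,XY,Y^2,\ldots ,X^k,X^{k-1}Y,\ldots ,Y^k\} \end{aligned}$$

the set of rows and columns of the moment matrix \({{\mathcal {M}}}(k;\beta )\) in the degree-lexicographic order. Let

$$\begin{aligned} p(x,y)=y\cdot c(x,y)\in {\mathbb {R}}[x,y]_{\le 3} \end{aligned}$$

be a polynomial of degree 3 in one of the canonical forms from Proposition 3.1. Hence, c(x, y) is a polynomial of degree 2. \(\beta \) will have a \({{\mathcal {Z}}}(p)\)-rm if and only if it can be decomposed as

$$\begin{aligned} \beta =\beta ^{(\ell )}+\beta ^{(c)}, \end{aligned}$$(4.3)

where

$$\begin{aligned} \beta ^{(\ell )}:=\{\beta _i^{(\ell )}\}_{i\in {\mathbb {Z}}_+^2,|i|\le 2k}\; \text {has a representing measure on}\; y=0,\qquad \beta ^{(c)}:=\{\beta _i^{(c)}\}_{i\in {\mathbb {Z}}_+^2,|i|\le 2k}\; \text {has a representing measure on}\; {{\mathcal {Z}}}(c), \end{aligned}$$

and the sum in (4.3) is a component-wise sum. On the level of moment matrices, (4.3) is equivalent to

$$\begin{aligned} {{\mathcal {M}}}(k;\beta )={{\mathcal {M}}}(k;\beta ^{(\ell )})+{{\mathcal {M}}}(k;\beta ^{(c)}). \end{aligned}$$(4.4)

Note that if \(\beta \) has a \({{\mathcal {Z}}}(p)\)-rm, then the matrix \(\mathcal M(k;\beta )\) satisfies the relation \(p(X,Y)=\textbf{0}\) and it must be rg, i.e.,

$$\begin{aligned} X^iY^jp(X,Y)=\textbf{0}\quad \text {for}\quad i,j\in {\mathbb {Z}}_+,\; i+j\le k-3. \end{aligned}$$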

We write \(\vec {X}^{(0,k)}:=( 1 ,X,\ldots ,X^k)\). Let \({{\mathcal {T}}}\subseteq {{\mathcal {C}}}\) be a subset, such that the columns from \({{\mathcal {T}}}\) span the column space \({{\mathcal {C}}}({{\mathcal {M}}}(k;\beta ))\) and

In this new order of rows and columns, (4.4) becomes equivalent to

We write

By the form of the atoms, we know that \({\widetilde{{{\mathcal {M}}}}}(k;\beta ^{(\ell )})\) and \({\widetilde{{{\mathcal {M}}}}}(k;\beta ^{(c)})\) will be of the forms

for some Hankel matrix \(A\in S_{k+1}.\) Define matrix functions \({{\mathcal {F}}}:S_{k+1}\rightarrow S_{\frac{(k+1)(k+2)}{2}}\) and \({{\mathcal {H}}}:S_{k+1}\rightarrow S_{k+1}\) by

Using (4.9), (4.7) becomes equivalent to

for some Hankel matrix \(A\in S_{k+1}\).

Lemma 4.1

Assume the notation above. The sequence \(\beta =\{\beta _i\}_{i\in {\mathbb {Z}}_+^2,|i|\le 2k}\), where \(k\ge 3\), has a \({{\mathcal {Z}}}(p)\)-representing measure if and only if there exists a Hankel matrix \(A\in S_{k+1}\), such that:

(1) The sequence with the moment matrix \({{\mathcal {F}}}(A)\) has a \({{\mathcal {Z}}}(c)\)-representing measure.

(2) The sequence with the moment matrix \({{\mathcal {H}}}(A)\) has an \({\mathbb {R}}\)-representing measure.

Proof

First we prove the implication \((\Rightarrow )\). If \(\beta \) has a \({{\mathcal {Z}}}(p)\)-rm \(\mu \), then \(\mu \) is supported on the union of the line \(y=0\) and the conic \(c(x,y)=0\). Since the moment matrix, generated by the measure supported on \(y=0\), can be nonzero only when restricted to the columns and rows indexed by \({\vec {X}}^{(0,k)}\), it follows that the moment matrix generated by the restriction \(\mu |_{\{c=0\}}\) (resp. \(\mu |_{\{y=0\}}\)) of the measure \(\mu \) to the conic \(c(x,y)=0\) (resp. line \(y=0\)), is of the form \({{\mathcal {F}}}(A)\) (resp. \({{\mathcal {H}}}(A)\oplus \textbf{0}_{\frac{k(k+1)}{2}}\)) for some Hankel matrix \(A\in S_{k+1}\).

It remains to establish the implication \((\Leftarrow )\). Let \(\mathcal M^{(c)}(k)\) (resp. \({\mathcal {M}}^{(\ell )}(k)\)) be the moment matrix generated by the measure \(\mu _1\) (resp. \(\mu _2\)) supported on \({{\mathcal {Z}}}(c)\) (resp. \(y=0\)) such that

respectively, where P is as in (4.6). The equalities (4.12) imply that \({{\mathcal {M}}}(k;\beta )={{\mathcal {M}}}^{(c)}(k)+{{\mathcal {M}}}^{(\ell )}(k)\). Hence, \(\mu _1+\mu _2\) is a representing measure for \(\beta \). Since the measure \(\mu _1+\mu _2\) is supported on the curve \({{\mathcal {Z}}}(c)\cup \{y=0\}={{\mathcal {Z}}}(p)\), the implication \((\Leftarrow )\) holds. \(\square \)
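The observation behind the implication \((\Rightarrow )\) can be checked on a toy example. The following is a minimal sketch, assuming a finitely atomic measure on the line \(y=0\); the helper `moment` and the chosen atoms are hypothetical and serve only as an illustration. It confirms that every moment \(\beta _{i,j}\) with \(j\ge 1\) vanishes, so only the block indexed by \( 1 ,X,\ldots ,X^k\) can be nonzero, and that this block is Hankel.

```python
from fractions import Fraction

def moment(atoms, i, j):
    """Moment beta_{i,j} = sum of w * x^i * y^j over atoms (x, y, w)."""
    return sum(w * x**i * y**j for (x, y, w) in atoms)

# Hypothetical atoms (x, y, weight), all lying on the line y = 0.
line_atoms = [(Fraction(-1), Fraction(0), Fraction(1, 2)),
              (Fraction(2), Fraction(0), Fraction(3)),
              (Fraction(1, 3), Fraction(0), Fraction(1))]

k = 3
# Moments of degree <= 2k that involve a positive power of y.
mixed = [moment(line_atoms, i, j)
         for i in range(2 * k + 1) for j in range(1, 2 * k + 1 - i)]
# Pure x-moments: the block of the moment matrix indexed by 1, X, ..., X^k.
hankel_block = [[moment(line_atoms, r + c, 0) for c in range(k + 1)]
                for r in range(k + 1)]
```

Exact rational arithmetic is used so that the vanishing of the mixed moments is not blurred by rounding.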

Lemma 4.2

Assume the notation above and let the sequence \(\beta =\{\beta _i\}_{i\in {\mathbb {Z}}_+^2,|i|\le 2k}\), where \(k\ge 3\), admit a \({{\mathcal {Z}}}(p)\)-representing measure. Let \(A:=A_{\big (\beta _{0,0}^{(c)},\beta _{1,0}^{(c)},\ldots ,\beta _{2k,0}^{(c)}\big )}\in S_{k+1}\) be a Hankel matrix such that \({{\mathcal {F}}}(A)\) admits a \({{\mathcal {Z}}}(c)\)-representing measure and \({{\mathcal {H}}}(A)\) admits a \({\mathbb {R}}\)-representing measure. Let c(x, y) be of the form

If:

-

(1)

\(a_{00}\ne 0\), then

$$\begin{aligned} \beta _{i,0}^{(c)} = - \frac{1}{a_{00}} (a_{01}\beta _{i,1}+a_{02}\beta _{i,2}+a_{11}\beta _{i+1,1}) \quad \text {for }i=0,\ldots ,2k-2. \end{aligned}$$ -

(2)

\(a_{10}\ne 0\), then

$$\begin{aligned} \beta _{i,0}^{(c)} = - \frac{1}{a_{10}} (a_{01}\beta _{i-1,1}+a_{02}\beta _{i-1,2}+a_{11}\beta _{i,1}) \quad \text {for }i=1,\ldots ,2k-1. \end{aligned}$$ -

(3)

\(a_{20}\ne 0\), then

$$\begin{aligned} \beta _{i,0}^{(c)} = - \frac{1}{a_{20}} (a_{01}\beta _{i-2,1}+a_{02}\beta _{i-2,2}+a_{11}\beta _{i-1,1}) \quad \text {for }i=2,\ldots ,2k. \end{aligned}$$

Proof

By Lemma 4.1, \({{\mathcal {F}}}(A)\) has a \({{\mathcal {Z}}}(c)\)-rm for some Hankel matrix \(A\in S_{k+1}\). Hence, \({{\mathcal {F}}}(A)\) satisfies the rg relations \(X^iY^jc(X,Y)=\textbf{0}\) for \(i,j\in {\mathbb {Z}}_+\), \(i+j\le k-2\). Let us assume that \(a_{00}\ne 0\) and \(a_{10}=a_{20}=0\). In particular, \({{\mathcal {F}}}(A)\) satisfies the relations

Observing the rows \( 1 ,X,\ldots ,X^k\) of \({{\mathcal {F}}}(A)\), the relations (4.14) imply that

Using the forms of \({\widetilde{{{\mathcal {M}}}}}(k;\beta )\) and \({{\mathcal {F}}}(A)\) (see (4.8) and (4.10)), it follows that \(\beta _{i,1}^{(c)}=\beta _{i,1}\) and \(\beta _{j,2}^{(c)}=\beta _{j,2}\) for each i, j. Using this in (4.15) proves the statement 1 of the lemma. The proofs of the statements 2 and 3 are analogous. \(\square \)
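The mechanism of Lemma 4.2 can be illustrated numerically. The sketch below assumes the circular conic \(c(x,y)=ay+x^2+y^2\) with the illustrative value \(a=-2\), so that \(x^2=-ay-y^2\) on \({{\mathcal {Z}}}(c)\) and hence \(\beta _{i,0}^{(c)}=-(a\beta _{i-2,1}+\beta _{i-2,2})\) for \(i=2,\ldots ,2k\). The atoms and the helper `moment` are hypothetical. The code recovers the pure x-moments of the conic part from the moments of the whole measure, using that atoms on \(y=0\) do not contribute to moments containing a positive power of y.

```python
from fractions import Fraction as Fr

def moment(atoms, i, j):
    """Moment beta_{i,j} of the atomic measure given as (x, y, weight) triples."""
    return sum(w * x**i * y**j for (x, y, w) in atoms)

a = Fr(-2)  # illustrative choice: the circle x^2 + y^2 - 2y = 0
conic_atoms = [(Fr(1), Fr(1), Fr(1)), (Fr(-1), Fr(1), Fr(2)),
               (Fr(0), Fr(2), Fr(1, 2)), (Fr(3, 5), Fr(9, 5), Fr(1)),
               (Fr(4, 5), Fr(2, 5), Fr(3))]
line_atoms = [(Fr(2), Fr(0), Fr(1)), (Fr(-3), Fr(0), Fr(1, 4))]
all_atoms = conic_atoms + line_atoms

k = 3
# Moments of the conic part recovered from the moments of the whole measure.
recovered = {i: -(a * moment(all_atoms, i - 2, 1) + moment(all_atoms, i - 2, 2))
             for i in range(2, 2 * k + 1)}
# The same moments computed directly from the conic atoms.
direct = {i: moment(conic_atoms, i, 0) for i in range(2, 2 * k + 1)}
```

The moments \(\beta _{0,0}^{(c)}\) and \(\beta _{1,0}^{(c)}\) are not reached by this recursion, which matches the remark that two entries of A remain free.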

Lemma 4.2 states that for all canonical relations from Proposition 3.1 except for the mixed type relation, all but two entries of the Hankel matrix A from Lemma 4.1 are uniquely determined by \(\beta \). The following lemma gives the smallest candidate for A in Lemma 4.1 with respect to the usual Loewner order of matrices.

Lemma 4.3

Assume the notation above and let \(\beta =\{\beta _i\}_{i\in {\mathbb {Z}}_+^2,|i|\le 2k}\), where \(k\ge 3\), be a sequence of degree 2k. Assume that \(\widetilde{{\mathcal {M}}}(k;\beta )\) is positive semidefinite and satisfies the column relations (4.5). Then:

-

(1)

\({{\mathcal {F}}}(A)\succeq 0\) for some \(A\in S_{k+1}\) if and only if \(A\succeq A_{12}(A_{22})^{\dagger } (A_{12})^T\).

-

(2)

\({{\mathcal {F}}}\big (A_{12}(A_{22})^{\dagger } (A_{12})^T\big )\succeq 0\) and \({{\mathcal {H}}}\big (A_{12}(A_{22})^{\dagger } (A_{12})^T\big ) \succeq 0\).

-

(3)

\({{\mathcal {F}}}\big (A_{12}(A_{22})^{\dagger } (A_{12})^T\big )\) satisfies the column relations \(X^iY^jc(X,Y)=0\) for \(i,j\in {\mathbb {Z}}_+\) such that \(i+j\le k-2\).

-

(4)

We have that

$$\begin{aligned} {{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta )&= {{\,\textrm{rank}\,}}A_{22}+ {{\,\textrm{rank}\,}}\big (A_{11}-A_{12}(A_{22})^{\dagger } (A_{12})^T\big )\\&={{\,\textrm{rank}\,}}{{\mathcal {F}}}\big (A_{12}(A_{22})^{\dagger } (A_{12})^T\big )+ {{\,\textrm{rank}\,}}{{\mathcal {H}}}\big (A_{12}(A_{22})^{\dagger } (A_{12})^T\big ). \end{aligned}$$

Proof

By the equivalence between (1a) and (1b) of Theorem 2.2 used for \((M,A)=(\widetilde{{\mathcal {M}}}(k;\beta ),A_{11})\) and \((M,A)=(\big (\widetilde{\mathcal {M}}(k;\beta )\big )_{\vec {X}^{(0,k)}\cup {{\mathcal {T}}}},A_{11})\), it follows in particular that

and

where

Using the equivalence between (1a) and (1b) of Theorem 2.2 again for the pairs \((M,A)=({{\mathcal {F}}}(A),A)\) and \((M,A)=(\big ({{\mathcal {F}}}(A)\big )_{\vec {X}^{(0,k)}\cup {{\mathcal {T}}}},A)\), it follows that

Since \({{\mathcal {F}}}(A)\succeq 0\) implies, in particular, that \(\big ({{\mathcal {F}}}(A)\big )_{\vec {X}^{(0,k)}\cup {{\mathcal {T}}}}\succeq 0\), (4.18) implies that

Claim. \(A_{\min }= {\widetilde{A}}_{\min }\).

Proof of Claim

By (4.18) and (4.19), it suffices to prove that \({{\mathcal {F}}}({\widetilde{A}}_{\min })\succeq 0\). By definition of \({{\mathcal {T}}}\) and the relations \(X^iY^jp(X,Y)=X^iY^{j+1}c(X,Y)=\textbf{0}\), \(i,j\in {\mathbb {Z}}_+,i+j\le k-3\), which hold in \(\widetilde{{{\mathcal {M}}}}(k;\beta )\), it follows, in particular, that

(4.16) and (4.20) together imply that

(4.16) and (4.21) can be equivalently expressed as

We have that

where I is the identity matrix of the same size as \(A_{22}\) and we used (4.22) in the second equality. This proves the Claim. \(\square \)

Using (4.17), (4.18) and Claim, the statements 1 and 2 follow. By Theorem 2.2.2, used for \((M,A)=(\widetilde{{{\mathcal {M}}}}(k;\beta ),A_{11})\), we have that

By (4.20) and

it follows by Theorem 2.2, used for \((M,A)=(B,A_{22})\), that \({{\,\textrm{rank}\,}}B={{\,\textrm{rank}\,}}A_{22}\). Using this and the Claim, (4.23) implies the statement 4.

Since \(\widetilde{{\mathcal {M}}}(k;\beta )\) satisfies the relations (4.5), it follows that the restriction \(\big ({{\mathcal {F}}}({\widetilde{A}}_{\min })\big )_{{{\mathcal {C}}}{\setminus } \vec {X}^{(0,k)},{{\mathcal {C}}}} \) satisfies the column relations \(X^iY^jc(X,Y)=\textbf{0}\) for \(i,j\in {\mathbb {Z}}_+\) such that \(i+j\le k-2\). By Proposition 2.3, these relations extend to \({{\mathcal {F}}}({\widetilde{A}}_{\min })\), which proves 3. \(\square \)

Remark 4.4

By Lemmas 4.1–4.3, solving the \({{\mathcal {Z}}}(p)\)-TMP for the sequence \(\beta =\{\beta _i\}_{i\in {\mathbb {Z}}_+^2,|i|\le 2k}\), where \(k\ge 3\), with p being any but the mixed type relation from Proposition 3.1, the natural procedure is the following:

-

(1)

First compute \(A_{\min }:=A_{12}(A_{22})^{\dagger }(A_{12})^T\). By Lemma 4.3.(3), there is one entry of \(A_{\min }\) which might need to be changed to obtain a Hankel structure. Namely, in the notation (4.13), if:

-

(a)

\(a_{00}\ne 0\), then the value of \((A_{\min })_{k,k}\) must be made equal to \((A_{\min })_{k-1,k+1}\).

-

(b)

\(a_{10}\ne 0\), then the value of \((A_{\min })_{1,k+1}\) must be made equal to \((A_{\min })_{2,k}\).

-

(c)

\(a_{20}\ne 0\), then the value of \((A_{\min })_{2,2}\) must be made equal to \((A_{\min })_{3,1}\).

Let \({\widehat{A}}_{\min }\) be the matrix obtained from \(A_{\min }\) after performing the changes described above.

-

(2)

Check whether \({{\mathcal {F}}}({\widehat{A}}_{\min })\) and \({{\mathcal {H}}}({\widehat{A}}_{\min })\) admit a \({{\mathcal {Z}}}(c)\)-rm and a \({\mathbb {R}}\)-rm, respectively. If the answer is yes, \(\beta \) admits a \({{\mathcal {Z}}}(p)\)-rm. Otherwise, by Lemma 4.2, there are two antidiagonals of the Hankel matrix \({\widehat{A}}_{\min }\) which can be varied so that the matrices \({{\mathcal {F}}}({\widehat{A}}_{\min })\) and \({{\mathcal {H}}}({\widehat{A}}_{\min })\) will admit the corresponding measures. Namely, in the notation (4.13), if:

-

(a)

\(a_{00}\ne 0\), then the last two antidiagonals of \({\widehat{A}}_{\min }\) can be changed.

-

(b)

\(a_{10}\ne 0\), then the left-upper and the right-lower corner of \({\widehat{A}}_{\min }\) can be changed.

-

(c)

\(a_{20}\ne 0\), then the first two antidiagonals of \({\widehat{A}}_{\min }\) can be changed.

To solve the \({{\mathcal {Z}}}(p)\)-TMP for \(\beta \) one needs to characterize, when it is possible to change these antidiagonals in such a way to obtain a matrix \(\breve{A}_{\min }\), such that \({{\mathcal {F}}}(\breve{A}_{\min })\) and \({{\mathcal {H}}}(\breve{A}_{\min })\) admit a \({{\mathcal {Z}}}(c)\)-rm and a \({\mathbb {R}}\)-rm, respectively.

In Sects. 5 and 6 we solve concretely the TMP on reducible cubic curves in the circular and parabolic type form (see the classification from Proposition 3.1). The parallel lines type form was solved in [42], while the hyperbolic type forms will be solved in the forthcoming work [40].
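The Hankel repair in step (1) of Remark 4.4 can be sketched as follows for the case \(a_{20}\ne 0\). This is only an illustration under stated assumptions: the matrix `A_min` below is a made-up symmetric matrix that is Hankel except in the entry (2, 2), and the function names `is_hankel` and `repair_case_c` are hypothetical. Indices in the code are 0-based, while the text uses 1-based indices.

```python
def is_hankel(A):
    """True if every entry equals its neighbour on the same antidiagonal."""
    n = len(A)
    return all(A[r][c] == A[r + 1][c - 1]
               for r in range(n - 1) for c in range(1, n))

def repair_case_c(A):
    """Case (c): set the (2,2) entry (1-based) equal to the (3,1) entry."""
    B = [row[:] for row in A]
    B[1][1] = B[2][0]
    return B

# Made-up example: a Hankel matrix built from m, then perturbed at (2,2).
m = [5, 1, 3, 2, 4, 1, 6]
A_min = [[m[r + c] for c in range(4)] for r in range(4)]
A_min[1][1] = 2  # the only entry that breaks the Hankel structure

A_hat = repair_case_c(A_min)
```

Only one entry is touched, so the repaired matrix agrees with `A_min` everywhere else.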

5 Circular Type Relation: \(p(x,y)=y(ay+x^2+y^2)\), \(a\in {\mathbb {R}}{\setminus }\{0\}\)

In this section we solve the \({{\mathcal {Z}}}(p)\)-TMP for the sequence \(\beta =\{\beta _{i,j}\}_{i,j\in {\mathbb {Z}}_+,i+j\le 2k}\) of degree 2k, \(k\ge 3\), where \(p(x,y)=y(ay+x^2+y^2)\), \(a\in {\mathbb {R}}{\setminus }\{0\}\). Assume the notation from Sect. 4. If \(\beta \) admits a \({{\mathcal {Z}}}(p)\)-rm, then \({\mathcal {M}}(k;\beta )\) must satisfy the relations

In the presence of all column relations (5.1), the column space \({{\mathcal {C}}}({\mathcal {M}}(k;\beta ))\) is spanned by the columns in the set

where

Let \({\widetilde{{{\mathcal {M}}}}}(k;\beta )\) be as in (4.9). Let

As described in Remark 4.4, \(A_{\min }\) might need to be changed to

where

Let \({{\mathcal {F}}}(\textbf{A})\) and \({{\mathcal {H}}}(\textbf{A})\) be as in (4.10). Write

Define also the matrix function

The solution to the cubic circular type relation TMP is the following.

Theorem 5.1

Let \(p(x,y)=y(ay+x^2+y^2)\), \(a\in {\mathbb {R}}{\setminus }\{0\}\), and \(\beta =(\beta _{i,j})_{i,j\in {\mathbb {Z}}_+,i+j\le 2k}\), where \(k\ge 3\). Assume also the notation above. Then the following statements are equivalent:

-

(1)

\(\beta \) has a \({{\mathcal {Z}}}(p)\)-representing measure.

-

(2)

\(\widetilde{{\mathcal {M}}}(k;\beta )\) is positive semidefinite, the relations

$$\begin{aligned} a\beta _{i,2+j} + \beta _{2+i,1+j} = - \beta _{i,3+j} \quad \text {hold for every }i,j\in {\mathbb {Z}}_+\text { with }i+j\le 2k-3 \end{aligned}$$(5.6)

and one of the following statements holds:

-

(a)

\(\eta =0\) and one of the following holds:

-

(i)

\({{\,\textrm{rank}\,}}({{\mathcal {H}}}(A_{\min }))_{\vec {X}^{(0,k-1)}}=k\).

-

(ii)

\({{\,\textrm{rank}\,}}(H_2)_{\vec {X}^{(1,k-1)}}={{\,\textrm{rank}\,}}H_2\).

-

(b)

\(\eta >0\), \(H_2\) is positive semidefinite and defining a real number

$$\begin{aligned} u_0 = \beta _{1,0}-(A_{\min })_{1,2} -(h_{12}^{(1)})^T (H_{22})^{\dagger } h_{12}^{(2)}, \end{aligned}$$(5.7)

a function

$$\begin{aligned} h(\textbf{t})= \sqrt{ (H_1/H_{22}- \textbf{t}) (H_2/H_{22}) } \end{aligned}$$(5.8)

and a set

$$\begin{aligned} {\mathcal {I}}&= \Big \{(t,\sqrt{\eta t})\in {\mathbb {R}}_+\times {\mathbb {R}}_+:\sqrt{\eta t}= u_{0}+h(t)\Big \}\\&\quad \cup \Big \{(t,\sqrt{\eta t})\in {\mathbb {R}}_+\times {\mathbb {R}}_+ :\sqrt{\eta t}= u_{0}-h(t)\Big \}\\&\quad \cup \Big \{(t,- \sqrt{\eta t})\in {\mathbb {R}}_+\times {\mathbb {R}}_-:- \sqrt{\eta t}= u_{0}+h(t)\Big \}\\&\quad \cup \Big \{(t,- \sqrt{\eta t})\in {\mathbb {R}}_+\times {\mathbb {R}}_- :- \sqrt{\eta t}= u_{0}-h(t)\Big \}, \end{aligned}$$(5.9)

one of the following holds:

-

(i)

The set \({\mathcal {I}}\) has two elements and \(H_2\) is positive definite.

-

(ii)

\({\mathcal {I}}=\{({\tilde{t}},{\tilde{u}})\}\) and

$$\begin{aligned} {{\,\textrm{rank}\,}}\big ( \big ( {{\mathcal {H}}}({\mathcal {G}}({\tilde{t}},{\tilde{u}})) \big )_{\vec {X}^{(0,k-1)}} \big ) = {{\,\textrm{rank}\,}}{{\mathcal {H}}}({\mathcal {G}}({\tilde{t}},{\tilde{u}})). \end{aligned}$$(5.10)

Moreover, if a \({\mathcal {Z}}(p)\)-representing measure for \(\beta \) exists, then:

-

There exists an at most \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta )+1)\)-atomic \({{\mathcal {Z}}}(p)\)-representing measure.

-

There exists a \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta ))\)-atomic \({{\mathcal {Z}}}(p)\)-representing measure if and only if any of the following holds:

-

\(\eta =0\).

-

\(\eta >0\) and \({{\mathcal {H}}}(A_{\min })\) is positive definite.

-

In particular, a p-pure sequence \(\beta \) with a \({{\mathcal {Z}}}(p)\)-representing measure admits a \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta ))\)-atomic \({{\mathcal {Z}}}(p)\)-representing measure.
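The necessary relations (5.6) come from \(x^iy^jp(x,y)=ax^iy^{j+2}+x^{i+2}y^{j+1}+x^iy^{j+3}\) vanishing on \({{\mathcal {Z}}}(p)\). The following minimal sketch checks them on a hypothetical atomic measure; the value \(a=-2\), the atoms and the function names are illustrative assumptions. It also shows that a single atom off the curve breaks the relations.

```python
from fractions import Fraction as Fr

def moment(atoms, i, j):
    """Moment beta_{i,j} of the atomic measure given as (x, y, weight) triples."""
    return sum(w * x**i * y**j for (x, y, w) in atoms)

def violations_of_5_6(atoms, a, k):
    """Pairs (i, j) with i + j <= 2k - 3 for which relation (5.6) fails."""
    return [(i, j)
            for i in range(2 * k - 2) for j in range(2 * k - 2 - i)
            if a * moment(atoms, i, j + 2) + moment(atoms, i + 2, j + 1)
            != -moment(atoms, i, j + 3)]

a, k = Fr(-2), 3
# Atoms on the circle x^2 + y^2 - 2y = 0 and on the line y = 0.
on_curve = [(Fr(1), Fr(1), Fr(1)), (Fr(0), Fr(2), Fr(1, 2)),
            (Fr(3, 5), Fr(9, 5), Fr(1)),
            (Fr(2), Fr(0), Fr(1)), (Fr(-3), Fr(0), Fr(1, 4))]
# The point (1, 2) is not in Z(p), since p(1, 2) = 2 * (-4 + 1 + 4) = 2.
off_curve = on_curve + [(Fr(1), Fr(2), Fr(1))]

ok = violations_of_5_6(on_curve, a, k)
bad = violations_of_5_6(off_curve, a, k)
```

Passing this check is of course only necessary; the remaining conditions of Theorem 5.1.(2) are what decide the existence of a measure.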

Remark 5.2

In this remark we explain the idea of the proof of Theorem 5.1 and the meaning of the conditions in the statement of the theorem.

By Lemmas 4.1–4.2, the existence of a \({\mathcal {Z}}(p)\)-rm for \(\beta \) is equivalent to the existence of \(t,u\in {\mathbb {R}}\) such that \({{\mathcal {F}}}({\mathcal {G}}(t,u))\) admits a \({{\mathcal {Z}}}(ay+x^2+y^2)\)-rm and \({{\mathcal {H}}}({\mathcal {G}}(t,u))\) admits a \({\mathbb {R}}\)-rm. Let

We denote by \(\partial {\mathcal {R}}_i\) and \(\mathring{{\mathcal {R}}}_i\) the topological boundary and the interior of the set \({\mathcal {R}}_i\), respectively. By the necessary conditions for the existence of a \({{\mathcal {Z}}}(p)\)-rm [12, 14, 25], \(\widetilde{{\mathcal {M}}}(k;\beta )\) must be psd and the relations (5.6) must hold. Using also Theorem 2.6, Theorem 5.1.(1) is equivalent to

In the proof of Theorem 5.1 we show that (5.11) is equivalent to Theorem 5.1.2:

-

(1)

First we establish (see Claims 1 and 2 below) that the form of:

-

\({\mathcal {R}}_1\) is one of the following:

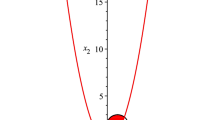

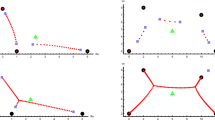

where the left case occurs if \(\eta >0\) and the right if \(\eta =0\). The case \(\eta <0\) cannot occur.

-

\({\mathcal {R}}_2\) is one of the following:

where the left case occurs if \(H_2/H_{22}>0\) and the right if \(H_2/H_{22}=0\).

-

-

(2)

If \(\eta =0\), then we show that (5.11) is equivalent to

$$\begin{aligned} \widetilde{{{\mathcal {M}}}}(k;\beta )\succeq 0, \text { the relations (5.6) hold and } {{\mathcal {H}}}({\mathcal {G}}(0,0))\text { admits a }{\mathbb {R}}\text {-rm}. \end{aligned}$$

The latter statement is further equivalent to Theorem 5.1.(2a).

-

(3)

If \(\eta >0\), then by the forms of \({\mathcal {R}}_1\) and \({\mathcal {R}}_2\), \({{\mathcal {I}}}=\partial {\mathcal {R}}_1\cap \partial {\mathcal {R}}_2\) is one of the following: (i) \(\emptyset \), (ii) a one-element set, (iii) a two-element set. In the case:

-

(i), a \({{\mathcal {Z}}}(p)\)-rm for \(\beta \) clearly cannot exist.

-

(ii), then denoting \({{\mathcal {I}}}=\{({\tilde{t}},{\tilde{u}})\}\), (5.11) is equivalent to

$$\begin{aligned} \widetilde{{{\mathcal {M}}}}(k;\beta )\succeq 0, \text { the relations (5.6) hold and } {{\mathcal {H}}}({\mathcal {G}}({\tilde{t}},{\tilde{u}}))\text { admits a }{\mathbb {R}}\text {-rm}. \end{aligned}$$

The latter statement is equivalent to Theorem 5.1.(2(b)ii).

-

(iii), (5.11) is equivalent to \(H_2\) being positive definite, which is Theorem 5.1.(2(b)i). Moreover, in this case for at least one of the points \((t,u)\in {{\mathcal {I}}}\), a \({{\mathcal {Z}}}(ay+x^2+y^2)\)-rm and a \({\mathbb {R}}\)-rm exist for \({{\mathcal {F}}}({\mathcal {G}}(t,u))\) and \({\mathcal {H}}({\mathcal {G}}(t,u))\), respectively.

-

Proof of Theorem 5.1

Let \({\mathcal {R}}_1, {\mathcal {R}}_2\) be as in Remark 5.2. As explained in Remark 5.2, Theorem 5.1.(1) is equivalent to (5.11), thus it remains to prove that (5.11) is equivalent to Theorem 5.1.(2).

First we establish a few claims needed in the proof. Claim 1 (resp. 2) describes \({\mathcal {R}}_1\) (resp. \({\mathcal {R}}_2\)) concretely.

Claim 1. Assume that \(\widetilde{{\mathcal {M}}}(k;\beta )\succeq 0\). Then

If \(\eta \ge 0\), we have

where \(A_{\min }\) is as in (5.3).

Proof of Claim 1

Note that

By Lemma 4.3, we have that

Using (5.14), (5.15) and the definition of \({\mathcal {R}}_1\), we have that

which proves (5.12).

To prove (5.13) first note that by construction of \({{\mathcal {F}}}(A_{\min })\), the columns 1 and X are in the span of the columns indexed by \({{\mathcal {C}}}\setminus \vec {X}^{(0,k)}\). Hence, there are vectors

of the forms

Let \(r:={{\,\textrm{rank}\,}}\begin{pmatrix} t &{} u \\ u &{} \eta \end{pmatrix}\). Clearly,

We separate three cases according to r.

Case 1: \(r=0\). In this case \(t=u=\eta =0\) and \(\mathcal G(0,0)=A_{\min }\). In this case (5.13) clearly holds.

Case 2: \(r=1\). In this case \(t\eta =u^2\). Together with (5.16), this is equivalent to \((t>0 \text { or }\eta >0) \text { and } u\in \{- \sqrt{\eta t},\sqrt{\eta t}\}\). By (5.18) and \({{\mathcal {F}}}({\mathcal {G}}(t,u))\succeq {{\mathcal {F}}}(A_{\min })\), to prove (5.13) it suffices to find \(v\in \ker {{\mathcal {F}}}(A_{\min })\) such that \(v\notin \ker {{\mathcal {F}}}({\mathcal {G}}(t,u))\). Note that at least one of \(v_1,v_2\) from (5.17) is such a vector, since

Case 3: \(r=2\). In this case \(t\eta >u^2\). Together with (5.16), this is equivalent to \(t>0,\eta >0,u\in (- \sqrt{\eta t},\sqrt{\eta t})\). Note that

By Case 2, we have \({{\,\textrm{rank}\,}}{{\mathcal {F}}}\Big (\mathcal G\Big (\frac{u^2}{\eta },u\Big )\Big )={{\,\textrm{rank}\,}}{{\mathcal {F}}}(A_{\min })+1\). By (5.18) and (5.19), to prove (5.13), it suffices to find \(v\in \ker {{\mathcal {F}}}\Big (\mathcal G\Big (\frac{u^2}{\eta },u\Big )\Big )\) and \(v\notin \ker {{\mathcal {F}}}(\mathcal G(t,u))\). We will check below, that \(v_3\), defined by

is such a vector. This follows by

and

This concludes the proof of Claim 1. \(\square \)
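The case distinction in the proof of Claim 1 is governed by the \(2\times 2\) matrix \(\begin{pmatrix} t &{} u \\ u &{} \eta \end{pmatrix}\). The following minimal sketch, with the hypothetical function name `psd_rank_2x2`, decides whether this matrix is positive semidefinite and, if so, returns its rank r, mirroring Cases 1 to 3 above (\(t=u=\eta =0\), \(t\eta =u^2\), \(t\eta >u^2\)).

```python
from fractions import Fraction

def psd_rank_2x2(t, u, eta):
    """Rank of [[t, u], [u, eta]] if it is positive semidefinite, else None."""
    det = t * eta - u * u
    # A symmetric 2x2 matrix is psd iff both diagonal entries and the
    # determinant are nonnegative.
    if t < 0 or eta < 0 or det < 0:
        return None
    if det > 0:
        return 2            # Case 3: t * eta > u^2
    if t == 0 and eta == 0:
        return 0            # Case 1: then u = 0 as well
    return 1                # Case 2: t * eta = u^2, t > 0 or eta > 0
```

For instance, `psd_rank_2x2(Fraction(4), Fraction(2), Fraction(1))` falls into Case 2, since \(4\cdot 1=2^2\).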

Claim 2. Assume that \(\widetilde{{\mathcal {M}}}(k;\beta )\succeq 0\). Let \(u_{0}\), \(h(\textbf{t})\) be as in (5.7),(5.8) and

Then

If \(H_2\succeq 0\), we have that

Proof of Claim 2

Write

Note that \(H(0)=({{\mathcal {H}}}(A_{\min }))_{\{ 1 \}\cup \vec {X}^{(2,k)}}\). By Lemma 4.3.(2), \({{\mathcal {H}}}(A_{\min })\succeq 0\) and hence, \(H(0)\succeq 0\). By Theorem 2.2, used for \((M,C)=(H(0),H_{22})\), it follows that \(H_2\succeq 0\) and \(h_{12}^{(1)}\in {{\mathcal {C}}}(H_{22}).\) Again, by Theorem 2.2, used for \((M,C)=(H(t),H_{22})\), it follows that \(H(t)\succeq 0\) iff \(t\le t_0\). For a fixed t satisfying \(t\le t_0\), Lemma 2.4, used for \(A(\textbf{x})={{\mathcal {H}}}(\mathcal {G}(t,\textbf{x}))\), together with \(H(t)/H_{22}=H_1/H_{22}-t\), implies (5.20)–(5.21) and proves Claim 2. \(\square \)

Claim 3. If \(\eta =0\), then \((0,0)\in \partial {\mathcal {R}}_1\cap {\mathcal {R}}_2\).

Proof of Claim 3

By Claim 1, \(\eta =0\) implies that \((0,0)\in \partial {\mathcal {R}}_1\). By (5.14) and \(\eta =0\), \({{\mathcal {H}}}(A_{\min })={{\mathcal {H}}}({\mathcal {G}}(0,0))\). By Lemma 4.3.(2), \({{\mathcal {H}}}(A_{\min })\succeq 0\). Hence, \((0,0)\in {\mathcal {R}}_2\), which proves Claim 3. \(\square \)

Claim 4. If \(\eta >0\), then:

-

The set \({{\mathcal {I}}}\) (see (5.9)) has at most 2 elements.

-

\({\mathcal {R}}_1\cap {\mathcal {R}}_2\ne \emptyset \) if and only if \({{\mathcal {I}}}\ne \emptyset .\)

-

If \({{\mathcal {I}}}\) has two elements, then \(H_2/H_{22}>0\).

-

If \({{\mathcal {I}}}\) has one element, which we denote by \(({\tilde{t}},{\tilde{u}})\), then one of the following holds:

-

\({\mathcal {R}}_1 \cap {\mathcal {R}}_2={{\mathcal {I}}}\).

-

\(\partial {\mathcal {R}}_2={\mathcal {R}}_2=\{(t,u_0):t\le t_0\}\) and \({{\mathcal {I}}}\subsetneq {\mathcal {R}}_1\cap {\mathcal {R}}_2= \{(t,u_0):{\tilde{t}}\le t\le t_0\}\).

-

Proof of Claim 4

Note that the set \({{\mathcal {I}}}\) is equal to \(\partial {{\mathcal {R}}}_1 \cap \partial { {\mathcal {R}}}_2\) (see (5.12) and (5.20)). Further on, \(\partial {{\mathcal {R}}}_1\) is the union of the graphs of the square root functions \(\pm \sqrt{\eta \textbf{t}}\), defined for \(\textbf{t}\in [0,\infty )\). Similarly, \(\partial {{\mathcal {R}}}_2\) is the union of the graphs of the square root functions \(u_0\pm \sqrt{(H_1/H_{22}- \textbf{t}) (H_2/H_{22})}\), defined for \(\textbf{t}\in (- \infty ,t_0]\). If \(H_2/H_{22}=0\), then the latter is the half-line \(\{(t,u_0):t\le t_0\}\). If \({\mathcal {R}}_1\cap {\mathcal {R}}_2\ne \emptyset \), then geometrically it is clear that \({{\mathcal {I}}}\) contains one or two elements. Assume that \({{\mathcal {I}}}\) contains only one element, denoted by \(({\tilde{t}},{\tilde{u}})\). Clearly, \({{\mathcal {I}}}\subseteq {\mathcal {R}}_1\cap {\mathcal {R}}_2\). Further on, we either have \({{\mathcal {I}}}={\mathcal {R}}_1\cap {\mathcal {R}}_2\) or \({{\mathcal {I}}}\subsetneq {\mathcal {R}}_1\cap {\mathcal {R}}_2\). By the forms of \(\partial \mathcal R_1\) and \(\partial {\mathcal {R}}_2\), the latter case occurs if \(H_2/H_{22}=0\) or equivalently \(\partial {\mathcal {R}}_2=\mathcal R_2=\{(t,u_0):t\le t_0\}\). But then the whole line segment \(\{(t,u_0):{\tilde{t}}\le t\le t_0\}\) lies in \({\mathcal {R}}_1\), which proves Claim 4. \(\square \)
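The bound of two elements in \({{\mathcal {I}}}\) can also be seen algebraically. Squaring both boundary equations gives \(u^2=\eta t\) and \((u-u_0)^2=(c_1-t)c_2\), where we abbreviate \(c_1:=H_1/H_{22}\) and \(c_2:=H_2/H_{22}\); substituting \(t=u^2/\eta \) yields the quadratic \((1+c_2/\eta )u^2-2u_0u+u_0^2-c_1c_2=0\) in u. The sketch below solves it in floating point. The function name, the abbreviations \(c_1,c_2\) and the tolerance are assumptions made for illustration, and \(\eta >0\), \(c_2\ge 0\) are assumed.

```python
import math

def boundary_intersection(eta, u0, c1, c2, tol=1e-12):
    """Points (t, u) with u^2 = eta*t and (u - u0)^2 = (c1 - t)*c2, t <= c1.

    Assumes eta > 0 and c2 >= 0; returns at most two points, sorted by u.
    """
    A = 1.0 + c2 / eta
    B = -2.0 * u0
    C = u0 * u0 - c1 * c2
    disc = B * B - 4.0 * A * C
    if disc < -tol:
        return []                      # the two boundaries do not meet
    root = math.sqrt(max(disc, 0.0))
    us = {(-B + root) / (2 * A), (-B - root) / (2 * A)}
    points = []
    for u in sorted(us):
        t = u * u / eta                # automatically t >= 0
        if t <= c1 + tol:              # stay in the domain of h
            points.append((t, u))
    return points
```

With \(c_2=0\) the quadratic has the double root \(u=u_0\), which corresponds to the half-line case of Claim 4.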

Claim 5. Let \(H_2\) (see (5.4)) be positive definite, \((t_1,u_1)\in \partial {\mathcal {R}}_2, (t_2,u_2)\in \partial {\mathcal {R}}_2\) and \(u_1\ne u_2\). Then at least one of \({{\mathcal {H}}}(\mathcal G(t_1,u_1))\) and \({{\mathcal {H}}}({\mathcal {G}}(t_2,u_2))\) admits a \({\mathbb {R}}\)-rm.

Proof of Claim 5

Note that \({{\mathcal {H}}}({\mathcal {G}}(t_i,u_i))\), \(i=1,2\), is of the form

Assume on the contrary that none of \({{\mathcal {H}}}({\mathcal {G}}(t_1,u_1))\) and \({{\mathcal {H}}}({\mathcal {G}}(t_2,u_2))\) admits a \({\mathbb {R}}\)-rm. Theorem 2.5 implies that the column \(X^k\) of \({{\mathcal {H}}}({\mathcal {G}}(t_i,u_i))\), \(i=1,2\), is not in the span of the other columns. Using this fact, the facts that \({{\mathcal {H}}}({\mathcal {G}}(t_i,u_i))\), \(i=1,2\), are not pd (by \((t_i,u_i)\in \partial {\mathcal {R}}_2\), \(i=1,2\)) and \(H_2\) is pd, it follows that there is a column relation \( 1 =\sum _{j=1}^{k-1} \alpha ^{(i)}_j X^{j}, \) \(\alpha _j^{(i)}\in {\mathbb {R}}\), in \({{\mathcal {H}}}({\mathcal {G}}(t_i,u_i))\), \(i=1,2\). Since \({{\mathcal {H}}}({\mathcal {G}}(t_i,u_i))\succeq 0\), \(i=1,2\), it follows in particular by Theorem 2.2, used for \((M,A)=({{\mathcal {H}}}(\mathcal G(t_i,u_i)),({{\mathcal {H}}}({\mathcal {G}}(t_i,u_i)))_{\vec {X}^{(0,k-1)}})\), \(i=1,2\), that

Since the first column of \({{\mathcal {H}}}({\mathcal {G}}(t_i,u_i))\succeq 0\), \(i=1,2\), is in the span of the others, (5.22) is equivalent to

Since

is invertible as a principal submatrix of \(H_2\), it follows that

with

If \(v_1\ne 0\), this contradicts (5.24) since \(u_{1}\ne u_2\). Hence, \(v_1=0\). By the Hankel structure of \({{\mathcal {H}}}(\mathcal G(t_i,u_i))\), \(i=1,2\), we have that

Then (5.24) and \(v_1=0\) imply that

where \( {\widetilde{v}} = \begin{pmatrix} v_2&\cdots&v_{k-1}&-1 \end{pmatrix}. \) Since \(\big ({{\mathcal {H}}}({\mathcal {G}}(t_i,u_i))\big )_{ \vec {X}^{(1,k-1)},\vec {X}^{(1,k-1)}},\) \(i=1,2\), is a principal submatrix of \(H_{2}\), (5.25) contradicts \(H_2\) being pd. This proves Claim 5. \(\square \)

Now we prove the implication (5.11)\(\Rightarrow \) Theorem 5.1.(2). Since \((t_0,u_0)\in {\mathcal {R}}_1\), it follows that \({\mathcal {R}}_1\ne \emptyset .\) By (5.12), \(\eta \ge 0\). We separate two cases according to the value of \(\eta \).

Case 1: \(\eta =0\). We separate two cases according to the invertibility of \(H_2\).

Case 1.1: \(H_2\) is not pd. Since \(H_2\) is not pd, then by Theorem 2.5, the last column of \({{\mathcal {H}}}({{\mathcal {G}}}(t_{0},u_{0}))\) is in the span of the previous ones. But then by rg, the last column of \(H_2\) is in the span of the previous ones. This is the case Theorem 5.1.(2(a)ii).

Case 1.2: \(H_2\) is pd. We separate two cases according to the invertibility of \(({{\mathcal {H}}}(A_{\min }))_{\vec {X}^{(0,k-1)}}\).

Case 1.2.1: \({{\,\textrm{rank}\,}}({{\mathcal {H}}}(A_{\min })_{\vec {X}^{(0,k-1)}})=k\). This is the case Theorem 5.1.(2(a)i).

Case 1.2.2: \({{\,\textrm{rank}\,}}({{\mathcal {H}}}(A_{\min })_{\vec {X}^{(0,k-1)}})<k\). We will prove that this case cannot occur. It follows from the assumption in this case that \({{\,\textrm{rank}\,}}{{\mathcal {H}}}(A_{\min })={{\,\textrm{rank}\,}}H_2=k\). Further on, the last column of \({{\mathcal {H}}}(A_{\min })\) cannot be in the span of the previous ones (otherwise \({{\,\textrm{rank}\,}}{{\mathcal {H}}}(A_{\min })<k\)). Hence, by Theorem 2.5, \({{\mathcal {H}}}(A_{\min })={{\mathcal {H}}}({{\mathcal {G}}}(0,0))\) does not admit a \({\mathbb {R}}\)-rm. Using this fact and Claim 3, \((0,0)\in \partial {\mathcal {R}}_2\). If \(t_0=0\), then \({\mathcal {R}}_1\cap {\mathcal {R}}_2= \{(0,0)\}\), which contradicts the third condition in (5.11). So \(0<t_0\) must hold. Since \(\eta =0\), Claim 1 implies that \({\mathcal {R}}_1= \{(t,0) :t\ge 0 \}\) is a horizontal half-line. By the form of \(\partial \mathcal R_2\), which is the union of the graphs of two square root functions on the interval \((- \infty ,t_0]\), intersecting in the point \((t_0,u_0)\) and such that \((t_0,u_0)\in \partial {\mathcal {R}}_2\), it follows that \({\mathcal {R}}_1\cap {\mathcal {R}}_2= \{(0,0)\}\). Note that by \(H_2\succ 0\), we have \(H_2/H_{22}>0\) and hence \(h(t)\not \equiv 0\) (see (5.8)), which implies that the union of the graphs of the square root functions is indeed not just a horizontal half-line. As above, this contradicts the third condition in (5.11). Hence, Case 1.2.2 cannot occur.

Case 2: \(\eta >0\). By assumptions, \((t_0,u_0)\in {\mathcal {R}}_1\cap {\mathcal {R}}_2\). By Claim 4, \({{\mathcal {I}}}\ne \emptyset \) and \({{\mathcal {I}}}\) has one or two elements. We separate two cases according to the number of elements in \({{\mathcal {I}}}\).

Case 2.1: \({{\mathcal {I}}}\) has two elements. By Claim 4, \(H_2/H_{22}>0\). If \(H_2\) is not pd, then the fact that \({{\mathcal {H}}}(\mathcal {G}(t_0,u_0))\) has a \({\mathbb {R}}\)-rm, implies that \(H_2/H_{22}=0\), which is a contradiction. Indeed, if \(H_2/H_{22}>0\) and \(H_2\) is not pd, then there is a nontrivial column relation among columns \(X^2,\ldots ,X^k\) in \(H_2\). By Proposition 2.3, the same holds for \({{\mathcal {H}}}({\mathcal {G}}(t_0,u_0))\). Let \(\sum _{i=0}^{k-2} c_i X^{i+2}=\textbf{0}\) be the nontrivial column relation in \({{\mathcal {H}}}(\mathcal {G}(t_0,u_0))\). But then \({{\mathcal {Z}}}(x^2\sum _{i=0}^{k-2} c_i x^i)={{\mathcal {Z}}}(x\sum _{i=0}^{k-2} c_i x^i)\) and it follows by [12] that \(\sum _{i=0}^{k-2} c_i X^{i+1}=\textbf{0}\) is also a nontrivial column relation in \({{\mathcal {H}}}({\mathcal {G}}(t_0,u_0))\). In particular, \(H_2/H_{22}=0\). Hence, \(H_2\) is pd. This is the case Theorem 5.1.(2(b)i).

Case 2.2: \({{\mathcal {I}}}\) has one element. Let us denote this element by \(({\tilde{t}},{\tilde{u}})\). By Claim 4, \({{\mathcal {I}}}={\mathcal {R}}_1\cap {\mathcal {R}}_2\) or \(\partial {\mathcal {R}}_2={\mathcal {R}}_2=\{(t,u_0):t\le t_0\}\) and \({{\mathcal {I}}}\subsetneq {\mathcal {R}}_1\cap {\mathcal {R}}_2= \{(t,u_0):{\tilde{t}}\le t\le t_0\}\). We separate two cases according to these two possibilities.

Case 2.2.1: \({{\mathcal {I}}}={\mathcal {R}}_1\cap {\mathcal {R}}_2\). In this case \((t_0,u_0)=({\tilde{t}},{\tilde{u}})\) and hence \({{\mathcal {H}}}({\mathcal {G}}({\tilde{t}},{\tilde{u}}))\) admits a \({\mathbb {R}}\)-rm. Since \((\tilde{t},{\tilde{u}})\in \partial {\mathcal {R}}_2\), \({{\mathcal {H}}}({\mathcal {G}}({\tilde{t}},{\tilde{u}}))\) is not pd. Hence, by Theorem 2.5, the statement Theorem 5.1.(2(b)ii) holds.

Case 2.2.2: \(\partial {\mathcal {R}}_2=\mathcal {R}_2=\{(t,u_0):t\le t_0\}\) and \({{\mathcal {I}}}\subsetneq {\mathcal {R}}_1\cap \mathcal {R}_2= \{(t,u_0):{\tilde{t}}\le t\le t_0\}\). By (5.20), it follows that \(H_2/H_{22}=0\) (see the definition (5.8) of \(h(\textbf{t})\)). Since \(H_2\) is not pd, Theorem 2.5 used for \({{\mathcal {H}}}({\mathcal {G}}(t_0,u_0))\), implies that the last column of \(H_2\) is in the span of the others. Hence, the same holds by Proposition 2.3 for \({{\mathcal {H}}}({\mathcal {G}}({\tilde{t}},{\tilde{u}}))\) and \({{\mathcal {H}}}({\mathcal {G}}({\tilde{t}},{\tilde{u}}))\) admits a \({\mathbb {R}}\)-rm by Theorem 2.5. Since \({{\mathcal {H}}}(\mathcal {G}({\tilde{t}},{\tilde{u}}))\) is not pd, it in particular satisfies (5.10). Hence, we are in the case Theorem 5.1.(2(b)ii).

This concludes the proof of the implication (5.11)\(\Rightarrow \) Theorem 5.1.(2).

Next we prove the implication Theorem 5.1.(2)\(\Rightarrow \) (5.11). We separate four cases according to the assumptions in Theorem 5.1.(2).

Case 1: Theorem 5.1.(2(a)i) holds. By Claim 3, \((0,0)\in {\mathcal {R}}_1\cap {\mathcal {R}}_2\). This and the assumption \({{\,\textrm{rank}\,}}({{\mathcal {H}}}(A_{\min }))_{\vec {X}^{(0,k-1)}}=k\), imply by Theorem 2.5, that \({{\mathcal {H}}}({\mathcal {G}}(0,0))={{\mathcal {H}}}(A_{\min })\) admits a \({\mathbb {R}}\)-rm. This proves (5.11) in case of Theorem 5.1.(2(a)i).

Case 2: Theorem 5.1.(2(a)ii) holds. By Claim 3, \((0,0)\in {\mathcal {R}}_1\cap {\mathcal {R}}_2\). Since the last column of \(H_2\) is by assumption in the span of the previous ones, the same holds for \({{\mathcal {H}}}({\mathcal {G}}(0,0))\) by Proposition 2.3. By Theorem 2.5, \({{\mathcal {H}}}({\mathcal {G}}(0,0))\) admits a \({\mathbb {R}}\)-rm. This proves (5.11) in case of Theorem 5.1.(2(a)ii).

Case 3: Theorem 5.1.(2(b)i) holds. By assumption, \({{\mathcal {I}}}=\partial {\mathcal {R}}_1\cap \partial {\mathcal {R}}_2=\{(t_1,u_1),(t_2,u_2)\}\). Since \(H_2\) is pd, \(\partial {\mathcal {R}}_{2}\) is not a half-line and hence \(u_1\ne u_2\). By Claim 5, at least one of \({{\mathcal {H}}}({\mathcal {G}}(t_{1},u_{1}))\) and \({{\mathcal {H}}}({\mathcal {G}}(t_2,u_2))\) admits a \({\mathbb {R}}\)-rm. This proves (5.11) in case of Theorem 5.1.(2(b)i).

Case 4: Theorem 5.1.(2(b)ii) holds. The assumptions imply by Theorem 2.5, that \({{\mathcal {H}}}({\mathcal {G}}(\tilde{t},{\tilde{u}}))\) admits a \({\mathbb {R}}\)-rm. This proves (5.11) in case of Theorem 5.1.(2(b)ii).

This concludes the proof of the implication Theorem 5.1.(2)\(\Rightarrow \)(5.11).
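Rank conditions such as (5.10) are easy to test in exact arithmetic. The sketch below assumes, in line with the way Theorem 2.5 is used above, that for a positive semidefinite Hankel matrix which is not positive definite the existence of a \({\mathbb {R}}\)-rm amounts to the equality of the rank of the matrix and the rank of its restriction to \(\vec {X}^{(0,k-1)}\). The helper names are hypothetical, and positive semidefiniteness is not checked by the code.

```python
from fractions import Fraction

def rank(M):
    """Rank of a matrix with rational entries, by Gaussian elimination."""
    M = [[Fraction(x) for x in row] for row in M]
    rows, cols, r = len(M), len(M[0]), 0
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, rows):
            f = M[i][c] / M[r][c]
            M[i] = [x - f * y for x, y in zip(M[i], M[r])]
        r += 1
    return r

def hankel(moments):
    """Hankel matrix of size k+1 built from moments beta_0, ..., beta_{2k}."""
    k = (len(moments) - 1) // 2
    return [[moments[i + j] for j in range(k + 1)] for i in range(k + 1)]

def rank_condition(moments):
    """Compare the rank of the Hankel matrix with that of its leading k x k block."""
    H = hankel(moments)
    leading = [row[:-1] for row in H[:-1]]
    return rank(leading) == rank(H)

good = [2, 1, 1, 1, 1]   # moments of the measure delta_0 + delta_1
bad = [1, 0, 0, 0, 1]    # psd Hankel matrix diag(1, 0, 1) without a measure
```

For `bad`, the vanishing second moment would force the measure to be a multiple of \(\delta _0\), which is incompatible with a nonzero fourth moment; the rank test detects this.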

Up to now we established the equivalence (1) \(\Leftrightarrow \) (2) in Theorem 5.1. It remains to prove the moreover part. We observe again the proof of the implication (2) \(\Rightarrow \) (5.11). By Lemma 4.3.(4),

In the proof of the implications Theorem 5.1.(2(a)i)\(\Rightarrow \) (5.11) and Theorem 5.1.(2(a)ii)\(\Rightarrow \) (5.11) we established that \({{\mathcal {H}}}({\mathcal {G}}(0,0))\) has a \({\mathbb {R}}\)-rm. By Theorem 2.5, there also exists a \(({{\,\textrm{rank}\,}}{{\mathcal {H}}}({\mathcal {G}}(0,0)))\)-atomic one. By Theorem 2.6, the sequence with the moment matrix \({{\mathcal {F}}}({\mathcal {G}}(0,0))\) can be represented by a \(({{\,\textrm{rank}\,}}{{\mathcal {F}}}({\mathcal {G}}(0,0)))\)-atomic \({{\mathcal {Z}}}(ay+x^2+y^2)\)-rm. By (5.26) and \(\mathcal {G}(0,0)=A_{\min }\) if \(\eta =0\), in these two cases \(\beta \) has a \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta ))\)-atomic \({{\mathcal {Z}}}(p)\)-rm.

In the proof of the implication Theorem 5.1.(2(b)i)\(\Rightarrow \) (5.11) we established that \({{\mathcal {H}}}({\mathcal {G}}(t',u'))\) has a \({\mathbb {R}}\)-rm for some \((t',u')\in {{\mathcal {I}}}\). Analogously as for the point (0, 0) in the previous paragraph, it follows that \(\beta \) has a \(( {{\,\textrm{rank}\,}}{{\mathcal {F}}}({\mathcal {G}}(t',u')) + {{\,\textrm{rank}\,}}{{\mathcal {H}}}({\mathcal {G}}(t',u')) )\)-atomic \({{\mathcal {Z}}}(p)\)-rm. Using (5.13), (5.21) and \({{\,\textrm{rank}\,}}H_2={{\,\textrm{rank}\,}}H_{22}+1\) (by \(H_2\) being pd), it follows that

We separate two cases:

- If \({{\mathcal {H}}}(A_{\min })\) is pd, then \({{\,\textrm{rank}\,}}{{\mathcal {H}}}(A_{\min })={{\,\textrm{rank}\,}}H_{2}+1\). This, (5.26) and (5.27) imply that \(\beta \) admits a \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta ))\)-atomic \({{\mathcal {Z}}}(p)\)-rm.

- If \({{\mathcal {H}}}(A_{\min })\) is not pd, then we must have \({{\,\textrm{rank}\,}}{{\mathcal {H}}}(A_{\min })={{\,\textrm{rank}\,}}H_{2}\), since otherwise we have \(({{\mathcal {H}}}(A_{\min }))_{\vec {X}^{(1,k)}}/H_{22}=0\) and hence \(({{\mathcal {H}}}(A_{\min }- \eta E_{2,2}^{(k+1)}))_{\vec {X}^{(1,k)}}/H_{22}<0\), which contradicts \({{\mathcal {H}}}(A_{\min }- \eta E_{2,2}^{(k+1)})\) being psd. Hence, in this case \(\beta \) has a \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta )+1)\)-atomic \({{\mathcal {Z}}}(p)\)-rm. Moreover, there cannot exist a \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta ))\)-atomic \({{\mathcal {Z}}}(p)\)-rm. Indeed, since \(\eta >0\), at least \({{\,\textrm{rank}\,}}{{\mathcal {F}}}(A_{\min })+1\) (resp. \({{\,\textrm{rank}\,}}H_2\)) atoms are needed to represent \({{\mathcal {F}}}({\mathcal {G}}(t'',u''))\) (resp. \({{\mathcal {H}}}({\mathcal {G}}(t'',u''))\)) for any \((t'',u'')\in {\mathcal {R}}_1\cap {\mathcal {R}}_2\) (see (5.13) and (5.21)). Hence, at least \({{\,\textrm{rank}\,}}{{\mathcal {F}}}(A_{\min }) + {{\,\textrm{rank}\,}}H_{2}+1\) atoms are needed in a \({{\mathcal {Z}}}(p)\)-rm for any \((t'',u'')\in {\mathcal {R}}_1\cap {\mathcal {R}}_2\).

In the proof of the implication Theorem 5.1.(2(b)ii)\(\Rightarrow \) (5.11) we established that \({{\mathcal {H}}}({\mathcal {G}}({\tilde{t}},{\tilde{u}}))\) has a \({\mathbb {R}}\)-rm. Analogously to the case of the point (0, 0) treated above, it follows that \(\beta \) has a \(( {{\,\textrm{rank}\,}}{{\mathcal {F}}}({\mathcal {G}}({\tilde{t}},{\tilde{u}})) + {{\,\textrm{rank}\,}}{{\mathcal {H}}}({\mathcal {G}}({\tilde{t}},{\tilde{u}})) )\)-atomic \({{\mathcal {Z}}}(p)\)-rm. By (5.13) and (5.21), this measure is \(( {{\,\textrm{rank}\,}}{{\mathcal {F}}}(A_{\min }) + {{\,\textrm{rank}\,}}H_{22}+2 )\)-atomic. We again separate two cases:

- If \({{\mathcal {H}}}(A_{\min })\) is pd, then \({{\,\textrm{rank}\,}}{{\mathcal {H}}}(A_{\min })={{\,\textrm{rank}\,}}H_{22}+2\). This and (5.26) imply that \(\beta \) admits a \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta ))\)-atomic \({{\mathcal {Z}}}(p)\)-rm.

- If \({{\mathcal {H}}}(A_{\min })\) is not pd, then we have \({{\,\textrm{rank}\,}}{{\mathcal {H}}}(A_{\min })={{\,\textrm{rank}\,}}H_{22}+1\), since otherwise the equality \(({{\mathcal {H}}}(A_{\min }))_{\vec {X}^{(1,k)}}/H_{22}=0\) implies \(({{\mathcal {H}}}(A_{\min }- \eta E_{2,2}^{(k+1)}))_{\vec {X}^{(1,k)}}/H_{22}<0\), which contradicts \({{\mathcal {H}}}(A_{\min }- \eta E_{2,2}^{(k+1)})\) being psd. Hence, in this case \(\beta \) has a \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta )+1)\)-atomic \({{\mathcal {Z}}}(p)\)-rm. Moreover, there cannot exist a \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta ))\)-atomic \({{\mathcal {Z}}}(p)\)-rm in this case. Indeed,

$$\begin{aligned} ({\mathcal {R}}_1\cap {\mathcal {R}}_2)\setminus {{\mathcal {I}}}= (\partial {\mathcal {R}}_1\cap \mathring{{\mathcal {R}}}_2) \cup (\mathring{{\mathcal {R}}}_1\cap \partial {\mathcal {R}}_2) \cup (\mathring{{\mathcal {R}}}_1\cap \mathring{{\mathcal {R}}}_2). \end{aligned}$$

Using (5.13) and (5.21), at every point of \(({\mathcal {R}}_1\cap {\mathcal {R}}_2)\setminus {{\mathcal {I}}}\) at least \({{\,\textrm{rank}\,}}{{\mathcal {F}}}(A_{\min }) + {{\,\textrm{rank}\,}}H_{22}+2\) atoms are needed in a \({{\mathcal {Z}}}(p)\)-rm.

This concludes the proof of the moreover part.

Since (5.26) implies that \({{\mathcal {H}}}(A_{\min })\) is pd for a p-pure sequence with \(\widetilde{\mathcal {M}}(k;\beta )\succeq 0\), it follows by the moreover part that the existence of a \({{\mathcal {Z}}}(p)\)-rm implies the existence of a \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta ))\)-atomic \({{\mathcal {Z}}}(p)\)-rm. \(\square \)

The following example, generated by [37], demonstrates the use of Theorem 5.1 to show that there exists a bivariate \(y(-2y+x^2+y^2)\)-pure sequence \(\beta \) of degree 6 with a positive semidefinite \({\mathcal {M}}(3)\) and without a \({{\mathcal {Z}}}(y(-2y+x^2+y^2))\)-rm.

Example 5.3

Let \(\beta \) be a bivariate degree 6 sequence given by

Assume the notation of Theorem 5.1. The matrix \(\widetilde{\mathcal {M}}(3)\) is psd with the eigenvalues \(\approx 4445\), \(\approx 189.2\), \(\approx 16.6\), \(\approx 11.9\), \(\approx 3.2\), \(\approx 1.22\), \(\approx 0.57\), \(\approx 0.022\), \(\approx 0.0030\), 0 and satisfies the column relation

We have that

and so

The matrix \(H_{2}\) is equal to:

The eigenvalues of \(H_2\) are \(\approx 4441.1\), \(\approx 6.74\), \(\approx -0.019\) and hence \(H_2\) is not psd. By Theorem 5.1, \(\beta \) does not have a \({{\mathcal {Z}}}(y(-2y+x^2+y^2))\)-rm, since by (2b) of Theorem 5.1, \(H_2\) should be psd.
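The decisive step in Example 5.3 is a positive semidefiniteness test. As a minimal sketch (not the computation performed with [37]), the following Python functions test a small symmetric matrix exactly. Instead of the eigenvalues used above, the sketch relies on the criterion that a symmetric matrix is psd if and only if all of its principal minors are nonnegative. The names `det` and `is_psd` are hypothetical helpers introduced for illustration only, and the sketch is meant for small matrices, since the number of principal minors grows exponentially with the size.

```python
from fractions import Fraction
from itertools import combinations


def det(m):
    """Exact determinant of a square matrix via Gaussian elimination over Fractions."""
    a = [[Fraction(x) for x in row] for row in m]
    n = len(a)
    sign = 1
    result = Fraction(1)
    for c in range(n):
        # Find a row with a nonzero entry in column c to use as the pivot.
        pivot = next((r for r in range(c, n) if a[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            a[c], a[pivot] = a[pivot], a[c]
            sign = -sign
        result *= a[c][c]
        for r in range(c + 1, n):
            f = a[r][c] / a[c][c]
            for j in range(c, n):
                a[r][j] -= f * a[c][j]
    return sign * result


def is_psd(m):
    """Return True iff the symmetric matrix m is positive semidefinite.

    Assumption: m is symmetric. The test checks that every principal
    minor (not only the leading ones) is nonnegative.
    """
    n = len(m)
    for size in range(1, n + 1):
        for idx in combinations(range(n), size):
            sub = [[m[i][j] for j in idx] for i in idx]
            if det(sub) < 0:
                return False
    return True
```

For a matrix with rational entries the test is exact, so it avoids the question of whether a computed eigenvalue such as \(\approx -0.019\) is negative or merely a rounding artifact.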

6 Parabolic Type Relation: \(p(x,y)=y(x-y^2)\)

In this section we solve the \({{\mathcal {Z}}}(p)\)-TMP for the sequence \(\beta =\{\beta _{i,j}\}_{i,j\in {\mathbb {Z}}_+,i+j\le 2k}\) of degree 2k, \(k\ge 3\), where \(p(x,y)=y(x-y^2)\). Assume the notation from Sect. 4. If \(\beta \) admits a \({{\mathcal {Z}}}(p)\)-rm, then \({\mathcal {M}}(k;\beta )\) must satisfy the relations

In the presence of all column relations (6.1), the column space \({{\mathcal {C}}}({\mathcal {M}}(k;\beta ))\) is spanned by the columns in the set

where

Let \({\widetilde{{{\mathcal {M}}}}}(k;\beta )\) be as in (4.8). Let

As described in Remark 4.4, \(A_{\min }\) might need to be changed to

where

Let \({{\mathcal {F}}}(\textbf{A})\) and \({{\mathcal {H}}}(\textbf{A})\) be as in (4.10). Define also the matrix function

Write

Let us define the matrix

Let

and

Write

The solution to the cubic parabolic type relation TMP is the following.

Theorem 6.1

Let \(p(x,y)=y(x-y^2)\) and \(\beta :=\beta ^{(2k)}=(\beta _{i,j})_{i,j\in {\mathbb {Z}}_+,i+j\le 2k}\), where \(k\ge 3\). Assume also the notation above. Then the following statements are equivalent:

- (1) \(\beta \) has a \({{\mathcal {Z}}}(p)\)-representing measure.

- (2) \(\widetilde{{\mathcal {M}}}(k;\beta )\) is positive semidefinite, the relations

$$\begin{aligned} \beta _{i,j+3}=\beta _{i+1,j+1} \quad \text {hold for every }i,j\in {\mathbb {Z}}_+\text { with }i+j\le 2k-3, \end{aligned}$$(6.8)

\({{\mathcal {H}}}({\widehat{A}}_{\min })\) is positive semidefinite and, defining the real numbers

$$\begin{aligned} t_1&=H_1/H_{22} =\beta _{0,0}-(A_{\min })_{1,1} - (h_{12})^T (H_{22})^\dagger h_{12},\\ u_1&=H_2/H_{22} = \beta _{2k,0} -(A_{\min })_{k+1,k+1} - (h_{23})^T (H_{22})^{\dagger } h_{23}, \end{aligned}$$(6.9)

and the property

$$\begin{aligned} ({{\mathcal {H}}}({\widehat{A}}_{\min }))_{\vec {X}^{(0,k-1)}}\succ 0 \quad \text {or}\quad {{\,\textrm{rank}\,}}({{\mathcal {H}}}({\widehat{A}}_{\min }))_{\vec {X}^{(0,k-1)}} = {{\,\textrm{rank}\,}}{{\mathcal {H}}}({\widehat{A}}_{\min }), \end{aligned}$$(6.10)

one of the following statements holds:

  - (a) \(F_{22}\) is not positive definite, \(\eta =0\) and (6.10) holds.

  - (b) \(F_{22}\) is positive definite, \(H_{22}\) is not positive definite and one of the following holds:

    - (i) \(u_1=\eta =0\).

    - (ii) \(u_1>0\), \(t_1>0\), \(t_1u_1\ge \eta ^2\) and \( \beta _{k,0}-(A_{\min })_{2,k}= (h_{12})^T(H_{22})^{\dagger } h_{23}. \)

  - (c) \(F_{22},H_{22}\) are positive definite and one of the following holds:

    - (i) \(\eta =0\) and (6.10) holds.

    - (ii) \(\eta \ne 0\) and

$$\begin{aligned} \left( \sqrt{k_{11}k_{22}}- {{\,\textrm{sign}\,}}(k_{12})k_{12}\right) ^2\ge \eta ^2, \end{aligned}$$(6.11)

      where \({{\,\textrm{sign}\,}}\) is the sign function and \({{\,\textrm{sign}\,}}(0)=0\).

Moreover, if a \({\mathcal {Z}}(p)\)-representing measure for \(\beta \) exists, then:

- There exists an at most \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta )+1)\)-atomic \({{\mathcal {Z}}}(p)\)-representing measure.

- There exists a \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta ))\)-atomic \({{\mathcal {Z}}}(p)\)-representing measure if and only if any of the following holds:

  - \(\eta =0\).

  - \({{\,\textrm{rank}\,}}{{\mathcal {H}}}(A_{\min })= {{\,\textrm{rank}\,}}H_{22}+2. \)

  - \({{\,\textrm{rank}\,}}{{\mathcal {H}}}(A_{\min })= {{\,\textrm{rank}\,}}H_{22}+1 \) and one of the following holds:

    - \(H_{22}\) is not positive definite and \(t_1u_1=\eta ^2\).

    - \(H_{22}\) is positive definite, \(k_{12}=0\) and \(k_{11}k_{22}=\eta ^2\).

In particular, a p-pure sequence \(\beta \) with a \({{\mathcal {Z}}}(p)\)-representing measure admits a \(({{\,\textrm{rank}\,}}\widetilde{{\mathcal {M}}}(k;\beta ))\)-atomic \({{\mathcal {Z}}}(p)\)-representing measure.
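The relations (6.8) reflect the identity \(y^3=xy\), which holds at every point of \({{\mathcal {Z}}}(p)\) because \(y(x-y^2)=0\) there; integrating \(x^iy^{j+3}=x^{i+1}y^{j+1}\) against a \({{\mathcal {Z}}}(p)\)-rm gives \(\beta _{i,j+3}=\beta _{i+1,j+1}\). The following Python sketch illustrates this necessary condition for a finitely atomic measure. The function names `moments` and `satisfies_relations` and the representation of atoms as triples `(x, y, rho)` with density `rho` are assumptions made for illustration; they do not appear in the article.

```python
from fractions import Fraction


def moments(atoms, k):
    """Moments beta[(i, j)] = sum of rho * x^i * y^j over the atoms, for i + j <= 2k.

    `atoms` is a list of hypothetical triples (x, y, rho) with rho > 0.
    """
    return {
        (i, j): sum(Fraction(rho) * Fraction(x) ** i * Fraction(y) ** j
                    for (x, y, rho) in atoms)
        for i in range(2 * k + 1)
        for j in range(2 * k + 1 - i)
    }


def satisfies_relations(beta, k):
    """Check beta_{i,j+3} == beta_{i+1,j+1} for all i, j >= 0 with i + j <= 2k - 3."""
    return all(
        beta[(i, j + 3)] == beta[(i + 1, j + 1)]
        for i in range(2 * k - 2)
        for j in range(2 * k - 2 - i)
    )


# Atoms on the line y = 0 and on the parabola x = y^2 (all lie on Z(p)).
on_curve = [(1, 0, 1), (2, 0, 3), (4, 2, 1), (1, -1, 2), (9, 3, Fraction(1, 2))]
# The point (1, 2) does not lie on Z(p), since 2 * (1 - 4) != 0.
off_curve = on_curve + [(1, 2, 1)]
```

With these sample atoms, `satisfies_relations(moments(on_curve, 3), 3)` holds, whereas adding the atom at \((1,2)\) breaks the relation \(\beta _{0,3}=\beta _{1,1}\). The check covers only the necessary relations (6.8), not the remaining conditions of Theorem 6.1.(2).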

Remark 6.2

In this remark we explain the idea of the proof of Theorem 6.1 and the meaning of the conditions in the statement of the theorem.

By Lemmas 4.1–4.2, the existence of a \({\mathcal {Z}}(p)\)-rm for \(\beta \) is equivalent to the existence of \(t,u\in {\mathbb {R}}\) such that \({{\mathcal {F}}}({\mathcal {G}}(t,u))\) admits a \({{\mathcal {Z}}}(x-y^2)\)-rm and \({{\mathcal {H}}}({\mathcal {G}}(t,u))\) admits a \({\mathbb {R}}\)-rm. Let

We denote by \(\partial {\mathcal {R}}_i\) and \(\mathring{{\mathcal {R}}}_i\) the topological boundary and the interior of the set \({\mathcal {R}}_i\), respectively. By the necessary conditions for the existence of a \({{\mathcal {Z}}}(p)\)-rm [12, 14, 25], \(\widetilde{{\mathcal {M}}}(k;\beta )\) must be psd and the relations (6.8) must hold. Then Theorem 6.1.(1) is equivalent to

In the proof of Theorem 6.1 we show that (6.12) is equivalent to Theorem 6.1.(2):

- (1) First we establish (see Claims 1 and 2 below) the following:

  - \({\mathcal {R}}_1\) has one of the following forms:

    where the left case occurs if \(\eta \ne 0\) and the right if \(\eta =0\).

  - \({\mathcal {R}}_2\) has one of the following forms:

    where the left case occurs if \(k_{12}\ne 0\) and the right if \(k_{12}=0\).

- (2) If \(F_{22}\) is only positive semidefinite but not definite, then we show that (6.12) is equivalent to

$$\begin{aligned} \widetilde{{{\mathcal {M}}}}(k;\beta )\succeq 0, \text { the relations (6.8) hold, } \eta =0\text { and } {{\mathcal {H}}}({\mathcal {G}}(0,0))\text { admits a }{\mathbb {R}}\text {-rm}. \end{aligned}$$(6.13)

  The latter statement is further equivalent to Theorem 6.1.(2a).

- (3) Assume that \(F_{22}\) is positive definite and \(H_{22}\) is only positive semidefinite but not definite. If:

  - \(u_1=0\), then we show that (6.12) is equivalent to (6.13). The latter statement is further equivalent to Theorem 6.1.(2(b)i).

  - \(u_1>0\), then we show that (6.12) is equivalent to

$$\begin{aligned}&\widetilde{{{\mathcal {M}}}}(k;\beta )\succeq 0, \text { the relations (6.8) hold, } {{\mathcal {F}}}({\mathcal {G}}(t_1,u_1)) \text { and }\\&{{\mathcal {H}}}({\mathcal {G}}(t_1,u_1))\text { admit a } {{\mathcal {Z}}}(x-y^2)\text {-rm and a }{\mathbb {R}}\text {-rm, respectively.} \end{aligned}$$

    The latter statement is further equivalent to Theorem 6.1.(2(b)ii).

  - \(u_1<0\), then (6.12) cannot hold.

- (4) Assume that \(F_{22}\) and \(H_{22}\) are positive definite. If:

  - \(\eta =0\), then we show that (6.12) is equivalent to (6.13). The latter statement is further equivalent to Theorem 6.1.(2(c)i).

  - \(\eta \ne 0\), then we show that (6.12) is equivalent to \({\mathcal {R}}_1\cap {\mathcal {R}}_2\ne \emptyset \). The latter statement is further equivalent to Theorem 6.1.(2(c)ii).
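Once \(k_{11}\), \(k_{22}\), \(k_{12}\) and \(\eta \) are known, condition (6.11) of Theorem 6.1.(2(c)ii) is a single numerical inequality. A minimal Python sketch of its evaluation follows; the function name `condition_6_11` is hypothetical, the inputs are taken as already computed numbers, and the sketch assumes \(k_{11}k_{22}\ge 0\) so that the square root is real. It works in floating point, so borderline cases of equality would need exact arithmetic.

```python
import math


def condition_6_11(k11, k22, k12, eta):
    """Evaluate (sqrt(k11*k22) - sign(k12)*k12)^2 >= eta^2, with sign(0) = 0.

    Assumption: k11 * k22 >= 0, so that the square root is real.
    """
    if k11 * k22 < 0:
        raise ValueError("k11 * k22 must be nonnegative")
    sign = (k12 > 0) - (k12 < 0)  # sign function with sign(0) = 0
    lhs = (math.sqrt(k11 * k22) - sign * k12) ** 2
    return lhs >= eta ** 2
```

Since \({{\,\textrm{sign}\,}}(k_{12})k_{12}=|k_{12}|\), the condition depends on \(k_{12}\) only through its absolute value, so `condition_6_11(4, 9, 1, 5)` and `condition_6_11(4, 9, -1, 5)` agree.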

Proof of Theorem 6.1

Let \({\mathcal {R}}_1, {\mathcal {R}}_2\) be as in Remark 6.2. As explained in Remark 6.2, Theorem 6.1.(1) is equivalent to (6.12), thus it remains to prove that (6.12) is equivalent to Theorem 6.1.(2).

First we establish a few claims needed in the proof. Claim 1 (resp. 2) describes \({\mathcal {R}}_1\) (resp. \({\mathcal {R}}_2\)) concretely.

Claim 1. Assume that \(\widetilde{{\mathcal {M}}}(k;\beta )\succeq 0\). Then

If \((t,u)\in {\mathcal {R}}_1\), we have