Abstract

We study here properties of free Generalized Inverse Gaussian distributions (fGIG) in free probability. We show that in many cases the fGIG shares similar properties with the classical GIG distribution. In particular we prove that fGIG is freely infinitely divisible, free regular and unimodal, and moreover we determine which distributions in this class are freely selfdecomposable. In the second part of the paper we prove that for free random variables X, Y where Y has a free Poisson distribution one has \(X{\mathop {=}\limits ^{d}}\frac{1}{X+Y}\) if and only if X has an fGIG distribution for a special choice of parameters. We also point out that the free GIG distribution maximizes the same free entropy functional as the classical GIG does for the classical entropy.


1 Introduction

Free probability was introduced by Voiculescu [35] as a non-commutative probability theory where one defines a new notion of independence, the so-called freeness or free independence. Non-commutative probability is a counterpart of the classical probability theory where one allows random variables to be non-commutative objects. Instead of defining a probability space as a triplet \((\Omega ,{\mathcal {F}},{\mathbb {P}})\) we switch to a pair \(({\mathcal {A}},\varphi )\) where \({\mathcal {A}}\) is an algebra of random variables and \(\varphi :{\mathcal {A}}\rightarrow {\mathbb {C}}\) is a linear functional; in the classical situation \(\varphi ={\mathbb {E}}\). It is natural then to consider algebras \({\mathcal {A}}\) where random variables do not commute (for example \(C^*\) or \(W^*\)-algebras). For bounded random variables independence can be equivalently understood as a rule for calculating mixed moments. It turns out that while for commuting random variables only one such rule leads to a meaningful notion of independence, the non-commutative setting is richer and one can consider several notions of independence. Free independence seems to be the most important of them. The precise definition of freeness is stated in Sect. 2 below.

Free probability emerged from questions related to operator algebras; however, the development of this theory showed that it is surprisingly closely related to classical probability theory. The first evidence of such relations appeared with Voiculescu’s results about asymptotic freeness of random matrices. Roughly speaking, asymptotic freeness states that (classically) independent, unitarily invariant random matrices become free as their size goes to infinity.

Another link between free and classical probability goes via infinite divisibility. With a notion of independence in hand one can consider a convolution of probability measures related to this notion. For free independence this operation is called free convolution and is denoted by \(\boxplus \). More precisely, for free random variables X, Y with respective distributions \(\mu ,\nu \) the distribution of the sum \(X+Y\) is called the free convolution of \(\mu \) and \(\nu \) and is denoted by \(\mu \boxplus \nu \). The next natural step is to ask which probability measures are infinitely divisible with respect to this convolution. We say that \(\mu \) is freely infinitely divisible if for any \(n \ge 1\) there exists a probability measure \(\mu _n\) such that

$$\begin{aligned} \mu =\underbrace{\mu _n\boxplus \cdots \boxplus \mu _n}_{n \text{ times }}. \end{aligned}$$

Here we come across another striking relation between free and classical probability: there exists a bijection between classically and freely infinitely divisible probability measures. This bijection was found in [7] and is called the Bercovici–Pata (BP) bijection. It has a number of interesting properties; for example, measures in bijection have the same domains of attraction. In the free probability literature it is a standard approach to look for the free counterpart of a classical distribution via the BP bijection. For example, Wigner’s semicircle law plays the role of the Gaussian law in the free analogue of the Central Limit Theorem, and the Marchenko–Pastur distribution appears in the limit of the free version of the Poisson limit theorem and is often called the free Poisson distribution.

While the BP bijection proved to be a powerful tool, it does not preserve all good properties of distributions. Consider for example the Lukacs theorem, which says that for classically independent random variables X, Y the random variables \(X+Y\) and \(X/(X+Y)\) are independent if and only if X, Y have Gamma distributions with the same scale parameter [23]. One can consider a similar problem in free probability and obtain the following result (see [32, 33]): for free random variables X, Y the random variables \(X+Y\) and \((X+Y)^{-1/2}X(X+Y)^{-1/2}\) are free if and only if X, Y have Marchenko–Pastur (free Poisson) distributions with the same rate. This example illustrates our point: it is not the image under the BP bijection of the Gamma distribution (studied in [13, 27]) that has the Lukacs independence property in free probability; in this context the free Poisson distribution plays the role of the classical Gamma distribution.

In [34] another free independence property was studied: a free version of the so-called Matsumoto–Yor property (see [22, 24]). In classical probability this property says that for independent X, Y the random variables \(1/(X+Y)\) and \(1/X-1/(X+Y)\) are independent if and only if X has a Generalized Inverse Gaussian (GIG) distribution and Y has a Gamma distribution. In the free version of this theorem (i.e. the theorem where one replaces classical independence assumptions by free independence) it turns out that the role of the Gamma distribution is again taken by the free Poisson distribution, and the role of the GIG distribution is played by a probability measure which appeared for the first time in [12]. We will refer to this measure as the free Generalized Inverse Gaussian distribution or fGIG for short. We give the definition of this distribution in Sect. 2.

The main motivation of this paper is to study further properties of the fGIG distribution. The results from [34] suggest that in some sense (but not by means of the BP bijection) this distribution is the free probability analogue of the classical GIG distribution. It is natural then to ask if the fGIG distribution shares more properties with its classical counterpart. It is known that the classical GIG distribution is infinitely divisible (see [4]) and selfdecomposable (see [14, 31]). In [21] the GIG distribution was characterized in terms of an equality in distribution, namely if we take \(X,Y_1,Y_2\) independent and such that \(Y_1\) and \(Y_2\) have Gamma distributions with suitable parameters and we assume that

$$\begin{aligned} X{\mathop {=}\limits ^{d}}\frac{1}{Y_2+\frac{1}{Y_1+X}}, \end{aligned}$$ (1.1)

then X necessarily has a GIG distribution. A simpler version of this theorem characterizes a smaller class of GIG distributions by the equality

$$\begin{aligned} X{\mathop {=}\limits ^{d}}\frac{1}{Y_1+X} \end{aligned}$$ (1.2)

for X and \(Y_1\) as described above.

The overall result of this paper is that the two distributions GIG and fGIG indeed have many similarities. We show that the fGIG distribution is freely infinitely divisible and, even more, that it is free regular. Moreover the fGIG distribution can be characterized by the equality in distribution (1.2), where one has to replace the independence assumption by freeness and assume that \(Y_1\) has a free Poisson distribution. Since only a few examples of freely selfdecomposable distributions are known, it is interesting to ask whether fGIG has this property. It turns out that selfdecomposability is the point where the symmetry between GIG and fGIG partially breaks down: not all fGIG distributions are freely selfdecomposable. We find conditions on the parameters of the fGIG family for which these distributions are freely selfdecomposable. Apart from the results mentioned above we prove that the fGIG distribution is unimodal. We also point out that in [12] it was proved that fGIG maximizes a certain free entropy functional. An easy application of Gibbs’ inequality shows that the classical GIG maximizes the same functional of classical entropy.

The paper is organized as follows: in Sect. 2 we briefly recall the basics of free probability and then study some properties of fGIG distributions. Section 3 is devoted to the study of free infinite divisibility, free regularity, free selfdecomposability and unimodality of the fGIG distribution. In Sect. 4 we show that the free counterpart of the characterization of the GIG distribution by (1.2) holds true, and we discuss entropy analogies between GIG and fGIG.

2 Free GIG Distributions

In this section we recall the definition of free GIG distribution and study basic properties of this distribution. In particular we study in detail the R-transform of fGIG distribution. Some of the properties established in this section will be crucial in the subsequent sections where we study free infinite divisibility of the free GIG distribution and characterization of the free GIG distribution. The free GIG distribution appeared for the first time (not under the name free GIG) as the almost sure weak limit of empirical spectral distributions of GIG matrices (see [12]).

2.1 Basics of Free Probability

This paper deals mainly with properties of the free GIG distribution related to free probability and in particular to free convolution. Therefore in this section we introduce the notions and tools that we need in this paper. The introduction is far from detailed; a reader not familiar with free probability may find a very good introduction to the theory in [25, 26, 38].

- \(1^o\) :

-

A \(C^*\)-probability space is a pair \(({\mathcal {A}},\varphi )\), where \({\mathcal {A}}\) is a unital \(C^*\)-algebra and \(\varphi \) is a linear functional \(\varphi :{\mathcal {A}}\rightarrow {\mathbb {C}}\), such that \(\varphi ( 1 _{\mathcal {A}})=1\) and \(\varphi (aa^*)\ge 0\) for all \(a\in {\mathcal {A}}\). Here by \( 1 _{\mathcal {A}}\) we understand the unit of \({\mathcal {A}}\).

- \(2^o\) :

-

Let I be an index set. Subalgebras \((\mathcal {A}_i)_{i\in I}\) are called free if \(\varphi (X_1\cdots X_n)=0\) whenever \(X_i\in {\mathcal {A}}_{j_i}\), \(j_1\ne j_2\ne \cdots \ne j_n\) and \(\varphi (X_i)=0\) for all \(i=1,\ldots ,n\) and \(n=1,2,\ldots \). Similarly, self-adjoint random variables \(X,\,Y\in {\mathcal {A}}\) are free (freely independent) when the subalgebras generated by \((X,\, 1 _{\mathcal {A}})\) and \((Y,\, 1 _{\mathcal {A}})\) are freely independent.

- \(3^o\) :

-

The distribution of a self-adjoint random variable is identified via moments, that is for a random variable X we say that a probability measure \(\mu \) is the distribution of X if

$$\begin{aligned} \varphi (X^n)=\int t^n\,\mathrm{d}\mu (t),\quad \text{ for } \text{ all } n=1,2,\ldots \end{aligned}$$

Note that since we assume that our algebra \({\mathcal {A}}\) is a \(C^*\)-algebra, all random variables are bounded, thus the sequence of moments indeed determines a unique probability measure.

- \(4^o\) :

-

The distribution of the sum \(X+Y\) for free random variables X, Y with respective distributions \(\mu \) and \(\nu \) is called the free convolution of \(\mu \) and \(\nu \), and is denoted by \(\mu \boxplus \nu \).
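As a small illustration of how the freeness condition in \(2^o\) works as a rule for calculating mixed moments, take free random variables X, Y and write \(X^\circ =X-\varphi (X) 1 _{\mathcal {A}}\), \(Y^\circ =Y-\varphi (Y) 1 _{\mathcal {A}}\) (the notation \(X^\circ \) is introduced only for this example). Since \(\varphi (X^\circ )=\varphi (Y^\circ )=0\), freeness gives

$$\begin{aligned} 0=\varphi (X^\circ Y^\circ )=\varphi (XY)-\varphi (X)\varphi (Y), \end{aligned}$$

so \(\varphi (XY)=\varphi (X)\varphi (Y)\), exactly as for classical independence. The first difference appears for alternating products: expanding \(\varphi (X^\circ Y^\circ X^\circ Y^\circ )=0\) in the same way yields the standard formula

$$\begin{aligned} \varphi (XYXY)=\varphi (X^2)\varphi (Y)^2+\varphi (X)^2\varphi (Y^2)-\varphi (X)^2\varphi (Y)^2, \end{aligned}$$

whereas for commuting independent variables one would get \(\varphi (X^2)\varphi (Y^2)\).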

2.2 Free GIG Distribution

In this paper we are concerned with a specific family of probability measures which we will refer to as free GIG (fGIG) distributions.

Definition 2.1

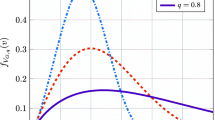

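The free Generalized Inverse Gaussian (fGIG) distribution is a measure \(\mu =\mu (\alpha ,\beta ,\lambda )\), where \(\lambda \in {\mathbb {R}}\) and \(\alpha ,\beta >0\), which is compactly supported on the interval [a, b] with the density

$$\begin{aligned} \mu (\mathrm{d}x)=\frac{1}{2\pi }\sqrt{(x-a)(b-x)}\left( \frac{\alpha }{x}+\frac{\beta }{\sqrt{ab}\,x^2}\right) \mathrm{d}x, \end{aligned}$$

where \(0<a<b\) are the solution of

$$\begin{aligned} 1-\lambda +\alpha \sqrt{ab}-\beta \frac{a+b}{2ab}=0, \end{aligned}$$ (2.1)

$$\begin{aligned} 1+\lambda +\frac{\beta }{\sqrt{ab}}-\alpha \frac{a+b}{2}=0. \end{aligned}$$ (2.2)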

Observe that the system of equations for coefficients for fixed \(\lambda \in {\mathbb {R}}\) and \(\alpha ,\beta >0\) has a unique solution \(0<a<b\). We can easily get the following

Remark 2.2

Let \(\lambda \in {\mathbb {R}}\). Given \(\alpha ,\beta >0\), the system of Eqs. (2.1) and (2.2) has a unique solution (a, b) such that

Conversely, given (a, b) satisfying (2.3), the set of Eqs. (2.1)–(2.2) has a unique solution \((\alpha ,\beta )\), which is given by

Thus we may parametrize fGIG distribution using parameters \((a,b,\lambda )\) satisfying (2.3) instead of \((\alpha ,\beta ,\lambda )\). We will make it clear whenever we will use a parametrization different than \((\alpha ,\beta ,\lambda )\).
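The passage from \((\alpha ,\beta ,\lambda )\) to the support endpoints (a, b) can be explored numerically. The sketch below is only an illustration and rests on an assumption: it takes the system (2.1)–(2.2) to be \(1-\lambda +\alpha \sqrt{ab}-\beta \frac{a+b}{2ab}=0\), \(1+\lambda +\frac{\beta }{\sqrt{ab}}-\alpha \frac{a+b}{2}=0\) and the density to be \(\frac{1}{2\pi }\sqrt{(x-a)(b-x)}\,\big (\frac{\alpha }{x}+\frac{\beta }{\sqrt{ab}\,x^2}\big )\) on [a, b]. The function names `fgig_support` and `fgig_mass` are hypothetical. Eliminating \((a+b)/2\) reduces the assumed system to a quartic in \(s=\sqrt{ab}\) with exactly one positive root, which is found by bisection.

```python
import math


def fgig_support(alpha: float, beta: float, lam: float):
    """Solve the ASSUMED system (2.1)-(2.2) for the endpoints 0 < a < b.

    With s = sqrt(ab) and m = (a+b)/2, equation (2.2) gives
    m = (1 + lam + beta/s) / alpha, and (2.1) becomes
    alpha^2 s^4 + alpha (1-lam) s^3 - beta (1+lam) s - beta^2 = 0.
    """
    def h(s: float) -> float:
        return (alpha ** 2 * s ** 4 + alpha * (1.0 - lam) * s ** 3
                - beta * (1.0 + lam) * s - beta ** 2)

    lo, hi = 0.0, 1.0          # h(0) = -beta^2 < 0
    while h(hi) <= 0.0:        # h grows like s^4, so this terminates
        hi *= 2.0
    for _ in range(200):       # plain bisection on the unique positive root
        mid = 0.5 * (lo + hi)
        if h(mid) > 0.0:
            hi = mid
        else:
            lo = mid
    s = 0.5 * (lo + hi)
    m = (1.0 + lam + beta / s) / alpha
    root = math.sqrt(max(m * m - s * s, 0.0))
    return m - root, m + root


def fgig_mass(alpha: float, beta: float, lam: float, n: int = 4000) -> float:
    """Total mass of the ASSUMED density, via x = c + r cos(theta)."""
    a, b = fgig_support(alpha, beta, lam)
    s = math.sqrt(a * b)
    c, r = 0.5 * (a + b), 0.5 * (b - a)
    total = 0.0
    for k in range(n):
        theta = math.pi * (k + 0.5) / n
        x = c + r * math.cos(theta)
        # sqrt((x-a)(b-x)) = r sin(theta) and dx = r sin(theta) dtheta
        total += (r * math.sin(theta)) ** 2 * (alpha / x + beta / (s * x * x))
    return total / (2.0 * n)   # (1/(2 pi)) * (pi/n) * sum


if __name__ == "__main__":
    print(fgig_support(1.0, 1.0, 0.0))
    print(fgig_mass(2.0, 3.0, 1.5))
```

Two sanity checks are available without further input: the density should integrate to one, and the endpoints should satisfy the relation \(a(\alpha ,\beta ,-\lambda )=\beta /(\alpha \, b(\alpha ,\beta ,\lambda ))\) used in the proof of Remark 2.5.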

Remark 2.3

It is useful to introduce another parameterization to describe the distribution \(\mu (\alpha ,\beta ,\lambda )\). Define

observe that we have then

The condition (2.3) is equivalent to

Thus one can describe any measure \(\mu (\alpha ,\beta ,\lambda )\) in terms of \(\lambda ,A,B\).

2.3 R-Transform of fGIG Distribution

The R-transform of the measure \(\mu (\alpha ,\beta ,\lambda )\) was calculated in [34]. Since the R-transform will play a crucial role in the paper we devote this section to a detailed study of its properties. We also point out some properties of the fGIG distribution which are derived from properties of the R-transform.

Before we present the R-transform of fGIG distribution let us briefly recall how the R-transform is defined and stress its importance for free probability.

Remark 2.4

- \(1^o\) :

-

For a probability measure \(\mu \) one defines its Cauchy transform via

$$\begin{aligned} G_\mu (z)=\int \frac{1}{z-x}\mathrm{d}\mu (x). \end{aligned}$$

It is an analytic function on the upper half-plane with values in the lower half-plane. The Cauchy transform determines the measure uniquely, and there is an inversion formula, called the Stieltjes inversion formula: for \(h_\varepsilon (t)=-\tfrac{1}{\pi }\text { Im}\, G_\mu (t+i\varepsilon )\) one has

$$\begin{aligned} \mathrm{d}\mu (t)=\lim _{\varepsilon \rightarrow 0^+} h_\varepsilon (t)\,\mathrm{d}t, \end{aligned}$$

where the limit is taken in the weak topology.

- \(2^o\) :

-

For a compactly supported measure \(\mu \) one can define, in a neighbourhood of the origin, the so-called R-transform by

$$\begin{aligned} R_\mu (z)=G_\mu ^{\langle -1 \rangle }(z)-\frac{1}{z}, \end{aligned}$$

where by \(G_\mu ^{\langle -1 \rangle }\) we denote the inverse under composition of the Cauchy transform of \(\mu \).

The relevance of the R-transform for free probability comes from the fact that it linearizes free convolution, that is \(R_{\mu \boxplus \nu }=R_\mu +R_\nu \) in a neighbourhood of zero.
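The definition in \(2^o\) and the linearization property can be checked by hand on the simplest example, Wigner’s semicircle law mentioned in the Introduction. The sketch below is not taken from the paper; it uses the standard closed forms \(G(w)=\big (w-\sqrt{w^2-4\sigma ^2}\big )/(2\sigma ^2)\) (for real \(w>2\sigma \)) and \(R(z)=\sigma ^2 z\) for the semicircle law with variance \(\sigma ^2\), and the function names are hypothetical.

```python
import math


def cauchy_semicircle(w: float, var: float) -> float:
    """Cauchy transform of the semicircle law with variance var, real w > 2*sqrt(var)."""
    return (w - math.sqrt(w * w - 4.0 * var)) / (2.0 * var)


def r_semicircle(z: float, var: float) -> float:
    """R-transform of the semicircle law with variance var."""
    return var * z


def roundtrip(z: float, var: float) -> float:
    """Evaluate G(R(z) + 1/z); by definition of the R-transform this returns z."""
    return cauchy_semicircle(r_semicircle(z, var) + 1.0 / z, var)


def roundtrip_sum(z: float, var1: float, var2: float) -> float:
    """Add the two R-transforms and invert with the Cauchy transform of
    the semicircle law of variance var1 + var2 (linearization of free convolution)."""
    k = r_semicircle(z, var1) + r_semicircle(z, var2) + 1.0 / z
    return cauchy_semicircle(k, var1 + var2)


if __name__ == "__main__":
    print(roundtrip(0.2, 1.5))
    print(roundtrip_sum(0.2, 1.0, 0.5))
```

The argument z has to be small and positive (here \(0<z<1/\sigma \)) so that \(R(z)+1/z\) lies to the right of the support, where the closed form of G above is valid.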

The R-transform of fGIG distribution is given by

in a neighbourhood of 0, where the square root is the principal value,

and

Note that \(z=0\) is a removable singular point of \(r_{\alpha ,\beta ,\lambda }\). Observe that in terms of A, B defined by (2.6) we have

It is straightforward to observe that (2.7) implies \(A (\lambda A+B)<2 A B<2B^2\), thus we have \(\gamma <0\).

The following remark was used in [34, Remark 2.1] without a proof. We give a proof here.

Remark 2.5

We have \(f_{\alpha ,\beta ,\lambda }(z)=f_{\alpha ,\beta ,-\lambda }(z)\), where \(\alpha ,\beta >0,\lambda \in {\mathbb {R}}\).

Proof

To see this one has to insert the definition of \(\gamma \) into (2.9) to obtain

where \(a=a(\alpha ,\beta ,\lambda )\) and \(b=b(\alpha ,\beta ,\lambda )\). Thus it suffices to show that the quantity \(g(\alpha ,\beta ,\lambda ):=ab\alpha ^2-2\alpha \beta \frac{a+b}{\sqrt{ab}}+\frac{\beta ^2}{ab}\) does not depend on the sign of \(\lambda \). To see this, observe from the system of Eqs. (2.1) and (2.2) that \(a(\alpha ,\beta ,-\lambda )=\frac{\beta }{\alpha b(\alpha ,\beta ,\lambda )}\) and \(b(\alpha ,\beta ,-\lambda )=\frac{\beta }{\alpha a(\alpha ,\beta ,\lambda )}\). It is then straightforward to check that \( g(\alpha ,\beta ,-\lambda ) = g(\alpha ,\beta ,\lambda ). \)\(\square \)
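For completeness, the last check can be written out as follows. Put \(a'=a(\alpha ,\beta ,-\lambda )=\frac{\beta }{\alpha b}\) and \(b'=b(\alpha ,\beta ,-\lambda )=\frac{\beta }{\alpha a}\), with \(a,b\) evaluated at \((\alpha ,\beta ,\lambda )\); the primed notation is used only here. Then

$$\begin{aligned} a'b'=\frac{\beta ^2}{\alpha ^2 ab},\qquad \frac{a'+b'}{\sqrt{a'b'}}=\frac{\beta (a+b)}{\alpha ab}\cdot \frac{\alpha \sqrt{ab}}{\beta }=\frac{a+b}{\sqrt{ab}}, \end{aligned}$$

so that \(a'b'\alpha ^2=\frac{\beta ^2}{ab}\) and \(\frac{\beta ^2}{a'b'}=ab\alpha ^2\). Hence passing from \(\lambda \) to \(-\lambda \) swaps the first and the third term of g and leaves the middle term unchanged, which gives \(g(\alpha ,\beta ,-\lambda )=g(\alpha ,\beta ,\lambda )\).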

Proposition 2.6

The R-transform of the measure \(\mu (\alpha ,\beta ,\lambda )\) can be extended to a function (still denoted by \(r_{\alpha ,\beta ,\lambda }\)) which is analytic on \({\mathbb {C}}^{-}\) and continuous on \(({\mathbb {C}}^- \cup {\mathbb {R}}){\setminus }\{\alpha \}\).

Proof

A direct calculation shows that using parameters A, B defined by (2.6) the polynomial \(f_{\alpha ,\beta ,\lambda }\) under the square root factors as

Thus we can write

where

It is straightforward to verify that (2.7) implies \(\eta \ge \alpha \) with equality valid only when \(\lambda =0\).

Calculating \(f_{\alpha ,\beta ,\lambda }(0)\) using first (2.9) and then (2.10) we get \(4 \beta \eta \delta ^2 = \alpha ^2\). Since \(\eta \ge \alpha \) and \(\delta <0\), we see that \(\delta \ge -\sqrt{\alpha /(4\beta )}\) with equality only when \(\lambda =0\).

Since all roots of \(f_{\alpha ,\beta ,\lambda }\) are real, the square root \(\sqrt{f_{\alpha ,\beta ,\lambda }(z)}\) may be defined continuously on \({\mathbb {C}}^-\cup {\mathbb {R}}\) so that \(\sqrt{f_{\alpha ,\beta ,\lambda }(0)}=\alpha \). As noted above \(\delta <0\), and continuity of \(f_{\alpha ,\beta ,\lambda }\) implies that we have

where we take the principal value of the square root in the expression \(\sqrt{4\beta (\eta -z)}\). Thus finally we arrive at the following form of the R-transform

which is analytic in \({\mathbb {C}}^-\) and continuous in \(({\mathbb {C}}^- \cup {\mathbb {R}}){\setminus }\{\alpha \}\) as required. \(\square \)

Next we describe the behaviour of the R-transform around the singular point \(z=\alpha \).

Proposition 2.7

If \(\lambda >0\) then

If \(\lambda <0\) then

In the remaining case \(\lambda =0\) one has

Proof

By the definition we have \(f_{\alpha ,\beta ,\lambda }(\alpha ) = (\lambda \alpha )^2\), substituting this in the expression (2.13) we obtain that \( \alpha |\lambda | = 2(\alpha -\delta )\sqrt{\beta (\eta -\alpha )}. \) Taking the Taylor expansion around \(z=\alpha \) for \(\lambda \ne 0\) we obtain

This implies (2.15) and (2.16).

The case \(\lambda =0\) follows from the fact that in this case we have \(\eta =\alpha \). \(\square \)

Corollary 2.8

In the case \(\lambda <0\) one can extend \(r_{\alpha ,\beta ,\lambda }\) to an analytic function in \({\mathbb {C}}^-\) and continuous in \({\mathbb {C}}^- \cup {\mathbb {R}}\).

2.4 Some Properties of fGIG Distribution

We study here further properties of the free GIG distributions. Some of them motivate Sect. 4 where we will characterize fGIG distribution in a way analogous to classical GIG distribution.

The next remark recalls the definition and some basic facts about free Poisson distribution, which will play an important role in this paper.

Remark 2.9

- \(1^o\) :

-

Marchenko–Pastur (or free-Poisson) distribution \(\nu =\nu (\gamma , \lambda )\) is defined by the formula

$$\begin{aligned} \nu =\max \{0,\,1-\lambda \}\,\delta _0+{\tilde{\nu }}, \end{aligned}$$

where \(\gamma ,\lambda > 0\) and the measure \({\tilde{\nu }}\), supported on the interval \((\gamma (1-\sqrt{\lambda })^2,\,\gamma (1+\sqrt{\lambda })^2)\), has the density (with respect to the Lebesgue measure)

$$\begin{aligned} {\tilde{\nu }}(\mathrm{d}x)=\frac{1}{2\pi \gamma x}\,\sqrt{4\lambda \gamma ^2-(x-\gamma (1+\lambda ))^2}\,\mathrm{d}x. \end{aligned}$$

- \(2^o\) :

-

The R-transform of the free Poisson distribution \(\nu (\gamma ,\lambda )\) is of the form

$$\begin{aligned} r_{\nu (\gamma , \lambda )}(z)=\frac{\gamma \lambda }{1-\gamma z}. \end{aligned}$$
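The two formulas of this remark can be tested against each other numerically. The following sketch (function names are hypothetical, and it is restricted to \(\lambda >1\) so that there is no atom at zero) integrates against the density from \(1^o\) and checks that the Cauchy transform evaluated at \(r_{\nu (\gamma ,\lambda )}(z)+1/z\) returns z, as the definition of the R-transform requires.

```python
import math


def mp_integral(f, gamma: float, lam: float, n: int = 4000) -> float:
    """Integrate f against the free Poisson density nu(gamma, lam), lam > 1.

    Substituting x = c + r cos(theta) with c = gamma (1 + lam) and
    r = 2 gamma sqrt(lam) turns the square root in the density into r sin(theta).
    """
    c = gamma * (1.0 + lam)
    r = 2.0 * gamma * math.sqrt(lam)
    total = 0.0
    for k in range(n):
        theta = math.pi * (k + 0.5) / n
        x = c + r * math.cos(theta)
        weight = (r * math.sin(theta)) ** 2 / (2.0 * math.pi * gamma * x)
        total += f(x) * weight
    return total * math.pi / n


def mp_cauchy(w: float, gamma: float, lam: float) -> float:
    """Cauchy transform G(w) for real w to the right of the support."""
    return mp_integral(lambda x: 1.0 / (w - x), gamma, lam)


def mp_r_transform(z: float, gamma: float, lam: float) -> float:
    """R-transform of the free Poisson law as stated in the remark."""
    return gamma * lam / (1.0 - gamma * z)


if __name__ == "__main__":
    gamma, lam, z = 1.0, 2.0, 0.1
    print(mp_integral(lambda x: 1.0, gamma, lam))   # total mass
    print(mp_integral(lambda x: x, gamma, lam))     # mean
    print(mp_cauchy(mp_r_transform(z, gamma, lam) + 1.0 / z, gamma, lam))
```

The mean equals \(r_{\nu (\gamma ,\lambda )}(0)=\gamma \lambda \), the first free cumulant, which gives a second consistency check between the density and the R-transform.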

The next proposition, proved in [34, Remark 2.1], is the free counterpart of a convolution property of the classical Gamma and GIG distributions. The proof is a straightforward calculation of the R-transform with the help of Remark 2.5.

Proposition 2.10

Let X and Y be free, X free GIG distributed \(\mu (\alpha ,\beta ,-\lambda )\) and Y free Poisson distributed \(\nu (1/\alpha ,\lambda )\) respectively, for \(\alpha ,\beta ,\lambda >0\). Then \(X+Y\) is free GIG distributed \(\mu (\alpha ,\beta ,\lambda )\).

We also quote another result from [34, Remark 2.2] which is again the free analogue of a property of classical GIG distribution. The proof is a simple calculation of the density.

Proposition 2.11

If X has the free GIG distribution \(\mu (\alpha ,\beta ,\lambda )\) then \(X^{-1}\) has the free GIG distribution \(\mu (\beta ,\alpha ,-\lambda )\).

The two propositions above imply some distributional properties of the fGIG distribution. In Sect. 4 we will study a characterization of the fGIG distribution related to these properties.

Remark 2.12

- \(1^o\) :

-

Fix \(\lambda ,\alpha >0\). If X has fGIG distribution \(\mu (\alpha ,\alpha ,-\lambda )\) and Y has the free Poisson distribution \(\nu (1/\alpha ,\lambda )\) and X, Y are free then \(X{\mathop {=}\limits ^{d}}(X+Y)^{-1}\).

Indeed by Proposition 2.10 we get that \(X+Y\) has fGIG distribution \(\mu (\alpha ,\alpha ,\lambda )\) and now Proposition 2.11 implies that \((X+Y)^{-1}\) has the distribution \(\mu (\alpha ,\alpha ,-\lambda )\).

- \(2^o\) :

-

One can easily generalize the above observation. Take \(\alpha ,\beta ,\lambda >0\), and \(X,Y_1,Y_2\) free, such that X has fGIG distribution \(\mu (\alpha ,\beta ,-\lambda )\), \(Y_1\) is free Poisson distributed \(\nu (1/\beta ,\lambda )\) and \(Y_2\) is distributed \(\nu (1/\alpha ,\lambda )\), then \(X{\mathop {=}\limits ^{d}}(Y_1+(Y_2+X)^{-1})^{-1}\).

Similarly as before we have that \(X+Y_2\) has distribution \(\mu (\alpha ,\beta ,\lambda )\), then by Proposition 2.11 we get that \((X+Y_2)^{-1}\) has distribution \(\mu (\beta ,\alpha ,-\lambda )\). Then we have that \(Y_1+(Y_2+X)^{-1}\) has the distribution \(\mu (\beta ,\alpha ,\lambda )\) and finally we get \((Y_1+(Y_2+X)^{-1})^{-1}\) has the desired distribution \(\mu (\alpha ,\beta ,-\lambda )\).

- \(3^o\) :

-

Both identities above can be iterated finitely many times, so that one obtains that \(X{\mathop {=}\limits ^{d}}\left( Y_1+\left( Y_2+\cdots \right) ^{-1}\right) ^{-1}\), where \(Y_1,Y_2,\ldots \) are free, for k odd \(Y_k\) has the free Poisson distribution \(\nu (1/\beta ,\lambda )\) and for k even \(Y_k\) has the distribution \(\nu (1/\alpha ,\lambda )\). For the case described in \(1^o\) one simply has to take \(\alpha =\beta \). We are not sure if infinite continued fractions can be defined.

Next we study limits of the fGIG measure \(\mu (\alpha ,\beta ,\lambda )\) when \(\alpha \rightarrow 0\) and \(\beta \rightarrow 0\). This was stated with a mistake in [34, Remark 2.3].

Proposition 2.13

As \(\beta \downarrow 0\) we have the following weak limits of the fGIG distribution

Taking into account Proposition 2.11 one can also describe limits when \(\alpha \downarrow 0\) for \(\lambda \ge 1\).

Remark 2.14

This result reflects the fact that GIG matrix generalizes the Wishart matrix for \(\lambda \ge 1\), but not for \(\lambda <1\) (see [12] for GIG matrix and [19] for the Wishart matrix).

Proof

We will find the limit by calculating limits of the R-transform, since convergence of the R-transform implies weak convergence. Observe that from Remark 2.5 we can consider only \(\lambda \ge 0\), however we decided to present all cases, as the consideration will give asymptotic behaviour of support of fGIG measure. In view of (2.9), the only non-trivial part is the limit of \(\beta \gamma \) when \(\beta \rightarrow 0\). Observe that if we define \(F(a,b,\alpha ,\beta ,\lambda )\) by

then the solutions of the system (2.1), (2.2) are functions \((a(\alpha ,\beta ,\lambda ),b(\alpha ,\beta ,\lambda ))\), such that \(F(a(\alpha ,\beta ,\lambda ),b(\alpha ,\beta ,\lambda ),\alpha ,\beta ,\lambda )=(0,0)\). Using the Implicit Function Theorem and calculating the Jacobian with respect to (a, b), we observe that \(a(\alpha ,\beta ,\lambda )\) and \(b(\alpha ,\beta ,\lambda )\) are continuous (even differentiable) functions of \(\alpha ,\beta >0\) and \(\lambda \in {\mathbb {R}}\).

Case 1. \(\lambda >1\)

Observe that if we take \(\beta =0\) then a real solution \(0<a<b\) for the system (2.1), (2.2)

still exists. Moreover, because the Jacobian is non-zero at \(\beta =0\), the Implicit Function Theorem says that the solutions are continuous at \(\beta =0\). Thus using (2.20) we get

The above implies that \(\beta \gamma \rightarrow 0\) when \(\beta \rightarrow 0\) since a, b have finite and non-zero limit when \(\beta \rightarrow 0\), as explained above.

Case 2. \(\lambda <-1\)

In that case we see that setting \(\beta =0\) in (2.1) leads to an equation with no real solution for (a, b). In this case the part \(\beta \tfrac{a+b}{2ab}\) has non-zero limit when \(\beta \rightarrow 0\). To be precise substitute \(a=\beta a^\prime \) and \(b=\beta b^\prime \) in (2.1), (2.2), and then we get

The above system is equivalent to the system (2.1), (2.2) with \(\alpha :=\alpha \beta \) and \(\beta :=1\). If we set \(\beta =0\) as in Case 1 we get

The above system has a solution \(0<a^\prime <b^\prime \) for \(\lambda <-1\). Calculating the Jacobian we see that it is non-zero at \(\beta =0\), so the Implicit Function Theorem implies that \(a^\prime \) and \(b^\prime \) are continuous functions at \(\beta =0\) in the case \(\lambda <-1\).

This implies that in the case \(\lambda <-1\) the solutions of (2.1), (2.2) are \(a(\beta )= \beta a^\prime +o(\beta )\) and \(b(\beta )= \beta b^\prime +o(\beta )\). Thus we have

where in the equation one before the last we used (2.23).

Case 3. \(|\lambda |< 1\)

Observe that neither (2.20) nor (2.23) has a real solution in the case \(|\lambda |< 1\). This is because in this case asymptotically \(a(\beta )=a^\prime \beta +o(\beta )\) and b has a finite positive limit as \(\beta \rightarrow 0\). Similarly as in Case 2 let us substitute \(a=\beta a^\prime \) in (2.1), (2.2), which gives

If we set \(\beta =0\) we get

which obviously has positive solution \((a^\prime ,b)\) when \(|\lambda |<1\). As before the Jacobian is non-zero at \(\beta =0\), so \(a^\prime \) and b are continuous at \(\beta =0\).

Now we go back to the limit \(\lim _{\beta \rightarrow 0}\beta \gamma \). We have \(a(\beta )=\beta a^\prime +o(\beta )\), thus

Case 4. \(|\lambda |= 1\)

An analysis similar to the above cases shows that in the case \(\lambda =1\) we have \(a(\beta )=a^\prime \beta ^{2/3}+o(\beta ^{2/3})\) and b has positive limit when \(\beta \rightarrow 0\). In the case \(\lambda =-1\) one gets \(a(\beta )=a^\prime \beta +o(\beta )\) and \(b(\beta )=b^\prime \beta ^{1/3}+o(\beta ^{1/3})\) as \(\beta \rightarrow 0\).

Thus we can calculate the limit of \(f_{\alpha , \beta , \lambda }\) as \(\beta \rightarrow 0\),

The above allows us to calculate limiting R-transform and hence the Cauchy transform which implies (2.19). \(\square \)

Corollary 2.15

Considering the continuous dependence of roots on parameters we get the following asymptotic behaviour of the double root \(\delta <0\) and the simple root \(\eta \ge \alpha \).

-

(i)

If \(|\lambda |>1\) then \(\delta \rightarrow \alpha /(1-|\lambda |)\) and \(\eta \rightarrow +\infty \) as \(\beta \downarrow 0\).

-

(ii)

If \(|\lambda |<1\) then \(\delta \rightarrow -\infty \) and \(\eta \rightarrow \alpha /(1-\lambda ^2)\) as \(\beta \downarrow 0\).

-

(iii)

If \(\lambda =\pm 1\) then \(\delta \rightarrow -\infty \) and \(\eta \rightarrow +\infty \) as \(\beta \downarrow 0\).

3 Regularity of fGIG Distribution Under Free Convolution

In this section we study in detail regularity properties of the fGIG distribution related to the operation of free additive convolution. In the next theorem we collect all the results proved in this section. The theorem contains several statements about free GIG distributions. Each subsection of the present section proves a part of the theorem.

Theorem 3.1

The following holds for the free GIG measure \(\mu (\alpha ,\beta ,\lambda )\):

- \(1^o\) :

-

It is freely infinitely divisible for any \(\alpha ,\beta >0\) and \(\lambda \in {\mathbb {R}}.\)

- \(2^o\) :

-

The free Lévy measure is of the form

$$\begin{aligned} \tau _{\alpha ,\beta ,\lambda }(\mathrm{d}x)=\max \{\lambda ,0\} \delta _{1/\alpha }(\mathrm{d}x) + \frac{(1-\delta x) \sqrt{\beta (1-\eta x)}}{\pi x^{3/2} (1-\alpha x)} 1_{(0,1/\eta )}(x)\, \mathrm{d}x. \end{aligned}$$ (3.1)

- \(3^o\) :

-

It is free regular with zero drift for all \(\alpha ,\beta >0\) and \(\lambda \in {\mathbb {R}}\).

- \(4^o\) :

-

It is freely self-decomposable for \(\lambda \le -\frac{B^{\frac{3}{2}}}{A\sqrt{9B-8A}}.\)

- \(5^o\) :

-

It is unimodal.
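To get a feeling for the measure in (3.1), it helps to look at its density near the endpoints of \((0,1/\eta )\). The following short computation is only an illustration and uses nothing beyond (3.1) and the inequalities \(\eta \ge \alpha>0>\delta \) from Sect. 2.3. As \(x\downarrow 0\) the factors \(1-\delta x\), \(1-\eta x\) and \(1-\alpha x\) all tend to 1, so

$$\begin{aligned} \frac{(1-\delta x) \sqrt{\beta (1-\eta x)}}{\pi x^{3/2} (1-\alpha x)}\sim \frac{\sqrt{\beta }}{\pi x^{3/2}},\qquad x\downarrow 0. \end{aligned}$$

Consequently \(\tau _{\alpha ,\beta ,\lambda }\) has infinite total mass, while \(\int _0^{\varepsilon } x\,\tau _{\alpha ,\beta ,\lambda }(\mathrm{d}x)<\infty \) for small \(\varepsilon >0\), because \(x\cdot x^{-3/2}=x^{-1/2}\) is integrable at zero. At the other endpoint, for \(\lambda \ne 0\) one has \(\eta >\alpha \), so \(1-\alpha x\) stays bounded away from zero on \((0,1/\eta )\) and the density vanishes like a square root at \(x=1/\eta \); for \(\lambda =0\) one has \(\eta =\alpha \) and the density behaves like \((1-\alpha x)^{-1/2}\), which is still integrable. Altogether \(\int \min \{1,|x|\}\,\tau _{\alpha ,\beta ,\lambda }(\mathrm{d}x)<\infty \), which is the integrability condition that appears in the discussion of free regularity in Sect. 3.2.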

3.1 Free Infinite Divisibility and Free Lévy Measure

As we mentioned before, having the operation of free convolution defined, it is natural to study infinite divisibility with respect to \(\boxplus \). We say that \(\mu \) is freely infinitely divisible if for any \(n\ge 1\) there exists a probability measure \(\mu _n\) such that

$$\begin{aligned} \mu =\underbrace{\mu _n\boxplus \cdots \boxplus \mu _n}_{n \text{ times }}. \end{aligned}$$

It turns out that free infinite divisibility of compactly supported measures can be described in terms of analytic properties of the R-transform. In particular it was proved in [36, Theorem 4.3] that free infinite divisibility is equivalent to the inequality \(\text { Im}(r_{\alpha ,\beta ,\lambda }(z)) \le 0\) for all \(z\in {\mathbb {C}}^-\).
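The criterion is easy to see at work on the free Poisson distribution, whose R-transform \(r_{\nu (\gamma ,\lambda )}(z)=\gamma \lambda /(1-\gamma z)\) from Remark 2.9 is analytic on \({\mathbb {C}}^-\). The sketch below is only an illustration on a stand-in example, not a proof and not the fGIG case; the function names and the choice of sample grid are arbitrary. It evaluates the imaginary part on points of the lower half-plane.

```python
def r_free_poisson(z: complex, gamma: float, lam: float) -> complex:
    """R-transform of the free Poisson law nu(gamma, lam) from Remark 2.9."""
    return gamma * lam / (1.0 - gamma * z)


def max_imag_lower_half_plane(r, xs, ys) -> float:
    """Largest imaginary part of r over the sample points x - i*y, y > 0."""
    return max(r(complex(x, -y)).imag for x in xs for y in ys)


if __name__ == "__main__":
    xs = [-5.0 + 0.25 * k for k in range(41)]    # real parts in [-5, 5]
    ys = [0.01 * 2 ** k for k in range(12)]      # distances from the real axis
    worst = max_imag_lower_half_plane(
        lambda z: r_free_poisson(z, 1.0, 2.0), xs, ys)
    print(worst)
```

Here the sign can also be read off directly: for \(z=x-iy\) with \(y>0\) one has \(\text { Im}\, r_{\nu (\gamma ,\lambda )}(z)=-\gamma ^2\lambda y/|1-\gamma z|^2<0\), so the sampled maximum is negative for every choice of grid.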

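As in the classical case, for a freely infinitely divisible probability measure one can represent its free cumulant transform with a Lévy–Khintchine type formula. For a probability measure \(\mu \) on \({\mathbb {R}}\), the free cumulant transform is defined by

$$\begin{aligned} {\mathcal {C}}^\boxplus _\mu (z)=zR_\mu (z). \end{aligned}$$ (3.2)

Then \(\mu \) is FID if and only if \({\mathcal {C}}^\boxplus _\mu \) can be analytically extended to \({\mathbb {C}}^-\) via the formula

$$\begin{aligned} {\mathcal {C}}^\boxplus _\mu (z)=\xi z+\zeta z^2+\int _{{\mathbb {R}}}\left( \frac{1}{1-zx}-1-zx\,1_{[-1,1]}(x)\right) \tau (\mathrm{d}x),\qquad z\in {\mathbb {C}}^-, \end{aligned}$$ (3.3)

where \(\xi \in {\mathbb {R}},\) \(\zeta \ge 0\) and \(\tau \) is a measure on \({\mathbb {R}}\) such that

$$\begin{aligned} \tau (\{0\})=0,\qquad \int _{{\mathbb {R}}}\min \{1,x^2\}\,\tau (\mathrm{d}x)<\infty . \end{aligned}$$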

The triplet \((\xi ,\zeta ,\tau )\) is called the free characteristic triplet of \(\mu \), and \(\tau \) is called the free Lévy measure of \(\mu \). The formula (3.3) is called the free Lévy–Khintchine formula.

Remark 3.2

The above form of free Lévy–Khintchine formula was obtained by Barndorff-Nielsen and Thorbjørnsen [6] and it has a probabilistic interpretation (see [30]). Another form was obtained by Bercovici and Voiculescu [8], which is more suitable for limit theorems.

In order to prove that all fGIG distributions are freely infinitely divisible we will use the following lemma.

Lemma 3.3

Let \(f:({\mathbb {C}}^{-} \cup {\mathbb {R}}) {\setminus } \{x_0\} \rightarrow {\mathbb {C}}\) be a continuous function, where \(x_0\in {\mathbb {R}}\). Suppose that f is analytic in \({\mathbb {C}}^{-}\), \(f(z)\rightarrow 0\) uniformly with \(z\rightarrow \infty \) and \(\text { Im}(f(x))\le 0\) for \(x \in {\mathbb {R}} {\setminus } \{x_0\}\). Suppose moreover that \(\text { Im}(f(z))\le 0\) for \(\text { Im}(z)\le 0\) in a neighbourhood of \(x_0\) then \(\text { Im}(f(z))\le 0\) for all \(z\in {\mathbb {C}}^{-}\).

Proof

Since f is analytic the function \(\text { Im}f\) is harmonic and thus satisfies the maximum principle. Fix \(\varepsilon >0\). Since \(f(z)\rightarrow 0\) uniformly as \(z\rightarrow \infty ,\) there exists \(R>0\) such that \(\text { Im}f(z)<\varepsilon \) whenever \(|z|\ge R\). Consider a domain \(D_\varepsilon \) with the boundary

Observe that on \(\partial D_\varepsilon \) we have \(\text { Im}f(z)<\varepsilon \) by the assumptions, and hence by the maximum principle we have \(\text { Im}f(z)<\varepsilon \) on the whole of \(D_\varepsilon \). Letting \(\varepsilon \rightarrow 0\) we get that \(\text { Im}f(z) \le 0\) on \({\mathbb {C}}^{-}\). \(\square \)

Next we proceed with the proof of free infinite divisibility of fGIG distributions.

Proof of Theorem 3.1

\(1^o\) Case 1. \(\lambda >0\).

Observe that we have

From (2.14) we see that \(\text { Im}(r_{\alpha ,\beta ,\lambda }(x))=0\) for \(x \in (-\infty ,\alpha ) \cup (\alpha ,\eta ]\), and

since \(\eta>\alpha>0>\delta \).

Moreover observe that by (2.15) for \(\varepsilon >0\) small enough we have \(\text { Im}(r_{\alpha ,\beta ,\lambda }(\alpha +\varepsilon e^{i \theta }))<0\), for \(\theta \in [-\pi ,0]\). Now Lemma 3.3 implies that free GIG distribution is freely ID in the case \(\lambda >0\).

Case 2. \(\lambda <0\).

In this case a similar argument shows that \(\mu (\alpha ,\beta ,\lambda )\) is FID. Moreover by (2.16) the point \(z=\alpha \) is a removable singularity and \(r_{\alpha ,\beta ,\lambda }\) extends to a continuous function on \({\mathbb {C}}^-\cup {\mathbb {R}}\). Thus one does not need to take care of the behaviour around \(z=\alpha \).

Case 3: \(\lambda =0\).

For \(\lambda =0\) one can use a similar argument based on (2.17). Alternatively, the free GIG family \(\mu (\alpha ,\beta ,\lambda )\) is weakly continuous with respect to \(\lambda \), and free infinite divisibility is preserved under weak limits, so the case \(\lambda =0\) may be deduced from the previous two cases. \(\square \)

Next we will determine the free Lévy measure of the free GIG distribution \(\mu (\alpha ,\beta ,\lambda )\).

Proof of Theorem 3.1

\(2^o\) Let \((\xi _{\alpha ,\beta ,\lambda }, \zeta _{\alpha ,\beta ,\lambda },\tau _{\alpha ,\beta ,\lambda })\) be the free characteristic triplet of the free GIG distribution \(\mu (\alpha ,\beta ,\lambda )\). By the Stieltjes inversion formula mentioned in Remark 2.4, the absolutely continuous part of the free Lévy measure has the density

atoms are at points \(1/p~(p\ne 0)\), such that the weight given by

is non-zero, where z tends to p non-tangentially from \({\mathbb {C}}^-\). In our case the free Lévy measure does not have a singular continuous part since \(r_{\alpha ,\beta ,\lambda }\) is continuous on \({\mathbb {C}}^-\cup {\mathbb {R}}{\setminus }\{\alpha \}\). Considering (2.15)–(2.17) and (3.6) we obtain the free Lévy measure

Recall that \(\eta \ge \alpha>0>\delta \) holds, and \(\eta =\alpha \) if and only if \(\lambda =0\). The other two parameters \(\xi _{\alpha ,\beta ,\lambda }\) and \(\zeta _{\alpha ,\beta ,\lambda }\) in the free characteristic triplet will be determined in Sect. 3.2. \(\square \)

3.2 Free Regularity

In this subsection we deal with a property stronger than free infinite divisibility, the so-called free regularity.

Let \(\mu \) be an FID distribution with the free characteristic triplet \((\xi ,\zeta ,\tau )\). When the semicircular part \(\zeta \) is zero and the free Lévy measure \(\tau \) satisfies the stronger integrability property \(\int _{{\mathbb {R}}}\min \{1,|x|\}\tau (\mathrm{d}x) < \infty \), the free Lévy–Khintchine representation reduces to

where \(\xi ' =\xi -\int _{[-1,1]}x \,\tau (\mathrm{d}x) \in {\mathbb {R}}\) is called a drift. The distribution \(\mu \) is said to be free regular [28] if \(\xi '\ge 0\) and \(\tau \) is supported on \((0,\infty )\). A probability measure \(\mu \) on \({\mathbb {R}}\) is free regular if and only if the free convolution power \(\mu ^{\boxplus t}\) is supported on \([0,\infty )\) for every \(t>0\), see [3]. Examples of free regular distributions include positive free stable distributions, free Poisson distributions and powers of free Poisson distributions [16]. A general criterion in [3, Theorem 4.6] shows that some boolean stable distributions [1] and many probability distributions [2, 3, 15] are free regular. A recent result of Ejsmont and Lehner [11, Proposition 4.13] and its proof provide a wide class of examples: given a nonnegative definite complex matrix \(\{a_{ij}\}_{i,j=1}^n\) and free selfadjoint elements \(X_1,\dots , X_n\) which have symmetric FID distributions, the polynomial \(\sum _{i,j=1}^n a_{ij} X_i X_j\) has a free regular distribution.

Proof of Theorem 3.1

\(3^o\) For the free GIG distributions, the semicircular part can be found by \(\displaystyle \zeta _{\alpha ,\beta ,\lambda }= \lim _{z\rightarrow \infty } z^{-1} r_{\alpha ,\beta ,\lambda }(z)=0\). The free Lévy measure (3.1) satisfies

and so we have the reduced formula (3.10). The drift is given by \(\xi _{\alpha ,\beta ,\lambda }'=\lim _{u \rightarrow -\infty } r_{\alpha ,\beta ,\lambda }(u)=0\). \(\square \)

3.3 Free Selfdecomposability

The classical GIG distribution is selfdecomposable [14, 31] (more strongly, hyperbolically completely monotone [10, p. 74]), and hence it is natural to ask whether the free GIG distribution is freely selfdecomposable.

A distribution \(\mu \) is said to be freely selfdecomposable (FSD) [5] if for any \(c\in (0,1)\) there exists a probability measure \(\mu _c\) such that \(\mu = (D_c\mu ) \boxplus \mu _c \), where \(D_c\mu \) is the dilation of \(\mu \), namely \((D_c\mu )(B)=\mu (c^{-1}B)\) for Borel sets \(B \subset {\mathbb {R}}\). A distribution is FSD if and only if it is FID and its free Lévy measure is of the form

\[ \tau (\mathrm{d}x) = \frac{k(x)}{|x|}\,\mathrm{d}x, \]

where \(k:{\mathbb {R}}\rightarrow [0,\infty )\) is non-decreasing on \((-\infty ,0)\) and non-increasing on \((0,\infty )\). Unlike the free regular distributions, there are only a few known examples of FSD distributions: the free stable distributions, some free Meixner distributions, the classical normal distributions and a few other distributions (see [17, Example 1.2, Corollary 3.4]). The free Poisson distribution is not FSD.

Proof of Theorem 3.1

\(4^o\) In view of (3.1), the free GIG distribution \(\mu (\alpha ,\beta ,\lambda )\) is not FSD if \(\lambda > 0\). Suppose \(\lambda \le 0\), then \(\mu (\alpha ,\beta ,\lambda )\) is FSD if and only if the function

is non-increasing on \((0,1/\eta )\). The derivative is

Hence FSD is equivalent to

Using \(\eta \ge \alpha>0>\delta \), one can show that \(2 \alpha \eta -2\eta \delta + \alpha \delta >0\), and a straightforward calculation then shows that the function g attains its minimum at a point in \((0,1/\eta )\). Thus FSD is equivalent to

In order to determine when the above inequality holds, it is convenient to switch to parameters A, B defined by (2.6). Using formulas derived in Sect. 2.3 we obtain

Calculating \(\lambda \) for which D is non-positive we obtain that

\(\square \)

Corollary 3.4

One can easily find that the maximum of the function \(-\frac{B^{\frac{3}{2}}}{A\sqrt{9B-8A}}\) over \(A,B\ge 0\) equals \(-\frac{4}{9}\sqrt{3}\). Thus the set of parameters (A, B) that give FSD distributions is nonempty if and only if \(\lambda \le -\frac{4}{9}\sqrt{3}\).
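The maximization above can be checked numerically. The following is a minimal sketch, assuming the natural domain \(9B>8A>0\) on which the square root is real; the helper names `fsd_threshold` and `maximise_over_ratio` are hypothetical and not part of the paper. Since the expression is invariant under \((A,B)\mapsto (sA,sB)\), it depends only on the ratio \(t=B/A\), so a one-dimensional grid search suffices.

```python
import math


def fsd_threshold(A, B):
    """Value of -B^{3/2} / (A * sqrt(9B - 8A)); assumes 9B > 8A > 0."""
    return -B ** 1.5 / (A * math.sqrt(9.0 * B - 8.0 * A))


def maximise_over_ratio(t_max=10.0, n=200000):
    """Grid search over t = B/A in (8/9, t_max]; returns (argmax, max)."""
    t_min = 8.0 / 9.0
    best_t, best_val = None, -math.inf
    for k in range(1, n + 1):
        t = t_min + (t_max - t_min) * k / n
        val = fsd_threshold(1.0, t)  # scale invariance: only t = B/A matters
        if val > best_val:
            best_t, best_val = t, val
    return best_t, best_val


best_t, best_val = maximise_over_ratio()
print(best_t, best_val, -4.0 * math.sqrt(3.0) / 9.0)
```

The grid maximum sits at a ratio close to \(B/A=4/3\), which matches the critical pairs \((A,\frac{4}{3}A)\) mentioned in the text.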

In the critical case \(\lambda = -\frac{4}{9}\sqrt{3}\) only the pairs \((A, \frac{4}{3}A), A>0\) give FSD distributions. If one puts \(A=12 t, B= 16 t\) then \(a=(2-\sqrt{3})^2t,b=(2+\sqrt{3})^2t\), \(\alpha = \frac{3-\sqrt{3}}{18 t}\), \(\beta = \frac{3+\sqrt{3}}{18}t, \delta = -\frac{3-\sqrt{3}}{6t}=- 2\eta \). One can easily show that \(\mu (\alpha ,\beta ,-1)\) is FSD if and only if \((0<A<)~B\le \frac{-1+\sqrt{33}}{2} A\).
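The threshold for \(\lambda =-1\) can be reproduced with a short computation. This is a sketch under an assumption: the FSD condition is read off from the corollary as \(\lambda \le -B^{3/2}/(A\sqrt{9B-8A})\), since the display stating it is not reproduced above. The function names are hypothetical. For \(\lambda =-1\) and \(t=B/A\) the condition becomes \(t^3\le 9t-8\), that is \((t-1)(t^2+t-8)\le 0\).

```python
import math


def satisfies_fsd_condition(lam, A, B):
    """Assumed condition: lam <= -B^{3/2} / (A * sqrt(9B - 8A))."""
    return lam <= -B ** 1.5 / (A * math.sqrt(9.0 * B - 8.0 * A))


def largest_admissible_ratio(lam, lo=4.0 / 3.0, hi=50.0, iters=200):
    """Bisection for the largest t = B/A satisfying the assumed condition.

    Assumes the condition holds at `lo` and fails at `hi`; the bound is
    decreasing in t for t > 4/3, so the admissible set there is an interval.
    """
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if satisfies_fsd_condition(lam, 1.0, mid):
            lo = mid
        else:
            hi = mid
    return lo


ratio = largest_admissible_ratio(-1.0)
print(ratio, (math.sqrt(33.0) - 1.0) / 2.0)
```

The same helper also reproduces the parameter relation in the critical case: with \(a=(2-\sqrt{3})^2t\) and \(b=(2+\sqrt{3})^2t\) one has \((\sqrt{b}-\sqrt{a})^2=12t\) and \((\sqrt{b}+\sqrt{a})^2=16t\), which agrees with \(A=12t\), \(B=16t\).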

Finally note that the above result is in contrast to the fact that classical GIG distributions are all selfdecomposable.

3.4 Unimodality

Since relations of unimodality with free infinite divisibility and free selfdecomposability have been studied in the literature, we decided to determine whether measures from the free GIG family are unimodal.

A measure \(\mu \) is said to be unimodal if for some \(c\in {\mathbb {R}}\)

\[ \mu (\mathrm{d}x) = \mu (\{c\})\delta _c(\mathrm{d}x) + f(x)\,\mathrm{d}x, \]

where \(f:{\mathbb {R}}\rightarrow [0,\infty )\) is non-decreasing on \((-\infty ,c)\) and non-increasing on \((c,\infty )\). In this case c is called the mode. Hasebe and Thorbjørnsen [18] proved that FSD distributions are unimodal. Since some free GIG distributions are not FSD, the result from [18] does not apply. However, it turns out that all free GIG measures are unimodal.

Proof of Theorem 3.1

\(5^o\) Calculating the derivative of the density of \(\mu (\alpha ,\beta ,\lambda )\) one obtains

Denoting by f(x) the quadratic polynomial in the numerator, one can easily see from the shape of the density that \(f(a)>0>f(b)\) and hence the derivative vanishes at a unique point in (a, b) (since f is quadratic). \(\square \)

4 Characterizations of the Free GIG Distribution

In this section we show that the fGIG distribution can be characterized in ways similar to the classical GIG distribution. In [34] the fGIG distribution was characterized by a free independence property; the classical analogue of this result characterizes the classical GIG distribution. Here we find two more instances where such an analogy holds: one is a characterization by a distributional property related to continued fractions, the other is the maximization of free entropy.

4.1 Continued Fraction Characterization

In this section we study a characterization of fGIG distribution which is analogous to the characterization of GIG distribution proved in [21]. Our strategy is different from the one used in [21]. We will not deal with continued fractions, but we will take advantage of subordination for free convolutions, which allows us to prove the simpler version of “continued fraction” characterization of fGIG distribution.

Theorem 4.1

Let Y have the free Poisson distribution \(\nu (1/\alpha ,\lambda )\) and let X be free from Y, where \(\alpha ,\lambda >0\) and \(X>0\). Then we have

\[ X{\mathop {=}\limits ^{d}}\frac{1}{X+Y} \tag{4.1} \]

if and only if X has the free GIG distribution \(\mu (\alpha ,\alpha ,-\lambda )\).

Remark 4.2

Observe that the “if” part of the above theorem is contained in Remark 2.12. We only have to show that if (4.1) holds where Y has the free Poisson distribution \(\nu (1/\alpha ,\lambda )\), then X has the free GIG distribution \(\mu (\alpha ,\alpha ,-\lambda )\).

As mentioned above, our proof of the theorem uses subordination of free convolution. This property of free convolution was first observed by Voiculescu [37] and then generalized by Biane [9]. Let us briefly recall what we mean by subordination of free additive convolution.

Remark 4.3

Subordination of free convolution states that for probability measures \(\mu ,\nu \), there exists an analytic function F defined on \({\mathbb {C}}{\setminus }{\mathbb {R}}\) with the property \(F({\overline{z}})=\overline{F(z)}\) such that for \(z\in {\mathbb {C}}^+\) we have \(\text { Im}F(z)>\text { Im}z\) and

Now if we denote by \(\omega _1\) and \(\omega _2\) subordination functions such that \(G_{\mu \boxplus \nu }=G_\mu (\omega _1)\) and \(G_{\mu \boxplus \nu }=G_\nu (\omega _2)\), then \(\omega _1(z)+\omega _2(z)=1/G_{\mu \boxplus \nu }(z)+z\).
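The relation \(\omega _1(z)+\omega _2(z)=1/G_{\mu \boxplus \nu }(z)+z\) can be illustrated on a case where everything is explicit. The sketch below assumes \(\mu =\nu \) is the standard semicircle law, so that \(\mu \boxplus \nu \) is the semicircle law with variance 2 and, by symmetry, \(\omega _1=\omega _2\). The function names are hypothetical, and the example is not taken from the paper.

```python
import cmath


def cauchy_semicircle(z, variance):
    """Cauchy transform of the centred semicircle law, valid for Im z > 0.

    The product of two principal square roots selects the branch that
    behaves like z at infinity, so that G(z) ~ 1/z.
    """
    radius = 2.0 * variance ** 0.5
    root = cmath.sqrt(z - radius) * cmath.sqrt(z + radius)
    return (z - root) / (2.0 * variance)


def subordination_for_two_semicircles(z):
    """Return (omega, G_sum) for the sum of two free standard semicirculars.

    Uses omega_1 + omega_2 = 1/G_sum(z) + z together with omega_1 = omega_2.
    """
    g_sum = cauchy_semicircle(z, 2.0)
    omega = 0.5 * (z + 1.0 / g_sum)
    return omega, g_sum


z = 1.0 + 1.0j
omega, g_sum = subordination_for_two_semicircles(z)
# Subordination: G_mu(omega(z)) should reproduce G_{mu boxplus nu}(z).
residual = abs(cauchy_semicircle(omega, 1.0) - g_sum)
print(omega, residual)
```

In this example the imaginary part of the subordination function is larger than that of z, in line with the property stated in the remark.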

Next we proceed with the proof of Theorem 4.1 which is the main result of this section.

Proof

First note that (4.1) is equivalent to

which may be equivalently stated in terms of the Cauchy transforms of both sides as

Subordination allows us to write the Cauchy transform of \(X+Y\) in two ways

Moreover \(\omega _X\) and \(\omega _Y\) satisfy

From the above we get

this together with (4.2) and (4.3) gives

Since we know that Y has free Poisson distribution \(\nu (\lambda ,1/\alpha )\) we can calculate \(\omega _Y\) in terms of \(G_{X^{-1}}\) using (4.4). To do this one has to use the identity \(G_Z^{\langle -1\rangle }(z)=r_Z(z)+1/z\) for any self-adjoint random variable Z and the form of the R-transform of free Poisson distribution recalled in Remark 2.9.
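The identity \(G_Z^{\langle -1\rangle }(z)=r_Z(z)+1/z\) can be sanity-checked numerically for a free Poisson variable. The sketch below is illustrative only and rests on assumptions that are not taken from Remark 2.9: it uses the standard parametrization by a rate and a jump size (called `rate` and `jump` here, with `rate >= 1` so that there is no atom at zero), the R-transform `rate * jump / (1 - jump * g)`, and the Marchenko–Pastur density. How these correspond to the two arguments of \(\nu (\cdot ,\cdot )\) in the text is not asserted.

```python
import math


def r_free_poisson(g, rate, jump):
    """R-transform of the free Poisson law (assumed standard form)."""
    return rate * jump / (1.0 - jump * g)


def cauchy_free_poisson(z, rate, jump, n=20000):
    """Cauchy transform by the midpoint rule applied to the density.

    Assumes rate >= 1, so the law is absolutely continuous on [a, b].
    """
    a = jump * (1.0 - math.sqrt(rate)) ** 2
    b = jump * (1.0 + math.sqrt(rate)) ** 2
    h = (b - a) / n
    total = 0.0 + 0.0j
    for k in range(n):
        x = a + (k + 0.5) * h
        density = math.sqrt((x - a) * (b - x)) / (2.0 * math.pi * jump * x)
        total += density / (z - x) * h
    return total


rate, jump = 2.0, 1.0
g = -0.2j                                   # a point in the lower half-plane
z = r_free_poisson(g, rate, jump) + 1.0 / g  # candidate for the inverse of G at g
residual = abs(cauchy_free_poisson(z, rate, jump) - g)
print(z, residual)
```

A small residual indicates that applying the Cauchy transform to \(r_Z(g)+1/g\) returns g, which is the content of the identity at this point.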

Now we can use (4.3), where we substitute \(G_{X+Y}(z)=G_{X^{-1}}(z)\) to obtain

Next we observe that we have

which allows us to transform (4.7) into an equation for \(G_X\). It is enough to show that this equation has a unique solution. Indeed, from Remark 2.12 we know that the free GIG distribution \(\mu (\alpha ,\alpha ,-\lambda )\) has the desired property, which in particular means that for X distributed according to \(\mu (\alpha ,\alpha ,-\lambda )\) Eq. (4.7) is satisfied. Thus if there is a unique solution, it has to be the Cauchy transform of the free GIG distribution.

To prove uniqueness of the Cauchy transform of X, we will prove that coefficients of the expansion of \(G_X\) at a special “good” point, are uniquely determined by \(\alpha \) and \(\lambda \).

First we will determine the point at which we will expand the function. Observe that with our assumptions \(G_{X^{-1}}\) is well defined on the negative half-line, moreover \(G_{X^{-1}}(x)<0\) for any \(x<0\), and we have \(G_{X^{-1}}(x)\rightarrow 0\) as \(x\rightarrow -\infty \). On the other hand the function \(f(x)=1/x-x\) is decreasing on the negative half-line, and negative for \(x\in (-1,0)\). Thus there exists a unique point \(c\in (-1,0)\) such that

Let us denote

and

where the last equality follows from (4.8).

One has \(N(c)=c\), and our functional Eq. (4.7) may be rewritten (with the help of (4.8)) as

Functions M and N are analytic around any \(x<0\). Consider the expansions

Observe that \(\beta _0=c\) since \(N(c)=c\). Differentiating (4.10) we observe that any \(\beta _n,\, n\ge 1\) is a rational function of \(\alpha , \lambda , c, \alpha _0,\alpha _1,\dots , \alpha _n\). Moreover any \(\beta _n,\,n\ge 1 \) is a degree one polynomial in \(\alpha _n\). We have

where \(R_n\) is a rational function of \(n+3\) variables evaluated at \((\alpha ,\lambda ,c,\alpha _0,\alpha _1,\dots , \alpha _{n-1})\), which does not depend on the distribution of X. For example \(\beta _1\) is given by

Next we investigate some properties of \(c, \alpha _0\) and \(\alpha _1\). Evaluating both sides of (4.11) at \(z=c\) yields

since \(M(c)=\alpha _0\) we get

Observe that \(\alpha _0= M(c) = G_X(1/c)\) and \(\alpha _1=M'(c)=-c^{-2} G_X'(1/c)\) hence we have

where \(\mu _X\) is the distribution of X. Using the Schwarz inequality for the first estimate, and the simple observation that \(0\le 1/(1-cx) \le 1\) for \(x>0\) for the second, we obtain

Equation (4.9) together with (4.8) gives

Substituting (4.14) into (4.16), after simple calculations we get

We start by showing that \(\alpha _0\) is determined only by \(\alpha \) and \(\lambda \). We will show that c, which we showed before is a unique number, depends only on \(\alpha \) and \(\lambda \) and thus (4.14) shows that \(\alpha _0\) is determined by \(\alpha \) and \(\lambda \).

The polynomial \(c^4 - (1+\lambda )c^3\) is non-negative, decreasing and convex for \(c<0\), tends to infinity as \(c\rightarrow -\infty \), and has a root at \(c=0\), while the polynomial \((\lambda -1)c +\alpha \) is affine and equals \(\alpha >0\) at \(c=0\). Their difference is therefore convex on \((-\infty ,0]\), negative at \(c=0\) and positive for large negative c, so there is exactly one negative c at which the two polynomials are equal. Thus the number c is uniquely determined by \((\alpha ,\lambda )\). From (4.14) we see that \(\alpha _0\) is also uniquely determined by \((\alpha ,\lambda )\).
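The uniqueness of the negative solution can be illustrated numerically. The sketch below assumes that c is characterized by the equality of the two polynomials named in the paragraph above, \(c^4-(1+\lambda )c^3=(\lambda -1)c+\alpha \); the display (4.17) itself is not reproduced here, so this reading is an assumption, and the function names and the sample values \(\alpha =1\), \(\lambda =2\) are hypothetical.

```python
def polynomial_gap(c, alpha, lam):
    """Difference of the two polynomials: c^4 - (1+lam) c^3 - ((lam-1) c + alpha)."""
    return c ** 4 - (1.0 + lam) * c ** 3 - ((lam - 1.0) * c + alpha)


def negative_root(alpha, lam, iters=200):
    """Bisection on (lo, 0), where the gap is positive at lo and equals -alpha < 0 at 0."""
    lo = -1.0
    while polynomial_gap(lo, alpha, lam) <= 0.0:
        lo *= 2.0  # move left until the quartic term dominates
    hi = 0.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if polynomial_gap(mid, alpha, lam) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def sign_changes_on_negative_axis(alpha, lam, left=-20.0, n=100000):
    """Count sign changes of the gap on a uniform grid of [left, 0]."""
    count = 0
    previous = polynomial_gap(left, alpha, lam)
    for k in range(1, n + 1):
        current = polynomial_gap(left + (0.0 - left) * k / n, alpha, lam)
        if (previous > 0.0) != (current > 0.0):
            count += 1
        previous = current
    return count


alpha, lam = 1.0, 2.0
c = negative_root(alpha, lam)
changes = sign_changes_on_negative_axis(alpha, lam)
print(c, changes)
```

For these sample values the grid scan finds a single sign change on the negative axis, consistent with the convexity argument.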

Next we will prove that \(\alpha _1\) only depends on \(\alpha \) and \(\lambda \). Differentiating (4.11) and evaluating at \(z=c\) we obtain

Substituting \(\alpha _0\) and \(\lambda \) from Eqs. (4.14) and (4.17) we simplify (4.13) and we get

and then Eq. (4.18) may be expressed in the form

The above is a degree 2 polynomial in \(\alpha _1\). Denoting this polynomial by f, we have

where the first inequality follows from the fact that \(c<0\). Since the coefficient \(c(1+c^2)^2\) is negative we conclude that f has one root in the interval \((0,1/(1+c^2))\) and the other in \((1/(1+c^2),\infty )\). The inequality (4.15) implies that \(\alpha _1\) is the smaller root of f, which is a function of \( \alpha \) and c and hence of \(\alpha \) and \(\lambda \).

In order to prove that \(\alpha _n\) depends only on \((\alpha ,\lambda )\) for \(n\ge 2\), first we estimate \(\beta _1\). Note that (4.18) and (4.14) imply that

Combining this with the inequality (4.15) we easily get that

Now we prove by induction on n that \(\alpha _n\) only depends on \(\alpha \) and \(\lambda \). For \(n\ge 2\) differentiating n-times (4.11) and evaluating at \(z=c\) we arrive at

where \(Q_n\) is a universal polynomial (which means that the polynomial does not depend on the distribution of X) in \(2n+1\) variables evaluated at \((\alpha ,\lambda ,c,\alpha _1,\dots , \alpha _{n-1}, \beta _1,\ldots , \beta _{n-1})\). According to the inductive hypothesis, the polynomials \(R_n\) and \(Q_n\) depend only on \(\alpha \) and \(\lambda \). We also have that \(\beta _n=p \alpha _n + R_n\), where

The last formula is obtained by substituting \(\alpha _0\) and \(\lambda \) from (4.14) and (4.17). Equation (4.22) then becomes

The inequalities (4.15) and (4.21) show that

thus \(1+c^2\beta _1^n + c^2 p \alpha _1\) is non-zero. Therefore, the number \(\alpha _n\) is uniquely determined by \(\alpha \) and \(\lambda \).

Thus we have shown that, if a random variable \(X>0\) satisfies the functional Eq. (4.7) for fixed \(\alpha >0\) and \(\lambda >0\), then the point c and all the coefficients \(\alpha _0,\alpha _1,\alpha _2,\dots \) of the series expansion of M(z) at \(z=c\) are determined only by \(\alpha \) and \(\lambda \). By analytic continuation, the Cauchy transform \(G_X\) is determined uniquely by \(\alpha \) and \(\lambda \), so there is only one distribution of X for which this equation is satisfied. \(\square \)

4.2 Remarks on Free Entropy Characterization

Féral [12] proved that the fGIG distribution \(\mu (\alpha ,\beta ,\lambda )\) is the unique probability measure which maximizes the following free entropy functional with potential

among all compactly supported probability measures \(\mu \) on \((0,\infty )\), where \(\alpha , \beta >0\) and \(\lambda \in {\mathbb {R}}\) are fixed constants, and

Here we point out the classical analogue. The (classical) GIG distribution is the probability measure on \((0,\infty )\) with the density

where \(K_\lambda \) is the modified Bessel function of the second kind. Note that this density is proportional to \(\exp (-V_{\alpha ,\beta ,\lambda }(x))\). Kawamura and Iwase [20] proved that the GIG distribution is the unique probability measure which maximizes the classical entropy with the same potential

among all probability density functions p on \((0,\infty )\). This statement is slightly different from the original one [20, Theorem 2], and for the reader’s convenience a short proof is given below. The proof is a straightforward application of Gibbs’ inequality

\[ -\int _0^\infty p(x)\log p(x)\,\mathrm{d}x \le -\int _0^\infty p(x)\log q(x)\,\mathrm{d}x \tag{4.24} \]

for all probability density functions p and q, say on \((0,\infty )\). Taking q to be the density (4.23) of the classical GIG distribution and computing \(\log q(x)\), we obtain the inequality

Since the Gibbs inequality (4.24) becomes equality if and only if \(p=q\), the equality in (4.25) holds if and only if \(p=q\), as well.
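The Gibbs inequality argument can be illustrated numerically. The following sketch makes several assumptions that are not taken from the displays above: the potential is taken to be \(V(x)=(1-\lambda )\log x+\alpha x+\beta /x\), the GIG-type density q is normalized by numerical integration rather than through \(K_\lambda \), the competitor p is the Gamma(2, 1) density \(xe^{-x}\), and the parameter values are arbitrary. All names are hypothetical.

```python
import math

ALPHA, BETA, LAM = 1.0, 1.0, -0.5   # sample parameters (assumed)
UPPER, N = 60.0, 60000              # truncation point and number of cells


def integrate(fn):
    """Midpoint rule on (0, UPPER); the tails beyond UPPER are negligible here."""
    h = UPPER / N
    return sum(fn((k + 0.5) * h) for k in range(N)) * h


def unnormalised_log_q(x):
    """-V(x) for the assumed potential V(x) = (1-LAM) log x + ALPHA x + BETA / x."""
    return (LAM - 1.0) * math.log(x) - ALPHA * x - BETA / x


LOG_Z = math.log(integrate(lambda x: math.exp(unnormalised_log_q(x))))


def log_q(x):
    """Log-density proportional to exp(-V), normalised numerically."""
    return unnormalised_log_q(x) - LOG_Z


def log_p(x):
    """Log-density of the Gamma(2, 1) law, p(x) = x exp(-x)."""
    return math.log(x) - x


def cross_entropy(log_f, log_g):
    """-integral of f log g; with log_g = log_f this is the entropy of f."""
    return -integrate(lambda x: math.exp(log_f(x)) * log_g(x))


entropy_p = cross_entropy(log_p, log_p)
cross_pq = cross_entropy(log_p, log_q)
print(entropy_p, cross_pq, cross_pq - entropy_p)
```

The difference `cross_pq - entropy_p` is the relative entropy of p with respect to q, which is non-negative and vanishes only when \(p=q\); this is the mechanism behind the equality statement above.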

Remark 4.4

From the above observation, it is tempting to investigate the map

where \(C>0\) is a normalizing constant. Under some assumptions on V, the free entropy functional \(I_V\) is known to have a unique maximizer (see [29]), and so the above map is well defined. Note that the density function \(C e^{-V(x)}\) is the maximizer of the classical entropy functional with potential V, which follows from the same arguments as above. This map sends the Gaussian law to the semicircle law, the Gamma law to the free Poisson distribution (when \(\lambda \ge 1\)), and the GIG distribution to the fGIG distribution. More examples can be found in [29].

References

Arizmendi, O., Hasebe, T.: Classical and free infinite divisibility for Boolean stable laws. Proc. Am. Math. Soc. 142(5), 1621–1632 (2014)

Arizmendi, O., Hasebe, T.: Classical scale mixtures of boolean stable laws. Trans. Am. Math. Soc. 368, 4873–4905 (2016)

Arizmendi, O., Hasebe, T., Sakuma, N.: On the law of free subordinators. ALEA Lat. Am. J. Probab. Math. Stat. 10(1), 271–291 (2013)

Barndorff-Nielsen, O.E., Halgreen, C.: Infinite divisibility of the hyperbolic and generalized inverse Gaussian distributions. Z. Wahrsch. Verw. Gebiete 38(4), 309–311 (1977)

Barndorff-Nielsen, O.E., Thorbjørnsen, S.: Self-decomposability and Lévy processes in free probability. Bernoulli 8(3), 323–366 (2002)

Barndorff-Nielsen, O.E., Thorbjørnsen, S.: Lévy laws in free probability. Proc. Natl. Acad. Sci. 99, 16568–16575 (2002)

Bercovici, H., Pata, V.: Stable laws and domains of attraction in free probability theory. Ann. Math (2) 149(3), 1023–1060 (1999). (With an appendix by Philippe Biane)

Bercovici, H., Voiculescu, D.: Free convolution of measures with unbounded support. Indiana Univ. Math. J. 42(3), 733–773 (1993)

Biane, P.: Processes with free increments. Math. Z. 227(1), 143–174 (1998)

Bondesson, L.: Generalized Gamma Convolutions and Related Classes of Distributions and Densities. Lecture Notes in Statistics, vol. 76. Springer, New York (1992)

Ejsmont, W., Lehner, F.: Sample variance in free probability. J. Funct. Anal. 273(7), 2488–2520 (2017)

Féral, D.: The limiting spectral measure of the generalised inverse Gaussian random matrix model. C. R. Math. Acad. Sci. Paris 342(7), 519–522 (2006)

Haagerup, U., Thorbjørnsen, S.: On the free gamma distributions. Indiana Univ. Math. J. 63(4), 1159–1194 (2014)

Halgreen, C.: Self-decomposability of the generalized inverse Gaussian and hyperbolic distributions. Z. Wahrsch. verw. Gebiete 47, 13–17 (1979)

Hasebe, T.: Free infinite divisibility for beta distributions and related ones. Electron. J. Probab. 19(81), 1–33 (2014)

Hasebe, T.: Free infinite divisibility for powers of random variables. ALEA Lat. Am. J. Probab. Math. Stat. 13(1), 309–336 (2016)

Hasebe, T., Sakuma, N., Thorbjørnsen, S.: The normal distribution is freely self-decomposable. Int. Math. Res. Not. arXiv:1701.00409

Hasebe, T., Thorbjørnsen, S.: Unimodality of the freely selfdecomposable probability laws. J. Theor. Probab. 29(3), 922–940 (2016)

Hiai, F., Petz, D.: The Semicircle Law, Free Random Variables and Entropy. Mathematical Surveys and Monographs, vol. 77. American Mathematical Society, Providence (2000)

Kawamura, T., Iwase, K.: Characterizations of the distributions of power inverse Gaussian and others based on the entropy maximization principle. J. Jpn. Stat. Soc. 33(1), 95–104 (2003)

Letac, G., Seshadri, V.: A characterization of the generalized inverse Gaussian distribution by continued fractions. Z. Wahrsch. Verw. Gebiete 62, 485–489 (1983)

Letac, G., Wesołowski, J.: An independence property for the product of GIG and gamma laws. Ann. Probab. 28, 1371–1383 (2000)

Lukacs, E.: A characterization of the gamma distribution. Ann. Math. Stat. 26, 319–324 (1955)

Matsumoto, H., Yor, M.: An analogue of Pitman’s \(2M-X\) theorem for exponential Wiener functionals. II. The role of the generalized inverse Gaussian laws. Nagoya Math. J. 162, 65–86 (2001)

Mingo, J.A., Speicher, R.: Free Probability and Random Matrices. Springer, New York (2017)

Nica, A., Speicher, R.: Lectures on the Combinatorics of Free Probability. London Mathematical Society Lecture Note Series, vol. 335. Cambridge University Press, Cambridge (2006)

Pérez-Abreu, V., Sakuma, N.: Free generalized gamma convolutions. Electron. Commun. Probab. 13, 526–539 (2008)

Pérez-Abreu, V., Sakuma, N.: Free infinite divisibility of free multiplicative mixtures of the Wigner distribution. J. Theor. Probab. 25(1), 100–121 (2012)

Saff, E.B., Totik, V.: Logarithmic Potentials with External Fields. Springer, Berlin (1997)

Sato, K.: Lévy Processes and Infinitely Divisible Distributions, corrected paperback edition. Cambridge Studies in Advanced Mathematics, vol. 68. Cambridge University Press, Cambridge (2013)

Shanbhag, D.N., Sreehari, M.: An extension of Goldie’s result and further results in infinite divisibility. Z. Wahrsch. verw. Gebiete 47, 19–25 (1979)

Szpojankowski, K.: On the Lukacs property for free random variables. Stud. Math. 228(1), 55–72 (2015)

Szpojankowski, K.: A constant regression characterization of the Marchenko–Pastur law. Probab. Math. Stat. 36(1), 137–145 (2016)

Szpojankowski, K.: On the Matsumoto–Yor property in free probability. J. Math. Anal. Appl. 445(1), 374–393 (2017)

Voiculescu, D.: Symmetries of some reduced free product \(C^\ast \)-algebras. In: Araki, H., Moore, C.C., Stratila, Ş.V., Voiculescu, D.V. (eds.) Operator Algebras and their Connections with Topology and Ergodic Theory. Lecture Notes in Mathematics, vol. 1132. Springer, Berlin, Heidelberg (1985)

Voiculescu, D.: Addition of certain noncommuting random variables. J. Funct. Anal. 66(3), 323–346 (1986)

Voiculescu, D.: The analogues of entropy and of Fisher’s information measure in free probability theory. I. Commun. Math. Phys. 155(1), 71–92 (1993)

Voiculescu, D., Dykema, K., Nica, A.: Free Random Variables. A Noncommutative Probability Approach to Free Products with Applications to Random Matrices, Operator Algebras and Harmonic Analysis on Free Groups. CRM Monograph Series, vol. 1. American Mathematical Society, Providence (1992)

Acknowledgements

The authors would like to thank BIRS, Banff, Canada for hospitality during the workshop “Analytic versus Combinatorial in Free Probability” where we started to work on this project. TH was supported by JSPS Grant-in-Aid for Young Scientists (B) 15K17549 and (A) 17H04823. KSz was partially supported by the NCN (National Science Center) Grant 2016/21/B/ST1/00005.

Additional information

Communicated by Marek Bozejko.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Hasebe, T., Szpojankowski, K. On Free Generalized Inverse Gaussian Distributions. Complex Anal. Oper. Theory 13, 3091–3116 (2019). https://doi.org/10.1007/s11785-018-0790-9

DOI: https://doi.org/10.1007/s11785-018-0790-9