Abstract

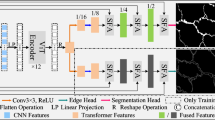

Crack detectors based on deep learning have made tremendous progress over inefficient traditional inspection methods. However, existing crack detectors still miss thin local structures, segment boundaries poorly and infer slowly, and these weaknesses limit further gains in their performance. To this end, we propose a serial–parallel network (SP-CrackNet) for pixel-level real-time crack detection. Specifically, we propose a serial–parallel feature extractor (SPFE) with global bottleneck blocks (GB Blocks). Owing to its serial–parallel structure, SPFE enlarges the receptive field and effectively captures the local information of thin cracks. The proposed GB Block preserves the continuity of slender cracks at a lower computational cost. Moreover, we design a boundary contrastive learning scheme (BCL scheme) that strengthens SP-CrackNet's learning of crack boundary features and thereby improves segmentation accuracy in crack boundary regions. Extensive experiments on the CFD and DeepCrack datasets show that SP-CrackNet outperforms the compared methods while achieving real-time inference speed.
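The abstract does not specify SPFE's layer configuration. The sketch below offers a rough illustration of why arranging convolutions in series and in parallel changes the receptive field. It applies the standard receptive-field arithmetic for stride-1 dilated convolutions, in which each layer with kernel size k and dilation d widens the field by (k − 1)·d. The function names, the dilation rates (1, 2, 4) and the "serial stack followed by parallel branches" layout are hypothetical choices made for illustration. They are not SP-CrackNet's actual design.

```python
from typing import Sequence


def serial_rf(kernel: int, dilations: Sequence[int]) -> int:
    """Receptive field (pixels along one axis) of stride-1 convs stacked in series.

    Each layer with kernel size k and dilation d adds (k - 1) * d pixels.
    """
    return 1 + sum((kernel - 1) * d for d in dilations)


def parallel_rf(kernel: int, dilations: Sequence[int]) -> int:
    """Receptive field of single-conv branches run in parallel and fused pixel-wise
    (e.g. summed or concatenated): the widest branch determines the field."""
    return max(serial_rf(kernel, [d]) for d in dilations)


def serial_parallel_rf(kernel: int, serial: Sequence[int], parallel: Sequence[int]) -> int:
    """Hypothetical layout: a serial stack followed by a parallel block.

    The growth of the two stages adds up, i.e. 1 + sum(serial) + max(parallel),
    with each term scaled by (kernel - 1).
    """
    return serial_rf(kernel, serial) + parallel_rf(kernel, parallel) - 1


if __name__ == "__main__":
    d = [1, 2, 4]  # hypothetical dilation rates, not taken from the paper
    # Each configuration below uses three 3x3 convolutions per stage.
    print("plain serial   :", serial_rf(3, [1, 1, 1]))       # 7
    print("dilated serial :", serial_rf(3, d))               # 15
    print("parallel       :", parallel_rf(3, d))             # 9
    print("serial+parallel:", serial_parallel_rf(3, d, d))   # 23
```

Each of the plain-serial, dilated-serial and parallel variants uses the same number of 3×3 kernels, so only the arrangement and the dilations change the receptive field. In the parallel variant, keeping a dilation-1 branch alongside wider ones still samples every neighbouring pixel. This is one plausible reason a serial–parallel design could keep thin, local crack detail while enlarging the receptive field.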

Data availability

This research used publicly available datasets.

Acknowledgements

This work is supported by the Fundamental Research Funds for the Central Universities (Science and Technology Leading Talent Team Project) (2022JBQY009), the National Natural Science Foundation of China (51827813), the National Key R&D Program “Transportation Infrastructure” “Reveal the list and take command” project (2022YFB2603302) and the R&D Program of Beijing Municipal Education Commission (KJZD20191000402).

Author information

Contributions

Xie Ying conducted all experiments and wrote the main manuscript text. Yin Hui wrote the main manuscript text. Chong Aixin wrote the main manuscript text and prepared the figures. Yang Ying wrote the main manuscript text. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xie, Y., Yin, H., Chong, A. et al. SP-CrackNet: serial–parallel network with boundary contrastive learning for real-time crack detection. SIViP 18, 3265–3274 (2024). https://doi.org/10.1007/s11760-023-02988-z

DOI: https://doi.org/10.1007/s11760-023-02988-z