Abstract

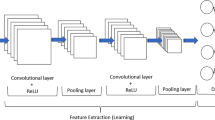

Weeds heavily affect agriculture: they appear unpredictably in fields, compete with crops for water, nutrients, and sunlight, and, if not controlled effectively, reduce crop yields. Many prevention strategies exist, but they are expensive and time-consuming, and labor costs have increased substantially. To overcome these challenges, a novel AGS-MNFELM model is proposed for weed detection in agricultural fields. First, the gathered images are pre-processed with a bilateral filter for noise removal and CLAHE for image quality enhancement. The pre-processed images are then fed to an automatic graph cut segmentation (AGS) model, which segments regions using bounding boxes produced by an RCNN rather than manual initialization, eliminating the need for manual interpretation. The MobileNet model is used to acquire rich feature representations from the images, and the extracted features are passed to a Fuzzy Extreme Learning Machine (FELM) classifier, which classifies four weed types found in maize and soybean: cocklebur, redroot pigweed, foxtail, and giant ragweed. The proposed AGS-MNFELM model is evaluated in terms of sensitivity, accuracy, specificity, and F1 score. The experimental results show that it attains an overall accuracy of 98.63%. The proposed deep learning-based MobileNet improves overall accuracy by 7.91%, 4.15%, 3.44%, and 5.88% over the traditional LeNet, AlexNet, DenseNet, and ResNet, respectively.
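To make the four evaluation measures named in the abstract concrete, the following minimal Python sketch computes sensitivity, specificity, accuracy, and F1 score for one weed class treated one-vs-rest. It is an illustration under stated assumptions, not the authors' evaluation code: the confusion matrix values are invented for the example, and the names `BinaryCounts`, `one_vs_rest`, and `metrics` are hypothetical helpers that do not come from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BinaryCounts:
    """Confusion counts for one class treated as the positive class."""
    tp: int
    fp: int
    tn: int
    fn: int


def _ratio(num, den):
    # Return 0.0 instead of raising when a denominator is empty.
    return num / den if den else 0.0


def one_vs_rest(confusion, k):
    """Collapse a square confusion matrix (rows = true class,
    columns = predicted class) into binary counts for class k."""
    total = sum(sum(row) for row in confusion)
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp                 # true k, predicted other
    fp = sum(row[k] for row in confusion) - tp  # true other, predicted k
    tn = total - tp - fn - fp
    return BinaryCounts(tp, fp, tn, fn)


def metrics(c):
    """Standard definitions of the four measures listed in the abstract."""
    sensitivity = _ratio(c.tp, c.tp + c.fn)  # recall / true positive rate
    specificity = _ratio(c.tn, c.tn + c.fp)  # true negative rate
    accuracy = _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn)
    precision = _ratio(c.tp, c.tp + c.fp)
    f1 = _ratio(2 * precision * sensitivity, precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": accuracy,
        "f1": f1,
    }


# Class names come from the abstract; the counts below are made up
# purely for illustration and are NOT results from the paper.
LABELS = ["cocklebur", "redroot pigweed", "foxtail", "giant ragweed"]
CONFUSION = [
    [48, 1, 1, 0],
    [2, 46, 1, 1],
    [0, 1, 49, 0],
    [1, 0, 0, 49],
]

if __name__ == "__main__":
    for k, label in enumerate(LABELS):
        scores = metrics(one_vs_rest(CONFUSION, k))
        print(label, {name: round(v, 4) for name, v in scores.items()})
```

Per-class scores like these are commonly averaged across classes to report a single figure; whether the paper averages them this way is not stated in the abstract.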

Availability of data and material

Data sharing is not applicable to this article, as no new data were created or analyzed in this research.

Acknowledgements

The authors would like to thank the reviewers for all of their careful, constructive and insightful comments in relation to this work.

Funding

No financial support was received for this work.

Author information

Contributions

The authors confirm contribution to the paper as follows: Study conception and design: SPS, KM, SK; Data collection: SPS; Analysis and interpretation of results: KM; Draft manuscript preparation: SK. All authors reviewed the results and approved the final version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest regarding the publication of this paper.

Ethical approval

My research guide reviewed and ethically approved this manuscript for publication in this journal.

Human and animal rights

This article does not contain any studies with human or animal subjects performed by any of the authors.

Informed consent

I certify that I have explained the nature and purpose of this study to the above-named individual, and I have discussed the potential benefits of this study participation. The questions the individual had about this study have been answered, and we will always be available to address future questions.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Samuel, S.P., Malarvizhi, K. & Karthik, S. Weed detection in agricultural fields via automatic graph cut segmentation with Mobile Net classification model. SIViP 18, 1549–1560 (2024). https://doi.org/10.1007/s11760-023-02863-x

DOI: https://doi.org/10.1007/s11760-023-02863-x