Abstract

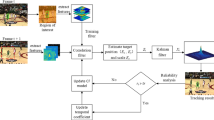

Visual object tracking has numerous applications in computer vision, and it aims to achieve the highest tracking reliability and accuracy under the varied evaluation criteria of those applications. Discriminative correlation filter (DCF) tracking algorithms have achieved strong results, but they still fail to track robustly under difficult conditions such as occlusion, scale variation, fast motion, and motion blur. To address the tracking instability caused by these challenges in complex sequences, we present a novel framework, improved spatial–temporal regularized correlation filters (I-STRCF), which integrates instantaneous motion estimation and a Kalman filter into visual object tracking. Because the tracking model updates itself with the Kalman filter throughout the video sequence, the framework reduces the likelihood of tracking failure. We also incorporate a scale estimation criterion, average peak-to-correlation energy (APCE), to address target loss caused by scale change. Using previously computed motion data, the proposed method predicts the likely scale region of the target in the current frame, and the target model then updates the target's position in successive frames. In addition, we examine in extensive experiments the factors that affect the performance of the proposed framework. The experimental results show that the proposed framework achieves the best tracking performance in our experiments and outperforms STRCF on the Temple Color-128 dataset across its object-tracking attributes. Compared with STRCF, our framework yields larger AUC gains for the scale variation, background clutter, illumination variation, occlusion, out-of-plane rotation, and deformation attributes. It also achieves markedly better performance and robustness than competing trackers on sporting-event sequences.
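To make two of the components named above more concrete, the following Python sketch (standard library only) shows a constant-velocity Kalman filter that predicts the target's position from earlier motion, and the commonly used APCE score, APCE = |F_max - F_min|^2 / mean((F - F_min)^2), computed over a correlation response map F. It is a minimal illustration under stated assumptions, not the authors' I-STRCF implementation: the helper names (`apce`, `best_scale`, `AxisKalman`, `PositionKalman`), the noise values `q` and `r`, the use of independent x and y filters, and the rule of keeping the candidate scale with the highest APCE are all hypothetical choices, and the spatial–temporal regularized filter learning itself is not shown.

```python
"""Hypothetical sketch: Kalman position prediction plus APCE-based scale choice.

Illustrative only; this is not the I-STRCF implementation.
"""
from typing import Dict, List, Sequence, Tuple


def apce(response: Sequence[Sequence[float]]) -> float:
    """Average peak-to-correlation energy of a 2-D response map."""
    values = [v for row in response for v in row]
    f_max, f_min = max(values), min(values)
    # Mean squared deviation of every response value from the minimum.
    energy = sum((v - f_min) ** 2 for v in values) / len(values)
    return (f_max - f_min) ** 2 / energy if energy > 0 else 0.0


def best_scale(candidates: Dict[float, List[List[float]]]) -> float:
    """Assumed rule: keep the scale factor whose response map has the highest APCE."""
    return max(candidates, key=lambda s: apce(candidates[s]))


class AxisKalman:
    """Constant-velocity Kalman filter for one image axis; state = [position, velocity]."""

    def __init__(self, pos: float, q: float = 1e-2, r: float = 1.0) -> None:
        self.x = [pos, 0.0]  # state estimate; velocity starts at zero
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # state covariance
        self.q = q  # process noise, Q = q * I (an assumption)
        self.r = r  # measurement noise variance (assumed)

    def predict(self, dt: float = 1.0) -> float:
        """x <- F x and P <- F P F^T + Q with F = [[1, dt], [0, 1]]."""
        p, v = self.x
        self.x = [p + dt * v, v]
        P = self.P
        self.P = [
            [P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + self.q,
             P[0][1] + dt * P[1][1]],
            [P[1][0] + dt * P[1][1],
             P[1][1] + self.q],
        ]
        return self.x[0]

    def update(self, z: float) -> float:
        """Correct the state with a measured position z (H = [1, 0])."""
        P = self.P
        s = P[0][0] + self.r  # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s  # Kalman gain
        y = z - self.x[0]  # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [
            [(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
            [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]],
        ]
        return self.x[0]


class PositionKalman:
    """Tracks the target centre (x, y) with two independent axis filters."""

    def __init__(self, x: float, y: float) -> None:
        self.fx, self.fy = AxisKalman(x), AxisKalman(y)

    def predict(self) -> Tuple[float, float]:
        return self.fx.predict(), self.fy.predict()

    def update(self, x: float, y: float) -> Tuple[float, float]:
        return self.fx.update(x), self.fy.update(y)


if __name__ == "__main__":
    kf = PositionKalman(10.0, 20.0)
    for t in range(1, 11):
        kf.predict()  # prior for frame t
        kf.update(10.0 + 2.0 * t, 20.0 + 1.0 * t)  # detected centre, moving (2, 1) px/frame
    print("predicted centre for next frame:", kf.predict())

    sharp = [[0.0, 0.1, 0.0], [0.1, 1.0, 0.1], [0.0, 0.1, 0.0]]
    flat = [[0.4, 0.5, 0.4], [0.5, 0.6, 0.5], [0.4, 0.5, 0.4]]
    print(best_scale({0.95: flat, 1.0: sharp}))  # -> 1.0
```

A sharp, single-peaked response map yields a higher APCE than a flat one, which is why APCE is often used as a confidence measure when comparing candidate scales or deciding whether a model update is reliable.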

Availability of data and materials

All data used in this study were collected from well-known public repositories and are available online.

Funding

The authors received no financial support or funding for this article.

Author information

Contributions

MUH wrote the original draft; KM and AA conceptualized the study; AA and MA supervised the work; KM, AA, and BK reviewed and edited the manuscript; MUH and KU analyzed and interpreted the data; MUH, MA, and KU carried out the investigation; KM, AA, and BK developed the methodology; KU, MA, and BK developed the software; MUH, KM, and BK prepared the visualizations; BK, KU, and AA provided resources; and MA and AA handled project administration.

Ethics declarations

Competing interests

The authors declare that they have no relevant financial or non-financial competing interests to disclose in any material discussed in this article.

Ethical approval

This study did not require ethical approval.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hayat, M.U., Ali, A., Khan, B. et al. An improved spatial–temporal regularization method for visual object tracking. SIViP 18, 2065–2077 (2024). https://doi.org/10.1007/s11760-023-02842-2

DOI: https://doi.org/10.1007/s11760-023-02842-2