Abstract

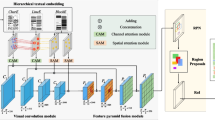

Document layout analysis helps people better understand and use the information in documents. However, the diversity of document layouts and the considerable variation in aspect ratios among document objects pose significant challenges. In this study, using the YOLO model as a baseline, we design a multi-convolutional deformable separation (MCDS) module as the main building block of the network. Integrating this module into the backbone and neck significantly enhances image feature extraction. Moreover, we incorporate ParNet-Attention, which uses parallel networks to direct the network's focus toward document objects and thereby enables more thorough feature extraction. To improve the model's predictions, we employ the decouple fusion head in the head layer. Built on the decoupled head, it exploits multi-scale features to increase prediction accuracy. The proposed model performs strongly on three public datasets with different characteristics: ICDAR-POD, PubLayNet, and IIIT-AR-13K. Notably, on ICDAR-POD it achieves the best mean average precision (mAP) at IoU thresholds of both 0.6 and 0.8, namely 96.2 and 94.4, respectively.
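The headline results are reported as mAP at IoU thresholds of 0.6 and 0.8. To illustrate what these thresholds mean, the following Python sketch computes the IoU of two boxes and a single-class average precision (AP). It assumes boxes in `(x1, y1, x2, y2)` corner format, greedy matching of detections (highest confidence first) to not-yet-matched ground truths, and all-point interpolation of the precision-recall curve. It is a generic, hypothetical evaluation procedure, not the official ICDAR2017 POD evaluation script or the authors' code; the names `iou`, `average_precision`, and `mean_ap` are illustrative.

```python
from typing import Dict, List, Tuple

# Assumption: boxes are (x1, y1, x2, y2) with x1 < x2 and y1 < y2.
Box = Tuple[float, float, float, float]
Pred = Tuple[float, Box]  # (confidence score, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds: List[Pred], gts: List[Box], thr: float) -> float:
    """Single-class AP with greedy matching and all-point interpolation.

    A detection counts as a true positive if its best-overlapping,
    not-yet-matched ground truth has IoU >= thr (hypothetical protocol).
    """
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    flags = []  # 1 = true positive, 0 = false positive
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if not matched[j]:
                overlap = iou(box, gt)
                if overlap > best:
                    best, best_j = overlap, j
        if best_j >= 0 and best >= thr:
            matched[best_j] = True
            flags.append(1)
        else:
            flags.append(0)

    # Cumulative precision and recall after each ranked detection.
    precisions, recalls = [], []
    tp = fp = 0
    for f in flags:
        tp += f
        fp += 1 - f
        precisions.append(tp / (tp + fp))
        recalls.append(tp / len(gts))

    # Precision envelope: make precision non-increasing in rank order.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Area under the interpolated precision-recall curve.
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def mean_ap(per_class: Dict[str, Tuple[List[Pred], List[Box]]], thr: float) -> float:
    """mAP as the unweighted mean of per-class APs."""
    aps = [average_precision(p, g, thr) for p, g in per_class.values()]
    return sum(aps) / len(aps) if aps else 0.0


if __name__ == "__main__":
    gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31)), (0.7, (50, 50, 60, 60))]
    for thr in (0.6, 0.8):
        print(thr, mean_ap({"figure": (preds, gts)}, thr))
```

In this toy example, the second detection overlaps its ground truth with an IoU of about 0.68, so it counts as a true positive at 0.6 but as a false positive at 0.8. This is why mAP at IoU 0.8 is the stricter measure of localization quality.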



Funding

This work was supported by the National Natural Science Foundation of China (Nos. 62166043 and U2003207).

Author information


Contributions

QD: Writing - original draft, Conceptualization. MI: Supervision, Funding acquisition, Writing - review & editing. CZ and AH: Validation, Writing - review & editing, Formal analysis.


Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Deng, Q., Ibrayim, M., Hamdulla, A. et al. The YOLO model that still excels in document layout analysis. SIViP 18, 1539–1548 (2024). https://doi.org/10.1007/s11760-023-02838-y


DOI: https://doi.org/10.1007/s11760-023-02838-y