Abstract

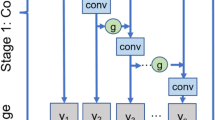

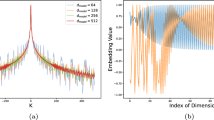

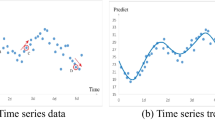

Time series prediction is difficult because it is hard to capture dependencies across multiple time scales and the correlation among concomitant input variables. This paper presents a method for time series prediction based on a multiscale convolutional neural-based transformer network (MCTNet). MCTNet is composed of two frameworks: multiscale extraction (ME) and multidimensional fusion (MF). ME is designed to mine dependencies at different time scales. It contains a multiscale convolutional feature extractor and a temporal attention-based representator, followed by a transformer encoder layer for high-dimensional encoding representation. To make full use of the correlation among variables, MF captures the relationship among inputs with a spatial attention-based highway mechanism. MF also preserves the linear elements of the input sequence, which helps MCTNet make more efficient predictions. Experimental results on challenging datasets show that MCTNet performs well in time series prediction compared with several state-of-the-art approaches.
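The data flow described in the abstract can be illustrated with a small NumPy sketch. It is not the authors' implementation: the abstract gives no layer sizes or equations, so every function name, kernel size, and weight here is an assumption chosen for illustration. Fixed moving-average filters stand in for learned convolutions, random vectors stand in for trained parameters, and the transformer encoder layer is omitted entirely. The sketch only shows how multiscale features, attention over time steps, and a linear highway weighted by attention over variables could combine into one prediction.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def multiscale_features(x, kernel_sizes=(3, 5, 7)):
    """x: [T, D]. Filter every variable at several time scales and concatenate."""
    feats = []
    for k in kernel_sizes:
        kernel = np.ones(k) / k  # assumed stand-in for a learned filter of width k
        cols = [np.convolve(x[:, d], kernel, mode="same") for d in range(x.shape[1])]
        feats.append(np.stack(cols, axis=1))
    return np.concatenate(feats, axis=1)  # [T, D * len(kernel_sizes)]

def temporal_attention(h, query):
    """h: [T, F], query: [F]. Weight time steps by scaled dot-product scores."""
    scores = h @ query / np.sqrt(h.shape[1])  # one score per time step
    weights = softmax(scores, axis=0)         # [T], sums to 1
    return weights @ h, weights               # context vector [F], weights [T]

def linear_highway(x, var_scores, ar_coef):
    """Linear path: attention over input variables, then an autoregressive sum."""
    var_weights = softmax(var_scores)         # [D], sums to 1
    mixed = x[-len(ar_coef):] @ var_weights   # last p steps, variables mixed
    return float(mixed @ ar_coef), var_weights

# Hypothetical toy input: 32 time steps, 4 variables.
rng = np.random.default_rng(0)
T, D = 32, 4
x = rng.normal(size=(T, D))

h = multiscale_features(x)
context, t_weights = temporal_attention(h, rng.normal(size=h.shape[1]))
nonlinear_part = float(context @ rng.normal(size=context.shape[0]))
linear_part, v_weights = linear_highway(x, rng.normal(size=D), np.full(4, 0.25))

# The final prediction adds the linear highway output to the nonlinear path.
prediction = nonlinear_part + linear_part
print(h.shape, t_weights.shape, v_weights.shape)
```

Summing the two paths is one common way to keep a linear component of the input in the output. Whether MCTNet combines them exactly this way is not stated in the abstract.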

Data availability

The ETT and AQI datasets used in this paper are publicly available from the links below. All other data used in this paper, including images and code, are available from the corresponding author upon reasonable request.

ETT: https://opendatalab.com/ETT

AQI: https://www.kaggle.com/datasets/fedesoriano/air-quality-data-set

Funding

This work is supported in part by the National Key R&D Program of China (Grant No. 2020YFC1523004).

Author information

Contributions

ZW proposed the innovation of the paper, designed and carried out the experiments, and analyzed the experimental results. YG drafted the work or revised it critically for important intellectual content. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This paper does not contain any studies involving humans or animals. This declaration is not applicable.

About this article

Cite this article

Wang, Z., Guan, Y. Multiscale convolutional neural-based transformer network for time series prediction. SIViP 18, 1015–1025 (2024). https://doi.org/10.1007/s11760-023-02823-5

DOI: https://doi.org/10.1007/s11760-023-02823-5