Abstract

A perennial objection against Bayes factor point-null hypothesis tests is that the point-null hypothesis is known to be false from the outset. We examine the consequences of approximating the sharp point-null hypothesis by a hazy ‘peri-null’ hypothesis instantiated as a narrow prior distribution centered on the point of interest. The peri-null Bayes factor then equals the point-null Bayes factor multiplied by a correction term which is itself a Bayes factor. For moderate sample sizes, the correction term is relatively inconsequential; however, for large sample sizes, the correction term becomes influential and causes the peri-null Bayes factor to be inconsistent and approach a limit that depends on the ratio of prior ordinates evaluated at the maximum likelihood estimate. We characterize the asymptotic behavior of the peri-null Bayes factor and briefly discuss suggestions on how to construct peri-null Bayes factor hypothesis tests that are also consistent.

1 Introduction

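In the Bayesian paradigm, the support that data \(y^{n}:= (y_{1}, \ldots , y_{n})\) provide for an alternative hypothesis \( {\mathcal {H}}_{1}\) versus a point-null hypothesis \( {\mathcal {H}}_{0} \) is given by the Bayes factor \( \mathrm{BF}_{10}(y^{n}) \):

\[ \begin{aligned} \mathrm{BF}_{10}(y^{n}) &= \frac{P({\mathcal {H}}_{1} \, | \, y^{n})}{P({\mathcal {H}}_{0} \, | \, y^{n})} \Big / \frac{P({\mathcal {H}}_{1})}{P({\mathcal {H}}_{0})} \\ &= \frac{\int f(y^{n} \, | \, \theta _{1}) \, \pi (\theta _{1} \, | \, {\mathcal {H}}_{1}) \, \mathrm{d}\theta _{1}}{\int f(y^{n} \, | \, \theta _{0}) \, \pi (\theta _{0} \, | \, {\mathcal {H}}_{0}) \, \mathrm{d}\theta _{0}}, \end{aligned} \]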

where the first line indicates that the Bayes factor quantifies the change from prior to posterior model odds (Wrinch and Jeffreys 1921), and the second line indicates that this change is given by a ratio of marginal likelihoods, that is, a comparison of prior predictive performance obtained by integrating the parameters \( \theta _{j} \) out of the jth model’s likelihood \( f( y^{n} \, | \, \theta _{j}) \) at the observations \( y^{n} \) with respect to the prior density \( \pi (\theta _{j} \, | \, {\mathcal {H}}_{j}) \) (Jeffreys 1935, 1939; Kass and Raftery 1995). Although the general framework applies to the comparison of any two models (as long as the models make probabilistic predictions; Dawid 1984; Shafer and Vovk 2019), the procedure developed by Harold Jeffreys in the late 1930s was explicitly designed as an improvement on p value null-hypothesis significance testing.

In the prototypical scenario, a null hypothesis \( {\mathcal {H}}_{0} \) has \(p_{0} \) free parameters, whereas an alternative hypothesis \({\mathcal {H}}_{1}\) has \( p = p_{0} + 1 \) free parameters; the additional free parameter in \( {\mathcal {H}}_{1} \) is the one that is test-relevant. For instance, in Jeffreys’s t-test, the test-relevant parameter \(\delta = \mu / \sigma \) represents standardized effect size; after assigning prior distributions to the models’ parameters, we may compute the Bayes factor in favor of \( {\mathcal {H}}_{0}: \delta = 0 \) with free parameter \( \theta _{0} = \sigma \in (0, \infty ) \) against \({\mathcal {H}}_{1}: \theta _{1} = ( \delta , \sigma ) \in {\mathbb {R}}\times (0, \infty )\) where \( \delta \) is unrestricted and where \( \sigma \) denotes the common nuisance parameter. When \( \mathrm{BF}_{01}(y^{n}) =1/\mathrm{BF}_{10}(y^{n}) \) is larger than 1, the data provide evidence that the ‘general law’ \( {\mathcal {H}}_{0} \) can be retained; when \(\mathrm{BF}_{01}(y^{n})\) is smaller than 1, the data provide evidence for \({\mathcal {H}}_{1} \), the model that relaxes the general law. The larger the deviation from 1, the stronger the evidence. Importantly, in Jeffreys’s framework, the test-relevant parameter is fixed under \({\mathcal {H}}_{0} \) and free to vary under \( {\mathcal {H}}_{1} \). The hypothesis \({\mathcal {H}}_{0} \) is generally known as a ‘point-null’ hypothesis.

A perennial objection against point-null hypothesis testing—whether Bayesian or frequentist—is that in most practical applications, the point-null is never true exactly (e.g., Bakan 1966; Berkson 1938; Edwards et al. 1963; Jones and Tukey 2000; Kruschke and Liddell 2018; see also Laplace 1774/1986, p. 375). If this argument is accepted and \( {\mathcal {H}}_{0} \) is deemed to be false from the outset, then the test merely assesses whether or not the sample size was sufficiently large to detect the nonzero effect. This objection was forcefully made by Tukey:

“Statisticians classically asked the wrong question—and were willing to answer with a lie, one that was often a downright lie. They asked “Are the effects of A and B different?” and they were willing to answer “no.”

All we know about the world teaches us that the effects of A and B are always different—in some decimal place—for any A and B. Thus, asking “Are the effects different?” is foolish. (Tukey 1991, p. 100)

This perennial objection has been rebutted in several ways (e.g., Jeffreys 1937, 1961; Kass and Raftery 1995); in the current work, we focus on the most common rebuttal, namely that the point-null hypothesis is a mathematically convenient approximation to a more realistic ‘peri-null’ (Tukey 1955) hypothesis \( {\mathcal {H}}_{\widetilde{0}} \) that assigns the test-relevant parameter a distribution tightly concentrated around the value specified by the point-null hypothesis (e.g., Good 1967, p. 416; Berger and Delampady 1987; Cornfield 1966, 1969; Dickey 1976; Edwards et al. 1963; George and McCulloch 1993; Jeffreys 1935, 1936; Gallistel 2009; Rousseau 2007; Rouder et al. 2009). For instance, in the case of the t-test the peri-null \({\mathcal {H}}_{\widetilde{0}} \) could specify \( \delta \sim \pi (\delta \, | \, {\mathcal {H}}_{\widetilde{0}}) = {\mathcal {N}}(0, \kappa _{0}^{2}) \), where the width \(\kappa _{0} \) is set to a small value.

Previous work has suggested that the approximation of a point-null hypothesis by an interval is reasonable when that interval is half a standard error wide (Berger and Delampady 1987; Rousseau 2007) or one standard error wide (Jeffreys 1935). Here, we explore the consequences of replacing the point-null hypothesis \( {\mathcal {H}}_{0} \) by a peri-null hypothesis \( {\mathcal {H}}_{\widetilde{0}} \) from a different angle. We alter only the specification of the null-hypothesis \( {\mathcal {H}}_{0} \), which means that the alternative hypothesis \( {\mathcal {H}}_{1} \) now overlaps with \( {\mathcal {H}}_{\widetilde{0}} \).

Below we show, first, that the effect on the Bayes factor of replacing \( {\mathcal {H}}_{0} \) with \( {\mathcal {H}}_{\widetilde{0}} \) is given by another Bayes factor, namely that between \( {\mathcal {H}}_{0} \) and \({\mathcal {H}}_{\widetilde{0}} \) (cf. Morey and Rouder 2011, p. 411). This ‘peri-null correction factor’ is usually near 1, unless sample size grows large. For large sample sizes, we demonstrate that the Bayes factor for the peri-null \( {\mathcal {H}}_{\widetilde{0}} \) versus the alternative \( {\mathcal {H}}_{1} \) is bounded by the ratio of the prior ordinates evaluated at the maximum likelihood estimate. This proves earlier statements from Morey and Rouder (2011, pp. 411–412) and confirms suggestions in Jeffreys (1961, p. 367) and Jeffreys (1973, p. 39, Eq. 2). In other words, the Bayes factor for the peri-null hypothesis is inconsistent.

Note that there exist several Bayes factor methods that have replaced point-null hypotheses with either peri-null hypotheses (e.g., Stochastic Search Variable Selection, George and McCulloch 1993; see Footnote 1) or with other hypotheses that have a continuous prior distribution close to zero (e.g., the skeptical prior proposed by Pawel and Held in press). As far as evidence from the marginal likelihood is concerned, the results below show that these methods are inconsistent.

We end with a brief discussion on how a consistent method for hypothesis testing can be obtained without fully committing to a point-null hypothesis.

2 The peri-null correction factor

Consider the three hypotheses discussed earlier: the point-null hypothesis \( {\mathcal {H}}_{0} \) fixes the test-relevant parameter to a fixed value (e.g., \( \delta = 0 \)); the peri-null hypothesis \({\mathcal {H}}_{\widetilde{0}} \) assigns the test-relevant parameter a distribution that is tightly centered around the value of interest (e.g., \( \delta \sim \pi (\delta \, | \, {\mathcal {H}}_{\widetilde{0}}) = {\mathcal {N}}(0, \kappa _{0}^{2}) \) with \( \kappa _{0} \) small); and the alternative hypothesis \( {\mathcal {H}}_{1} \) assigns the test-relevant parameter a relatively wide prior distribution, \( \delta \sim \pi (\delta \, | \, {\mathcal {H}}_{1}) \). The Bayes factor of interest is between \( {\mathcal {H}}_{1} \) and \( {\mathcal {H}}_{\widetilde{0}} \), which can be expressed as the product of two Bayes factors involving \( {\mathcal {H}}_{0} \):

\[ \mathrm{BF}_{1 \widetilde{0}}(y^{n}) = \frac{p(y^{n} \, | \, {\mathcal {H}}_{1})}{p(y^{n} \, | \, {\mathcal {H}}_{\widetilde{0}})} = \frac{p(y^{n} \, | \, {\mathcal {H}}_{1})}{p(y^{n} \, | \, {\mathcal {H}}_{0})} \times \frac{p(y^{n} \, | \, {\mathcal {H}}_{0})}{p(y^{n} \, | \, {\mathcal {H}}_{\widetilde{0}})} = \mathrm{BF}_{10}(y^{n}) \times \mathrm{BF}_{0 \widetilde{0}}(y^{n}). \]

In words, the Bayes factor for the alternative hypothesis against the peri-null hypothesis equals the Bayes factor for the alternative hypothesis against the point-null hypothesis, multiplied by a correction factor (cf. Kass and Vaidyanathan 1992; Kass and Raftery 1995; Morey and Rouder 2011, p. 411). This correction factor quantifies the extent to which the point-null hypothesis outpredicts the peri-null hypothesis. With data sets of moderate size, and \( \kappa _{0} \) small, the peri-null and point-null hypotheses will make similar predictions, and consequently, the correction factor will be close to 1. In such cases, the point-null can indeed be considered a mathematically convenient approximation to the peri-null.
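The factorization can be checked numerically in a toy setting. The sketch below does not use the t-test of the text; it assumes a simpler normal model with known unit variance, \( y_{i} \sim {\mathcal {N}}(\delta , 1) \), and normal priors under both \( {\mathcal {H}}_{\widetilde{0}} \) and \( {\mathcal {H}}_{1} \), so that all marginal likelihoods are available in closed form through the sample mean. The function names are hypothetical.

```python
import math


def log_marginal(ybar, n, prior_var):
    """Log marginal density of the sample mean when delta ~ N(0, prior_var).

    Assumed toy model: y_i ~ N(delta, 1), hence ybar | delta ~ N(delta, 1/n)
    and, marginally, ybar ~ N(0, prior_var + 1/n).
    prior_var = 0 corresponds to the point null delta = 0.
    """
    var = prior_var + 1.0 / n
    return -0.5 * ybar * ybar / var - 0.5 * math.log(2.0 * math.pi * var)


def log_bayes_factors(ybar, n, kappa0, kappa1):
    """Return (log BF_10, log BF_0~0, log BF_1~0) for the toy model."""
    m_point = log_marginal(ybar, n, 0.0)
    m_peri = log_marginal(ybar, n, kappa0 ** 2)
    m_alt = log_marginal(ybar, n, kappa1 ** 2)
    return m_alt - m_point, m_point - m_peri, m_alt - m_peri


for n in (10, 500, 50_000):
    log_bf_10, log_bf_0peri, log_bf_1peri = log_bayes_factors(
        ybar=0.0, n=n, kappa0=0.05, kappa1=1.0
    )
    # The correction factor BF_0~0 is close to 1 for small n and grows with n.
    print(n, math.exp(log_bf_0peri), math.exp(log_bf_10 + log_bf_0peri),
          math.exp(log_bf_1peri))
```

In this toy model, at \( \bar{y} = 0 \) the correction factor reduces to \( \sqrt{1 + n \kappa _{0}^{2}} \), which stays near 1 as long as \( n \kappa _{0}^{2} \) is small.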

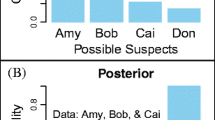

2.1 Example

Consider the hypothesis that “more desired objects are seen as closer” (Balcetis and Dunning 2011). In the authors’ Study I, 90 participants had to estimate their distance to a bottle of water. Immediately prior to this task, 47 ‘thirsty’ participants had consumed a serving of pretzels, whereas 43 ‘quenched’ participants had drunk as much as they wanted from four 8-oz glasses of water. In line with the authors’ predictions, “Thirsty participants perceived the water bottle as closer (\( M = 25.1 \) in., \( SD = 7.3 \)) than quenched participants did (\( M = 28.0 \) in., \( SD = 6.2 \))” (Balcetis and Dunning 2011, p. 148), with \( t=2.00 \) and \(p=.049 \). A Bayesian point-null t-test concerning the test-relevant parameter \( \delta \) may contrast \( {\mathcal {H}}_{0}: \delta = 0 \) versus \({\mathcal {H}}_{1} : \delta \in {\mathbb {R}}\), where \( \delta \) is assigned a Cauchy prior with location parameter 0 and scale \( \kappa _{1} \) set to the common default value \(\kappa _{1}=1/\sqrt{2} \) (Morey and Rouder 2018). The resulting point-null Bayes factor is \( \mathrm{BF}_{10} = 1.259 \), a smidgen of evidence in favor of \( {\mathcal {H}}_{1} \). We may also compute a peri-null correction factor by contrasting \( {\mathcal {H}}_{0}: \delta = 0 \) against \({\mathcal {H}}_{\widetilde{0}}: \delta \sim {\mathcal {N}}(0, \kappa _{0}^{2}) \), with \(\kappa _{0} = 0.01 \), say. The resulting peri-null correction factor (see Footnote 2) is \( \mathrm{BF}_{0 \widetilde{0}} = 0.997\), which means that, practically, it does not matter whether the point-null or the peri-null is tested. With a larger value of \(\kappa _{0} = 0.05 \), we have \( \mathrm{BF}_{0\widetilde{0}} = 0.927 \) and thus a peri-null Bayes factor of \( \mathrm{BF}_{1 \widetilde{0}} = 1.167 \). The change from \( \mathrm{BF}_{1 0} = 1.259 \) to \( \mathrm{BF}_{1 \widetilde{0}} = 1.167 \) is utterly inconsequential.

The difference between the peri-null and point-null Bayes factor remains inconsequential for larger values of t. When we change \(t=2.00 \) to \( t=4.00 \), the point-null Bayes factor equals \(\mathrm{BF}_{10} = 174 \), which according to Jeffreys’s classification of evidence (e.g., Jeffreys 1961, Appendix B) is considered compelling evidence for \( {\mathcal {H}}_{1} \). With \( \kappa _{0} = 0.01 \), the peri-null correction factor equals \( \mathrm{BF}_{0\widetilde{0}} = 0.986 \), and consequently the peri-null Bayes factor equals about 172 in favor of \( {\mathcal {H}}_{1} \) over \( {\mathcal {H}}_{\widetilde{0}} \). With \( \kappa _{0} = 0.05 \), the peri-null correction factor equals \( \mathrm{BF}_{0 \widetilde{0}} = 0.713 \) and \( \mathrm{BF}_{1\widetilde{0}} \approx 124 \). In absolute numbers, the change from 174 to 124 may appear considerable, but with equal prior model probabilities this translates to a modest difference in posterior probabilities: \(P({\mathcal {H}}_{1} \, | \, y^{n}) = 174/175 \approx 0.994 \) versus \( 124/125 = 0.992 \).
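The arithmetic in this example can be retraced in a few lines. The sketch below takes the Bayes factors and correction factors reported in the text as given inputs (it does not recompute them from the data) and converts Bayes factors to posterior model probabilities under equal prior odds; the helper name is hypothetical.

```python
def posterior_prob_h1(bf_10, prior_prob_h1=0.5):
    """Posterior probability of H1 from a Bayes factor and a prior probability."""
    prior_odds = prior_prob_h1 / (1.0 - prior_prob_h1)
    posterior_odds = bf_10 * prior_odds
    return posterior_odds / (1.0 + posterior_odds)


# Reported (point-null BF, peri-null correction factor) pairs from the text.
cases = [(1.259, 0.997), (1.259, 0.927), (174.0, 0.986), (174.0, 0.713)]

for bf_point, correction in cases:
    bf_peri = bf_point * correction  # BF_1~0 = BF_10 * BF_0~0
    print(bf_point, correction, round(bf_peri, 3),
          round(posterior_prob_h1(bf_point), 3),
          round(posterior_prob_h1(bf_peri), 3))
```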

The peri-null correction factor does become influential when sample size is large. As we prove in the next section, the peri-null Bayes factor is inconsistent and converges to the ratio of prior ordinates under \( {\mathcal {H}}_{1} \) and \( {\mathcal {H}}_{\widetilde{0}} \) at the maximum likelihood estimate.

3 The peri-null Bayes factor is inconsistent

Historically, the main motivation for the development of the Bayes factor was the desire to be able to obtain arbitrarily large evidence for a general law: “We are looking for a system that will in suitable cases attach probabilities near 1 to a law.” (Jeffreys 1977, p. 88; see also Etz and Wagenmakers 2017; Ly et al. 2020; Wrinch and Jeffreys 1921).

Statistically, this desideratum means that we want Bayes factors to be consistent, which implies that, as sample size increases, (i) \(\mathrm{BF}_{10}(Y^{n}) \) tends to zero when the data are generated under the null model, whereas (ii) \( \mathrm{BF}_{01}(Y^{n}) \) tends to zero when the data are generated under the alternative model \( {\mathcal {H}}_{1} \), that is,

Thus, regardless of the chosen prior model probabilities \( P ( {\mathcal {H}}_{0} ), P ( {\mathcal {H}}_{1} ) \in (0, 1) \),

where \( {\mathbb {P}}_{\theta } \) refers to the data generating distribution, here, \( Y_{i} \overset{\mathrm{iid}}{\sim }{\mathbb {P}}_{\theta } \), and where \( X_{n} \overset{{\mathbb {P}}_{\theta }}{\rightarrow } X \) denotes convergence in probability, that is, \( \lim _{n \rightarrow \infty } {\mathbb {P}}_{\theta } (|X_{n} - X | > \epsilon ) = 0 \) as usual.

Below we prove that the peri-null Bayes factor \( \mathrm{BF}_{1 \widetilde{0}}(Y^{n}) \) is inconsistent (cf. suggestions by Jeffreys 1961, p. 367; Jeffreys 1973, p. 39, Eq. 2; and the statements by Morey and Rouder 2011, pp. 411–412). The proof relies on the observation that replacing the point-null restriction on the test-relevant parameter, i.e., \({\mathcal {H}}_{0} : \delta = 0 \), where \( \theta = (\delta , \theta _{0}) \) as before, by a peri-null prior yields a model that defines the same likelihood function as the alternative model. Consequently, the numerator and the denominator of the peri-null Bayes factor \( \mathrm{BF}_{1 \widetilde{0}}(Y^{n}) \) only differ in how the priors are specified.

The inconsistency of peri-null Bayes factors then follows quite directly from Laplace’s method (Laplace 1774/1986) and consistency of the maximum likelihood estimator (MLE). Both Laplace’s method and consistency of the MLE hold under weaker conditions than stated here, namely, for absolutely continuous priors (e.g., van der Vaart 1998, Chapter 10), and regular parametric models (e.g., van der Vaart 1998, Chapter 7; Ly et al. 2017, Appendix E). These models only need to be once differentiable with respect to \( \theta \) in quadratic mean and have non-degenerate Fisher information matrices that are continuous in \( \theta \) with determinants that are bounded away from zero and infinity. The inconsistency of the peri-null Bayes factor is therefore expected to hold more generally.

We show that under the stronger conditions of Kass et al. (1990), the asymptotic sampling distribution of peri-null Bayes factors can be easily derived. These stronger conditions imply that the model is regular, in which case the MLE is not only consistent, but also locally asymptotically normal with a variance equal to the inverse observed Fisher information matrix at \( \hat{\theta } \) with entries

see for instance Ly et al. (2017) for details.

Theorem 1

(Limit of a peri-null Bayes factor) Let \( Y^{n} = ( Y_{1}, \ldots , Y_{n}) \) with \( Y_{i} \overset{\mathrm{iid}}{\sim }{\mathbb {P}}_{\theta } \in {\mathcal {P}}_{\varTheta } \), where \( {\mathcal {P}}_{\varTheta } \) is an identifiable family of distributions that is Laplace-regular (Kass et al. 1990). This implies that \( {\mathcal {P}}_{\varTheta } \) admits densities \( f(y^{n} \, | \, \theta ) \) with respect to the Lebesgue measure that are six times continuously differentiable in \( \theta \) at the data-governing parameter \( \theta \in \varTheta \subset {\mathbb {R}}^{p} \) and \( \varTheta \) open with non-empty interior. Furthermore, assume that the (peri-null) prior densities \( \pi ( \theta \, | \, {\mathcal {H}}_{\widetilde{0}}) \) and \( \pi ( \theta \, | \, {\mathcal {H}}_{1}) \) assign positive mass to a neighborhood at the data-governing parameter \( \theta \) and are four times continuously differentiable at \( \theta \); then \( \mathrm{BF}_{1 \widetilde{0}}(Y^{n}) \overset{{\mathbb {P}}_{\theta }}{\rightarrow } \tfrac{ \pi ( \theta \, | \, {\mathcal {H}}_{1})}{\pi ( \theta \, | \, {\mathcal {H}}_{\widetilde{0}})} \). \( \diamond \)

Proof

The condition that the model is Laplace-regular allows us to employ the Laplace method to approximate the numerator and the denominator of the peri-null Bayes factor by

where \( C^{1}(\hat{\theta } \, | \, {\mathcal {H}}_{j}) \) and \(C^{2}(\hat{\theta } \, | \, {\mathcal {H}}_{j}) \) for \( j= \widetilde{0}, 1 \) are bounded error terms of the Laplace approximation (cf. Kass et al. 1990) and given explicitly by Eq. (32) and Eq. (33) based on the notation introduced in Appendix A.

From the fact that the peri-null and the alternative models define the same likelihood function, thus, have the same maximum likelihood estimator, and only differ in how the priors concentrate on the parameters, we conclude that

Identifiability and the regularity conditions on the model imply that the maximum likelihood estimator is consistent, thus, \(\hat{\theta } \overset{{\mathbb {P}}_{\theta }}{\rightarrow } \theta \) (e.g., van der Vaart 1998, Chapter 5). As all functions of \(\hat{\theta } \) in Eq. (8) are smooth at \( \theta \), the continuous mapping theorem applies and the assertion follows. \(\square \)
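The cancellation behind this argument can be sketched as follows, assuming the standard form of a second-order Laplace expansion; the symbol \( \hat{I}(\hat{\theta }) \) for the observed Fisher information per observation and the order of the remainder are assumptions made here for illustration. For \( j = \widetilde{0}, 1 \),

\[ p(Y^{n} \, | \, {\mathcal {H}}_{j}) = f(Y^{n} \, | \, \hat{\theta }) \Big ( \frac{2 \pi }{n} \Big )^{p/2} | \hat{I}(\hat{\theta }) |^{-1/2} \, \pi (\hat{\theta } \, | \, {\mathcal {H}}_{j}) \Big [ 1 + \frac{C^{1}(\hat{\theta } \, | \, {\mathcal {H}}_{j})}{n} + \frac{C^{2}(\hat{\theta } \, | \, {\mathcal {H}}_{j})}{n^{2}} + {\mathcal {O}}(n^{-3}) \Big ] . \]

Every factor except the prior ordinate and the bracketed error term is shared by the two models, so the ratio of the two approximations reduces to

\[ \mathrm{BF}_{1 \widetilde{0}}(Y^{n}) = \frac{\pi (\hat{\theta } \, | \, {\mathcal {H}}_{1})}{\pi (\hat{\theta } \, | \, {\mathcal {H}}_{\widetilde{0}})} \times \frac{1 + C^{1}(\hat{\theta } \, | \, {\mathcal {H}}_{1})/n + C^{2}(\hat{\theta } \, | \, {\mathcal {H}}_{1})/n^{2} + {\mathcal {O}}(n^{-3})}{1 + C^{1}(\hat{\theta } \, | \, {\mathcal {H}}_{\widetilde{0}})/n + C^{2}(\hat{\theta } \, | \, {\mathcal {H}}_{\widetilde{0}})/n^{2} + {\mathcal {O}}(n^{-3})} . \]

The second factor tends to 1 as \( n \) grows, which leaves the ratio of prior ordinates at \( \hat{\theta } \).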

Theorem 1 implies that \( \mathrm{BF}_{1 \widetilde{0}}(Y^{n}) \) is inconsistent; for all data-governing parameter values with a neighborhood that receives positive mass from both priors, the peri-null Bayes factor approaches a limit that is given by the ratio of prior densities evaluated at the data-governing \( \theta \) as n increases. Note that this holds in particular for the test point of interest, e.g., \( \delta = 0 \), which has a neighborhood that the peri-null prior assigns positive mass to. This inconsistency result can be intuited as follows. The peri-null Bayes factor compares two marginal likelihoods with the same data-distribution (or sampling) model, but different prior distributions on the same parameter space; hence, the peri-null Bayes factor effectively assesses which prior performs best, and this should not matter asymptotically (i.e., as the data accumulate, the posterior distributions of the two models converge, and consequently the change in the Bayes factor will converge as well; see Footnote 3).
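The contrast between the bounded peri-null Bayes factor and the unbounded point-null Bayes factor can be illustrated in a toy setting. The sketch below assumes a normal model with known unit variance and normal priors under both \( {\mathcal {H}}_{\widetilde{0}} \) and \( {\mathcal {H}}_{1} \) (not the Cauchy prior of the t-test), and it plugs in the probability limit \( \bar{y} = \delta \) of the MLE instead of simulating data. All function names are hypothetical.

```python
import math


def log_normal_pdf(x, var):
    """Log density of N(0, var) at x."""
    return -0.5 * x * x / var - 0.5 * math.log(2.0 * math.pi * var)


def log_bf_1peri(ybar, n, kappa0, kappa1):
    """log BF_1~0 in the toy model: ybar ~ N(0, kappa^2 + 1/n) under each prior."""
    return (log_normal_pdf(ybar, kappa1 ** 2 + 1.0 / n)
            - log_normal_pdf(ybar, kappa0 ** 2 + 1.0 / n))


def log_bf_10(ybar, n, kappa1):
    """log BF_10 against the point null delta = 0, under which ybar ~ N(0, 1/n)."""
    return (log_normal_pdf(ybar, kappa1 ** 2 + 1.0 / n)
            - log_normal_pdf(ybar, 1.0 / n))


def log_prior_ratio(delta, kappa0, kappa1):
    """Log ratio of prior ordinates at delta: the limit stated in Theorem 1."""
    return log_normal_pdf(delta, kappa1 ** 2) - log_normal_pdf(delta, kappa0 ** 2)


delta, kappa0, kappa1 = 0.2, 0.05, 1.0
limit = log_prior_ratio(delta, kappa0, kappa1)
for n in (10 ** 2, 10 ** 4, 10 ** 6, 10 ** 8):
    # ybar is replaced by its probability limit delta (a simplification).
    print(n, log_bf_1peri(delta, n, kappa0, kappa1), limit,
          log_bf_10(delta, n, kappa1))
```

In this sketch the peri-null log Bayes factor settles at the log ratio of prior ordinates, whereas the point-null log Bayes factor keeps growing roughly like \( n \delta ^{2} / 2 \).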

From Theorem 1 it follows that the Bayes factor comparing the alternative \( {\mathcal {H}}_{1} : \delta \in \varDelta = {\mathbb {R}}\) against a directed hypothesis, say, \( {\mathcal {H}}_{+} : \delta > 0 \), is also inconsistent. For data under any \( \delta > 0 \), the associated Bayes factor \( \mathrm{BF}_{1+}(Y^{n}) \) then converges in probability to \( \varPi _{u}( \{ \delta > 0 \} )/ \varPi _{u}(\varDelta ) \), where \( \varPi _{u}(B) = \int _{B} \pi _{u}(\theta ) \mathrm{d}\theta \) with \( \pi _{u} \) the unnormalized prior on \( \delta \).
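For a prior that is symmetric around zero, the limit above equals 1/2. A minimal sketch, assuming a toy normal model with known unit variance and a \( {\mathcal {N}}(0, \kappa _{1}^{2}) \) prior under \( {\mathcal {H}}_{1} \) that is truncated to \( \delta > 0 \) under \( {\mathcal {H}}_{+} \); it uses the identity that \( \mathrm{BF}_{+1} \) equals the posterior mass on \( \delta > 0 \) divided by the prior mass on \( \delta > 0 \), both computed under \( {\mathcal {H}}_{1} \). The function names are hypothetical.

```python
import math


def std_normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def bf_1_plus(ybar, n, kappa1):
    """BF of H1: delta ~ N(0, kappa1^2) against H+: the same prior truncated to delta > 0.

    Assumed toy model: y_i ~ N(delta, 1), so the posterior under H1 is normal.
    Intended for positive ybar; for strongly negative ybar the posterior mass
    on delta > 0 can underflow to zero.
    """
    post_var = 1.0 / (n + 1.0 / kappa1 ** 2)
    post_mean = n * ybar * post_var
    post_mass_positive = std_normal_cdf(post_mean / math.sqrt(post_var))
    prior_mass_positive = 0.5  # symmetric prior around zero
    return prior_mass_positive / post_mass_positive


for n in (10, 100, 10 ** 4, 10 ** 6):
    print(n, bf_1_plus(ybar=0.3, n=n, kappa1=1.0))
```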

The limit described in Theorem 1 can also be derived differently. For instance, Theorem 1 (ii) of Dawid (2011) can be applied twice: once to approximate the logarithm of the marginal likelihood of the alternative model, and once for the null model (see Footnote 4). Another way to derive the limit in Theorem 1 is by using the generalized Savage-Dickey density ratio (Verdinelli and Wasserman 1995) and by exploiting the transitivity of the Bayes factor. Theorem 1, however, can be more straightforwardly extended to characterize the asymptotic behavior of the peri-null Bayes factor.

The limiting value of the peri-null Bayes factor is not representative when n is small or moderate. Theorem 2 below shows that the sampling mean of \( \log \mathrm{BF}_{1 \widetilde{0}}(Y^{n}) \) is expected to be of smaller magnitude than its limiting value. In other words, the limit in Theorem 1 should be viewed as an upper bound under the alternative and a lower bound under the null.

This theorem exploits the fact that without a point-null hypothesis the gradients of the densities \( \pi (\theta \, | \, {\mathcal {H}}_{1}) \) and \(\pi (\theta \, | \, {\mathcal {H}}_{\widetilde{0}}) \) are of the same dimension, which implies that the gradient \(\tfrac{ \partial }{\partial \theta } \log \big ( \tfrac{\pi ( \theta \, | \, {\mathcal {H}}_{1})}{\pi ( \theta \, | \, {\mathcal {H}}_{\widetilde{0}})} \big ) \) is well-defined. As such, the delta method can be used to show that the peri-null Bayes factor inherits the asymptotic normality property of the MLE.

To state the theorem, we write D for the differential operator with respect to \( \theta \), e.g., \( [D^{1} \pi (\theta \, | \, {\mathcal {H}}_{j})] = \frac{ \partial }{\partial \theta } \pi (\theta \, | \, {\mathcal {H}}_{j}) \) denotes the gradient, and \( [ D^{2} \pi (\theta \, | \, {\mathcal {H}}_{j})] = [ \frac{ \partial ^{2}}{\partial \theta \partial \theta } \pi (\theta \, | \, {\mathcal {H}}_{j}) ]\) denotes the Hessian matrix.

Theorem 2

(Asymptotic sampling distribution of a peri-null Bayes factor) Under the regularity conditions stated in Theorem 1 and for all data-governing parameters \( \theta \) for which

the asymptotic sampling distribution of the logarithm of the peri-null Bayes factor is normal, that is,

where \( \overset{{\mathbb {P}}_{\theta }}{\rightsquigarrow } \) denotes convergence in distribution under \( {\mathbb {P}}_{\theta } \) and where

is a bias term that is asymptotically negligible, and where \(C^{1}(\theta \, | \, {\mathcal {H}}_{j}) \) and \( C^{2}(\theta \, | \, {\mathcal {H}}_{j})\) are given explicitly by Eq. (32) and Eq. (33) based on the notation introduced in Appendix A.

For all \( \theta \) for which \( \dot{v}(\theta ) = 0 \), but \(\ddot{v}(\theta ) := [ D^{2} \log \big ( \tfrac{\pi ( \theta \, | \, {\mathcal {H}}_{1})}{\pi ( \theta \, | \, {\mathcal {H}}_{\widetilde{0}})} \big )] \ne 0 \in {\mathbb {R}}^{p \times p} \), the asymptotic distribution of \( \log \mathrm{BF}_{1 \widetilde{0}}(Y^{n}) \) has a quadratic form, that is,

where \( Z \sim {\mathcal {N}}( 0 , I ) \) with \( I \in {\mathbb {R}}^{p \times p} \) the identity matrix. \( \diamond \)

Proof

The proof depends on (another) Taylor series expansion, see Appendix A for full details. Firstly, we recall that \( \sqrt{n} ( \hat{\theta } - \theta ) \overset{{\mathbb {P}}_{\theta }}{\rightsquigarrow } {\mathcal {N}}( 0, I(\theta )^{-1}) \). To relate this asymptotic distribution to that of \( \log \mathrm{BF}_{1 \widetilde{0}}(Y^{n}) \), we note that Eq. (8) is, up to a decreasing error in n, a smooth function of the maximum likelihood estimator. The goal is to ensure that the error terms \( 1 + C^{1}( \theta \, | \, {\mathcal {H}}_{j})/n + C^{2}( \theta \, | \, {\mathcal {H}}_{j})/n^{2} \) are asymptotically negligible. A Taylor series expansion at the data-governing \( \theta \) shows that

The asymptotic normality result follows after rearranging Eq. (13), multiplying both sides by \( \sqrt{n} \), and applying Slutsky’s lemma.

To conclude that the bias term \( E(\theta , n) \) is indeed asymptotically negligible, note that \( \log ( 1 + x/n) \approx x/n \) as \( n \rightarrow \infty \) and therefore \( D^{k} E(\theta , n) = {\mathcal {O}}\Big ( \frac{1}{n} D^{k} \big \{ C^{1}(\theta \, | \, {\mathcal {H}}_{1}) - C^{1}(\theta \, | \, {\mathcal {H}}_{\widetilde{0}}) \big \} \Big ) \) for all \(k \le 3 \). The approximation \( \log ( 1 + x/n) \approx x/n \) requires \( C^{k}(\theta \, | \, {\mathcal {H}}_{j}) \) for \( k=1,2 \) and \(j=\widetilde{0}, 1 \) to be of similar magnitude, but this is typically not the case when \( \kappa _{0} \) is relatively small compared to \( \kappa _{1} \). The bias is, therefore, expected to decay much more slowly.

Similarly, when \( \dot{v}(\theta ) \) is zero, but \( \ddot{v}(\theta )\) is not, we have

Since \(\sqrt{n} ( \hat{\theta } - \theta ) \overset{{\mathbb {P}}_{\theta }}{\rightsquigarrow } {\mathcal {N}}( 0, I(\theta )^{-1}) \), the second-order result follows. \(\square \)
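The first-order (delta-method) part of the result can be checked by simulation in a toy setting. The sketch below assumes a normal model with known unit variance (Fisher information equal to 1) and normal priors under both hypotheses, and it assumes that \( \dot{v}(\theta ) \) denotes the gradient of the log ratio of prior densities, so that the asymptotic standard deviation of \( \log \mathrm{BF}_{1 \widetilde{0}}(Y^{n}) \) is \( | \dot{v}(\delta ) | / \sqrt{n} \). Function names, the seed, and the number of replications are arbitrary choices.

```python
import math
import random
import statistics


def log_normal_pdf(x, var):
    """Log density of N(0, var) at x."""
    return -0.5 * x * x / var - 0.5 * math.log(2.0 * math.pi * var)


def log_bf_1peri(ybar, n, kappa0, kappa1):
    """Exact log BF_1~0 in the toy model, as a function of the sample mean."""
    return (log_normal_pdf(ybar, kappa1 ** 2 + 1.0 / n)
            - log_normal_pdf(ybar, kappa0 ** 2 + 1.0 / n))


def delta_method_sd(delta, n, kappa0, kappa1):
    """|v_dot(delta)| / sqrt(n), with v the log ratio of the two normal priors."""
    v_dot = delta * (1.0 / kappa0 ** 2 - 1.0 / kappa1 ** 2)
    return abs(v_dot) / math.sqrt(n)


def simulated_sd(delta, n, kappa0, kappa1, reps=20_000, seed=1):
    """Standard deviation of log BF_1~0 over simulated sample means."""
    rng = random.Random(seed)
    draws = [log_bf_1peri(rng.gauss(delta, 1.0 / math.sqrt(n)), n, kappa0, kappa1)
             for _ in range(reps)]
    return statistics.stdev(draws)


delta, n, kappa0, kappa1 = 0.167, 100_000, 0.05, 1.0
print(simulated_sd(delta, n, kappa0, kappa1),
      delta_method_sd(delta, n, kappa0, kappa1))
```

The sample mean is drawn directly from \( {\mathcal {N}}(\delta , 1/n) \) because it is sufficient in this model; for smaller \( n \) the two standard deviations are expected to differ more.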

Theorem 2 also shows that under the alternative hypothesis, \( \log \mathrm{BF}_{1 \widetilde{0}}(Y^{n}) \) is expected to increase towards the limiting value \( \log \big ( \tfrac{\pi ( \theta \, | \, {\mathcal {H}}_{1})}{\pi ( \theta \, | \, {\mathcal {H}}_{\widetilde{0}})} \big ) \) as \( n \rightarrow \infty \) whenever \( E(\theta , n) < 0 \). The bias is expected to be negative, because if the data-governing parameter \( \delta \) is far from zero, but the peri-null prior is specified such that it is peaked at zero, the Laplace approximations become less accurate. In other words, for fixed n and \( \delta \ne 0 \), we typically have \( C^{1}(\theta \, | \, {\mathcal {H}}_{1}) \le C^{1}(\theta \, | \, {\mathcal {H}}_{\widetilde{0}}) \) and \( C^{2}(\theta \, | \, {\mathcal {H}}_{1}) \le C^{2}(\theta \, | \, {\mathcal {H}}_{\widetilde{0}}) \) and, therefore, \( E(\theta , n) < 0 \). This intuition can be made rigorous using the explicit formulas for \(E(\theta , n) \) provided by Eq. (32) and Eq. (33) from Appendix A, as is shown in the following example.

4 Example

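We consider a Bayesian t-test and for the peri-null Bayes factor use the priors

\[ \pi (\delta , \sigma \, | \, {\mathcal {H}}_{\widetilde{0}}) \propto \frac{1}{\sqrt{2 \pi } \, \kappa _{0}} \exp \Big ( - \frac{\delta ^{2}}{2 \kappa _{0}^{2}} \Big ) \, \sigma ^{-1} , \qquad \pi (\delta , \sigma \, | \, {\mathcal {H}}_{1}) \propto \frac{1}{\pi \kappa _{1} \big ( 1 + (\delta / \kappa _{1})^{2} \big )} \, \sigma ^{-1} . \]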

Note that \( \pi (\delta , \sigma \, | \, {\mathcal {H}}_{1}) \) is chosen as in the default Bayesian t-test (Jeffreys 1948; Ly et al. 2016b, a; Rouder et al. 2009), where \(\kappa _{1} > 0 \) denotes the scale parameter of the Cauchy distribution on standardized effect size \( \delta = \mu / \sigma \), and \( \pi (\sigma ) \propto \sigma ^{-1} \) implies that the prior on the standard deviation common to both models is proportional to \( \sigma ^{-1} \) (for advantages of this choice see Hendriksen et al. 2021; Grünwald et al. 2019). For data-governing parameters \( \theta = (\mu , \sigma ) \), where \( \mu \) is the population mean, Theorem 1 shows that as \( n \rightarrow \infty \)

\[ \mathrm{BF}_{1 \widetilde{0}}(Y^{n} \, ; \, \kappa _{0}, \kappa _{1}) \overset{{\mathbb {P}}_{\theta }}{\rightarrow } \frac{\sqrt{2 \pi } \, \kappa _{0} \exp \big ( \tfrac{\mu ^{2}}{2 \kappa _{0}^{2} \sigma ^{2}} \big )}{\pi \kappa _{1} \big ( 1 + \tfrac{\mu ^{2}}{\kappa _{1}^{2} \sigma ^{2}} \big )} . \]

Direct calculations show that \( \dot{v}(\theta ) = 0 \) only when \(\mu =0 \). Hence, under the alternative \( \mu \ne 0 \), the logarithm of these peri-null Bayes factor t-tests is asymptotically normal with an approximate variance of

To characterize the asymptotic mean, we also require the bias term \(E(\theta , n) \). An application of Eq. (32) and Eq. (33) from Appendix A shows that for the problem at hand the bias term comprises

More concretely, under \( \mu = 0.167 \) and \( \sigma = 1 \), \( \log \mathrm{BF}_{1 \widetilde{0}}(Y^{n} \, ; \, 0.05, 1) \) converges in probability to \( \log (10) \). This limit is depicted as the pink dashed horizontal line in the top left subplot of Fig. 1.

Fig. 1 Under the alternative, the logarithm of the peri-null Bayes factor t-test is asymptotically normal with a mean (i.e., the solid curves) that increases to the limit, e.g., \( \log \mathrm{BF}_{1 \widetilde{0}} = \log ( 10) \) and \( \log \mathrm{BF}_{1 \widetilde{0}} =\log ( 30) \) in the top and bottom row, respectively. The black and green curves correspond to the simulated and asymptotic normal sampling distribution, respectively. The dotted curves show the 97.5% and 2.5% quantiles of the respective sampling distribution. Note that the convergence to the upper bound is slower when the peri-null is more concentrated, e.g., compare the left to the right column.

This subplot also shows the mean (solid green curve) and the 97.5% and 2.5% quantiles (dotted green curves above and below the solid curve, respectively) based on the asymptotic normal result of Theorem 2. The black curves represent the analogous quantities based on simulated normal data with \( \mu =0.167 \), \( \sigma =1 \) based on 1,000 replications at sample sizes \( n=100, 200, 300, \ldots , 10000 \).

Observe that for small sample sizes, the simulated peri-null Bayes factors are more concentrated on small values. In this regime, the concentration of the peri-null prior dominates, and the Laplace approximation of \( p(Y^{n} \, | \, {\mathcal {H}}_{\widetilde{0}}) \) is still inaccurate.

As expected, the Laplace approximation becomes accurate sooner, whenever the peri-null prior is less concentrated. The top right subplot depicts results of \( \log \mathrm{BF}_{1 \widetilde{0}}(Y^{n} \, ; \, 0.10, 1) \) under \( \mu =0.314 \) and \( \sigma =1 \), which converges in probability to \( \log (10) \).

Similarly, the asymptotic normal distribution becomes adequate at a smaller sample size for larger population means \( \mu \). The bottom left subplot corresponds to \( \log \mathrm{BF}_{1 \widetilde{0}}(Y^{n} \, ; \, 0.05, 1) \) under \( \mu =0.182 \) and \( \sigma =1 \), whereas the bottom right subplot corresponds to \( \log \mathrm{BF}_{1 \widetilde{0}}(Y^{n} \, ; \, 0.10, 1) \) under \( \mu =0.348 \) and \(\sigma =1 \). The logarithms of both peri-null Bayes factors converge in probability to \( \log (30) \).

In sum, the plots show that under the alternative hypothesis, the asymptotic normal distribution approximates the sampling distribution of the logarithm of the peri-null Bayes factor quite well, and it approximates better when the peri-null prior is less concentrated.

Under the null hypothesis \( \mu = 0 \), the gradient \( \dot{v}(0, \sigma )=0 \), and so is the Hessian, except for the first entry of \( \ddot{v} \), that is,

As such, \( \log \mathrm{BF}_{1 \widetilde{0}} (Y^{n}) \) asymptotically has a shifted \( \chi ^{2}(1) \)-distribution, i.e.,

where \( Z \sim {\mathcal {N}}( 0, 1) \).

More concretely, under \( \mu =0 \) and \( \sigma =1 \), \( \log \mathrm{BF}_{1 \widetilde{0}}(Y^{n} \, ; \, 0.05, 1) \overset{{\mathbb {P}}_{0, 1}}{\rightarrow } -3.22 \), whereas \( \log \mathrm{BF}_{1 \widetilde{0}}(Y^{n} \, ; \, 0.10, 1) \) converges in probability to \( -2.53 \). Both cases yield evidence for the null hypothesis, but the evidence is stronger for the peri-null that is more tightly concentrated around 0. The approximation based on the asymptotic \(\chi ^{2}(1) \)-distribution (in green) and the simulations (in black) are shown in Fig. 2.
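The limiting values quoted in this example can be retraced from the ratio of prior ordinates. A minimal sketch, assuming a Cauchy prior with scale \( \kappa _{1} \) under \( {\mathcal {H}}_{1} \) and a \( {\mathcal {N}}(0, \kappa _{0}^{2}) \) prior under \( {\mathcal {H}}_{\widetilde{0}} \), both on \( \delta = \mu / \sigma \); the common \( \sigma ^{-1} \) factor cancels in the ratio and is therefore omitted. The function names are hypothetical.

```python
import math


def log_cauchy_pdf(x, scale):
    """Log density of a Cauchy distribution with location 0 at x."""
    return -math.log(math.pi * scale) - math.log1p((x / scale) ** 2)


def log_normal_pdf(x, sd):
    """Log density of N(0, sd^2) at x."""
    return -0.5 * (x / sd) ** 2 - math.log(sd) - 0.5 * math.log(2.0 * math.pi)


def log_bf_limit(mu, sigma, kappa0, kappa1):
    """Log ratio of prior ordinates at delta = mu / sigma (limit in Theorem 1)."""
    delta = mu / sigma
    return log_cauchy_pdf(delta, kappa1) - log_normal_pdf(delta, kappa0)


# (mu, kappa0, value quoted in the text), with sigma = 1 and kappa1 = 1.
cases = [
    (0.167, 0.05, math.log(10)),
    (0.314, 0.10, math.log(10)),
    (0.182, 0.05, math.log(30)),
    (0.348, 0.10, math.log(30)),
    (0.0, 0.05, -3.22),
    (0.0, 0.10, -2.53),
]
for mu, kappa0, quoted in cases:
    print(mu, kappa0, round(log_bf_limit(mu, 1.0, kappa0, 1.0), 3), round(quoted, 3))
```

The computed limits land close to the quoted ones; small discrepancies under the alternative are to be expected because the values of \( \mu \) are reported to three decimals.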

Fig. 2 Under the null, the logarithm of the peri-null Bayes factor t-test has a shifted asymptotically \(\chi ^{2}(1)\)-distribution with a mean (i.e., the solid curves) that decreases to the limit, e.g., \( \log \mathrm{BF}_{1 \widetilde{0}} = -3.22 \) and \( \log \mathrm{BF}_{1 \widetilde{0}} = -2.53 \) in the left and right plot, respectively. The black and green curves correspond to the simulated and asymptotic \( \chi ^{2}(1) \) sampling distribution, respectively. The dotted curves show the 97.5% and 2.5% quantiles of the respective sampling distribution. Note that the convergence to the lower bound is slower when the peri-null is more concentrated, e.g., compare the left to the right plot.

In the left subplot, the curves based on the asymptotic \(\chi ^{2}(1) \)-distribution only start from \( n=185 \), because only for \( n \ge 185 \) does \( \log ( 1 + C^{1}( 0, 1 \, | \, {\mathcal {H}}_{\widetilde{0}})/n + C^{2}( 0, 1 \, | \, {\mathcal {H}}_{\widetilde{0}})/n^{2}) \) have a non-negative argument; for \( \kappa _{0} = 0.05 \), we have that \(C^{1}( 0, 1 \, | \, {\mathcal {H}}_{\widetilde{0}}) = -199.83 \). Note that under the null hypothesis, the Laplace approximations are accurate sooner than under the alternative hypothesis, because the priors are already concentrated at zero. Under the null hypothesis, the general observation remains true that for reasonable sample sizes, the expected peri-null Bayes factor is far from the limiting value.

Unlike the peri-null Bayes factor, the (default) point-null Bayes factor is consistent. Figure 3 shows the simulated sampling distribution of the point-null and peri-null Bayes factors in blue and black, respectively. As before, the 97.5% quantile (top dotted curve), the average (solid curve), and the 2.5% quantile (bottom dotted curve) are depicted as well.

(Default) point-null Bayes factor t-tests (depicted in blue) are consistent under both the alternative and the null (top and bottom row, respectively), as opposed to peri-null Bayes factors (depicted in black). Note that the peri-null and the default point-null Bayes factors behave similarly when n is small. The domain where the two types of Bayes factors behave similarly is smaller when the peri-null is less concentrated; compare the right to the left column.

The top left subplot of Fig. 3 shows that under \( \mu =0.167 \) and \( \sigma =1 \), the point-null and peri-null Bayes factor behave similarly up to \( n=30 \). Furthermore, the average point-null log Bayes factor crosses the peri-null upper bound of \( \log (10) \) at around \( n=380 \), whereas the peri-null Bayes factor remains bounded even in the limit, and is therefore inconsistent. The top right subplot shows, under \( \mu =0.348 \) and \(\sigma =1 \), that the discrepancy between the point-null and peri-null Bayes factor becomes apparent sooner when the peri-null prior is less concentrated, i.e., \( \kappa _{0} = 0.10 \) instead of \(\kappa _{0}=0.05 \). Also note that under these alternatives, the logarithm of the point-null Bayes factor grows linearly (e.g., Bahadur and Bickel 2009; Johnson and Rossell 2010). Hence, the point-null Bayes factor has a larger power to detect an effect than that afforded by the peri-null Bayes factor.

The bottom row of Fig. 3 paints a similar picture; under the null, the point-null Bayes factor accumulates evidence for the null hypothesis without bound as n grows. For \(\kappa _{0} = 0.05 \), the behavior of the peri-null and the point-null Bayes factor is similar up to \( n=200 \) and it takes about \( n=1,000\) samples before the average point-null log Bayes factor crosses the peri-null lower bound of \( -3.22 \). For \(\kappa _{0} = 0.10 \), only \( n=270 \) samples are needed before the log Bayes factor for the point-null hypothesis crosses the peri-null lower bound of \( -2.53 \).
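The contrast between the two Bayes factors can be made explicit in a deliberately simplified setting. The sketch below is not the t-test analyzed above: it assumes normal data with known unit variance, a normal prior \( {\mathcal {N}}(0, \kappa _{1}^{2}) \) under the alternative, and a normal peri-null prior \( {\mathcal {N}}(0, \kappa _{0}^{2}) \), so that both Bayes factors are available in closed form as functions of the sample mean. The function names are hypothetical, and the sample mean is fixed at zero to mimic typical data under the null.

```python
import math


def log_normal_pdf(x, var):
    """Log density of N(0, var) at x."""
    return -0.5 * math.log(2.0 * math.pi * var) - 0.5 * x * x / var


def log_bf_point_null(ybar, n, kappa1):
    """log BF_10: mu ~ N(0, kappa1^2) versus mu = 0, unit-variance data."""
    return log_normal_pdf(ybar, kappa1**2 + 1.0 / n) - log_normal_pdf(ybar, 1.0 / n)


def log_bf_peri_null(ybar, n, kappa0, kappa1):
    """log BF of mu ~ N(0, kappa1^2) versus the peri-null mu ~ N(0, kappa0^2)."""
    return (log_normal_pdf(ybar, kappa1**2 + 1.0 / n)
            - log_normal_pdf(ybar, kappa0**2 + 1.0 / n))


# Sample mean fixed at 0: the point-null log Bayes factor keeps decreasing,
# whereas the peri-null one levels off at log(kappa0 / kappa1).
for n in (10, 100, 1000, 10**5, 10**7):
    print(n,
          round(log_bf_point_null(0.0, n, 1.0), 2),
          round(log_bf_peri_null(0.0, n, 0.05, 1.0), 2))
```

In this simplified model the two quantities nearly coincide for small n and separate once \( 1/n \) becomes comparable to \( \kappa _{0}^{2} \), which mirrors the qualitative pattern described for Fig. 3.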

5 Towards consistent peri-null Bayes factors

There are at least three methods to adjust the peri-null Bayes factor in order to avoid inconsistency. The first method changes both the point-null hypothesis \( {\mathcal {H}}_{0} \) and the alternative hypothesis \( {\mathcal {H}}_{1} \). Specifically, one may define the hypotheses under test to be non-overlapping (e.g., Berger and Delampady 1987; Chandramouli and Shiffrin 2019; Rousseau 2007). The resulting procedure is usually known as an ‘interval-null hypothesis’, where the interval-null is defined as a (renormalized) slice of the prior distribution for the test-relevant parameter under an alternative hypothesis (e.g., Morey and Rouder 2011). For instance, in the case of a t-test, an encompassing hypothesis \( {\mathcal {H}}_{e} \) may assign effect size \( \delta \) a Cauchy distribution with location parameter 0 and scale \( \kappa _{e} \); from this encompassing hypothesis, one may construct two rival hypotheses by restricting the Cauchy prior to particular intervals: the interval-null hypothesis truncates the encompassing Cauchy distribution to an interval centered on \( \delta =0 \): \( \delta \sim \text {Cauchy}(0, \kappa _{e})I(-a, a) \), whereas the interval-alternative hypothesis is the conjunction of the remaining two intervals, \( \delta \sim \text {Cauchy}(0, \kappa _{e})I(-\infty ,-a) \) and \( \delta \sim \text {Cauchy}(0, \kappa _{e})I(a, \infty ) \). 
As a consequence of Theorem 1, or Theorem 1 (ii) of Dawid (2011), the resulting peri-null Bayes factor is then consistent in accordance with subjective interval belief; for all data-governing parameters \( \delta \) in the interior of the interval-null, \( \lim _{n \rightarrow \infty } \mathrm{BF}_{1 \widetilde{0}} =0 \), and for \( \delta \) in the interior of the sliced out alternative \( \lim _{n \rightarrow \infty } \mathrm{BF}_{\widetilde{0}1} = 0\) (Footnote 5). In particular, when \( a = 1 \) and the data-governing \( \delta = 0.7 \), this Bayes factor will eventually show unbounded evidence for the interval-null.
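The behavior for \( a = 1 \) and \( \delta = 0.7 \) can be illustrated numerically. The sketch below swaps in a simpler model than the one described above: it assumes known unit variance and a normal encompassing prior \( {\mathcal {N}}(0, \kappa _{e}^{2}) \) instead of a Cauchy, so that the posterior under the encompassing hypothesis is again normal. The Bayes factor between the two truncated hypotheses is then the ratio of posterior to prior mass outside the interval divided by the same ratio inside the interval. Function names are hypothetical, and the sample mean is fixed at 0.7.

```python
import math


def mass_outside(mean, sd, a):
    """P(|X| > a) for X ~ N(mean, sd^2); erfc keeps small tail masses accurate."""
    upper = 0.5 * math.erfc((a - mean) / (sd * math.sqrt(2.0)))
    lower = 0.5 * math.erfc((a + mean) / (sd * math.sqrt(2.0)))
    return upper + lower


def log_bf_interval(ybar, n, a, kappa_e):
    """log BF of the interval-alternative |delta| > a versus the interval-null |delta| < a."""
    post_var = 1.0 / (n + 1.0 / kappa_e**2)   # conjugate normal update
    post_mean = n * ybar * post_var
    prior_out = mass_outside(0.0, kappa_e, a)
    post_out = mass_outside(post_mean, math.sqrt(post_var), a)
    log_ratio_out = math.log(post_out) - math.log(prior_out)
    log_ratio_in = math.log1p(-post_out) - math.log1p(-prior_out)
    return log_ratio_out - log_ratio_in


# Sample mean inside the interval (-1, 1): evidence for the interval-null grows with n.
# Much larger n would underflow the tail mass in double precision.
for n in (10, 100, 1000, 10000):
    print(n, round(log_bf_interval(0.7, n, 1.0, 1.0), 2))
```

The printed values decrease with n, in line with the claim that the evidence for the interval-null is unbounded whenever the data-governing effect size lies in its interior.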

One disadvantage of this method is the need to specify the width of the interval (Jeffreys 1961, p. 367). This disadvantage can be mitigated by reporting a range of non-overlapping interval-null Bayes factors as a function of a; the researcher can then draw their own conclusion. The resulting range of interval-null Bayes factors also respects the uncertainty about the proper specification of the interval-null hypothesis and thereby avoids a false sense of precision (Footnote 6). A second disadvantage of the non-overlapping interval-null method is that the prior distributions for the rival interval hypotheses are of an unusual shape – a continuous distribution up to the point of truncation, where the prior mass abruptly drops to zero. It is debatable whether such artificial forms would ever result from an elicitation effort. A third disadvantage is that it seems somewhat circuitous to parry the critique “the null hypothesis is never true exactly” by adjusting both the null hypothesis and the alternative hypothesis.

The second method to specify a (partially) consistent peri-null Bayes factor is to change the point-null hypothesis to a peri-null hypothesis by supplementing rather than supplanting the spike with a distribution (Morey and Rouder 2011). In other words, the point-null hypothesis is upgraded to include a narrow distribution around the spike. This mixture distribution is generally known as a ‘spike-and-slab’ prior, but here the slab represents the peri-null hypothesis and is relatively peaked. This mixture model \( {\mathcal {H}}_{0'} \) may be called a ‘hybrid null hypothesis’ (Morey and Rouder 2011), a ‘mixture null hypothesis’, or a ‘peri-point null hypothesis’. Thus, \( {\mathcal {H}}_{0'} = \xi {\mathcal {H}}_{0} + (1-\xi ) {\mathcal {H}}_{\widetilde{0}} \), with \( \xi \in (0,1) \) the mixture weights and, say, \( \xi =\frac{1}{2} \). Because \( \xi > 0 \), the Bayes factor comparing \( {\mathcal {H}}_{0'} \) to \({\mathcal {H}}_{1} \) will be consistent when the data come from \( {\mathcal {H}}_{0} \); and because \( {\mathcal {H}}_{0'} \) also has mass away from the point under test, the presence of a tiny true nonzero effect will not lead to the certain rejection of the null hypothesis as n grows large. The data determine which of the two peri-point components receives the most weight. As before, for modest sample sizes and small \(\kappa _{0} \), the distinction between point-null, peri-null, and peri-point null is immaterial. The main drawback of the peri-point null hypothesis is that it is consistent only when the data come from \( {\mathcal {H}}_{0} \); when the data come from \( {\mathcal {H}}_{1} \) or \({\mathcal {H}}_{\widetilde{0}} \), the Bayes factor remains bounded as before (i.e., Eq. 8).
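The partial consistency can be read off from the marginal likelihood of the mixture. As a sketch in the notation used above, and assuming the mixture weight \( \xi \) is fixed in advance, the marginal likelihood under \( {\mathcal {H}}_{0'} \) is the \( \xi \)-weighted average of the marginal likelihoods of its two components, so that

\[ \mathrm{BF}_{0'1}(y^{n}) = \xi \, \mathrm{BF}_{01}(y^{n}) + (1-\xi ) \, \mathrm{BF}_{\widetilde{0}1}(y^{n}) . \]

When the data come from \( {\mathcal {H}}_{0} \), the first term grows without bound and so does \( \mathrm{BF}_{0'1} \). Under a fixed nonzero effect, the first term vanishes while the second converges to a positive constant, so that \( \mathrm{BF}_{0'1} \) approaches \( (1-\xi ) \) times the bounded peri-null limit and the evidence against \( {\mathcal {H}}_{0'} \) cannot grow indefinitely.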

The third method is to define a peri-null hypothesis whose width \(\kappa _{0} \) slowly decreases with sample size (i.e., a ‘shrinking peri-null hypothesis’). For the t-test, one can take \(\kappa _{0} = c \sigma / \sqrt{n} \) for some constant \( c > 0 \) as proposed by Berger and Delampady (1987), see also Rousseau (2007), except that their proposal involves a test of non-overlapping hypotheses. More generally, consistency follows by adapting Theorem 1, which depends on the Laplace approximation that becomes invalid if \(\kappa _{0} \) shrinks too quickly. The representation Eq. (3) shows that this consistency fix is equivalent to keeping the peri-null correction Bayes factor \(\mathrm{BF}_{\widetilde{0} 0} \) close to one regardless of the data. Note that this is attainable because, as \( \kappa _{0} \rightarrow 0 \), the peri-null and point-null become indistinguishable. In other words, the consistency of such a shrinking peri-null Bayes factor is essentially driven by the asymptotic behavior of the point-null Bayes factor; arguably, one might as well employ this point-null Bayes factor to begin with. One drawback of the shrinking peri-null hypothesis is that it is incoherent, because the prior distribution depends on the intended sample size (Footnote 7). There could nevertheless be a pragmatic argument for tailoring the definition of the peri-null to the resolving power of an experiment.
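A small numerical sketch may help to see why a shrinking width removes the bound. It again uses a simplified stand-in for the t-test, with assumptions that are ours: normal data with known standard deviation \( \sigma \), normal priors on the mean under both hypotheses, and the sample mean set to its expected value. The function name and the default values are hypothetical.

```python
import math


def log_normal_pdf(x, var):
    """Log density of N(0, var) at x."""
    return -0.5 * math.log(2.0 * math.pi * var) - 0.5 * x * x / var


def log_bf_shrinking_peri_null(ybar, n, c, kappa1, sigma=1.0):
    """log BF of mu ~ N(0, kappa1^2) versus a peri-null whose width is c * sigma / sqrt(n)."""
    kappa0 = c * sigma / math.sqrt(n)   # peri-null width shrinks with n
    se2 = sigma**2 / n                  # sampling variance of the mean
    return (log_normal_pdf(ybar, kappa1**2 + se2)
            - log_normal_pdf(ybar, kappa0**2 + se2))


# Sample mean at 0 (null) and at 0.167 (alternative), with c = 1 and kappa1 = 1.
for n in (100, 10**4, 10**6):
    print(n,
          round(log_bf_shrinking_peri_null(0.0, n, 1.0, 1.0), 2),
          round(log_bf_shrinking_peri_null(0.167, n, 1.0, 1.0), 2))
```

In this sketch the log Bayes factor decreases without bound when the sample mean is zero and increases without bound when it is not, unlike the fixed-width case.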

6 Concluding comments

The objection that “the null hypothesis is never true” may be countered by abandoning the point-null hypothesis in favor of a peri-null hypothesis. For moderate sample sizes and relatively narrow peri-nulls, this change leaves the Bayes factor relatively unaffected. For large sample sizes, however, the change exerts a profound influence and causes the Bayes factor to be inconsistent, with a limiting value given by the ratio of prior ordinates evaluated at the maximum likelihood estimate (cf. Jeffreys 1961, p. 367 and Morey and Rouder 2011, pp. 411–412). Hence, we believe that as far as Bayes factors are concerned, there is much to lose and little to gain from adopting a peri-null hypothesis in lieu of a point-null hypothesis. Here, we also derived the asymptotic sampling distribution of the peri-null Bayes factor and showed that its limiting value is essentially an upper bound under the alternative and a lower bound under the null. The asymptotic distributions also provide insight into typical values of the peri-null Bayes factor at a finite n. Inconsistency may not trouble subjective Bayesians: if the peri-null hypothesis truly reflects the belief of a subjective skeptic, and the alternative hypothesis truly reflects the belief of a subjective proponent, then the Bayes factor provides the relative predictive success for the skeptic versus the proponent, and it is irrelevant whether or not this relative success is bounded. Objective Bayesians, however, develop and apply procedures that meet various desiderata (e.g., Bayarri et al. 2012; Consonni et al. 2018), with consistency a prominent example. As indicated above, the desire for consistency was the primary motivation for the development of the Bayesian hypothesis test (Wrinch and Jeffreys 1921). For objective Bayesians then, it appears the point-null hypothesis is more than just a mathematically convenient approximation to the peri-null hypothesis (Jeffreys 1961, p. 367). The peri-point mixture model (consistent only under the point-null hypothesis) and the shrinking peri-null model (incoherent because the prior width depends on sample size) may provide acceptable compromise solutions.

Regardless of one’s opinion on the importance of consistency, it is evident that seemingly inconsequential changes in prior specification may asymptotically yield fundamentally different results. Researchers who entertain the use of peri-null hypotheses should be aware of the asymptotic consequences; in addition, it generally appears prudent to apply several tests and establish that the conclusions are relatively robust.

Notes

“A similar setup in this context was considered by Mitchell and Beauchamp (1988), who instead used “spike and slab” mixtures. An important distinction of our approach is that we do not put a probability mass on \(\beta _i = 0\).” (George and McCulloch 1993, p. 883).

We thank the first anonymous reviewer for providing this intuition.

We thank the second anonymous reviewer for bringing this reference to our attention. When comparing our Theorem 1 to that of Theorem 1 (ii) of Dawid (2011), it is worth noting that the apparent difference in the order of the remainder term vanishes once the MLE \( \hat{\theta } \) in Eq. (7) is replaced by \( \hat{\theta } = \theta + h / \sqrt{n} \), which holds in probability for large n for regular models.

For consistency to hold the standard condition is assumed that the interval-null or sliced up prior assigns positive mass to a neighborhood of \( \delta \) in the respective intervals.

We thank the first anonymous reviewer for suggesting this procedure to circumvent a definite choice for a.

We term a Bayes factor incoherent if the result depends on whether the data are analyzed all at once or batch by batch.

References

Bahadur RR, Bickel PJ (2009) An optimality property of Bayes’ test statistics. Monogr Ser 57:18–30

Bakan D (1966) The test of significance in psychological research. Psychol Bull 66:423–437

Balcetis E, Dunning D (2011) Wishful seeing: more desired objects are seen as closer. Psychol Sci 21:147–152

Bayarri MJ, Berger JO, Forte A, García-Donato G (2012) Criteria for Bayesian model choice with application to variable selection. Ann Stat 40:1550–1577

Berger JO, Delampady M (1987) Testing precise hypotheses. Stat Sci 2:317–352

Berkson J (1938) Some difficulties of interpretation encountered in the application of the chi-square test. J Am Stat Assoc 33:526–536

Chandramouli SH, Shiffrin RM (2019) Commentary on Gronau and Wagenmakers. Comput Brain Behav 2:12–21

Consonni G, Fouskakis D, Liseo B, Ntzoufras I (2018) Prior distributions for objective Bayesian analysis. Bayesian Anal 13:627–679

Cornfield J (1966) A Bayesian test of some classical hypotheses–with applications to sequential clinical trials. J Am Stat Assoc 61:577–594

Cornfield J (1969) The Bayesian outlook and its application. Biometrics 25:617–657

Dawid AP (1984) Present position and potential developments: some personal views: statistical theory: the prequential approach (with discussion). J R Stat Soc Ser A 147:278–292

Dawid AP (2011) Posterior model probabilities. In: Gabbay DM, Bandyopadhyay PS, Forster MR, Thagard P, Woods J (eds) Handbook of the philosophy of science, vol 7. Elsevier, North-Holland, pp 607–630

Dickey JM (1976) Approximate posterior distributions. J Am Stat Assoc 71:680–689

Edwards W, Lindman H, Savage LJ (1963) Bayesian statistical inference for psychological research. Psychol Rev 70:193–242

Etz A, Wagenmakers E-J (2017) J. B. S. Haldane’s contribution to the Bayes factor hypothesis test. Stat Sci 32:313–329

Gallistel CR (2009) The importance of proving the null. Psychol Rev 116:439–453

George EI, McCulloch RE (1993) Variable selection via Gibbs sampling. J Am Stat Assoc 88:881–889

Good IJ (1967) A Bayesian significance test for multinomial distributions. J Roy Stat Soc Ser B (Methodol) 29:399–431

Gronau QF, Ly A, Wagenmakers E-J (2020) Informed Bayesian \(t\)-tests. Am Stat 74:137–143

Grünwald P, de Heide R, Koolen W (2019) Safe testing. arXiv preprint arXiv:1906.07801

Hendriksen A, de Heide R, Grünwald P (2021) Optional stopping with Bayes factors: a categorization and extension of folklore results, with an application to invariant situations. Bayesian Anal 16(3):961–989

Isserlis L (1918) On a formula for the product-moment coefficient of any order of a normal frequency distribution in any number of variables. Biometrika 12:134–139

Jeffreys H (1935) Some tests of significance, treated by the theory of probability. Proc Cambridge Philos Soc 31:203–222

Jeffreys H (1936) Further significance tests. Math Proc Cambridge Philos Soc 32:416–445

Jeffreys H (1937) Scientific method, causality, and reality. Proc Aristot Soc 37:61–70

Jeffreys H (1939) Theory of probability, 1st edn. Oxford University Press, Oxford

Jeffreys H (1948) Theory of probability, 2nd edn. Oxford University Press, Oxford

Jeffreys H (1961) Theory of probability, 3rd edn. Oxford University Press, Oxford

Jeffreys H (1973) Scientific inference, 3rd edn. Cambridge University Press, Cambridge

Jeffreys H (1977) Probability theory in geophysics. J Inst Math Appl 19:87–96

Johnson VE, Rossell D (2010) On the use of non-local prior densities in Bayesian hypothesis tests. J R Stat Soc Ser B (Stat Methodol) 72:143–170

Jones LV, Tukey JW (2000) A sensible formulation of the significance test. Psychol Methods 5:411–414

Kass RE, Raftery AE (1995) Bayes factors. J Am Stat Assoc 90:773–795

Kass RE, Vaidyanathan S (1992) Approximate Bayes factors and orthogonal parameters, with application to testing equality of two binomial proportions. J R Stat Soc Ser B (Methodological) 2:129–144

Kass RE, Tierney L, Kadane JB (1990) The validity of posterior expansions based on Laplace’s method. In: Geisser S, Hodges JS, Press SJ, Zellner A (eds) Bayesian and likelihood methods in statistics and econometrics: essays in honor of George A. Barnard, vol 1. Elsevier, UK, pp 473–488

Kruschke JK, Liddell TM (2018) The Bayesian new statistics: hypothesis testing, estimation, meta-analysis, and power analysis from a Bayesian perspective. Psychon Bull Rev 25:178–206

Laplace P-S (1774/1986) Memoir on the probability of the causes of events. Stat Sci 1:364–378

Ly A, Verhagen AJ, Wagenmakers E-J (2016a) An evaluation of alternative methods for testing hypotheses, from the perspective of Harold Jeffreys. J Math Psychol 72:43–55

Ly A, Verhagen AJ, Wagenmakers E-J (2016b) Harold Jeffreys’s default Bayes factor hypothesis tests: explanation, extension, and application in psychology. J Math Psychol 72:19–32

Ly A, Marsman M, Verhagen AJ, Grasman RPPP, Wagenmakers E-J (2017) A tutorial on Fisher information. J Math Psychol 80:40–55

Ly A, Komarlu Narendra Gupta AR, Etz A, Marsman M, Gronau QF, Wagenmakers E-J (2018) Bayesian reanalyses from summary statistics and the strength of statistical evidence. Adv Methods Pract Psychol Sci 1(3):367–374. https://doi.org/10.1177/2515245918779348

Ly A, Stefan A, van Doorn J, Dablander F, van den Bergh D, Sarafoglou A, Kucharský Š, Derks K, Gronau QF, Komarlu Narendra Gupta AR, Boehm U, van Kesteren E-J, Hinne M, Matzke D, Marsman M, Wagenmakers E-J (2020) The Bayesian methodology of Sir Harold Jeffreys as a practical alternative to the p-value hypothesis test. Comput Brain Behav 3(2):153–161

McCullagh P (2018) Tensor methods in statistics. Courier Dover Publications, London

Mitchell TJ, Beauchamp JJ (1988) Bayesian variable selection in linear regression. J Am Stat Assoc 83:1023–1032

Morey RD, Rouder JN (2011) Bayes factor approaches for testing interval null hypotheses. Psychol Methods 16:406–419

Morey RD, Rouder JN (2018) BayesFactor 0.9.12-4.2. Comprehensive R Archive Network. http://cran.r-project.org/web/packages/BayesFactor/index.html

Pawel S, Held L (2015) The sceptical Bayes factor for the assessment of replication success. J R Stat Soc Ser B (Stat Methodol). https://doi.org/10.1111/rssb.12491

Rouder JN, Speckman PL, Sun D, Morey RD, Iverson G (2009) Bayesian \(t\) tests for accepting and rejecting the null hypothesis. Psychon Bull Rev 16:225–237

Rousseau J (2007) Approximating interval hypothesis: p-values and Bayes factors. In: Bernardo J, Bayarri MJ, Berger JO, Dawid AP, Heckerman D, Smith A, West M (eds) Bayesian statistics 8: proceedings of the eighth valencia international meeting June 2–6, 2006. Oxford University Press, Oxford, pp 417–452

Shafer G, Vovk V (2019) Game-theoretic foundations for probability and finance, vol 455. Wiley, London

Tukey JW (1991) The philosophy of multiple comparisons. Stat Sci 6:100–116

Tukey JW (1995) Controlling the proportion of false discoveries for multiple comparisons: Future directions. In Williams VSL, Jones LV, Olkin I, (eds.), Perspectives on statistics for educational research: proceedings of a workshop, pp 6–9, Research Triangle Park, NC. National Institute of Statistical Sciences

van der Vaart AW (1998) Asymptotic statistics. Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press, Cambridge

Verdinelli I, Wasserman L (1995) Computing Bayes factors using a generalization of the Savage-Dickey density ratio. J Am Stat Assoc 90:614–618

Wrinch D, Jeffreys H (1921) On certain fundamental principles of scientific inquiry. Phil Mag 42:369–390

Acknowledgements

This research was supported by the Netherlands Organisation for Scientific Research (NWO; grant #016.Vici.170.083). The authors would like to thank Paulo Serra and Muriel Pérez for their enlightening discussions regarding the asymptotics discussed in this paper. We are also grateful for the constructive suggestions for improvement by the editor and two anonymous reviewers.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A Laplace approximation

The Laplace approximation uses a (multivariate) Taylor expansion for which we introduce notation. Let \( h : \varTheta \subset {\mathbb {R}}^{p} \rightarrow {\mathbb {R}}\), i.e., \( h(\theta )= - \tfrac{1}{n} \sum _{i=1}^{n} \log f(y_{i} \, | \, \theta ) \), and we write \( \hat{\theta } \) for the point in its domain where h takes its global minimum. Furthermore, we use subscripts to denote partial derivatives, whereas superscripts refer to components of a vector, or more generally an array. For instance, \( \pi _{a} = \tfrac{ \partial }{\partial \theta _{a}} \pi (\hat{\theta }) \) refers to the a-th component of the vector of partial derivatives \( [D^{1} \pi (\hat{\theta })] \) of the prior \( \pi \) evaluated at the MLE. Similarly, we write \( h_{abc} = \tfrac{\partial ^{3}}{ \partial \theta _{a} \partial \theta _{b} \partial \theta _{c} } h(\hat{\theta })\) for the abc-th component of the three-dimensional array \([D^{3} h(\hat{\theta }) ] \). Hence, the number of indices in the subscript corresponds to the number of derivatives of h and the indices, each in \( 1, 2, \ldots , p \), provide the location of the component.

We use superscripts to refer to the component of a vector. For instance, \( \tilde{q}^{a} = (\theta ^{a} - \hat{\theta }^{a}) \) represents the a-th component of the difference vector \( \tilde{q} = \theta - \hat{\theta } \), thus, equivalently \( \tilde{q}^{a} := e_{a}^{T} \tilde{q} \), where \( e_{a} \) is the unit (column) vector with entry 1 at index a and zero elsewhere. Similarly, \( \varsigma ^{abcd} \) denotes the abcd-th component of a four-dimensional array.

Moreover, we employ Einstein’s summation convention and suppress the sum whenever an index occurs in both the sub and superscript. For instance,

The former defines an inner product between the gradient of h and deviations \( \tilde{q} \), whereas the \( h_{abc} = [D^{3} h]_{abc} \) refers to the a-th row, b-th column, and c-th depth of the three-dimensional array consisting of partial derivatives of h of order three. Lastly, we use the shorthand notation

to denote the nested sum which is needed for Cauchy products \((h_{a} \tilde{q}^{a}) (h_{b} \tilde{q}^{b}) \). For instance, with \(d =2 \)

which is equivalent to

With these notational conventions, a multivariate Taylor approximation is denoted as

and note the similarity to its one-dimensional counterpart.
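As an illustration of these conventions, and assuming they are applied in the standard way, contractions with one and with three indices expand to

\[ h_{a} \tilde{q}^{a} = \sum _{a=1}^{p} h_{a} \tilde{q}^{a}, \qquad h_{abc} \tilde{q}^{a} \tilde{q}^{b} \tilde{q}^{c} = \sum _{a=1}^{p} \sum _{b=1}^{p} \sum _{c=1}^{p} h_{abc} \tilde{q}^{a} \tilde{q}^{b} \tilde{q}^{c}, \]

and a third-order Taylor expansion of h around \( \hat{\theta } \) reads

\[ h(\theta ) \approx h(\hat{\theta }) + h_{a} \tilde{q}^{a} + \tfrac{1}{2} h_{ab} \tilde{q}^{a} \tilde{q}^{b} + \tfrac{1}{6} h_{abc} \tilde{q}^{a} \tilde{q}^{b} \tilde{q}^{c}, \]

where the linear term vanishes whenever \( \hat{\theta } \) is an interior minimum of h, since then \( h_{a} = 0 \) for every a.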

Theorem 3

(Laplace expansion with error term) Let \( {\mathcal {P}}_{\varTheta } \) be a collection of density functions that are six times continuously differentiable in \( \theta \in \varTheta \subset {\mathbb {R}}^{p} \), and \( \pi (\theta ) \) a prior density that is four times continuously differentiable. Let \( Y \overset{\mathrm{iid}}{\sim }f(y \, | \, \theta ) \) for certain \( \theta \), then with \( \hat{\theta } \) the MLE

where \( | \cdot | \) denotes the determinant and

where \( \varsigma ^{ab} \), \( \varsigma ^{abcd} \), \(\varsigma ^{abcdef}\), \( \varsigma ^{abcdefgh} \), \(\varsigma ^{abcdefghij} \), and \( \varsigma ^{abcdefghijkl} \) represent the ab-th component of the second, the abcd-th component of the fourth, the abcdef-th component of the sixth moment, the abcdefgh-th component of the eighth moment, the abcdefghij-th component of the tenth moment, and the abcdefghijkl-th component of the twelfth moment, of the p dimensional random vector \( Q \sim {\mathcal {N}}_{p} ( 0, \hat{I}(\hat{\theta })^{-1}) \), respectively. \( \diamond \)

Proof

The proof is based on (i) Taylor-expanding the exponential of the log-likelihood of order five around \( \hat{\theta } \), (ii) the definition of the exponential as a series and Taylor-expanding \( \pi \) to third order at the same point \( \hat{\theta } \), and (iii) properties of the normal distribution.

Step (i) Let \( h(\theta ) = - \tfrac{1}{n} \sum _{i=1}^{n} \log f(y_{i} \, | \, \theta ) \), as in the notation introduced above; then, since \( h \in C^{6}(\varTheta ) \), there exists \( \delta > 0 \) such that in a ball \( B_{\hat{\theta }}(\delta ) \subset {\mathbb {R}}^{p} \) of radius \( \delta \) centered at \( \hat{\theta } \) the negative average log-likelihood \( h(\theta ) \) is well-approximated by a Taylor expansion of order 5. This combined with \( \hat{\theta } \) being the MLE and the notation \( \tilde{q} = \theta - \hat{\theta } \) yields

where

is the bounded remainder term since \( h \in C^{6}(\varTheta ) \). The replacement of \( \varTheta \) by \( B_{\hat{\theta }}(\delta ) \) in the integral is justified if the mass is concentrated at \(\hat{\theta }\), thus, whenever the integral with respect to the first-order term falls off quadratically, that is, if

which is the case when the likelihood is unimodal. When it is not unimodal, but \( \hat{\theta } \) is a global maximum, the condition amounts to the requirement that the contribution of the other maxima is not too big.

Step (ii) After centering the integral at \( \hat{\theta } \) we scale with respect to \( \sqrt{n} \), that is, we apply the change of variable \( q = \sqrt{n} \tilde{q} \), thus, \( \int n^{-p/2} \mathrm{d}q = \int \mathrm{d}\tilde{q} \) and therefore

where \( \tilde{\varphi } \) is the density of a multivariate normal distribution centered at 0 and covariance matrix \( \varSigma = \hat{I}^{-1}(\hat{\theta }) \), and where \( \tilde{\pi }(q) \) is the Taylor approximation of \( \pi \) at the MLE, that is,

and where the remainder term is now

To exploit the properties of Gaussian integrals, we replace the integration domain \( B_{\hat{\theta }}(\sqrt{n} \delta ) \) by \( {\mathbb {R}}^{p} \), which is justified when n is large, because the tails of a normal density fall off exponentially.

By definition of \( e^{-R(q)} \) as a series and without the exponential approximation error

From here onwards, we focus on the integral Eq. (40), which after some straightforward but tedious computations can be shown to be of the form

where the \( A_{j} \) terms are functions of q and \( \hat{\theta } \) defined by the series representation of \( e^{-R(q)} \) and \( \tilde{\pi }(q) \).

Step (iii) The terms \( A_{j} \) are given below. Of the following results, only the exact values of \( A_{0}, A_{2} \) and \( A_{4} \) matter; what matters for \( A_{1} \) and \( A_{3} \) is that they only involve odd powers of q:

Since for k odd \( A_{k} \) only involves odd powers of q, we conclude that their integral with respect to \( \tilde{\varphi }(q)\) vanishes. Hence,

where \( E[A_{2}] \) and \( E[A_{4}] \) are expectations with respect to \( Q \sim {\mathcal {N}}( 0, \hat{I}(\hat{\theta })^{-1}) \). This implies that the order \( n^{-1} \) and \( n^{-2} \) terms in the assertion are \(C^{(1)}(\hat{\theta }) = E[A_{2}]/\pi (\hat{\theta }) \) and \(C^{(2)}(\hat{\theta }) = E[A_{4}]/\pi (\hat{\theta }) \). \(\square \)
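To see the expansion at work in a case where everything is available in closed form, consider the following sketch. It assumes a model that is simpler than the general setting of the theorem: unit-variance normal data with unknown mean and a \( {\mathcal {N}}(0, \kappa ^{2}) \) prior, so that the log-likelihood is exactly quadratic, the Fisher information equals one, and the only corrections stem from the curvature of the prior. In this special case the exact marginal likelihood divided by its leading-order Laplace approximation equals the ratio of two normal densities at the sample mean, and the order \( n^{-1} \) coefficient reduces to \( \pi ''(\hat{\theta }) / (2 \pi (\hat{\theta })) \). The function names are hypothetical.

```python
import math


def normal_pdf(x, var):
    """Density of N(0, var) at x."""
    return math.exp(-0.5 * x * x / var) / math.sqrt(2.0 * math.pi * var)


def exact_over_laplace(ybar, n, kappa):
    """Exact marginal likelihood divided by the leading-order Laplace approximation."""
    return normal_pdf(ybar, kappa**2 + 1.0 / n) / normal_pdf(ybar, kappa**2)


def first_order_coefficient(ybar, kappa):
    """pi''(ybar) / (2 * pi(ybar)) for a N(0, kappa^2) prior and unit Fisher information."""
    return (ybar**2 - kappa**2) / (2.0 * kappa**4)


# n * (ratio - 1) should approach the first-order coefficient as n grows.
for n in (10, 1000, 10**6):
    print(n, n * (exact_over_laplace(0.3, n, 1.0) - 1.0), first_order_coefficient(0.3, 1.0))
```

For a narrow prior the coefficient is large and negative; with \( \kappa = 0.05 \) and \( \hat{\theta } = 0 \) the sketch gives \( -200 \), which is of the same size as the value \( -199.83 \) reported in Section 4 for the t-test, although the two models differ.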

The components of higher moments can be expressed in terms of the covariances \( \varsigma ^{ab} = \mathrm{Cov} ( Q^{a}, Q^{b}) \) using Isserlis’ formula (Isserlis 1918; McCullagh 2018). For moments \(\varsigma ^{a_{1} \cdots a_{w}}\), that is, a component of the wth moment of Q with \(w = 2 v \) even, the following holds

where \(P_{w}^{2} \) is the collection of all partitions of the w indices into v pairs. For instance, for \( w=4 \), \( \varsigma ^{abcd} \) is a sum of products of 2 pairs, for \( w=6 \), \(\varsigma ^{abcdef} \) is a sum of products of 3 pairs, and so forth. More specifically,

where all indices \( a,b,c,d,e,f =1,2, \ldots , p \). The expressions for \( \varsigma ^{abcdefgh} \), \( \varsigma ^{abcdefghij} \), and \(\varsigma ^{abcdefghijkl} \) are sums of \( 105=3 \times 5 \times 7\), \( 945=3 \times 5 \times 7 \times 9 \), and \( 10,395=3 \times 5 \times 7 \times 9 \times 11 \) terms, respectively, and due to space restrictions, their exact forms are not displayed here.
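Since the higher-order expressions are too long to display, they can be generated mechanically. The following sketch enumerates all pairings of the index positions and sums the corresponding products of covariances, as in Isserlis' formula; the function names are ours, and the covariance matrix is assumed to be given as a nested list with 0-based component indices.

```python
def pairings(positions):
    """Yield every partition of `positions` (a list of even length) into unordered pairs."""
    if not positions:
        yield []
        return
    first, rest = positions[0], positions[1:]
    for k, partner in enumerate(rest):
        remaining = rest[:k] + rest[k + 1:]
        for tail in pairings(remaining):
            yield [(first, partner)] + tail


def isserlis_moment(cov, indices):
    """E[Q^{a_1} ... Q^{a_w}] for Q ~ N(0, cov), where `indices` lists the a_j."""
    if len(indices) % 2 == 1:
        return 0.0  # odd moments of a centered normal vanish
    total = 0.0
    for pairing in pairings(list(range(len(indices)))):
        term = 1.0
        for i, j in pairing:
            term *= cov[indices[i]][indices[j]]
        total += term
    return total


# Number of terms in the sum for w = 4, 6, 8, 10, 12.
for w in (4, 6, 8, 10, 12):
    print(w, sum(1 for _ in pairings(list(range(w)))))
```

The term counts produced by the loop can be compared with the products \( 3 \), \( 3 \times 5 \), \( 3 \times 5 \times 7 \), and so on, stated above.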

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ly, A., Wagenmakers, EJ. Bayes factors for peri-null hypotheses. TEST 31, 1121–1142 (2022). https://doi.org/10.1007/s11749-022-00819-w

DOI: https://doi.org/10.1007/s11749-022-00819-w