Abstract

Setting an optimal image exposure is crucial for acquiring dense point clouds with 3D active optical sensor systems such as structured light sensors (SLSs) and active stereo sensors. One of the most common and straightforward ways to optimize the image exposure is to adjust the camera’s exposure time. However, optimizing the image exposure alone is ineffective for acquiring the surfaces of large-scale objects with a complex topology if a spatial understanding of the scene is neglected. Hence, the present paper proposes a data-driven approach using two Gaussian process (GP) regression models to select a proper exposure time considering the nonlinear correlations between the image exposure and the spatial relationships of the scene. To model these correlations, our study first introduces the generic synthesization of seven input and two target variables. Then, based on these inputs, two independent GPs are designed: one for predicting the measurement quality and one for estimating the exposure time. The performance and generalizability of both models are thoroughly evaluated using an SLS and an active stereo sensor. The evaluation demonstrated that the point cloud quality models adequately matched observations with an \(R^{2}\) exceeding 90%. Specifically, the models predicted point cloud quality with a root mean square error (RMSE) of 10%. Additionally, the assessment of the exposure time models showed a model fit with an \(R^{2}\) above 97%. The exposure time prediction accuracy, as evidenced by the RMSE values, was within 10% of the corresponding exposure time range for each sensor. The present research shows the potential and effectiveness of data-driven models for completely automating the assessment of point cloud quality and the selection of exposure times.

1 Introduction

Fig. 1 Measurement of a car door using an SLS: (a) camera image and (b) corresponding 3D point cloud. The 2D image provides a qualitative representation of the scene illumination. The red pixels indicate an oversaturation of the camera, which impedes the acquisition of the surface points of the features in the point cloud (color figure online)

The increasing performance of 2D and 3D sensors, combined with the falling prices of electronic components over the last two decades, has enabled researchers and industry to develop cost-effective solutions [1] for improving vision tasks, such as image-based quality inspection, digitization, reverse engineering, object detection, surveillance, and navigation. In particular, active 3D imaging sensors, such as structured light sensors (SLSs), comprising at least one camera and a light projecting source, have been established as the leading acquisition technology for high-precision vision tasks due to their accuracy and large working volumes [2]. By combining optical triangulation and pattern illumination, active sensors can generate an accurate 3D sampled representation of a probing object’s surface, known as a point cloud. The generated point clouds can then be interpreted and processed to solve different vision tasks.

However, obtaining a robust and reliable sampling of an object’s surface, i.e., a high-density point cloud, requires an adequate camera exposure to be selected beforehand. Correctly setting the camera exposure of imaging sensors is a non-trivial task that requires the consideration of several influencing variables, e.g., camera parameters (exposure time, lens aperture, sensitivity), projecting device parameters (light intensity, pattern) [3], and the surface material of the probing object [4]. Figure 1 exemplifies the complexity of this task for measuring highly reflective large-scale objects, such as a car door, using an SLS. The camera image (left) shows that the scene illumination is mostly inhomogeneous and that a globally optimized exposure time is unlikely to yield a high-density point cloud (right) that captures all features. Furthermore, this example illustrates the importance of considering the spatial relationships between the features and the sensor in order to achieve an appropriate image exposure.

Due to the many factors influencing scene illumination, selecting a proper exposure time is still a challenging task that is generally performed manually. This task can take up to several hours and strongly depends on the vision system characteristics, the object’s geometric complexity and size, and the user’s expertise. Therefore, automated optimization of image exposure to ensure dense point clouds is a key step toward effective and resource-efficient performance of vision tasks using active 3D optical sensors. For this reason, the present study proposes a data-driven, supervised learning approach using two independent Gaussian process (GP) regression models for predicting the local quality of a point cloud and for estimating the required camera exposure time. The introduced models were developed in the context of coordinate metrology applications, where the robust acquisition of individual features (related terms: inspection feature, artifact, region, area, or point of interest) has a higher priority than the acquisition of the entire object’s surface. Consequently, the current research follows a local feature-driven optimization rather than a global approach. Both proposed data-driven models are trained on the camera image’s local light intensity around a feature and on the spatial relationships between the features and the sensor. The models are iteratively developed and analyzed to gain a better general understanding of the correlations between the model outputs and the spatial inputs. Our work aims at providing users and researchers with a novel method to assess and automate the acquisition of robust point clouds without requiring any hardware or software alteration of the vision system.

1.1 Related work

Effective control of the camera exposure for measuring highly reflective surfaces is a well-known challenge faced by any active sensor. Hence, several researchers have analyzed this problem over the last three decades and proposed different solutions comprising hardware- and software-based techniques to address it.

One of the most common and pragmatic methods to obtain uniform reflectivity is matte coating the surface of the measuring object [5]. Although this technique is still considered to be highly effective for eliminating the overexposure of the camera image, this approach also has disadvantages, e.g., not all workpieces can be coated, the process is difficult to automate, and the measurement accuracy is affected by the powder thickness and its distribution homogeneity [6].

In addition, there are a handful of methods that combine multiple camera images with different exposures to optimize image intensity. Such approaches strive for targeted exposure control of individual pixels and fall under the category of High Dynamic Range (HDR). The targeted spatial image exposure can be achieved using different techniques, e.g., multiple exposure times [7], modulation of the fringe projector [3], pixel-wise adaptation of the fringe projection [8], or hybrid approaches using external filters [9] and multiple cameras [10] or projectors [11]. Moreover, [12] proposed a software-based solution that generates synthetic fringe images without considering any system adaptation. For an overview of the various HDR approaches and their applications, we refer the reader to the review papers [13, 14].

Although HDR techniques offer robust solutions, such methods are impractical for commercial or industrial active systems that do not allow the required hardware or software modifications. Additionally, many of the HDR techniques require an a priori image analysis, which affects the resource efficiency (computation and time) of the whole process [15]. Hence, [15] proposed a global exposure time optimization using a single acquired fringe image and an approximated linear model of the exposure time and the image intensity. Although the study demonstrated the validity and effectiveness of an approximated model, the spatial relationships between camera and object were not further investigated. A more analytical method was used by [16], who proposed an offline planning system for predicting a valid exposure time based on the reflectance simulation model framework of [4] and a 3D surface model of the probing object. The study of [16] utilized the reflectance model to compute a globally optimized exposure time for a given ideal image intensity. Their study showed that the expected surface area could be estimated with a deviation of 20% to predict the quality of the resulting point cloud. Furthermore, [17] argue for the importance and relevance of quantifying the quality of point clouds. To this end, the authors introduce a comprehensive set of performance indicators to assess, among other metrics, the quality of point clouds. However, their work focuses on post-acquisition evaluation rather than on deriving optimized parameters to ensure high-density point clouds.

1.2 Approach

Fig. 2 Overview of the proposed approach: two data-driven models are used to predict the measurement quality and subsequently determine a suitable exposure time. The quality model (top) predicts the quantitative point cloud quality for a particular feature to evaluate the measurement’s validity. The second model (bottom) calculates the exposure time necessary to achieve a given local image intensity for the same feature

Based on the literature review, the automated optimization of exposure times for active 3D sensors remains an open issue within academia and industry. Moreover, the effective and efficient selection of exposure times can be regarded as a multi-dimensional problem that requires an imaging and spatial understanding of the scene to be acquired, taking into account a variety of influencing factors. For this reason, a human operator is still required to set the camera exposure adequately within complex vision applications such as the one depicted in Fig. 1. Motivated by the idea that an operator is capable of effectively adjusting the camera’s exposure time where other approaches fail, our study first considers an analysis of the operator’s behavior based on an observation-action-assessment procedure:

- Observation (2D image exposure and spatial relations): Considering an initial exposure time, the operator observes the image intensity, concentrating on the features to be acquired.

- Action (exposure time): Based on the observed image and lighting conditions, the operator adapts the exposure time.

- Assessment (point cloud): The operator evaluates the measurement based on the resulting point cloud. The previous steps are repeated if the required quality has not been reached.

Towards replicating the cognitive visual and spatial capabilities and expertise of a human operator, our study proposes a data-driven approach using \(\text {GP}\)s trained on 2D images, point clouds, and spatial data to predict the expected measurement quality and estimate an adequate exposure time for a given feature. To this end, our study considers two \(\text {GP}\) models, one for assessing the quality of the point cloud (\({}^{q}{\mathcal{G}\mathcal{P}}\)) and one for estimating the exposure time (\({}^{e}{\mathcal{G}\mathcal{P}}\)) given a local image intensity and the spatial relationships between the sensor and a given feature. Since the proposed approach does not globally optimize measurements, a local image intensity value is used to assess the quality of the point cloud or to predict an exposure time matching it. The local image intensity represents the illumination around a feature and serves either as an input for the point cloud assessment or as the nominal value for the prediction of the exposure time.

- \({}^{q}{\mathcal{G}\mathcal{P}}\): The present research assumes that the quality of the point cloud can be predicted (operator assessment) using a local image light intensity and the spatial relationships between the sensor and the \({\text {features}}\) (operator observation). Hence, in a first step, this study considers a \(\text {GP}\) model to predict the local point cloud quality corresponding to a \(\text {feature}\).

- \({}^{e}{\mathcal{G}\mathcal{P}}\): On the assumption that the required local image exposure matching an expected point cloud quality can be found, a second \(\text {GP}\) model is used to estimate (operator action) the exposure time also considering the spatial relationships between the sensor and a \(\text {feature}\).

A simplified overview of the proposed models, inputs, and outputs is shown in Fig. 2. In addition, the proposed approach assumes that the overall image exposure can be well controlled solely by the camera exposure time if the spatial relationships between the sensor and the measurement object are known and a constant scene illumination can be guaranteed during each measurement. This condition is mostly met for active sensors, where the projector can be considered the dominant light source of the scene. Therefore, the influence of external light sources can usually be neglected [15].

1.3 Contributions

Our work presents a novel and data-based framework, which proposes a systematic and incremental design of two \(\text {GP}\) regression models for assessing the point cloud quality and estimating the exposure time for 3D active sensors. The main contributions of the study are the following:

- Synthesization of generic input and output variables for characterizing the point cloud quality, imaging, and spatial system state.

- Four-dimensional (4D) \(\text {GP}\) kernel to predict the point cloud quality (number of points) around a \(\text {feature}\) based on a given local image intensity and its relative position (3D) to the sensor.

- Six-dimensional (6D) \(\text {GP}\) kernel to estimate the required exposure time for a feature’s nominal local image intensity considering its relative position (3D) and rotation (2D) to the sensor.

1.4 Outline

This paper first introduces in Sect. 2 the experimental setup for training the proposed regression models and presents the fundamentals of GPs. Then, the core contribution of this paper, i.e., the development and evaluation of both regression models as well as the design of the model inputs and outputs, is discussed in detail in Sect. 3. A thorough validation based on experiments with different lighting conditions, surface finishes and the generalization of the framework to other sensors is presented in Sect. 4.

2 Experimental setup and Gaussian process models

2.1 Experimental setup for data collection

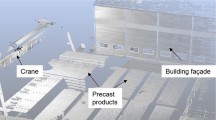

The integrated industrial robot vision system AIBox from ZEISS was utilized to acquire all training and evaluation datasets of the present study. The AIBox is an industrial robot-based measurement cell comprising an SLS (ZEISS Comet PRO AE) used for different vision tasks, e.g., dimensional metrology, digitization, and reverse engineering. The SLS is attached to a six-axis industrial robot (Fanuc M20ia) to enable a free positioning of the sensor and hence the full automation of the measurement process. Figure 3 shows the core elements of the AIBox, which are described in more detail in the following subsections.

Fig. 3 Overview of the core components of the measurement cell AIBox: car door as probing object, SLS ZEISS Comet PRO AE, and six-axis industrial robot for automated sensor positioning. In addition, an alternative active stereo system, rc_visard 65 and dot projector from Roboception, was attached to the SLS for evaluating the transferability of the models

2.1.1 Object

A sheet metal car door with different surface finishes as shown in Fig. 4 was used for acquiring training and evaluation datasets. Car doors are well-known reference objects in industry for the evaluation of measurement systems, since they exhibit a high feature density and variability (e.g., edges, pockets, holes, slots, and spheres) and different surface characteristics (e.g., finishes, topological complexity).

2.1.2 Features

The car door contains up to 500 features. Our study focuses exclusively on the acquisition and evaluation of hole features. In coordinate metrology, holes are challenging to evaluate since they require an assessment of the surface around the perforation. Given their inherently hollow topology, considering this property within the data processing pipeline facilitates a seamless transferability of this approach to the assessment of similar features, such as pockets and slots. The pipeline should also be easily applicable to other geometric features, such as points, spheres, and squares. Additionally, the car door has a high variability (sizes, finishes, and locations) of hole features, which is useful for a comprehensive evaluation of the models. Fig. 4 visualizes some exemplary circular features used within this study. It is assumed that the 3D position and 3D orientation of all \({\text {features}}\) are known with respect to a reference coordinate system.

2.1.3 Sensors

The SLS ZEISS Comet PRO AE consists of a monochrome camera and a blue-light digital fringe projector. The camera integrates a band-pass filter adjusted to the digital projector. Additionally, the SLS has a photogrammetric camera for high-accuracy image registration.

The sensor is controlled by the proprietary software ZEISS colin3D, which allows the setting of the camera exposure time. Furthermore, the software has an automatic exposure function and a proprietary HDR mode, which were deactivated for the acquisition phase. A parameterization of further camera settings, such as light sensitivity, lens aperture, or any light projector configuration is not allowed.

To evaluate the transferability of our approach to other sensors and acquisition principles (cf. Sect. 4.2), a stereo sensor (rc_visard 65) and a dot projector from Roboception were attached to the SLS (see Fig. 3). The imaging parameters of both sensors are given in Table 7.

2.1.4 Positioning devices

The AIBox integrates a six-axis industrial robot to position the SLS in six degrees of freedom. In addition, a rotary table enables the acquisition of both the inner and outer sides of the car door.

2.1.5 Measuring environment

The whole system is enclosed by a cell box with tinted windows to minimize the influence of external lighting sources. The measurement cell is mounted in our laboratory, which resembles a real production hall and its lighting conditions.

2.2 Gaussian processes

The present study proposes two GP regression models for predicting the point cloud quality and the exposure time. This subsection provides a brief overview of the mathematical foundations of GPs and their general characteristics.

2.2.1 Fundamentals

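Formally, a GP is a stochastic process, i.e., a collection of random variables over a time or space domain, any finite subset of which follows a multivariate Gaussian distribution. According to [18], a GP can also be seen as a probability distribution over an infinite number of functions, describing the output \(g({\textbf{x}})\) for a particular input value \({\textbf{x}}\). A GP is fully specified by its mean function \(m({\textbf{x}})\) and its kernel \(k({\textbf{x}},{\textbf{x}}^{\prime })\), which represents the covariance between the function values at its inputs:

$$\begin{aligned} m({\textbf{x}})&={\mathbb {E}}\left[ g({\textbf{x}})\right] ,\\ k({\textbf{x}},{\textbf{x}}^{\prime })&={\mathbb {E}}\left[ \left( g({\textbf{x}})-m({\textbf{x}})\right) \left( g({\textbf{x}}^{\prime })-m({\textbf{x}}^{\prime })\right) \right] . \end{aligned}$$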

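If a function \(g({\textbf{x}})\) follows a GP, this can be denoted as

$$g({\textbf{x}})\sim {\mathcal{G}\mathcal{P}}\left( m({\textbf{x}}),k\left( {\textbf{x}},{\textbf{x}}^{\prime }\right) \right) .$$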

Usually, the mean function corresponds to a zero mean function \(m({\textbf{x}})={\textbf{0}}\) and the kernel \(k\left( {\textbf{x}},{\textbf{x}}^{\prime }\right)\) remains as the key element to determine the values of \(g({\textbf{x}})\).
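The defining property above, i.e., that any finite set of inputs yields a multivariate Gaussian, can be illustrated with a minimal NumPy sketch that draws functions from a zero-mean GP prior. The squared exponential kernel, its hyperparameters, and the helper names `se_kernel` and `sample_gp_prior` are assumptions chosen for illustration only and are not taken from the models of this study.

```python
import numpy as np


def se_kernel(xa, xb, length_scale=0.3, variance=1.0):
    """Squared exponential covariance between two sets of 1D inputs."""
    sq_dist = (xa[:, None] - xb[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)


def sample_gp_prior(x, n_samples=3, seed=0, jitter=1e-6):
    """Draw function values g(x) from a zero-mean GP prior at the inputs x."""
    rng = np.random.default_rng(seed)
    # Any finite set of inputs follows a multivariate Gaussian N(0, K).
    cov = se_kernel(x, x) + jitter * np.eye(len(x))  # jitter keeps K positive definite
    chol = np.linalg.cholesky(cov)
    z = rng.standard_normal((len(x), n_samples))
    return (chol @ z).T  # shape: (n_samples, len(x))


x = np.linspace(0.0, 1.0, 50)
samples = sample_gp_prior(x)
print(samples.shape)  # (3, 50)
```

Each row of `samples` is one function drawn from the prior; with a zero mean function, the shape of these draws is governed entirely by the kernel.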

2.2.2 Kernel design

The kernel function \(k\left( {\textbf{x}},{\textbf{x}}^{\prime }\right)\) defines the covariance between a pair of function values of \(f(x)\). This characteristic is considered one of the principal strengths of kernel methods, such as GPs, since the kernel function can be designed using explicit prior knowledge and assumptions about the unknown function \(f(x)\). Therefore, selecting or designing an appropriate kernel is crucial for effective training, as it influences how random variables and observations correlate and ultimately impact the model’s performance. The mathematical definition of some exemplary kernels is given in Appendix 1.

Moreover, Fig. 5 illustrates the impact of the kernel using two exemplary GP regression models trained on a dataset \({\mathcal {D}}=\left\{ \left( {\textbf{x}}_{i},y_{i}\right) \right\} _{i=1}^{N}\) with \(N=7\) observations. The models aim to predict the function \(f(x)=x\sin (2\pi x)\) using a squared exponential (SE) kernel (left of Fig. 5) and a rational quadratic (RQ) kernel (right of Fig. 5). The trends emphasize the significance of kernel design: although both models reproduce the observations well, the SE kernel provides better predictions within interpolation ranges. In addition, Fig. 5 depicts a further characteristic of GPs, namely that model predictions are intrinsically associated with an uncertainty. This is visible in the 10% confidence interval, which widens beyond the training regions in both cases. This characteristic is of great advantage in industrial applications, as it also allows the reliability of a prediction to be evaluated and acted upon accordingly.
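The comparison described above can be reproduced in spirit with a small NumPy sketch of GP regression on \(f(x)=x\sin (2\pi x)\) with \(N=7\) observations. The kernel hyperparameters, the noise term, and the choice of equally spaced training inputs are assumptions made for illustration; they are not the settings behind Fig. 5, and no hyperparameter optimization is performed.

```python
import numpy as np


def se_kernel(xa, xb, length_scale=0.2, variance=1.0):
    """Squared exponential (SE) kernel for 1D inputs."""
    sq_dist = (xa[:, None] - xb[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)


def rq_kernel(xa, xb, length_scale=0.2, alpha=1.0, variance=1.0):
    """Rational quadratic (RQ) kernel for 1D inputs."""
    sq_dist = (xa[:, None] - xb[None, :]) ** 2
    return variance * (1.0 + sq_dist / (2.0 * alpha * length_scale**2)) ** (-alpha)


def gp_predict(kernel, x_train, y_train, x_query, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP."""
    k_tt = kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_tq = kernel(x_train, x_query)
    chol = np.linalg.cholesky(k_tt)
    weights = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_tq.T @ weights
    v = np.linalg.solve(chol, k_tq)
    prior_var = np.diag(kernel(x_query, x_query))
    var = np.clip(prior_var - np.sum(v**2, axis=0), 0.0, None)
    return mean, np.sqrt(var)


def target(x):
    return x * np.sin(2.0 * np.pi * x)


x_train = np.linspace(0.0, 1.0, 7)  # N = 7 observations (assumed equally spaced)
y_train = target(x_train)
x_query = np.array([0.5, 1.5])      # a training input and a point outside the data

for name, kernel in (("SE", se_kernel), ("RQ", rq_kernel)):
    mean, std = gp_predict(kernel, x_train, y_train, x_query)
    print(f"{name}: std at x=0.5 -> {std[0]:.4f}, std at x=1.5 -> {std[1]:.4f}")
```

For both kernels, the predictive standard deviation is close to zero at a training input and grows at the query point outside the training region, which is the uncertainty behavior discussed above.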

3 Model development

This section describes the development of both GP models. First, Sect. 3.1 introduces the characterization of the model inputs and outputs. Then, an overview of the data used for training and evaluation is given in Sect. 3.2.2. Section 3.3 outlines the development of the \(\text {GP}\) model for predicting the point cloud quality and Sect. 3.4 presents the development of the \(\text {GP}\) model for the estimation of the exposure time. Following an incremental and systematic model design, the models are evaluated and compared with each other to find the best balance between complexity and performance. Fig. 6 provides an overview of the generic workflow followed in this research to develop, train, and evaluate the models.

3.1 Model input and target variables

The selection and synthesis of adequate model input and target variables are considered fundamental steps within the design and employment of data-based models. Given the proposed goals of our study, the synthesized variables should be able to describe the correlations between the local image exposure of a specific area corresponding to a feature and its spatial relationships to the sensor for predicting the resulting point cloud quality and the corresponding exposure time.

This section introduces the design of the target and input variables using linear algebra, geometric analysis, and machine vision algorithms. The characterization of the inputs aims at a high generalization and an automated dataset generation. An overview of the model target variables and the synthesized input variables is given in Table 1.

3.1.1 Target variables

This subsection introduces the synthesization of the target variables, i.e., the point cloud quality and the exposure time, for the quality and the exposure time prediction models.

Point Cloud Quality

Our study introduces a quantitative metric to express the acquisition quality of a feature. This metric is expressed by the absolute number of points \(n_{points}\) in an acquired point cloud that correspond to a given area of a feature.

This area is obtained by intersecting the point cloud with a synthetic hollow cylinder, which is built from the geometry, position, and orientation of the feature. Given a circular feature with a radius \(r_{f}\), a hollow cylinder \(c_{cyl}\) with an arbitrary height \(h_{cyl}\), an inner radius \({r_{i}=r_{f}+r_{tol,i}}\), and an outer radius \({r_{o}=r_{f}+r_{tol,o}}\) is defined. The parameters \(h_{cyl}\), \(r_{tol,i}\), and \(r_{tol,o}\) are tolerance variables that must be selected based on the specific application, system, or standards. \(n_{points}\) then represents the subset of points of the point cloud \(n_{pcl}\) that lie within the hollow cylinder, i.e., the intersection of these elements:

$$n_{points}=n_{pcl}\cap c_{cyl}$$

The position of the cylinder corresponds to the feature’s origin and its orientation to the feature’s normal vector. Figure 7 shows a simplified 2D graphical representation of the circular feature, the hollow cylinder, and the \(n_{points}\). The hollow cylinders and the resulting intersected points of some exemplary features of an exemplary real measurement are shown in Fig. 8.
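The intersection described above can be sketched with plain NumPy as a point-in-hollow-cylinder test. This is an illustrative alternative under stated assumptions, not the pipeline of this study: the function name `points_in_hollow_cylinder` is hypothetical, and the cylinder is assumed to extend by half its height to each side of the feature plane.

```python
import numpy as np


def points_in_hollow_cylinder(points, origin, normal, r_inner, r_outer, height):
    """Boolean mask of the points lying inside a hollow cylinder.

    The cylinder is centered at the feature origin and its axis follows the
    feature normal. It is assumed to extend height/2 to each side of the plane.
    """
    normal = np.asarray(normal, dtype=float)
    normal = normal / np.linalg.norm(normal)
    rel = np.asarray(points, dtype=float) - np.asarray(origin, dtype=float)
    axial = rel @ normal                                   # distance along the axis
    radial = np.linalg.norm(rel - np.outer(axial, normal), axis=1)
    return (np.abs(axial) <= height / 2.0) & (radial >= r_inner) & (radial <= r_outer)


# Hypothetical feature: radius 10 mm, tolerances 3 mm and 5 mm, height 20 mm.
r_f, r_tol_i, r_tol_o, h_cyl = 10.0, 3.0, 5.0, 20.0
cloud = np.array([
    [11.0, 0.0, 0.0],   # inside the inner radius -> rejected
    [14.0, 0.0, 0.0],   # within the ring -> counted
    [14.0, 0.0, 15.0],  # too far along the axis -> rejected
    [0.0, 14.0, -5.0],  # within the ring -> counted
    [20.0, 0.0, 0.0],   # outside the outer radius -> rejected
])
inside = points_in_hollow_cylinder(
    cloud, origin=[0.0, 0.0, 0.0], normal=[0.0, 0.0, 1.0],
    r_inner=r_f + r_tol_i, r_outer=r_f + r_tol_o, height=h_cyl,
)
n_points = int(inside.sum())
print(n_points)  # 2
```

A mesh-based intersection, as used in the actual pipeline, would follow the same logic but operate on a cylinder mesh instead of analytic distances.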

Normalized Point Cloud Quality

Since the number of points theoretically depends on the feature’s radius and the acquisition plane (the point density depends on the measurement depth), a further post-processing step must be considered to allow a better generalization of the local point cloud quality. To this end, it is assumed that an optimal measurement is given when the maximum possible number of 3D points, denoted as \(n_{max,3D}\), is obtained. The normalized number of points \(p_{norm}\) is then calculated as follows:

$$p_{norm}=\frac{n_{points}}{n_{max,3D}}$$

The maximum number of 3D points that can be acquired is equivalent to the total number of pixels of the camera image covering this area, \(n_{max,2D}\), hence:

$$n_{max,3D}=n_{max,2D}$$

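Assuming that an acquired 3D point \({\varvec{t}}_{\textrm{3D}}\in {\mathbb {R}}^{3}\) can be associated with one pixel of the camera image, the 2D projection \({\varvec{t}}_{\textrm{2D}}\) of \({\varvec{t}}_{\textrm{3D}}\) can be approximated using a pinhole-camera model and the projection matrix \({\varvec{M}}_{s}\), with both points expressed in homogeneous coordinates:

$${\varvec{t}}_{\textrm{2D}}={\varvec{M}}_{s}\,{\varvec{t}}_{\textrm{3D}} \tag{7}$$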

The projection matrix comprising the camera’s intrinsic parameters can be obtained using a camera calibration procedure based on a calibration pattern [19].

Using Eq. 7 the 2D projection of the hollow cylinder at the \(\text {feature}\)’s plane can be computed and the total number of pixels \(n_{max,2D}\), which corresponds to \(n_{max,3D}\), can be estimated. The 2D projection represents a 2D mask, which can be directly applied to the camera’s image. Figure 9 shows the corresponding masks of the hollow cylinders from Fig. 8.
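The normalization steps above can be sketched as follows, assuming an ideal pinhole camera without lens distortion and a feature pose that is already expressed in the camera frame. The intrinsic matrix `K`, the feature pose, the image size, and the helper names `project_points` and `annulus_mask` are hypothetical values and names chosen for illustration. The mask is built by intersecting each pixel ray with the feature plane, which is one possible way to obtain the 2D projection of the hollow cylinder at that plane.

```python
import numpy as np


def project_points(M_s, points_3d):
    """Project Nx3 points to pixel coordinates with a 3x4 projection matrix."""
    homog = np.hstack([points_3d, np.ones((len(points_3d), 1))])
    uvw = homog @ M_s.T
    return uvw[:, :2] / uvw[:, 2:3]


def annulus_mask(K, origin, normal, r_inner, r_outer, image_shape):
    """2D mask of the hollow cylinder cross-section at the feature plane.

    Each pixel ray is intersected with the feature plane; a pixel is kept
    if the hit point lies between the inner and outer radius.
    """
    h, w = image_shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(float)
    rays = pix @ np.linalg.inv(K).T                 # ray directions, camera frame
    normal = np.asarray(normal, dtype=float)
    normal = normal / np.linalg.norm(normal)
    origin = np.asarray(origin, dtype=float)
    with np.errstate(divide="ignore", invalid="ignore"):
        scale = (origin @ normal) / (rays @ normal)  # ray-plane intersection
        hits = rays * scale[:, None]
        radial = np.linalg.norm(hits - origin, axis=1)
        mask = (scale > 0) & (radial >= r_inner) & (radial <= r_outer)
    return mask.reshape(h, w)


# Hypothetical intrinsics and feature pose (camera frame, millimeters).
K = np.array([[1000.0, 0.0, 320.0], [0.0, 1000.0, 240.0], [0.0, 0.0, 1.0]])
M_s = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
origin = np.array([0.0, 0.0, 500.0])
normal = np.array([0.0, 0.0, -1.0])

center_px = project_points(M_s, origin[None, :])
mask = annulus_mask(K, origin, normal, r_inner=13.0, r_outer=15.0, image_shape=(480, 640))
n_max_2d = int(mask.sum())   # corresponds to n_max,3D
n_points = 600               # hypothetical count from the 3D intersection
p_norm = n_points / n_max_2d
print(center_px, n_max_2d, round(p_norm, 3))
```

The same mask can be applied directly to the camera image, which is how the local light intensity is obtained in Sect. 3.1.2.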

Exposure time

The exposure time \(t_{exp}\) is used as the target variable of the second GP model. Since most active sensors, such as the examples shown in Sect. 2.1.3, allow for the specific setting of an exposure time, additional pre-processing of this variable is unnecessary.

3.1.2 Input variables

This subsection describes the input variables and the pre-processing steps required for their synthesization.

Local light intensity

Like other works [3, 20], this study assumes that the external lighting conditions remain constant, the projector provides the dominant lighting source of the scene, and the projector’s configuration remains constant. Hence, a single fringe image is considered to quantify the light intensity around each feature at each measurement.

Similar to [3, 20], this work utilizes the camera image of a stripe pattern to determine the light intensity as accurately as possible. In contrast to the related works, this research follows a local approach to quantify the image intensity around a feature using the 2D mask of the hollow cylinder (cf. Sect. 3.1.1). The average light intensity of the masked section, denoted as \(i_{avg}\), characterizes the local illumination provided by the projector and is given in an 8-bit grayscale range \(i_{avg}\in [0,\dots ,255]\) (see Fig. 9).
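Computing \(i_{avg}\) from a mask amounts to averaging the gray values of the selected pixels. The following lines are a minimal sketch with a synthetic image; the function name `local_intensity` and the example values are assumptions for illustration.

```python
import numpy as np


def local_intensity(image, mask):
    """Mean 8-bit gray value of the pixels selected by a boolean mask."""
    if image.dtype != np.uint8:
        raise TypeError("expected an 8-bit grayscale image")
    if image.shape != mask.shape:
        raise ValueError("image and mask must have the same shape")
    if not mask.any():
        raise ValueError("mask selects no pixels")
    return float(image[mask].mean())


# Synthetic 4x4 image with a 2x2 masked region.
image = np.zeros((4, 4), dtype=np.uint8)
image[1:3, 1:3] = [[100, 200], [50, 250]]
mask = np.zeros((4, 4), dtype=bool)
mask[1:3, 1:3] = True

i_avg = local_intensity(image, mask)
print(i_avg)  # 150.0
```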

Relative position and orientation

It is assumed that at least one reference frame of the sensor, e.g., at the tool center point (TCP) or the lens, is known. Hence, the rigid transformation between this sensor reference frame and a feature frame is utilized to characterize the spatial relationships between the sensor and the features for each measurement. The rigid transformation can be calculated using different methods, e.g., robot kinematics, feature extraction, surface-based registration, or photogrammetry. In the present study, the relative position is expressed in the sensor’s TCP frame by the translation vector \({\varvec{t}}_{tcp}=(x_{tcp},y_{tcp},z_{tcp})^{T}\) and the orientation by the Euler angles \({\varvec{r}}_{tcp}=(w_{tcp}^{x},p_{tcp}^{y},r_{tcp}^{z})^{T}\). In addition, it is assumed that the sensor’s TCP lies in the middle plane of the sensor’s working volume. For example, Fig. 19 depicts the translation vectors between the sensor TCP and two \({\text {features}}\).
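Given the poses of the sensor TCP and of a feature in a common reference frame, the relative translation and Euler angles can be obtained as sketched below. The rotation convention \(R=R_{z}(r)\,R_{y}(p)\,R_{x}(w)\) is an assumption made for this example, since the convention is not stated here, and the helper names `pose_matrix` and `relative_pose` as well as all numeric values are hypothetical.

```python
import numpy as np


def pose_matrix(x, y, z, w_deg, p_deg, r_deg):
    """Homogeneous transform from a translation and Euler angles (degrees).

    Assumed convention: R = Rz(r) @ Ry(p) @ Rx(w).
    """
    w, p, r = np.radians([w_deg, p_deg, r_deg])
    rx = np.array([[1, 0, 0], [0, np.cos(w), -np.sin(w)], [0, np.sin(w), np.cos(w)]])
    ry = np.array([[np.cos(p), 0, np.sin(p)], [0, 1, 0], [-np.sin(p), 0, np.cos(p)]])
    rz = np.array([[np.cos(r), -np.sin(r), 0], [np.sin(r), np.cos(r), 0], [0, 0, 1]])
    T = np.eye(4)
    T[:3, :3] = rz @ ry @ rx
    T[:3, 3] = [x, y, z]
    return T


def relative_pose(T_ref_tcp, T_ref_feature):
    """Translation and Euler angles (degrees) of a feature in the TCP frame."""
    T = np.linalg.inv(T_ref_tcp) @ T_ref_feature
    R = T[:3, :3]
    # Inverse of the assumed convention; valid for |p| < 90 degrees.
    p = np.arcsin(-R[2, 0])
    w = np.arctan2(R[2, 1], R[2, 2])
    r = np.arctan2(R[1, 0], R[0, 0])
    return T[:3, 3], np.degrees([w, p, r])


# Hypothetical poses in a common reference frame (mm and degrees).
T_ref_tcp = pose_matrix(100.0, 50.0, 600.0, 5.0, -10.0, 15.0)
T_ref_feature = T_ref_tcp @ pose_matrix(20.0, 30.0, -500.0, 10.0, 20.0, 30.0)

t_tcp, r_tcp = relative_pose(T_ref_tcp, T_ref_feature)
print(np.round(t_tcp, 3), np.round(r_tcp, 3))
```

The example composes the feature pose from a known relative pose, so the recovered translation and angles can be checked against the values used to build it.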

3.1.3 Implementation

The input and target variables are generated using a self-developed processing pipeline with the following characteristics:

- Normalized number of points: The height of the cylinder and the radii tolerances were defined based on an internally defined norm as follows: \(h_{cyl}=\) 20 mm, \(r_{tol,i}=\) 3 mm, and \(r_{tol,o}=\) 5 mm. The generation of the synthetic cylinder and the estimation of \(n_{points}\) were performed using the trimesh library [21].

- Exposure time: The exposure time \(t_{exp}\) does not require any special pre-processing. Hence, the raw value in milliseconds (ms) was stored for each measurement.

- Position and orientation: The position and orientation of the door features are known and given in the door’s coordinate system. The rigid transformations between the sensor’s TCP and all features were calculated using the aligned transformation of each measurement. To increase the accuracy of the alignment, most point clouds were aligned using the system’s photogrammetry capabilities. Otherwise, the robot’s kinematic model was utilized.

- Local light intensity: The image intensity was computed from the initial stripe pattern image. The initial stripe pattern is permanently projected and helps the manual operator to estimate the current camera exposure. The computation of the 2D masks, the grayscale values, and the projection matrix of the camera calibration was performed using the Python API of the OpenCV library [22].

An example dataset can be found in the supplementary files of this publication.

3.2 Data acquisition

The data acquisition phase represents a critical and resource-demanding step during the design of data-based models. Therefore, this section presents a thoughtful design of experiments (DoE) that utilizes a uniform sampling method of the sensor’s workspace.

3.2.1 Design of experiments

In the first step, a measurement strategy was developed to define the positions at which the Comet PRO AE sensor acquires data within its workspace. The selection of the measurement positions followed the space-filling design strategy suggested by [23], which advocates using a regular grid pattern to distribute the input values evenly across the experimental range. Hence, the data collection positions were uniformly discretized using a grid along the x- and y-axes at three distinct depths (z-axis) to guarantee the collection of training data throughout the sensor’s entire 3D workspace (see Table 7). The resulting sensor measuring positions were generated using the \(\text {feature}\) \(f_{1}\) as reference. Figure 21 depicts the discretized workspace for the measuring positions. To minimize the data collection effort and the required storage, the exposure times were sampled on a logarithmic scale, which enables a finer discretization in the low exposure time range. The minimum and maximum exposure times were selected empirically for three different depth planes (near, middle, and far). Table 9 summarizes the considered spatial and exposure time ranges, comprising 480 measurements at 48 different positions. The DoE considers a constant sensor orientation for all measurements. However, a minimal orientation variability was achieved by considering features with different surface normal orientations. The generated dataset is denoted as X.
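A measurement plan of this kind can be sketched as a regular position grid combined with logarithmically spaced exposure times. The grid of 4 x 4 positions per depth plane, the coordinate values, and the exposure time ranges below are hypothetical; they are chosen only so that the plan matches the reported 48 positions and 480 measurements, and they do not reproduce the values of Table 9.

```python
import numpy as np


def build_doe(x_vals, y_vals, depth_ranges, n_exposures=10):
    """Grid of sensor positions with log-spaced exposure times per depth plane.

    depth_ranges maps a depth z to its (t_min, t_max) exposure range in ms.
    Returns an array with rows (x, y, z, t_exp).
    """
    plan = []
    for z, (t_min, t_max) in depth_ranges.items():
        exposures = np.geomspace(t_min, t_max, n_exposures)  # finer at low values
        for x in x_vals:
            for y in y_vals:
                for t_exp in exposures:
                    plan.append((x, y, z, float(t_exp)))
    return np.array(plan)


# Hypothetical workspace discretization (mm) and exposure ranges (ms).
x_vals = np.linspace(-150.0, 150.0, 4)
y_vals = np.linspace(-100.0, 100.0, 4)
depth_ranges = {-100.0: (1.0, 50.0), 0.0: (2.0, 100.0), 100.0: (5.0, 200.0)}

plan = build_doe(x_vals, y_vals, depth_ranges)
n_positions = len(np.unique(plan[:, :3], axis=0))
print(plan.shape, n_positions)  # (480, 4) 48
```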

In the final step, the planned 480 measurements were acquired automatically using the integrated robot. To ease and automate the generation of the robot’s path plan, the viewpoint planning framework introduced in our previous works [24, 25] was used. Moreover, the whole acquisition process was automated using the C# and Python API provided by the proprietary software of ZEISS, i.e., Visio7 and colin3D. The acquisition of all measurements required approximately 105 min. The complete dataset size comprises 480 point clouds and 2D images with a resolution of 4 megapixels.

3.2.2 Training, validation and test datasets

The input and target variables were synthesized from the acquired dataset X for a set of four features denoted as \(\{f_{1}^{},\dots ,f_{4}^{}\}\) using the parameters presented in Sect. 3.1.3. The resulting 6D spatial range spanned by the training \({\text {features}}\) is given in Table 9 in the Appendix. Figure 21 visualizes the spatial distribution of the training data in the Euclidean space.

All models are trained with 80% of all observations; let this training dataset be given as \(X^{train}\subseteq X\). The model metrics are computed using the remaining 20% (\(X^{val}\)). Additionally, the trained models are evaluated against a test dataset \(X^{test}\subseteq X\) with unknown observations from different \({\text {features}}\) denoted as \(\{f_{1}^{test},\dots ,f_{4}^{test}\}\) to assess their generalization. The subsets comprise \(N_{train}=912\), \(N_{val}=228\), and \(N_{test}=779\) observations. Figure 4 depicts all training and test \({\text {features}}\), whose radii lie between 3 and 30 mm.
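A minimal sketch of the 80/20 partition is given below. The text does not state how the observations were assigned to each subset, so the random shuffling, the seed, and the function name are assumptions.

```python
import numpy as np

def split_train_val(n_observations, train_fraction=0.8, seed=0):
    """Return shuffled index arrays for the training and validation subsets."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_observations)
    n_train = int(round(train_fraction * n_observations))
    return indices[:n_train], indices[n_train:]

# 912 + 228 observations stem from the four training features.
train_idx, val_idx = split_train_val(912 + 228)
```

The test observations are not part of this split because they originate from separate features.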

The dataset \(X^{test}\) includes individual observations (see Table 9) lying outside the 6D space range spanned by the training dataset. Hence, the models’ interpolation and extrapolation capabilities can be individually investigated.

Note: Some of the acquired measurements in the front plane could not be photogrammetrically aligned. These measurements were aligned using a less accurate method based on the kinematic model of the robot and measurement cell. The summed alignment error of some of these noisy observations amounted to up to 5 mm. These alignment inaccuracies led to a miscalculation of some 2D and 3D masks and ultimately to a miscalculation of \(p_{norm}\). This effect can be observed in some observations within the red square of Fig. 10, which show a considerable drop of \(p_{norm}\) below 80%. However, these misaligned measurements are included in the dataset to consider a more realistic and biased acquisition scenario. Additionally, it is important to note that the present study does not aim to evaluate the sensor’s accuracy and that neither the DoE nor the input extraction pipeline is aligned to a standard for sensor qualification.

3.2.3 Preliminary data analysis

After synthesizing a dataset that can be used for the models’ design, the next step regards selecting the input variables with the highest correlation to the model outputs. Hence, a preliminary prioritization of the inputs is performed based on the maximum information coefficient (MIC) of the acquired data to evaluate these correlations. The MIC matrix of the training dataset \(X^{train}\) is depicted in Fig. 20 and is used for supporting the incremental kernel design of each model. The MIC is often used as a measure of the correlation between features that exhibit partially nonlinear relationships, rather than relying on the more commonly used Pearson correlation coefficient. The interpretation of these values is individually addressed in the following subsections.

3.3 Quality prediction model

Given that light intensity is a characteristic used to estimate surface point depth utilizing image processing techniques, appropriate image illumination is vital to ensure accurate depth calculations. Moreover, measurement uncertainty in some sensors can lead to varying point cloud quality within the measurement volume, as noted by [26]. For these reasons, to predict the local quality of the point cloud, expressed by the number of points belonging to a specific feature, this study employs a GP regression model that takes into account the local light intensity and spatial relation to the sensor. This subsection outlines an incremental and systematic approach for designing the kernel of a prediction model, which considers the local light intensity of the image and the spatial relationships between the sensor and a feature.

3.3.1 Formulation

According to Eq. 3, a simplified representation of the n dimensional GP quality regression model is given as follows:

with

and a zero mean \(\text {GP}\) (i.e., \({m(X^{train})=0}\)). \({}^{q}k_{n}\) denotes the model’s kernel and \({\varvec{x}}_{n}\) the input vector.

3.3.2 Inputs selection

The MIC correlation values between all inputs and the model’s output \(p_{norm}\) (see Fig. 20) are used to support the incremental kernel design of the quality regression model. As expected, the local light intensity \(i_{avg}\) has the highest correlation with \(p_{norm}\) followed by the spatial inputs.

Moreover, the normalized number of points shows a considerable correlation to the \({\text {features}}\)’ radii \(r_{f}\) and positional inputs, i.e., \(x_{tcp}\), \(y_{tcp}\), and \(z_{tcp}\), suggesting a remaining dependency between \(p_{norm}\) and the individual \({\text {features}}\). A similar behavior can be observed with the inputs modeling the \(\text {feature}\)s’ orientation (\(w_{tcp}^{x}\), \(p_{tcp}^{y}\), and \(r_{tcp}^{z}\)), which also exhibit a high correlation to \(p_{norm}\), between themselves and the positional inputs despite the fact that the sensor’s orientation remained constant for all observations. For these reasons, our work considers primarily the positional inputs for the kernel design. Furthermore, the exposure time \(t_{exp}\) is disregarded because the information regarding the camera exposure is implicitly represented within the average light intensity \(i_{avg}\).

3.3.3 Base kernel

Based on a comprehensive evaluation of different kernel combinations and a visual interpretation of the data between \(p_{norm}\) and \(i_{avg}\) (see Fig. 10), the following kernel was selected to be the most suitable for modeling the required correlations:

where \(k_{RQ}(X_{n})\) represents a RQ kernel.

First, the rational quadratic kernel \(k_{RQ,n}(X_{n})\) (see Eq. 23) is used to model the general relationships between the normalized points and the local light intensity and positional inputs. Furthermore, an improvement of the model’s performance and smoother progress was observed using a change point kernel for a targeted kernel activation. The change point kernel \(k_{CP,min}(i_{avg})\) behaves like a step function, which changes from 0 to 1 at a minimal average brightness of \(i_{avg}\ge 10\) (cf. Fig. 10). Consequently, an intensity value below 10 automatically yields \(p_{norm}=0\). The reverse behavior applies for observations with an \(i_{avg}\) value over 255 with the change point kernel \(k_{CP,max}(i_{avg})\).
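The interplay of the rational quadratic kernel and the two change point terms can be sketched in NumPy. This is an illustration under assumptions and not the kernel of Eq. 9: the sigmoid form of the step, its steepness, and the multiplicative gating are chosen here for simplicity, and all function names are hypothetical.

```python
import numpy as np

def rq_kernel(a, b, variance=1.0, lengthscale=1.0, alpha=1.0):
    """Rational quadratic kernel between 1D input arrays a and b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * (1.0 + sq_dist / (2.0 * alpha * lengthscale ** 2)) ** (-alpha)

def step(i_avg, threshold, steepness=2.0):
    """Smooth 0-to-1 step (sigmoid) centred on an intensity threshold."""
    i_avg = np.asarray(i_avg, dtype=float)
    return 1.0 / (1.0 + np.exp(-steepness * (i_avg - threshold)))

def gated_rq_kernel(a, b, i_min=10.0, i_max=255.0, **rq_params):
    """RQ kernel that is active only for intensities between i_min and i_max."""
    gate_a = step(a, i_min) * (1.0 - step(a, i_max))
    gate_b = step(b, i_min) * (1.0 - step(b, i_max))
    # s(a) * k(a, b) * s(b) remains a valid (positive semi-definite) kernel.
    return gate_a[:, None] * rq_kernel(a, b, **rq_params) * gate_b[None, :]

intensities = np.array([0.0, 5.0, 50.0, 120.0])
K = gated_rq_kernel(intensities, intensities, lengthscale=40.0)
```

With a zero-mean prior, entries of `K` that are close to zero for underexposed inputs pull the prediction toward \(p_{norm}=0\), which mirrors the behavior described above.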

3.3.4 1D kernel

In the first step a kernel including just the \(\text {feature}\)’s light intensity was designed. Hence, the model was trained considering the following dataset:

and, consequently, the corresponding kernel (see Eq. 9):

The GP denoted as \({}^{q}{\mathcal{G}\mathcal{P}}_1{}\) is given as follows:

The notation is analogously used for the following kernels consisting of more dimensions.

The 1D GP regression model given by Eq. 12 and all following \(\text {GP}\)s were trained and evaluated using the GPy library [27]. The model hyperparameters were calculated using an L-BFGS optimizer and a triple restart optimization. Table 8 provides an overview of the optimized hyperparameters.
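For readers unfamiliar with GP regression, the prediction step of a zero-mean GP can be written from scratch in a few lines. The sketch below is not the GPy implementation used in the study: the hyperparameters are fixed by hand instead of being optimized with L-BFGS, and the plateau-shaped training curve is synthetic.

```python
import numpy as np

def rq_kernel(a, b, variance=1.0, lengthscale=1.0, alpha=1.0):
    """Rational quadratic kernel between 1D input arrays a and b."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * (1.0 + sq_dist / (2.0 * alpha * lengthscale ** 2)) ** (-alpha)

def gp_predict(x_train, y_train, x_new, noise_var=1e-2, **kernel_params):
    """Posterior mean and standard deviation of the latent zero-mean GP."""
    K = rq_kernel(x_train, x_train, **kernel_params) + noise_var * np.eye(len(x_train))
    K_s = rq_kernel(x_train, x_new, **kernel_params)
    K_ss = rq_kernel(x_new, x_new, **kernel_params)
    L = np.linalg.cholesky(K)                      # K = L L^T
    weights = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ weights
    v = np.linalg.solve(L, K_s)
    var = np.clip(np.diag(K_ss) - np.sum(v ** 2, axis=0), 0.0, None)
    return mean, np.sqrt(var)

# Synthetic stand-in for the p_norm over i_avg curve (plateau in the middle).
x_train = np.linspace(0.0, 250.0, 26)
y_train = np.exp(-((x_train - 125.0) / 80.0) ** 4)
x_new = np.array([125.0, 400.0])
mean, std = gp_predict(x_train, y_train, x_new, lengthscale=30.0)
```

The returned standard deviation grows for inputs far from the training data, which is the quantity visualized as the \(2\sigma\) band in the figures.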

The results of the evaluation using the corresponding datasets (\(X_{1}^{test}\),\(X_{1}^{val}\)) are summarized in Table 2. The \({}^{q}{\mathcal{G}\mathcal{P}}_1\) shows a solid performance and generalization for unseen \({\text {features}}\) in view of the 1D kernel simplicity with an \(\text {R2}\) score over 78%. However, the model’s predictions also demonstrate that the image exposure is insufficient for predicting the point cloud quality for all validating and testing features with a root mean square error (RMSE) up to 18%. This effect can also be observed within various observations of Fig. 10, suggesting that the same local image intensity can lead to different values of \(p_{norm}\).
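The two metrics reported in Table 2 can be computed as sketched below. The text does not spell out the definitions, so the common forms are assumed here: the coefficient of determination for \(\text {R2}\) and the root of the mean squared residual for the \(\text {RMSE}\). The sample values are hypothetical.

```python
import numpy as np

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)

def rmse(y_true, y_pred):
    """Root mean square error in the units of the target variable."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# Hypothetical normalized point counts in percent.
p_true = np.array([0.0, 50.0, 100.0, 100.0])
p_pred = np.array([10.0, 40.0, 90.0, 100.0])
scores = (r2_score(p_true, p_pred), rmse(p_true, p_pred))
```

Because \(p_{norm}\) is expressed in percent, the \(\text {RMSE}\) of the quality model is also a percentage.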

3.3.5 2D and 3D kernel

The second model considers a 2D feature vector integrating \(z_{tcp}\) due to its next highest correlation (cf. Fig. 20):

The regression model is trained similarly to the 1D model, but the 2D rational quadratic kernel (\({}^{q}k_{RQ,2}\)) uses the automatic relevance determination (ARD) variant (see Eq. 24), which allows an individual optimization of the lengthscale for each input. Table 2 presents the performance results, which suggest at first glance an outstanding performance for the validation data with a model accuracy of \(\text {R2}=\,\) 96% and an \(\text {RMSE}\) of 8%. However, to judge by the generalization accuracy on the unknown observations (\(\text {RMSE}=\,\) 32%), it can be assumed that the model was overfitted.
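The effect of ARD can be illustrated with a short NumPy sketch of a rational quadratic kernel that scales each input by its own lengthscale. The standard ARD form is assumed here in place of Eq. 24, and the lengthscales and sample inputs are hypothetical.

```python
import numpy as np

def rq_kernel_ard(A, B, lengthscales, variance=1.0, alpha=1.0):
    """Rational quadratic kernel with one lengthscale per input dimension."""
    lengthscales = np.asarray(lengthscales, dtype=float)
    A = np.atleast_2d(np.asarray(A, dtype=float)) / lengthscales
    B = np.atleast_2d(np.asarray(B, dtype=float)) / lengthscales
    sq_dist = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
    return variance * (1.0 + sq_dist / (2.0 * alpha)) ** (-alpha)

# Columns: (i_avg, z_tcp in mm). A long lengthscale makes an input less relevant.
X = np.array([[50.0, 0.0],
              [50.0, 100.0],
              [150.0, 0.0]])
K = rq_kernel_ard(X, X, lengthscales=[40.0, 1000.0])
```

In this example a shift of 100 mm in depth barely reduces the covariance, whereas a shift of 100 gray levels reduces it strongly, because the two inputs have different lengthscales.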

Furthermore, a 3D kernel integrating the spatial feature with the next highest correlation, i.e., \(x_{tcp}\), was considered. However, the model showed a similarly poor performance on the test \({\text {features}}\) (see Table 2).

Discretized visualization of the mean prediction \(\mu (p_{norm})\) and corresponding double standard deviations \(2\sigma\) of the 4D \(\text {GP}\) quality regression model \({}^{q}{\mathcal{G}\mathcal{P}}_4\) at three different depths \(z_{tcp}=[-150,0,150]\,\)mm (left, middle, right) for three different light intensities \(i_{avg}=[25,75,125]\) (top, middle, bottom) for the SLS Zeiss Comet Pro AE. The graphs demonstrate that the point cloud around a feature depends on the image intensity but also on the 3D relative position between a feature and the sensor. The trends confirm the nonlinear correlation between the point cloud quality, the image light intensity, and the spatial variables

3.3.6 4D kernel

In the last step a 4D kernel integrating all spatial inputs was considered. The input matrix is given as follows:

The 4D kernel model yielded remarkable results with an \(\text {R2}\) of 92% and an \(\text {RMSE}\) of 10.5% for the test \({\text {features}}\) confirming the model’s transferability and performance (see Table 2).

The graphs from Fig. 11 depict the 2D model’s trend of some of the best and worst predictions at different positions. The curves show the model’s mean prediction and the corresponding confidence interval (\(2\sigma\), shaded region) for the whole light intensity range. On the one hand, Fig. 11b illustrates a selection of three of the worst predictions at the rising and falling slopes exhibiting that the model still has some difficulties making accurate predictions within these areas. On the other hand, the best predictions in Fig. 11a confirm the overall performance and generalizability of the model. A constant and overall acceptable prediction accuracy can be observed mainly at the curve’s plateau, where the highest number of points is expected.

A more comprehensive visualization of the model’s mean prediction and corresponding double standard deviation in the x-y plane and light intensities at different sensor-depth planes in the z direction is given in the form of 3D surface plots in Fig. 12. The 3D model slices also visualize the nonlinear characteristic of the problem and the existence of local maximum values depending on the image intensity and the spatial variables. Additionally, these trends further confirm the present study’s motivation showing the existence of local minima of the point cloud quality for different positions and light intensities.

3.3.7 6D Kernel

For the sake of completeness, a 6D kernel comprising the sensor orientation represented by \(p_{tcp}^{y}\) and \(w_{tcp}^{x}\) was considered. The results (see Table 2) showed a statistically insignificant performance improvement on the validation data and even a poorer performance than the 4D kernel on the unknown observations. Thus, a detailed analysis is not further considered. However, it should be noted that the training dataset considered feature surfaces with a relative sensor orientation up to 13\({^{\circ }}\). The present study does not rule out that higher incidence angles may have a higher correlation with the point cloud quality.

3.3.8 Discussion

This section outlined and evaluated different multi-dimensional \({}^{q}{\mathcal{G}\mathcal{P}}\, s\) to predict the number of expected 3D points around a \(\text {feature}\) considering an increasing number of inputs. The best performance was delivered by a 4D model, whose characteristics and limitations are discussed more extensively below.

- Performance: The considered 1D model (see Sect. 3.3.4) demonstrated that the number of 3D points assigned to a \(\text {feature}\) could be estimated with an \(\text {RMSE}\) of 18% using just the local light intensity of a 2D image. These initial results are consistent with the findings of [16] and suggest that the quality of the point cloud could be optimized using only the image exposure, for example, by adjusting the sensor exposure time or the projector illumination parameters. However, the performed evaluation also revealed that the image exposure alone would be insufficient to accurately predict the number of expected surface points. Hence, the present study considered spatial variables and the design of a 4D kernel to learn the missing correlations. The evaluation of the 4D GP regression model showed that the prediction error could be reduced to an \(\text {RMSE}\) of 10.5% (\(\text {R2}=\,\) 93%) if the relative position between \({\text {features}}\) and sensor is considered. Moreover, the \({}^{q}{\mathcal{G}\mathcal{P}}_4\) demonstrated a high computational efficiency, e.g., 100 predictions were calculated in under 19 ms.

- Inputs: The target variable \(p_{norm}\) and input variable \(i_{avg}\) showed a significant validity for modeling the point cloud quality and image intensity independent of the feature dimensions. However, Fig. 20 shows that \(p_{norm}\) and \(i_{avg}\) have a correlation to the \({\text {features}}\)’ radii. Hence, it can be hypothesized that the model’s performance and generalizability could be improved by considering further input variables, e.g., a discretization of the 2D mask, standard deviation or median of the light intensity.

- Dataset size: It is well known that the performance of data-driven approaches depends on the available training dataset size. For this reason, the \({}^{q}{\mathcal{G}\mathcal{P}}_4\) was retrained with different training dataset sizes for analyzing the influence on the model’s performance. Figure 22 shows the results of this analysis based on the test dataset \(X_{4}^{test}\). The trend shows that the model’s accuracy (\(\text {R2}=\,\) 92%, \(\text {RMSE}=\,\) 11%) starts converging with a dataset size of \(\approx\) 450 observations. Therefore, it is reasonable to assume that collecting more data from the same training \({\text {features}}\) would not improve the performance of the model. Furthermore, this analysis also demonstrates that an acceptable prediction accuracy can be obtained with a reduced number of observations, e.g., an \(\text {RMSE}\) of 13.8% with 114 observations. Nevertheless, it should be noted that the spatial distribution should still be maintained to obtain similar results.

- Kernel design: The training loops of the models showed that the kernel and its hyperparameters might considerably influence the model’s performance. In consideration of the findings, it can be concluded that the proposed kernel and hyperparameters are capable of adequately modeling the necessary system correlations. However, it is possible that a different set of kernel hyperparameters or an alternative kernel could yield a superior performance.

3.4 Exposure time prediction model

This section outlines the design of a \(\text {GP}\) to estimate the camera’s exposure time for a given local light intensity around a \(\text {feature}\) and its spatial relationships to the sensor.

3.4.1 Preliminary dataset analysis

Contrary to the \({}^{q}{\mathcal{G}\mathcal{P}}\), the performance and training efficiency of the \({}^{e}{\mathcal{G}\mathcal{P}}\) can be increased by considering exclusively observations leading to a satisfactory point cloud quality. Hence, taking advantage of the measurement quality quantification expressed by \(p_{norm}\), the proposed models are trained and evaluated considering only observations with \(p_{norm}\ge 75\,\%\). The datasets’ sizes are thereby reduced to \({N_{e}^{train}=421}\), \({N_{e}^{val}=106}\), and \({N_{e}^{test}=508}\). The training, validation, and test datasets are denoted as \({}^{e}X^{train}\), \({}^{e}X^{val}\), and \({}^{e}X^{test}\).
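The quality-based filtering can be sketched as a boolean mask over the observations. The 75% threshold is taken from the text, while the function name and the sample values are hypothetical.

```python
import numpy as np

def select_good_observations(inputs, t_exp, p_norm, threshold=75.0):
    """Keep only observations whose normalized point count reaches the threshold."""
    keep = np.asarray(p_norm, dtype=float) >= threshold
    return np.asarray(inputs)[keep], np.asarray(t_exp)[keep]

# Hypothetical observations: columns of `inputs` are (i_avg, z_tcp in mm).
inputs = np.array([[20.0, -150.0],
                   [90.0, 0.0],
                   [130.0, 0.0],
                   [250.0, 150.0]])
t_exp = np.array([5.0, 27.0, 40.0, 300.0])    # exposure times in ms
p_norm = np.array([30.0, 95.0, 75.0, 10.0])   # point cloud quality in percent

good_inputs, good_t_exp = select_good_observations(inputs, t_exp, p_norm)
```

Under- and overexposed observations are removed in this way, so the exposure time model only learns from measurements that produced a usable point cloud.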

Figure 13 shows the trend between the exposure time and local light intensity of \(\text {feature}\) \(f_{1}^{}\) at different positions considering a translation in the x-axis alone. At first glance, it can be assumed that a linear model would be sufficient to model the correlation between \(i_{avg}\) and \(t_{exp}\) confirming the validity of the approximation of [3, 15]. However, this reduced set of observations, which considers the shift of a feature in only one direction, shows that exposure time has a considerably high correlation with spatial inputs. This insight confirms the motivation of our work that spatial relationships must be considered for selecting an appropriate exposure time. Recalling the qualitative distribution of the projector illumination from Fig. 1, a nonlinear correlation is to be expected, suggesting the necessity of a more complex model.

3.4.2 Formulation

Aligned to the generic definition of \(\text {GP}\)s (see Eq. 3), the regression model for estimating the exposure time is given:

with

3.4.3 Input selection

Based on the MIC values from Fig. 20, the kernel design is supported by the correlation magnitude between the exposure time \(t_{exp}\) and the rest of the inputs. Moreover, it should be noted that the input \(z_{tcp}\) has an overestimated correlation with the \(t_{exp}\) due to the different exposure time ranges considered at each acquisition depth plane (cf. Table 9). Once again the rotational variables are not considered in a first step due to their high correlation to the translational features.

3.4.4 Base kernel

Based on an exhaustive benchmarking of various kernel combinations, the exponential kernel \(k_{\text {exp}}\) (see Eq. 22) was found to perform slightly better than the well-known SE kernel and to be best suited for learning the necessary correlations to predict the exposure time:

with

3.4.5 1D kernel

In a first step, a 1D kernel considering just the light intensity \(i_{avg}\) was evaluated. The input matrix is given as follows:

The hyperparameters of all models are also listed in Table 8. Table 3 shows the model’s evaluation results for the validation and test datasets. The model’s poor performance on the test data (\(\text {R2}=\,\) 16%, \(\text {RMSE}=\,\) 81 ms) confirms the visual interpretation of Fig. 13: the local light intensity alone is insufficient for predicting the corresponding exposure time, and a linear model would be inadequate. Consequently, a more complex model including spatial inputs must be applied.

3.4.6 2D kernel

Based on the previous observations, in the next step, two models with a 2D and a 3D kernel (without ARD) comprising the spatial inputs \(z_{tcp}\) and \(x_{tcp}\) are evaluated. Although a slight performance improvement was achieved for both models, the prediction accuracy remains unsatisfactory, cf. Table 3.

3.4.7 4D kernel

In the next step, a 4D kernel \({}^{e}k_{4}({}^{e}X_{4})\) composed analogously to the 2D kernel and considering all positional inputs, i.e., \(x_{tcp}\), \(y_{tcp}\), and \(z_{tcp}\) was evaluated. The evaluation results from Table 3 exhibit an overall improvement of the model’s performance compared to the 2D counterpart. However, the model’s accuracy between the validation (\(\text {R2}= {88}{\%}\), \({\text {RMSE}= {30.1}\hbox {ms}}\)) and test (\(\text {R2}={18}{\%}\), \(\text {RMSE}={70.8}\hbox {ms}\)) dataset continues exhibiting major discrepancies requiring a detailed analysis of the data.

Given the qualitative and nonlinear trend of the light distribution and the door’s surface topology (see Fig. 1), it seems logical that the training dataset comprising four \({\text {features}}\) fails to represent the high variability and complexity of the car door surface. Hence, in a further step the 4D model was reevaluated considering exclusively the interpolating test dataset denoted as \({}^{e}X^{val,i}\). The results confirmed the proposed thesis and showed a considerable improvement in the model’s performance (\(\text {R2}= {50}{\%}\), \(\text {RMSE}={49.8}\hbox {ms}\)) within the interpolated range. Note that hereafter the evaluation of the following models considers only observations within the interpolated spatial range of the training dataset.
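Selecting the interpolating observations can be sketched as a range check against the training data. The text does not state how the interpolated range was determined, so an axis-aligned bounding box spanned by the minimum and maximum of each training input is assumed here; the function name and sample values are hypothetical.

```python
import numpy as np

def inside_training_range(X_train, X_test):
    """Boolean mask of test rows lying within the per-input training min/max."""
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    lower = X_train.min(axis=0)
    upper = X_train.max(axis=0)
    return np.all((X_test >= lower) & (X_test <= upper), axis=1)

# Hypothetical spatial inputs (x_tcp, y_tcp, z_tcp) in mm.
X_train = np.array([[-100.0, -50.0, -150.0],
                    [100.0, 50.0, 150.0],
                    [0.0, 0.0, 0.0]])
X_test = np.array([[50.0, 10.0, 100.0],     # inside the training range
                   [150.0, 0.0, 0.0],       # x outside
                   [0.0, 0.0, -200.0]])     # z outside
mask = inside_training_range(X_train, X_test)
```

Rows for which the mask is false belong to the extrapolating part of the test dataset and can be evaluated separately.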

Moreover, these findings confirm the high correlation between the exposure time and the spatial inputs and suggest that the model’s accuracy and generalization can be improved by increasing the spatial variability within the training observations. Hence, the training dataset was extended by considering five additional \({\text {features}}\) (\(f_{5-9}^{}\)). The extended dataset denoted as \({{}^{e}X^{train^{+}}(f_{1-9}^{})}\) considers an increased number of observations with \(N_{e}^{train^{+}}=758\) and \(N_{e}^{val^{+}}=190\). As expected, the extended dataset contributed to a considerable improvement in the model’s accuracy (\(\text {R2}={80}{\%}\), \(\text {RMSE}= {32.7}\hbox {ms}\)). Nevertheless, the model’s performance difference between test and validation data is still significant, suggesting that a more complex model needs to be considered.

3.4.8 6D kernel

In the last step, a 6D kernel (\({}^{e}k_{6}^{+}\)) considering the local light intensity and three positional plus two rotational inputs is investigated. The training input matrix for the 6D kernel is given as follows:

The results of the 6D model exhibit a slightly better performance (\(\text {R2}={84}{\%}\), \(\text {RMSE}={29}\hbox {ms}\)) on the test dataset. Given the high correlation between exposure time and spatial inputs, an additional dataset with a finer discretization of the exposure time and displacement in the x and y axes was considered in a next step for training the model. Table 9 summarizes the considered DoE. The extended dataset is denoted as \({}^{e}X_{6}^{train^{++}}\) with \(N_{e}^{train^{++}}=1936\) and \(N_{e}^{val^{++}}=485\) observations. Once again, the model’s accuracy and, in particular, the generalization performance could be improved to an \(\text {RMSE}\) below 27ms.

In a next step, an exponential kernel (\({}^{e}k_{6}^{*}\)) with ARD was considered. The kernel showed a significant improvement on the generalization performance yielding an \(\text {RMSE}\) of 15ms. In the last step, a modification of the base kernel (18) was considered by using the multiplication of two exponential kernels, one for the light intensity and one for the spatial inputs:

The modified kernel (21) yielded the best performance, i.e., \(\text {R2}={97}{\%}\) and an \(\text {RMSE}\) of 13.1ms, for unknown observations.
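The structure of such a product kernel can be sketched in NumPy. The common exponential form \(\sigma^{2}\exp (-r)\) with a lengthscale-scaled distance \(r\) is assumed in place of Eq. 22, the first input column is assumed to hold \(i_{avg}\) and the remaining five the spatial inputs, and all lengthscales and sample inputs are hypothetical.

```python
import numpy as np

def exp_kernel(A, B, lengthscales, variance=1.0):
    """Exponential kernel with per-dimension (ARD) lengthscales."""
    lengthscales = np.asarray(lengthscales, dtype=float)
    A = np.atleast_2d(np.asarray(A, dtype=float)) / lengthscales
    B = np.atleast_2d(np.asarray(B, dtype=float)) / lengthscales
    dist = np.sqrt(np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1))
    return variance * np.exp(-dist)

def product_kernel(A, B, ls_intensity, ls_spatial, variance=1.0):
    """Product of an intensity kernel (column 0) and a spatial kernel (columns 1-5)."""
    k_intensity = exp_kernel(A[:, :1], B[:, :1], ls_intensity)
    k_spatial = exp_kernel(A[:, 1:], B[:, 1:], ls_spatial)
    return variance * k_intensity * k_spatial

# Hypothetical 6D inputs: (i_avg, x, y, z, rotation 1, rotation 2).
rng = np.random.default_rng(0)
X = rng.uniform(low=[0, -150, -100, -150, -10, -10],
                high=[255, 150, 100, 150, 10, 10], size=(5, 6))
K = product_kernel(X, X, ls_intensity=[60.0],
                   ls_spatial=[200.0, 200.0, 300.0, 20.0, 20.0])
```

With a product, two observations are considered similar only if both their light intensity and their spatial configuration are similar, which is one plausible reading of why separating the two input groups helped.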

A graphical evaluation of the model’s performance is provided in Fig. 14, which depicts three of the best and worst predictions including a confidence interval of 95% (\(2\sigma\)). The best predictions visualized in Fig. 14a demonstrate that the model can accurately predict the exposure time at various positions for different light intensities. On the contrary, the worst trends shown in Fig. 14b are mainly in the observations of the more distant acquisition plane. This behavior was to be expected, considering that in the more distant plane, the illumination provided by the projector loses its dominance, and other lighting sources might more easily influence the image exposure. Nevertheless, the model generally shows a high prediction confidence and demonstrates that even the worst predictions lie within the \(2\sigma\) interval.

A more comprehensive visualization of the model’s trend and corresponding double standard deviation is provided by Fig. 24, which shows 3D surface plots in the x–y plane for a constant sensor orientation with three different light intensities at different depths. Similar to the trend of \({}^{q}{\mathcal{G}\mathcal{P}}_4\), these graphs visualize and quantify the nonlinear behavior of the problem. Furthermore, the graphs also agree with the qualitative light distribution of the SLS shown in Fig. 1, confirming that the right side (positive x-axis) is more overexposed than the left side.

3.4.9 Discussion

This section outlined the design of a Gaussian Process regression model for predicting the exposure time of a 3D measurement using a \(\text {feature}\)’s local image exposure and its relative displacement and orientation to the sensor. Moreover, the incremental and systematic design of the model’s kernel contributed to a better general understanding of the correlations between the exposure time, input variables, dataset size, and data entropy. A more detailed discussion regarding these correlations follows below.

- Performance: Our 1D model analysis showed that the light intensity alone was insufficient to predict an adequate exposure time (\(\text {R2}\) = 7%, \(\text {RMSE}\) = 75 ms). By increasing the model’s complexity and integrating spatial inputs, the 6D model (cf. Sect. 3.4.8) demonstrated that the prediction accuracy (\(\text {R2}\) = 97%) could be considerably improved, reaching an \(\text {RMSE}\) of 13 ms for unseen observations. In addition, a model with a 6D linear kernel (\({}^{e}k_{6}^{lin}\)) was trained for performance comparison with previous research proposing linear models. The results showed that the performance of the linear model (\(\text {R2}\approx\) 50% and an \(\text {RMSE}\) = 44 ms) was insufficient to compensate for the inhomogeneous and nonlinear surface illumination.

- Inputs: Our study revealed that the exposure time exhibited a high correlation to the spatial inputs. Based on our incremental kernel design, this observation could be corroborated, confirming that the model requires a dataset comprising a high variability of exposure times combined with a good spatial distribution of the observations. Our experiments showed that the model’s accuracy held well for observations within the training space (interpolation), which covered the sensor’s workspace and considered a relative sensor orientation up to 10\({^{\circ }}\) between sensor and feature’s normal plane. A more exhaustive acquisition and a comprehensive analysis are required to evaluate the model’s performance and correlations for higher sensor inclinations.

- Dataset size: Furthermore, the correlation between dataset size and model performance was analyzed for efficiency purposes. The evaluation results of the \({}^{e}{\mathcal{G}\mathcal{P}}_{6}\) trained with different numbers of observations are displayed in Fig. 23. In contrast to the quality prediction model, the exposure time model requires more observations throughout the sensor’s workspace. Hence, for replication purposes and for an efficient and effective data acquisition, we recommend a thoughtful design of the probing object considering a heterogeneous spatial distribution of the features with different surface orientations.

Performance overview of the \(\text {GP}\) models compared to the ground truth (GT) values for predicting the exposure time using the \({}^{e}{\mathcal{G}\mathcal{P}}_{6}\) (left) and for predicting the point cloud quality using the \({}^{q}{\mathcal{G}\mathcal{P}}_4\) (right) with five different features

3.5 Summary

This section outlined and investigated the design of two multi-dimensional \(\text {GP}\)s trained on the basis of a local image exposure and spatial relationships to predict the point cloud quality around a feature and to estimate the corresponding exposure time. Both models showed promising performance and demonstrated that spatial variables are necessary for increasing the models’ prediction accuracy. A visual representation of the performance of both models using five different test features is depicted in Fig. 15. The image on the left displays a 2D sensor image taken with an exposure time of 27ms, while the image on the right shows the corresponding point cloud. The selected exposure duration and resulting point cloud quality set the ground truth (GT) values as the benchmark to qualitatively evaluate model performance for this empirical measurement. In this scenario, the GT values for the point cloud quality model are the individual normalized points for each feature, while for the exposure regression model, the chosen exposure time \(t_{exp}^{GT}\) denotes the GT value. The exposure time predictions on the left demonstrate that the \(\text {GP}\) \({}^{e}{\mathcal{G}\mathcal{P}}_{6}\) can accurately predict the exposure time for different features and image intensities. The performance of the \(\text {GP}\) \({}^{q}{\mathcal{G}\mathcal{P}}_4\) is confirmed on the right side for estimating the expected point cloud quality at different locations. Moreover, the left bottom feature can be considered as an outlier showing a poorer prediction for both models. However, it must be noted that this feature lies outside the training range due to its surface orientation. Hence, a model’s performance improvement should be expected by extending the dataset with different sensor orientations.

Note that some of the results, including the proposed kernels, are to some extent limited to the considered experimental setup. Hence, to evaluate the transferability of our approach to other systems, Sect. 4 presents a further evaluation of both models regarding their transferability to different surfaces, sensitivity to external conditions, and generalizability of kernel design for an alternative active sensor.

4 Extended evaluation

This section presents a comprehensive evaluation of the 4D \({}^{q}{\mathcal{G}\mathcal{P}}\) and the 6D \({}^{e}{\mathcal{G}\mathcal{P}}\) outlined in Sect. 3, considering the models’ sensitivity to external lighting conditions, transferability to other active sensors, and performance on alternative surface finishes.

4.1 External lighting conditions

Within our work it is assumed that the stripe projector of an SLS can be considered the predominant lighting source within the acquisition environment. This requirement can be well fulfilled by the employed measurement setup which comprised a closed measurement cell with tinted windows and the band-pass filter of the sensor (cf. Sect. 2.1). However, the performance of the models may be affected under different lighting conditions. Hence, the models’ sensitivity to external lighting sources is evaluated in this subsection.

4.1.1 Dataset

The DoE considered three different use cases and the acquisition of their corresponding datasets with the following lighting conditions: I) the same lighting as for training, i.e., closed measurement cell without external lighting (\(X^{l,I}\)), II) closed measurement cell with the internal LED light of the AI-Box (\(X^{l,II}\)), and III) open measurement cell with the typical external lighting of a production hall (\(X^{l,III}\)). Figure 18 depicts the internal LED light and the opened measurement cell as considered for the third scenario. The three evaluation datasets considered just one single sensor position and five exposure times for each lighting scenario (see Table 9). The models were evaluated only with the \({\text {features}}\) \(f_{1-3}^{test}\), because \(f_{4}^{test}\) could not be captured from the selected sensor position.

4.1.2 Evaluation and discussion

The sensitivity of the \({}^{q}{\mathcal{G}\mathcal{P}}_4\) (see Sect. 3.3.6) and \({}^{e}{\mathcal{G}\mathcal{P}}_{6}\) (see Sect. 3.4.8) to external light sources was evaluated with the acquired datasets of the previously mentioned lighting scenarios. The results are summarized in Table 4.

On the one hand, the \({}^{q}{\mathcal{G}\mathcal{P}}_4\) showed an encouraging and consistent performance with statistically insignificant differences across all lighting scenarios. The results are also in line with the previous validation results from Table 2 and confirm, to some measure, the robustness and usability of the \({}^{q}{\mathcal{G}\mathcal{P}}_4\) under different lighting conditions.

On the other hand, the exposure estimation model \({}^{e}{\mathcal{G}\mathcal{P}}_{6}\) showed a more significant variance across the different lighting scenarios. As could have been anticipated, the best performance is observed in scenario I, which corresponds to the same lighting conditions as the training dataset. By contrast, scenarios II and III involve a more brightly illuminated environment than the first scenario and exhibit an acceptable overestimation of the exposure time of around 2 ms to 3 ms. From these results, it can be concluded that the image exposure is not significantly affected by external light sources and that the projector can be assumed to be the dominant lighting source. Consequently, it can be assumed that the prediction accuracy of the \({}^{e}{\mathcal{G}\mathcal{P}}_{6}\) should hold for different lighting conditions as long as the projector can be considered the dominant light source.
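An overestimation of this kind can be quantified as a mean signed error between predicted and reference exposure times. The following is a minimal sketch only: the function name `mean_signed_error` and the exposure times are hypothetical and do not reproduce the values of Table 4.

```python
def mean_signed_error(predicted, reference):
    """Average of (predicted - reference).

    A positive result indicates that the model overestimates on average,
    a negative result that it underestimates.
    """
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(p - r for p, r in zip(predicted, reference)) / len(predicted)

# Hypothetical exposure times in ms (not taken from the paper).
predicted_ms = [12.0, 22.5, 33.0]
reference_ms = [10.0, 20.0, 30.0]
bias_ms = mean_signed_error(predicted_ms, reference_ms)
print(bias_ms)  # 2.5
```

Unlike the RMSE, the signed error keeps the direction of the deviation, which is what distinguishes a systematic overestimation from random scatter.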

Even though these experiments demonstrate the usability of the proposed models under different lighting conditions, a more exhaustive design of experiments comprising more observations and different light sources is necessary to assess the models’ light sensitivity and prediction limitations properly.

4.2 Transferability to an active stereo sensor

This subsection evaluates the transferability of the kernel design of the \({}^{q}{\mathcal{G}\mathcal{P}}_4\) and \({}^{e}{\mathcal{G}\mathcal{P}}_{6}\) to a different active system. The combination of a dot projector and the rc_visard 65 can be classified as a 3D active stereo sensor system (see Sect. 2.1). Such configurations are not rare in industrial applications and are even encouraged to improve the point cloud density when acquiring homogeneous surfaces. Similar to the SLS, it is assumed that the dot projector is the constant and predominant active lighting source. Hence, our study evaluates the transferability of the proposed inputs and kernels with a 3D active stereo system.

The \(\text {GP}\) models of the stereo sensor are denoted as \({}^{q}{\mathcal{G}\mathcal{P}}^{s_{2}}\) and \({}^{e}{\mathcal{G}\mathcal{P}}^{s_{2}}\). Note that the stereo system \(\text {GP}\) models were trained and evaluated independently of the SLS models.

4.2.1 Dataset

Similar to the proposed DoE from Sect. 3.2, a uniform discretization of the measurement volume was also applied for this dataset acquisition. Taking into account the storage size of one measurement and the acquisition speed of the stereo sensor, a finer discretization of the acquisition space was used (see Table 9).

The models were trained and validated considering the same \({\text {features}}\) as for the SLS. Due to the high number of acquired observations and based on the findings associated with the influence of the dataset size (cf. Figs. 22 and 23), just 20% (2693) of the observations were used for training and the rest for validation. The datasets are denoted \(X^{s_{2},train}\), \(X^{s_{2},val}\), and \(X^{s_{2},test}\).
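A split of this kind can be sketched as follows. This is a minimal illustration and not the pipeline used in the study: the function name `split_observations`, the fixed seed, and the random shuffling strategy are assumptions, since only the 20% training share is stated here.

```python
import random

def split_observations(observations, train_fraction=0.2, seed=0):
    """Shuffle and split observations into (train, validation) lists.

    Assumption: a simple random split; how the 20% training subset
    was actually drawn is not specified in this section.
    """
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must lie strictly between 0 and 1")
    indices = list(range(len(observations)))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    n_train = round(train_fraction * len(observations))
    train = [observations[i] for i in indices[:n_train]]
    val = [observations[i] for i in indices[n_train:]]
    return train, val

# Usage with placeholder observation ids instead of real measurements.
train, val = split_observations(list(range(100)))
print(len(train), len(val))  # 20 80
```

Fixing the seed keeps the split reproducible, so that models with different kernels are compared on identical training and validation subsets.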

The models’ input and target variables were generated analogously as suggested in Sect. 3.1.3. In view of the sensor’s short baseline (65 mm) and based on empirical experiments, it was assumed that the image light intensity is similar for both cameras. Hence, the \(i_{avg}\) was calculated just for the left camera of the rc_visard 65.
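The computation of a local average intensity for a single camera image can be sketched as below. The sketch assumes that \(i_{avg}\) is the arithmetic mean of the gray values inside the feature’s 2D mask; the authoritative definition is the one in Sect. 3.1.3. The function name `average_intensity` and the pixel values are hypothetical.

```python
def average_intensity(image, mask):
    """Mean gray value of the pixels selected by a binary mask.

    image: 2D list of gray values (rows of equal length).
    mask:  2D list of booleans with the same shape as image.
    Assumption: i_avg is a plain arithmetic mean over the masked pixels.
    """
    total, count = 0.0, 0
    for image_row, mask_row in zip(image, mask):
        for value, selected in zip(image_row, mask_row):
            if selected:
                total += value
                count += 1
    if count == 0:
        raise ValueError("mask selects no pixels")
    return total / count

# Hypothetical 3x3 left-camera image and feature mask.
left_image = [[10, 200, 250],
              [20, 220, 255],
              [30,  40,  50]]
feature_mask = [[False, True, True],
                [False, True, True],
                [False, False, False]]
i_avg_left = average_intensity(left_image, feature_mask)
print(i_avg_left)  # 231.25
```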

4.2.2 Quality prediction model

Figure 16 illustrates the relation between the number of normalized points (\(p_{norm}\)) and the local light intensity (\(i_{avg}\)) for two features. The curve shows, similar to the SLS, a nonlinear decrease after a certain number of points has been reached. Unlike the SLS, the stereo sensor can still capture some surface points for higher exposures. Moreover, these observations demonstrate that the same light intensity can also lead to a different number of points. Hence, it can be assumed that a more accurate prediction should be expected if spatial inputs are considered.

In the first step, a 1D model was trained using the same kernel (9) to evaluate the influence of the image exposure represented by \(i_{avg}\). The evaluation results (see Table 5), which yield an \(\text {RMSE}\) of approx. 21% for the unseen observations, indicate a similar correlation between the number of points and the local light intensity compared to the SLS. By considering a 4D model integrating positional inputs and observations within the training spatial range (\(X_{4}^{s_{2},test,i}\)), the model’s performance could be improved to an \(\text {RMSE}\) of 15%. Note that if observations outside the spatial range (extrapolation) are considered, the model accuracy falls to an \(\text {RMSE}\) of 24%. In general, these insights further confirm the high correlation of the spatial inputs with the quality of the point cloud. A more detailed visualization of the model trend and double standard deviation at different light intensities and depths is given in Fig. 25. The graphs show, similar to the SLS model (see Fig. 12), a nonlinear trend with multiple local optima depending on the spatial inputs and light intensity.
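The distinction between observations inside and outside the training spatial range can be made explicit with a simple range check. The sketch below assumes an axis-aligned bounding box over the spatial inputs; the criterion actually used to decide whether an observation lies within the training range is not detailed here, and all names and coordinates are hypothetical.

```python
def training_range(train_inputs):
    """Per-dimension (min, max) bounds of the training inputs."""
    columns = list(zip(*train_inputs))
    return [(min(col), max(col)) for col in columns]

def is_interpolation(x, bounds):
    """True if every component of x lies inside the training bounds."""
    return all(lo <= xi <= hi for xi, (lo, hi) in zip(x, bounds))

def partition_by_range(test_inputs, train_inputs):
    """Split test inputs into (interpolation, extrapolation) lists."""
    bounds = training_range(train_inputs)
    inside = [x for x in test_inputs if is_interpolation(x, bounds)]
    outside = [x for x in test_inputs if not is_interpolation(x, bounds)]
    return inside, outside

# Hypothetical spatial inputs (x, y, z) in mm.
train_xyz = [(0.0, 0.0, 400.0), (100.0, 50.0, 600.0), (50.0, 25.0, 500.0)]
test_xyz = [(20.0, 10.0, 450.0), (120.0, 10.0, 450.0), (50.0, 25.0, 650.0)]
inside, outside = partition_by_range(test_xyz, train_xyz)
print(len(inside), len(outside))  # 1 2
```

Reporting the error separately for both subsets, as done in Table 5, prevents the extrapolation cases from masking the accuracy that the model reaches within its training range.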

Furthermore, a detailed analysis showed that the model performed significantly better (up to an \(\text {RMSE}\) of 8%) on \({\text {features}}\) with bigger radii, agreeing with the average performance of the SLS model. The poorer accuracy on the smaller features can be explained by the lower resolution and point cloud density of the stereo sensor. Since the camera’s resolution directly affects the accuracy of the 2D and 3D masks, the computation of \(p_{norm}\) and \(i_{avg}\) is consequently less accurate for \({\text {features}}\) with small radii.

4.2.3 Exposure time model

Similar to the SLS, the observations on the graph displayed in Fig. 17 show mostly a linear trend between the light intensity and the exposure time for different positions. Moreover, it can also be appreciated that the curves have a high correlation with the feature’s position. For this reason, it is assumed that a \({}^{e}{\mathcal{G}\mathcal{P}}\) could also be used for the active stereo system to predict more effectively the exposure time based on the \(\text {feature}\)’s local light intensity and spatial relationships.

Analogously to the SLS, a training dataset \({}^{e}X^{s_{2}}\) was considered with a threshold of \(p_{norm}>\) 75%. The same incremental kernel design as proposed in Sect. 3.4 was followed. In the first step, a 1D kernel was considered to analyze the correlation magnitude between \(t_{exp}\) and \(i_{avg}\). The results (\(\text {R2}\) = 46.7%, \(\text {RMSE}\) = 3.6 ms) of the 1D kernel (shown in Table 5) confirm that the light intensity alone is also insufficient for setting an adequate exposure time. Hence, the model was extended first to a 4D kernel considering just positional inputs and then to a 6D kernel considering the sensor inclination. The 6D model, denoted as \({}^{e}{\mathcal{G}\mathcal{P}}_{6}^{s_{2}}\) with the kernel \({}^{e}k_{6}^{s_{2}} ({}^{e}X_{6}^{s_{2},train})\) (see Eq. 21), showed a considerable improvement on the test data as well as on the unseen observations with an \(\text {R2}\) = 65.7% and an \(\text {RMSE}\) of 2.9 ms.
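The selection of training observations by the \(p_{norm}\) threshold can be sketched as a simple filter. The dictionary keys, the function name `select_exposure_training_rows`, and the sample values are hypothetical; only the threshold of 75% is taken from the text.

```python
def select_exposure_training_rows(observations, p_norm_threshold=0.75):
    """Keep only observations whose normalized point count exceeds the threshold.

    observations: list of dicts with (hypothetical) keys
    'p_norm' (fraction in [0, 1]), 'i_avg', and 't_exp' (ms).
    """
    return [obs for obs in observations if obs["p_norm"] > p_norm_threshold]

# Hypothetical observations (values are illustrative only).
observations = [
    {"p_norm": 0.92, "i_avg": 120.0, "t_exp": 6.0},
    {"p_norm": 0.75, "i_avg": 180.0, "t_exp": 9.0},   # not strictly above 0.75
    {"p_norm": 0.40, "i_avg": 240.0, "t_exp": 14.0},
]
selected = select_exposure_training_rows(observations)
print(len(selected))  # 1
```

Filtering in this way restricts the exposure time model to exposures that actually produced a dense point cloud, so that its predictions target well-exposed acquisitions.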

Moreover, a more detailed analysis showed that a performance equivalent to the test dataset could be obtained by considering \({\text {features}}\) (\({}^{e}X_{6}^{s_{2},test,i}\)) within the training range (interpolation), i.e., \(\text {R2}\) = 97.4% and \(\text {RMSE}\) = 0.79 ms. These findings align well with our previous observations from Sect. 3.4.8, confirming that the DoE for the training dataset should consider a heterogeneous spatial distribution of the \({\text {features}}\). Furthermore, Fig. 26 confirms the nonlinear trend of the problem and the existence of local maxima and minima at different positions with different light intensities.
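For reference, the two reported metrics can be computed as sketched below. The sketch assumes the standard definitions of the root mean square error and of the coefficient of determination; the function names and sample values are hypothetical and unrelated to the results of Table 5.

```python
import math

def rmse(y_true, y_pred):
    """Root mean square error, in the unit of the target variable."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0.0:
        raise ValueError("R2 is undefined for constant targets")
    return 1.0 - ss_res / ss_tot

# Hypothetical exposure times in ms.
t_exp_true = [10.0, 12.0, 14.0, 16.0]
t_exp_pred = [11.0, 12.0, 13.0, 16.0]
print(round(rmse(t_exp_true, t_exp_pred), 3))
print(round(r2_score(t_exp_true, t_exp_pred), 3))
```

The two metrics are complementary: the RMSE states the error in milliseconds, whereas R2 relates the residual error to the spread of the observed exposure times.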

4.2.4 Discussion

The results of this subsection confirmed that spatial relationships must also be considered when adjusting the camera exposure of active 3D stereo systems. Moreover, the model evaluations showed that the quality of the point cloud and the exposure time can be predicted analogously to the SLS following the proposed data-driven approach of the present study.

The \(\text {GP}\) models of the active stereo system showed a performance comparable to that of the SLS models, demonstrating the high transferability and generalizability of the designed model inputs and \(\text {GP}\) kernels. However, it must be noted that the kernels’ validity is limited to the camera-projector configuration of the present study (cf. Sect. 2.1). For replication purposes, the suggested kernels can be used as a solid initial base. However, to optimize the accuracy of the models, a thorough and systematic kernel design should always be considered.

4.3 Different surface finishes

To evaluate the models’ performance with different surface finishes, exemplary \({\text {features}}\) from the car’s exterior were used. Figure 18 provides an overview of the selected \({\text {features}}\).

4.3.1 Dataset

To properly evaluate the \(\text {GP}\) models of both sensors, additional datasets with random sensor positions were collected for the SLS \(X^{out}\) and the active stereo sensor \(X^{s_{2},out}\). The \(\text {feature}\)s are categorized into three groups depending on their surface finishes. The dataset characteristics for both sensors are given in Table 9. The evaluation of all models comprises exclusively observations lying within the spatial range of the training dataset.

4.3.2 Quality prediction model

The results of the evaluation of the quality prediction models from both sensors are summarized in Table 6. For both sensors, it must be mentioned that the models show a performance similar to that of the test dataset (see Tables 2 and 5) for the \({\text {features}}\) \(f_{1}^{o}\)–\(f_{3}^{o}\). These results confirm the models’ accuracy and transferability to similar surfaces.

However, both models show a higher uncertainty for the \({\text {features}}\) (\(f_{4}^{o}\),\(f_{5}^{o}\)), limiting to some extent the models’ usability for this surface finish. Moreover, it should be noted that most predictions exhibited an average overestimation of \(p_{norm}\). This observation agrees with a common understanding that the model’s accuracy could be improved by considering an additional input variable representing the surface reflectance properties.

4.3.3 Exposure time model

The accuracy of the exposure time models for both sensors showed a performance similar to that of the test features from the inside of the door (compare Table 3 and Table 5 with Table 6). While for the SLS model, \({}^{e}{\mathcal{G}\mathcal{P}}_{6}\), the predictions of the first two \(\text {feature}\) groups yielded an acceptable \(\text {RMSE}\) of 20 ms, the evaluation of the \({\text {features}}\) \(f_{4-5}^{o}\) showed an outstanding performance with an \(\text {RMSE}\) below 10 ms. The stereo sensor prediction model \({}^{e}{\mathcal{G}\mathcal{P}}_{6}^{s_{2}}\), in turn, revealed an even better prediction accuracy compared to the test \({\text {features}}\) from the door’s inside, with an average \(\text {RMSE}\) under 2 ms. These results also seem plausible, given that the radii of the outside \({\text {features}}\) were bigger than those from the inside.

On the one hand, our experiments showed that the SLS is more sensitive to the surface reflectance properties. For this reason, it can be expected that the model performance could be improved by integrating an input representing the surface reflectivity. On the other hand, the active stereo sensor seemed less sensitive to the surface reflectivity, showing better model generalizability for similar surfaces. Given the results from Sect. 3.4.5, it is also reasonable to assume that a 6D model trained with the interior door surface would outperform a 1D model trained on each specific surface from the outside.

4.3.4 Discussion

The evaluation results in this subsection demonstrate that the performance of the respective sensors’ \({}^{q}{\mathcal{G}\mathcal{P}}\) and \({}^{e}{\mathcal{G}\mathcal{P}}\) carries over to similar surfaces to some extent. While the surface reflectance properties seem to have little influence on the \({}^{q}{\mathcal{G}\mathcal{P}}\)s’ performance, the material reflectivity showed a higher correlation with the exposure time, affecting the \({}^{e}{\mathcal{G}\mathcal{P}}\)s’ prediction accuracy more significantly. Hence, our results suggest that the models’ performance should improve by considering an input variable characterizing the object’s reflectance properties. Such investigations fall outside the scope of this paper. Therefore, future studies should consider a more exhaustive evaluation including different surface finishes with more observations.

5 Conclusion

A well-known challenge when using active optical 3D sensors (e.g., SLSs, active stereo systems) for scanning an object’s surface is to adjust the image brightness using the camera’s exposure time to produce a dense point cloud without holes. This task becomes even more complicated in dealing with highly reflective materials with a complex surface topology that produce nonlinear and inhomogeneous illumination for the camera image. Therefore, the optimal parameterization of the sensor for such use cases is still done manually, as it requires an advanced multisensory perception (spatial and visual) of the acquisition environment.

5.1 Summary