Abstract

Cognitive biases are systematic cognitive distortions, which can affect clinical reasoning. The aim of this study was to unravel the most common cognitive biases encountered in the peculiar context of the COVID-19 pandemic. Case study research design. Primary care. Single centre (Division of General Internal Medicine, University Hospitals of Geneva, Geneva, Switzerland). A short survey was sent to all primary care providers (N = 169) taking care of hospitalised adult patients with COVID-19. Participants were asked to describe cases in which they felt that their clinical reasoning was “disrupted” because of the pandemic context. Seven cases were sufficiently complete to be analysed. A qualitative analysis of the clinical cases was performed and a bias grid encompassing 17 well-known biases was created. The clinical cases were analyzed to assess the likelihood (highly likely, plausible, not likely) of the different biases for each case. The most common biases were: “anchoring bias”, “confirmation bias”, “availability bias”, and “cognitive dissonance”. The pandemic context is a breeding ground for the emergence of cognitive biases, which can influence clinical reasoning and lead to errors. Awareness of these cognitive mechanisms could potentially reduce biases and improve clinical reasoning. Moreover, the analysis of cognitive biases can offer an insight into the functioning of the clinical reasoning process in the midst of the pandemic crisis.

Introduction

The COVID-19 pandemic had a major impact on the clinical reasoning processes of physicians and healthcare professionals; the term “COVID blindness” has recently been used to define this issue [1]. Clinical reasoning can be defined as “the sum of the thinking and decision-making processes associated with clinical practice (…) and it enables practitioners to take (…) the best judged action in a specific context” [2]. The pandemic context is overshadowed by multiple uncertainties, such as the ill-defined, still ongoing construction of COVID-19 illness scripts. The “illness script”, i.e. the organised representation of an illness in the provider’s mind, is a knowledge structure composed of 4 parts: “Fault” (i.e. the pathophysiological mechanisms), “Enabling Conditions” (i.e. signs and symptoms), “Consequences”, and “Management” [3,4,5,6].

Illness scripts are embedded within local, organisational, socio-cultural and global factors, which are referred to as “problem space” [2, 7] or, metaphorically, as a “maze of mental activity through which individuals wander when searching for a solution to a problem” [8].

Further difficulties can arise when healthcare professionals are under a great deal of pressure to make decisions quickly. Time pressure is indeed a stress factor that can negatively impact diagnostic accuracy, as it can limit the number of hypotheses a physician can make [9]. Patients with COVID-19 pneumonia can worsen rapidly and require urgent care, which critically reduces the time available for the clinical reasoning process.

These contextual factors can provide a breeding ground for cognitive biases and errors in judgment and decision-making. The concept of cognitive bias was first introduced by Tversky and Kahneman in their seminal 1974 Science paper [10]; at first extensively studied in the field of psychology, the notion of cognitive bias has been explored in various fields, including medicine. Cognitive biases can be defined as systematic cognitive distortions (misleading and false logical thought patterns) in the processing of information that can result in impaired judgement, especially when dealing with large amounts of information or when time is limited, and thereby affect decision-making [11,12,13,14]; according to Wilson and Brekke [15], cognitive biases are, in a nutshell, “mental contaminations”, capable of driving unwanted responses because of unconscious or uncontrollable mental processing.

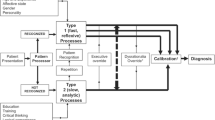

Clinical reasoning can be conceptualized through the “dual process” theory (or model) [16, 17]. Stemming from the field of psychology (William James, 1890), this theory has been increasingly employed to illustrate medical reasoning since the 1970s. According to this model, two types of mental processes exist and work jointly. These are called System 1 (Type 1), which is fast, non-analytical, intuitive and heavily based on pattern recognition, and System 2 (Type 2), which is slow, analytical and hypothetico-deductive, involving a conscious, explicit analytical approach [18]. Diagnostic errors can occur within both systems of thinking [12, 19]. Recently, a scoping review explored the state of research on clinical reasoning in emergency medicine (of note, only a few studies focused on clinical reasoning per se, and most were treatment oriented) [20]. This review showed that the clinical reasoning process of emergency physicians is similar to that of other specialties, such as family medicine and anaesthesiology, but with contextual peculiarities.

Reasoning and decision-making in emergency situations have to be fast; this speed can sometimes hinder decision-making and lead to the resurgence of certain cognitive biases (anchoring bias, for example). A few key studies have deciphered the implications of such biases for the decision-making of physicians [21, 22].

The COVID-19 pandemic, characterised by a number of clinical, diagnostic and therapeutic uncertainties, and at the same time by hospital overload with critical patients, can disrupt physicians’ clinical reasoning. Working in such a context can easily lead to errors attributable not only to inadequate knowledge, but also to cognitive biases [12, 18, 23].

This study is a case analysis aimed at unravelling the most commonly encountered cognitive biases in a hospital setting in the peculiar context of the COVID-19 pandemic.

Materials and methods

Given the exploratory nature of this study, and to allow an in-depth analysis to bring forward new hypotheses, an analysis of several cases was chosen. A case study research design was used to investigate the cognitive biases that might arise during a health crisis. According to Hartley [24] and Yin [25], a case study is an empirical inquiry that investigates a current phenomenon within its real-life context, especially when the boundaries are not obvious. This makes this approach a tentative measure of the phenomena [26]. The use of multiple sources of evidence led us to create a small case study database, which led to what Yin would name “maintaining the chain of evidence” [27].

Data collection

To explore whether the COVID-19 pandemic could affect clinical reasoning, one of the researchers (MC) sent a short survey in May 2020 to all residents and chief residents taking care of adult patients with a diagnosis of SARS-CoV-2 infection admitted to the Division of General Internal Medicine of the University Hospitals of Geneva. MC asked them to describe clinical cases in which they felt that their clinical reasoning was “disrupted” because of the unique context of the pandemic. Participants were not given a text-length limit for reporting their clinical case. All participants gave informed consent to participate in the study.

Bias grid design and analysis

A trio (MC, JS, MN) with prior expertise in the understanding of cognitive structures and of clinical reasoning processes performed a qualitative analysis of the clinical cases. Using a hybrid approach (deductive and inductive), the trio created a bias grid (Table 1) encompassing a dozen cognitive biases most frequently observed in the medical setting (Table 1, N 1 to 12) [12, 16, 17, 28] as well as some well-known additional biases not frequently encountered in the medical literature (Table 1, N 13 to 17) that were identified after reading the clinical cases [9,10,11, 29].

The members of the trio independently analyzed the clinical cases and assessed the likelihood (highly likely, plausible, not likely) of the different biases for each case. The results of the three distinct analyses were merged together. In case of disagreement between the researchers, the trio re-read and re-analyzed the case reports, and an agreement was achieved. The results were finally crosschecked by two additional researchers (NJ and MCA) before final decisions.
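The merging step described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the procedure actually used by the authors: the function name `merge_ratings`, the data layout and the toy ratings are assumptions, and the ratings shown are invented for the example rather than taken from Table 3. The sketch keeps cells on which all raters agree and lists the others for joint re-reading, since consensus in the study was reached by discussion rather than by an automatic rule.

```python
LEVELS = ("not likely", "plausible", "highly likely")


def merge_ratings(ratings_by_rater):
    """Merge independent rating grids (hypothetical layout).

    ratings_by_rater maps a rater's initials to a dict that maps
    (case_number, bias_name) to one of LEVELS.
    Returns (agreed, to_discuss): cells with unanimous ratings, and
    cells with diverging ratings that need joint re-analysis.
    """
    cells = set()
    for grid in ratings_by_rater.values():
        cells.update(grid)

    agreed, to_discuss = {}, {}
    for cell in sorted(cells):
        votes = []
        for rater, grid in ratings_by_rater.items():
            if cell not in grid:
                raise ValueError(f"{rater} did not rate {cell}")
            if grid[cell] not in LEVELS:
                raise ValueError(f"unknown level {grid[cell]!r} for {cell}")
            votes.append(grid[cell])
        if len(set(votes)) == 1:
            agreed[cell] = votes[0]      # unanimous: keep as is
        else:
            to_discuss[cell] = votes     # disagreement: re-read the case
    return agreed, to_discuss


# Toy ratings, invented for illustration only.
toy = {
    "MC": {(6, "cognitive dissonance"): "highly likely",
           (6, "visceral bias"): "not likely"},
    "JS": {(6, "cognitive dissonance"): "highly likely",
           (6, "visceral bias"): "plausible"},
    "MN": {(6, "cognitive dissonance"): "highly likely",
           (6, "visceral bias"): "not likely"},
}
agreed, to_discuss = merge_ratings(toy)
```

The sketch deliberately avoids a majority vote: diverging cells are only flagged, so that the final rating remains the outcome of the raters' discussion.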

Results

Out of the 169 physicians contacted, 9.4% (10; 5 females; 4 chief residents, 6 residents) provided a case. Seven reported clinical cases (approximately 200 words long each) were sufficiently complete to be suitable for analysis. Physicians who provided these cases agreed with the use and publication of the anonymised data. Transcriptions of the clinical cases are provided in Table 2. The likelihood of all cognitive biases for each referred clinical case is shown in Table 3. The most common, “highly likely”, cognitive biases that stem from our analysis were cognitive dissonance (6/7), premature closure (6/7), availability bias (5/7), confirmation bias (5/7), and anchoring bias (4/7); moreover, cognitive dissonance and premature closure co-occurred as “highly likely” in 5/7 cases.
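Frequencies such as “6/7” and co-occurrences of two biases can be derived mechanically from a case-by-bias grid. The following Python sketch shows one possible way to do so; the function name `highly_likely_counts`, the grid layout and the three toy cases are assumptions made for illustration and do not reproduce the data of Table 3.

```python
from collections import Counter
from itertools import combinations


def highly_likely_counts(grid):
    """Count 'highly likely' ratings per bias and per pair of biases.

    grid maps a case label to a dict {bias_name: likelihood level}.
    Returns (counts, pairs), two Counters: how many cases flag each bias,
    and how many cases flag both biases of each (alphabetically sorted) pair.
    """
    counts, pairs = Counter(), Counter()
    for ratings in grid.values():
        flagged = sorted(bias for bias, level in ratings.items()
                         if level == "highly likely")
        counts.update(flagged)
        pairs.update(combinations(flagged, 2))
    return counts, pairs


# Toy grid, invented for illustration only (not the study data).
toy_grid = {
    "case A": {"cognitive dissonance": "highly likely",
               "premature closure": "highly likely",
               "anchoring bias": "plausible"},
    "case B": {"cognitive dissonance": "highly likely",
               "premature closure": "not likely",
               "anchoring bias": "highly likely"},
    "case C": {"cognitive dissonance": "plausible",
               "premature closure": "highly likely",
               "anchoring bias": "not likely"},
}
counts, pairs = highly_likely_counts(toy_grid)
n_cases = len(toy_grid)
summary = {bias: f"{n}/{n_cases}" for bias, n in counts.items()}
```

In this toy grid, cognitive dissonance and premature closure are each flagged in 2/3 cases and co-occur in one of them; applied to a full grid, the same counts would yield figures in the form reported here.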

Cognitive dissonance can be defined as the psychological discomfort encountered when simultaneous thoughts are in conflict with each other, suggesting an incorrect diagnosis. It was encountered, for example, in case 6, in which doctors felt certain of a diagnosis of COVID-19 and discounted multiple pieces of evidence suggesting that the diagnosis was incorrect (e.g. an atypical clinical course and several negative nasopharyngeal swabs, expectorations and serologies, not supporting the initial diagnosis). The following verbatim illustrates this dissonance: “often […] we asked ourselves about his (scil. the patient) safety, in a COVID-19 unit with his negative tests”.

Availability bias and confirmation bias occurred in 5/7 clinical cases. Availability bias, i.e. to consider a diagnosis more likely because it readily comes to mind, could be easily recognized in case 1 (verbatim: “A patient in his 70ies… coming from Italy… ‘Unluckily’ […] at that time the epicentre of COVID-19. He was taken to intensive care in a COVID-19 area”), case 3 (verbatim: “[scil. patient was…] In the emergency department […] his symptoms: asthenia and mild dyspnea (possibly a ‘small’ COVID-19)”), and case 7 (verbatim: “A man in his seventies consulted the emergency department because of a generalized weakness, fatigue and fever […] The patient […] was transferred to a normal medical ward for stable patients with COVID-19”).

Confirmation bias (i.e. to look only for symptoms or signs that may confirm a diagnostic hypothesis) was present in case 5, where doctors appeared to interpret clinical findings only to support a previous diagnostic hypothesis (verbatim: “Blood smears identified Plasmodium falciparum and she was started on IV artesunate […] Three days after, parasitemia was negative […] On day 4 the patient presented fever […] this could have been still attributable to malaria […]”).

Premature closure, i.e. to fail to consider reasonable alternatives after an initial diagnosis, was a major reason for the right diagnosis to go undetected. For example, in case 1, symptoms (orthopnoea), imaging findings (venous diversion on chest X-ray), and predisposing factors (ischemic/rhythmic heart disease) pointing to acute pulmonary oedema were neglected in favour of a diagnosis of SARS-CoV-2 infection, despite a negative C-reactive protein value (i.e. no inflammation) and several negative SARS-CoV-2 RT-PCR tests on nasopharyngeal swabs. In case 7, although clinical features (high fever, tachycardia, confusion, heart murmur, Osler nodules) and predisposing factors (biological heart-valve prostheses) suggested infectious endocarditis, a diagnosis of COVID-19 pneumonia was rapidly reached at first, even though respiratory parameters were normal and SARS-CoV-2 RT-PCR on nasopharyngeal swabs was negative.

Anchoring bias (i.e. “To be unable to adjust the initial diagnostic hypothesis when further information becomes available”) could be recognised in 4/7 clinical cases, for example in case 4 (verbatim: “Initially we suspected COVID-19. The initial investigations were concentrated on the viral etiology. Given the rapid deterioration of his condition, the effusions which recurred, the decline in renal function […] and 2 negative SARS-CoV-2 RT-PCR in nasopharyngeal swab… he was eventually transferred to a non-COVID-19 hospital […]”).

The cognitive biases that were the least likely in the analysed cases were visceral bias, choice overload bias and decision fatigue (evaluated as “not likely” in 6/7, 6/7 and 4/7 cases, respectively).

Cognitive dissonance and premature closure were associated with default bias and in-group bias in 3/7 clinical cases. In case 6, for example, where both cognitive dissonance and premature closure were “highly likely”, default bias (i.e. to stick with previously made decisions, regardless of changing circumstances) and in-group bias/favouritism (i.e. to favour those who belong to one’s own group) could be easily evoked. Not only did the doctors adhere to the prior hypothesis despite scant evidence (verbatim: “[…] in the face of uncertainty, the patient remains in a COVID-19 unit”), but the hypothesis was also judged “positively” by the group (verbatim: “Often, with the infectious disease specialists and the resident, we asked ourselves […]”), discounting the validity of information in favour of an alternative diagnosis.

Discussion

Stressful situations (e.g. characterized by time pressure, high stakes, multitasking, fatigue, patient overload) [30] are particularly common in the intensive care unit (ICU) and the operating room (defined as “hotbeds for human error”) [31]. Likewise, emergency medicine, characterized by “poor access to information and with limited time to process it” [32], is potentially “a natural laboratory of error” [33].

Among the cognitive and affective factors (either organization- or individual-related) that can act as error‐catalyzing factors [34], cognitive biases, i.e. systematic cognitive distortions [11,12,13,14,15, 17, 35], are associated with diagnostic inaccuracies and flawed clinical decisions [21, 22, 36]. Interestingly, stress can exacerbate biases by affecting the emotion-cognition balance [37]. Likewise, a stressor like time pressure can limit the number of hypotheses a physician can make; this is strongly suggestive of a premature closure of the diagnostic process [9].

A broad assessment of cognitive bias in the emergency setting has seldom been undertaken. We could identify only one original paper [38] that explored the most common biases in emergency physicians. Using the Rationality Quotient™ Test, the authors found that, although common, cognitive biases were less represented in emergency physicians than in laypersons (in particular blind spot and representative bias).

The COVID-19 pandemic shares similarities with the emergency setting, inasmuch as it is a stressful context dominated by uncertainty [7, 39]. A literature search focused on the occurrence of cognitive biases in this pandemic context retrieved only a few original articles (mostly position papers, reviews, case reports and small case series). In particular, the study by Lucas et al. [40] investigated patients upgraded to the ICU following admission to non-critical care units during the first wave of the COVID-19 pandemic (March to July 2020; N = 18) in a US hospital. A group of physicians was asked to analyse the cases and to assign one cognitive bias (chosen among availability bias, anchoring bias, premature closure, and confirmation bias). They concluded that premature closure (72.2%), anchoring (61.1%), and confirmation bias (55.6%) were the most likely to be “responsible” for the patients’ upgrades.

Unlike Lucas et al. [40], we did not focus exclusively on patients upgraded to the ICU: all patients hospitalised for COVID-19 were potentially worthy of analysis. Also, employing a hybrid approach involving deductive (theoretical) and inductive (data-driven) processes for bias grid construction allowed additional biases to emerge from the study of cases. This approach is particularly useful to capture the richness and complexity of bias research [41].

To the best of our knowledge, our study is the first to perform a broad investigation of the types of biases that occurred during the COVID-19 pandemic, and of how they can affect decision-making. Moreover, we also analysed how cognitive biases interact and influence each other.

The concept of cognitive dissonance was introduced by the psychologist Leon Festinger in 1957 [42]. Cognitive dissonance bias occurs when contradictory cognitions intersect, thus producing discomfort and underlying tension. To minimize these feelings, avoidance and/or rejection responses are often elicited; this can manifest, as in the collected cases, by discounting or ignoring information (e.g. negative SARS-CoV-2 tests, atypical clinical course) that disconfirms a previous hypothesis (e.g. a COVID-19 diagnosis).

It is not surprising that premature closure was rated “highly likely” as frequently as cognitive dissonance. Indeed, premature closure is known to be encountered more commonly than any other type of cognitive bias, at least in medicine, and it is linked to a high proportion of diagnostic errors [43,44,45,46,47]. In the “liquid times” of the pandemic (i.e. times characterized by rapid change, where the only constant is change itself, according to Bauman [48, 49]), time pressure can be particularly detrimental and force a premature rejection of alternatives, thus leading to premature diagnostic closure. Indeed, inferring (i.e. formulating a hypothesis when clear deductions are not available) [50] that a diagnosis is possible is less time consuming than identifying all the aspects of the non-preferred, yet possible, alternatives (inference of impossibility) [51, 52]. One can conceive that premature closure can arise from an attempt to avoid cognitive dissonance by prematurely rejecting other possible, but “dissonant”, diagnoses; indeed, premature closure frequently co-occurred with the bias of cognitive dissonance.

The association of cognitive dissonance and premature closure with in-group bias (or in-group favoritism) is understandable, inasmuch as adhering to ideas coming from members of one's in-group can help overcome the discomfort produced by contradictory information and prematurely close on a “shared”, but wrong, diagnosis. This can be seen, for example, in case 6 where, despite uncomfortable uncertainties regarding the patient’s COVID status, it was decided by the in-group peers not to move the patient to another “non-COVID” unit. According to the psychologist Irving L. Janis, deep involvement in a cohesive in-group can lead to groupthink, whereby the striving for conformity or harmony amongst the members of the in-group can “override their motivation to realistically appraise alternative courses of action” [53]; this phenomenon can result in irrational decision-making [54].

Cognitive dissonance and premature closure also tended to associate with default (or status quo) bias, i.e. when people stick to previously made decisions and/or prefer things to stay the same by doing nothing (inertia), in spite of changing circumstances [55]. Time pressure can foster this bias, as recently suggested by Couto et al. [56]. Moreover, preferring status quo options can serve to reduce the negative emotions connected to choice making (anticipatory emotions) [57, 58]. Adhering to a status quo can be seen as a coping strategy against the stressful and “cognitively expensive” situation of decision making in the “liquid times” of the pandemic, dominated by unpredictability and uncertainty and involving conflicting attitudes, beliefs or behaviors (i.e. cognitive dissonance). In this context, premature closure is a rapid way to preserve a “no change” attitude.

Availability bias is a mental shortcut (heuristic) that speeds up decision-making, since it reduces the time and effort involved [16, 17]. It often occurs as a consequence of recent experiences with similar clinical cases [29]. In such situations, physicians tend to weight their clinical reasoning toward more recent information that comes easily to mind [59]. During the pandemic waves, physicians were exposed to multiple patients with similar clinical features in rapid succession; this is indeed a suitable condition for the occurrence of availability bias (see case 3 above).

Confirmation bias is the tendency to give greater weight to data that support a preliminary diagnostic assumption while failing to seek, or dismissing, evidence contradictory to the favored hypothesis [60]. This bias can lead to premature closure and become a source of error. It has been suggested that confirmation bias can arise from an attempt to avoid cognitive dissonance [61].

Closely related to confirmation bias is anchoring bias [60]. This bias occurs when interpreting evidence, and refers to the tendency of physicians to prioritize information and data supporting their initial diagnosis, making them unable to adjust their initial hypothesis when further information becomes available. This anchoring can eventually lead to wrong decisions, as in clinical case 1.

It is worth noticing that, akin to our study, the most frequent biases in Lucas’ study [40] were premature closure, anchoring bias and confirmation bias—and this notwithstanding the methodological and conceptual differences.

We can thus hypothesize that the pandemic context had the potential to heavily influence the clinical reasoning processes of physicians, and that each clinical case carried different embedded risks for cognitive biases.

Different strategies of cognitive “debiasing” (i.e. the mental correction of a mental contamination) [15] have been suggested [11, 12, 29, 62, 63]. Among these, deliberate reflection (i.e. looking for evidence that fits or contradicts an initial diagnostic hypothesis) has shown some success [64], while the efficacy of other approaches still requires further investigation.

Debiasing is far from being simple, satisfying, and effective. The reasons are many [12, 35]: Type 2 thinking is not unequivocally less prone to cognitive bias than Type 1 thinking; debiasing involves meta-awareness and meta-cognition [65], i.e. self-diagnostic processes that are per se error prone [66]; bias identification is unreliable, even among experts, and is often itself biased (hindsight bias) [67]. Paraphrasing Kahneman, despite a lifetime spent studying bias, we are far better at recognizing the errors of others than our own [68].

Moreover, one should not forget that the construction of the “illness script” of COVID-19 is still ill defined and ongoing. Until solid knowledge and understanding of the disease is built up, and knowledge gaps are filled, clinical reasoning cannot be but flawed [21, 66, 69,70,71].

Learning which biases arise, and how, is not a golden hammer to mitigate errors in clinical reasoning. However, this awareness remains “interesting, humbling and motivating” [66], and may facilitate a much desirable shift in medical culture: improving the tolerance to uncertainty in clinical life [72, 73].

Study’s strengths and limitations

One of the key strengths of this study is that it has allowed us to explore, through an empirical design, which cognitive biases were at play in a real clinical context, and has deepened our understanding of how they interact in these unforeseen and ever-changing contexts.

This study has some limitations. Participants were asked to describe clinical cases in which they felt their clinical reasoning could have gone awry. The way they described their cases could thus potentially reflect their own perception of cognitive biases. The overall response rate was low (9.4%), in an expected range for medical practitioners [74, 75]. This could be attributed to the context of the COVID-19 health crisis (particularly time pressure), as well as to the effort required from participants, who were asked to analyse and describe their clinical cases. Additionally, participants may have had no awareness of potential clinical reasoning problems arising when taking care of patients with possible COVID-19. We also solicited a large number of physicians, without specific targeting, which explains the large denominator.

This case study design is exploratory in nature and rooted in an empirical inquiry, in keeping with what Yin described as “the chain of evidence” [27]. This allows the exploration of the clinical reasoning process at play in the underlying context of the pandemic, and of how this context might hinder the decision-making process. Our results can, therefore, be seen as clues to pursue and further investigate.

Conclusions

Cognitive biases are part of everyday medical practice. The analysis of clinical cases in the peculiar context of the COVID-19 pandemic has highlighted several biases that can affect clinical reasoning and lead to errors. The emergence of cognitive biases in this new context, which shares similarities with emergency medicine, has never been assessed before and offers an insight into the functioning of the “clinical brain” in the midst of the pandemic crisis.

Although physicians should be aware of these cognitive mechanisms in order to potentially reduce biases and improve clinical reasoning, this is far from being sufficient. Knowledge and experience matter. For the moment, the illness script of COVID-19 is still under construction, and we may hope for a mitigation of clinical errors as knowledge deficits are filled.

Data statement section

Dataset available from the corresponding author at matteo.coen@hcuge.ch.

References

Brown L (2020) COVID blindness. Diagnosis 7(2):83–84. https://doi.org/10.1515/dx-2020-0042

Higgs J, Jones M (2008) Clinical decision making and multiple problem spaces. In: Higgs J, Jones M (eds) Clinical reasoning in the health professions, 3rd edn. Butterworth-Heinemann Ltd, Oxford

Feltovich P, Barrows H (1984) Issues of generality in medical problem-solving. In: Schmidt HG (ed) Tutorials in problem-based learning. Van Gorcum, Assen

Custers E, Boshuizen H, Schmidt H (1988) The role of illness scripts in the development of medical diagnostic expertise: results from an interview study. Cogn Instr 16(4):367–398

Charlin B, Boshuizen HP, Custers EJ et al (2007) Scripts and clinical reasoning. Med Educ 41(12):1178–1184. https://doi.org/10.1111/j.1365-2923.2007.02924.x

Keemink Y, Custers E, van Dijk S et al (2018) Illness script development in pre-clinical education through case-based clinical reasoning training. Int J Med Educ 9:35–41. https://doi.org/10.5116/ijme.5a5b.24a9

Audétat M-C, Sader J, Coen M (2020) Clinical reasoning and COVID 19 pandemic: current influencing factors Let us take a step back! Internal Emerg Med. https://doi.org/10.1007/s11739-020-02516-8

Lesgold A (1988) Problem solving. In: Sternberg RJS, Edward E (eds) The psychology of human thought. Cambridge University Press, Cambridge

Al-Qahtani DA, Rotgans JI, Mamede S et al (2018) Factors underlying suboptimal diagnostic performance in physicians under time pressure. Med Educ 52(12):1288–1298. https://doi.org/10.1111/medu.13686

Tversky A, Kahneman D (1974) Judgment under uncertainty: heuristics and biases. Science 185(4157):1124. https://doi.org/10.1126/science.185.4157.1124

Croskerry P, Singhal G, Mamede S (2013) Cognitive debiasing 1: origins of bias and theory of debiasing. BMJ Qual Saf 22(Suppl 2):ii58–ii64. https://doi.org/10.1136/bmjqs-2012-001712

Nendaz M, Perrier A (2012) Diagnostic errors and flaws in clinical reasoning: mechanisms and prevention in practice. Swiss Med Wkly 142:w13706. https://doi.org/10.4414/smw.2012.13706

Mamede S, Schmidt HG, Rikers RM et al (2008) Influence of perceived difficulty of cases on physicians’ diagnostic reasoning. Acad Med 83(12):1210–1216. https://doi.org/10.1097/ACM.0b013e31818c71d7

Tversky A, Kahneman D (1981) The framing of decisions and the psychology of choice. Science 211(4481):453–458. https://doi.org/10.1126/science.7455683

Wilson TD, Brekke N (1994) Mental contamination and mental correction: unwanted influences on judgments and evaluations. Psychol Bull 116(1):117–142. https://doi.org/10.1037/0033-2909.116.1.117

Norman G (2009) Dual processing and diagnostic errors. Adv Health Sci Educ 14(1):37–49. https://doi.org/10.1007/s10459-009-9179-x

Norman GR, Eva KW (2010) Diagnostic error and clinical reasoning. Med Educ 44(1):94–100. https://doi.org/10.1111/j.1365-2923.2009.03507.x

Pelaccia T, Tardif J, Triby E et al (2011) An analysis of clinical reasoning through a recent and comprehensive approach: the dual-process theory. Med Educ. https://doi.org/10.3402/meo.v16i0.5890

Monteiro S, Sherbino J, Sibbald M et al (2020) Critical thinking, biases and dual processing: The enduring myth of generalisable skills. Med Educ 54(1):66–73. https://doi.org/10.1111/medu.13872

Pelaccia T, Plotnick LH, Audétat MC et al (2020) A scoping review of physicians’ clinical reasoning in emergency departments. Ann Emerg Med 75(2):206–217. https://doi.org/10.1016/j.annemergmed.2019.06.023

Norman GR, Monteiro SD, Sherbino J et al (2017) The causes of errors in clinical reasoning: cognitive biases, knowledge deficits, and dual process thinking. Acad Med 92(1):23–30. https://doi.org/10.1097/acm.0000000000001421

Croskerry P (2009) Context is everything or how could I have been that stupid? Healthc Q. https://doi.org/10.12927/hcq.2009.20945

Kahneman D, Klein G (2009) Conditions for intuitive expertise: A failure to disagree. Am Psychol 64(6):515–526. https://doi.org/10.1037/a0016755

Hartley J (2004) Case study research. In: Cassell CSG (ed) Essential guide to qualitative methods in organizational research. Sage, London, pp 323–333

Yin R (2014) Case Study Research Design And Methods, 5th edn. Sage, Thousand Oaks

Kohlbacher F (2006) The Use of Qualitative Content Analysis in Case Study Research. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 7(1)

Yin R (2003) Applications of case study research, 3rd edn. Sage, Thousand Oaks

Ark TK, Brooks LR, Eva KW (2007) The benefits of flexibility: the pedagogical value of instructions to adopt multifaceted diagnostic reasoning strategies. Med Educ 41(3):281–287. https://doi.org/10.1111/j.1365-2929.2007.02688.x

Mamede S, Van Gog T, Van Den Berge K et al (2010) Effect of availability bias and reflective reasoning on diagnostic accuracy among internal medicine residents. JAMA 304(11):1198. https://doi.org/10.1001/jama.2010.1276

Croskerry P (2014) ED cognition: any decision by anyone at any time. CJEM 16(01):13–19. https://doi.org/10.2310/8000.2013.131053

Bogner M (1994) Introduction. Lawrence Erlbaum Associates, Hillsdale

Croskerry P (2003) The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med 78(8):775–780

Croskerry P, Sinclair D (2001) Emergency medicine: a practice prone to error? CJEM 3(4):271–276. https://doi.org/10.1017/s1481803500005765s

Seshia SS, Bryan Young G, Makhinson M et al (2018) Gating the holes in the Swiss cheese (part I): Expanding professor Reason’s model for patient safety. J Eval Clin Pract 24(1):187–197. https://doi.org/10.1111/jep.12847

Gopal DP, Chetty U, O’Donnell P et al (2021) Implicit bias in healthcare: clinical practice, research and decision making. Future Healthc J 8(1):40–48. https://doi.org/10.7861/fhj.2020-0233

Saposnik G, Redelmeier D, Ruff CC et al (2016) Cognitive biases associated with medical decisions: a systematic review. BMC Med Inform Decis Mak 16(1):138. https://doi.org/10.1186/s12911-016-0377-1

Yu R (2016) Stress potentiates decision biases: a stress induced deliberation-to-intuition (SIDI) model. Neurobiol Stress 3:83–95. https://doi.org/10.1016/j.ynstr.2015.12.006

Pines JM, Strong A (2019) Cognitive biases in emergency physicians: a pilot study. J Emerg Med 57(2):168–172. https://doi.org/10.1016/j.jemermed.2019.03.048

Martínez-Sanz J, Pérez-Molina JA, Moreno S et al (2020) Understanding clinical decision-making during the COVID-19 pandemic: a cross-sectional worldwide survey. EClinicalMedicine 27:100539. https://doi.org/10.1016/j.eclinm.2020.100539

Lucas NV, Rosenbaum J, Isenberg DL et al (2021) Upgrades to intensive care: the effects of COVID-19 on decision-making in the emergency department. Am J Emerg Med 49:100–103. https://doi.org/10.1016/j.ajem.2021.05.078

Boyatzis R (1998) Transforming qualitative information: thematic analysis and code development. Sage, Thousand Oaks

Festinger L (1957) A theory of cognitive dissonance. Stanford University Press, Stanford

Berner ES, Graber ML (2008) Overconfidence as a cause of diagnostic error in medicine. Am J Med 121(5 Suppl):S2-23. https://doi.org/10.1016/j.amjmed.2008.01.001

Schwartz AEA (2008) Clinical reasoning in medicine. In: Higgs JJM, Loftus S, Christensen N (eds) Clinical reasoning in the health professions. Elsevier, Edinburgh

Voytovich AE, Rippey RM, Suffredini A (1985) Premature conclusions in diagnostic reasoning. J Med Educ 60(4):302–307. https://doi.org/10.1097/00001888-198504000-00004

Kuhn GJ (2002) Diagnostic errors. Acad Emerg Med 9(7):740–750. https://doi.org/10.1111/j.1553-2712.2002.tb02155.x

Dubeau CE, Voytovich AE, Rippey RM (1986) Premature conclusions in the diagnosis of iron-deficiency anemia: cause and effect. Med Decis Mak 6(3):169–173. https://doi.org/10.1177/0272989x8600600307

Trede FHJ (2019) Collaborative decision making in liquid times. In: Higgs J, Jones M (eds) Clinical reasoning in the health professions. Elsevier, Edinburgh

Bauman Z (2012) Liquid modernity. Polity Books, Cambridge

Higgs JJM (2019) Multiple spaces of choice, engagement and influence in clinical decision making. In: Higgs JJG, Loftus S, Christensen N (eds) Clinical reasoning in the health professions, 4th edn. Elsevier, New York

Steinbruner JD (1974) The cybernetic theory of decision. Princeton University Press, Princeton

Khemlani SS, Johnson-Laird PN (2017) Illusions in reasoning. Minds Mach 27(1):11–35. https://doi.org/10.1007/s11023-017-9421-x

Janis IL (1972) Victims of groupthink: a psychological study of foreign-policy decisions and fiascoes. Houghton Mifflin, Oxford

Turner ME, Pratkanis AR (1998) A social identity maintenance model of groupthink. Organ Behav Hum Decis Process 73(2):210–235. https://doi.org/10.1006/obhd.1998.2757

Samuelson W, Zeckhauser R (1988) Status quo bias in decision making. J Risk Uncertain 1(1):7–59. https://doi.org/10.1007/BF00055564

Couto J, van Maanen L, Lebreton M (2020) Investigating the origin and consequences of endogenous default options in repeated economic choices. PLoS ONE 15(8):e0232385. https://doi.org/10.1371/journal.pone.0232385

Riis J, Schwarz N (2000) Status quo selection increases with consecutive emotionally difficult decisions. Poster presented at the meeting of the Society for Judgment and Decision Making, New Orleans, LA

Anderson CJ (2003) The psychology of doing nothing: forms of decision avoidance result from reason and emotion. Psychol Bull 129(1):139–167. https://doi.org/10.1037/0033-2909.129.1.139

Wellbery C (2011) Flaws in clinical reasoning: a common cause of diagnostic error. Am Fam Physician 84(9):1042–1048

Elston DM (2020) Confirmation bias in medical decision-making. J Am Acad Dermatol 82(3):572. https://doi.org/10.1016/j.jaad.2019.06.1286

Mithoowani S, Mulloy A, Toma A et al (2017) To err is human: a case-based review of cognitive bias and its role in clinical decision making. Canad J Gen Internal Med. https://doi.org/10.22374/cjgim.v12i2.166

Mamede S, Schmidt HG, Penaforte JC (2008) Effects of reflective practice on the accuracy of medical diagnoses. Med Educ 42(5):468–475. https://doi.org/10.1111/j.1365-2923.2008.03030.x

Graber ML, Sorensen AV, Biswas J et al (2014) Developing checklists to prevent diagnostic error in emergency room settings. Diagnosis 1(3):223–231. https://doi.org/10.1515/dx-2014-0019

Mamede S, Splinter TA, van Gog T et al (2012) Exploring the role of salient distracting clinical features in the emergence of diagnostic errors and the mechanisms through which reflection counteracts mistakes. BMJ Qual Saf 21(4):295–300. https://doi.org/10.1136/bmjqs-2011-000518

Norman G (2009) Dual processing and diagnostic errors. Adv Health Sci Educ 14(S1):37–49. https://doi.org/10.1007/s10459-009-9179-x

Dhaliwal G (2017) Premature closure? Not so fast. BMJ Qual Saf 26(2):87–89. https://doi.org/10.1136/bmjqs-2016-005267

Zwaan L, Monteiro S, Sherbino J et al (2017) Is bias in the eye of the beholder? A vignette study to assess recognition of cognitive biases in clinical case workups. BMJ Qual Saf 26(2):104–110. https://doi.org/10.1136/bmjqs-2015-005014

Kahneman D (2011) Thinking, fast and slow. Farrar, Straus and Giroux, New York

Rikers RMJP, Loyens S, te Winkel W et al (2005) The role of biomedical knowledge in clinical reasoning: a lexical decision study. Acad Med 80(10):945–949

Graber ML, Carlson B (2011) Diagnostic error: the hidden epidemic. Physician Exec 37(6):12–14

Monteiro SD, Sherbino JD, Ilgen JS et al (2015) Disrupting diagnostic reasoning: do interruptions, instructions, and experience affect the diagnostic accuracy and response time of residents and emergency physicians? Acad Med 90(4):511–517. https://doi.org/10.1097/acm.0000000000000614

Simpkin AL, Schwartzstein RM (2016) Tolerating uncertainty: the next medical revolution? N Engl J Med 375(18):1713–1715. https://doi.org/10.1056/NEJMp1606402

Audétat M-C, Nendaz M (2020) Face à l’incertitude : humilité, curiosité et partage. Pédagogie Médicale 21(1):1–4. https://doi.org/10.1051/pmed/2020032

Aitken C, Power R, Dwyer R (2008) A very low response rate in an on-line survey of medical practitioners. Aust N Z J Public Health 32(3):288–289. https://doi.org/10.1111/j.1753-6405.2008.00232.x

Kippen R, O’Sullivan B, Hickson H et al (2020) National survey of COVID 19 challenges. Aust J Gen Practitioners 49:745–751

Acknowledgements

The authors wish to thank all the doctors who showed their interest and support in this project and contributed to case collection.

Funding

This work was supported by the Edmond J SAFRA foundation for clinical research in internal medicine (grant number CGR 75976/ME-HERO).

Author information

Contributions

MC (guarantor): conceived and designed the analysis; collected the data; performed the analysis; wrote the paper; approved the version to be published. JS (guarantor): conceived and designed the analysis; collected the data; performed the analysis; wrote the paper; approved the version to be published. NJ: contributed to data analysis; critically revised the work; approved the version to be published. M-CA: conceived the analysis; contributed to data analysis; critically revised the paper; approved the version to be published. MN: conceived and designed the analysis; performed the analysis; critically revised the work; approved the version to be published.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Transparency declaration

The lead authors (MC; JS) affirm that this manuscript is an honest, accurate, and transparent account of the study being reported; that no important aspects of the study have been omitted; and that any discrepancies from the study as planned (and, if relevant, registered) have been explained.

Patient and public involvement

No patients were involved.

Ethical statement

According to the Human Research Ethics Committee of the Canton of Geneva, the project is not covered by the Federal Act on Research involving Human Beings (Human Research Act). Therefore, ethical approval was not needed; a waiver from the Cantonal Commission of Research Ethics was obtained (20.05.2020). All participants gave informed consent to participate in the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The article belongs to COVID 19.

Cite this article

Coen, M., Sader, J., Junod-Perron, N. et al. Clinical reasoning in dire times. Analysis of cognitive biases in clinical cases during the COVID-19 pandemic. Intern Emerg Med 17, 979–988 (2022). https://doi.org/10.1007/s11739-021-02884-9
