Abstract

Collective sensing is an emergent phenomenon that enables individuals to estimate a hidden property of the environment by observing social interactions. Previous work on collective sensing shows that gregarious individuals gain an evolutionary advantage by exploiting collective sensing when competing against solitary individuals. This work addresses the question of whether collective sensing allows groups to emerge from a population of individuals without predetermined behaviors. It is assumed that group membership does not lessen competition for the limited resources in the environment, e.g., groups do not improve foraging efficiency. Experiments are run in an agent-based evolutionary model of a foraging task, where the fitness of the agents depends on their foraging strategy. The foraging strategy of each agent is determined by a neural network, which does not require explicit modeling of the environment or of the interactions between agents. Experiments demonstrate that gregarious behavior is not the evolutionary-fittest strategy if resources are abundant, thus invalidating previous findings in a specific region of the parameter space. Conversely, resource scarcity makes gregarious behavior valuable enough to make up for the increased competition over the few available resources. Furthermore, it is shown that a population of solitary agents can evolve gregarious behavior in response to a sudden scarcity of resources, thus identifying a possible mechanism that leads to gregarious behavior in nature. The evolutionary process operates on the whole parameter space of the neural networks; hence, these behaviors are selected from an unconstrained set of behavioral models.

1 Introduction

Group dynamics is a topic of interest in both sociology (Forsyth 2009; Lewin 1947; Hamilton et al. 2007; Perc et al. 2013) and biology (Krause and Ruxton 2002; Giraldeau and Caraco 2000; Couzin et al. 2002; Nowak et al. 2010). Several factors are considered to support the existence of groups: Social hunters increase their feeding efficiency, as groups can prey on larger animals (Schoener 1971; Krebs 1973) and can resist competition from other groups (Puurtinen and Mappes 2009). Groups also provide antipredatory advantages, as individuals’ chances of survival increase (Bertram 1978; Elgar 1989). Moreover, close contact with other individuals increases reproductive opportunities (Bertram 1978) and information exchange (Valone 1989; Kurland and Beckerman 1985).

The question that motivates this work is: Can collective sensing lead to the emergence of groups in a population of individualistic agents, without making any assumption about the individual behavior? Collective sensing is an emergent phenomenon present in nature (Berdahl et al. 2013); it refers to the ability of a group to sense what is beyond the capabilities of the individual. The additional information about the environment, conveyed through collective behavior, provides individuals with an evolutionary advantage (Elgar 1989; Seeley 2009; Hein et al. 2015; Torney et al. 2011; Bhattacharya and Vicsek 2014). Information plays a key role in nature: It enabled the evolution of complexity (Szathmáry and Smith 1995), and it shapes individual behavior (Vergassola et al. 2007), group behavior (Skyrms 2010) and collective intelligence (Garnier et al. 2007). This paper argues that information can be responsible for the existence of groups. The information considered in this work concerns food location, but the model also admits other interpretations, e.g., mating opportunities or suitable nest locations (Berdahl et al. 2013). For example, bird assemblages such as communal roosts are believed to have evolved as information exchanges (Ward and Zahavi 1973). The approach taken in this work is based on simulation and is general across species.

An agent-based simulation environment is used to model a population of agents that perform a foraging task in a patchy environment with hidden resources. Agents compete for the same limited resources, and their fitness depends on their foraging strategy. Two foraging strategies are compared: random walk, an individualistic strategy that ignores other agents, and gregariousness, which attracts agents toward crowded areas. Experiments show that gregarious agents have an evolutionary advantage over random walkers if food sources are rare: Gregariousness increases the foraging competition between agents, but collective sensing makes up for this disadvantage by increasing the efficiency of finding resources. The success of gregarious agents is compromised at high population densities, as larger groups increase the rate of resource consumption, thus making exploration more successful than exploitation of known resources. Moreover, it is shown that gregariousness can emerge from a population of individualistic agents as an evolutionary response to a reduction in resource availability [see also (Kurland and Beckerman 1985)]. The main result of this work is to show and explain how evolution and collective sensing interact so that gregariousness, and hence groups, emerges from a population of randomly initialized agents. Initially, evolution selects agents that forage for resources for longer periods. This individual behavior produces a pattern in the collective behavior that correlates with food location. Agents that are able to exploit this pattern through collective sensing, i.e., gregarious agents, gain an evolutionary advantage; therefore, groups form.

The contribution of this work can be summarized as follows: (i) It unifies and expands on the results of previous work in a framework that combines evolution and social learning via neural networks (Hein et al. 2015; Torney et al. 2011); (ii) it quantifies the effect of environmental conditions on the evolutionary fitness of different foraging strategies; (iii) it identifies environmental conditions under which a gregarious population can invade an individualistic population; (iv) it validates previous work by showing that, in a limited region of the parameter space, gregariousness is the evolutionary-fittest strategy; and (v) it shows empirically that groups can emerge from a randomly initialized population as a result of interactions between natural selection and collective sensing.

The article is structured as follows. In Sect. 2 this work is situated within the agent-based modeling literature. The experiments are described in Sect. 3, while the model is described in Sect. 4. Section 5 comments on the results and their implications, which are summarized and discussed in Sect. 6.

2 Literature review

Literature on agent-based modeling of group and societal issues spans decades. Many agent-based models of society concentrate on the problem of cooperation (Helbing and Yu 2009) or coordination (Mäs et al. 2010). This work considers instead a simpler scenario where agents cannot actively communicate; thus, neither cooperation nor coordination is possible. A foraging task is modeled, where agents compete for the same pool of resources.

This work relaxes many common assumptions in the literature:

-

Environmental factors that favor groups, e.g., (Bowles and Gintis 2004; Montanier and Bredeche 2013; Torney et al. 2011). Cooperation cannot be achieved as agents are unable to communicate. Moreover, agents compete for the same resources and there are no advantages in being part of a group.

-

Kin selection, e.g., (Smith 1964; Hales 2000; Hammond and Axelrod 2006; Helbing and Yu 2008). Agents do not have any visible characteristic that makes them recognizable as members of a group, e.g., Green Beard (Dawkins 1976), so they cannot develop mechanisms that favor kin.

-

Limited dispersal, e.g., (Hamilton 1964; Nowak and May 1992; Santos et al. 2006; Grund et al. 2013). Agents are randomly placed, and there is no mechanism that explicitly keeps offspring near their parents. Of course, this could still happen as a result of specific environmental settings, e.g., an offspring is born in a patch with food. Similarly, food is created randomly in the grid, so a new food source is not likely to be created near a depleted one.

-

Learning agents, e.g., (Axelrod et al. 2002; Németh and Takács 2007; Duan and Stanley 2010). Agent behavior is fully determined by the agent’s genetic characteristics.

Moreover, agents have bounded rationality (Simon 1982), as they have to cope with an imperfect perceptual system. Nevertheless, the conclusions agree with those of other work on collective sensing, in that collective sensing is shown to evolve from a population of individualistic agents (Hein et al. 2015) if resources are scarce (Torney et al. 2011).

The current work reproduces previous results in a general framework in which agent behavior is determined by a neural network, without any assumption about the agent’s behavior. Previous work combined neural networks and evolutionary simulations, but the focus there was on individual learning (Hinton and Nowlan 1987; Gruau and Whitley 1993; Batali and Grundy 1996; Red’ko and Prokhorov 2010). Another body of literature looks at interactions between neural networks, in particular the emergence of language (Sukhbaatar et al. 2016; Lazaridou et al. 2016; Foerster et al. 2016) and cooperation (Tampuu et al. 2017), but evolution was not part of these studies. In the proposed model, gregarious behavior is not defined by the value of a parameter but is instead a region in an \(N \times M\)-dimensional parameter space, where N is the number of perceptions and M the number of actions. Moreover, agents are not able to emit any signal; instead, they evolve the capacity to respond to inadvertent social information (ISI) (Danchin 2004). Despite the generality of the model, gregariousness emerges as the evolutionary-fittest strategy under some environmental conditions, thus validating the previous results in a specific area of the parameter space.

3 Experimental setting

This section describes the goal and the design of the experiments, while results are presented and discussed in Sect. 5. Table 1 compares how the model parameters vary across these experiments. The “Appendix” section describes how to reproduce the results.

The first experiment compares the efficiency of the two competing foraging strategies, random walk and gregariousness, and investigates how it changes across parameter configurations. Each agent in the population is instructed to play one strategy, and strategies are compared by their average fitness, that is, the mean number of successful foraging actions at the end of the simulation among the individuals implementing that strategy. All simulations start with a population of the same size, and a fraction of it is initialized with the gregarious strategy, which is obtained by initializing the neural network so that the weights connecting each input to the movement action in the same direction are larger than all other weights. This configuration ensures that a large input in one direction produces a large output for the movement action in that direction. If the input vector contains more than one large value, the winning action is determined by noise. The validity of this initialization is verified by means of a suite of tests, developed to classify agent behavior on a linear scale from random walk to gregariousness (Burtsev and Turchin 2006). Each test presents the agent with a predetermined perception vector and records the chosen action. The action of foraging is tested by placing the agent in a location with food and no agents in sight; the action of moving north is tested by placing the agent in a location without food and with another agent to its north; and so on for the remaining movement actions. All tests are performed on all agents in the population, and the frequency of choosing the appropriate action is recorded: A high score in all tests means that the population behaves gregariously, while a random walker scores close to 25% on all movement actions.

The second experiment looks at the evolution of competing foraging strategies. The experiment is set up as before, but agents reproduce with a probability proportional to their fitness and die with a probability proportional to their age (see Table 2). During every reproduction, the neural weights of the offspring are initialized with a mutated version of the parent’s weights. Mutations allow the behavior to drift away from the predefined strategies and adapt over time to the environment and to the competition. The evolution of the behavior of the two sub-populations is investigated in different environmental situations by means of the test suite described above.

The last experiment tests whether a population of randomly initialized agents can evolve gregariousness in response to a change in the environment. The population is initialized randomly, and its strategy is allowed to evolve. As in the previous experiment, the strategy of the population is measured by testing the behavior of each agent at each timestep. The experiment is divided into two phases: In the first phase, resources are abundant, while in the second phase they become scarce. The two phases are separated by an event called famine, after which the quantity of resources decreases gradually from the high value to the low value.
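One way to realize the famine schedule is sketched below. The text states only that resources decrease gradually from a high to a low value after the famine; the linear ramp, the function name `food_sources_at` and the example numbers are assumptions for illustration.

```python
def food_sources_at(t, t_famine, ramp, high, low):
    """Target number of food sources at timestep t (hypothetical helper).

    Before the famine the target is `high`; afterwards it falls linearly
    (an assumed shape) over `ramp` timesteps until it reaches `low`.
    """
    if t < t_famine:
        return high
    if t >= t_famine + ramp:
        return low
    fraction = (t - t_famine) / ramp
    return round(high - fraction * (high - low))


# Hypothetical values: famine at t = 20,000, a 1,000-step ramp, 50 -> 10 sources.
for t in (0, 20_000, 20_500, 21_000, 30_000):
    print(t, food_sources_at(t, 20_000, 1_000, 50, 10))
```

In a simulation, an exhausted source could then be respawned only while the current number of sources is below this target, so that the decline is driven by consumption; this too is an assumption rather than a detail given in the text.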

4 Methods

The effect of collective sensing on group formation is investigated by means of an agent-based evolutionary model (Epstein 1999). Simulation is a suitable tool for studying processes generated by individual interactions, and it is preferable to mathematical models here because it captures their dynamics (Healy and Boudon 1967).

A population of agents performs a foraging task in a patchy environment (Bennati et al. 2017), which is modeled on previous work (Beauchamp 2000; Hamblin and Giraldeau 2009). The environment is a square grid with periodic boundary conditions in which a fixed number of patches contain a random positive quantity of food units. Food quantity is sampled from a uniform distribution with a mean of 100. This allows several agents to forage from the same resource for a short time. The total number of food sources is governed by a model parameter: Whenever a food source is exhausted, a new one is spawned at a random location.
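A minimal sketch of such an environment follows. It is not the authors’ implementation (that is available from Bennati et al. 2017); the class and method names are hypothetical, and the food distribution, uniform integers between 1 and 199 (mean 100), is an assumption, since the text specifies only a random positive quantity with a mean of 100.

```python
import random


class PatchyEnvironment:
    """Square grid with periodic boundaries and a fixed number of food sources."""

    def __init__(self, size, n_sources, rng=None):
        self.size = size
        self.rng = rng if rng is not None else random.Random()
        self.food = {}  # (x, y) -> remaining food units
        for _ in range(n_sources):
            self._spawn()

    def _spawn(self):
        # Place a new source on a random cell without food.
        # Assumption: quantity ~ uniform integers in [1, 199], mean 100.
        while True:
            cell = (self.rng.randrange(self.size), self.rng.randrange(self.size))
            if cell not in self.food:
                self.food[cell] = self.rng.randint(1, 199)
                return

    def wrap(self, x, y):
        """Map coordinates onto the torus (periodic boundary conditions)."""
        return x % self.size, y % self.size

    def forage(self, cell):
        """Consume one unit; return True on success, False on a foraging failure."""
        if self.food.get(cell, 0) < 1:
            return False
        self.food[cell] -= 1
        if self.food[cell] == 0:
            del self.food[cell]
            self._spawn()  # keep the number of food sources constant
        return True


env = PatchyEnvironment(size=10, n_sources=5, rng=random.Random(1))
print(len(env.food))  # 5
```

Keeping sources in a dictionary keyed by cell makes both the foraging check and the respawn-on-exhaustion rule constant-time operations.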

Agent behavior is driven by a neural network that connects each perceptual input to each of the possible actions (see Fig. 1). Given a perception vector, the score of each action is the sum over all inputs of the input value multiplied by the weight connecting that input to the action and by random noise. The action with the highest score is executed. If several actions obtain a similar score, the choice among them is determined by a combination of noise, small differences in the input values and/or small differences in the weights. Behavior is selected by the evolutionary process from a vast set of alternatives, i.e., any possible mapping from input vectors to actions. Using neural networks as controllers removes the need for modeling assumptions, e.g., a social parameter, which might limit the space of possible behaviors. Agents are placed at random locations at the start of the experiment. At every timestep, agents play their turns one after the other in a random order: Each agent perceives the environment and executes one action. Randomizing the order of play is important because agents compete for the same rivalrous resources, i.e., food consumed by one agent is not available to other agents. Each action is either a movement or a foraging action: An agent can spend its action moving one patch in any of the four cardinal directions or, alternatively, foraging one unit of food from its current patch. Foraging is successful if the current patch contains at least one food unit, which is then consumed and removed from the patch. Foraging from a patch that does not contain any food produces a foraging failure. Fitness is defined as the number of successful foraging actions during an agent’s lifetime; thus, fitness measures the quality of the individual foraging strategy. For simplicity, we assume that energy is never consumed; thus, fitness is equivalent to energy.
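The decision rule and the turn structure described above can be sketched as follows. The noise is applied once per action score here, which is one reading of the text; this, the noise range, the action encoding (0 = forage, 1 to 4 = move north, east, south, west), the dictionary representation of agents and the `perceive` callback are all hypothetical. Food respawning is omitted for brevity.

```python
import numpy as np

FORAGE = 0
MOVES = {1: (0, 1), 2: (1, 0), 3: (0, -1), 4: (-1, 0)}  # N, E, S, W (assumed)


def select_action(weights, perception, rng, noise=0.1):
    """Each action's score is sum_i input_i * w[i, a], scaled by random noise."""
    noise_factors = rng.uniform(1.0 - noise, 1.0 + noise, size=weights.shape[1])
    scores = (np.asarray(perception, dtype=float) @ weights) * noise_factors
    return int(np.argmax(scores))


def timestep(agents, food, size, perceive, rng):
    """All agents act once, in a fresh random order, on a torus of side `size`."""
    for idx in rng.permutation(len(agents)):
        agent = agents[idx]
        action = select_action(agent["w"], perceive(agent, agents, food), rng)
        agent["foraging"] = action == FORAGE
        if action == FORAGE:
            if food.get(agent["pos"], 0) >= 1:  # success: consume one unit
                food[agent["pos"]] -= 1
                agent["fitness"] += 1
            # otherwise this is a foraging failure and nothing changes
        else:
            dx, dy = MOVES[action]
            x, y = agent["pos"]
            agent["pos"] = ((x + dx) % size, (y + dy) % size)


def sees_food(agent, agents, food):
    # Hypothetical perception: only the food input is used in this toy example.
    return [float(food.get(agent["pos"], 0) > 0), 0.0, 0.0, 0.0, 0.0]


rng = np.random.default_rng(0)
food = {(0, 0): 2}
agents = [{"pos": (0, 0), "w": np.eye(5), "fitness": 0, "foraging": False}]
timestep(agents, food, size=10, perceive=sees_food, rng=rng)
print(agents[0]["fitness"], food)  # 1 {(0, 0): 1}
```

Re-drawing the permutation every timestep ensures that no agent systematically gets first access to a shared, rivalrous food patch.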

Agents reproduce with a probability proportional to their energy. Reproduction spawns a new agent in the same patch, and energy is equally split between parent and offspring. The offspring’s behavior is determined by a mutated version of the parent’s weights. Mutations increase or decrease the original weights by a small random value. Agents are removed from the game with a probability proportional to their age. Both reproduction and death are modeled with a roulette wheel algorithm with stochastic acceptance [as in (Torney et al. 2011)].
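Roulette-wheel selection with stochastic acceptance draws an individual uniformly at random and accepts it with probability \(w_i / w_{\max}\), retrying on rejection; the accepted index then has probability \(w_i / \sum_j w_j\), without computing cumulative sums. The sketch below pairs it with a reproduction step. The mutation size `eps`, the uniform mutation distribution and the dictionary-based agents are hypothetical choices, not the authors’ settings.

```python
import random


def stochastic_acceptance(weights, rng):
    """Return index i with probability weights[i] / sum(weights)."""
    w_max = max(weights)
    if w_max <= 0:
        raise ValueError("at least one weight must be positive")
    while True:
        i = rng.randrange(len(weights))        # uniform proposal
        if rng.random() < weights[i] / w_max:  # accept with prob w_i / w_max
            return i


def reproduce(parent, rng, eps=0.05):
    """Offspring in the same patch, half the parent's energy, mutated weights."""
    child_w = [[w + rng.uniform(-eps, eps) for w in row] for row in parent["w"]]
    parent["energy"] /= 2
    return {"pos": parent["pos"], "w": child_w,
            "energy": parent["energy"], "age": 0}


rng = random.Random(42)
population = [
    {"pos": (0, 0), "w": [[0.5, 0.1], [0.2, 0.9]], "energy": 6.0, "age": 3},
    {"pos": (4, 2), "w": [[0.3, 0.3], [0.3, 0.3]], "energy": 2.0, "age": 9},
]
# Reproduction: the parent is chosen in proportion to its energy.
parent = population[stochastic_acceptance([a["energy"] for a in population], rng)]
population.append(reproduce(parent, rng))
# Death: an agent is chosen in proportion to its age (newborns, age 0, are safe).
population.pop(stochastic_acceptance([a["age"] for a in population], rng))
print(len(population))  # 2
```

Stochastic acceptance needs only the maximum weight, which makes it convenient when fitness or age values change every timestep.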

Agents perceive the quantity of food and the agents present in their surroundings. The perception of food is limited to the current patch, while agents can be perceived within a given distance. This assumption often holds in nature (Kurland 1973; Klein and Klein 1973; Kurland and Beckerman 1985) and is also a prerequisite for collective sensing. The perception mechanism does not resolve the number of agents in each visible patch; instead, it aggregates the number of agents by angular direction (Strandburg-Peshkin et al. 2013). For simplicity, the field of view is subdivided into four areas corresponding to the cardinal directions, and a parameter called “field of view” determines how far agents can see: A higher value implies that each of the four areas contains more patches; therefore, perception is more coarse-grained. A higher number of agents in one area corresponds to a higher input signal: The more crowded the surrounding patches are, the higher the input signals. Thus, if the population grows large, as in the evolutionary simulations, it becomes more difficult to perceive the signal carried by collective sensing. For this reason, it is assumed that the perceptual system can distinguish whether an agent is foraging or moving (Rosa Salva et al. 2014), and that agents are counted only if they are foraging (Bhattacharya and Vicsek 2014). This allows larger populations to be simulated without masking the signal carried by collective sensing. This assumption does not change the results qualitatively: Fig. 2 confirms that gregarious agents have higher fitness than random walkers even when this assumption is relaxed (Bennati et al. 2016).
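The directional perception described above can be sketched as below. The square (Chebyshev-distance) field of view, the rule that assigns patches on a diagonal to the north/south areas, the exclusion of agents on the observer’s own patch and the output layout are assumptions; the text fixes only the four cardinal areas, the field-of-view parameter, food perception limited to the current patch, and that only foraging agents are counted.

```python
def perceive(pos, agents, food, size, fov):
    """Return [food units on own patch, foraging agents N, E, S, W].

    Assumptions: the field of view is a square of radius `fov`, patches on a
    diagonal count toward north/south, and agents on the observer's own
    patch are not counted.
    """
    x0, y0 = pos
    counts = [0, 0, 0, 0]  # N, E, S, W
    half = size // 2
    for other in agents:
        if not other["foraging"]:
            continue  # only foraging agents are perceived
        # Shortest signed displacement on the torus (periodic boundaries).
        dx = (other["pos"][0] - x0 + half) % size - half
        dy = (other["pos"][1] - y0 + half) % size - half
        if (dx, dy) == (0, 0) or max(abs(dx), abs(dy)) > fov:
            continue
        if abs(dy) >= abs(dx):
            counts[0 if dy > 0 else 2] += 1  # north (y grows northward) or south
        else:
            counts[1 if dx > 0 else 3] += 1  # east or west
    return [food.get(pos, 0)] + counts


agents = [
    {"pos": (5, 6), "foraging": True},   # one patch north
    {"pos": (5, 4), "foraging": False},  # moving, so ignored
    {"pos": (7, 5), "foraging": True},   # two patches east
    {"pos": (9, 5), "foraging": True},   # beyond a field of view of 2
]
print(perceive((5, 5), agents, {(5, 5): 3}, size=10, fov=2))  # [3, 1, 1, 0, 0]
```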

(a) Parameters of the simulation: field of view 4, 20 food sources, 10% gregarious agents; (b) parameters of the simulation: field of view 4, population size 20, 10% gregarious agents; (c) parameters of the simulation: population size 20, 20 food sources, 10% gregarious agents. Gregarious agents have an evolutionary advantage even when the assumption about their perception is relaxed.

Collective sensing allows individuals to perceive an invisible signal (food location) by means of a visible proxy signal (group dynamics); thus, the proxy signal must be visible where the other signal is not. In this case, the proxy signal is the location of other agents, as a higher concentration of agents correlates with the presence of a food source, under the assumption that agents remain on food sources [cf. position-dependent diffusion (Schnitzer 1993)]. Interestingly, this signal does not correlate with food availability: The stronger the signal, the more agents are foraging in a patch, and the faster the food source is depleted.

5 Results

In the first experiment two foraging strategies, gregariousness and random walk, are compared. The fitness of a strategy is measured by the average number of resource units foraged by all agents implementing such a strategy. The main result is that gregariousness can be a more efficient strategy than random walk.

If food sources are rare, gregarious agents have a higher evolutionary fitness than random walkers, although their fitness decreases with population size (see Fig. 3) because competition for resources increases. Abundance of food reduces the advantage of the gregarious strategy over random walk, until it eventually disappears (see Fig. 4).

The gregarious strategy allows agents to exploit information about the behavior of other agents to find sources of food. Whenever an agent finds a food source and exploits it, gregarious agents start converging on it and eventually exploit the same resource. This behavior leads agents to concentrate on food locations; thus, the fewer the food locations, the more crowded they are. Large groups of agents in one patch produce a strong signal, which gregarious agents can exploit to their advantage. Gregarious agents compete with each other for the same resources, while random walkers explore the environment for new resources; therefore, a larger number of gregarious agents increases competition, hence reducing their average fitness (see Fig. 3). After an agent finds a new resource, it can exploit it exclusively until another agent reaches the same position; the longer an agent can exploit a resource exclusively, the higher the benefit of exploring the environment. Therefore, exploration, i.e., random walk, becomes advantageous if food is abundant, because the probability of randomly finding a new food source increases.

The effect of the field of view of agents on their performance is shown in Fig. 5. Gregarious agents see their fitness decrease with an increasing field of view, an effect of the perception system’s design which aggregates the contents of multiple cells in a region. The more agents are in the field of view, the more difficult it becomes to distinguish whether the region contains a crowded location or many locations with few agents. This effect can be reduced with a more sophisticated perception system which is able to distinguish counts at the patch level.
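The ambiguity can be made concrete with a small, hypothetical example that uses the same kind of directional aggregation, with agent positions given as offsets from the observer, a field of view of 4, and diagonal patches assigned to north/south (an assumption): four foragers on one patch and four foragers spread over four patches produce exactly the same input.

```python
def directional_counts(offsets, fov):
    """Aggregate agent offsets (dx, dy) into per-direction counts within `fov`."""
    counts = {"N": 0, "E": 0, "S": 0, "W": 0}
    for dx, dy in offsets:
        if (dx, dy) == (0, 0) or max(abs(dx), abs(dy)) > fov:
            continue
        if abs(dy) >= abs(dx):
            counts["N" if dy > 0 else "S"] += 1
        else:
            counts["E" if dx > 0 else "W"] += 1
    return counts


crowded = [(0, 3)] * 4                         # one patch with four foragers
scattered = [(-2, 3), (0, 2), (1, 4), (2, 3)]  # four patches, one forager each
print(directional_counts(crowded, fov=4))    # {'N': 4, 'E': 0, 'S': 0, 'W': 0}
print(directional_counts(scattered, fov=4))  # {'N': 4, 'E': 0, 'S': 0, 'W': 0}
```

A perception system that reported counts per patch would tell these two cases apart, which is the refinement suggested above.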

The second experiment repeats the comparison of two foraging strategies in an evolutionary setting, where agents can die and reproduce. Figure 6 shows the change in size of the two groups over the course of the simulation.

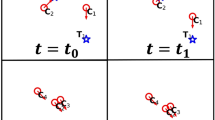

Evolution of strategy over time. Each line represents the frequency at which one action is executed according to the gregarious strategy: For example, a value of 80% for action “left” indicates that action “left” is executed 80% of the time in a situation where the gregarious strategy would dictate “left.” Left: Gregarious agents keep their behavior consistent with the gregarious strategy; right: Random walkers start with a random walk (each movement action is executed approximately 25% of the time) but rapidly adopt gregarious behavior. Error bars represent 95% confidence intervals. Parameters of the simulation: initial population size 20, 50 food sources, field of view 1.

This effect is explained by the evolution of individual behaviors: Gregarious agents keep their behavior constant for the entire simulation (see Fig. 7 left), while random walkers adapt their behavior until they adopt the gregarious strategy (see Fig. 7 right). The whole population adopts the gregarious strategy at around timestep 10,000, which confirms that gregariousness is the evolutionary-fittest strategy under these conditions.

In the third experiment, a population of randomly initialized agents is allowed to evolve in a variable environment. The strategy adopted by the population is evaluated by testing the behavior of each agent at every timestep (see Fig. 8). The simulation starts with abundant resources; agents are quickly selected on the basis of their ability to forage, but their movement is largely independent of the perceptual stimuli. At one point during the simulation, signaled by the red vertical line, the number of resources drops, and the population rapidly adopts gregarious behavior in response to this change in the environment. One possible interpretation of this result is that some agents evolve gregarious behavior early in the simulation, but the selective pressure is not strong enough to remove other, less efficient strategies from the population until resources become scarce. Another interpretation is that selective pressure is strong enough to remove inefficient strategies from the start; therefore, random walk is indeed the most efficient strategy when resources are abundant. To distinguish between these two interpretations, it is necessary to measure the efficiency of a strategy; the frequency of foraging failures is chosen as the measure of strategy efficiency. The frequency of foraging failures approaches zero after 10,000 timesteps, which means that efficient strategies are selected early on, supporting the second interpretation (see Fig. 9). This implies that, in a collective sensing scenario, gregariousness is the evolutionary-fittest strategy when food sources are rare, while random walk is the fittest strategy otherwise.
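The efficiency measure used here, the frequency of foraging failures over time, can be computed as below. Logging each foraging action as a `(timestep, success)` pair, binning into fixed windows and the name `failure_rate_series` are assumptions about how such a curve might be produced.

```python
from collections import defaultdict


def failure_rate_series(events, window):
    """Map each window's start timestep to its fraction of failed foraging actions.

    `events` holds one (timestep, success) pair per foraging action;
    windows without any foraging action are omitted.
    """
    bins = defaultdict(lambda: [0, 0])  # window index -> [failures, attempts]
    for t, success in events:
        b = bins[t // window]
        b[1] += 1
        if not success:
            b[0] += 1
    return {k * window: fails / total for k, (fails, total) in sorted(bins.items())}


log = [(0, False), (1, True), (2, True), (3, True), (10, True)]
print(failure_rate_series(log, window=5))  # {0: 0.25, 10: 0.0}
```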

This result validates previous work on collective sensing by showing that groups do indeed emerge from a population of individualistic agents without any assumption about their behavior, but only if resources are scarce. Moreover, it shows that evolution favors, from the start, strategies that produce few foraging failures, thus creating a correlation between the locations of agents and resources. Without evolution selecting for efficient strategies, collective sensing would not be able to track the location of food; therefore, gregarious behavior would not provide an evolutionary advantage.

6 Conclusion

This paper studies the role of collective sensing in the formation of groups in a population of individualistic agents. An agent-based model of a foraging task is used to study the evolution of foraging strategies in a patchy environment with limited resources. Agent behavior is determined by a neural network that translates perceptual inputs into actions, without any explicit model of the environment or of the interactions between agents. The generality of the results is increased by relaxing many common assumptions in the literature, thanks to a simple environment and the use of neural networks.

Two different foraging strategies are compared: random walk and gregarious behavior, i.e., moving toward other agents. Results show that gregarious behavior provides an evolutionary advantage at the individual level through collective sensing at the group level. Depending on the characteristics of the environment, and specifically in the case of scarce resources, this advantage can overcome the competition caused by sharing resources and groups can spontaneously form in a population of individualistic agents. Moreover, it is shown that the evolutionary-fittest strategy is random walk if resources are abundant and gregarious behavior if resources are scarce. This result, caused by the interaction between evolution and collective sensing, validates and unifies previous work on collective sensing under a general framework that combines evolution and social learning via neural networks.

The framework introduced in this paper is relevant to the literature on collective sensing, as it reproduces results from different models without requiring the assumption of a “social” parameter. A region in the space of environmental parameters is identified in which these results do not hold, calling for further investigation of the effect of the environment on the previous models. A possible mechanism is identified: A sudden decrease in resource availability could have triggered the emergence of gregarious behavior in a population of solitary individuals. The results presented in this paper may be of interest to biology and the social sciences, as they introduce collective sensing as another mechanism that supports the existence of groups. Finally, a similar effect of resource availability on the efficiency of gregarious behavior could appear in other scenarios in which gregarious behavior is present, such as financial markets (Devenow and Welch 1996), product choice (Huang and Chen 2006) and forecasts (Cote and Sanders 1997).

References

Axelrod, R., Riolo, R. L., & Cohen, M. D. (2002). Beyond geography: Cooperation with persistent links in the absence of clustered neighborhoods. Personality and Social Psychology Review, 6(4), 341–346.

Batali, J., & Grundy, W. N. (1996). Modeling the evolution of motivation. Evolutionary Computation, 4(3), 235–270.

Beauchamp, G. (2000). Learning rules for social foragers: Implications for the producer–scrounger game and ideal free distribution theory. Journal of Theoretical Biology, 207(1), 21–35.

Bennati, S., Wossnig, L., & Thiele, J. (2016). The role of information in group formation. In Proceedings of the 8th international conference on agents and artificial intelligence (ICAART) 2016 (Vol. 1, pp. 231–235). SciTePress.

Bennati, S., Wossnig, L., & Thiele, J. (2017). Agent-based simulation of a foraging task. https://github.com/bennati/group_formation.

Berdahl, A., Torney, C. J., Ioannou, C. C., Faria, J. J., & Couzin, I. D. (2013). Emergent sensing of complex environments by mobile animal groups. Science, 339(6119), 574–576.

Bertram, B. C. (1978). Living in groups: Predators and prey. In J. R. Krebs & N. B. Davies (Eds.), Behavioural ecology. Oxford: Blackwell.

Bhattacharya, K., & Vicsek, T. (2014). Collective foraging in heterogeneous landscapes. Journal of The Royal Society Interface, 11(100), 20140674.

Bowles, S., & Gintis, H. (2004). The evolution of strong reciprocity: Cooperation in heterogeneous populations. Theoretical Population Biology, 65(1), 17–28.

Burtsev, M., & Turchin, P. (2006). Evolution of cooperative strategies from first principles. Nature, 440(7087), 1041–1044.

Cote, J., & Sanders, D. (1997). Herding behavior: Explanations and implications. Behavioral Research in Accounting, 9, 20–45.

Couzin, I. D., Krause, J., James, R., Ruxton, G. D., & Franks, N. R. (2002). Collective memory and spatial sorting in animal groups. Journal of Theoretical Biology, 218(1), 1–11.

Danchin, E. (2004). Public information: From nosy neighbors to cultural evolution. Science, 305(5683), 487–491.

Dawkins, R. (1976). The selfish gene. Oxford: Oxford University Press.

Devenow, A., & Welch, I. (1996). Rational herding in financial economics. European Economic Review, 40(3), 603–615.

Duan, W. Q., & Stanley, H. E. (2010). Fairness emergence from zero-intelligence agents. Physical Review E, 81(2), 026104.

Elgar, M. A. (1989). Predator vigilance and group size in mammals and birds: A critical review of the empirical evidence. Biological Reviews, 64(1), 13–33.

Epstein, J. M. (1999). Agent-based computational models and generative social science. Complexity, 4(5), 41–60.

Foerster, J. N., Assael, Y. M., de Freitas, N., & Whiteson, S. (2016). Learning to communicate to solve riddles with deep distributed recurrent Q-networks. Preprint. arXiv:1602.02672.

Forsyth, D. R. (2009). Group dynamics. Boston: Cengage Learning.

Garnier, S., Gautrais, J., & Theraulaz, G. (2007). The biological principles of swarm intelligence. Swarm Intelligence, 1(1), 3–31.

Giraldeau, L. A., & Caraco, T. (2000). Social foraging theory. Princeton: Princeton University Press.

Gruau, F., & Whitley, D. (1993). Adding learning to the cellular development of neural networks: Evolution and the Baldwin effect. Evolutionary Computation, 1(3), 213–233.

Grund, T., Waloszek, C., & Helbing, D. (2013). How natural selection can create both self- and other-regarding preferences, and networked minds. Scientific Reports, 3, 1480.

Hales, D. (2000). Cooperation without memory or space: Tags, groups and the Prisoner’s dilemma. Multi-agent-based simulation (pp. 157–166). Berlin: Springer Science + Business Media.

Hamblin, S., & Giraldeau, L. A. (2009). Finding the evolutionarily stable learning rule for frequency-dependent foraging. Animal Behaviour, 78(6), 1343–1350.

Hamilton, M. J., Milne, B. T., Walker, R. S., Burger, O., & Brown, J. H. (2007). The complex structure of hunter-gatherer social networks. Proceedings of the Royal Society of London B: Biological Sciences, 274(1622), 2195–2203.

Hamilton, W. (1964). The genetical evolution of social behaviour. I. Journal of Theoretical Biology, 7(1), 1–16.

Hammond, R. A., & Axelrod, R. (2006). Evolution of contingent altruism when cooperation is expensive. Theoretical Population Biology, 69(3), 333–338.

Healy, M. J. R., & Boudon, R. (1967). L’analyse mathématique des faits sociaux. Man, 2(4), 639.

Hein, A. M., Rosenthal, S. B., Hagstrom, G. I., Berdahl, A., Torney, C. J., & Couzin, I. D. (2015). The evolution of distributed sensing and collective computation in animal populations. eLife, 4, e10955.

Helbing, D., & Yu, W. (2008). Migration as a mechanism to promote cooperation. Advances in Complex Systems, 11(04), 641–652.

Helbing, D., & Yu, W. (2009). The outbreak of cooperation among success-driven individuals under noisy conditions. Proceedings of the National Academy of Sciences, 106(10), 3680–3685.

Hinton, G. E., & Nowlan, S. J. (1987). How learning can guide evolution. Complex Systems, 1(3), 495–502.

Huang, J. H., & Chen, Y. F. (2006). Herding in online product choice. Psychology and Marketing, 23(5), 413–428.

Klein, L. L., & Klein, D. J. (1973). Observations on two types of neotropical primate intertaxa associations. American Journal of Physical Anthropology, 38(2), 649–653.

Krause, J., & Ruxton, G. D. (2002). Living in groups. Oxford: Oxford University Press.

Krebs, J. R. (1973). Social learning and the significance of mixed-species flocks of chickadees (Parus spp.). Canadian Journal of Zoology, 51(12), 1275–1288.

Kurland, J. A. (1973). A natural history of Kra macaques (Macaca fascicularis Raffles, 1821) at the Kutai Reserve, Kalimantan Timur, Indonesia. Primates, 14(2–3), 245–262.

Kurland, J. A., & Beckerman, S. J. (1985). Optimal foraging and hominid evolution: Labor and reciprocity. American Anthropologist, 87(1), 73–93.

Lazaridou, A., Peysakhovich, A., & Baroni, M. (2016). Multi-agent cooperation and the emergence of (natural) language. Preprint. arXiv:1612.07182.

Lewin, K. (1947). Frontiers in group dynamics: Concept, method and reality in social science; social equilibria and social change. Human Relations, 1(1), 5–41.

Mäs, M., Flache, A., & Helbing, D. (2010). Individualization as driving force of clustering phenomena in humans. PLoS Computational Biology, 6(10), e1000959.

Montanier, J. M., & Bredeche, N. (2013). Evolution of altruism and spatial dispersion: An artificial evolutionary ecology approach. In Advances in artificial life, ECAL 2013 (pp. 260–267). MIT Press.

Németh, A., & Takács, K. (2007). The evolution of altruism in spatially structured populations. Journal of Artificial Societies and Social Simulation, 10(3), 4.

Nowak, M. A., & May, R. M. (1992). Evolutionary games and spatial chaos. Nature, 359(6398), 826–829.

Nowak, M. A., Tarnita, C. E., & Antal, T. (2010). Evolutionary dynamics in structured populations. Philosophical Transactions of the Royal Society of London B: Biological Sciences, 365(1537), 19–30.

Perc, M., Gómez-Gardeñes, J., Szolnoki, A., Floría, L. M., & Moreno, Y. (2013). Evolutionary dynamics of group interactions on structured populations: A review. Journal of the Royal Society Interface, 10(80), 20120997.

Puurtinen, M., & Mappes, T. (2009). Between-group competition and human cooperation. Proceedings of the Royal Society B: Biological Sciences, 276(1655), 355–360.

Red’ko, V. G., & Prokhorov, D. V. (2010). Learning and evolution of autonomous adaptive agents. In Advances in machine learning I (pp. 491–500). Berlin: Springer Science + Business Media.

Rosa Salva, O., Sovrano, V. A., & Vallortigara, G. (2014). What can fish brains tell us about visual perception? Frontiers in Neural Circuits, 8, 119.

Santos, F. C., Pacheco, J. M., & Lenaerts, T. (2006). Cooperation prevails when individuals adjust their social ties. PLoS Computational Biology, 2(10), e140.

Schnitzer, M. J. (1993). Theory of continuum random walks and application to chemotaxis. Physical Review E, 48(4), 2553–2568.

Schoener, T. W. (1971). Theory of feeding strategies. Annual Review of Ecology and Systematics, 2(1), 369–404.

Seeley, T. D. (2009). The wisdom of the hive: The social physiology of honey bee colonies. Cambridge: Harvard University Press.

Simon, H. A. (1982). Models of bounded rationality: Empirically grounded economic reason (Vol. 3). Cambridge: MIT Press.

Skyrms, B. (2010). Signals: Evolution, learning, and information. Oxford: Oxford University Press.

Smith, J. M. (1964). Group selection and kin selection. Nature, 201(4924), 1145–1147.

Strandburg-Peshkin, A., Twomey, C. R., Bode, N. W., Kao, A. B., Katz, Y., Ioannou, C. C., et al. (2013). Visual sensory networks and effective information transfer in animal groups. Current Biology, 23(17), R709–R711.

Sukhbaatar, S., Szlam, A., & Fergus, R. (2016). Learning multiagent communication with backpropagation. In D. D. Lee, M. Sugiyama, U. V. Luxburg, I. Guyon, & R. Garnett (Eds.), Advances in neural information processing systems (pp. 2244–2252). Red Hook: Curran Associates Inc.

Szathmáry, E., & Smith, J. M. (1995). The major evolutionary transitions. Nature, 374(6519), 227–232.

Tampuu, A., Matiisen, T., Kodelja, D., Kuzovkin, I., Korjus, K., Aru, J., et al. (2017). Multiagent cooperation and competition with deep reinforcement learning. PLoS ONE, 12(4), e0172395.

Torney, C. J., Berdahl, A., & Couzin, I. D. (2011). Signalling and the evolution of cooperative foraging in dynamic environments. PLoS Computational Biology, 7(9), e1002194.

Valone, T. J. (1989). Group foraging, public information, and patch estimation. Oikos, 56(3), 357.

Vergassola, M., Villermaux, E., & Shraiman, B. I. (2007). ‘Infotaxis’ as a strategy for searching without gradients. Nature, 445(7126), 406–409.

Ward, P., & Zahavi, A. (1973). The importance of certain assemblages of birds as information-centres for food-finding. Ibis, 115(4), 517–534.

Acknowledgements

The author acknowledges support from the European Commission through the ERC Advanced Investigator Grant “Momentum” (Grant No. 324247). The author thanks Leonard Wossnig and Johannes Thiele for their help with writing the simulation software, Andrew Berdahl and Dirk Helbing for many useful comments, and several anonymous reviewers for their constructive criticism.

Appendix: Reproducibility

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request. The source code used to generate and analyze the datasets is available on GitHub (Bennati et al. 2017).

A C++ compiler with MPI support is required in order to compile the code. The code has been compiled with Make and the GCC compiler, but other development environments might be compatible as well. Data analysis and figures are produced by R; the code relies on the executable “Rscript” to run the analysis non-interactively. Compilation and start-up scripts are written for bash on a *nix system, but other shells might be supported as well. The code has support for the LSF platform for parallel execution on clusters, but it can also be run on a single machine.

To run the code, execute the script “build.sh”, which builds the appropriate code for each simulation scenario and starts the simulation. Parameters for each scenario are found in the “params.sh” file in the respective folder. After the simulation has completed, the analysis scripts “analysis.R” and “time_series_3d.R” are executed. Figures for each simulation are written to the subfolder “results.” The simulation output can occupy a large amount of disk space; therefore, output files are compressed after the analysis is completed. The scripts rely on “gzip” for compression.

Experiments differ in their parameters, contained in the file “params.sh” (see Table 3), and in the features of the simulation, encoded as compile flags in the Makefile (see Table 4).
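A minimal C++ sketch can illustrate how a feature can be encoded as a compile flag rather than a run-time parameter. The macro `SUDDEN_SCARCITY`, the function `resources_at`, and its arguments are hypothetical names chosen for illustration, loosely echoing the sudden-scarcity experiment. They are not taken from the published code. The actual flags are those listed in Table 4, and the actual implementation is in the repository.

```cpp
// Hypothetical sketch of a compile-time feature flag. The macro name
// SUDDEN_SCARCITY and the function below are illustrative assumptions,
// not taken from the published simulation code.
// Build variants, e.g.:  g++ -std=c++11 -c sketch.cpp
//                        g++ -std=c++11 -DSUDDEN_SCARCITY -c sketch.cpp
#include <cassert>

// Translate the preprocessor flag into a typed constant once, so the rest
// of the code can branch on an ordinary bool instead of repeating #ifdef.
#ifdef SUDDEN_SCARCITY
constexpr bool kSuddenScarcity = true;
#else
constexpr bool kSuddenScarcity = false;
#endif

// Number of resource items placed in the environment at a given generation.
// With the flag disabled, the environment stays abundant throughout. With it
// enabled, resources drop to the scarce level from switch_generation onward.
int resources_at(int generation, int abundant, int scarce, int switch_generation) {
    assert(generation >= 0 && scarce >= 0 && scarce <= abundant);
    if (kSuddenScarcity && generation >= switch_generation) {
        return scarce;
    }
    return abundant;
}
```

Compiling the same source with and without `-DSUDDEN_SCARCITY` produces two binaries that differ only in that feature. This keeps feature switches (fixed at compile time) separate from numeric settings, which mirrors the split between the Makefile and “params.sh” described above.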

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bennati, S. On the role of collective sensing and evolution in group formation. Swarm Intell 12, 267–282 (2018). https://doi.org/10.1007/s11721-018-0156-y
