Abstract

This paper highlights kernel principal component analysis (KPCA) for distinguishing damage-sensitive features from the effects of liquid loading on the frequency response. A vibration test is performed on an aircraft wing box fitted with a liquid tank that undergoes various levels of tank loading. The experiment is established as a preliminary study of an aircraft wing that undergoes operational load changes in a fuel tank. Operational loading effects in a mechanical system can lead to false alarms, as loading and damage produce a similar reduction in the vibration response. This study proposes a nonlinear transformation to separate loading effects from damage-sensitive features. Based on a baseline data set built from a healthy structure that undergoes systematic tank loading, the Gaussian kernel parameter is determined from the distance of the baseline data set to various damage states. As a result, both loading and damage features spread out and are better distinguished. For novelty detection, the Mahalanobis squared distance (MSD) and a Monte Carlo-based threshold are applied. The main contribution of this work is the nonlinear PCA projection used to understand the dynamic behavior of the wing box under damage and loading influences and to differentiate the effects that arise from tank loading and damage severity.

Introduction

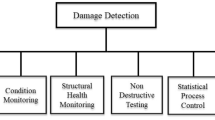

Structural health monitoring (SHM) is a condition-based maintenance approach that provides an alternative to traditional maintenance by utilizing sensor networks to detect irregularities through data processing, recognition algorithms and statistical methods. It can continuously monitor structural health and detect irregularities with minimal human intervention at the earliest possible time, before the structure deteriorates to a point that puts human lives at risk and causes substantial operating loss. SHM mainly incorporates dimensionality reduction and statistical pattern recognition (SPR) techniques to transform the measured data into more useful information for determining damage and abnormalities present in the system [1,2,3,4,5].

Within aircraft maintenance, SHM technology is important for improving reliability and for monitoring structural integrity. Because aircraft structures are made of composite materials, they require a more intelligent damage detection strategy to identify faults or irregularities present in the complex structure. SHM has been shown to reduce breakdown time and lower maintenance cost. In developing a reliable SHM technology, all influences from operational and environmental variations (OEVs) must be considered [1, 6]. The OEV effects should be distinguished from the real damage effects in the measured response. In reality, load variations on the aircraft wing due to changes in fuel load have a significant effect on vibration-based damage detection (VBDD). However, there is limited research on SHM technology for an aircraft wing under the effects of fuel tank load variation. The current work focuses on VBDD of an aircraft wing box subjected to fuel tank load variations, based on a replicated model of an aircraft wing box.

There are different SHM techniques, such as comparative vacuum monitoring (CVM), piezoelectric impedance, vibration-based monitoring and strain monitoring. Among the most mature SHM approaches is VBDD [1]. One main difference between VBDD and the other approaches is that VBDD focuses on damage detection and identification at the global level, in contrast to local damage detection techniques such as CVM, strain monitoring and elastic waves. VBDD examines parameters such as natural frequencies, vibration modes and structural damping, based on the principle that structural damage generally modifies the vibration parameters. The approach is capable of performing damage diagnostics when integrated with statistical and machine learning tools.

One of the key procedures in performing SHM is to transform the measured data into a more useful representation by acquiring data trends that can provide a useful clue to the structure’s health condition. This is often achieved by means of statistical pattern recognition, machine learning algorithms or probability theory [7,8,9,10,11,12]. The general procedure usually involves data acquisition via sensors, data preprocessing, feature extraction and pattern recognition before the structural health condition can be inferred. Generally, under the SPR technique, a reference data set is acquired from the undamaged state of the structure, recorded, and then compared with new data sets of unknown structural health state. Any deviation in pattern from the baseline feature indicates that irregularities or damage may be present in the structure [13,14,15]. SPR has been applied intensively in VBDD over the last decade, which has allowed damage detection and identification to be carried out globally and effectively without the need to remove components to reach hidden damage [1,2,3].

There are numerous SHM studies centered on aircraft and civil structures using various algorithms. Kernel-based algorithms such as KPCA, greedy KCA and the support vector machine have been successfully studied and compared with alternative algorithms such as the auto-associative neural network (AANN), factor analysis (FA) and Mahalanobis squared distance (MSD) regarding damage detection performance in the presence of OEVs [3]. The study carried out by Santos et al. concludes that KPCA produces a lower false positive error than the alternative algorithms [3]. The authors extract damage-sensitive features from the time series in the form of the parameters of an autoregressive (AR) model, whose number is set by the order of the AR model. The high damage detection rate of KPCA indicates the algorithm’s capability to capture the OEVs in the high-dimensional space, as also demonstrated in [13,14,15].

Another related nonlinear PCA-based approach is a strain field pattern recognition method for damage detection that uses two statistical damage indices, the \(T^{2}\) index and the Q index. These indices are used to classify various damage severities when the structure is exposed to different loading conditions. The pattern recognition approach successfully performs damage detection when the structure is under OEVs, based on measurement data from various strain sensors [9].

To produce a reliable and robust SHM system, the system should be able to discriminate the OEV effects from the signal, a process commonly referred to as data normalization. If those effects are not accounted for, the detection system can trigger false positive or false negative damage detections [1, 4, 6]. In vibration-based SHM, regression-based approaches have also been utilized to normalize the confounding effects on the dynamic response. Cross and Worden (2018) reported that fitting a regression model to data containing OEVs will capture mainly the OEVs in the system [6]. By subtracting the OEV model predictions from the test data, the remaining data can show higher sensitivity to damage once the OEV effects are eliminated. Commonly used regression models include ANN, SVM and the Gaussian process. The treed Gaussian process is shown to be effective as a switching nonlinear model for removing temperature variation to produce a damage-sensitive feature. By partitioning the data effectively using regression trees, a linear regression model is fitted to the response signal over each distinct region more smoothly, which allows it to produce excellent results.

The current work introduces nonlinear PCA based on the Gaussian kernel to resolve the overlapping and hidden features that mask the damage-sensitive (DS) features. KPCA is also used to establish the nonlinear relationship between the loading and damage variables better than a linear PCA method. The performance of KPCA relies on the radial distance parameter, also known as the precision. In this work, the precision value is computed from the distance between the baseline data and the test data of various damage classes that undergo equivalent loading conditions. To make the search for this precision more convenient, a Euclidean distance block is proposed and presented in this work.

The strategy applied in the KPCA model is illustrated in Fig. 1. The FRF data associated with healthy conditions, comprising all measured loading conditions, are stored as the baseline set. The covariance matrix and its eigenvectors are then computed from the baseline set. The selected eigenvectors (principal components) with the highest variance are stored as the coefficients to be applied to the various structural health conditions. The selected principal components are then multiplied with each normalized data set, named structural conditions 1, 2, 3 and 4. It is important to note that the healthy state and all damaged states undergo the same loading conditions, labeled L1, L2, …, L5 (empty, quarter full, half full, three-quarter full and full), as illustrated in Fig. 1.
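The baseline strategy described above can be sketched in a few lines for the linear PCA case. This is a minimal illustration rather than the authors' implementation: the function names, the random placeholder data and the choice to normalize every data set with the baseline mean and standard deviation are all assumptions.

```python
import numpy as np

def fit_baseline_pca(baseline, n_components):
    """Fit PCA on the baseline (healthy) set; rows are samples, columns are features."""
    mean = baseline.mean(axis=0)
    std = baseline.std(axis=0, ddof=1)
    std[std == 0] = 1.0                       # guard against constant columns
    z = (baseline - mean) / std
    cov = np.cov(z, rowvar=False)             # covariance matrix of the baseline set
    eigvals, eigvecs = np.linalg.eigh(cov)    # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_components]
    return mean, std, eigvecs[:, order], eigvals[order]

def project(data, mean, std, components):
    """Normalize with the baseline statistics (assumption), then project."""
    return ((data - mean) / std) @ components

# Placeholder data standing in for FRF features (hypothetical values).
rng = np.random.default_rng(0)
baseline = rng.normal(size=(200, 20))
condition_1 = rng.normal(loc=0.5, size=(200, 20))

mean, std, components, variances = fit_baseline_pca(baseline, n_components=3)
scores_baseline = project(baseline, mean, std, components)
scores_condition_1 = project(condition_1, mean, std, components)
```

Because the components are computed from the baseline only, any shift of `scores_condition_1` relative to `scores_baseline` reflects a departure from the healthy reference rather than a refit of the model.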

This paper is organized as follows. A brief background and related previous work on PCA are described in the Introduction section. Next, the formulation of KPCA is presented in the Mathematical Equations section. The MSD is also given there, as it is used for novelty detection in this paper. The experimental setup, configuration and data preprocessing steps are explained in the Methodologies section. The frequency spectrum of the structure and the feature selection are addressed in the Results and Analysis section, which also presents the Euclidean distance matrix block, the KPCA transformation and the outlier analysis using MSD. Finally, the important findings are summarized in the Summary and Conclusions section.

Mathematical Equations

Constructing the Kernel PCA

Kernel substitution replaces the scalar product \({\text{x}}^{T}{\text{x}}^{^{\prime}}\) with a nonlinear kernel function before merging it with PCA in an eigenvalue solution. It avoids performing standard PCA explicitly in the new feature space, which can be extremely costly and inefficient [15,16,17]. In principle, for a nonlinear transformation \(\phi (x)\) from the original D-dimensional space to an M-dimensional feature space, the case M > D must be satisfied. First, assume that the projected new features have zero mean, defined as

The covariance matrix of the projected features with dimensional size \(M \times M\) is

Its eigenvalues and eigenvectors are indicated by

where k = 1, 2, …, M. From Eqs 1 and 3, this gives

from which \(v_{k}\) can be restated as

Now, substituting \(v_{k}\) in Eq 5 with Eq 4 yields

The kernel function can be defined as

Multiplying both sides of Eq 6 by \(\phi ({\text{x}}_{l} )^{T}\) gives

Using matrix notation,

where

and \(a_{k}\) is the N-dimensional column vector of \(a_{ki}\).

Solving for \(a_{k}\) by

the resulting kernel principal components can then be obtained using

In the transformed space, the projected data set \(\{ \phi (x_{i} )\}\) generally does not have zero mean, and the mean cannot simply be subtracted from the data in that space. Instead, the algorithm is formulated in terms of the kernel function using the centered Gram matrix, given by

where \(1_{N}\) is the N-by-N matrix with all elements equal to 1/N.
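For reference, the steps described in this section follow the standard textbook derivation of kernel PCA, which can be written out as below. The expressions are listed in the order in which the text introduces them. They are the usual form of the derivation and not a reproduction of the authors' typeset equations, and the summation indices \(n\), \(i\) and \(j\) are assumptions.

```latex
% Zero-mean assumption in feature space
\sum_{n=1}^{N} \phi(\mathbf{x}_n) = \mathbf{0}

% M x M covariance matrix of the projected features
\mathbf{C} = \frac{1}{N} \sum_{n=1}^{N} \phi(\mathbf{x}_n)\,\phi(\mathbf{x}_n)^{T}

% Eigenvalue problem, k = 1, ..., M
\mathbf{C}\,\mathbf{v}_k = \lambda_k \mathbf{v}_k

% Combining the covariance definition with the eigenvalue problem
\frac{1}{N} \sum_{n=1}^{N} \phi(\mathbf{x}_n)\left\{ \phi(\mathbf{x}_n)^{T}\mathbf{v}_k \right\} = \lambda_k \mathbf{v}_k

% v_k as a linear combination of the mapped data
\mathbf{v}_k = \sum_{i=1}^{N} a_{ki}\,\phi(\mathbf{x}_i)

% Substituting the expansion of v_k
\frac{1}{N} \sum_{n=1}^{N} \phi(\mathbf{x}_n)\,\phi(\mathbf{x}_n)^{T} \sum_{i=1}^{N} a_{ki}\,\phi(\mathbf{x}_i)
  = \lambda_k \sum_{i=1}^{N} a_{ki}\,\phi(\mathbf{x}_i)

% Kernel function
k(\mathbf{x}_i, \mathbf{x}_j) = \phi(\mathbf{x}_i)^{T}\phi(\mathbf{x}_j)

% After multiplying by \phi(x_l)^T
\frac{1}{N} \sum_{n=1}^{N} k(\mathbf{x}_l, \mathbf{x}_n) \sum_{i=1}^{N} a_{ki}\,k(\mathbf{x}_n, \mathbf{x}_i)
  = \lambda_k \sum_{i=1}^{N} a_{ki}\,k(\mathbf{x}_l, \mathbf{x}_i)

% Matrix notation, with K_{ij} = k(x_i, x_j)
\mathbf{K}^{2}\mathbf{a}_k = \lambda_k N\,\mathbf{K}\,\mathbf{a}_k,
\qquad K_{ij} = k(\mathbf{x}_i, \mathbf{x}_j)

% Reduced eigenvalue problem for a_k
\mathbf{K}\,\mathbf{a}_k = \lambda_k N\,\mathbf{a}_k

% Kernel principal components
y_k(\mathbf{x}) = \phi(\mathbf{x})^{T}\mathbf{v}_k = \sum_{i=1}^{N} a_{ki}\,k(\mathbf{x}, \mathbf{x}_i)

% Centered Gram matrix
\tilde{\mathbf{K}} = \mathbf{K} - \mathbf{1}_N\mathbf{K} - \mathbf{K}\mathbf{1}_N + \mathbf{1}_N\mathbf{K}\mathbf{1}_N
```

The reduced eigenvalue problem involves only the N-by-N kernel matrix, which is why the mapping \(\phi\) never has to be evaluated explicitly.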

As stated earlier, the advantage of kernel methods is that the computation is not performed explicitly in the feature space; instead, the kernel matrix is constructed directly from the training data set \(\{x_{i}\}\). Popular kernels include the polynomial kernel and the sigmoid (hyperbolic tangent) kernel, with the Gaussian kernel being the most popular choice for complex problems [13,14,15]. In this study, the Gaussian kernel is utilized. It is given by

where \(\sigma\) is the kernel width, so that \(1/\sigma^{2}\) acts as the inverse variance (precision), which depends on the data variation [3].
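A compact numerical sketch of Gaussian kernel PCA is given below. It assumes the common form \(k(x, x') = \exp(-\|x - x'\|^{2} / (2\sigma^{2}))\); some references omit the factor of 2, and the source does not state which convention is used. The function names, the dictionary layout and the random placeholder data are hypothetical.

```python
import numpy as np

def gaussian_kernel(a, b, sigma):
    """Assumed form: k(x, x') = exp(-||x - x'||^2 / (2 sigma^2)) for all row pairs."""
    sq = (a**2).sum(axis=1)[:, None] + (b**2).sum(axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))

def fit_kpca(train, sigma, n_components):
    """Solve the centered kernel eigenproblem on the training (baseline) set."""
    n = train.shape[0]
    k = gaussian_kernel(train, train, sigma)
    one_n = np.full((n, n), 1.0 / n)
    k_c = k - one_n @ k - k @ one_n + one_n @ k @ one_n   # centered Gram matrix
    eigvals, eigvecs = np.linalg.eigh(k_c)                # ascending order
    order = np.argsort(eigvals)[::-1][:n_components]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    alphas = eigvecs / np.sqrt(eigvals)    # scales each v_k to unit norm in feature space
    return {"train": train, "k": k, "alphas": alphas,
            "eigvals": eigvals / n, "sigma": sigma}

def transform_kpca(model, test):
    """Project new samples using the kernel between test and training data."""
    train, k = model["train"], model["k"]
    n = train.shape[0]
    k_t = gaussian_kernel(test, train, model["sigma"])
    one_n = np.full((n, n), 1.0 / n)
    one_t = np.full((test.shape[0], n), 1.0 / n)
    k_tc = k_t - one_t @ k - k_t @ one_n + one_t @ k @ one_n
    return k_tc @ model["alphas"]

# Placeholder baseline data (hypothetical values).
rng = np.random.default_rng(0)
baseline = rng.normal(size=(50, 5))
model = fit_kpca(baseline, sigma=2.0, n_components=3)
baseline_scores = transform_kpca(model, baseline)
```

With this scaling, the variance of each column of `baseline_scores` equals the corresponding entry of `model["eigvals"]`, mirroring the role of the eigenvalues in linear PCA.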

Mahalanobis Squared Distance (MSD)

The MSD function is used in this work to analyze the variation of the data samples in the KPCA model. It is based on Hotelling’s \(T^{2}\)-statistic [14]. High variation is desirable, as it distinguishes the conditions of the structure [1]. The \(T^{2}\) index is computed in the reduced space of the principal component projections, with the aim of comparing the degree of variability between the undamaged and damaged states. It is obtained from the score matrix of the PCA using the following equation
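As an illustration, the MSD of a set of scores with respect to the baseline can be computed as \((x - \bar{x})^{T} S^{-1} (x - \bar{x})\), where \(\bar{x}\) and \(S\) are the baseline mean and covariance. The sketch below also includes one commonly used Monte Carlo recipe for the threshold: draw Gaussian random matrices of the same size as the baseline, record the largest MSD in each trial, and take a high percentile of those maxima. The source does not detail the threshold procedure, so the recipe, the percentile, the number of trials and all names here are assumptions.

```python
import numpy as np

def msd(scores, baseline_scores):
    """Mahalanobis squared distance of each row of scores to the baseline set."""
    mean = baseline_scores.mean(axis=0)
    cov = np.atleast_2d(np.cov(baseline_scores, rowvar=False))
    diff = scores - mean
    solved = np.linalg.solve(cov, diff.T).T      # S^{-1} (x - mean) for every row
    return np.einsum("ij,ij->i", diff, solved)

def monte_carlo_threshold(n_samples, n_features, n_trials=1000,
                          percentile=99.0, seed=0):
    """Assumed recipe: percentile of the largest MSD over random Gaussian trials."""
    rng = np.random.default_rng(seed)
    maxima = np.empty(n_trials)
    for t in range(n_trials):
        sample = rng.standard_normal((n_samples, n_features))
        maxima[t] = msd(sample, sample).max()
    return np.percentile(maxima, percentile)

# Placeholder scores (hypothetical values): 200 baseline samples, 3 components.
rng = np.random.default_rng(1)
baseline_scores = rng.normal(size=(200, 3))
damaged_scores = baseline_scores + 10.0          # an exaggerated shift for illustration
threshold = monte_carlo_threshold(200, 3, n_trials=200)
flags = msd(damaged_scores, baseline_scores) > threshold
```

Samples whose MSD exceeds `threshold` are flagged as novel with respect to the baseline.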

Methodologies

Experiment Configuration and Data Acquisition

The data acquisition system is a 16-channel DIFA SCADAS III high-speed data acquisition system, controlled by LMS software running on a Dell desktop PC. The samples were captured over the frequency range 0–1024 Hz with a resolution of 0.25 Hz. With a sampling rate of 2048 samples per second, the data are presented in the results in terms of spectral lines, amounting to 8192 spectral lines.

A white Gaussian signal is used to excite the wing box via an LDS shaker powered by an amplifier. The response signal was measured using PCB piezoelectric accelerometers (s1–s7) mounted vertically below the wing box and the water tank (Fig. 3). The excitation signal was measured by a standard PCB force transducer.

Water tanks are bonded above the wing box plate in order to provide changing loading effects in each measurement. The top sheet is an aluminum sheet measuring 750 × 500 × 3 mm. It is stiffened by two ribs made from lengths of C-channel riveted to the shorter edges and by two stiffeners of angle section bolted along the length of the sheet. Free–free boundary conditions are approximated by suspending the wing box from a substantial frame using springs and nylon lines attached at the corners of the top sheet (Fig. 2). The structural weight is approximately 6.464 kg.

Introduce Load Variables and Damage Variables

The liquid loading levels are empty, quarter full, half full, three-quarter full and full, as measured on the tank. The process of filling and emptying is reversible (repeated), and the same amount must be used for each class. After completing the measurements for the baseline set, damage is introduced in the structure’s stiffener using four different saw-cut lengths (labeled D1, D2, D3 and D4), as shown in Fig. 3.

In this work, the data are grouped based on the structural health conditions (Fig. 4). The baseline set comprises data from the undamaged state under the operational loading conditions described above. The KPCA transformation into a high-dimensional space based on the Gaussian function allows the nonlinear relationship between the baseline set and the damage sets to be compared. The effects of loading variations can also be examined in all data groups (damaged and undamaged), as they are naturally present in the frequency response. All data groups are compared in terms of damage condition.

Each data group contains 200 samples, giving 1000 recorded samples in total. Within each group, 40 samples are recorded for each of the 5 loading classes. The PCA model (illustrated in Fig. 1) is based on Fig. 4, where the eigenvectors of the covariance matrix are computed from the baseline (undamaged) set. The goal is to identify whether the transformed data from the damage sets can be distinguished from the baseline set when projected into the feature space (Fig. 1).
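The sample counts above can be checked with a small sketch of the data layout. The ordering of the rows (grouped by structural condition, then by loading class) and the label strings are assumptions made for illustration only.

```python
import numpy as np

conditions = ["undamaged", "D1", "D2", "D3", "D4"]
loads = ["empty", "quarter", "half", "three_quarter", "full"]
samples_per_load = 40

# One label per recorded sample, assuming rows are ordered condition by condition.
condition_labels = np.repeat(conditions, len(loads) * samples_per_load)
load_labels = np.tile(np.repeat(loads, samples_per_load), len(conditions))

baseline_mask = condition_labels == "undamaged"
d1_full_mask = (condition_labels == "D1") & (load_labels == "full")
```

Masks such as `baseline_mask` can then be used to select the rows from which the baseline model is built.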

Results and Analysis

Dynamic Behavior Due to Operational Loading and Damage Variations

The signals captured from all the accelerometers are analyzed. The best signal is selected from one of the accelerometers on the criterion that it shows good sensitivity to both damage and loading. The sensitivity is judged from the shift of the frequency peaks under different loading conditions. The signal is found to be more sensitive to loading effects than to damage severity, as is evident from the shift of the frequency peaks when the structure is subjected to various loadings (Fig. 5). Because the labels are known in this study, the structure’s response to loading changes and to damage can each be understood.

The FRF plot in Fig. 5 shows distinct peaks at the lower spectral lines, in the range of 200–800 spectral lines (50–200 Hz). Zooming into the selected range highlights damage sensitivity between spectral lines 350 and 450 (87.5–112.5 Hz).
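The correspondence between spectral lines and frequency follows directly from the 0.25 Hz resolution. A minimal sketch, assuming spectral line 0 corresponds to 0 Hz (the function names are hypothetical):

```python
RESOLUTION_HZ = 0.25  # frequency resolution stated for the measurements

def line_to_hz(line):
    """Convert a spectral line index to frequency in Hz."""
    return line * RESOLUTION_HZ

def hz_to_line(freq_hz):
    """Convert a frequency in Hz to the nearest spectral line index."""
    return round(freq_hz / RESOLUTION_HZ)

selected_band_hz = (line_to_hz(350), line_to_hz(450))
```

For example, `line_to_hz(200)` and `line_to_hz(800)` reproduce the 50–200 Hz range quoted for the distinct peaks.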

In calculating the precision value for the Gaussian kernel function, the rows for samples 0–40 of the distance block between the undamaged and damaged classes, labeled 1 in Fig. 6, are considered. The smallest distances between the undamaged set and each damage class data set are located in this first row, indicated by 1 and shown in the darkest blue of the color scale.

The precision value is computed from the mean of the distances between the undamaged and damage class data represented in the matrix block. It is written as \(\sigma = {\text{ mean}}\left( {D_{i}^{NN} } \right)\), where \(D_{i}^{NN}\) represents the smallest distance (excluding zero) between each data point in a row.

To calculate the precision value, the minimum is first taken in each column of the distance matrix, and the average of these minima is then calculated (across the first row of the distance block) to give σ. With this strategy, the results show greater class separation, especially among the lower-variance data groups. This value is therefore adopted for the damage detection approach based on the MSD, as illustrated in Fig. 8. On the other hand, larger sigma values (taken from the second row of the distance block, in the white dashed box marked 2) produce a better data pattern for tracking the various damage states, which is preferable for monitoring structural health. For damage detection, the minimum mean distance is shown to be more appropriate, so that the smallest damage can be captured. KPCA can produce different levels of separation by adopting a slightly higher sigma. This rule has a potential application in monitoring structural conditions and damage progression, as demonstrated in Fig. 7.
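One possible reading of this rule is sketched below: build the Euclidean distance block between baseline and damage samples of the same loading class, take the smallest nonzero distance in each column, and average the result. The function names and the toy data are hypothetical, and the exact block layout used in Fig. 6 is not reproduced here.

```python
import numpy as np

def pairwise_distances(a, b):
    """Euclidean distance between every row of a and every row of b."""
    sq = (a**2).sum(axis=1)[:, None] + (b**2).sum(axis=1)[None, :] - 2.0 * a @ b.T
    return np.sqrt(np.maximum(sq, 0.0))

def sigma_from_block(baseline_block, damage_block):
    """Mean of the smallest nonzero distance in each column of the distance block."""
    d = pairwise_distances(baseline_block, damage_block)
    d = np.where(d > 0, d, np.inf)               # exclude zero distances
    nearest = d.min(axis=0)                      # nearest baseline sample per column
    return nearest[np.isfinite(nearest)].mean()

# Toy example with two baseline and two damage samples (hypothetical values).
baseline_block = np.array([[0.0, 0.0], [1.0, 0.0]])
damage_block = np.array([[0.0, 3.0], [1.0, 4.0]])
sigma = sigma_from_block(baseline_block, damage_block)
```

Using a different row of the distance block, as described for the larger sigma values, would only change which samples are passed in as `baseline_block` and `damage_block`.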

The results produced by KPCA in Fig. 8 display significant separation between the baseline sets and the damage states. The data points from the damage states are distinctly separated from the baseline data points for all loading classes. However, the separation between damage states, especially for the full tank, is not systematic.

All data points from all damage states, including the smallest damage D1, are easily identified as damage and lie well above the threshold line.

Note that the main purpose of establishing a visualization model via KPCA is to monitor the structural health condition by highlighting the separation between the baseline set (undamaged variables) and the damage set. KPCA is shown to fulfill this expectation, as highlighted in the above results.

Considering all loading and damage classes in Fig. 7, the results using Gaussian kernel PCA confirm that the loading features are distinctly separated. They display a distinct pattern that is useful for tracking and monitoring damage changes in a changing load environment. The features of the damage severities are also well identified, especially for the higher damage levels (D3 and D4).

The performance of the transformation is measured by the degree of separation of the baseline from the damaged conditions using the T-squared statistic (MSD function), as illustrated in Fig. 8. The variances (eigenvalues) of the first 100 principal components are extracted to provide a sufficient representation of the structural characteristics.

Using KPCA, joining all operational loading conditions as the baseline produces novelty detection with excellent results for all loading conditions. The results shown in Fig. 8 display greater data separation between the baseline and the damage classes. The damage classes are distinctly separated from the baseline for all loading classes.

The distance associated with the damage severities, especially between the undamaged and damaged classes, is significantly separated. This may be due to the large variation in the distance matrix calculated from the combined loads (empty to full load joined as the baseline set), which makes the sigma computed from the distance matrix difficult to adjust to the high variability arising from the large variance.

It was found that varying the sigma value alters the trend of the damage severity variations and their T-squared index relative to the reference set. In this case, the sigma is adapted to each structural health condition, as illustrated in the distance matrix (Fig. 6), to obtain appropriate data variations. Nevertheless, some data are not correlated with the damage severity level, owing to the small damage sizes introduced in the wing. Through this holistic approach, KPCA is shown to be useful for SHM and novelty detection under the influence of operational loading variations.

Summary and Conclusions

The key contribution of this work is the adaptation of the KPCA parameter, namely the flexibility of the precision value. This study also highlights data behavior under various loading and damage variables for the VBDD application. The loading variables have a significant effect compared with the real damage variables when using KPCA. Even a small loading variation in the system may be falsely identified as damage. The data features are likely to overlap in the linear PCA projection space, making the detection of damage more challenging. KPCA transformation can therefore be utilized to reveal and distinguish the features driven by damage or loading effects when projected into the feature space. Besides separating the loading and damage effects, the flexibility of the precision value in the kernel function also provides an advantage for monitoring and detecting damage in engineering structures.

An important criterion when applying KPCA is the choice of a suitable hyperparameter sigma (σ), which is also known as the precision value. It enters as an inverse variance (\(1/\sigma^{2}\)) in the nonlinear mapping based on the Gaussian kernel or radial basis function (RBF) [15,16,17]. The work has also established a visualization method using a color-scale distance matrix to help visualize the data separation between the baseline and damage classes. Based on the Euclidean distance matrix, the inverse variance can be computed on the basis that the sigma parameter value (σ) should be smaller than the average inter-class data distance (between different classes) and larger than the average inner-class data distance. Another key benefit of Gaussian kernel PCA, besides improving data separation between the undamaged condition and the damaged states, is that the full data trajectory, or pattern, can be established and visualized. This complete pattern can guide the monitoring of structural health based on the departure of a feature from the baseline feature. In this study, the pattern of the damage features forms the radius of the ellipse directed toward the center, while the loading class forms the outer ellipse. This can provide useful information when examining the damage state and finding the associated loading class instantaneously.
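The bound on σ stated above can be checked numerically. The sketch below compares a candidate σ with the average inner-class and inter-class Euclidean distances of two classes. Averaging the two inner-class means and the function names are assumptions made for illustration.

```python
import numpy as np

def mean_distance(a, b, exclude_self=False):
    """Average Euclidean distance between rows of a and rows of b."""
    diff = a[:, None, :] - b[None, :, :]
    d = np.sqrt((diff**2).sum(axis=2))
    if exclude_self:                       # drop the zero diagonal when a is b
        return d[~np.eye(len(a), dtype=bool)].mean()
    return d.mean()

def sigma_is_admissible(sigma, class_a, class_b):
    """True if sigma lies between the inner-class and inter-class mean distances."""
    inner = 0.5 * (mean_distance(class_a, class_a, exclude_self=True)
                   + mean_distance(class_b, class_b, exclude_self=True))
    inter = mean_distance(class_a, class_b)
    return bool(inner < sigma < inter)

# Toy classes (hypothetical values): tight clusters that are far apart.
class_a = np.array([[0.0, 0.0], [0.0, 1.0]])
class_b = np.array([[10.0, 0.0], [10.0, 1.0]])
ok = sigma_is_admissible(5.0, class_a, class_b)
```

A value of σ below the inner-class distance tends to isolate individual samples, while a value above the inter-class distance blurs the classes together, which is the motivation for keeping σ between the two.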

References

C.R. Farrar, K. Worden, Structural Health Monitoring: A Machine Learning Perspective, 1st edn. (Wiley, Hoboken, 2013)

J. Alvarez-Montoya, A. Carvajal-Castrillón, J. Sierra-Pérez. In-flight and wireless damage detection in a UAV composite wing using fiber optic sensors and strain field pattern recognition. Mech. Syst. Signal Process. 136, 106526, ISSN 0888-3270 (2020).

A. Santos, E. Figueiredo, M.F.M. Silva, C.S. Sales, J.C.W.A. Costa. Machine learning algorithms for damage detection: Kernel-based approaches. J. Sound Vib. 363, 584–599, ISSN 0022-460X (2016). https://doi.org/10.1016/j.jsv.2015.11.008.

K. Worden, T. Baldacchino, J. Rowson, E.J. Cross. Some recent developments in SHM based on nonstationary time series analysis. In Proc. IEEE, vol. 104 (2016)

A. Montazeri, S.M. Kargar. Fault detection and diagnosis in air handling using data-driven methods. J. Build. Eng. 31, 101388, ISSN 2352-102 (2020). https://doi.org/10.1016/j.jobe.2020.101388.

E.J. Cross, G. Manson, K. Worden, S.G. Pierce, Features for damage detection with insensitivity to environmental and operational variations. Proc. R. Soc. A Math. Phys. Eng. Sci. 468(2148), 4098–4122 (2012)

F. Bencheikh, M.F. Harkat, A. Kouadri, A. Bensmail. New reduced kernel PCA for fault detection and diagnosis in cement rotary kiln. Chemom. Intell. Lab. Syst. 204, 104091, ISSN 0169-7439 (2020). https://doi.org/10.1016/j.chemolab.2020.104091

H. Wang, M. Peng, Y. Yu, H. Saeed, C. Hao, Y. Liu. Fault identification and diagnosis based on KPCA and similarity clustering for nuclear power plants. Ann. Nucl. Energy 150, 107786, ISSN 0306-4549 (2021). https://doi.org/10.1016/j.anucene.2020.107786

J. Sierra-Pérez, A. Güemes, L.E. Mujica, Damage detection by using FBGs and strain field pattern recognition techniques. Smart Mater. Struct. 22(2), 025011 (2013)

H.J. Lim, M.K. Kim, H. Sohn, C.Y. Park, Impedance based damage detection under varying temperature and loading conditions. NDT E Int. 44(8), 740–750 (2011)

L. Qiu, S. Yuan, H. Mei, F. Fang, An improved Gaussian mixture model for damage propagation monitoring of an aircraft wing spar under changing structural boundary conditions. Sensors (Switzerland). 16(3), 1–18 (2016)

R. Sharafiz. Investigating the Effect of Variable Mass Loading in Structural Health Monitoring from a Machine Learning Perspective. Ph.D. thesis, University of Sheffield (2018)

A. Vinay, S. Shekhar, K.N. Balasubramanya Murthy, S. Natarajan. Face recognition using Gabor wavelet features with PCA and KPCA—a comparative study. Procedia Comput. Sci. 57, 650–659, ISSN 1877-0509 (2015). https://doi.org/10.1016/j.procs.2015.07.434

A. Bakdi, A. Kouadri. A new adaptive PCA based thresholding scheme for fault detection in complex systems. Chemom. Intell. Lab. Syst. 162, 83–93, ISSN 0169-7439 (2017). https://doi.org/10.1016/j.chemolab.2017.01.013

L. Zhang, J. Lin, R. Karim. Adaptive kernel density-based anomaly detection for nonlinear systems. Knowl. Based Syst. 139, 50–63, ISSN 0950-7051 (2018). https://doi.org/10.1016/j.knosys.2017.10.009

J. Yu, J. Yoo, J. Jang, J.H. Park, S. Kim. A novel hybrid of auto-associative kernel regression and dynamic independent component analysis for fault detection in nonlinear multimode processes. J. Process Control 68, 129–144, ISSN 0959-1524 (2018). https://doi.org/10.1016/j.jprocont.2018.05.004

R. Shao, W. Hu, Y. Wang, X. Qi. The fault feature extraction and classification of gear using principal component analysis and kernel principal component analysis based on the wavelet packet transform. Measurement 54, 118–132, ISSN 0263-241 (2014). https://doi.org/10.1016/j.measurement.2014.04.016

Acknowledgments

The author would like to express full appreciation to his supervisor Dr Graeme Manson for his technical advice during the implementation of the work at the University of Sheffield. He also would like to thank Prof Keith Worden for his insights on this subject. This research is undertaken with the support of Ministry of Education under the scholarship reference number 760321026183.

Additional information

This article is an invited paper selected from presentations at the 5th Symposium on Damage Mechanism in Materials and Structures (SDMMS 20–21), held March 8–9, 2021, in Penang, Malaysia, and has been expanded from the original presentation.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rahim, S.A., Manson, G. Kernel Principal Component Analysis for Structural Health Monitoring and Damage Detection of an Engineering Structure Under Operational Loading Variations. J Fail. Anal. and Preven. 21, 1981–1990 (2021). https://doi.org/10.1007/s11668-021-01260-1

DOI: https://doi.org/10.1007/s11668-021-01260-1