Abstract

When intelligent voice-based assistants (VBAs) present news, they simultaneously act as interlocutors and intermediaries, enabling direct and mediated communication. Hence, this study discusses and investigates empirically how interlocutor and intermediary predictors affect an assessment that is relevant for both: trustworthiness. We conducted a secondary analysis using data from two online surveys in which participants (N = 1288) had seven quasi-interactions with either Alexa or Google Assistant and calculated hierarchical regression analyses. Results show that (1) interlocutor and intermediary predictors influence people’s trustworthiness assessments when VBAs act as news presenters, and (2) that different trustworthiness dimensions are affected differently: The intermediary predictors (information credibility; company reputation) were more important for the cognition-based trustworthiness dimensions integrity and competence. In contrast, intermediary and interlocutor predictors (ontological classification; source attribution) were almost equally important for the affect-based trustworthiness dimension benevolence.

1 Introduction

In the past decade, the world has seen a rapid development and dissemination of intelligent voice-based assistants (VBAs) that use a human-sounding voice to interact with humans (e.g., Apple’s Siri, Amazon’s Alexa, Google Assistant). These software applications first and foremost serve as digital personal assistants (Knote et al. 2019): They are implemented in numerous technological devices (e.g., smartphones, laptops, headphones, smart speakers) and meant to assist humans with their everyday tasks like setting timers or getting directions. However, in addition to these assistive functionalities, they are marketed and designed as intelligent agents with a unique persona and an elaborate conversational user interface (CUI). Based on speech recognition, natural language processing, and AI-aided speech synthesis, VBAs can understand spoken instructions and answer in a human-sounding voice (Deloitte 2018; McTear et al. 2016; Yang et al. 2019). Thus, unlike previous assistance applications, VBAs also have the capacities to imitate human communication patterns and simulate intentions (Burgoon et al. 1999; Guzman 2019; Hearst 2011; McTear et al. 2016), thereby becoming interlocutors. Human-Machine Communication (HMC) scholars have been at the forefront of investigating how these machines become social actors through their ontological hybridity between assistive tool and autonomous agent, and how this affects users’ reactions and behavior toward them.

However, the VBAs’ connection to the World Wide Web and to numerous cloud services and platforms allows them to adopt functionalities that lie outside of traditional HMC research and touch areas within Computer-Mediated (Mass) Communication (CMC) research: by accessing, selecting, and presenting news from external content providers like mass media, VBAs become information intermediaries. In some cases, VBAs simply announce a source and play the original audio (or audiovisual) content; in other cases, they present the content themselves. Thus, VBAs may even adopt the role of “artificial news anchors” (Weidmüller 2022, p. 86), a role previously reserved for human interactants (apart from a few experimental exceptions, e.g., at China’s state news agency Xinhua). Importantly, VBAs are not only increasingly capable of presenting news (Lyons 2020; Porter 2019) but are also increasingly used as news presenters (Ammari et al. 2019; Kinsella and Mutchler 2020; Natale and Cooke 2021; Newman et al. 2019). Hence, people could rely on the information they receive from VBAs to form opinions about the world surrounding them. This raises the question of how people evaluate VBAs and the content they present in this context. Do they base their assessments on the VBAs’ context-specific tool characteristics, i.e., their function as intermediaries, or rather on their human-like characteristics, i.e., their function as interlocutors?

Depending on which function of the VBA is the focus, two different research traditions offer important insights. These are HMC, traditionally focusing on the interlocutor function, and CMC, traditionally focusing on the intermediary function of machines. However, there is a dearth of research that considers that VBAs fulfill both functions simultaneously. Hence, we investigate the effect of predictors from both research traditions on an assessment that is relevant for both: trustworthiness. Concerning news, trust is one of the most important evaluative aspects (Kohring 2019; Strömbäck et al. 2020) and it also drives the adoption of and reliance on autonomous technologies (Schaefer et al. 2016). It develops over time based on context-specific trustworthiness assessments (Mayer et al. 1995). So far, HMC (and adjacent research fields) has focused on the influence of VBAs’ anthropomorphic design and social cues (e.g., voice properties, appearance) on trustworthiness and feelings of trust in various contexts (e.g., de Visser et al. 2016; Rheu et al. 2021). However, it is unclear how these cues influence people’s context-specific assessment of trustworthiness when VBAs become information intermediaries. Other cues and heuristics like source reputation and content credibility (Metzger et al. 2010) that have been found influential in CMC research could be even more important in this context. Thus, this paper investigates the following research question:

RQ

When VBAs present news, how do interlocutor and intermediary predictors relate to the assessment of their trustworthiness?

To investigate this question, we, firstly, elaborate on important predictors of trustworthiness from both research traditions. Secondly, we examine the extent to which interlocutor and intermediary predictors affect different dimensions of trustworthiness in a hierarchical regression model. We show that both sets of predictors contribute differently to the explanatory power of the model and discuss the substantive and methodological implications for future research. In doing so, this study follows in the tradition of combining extant research fields of communication science to gain a more holistic understanding of how new communication technologies are perceived, and how they shape communication processes.

2 Trust and trustworthiness

As one of the most important social heuristics, trust helps people to reduce the complexity and uncertainties of their environment, enabling them to act and choose between alternatives (Lee and See 2004). Trust in general can be defined as an attitude of a trusting party (trustor) toward another (trustee) involving the expectation that interacting with or relying on the trustee will prompt favorable outcomes (Blöbaum 2016; Lee and See 2004). It is of relevance in any situation where two or more distinct parties have to rely on one another in order to effectively or successfully complete an interaction that involves uncertainty, unequally distributed knowledge, and/or the risk of negative consequences (Lee and See 2004; Mayer et al. 1995; McKnight et al. 2011). It thus includes a willingness to be vulnerable to the actions of another party irrespective of the ability to control it (Lee and See 2004; Mayer et al. 1995) and applies to human-human, human-machine, or human-organization relationships (Schaefer et al. 2016).

In the context of information or, more specifically, news use, people must be able to trust the information intermediaries because they do not have the resources or capabilities to (a) experience all recent events and societally relevant decisions firsthand, and (b) thoroughly evaluate every piece of information they are presented with in an increasingly complex information environment (Kohring 2019; Metzger 2007; Strömbäck et al. 2020). Essential for the development of trust over time are the trustor’s prior experiences with the trustee and the trustor’s evaluation of the trustee’s (perceived) qualities.

This assessment of the trustee’s qualities and characteristics is studied under the term trustworthiness (Lee and See 2004). In this study, trustworthiness is conceptualized as the assessment of characteristics that correspond with a triad of dimensions, namely competence, integrity, and benevolence (e.g., Blöbaum 2016; Lee and See 2004; Mayer et al. 1995; Metzger et al. 2003). These dimensions comprise different components of trustworthiness: The competence dimension encompasses the “can-do” component of the assessment, i.e., whether the trustee possesses the skills and knowledge to fulfill a certain task (Colquitt et al. 2007, p. 910). Integrity and benevolence are two facets of the “will-do” component of the assessment, thus including the judgment of the trustee’s intentionality (Colquitt et al. 2007, p. 911): Benevolence refers to the extent to which a trustee intends to do good to the trustor (Mayer et al. 1995, p. 718), which can foster emotional attachment and positive affect toward the trustee (Colquitt et al. 2007, p. 911). In contrast, integrity is defined as the extent to which the trustee adheres to sound moral and ethical principles (Mayer et al. 1995), which is “a very rational reason to trust someone” as these principles provide a long-term predictability that helps overcome uncertainty (Colquitt et al. 2007, p. 911). Competence and integrity can thus also be interpreted as cognition-based calculations of the trustee’s abilities and principles, which are supplemented by the more affect-based benevolence dimension (Colquitt et al. 2007, p. 918). While this conceptualization of trustworthiness was developed for human trustees, it has been shown to be applicable to technologies that exhibit primary social cues, like a human-sounding voice and natural language processing capabilities, thereby mirroring human interaction patterns and implying meaningful behavior and human-likeness (Hearst 2011; Lankton et al. 2015; Lombard and Xu 2021; Weidmüller 2022).

However, which specific qualities or characteristics are the reasons to trust a trustee depends on the context, meaning the goal for which the trustor relies on the trustee. Thus, trust is domain-specific and different aspects of the trustee’s characteristics can be relevant regarding all three dimensions of trustworthiness in any given context (Mayer et al. 1995, p. 727). In this study, we examine the trustworthiness of VBAs in their increasingly important function as information intermediaries. Hence, trustworthiness can be defined as the competence to provide information, the integrity to provide truthful information (thus adhering to a central journalistic norm), and the benevolence to help satisfy the trustors’ information need(s). The latter would manifest as the presentation of information that is relevant to the trustor and not misleading or potentially harmful to the trustor.

There is a large body of research dealing with the factors that influence the trustworthiness assessment of (perceptually) autonomous agents (see for example Hancock et al. 2011; Schaefer et al. 2016). When VBAs act as information intermediaries, they simultaneously assume the functions of direct conversation partners, due to the conversational mode in which they present news, and of mediating information channels, since they present content from external sources. Therefore, we consider the effect of predictors pertaining to the VBAs’ perceived capacities as interlocutors as well as predictors relevant to their intermediary function on the trustworthiness assessment. Furthermore, individual characteristics of the trustor have been shown to affect assessments of trustworthiness and will be considered as control variables (Schaefer et al. 2016).

2.1 Predictors pertaining to the VBA as interlocutor

In the past decade, we have seen rapid progress in the abilities of VBAs to imitate human communication: Automatic speech recognition is now as reliable as human speech recognition in terms of its word error rate (Scharenborg 2019), thereby enabling users to pose queries in more natural language. In addition, speech synthesis using neural networks is now tackling issues like prosody and speech melody (Lyons 2020; Porter 2019; Wood 2018), thus making the VBAs’ responses more human-like and natural. These technological advances regarding the CUI have heightened the similarities between interactions with these machines and human-human interactions. They enable VBAs to imitate human communication behavior and simulate intentions (Burgoon et al. 1999; Guzman 2019; Hearst 2011; McTear et al. 2016; Moon et al. 2016). Additionally, scripted small talk responses, designed to convey a unique character for each VBA (Natale 2021), enhance this illusion of an autonomous communicator. “By exchanging messages with people or by performing a communicative task on their behalf” (Guzman 2020, p. 37), VBAs occupy the role of an interlocutor that people can communicate with, instead of a tool that people communicate through.

Whether and under which circumstances people perceive machines such as VBAs more as tools or as autonomous communicators, and what results from this perception, has been the focus of HMC research. Studies have shown that users respond socially to technologies that exhibit social cues, leading them to attribute human-like traits and mental capacities to the technology (Banks 2021; Edwards 2018; Etzrodt and Engesser 2021; Garcia et al. 2018; Reeves and Nass 1996). Additionally, several studies have shown that these social cues can lead to people perceiving technology in general, and VBAs specifically, as veritable sources of communication rather than mere channels for communication (Guzman 2019; Lombard and Xu 2021; Sundar and Nass 2000, 2001).

These attributions and social reactions were once explained by scholars as “mindless anthropomorphism” that results from the human tendency to “overuse human social categories” (Nass and Moon 2000, p. 82). Other scholars argue that there is mindless and mindful anthropomorphism, depending on the quantity and quality of the social cues exhibited by a technology (Kim and Sundar 2012; Lombard and Xu 2021). With recent technological advancements in VBAs, but also chatbots, virtual agents, or social robots, the social cues and human-likeness these technologies exhibit have become far more sophisticated. As these agents are further integrated into everyday life, people are continuously assessing, either consciously or non-consciously, the nature of their interactants and “the roles that they themselves and the technology play in the interaction” (Jia et al. 2022, p. 388). As an interlocutor, the VBA can be defined as a party that “is involved in a conversation” (Cambridge Dictionary n.d.-a). Hence, the VBA’s perceived nature or identity as a trustee that can understand and meaningfully answer the trustor’s utterances is of importance for assessing the VBA. Importantly, previous research has emphasized that the perceived nature of the trustee has fundamental implications for the trustworthiness assessment (Lankton et al. 2015; Lee and See 2004; Weidmüller 2022). Thus, we ask:

RQ1

How does the perceived nature of VBAs relate to their trustworthiness?

2.1.1 Ontological classification

To classify the nature of VBAs is especially challenging for users, seeing as they are perceptual hybrids between the ontological classification of person/subject and thing/object (Etzrodt and Engesser 2021; Gunkel 2020; Guzman 2019). While VBAs are technological devices, their elaborate CUI triggers scripts of interpersonal communication (Burgoon et al. 1999; Hearst 2011) and thus confuses the fundamental classification between the “psychomorph” (“who”) and the “physicomorph” (“what”) scheme (Etzrodt 2021). While the first describes subjects (i.e., living beings), possessing intrinsic capabilities such as thinking or feeling, the latter is applicable for non-living objects, which can be understood by extrinsic precise, logical-mathematical categories and deterministic causal rules (Piaget 1974). VBAs, and similar intelligent technologies, have been found to challenge this distinction, to be objects of doubt, as people attribute to them a certain level of subjecthood, mental states, sociality, and even morality (Edwards 2018; Etzrodt and Engesser 2021; Guzman 2019; Kahn, Jr. et al. 2011).

To date there are no published studies on the effect of ontological classification between subject and object on trust or trustworthiness. However, we can infer assumptions about this relationship from related studies on the effect of (a) manipulated and (b) perceived human-likeness. (a) Firstly, several experiments compare the trust in, and trust-related behavior toward human vs. artificial agents with varying degrees of human-likeness (e.g., computers, chatbots, virtual assistants, robots). These studies found that mostly, the human, or more human-like agent (as defined by the researcher) was also the more trustworthy, eliciting more trusting behavior (e.g., de Visser et al. 2016; Kulms and Kopp 2019). However, studies using this experimental setting only include the definitional assumptions of the researchers and do not allow for a nuanced ontological classification on behalf of the participants. (b) The second strand of research is concerned with the effect of perceived human-likeness on trustworthiness, trust, or trust-related behavior. These studies found further evidence for the positive effect of human-likeness on trust in a variety of contexts and with a variety of artificial agents (e.g., Calhoun et al. 2019; Følstad et al. 2018; Gong 2008; Natarajan and Gombolay 2020; Seymour and Van Kleek 2021).

Perceptions of human-likeness in artificial agents can be understood as a projection of similarity to humans in thinking, feeling, and behaving, which has been found to increase the ontological classification of VBAs as subjects (Etzrodt and Engesser 2021). However, measuring human-likeness can only grasp perceptions of the trustee’s nature, but not whether the individual perceiver defines the trustee ontologically as subject or subject-like (Etzrodt 2021). Since we are interested in the latter, we investigate the effect of the distinct ontological classification on trustworthiness. Based on ample empirical evidence of an overall positive effect of human-likeness on trust, and the positive relationship between perceived human-likeness and the ontological classification as subject (Etzrodt and Engesser 2021), we propose:

H1

Higher ontological classification toward subject leads to higher trustworthiness.

However, an alternative effect is conceivable as well: According to the machine heuristic proposed by Sundar (2008), machines are perceived as more objective than humans and free of biases, which should lead to higher assessments of competence regarding news selection. Hence, it is likely that the different dimensions of trustworthiness are affected differently by the ontological classification. Regarding the cognition-based competence dimension (“can-do”), higher classification toward subject may have a negative effect (H1a). Regarding the intentionality-focused dimensions (“will-do”), it should have a positive effect because the similarity to humans that is implied by the ontological classification may suggest aligning principles (i.e., integrity, H1b) and intentions (i.e., benevolence, H1c).

2.1.2 Source attribution

Closely related to the ontological classification is the attribution of agency. Machine agency can be defined as “the perceived level of autonomy and volitional control” (Jia et al. 2022, p. 389) displayed or suggested by the machine’s functionalities and capabilities. To possess agency in a communicative interaction essentially means the ability to be the source of communication, not just the recipient or mediator. Several studies have shown that people can perceive VBAs as veritable sources of communication rather than mere channels for communication (Guzman 2019; Sundar and Nass 2000, 2001). This can be explained in line with the CASA (Computers Are Social Actors) paradigm, according to which technologies that exhibit sufficient social cues are ascribed the agency to be the source of communication (Gambino et al. 2020; Nass and Moon 2000; Sundar and Nass 2000). In a recent extension of the CASA paradigm, the MASA (Media Are Social Actors: Lombard and Xu 2021) paradigm, a human-sounding voice was identified as a primary social cue that can lead to the perception of MASA-presence and, thus, to the identification of the technology itself as the source of communication. Hence, VBAs exhibit particularly sophisticated social cues through their vocal abilities and artificial intelligence (Guzman 2019). Their human-like CUI makes interactions with VBAs more similar to human interactions (Burgoon et al. 1999; Guzman 2019; Hearst 2011), thereby triggering interpersonal scripts as well as social reactions and behavior toward them (Guzman 2019; Moon et al. 2016; Nass et al. 1999; Reeves and Nass 1996). Based on prior research (Guzman 2019; Sundar and Nass 2000), it is plausible to assume that users perceive VBAs as sources instead of mere channels for information, thus attributing communicative agency to them. 
Importantly for the context of this study, source attribution has been shown to not only influence users’ interaction with and behavior toward the technology (Guzman 2019), but also their evaluation of the information received from the technology (Sundar and Nass 2001). However, it is unclear how source attribution affects the assessment of VBAs’ trustworthiness. Since identifying a technology itself as the source relates to the concept of social presence as “the illusion of nonmediation” (e.g., Lombard and Ditton 1997; Solomon and Wash 2014) we can deduce assumptions about the effect of source attribution on trustworthiness from studies investigating social presence. A large body of research has found a positive effect of social presence on trust and trustworthiness (e.g., Gefen and Straub 2004; Liu 2021; Pitardi and Marriott 2021; Tan and Liew 2020; Toader et al. 2019). Hence, we propose:

H2

(a) Competence, (b) integrity, and (c) benevolence are higher if the VBA is perceived to be the source of communication rather than the medium.

2.2 Predictors pertaining to the VBA as intermediary

Use cases for VBAs increasingly include—besides a stable focus on simple, organizational tasks like setting alarms or reminders—general fact-related questions, access to service information (e.g., weather, traffic), and news updates (Kinsella and Mutchler 2020; Newman et al. 2019; splendid research GmbH 2019), which users rely upon. For example, Germans who were using VBAs at the beginning of 2020 (31%) said they used them mainly for information search (Reichow and Schröter 2020). By presenting information from external sources, VBAs serve as information (or media) intermediaries and can even be regarded as functional equivalents to traditional news anchors, in other words, “artificial news anchors” (Weidmüller 2022, p. 86). This informant role is actively pursued by VBA developer companies who aim to provide news services through their VBAs. Amazon, for example, explicitly announced that they were working on a voice that is particularly suitable for presenting news (Lyons 2020; Wood 2018). In terms of information selection, VBAs narrow the slim “bottleneck” (Broadbent 1958) for information that is determined by the human cognitive capacity even more than other intermediaries, as only one piece of information can be verbally presented in answer to a search query. How a piece of information is selected thereby depends on the underlying voice search optimization mechanisms that are built by the developer companies and not made public. This simultaneously establishes VBAs as modern gatekeepers and news anchors, granting them and their developer companies unprecedented potential influence on the selection and distribution of news and therefore public opinion formation. 
Moreover, the original sources of the information that is accessed through VBAs are not always self-evident, which diminishes the applicability of established heuristic cues, such as source reputation, to assess information credibility and source trustworthiness (Kalogeropoulos and Newman 2017; Metzger et al. 2010).

Their intermediary functionalities have only recently brought VBAs to the attention of Computer-Mediated (Mass) Communication (CMC) scholars. CMC research has focused on identifying the effect of channel attributes on the effectiveness of human-human communication, or mass media communication. It can thus be distinguished from HMC research regarding source orientation, seeing as HMC investigates users’ interaction with the technology itself as source, while CMC investigates human (or organizational) sources that are mediated by technology (Sundar 2020). As an intermediary, the VBA “carries messages between people [or organizations]” (Cambridge Dictionary n.d.-b). Thus, the VBA itself recedes into the background, while the companies responsible for the algorithms by which the VBA selects the content, and the presented content itself, become paramount for the assessment of the VBA as an intermediary. Hence, we ask:

RQ2

How do factors of the mediation context relate to the VBAs’ trustworthiness?

2.2.1 Reputation

Reputation cues of the source have been found to be among the most salient for source credibility and trustworthiness assessments (Koh and Sundar 2010; Metzger et al. 2010; Sundar 2008). Reputation can be defined as an attitudinal concept comprising a cognitive component (i.e., competence), and an affective component (i.e., sympathy; Schwaiger 2004, p. 49). Prior research shows a positive relationship between reputation and trustworthiness of endorsers or presenters (e.g., news anchors) (Chan-Olmsted and Cha 2008; Lis and Post 2013; Oyedeji 2007). The reputation heuristic is built on the psychological preference of known alternatives that are perceived as more valuable and credible than less familiar options (Gigerenzer and Todd 1999; Metzger et al. 2010; Sundar 2008). However, sources in the contemporary information environment have become multi-layered, and are sometimes missing, unidentifiable, or invisible, making it hard to allocate and use reputation cues to assess a source. Thus, when encountering news via channels in which information from multiple sources is presented, it is more difficult for users to remember the professional source of the information. Instead, they recall through which aggregator or platform they found the information (Kalogeropoulos and Newman 2017). This means that the brand of the professional source (i.e., the media company that produced the information) is less available in the mind of the user than the brand of the more proximal intermediary. Due to the fleeting, conversational presentation of information by VBAs, this effect might be even more pronounced. Thus, the brand and reputation of the developer companies (e.g., Google or Amazon) might be the more “visible” or available heuristic cue for users than the brand and reputation of the actual information source (e.g., BBC or CNN). 
Additionally, at the time of this study, VBAs often presented information without citing the (professional) source at all—a scenario we used for the current study. Hence, cues related to the original (professional) source are completely absent in this study design. Furthermore, VBAs had only recently entered the realm of news presentation at the time of this study. Therefore, users still lacked experience with VBAs as information intermediaries, meaning that they likely relied on their knowledge and prior experiences with the company they associate with the VBAs.

Based on research showing a positive relationship between reputation and trustworthiness of endorsers or presenters (e.g., news anchors) (Chan-Olmsted and Cha 2008; Lis and Post 2013; Oyedeji 2007) and the suggested importance of reputation cues for credibility and trustworthiness assessments (Metzger et al. 2010; Sundar 2008), we propose:

H3

Higher company reputation leads to higher (a) competence, (b) integrity, and (c) benevolence.

Additionally, it can be assumed that the cognitive component of reputation (i.e., competence) is more influential for the cognitive trustworthiness dimensions competence (H3a), and integrity (H3b), while the affective reputation component (i.e., sympathy) has a greater effect on the affective benevolence dimension (H3c).

2.2.2 Information credibility

When VBAs act as information intermediaries, the context in which they are assessed is that of information presentation. A large body of research suggests that recipients base their evaluations of content and source primarily on presentational elements (Fogg et al. 2003; Fogg and Tseng 1999; Metzger 2007; Metzger and Flanagin 2013; Sundar 2008). Thus, in this user-centered approach, the wider social and production context of the information that is presented by the intermediary recedes into the background, and we focus on the presentation level of the interaction. This means that the perceived credibility of the information plays an important role. In fact, it might be of greater importance for the assessment of the VBAs’ trustworthiness in this context than their interlocutor status.

Based on Appelman and Sundar (2016, p. 63), we define information credibility as “an individual’s judgment of the veracity of the content of communication”. Previous research has found a strong, positive relation between the assessment of the message (i.e., the information) and the source (Metzger et al. 2010). Especially in complex information environments, where heuristic rather than systematic cognitive processing helps individuals to cope with uncertainty (Gigerenzer and Todd 1999; Metzger et al. 2010; Sundar 2008; Taraborelli 2008), people base their assessments on surface (i.e., presentation and design) and reputation cues (Fogg et al. 2003; Fogg and Tseng 1999; Metzger 2007; Metzger and Flanagin 2013; Sundar 2008). Because the VBAs’ functionality to present news was still in its infancy at the time of this study, it is likely that the assessment of the information serves as a cue for the assessment of the information presenter (i.e., the VBA). In line with the large body of research suggesting a positive relationship between these assessments (e.g., Metzger et al. 2010), the following hypothesis is investigated:

H4

Higher information credibility leads to higher (a) competence, (b) integrity, and (c) benevolence.

Furthermore, we assume that the calculative assessment of the content has a stronger effect on the cognitive trustworthiness dimensions, competence (H4a), and integrity (H4b) than on the more affective trustworthiness dimension benevolence (H4c).

2.3 Trustor characteristics

Apart from the perceived nature of the trustee as an interlocutor and its role as an intermediary, the characteristics and experiences of the trustor influence the formation of trust toward technologies (Schaefer et al. 2016). Prior experience, in particular, is suggested to be significant for establishing trust in any trustee (Lee and See 2004; Mayer et al. 1995; McKnight et al. 2002; Rousseau et al. 1998; Schaefer et al. 2016). Experiences from previous interactions with a trustee, both positive and negative, affect the expectations that the trustor has toward the trustee and thus also the trustworthiness assessment of the trustee (Lankton et al. 2014). Because the news functionalities were still under development for the German VBA market at the time of the study, participants were unlikely to have had context-specific firsthand experiences. Hence, based on the heuristic principle of favoring familiar over unfamiliar options (Metzger et al. 2010; Sundar 2008), prior experience with VBAs might have a generally positive effect on VBA trustworthiness in this study.

Furthermore, the trustor’s disposition to trust (Mayer et al. 1995) as an inherent individual trait has been found to positively influence the trustworthiness assessment (Schaefer et al. 2016) and is therefore considered as a control variable in this study.

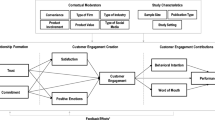

Table 1 summarizes the differing aspects regarding the functions of VBAs as interlocutors and intermediaries that we have discussed up to this point. Fig. 1 provides an overview of all predictors whose influence on VBA trustworthiness will be examined in this study. Importantly, the goal of this study is not to provide and examine an exhaustive model with all predictors relevant for trustworthiness but to consider the most relevant and most established variables from the two research traditions we apply to VBAs.

3 Method

3.1 Sample and procedure

This study is a secondary analysis using data from a larger project, the results of which have partly been published in Etzrodt (2021), Etzrodt and Engesser (2021), and Weidmüller (2022) (see Note 1). Data were gathered in late 2018 by conducting two demonstrational online surveys among the students (study 1) and employees (study 2) of a large German university. For this secondary analysis, both samples were merged and examined in one data set. Participants (N = 1288, mean age = 27, SD = 8.64, age range = 17–65, 52.5% male) were recruited through the university’s respective student and employee email lists and asked to participate anonymously (see Note 2). During the online survey, participants experienced seven quasi-interactions with either Amazon’s Alexa (n = 640) or Google Assistant (n = 648). These VBAs were chosen because of their leading positions in the voice assistant market (Kinsella and Mutchler 2020; Statista 2021). Still, while most participants said they knew the VBA they were randomly assigned to (82.5%), few had firsthand prior experience with the VBA by owning it (18.3%). This was anticipated due to the low adoption rate for VBAs in Germany at the time of the survey (Newman et al. 2018) and is one of the reasons why (a) participants were recruited among highly educated, young cohorts, which are more likely to adopt new technologies quickly (acatech and Körber-Stiftung 2019; Czaja et al. 2006; Elliot et al. 2014), and (b) the stimulus material was presented in the form of videos that can convey the VBAs’ voice and design. To simulate an interaction, participants had to activate each video during the survey by clicking a button containing a question to the VBA and then received the VBA’s prerecorded response in the form of a video on the next page of the survey. Throughout the survey, participants successively “asked” the VBA four “personal” and three news-related questions in this manner and received the VBA’s respective “answers”.

After being asked about their prior experience with VBAs, participants had four simulated personal quasi-interactions with the respective VBA: They saw four videos that contained the assigned VBA’s real answers to “personal” questions (e.g., “How are you?”). These allowed participants to familiarize themselves with the survey style and the respective VBA before they were asked to classify the VBA in terms of ontology. After that, they saw three prerecorded videos in which the respective VBA presented news about the implementation of the General Data Protection Regulation (GDPR), a topic that was chosen due to its societal relevance in Germany at the time of the study. The news-related videos contained information from real news of quality German media, which the VBAs were manipulated (see Note 3) to present verbally and without citing the original news source. The news content was the same for both VBAs. After the news-related quasi-interactions, participants were asked to assess the credibility of the information, the trustworthiness of the respective VBA, and the reputation of the respective developer company.

The videos and English transcripts are available in this study’s OSF repository.

3.2 Measures

The dependent variable of VBA trustworthiness was measured using the human-like trustworthiness scale from Weidmüller (2022), which is adapted to the information mediation context. Participants were asked to indicate how much they agreed with nine items, representing the dimensions competence, integrity, and benevolence, on a seven-point Likert scale (1 “do not agree at all”–7 “agree completely”). These dimensions have been shown to be applicable for technologies that exhibit social cues (e.g., the human-like voice interface of VBAs), thereby implying human-likeness (Guzman 2015; Hearst 2011; Lankton et al. 2015; Lombard and Xu 2021; McTear et al. 2016; Weidmüller 2022). Sample items include “is competent in providing information” (competence), “gives truthful information” (integrity), and “would do its best to help me if I needed help” (benevolence). An exploratory factor analysis (EFA) confirmed the three factors, which showed acceptable internal reliability (integrity: α = 0.86, competence: α = 0.84, benevolence: α = 0.78). Mean indices were calculated accordingly. On average, participants perceived both VBAs to have rather low integrity (Alexa: M = 3.82, SD = 1.44, Google Assistant: M = 3.93, SD = 1.47) and benevolence (Alexa: M = 3.36, SD = 1.59, Google Assistant: M = 3.35, SD = 1.58), but high competence (Alexa: M = 4.64, SD = 1.45, Google Assistant: M = 4.52, SD = 1.46) to present information. The mean differences between the VBAs were not significant.
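The reliability coefficients and mean indices reported above can be illustrated with a minimal Python sketch. It uses the standard formula for Cronbach's α and synthetic responses generated for illustration only; the function name `cronbach_alpha`, the simulated data, and the three-item setup are assumptions and do not reproduce the study's data or analysis software.

```python
import numpy as np

def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for an array of shape (n_respondents, k_items)."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    # Sum of the individual item variances (sample variance, ddof=1)
    sum_item_variances = items.var(axis=0, ddof=1).sum()
    # Variance of the summed scale score per respondent
    total_variance = items.sum(axis=1).var(ddof=1)
    return (k / (k - 1)) * (1.0 - sum_item_variances / total_variance)

# Synthetic responses (NOT the study data): three items on a 1-7 scale
# that share one latent trait plus item-specific noise.
rng = np.random.default_rng(0)
latent = rng.normal(4.0, 1.2, size=500)
noise = rng.normal(0.0, 0.8, size=(500, 3))
responses = np.clip(np.rint(latent[:, None] + noise), 1, 7)

alpha = cronbach_alpha(responses)       # internal reliability of the three items
mean_index = responses.mean(axis=1)     # one mean index per respondent
```

The mean index is simply the row-wise average of the items belonging to one dimension, which keeps the index on the original response scale.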

3.2.1 Interlocutor predictors

The ontological classification was measured using the 101-point object-subject classification scale from Etzrodt and Engesser (2021), who adapted Hubbard’s (2010) concept of personhood to Piaget’s (1974) object-subject dichotomy. The semantic differential consists of the two poles thing (1) and person (101), with 1 indicating the classification of the VBA as mere thing and 101 indicating the classification as mere person. The higher the value, the stronger the ontological classification of the VBA toward subject (M = 17.11; SD = 17.87). This broad scale allows for the expression of slight classification tendencies without forcing participants to over- or underestimate object- or subjecthood (Etzrodt 2021). Our participants’ classifications ranged from complete classification of the VBA as thing (31.6%) to complete classification of the VBA as person (0.2%). However, most participants classified the VBA as something in between, giving weight to the already discussed challenge that the VBAs’ perceptual hybridity poses for their ontological classification. The classification did not differ significantly between Alexa and Google Assistant.

Source attribution was exploratively measured by asking participants to indicate which source they thought the information originated from (answer categories: “from Alexa/Google Assistant”; “from another source”; “I don’t know”). Both VBAs were mostly perceived as an intermediary mediating information from another source (Alexa: 79.4%, Google Assistant: 51.4%), instead of being the source of the information. However, Google Assistant was significantly more often attributed the source status (32.6%) than Alexa (8.8%), Phi = −0.337 (p < 0.001).
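As a rough plausibility check of the reported Phi coefficient, the following sketch computes Phi for a 2×2 table. The cell counts are not reported in the text; they are reconstructed here from the reported percentages and group sizes under the assumptions that all participants in each group answered and that "I don't know" responses are left out of the table. The function name `phi_coefficient` is illustrative.

```python
import math

def phi_coefficient(a: float, b: float, c: float, d: float) -> float:
    """Phi for a 2x2 table with rows (a, b) and (c, d)."""
    numerator = a * d - b * c
    denominator = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
    return numerator / denominator

# Approximate cell counts reconstructed from reported percentages (assumption).
n_alexa, n_google = 640, 648
alexa_self, alexa_other = 0.088 * n_alexa, 0.794 * n_alexa
google_self, google_other = 0.326 * n_google, 0.514 * n_google

# Rows: VBA (Alexa, Google Assistant); columns: attributed source (VBA, other source)
phi = phi_coefficient(alexa_self, alexa_other, google_self, google_other)
```

The sign of Phi depends only on how the rows and columns are ordered; with this ordering, a negative value indicates that Alexa was attributed the source status less often than Google Assistant.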

3.2.2 Intermediary predictors

Company reputation regarding the developer company of the respective VBA (Google or Amazon) was measured via agreement on a five-point Likert scale (1 “do not agree at all”–5 “agree completely”) with nine items. These items were adapted and translated from Schwaiger’s (2004) repository of items that explain the two dimensions of brand reputation, sympathy (α = 0.77, M = 2.17, SD = 0.86) and competence (α = 0.61, M = 3.94, SD = 0.77). The factor structure was confirmed by an EFA after excluding one item due to low communality after extraction. Both developer companies received very low sympathy ratings (Amazon: M = 2.11, SD = 0.85, Google: M = 2.24, SD = 0.86), but very high competence ratings (Amazon: M = 3.86, SD = 0.77, Google: M = 4.04, SD = 0.77). However, Google was rated significantly higher than Amazon regarding both sympathy (t (1225) = 2.54, p < 0.05, Hedges’ g = 0.15) and competence (t (1147) = 3.94, p < 0.001, Hedges’ g = 0.23).
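The effect sizes above can be approximated from the reported means and standard deviations. The sketch below uses the common small-sample correction for Hedges' g; the per-group sample sizes are not reported, so the splits used here are hypothetical values chosen only to match the reported degrees of freedom (1225 and 1147).

```python
import math

def hedges_g(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Hedges' g from summary statistics of two independent groups."""
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    cohens_d = (m1 - m2) / pooled_sd
    # Small-sample bias correction (approximation commonly used for Hedges' g)
    correction = 1 - 3 / (4 * df - 1)
    return cohens_d * correction

# Google vs. Amazon; group sizes are hypothetical splits of df + 2.
g_sympathy = hedges_g(2.24, 0.86, 617, 2.11, 0.85, 610)
g_competence = hedges_g(4.04, 0.77, 579, 3.86, 0.77, 570)
```

With samples of this size the correction factor is very close to 1, so g and Cohen's d are practically identical here.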

To measure participants’ assessment of the presented information, participants were asked how well they thought eleven adjectives (e.g., “concise”, “credible”, “well-presented”) described the information from the three news-related quasi-interactions on a seven-point Likert scale (1 “describes very poorly”–7 “describes very well”). The items were adapted and translated into German from Appelman and Sundar’s (2016) scale to measure information credibility and showed high internal reliability (α = 0.91, M = 4.71, SD = 1.16). There was a small, significant difference in perceived information credibility between the two VBAs (Alexa: M = 4.78, SD = 1.12; Google Assistant: M = 4.64, SD = 1.19, t (1250) = −2.01, p < 0.05, Hedges’ g = 0.11).

3.2.3 Trustor characteristics

Participants’ prior experience with the VBA was assessed by asking them at the beginning of the survey whether they owned the VBA they were randomly assigned to. Disposition to trust others was measured by using three items (Beierlein et al. 2012) that participants answered on a five-point Likert scale (1 “do not agree at all”–5 “agree completely”; α = 0.75, M = 3.58, SD = 0.83). Demographic variables included age and gender.

4 Results

To investigate and compare the effect of predictors pertaining to the VBA as interlocutor and intermediary on the trustworthiness assessment, we conducted hierarchical multiple regression analyses for each of the three dimensions of trustworthiness as dependent variables. The results for each step of these regression analyses are displayed in Table 2.
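The logic of a hierarchical (blockwise) regression can be sketched as follows: predictor blocks are entered cumulatively, and the change in R² at each step is attributed to the block added last. The example uses ordinary least squares via NumPy on synthetic data; the variable names, coefficients, and block sizes are illustrative assumptions and do not correspond to the study's data or software.

```python
import numpy as np

def r_squared(X: np.ndarray, y: np.ndarray) -> float:
    """R^2 of an ordinary least squares fit with an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    ss_res = residuals @ residuals
    ss_tot = ((y - y.mean()) ** 2).sum()
    return 1.0 - ss_res / ss_tot

# Synthetic stand-ins (NOT the study data) for the three predictor blocks.
rng = np.random.default_rng(42)
n = 1000
trustor = rng.normal(size=(n, 2))        # e.g., disposition to trust, age
interlocutor = rng.normal(size=(n, 2))   # e.g., classification, source attribution
intermediary = rng.normal(size=(n, 3))   # e.g., sympathy, competence, credibility
y = (0.2 * trustor[:, 0] + 0.3 * interlocutor[:, 0]
     + 0.6 * intermediary[:, 2] + rng.normal(size=n))

# Enter the blocks cumulatively: step 1, step 2, step 3.
blocks = [trustor, interlocutor, intermediary]
r2_steps = []
for step in range(1, len(blocks) + 1):
    X = np.column_stack(blocks[:step])
    r2_steps.append(r_squared(X, y))

# Change in R^2 contributed by each newly entered block.
delta_r2 = np.diff([0.0] + r2_steps)
```

Because each step nests the previous one, R² cannot decrease from step to step, and the changes in R² sum to the R² of the full model.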

4.1 Baseline model: trustor characteristics (step 1)

The participants’ (i.e., trustor) characteristics were entered into the regression first to form the baseline model. Additionally, this step included the experimental group (i.e., which VBA the participants were assigned) to control for the slight differences in appearance and voice between the two VBAs used in this study. The baseline model explained 2% of variance in competence, 5% of variance in integrity, and 6.9% of variance in benevolence. The stronger participants’ general disposition to trust, the more trustworthy they assessed the VBA to be on all three dimensions. This was the only significant predictor of VBA competence in this model. The other participant characteristics only affected the dimensions integrity and benevolence: prior experience with a VBA increased the ratings; higher age decreased the ratings; female participants assessed the VBAs as having less integrity and benevolence. The VBA itself (Google Assistant or Alexa) did not affect any of the trustworthiness dimensions in a significant way.

4.2 Interlocutor model (step 2)

Participants’ ontological classification of, and source attribution to, the VBA were entered into the regression in the second step. While an increase in the classification toward subject significantly heightened the assessment and was the strongest predictor in this model for all three dimensions of trustworthiness, source attribution did not have any effect. The effects of the participant characteristics remained the same. Including the interlocutor predictors in the regression added an explained variance of 6.1% in VBA competence, 6.2% in VBA integrity, and 8.7% in VBA benevolence to the model.

4.3 Full model: interlocutor plus intermediary (step 3)

The integration of predictors pertaining to the intermediary function of the VBA in the third regression step added 32.2% of explained variance in competence, 31.2% in integrity, and 11.3% in benevolence. Hence, this set of predictors explains the largest portion of variance in VBA trustworthiness in this study. Nonetheless, the ontological classification maintained its effect on all dimensions of trustworthiness, even though it lost some of its strength as a predictor and was exceeded by the intermediary predictors’ effects: company sympathy exceeded the impact of the classification for all trustworthiness dimensions; company competence and information credibility exceeded the impact of the classification on competence and integrity. In fact, information credibility proved to be the strongest predictor of VBA competence and integrity. In contrast, although information credibility also affected the assessment of the VBAs’ benevolence, it was only the third most influential predictor for this dimension, while the company’s sympathy and the VBAs’ ontological classification were the strongest predictors.

Among the participant characteristics, only disposition to trust significantly affected VBA trustworthiness in all dimensions in a positive way. The effect of prior experience on integrity and benevolence from steps 1 and 2 of the regression ceased to be significant when the intermediary predictors were entered into the model. This may either be explained by the fact that VBAs had only begun to implement news functionalities at the time of the study, so that participants’ prior experiences were likely not specific to the news context, or hint at a mediating effect of the newly included intermediary predictors. The negative effect of higher age on integrity and benevolence persisted; the more negative assessment by female participants persisted only for benevolence but ceased for integrity.

In total, all predictors explained 39.7% of variance in competence, 41.7% in integrity, and 26.2% in benevolence. The inclusion of both interlocutor and intermediary predictors (1) revealed a significant rise in explained variance for all trustworthiness dimensions and (2) demonstrated that the ontological classification of VBAs toward subject remained a relevant predictor even in this mediation context. However, by comparing the sum of squared semi-partial correlations (∑sr²) of interlocutor and intermediary predictors for each trustworthiness dimension, we can conclude that the intermediary predictors explained more variance in competence (∑sr² = 23.54), integrity (∑sr² = 21.35), and benevolence (∑sr² = 7.86) than the interlocutor predictors (competence: ∑sr² = 1.36; integrity: ∑sr² = 1.04; benevolence: ∑sr² = 4.00). Hence, the context-related predictors were of greater importance for the trustworthiness assessment in this study than the VBAs’ perceived nature. Still, examining the squared semi-partial correlations also revealed that combining both sets of predictors (and including trustor characteristics) explained an additional 13.73% of variance in competence, 18.13% in integrity, and 10.52% in benevolence.
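The comparison above can be retraced with simple arithmetic on the reported percentages. The sketch below assumes one particular reading of the text: that the "additional" variance is the portion shared among predictors, so that total R² minus the shared portion minus the two reported sums leaves a remainder that would be attributable to trustor characteristics alone. This reading, the dictionary layout, and the function name `decompose` are assumptions for illustration, not part of the reported analysis.

```python
# Values as reported in the text (percent of variance).
reported = {
    "competence": {"r2_total": 39.7, "intermediary": 23.54, "interlocutor": 1.36, "shared": 13.73},
    "integrity": {"r2_total": 41.7, "intermediary": 21.35, "interlocutor": 1.04, "shared": 18.13},
    "benevolence": {"r2_total": 26.2, "intermediary": 7.86, "interlocutor": 4.00, "shared": 10.52},
}

def decompose(values: dict) -> dict:
    """Summarize unique contributions of the two predictor sets for one dimension."""
    unique_two_sets = values["intermediary"] + values["interlocutor"]
    # Remainder under the assumed reading: not shared and not unique to either set.
    remainder = values["r2_total"] - values["shared"] - unique_two_sets
    return {
        "unique_two_sets": round(unique_two_sets, 2),
        "intermediary_minus_interlocutor": round(
            values["intermediary"] - values["interlocutor"], 2
        ),
        "remainder": round(remainder, 2),
    }

decomposition = {dimension: decompose(values) for dimension, values in reported.items()}
```

A positive `intermediary_minus_interlocutor` value for a dimension corresponds to the statement that the intermediary predictors uniquely explained more variance than the interlocutor predictors for that dimension.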

4.4 Hypotheses results

Results for the expected relationship between each predictor and each dimension of trustworthiness can be derived from the last step of the multiple regression, which contains all predictors. The ontological classification toward subject had a significant positive effect on all three trustworthiness dimensions, which means H1a must be rejected, but H1b and H1c are supported. Source attribution did not affect any trustworthiness dimension in a significant way, which means H2a, b, and c must be rejected. As expected with H3a, b, and c, both reputation dimensions were positively related to all trustworthiness dimensions. However, H3c can only partly be supported because company competence did not significantly affect benevolence. The squared semi-partial correlations also supported our assumptions that the sympathy reputation component would affect the trustworthiness dimensions integrity and benevolence more than the competence dimension, while this would be the other way around for the competence reputation component. H4a, b, and c were supported due to the significant positive effect of information credibility on all three trustworthiness dimensions. As expected, this predictor had a stronger effect on the competence dimension than on the integrity and benevolence dimensions. Results for all hypotheses are summarized in Table 3.

5 Discussion

When VBAs act as news presenters, they are interlocutors and intermediaries at the same time. Just like when human news anchors present news, users can only marginally retrace or check how the content was chosen for presentation. Therefore, users need to trust the news presenter, in this case the VBA, that the presented news originates from valid sources, is sufficiently comprehensive, or is simply reported truthfully. When VBAs act as news presenters, their elaborate human-like CUI displays affordances that trigger scripts of human-human interaction. Thus, their social cues and anthropomorphized design might be more salient to users and trigger evaluation heuristics connected to the VBA’s interlocutor status. However, classic intermediary heuristics such as the reputation of their affiliated company and the evaluation of the presented information might be of equal or greater importance for the context-related trustworthiness assessment than the VBAs’ interlocutor status. So far, studies have either focused on VBAs as interlocutors or as intermediaries. Yet, VBAs inhabit both functions simultaneously. Hence, this paper argues that insights from HMC, traditionally focusing on the interlocutor function, and from CMC, traditionally focusing on the intermediary function of machines, should be combined. We took a first step at demonstrating the merit of such a combination by investigating how predictors from both research fields affect an assessment that is relevant for both: trustworthiness.

5.1 Key results and implications

Most importantly, we found that the joint analysis of interlocutor and intermediary predictors substantially improved the model’s explanatory power. For the cognition-based trustworthiness dimensions, competence and integrity, the interlocutor predictors added a rather small percentage of variance in step 2 of the regression (less than 7%). By adding the intermediary predictors reputation and information credibility in step 3, both models gained over 30% of explained variance. Hence, interlocutor status, though still influential, receded to the background while context-specific predictors were of greater importance for these more cognitive assessment dimensions. In contrast, interlocutor (change in R² = 8.7%) and intermediary predictors (change in R² = 11.3%) added about the same amount of variance for the affective trustworthiness dimension benevolence. And even though the sum of squared semi-partial correlations was higher for intermediary than for interlocutor predictors for all three trustworthiness dimensions, including both sets of predictors in the models added a substantial amount of explained variance for all trustworthiness dimensions. These results demonstrate in a quantifiable way that (1) both interlocutor and intermediary predictors are relevant to understand people’s assessments of trustworthiness when VBAs act as news presenters, and (2) that different trustworthiness dimensions are affected differently by the predictors. Hence, our results also show that the three trustworthiness dimensions must be examined individually as each predictor had a unique relationship with each dimension.

The VBA’s interlocutor status constituted a significant predictor of trustworthiness across all three dimensions. We uncovered that the more the VBA’s classification leaned toward subject, the more trustworthy the VBA was deemed. This indicates that people apply heuristics to VBAs that they have developed in human-human interactions—at least when assessing human-like trustworthiness. However, source attribution did not affect trustworthiness. In our study, we chose a narrow concept of source orientation that examined the attribution of content to the VBA rather than social presence. The results indicate that this is less important for the development of trust than the VBA’s classification as more subject-like. Consequently, we encourage future studies to distinguish more explicitly between the narrow understanding of source attribution, which is directed at the source of content production, and the broad understanding, which assumes social presence and is thus more closely related to the ontological classification as subject. Therefore, the degree of ontological classification toward subject—rather than the mere attribution of source—needs to be considered when investigating machines that exhibit human-like cues even when they fulfill an intermediary function. However, the fact that the impact of the interlocutor status decreased when adding predictors that have proven influential in classic mediation research indicates that these predictors may be important mediators for the effect of interlocutor status on downstream assessments like trustworthiness. Not including them may result in an overestimation of the impact of anthropomorphized designs.

In fact, information credibility was the strongest predictor of VBA trustworthiness. While it predominantly affected the cognition-based trustworthiness dimensions competence and integrity, it still ranked as the third most influential predictor for the dimension benevolence. Its strong effect is potentially problematic seeing as previous studies uncovered that people usually do not check news they consume thoroughly (Metzger 2007). While we only used high-quality information in this study, in everyday news use, the sources that are available through VBAs are very diverse in quality and journalistic professionalism (Weidmüller et al. 2021). The second most important predictor of VBA trustworthiness was the company’s reputation: The company’s sympathy was particularly influential for the more affect-based trustworthiness dimension benevolence, and ranked second regarding integrity, which also contains affective facets. While the company’s competence only had a minor (albeit significant) effect on the VBAs’ benevolence and integrity, it affected VBA competence to a similar extent as the company’s sympathy. Reputation is thereby confirmed once more as an important heuristic for people to trust (Metzger et al. 2010; Sundar 2008). Remarkably, it does not appear to matter whether the companies built their reputation specifically regarding the news domain: In contrast to human news anchors, VBAs as artificial news presenters are affiliated with companies that classify as Big Tech companies (e.g., Amazon, Apple, Microsoft, Google). These companies have built their expertise in areas outside of the traditional media business. Hence, they do not necessarily adhere to journalistic standards in the way they develop their VBAs’ news selection algorithms. The strong effect of company reputation may in part be explained by most participants’ lack of direct experience with the VBAs prior to the study. This could be the reason why participants relied heavily on the company’s reputation from other domains as a heuristic to assess the associated VBA.

5.2 Limitations and directions for future research

The results from this study must be considered in light of certain limitations regarding the procedure and sample. The online survey mode with simulated interactions through pre-recorded videos was chosen to achieve high internal validity by excluding as many external influences (e.g., technical problems, different content presented) as possible, and to achieve a large sample size while reducing the cost and time of recruiting participants. It does not, however, resemble the natural interaction situation of users with smart speakers in their homes, or VBAs on their smartphones or other mobile devices, and thus comes at the cost of external validity. Additionally, this cross-sectional study and the regression analyses cannot provide proof of causality for our hypotheses, which need further validation. We encourage future research to replicate this study in a more natural interaction setting, potentially using a long-term study design.

Furthermore, most participants did not have direct prior experience with the VBAs as intermediaries, which might have led to an overestimation of the effect that company reputation has on the VBAs’ assessment. Due to the increasing diffusion of VBAs into everyday life, future studies can follow up on our results by using a more evenly distributed sample regarding prior experience with the VBAs. Because previous studies found that with increasing experience, people adapt their mental models, and thus their assessment of machines that exhibit social cues (Horstmann and Krämer 2019; Weidmüller 2022), we would expect an even stronger effect of the VBAs’ interlocutor status for experienced users, while company reputation might be less influential. Additionally, how VBAs present news has changed since our study: They now almost always cite the source from which the information they present originated. Examining how the reputation of the professional source and that of the associated company interact to influence the assessment of the VBA is therefore an exciting new direction for research.

The relative effect of interlocutor and intermediary predictors on the different trustworthiness dimensions should also be examined for other VBAs as well as other communicative machines that display similar, fewer, or more social cues, like virtual avatars, text-based chatbots, or social robots. Additionally, examining VBA trustworthiness in a more social interaction context (e.g., as a playmate) or when presenting other types of information where objectivity is less in focus than for news (e.g., playing a podcast) may yield different results. The interlocutor predictors might be more influential in such settings. Furthermore, other interlocutor predictors besides the explicit classification, such as the projection of subjecthood through a perceived similarity in thinking, feeling, or behaving, should be considered as well. It is important to note that the selected predictors in this study are by no means the only ones that may be relevant to the assessment of VBAs in this context. We chose these specifically due to their relevance to the respective research tradition. Although the interlocutor predictors alone accounted for only a small portion of the variance in trustworthiness in our study, the combination of both interlocutor and intermediary predictors substantially improved the explanatory power of the model. Thus, both sets of predictors should be included when examining VBAs as news presenters. Additional insights may be gained from mediation analyses, because results from the hierarchical regression analyses hinted at a mediating role of intermediary predictors in the relationship between interlocutor predictors and trustworthiness.

Furthermore, both sets of predictors were differently relevant for the assessment of different trustworthiness dimensions. To take these differences into account, HMC and CMC researchers can benefit from a detailed measurement of trustworthiness. Depending on the technology, it may also be important to include machine-like trustworthiness dimensions (i.e., reliability, functionality, helpfulness), or to consider a hybrid trustworthiness model (Weidmüller 2022) for which the effects of interlocutor and intermediary predictors might differ.

6 Conclusion

In conclusion, this study took a first step at demonstrating the merit of including both interlocutor and intermediary predictors to investigate VBAs that enable direct and mediated communication simultaneously. Therefore, we call for uniting both research traditions in boundary contexts like this. On the one hand, HMC can benefit from established trustworthiness predictors from mediated human-human interaction, especially when these artificial interlocutors engage in a mediating function. On the other hand, HMC’s sophisticated elaboration on a machine’s ontological classification can contribute to CMC research, which is increasingly dealing with media that are becoming interlocutors, such as intelligent voice-based assistants.

Notes

1. These previous publications focus on either the assessment of the VBAs’ trustworthiness or their ontological classification. In contrast, this paper is the first to investigate the relationship between the two concepts.

2. All participants had the opportunity to participate in a raffle of 30 vouchers worth 10 € each for a vendor of their choosing at the end of the survey. Participation in the raffle was voluntary. The contact data required for this purpose was stored separately from the survey responses of the respective participant and deleted (including all correspondence) after the raffle was completed.

3. We used the IFTTT (“If This Then That”) app to control the VBAs’ output when filming the videos.

References

acatech, & Körber-Stiftung (2019). TechnikRadar 2019: Was die Deutschen über Technik denken [Technology radar 2019: What Germans think about technology]. https://www.acatech.de/publikation/technikradar-2019/. Accessed 9 Jan 2020.

Ammari, T., Kaye, J., Tsai, J. Y., & Bentley, F. (2019). Music, search, and IoT: How people (really) use voice assistants. ACM Transactions on Computer-Human Interaction, 26(3), 1–28. https://doi.org/10.1145/3311956.

Appelman, A., & Sundar, S. S. (2016). Measuring message credibility: Construction and validation of an exclusive scale. Journalism & Mass Communication Quarterly, 93(1), 59–79. https://doi.org/10.1177/1077699015606057.

Banks, J. (2021). Of like mind: The (mostly) similar mentalizing of robots and humans. Technology, Mind, and Behavior. https://doi.org/10.1037/tmb0000025.

Beierlein, C., Kemper, C. J., Kovaleva, A., & Rammstedt, B. (2012). Kurzskala zur Messung des zwischenmenschlichen Vertrauens [Short scale for measuring interpersonal trust]. GESIS-Working Papers. https://nbn-resolving.org/urn:nbn:de:0168-ssoar-312126. Accessed 10 Oct 2017.

Blöbaum, B. (2016). Key factors in the process of trust. On the analysis of trust under digital conditions. In B. Blöbaum (Ed.), Trust and communication in a digitized world: Models and concepts of trust research (pp. 3–25). Cham: Springer. https://doi.org/10.1007/978-3-319-28059-2.

Broadbent, D. E. (1958). Perception and communication. Elmsford: Pergamon Press. https://doi.org/10.1037/10037-000.

Burgoon, J. K., Bonito, J. A., Bengtsson, B., Ramirez, A., Dunbar, N. E., & Miczo, N. (1999). Testing the interactivity model: Communication processes, partner assessments, and the quality of collaborative work. Journal of Management Information Systems, 16(3), 33–56. https://doi.org/10.1080/07421222.1999.11518255.

Calhoun, C. S., Bobko, P., Gallimore, J. J., & Lyons, J. B. (2019). Linking precursors of interpersonal trust to human-automation trust: An expanded typology and exploratory experiment. Journal of Trust Research, 9(1), 28–46. https://doi.org/10.1080/21515581.2019.1579730.

Cambridge Dictionary Interlocutor. https://dictionary.cambridge.org/dictionary/english/interlocutor. Accessed 19 Aug 2022.

Cambridge Dictionary Intermediary. https://dictionary.cambridge.org/dictionary/english/intermediary. Accessed 19 Aug 2022.

Chan-Olmsted, S. M., & Cha, J. (2008). Exploring the antecedents and effects of brand images for television news: An application of brand personality construct in a multichannel news environment. International Journal on Media Management, 10(1), 32–45. https://doi.org/10.1080/14241270701820481.

Colquitt, J. A., Scott, B. A., & LePine, J. A. (2007). Trust, trustworthiness, and trust propensity: A meta-analytic test of their unique relationships with risk taking and job performance. Journal of Applied Psychology, 92(4), 909–927. https://doi.org/10.1037/0021-9010.92.4.909.

Czaja, S. J., Charness, N., Fisk, A. D., Hertzog, C., Nair, S. N., Rogers, W. A., & Sharit, J. (2006). Factors predicting the use of technology: Findings from the center for research and education on aging and technology enhancement (CREATE). Psychology and Aging, 21(2), 333–352. https://doi.org/10.1037/0882-7974.21.2.333.

Deloitte (2018). Beyond touch: Voice-commerce 2030. https://www2.deloitte.com/de/de/pages/consumer-business/articles/sprachassistent.html. Accessed 20 Jan 2021.

Edwards, A. P. (2018). Animals, humans, and machines: Interactive implications of ontological classification. In A. L. Guzman (Ed.), Human-machine communication: Rethinking communication, technology, and ourselves (pp. 29–50). New York: Peter Lang. https://doi.org/10.3726/b14399.

Elliot, A. J., Mooney, C. J., Douthit, K. Z., & Lynch, M. F. (2014). Predictors of older adults’ technology use and its relationship to depressive symptoms and well-being. Journals of Gerontology, Series B: Psychological Sciences and Social Sciences, 69(5), 667–677. https://doi.org/10.1093/geronb/gbt109.

Etzrodt, K. (2021). The ontological classification of conversational agents. In A. Følstad, T. Araujo, S. Papadopoulos, E. L.-C. Law, E. Luger, M. Goodwin & P. B. Brandtzaeg (Eds.), Chatbot research and design (pp. 48–63). Cham: Springer. https://doi.org/10.1007/978-3-030-68288-0_4.

Etzrodt, K., & Engesser, S. (2021). Voice-based agents as personified things: Assimilation and accommodation as equilibration of doubt. Human-Machine Communication, 2, 57–79. https://doi.org/10.30658/hmc.2.3.

Fogg, B. J., & Tseng, H. (1999). The elements of computer credibility. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’99 (pp. 80–87). https://doi.org/10.1145/302979.303001.

Fogg, B. J., Soohoo, C., Danielson, D. R., Marable, L., Stanford, J., & Tauber, E. R. (2003). How do users evaluate the credibility of web sites?: A study with over 2,500 participants. In DUX ’03, Proceedings of the 2003 Conference on Designing for User Experiences (pp. 1–15). https://doi.org/10.1145/997078.997097.

Følstad, A., Nordheim, C. B., & Bjørkli, C. A. (2018). What makes users trust a chatbot for customer service? An exploratory interview study. In S. S. Bodrunova (Ed.), Internet Science: Proceedings of the 5th INSCI International Conference (Vol. 11193, pp. 194–208). Cham: Springer. https://doi.org/10.1007/978-3-030-01437-7.

Gambino, A., Fox, J., & Ratan, R. (2020). Building a stronger CASA: Extending the computers are social actors paradigm. Human-Machine Communication. https://doi.org/10.30658/hmc.1.5.

Garcia, M. P., Lopez, S. S., & Donis, H. (2018). Voice activated virtual assistants’ personality perceptions and desires: Comparing personality evaluation frameworks. In Proceedings of British HCI 2018. 32nd International BCS Human Computer Interaction Conference, Belfast. https://doi.org/10.14236/ewic/HCI2018.40.

Gefen, D., & Straub, D. W. (2004). Consumer trust in B2C e‑commerce and the importance of social presence: Experiments in e‑products and e‑services. Omega, 32(6), 407–424. https://doi.org/10.1016/j.omega.2004.01.006.

Gigerenzer, G., & Todd, P. M. (1999). Fast and frugal heuristics. The adaptive toolbox. In G. Gigerenzer & P. M. Todd (Eds.), Simple heuristics that make us smart (1st edn., pp. 3–36). Oxford: Oxford University Press.

Gong, L. (2008). How social is social responses to computers? The function of the degree of anthropomorphism in computer representations. Computers in Human Behavior, 24(4), 1494–1509. https://doi.org/10.1016/j.chb.2007.05.007.

Gunkel, D. J. (2020). An introduction to communication and artificial intelligence. Cambridge: Polity Press.

Guzman, A. L. (2015). Imagining the voice in the machine: The ontology of digital social agents. Chicago: University of Illinois.

Guzman, A. L. (2019). Voices in and of the machine: Source orientation toward mobile virtual assistants. Computers in Human Behavior, 90, 343–350. https://doi.org/10.1016/j.chb.2018.08.009.

Guzman, A. L. (2020). Ontological boundaries between humans and computers and the implications for human-machine communication. Human-Machine Communication, 1, 37–54. https://doi.org/10.30658/hmc.1.3.

Hancock, P. A., Billings, D. R., Schaefer, K. E., Chen, J. Y. C., de Visser, E. J., & Parasuraman, R. (2011). A meta-analysis of factors affecting trust in human-robot interaction. Human Factors: The Journal of the Human Factors and Ergonomics Society, 53(5), 517–527. https://doi.org/10.1177/0018720811417254.

Hearst, M. A. (2011). “Natural” search user interfaces. Communications of the ACM, 54(11), 60–67. https://doi.org/10.1145/2018396.2018414.

Horstmann, A. C., & Krämer, N. C. (2019). Great expectations? Relation of previous experiences with social robots in real life or in the media and expectancies based on qualitative and quantitative assessment. Frontiers in Psychology. https://doi.org/10.3389/fpsyg.2019.00939.

Hubbard, F. P. (2010). “Do androids dream?”: Personhood and intelligent artifacts. Temple Law Review, 83, 405–474. https://papers.ssrn.com/abstract=1725983. Accessed 26 Apr 2022.

Jia, H., Wu, M., & Sundar, S. S. (2022). Do we blame it on the machine? Task outcome and agency attribution in human-technology collaboration. In Hawaii International Conference on System Sciences. https://doi.org/10.24251/HICSS.2022.047.

Kahn, P. H. Jr., Reichert, A. L., Gary, H., Kanda, T., Ishiguro, H., Shen, S., Ruckert, J. H., & Gill, B. (2011). The new ontological category hypothesis in human-robot interaction. In Proceedings of the 6th International Conference on Human Robot Interaction (HRI) (pp. 159–160). https://doi.org/10.1145/1957656.1957710.

Kalogeropoulos, A., & Newman, N. (2017). “I saw the news on Facebook”—Brand attribution from distributed environments. Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/our-research/i-saw-news-facebook-brand-attribution-when-accessing-news-distributed-environments. Accessed 23 Mar 2018.

Kim, Y., & Sundar, S. S. (2012). Anthropomorphism of computers: Is it mindful or mindless? Computers in Human Behavior, 28(1), 241–250. https://doi.org/10.1016/j.chb.2011.09.006.

Kinsella, B., & Mutchler, A. (2020). Smart speaker consumer adoption report. voicebot.ai. https://research.voicebot.ai/report-list/smart-speaker-consumer-adoption-report-2020/. Accessed 12 May 2020.

Knote, R., Janson, A., Söllner, M., & Leimeister, J. M. (2019). Classifying smart personal assistants: An empirical cluster analysis. In Proceedings of the 52nd Hawaii International Conference on System Sciences (pp. 2024–2033).

Koh, Y. J., & Sundar, S. S. (2010). Effects of specialization in computers, web sites, and web agents on e‑commerce trust. International Journal of Human-Computer Studies, 68(12), 899–912. https://doi.org/10.1016/j.ijhcs.2010.08.002.

Kohring, M. (2019). Public trust in news media. In T. P. Vos & F. Hanusch (Eds.), The international encyclopedia of journalism studies. Wiley online library. https://doi.org/10.1002/9781118841570.iejs0056.

Kulms, P., & Kopp, S. (2019). More human-likeness, more trust?: The effect of anthropomorphism on self-reported and behavioral trust in continued and interdependent human-agent cooperation. In Proceedings of Mensch und Computer 2019 (pp. 31–42). https://doi.org/10.1145/3340764.3340793.

Lankton, N., McKnight, D. H., & Thatcher, J. B. (2014). Incorporating trust-in-technology into expectation disconfirmation theory. The Journal of Strategic Information Systems, 23(2), 128–145. https://doi.org/10.1016/j.jsis.2013.09.001.

Lankton, N., McKnight, D. H., & Tripp, J. (2015). Technology, humanness, and trust: Rethinking trust in technology. Journal of the Association for Information Systems, 16(10), 880–918. https://doi.org/10.17705/1jais.00411.

Lee, J. D., & See, K. A. (2004). Trust in automation: Designing for appropriate reliance. Human Factors, 46(1), 50–80. https://doi.org/10.1518/hfes.46.1.50.30392.

Lis, B., & Post, M. (2013). What’s on TV? The impact of brand image and celebrity credibility on television consumption from an ingredient branding perspective. International Journal on Media Management, 15(4), 229–244. https://doi.org/10.1080/14241277.2013.863099.

Liu, B. (2021). In AI we trust? Effects of agency locus and transparency on uncertainty reduction in human–AI interaction. Journal of Computer-Mediated Communication, 26(6), 384–402. https://doi.org/10.1093/jcmc/zmab013.

Lombard, M., & Ditton, T. (1997). At the heart of it all: The concept of presence. Journal of Computer-Mediated Communication. https://doi.org/10.1111/j.1083-6101.1997.tb00072.x.

Lombard, M., & Xu, K. (2021). Social responses to media technologies in the 21st century: The media are social actors paradigm. Human-Machine Communication, 2, 29–55. https://doi.org/10.30658/hmc.2.2.

Lyons, K. (2020). Amazon’s Alexa gets a new longform speaking style. The Verge. https://www.theverge.com/2020/4/16/21224141/amazon-alexa-long-form-speaking-polly-ai-voice (Created 16 Apr 2020). Accessed 23 Aug 2020.

Mayer, R. C., Davis, J. H., & Schoorman, F. D. (1995). An integrative model of organizational trust. The Academy of Management Review, 20(3), 709. https://doi.org/10.2307/258792.

McKnight, D. H., Choudhury, V., & Kacmar, C. (2002). Developing and validating trust measures for e‑commerce: An integrative typology. Information Systems Research, 13(3), 334–359. https://doi.org/10.1287/isre.13.3.334.81.

McKnight, D. H., Carter, M., Thatcher, J. B., & Clay, P. F. (2011). Trust in a specific technology: An investigation of its components and measures. ACM Transactions on Management Information Systems, 2(2), 1–25. https://doi.org/10.1145/1985347.1985353.

McTear, M., Callejas, Z., & Barres, D. G. (2016). The conversational interface: Talking to smart devices. Cham: Springer.

Metzger, M. J. (2007). Making sense of credibility on the web: Models for evaluating online information and recommendations for future research. Journal of the American Society for Information Science and Technology, 58(13), 2078–2091. https://doi.org/10.1002/asi.20672.

Metzger, M. J., & Flanagin, A. J. (2013). Credibility and trust of information in online environments: The use of cognitive heuristics. Journal of Pragmatics, 59, 210–220. https://doi.org/10.1016/j.pragma.2013.07.012.

Metzger, M. J., Flanagin, A. J., Eyal, K., Lemus, D. R., & McCann, R. M. (2003). Credibility for the 21st century: Integrating perspectives on source, message, and media credibility in the contemporary media environment. Annals of the International Communication Association, 27(1), 293–335. https://doi.org/10.1080/23808985.2003.11679029.

Metzger, M. J., Flanagin, A. J., & Medders, R. B. (2010). Social and heuristic approaches to credibility evaluation online. Journal of Communication, 60(3), 413–439. https://doi.org/10.1111/j.1460-2466.2010.01488.x.

Moon, Y., Kim, K. J., & Shin, D.-H. (2016). Voices of the internet of things: An exploration of multiple voice effects in smart homes. In N. Streitz & P. Markopoulos (Eds.), Distributed, ambient and pervasive interactions (Vol. 9749, pp. 270–278). Cham: Springer. https://doi.org/10.1007/978-3-319-39862-4_25.

Nass, C., & Moon, Y. (2000). Machines and mindlessness: Social responses to computers. Journal of Social Issues, 56(1), 81–103. https://doi.org/10.1111/0022-4537.00153.

Nass, C., Moon, Y., & Carney, P. (1999). Are people polite to computers? Responses to computer-based interviewing systems. Journal of Applied Social Psychology, 29(5), 1093–1109. https://doi.org/10.1111/j.1559-1816.1999.tb00142.x.

Natale, S. (2021). To believe in Siri: A critical analysis of voice assistants. In S. Natale (Ed.), Deceitful media: Artificial intelligence and social life after the Turing test (pp. 107–126). Oxford: Oxford University Press. https://doi.org/10.1093/oso/9780190080365.003.0007.

Natale, S., & Cooke, H. (2021). Browsing with Alexa: Interrogating the impact of voice assistants as web interfaces. Media, Culture & Society, 43(6), 1000–1016. https://doi.org/10.1177/0163443720983295.

Natarajan, M., & Gombolay, M. (2020). Effects of anthropomorphism and accountability on trust in human robot interaction. In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction (pp. 33–42). https://doi.org/10.1145/3319502.3374839.

Newman, N., Fletcher, R., Kalogeropoulos, A., Levy, D. A. L., & Nielsen, R. K. (2018). Digital news report 2018. Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/sites/default/files/digital-news-report-2018.pdf. Accessed 13 Apr 2019.

Newman, N., Fletcher, R., Kalogeropoulos, A., & Nielsen, R. K. (2019). Digital news report 2019. Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/sites/default/files/2019-06/DNR_2019_FINAL_0.pdf. Accessed 24 May 2020.

Oyedeji, T. A. (2007). The relation between the customer-based brand equity of media outlets and their media channel credibility: An exploratory study. International Journal on Media Management, 9(3), 116–125. https://doi.org/10.1080/14241270701521725.

Piaget, J. (1974). Abriß der genetischen Epistemologie [The principles of genetic epistemology]. Olten: Walter.

Pitardi, V., & Marriott, H. R. (2021). Alexa, she’s not human but… Unveiling the drivers of consumers’ trust in voice-based artificial intelligence. Psychology & Marketing, 38(4), 626–642. https://doi.org/10.1002/mar.21457.

Porter, J. (2019). Alexa’s news-reading voice just got a lot more professional. The Verge. https://www.theverge.com/2019/1/16/18185258/alexa-news-voice-newscaster-news-anchor-us-launch (Created 16 Jan 2019). Accessed 14 Feb 2019.

Reeves, B., & Nass, C. (1996). The media equation: How people treat computers, television, and new media like real people and places. Cambridge: Cambridge University Press.

Reichow, V. D., & Schröter, C. (2020). Audioangebote und ihre Nutzungsrepertoires erweitern sich [Audio offerings and their repertoires of use are expanding]. Media Perspektiven, 9, 501–515.

Rheu, M., Shin, J. Y., Peng, W., & Huh-Yoo, J. (2021). Systematic review: Trust-building factors and implications for conversational agent design. International Journal of Human–Computer Interaction, 37(1), 81–96. https://doi.org/10.1080/10447318.2020.1807710.

Rousseau, D. M., Sitkin, S. B., Burt, R. S., & Camerer, C. (1998). Introduction to special topic forum: Not so different after all: A cross-discipline view of trust. The Academy of Management Review, 23(3), 393–404.

Schaefer, K. E., Chen, J. Y. C., Szalma, J. L., & Hancock, P. A. (2016). A meta-analysis of factors influencing the development of trust in automation: Implications for understanding autonomy in future systems. Human Factors: The Journal of the Human Factors and Ergonomics Society, 58(3), 377–400. https://doi.org/10.1177/0018720816634228.

Scharenborg, O. (2019). Reaching over the gap: Cross- and interdisciplinary research on human and automatic speech processing. http://homepage.tudelft.nl/f7h35/presentations/IS19_survey_Scharenborg.pdf. Accessed 23 Jan 2021.