Abstract

Background

The COVID-19 pandemic required clinicians to care for a disease with evolving characteristics while also adhering to care changes (e.g., physical distancing practices) that might lead to diagnostic errors (DEs).

Objective

To determine the frequency of DEs and their causes among patients hospitalized under investigation (PUI) for COVID-19.

Design

Retrospective cohort.

Setting

Eight medical centers affiliated with the Hospital Medicine ReEngineering Network (HOMERuN).

Target population

Adults hospitalized under investigation (PUI) for COVID-19 infection between February and July 2020.

Measurements

We randomly selected up to 8 cases per site per month for review, with each case reviewed by two clinicians to determine whether a DE (defined as a missed or delayed diagnosis) occurred, and whether any diagnostic process faults took place. We used bivariable statistics to compare patients with and without DE and multivariable models to determine which process faults or patient factors were associated with DEs.

Results

Two hundred and fifty-seven patient charts underwent review, of which 36 (14%) had a diagnostic error. Patients with and without DE were statistically similar in terms of socioeconomic factors, comorbidities, risk factors for COVID-19, COVID-19 test turnaround time, and eventual test positivity. The most common diagnostic process faults contributing to DE were problems with clinical assessment, testing choices, history taking, and physical examination (all p < 0.01). Diagnostic process faults arising from COVID-19-related policies and procedures were not associated with DE risk. Fourteen patients (35.9% of patients with errors and 5.4% overall) suffered harm or death due to diagnostic error.

Limitations

Results are limited by available documentation and do not capture communication between providers and patients.

Conclusion

Among PUI patients, DEs were common and not associated with pandemic-related care changes, suggesting the importance of more general diagnostic process gaps in error propagation.

INTRODUCTION

Diagnostic errors (DEs) are “the failure to (a) establish an accurate and timely explanation of the patient’s health problem(s) or (b) communicate that explanation to the patient.”1 Many factors contribute to diagnostic errors, but key among them are complex and fragmented care systems, the limited time available to providers trying to ascertain a firm diagnosis, and the work systems and cultures that impede improvements in diagnostic performance.2,3,4,5,6,7,8 In the hospital setting, work burden, patient acuity, and technology (such as electronic health records [EHRs] and multiple “alerting” systems9) all contribute.

In the early stages of the COVID-19 pandemic, these preexisting problems were exacerbated in ways that have yet to be fully elucidated.10 Shortages of personal protective equipment (PPE) and concerns about workforce preservation led hospitals to replace in-person visits with videoconferencing or telephone-based encounters.11,12,13,14,15,16,17 Hospital visitor restrictions impaired or delayed collaborative discussions with patients’ family members, potentially limiting clinicians’ ability to obtain thorough clinical histories. Changes in coverage models (e.g., internal medicine clinicians delivering critical care services18) altered the clinical expertise of physicians caring for COVID-19 patients. Data from our network suggested that half of the hospitalist leaders surveyed reported a missed or delayed non-COVID-19 diagnosis among patients under investigation (PUI) for COVID-19 infection. A similar proportion also reported missing COVID-19 as a diagnosis in patients admitted for other medical reasons,13 consistent with conceptual models published early in the pandemic.10

This study, undertaken at the height of the first wave of the COVID-19 pandemic, sought to estimate the prevalence of diagnostic errors among patients who were PUIs or had confirmed COVID-19 infection and to determine whether changes in health care policies and procedures during the pandemic might have contributed to these errors.

METHODS

Study Design

This was a retrospective multicenter cohort study of randomly selected patients admitted under investigation for COVID-19.

Sites and Subjects

This study was undertaken as a collaboration among eight academic centers participating in the Hospital Medicine ReEngineering Network19 that were already conducting diagnostic error case reviews as part of a larger research study.20 The sites represented a range of settings, including locations such as New York City that were more severely affected by the pandemic than others during our study period.

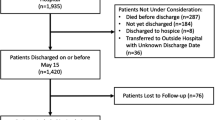

Patients for this study were admitted between February and July 2020 and were identified from infection control logs maintained at each site that listed patients’ initial COVID-19 status. Patients were considered for review if they had signs, symptoms, or exposures considered high risk for COVID-19 under Centers for Disease Control and Prevention definitions at the time of our study (for example, travel to a high-prevalence area, residence in a congregate-living setting, or loss of smell) and were either awaiting a COVID-19 test or had a negative test before hospitalization but persistent symptoms prompting an additional test. We excluded patients whose tests were obtained under universal screening programs (e.g., testing of all admitted patients regardless of signs or symptoms of COVID-19 infection). As in previous studies,21 we used a block randomization schema based on patient medical record numbers to select patients at random; sites were asked to review a minimum of 4 and up to 8 cases meeting these criteria per site per month.
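
The per-site, per-month selection rule can be sketched as a stratified random draw. The paper does not describe its MRN-based block randomization schema in detail, so this minimal sketch assumes, hypothetically, that each site-month is one block and that eligible MRNs within a block are shuffled with a seeded generator before the first 8 are taken. The field names and the `select_cases` helper are illustrative, and the sketch enforces only the upper limit of 8; meeting the minimum of 4 depends on how many eligible cases a site had.

```python
import random
from collections import defaultdict

MAX_PER_BLOCK = 8  # upper limit per site per month stated in the methods


def select_cases(cases, seed=2020, max_per_block=MAX_PER_BLOCK):
    """Randomly pick up to max_per_block eligible cases from each (site, month) block.

    cases: list of dicts with hypothetical keys "site", "month", and "mrn".
    Returns {(site, month): [selected MRNs]}. Blocks with fewer eligible
    cases than the limit contribute all of their cases.
    """
    blocks = defaultdict(list)
    for case in cases:
        blocks[(case["site"], case["month"])].append(case["mrn"])

    rng = random.Random(seed)  # fixed seed makes the draw reproducible for audit
    selected = {}
    for key in sorted(blocks):  # visit blocks in a stable order
        mrns = sorted(blocks[key])  # stable starting order before shuffling
        rng.shuffle(mrns)
        selected[key] = mrns[:max_per_block]
    return selected


# Example: site A has 12 eligible cases in April, site B only 3.
cases = [{"site": "A", "month": "2020-04", "mrn": f"A{i:03d}"} for i in range(12)]
cases += [{"site": "B", "month": "2020-04", "mrn": f"B{i:03d}"} for i in range(3)]
picked = select_cases(cases)
print({key: len(v) for key, v in picked.items()})  # {('A', '2020-04'): 8, ('B', '2020-04'): 3}
```

Seeding the generator means a coordinating center could regenerate the same selection later, which is one practical reason to prefer a documented, seeded draw over ad hoc chart picking.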

Adjudicator Training

Chart reviewers were identified and trained as part of our larger study, which was ongoing at the start of the pandemic. Each reviewer was first trained to identify diagnostic errors by reviewing at least 5 “gold standard” cases with expert reviewers and then by adjudicating sample cases in pairs and in groups drawn from all participating centers, to build expertise and to cross-check results within and across sites.

Group training was followed by review of 10 additional standard cases until we observed consistent agreement on diagnostic error determinations within and across sites, based on over-reads by coordinating center collaborators and through multisite webinar-based case reviews. Once consistency was achieved, we proceeded with adjudication of real cases, with each case being reviewed by two clinicians (physician, nurse practitioner, or physician assistant) active in inpatient care, each of whom was trained according to the protocol above. Finally, every 10th real case was overread by expert reviewers to ensure consistency in error determinations across sites.

Determination of Errors and Underlying Causes

Our two-reviewer process focused on data obtainable from charts, such as the reasons patients were tested, any COVID-19-specific symptoms that might modify diagnostic thinking (e.g., new-onset anosmia), and patient factors such as functional status.

Adjudicators examined, discussed, and entered data for each case jointly. As a result, each adjudication represented the shared viewpoint of two trained clinicians not connected to the case. In cases of disagreement, a third expert reviewer was employed to help reach a final determination.

Diagnostic errors, defined as missed or delayed diagnoses, were identified using the SAFER-Dx framework, modified for the inpatient setting as operationalized in a larger study (conducted by our group) of diagnostic errors in medical patients who died or were transferred to the ICU.20

Our review methodology used the framework of a “working diagnosis,” in which clinical thinking can rightly evolve over time and can be represented by patterns in diagnostic testing and empiric treatment. Reviewers examined the entire medical record, with particular focus on relating documentation to the results and timestamps for objective data such as vital sign records, laboratory tests, and orders. For example, if a diagnosis was not documented and did not lead to orders for its treatment (or for definitive diagnostic testing) until well after it was apparent based on laboratory findings, then this would be considered a diagnostic error.

If making a diagnosis required a timely procedure (such as urgent endoscopy for gastrointestinal bleeding), but that procedure could not be performed due to various system factors, we would have considered the event to represent a delay in diagnosis because an ideal health care system would be able to accommodate this procedure request. Finally, we granted some discretion to providers based on the context of the information available to clinicians at the time of documentation. This last standard was particularly applicable in our cases where COVID-19 infection was a consideration, as therapeutic and diagnostic approaches were rapidly evolving during our study time period.
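
The timestamp comparison used to recognize delays can be written as a simple screening rule, although in the study the final determination rested on paired reviewer judgment. This sketch assumes three hypothetical inputs: when objective data (such as a laboratory result) first made a diagnosis apparent, when the diagnosis was first documented or acted on with treatment or definitive testing, and a `tolerance` standing in for “well after”, since the paper gives no numeric threshold.

```python
from datetime import datetime, timedelta
from typing import Optional


def flag_possible_delay(apparent_at: datetime,
                        first_action_at: Optional[datetime],
                        tolerance: timedelta) -> bool:
    """Return True if a case should be examined as a possible missed or delayed diagnosis.

    apparent_at: when objective data first made the diagnosis apparent.
    first_action_at: earliest documentation, treatment order, or definitive test
        order for that diagnosis, or None if none occurred during the stay.
    tolerance: hypothetical allowance standing in for "well after".
    """
    if first_action_at is None:
        return True  # never documented or acted upon: candidate missed diagnosis
    return first_action_at - apparent_at > tolerance  # candidate delayed diagnosis


lab_result = datetime(2020, 4, 1, 8, 0)
print(flag_possible_delay(lab_result, lab_result + timedelta(hours=30), timedelta(hours=12)))  # True
```

A flag like this only surfaces candidate cases; reviewers still judged each one against the information available to clinicians at the time.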

Each case was also reviewed for diagnostic process faults using the Diagnostic Error Evaluation and Research (DEER) taxonomy2,22,23 framework, an approach useful for characterizing diagnostic processes regardless of whether or not a diagnostic error took place. DEER is composed of 9 major groupings, under which are more than 50 potential diagnostic process faults that represent various underlying causes of diagnostic errors. Because DEER has the greatest evidence for use in primary care settings and has not been applied to inpatient care, we expanded the taxonomy to include inpatient scenarios (such as transfers from outside hospitals); these factors were added to major headings and analyzed as additional factors.24 We also generated a set of diagnostic process faults related to COVID-19 care (Appendix Table 7). COVID-19-specific diagnostic process factors were generated based on expert input from our collaborative group and included faults such as “physical examination limitations due to medical distancing.” COVID-19-related diagnostic process faults were then aggregated into a separate grouping as a new predictor in analytic models (described below). Finally, each case with an error was rated in terms of its harm to the patient using the NCC-MERP harm rating scale.25
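
Aggregating individual process faults into major groupings, with COVID-19-specific faults pooled into their own grouping, yields one binary predictor per grouping for the analytic models. In this sketch the fault codes and the mapping are hypothetical (the actual items are listed in Appendix Table 7); only the grouping names loosely mirror those reported in the paper.

```python
# Hypothetical fault codes mapped to DEER-style major groupings; the real items
# are in Appendix Table 7. "covid19_related" is the separate COVID-19 grouping.
FAULT_TO_GROUPING = {
    "history_critical_item_not_elicited": "history_taking",
    "exam_finding_missed": "physical_exam",
    "test_result_misinterpreted": "testing",
    "followup_of_abnormal_result_delayed": "followup_monitoring",
    "handoff_information_lost": "teamwork",
    "diagnosis_not_considered": "clinical_assessment",
    "exam_limited_by_medical_distancing": "covid19_related",
}

GROUPINGS = sorted(set(FAULT_TO_GROUPING.values()))


def grouping_indicators(case_faults):
    """Collapse one case's fault codes into 0/1 indicators, one per grouping.

    Unknown codes raise KeyError so that unmapped faults are not silently dropped.
    """
    present = {FAULT_TO_GROUPING[code] for code in case_faults}
    return {grouping: int(grouping in present) for grouping in GROUPINGS}


print(grouping_indicators(["test_result_misinterpreted", "exam_limited_by_medical_distancing"]))
```

Collapsing to "any fault in the grouping" keeps the number of model terms small, which matters with only 36 cases with DE.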

Outcomes and Predictors

The primary outcome of this study was the presence or absence of a diagnostic error, defined as any missed or delayed diagnosis during the index hospitalization. Secondary analyses examined harms due to diagnostic errors. Key predictors tested in primary analyses were those with statistical association with DE in unadjusted analyses and included all major groupings of the DEER taxonomy, including COVID-19-related process faults.

Analytic Approach

We first characterized patients with and without DE using bivariate statistics. Differences in characteristics between patients with and without DE were assessed using either the chi-square test or Fisher exact test for categorical variables and Wilcoxon rank sum test for continuous variables.
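
As an illustration of the categorical comparisons, the sketch below implements a two-sided Fisher exact test and a Pearson chi-square test for a 2×2 table (with or without DE, by presence or absence of a characteristic) in plain Python. The choice between them follows the common rule of thumb of using Fisher's test when any expected cell count is below 5; the paper does not say which rule it applied, so treat that as an assumption. The chi-square version omits a continuity correction, whereas R's `chisq.test` applies Yates' correction to 2×2 tables by default, so p-values can differ slightly. The Wilcoxon rank sum test for continuous variables is not shown.

```python
from math import comb, erfc, sqrt


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same margins
    that is no more likely than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    denom = comb(n, col1)

    def prob(x):  # P(top-left cell == x | fixed margins)
        return comb(row1, x) * comb(n - row1, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - (n - row1)), min(row1, col1)
    # the small relative tolerance keeps floating-point ties from being dropped
    return min(1.0, sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7)))


def compare_proportions(a, b, c, d):
    """Return (test name, p-value): Fisher if any expected count < 5, else chi-square."""
    n = a + b + c + d
    rows, cols = (a + b, c + d), (a + c, b + d)
    if min(r * k / n for r in rows for k in cols) < 5:
        return "fisher", fisher_exact_two_sided(a, b, c, d)
    chi2 = n * (a * d - b * c) ** 2 / (rows[0] * rows[1] * cols[0] * cols[1])
    return "chi-square", erfc(sqrt(chi2 / 2))  # upper tail of chi-square with 1 df


# Hypothetical table: rows = DE yes/no, columns = characteristic present/absent.
print(compare_proportions(6, 30, 12, 209))
```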

Our primary analyses, based on our hypothesis that DEER process faults would be associated with diagnostic errors, used multivariable logistic regression models to assess the adjusted associations between DEER process fault categories and diagnostic errors. Prevalence ratios comparing patients with DE versus those without were computed from these logistic models, as were confounder-adjusted attributable fractions, i.e., the proportion of DEs that would have been eliminated if that process fault were eliminated.26,27 Because the DEER major grouping for clinical assessment faults was highly correlated with diagnostic error (R2 = 0.70, accuracy = 0.91, sensitivity = 0.89, specificity = 0.91), we also carried out exploratory models examining other DEER factors associated with DE while excluding the clinical assessment grouping. We tested for trends in error rates over the study period using the Cochran-Armitage test for trend. Finally, we calculated descriptive statistics for NCC-MERP harm ratings; our sample size and error rate were too low to support multivariable models of the association between DEER factors and harms due to diagnostic errors. All analyses were conducted using R Statistical Software (v4.1.2; R Core Team 2021).
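
The prevalence ratio and confounder-adjusted attributable fraction can be illustrated with marginal standardization: fit a logistic model, predict each patient's probability of DE with the process fault set present and then absent, and average. The ratio of those averages is a prevalence ratio, and one minus the ratio of the fault-absent average to the observed DE prevalence is the attributable fraction. This is a minimal numpy sketch of the idea, not a port of the prLogistic or AF packages the study used (which also report confidence intervals, omitted here); the function names, data layout, and data are hypothetical.

```python
import numpy as np


def predict(X, beta):
    """Logistic model probabilities for design matrix X."""
    return 1.0 / (1.0 + np.exp(-X @ beta))


def fit_logistic(X, y, n_iter=25):
    """Fit logistic regression by Newton-Raphson; X must include an intercept column."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = predict(X, beta)
        information = X.T @ (X * (p * (1.0 - p))[:, None])  # X' W X
        beta = beta + np.linalg.solve(information, X.T @ (y - p))
    return beta


def standardized_pr_and_af(X, y, exposure_col):
    """Marginally standardized prevalence ratio and attributable fraction for one binary exposure."""
    beta = fit_logistic(X, y)
    X_present, X_absent = X.copy(), X.copy()
    X_present[:, exposure_col] = 1.0  # counterfactual: fault present for everyone
    X_absent[:, exposure_col] = 0.0   # counterfactual: fault eliminated for everyone
    p_present = predict(X_present, beta).mean()
    p_absent = predict(X_absent, beta).mean()
    prevalence_ratio = p_present / p_absent
    attributable_fraction = 1.0 - p_absent / y.mean()  # share of DEs removed if the fault were eliminated
    return prevalence_ratio, attributable_fraction


# Hypothetical data: 20 patients with the fault (10 with DE), 80 without (4 with DE).
fault = np.array([1.0] * 20 + [0.0] * 80)
de = np.array([1.0] * 10 + [0.0] * 10 + [1.0] * 4 + [0.0] * 76)
X = np.column_stack([np.ones(100), fault])
pr, af = standardized_pr_and_af(X, de, exposure_col=1)
print(round(pr, 2), round(af, 3))  # 10.0 0.643
```

With only an intercept and the fault indicator, the standardized estimates reduce to the crude ratios; adjustment matters once covariate columns (other fault groupings, patient factors) are added to `X`.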

RESULTS

Patient Characteristics and COVID-19 Illness Features

Two hundred and fifty-seven patients were randomly selected for inclusion in our study. Of these, 36 (14%) had a diagnostic error. Patients with and without diagnostic errors were statistically similar in terms of age, race, ethnicity, primary language, comorbidities, functional status, and social determinants of health, although chart documentation more often noted symptoms of delirium or social isolation among patients with errors (Table 1). Patients with and without DE also had similar proportions of clinical features that might have influenced the diagnosis of COVID-19 or of non-COVID-19 reasons for hospitalization, including exposure history, travel history, presenting symptoms, severity of illness (e.g., need for mechanical ventilation), and COVID-19 test turnaround times (Table 2).

DEER Taxonomy Features Associated with Diagnostic Errors

The components of the DEER taxonomy are listed in Appendix Table 7. In unadjusted analyses (Table 3), faults in history taking, physical examination, test ordering/performance/interpretation, patient follow-up and monitoring, teamwork, and clinical assessment were significantly associated with diagnostic errors (all p < 0.01). DEER diagnostic process faults related to COVID-19 (e.g., failures or delays in eliciting a critical piece of history or physical exam finding, erroneous clinician interpretation of a test related to COVID-19, or failure or delay in recognizing or acting upon urgent conditions or complications due to medical distancing) were significantly more frequent among patients with DE (29.5% vs. 9.2%, p < 0.01). In models adjusting for all potential diagnostic process faults (Table 4), only clinical assessment problems remained significantly associated with diagnostic errors, with a high attributable fraction (78.79% of DEs potentially eliminated if clinical assessment problems were eliminated, 95% CI 55.6–102.0). In models without clinical assessment as a covariate, only three non-COVID-19-related process faults had statistically significant attributable fractions (history taking, physical examination, and test ordering, performance, or interpretation), with estimated reductions in diagnostic error rates of 20% to 37% if these process faults were eliminated entirely.

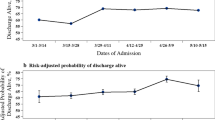

Error Rates Over Time (Table 5)

Diagnostic error rates rose slightly in the last 2 months of the study period (June and July 2020), but this trend was not statistically significant (p = 0.06).
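
To show how a trend test of this kind works, the sketch below computes the Cochran-Armitage statistic for DE proportions across ordered months, scoring February through July 2020 as 0 to 5. The monthly counts in the example are hypothetical (the observed rates are in Table 5). In R, `prop.trend.test` in the stats package provides a chi-squared test for trend in proportions.

```python
from math import erfc, sqrt


def cochran_armitage(events, totals, scores=None):
    """Two-sided Cochran-Armitage test for a linear trend in proportions.

    events[i] of totals[i] patients in ordered group i (e.g., a study month) had a DE.
    scores default to 0, 1, 2, ... (equally spaced months).
    Returns (z, p_value) using the normal approximation.
    """
    if scores is None:
        scores = list(range(len(events)))
    n = sum(totals)
    p_bar = sum(events) / n  # pooled DE proportion
    sum_ns = sum(m * s for m, s in zip(totals, scores))
    t_stat = sum(x * s for x, s in zip(events, scores)) - p_bar * sum_ns
    variance = p_bar * (1 - p_bar) * (
        sum(m * s * s for m, s in zip(totals, scores)) - sum_ns ** 2 / n
    )
    z = t_stat / sqrt(variance)
    return z, erfc(abs(z) / sqrt(2))  # two-sided tail of the standard normal


# Hypothetical monthly counts, February (score 0) through July 2020 (score 5).
z, p = cochran_armitage(events=[3, 4, 4, 5, 7, 8], totals=[40] * 6)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Equally spaced scores test for a steady linear change; a rise concentrated in the final months, as described above, is only partly captured by a linear trend.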

Harms Due to Diagnostic Errors (Table 6)

According to the NCC-MERP harm rating scale, the errors of 14 patients (35.9% of patients with errors) produced temporary or permanent harm or led to death, suggesting that harmful errors were present in 5.4% of patients admitted with suspected COVID-19.
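
The harm summary is a count over NCC MERP index categories. This sketch assumes that “temporary or permanent harm or death” corresponds to categories E through I of the index (E through H denote harm, I denotes death); the paper does not list the category cut-point it used, and the ratings in the example are hypothetical. `share_of_rated_errors` divides by the number of rated errors, so it equals a share of patients only if each patient contributes exactly one rating.

```python
# NCC MERP index categories A-I: E-H are "error, harm" and I is "error, death".
HARMFUL_OR_FATAL = frozenset("EFGHI")


def summarize_harm(ratings, cohort_size):
    """Summarize NCC MERP category ratings (one letter per rated error)."""
    harmful = sum(1 for rating in ratings if rating in HARMFUL_OR_FATAL)
    return {
        "harmful_or_fatal": harmful,
        "share_of_rated_errors": harmful / len(ratings),
        "share_of_cohort": harmful / cohort_size,
    }


# Hypothetical ratings for six rated errors in a cohort of 50 patients.
print(summarize_harm(["B", "C", "D", "E", "G", "I"], cohort_size=50))
# {'harmful_or_fatal': 3, 'share_of_rated_errors': 0.5, 'share_of_cohort': 0.06}
```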

DISCUSSION

In this multicenter retrospective study of patients admitted for evaluation of potential COVID-19 infection, diagnostic errors were common. Diagnostic errors were not associated with clinical features of or risk factors for COVID-19 infection, or with COVID-19 test turnaround times. In contrast, DEs were associated with more general diagnostic process faults, such as problems with history taking; physical examination; test ordering, performance, or interpretation; and patient follow-up and monitoring. These factors were closely related to clinical assessment, which was the most common source of DEs. Interestingly, diagnostic process faults related to COVID-19 itself (such as the need for isolation or medical distancing) were not independently associated with DEs when broader causes of diagnostic process faults were considered.

Our diagnostic error rate needs to be interpreted in light of a field using varying definitions of errors and approaches to detecting cases for review. Studies using common morbid events or “triggers” (e.g., myocardial infarction or epidural abscesses) to identify patients where a diagnosis might have been missed estimated broad ranges of potential error rates (between 2 and 62%).28 Autopsy-based studies have suggested that missed diagnoses are present in 6% of autopsies29 while reviews of over- or under-diagnosis in unselected geriatric patients estimated an error rate of 10% or more.30 When DEs are evaluated as a subset of inpatient adverse events, lower estimated rates (0.2–2.7%) are seen.31 Our estimated rates fall well within this very broad range from previous studies, few of which used a structured review process to identify diagnostic errors or selected patients without an obvious adverse event to “trigger” consideration of an error. Thus, our findings likely represent a more generalized diagnostic error rate.

Patients with and without DEs were nearly identical in terms of patient factors such as age, comorbidities, language, and social determinants of health. Though language limitations and some social determinants of health were likely underdetected by chart review alone, it is unlikely that detection bias would have differentially affected patients with and without errors. Detection bias did, however, likely reduce our ability to find subtle associations between patient-level factors and diagnostic process faults. While the early phases of the pandemic struck older and disadvantaged patients most severely, our data do not suggest that these gaps in care or worse outcomes extended to a higher risk of DEs in vulnerable patient populations. Similarly, DEs were not associated with comorbidities or symptoms that might have been confused with COVID-19 infection (e.g., congestive heart failure or chronic lung diseases, which can also produce dyspnea and infiltrates on chest radiography). Likewise, recognized COVID-19 risk factors (such as travel to high-risk areas or living in a congregate setting) did not appear to be associated with missed or delayed diagnoses of either COVID-19 or other illnesses. Although scarcity of COVID-19 testing and long test turnaround times were important barriers to timely diagnosis early in the pandemic, we did not find either factor to be associated with diagnostic errors.

In contrast, in multivariable models accounting for DEER process fault groupings, diagnostic errors were associated with general diagnostic process fault groupings but not with faults specific to the COVID-19 pandemic. It is possible that diagnostic error propagation during the pandemic was driven less by changes caused by COVID-19 and more by foundational problems in diagnostic processes present in everyday care, such as failure to recognize deterioration or loss of information across multiple handoffs. Although we do not have direct measures of workload or hospital capacity, these stressors, particularly at the beginning of the pandemic, may have had a broad impact on a range of diagnostic processes by limiting physicians’ ability to spend adequate time with patients or to do the cognitive work required for accurate and timely diagnosis. In this vein, it is notable that delirium and social isolation were the only patient factors associated with DE; gathering diagnostic information from such patients may require more time. Although we used broad DEER classifications as a framework for COVID-19-related faults, our adjudication training asked reviewers to consider and classify COVID-19-related processes as concepts explicitly separate from standard clinical decision-making. Despite this structured approach, our chart review process may not have detected subtle issues such as anchoring on COVID-19 diagnoses or communication gaps, making these factors appear both less common and less associated with DEs after adjustment for more general fault types. Finally, the stresses of the pandemic, particularly PPE use and high workload, may have made physicians less likely to document details of patient history or their diagnostic thinking, which would in turn reduce our ability to discern associations with diagnostic errors. As mentioned above, it is unlikely that documentation biases would have differentially affected patients with or without errors, but such biases would have made it harder to detect information available only in physician notes.

For similar reasons, we cannot disentangle the relationship between the most common fault associated with diagnostic errors—clinical assessment—and other faults related to history taking, physical exam, or diagnostic testing. For example, it is possible that testing and history taking process faults might lead to problems in clinical assessment (a step which depends on integration of other diagnostic processes). Alternatively, our data could point to the central role of clinical assessment as a root cause of other process faults; failure to consider a diagnosis in the first place can lead to errors in eliciting a piece of history, physical exam finding, or ordering a diagnostic test.

Our study has several limitations. We used chart reviews to gather data, which may be subject to documentation and detection biases. To mitigate documentation biases, we encouraged chart reviewers to use all available documentation in the medical record (e.g., discharge summaries, admission notes, progress notes, nursing notes, test results, and orders) and to apply a reasonable-judgment framework when interpreting notes and ordering patterns. However, some aspects of care, such as communication between team members or between teams, patients, and families, may not have been captured in documentation and are likely underrepresented in our data. Our data also cannot directly establish which type of cognitive process underlay a diagnostic error (for example, a provider not considering COVID-19 as the leading diagnosis when it was, versus assuming COVID-19 when it was not). To address detection biases, all reviewers underwent extensive training at study outset, and cases were overread and reviewed by members of the core research team to ensure consistency and validity. Because PUI admissions varied across our hospitals and over time, our sampling strategy likely resulted in a different proportion of overall PUI admissions being reviewed at each site. While this limits sample size and power within sites, our randomization approach and rigorous exclusion processes mitigate selection and detection biases. Local reviewers’ adjudications may also have been shaped by local norms and professional standards (e.g., expectations for consultation timeliness); we addressed this potential problem through training and ongoing inter-site over-reads of cases. Our study was not able to capture information about larger challenges in health care at the time, such as hospital census or physician workload. Finally, although our study incorporated data from multiple hospitals, the overall sample size of our cohort was relatively small, which may limit the generalizability and statistical power of our findings.

In summary, this multicenter study of diagnostic errors among patients admitted with consideration of COVID-19 as a potential diagnosis demonstrated that diagnostic errors were relatively common and not associated with symptoms, signs, or risk factors associated with COVID-19 or with care processes put in place in the early phase of the pandemic. Several of the process areas associated with diagnostic errors — such as test ordering, history taking, or physical examination gaps — may represent target areas for educational and quality improvement efforts and may be particularly vulnerable to periods of stress in the health care system.

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

1. National Academies of Sciences, Engineering, and Medicine. Improving Diagnosis in Health Care. The National Academies Press; 2015:472.

2. Schiff GD, Hasan O, Kim S, et al. Diagnostic error in medicine: analysis of 583 physician-reported errors. Arch Intern Med. 2009;169(20):1881-7. https://doi.org/10.1001/archinternmed.2009.333

3. Norman GR, Eva KW. Diagnostic error and clinical reasoning. Med Educ. 2010;44(1):94-100. https://doi.org/10.1111/j.1365-2923.2009.03507.x

4. Scarpello J. Diagnostic error: the Achilles' heel of patient safety? Clin Med (London, England). 2011;11(4):310-1. https://doi.org/10.7861/clinmedicine.11-4-310

5. Ely JW, Kaldjian LC, D'Alessandro DM. Diagnostic errors in primary care: lessons learned. J Am Board Fam Med. 2012;25(1):87-97. https://doi.org/10.3122/jabfm.2012.01.110174

6. Singh H, Graber ML, Kissam SM, et al. System-related interventions to reduce diagnostic errors: a narrative review. BMJ Qual Saf. 2012;21(2):160-70. https://doi.org/10.1136/bmjqs-2011-000150

7. Groszkruger D. Diagnostic error: untapped potential for improving patient safety? J Healthc Risk Manag. 2014;34(1):38-43. https://doi.org/10.1002/jhrm.21149

8. McCarthy M. Diagnostic error remains a pervasive, underappreciated problem, US report says. BMJ. 2015;351:h5064. https://doi.org/10.1136/bmj.h5064

9. Institute of Medicine. Health IT and Patient Safety: Building Safer Systems for Better Care. 2011.

10. Gandhi T, Singh H. Reducing the Risk of Diagnostic Error in the COVID-19 Era. J Hosp Med. 2020. https://doi.org/10.12788/jhm.3461

11. Adams JG, Walls RM. Supporting the Health Care Workforce During the COVID-19 Global Epidemic. JAMA. 2020. https://doi.org/10.1001/jama.2020.3972

12. American Association of Critical Care Nurses. How do we staff during the COVID-19 pandemic? Accessed May 19, 2020. https://www.aacn.org/blog/how-do-we-staff-during-the-covid-19-pandemic

13. Auerbach AD, Fang MC, Greysen RS, et al. Hospital ward adaptation during the COVID-19 epidemic: A national survey of academic medical centers. J Hosp Med. 2020;15(8):483-488.

14. Advisory Board. Key actions CNOs should take now to staff for a Covid-19 surge. Accessed March 9, 2023. https://www.advisory.com/research/nursing-executive-center/expert-insights/2020/staffing-for-the-covid-19-surge

15. Dewey C, Hingle S, Goelz E, Linzer M. Supporting Clinicians During the COVID-19 Pandemic. Ann Intern Med. 2020. https://doi.org/10.7326/M20-1033

16. Elston DM. The coronavirus (COVID-19) epidemic and patient safety. J Am Acad Dermatol. 2020;82(4):819-820. https://doi.org/10.1016/j.jaad.2020.02.031

17. Griffin KM, Karas MG, Ivascu NS, Lief L. Hospital Preparedness for COVID-19: A Practical Guide from a Critical Care Perspective. Am J Respir Crit Care Med. 2020. https://doi.org/10.1164/rccm.202004-1037CP

18. Kumaraiah D, Yip N, Ivascu N, Hill L. Innovative ICU Physician Care Models: Covid-19 Pandemic at NewYork-Presbyterian. NEJM Catalyst. Accessed May 19, 2020. https://doi.org/10.1056/CAT.20.0158

19. Auerbach AD, Patel MS, Metlay JP, et al. The Hospital Medicine Reengineering Network (HOMERuN): a learning organization focused on improving hospital care. Acad Med. 2014;89(3):415-20. https://doi.org/10.1097/ACM.0000000000000139

20. Auerbach A, Raffel K, Ranji SR, et al. Diagnostic Errors In Patients Who Died Or Were Transferred To An ICU: Preliminary Results From The UPSIDE Study. Accessed March 9, 2023. https://shmabstracts.org/abstract/diagnostic-errors-in-patients-who-died-or-were-transferred-to-an-icu-preliminary-results-from-the-upside-study/

21. Auerbach AD, Kripalani S, Vasilevskis EE, et al. Preventability and Causes of Readmissions in a National Cohort of General Medicine Patients. JAMA Intern Med. 2016;176(4):484-93. https://doi.org/10.1001/jamainternmed.2015.7863

22. Schiff GD. Diagnosis and diagnostic errors: time for a new paradigm. BMJ Qual Saf. 2014;23(1):1-3. https://doi.org/10.1136/bmjqs-2013-002426

23. Schiff GD, Kim S, Abrams R, et al. Diagnosing Diagnosis Errors: Lessons from a Multi-institutional Collaborative Project. In: Advances in Patient Safety: From Research to Implementation (Volume 2: Concepts and Methodology). 2005.

24. Auerbach AD, Fang MC, Greysen RS, et al. Hospital ward adaptation during the COVID-19 epidemic: A national survey of academic medical centers. J Hosp Med. 2020;15(8):483-488.

25. National Coordinating Council for Medication Error Reporting and Prevention. NCC MERP Index for Categorizing Medication Errors (rev. 2001). Accessed March 9, 2023. https://www.nccmerp.org/types-medication-errors

26. Ospina R, Amorim L. prLogistic: Estimation of Prevalence Ratios using Logistic Models. R package version 1.2. Accessed July 2022. https://CRAN.R-project.org/package=prLogistic

27. Dahlqwist E, Sjolander A. AF: Model-Based Estimation of Confounder-Adjusted Attributable Fractions. R package version 0.1.5. Accessed March 9, 2023. https://CRAN.R-project.org/package=AF

28. Newman-Toker DE, Wang Z, Zhu Y, et al. Rate of diagnostic errors and serious misdiagnosis-related harms for major vascular events, infections, and cancers: toward a national incidence estimate using the "Big Three". Diagnosis (Berl). 2021;8(1):67-84. https://doi.org/10.1515/dx-2019-0104

29. Winters B, Custer J, Galvagno SM Jr, et al. Diagnostic errors in the intensive care unit: a systematic review of autopsy studies. BMJ Qual Saf. 2012;21(11):894-902. https://doi.org/10.1136/bmjqs-2012-000803

30. Skinner TR, Scott IA, Martin JH. Diagnostic errors in older patients: a systematic review of incidence and potential causes in seven prevalent diseases. Int J Gen Med. 2016;9:137-46. https://doi.org/10.2147/ijgm.S96741

31. Gunderson CG, Bilan VP, Holleck JL, et al. Prevalence of harmful diagnostic errors in hospitalised adults: a systematic review and meta-analysis. BMJ Qual Saf. 2020;29(12):1008-1018. https://doi.org/10.1136/bmjqs-2019-010822

Funding

AHRQ Grant: R01HS027369, Moore Foundation Grant: 8856.

Ethics declarations

Disclosures:

Dr. Auerbach is a founder of Kuretic Health, which has no relationship to this work.

Appendix 1

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Auerbach, A.D., Astik, G.J., O’Leary, K.J. et al. Prevalence and Causes of Diagnostic Errors in Hospitalized Patients Under Investigation for COVID-19. J GEN INTERN MED 38, 1902–1910 (2023). https://doi.org/10.1007/s11606-023-08176-6

DOI: https://doi.org/10.1007/s11606-023-08176-6