Abstract

Point-of-care ultrasonography (POCUS) has the potential to transform healthcare delivery through its diagnostic expediency. Trainee competency with POCUS is now mandated for emergency medicine through the Accreditation Council for Graduate Medical Education (ACGME), and its use is expanding into other medical specialties, including internal medicine. However, a key question remains: how does one define “competency” with this emerging technology? As our trainees become more acquainted with POCUS, it is vital to develop validated methodology for defining and measuring competency amongst inexperienced users. As a framework, the assessment of competency should include evaluations that assess the acquisition and application of POCUS-related knowledge, demonstration of technical skill (e.g., proper probe selection, positioning, and image optimization), and effective integration into routine clinical practice. These assessments can be performed across a variety of settings, including web-based applications, simulators, standardized patients, and real clinical encounters. Several validated assessments of POCUS competency have recently been developed, including the Rapid Assessment of Competency in Echocardiography (RACE) and the Assessment of Competency in Thoracic Sonography (ACTS). However, these assessments focus mainly on technical skill and do not address the other areas of this framework, which represents a growing need. In this review, we explore the different methodologies for evaluating competency with POCUS and discuss current progress in measuring trainee knowledge and technical skill.

INTRODUCTION

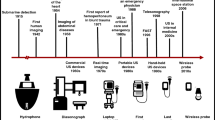

Point-of-care ultrasonography (POCUS) can transform healthcare delivery through its diagnostic and therapeutic expediency.1 It is low-cost, does not expose the patient to radiation, and can bolster the doctor-patient relationship by encouraging the physician to be present at the patient’s bedside. Physicians can become proficient in POCUS with minimal training, and its use has been shown to improve diagnostic accuracy, reduce procedural complications, decrease inpatient length of stay, and improve patient satisfaction.2,3,4,5,6,7 It can be applied in resource-limited settings, where restricted access to traditional radiological equipment can be a barrier to care.8

Use of POCUS has increased given its positive impact on patient care.4, 5 Greater than 60% of US medical schools report having an ultrasound curriculum,9 and program directors across varying medical specialties have reported plans to start their own curricula in the future.10, 11 As POCUS has become more prevalent across a variety of clinical settings, several organizations have developed systematic guidelines to promote its effective use amongst practicing clinicians and trainees.12,13,14,15 The best example of this is in emergency medicine (EM), where the American College of Emergency Physicians (ACEP) has developed guidelines on how to train residents with POCUS, and the Accreditation Council for Graduate Medical Education (ACGME) has mandated that ultrasound training be a component of residency training.14, 16 While the ACEP and ACGME recommend that EM residents achieve “competence” with POCUS, it is largely left to the individual institution to define competency and measure it. Authors from other medical specialties, such as surgery, internal medicine, family medicine, and anesthesiology, have advocated that POCUS be taught to trainees and incorporated into their milestones for training evaluation.17,18,19,20,21 The Liaison Committee on Medical Education (LCME) has not yet released requirements for POCUS training in undergraduate medical education, although some have advocated for this requirement.22

While investigations continue to demonstrate that we can teach our trainees POCUS,2,3,4,5,6,7 a central question remains: how do we evaluate their competency? The American College of Emergency Physicians (ACEP), alongside the Council of Emergency Medicine Residency Directors (CORD), has made the most progress in this field by adopting their own milestones to meet the new ACGME requirements.23 Under the ACEP-CORD model, competency is evaluated through standardized checklists and the completion of 150 examinations.23, 24 However, the number of required examinations in EM is based on expert opinion and differs from other organizations.23, 25 Amongst EM residency programs, there is significant variability in how competency is assessed.26 For example, 39% of programs use online examinations (including multiple-choice questions), while others use human models (27%) or simulators (10%).26 Other authors have offered their own core competencies in POCUS for trainees, but there remains significant variability in how to measure this.22, 27

As the use of POCUS continues to grow, it is important to (1) define competency, (2) develop a framework for measuring it, and (3) review validated measurement tools that assess trainees. A framework for competency assessment is vital, as POCUS represents an emerging technology to which trainees may have more exposure than senior physicians (especially in specialties where POCUS is underutilized).28 In this manuscript, we describe a framework for evaluating ultrasound competency and discuss how to apply these tools toward evaluating trainee competence for newly minted ultrasound programs. Finally, we review validated measurement tools already in existence.

PART 1. BUILDING A FRAMEWORK FOR POCUS EVALUATION

Competency can be broadly defined as the ability to perform a task consistently, efficiently, and effectively. In medicine, competency ultimately occurs when a task is effectively incorporated into routine clinical practice. In order to obtain clinical competence, several steps must occur (Table 1).29 These components can be conceptualized as a framework that can be applied toward assessing competency with POCUS.

ACQUISITION OF KNOWLEDGE

Medical education is predicated on a model that is based heavily on the acquisition of facts. Medical school and national board examinations primarily test the memorization of these facts in multiple-choice format, likely because these tests can be easily scaled and standardized. With POCUS, the acquisition of knowledge can be measured in several ways. Multiple-choice tests can be designed to measure trainee knowledge of ultrasound physics and proper probe selection, while still images or short videos can be utilized to evaluate knowledge of anatomy or the recognition of pathology.30 However, multiple-choice questions can be flawed for a number of reasons, including selection bias and improper wording.31 Images that are utilized could be selected from repositories that reflect local epidemiology and specialty-specific disease states.14, 23 Care must be given to maintain patient anonymity and to avoid inadvertently sharing patient images from privately collected videos across a wide network of users. Medical educators and researchers should consider using pre- and post-testing to determine the rate of learning for POCUS, as this rate is not well known.32, 33

The venue for teaching also requires careful consideration. Many previously described curricula took place in a classroom.27, 32, 34 However, there is evidence that online modules may be effective for learning POCUS.30 Other institutions have adopted the use of hands-on training with simulators or standardized patients, which have also proved successful.34 Future areas of research should focus on which training methods are the most effective for the acquisition of knowledge, how quickly knowledge is obtained, and how it is retained over time.30

APPLICATION OF KNOWLEDGE

Once knowledge has been acquired, the learner must demonstrate the ability to apply it in various clinical settings (Table 1).29 This demonstration can be accomplished through several mechanisms. One common method is to use clinical vignettes that require the trainee to apply memorized facts toward a scenario involving patient care (for example, a multiple-choice question that asks the examinee to consider multiple treatment options based on the nuances of a case presentation).23 A similar format could be applied to POCUS, a modality that inherently requires the application of knowledge to make clinical decisions.14, 23 Several authors have recommended that trainees be asked to infer a patient’s clinical status and offer treatment recommendations based on clinical scenarios and sampled images or videos.23, 24

In addition, trainees should be given the opportunity to apply their knowledge on real patients and clinical scenarios.14, 18, 23, 29 As an example, a trainee could perform a POCUS assessment on a real patient and propose a plan of care based on their findings and associated patient history. A supervising physician could provide real-time feedback on their decision and application of knowledge.23,24,25 Standardization could be achieved through delineation of clinical milestones for each specialty, as the ACEP has done for the evaluation of EM trainees.14, 16 This method requires significant oversight, but it is no different than what is already asked of our trainees and faculty in our current medical education system. It is worth noting that this form of evaluation requires attending physicians who are experienced with incorporating POCUS into the clinical encounter, which may be a rate-limiting step at many institutions. This barrier could be overcome through emerging technologies such as remote-viewing or tele-health, and these novel methods of evaluation warrant further study.

DEMONSTRATES TECHNICAL COMPETENCE

Technical competence is a different skillset compared to the acquisition and application of medical knowledge (Table 2). In POCUS, it requires manual dexterity, visual-spatial orientation, and familiarity with the equipment being used. Additional challenges in POCUS include optimizing image depth and gain and centering the target structure to obtain an image that is interpretable.23 If not standardized, the measurement of technical competence can be subject to variability depending on the patient or equipment functionality. The ACEP-CORD guidelines have developed standardized checklists to measure technical skills,23 and other authors have developed their own validated checklists to ensure proper evaluation of image acquisition and quality.25 The key components of technical competence are listed in Table 2. These include proper probe selection, proper image mode selection (including orientation markers), proper probe positioning for a target structure, adequate depth, adequate image gain, centering of the target structure, troubleshooting difficult windows (through changing the patient’s or probe’s position), and the ability to use more advanced functionality (e.g., M-mode, Doppler, or image capturing).

The use of mannequins, standardized patients, or computer simulators represents an opportunity to evaluate technical competency.23, 34 Standardized patients have been validated to measure POCUS competency.35,36,37 However, simulation via these methods may be limited by real-world applicability, as a standardized patient may have more ideal image windows or may lack significant pathology. Similarly, simulators can be used to teach trainees, but as with other procedures requiring technical expertise, they ultimately cannot substitute for a real patient in the evaluation of competency.38 Trainees should have exposure to real patients to gain the technical experience that comes with obtaining difficult image windows due to a challenging body habitus.23 Faculty should be present to provide troubleshooting tips to optimize trainee technique.23

EFFECTIVE INTEGRATION INTO CLINICAL PRACTICE

The ultimate goal of the medical educator is to have their lessons incorporated into routine practice by their learners. Unfortunately, methodologies for effectively evaluating integration of POCUS have not been developed. As with direct patient care, supervising faculty should aim to provide direct observation and real-time feedback to the trainee.23 Additionally, many ultrasound systems can be integrated into Picture Archiving and Communication Systems (PACS), which could allow a radiologist to independently evaluate obtained images for quality control. In this scenario, a clinician could submit an image to their PACS along with their interpretation of the image and the action they took based on their findings. This process could be peer-reviewed to ensure that appropriate decision making and interpretation took place. Peer review has been in place for decades for patient care and can likely be effectively incorporated into POCUS.39, 40 Recently, methods for peer-review have been described for POCUS, but more studies are needed to determine the periodicity of evaluation.41

In addition to peer-review, PACS enable providers to participate in longitudinal patient evaluation and periodic review (Table 3). Providers should review previously acquired images of a patient and retrospectively evaluate the accuracy of their interpretation once a firm diagnosis has been made or definitive radiological studies have been obtained. This allows the provider to learn from faulty image interpretation or clinical integration, regardless of whether peer review of images is available at an institution. For these methods to be successful, POCUS machines should include standardized patient identifiers that allow for seamless integration into PACS and the electronic health record such that images can be reviewed and compared to formal radiological studies.

RE-CERTIFICATION

Once competency is gained, how is it maintained? Physicians routinely undergo specialty board re-certification, and this model could be applied toward POCUS if an institution or specialty organization decides to offer formal credentialing. Even if no formal certification program exists, the time period for re-assessment deserves special consideration by all users. One study investigated the rate of skill decline involving 30 internal medicine graduates who did not routinely use POCUS (yet received training while in residency). After 2 years, nearly all of them failed the same “competency” test they had previously passed while in training.42 It is not known how these skills are maintained over time in regular users of POCUS. However, quality assurance and peer-review with POCUS have been shown to maintain physician skill.41, 43

THE “IDEAL” ASSESSMENT

As a framework for evaluating trainees in POCUS becomes more developed, it is worth noting that there can never be a perfect assessment. This is because any assessment must balance reproducibility and standardization with the uniqueness of real patient encounters. A tool that is easily standardized (e.g., multiple-choice questions) cannot involve real patients, just as any tool that involves real patients will face challenges of standardization. Nevertheless, we can strive for an ideal POCUS assessment as one in which trainees are evaluated on their ability to efficiently incorporate their knowledge of POCUS into their clinical assessments and independently obtain quality images across a variety of clinical scenarios (Table 3).

PART 2. BEYOND FRAMEWORKS: REVIEWING CURRENT COMPETENCY ASSESSMENTS

Bedside Echocardiography

Bedside echocardiography is perhaps the most widely utilized POCUS assessment. Its use has been adopted by several major society guidelines, including those from critical care,44 emergency medicine,45 and cardiology.46 With minimal training, physicians can reliably identify ventricular function, gross valvular abnormalities, and pericardial effusions and provide accurate assessments of intravascular volume status.44 While the ACEP-CORD guidelines offer checklists to evaluate technical competency with bedside echocardiography, these have yet to be validated and are based on expert consensus.23, 24

The Rapid Assessment of Competency in Echocardiography (RACE) Scale was developed to assess the technical skills for trainees learning bedside echocardiography.47 It is an assessment that has two main domains: (1) image generation, which assesses image quality for each of the five core cardiac views used for POCUS (parasternal long, parasternal short, apical four-chamber, subcostal, and inferior vena cava) and (2) image interpretation, which assesses the ability of the trainee to obtain an image that would be sufficient for interpretation by a cardiologist or an expert in echocardiography (notably in this scale, the learner is not actually assessed on their interpretation of an image). When compared across five experts in echocardiography, the tool demonstrated excellent inter-rater reliability for assessing the ability of a trainee to generate a sufficient image (α = 0.87; Table 4), while there was less agreement between raters regarding whether an image was interpretable (α = 0.56; Table 4). Individual items in the assessment demonstrated moderate to excellent inter-rater agreement, although this was lowest for assessments of LV function. The authors of the RACE have validated this measure using inexperienced and experienced users with POCUS.33, 47 The tool was able to distinguish between the two groups and demonstrate their rate of learning over time. Interestingly, the authors suggested that after 20 studies, there was a performance plateau in achieving a higher score on the scale, suggesting that this may be the minimal level of practice needed to gain competency.33
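Reliability figures like the α values above can be recomputed from raw rating sheets when a program pilots such a scale locally. The following is a minimal sketch, assuming that α refers to Cronbach's alpha with each rater treated as an "item" (the text does not name the statistic). The function name `cronbach_alpha` and the example scores are hypothetical and are not data from the RACE study.

```python
from statistics import variance


def cronbach_alpha(scores_by_rater):
    """Cronbach's alpha with raters treated as items.

    scores_by_rater: one list of scores per rater, with the same
    studies (or trainees) in the same order in every list.
    """
    k = len(scores_by_rater)
    if k < 2:
        raise ValueError("need at least two raters")
    n = len(scores_by_rater[0])
    if n < 2 or any(len(r) != n for r in scores_by_rater):
        raise ValueError("each rater must score the same >= 2 studies")

    # Sum of each rater's sample variance across studies.
    sum_rater_var = sum(variance(r) for r in scores_by_rater)
    # Sample variance of the per-study total score (summed over raters).
    totals = [sum(col) for col in zip(*scores_by_rater)]
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("alpha is undefined when total scores do not vary")

    return (k / (k - 1)) * (1 - sum_rater_var / total_var)


# Hypothetical image-generation scores from two raters for five studies.
rater_1 = [1, 2, 3, 4, 5]
rater_2 = [2, 1, 4, 3, 5]
print(round(cronbach_alpha([rater_1, rater_2]), 3))
```

Values closer to 1 indicate that raters rank the same studies similarly; the sketch does not reproduce any rater-specific adjustments the original authors may have applied.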

With regard to knowledge acquisition and application, several studies have demonstrated that inexperienced users can learn to identify gross functional and anatomic pathology on bedside echocardiography, but these studies employed methodologies that compared a trainee’s interpretation with that of an experienced cardiologist.44, 51 Another study utilized clinical scenarios and simulators to test a trainee’s knowledge and application of knowledge, although its methodology has not been validated.52 Further investigations are needed to validate and measure knowledge acquisition, application, and integration into clinical practice with bedside echocardiography.

Thoracic POCUS

Thoracic POCUS can be used to readily identify several pathologies, including pneumothorax, pulmonary edema, pleural effusion, and pulmonary embolism.53 Several protocols have been developed to objectively assess the thoracic cavity via POCUS, including the Bedside Lung Ultrasound in Emergency (BLUE) protocol, which has been demonstrated to have over 90% diagnostic accuracy for the diseases outlined above.53 However, these protocols require familiarity with thoracic POCUS and have not been used to validate trainee learning or competency.

The Assessment of Competency in Thoracic Sonography (ACTS) was developed to assess trainee technical competence when performing thoracic POCUS (Table 4).48 Like the RACE tool, the ACTS is divided into two domains: (1) image generation and (2) image interpretation. The image generation scale assesses the quality of eight typical thoracic views on a 1–6 Likert scale. The image interpretation component uses a binary scale to determine whether an expert would be able to assess the obtained images for four key thoracic pathologies (pneumothorax, interstitial fluid accumulation, consolidation, and pleural effusion). The scale demonstrated a high degree of inter-rater reliability in terms of image generation (α = 0.82; Table 4) and image interpretation (α = 0.71; Table 4). The authors concluded that, as with cardiac ultrasound, there was an eventual performance plateau after the 35th exam, suggesting that (at least when using their training method) learners reach a plateau of technical skills after a certain number of sessions.48
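A program tracking its own trainees could look for a similar plateau in sequential scores. The sketch below is purely illustrative and is not the method used by the authors of the RACE or ACTS studies: it assumes a simple rule in which the plateau begins once the mean score of the next `window` examinations exceeds the mean of the previous `window` examinations by less than `min_gain`. The function name, both parameters, and the example scores are hypothetical.

```python
def plateau_after(scores, window=5, min_gain=0.1):
    """Return the number of completed exams after which gains flatten.

    scores: scores in the order the examinations were performed.
    Returns None if no plateau is found under this rule.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    for i in range(window, len(scores) - window + 1):
        before = sum(scores[i - window:i]) / window
        after = sum(scores[i:i + window]) / window
        # Plateau: the next block of exams is not meaningfully better.
        if after - before < min_gain:
            return i
    return None


# Hypothetical learner whose scores rise and then level off.
example = [1, 2, 3, 4, 5, 5, 5, 5, 5, 5]
print(plateau_after(example, window=2, min_gain=0.5))
```

The choice of window and threshold changes the answer, so any locally derived "minimum number of studies" should be reported together with the rule that produced it.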

Other authors have developed a validated method for assessing trainee competence with thoracic POCUS. The Lung Ultrasound Objective Structured Assessment of Ultrasound Skills (LUS-OSAUS) is a method that assesses competency within the domains of knowledge acquisition, knowledge application, and technical competency (Table 4).49 The method uses a 1–5 Likert scale to assess user competency. It requires interpretation of images and the application of knowledge toward a clinical case. The LUS-OSAUS can distinguish between novice and expert users and has excellent inter-rater agreement (Pearson’s r = 0.85; Table 4).49 There have been no direct comparisons between ACTS and LUS-OSAUS in terms of inter-rater agreement for technical competence, and these assessments differ slightly in their scope. Regardless, LUS-OSAUS may offer a more complete evaluation for trainees because it assesses multiple aspects of competency with POCUS.

Abdominal POCUS

Abdominal POCUS can be used to readily identify a variety of pathological states including ascites, cholecystitis, cholelithiasis, nephrolithiasis, hydronephrosis, and abdominal aortic aneurysm.54,55,56 Its use has been shown to improve diagnostic accuracy and reduce ER length of stay.55, 56 While abdominal POCUS is incorporated into standardized examinations such as the Focused Assessment with Sonography for Trauma (FAST), these examinations are subject to variability based on user experience and have not been extensively validated as tools to assess competency.24, 57

The Objective Structured Assessment of Ultrasound Skills (OSAUS) was developed through expert consensus and includes elements that assess knowledge acquisition, knowledge application, technical competence, and integration into clinical practice (Table 4).50 This is accomplished through seven elements outlined in the examination: (1) indication for examination, (2) applied knowledge of ultrasound equipment, (3) image optimization, (4) systematic examination, (5) interpretation of images, (6) documentation of examination, and (7) medical decision making. In a validation study using 24 physicians of varying expertise levels performing abdominal POCUS (specifically, gallbladder, renal, FAST, and abdominal aorta ultrasound), the OSAUS demonstrated a high generalizability coefficient (Spearman’s ρ coefficient = 0.076; Table 4).50

Generalized Assessments

The Brightness Mode Quality Ultrasound Imaging Examination Technique (B-QUIET) is a tool that can be broadly applied to assess technical competency with POCUS across a variety of organ systems, including cardiac, abdominal, and lung ultrasound (Table 4).25 The method divides the assessment of image quality into three subscales: (1) identification/orientation (e.g., image labeling and body markers), (2) technical (e.g., image depth, gain, and resolution), and (3) image anatomy (e.g., centering of target structure on screen). The observer rates image quality on a 4-point Likert scale. When applied toward echocardiography and abdominal POCUS, the B-QUIET assessment had a modest inter-rater reliability (κ = 0.68; Table 4).25 However, for 90% of the items used in this study, there was complete inter-observer agreement despite the different image views, locations, and patient body types that were used in the analysis. Inter-rater reliability was relatively low for the identification/orientation subscale, which was attributed to improper labeling by the trainees.25
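Raw percent agreement and a chance-corrected κ can differ, because κ discounts the agreement two raters would reach by chance given how often each uses every score. The following is a minimal sketch, assuming two raters and Cohen's kappa (the text does not state which kappa variant the B-QUIET authors used). The function name `cohens_kappa` and the example ratings are hypothetical and are not data from that study.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same items."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("both raters must score the same non-empty item list")

    # Observed proportion of items given identical scores.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Agreement expected by chance from each rater's score frequencies.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")

    return (observed - expected) / (1 - expected)


# Hypothetical 4-point image-quality ratings for ten images.
rater_a = [1, 2, 3, 4, 1, 2, 3, 4, 1, 2]
rater_b = [1, 2, 3, 4, 1, 2, 3, 3, 1, 1]
print(round(cohens_kappa(rater_a, rater_b), 2))
```

In this made-up example the raters agree on 8 of 10 images, yet κ is lower than 0.8 because part of that agreement is attributed to chance.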

Objective Structured Clinical Examinations

Objective Structured Clinical Examinations (OSCEs) have been used for over 40 years to provide standardized and objective assessments of a learner’s clinical skills and attitudes.58 These assessments typically utilize simulation aided by mannequins or trained actors to reenact a clinical scenario for the learner.59 The majority of medical schools employ an OSCE to evaluate trainees, and it has been shown to predict clinical practice ability.60, 61 However, OSCEs can be limited by both inter-rater agreement (i.e., the stability of a student’s score across multiple independent evaluators) and observer bias (whereby a single observer’s impression of a student’s previous performance may influence subsequent assessments).62 These limitations can be mitigated by the use of a standardized scoring rubric and peer-review.59

OSCEs provide a conduit to assess multiple aspects of POCUS competency, including knowledge application, technical skill, and clinical practice integration.63 As simulation technology becomes more advanced, the ability to replicate clinical scenarios with simulated pathology videos will allow evaluators to assess trainee decision making in a more objective (and safe) manner. While the use of OSCE to assess these domains of competency within POCUS has been previously described, there is a paucity of validating methods for using OSCE with POCUS.37, 63 This challenge can be overcome by applying the standardized grading tools outlined above toward specific clinical scenarios. Such analyses should include descriptions of the scenarios and validations of their inter-rater reliability.

CONCLUSIONS

POCUS has the potential to dramatically change patient care through its diagnostic efficacy and low-cost structure. Its use continues to expand across medical specialties and throughout the medical education spectrum. As our trainees become more acquainted with POCUS, we must reconsider how to adequately train them and successfully measure their competency. The scope of their practice should be determined by their specialty organizations, but we should all strive to develop standardized and validated assessments to evaluate their skill. Such assessments must include evaluations of knowledge acquisition, knowledge application, technical skill demonstration, and integration into clinical practice. Trainees should be evaluated across a variety of settings, including with real patients. Future studies should be aimed at defining competency with POCUS and validating it across varying experience levels amongst trainees.

References

Moore CL, Copel JA. Point-of-Care Ultrasonography. NEJM 2011;364: 749–757.

Akkaya A, Yesilaras M, Aksay E, Sever M, Atilla OD. The interrater reliability of ultrasound imaging of the inferior vena cava performed by emergency residents. Am J Emerg Med 2013;31: 1509–1511.

Razi R, Estrada JR, Doll J, Spencer KT. Bedside hand-carried ultrasound by internal medicine residents versus traditional clinical assessment for the identification of systolic dysfunction in patients admitted with decompensated heart failure. J Am Soc Echocardiogr 2011;24: 1319–1324.

Dodge KL, Lynch CA, Moore CL, Biroscak BJ, Evans LV. Use of ultrasound guidance improves central venous catheter insertion success rates among junior residents. J Ultrasound Med 2012;31: 1519–1526.

Cavanna L, Mordenti P, Bertè R, et al. Ultrasound guidance reduces pneumothorax rate and improves safety of thoracentesis in malignant pleural effusion: report on 445 consecutive patients with advanced cancer. World J Surg Onc 2014;12: 139.

Testa A, Francesconi A, Giannuzzi R, Berardi S, Sbraccia P. Economic analysis of bedside ultrasonography (US) implementation in an Internal Medicine department. Intern Emerg Med 2015;10: 1015–1024.

Howard ZD, Noble VE, Marill KA, Sajed D, et al. Bedside ultrasound maximizes patient satisfaction. J Emerg Med 2014;46: 46–53.

Glomb N, D’Amico B, Rus M, Chen C. Point-Of-Care Ultrasound in Resource-Limited Settings. Clin Pediatr Emerg Med 2015;16: 256–261.

Bahner DP, Goldman E, Way D, Royall NA, Liu YT. The state of ultrasound education in U.S. medical schools: results of a national survey. Acad Med 2014;89: 1681–1686.

Hall JWW, Holman H, Bornemann P, et al. Point of Care Ultrasound in Family Medicine Residency Programs: A CERA Study. Fam Med 2015;47: 706–711.

Schnobrich DJ, Gladding S, Olson APJ, Duran-Nelson A. Point-of-Care Ultrasound in Internal Medicine: A National Survey of Educational Leadership. J Grad Med Educ 2013;5: 498–502.

Frankel HL, Kirkpatrick AW, Elbarbary M, et al. Guidelines for the Appropriate Use of Bedside General and Cardiac Ultrasonography in the Evaluation of Critically Ill Patients—Part I: General Ultrasonography. Crit Care Med 2015;43: 2479.

Rudski LG, Lai WW, Afilalo J, Hua L, et al. Guidelines for the echocardiographic assessment of the right heart in adults: a report from the American Society of Echocardiography endorsed by the European Association of Echocardiography, a registered branch of the European Society of Cardiology, and the Canadian Society of Echocardiography. J Am Soc Echocardiogr 2010;23: 685–713; quiz 786–8.

Ultrasound Guidelines: Emergency, Point-of-Care and Clinical Ultrasound Guidelines in Medicine. Ann Emerg Med 2017;69: e27–e54.

Spencer KT, Kimura BJ, Korcarz CE, Pellikka PA, Rahko PS, Siegel RJ. Focused Cardiac Ultrasound: Recommendations from the American Society of Echocardiography. J Am Soc Echocardiogr 2013;26: 567–581.

Acgme.org. Program Requirements for GME in Emergency Medicine. [online] Available at: https://www.acgme.org/Portals/0/PFAssets/ProgramRequirements/110_emergency_medicine_2017-07-01.pdf. Accessed 4 Oct 2018; 2017.

Sabath BF, Singh G. Point-of-care ultrasonography as a training milestone for internal medicine residents: the time is now. J Community Hosp Intern Med Perspect 2016;6: 33094.

Beal EW, Sigmond BR, Sage-Silski L, Lahey S, Nguyen V, Bahner DP. Point-of-Care Ultrasound in General Surgery Residency Training: A Proposal for Milestones in Graduate Medical Education Ultrasound. J Ultrasound Med 2017;36: 2577–2584.

Deshpande R, Montealegre-Gallegos M, Matyal R, Belani K, Chawla N. Training the Anesthesiologist in Point-of-Care Ultrasound. Int Anesthesiol Clin 2016;54: 71–93.

Association of Family Medicine Residency Directors. Consensus statement for procedural training in Family Medicine residency. [online] Available at: https://binged.it/2HGV9hY. Accessed 4 Oct 2018; 2014.

Ma IWY, Arishenkoff S, Wiseman J, et al. Internal Medicine Point-of-Care Ultrasound Curriculum: Consensus Recommendations from the Canadian Internal Medicine Ultrasound (CIMUS) Group. J Gen Intern Med 2017; doi:https://doi.org/10.1007/s11606-017-4071-5

Baltarowich OH, Di Salvo DN, Scoutt LM, Brown DL, Cox CW, DiPietro MA, et al. National ultrasound curriculum for medical students. Ultrasound Q 2014;30: 13–19.

Lewiss RE, Pearl M, Nomura JT, et al. CORD-AEUS: consensus document for the emergency ultrasound milestone project. Acad Emerg Med 2013;20: 740–745.

Schmidt JN, Kendall J, Smalley C. Competency Assessment in Senior Emergency Medicine Residents for Core Ultrasound Skills. West J Emerg Med 2015;16: 923–926.

Bahner DP, Adkins EJ, Nagel R, Way D, Werman HA, Royall NA. Brightness mode quality ultrasound imaging examination technique (B-QUIET): quantifying quality in ultrasound imaging. J Ultrasound Med 2011;30: 1649–1655.

Amini R, Adhikari S, Fiorello A. Ultrasound competency assessment in emergency medicine residency programs. Acad Emerg Med 2014;21: 799–801.

Tarique U, Tang B, Singh M, Kulasegaram KM, Ailon J. Ultrasound Curricula in Undergraduate Medical Education: A Scoping Review. J Ultrasound Med 2018;37: 69–82.

Kumar A, Liu G, Chi J, Kugler J. The Role of Technology in the Bedside Encounter. Med Clin North Am 2018;102: 443–451.

Miller GE. The assessment of clinical skills/competence/performance. Acad Med 1990;65: S63–7.

Hempel D, Sinnathurai S, Haunhorst S, et al. Influence of case-based e-learning on students’ performance in point-of-care ultrasound courses: a randomized trial. Eur J Emerg Med 2016;23: 298–304.

Ali SH, Ruit KG. The Impact of item flaws, testing at low cognitive level, and low distractor functioning on multiple-choice question quality. Perspect Med Educ 2015;4: 244–251.

Townsend NT, Kendall J, Barnett C, Robinson T. An Effective Curriculum for Focused Assessment Diagnostic Echocardiography: Establishing the Learning Curve in Surgical Residents. J Surg Educ 2016;73: 190–196.

Millington SJ, Hewak M, Arntfield RT, et al. Outcomes from extensive training in critical care echocardiography: Identifying the optimal number of practice studies required to achieve competency. J Crit Care 2017;40: 99–102.

Johri AM, Durbin J, Newbigging J, Tanzola R, Chow R, De S, et al. Cardiac Point-of-Care Ultrasound: State of the Art in Medical School Education. J Am Soc Echocardiogr 2018;31: 749–760.

Knudson MM, Sisley AC. Training residents using simulation technology: experience with ultrasound for trauma. J Trauma 2000;48: 659–665.

Damewood S, Jeanmonod D, Cadigan B. Comparison of a multimedia simulator to a human model for teaching FAST exam image interpretation and image acquisition. Acad Emerg Med 2011;18: 413–419.

Sisley AC, Johnson SB, Erickson W, Fortune JB. Use of an Objective Structured Clinical Examination (OSCE) for the assessment of physician performance in the ultrasound evaluation of trauma. J Trauma 1999;47: 627–631.

Lucas BP, Tierney DM, Jensen TP, et al. Credentialing of Hospitalists in Ultrasound-Guided Bedside Procedures: A Position Statement of the Society of Hospital Medicine. J Hosp Med 2018;13: 117–125.

Smith MA, Atherly AJ, Kane RL, Pacala JT. Peer review of the quality of care. Reliability and sources of variability for outcome and process assessments. JAMA 1997;278: 1573–1578.

Kameoka J, Okubo T, Koguma E, Takahashi F, Ishii S, Kanatsuka H. Development of a peer review system using patient records for outcome evaluation of medical education: reliability analysis. Tohoku J Exp Med 2014;233: 189–195.

Mathews BK, Zwank M. Hospital Medicine Point of Care Ultrasound Credentialing: An Example Protocol. J Hosp Med 2017;12: 767–772.

Kimura BJ, Sliman SM, Waalen J, Amundson SA, Shaw DJ. Retention of Ultrasound Skills and Training in “Point-of-Care” Cardiac Ultrasound. J Am Soc Echocardiogr 2016;29: 992–997.

Soni NJ, Tierney DM, Jensen TP, Lucas BP. Certification of Point-of-Care Ultrasound Competency. J Hosp Med 2017;12: 775–776.

Levitov A, Frankel HL, Blaivas M, Kirkpatrick AW, Su E, Evans D, et al. Guidelines for the Appropriate Use of Bedside General and Cardiac Ultrasonography in the Evaluation of Critically Ill Patients—Part II: Cardiac Ultrasonography. Crit Care Med 2016;44: 1206.

American College of Emergency Physicians. Ultrasound Guidelines: Emergency, Point-of-Care, and Clinical Ultrasound Guidelines in Medicine. 2016. Available at: http://www.emergencyultrasoundteaching.com/assets/2016_us_guidelines.pdf. Accessed 4 Oct 2018.

Spencer KT, Kimura BJ, Korcarz CE, Pellikka PA, Rahko PS, Siegel RJ. Focused cardiac ultrasound: recommendations from the American Society of Echocardiography. J Am Soc Echocardiogr 2013;26: 567–581.

Millington SJ, Arntfield RT, Hewak M, et al. The Rapid Assessment of Competency in Echocardiography Scale: Validation of a Tool for Point-of-Care Ultrasound. J Ultrasound Med 2016;35: 1457–1463.

Millington SJ, Arntfield RT, Guo RJ, et al. The Assessment of Competency in Thoracic Sonography (ACTS) scale: validation of a tool for point-of-care ultrasound. Crit Ultrasound J 2017;9: 25.

Skaarup SH, Laursen CB, Bjerrum AS, Hilberg O. Objective and Structured Assessment of Lung Ultrasound Competence. A Multispecialty Delphi Consensus and Construct Validity Study. Ann Am Thorac Soc 2017;14: 555–560.

Todsen T, Tolsgaard MG, Olsen BH, Henriksen BM, et al. Reliable and valid assessment of point-of-care ultrasonography. Ann Surg 2015;261: 309–315.

Kimura BJ. Point-of-care cardiac ultrasound techniques in the physical examination: better at the bedside. Heart 2017;103: 987–994.

Amini R, Stolz LA, Javedani PP, Gaskin K, et al. Point-of-care echocardiography in simulation-based education and assessment. Adv Med Educ Pract 2016;7: 325–328.

Lichtenstein DA. BLUE-protocol and FALLS-protocol: two applications of lung ultrasound in the critically ill. Chest 2015;147: 1659–1670.

Keil-Ríos D, Terrazas-Solís H, González-Garay A, Sánchez-Ávila JF, García-Juárez I. Pocket ultrasound device as a complement to physical examination for ascites evaluation and guided paracentesis. Intern Emerg Med 2016;11: 461–466.

Kameda T, Taniguchi N. Overview of point-of-care abdominal ultrasound in emergency and critical care. J Intensive Care 2016;4: 53.

Dickman E, Tessaro MO, Arroyo AC, Haines LE, Marshall JP. Clinician-performed abdominal sonography. Eur J Trauma Emerg Surg 2015;41: 481–492.

Steinemann S, Fernandez M. Variation in training and use of the focused assessment with sonography in trauma (FAST). Am J Surg 2018;215: 255–258.

Hodges B. Validity and the OSCE. Med Teach 2003;25: 250–254.

Ogunyemi D, Dupras D. Does an Objective Structured Clinical Examination Fit Your Assessment Toolbox? J Grad Med Educ 2017;9: 771–772.

Wallenstein J, Ander D. Objective structured clinical examinations provide valid clinical skills assessment in emergency medicine education. West J Emerg Med 2015;16: 121–126.

Berendonk C, Schirlo C, Balestra G, et al. The new final Clinical Skills examination in human medicine in Switzerland: Essential steps of exam development, implementation and evaluation, and central insights from the perspective of the national Working Group. GMS Z Med Ausbild 2015;32: 40.

Liao SC, Hunt EA, Chen W. Comparison between inter-rater reliability and inter-rater agreement in performance assessment. Ann Acad Med Singap 2010;39: 613–618.

Mitchell JD, Amir R, Montealegre-Gallegos M, Mahmood F, Shnider M, et al. Summative Objective Structured Clinical Examination Assessment at the End of Anesthesia Residency for Perioperative Ultrasound. Anesth Analg 2018;126: 2065–2068.

Ethics declarations

Conflict of Interest

The authors declare that they do not have a conflict of interest.

About this article

Cite this article

Kumar, A., Kugler, J. & Jensen, T. Evaluation of Trainee Competency with Point-of-Care Ultrasonography (POCUS): a Conceptual Framework and Review of Existing Assessments. J GEN INTERN MED 34, 1025–1031 (2019). https://doi.org/10.1007/s11606-019-04945-4

DOI: https://doi.org/10.1007/s11606-019-04945-4