Abstract

Euler deconvolution is a widely used automatic or semi-automatic method for potential field data. However, it yields many spurious solutions that complicate interpretation and must be reduced, eliminated, recognized, or ignored during interpretation. This study proposes a post-processing algorithm that converts Euler solutions produced by tensor Euler deconvolution of gravity data with an unprescribed structural index into probability values (p values) using the B-spline series density estimation (BSS) method. The p values of the Euler solution set form a probability density distribution on the estimation grid. The BSS method relies on the fact that while spurious solutions are sparse and ubiquitous, Euler deconvolution yields many similar or duplicate solutions, which may tightly cluster near real sources. The p values of the Euler solution clusters form multi-layered isosurfaces that can be used to discriminate neighboring target sources because the p values of spurious solutions are vanishingly small, making it simple to remove their interference from the probability density distribution. In all synthetic cases, the geometric outlines of anomaly sources are estimated from probability density isosurfaces approximating synthetic model parameters. The BSS method was then applied to airborne gravity data from Mount Milligan, British Columbia, Canada. Subsequently, results from synthetic models and field data show that the proposed method can successfully localize meaningful geological targets.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Euler deconvolution is a semi-automatic method for locating geological sources of potential data and can be considered fully automatic when the N (also entitled as structural index) is not used as an input term (Smellie 1956; Hood 1965; Thompson 1982; Reid et al. 1990; Gerovska and Marcos J. 2003). As it can effectively delimit geological bodies with a little prior information, it has high applicability and flexibility, especially for large potential fields. In practice, low signal-to-noise ratios (natural or caused by the Fourier transform (FFT) (Farrelly 1997)), violations of Euler’s homogeneity condition (such as improper data gridding), and superposition of sources all result in many spurious solutions and the whole Euler result forms a sparsely scattered distribution (Ugalde and Morris 2010). Since Euler deconvolution was conceived, its potential and limitations have been extensively discussed. Euler deconvolution fails at both estimating the structural index and rejecting the spurious solution (Barbosa et al. 1999).

To remove spurious solutions, on the one hand, some scholars have proposed post-processing techniques. For instance, Gerovska and Marcos J. (2003) filtered spurious solutions using the standard deviations of Euler’s overdetermined equations. Most solutions, however, were derived from Euler’s overdetermined equations with large condition numbers, which led to few reliable solutions. Truncation error estimation was employed to improve the ability of Euler solutions to detect anomalies in magnetic sources by rejecting spurious solutions (Beiki 2013; Reid and Thurston 2014). Additionally, measures, such as a horizontal gradient filter, a distance constraint evaluation criterion (Reid and Thurston 2014), and an aggregation constraint evaluation criterion (Yao et al. 2004; Wang 2006), were proposed to filter unreliable solutions.

On the other hand, due to the derivatives of magnetic and gravitational potential field data being in the Euler sense, extensions of Euler deconvolution are based on additional constraint equations or conditions, such as 2-D extended Euler deconvolution (Mushayandebvu et al. 2001) or 2-D “Worming” Euler deconvolution (FitzGerald and Milligan 2013), rely on the use of simplified models (Cooper 2006); the combined Euler solutions with analytic signal peaks resulting in only a few solutions can be employed to estimate geological sources (Salem and Ravat 2003); or in conjunction with tilt filters to yield more contiguous solutions (Castro et al. 2019). However, it is still possible for spurious ones to interfere with the interpretation of Euler solutions, even if additional restrictive measures are used (Barbosa et al. 1999; Reid et al. 1990; FitzGerald et al. 2004; Melo et al. 2013).

By assuming a tentative N, traditional Euler deconvolution solves a system of equations in a sliding window scheme and yields Euler solutions for geological bodies. The best N is the one that generates the tightest cluster of Euler solutions in the vicinity of the real sources (Reid et al. 1990; FitzGerald et al. 2004). Therefore, clustering analysis was introduced into the post-processing of Euler solutions based on their aggregation characteristics to mark the spatial information of geological bodies. For example, based on Stavrev (1997) and Stavrev et al. (2009), two kinds of statistical analyses were conducted, one through clustering and the other not, and succeeded in a significant way because there was no artificial selection of structural indexes (Gerovska and Marcos J. 2003). Similarly, to avoid divergence of Euler solutions due to predicted structural indices (SIs), Ugalde and Morris (2010) used a fuzzy clustering method (FCM) to deal with Euler solutions. However, FCM requires the number of clusters to be predetermined, which can easily result in local optima (Lu and Yan 2015). Cao et al. (2012) introduced an adaptive fuzzy clustering algorithm to cluster Euler solutions produced by tensor Euler deconvolution to determine multiple anomaly sources. Since N is a function of the moving window’s size and the distance of observation-to-source (Ravat 1996), using a single N makes it challenging to characterize the multiple geological sources (Keating and Pilkington 2004; Williams et al. 2005). Many scholars tried using a series of tentative N to find the best cluster by manual evaluation, which significantly increased the workload of users or interpreters, especially for complex geological structures with multiple abnormal sources (Barbosa et al. 1999; Gerovska and Marcos J. 2003; Melo and Barbosa 2020).

The skewness and kurtosis of Euler solutions are used to reject spurious solutions using a histogram (FitzGerald et al. 2004), which, however, is difficult to divide the multidimensional space into non-coherent bins (Lee et al. 1999; Husson et al. 2018). Therefore, the histogram is unsuitable for determining multiple anomalous sources. Many similar or duplicate solutions are produced by Euler deconvolution, which may tightly cluster in the vicinity of real sources (FitzGerald et al. 2004). Inspired by clustering methods to discriminate different clusters by the similarity between samples, we propose a novel perspective on post-processing Euler solutions based on probability values calculated by nonparametric probability density estimation methods for detecting multiple geological targets. Like a continuous histogram with density, probability density distributions are employed in many geophysical inversions. Such as electromagnetic inversion (Trainor-Guitton and Hoversten 2011), gravity inversion (Boschetti et al. 2001), Rayleigh wave inversion (Dal Moro et al. 2007), and joint inversion of gravity and magnetic data (Bosch and McGaughey 2001; Bosch et al. 2006; Yunus Levent et al. 2019; Fregoso et al. 2020).

Probability density estimation of random variables includes parametric and nonparametric ones, with the former usually applied to probability density function estimation, whose distribution pattern of random variables is already known (Izenman 1991). When random variables cannot be fitted with some specific probability distribution, the nonparametric type of probability density function estimation can be adopted (Liu et al. 2013). The two common choices are Gaussian kernel smoothing density estimation (KS) and B-spline series density estimation (BSS) (López-Cruz et al. 2014; He et al. 2021). A generalization of the histogram density estimate, the nonparametric BSS is connected to the orthogonal series estimate (Schwartz 1967). BSS is a popular fitting method based on approximation theory to build curves that are the linear combination of B-spline basis functions connected through node vectors. The c+1 order B-spline basis functions can all be obtained recursively from the lower-order basis functions (Eilers and Marx 1996). Unlike the KS method, BSS can transform nonparametric estimation into a parametric one through model transformation. That is, by fixing the number of B-spline basis functions, the estimate of the probability density function of a random variable can be converted into the parameter estimate of the weight vector corresponding to the B-spline basis function (Gehringer and Redner 1992).

Gradient tensor measures are more sensitive to changes in density gradients than traditional gravity measurements, which rely on absolute density changes (Vasilevsky et al. 2003). Tensor Euler deconvolution produces better clustering and faster convergence than Euler deconvolution (Zhang et al. 2000; Fedi et al. 2009; Zhou et al. 2017). Therefore, we proposed a novel probability density imaging, based on Euler solutions’ similarity and aggregation, using BSS to calculate the probability density. It allows differentiating between clusters of Euler solutions for isolating anomaly sources. Through algorithm verification and model tests, BSS is proven to be reliable. Finally, we applied the 3-D BSS to the field data of Mount Milligan, the QUEST project in Canada, with the contour of probability density used to differentiate clusters of Euler solutions for localizing adjacent anomaly sources.

Theory

According to traditional Euler deconvolution, the 3-D Euler equation is (Thompson 1982; Reid et al. 1990):

Where \(T=T(x,y,z)\) is the potential field function, \(({x_o},{y_o},{z_o})\) and (x, y, z) are the coordinates to the observation point and the target anomaly source. The N depends on the nature (type) of the casual source (Thompson 1982; Stavrev and Reid 2007; Uieda et al. 2014; Reid et al. 2014). Generally, a specific geological body has its corresponding attenuation rate or N. The first and second derivatives of the magnetic and gravitational fields, as well as the continuing anomalies of these fields, can all be studied using the Euler equation.

To avoid the influence of regional background fields, a parameter B is usually introduced to represent the anomalies of regional background fields. Then, Eq. 1 can be rewritten as follows (Nabighian and Hansen 2001):

where the distortion rate \(\varepsilon\) exists only when \(N=0\) (Nabighian and Hansen 2001).

The tensor Euler deconvolution consists of Eq. 5 of conventional Euler deconvolution and two similar Equations (Zhang et al. 2000; Phillips et al. 2007):

where \(\alpha ,\beta = x,y,z\) and \({g_\alpha }\) is the gravity vector, the gravity gradient tensor component \({{\partial {g_\alpha }}/{\partial \beta }}\) is the partial derivative of \({g_\alpha }\) with respect to \(\beta\), and \({B_\alpha }\) are anomaly background fields.

Generally, a square sliding window of size \({w_x}={w_y}=w\) traverses the potential field data, and each sliding window with Eqs. (3-5) can form an equation system:

where for \(\alpha = x,y,z\), \({\varvec{A}} = {[{\varvec{A}^1},...,{\varvec{A}^i},...,{\varvec{A}^{{n_w}}}]^\mathrm{{T}}}\), \({n_w} = {w_x} \times {w_y}\), \({{\varvec{A}}^i} = \left[ \right.\) \(\left. {{{\left( {{{\partial {g_\alpha }} / {\partial x}}} \right) }^i},{{\left( {{{\partial {g_\alpha }} / {\partial y}}} \right) }^i},{{\left( {{{\partial {g_\alpha }}/{\partial z}}} \right) }^i},{{\left( {{g_\alpha } - {B_\alpha }} \right) }^i}} \right]\), \({\varvec{b}} = {\left[ {{{\varvec{b}}^1},...,{{\varvec{b}}^i},...,{{\varvec{b}}^{{n_w}}}} \right] ^\mathrm{{T}}}\), \({{\varvec{b}}^i} = {\left( {x{{\partial {g_\alpha }} / {\partial x}}} \right) ^i} + {\left( {y{{\partial {g_\alpha }}/ {\partial y}}} \right) ^i} + {\left( {z{{\partial {g_\alpha }}/ {\partial z}}} \right) ^i}\), \(\left\{ {{\varvec{m}}} \right\}\) is the Euler solution set, in which the \(i^{th}\) solution can be expressed as \({{\varvec{m}}^{i}} = {[{x^i_o},{y^i_o},{z^i_o},{N^i_o}]^\mathrm{{T}}}\).

There is difficulty in ascertaining the credibility and densities of Euler solutions and distinguishing misplaced solutions (‘tails’) among different anomalies in the post-processing strategy (Gerovska and Marcos J. 2003; Mikhailov et al. 2003; FitzGerald et al. 2004; Goussev and Peirce 2010; Melo and Barbosa 2020). Consequently, BSS is applied to calculate the data combinations resulting from Euler solution sets, such as \(\{x_o\}\), \(\{x_o, y_o\}\), \(\{x_o, y_o, z_o\}\), or \(\{x_o, y_o, z_o, N\}\). Due to the linear combination of B-spline basis functions connected through node vectors, the \(c+1\) order B-spline basis functions can all be obtained recursively from the lower-order basis functions (Eilers and Marx 1996). Take the uniform B-spline estimator in \(\mathbb {R}^1\) as an example, let \({\left\{ {\bar{x}}_k \right\} }_{-\infty }^\infty\) be a uniform partition of the real numbers, with interval spacing \({\bar{h}} = {\bar{x}}_{k+1} - {\bar{x}}_k\) for each k and the B-Spline basis functions can be defined as:

where \({B}^c\) is a normalized \(c^{th}\) order uniform B-spline.

Theorem 1

The uniform B-Splines, \(\left\{ {B}_{{k}}^c \left( {{\bar{x}}} \right) \right\}\), form a partition of unity (Schumaker 2007), i.e.,

for all \({{\bar{x}}} \in \left[ \bar{x}_j,\bar{x}_{j+1}\right]\).

Suppose that \({{\varvec{X}}^1}\), ..., \({{\varvec{X}}^i}\),..., \({{\varvec{X}}^n}\) be an independent and identically distributed random d- dimensional sample derived from function f, so the \(i^{th}\) sample is expressed as:

where especially in this paper, \({\varvec{X}}^i\) corresponds to various Euler solutions subsets, such as \({{{x}}^i_o}\), \([{x^i_o},{y^i_o}]\), \([{x^i_o},{y^i_o},{z^i_o}]\), and \([{x^i_o},{y^i_o},{z^i_o},{N^i}]\). Correspondingly, the j- dimensional data is denoted by \({{\varvec{X}}_j}\)=[ \({\varvec{X}}_j^1\),...,\({\varvec{X}}_j^i\),...,\({\varvec{X}}_j^n\) ].

We further suppose the sample \({{\varvec{X}}^i}\) is projected onto the estimation grid \({\varvec{\chi }}\) with size a \({\varvec{M}}=\left[ {\varvec{M}}_1,...,{\varvec{M}}_j,...,{\varvec{M}}_d\right]\) and the bandwidth \({\varvec{h}} = {[{{\varvec{h}}_1},...,{{\varvec{h}}_j},...,{{\varvec{h}}_d}]}\).

Then, following the Gehringer and Redner (1992), define an d- dimensional probability density estimate \({\hat{f}}(\varvec{x})\) on an estimate point \({{\varvec{{\bar{x}}}}}=[{\varvec{{\bar{x}}}}_1,...,{\varvec{\bar{x}}}_j,...,{\varvec{{\bar{x}}}}_d]\) of the estimation grid \(\varvec{\chi }\) as follow:

where the subscript \({{\varvec{k}}_j}\) specifies a position along the j- dimensional of the estimation grid \(\varvec{\chi }\).

Based on the normalized \(c^{th}\) order uniform B-spline \({B}^c\) and the \(j^{th}\) dimensional bandwidth \({\varvec{h}_j}\), the B-Spline basis functions \({B}_{\varvec{k}_j}^c \left( \varvec{{\bar{x}}}_j \right)\) can be redefined as:

and following Gehringer and Redner (1992), the coefficients \({{{{\varvec{a}}_{{{\varvec{k}}_1}...{{\varvec{k}}_j}...{{\varvec{k}}_d}}}}}\) and \({{\varvec{b}}_{{{\varvec{k}}_1}...{{\varvec{k}}_j}...{{\varvec{k}}_d}}}\) can be expressed as:

where the \(j^{th}\) bandwidth \(\varvec{h}_j\) can be expressed as follows:

where \({\varvec{p}}_{{j}}^{\max }\) and \({\varvec{p}}_{{j}}^{\min }\) are, respectively, the upper and lower bound of the \(j^{th}\) dimensional estimation grid \(\varvec{\chi }_j\), which can be expressed as \({\varvec{p}}_j^{\max } = {\varvec{X}}_j^{\max } + 0.25({\varvec{X}}_j^{\max } - {\varvec{X}}_j^{\min })\), and \({\varvec{p}}_j^{\min } = {\varvec{X}}_j^{\min } - 0.25({\varvec{X}}_j^{\max } - {\varvec{X}}_j^{\min })\), respectively. In the two expressions, \(\varvec{X}_{{j}}^{\max }\) and \(\varvec{X}_{{j}}^{\min }\), respectively, denote the marginal maximum and minimum value of the \(\varvec{X}_{{j}}^{}\) (Hüsler and Reiss 1989; Beranger et al. 2023). Generally, the first grid point is expressed as \(\varvec{\chi }_j^1 = \varvec{p}_j^{\min }\), and the last one is described as \({\varvec{M} _j}\). Then, can be rewritten as:

where \({\delta _j}\) denotes the standard deviation of \({\varvec{X}_j}\), \({\gamma _j}\) denotes the empirical coefficient related to dimension d and physical memory, and ⌊ ⌋ denotes the rounding operator. For practical applications, low-order B-sample basis functions are generally used, so here, c=3, Eq. 10 can be rewritten as:

where \(B_{{{\varvec{k}}_j}}^3(\varvec{{\bar{x}}}_j)\) is the normalized cubic uniform B-spline basis function. When c=1, 2, and 3, the \(B^{c} {\left( {\bar{x}} \right) }\) in Eq. 11 can be expressed as:

To calculate the probability density value (p value) of the various Euler solutions subsets, such as \(\left\{ {{{x}}_o}\right\}\), \(\left\{ {x_o},{y_o}\right\}\), \(\left\{ {x_o},{y_o},{z_o}\right\}\), or \(\left\{ {x_o},{y_o},{z_o},{N}\right\}\) at each estimation point of the \(\varvec{\chi }\) with size a \(\varvec{M}\) for presentation purposes, we traverse all of the sample data on the estimation grid using Eq. 16. A server equipped with an Intel(R) Xeon(R) Gold 5117 CPU and 64 GB of memory was used for all tests.

Results

Algorithm verification of the BSS algorithm

To verify the correctness and reliability of BSS, a 1-D random dataset with 1500 samples is generated using the mean of three normal distributions, with the means \(\mu\)=−5, −3, 0, 4, and 9, and the variances \(\sigma =\frac{1}{5}\), \(\frac{1}{4}\), \(\frac{1}{3}\), \(\frac{1}{2}\), and \(\frac{1}{6}\). Based on this, the computational accuracy of the BSS algorithm is compared to that of the KS.

In nonparametric estimation, the challenge is to identify the features that are really there, but at the same time to avoid spurious noise (Zambom and Dias 2013). As shown in Fig. 1, the density estimation results depend on the choice of bandwidth. Larger bandwidths tend to result in oversmoothed density estimates that may hide some interesting underlying structure, as shown in curve 4. Whereas, smaller bandwidths tend to result in unsmoothed density estimates that may contain much spurious noise. It is crucial to choose the optimal bandwidth for the dataset. The optimal bandwidth determines the “resolution” of the kernel density estimate (Kobos and Mańdziuk 2010). When the optimal bandwidth is determined using the improved Sheather-Jones algorithm, the KS results are more accurate (Botev et al. 2010), but the bandwidths are small, leading to perturbations in the peaks of the probability density estimates for \(\mu\)=−3 and 3, as shown in curve 5. However, there has yet to be a consensus on choosing the optimal bandwidth (Levine 2008). Therefore, a small bandwidth is not recommended for practical applications. When the bandwidth is the same, the results of BSS are more reliable, and the computation time is lesser, as shown in curves 2–3; when the default bandwidth is used, as shown in curves 2 and 4, the results of BSS are more accurate, but at the same time, the computation time of KS increases significantly, which is mainly due to the fact that the KS algorithm introduces a large number of search calculations to distinguish adjacent probability density peaks in the case of large bandwidth. In summary, compared with the KS results, the BSS is a reliable method.

Due to space limitations, there is no longer a comparative analysis of higher-dimensional normal distribution combination data.

Algorithm verification of BSS for Euler solutions

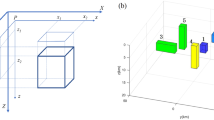

Simple models

Three models are constructed with geometric parameters to verify further the adaptability and reliability of the BSS estimate Euler solutions, as shown in Table 1. The relationships between the structural indices, types, and calculated depth positions of these models are listed in Table 2. The observation height is 50 m, the range of the survey area is x: −5000 m \(\sim\) 5000 m, y: −5000 m \(\sim\) 5000 m, and the measurement grid is 50 m \(\times\) 50 m.

To obtain Euler solutions, the contaminated FTG data are traversed by a sliding window of size 15 \(\times\) 15 points using tensor Euler deconvolution. Then, the Euler solutions, whose \(\textrm{SIs}< 0\), are filtered out to discriminate those without physical meaning. The number of Euler solutions for the cuboid, horizontal cylinder, and sphere models is 14,896, 13,400, and 13,514, respectively.

As shown in Fig. 2, because tensor Euler deconvolution is easily affected by noise, the shallow Euler solutions, whose N is relatively small, generally \(N< 0.5\), tend to diverge. In the deeper part, however, the divergence of the Euler solutions becomes lower and tends to be stable as depth increases. The specific performance is that the Euler solutions get closer to the anomaly source, increasing their N.

1-D BSS is used to analyze the Euler solutions of the three models, and there are twelve probability density curves, as shown in Fig. 3. It can be seen from curves 1–3 that there is only one peak on each curve derived from the subset \(\{x_o\}\). The coordinates x of each peak of the cuboid, horizontal cylinder, and sphere curves are −1000 m, 0 m, and 1500 m, respectively, which agree with those theoretical values. As shown in curves 4–6 of the subset \(\{y_o\}\), the associated y of peaks of the cuboid and sphere curves are −1500 m and 2500 m, respectively, which are also consistent with those theoretical values. As for curve 5 of the horizontal cylinder, there are two probability peaks, and their values are almost equivalent. The corresponding y of peaks on curve 5 is about ± 800 m, consistent with the coordinates of the two endpoints of the horizontal cylinder. There are two peaks, one high and one low, on curves 7–9 in Fig. 3a. The corresponding z of the cuboid, horizontal cylinder, and sphere curves are [50 m, 600 m], [60 m, 420 m], and [50 m, 750 m], respectively. It can be found that the first value of z corresponds to the shallow Euler spurious solutions, which causes instability in the Euler homogeneous equation. However, the second value of z is almost consistent with the theoretical values of the models. It can be seen from the above results that when 1-D BSS is used to estimate the spatial positions of the anomalies, most of the peak points on the XOY plane (the values of x and y) are consistent with theoretical values.

As the result of the 1-D BSS derived from the horizontal cylinder in Fig. 3, only the corresponding y and depth estimation z are ambiguous. In addition, it can be seen from Fig. 3b that there are two or three peaks derived from N of the three models. The first left peak on the curves corresponds to the shallow spurious solutions, while the rightmost peak corresponds to the deep Euler solutions (Fig. 2), whose N are almost consistent with their expected values. However, the rightmost peak of curve 2 is related to the relatively small Euler solution of N above the horizontal cylinder in Fig. 2d, e.

It can be seen from the above results that there are numerous peaks when 1-D BSS is used to estimate the subsets \(\{z_o\}\) and \(\{N\}\). Therefore, a higher-dimensional BSS is adopted to avoid the case of multiple peaks. The following six subsets \(\{x_o, y_o\}\), \(\{x_o, z_o\}\), \(\{y_o, z_o\}\), \(\{x_o, N\}\), \(\{y_o, N\}\), and \(\{z_o, N\}\) are obtained from the four-dimensional Euler solution set \(\{x_o, y_o, z_o,N\}\). Then, 18 subsets derived from the three models are analyzed using 2-D BSS.

As shown in Fig. 4, the probability density distribution images are obtained using 2-D BSS with 2-D subsets derived from the three models. The results of the cuboid in Fig. 4a–f show that except for the ambiguity in the results of the subset \(\{z_o, N\}\), the other five results are consistent with their expected values. The corresponding values of x, y, z, and N for each probability density peak are −1000 m, −1500 m, 700 m, and \(\approx\)2, respectively. Because of many spurious solutions with smaller structural indices in the shallow part, many probability density tails occur near z=0 or N=0.

As for the horizontal cylinder, the results show that there are only two density peaks along the y direction. The horizontal cylinder’s endpoints are indicated by two peaks with y coordinates of ±800 m. However, the coordinates of x, z, and N corresponding to other peaks are 0 m, 450 m, and \(\approx\)1.4. The sphere’s results show only one probability density peak, in contrast to the cubic and horizontal cylinder results. The coordinates of x, y, z, and N corresponding to each peak are 1500 m, 2500 m, 800 m, and \(\approx\)2, which is almost consistent with the expected value.

Compared with the 1-D BSS, the 2-D BSS only yields one peak by estimating the depths z and N of the three models, which provide a basis for determining the locations and shapes of anomalies. The probability density contours derived from the horizontal cylinder are scattered along the y-axis, with the two endpoints as the center, caused by the equivalence effect controlling the potential field. The gravity anomaly response of the horizontal cylinder can be generated by two adjacent rectangles, leading to some ambiguity in interpreting geological formations using Fig. 4.

Furthermore, illustrating the five-dimensional results \(\{x_o,y_o,z_o,N,p\)-\(value\}\) obtained from the dataset \(\{x_o,y_o,z_o,N\}\) using a four-dimensional BSS is difficult. To avoid the above two problems, 3-D BSS is adopted for four subsets \(\{x_o,y_o,z_o\}\), \(\{x_o,y_o,N\}\), \(\{x_o,z_o,N\}\), and \(\{y_o,z_o,N\}\), which are derived from the whole Euler solutions dataset \(\{x_o,y_o,z_o,N\}\). Similar to the 2-D BSS process for Euler solutions, there are 12 subsets derived from the three models analyzed by 3-D BSS.

Figures 5, 6, 7 indicate the results of BSS for 3-D subsets derived from the cube, horizontal cylinder, and sphere, respectively. The corresponding expected values are given as reference planes for each subplot. The N value of the horizontal cylinder is smaller than its expected value. Except for this, the values of x, y, z, and N of each probability density peak are almost consistent with their expected values. However, because tensor Euler deconvolution is easily affected by noise, many spurious solutions with low SIs, generally \(N<\)0.5, are generated in the shallow part. In turn, it leads to one or more peaks, with a low probability density value, in the results of the subsets \(\{x_o,y_o,N\}\), \(\{x_o,z_o,N\}\), and \(\{y_o,z_o,N\}\) (Figs. 5c–d, 6c–d, and 7c). Furthermore, due to the equivalence principle, the Euler solution forms scattering sources at the ends of the horizontal cylinder, resulting in multiple erroneous peaks in the results of \(\{y_o,z_o,N\}\)(Fig. 7d). By contrast, the probability density isosurfaces of the subset \(\{x_o,y_o,z_o,N\}\) obtained using 3-D BSS provide a more intuitive way to locate anomaly sources. Therefore, BSS combined with the solution subset \(\{x_o,y_o,z_o,N\}\) is used to separate and locate geological targets in the remainder of this paper.

Combination model

For the convenience of contrast analysis, a combined model of the cube, sphere, and vertical cylinder was proposed by Zhou et al. (2016) to test the proposed algorithm’s adaptability to complex geological sources. This cube has 800 m long sides, with its center at (1500 m, 4000 m, 650 m), with 0.5 \(\mathrm {g/cm}^{3}\) density contrast. The vertical cylinder has a radius of 400 m at its center (1000 m, 1000 m, 400 m), and its length is 1000 m, with 1.0 \(\mathrm {g/cm}^{3}\) contrast. This sphere has a radius of 350 m at its center (3500 m, 2500 m, 500 m) with 1.0 \(\mathrm {g/cm}^{3}\) density contrast. The measurement area is x:0\(\sim\)5000 m, y:0\(\sim\)5000 m; the survey grid interval is 25 m\(\times\)25 m, and its observation height is 25 m. The FTG data derived from the combined model are shown in Fig. 8.

A total of 34,596 Euler solutions are obtained based on the Eqs. (3-5) with a sliding window of 15\(\times\)15. Compared with the Euler solutions in Fig. 6 in Zhou et al. (2016), the Euler solutions in Fig. 9 are more disorganized, mainly because spurious solutions are not filtered, and the optimal solutions are not determined. As shown in Fig. 9, the z-axis points upward so that the shallow Euler solutions do not cover the deep ones. Due to the mutual interference of adjacent anomaly sources and the influence of noise, Euler solutions tend to diverge in the shallow part, and their N is relatively small. Conversely, the deep Euler solutions tend to be stable, and their SIs dramatically increase as the depth increases. However, their depth exceeds the range of the anomaly source by far.

Suppose all clusters are dense enough and well separated by low-density regions. In that case, the density-based spatial clustering of applications with noise (DBSCAN) can find clusters of any shape in the spatial database without “noise" (Ester et al. 1996; Daszykowski et al. 2001). For comparison, we analyzed the Euler solutions using DBSCAN and yielded five clusters. The object numbers of their neighborhood are 27, 23, 32, 21, and 31, respectively. Their neighborhood radii are 211.35 m, 57.16 m, 328.31 m, 58.78 m, and 452.29 m, respectively. As can be seen from the clustering results (Fig. 10), since DBSCAN only eliminates some spurious solutions, the sparse and dense distributions of the solutions are also indistinguishable from other traditional discriminative techniques.

Since surface gravity data have no depth resolution (Li and Oldenburg 1998) and the Occam algorithm has difficulty in recovering depth resolution, the density distribution map becomes increasingly blurred with increasing depth z (LaBrecque et al. 1992; Siripunvaraporn and Sarakorn 2011; Cao et al. 2023), as shown in Fig. 11. In addition, our method is more effective compared to the Occam algorithm’s inability to recover depth resolution from surface gravity data.

In Fig. 9, the Euler solutions, filtered by \(N<0\) or \(N>3\), are processed by BSS using an estimation grid with a size of 400 \(\times\) 400 \(\times\) 200. The probability density distributions in Fig. 12 show that BSS in conjunction with subset \(\{x_{o}, y_{o}, z_{o}\}\) can effectively indicate locations of anomaly sources compared with the Euler solutions in Fig. 6 in Zhou et al. (2016).

Field data

Alkaline sediments in British Columbia formed 210 to 180 Ma ago between the superterranes of the two oceanic island zones of Quesnelia and Stikinia. The Quesnel Terrane is an early Mesozoic volcanic arc with significant alkaline and calc-alkaline porphyry potential and has a large number of copper-molybdenum and copper-gold porphyry deposits (Oldenburg et al. 1997; Schiarizza 2003; Logan and Mihalynuk 2014; Melo and Barbosa 2020). The middle part of the Geoscience BC survey area is mostly covered by a thick layer of Quaternary glacial sediments, which results in insufficient exploration work (Cui et al. 2017; Deok Kim et al. 2020). Because of this, the QUEST project, as part of Geoscience BC, carried out a series of geophysical surveys from 2007 to 2009, such as electromagnetic (Geotech Limited 2008), aeromagnetic (Aeroquest Limited 2009), and airborne gravity (Sander Geophysics Limited 2008; Farr et al. 2008; Phillips et al. 2009; Mitchinson et al. 2013). All these studies mainly focus on six known porphyry deposits in the Quesnel Terrene: Endako, Huckleberry, Bell, Granisle, Morrison, and Mount Milligan (Mitchinson et al. 2013). Mount Milligan is located 155 Km northwest of Prince George, British Columbia, in the central part of Quesnellia. The Takla group consists mainly of two monzonite stocks, namely Main and Southern Star, and the parent rock affected by hydrothermal fluids. According to Terrane Metals Corp., its resources are about 417.1 million tons, with about 5.5 million ounces of gold and 540,000 tons of copper (Rezaie and Moazam 2017).

Mount Milligan is the testing ground for different geophysical survey methods (Fig. 13). Oldenburg et al. (1997) inverted the airborne magnetic, direct current resistivity, induced polarization, and airborne electromagnetic data of the Mount Milligan copper-gold porphyry deposit one by one. The inversion results are consistent with the rock model constructed with geological information from 600 boreholes and the 3-D model of gold concentration. Espinosa-Corriols and Kowalczyk (2008) analyzed the geophysical characteristics of airborne magnetic and VTEM data of the Mount Milligan copper-gold porphyry deposit. Rezaie and Moazam (2017) achieved 3-D magnetic inversion for the Mount Milligan copper-gold porphyry deposit. The recovered magnetic susceptibility model is consistent with the actual structure (Li and Oldenburg 1996).

Currently, magnetic and electromagnetic data are most often analyzed, while gravity data are used less frequently. At the same time, there is no depth resolution of the gravity and magnetic surface data, and the recovered density distribution obtained by Occam-like inversion tends to be smooth (Ramirez et al. 1993; Li and Oldenburg 1998; Siripunvaraporn and Sarakorn 2011). Under the influence of the volume effect of gravity and magnetic fields, the residual density and magnetic susceptibility obtained through inversion are far from the natural physical property distribution. Therefore, this paper attempts to use Mount Milligan gravity data to reveal geological structures.

Mount Milligan Geological Map after Cui et al. (2017)

The residual Bouguer gravity anomaly is the difference between the gravity data at 2700 m, which corresponds to the flight height, and 5700 m. The upward continuation height (5700 m) was determined using the Montaj MAGMAP Filtering tool and correlation analyses starting from 2700 m to 9700 m with an interval height of 500 m (Zeng et al. 2007; Montaj 2008; Setiadi et al. 2021; Cao et al. 2023). The upward continuation was calculated on each height map using a grid size (500 m \(\times\) 500 m) using the Geosoft Oasis Montaj software (Montaj 2008). Then, we convert the residual Bouguer gravity anomaly into FTG data using FFT (Mickus and Hinojosa 2001), as shown in Fig. 14.

The gravity maps in Fig. 14 show that \(g_z\) is an anomaly that moves along the northwest direction at Mount Milligan. There is no Gibbs disturbance in gravity vectors and components of FTG, which is easily produced by FFT operation (Robertson et al. 1998). On that basis, we use a series of sliding windows whose sizes range from 4 to 25 for traversing the gridded data. Then, 215,727 Euler solutions are obtained with tensor Euler deconvolution based on Eqs. (3-5). The Euler solutions’ slice is similar to density slices and has a depth range of \(z-dz\)/2 to \(z+dz\)/2 as their slice at depth z. Here, dz=300 m.

After comparing Figs. 15c–e and 14c, it can be found that the solution clusters at the center of the former and the northwest-trending anomalies of the latter coincide in some way. At a relatively low depth z, the solutions are scattered in disorder, as shown in Fig. 15a–b; as z increases, the situation will be improved, and there will be some solution clusters. Since there are numerous messy Euler solutions, the solutions drawn early are likely to be covered by those drawn later. Moreover, the solutions at the back of the view may be covered by the ones at the front. As a result, it is difficult to mark meaningful anomaly sources and geological structures with Euler solutions.

For further comparisons, Occam inversion is conducted on the residual gravity anomalies using the interpretation model with 80\(\times\)80\(\times\)40 units, whose length is 250 m, to yield a 3-D density distribution.

Because the surface gravity and magnetic data lack depth resolution (Li and Oldenburg 1998), it is difficult for Occam-like inversion to recover depth resolution (Ramirez et al. 1993; Siripunvaraporn and Sarakorn 2011). It can be seen from the inversion density imaging of Mount Milligan in Fig. 16 that the location patterns of the residual density in the subfigures are similar. It hinders practical geological analysis. So, we use the BSS algorithm on an estimation grid of 400\(\times\)400\(\times\)200 to evaluate the Euler solutions before filtering spurious solutions and selecting optimal ones.

As shown in Fig. 17, combined with the available geological information, the surrounding strata are composed of sedimentary rock (muTrTsf), eolian deposit (EOls), and calc-alkaline volcanic rock of Witch Lake Formation (uTrTWppbb). The muTrTsf combines mudstone, siltstone, and shale fine clastic sedimentary rocks. The EOls deposit is formed by stone, mudstone, coal, pebble conglomerate, volcanic wacke, volcanic ash, and basalt, all of which are probably correlatable with the Endako Group. The uTrTWppbb is mainly composed of calc-alkaline volcanic rocks of the Witch Lake Formation, some volcanic clastic rocks, and basaltic volcanic rocks. Peak A is illustrated in Fig. 17a–b at a depth of about −1000 m to −700 m, consistent with the depth of the mine profile given by Jago et al. (2014). With increasing depth, in Fig. 17c–f, the peak A is gradually shifted to the south, which is inferred to be mainly caused by density contrast among the muTrTsf, Eols, and uTrTWppbb strata, and influenced by the adjacent faults. As shown in Fig. 17a–d, the left flank of peak B along the northwest fault indicates that the fault is probably at a depth of 1000 m. As the depth z increases, peak B gradually develops into three probability density peaks in Fig. 17b–d. Because peak B’ is situated at the common boundary of sedimentary rocks (muTrTsf) and volcanic rocks (uTrTca), it is possibly formed by the relative density of adjacent strata. The volcanic rock uTrTca is dominated by volcanic flows, breccia, agglomerates, and augite with lesser plagioclase-bearing rocks. As its depth increases, peak B likely moves to the south at about 2000 m, as shown in Fig. 17a–h, which was probably caused by an intrusive rock.

In Fig. 16, peak C overlaps with the outline formed by the volcanic rocks uTrTca and its surrounding faults. Considering its corresponding recovered residual density of about 0.1 \(\mathrm {g/cm}^{3}\) in Fig. 16, peak C is inferred to be caused by the volcanic rocks uTrTca, the limestone bioreef/reef sedimentary rocks uTrTls, and their surrounding faults.

According to Fig. 17g–h, peak D appears in the uTrTWppbb stratum, and its left flank spreads primarily along the adjacent fault with increasing depth z, which leads us to conclude that peak D is caused by the adjacent fault penetrating the uTrTWppbb stratum.

In Fig. 18, it is relatively easy to find out the relationship between the probability density peaks formed by the corresponding contour surfaces, such as the affiliation between peaks B’ and B, and the adjacent locations of peaks A and D, which is caused by the nearby fault that penetrates the stratum uTrTWppbb.

Discussion

There are different aggregation characteristics of Euler solutions obtained by different Euler deconvolutions.

We found that combining tensor Euler deconvolution with the proposed algorithm yields meaningful geological results characterized by satisfactory probability density distributions with depth resolution, as shown in Figs. 17 and 18, in comparison with traditional Euler deconvolution (Fedi et al. 2009), Euler deconvolution with truncated SVD (Beiki 2013), and joint Euler deconvolution (Zhang et al. 2000; Zhou et al. 2017).

It is challenging to characterize multiple anomalous sources using a single N because N varies with observation-to-source distance and sliding window size (Ravat 1996). Conventional Euler deconvolution with prescribed N will produce many spurious solutions due to the difficulty in determining N of geological targets of interest (FitzGerald et al. 2004). Therefore, the tensor Euler deconvolution with unprescribed N was employed for discriminating spurious solutions and locating geological targets. Furthermore, since a fixed-size sliding window does not correct for different-sized anomalous sources, we used different-sized sliding windows for field data.

Since all observed samples are needed to traverse each point of the estimation grid, this process is very time-consuming. However, we used MATLAB’s vectorization technique, which can significantly reduce computational time with a speedup of 1\(\sim\)2 orders of magnitude (Chen et al. 2017).

Marginal maxima and minima values are constants for a given sample data (Hüsler and Reiss 1989; Beranger et al. 2023). Thus, the estimation grid size is inversely proportional to the bandwidth. Using smaller bandwidths or larger estimation grids will significantly increase computation time, and its computational complexity is O(\(n^2\)) (Gehringer and Redner 1992; Cao et al. 2023). Since the tensor Euler deconvolution can readily yield repeating or similar solutions (FitzGerald et al. 2004), using a smaller bandwidth will be helpful to locate the centroid of anomaly sources, not characterize the geological boundary. For this reason, an optimal bandwidth is generally selected in practical applications by considering memory consumption, computation time, and determining the anomaly source boundary (Cao et al. 2023).

Conclusion

When most Euler deconvolution variants, which include tensor Euler deconvolution, suffer from noise, the number of spurious solutions increases, which reduces the proportion of optimal solutions with Euler solutions (Keating and Pilkington 2004; Pašteka et al. 2010; Melo and Barbosa 2020; Cao et al. 2023). By introducing BSS based on normalized B-spline into the post-processing of Euler solutions, the paper verifies through model tests and field data that the algorithm proposed during the study can successfully process Euler solutions and separate anomalies. The algorithm results based on BSS and KS are compared under 1-D and 2-D conditions, with the latter’s estimation error being much more severe than that of the former, verifying the applicability of the BSS method.

Whether under 1-D, 2-D, or 3-D conditions, the synthetic models’ Euler solution sets’ estimation results based on BSS are the same as those expected values. 3-D BSS is adopted to calculate the probability density of the Euler solution set, whose contour surface of probability density shows anomaly distribution more directly than other solution sets. The results derived from Mount Milligan show that 3-D BSS is useful for geological interpretation.

Availability of data and materials

All data generated or analyzed during this study are included in this published article.

Code availability

Not applicable.

Change history

16 March 2024

A Correction to this paper has been published: https://doi.org/10.1007/s11600-024-01328-0

References

Aeroquest Limited (2009) Report on a helicopter-borne AeroTEM system electromagnetic & magnetic survey. Report, Geoscience BC, https://cdn.geosciencebc.com/project_data/QUEST-West/Electromagentics/GBCReport2009-6_Quest_West_Report.pdf

Barbosa VC, Silva JB, Medeiros WE (1999) Stability analysis and improvement of structural index estimation in Euler deconvolution. Geophysics 64(1):48–60. https://doi.org/10.1190/1.1444529

Beiki M (2013) TSVD analysis of Euler deconvolution to improve estimating magnetic source parameters: an example from the åsele area, sweden. J Appl Geophys 90:82–91. https://doi.org/10.1016/j.jappgeo.2013.01.002

Beranger B, Lin H, Sisson S (2023) New models for symbolic data analysis. Adv Data Anal Classif 17(3):659–699. https://doi.org/10.1007/s11634-022-00520-8

Bosch M, McGaughey J (2001) Joint inversion of gravity and magnetic data under lithologic constraints. Lead Edge 20(8):877–881. https://doi.org/10.1190/1.1487299

Bosch M, Meza R, Jiménez R et al (2006) Joint gravity and magnetic inversion in 3D using monte carlo methods. Geophysics 71(4):G153–G156. https://doi.org/10.1190/1.2209952

Boschetti F, Hornby P, Horowitz FG (2001) Wavelet based inversion of gravity data. Explor Geophys 32(1):48–55. https://doi.org/10.1071/EG01048

Botev ZI, Grotowski JF, Kroese DP (2010) Kernel density estimation via diffusion. Ann Stat. https://doi.org/10.1214/10-AOS799

Cao S, Ziqiang Z, Guangyin L (2012) Gravity tensor euler deconvolution solutions based on adaptive fuzzy cluster analysis. J Cent South Univ (Sci Technol)(in Chinese) 43(3):1033–1039. http://www.cnki.com.cn/Article/CJFDTotal-ZNGD201203039.htm

Cao S, Deng Y, Yang B et al (2023) Kernel density derivative estimation of Euler solutions. Appl Sci 13(3):1784. https://doi.org/10.3390/app13031784

Castro FR, Oliveira SP, de Souza J et al (2019) Constraining Euler deconvolution solutions through combined tilt derivative filters. Pure Appl Geophys. https://doi.org/10.1007/s00024-020-02533-w

Chen H, Krolik A, Lavoie E, et al. (2017) Automatic vectorization for MATLAB. In: Ding C, Criswell J, Wu P (eds) Languages and compilers for parallel computing, vol 10136. Springer International Publishing, Cham, pp 171–187. https://doi.org/10.1007/978-3-319-52709-3_14

Cooper GRJ (2006) Obtaining dip and susceptibility information from Euler deconvolution using the hough transform. Comput Geosci 32(10):1592–1599. https://doi.org/10.1016/j.cageo.2006.02.019

Cui Y, Miller D, Schiarizza P, et al. (2017) British Columbia digital geology. Report, British Columbia Ministry of Energy, Mines and Petroleum Resources, https://www2.gov.bc.ca/gov/content/industry/mineral-exploration-mining/british-columbia-geological-survey/geology/bcdigitalgeology

Dal Moro G, Pipan M, Gabrielli P (2007) Rayleigh wave dispersion curve inversion via genetic algorithms and marginal posterior probability density estimation. J Appl Geophys 61(1):39–55. https://doi.org/10.1016/j.jappgeo.2006.04.002

Daszykowski M, Walczak B, Massart D (2001) Looking for natural patterns in data. Chemom Intell Lab Syst 56(2):83–92. https://doi.org/10.1016/S0169-7439(01)00111-3

Deok Kim J, Sun J, Melo A (2020) Regional scale mineral exploration through joint inversion and geology differentiation based on multi-physics geoscientific data. SEG Technical Program Expanded Abstracts, pp 1379–1383. https://doi.org/10.1190/segam2020-3428427.1

Eilers PHC, Marx BD (1996) Flexible smoothing with B-splines and penalties. Stat Sci 11(2):89–121. https://doi.org/10.1214/ss/1038425655

Espinosa-Corriols S, Kowalczyk P (2008) Geophysical signature of the Mt. Milligan Cu/Au deposit in the quesnel porphyry belt. SEG Technical Program Expanded Abstracts, pp 1142–1146. https://doi.org/10.1190/1.3059124

Ester M, Kriegel HP, Sander J, et al. (1996) A density-based algorithm for discovering clusters in large spatial databases with noise. In: Proceedings of the second international conference on knowledge discovery and data mining. AAAI Press, KDD’96, pp 226–231

Farr A, Meyer S, Bates M (2008) Airborne gravity survey quesnellia region, British Columbia. Report, Sander Geophysics. https://www.geosciencebc.com/i/project_data/QUESTdata/GBCReport2008-8/Gravity_Technical_Report.pdf

Farrelly B (1997) What is wrong with Euler deconvolution? In: 59th EAGE conference & exhibition. European Association of Geoscientists & Engineers, pp cp–131–00225. https://doi.org/10.3997/2214-4609-pdb.131.GEN1997_F033

Fedi M, Florio G (2013) Determination of the maximum-depth to potential field sources by a maximum structural index method. J Appl Geophys 88:154–160. https://doi.org/10.1016/j.jappgeo.2012.10.009

Fedi M, Florio G, Quarta TA (2009) Multiridge analysis of potential fields: geometric method and reduced Euler deconvolution. Geophysics 74(4):L53–L65. https://doi.org/10.1190/1.3142722

FitzGerald D, Milligan PR (2013) Defining a deep fault network for Australia, using 3D “worming.” ASEG Ext Abstr 1:1–4. https://doi.org/10.1071/ASEG2013ab135

FitzGerald D, Reid A, McInerney P (2004) New discrimination techniques for Euler deconvolution. Comput Geosci 30:461–469. https://doi.org/10.1016/j.cageo.2004.03.006

Fregoso E, Palafox A, Moreles MA (2020) Initializing cross-gradients joint inversion of gravity and magnetic data with a Bayesian surrogate gravity model. Pure Appl Geophys 177(2):1029–1041. https://doi.org/10.1007/s00024-019-02334-w

Gehringer KR, Redner RA (1992) Nonparametric probability density estimation using normalized B-splines. Commun Stat Simul Comput 21(3):849–878. https://doi.org/10.1080/03610919208813053

Geotech Limited (2008) Report on a helicopter-borne versatile time domain electromagnetic (VTEM) geophysical survey: QUEST project, central British Columbia (NTS 93A, B, G, H, J, K, N, O & 94C, D). Report, Geoscience BC. https://cdn.geosciencebc.com/project_data/QUESTdata/report/7042-GeoscienceBC_final.pdf

Gerovska D, Marcos JAB (2003) Automatic interpretation of magnetic data based on Euler deconvolution with unprescribed structural index. Comput Geosci 29(8):949–960. https://doi.org/10.1016/S0098-3004(03)00101-8

Goussev SA, Peirce JW (2010) Magnetic basement: gravity-guided magnetic source depth analysis and interpretation. Geophys Prospect 58(2):321–334. https://doi.org/10.1111/j.1365-2478.2009.00817.x

He Y, Fan H, Lei X et al (2021) A runoff probability density prediction method based on B-spline quantile regression and kernel density estimation. Appl Math Model 93:852–867. https://doi.org/10.1016/j.apm.2020.12.043

Hood P (1965) Gradient measurements in aeromagnetic surveying. Geophysics 30(5):891–902. https://doi.org/10.1190/1.1439666

Hüsler J, Reiss RD (1989) Maxima of normal random vectors: between independence and complete dependence. Stat Probab Lett 7(4):283–286. https://doi.org/10.1016/0167-7152(89)90106-5

Husson E, Guillen A, Séranne MM et al (2018) 3D Geological and gravity inversion of a structurally complex carbonate area: application for karstified massif localization. Basin Res 30(4):766–782. https://doi.org/10.1111/bre.12279

Izenman AJ (1991) Review papers: recent developments in nonparametric density estimation. J Am Stat Assoc 86(413):205–224. https://doi.org/10.1080/01621459.1991.10475021

Jago CP, Tosdal RM, Cooke DR et al (2014) Vertical and lateral variation of mineralogy and chemistry in the early jurassic Mt. Milligan alkalic porphyry Au-Cu deposit, British Columbia, Canada. Econ Geol 109(4):1005–1033. https://doi.org/10.2113/econgeo.109.4.1005

Keating P, Pilkington M (2004) Euler deconvolution of the analytic signal and its application to magnetic interpretation. Geophys Prospect 52(3):165–182. https://doi.org/10.1111/j.1365-2478.2004.00408.x

Kobos M, Mańdziuk J (2010) Classification Based on Multiple-Resolution Data View. In: Diamantaras K, Duch W, Iliadis LS (eds) Artificial neural networks C ICANN 2010, vol 6354. Springer Berlin Heidelberg, Berlin, Heidelberg, pp 124–129. https://doi.org/10.1007/978-3-642-15825-4_16, series Title: Lecture Notes in Computer Science

LaBrecque DJ, Owen E, Dailey W, et al. (1992) Noise and occam’s inversion of resistivity tomography data. In: SEG technical program expanded abstracts 1992. Society of Exploration Geophysicists, pp 397–400. https://doi.org/10.1190/1.1822100

Lee JH, Kim DH, Chung CW (1999) Multi-dimensional selectivity estimation using compressed histogram information. ACM SIGMOD Rec 28(2):205–214. https://doi.org/10.1145/304181.304200

Levine N (2008) CrimeStat: a spatial statistical program for the analysis of crime incidents. In: Shekhar S, Xiong H (eds) Encyclopedia of GIS. Springer US, Boston, MA, pp 187–193. https://doi.org/10.1007/978-0-387-35973-1_229

Li Y, Oldenburg D (1996) 3-D inversion of magnetic data. Geophysics 61(2):394–408. https://doi.org/10.1190/1.1443968

Li Y, Oldenburg D (1998) 3-D inversion of gravity data. Geophysics 63(1):109–119. https://doi.org/10.1190/1.1444302

Liu Z, Zhenjiang T, Hongjun W (2013) Clustering algorithm based on normalized B-spline density model. J Jilin Univ (Inf Sci Edi) (in Chinese) 31(05):522–527. http://kns.cnki.net/KCMS/detail/detail.aspx?FileName=CCYD201305014 &DbName=CJFQ2013

Logan JM, Mihalynuk MG (2014) Tectonic controls on early Mesozoic paired alkaline porphyry deposit belts (Cu-Au \(\pm\) Ag-Pt-Pd-Mo) within the Canadian cordillera. Econ Geol 109(4):827–858. https://doi.org/10.2113/econgeo.109.4.827

López-Cruz PL, Bielza C, Larrañaga P (2014) Learning mixtures of polynomials of multidimensional probability densities from data using B-spline interpolation. Int J Approx Reason 55(4):989–1010. https://doi.org/10.1016/j.ijar.2013.09.018

Lu WJ, Yan ZZ (2015) Improved FCM algorithm based on K-means and granular computing. J Intell Syst 24(2):215–222. https://doi.org/10.1515/jisys-2014-0119

Melo FF, Barbosa VC (2020) Reliable Euler deconvolution estimates throughout the vertical derivatives of the total-field anomaly. Comput Geosci 138:104436. https://doi.org/10.1016/j.cageo.2020.104436

Melo FF, Barbosa VCF, Uieda L et al (2013) Estimating the nature and the horizontal and vertical positions of 3D magnetic sources using Euler deconvolution. Geophysics 78(6):J87–J98. https://doi.org/10.1190/geo2012-0515.1

Mickus KL, Hinojosa JH (2001) The complete gravity gradient tensor derived from the vertical component of gravity: a Fourier transform technique. J Appl Geophys 46(3):159–174. https://doi.org/10.1016/S0926-9851(01)00031-3

Mikhailov V, Galdeano A, Diament M et al (2003) Application of artificial intelligence for Euler solutions clustering. Geophysics 68(1):168–180. https://doi.org/10.1190/1.1543204

Mitchinson DE, Enkin RJ, Hart CJR (2013) Linking porphyry deposit geology to geophysics via physical properties: adding value to Geoscience BC geophysical data. Report, Geoscience BC. https://cdn.geosciencebc.com/project_data/GBC_Report2013-14/GBC_Report2013-14.pdf

Montaj G (2008) The core software platform for working with large volume gravity and magnetic spatial data. Geosoft Inc, Toronto, Canada

Mushayandebvu M, van Driel P, Reid A et al (2001) Magnetic source parameters of two-dimensional structures using extended Euler deconvolution. Geophysics 66(3):814–823. https://doi.org/10.1190/1.1444971

Nabighian MN, Hansen RO (2001) Unification of Euler and Werner deconvolution in three dimensions via the generalized Hilbert transform. Geophysics 66(6):1805–1810. https://doi.org/10.1190/1.1487122

Oldenburg D, Li Y, Ellis R (1997) Inversion of geophysical data over a copper gold porphyry deposit: a case history for Mt. Milligan. Geophysics 62(5):1419–1431. https://doi.org/10.1190/1.1444246

Pašteka R, Kušnirák D, Götze HJ (2010) Stabilization of the Euler deconvolution algorithm by means of a two steps regularization approach. In: EGM 2010 international workshop. European Association of Geoscientists & Engineers, Capri, Italy. https://doi.org/10.3997/2214-4609-pdb.165.C_PP_09

Phillips JD, Nabighian MN, Smith DV et al (2007) Estimating locations and total magnetization vectors of compact magnetic sources from scalar, vector, or tensor magnetic measurements through combined Helbig and Euler analysis. Seg Tech Program Expand Abstr 26(1):770–774. https://doi.org/10.1190/1.2792526

Phillips N, Nguyen T, Thomson V et al (2009) 3D inversion modelling, integration, and visualization of airborne gravity, magnetic, and electromagnetic data: The Quest Project. Geoscience BC Report 2009–15. https://doi.org/10.3997/2214-4609-pdb.165.D_OP_01

Ramirez A, Daily W, Labrecque D et al (1993) Monitoring an underground steam injection process using electrical resistance tomography. Water Resour Res 29(1):73–87. https://doi.org/10.1029/92WR01608

Ravat D (1996) Analysis of the Euler method and its applicability in environmental magnetic investigations. J Environ Eng Geophys 1(3):229–238. https://doi.org/10.4133/JEEG1.3.229

Reid A, Allsop J, Granser H et al (1990) Magnetic interpretation in three dimensions using Euler deconvolution. Geophysics 55(1):80–91. https://doi.org/10.1190/1.1442774

Reid AB, Thurston JB (2014) The structural index in gravity and magnetic interpretation: errors, uses, and abuses. Geophysics 79(4):J61–J66. https://doi.org/10.1190/geo2013-0235.1

Reid AB, Ebbing J, Webb SJ (2014) Avoidable Euler errors - the use and abuse of Euler deconvolution applied to potential fields. Geophys Prospect 62(5):1162–1168. https://doi.org/10.1111/1365-2478.12119

Rezaie M, Moazam S (2017) A new method for 3-D magnetic data inversion with physical bound. J Min Environ 8(3):501–510. https://doi.org/10.22044/jme.2017.953

Robertson AN, Park KC, Alvin KF (1998) Extraction of impulse response data via wavelet transform for structural system identification. J Vib Acoust 120(1):252–260. https://doi.org/10.1115/1.2893813

Roy L (2001) Short note: source geometry identification by simultaneous use of structural index and shape factor: source geometry identification. Geophys Prospect 49(1):159–164. https://doi.org/10.1046/j.1365-2478.2001.00239.x

Salem A, Ravat D (2003) A combined analytic signal and Euler method (AN-EUL) for automatic interpretation of magnetic data. Geophysics 68(6):1952–1961. https://doi.org/10.1190/1.1635049

Sander Geophysics Limited (2008) Airborne gravity survey, Quesnellia Region, British Columbia. Report, Geoscience BC. https://cdn.geosciencebc.com/project_data/QUESTdata/GBCReport2008-8/Gravity_Technical_Report.pdf

Schiarizza P (2003) Geology and mineral occurrences of Quesnel terrane, Kliyul Creek to Johanson Lake (94d/8, 9). In: Geological Fieldwork 2003. BC Ministry of Energy and Mines, Paper 2004–1:83–100. https://cmscontent.nrs.gov.bc.ca/geoscience/PublicationCatalogue/Paper/BCGS_P2004-01-07_Schiarizza.pdf

Schumaker L (2007) Spline functions: basic theory, 3rd edn. Cambridge University Press. https://doi.org/10.1017/CBO9780511618994

Schwartz SC (1967) Estimation of probability density by an orthogonal series. The Annals of Mathematical Statistics. pp 1261–1265. https://doi.org/10.1214/aoms/1177698795

Setiadi I, Marjiyono Nainggolan TB (2021) Gravity data analysis based on optimum upward continuation filter and 3D inverse modelling (case study at sedimentary basin in volcanic region malang and its surrounding area, East Java). IOP Conf Ser Earth Environ Sci 873(1):012008. https://doi.org/10.1088/1755-1315/873/1/012008

Siripunvaraporn W, Sarakorn W (2011) An efficient data space conjugate gradient Occam’s method for three-dimensional magnetotelluric inversion. Geophys J Int 186(2):567–579. https://doi.org/10.1111/j.1365-246X.2011.05079.x

Smellie DW (1956) Elementary approximations in aeromagnetic interpretation. Geophysics 21(4):1021–1040. https://doi.org/10.1190/1.1438294

Stavrev P, Reid A (2007) Degrees of homogeneity of potential fields and structural indices of Euler deconvolution. Geophysics 72(1):L1–L12. https://doi.org/10.1190/1.2400010

Stavrev P, Gerovska D, Araúzo-Bravo MJ (2009) Depth and shape estimates from simultaneous inversion of magnetic fields and their gradient components using differential similarity transforms. Geophys Prospect 57(4):707–717. https://doi.org/10.1111/j.1365-2478.2008.00765.x

Stavrev PY (1997) Euler deconvolution using differential similarity transformations of gravity or magnetic anomalies. Geophys Prospect 45(2):207–246. https://doi.org/10.1046/j.1365-2478.1997.00331.x

Thompson DT (1982) EULDPH: a new technique for making computer-assisted depth estimates from magnetic data. Geophysics 47(1):31–37. https://doi.org/10.1190/1.1441278

Trainor-Guitton W, Hoversten GM (2011) Stochastic inversion for electromagnetic geophysics: practical challenges and improving convergence efficiency. Geophysics 76(6):F373–F386. https://doi.org/10.1190/geo2010-0223.1

Ugalde H, Morris WA (2010) Cluster analysis of Euler deconvolution solutions: new filtering techniques and geologic strike determination. Geophysics 75(3):L61–L70. https://doi.org/10.1190/1.3429997

Uieda L, Oliveira VC, Barbosa VCF (2014) Geophysical tutorial: Euler deconvolution of potential-field data. Lead Edge 33(4):448–450. https://doi.org/10.1190/tle33040448.1

Vasilevsky A, Druzhinin A, Evans R et al (2003) Feasibility of FTG reservoir monitoring. In: SEG technical program expanded abstracts, pp 1450–1453. https://doi.org/10.1190/1.1817564

Wang J (2006) Views on the domestic situation and progress of gravity and magnetic petroleum exploration. Progr Explor Geophys (in Chinese) 29(02):82–86

Williams SE, Fairhead JD, Flanagan G (2005) Comparison of grid Euler deconvolution with and without 2D constraints using a realistic 3D magnetic basement model. Geophysics 70(3):L13–L21. https://doi.org/10.1190/1.1925745

Yao C, Zhining G, Qibin W et al (2004) An analysis of Euler deconvolution and its improvement. Geophys Geochem Explor (in Chinese) 28(02):150–155. https://doi.org/10.3969/j.issn.1000-8918.2004.02.017

Yunus Levent E, Çağlayan B, Gökhan G (2019) Parameter estimations from gravity and magnetic anomalies due to deep-seated faults: differential evolution versus particle swarm optimization. Turk J Earth Sci 28(6):860–881. https://doi.org/10.3906/yer-1905-3

Zambom AZ, Dias R (2013) A Review of Kernel Density Estimation with Applications to Econometrics. Int Econom Rev (IER) 5(1):20–42. https://ideas.repec.org/a/erh/journl/v5y2013i1p20-42.html

Zeng H, Xu D, Tan H (2007) A model study for estimating optimum upward-continuation height for gravity separation with application to a Bouguer gravity anomaly over a mineral deposit, Jilin province, northeast China. Geophysics 72(4):I45–I50. https://doi.org/10.1190/1.2719497

Zhang C, Mushayandebvu MF, Reid AB et al (2000) Euler deconvolution of gravity tensor gradient data. Geophysics 65(2):512–520. https://doi.org/10.1190/1.1444745

Zhou W, Nan Z, Li J (2016) Self-constrained Euler deconvolution using potential field data of different altitudes. Pure Appl Geophys 173(6):2073–2085. https://doi.org/10.1007/s00024-016-1254-7

Zhou W, Guoqing M, Zhenlong H et al (2017) The study on the joint Euler deconvolution method of full tensor gravity data. Chin J Geophys (in Chinese) 60(12):4855–4865. https://doi.org/10.6038/cjg20171225

Acknowledgements

We acknowledge Geoscience BC for making the data available. Moreover, we would like to thank Cui, Y., Miller, D., Schiarizza, P., and Diakow, L.J. for their permission to reproduce the Mount Milligan geological map.

Funding

This research was funded by the National Natural Science Foundation of China under Grant 41704138, Grant 41974148, and in part by the Hunan Provincial Science & Technology Department of China under Grant 2017JJ3069, and in part by the Project of Doctoral Foundation of Hunan University of Science and Technology under Grant E51651, and in part by the Hunan Provincial Key Laboratory of Share Gas Resource Exploitation under Grant E21722.

Author information

Authors and Affiliations

Contributions

SC provided software and was involved in conceptualization, methodology, writing, review, and editing; YD provided software and contributed to writing, review, and editing; YB contributed to data curation, investigation, and writing original draft; GL was involved in resources, supervision, writing, review, and editing; ZZ was in involved in conceptualization; PC contributed to validation and visualization; JX provided software and was involved in validation; and XC was involved in supervision, writing, review, and editing. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyzes, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Ethical approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable for this type of study.

Additional information

Edited by Prof. Ivana Vasiljevic (ASSOCIATE EDITOR) / Prof. Gabriela Fernández Viejo (CO-EDITOR-IN-CHIEF).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cao, S., Deng, Y., Yang, B. et al. 3-D probability density imaging of Euler solutions using gravity data: a case study of Mount Milligan, Canada. Acta Geophys. (2024). https://doi.org/10.1007/s11600-023-01279-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11600-023-01279-y