Abstract

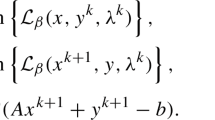

In this paper, we propose a novel variant of the alternating direction method of multipliers (ADMM) for minimizing the ratio of the \(\ell _{1}\) and \(\ell _{2}\) norms for sparse recovery. We first transform the quotient of the \(\ell _{1}\) and \(\ell _{2}\) norms into a new function of separable variables by using the least-squares minimum-norm solution of the linear system of equations. We then employ the augmented Lagrangian function to formulate the corresponding ADMM method with a dynamically adjustable parameter. Each of its subproblems possesses a unique global minimum. Finally, we present numerical experiments to demonstrate our results.
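The abstract's first step relies on the minimum-norm least-squares solution of the linear system \(Ax=b\). The sketch below is not the authors' ADMM scheme. It only illustrates three ingredients that such methods commonly use, assuming \(A\) has full row rank:

- the scale-invariant ratio \(\|x\|_1/\|x\|_2\);
- the minimum-norm solution \(x_0 = A^{T}(AA^{T})^{-1}b\);
- the Euclidean projection onto the feasible set \(\{x : Ax = b\}\).

All function names are hypothetical. Pure Python is used only to keep the example dependency-free.

```python
from math import sqrt


def l1_over_l2(x):
    """Scale-invariant ratio ||x||_1 / ||x||_2; undefined at x = 0."""
    l2 = sqrt(sum(v * v for v in x))
    if l2 == 0.0:
        raise ValueError("ratio is undefined for the zero vector")
    return sum(abs(v) for v in x) / l2


def matvec(A, x):
    # A x for a dense matrix stored as a list of rows
    return [sum(a * v for a, v in zip(row, x)) for row in A]


def rmatvec(A, y):
    # A^T y
    return [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(len(A[0]))]


def gram(A):
    # A A^T (m x m), invertible when A has full row rank (assumption)
    return [[sum(a * b for a, b in zip(ri, rj)) for rj in A] for ri in A]


def solve(M, r):
    """Solve the small square system M w = r by Gaussian elimination with partial pivoting."""
    m = len(M)
    aug = [list(M[i]) + [r[i]] for i in range(m)]
    for col in range(m):
        piv = max(range(col, m), key=lambda i: abs(aug[i][col]))
        if abs(aug[piv][col]) < 1e-12:
            raise ValueError("A must have full row rank")
        aug[col], aug[piv] = aug[piv], aug[col]
        for i in range(col + 1, m):
            f = aug[i][col] / aug[col][col]
            for j in range(col, m + 1):
                aug[i][j] -= f * aug[col][j]
    w = [0.0] * m
    for i in reversed(range(m)):
        s = aug[i][m] - sum(aug[i][j] * w[j] for j in range(i + 1, m))
        w[i] = s / aug[i][i]
    return w


def min_norm_solution(A, b):
    """x0 = A^T (A A^T)^{-1} b: the minimum l2-norm solution of A x = b."""
    return rmatvec(A, solve(gram(A), b))


def project_affine(A, b, v):
    """Euclidean projection of v onto {x : A x = b}: v - A^T (A A^T)^{-1} (A v - b)."""
    resid = [av - bi for av, bi in zip(matvec(A, v), b)]
    corr = rmatvec(A, solve(gram(A), resid))
    return [vi - ci for vi, ci in zip(v, corr)]


# Toy underdetermined system with the 1-sparse solution (0, 0, 1)
A = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
b = [1.0, 1.0]
x0 = min_norm_solution(A, b)               # approximately (1/3, 1/3, 2/3)
print(x0, l1_over_l2(x0))                  # ratio 4/sqrt(6), about 1.633
print(l1_over_l2([0.0, 0.0, 1.0]))         # 1.0, the smallest possible value
print(project_affine(A, b, [1.0, 1.0, 1.0]))
```

In this toy system, the minimum-norm solution is dense and has ratio \(4/\sqrt{6}\approx 1.63\). The 1-sparse feasible point \((0,0,1)\) attains the minimum possible value 1. This illustrates why minimizing \(\ell_1/\ell_2\) over \(\{x : Ax=b\}\) favors sparse solutions. Every feasible \(x\) can be written as \(x_0\) plus a null-space component of \(A\). A reparametrization of this kind is one plausible reading of the abstract's "separable variables", but the paper's exact splitting may differ.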


Data Availability

Data sharing is not applicable to this article as no new data were created or analyzed in this study.


Acknowledgements

We are very grateful to the anonymous reviewers for their constructive suggestions, which improved this paper significantly. The research of Jun Wang has been supported by the Scientific Startup Foundation for Doctors of Jiangsu University of Science and Technology (CN) (No. 1052931903). The work of Qiang Ma has been supported by the Scientific Startup Foundation for Doctors of Jiangsu University of Science and Technology (CN) (No. 1062931902), the National Natural Science Foundation of China (NSFC) under Grant No. 52105350, and the State Laboratory of Advanced Welding and Joining in HIT (CN) (AWJ-23R01).



About this article

Cite this article

Wang, J., Ma, Q. On the implementation of ADMM with dynamically configurable parameter for the separable \(\ell _{1}/\ell _{2}\) minimization. Optim Lett (2024). https://doi.org/10.1007/s11590-024-02106-z
