Abstract

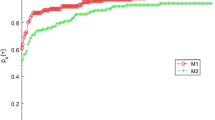

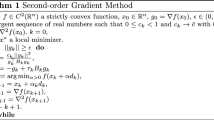

A simple secant-based fast gradient method is developed for problems whose objective function is convex and well-defined. The proposed algorithm extends the classical Nesterov gradient method by updating the estimate-sequence parameter with secant information whenever possible. This is achieved by imposing a secant condition on the choice of search point. Furthermore, the proposed algorithm embodies an "update rule with reset" that parallels the restart rule recently suggested in O’Donoghue and Candes (Found Comput Math, 2013). The proposed algorithm applies to a large class of problems including logistic and least-square losses commonly found in the machine learning literature. Numerical results demonstrating the efficiency of the proposed algorithm are analyzed with the aid of performance profiles.

Similar content being viewed by others

Notes

The simplified Nesterov gradient method Algorithm 1b is used for computations.

References

Amini, K., Ahookhosh, M., Nosratipour, H.: An inexact line search approach using modified nonmonotone strategy for unconstrained optimization. Numer. Algorithms 66(1), 49–78 (2013)

Barzilai, J., Borwein, J.: Two-point step size gradient methods. IMA J. Numer. Anal. 8, 141–148 (1988)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Becker, S.R., Candes, E.J., Grant, M.C.: Templates for convex cone problems with applications to sparse signal recovery. Math. Program. Comput. 3(3), 165–218 (2011)

Bhaya, A., Kaszkurewicz, E.: Steepest descent with momentum for quadratic functions is a version of the conjugate gradient method. Neural Netw. 17(1), 65–71 (2004)

Birgin, E.G., Martinez, J.M., Raydan, M.: Nonmonotone spectral projected gradient methods on convex sets. SIAM J. Optim. 10(4), 1196–1211 (2000)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2009)

Collins, M., Schapire, R.E., Singer, Y.: Logistic regression. Adaboost and Bregman distances. Mach. Learn. 48, 253–285 (2002)

Dolan, E.D., More, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002)

Fletcher, R.: On the Barzilai–Borwein method. In: Qi, L., Teo, K., Yang, X. (eds.) Optimization and Control with Applications. Applied Optimization, vol. 96, pp. 235–256. Springer, US (2005)

Goldstein, T., O’Donoghue, B., Setzer, S.: Fast alternating direction optimization methods. Technical Report, UCLA (May 2012 (Revised January 2014))

Gonzaga, C.C., Karas, E.W.: Fine tuning Nesterov’s steepest descent algorithm for differentiable convex programming. Math. Program. Ser. A 138, 141–166 (2013)

Gu, M., Lim, L.H., Wu, C.: ParNes: a rapidly convergent algorithm for accurate recovery of sparse and approximately sparse signals. Numer. Algorithms 64(2), 321–347 (2013)

He, R., Tan, T., Wang, L.: Robust recovery of corrupted low-rank matrix by implicit regularizers. IEEE Trans. Pattern Anal. Mach. Intell. 36(4), 770–783 (2014)

Hu, S.L., Huang, Z.H., Lu, N.: A nonmonotone line search algorithm for unconstrained optimization. J. Sci. Comput. 42, 38–53 (2010)

Kozma, A., Conte, C., Diehl, M.: Benchmarking large-scale distributed convex quadratic programming algorithms. Optim. Methods Softw. 30(1), 191–214 (2015)

Kozma, A., Frasch, J.V., Diehl, M.: A distributed method for convex quadratic programming problems arising in optimal control of distributed systems. In: Proceedings of the 52nd IEEE Conference on Decision and Control, Florence, Italy (December 2013)

Lan, G., Monteiro, R.D.: Iteration-complexity of first-order penalty methods for convex programming. Math. Program. 138(1–2), 115–139 (2013)

Lin, Q., Xiao, L.: An adaptive accelerated proximal gradient method and its homotopy continuation for sparse optimization. In: Proceedings of The 31st International Conference on Machine Learning, Beijing, China (2014)

Maros, I., Meszaros, C.: A repository of convex quadratic programming problems. Optim. Methods Softw. 11(1–4), 671–681 (1999)

Meng, X., Chen, H.: Accelerating Nesterov’s method for strongly convex functions with Lipschitz gradient. Math. Optim. Control 90C25, 1–13 (2011). arXiv:1109.6058v1

Nemirovski, A.S.: Efficient methods in convex programming, Lecture Notes, Technion-Israel Institute of Technology (1994)

Nesterov, Y.: A method of solving a convex programming problem with convergence rate of (\(1/k^2\)). Sov. Math. Doklady 27(2), 372–376 (1983)

Nesterov, Y.: Introductory Lectures on Convex Programming: A Basic Course. Kluwer Academic Publishers, Dordrecht (2004)

Nesterov, Y.: Gradient methods for minimizing composite objective function. CORE discussion paper (2007)

Nicolas, L.R., Mark, S., Francis, B.: A stochastic gradient method with an exponential convergence rate for finite training sets. Math. Optim. Control 1–34 (2013). arXiv:1202.6258v4

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (2006)

O’Donoghue, B., Candes, E.: Adaptive restart for accelerated gradient schemes. Found. Comput. Math. (2013)

Patrinos, P., Bemporad, A.: An accelerated dual gradient-projection algorithm for linear model predictive control. In: Proceedings of the 51st IEEE Conference on Decision and Control. Maui, US (December 2012)

Pedregosa, F.: Numerical optimizers for logistic regression (2013). http://fa.bianp.net/blog/2013/numerical-optimizers-for-logistic-regression/#fn:2

Polyak, B.: Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 4(5), 1–17 (1964)

Richter, S., Jones, C.N., Morari, M.: Real-time input-constrained MPC using fast gradient methods. In: Proceedings of the 48th IEEE Conference on Decision and Control and 28th Chinese Control Conference, Shanghai, China (December 2009)

Shah, B., Buehler, R., Kempthorne, O.: Some algorithms for minimizing a function of several variables. J. Soc. Ind. Appl. Math. 12(1), 74–92 (1964)

Telgarsky, M.: Steepest descent analysis for unregularized linear prediction with strictly convex penalties. In: Proceedings of the 4th International Workshop on Optimization for Machine Learning (OPT), held as a part of the NIPS workshops series (December 2011)

Torii, M., Hagan, M.T.: Stability of steepest descent with momentum for quadratic functions. IEEE Trans. Neural Netw. 13(3), 752–756 (2002)

Vogl, T.P., Mangis, J., Rigler, A., Zink, W., Alkon, D.: Accelerating the convergence of the back-propagation method. Biol. Cybern. 59(4–5), 257–263 (1988)

Worthington, P.L., Hancock, E.R.: Surface topography using shape-from-shading. Pattern Recognit. 34(4), 823–840 (2001)

Acknowledgments

This work was funded by EPRSC under the Grant EP/H016600/1.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Alli-Oke, R.O., Heath, W.P. A secant-based Nesterov method for convex functions. Optim Lett 11, 81–105 (2017). https://doi.org/10.1007/s11590-015-0991-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11590-015-0991-3