Abstract

We propose and analyze a new parallel coordinate descent method—NSync—in which at each iteration a random subset of coordinates is updated, in parallel, allowing for the subsets to be chosen using an arbitrary probability law. This is the first method of this type. We derive convergence rates under a strong convexity assumption, and comment on how to assign probabilities to the sets to optimize the bound. The complexity and practical performance of the method can outperform its uniform variant by an order of magnitude. Surprisingly, the strategy of updating a single randomly selected coordinate per iteration—with optimal probabilities—may require less iterations, both in theory and practice, than the strategy of updating all coordinates at every iteration.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this work we consider the unconstrained minimization problem

where \(\phi \) is strongly convex and differentiable. We propose a new randomized algorithm for solving this problem—NSync (Nonuniform SYNchronous Coordinate descent)—and analyze its iteration complexity. The main novelty of this paper is the algorithm itself. In particular, NSync is the first method which in each iteration updates a random subset of coordinates, allowing for an arbitrary probability law (sampling) to be used for this.

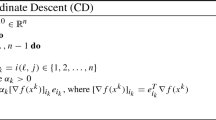

1.1 The algorithm

In NSync (Algorithm 1), we first assign a probability \(p_S\ge 0\) to every subset S of the set of coordinates \([n]:=\{1,\dots ,n\}\), with

and pick stepsize parameters \(w_i>0\), \(i=1,2,\dots ,n\), one for each coordinate.

At every iteration, a random set \(\hat{S}\) is generated, independently from previous iterations, following the law

and then coordinates \(i \in \hat{S}\) are updated in parallel by moving in the direction of the negative partial derivative with stepsize \(1/w_i\). By \(\nabla _i \phi (x)\) we mean \(\langle \nabla \phi (x), e^i\rangle \), where \(e^i\in \mathbf {R}^n\) is the ith unit coordinate vector.

The updates are synchronized: no processor/thread is allowed to proceed before all updates are applied, generating the new iterate \(x^{k+1}\). We study the complexity of NSync for arbitrary sampling \(\hat{S}\). In particular, \(\hat{S}\) can be non-uniform in the sense that the probability that coordinate i is chosen,

is allowed to vary with i.

1.2 Literature

Serial stochastic coordinate descent methods were proposed and analyzed in [8, 15, 20, 23], and more recently in various settings in [4, 9–11, 14, 24, 26, 29]. Parallel methods were considered in [2, 19, 21], and more recently in [1, 5, 6, 12, 13, 25, 27, 28]. A memory distributed method scaling to big data problems was recently developed in [22]. A nonuniform coordinate descent method updating a single coordinate at a time was proposed in [20], and one updating two coordinates at a time in [14].

NSync is the first randomized method in the literature which is capable of updating a subset of the coordinates without any restrictions, i.e., according to an arbitrary probability law, except for the necessary requirement that \(p_i>0\) for all i. In particular, NSync is the first nonuniform parallel coordinate descent method.

In the time between the first online appearance of this work on arXiv (October 2013; arXiv:1310.3438), and the time this paper went to press, this work led to a number of extensions [3, 7, 16–18]. All of these papers share the defining feature of NSync, namely, its ability to work with an arbitrary probability law defining the selection of the active coordinates in each iteration. These works also utilize the nonuniform ESO assumption introduced here (Assumption 1), as it appears to be key in the study of such methods.

2 Analysis

In this section we provide a complexity analysis of NSync.

2.1 Assumptions

Our analysis of NSync is based on two assumptions. The first assumption generalizes the ESO concept introduced in [21] and later used in [5, 6, 22, 27, 28] to nonuniform samplings. The second assumption requires that \(\phi \) be strongly convex.

Notation For \(x,y,u \in \mathbf {R}^n\) we write \(\Vert x\Vert _u^2 :=\sum _i u_i x_i^2\), \(\langle x, y\rangle _u :=\sum _{i=1}^n u_i y_i x_i\), \(x \bullet y :=(x_1 y_1, \dots , x_n y_n)\) and \(u^{-1} :=(1/u_1,\dots ,1/u_n)\). For \(S\subseteq [n]\) and \(h\in \mathbf {R}^n\), let \(h_{[S]} :=\sum _{i\in S} h_i e^i\).

Assumption 1

(Nonuniform ESO: Expected Separable Overapproximation) Assume that \(p=(p_1,\dots ,p_n)^T>0\) and that for some positive vector \(w\in \mathbf {R}^n\) and all \(x,h \in \mathbf {R}^n\), the following inequality holds:

As soon as \(\phi \) has a Lipschitz continuous gradient, then for every random sampling \(\hat{S}\) there exist positive weights \(w_1,\dots ,w_n\) such that Assumption 1 holds. In this sense, the assumption is not restrictive. Inequalities of the type (2), in the uniform case (\(p_i=p_j\) for all i, j), were studied in [6, 21, 22, 27]. Motivated by the introduction of the nonuniform ESO assumption in this paper, and the development in Sect. 3 of our work, an entire paper was recently written, dedicated to the study of nonuniform ESO inequalities [16].Footnote 1

We now turn to the second and final assumption.

Assumption 2

(Strong convexity) We assume that \(\phi \) is \(\gamma \)-strongly convex with respect to the norm \(\Vert \cdot \Vert _{v}\), where \(v=(v_1,\dots ,v_n)^T>0\) and \(\gamma >0\). That is, we require that for all \(x,h \in \mathbf {R}^n\),

2.2 Complexity

We can now establish a bound on the number of iterations sufficient for NSync to approximately solve (1) with high probability. We believe it is remarkable that the proof is very concise.

Theorem 3

Let Assumptions 1 and 2 be satisfied. Choose \(x^0 \in \mathbf {R}^n\), \(0 < \epsilon < \phi (x^0)-\phi ^*\) and \(0< \rho < 1\), where \(\phi ^* :=\min _x \phi (x)\). Let

If \(\{x^k\}\) are the random iterates generated by NSync, then

Moreover, we have the lower bound

Proof

We first claim that \(\phi \) is \(\mu \)-strongly convex with respect to the norm \(\Vert \cdot \Vert _{w\bullet p^{-1}}\), i.e.,

where \(\mu :=\gamma /\Lambda \). Indeed, this follows by comparing (3) and (7) in the light of (4). Let \(x^*\) be such that \(\phi (x^*) = \phi ^*\). Using (7) with \(h=x^*-x\),

Let \(h^k :=-({{\mathrm{Diag}}}(w))^{-1}\nabla \phi (x^k)\). Then \(x^{k+1}=x^k + (h^k)_{[\hat{S}]}\), and utilizing Assumption 1, we get

Taking expectations in the last inequality and rearranging the terms, we obtain

Using this, Markov inequality, and the definition of K, we finally get

Let us now establish the last claim.

First, note that (see [21, Sec 3.2] for more results of this type),

Letting \(\Delta :=\{p'\in \mathbf {R}^n : p'\ge 0, \sum _i p_i' = \mathbf {E}[|\hat{S}|]\}\), we have

where the last equality follows since optimal \(p_i'\) is proportional to \(v_i/w_i\). \(\square \)

Theorem 3 is generic in the sense that we do not say when Assumption 1 is satisfied and how should one go about choosing the stepsizes \(\{w_i\}\) and probabilities \(\{p_S\}\). In the next section we address these issues. On the other hand, this abstract setting allowed us to write a brief complexity proof.

The quantity \(\Lambda \), defined in (4), can be interpreted as a condition number associated with the problem and our method. Hence, as we vary the distribution of \(\hat{S}\), \(\Lambda \) will vary. It is clear intuitively that \(\Lambda \) can be arbitrarily bad. Indeed, by choosing a sampling \(\hat{S}\) which “nearly” ignores one or more of the coordinates (by setting \(p_i\approx 0\) for some i), we should expect the number of iterations to grow as the method will necessarily be very slow in updating these coordinates.

In the light of this, inequality (6) is useful as it gives a useful expression for bounding \(\Lambda \) from below.

2.3 Change of variables

Consider the change of variables \(y={{\mathrm{Diag}}}(d) x\), where \(d>0\). Defining \(\phi ^d(y):=\phi (x)\), we get \(\nabla \phi ^d(y) = ({{\mathrm{Diag}}}(d))^{-1}\nabla \phi (x)\). It can be seen that (2), (3) can equivalently be written in terms of \(\phi ^d\), with w replaced by \(w^d :=w \bullet d^{-2}\) and v replaced by \(v^d :=v \bullet d^{-2}\). By choosing \(d_i=\sqrt{v_i}\), we obtain \(v^d_i=1\) for all i, recovering standard strong convexity.

3 Nonuniform samplings and ESO

In this section we consider a problem with standard assumptions and show that the (admittedly nonstandard) ESO assumption, Assumption 1, is satisfied.

Consider now problem (1) with \(\phi \) of the form

where \(v>0\). Note that Assumption 2 is satisfied. We further make the following two assumptions.

Assumption 4

(Smoothness) Function f has Lipschitz gradient with respect to the coordinates, with positive constants \(L_1,\dots ,L_n\). That is,

for all \( x \in \mathbf {R}^n\) and \(t\in \mathbf {R}\).

Assumption 5

(Partial separability) Function f has the form

where \(\mathcal {J}\) is a finite collection of nonempty subsets of [n] and \(f_J\) are differentiable convex functions such that \(f_J\) depends on coordinates \(i\in J\) only. Let \(\omega :=\max _{J} |J|\). We say that f is separable of degree \(\omega \).

Uniform parallel coordinate descent methods for regularized problems with f of the above structure were analyzed in [21].

Example 1

Let

where \(A \in \mathbf {R}^{m \times n}\). Then \(L_i = \Vert A_{:i}\Vert ^2\) and

where \(A_{:i}\) is the ith column of A, \(A_{j:}\) is the jth row of A and \(\Vert \cdot \Vert \) is the standard L2 norm. Then \(\omega \) is the maximum # of nonzeros in a row of A.

Nonuniform sampling Instead of considering the general case of arbitrary \(p_S\) assigned to all subsets of [n], here we consider a special kind of sampling having two advantages: (i) sets can be generated easily, (ii) it leads to larger stepsizes \(1/w_i\) and hence improved convergence rate.

Fix \(\tau \in [n]\) and \(c\ge 1\) and let \(S_1,\dots ,S_c\) be a collection of (possibly overlapping) subsets of [n] such that

for all \(j=1,2,\dots ,c\) and

Moreover, let \(q= (q_1,\dots ,q_c)> 0\) be a probability vector. Let \(\hat{S}_j\) be \(\tau \)-nice sampling from \(S_j\); that is, \(\hat{S}_j\) picks subsets of \(S_j\) having cardinality \(\tau \), uniformly at random. We assume these samplings are independent. Now, \(\hat{S}\) is defined as follows: We first pick \(j\in \{1,\dots ,c\}\) with probability \(q_j\), and then draw \(\hat{S}_j\).

Note that we do not need to compute the quantities \(p_S\), \(S\subseteq [n]\), to execute NSync. In fact, it is much easier to implement the sampling via the two-tier procedure explained above. Sampling \(\hat{S}\) is a nonuniform variant of the \(\tau \)-nice sampling studied in [21], which here arises as a special case for \(c=1\).

Note that

where \(\delta _{ij}=1\) if \(i \in S_j\), and 0 otherwise.

In our next result we show that Assumption 1 is satisfied for f and the sampling described above.

Theorem 6

Let Assumptions 4 and 5 be satisfied, and let \(\hat{S}\) be the sampling described above. Then Assumption 1 is satisfied with p given by (11) and any \(w=(w_1,\dots ,w_n)^T\) for which

for all \(i \in [n]\), where

Proof

Since f is separable of degree \(\omega \), so is \(\phi \) (because \(\frac{1}{2}\Vert x\Vert _v^2\) is separable). Now,

where the last inequality follows from the ESO for \(\tau \)-nice samplings established in [21, Theorem 15]. The claim now follows by comparing the above expression and (2). \(\square \)

4 Optimal probabilities

Observe that the formula (12) can be used to design a sampling (characterized by the sets \(S_j\) and probabilities \(q_j\)) that maximizes \(\mu \), which in view of Theorem 3 optimizes the convergence rate of the method.

4.1 Serial setting

Consider the serial version of NSync (\(\mathbf {Prob}(|\hat{S}|=1)=1\)). We can model this via \(c=n\), with \(S_i =\{i\}\) and \(p_i=q_i\) for all \(i \in [n]\). In this case, using (11) and (12), we get \(w_i = w_i^* =L_i + v_i\). Minimizing \(\Lambda \) in (4) over the probability vector p gives the optimal probabilities (we refer to this as the optimal serial method)

and optimal complexity

Note that the uniform sampling, defined by \(p_i=1/n\) for all \(i\in [n]\), leads to

Note that this can be much larger than \(\Lambda _{OS}\). We refer to NSync utilizing this sampling as the uniform serial method.

Moreover, the condition numbers \(L_i/v_i\) can not be improved via such a change of variables. Indeed, under the change of variables \(y={{\mathrm{Diag}}}(d)x\), the gradient of \(f^d(y):=f({{\mathrm{Diag}}}(d^{-1})y)\) has coordinate Lipschitz constants \(L_i^d = L_i/d_i^2\), while the weights in (10) change to \(v_i^d = v_i/d_i^2\).

4.2 Optimal serial method can be faster than the fully parallel method

To model the “fully parallel” setting (i.e., the variant of NSync updating all coordinates at every iteration), we can set \(c=1\) and \(\tau =n\), which yields

Since \(\omega \le n\), it is clear that \(\Lambda _{US} \ge \Lambda _{FP}\). However, for large enough \(\omega \) it will be the case that \(\Lambda _{FP}\ge \Lambda _{OS}\), implying, surprisingly, that the optimal serial method can be faster than the fully parallel method.

4.3 Parallel setting

Fix \(\tau \) and sets \(S_j\), \(j=1,2,\dots ,c\), and define

Consider running NSync with stepsizes \(w_i = \theta (L_i+v_i)\) (note that \(w_i \ge w_i^*\), so we are fine). From (4), (11) and (12) we see that the complexity of NSync is determined by

The probability vector q minimizing this quantity can be computed by solving a linear program with \(c+1\) variables (\(q_1,\dots ,q_c,\alpha \)), 2n linear inequality constraints and a single linear equality constraint:

where \(b^i \in \mathbf {R}^c\), \(i\in [n]\), are given by

5 Experiments

We now conduct two preliminary small scale experiments to illustrate the theory; the results are depicted in Fig. 1. All experiments are with problems of the form (10) with f chosen as in Example 1.

In the left plot we chose \(A\in \mathbf {R}^{2\times 30}\), \(\gamma =1\), \(v_1=0.05\), \(v_i=1\) for \(i\ne 1\) and \(L_i=1\) for all i. We compare the US method (\(p_i = 1/n\), blue) with the OS method [\(p_i\) given by (13), red]. The dashed lines show 95 % confidence intervals (we run the methods 100 times, the line in the middle is the average behavior). While OS can be faster, it is sensitive to over/under-estimation of the constants \(L_i,v_i\). In the right plot we show that a nonuniform serial (NS) method can be faster than the fully parallel (FP) variant (we have chosen \(m=8\), \(n=10\) and three values of \(\omega \)). On the horizontal axis we display the number of epochs, where one epoch corresponds to updating n coordinates (for FP this is a single iteration, whereas for NS it corresponds to n iterations).

Notes

A clarifying comment answering a question raised by the reviewer: The authors of [16] give explicit formulas for w for which (2) holds, under an assumption that is slightly weaker than Lipschitz continuity of the gradient of \(\phi \). In particular, they study functions \(\phi \) admitting the global quadratic upper bound

$$\begin{aligned} \phi (x+h)\le \phi (x) + \langle \nabla \phi (x), h\rangle + \tfrac{1}{2}\Vert Ah\Vert ^2 \end{aligned}$$for all \(x,h\in \mathbf {R}^n\), where \(A\in \mathbf {R}^{m\times n}\). One of the consequence of their work is that the parameters \(w_1,\dots ,w_n\) must necessarily satisfy the inequalities: \(w_i\ge \Vert A_{:i}\Vert ^2\), where \(A_{:i}\) is the ith column of A. Moreover, as long as \(\mathbf {Prob}(|\hat{S}|\le \tau )=1\) for some \(\tau \), then (2) holds for \(w_i=\tau \Vert A_{:i}\Vert ^2\). However, this choice of parameters is rather conservative. The goal of [16] is to give explicit and tight formulas for w, where hopefully \(w_i\) will be much smaller than \(\tau \Vert A_{:i}\Vert ^2\), utilizing specific properties of the sampling \(\hat{S}\) and data matrix A.

References

Bian, Y., Li, X., Liu, Y.: Parallel coordinate descent Newton for large-scale l1-regularized minimization. arXiv:1306.4080v1 (2013)

Bradley, J., Kyrola, A., Bickson, D., Guestrin, C.: Parallel coordinate descent for L1-regularized loss minimization. In: International Conference on Machine Learning (2011)

Csiba, D., Richtárik, P.: Primal method for ERM with flexible mini-batching schemes and non-convex losses. arXiv:1506.02227 (2015)

Dang, C.D., Lan, G.: Stochastic block mirror descent methods for nonsmooth and stochastic optimization. In: Technical report, Georgia Institute of Technology (2013)

Fercoq, O.: Parallel coordinate descent for the AdaBoost problem. In: ICMLA, vol. 1, pp. 354–358. IEEE, 2013

Fercoq, O., Richtárik, P.: Smooth minimization of nonsmooth functions with parallel coordinate descent methods. arXiv:1309.5885 (2013)

Gower, R., Richtárik, P.: Randomized iterative methods for linear systems. In: Technical report, University of Edinburgh (2015)

Hsieh, C.-J., Chang, K.-W., Lin, C.-J., Keerthi, S.S., Sundarajan, S.: A dual coordinate descent method for large-scale linear SVM. In: Proceedings of the 25th International Conference on Machine Learning, pp. 408–415. ACM (2008)

Lacoste-Julien, S., Jaggi, M., Schmidt, M., Pletcher, P.: Block-coordinate Frank–Wolfe optimization for structural SVMs. In: 30th International Conference on Machine Learning (2013)

Lu, Z., Xiao, L.: On the complexity analysis of randomized block-coordinate descent methods. arXiv:1305.4723 (2013)

Lu, Z., Xiao, L.: Randomized block coordinate non-monotone gradient methods for a class of nonlinear programming. arXiv:1306.5918 (2013)

Mukherjee, I., Frongillo, R., Canini, K., Singer, Y.: Parallel boosting with momentum. In: Machine Learning and Knowledge Discovery in Databases, vol. 8190, pp 17–32. Springer, Heidelberg (2013)

Necoara, I., Clipici, D.: Efficient parallel coordinate descent algorithm for convex optimization problems with separable constraints: application to distributed mpc. J. Process Control 23, 243–253 (2013)

Necoara, I., Nesterov, Y., Glineur, F.: Efficiency of randomized coordinate descent methods on optimization problems with linearly coupled constraints. In: Technical report, vol. 58, pp 2001–2012 (2012)

Nesterov, Yu.: Efficiency of coordinate descent methods on huge-scale optimization problems. SIAM J Optim 22(2), 341–362 (2012)

Qu, Z., Richtárik. P.: Coordinate Descent with Arbitrary Sampling II: Expected Separable Overapproximation. arXiv:1412.8063 (2014)

Qu, Z., Richtárik, P., Takáč, M., Fercoq, O.: Stochastic Dual Newton Ascent for Empirical Risk Minimization. arXiv:1502.02268

Qu, Z., Richtárik, P., Zhang, T.: Randomized Dual Coordinate Ascent with Arbitrary Sampling. arXiv:1411.5873 (2014)

Richtárik, P., Takáč, M.: Efficient serial and parallel coordinate descent methods for huge-scale truss topology design. In: Operations Research Proceedings, pp. 27–32. Springer, New York (2012)

Richtárik, P., Takáč, M.: Iteration complexity of randomized block-coordinate descent methods for minimizing a composite function. In: Mathematical Programming (2012)

Richtárik, P., Takáč, M.: Parallel coordinate descent methods for big data optimization. arXiv:1212.0873 (2012)

Richtárik, P., Takáč, M.: Distributed coordinate descent method for learning with big data. arXiv:1310.2059 (2013)

Shalev-Shwartz, S., Tewari, A.: Stochastic methods for l1-regularized loss minimization. JMLR 12, 1865–1892 (2011)

Shalev-Shwartz, S., Zhang, T.: Proximal stochastic dual coordinate ascent. arXiv:1211.2717 (2012)

Shalev-Shwartz, S., Zhang, T.: Accelerated mini-batch stochastic dual coordinate ascent. arXiv:1305.2581v1 (2013)

Shalev-Shwartz, S., Zhang, T.: Stochastic dual coordinate ascent methods for regularized loss minimization. JMLR 14, 567–599 (2013)

Takáč, M., Bijral, A., Richtárik, P., Srebro, N.: Mini-batch primal and dual methods for SVMs. In: ICML (2013)

Tappenden, R., Richtárik, P., Büke, B.: Separable approximations and decomposition methods for the augmented Lagrangian. arXiv:1308.6774 (2013)

Tappenden, R., Richtárik, P., Gondzio, J.: Inexact coordinate descent: complexity and preconditioning. arXiv:1304.5530 (2013)

Acknowledgments

This work appeared on arXiv in October 2013 (arXiv:1310.3438). P. Richtárik and M. Takáč were partially supported by the Centre for Numerical Algorithms and Intelligent Software (funded by EPSRC grant EP/G036136/1 and the Scottish Funding Council). The second author also acknowledge support from the EPSRC Grant EP/K02325X/1, Accelerated Coordinate Descent Methods for Big Data Optimization.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Richtárik, P., Takáč, M. On optimal probabilities in stochastic coordinate descent methods. Optim Lett 10, 1233–1243 (2016). https://doi.org/10.1007/s11590-015-0916-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11590-015-0916-1