Abstract

The outbreak of the COVID-19 pandemic and the associated lockdown measures have been a shock to market systems worldwide, affecting both the supply of and demand for labor. Intensified by this pandemic-driven recession, online labor markets are in many ways at the core of the economic and policy debates about their technological innovation, which could serve as a means of economic reform and recovery. In this work, we focus on crowdsourcing, which is a specific type of online labor. We use a unique dataset of labor data to investigate the effects of online training, a policy that the platform made available to requesters during the COVID-19 period. Our findings suggest that workers indirectly finance on-the-job online training by accepting lower wages during the pandemic. Using a difference-in-differences research design, we also provide causal evidence that online training reduces both job completion time and the probability of a task being discontinued. Our findings show that both employers and employees in our online labor context reacted to the pandemic by participating in online labor procedures with different risk strategies and labor approaches. Our findings provide key insights for several groups of crowdsourcing stakeholders, including policy-makers, platform owners, hiring managers, and workers. Managerial and practical implications in relation to how online labor markets react to external shocks are discussed.

1 Introduction

The COVID-19 pandemic, as an unusual economic shock, has caused a messy combination of disaggregated sectoral supply and demand disturbances (Baqaee and Farhi 2020). Although the final stages of the pandemic are behind us, it is still very difficult to assess its long-term effects on the economy in general and especially on the labor market (Cortes and Forsythe 2020; Jordà et al. 2020).

In the conventional labor market, retailers and brands face short-term challenges related to health and safety issues, supply chains, employees’ working behavior, cash flow, sales and labor relations, and management. At the same time, online shopping, online communication, entertainment, and education have increased in unprecedented ways. Remote working and the associated use of the internet and social media have grown at the same pace (Candela et al. 2020).

All the aforementioned health, economic, environmental, and social challenges caused by COVID-19 worldwide give reason to assume that they will also have an impact on paid crowdsourcing platforms, such as Amazon Mechanical Turk (MTurk) or Prolific. Previous studies have shown, for example, how the diversity of the available workforce is reduced if, due to the pandemic, people experiencing financial difficulties no longer have internet connections that are reliable enough to participate in online work environments (Lourenco and Tasimi 2020). Economic hardships can also affect worker diversity in the opposite way (Arechar and Rand 2021). One can argue that the labor force might become more diverse in the long run, as the need for new forms of employment might attract new workers to online marketplaces.

Arguably, one of the most valuable aspects of online technology is how it connects people. The unprecedented disruption in the wake of the COVID-19 pandemic has led countless institutionalized practices and established norms to be reimagined. Hence, the pandemic significantly elevated the value of crowdsourcing as a vital economic lifeline, especially through online labor markets (Gurca et al. 2023). With restrictions limiting traditional employment avenues, many individuals turned to online labor platforms to generate income from the safety of their homes (Dąbrowska et al. 2021; Colovic et al. 2022). This shift highlighted the resilience and adaptability of crowdsourcing, transforming it into a primary income source for countless workers. As remote work became a necessity, the convenience and accessibility of online crowdsourcing services not only provided financial stability but also demonstrated the potential for a more decentralized and flexible employment landscape in the post-pandemic world (Vermicelli et al. 2021).

In online labor markets, requesters, like employers in the conventional labor market, face unexpected uncertainty due to the pandemic and therefore try to follow practices that reduce undue risks and support a more controlled online working environment (Carmel and Káganer 2014; Soto-Acosta 2020). This proactive coping behavior can include introducing training sessions before online hiring, a stronger future time orientation regarding online tasks, and more detailed job descriptions that help candidates assess whether they are eligible to participate in a particular online job. Although all these practices can be viewed as beneficial working strategies that support the personal resources of the increasing number of affected individuals (Chang et al. 2021), they may result in more conservative and rigorous behavior by requesters seeking to tackle the risk and stress imposed by the pandemic (Trougakos et al. 2020). For example, requesters’ behavior may be driven by trends in several online job dimensions, including concerns tied to overall low job quality and lower working engagement during the pandemic. To a large extent, we investigate how to bolster the resilience of the online labor market from the point of view of both workers and requesters.

We show that workers may contribute to investments in job training by accepting lower wages. This is one of the first studies to find causal evidence to support human capital theory within an online labor context. Human capital theory suggests that individuals have a set of skills or abilities that they can improve or accumulate through training and education (Becker 1964).Footnote 1 Workers may finance on-the-job training by accepting lower wages (Fairris and Pedace 2004). In our case, requesters use a pretask-training strategy in an attempt to maintain the quality of the productivity outcomes. The decision to offer online training is ultimately made by the employer, i.e., the requester. Although workers pay for part or all of their training by accepting lower wages, their decision to undertake training depends on which task they decide to join. Thus, we believe the task is the logical unit of analysis for exploring the causal effect of online training on several productivity indicators (Heuser et al. 2022).

The academic and practical interest in leveraging crowdsourcing initiatives to address challenges arising from the COVID-19 pandemic is on the rise. There is a noticeable expansion in the scientific literature discussing the involvement of online labor markets in providing crowdsourcing services during this global health crisis (Vermicelli et al. 2022). For example, as the pandemic caused a significant shift in the job market and many employees had to adapt to working from home, online job platforms became popular because they allowed people to work over the internet (Arechar and Rand 2021). Despite this growing body of literature, empirical evidence detailing the dynamics of these online labor markets during the pandemic remains limited. The existing literature underscores the necessity for a quantitative assessment of the economic impact associated with crowdsourcing initiatives amid disruptive events (Vermicelli et al. 2022).

This paper seeks to bridge this knowledge gap by empirically examining the mechanisms that govern how online labor markets adapt to an external shock, specifically the COVID-19 pandemic. The aim of this paper is to study the determinants of online task completion within an emerging online labor market before and during the initial outbreaks of the COVID-19 pandemic. In this paper, we focus on the following research questions.

RQ1: How did the demographics of online workers and the characteristics of tasks influence the probability of task completion or discontinuation during the onset of the COVID-19 pandemic?

RQ2: How does the implementation of online training—a policy introduced by the online labor platform at the onset of the pandemic—affect both wages and the completion status of online tasks?

As far as we know, our paper is the first to use online labor data to study how the COVID-19 pandemic has changed online labor crowdsourcing markets and labor mechanisms. The paper aims to increase our understanding of the structure and functioning of the on-demand online job market. This is achieved through the presentation of results derived from a distinctive dataset comprising over 13,000 online tasks posted by 1046 requesters.

The structure of the paper is as follows. Section 2 reviews labor issues related to online labor markets. Section 3 describes the data and the empirical strategy. Section 4 presents the estimation results, and Sect. 5 provides insights regarding the theoretical and practical implications. Section 6 concludes the paper.

2 Online labor markets

2.1 The online setting

Online labor markets have emerged not “in the wild” but within the context of highly structured platforms created by for-profit intermediaries (Autor 2001). These platforms give employers and firms the opportunity to directly outsource an online job, through an open call, to a large set of potential anonymous workers. Within the online setting, employers are called requesters. In general, online labor markets offer workers three ways to find jobs. First, they can use electronic search to explore job postings tailored to specific categories or those requiring particular skills. Second, they may opt to receive email notifications from the platform, alerting them to job postings within a designated category. Lastly, though infrequently, workers may receive direct invitations from employers to apply for specific positions. Conversely, requesters seeking workers have two primary methods. They may receive inquiries from workers who discover job openings by perusing the platform's offered jobs, or they may search for workers themselves and invite specific workers to apply (Barach and Horton 2021; Kässi and Lehdonvirta 2018).Footnote 2

In this context, crowdsourcing constitutes a mechanism that optimally reallocates resources through labor matching and performance (Pallais 2014; Dube 2017). Crowdsourcing platforms specialize in hosting small tasks that need human intelligence (Fernandez et al. 2019).Footnote 3 A great advantage of this labor procedure is the participation of dispersed workers, which provides requesters the opportunity to make transient contracts for work, during which time the task is completed, payment is made, and the contract is dissolved.

2.2 A comprehensive overview of their evolution over the years

Since their debut in 2006, on-demand online labor platforms have played a significant role in shaping the gig economy. They have emerged as the new online distributed problem-solving and production model in which networked people collaborate to complete a common task. These platforms are becoming an increasingly important part of the labor market by hosting and evolving their online features (Autor 2001). It is estimated that there was an approximately 50% increase in flexible work arrangements in the U.S. economy between 2005 and 2015, which accounts for 94% of the net employment growth in the U.S. (Katz and Krueger 2019).Footnote 4 Agrawal et al. (2015) and Kokkodis and Ipeirotis (2016) report that oDesk faced exponential growth with earnings greater than $10 billion by 2020, while Amazon Mechanical Turk received new jobs worth hundreds of thousands of dollars in recent years. At the same time, tens of millions of people have found employment opportunities through such platforms (Kassi et al. 2018). Growth is forecast to continue in the near future (Chandler and Kapelner 2013).

2.3 Online versus offline markets

To date, there has been relatively little work examining the nature of online labor markets and how they are affected by offline shocks. These markets are relatively new, which could explain the lack of research, but another factor is that they have not received much mainstream attention. Nevertheless, they are worthy of research attention for several reasons beyond just their current size and the ease with which research can be conducted (Horton et al. 2011; Chen and Horton 2016). What, then, distinguishes online work? The most obvious distinguishing characteristic of online labor is that it is performed online rather than by workers who are physically collocated. Therefore, it removes the role of geography.Footnote 5 Moreover, in contrast to traditional labor markets, it is commonplace for workers to work for many different “employers” (i.e., requesters) within a short amount of time, even in markets such as oDesk/Upwork that “look” more traditional.

Another key difference between online labor markets (OLMs) and other marketplaces is that a work project is mainly an “experience good.” This means that it is difficult (if not impossible) to predict the quality of the deliverable in advance (Horton and Chilton 2010). To resolve this uncertainty, many studies have explored the determinants of online productivity in relation to the successful completion of tasks. For example, studies have linked the quality of labor in online labor markets with demographics and human capital characteristics (Acemoglu and Restrepo 2018; Farrell et al. 2017), wage-related factors (Chen and Horton 2016; Horton and Chilton 2010), personality attributes (Mourelatos et al. 2022), incentives (Mourelatos et al. 2023), task characteristics (Mourelatos et al. 2020), workers’ reputation (Kokkodis and Ipeirotis 2016), mood (Mourelatos 2023) and the labor structure of crowdsourcing (Pelletier and Thomas 2018). Many of the abovementioned studies have been conducted on Amazon Mechanical Turk (Johnson and Ryan 2020).Footnote 6

In online labor markets, workers’ ability to charge for their services largely depends on their skills.Footnote 7 To meet the evolving demands of the labor market, employees (i.e. online workers) frequently endeavor to broaden their skills and knowledge (Kokkodis 2022). Similarly, employers (i.e. requesters) also aim to reduce uncertainty in the hiring process by leveraging all available information about potential employees and implementing strategies to enhance their understanding of successful hiring in the online job market (Kokkodis and Ransbotham 2022).

2.4 Online labor markets during COVID-19

The COVID-19 pandemic has disrupted economies and societies, creating a need for collaboration, synergy, and resilience, especially within business environments (Al-Omoush et al. 2021). Crowdsourcing initiatives have played a significant role in addressing these challenges. Majchrzak and Shepherd (2021) suggest that community and crowd-based digital innovation are crucial during crises, facilitating compassionate efforts like those seen during the pandemic. Temiz (2021) examined HacktheCrisis, a Swedish digital hackathon in response to COVID-19, noting the enthusiastic participation of private companies and citizens, compared to public organizations. Al-Omoush et al. (2021) explored how a sense of community contributed to harnessing the wisdom of the crowd during the pandemic, emphasizing the importance of collaborative knowledge creation through social media crowdsourcing. Kokshagina (2022) discussed Open COVID-19, a crowdsourcing initiative by Just One Giant Lab, focusing on its role in developing and testing low-cost solutions. Transparency emerged as a key factor in building a collective project memory. Additionally, Mansor et al. (2021) investigated how crowdsourcing initiatives impacted the business performance of small and medium-sized enterprises in Malaysia, finding positive effects on performance.

During the pandemic, the increasing interest led crowdsourcing platforms to enhance their operational structures by integrating gamification tools, various payment schemes, and training mechanisms into their labor framework. This has been done to control the quality of responses (Jin et al. 2020). More recent research indicates that AI tools can also be provided to support workers in their online jobs (Wiles and Horton 2023). Worker-specific online training strategies are another means to stimulate workers’ intrinsic needs.Footnote 8 By default, training of crowd workers is performed prior to their participation in an online job. The goal is to teach workers the basic skills and expertise required for the actual task. Pre-COVID studies have shown that, in this way, a requester can significantly improve the quality of workers’ responses on a variety of crowdsourcing microtasks. However, how was this mechanism of quality assurance used by requesters as a recovery strategy during the pandemic, and what consequences did it have on offered wages? Our paper is one of the first attempts to tackle this question and provide valuable insights into the online labor process.

For that reason, our paper also relates to the literature on the economic effects of the COVID-19 crisis and the impact of the COVID-19 pandemic on alternative working arrangements (Adams-Prassl et al. 2020b). Concerning the conventional labor market, Carlsson-Szlezak et al. (2020a, b) suggest that supply-side disruptions will negatively affect supply chains, labor demand, and employment. This has led to prolonged periods of layoffs, rising unemployment and a generalized change in the behavioral labor patterns of employers and employees. The COVID-19 pandemic led to spikes in uncertainty for which there are no close historical parallels (Baker et al. 2020; Altig et al. 2020). Bonadio et al. (2020) found some pandemic-induced contractions in the labor supply. Eleven et al. (2020) linked the impact of the pandemic with a fall in worker productivity and with a decline in labor supply. Brinca et al. (2021) estimated labor demand and supply shocks in several U.S. economic sectors and found a decrease in work hours that was related to the lockdown and other government policies during the pandemic. These changes in the traditional labor market have several socioeconomic consequences. Adams-Prassl et al. (2020a) showed that workers who can perform none of their employment tasks from home are more likely to lose their job. Yasenov (2020) found that workers whose tasks are less likely to be performed from home have lower educational levels and are younger adults and mostly immigrants.

However, few studies have analyzed these issues in the online labor market. Stephany et al. (2020) used data from the Online Labor Index to explore the labor market effects of the COVID-19 pandemic. According to their results, overall, the COVID-19 pandemic has not had a substantial effect on the number of registered worker profiles. Mueller-Langer et al. (2021) found a positive effect of stay-at-home restrictions on job postings and hires of remote workers relative to on-site workers. These findings raise a crucial question: how has COVID-19 affected labor procedures, employers and workers who already participate remotely in crowdsourcing platforms? The first instinct is to draw from their structural characteristics and consider crowdsourcing a form of labor that does not require face-to-face contact and only needs a computer or mobile device and an internet connection. On this view, it can mostly be grouped with occupations that have a large share of workers working remotely and are less affected by COVID-19 (Beland et al. 2020). This turns out to be wrong.

Our paper, by using a unique dataset from an emerging online labor market, shows that online labor markets have also tried to readjust and adapt to the new working conditions. We show that during COVID-19, requesters have put more effort into the labor process by requiring training before making the final hiring choice, monitoring continuous improvement of the task, and discontinuing it when they determine that workers produce unforeseen outputs.

3 Dataset and empirical strategy

3.1 Data

For our analysis, we partnered with a crowdsourcing platform. The data used in this paper were obtained from the ICT department of the Toloka platform, which provided assurance that the sample is representative of the online labor market. This ensures that the findings from this study are likely to be applicable to the broader online labor market and not just specific to a particular segment or group. This OLM has a typical crowdsourcing process and connects requesters and workers as follows. A requester defines a batch of tasks (e.g., transcription, image tagging, sentiment analysis), along with other configuration details, e.g., the specific task instructions, reward, desired outputs, and desired worker profiles. The requesters post projects as open-call types of jobs in the marketplace. The workers who qualify to work on the task can start working on it either through a dedicated mobile app or through the web application.

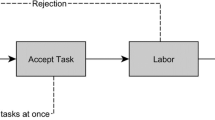

We have thoroughly examined the platform under consideration, and, at present, we do not have any indications that set it apart significantly from other similar platforms. This lack of divergence is crucial in ensuring the representativeness of our findings. The framework employed by this online labor market adheres to well-established standards, mirroring those embraced by other prominent platforms such as Amazon Mechanical Turk and Crowdworkers, as illustrated in Fig. 7 in Appendix 1.

While it is essential to acknowledge the similarities in the framework of the online labor market under study with other platforms, we cannot completely rule out the possibility of subtle differences. For instance, it has been observed in platforms, like the one we use, that the user population may exhibit slight variations, such as a marginally higher level of education compared to Amazon Mechanical Turk (Difallah et al. 2018). However, it is crucial to emphasize that these nuanced distinctions do not pose a significant threat to the generalizability of our results (please refer to Appendix 1 for details on the online labor market).

Our data consist of over 13,000 online microtask jobs posted between March 11, 2019 and March 10, 2021 by 1046 requesters.Footnote 9 Hence, the data allow us to investigate the change in requesters’ and workers’ preferences due to the COVID-19 pandemic. Unfortunately, regarding requesters, we do not have demographic information except for their identifier and task-specific characteristics. The data contain the average number of workers involved in a task, their gender and age, as well as requesters’ choices on pretraining, required outputs and the word count of all job descriptions. Thus, we have an opportunity to explore how these variables are associated with the demand and supply of labor affected by the pandemic. Further details on the data are provided in Appendix 2, which includes a terminology table.

Our main dependent variables of interest include a task’s completion time (in seconds/continuous scale), completion status (1: completed successfully, 0: otherwise), discontinued status (1: discontinued by requester, 0: otherwise), and offered wage categories (on a 5-point categorical scale, where 1: Low wage and 5: High wage). We also take advantage of an option that the online platform offers, in which requesters have an opportunity to discontinue their tasks when they feel that the final average outcome will not meet the minimum quality threshold level.

3.2 Data insights overview

Table 1 provides summary statistics of the data. Detailed variable definitions are provided in Table 14 in Appendix 2.

Regarding our dependent variables, in Table 2, we observe that during the COVID-19 outbreak, the average percentage of tasks being discontinued increased by approximately 6.7%, while the percentage of successfully completed tasks decreased by 1.3%. Regarding the completed tasks, the completion time seemed to have decreased over time in all cases due to the COVID-19 outbreaks (Table 2).

Tables 3 and 4 include paired t-tests for the variables before and during the global COVID-19 outbreak.Footnote 10 The initial results highlight several trends. First, the number of online jobs almost doubled during COVID-19.Footnote 11 Additionally, when the pandemic outbreak occurred, more male and older workers became part of the online workforce. Requesters seem to have responded quickly to adapt and protect the quality of online labor. During the pandemic, required outputs decreased, while job descriptions (i.e., task instructions) became slightly longer. Several papers have noted similar effects of COVID-19 on several attributes of online job postings (e.g. Hensvik et al. 2021; Forsythe et al. 2020).

Finally, statistically significant changes also occurred in the offered wage categories. Requesters offered a higher share of below-average wages, mainly during the COVID-19 pandemic (Table 4). Undoubtedly, the platform's dynamic time trends are a crucial factor to consider. As depicted in Fig. 1, the overall trend is evident. However, to analyze the impact of the pandemic on this relationship in greater depth, we proceed to our empirical analysis (Figs. 1 and 2).

3.3 Empirical model

3.3.1 Tasks’ probability of being completed or discontinued

To investigate the determinants of a task either being completed or discontinued by a requester, we utilize the following logit model:

where Y* is the latent variable reflecting the probability of task i being completed or discontinued by a requester, and k = 1, …, 5 indexes the COVID-19 dummy time trends (i.e., indicator variables for whether the online task was conducted before or during the COVID-19 outbreak in each case).Footnote 12 We incorporated several COVID-19 dummies, with Global COVID-19 being the primary variable of interest. Each dummy takes the value of 1 once daily deaths reached 1000 confirmed cases in each case. The outbreak dates we used are as follows: Global COVID-19, March 9th, 2020; America, April 2nd, 2020; Europe, March 21st, 2020; Asia, February 17th, 2020; Russia, May 15th, 2020 (Source: World Health Organization). Next, \(D_{i}\) is a vector including the demographics of the workers (i.e., average age and share of males), \(X_{i}\) is a vector of the task characteristics (i.e., number of participant workers, required training or not, word count of job description, task outputs, date) and \(\varepsilon\) is the idiosyncratic error term. All our specifications control for the task’s project category.
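Under the definitions above, one way to write the latent-variable logit specification is the following sketch. The intercept \(\alpha\), the coefficient vectors \(\gamma\) and \(\delta\), and the subscript layout are assumed notation introduced here for illustration; project-category controls are left implicit.

\[
Y_{i}^{*} = \alpha + \sum_{k=1}^{5} \beta_{k}\,\text{COVID}_{k,i} + D_{i}^{\prime}\gamma + X_{i}^{\prime}\delta + \varepsilon_{i}, \qquad \Pr(Y_{i} = 1) = \frac{\exp(Y_{i}^{*} - \varepsilon_{i})}{1 + \exp(Y_{i}^{*} - \varepsilon_{i})}
\]

Here \(Y_{i} = 1\) when task i is completed (or, in the second set of models, discontinued), and \(\varepsilon_{i}\) is assumed to follow a standard logistic distribution.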

3.3.2 Tasks’ completion time

Regarding the COVID-19 pandemic, we use data from the official WHO COVID-19 statistics and define the outbreak date in each case as the date when the pandemic reached over 100,000 confirmed cases on a continent-specific basis and over 1000 confirmed cases on a country-specific basis. We introduce COVID-19 time trends as dummy variables. In this model, if \(\hat{\beta }\) = 0, the tasks before and during the COVID-19 outbreak have the same probability of being completed or discontinued by the requester. If \(\hat{\beta }\) < 0, the tasks during the COVID-19 outbreak have a lower probability of being completed or discontinued by the requester than before the pandemic. If \(\hat{\beta }\) > 0, the tasks during the COVID-19 outbreak have a higher probability of being completed or discontinued by the requester compared to the time before the pandemic.
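The outbreak indicators can be illustrated with a minimal Python sketch that uses the outbreak dates listed in Sect. 3.3.1. The function name, the dictionary keys, and the rule that a dummy equals 1 on and after the outbreak date are assumptions made for illustration, not the authors' implementation.

```python
from datetime import date

# Outbreak dates as listed in the text (Source: World Health Organization).
OUTBREAK_DATES = {
    "global": date(2020, 3, 9),
    "america": date(2020, 4, 2),
    "europe": date(2020, 3, 21),
    "asia": date(2020, 2, 17),
    "russia": date(2020, 5, 15),
}


def covid_dummies(task_date):
    """Return one 0/1 indicator per region for a task posted on task_date.

    Assumption: a dummy switches to 1 on the outbreak date itself and
    stays at 1 afterwards.
    """
    return {
        region: int(task_date >= start)
        for region, start in OUTBREAK_DATES.items()
    }


if __name__ == "__main__":
    # A hypothetical task posted on March 20, 2020.
    print(covid_dummies(date(2020, 3, 20)))
```

A task dated before February 17, 2020 receives zeros for all five indicators, so the pre-pandemic period acts as the reference category.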

Moreover, to estimate the effect of the pandemic on the completion time for the tasks properly finalized, we consider a straightforward log-linear OLS regression model,Footnote 13 using the same set of independent variables, given by:

where completion time i denotes task i’s average completion time. The other variables are the same as in the first equation. All our specifications control for the task’s project and offered wage category. Completion times were, of course, observed only for tasks that were completed successfully.
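A log-linear specification consistent with this description can be sketched as follows. Taking the natural logarithm of the dependent variable is an assumption about what the log regression refers to, and \(\alpha\), \(\gamma\), \(\delta\), and \(u_{i}\) are assumed notation; project and wage category controls are left implicit.

\[
\ln(\text{completion time}_{i}) = \alpha + \sum_{k=1}^{5} \beta_{k}\,\text{COVID}_{k,i} + D_{i}^{\prime}\gamma + X_{i}^{\prime}\delta + u_{i}
\]

Under this form, a coefficient \(\beta_{k}\) is read approximately as the proportional change in average completion time associated with the corresponding outbreak period.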

3.4 Wages

Furthermore, we use the wage categories as dependent variables to examine the wage fluctuations and how they were affected by the COVID-19 outbreaks. In this case, we utilize an ordered logistic model. Unfortunately, we did not have the opportunity to have wages on a continuous scale due to the sensitivity of the information provided by the online platform (Fig. 7, Appendix 1). Instead, we use the five wage categories, i.e., low, below average, average, above average, and high.

Our approach to addressing the challenge of obtaining specific information on task-related wages involved a rigorous protocol during our collaboration with the data acquisition platform. We intentionally refrained from acquiring details on wages in both absolute and relative terms, adhering to a strict protocol.Footnote 14 Subsequently, through extensive discussions with the platform's data scientists, we gained access to wage indicators. To enable a meaningful analysis of probabilities before and after the pandemic, we implemented the following methodology:

Categorization: The platform's data scientists categorized offered wages into five percentile-based groups (0–20%, 20–40%, 40–60%, 60–80%, 80–100%), drawing from the distribution on the online platform.

Temporal Consideration: To prevent the introduction of pandemic-induced dynamics into the wage distribution, we exclusively utilized data from the platform's last three years of operation before the onset of the pandemic.

Task Assignment: All tasks within our dataset, including those conducted online during the pandemic, were systematically assigned to their respective wage categories based on the established percentile criteria.

Comparative Analysis: This approach allows a robust comparison, before and after the pandemic started, of the probability that a task falls into each wage category, with the categories constructed from pre-pandemic wage values. This comparison is facilitated by the inclusion of an onset dummy variable for COVID-19.
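The four steps above can be illustrated with a short Python sketch. The wage values are invented, and the function names, the interpolation method for the percentiles, and the treatment of wages that fall exactly on a cut point are assumptions, since the platform's actual procedure is not disclosed.

```python
from bisect import bisect_right
from statistics import quantiles


def wage_cutoffs(pre_pandemic_wages):
    """Return the 20th, 40th, 60th and 80th percentile cut points.

    Only pre-pandemic wages are passed in, so the category boundaries
    are not influenced by pandemic-period wage dynamics.
    """
    # n=5 yields the four interior cut points that separate quintiles.
    return quantiles(pre_pandemic_wages, n=5, method="inclusive")


def wage_category(wage, cutoffs):
    """Map a wage to a category from 1 (low) to 5 (high).

    Assumption: a wage equal to a cut point goes to the higher category.
    """
    return bisect_right(cutoffs, wage) + 1


if __name__ == "__main__":
    # Hypothetical pre-pandemic wages: 1, 2, ..., 100 (arbitrary units).
    cutoffs = wage_cutoffs(list(range(1, 101)))
    # Tasks from any period are classified with the same fixed cut points.
    for wage in (5, 50, 95, 500):
        print(wage, wage_category(wage, cutoffs))
```

Because the cut points are fixed before the pandemic, a shift in the share of tasks per category after the onset reflects a change in offered wages rather than a change in the category boundaries.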

Hence, we consider the econometric specification given by:

Our ordered logit model includes task category fixed effects. We report marginal effects with covariates evaluated at their mean values.Footnote 15 Even though our regression framework cannot address endogeneity directly, the rich set of control variables and the sample size make our statistical models less subject to bias vis-a-vis the literature (e.g., Bayo-Moriones and Ortín-Ángel 2006). All models include requester fixed effects.
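A standard ordered logit formulation consistent with this description can be sketched as follows. The latent wage index \(W_{i}^{*}\), the thresholds \(\mu_{j}\), the single onset dummy \(\text{COVID}_{i}\), and the coefficient symbols are assumed notation for illustration; fixed effects are left implicit.

\[
W_{i}^{*} = \beta\,\text{COVID}_{i} + D_{i}^{\prime}\gamma + X_{i}^{\prime}\delta + \varepsilon_{i}, \qquad W_{i} = j \ \text{ if } \ \mu_{j-1} < W_{i}^{*} \le \mu_{j}, \quad j = 1, \ldots, 5
\]

with \(\mu_{0} = -\infty\), \(\mu_{5} = +\infty\), and \(\varepsilon_{i}\) assumed to follow a standard logistic distribution. The five values of j correspond to the low, below average, average, above average, and high wage categories.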

4 Results

4.1 Baseline results

We report the marginal effects and discuss how the behavior of requesters and workers was correlated with task characteristics and the COVID-19 outbreak within an online labor market. First, we hypothesized that an online job’s outcome is associated with the accumulation of specific task characteristics and the evolution of the pandemic. Table 5 presents the related key results. Columns (1)–(3) show the estimated probability of an online job being completed properly (i.e., completed = 1 and uncompleted = 0), while Columns (4)–(6) show the probability of an online job being forced to stop by a requester (discontinued = 1, ongoing = 0). An online job that was forced to be discontinued by the requester does not fall into the category of uncompleted jobs. ‘Discontinued online jobs’ are those that a requester cancels before receiving the full set of responses from the workers and before the time limit expires. The ‘uncompleted jobs’ category includes online jobs for which requesters have received answers from workers, but the quality failed to meet the requesters’ requirements and quality threshold. In addition, Columns (2) and (5) include the time trend dummies (i.e., COVID-19 outbreaks), while Columns (3) and (6) present estimates obtained when adding task category (e.g., transcription, image labeling) and offered wage category controls.

Regarding the probability of an online job outcome, the current study revealed clear trends over time, which were amplified further after the pandemic outbreaks. More specifically, the probability of an online job being completed properly decreased by 9.7 p.p after the global COVID-19 outbreak occurred. Interestingly, the probability of a task being discontinued increases over time. These trends also seem to remain when we include several continent-specific pandemic outbreaks.Footnote 16

Moreover, we explored differences in the outcome in response to task attributes. The coefficients reported in Table 5 show the estimated effect of a task on being properly completed or unexpectedly discontinued based on several labor characteristics. We found that as the age and share of male workers increased, the probability of the online job being completed increased by 4.2 p.p and 3 p.p, respectively, while the probability of being unexpectedly discontinued decreased by 7.2 p.p and 6.8 p.p, respectively. With respect to the requesters’ job requirements, we observe that the larger the number of workers participating, the lower the probability of a task being completed and being unexpectedly discontinued. When training is needed, requesters increase the probability of completing an online job by 27 p.p, while they decrease the probability of a job being discontinued by 12.8 p.p. In Table 5, we enhanced the specifications by incorporating the age squared term alongside age, enabling a more precise representation of the effect and facilitating the exploration of non-linearities. Notably, in all instances, we observed a consistently negative impact of age squared. This implies that as workers age, the influence of age on the likelihood of completing or discontinuing a task diminishes.
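To make the role of the age squared term concrete, the following minimal sketch computes the marginal effect of age and the age at which it changes sign for a quadratic specification. The coefficient values are hypothetical placeholders, not estimates from Table 5.

```python
def marginal_effect_of_age(b_age, b_age_sq, age):
    """Derivative of b_age*age + b_age_sq*age**2 with respect to age."""
    return b_age + 2.0 * b_age_sq * age


def turning_point(b_age, b_age_sq):
    """Age at which the marginal effect of age is zero."""
    if b_age_sq == 0:
        raise ValueError("no turning point without a quadratic term")
    return -b_age / (2.0 * b_age_sq)


# Hypothetical coefficients: positive linear term, negative squared term.
B_AGE, B_AGE_SQ = 0.042, -0.0005

for age in (20, 30, 40, 50):
    print(age, round(marginal_effect_of_age(B_AGE, B_AGE_SQ, age), 4))
print("turning point:", round(turning_point(B_AGE, B_AGE_SQ), 1))
```

With a negative squared term, the marginal effect shrinks as age rises and eventually changes sign, which is the pattern of a diminishing age effect described above.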

Table 6 presents the OLS coefficients for task completion time. Column (1) presents the effects of the workers’ demographic characteristics, while Columns (2) and (3) include estimates with task characteristics. Columns (5) and (6) also consider the COVID-19 outbreaks. Columns (4) and (6) include only the tasks that have been fully completed. All specifications include offered wage level controls and task category fixed effects.

First, we find a negative effect of gender and age on log values of task completion time. When the share of male workers increases by 1%, the completion time decreases by approximately 68%, while an increase in age by 1 unit leads to a decrease in completion time by 13.6%. The coefficients on the share of males and age are negative and statistically significant at the 1% level across columns. Concerning the age squared variable, our observations reveal that as workers' age advances, there may be an upturn or slower decline in tasks’ completion time. The magnitude of these effects is not highly affected when we introduce the COVID-19 time trends (Column (5)) and when we refer only to the completed tasks (Columns (4) and (6)). By also taking into consideration the findings of Table 6 for the completed tasks, we conclude that, on average, males and older people are more effective at performing jobs in online labor markets than females and younger workers.Footnote 17
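Because the dependent variable is in logs, a coefficient b on a regressor corresponds to an exact percentage change of 100·(exp(b) − 1) per unit, which is close to 100·b only when b is small. The sketch below illustrates the conversion. It assumes, purely for illustration, that a reported percentage such as 13.6% is the raw log-point coefficient times 100; the text does not state which convention is used.

```python
import math


def pct_change_from_log_coef(beta, delta_x=1.0):
    """Exact % change in the outcome when x rises by delta_x and ln(outcome) is the regressand."""
    return (math.exp(beta * delta_x) - 1.0) * 100.0


# Hypothetical reading: coefficients expressed in log points.
for label, beta in (("age", -0.136), ("global outbreak", 0.115)):
    approx = 100.0 * beta
    exact = pct_change_from_log_coef(beta)
    print(f"{label}: approx {approx:.1f}%, exact {exact:.1f}%")
```

The gap between the two readings grows with the size of the coefficient, so it matters most for large effects such as the one on the share of male workers.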

Regarding task characteristics, our results support the idea that the greater the number of workers involved and job outputs a requester requires, the longer it will take for a task to be completed. Conversely, when requesters train their workers before the online job takes place, a more detailed description of the job needs to be performed to increase the probability of the job being properly completed within a short period of time. All the above-mentioned effects are statistically significant at the 1% level, and they do not vary in magnitude between specifications.

Column (5) includes both tasks properly completed, and tasks completed but with unsatisfactory results, which are, thus, forced to be discontinued. Column (6) includes only those tasks completed properly. Concerning the COVID-19 outbreaks, the results reveal that after the global outbreak occurred, the completion time is associated with an 11.5% increase, at the 1% level of significance. However, this effect follows the opposite direction in Column (6), which refers only to completed tasks. It seems that this effect is mainly driven by Asian workers participating in the online platform. By taking into consideration the country distribution of online platforms (almost 40% of the online workforce originates from Asian countries),Footnote 18 we provide evidence that the average increase in task completion time online is mainly driven by Asian workers affected by the pandemic. Our findings are in line with the previous literature findings on Asian crowdworkers’ working preferences (mainly Indians) (Chandler and Kapelner 2013). After the Asia COVID-19 outbreak, the task completion time increased by 21.6% (Column (5)), while after the outbreaks in Europe and America, task completion times decreased. Unfortunately, we do not have other online working insights to draw on to reach a deeper understanding of what it means to be an Indian crowdworker during the pandemic and to provide comparisons between workers from India and the U.S. or Europe.

It is essential to note that, to the extent that employers have reacted to the pandemic by offering training, one should not control for training if one is interested in the effect of the pandemic on outcomes, because this may introduce a selection bias problem (Angrist and Pischke 2009). In our main specifications, we deliberately excluded training, and our findings, as shown in Columns 1 and 2 of Tables 7, 8, and 9, confirm that this is not the case.

Furthermore, while this section serves as a descriptive analysis, establishing the foundation for our primary research question, it also sets the stage for a more profound exploration. In addition, we estimated a comprehensive interaction model with our key COVID-19 pandemic variable, providing robust support for our observations regarding the pandemic's influence on the online labor platform. Tables 7, 8 and 9 include the interaction effects in relation to each online labor outcome (i.e., the probability of a task being completed, the probability of a task being discontinued, and task completion time, respectively).

Interestingly, the findings reveal that amidst the global COVID-19 outbreak, there exists a negative correlation between the proportion of male workers and the likelihood of task completion (Table 7, Column 3). Conversely, there is a positive correlation between the share of male workers and the likelihood of task discontinuation (Table 8, Column 3). Additionally, a higher proportion of male workers is associated with shorter completion times for tasks (Table 9, Column 3). More specifically, we find that after the COVID-19 outbreak, a 1% increase in the share of male workers in a task further decreases the probability of task completion by 9.1%, increases the probability of a task being discontinued by 4%, and decreases the completion time by 29.4% compared to the period before the pandemic.

Concerning age, our observations indicate that as the average age of workers rises amid the pandemic, the probability of task completion further decreases by 0.7% while the likelihood of task discontinuation further increases by 1.5% (Tables 7, 8, Column 4). Furthermore, a one unit increase in age is associated with longer completion times (i.e. approximately 8%) for tasks during the pandemic (Table 9, Column 4). A plausible interpretation for this trend could be rooted in health-related issues that may exhibit a strong correlation with age progression during the pandemic.

Moreover, during the COVID-19 outbreak, there is a positive correlation between the increased number of involved workers and the heightened probability of task completion (i.e. 1.7%), coupled with a decreased likelihood of discontinuation (i.e. 0.8%) (Tables 7, 8, Column 5). Additionally, completion times show a further decrease of 1.1% with a higher number of workers during the pandemic (Table 9, Column 5). One plausible interpretation for this pattern could be associated with the implementation of the training strategy, potentially leading to the engagement of more adept and skilled workers in online tasks.

In the context of online job descriptions, we notice consistent patterns like those observed before the pandemic. However, concerning the required task outputs, findings indicate that, during the pandemic, an increased number of required outputs are linked to a decreased likelihood of task completion and an increased likelihood of task discontinuation (Tables 7, 8, Column 7). Notably, during the pandemic, when tasks are completed, the completion time is lower with a concurrent increase in required outputs (Table 9, Column 7). This intriguing observation may be attributed to the involvement of more trained workers in these tasks during the COVID-19 period.

All effects persist consistently across the full specifications in every instance (Tables 7, 8, 9, Column 8).

4.2 Wage dynamics during the COVID-19 outbreak

Now, we turn our analysis to the wage levels offered by the requesters. Table 10 reports the marginal effects where covariates are evaluated at their mean values (Fig. 3). High wage levels are negatively correlated with pretraining and job description length. As expected, a higher number of outputs positively correlates with a higher wage level offered by the requesters. The training of workers allows requesters to cut the required compensation rate and makes it possible to expand a potential workforce capable of performing the required task. This can be compared to a case where a company hires less educated/low-paid employees and trains them within the company instead of hiring highly skilled, high-paid employees. The only difference is that in the online job market, employees themselves pay for this training investment. Figure 4 confirms that tasks requiring training are likely to offer below-average compensation to workers.
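The marginal effects discussed here can be illustrated with a minimal sketch of how ordered logit category probabilities respond to a training dummy when the other covariates are held at their means. The cutpoints, the linear index at the means, and the training coefficient below are hypothetical values chosen for illustration, not estimates from Table 10.

```python
import math


def logistic_cdf(z):
    """Standard logistic distribution function."""
    return 1.0 / (1.0 + math.exp(-z))


def category_probs(xb, cutpoints):
    """Probabilities of each ordered wage category for linear index xb."""
    cdf = [logistic_cdf(c - xb) for c in cutpoints]
    bounds = [0.0] + cdf + [1.0]
    return [bounds[j + 1] - bounds[j] for j in range(len(bounds) - 1)]


def discrete_effect(beta_training, xb_without, cutpoints):
    """Change in each category probability when the training dummy goes 0 -> 1."""
    p0 = category_probs(xb_without, cutpoints)
    p1 = category_probs(xb_without + beta_training, cutpoints)
    return [after - before for before, after in zip(p0, p1)]


# Hypothetical setup: three wage categories (low, medium, high).
CUTPOINTS = [-1.0, 1.0]
XB_AT_MEANS = 0.0          # linear index at covariate means, training = 0
BETA_TRAINING = -0.5       # negative: training shifts tasks toward lower wages

effects = discrete_effect(BETA_TRAINING, XB_AT_MEANS, CUTPOINTS)
for name, eff in zip(("low", "medium", "high"), effects):
    print(f"{name}: {eff:+.3f}")
```

The effects sum to zero across categories by construction, so a lower probability of the high wage category is offset by higher probabilities elsewhere.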

During the global outbreak of COVID-19, we do not observe statistically significant effects on wage levels. Nevertheless, after the pandemic outbreak in America, Asia and Russia, we reveal a statistically significant increase in the tasks offering high wage levels by 2.8, 1.1 and 2 percentage points, respectively, while after Europe’s pandemic outbreak, the effects are in the opposite direction (Column 5). The interpretation of the price effects relies on two online labor aspects. Online labor markets are spot marketsFootnote 19 (Chen and Horton 2016) with monopsony power (Dube et al. 2020). The classical way to think about price-setting in these spot markets is that both requesters and workers exert different cross-side externalities. While Horton (2021) showed that small-scale supply shocks do not have price effects, the pandemic can be considered an enormous, once-in-a-lifetime shock that could have significant effects at the market level. Requesters may try to readjust the minimum wage during the pandemic as a way of increasing employment and maintaining the quality levels of online work.

4.3 The impact of training on task completion

Recognizing the substantial and statistically significant impact of the training intervention on our outcomes, our attention now shifts towards a granular examination of how employers' training strategies during the outbreak of the pandemic influence key metrics such as task completion time, the likelihood of successful task completion, and the probability of employers interrupting tasks. This transition prompts a refined analysis at the requester's level, providing a more nuanced understanding of the dynamics at play.

To ensure the validity of our Difference-in-Differences (DiD) estimates, two pivotal assumptions must be met: treatment exogeneity and parallel trends. The pandemic training policy, uniformly implemented by the online crowdsourcing platform across all requesters during the pandemic, aimed at elevating result quality without consideration for the online labor market's structural nuances. It is crucial to emphasize that the treatment (training) was strategically introduced precisely at the outbreak of the pandemic. This deliberate timing reinforces the exogeneity of our treatment indicator.

Addressing the parallel trends assumption, our comprehensive panel event study and placebo tests serve as robust validations, affirming the satisfaction of this critical requirement. With these assurances, we proceed to aggregate the data and scrutinize requester behavior before and after the pandemic outbreak, employing a difference-in-differences design to investigate the causal impact of the training intervention on our outcomes. Hence, we estimate the following model:

where Y is the selected outcome (completion time, completed tasks, discontinued tasks) of task i posted by requester r in period t, Covidpeak is a temporal dummy equal to one for the period during the pandemic peak (0 otherwise), and Training is 0 in the pre-Covid period and takes on positive values from the Covid peak onward. The treatment is considered continuous and reflects the proportion of the tasks requiring pre-training of the workers. Instead of a discretized version of treatment (i.e., 0/1 for pre-/post-treatment), the variable represents the different gradations of exposure to online tasks requiring training over time. X is a vector including the time-varying demographics of the workers (i.e., average age and share of males) and the task characteristics (i.e., number of participant workers, word count of the job description, task outputs, date), and e is the idiosyncratic error term. All our specifications control for the task’s project category. In Eq. (4), the DiD parameter of interest is δ, which indicates whether differences in an online job’s completion time, or in its probability of being completed or forced to stop, between tasks with and without training changed significantly after the outbreak of the pandemic. Based on our initial findings, we expect that δ < 0 for completion time and discontinued tasks and δ > 0 for properly finished tasks.
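The equation itself is not reproduced in this text. Based only on the variable definitions above, one form it could take is sketched below. The coefficient names α, β and θ are assumptions introduced here, and the project category controls are folded into X for brevity. Because Training is defined as zero before the pandemic peak, the interaction term coincides with Training itself under this reading, so a separate main effect of Training would not be identified.

```latex
\begin{equation*}
Y_{irt} = \alpha + \beta\,\mathit{Covidpeak}_t
        + \delta\,\bigl(\mathit{Covidpeak}_t \times \mathit{Training}_{rt}\bigr)
        + X_{irt}'\theta + e_{irt}
\end{equation*}
```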

Table 11 displays the DiD parameters obtained after estimating the abovementioned equation. Column 1 indicates a decreased completion time and probability of employers’ task-stopping behavior and an increased probability of a task being completed when training is implemented during the exposure period. Our findings reveal that when the share of tasks requiring training increases by 1%, online jobs have an approximately 13.4% lower completion time, a 6.2% higher probability of being properly completed and a 6.2% lower probability of being discontinued by the requester. Our expanded dataset allows us to examine whether our findings remain consistent during another crisis within the same timeframe. Following the COVID-19 pandemic, a global energy crisis emerged in early 2021, characterized by shortages and escalating prices in oil, gas, and electricity markets worldwide (Calanter and Zisu 2022). This crisis stemmed from various economic factors, including the rapid post-pandemic economic recovery outpacing energy supply, and further intensified following the Russian invasion of Ukraine (Ozili and Ozen 2023). To account for this subsequent shock, we incorporated a dummy variable in our econometric model indicating the onset of the energy crisis (i.e., mid-January 2021). As anticipated, specifications (4), (8), and (12) demonstrate that our results were not affected by this exogenous shock.
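As an illustration of how a DiD parameter with a continuous treatment can be recovered, the sketch below simulates requester-level data and estimates δ by ordinary least squares. Everything in it is hypothetical: the data-generating process, the training shares, the true δ of −0.5, and the helper names `ols`, `simulate` and `estimate_delta`. It omits the controls and fixed effects used in the paper and is not the authors' estimation code.

```python
import random


def ols(X, y):
    """Least squares via the normal equations, solved by Gauss-Jordan elimination."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))]
         for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        piv = A[col][col]
        A[col] = [v / piv for v in A[col]]
        for r in range(k):
            if r != col:
                f = A[r][col]
                A[r] = [vr - f * vc for vr, vc in zip(A[r], A[col])]
    return [A[i][k] for i in range(k)]


def simulate(true_delta=-0.5, n_requesters=100, seed=0):
    """Two periods per requester; training exposure is zero before the peak."""
    rng = random.Random(seed)
    X, y = [], []
    for r in range(n_requesters):
        share = (r % 5) / 4.0                  # hypothetical share in {0, .25, .5, .75, 1}
        for covid_peak in (0, 1):
            training = covid_peak * share      # continuous treatment intensity
            log_time = (2.0 + 0.1 * covid_peak + true_delta * training
                        + rng.gauss(0.0, 0.05))
            X.append([1.0, float(covid_peak), training])
            y.append(log_time)
    return X, y


def estimate_delta(X, y):
    """Coefficient on the training exposure term (third regressor)."""
    return ols(X, y)[2]


X, y = simulate()
print("estimated delta:", round(estimate_delta(X, y), 3))
```

Identification in this toy setup comes from variation in training intensity across requesters after the peak, with the period dummy absorbing the common pandemic shift.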

The DiD estimates are valid under the assumption that actual and counterfactual online task outcomes trended similarly before the pandemic outbreak, i.e. when Post = 0. Given that, after the outbreak of the pandemic, training-based tasks were not adopted simultaneously by all requesters, we show that this assumption is met using a panel event study specification.Footnote 20 Therefore, we estimate a generalized variant of Eq. (4). Denote as Onset a variable indicating the month in which a requester first used this option. If completion time, completed tasks, and discontinued tasks are denoted as y, then the panel event specification is the following:

where Yit is the outcome of online task i of requester r at time t (i.e., month). Lag and Lead are the sets of c-lag and k-lead dummies denoting the time distance from the first event, and βc and γk are parameters to be estimated, denoting how completion time, whether a task was completed, and whether it was discontinued vary in periods before and after the event compared to the period prior to the event. Also, φt is a vector of time-related dummy variables (month-of-the-year fixed effects) that controls for the time-varying trend of monthly outcomes. Furthermore, μr is a set of requester fixed effects that absorbs time-invariant factors at the requester level. Lastly, εit is a random error component.
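The event study equation is not reproduced in this text. Using the symbols defined above, a common way of writing such a specification is sketched below. The intercept α and the upper limits C and K are assumptions introduced here, and the sum over lags starts at c = 2 on the assumption that the first lag is the omitted baseline.

```latex
\begin{equation*}
Y_{it} = \alpha + \sum_{c=2}^{C} \beta_c\,(\mathit{Lag}\ c)_{rt}
       + \sum_{k=0}^{K} \gamma_k\,(\mathit{Lead}\ k)_{rt}
       + \varphi_t + \mu_r + \varepsilon_{it}
\end{equation*}
```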

Lags and leads relative to the onset of the training opportunity are defined as follows:
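The definitions are not reproduced in this text. One common convention, in which lags index periods before the onset and leads index periods from the onset onward, with the end points accumulating all more distant periods, would read as follows. The limits C and K are assumptions introduced here.

```latex
\begin{align*}
(\mathit{Lag}\ C)_{rt}  &= \mathbf{1}\,[\,t \le \mathit{Onset}_r - C\,], \\
(\mathit{Lag}\ c)_{rt}  &= \mathbf{1}\,[\,t = \mathit{Onset}_r - c\,], \quad c \in \{1,\dots,C-1\}, \\
(\mathit{Lead}\ k)_{rt} &= \mathbf{1}\,[\,t = \mathit{Onset}_r + k\,], \quad k \in \{0,\dots,K-1\}, \\
(\mathit{Lead}\ K)_{rt} &= \mathbf{1}\,[\,t \ge \mathit{Onset}_r + K\,].
\end{align*}
```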

In Eq. 2 the baseline lead is set where c = 1 (one period prior to the event).Footnote 21
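A minimal sketch of how such event-time indicators could be built for one requester-month is given below. It assumes that lags refer to months before the onset, leads to months from the onset onward, end points are binned, and the period one month before the onset is the omitted baseline. The function name, the window lengths, and the integer month index are hypothetical.

```python
def event_dummies(month, onset, max_lag=3, max_lead=5):
    """0/1 event-time indicators for one requester-month.

    month, onset: integer month indices; onset is None for requesters
    that never used training. lag_c marks c months before onset (c >= 2,
    because c = 1 is the omitted baseline); lead_k marks k months after
    onset (k >= 0). The most distant lag and lead absorb all periods
    further away from the onset.
    """
    names = ([f"lag_{c}" for c in range(max_lag, 1, -1)]
             + [f"lead_{k}" for k in range(0, max_lead + 1)])
    row = dict.fromkeys(names, 0)
    if onset is None:
        return row                      # never-treated: all indicators zero
    rel = month - onset                 # event time in months
    if rel <= -max_lag:
        row[f"lag_{max_lag}"] = 1       # binned pre-period end point
    elif rel <= -2:
        row[f"lag_{-rel}"] = 1
    elif rel == -1:
        pass                            # omitted baseline period
    elif rel >= max_lead:
        row[f"lead_{max_lead}"] = 1     # binned post-period end point
    else:
        row[f"lead_{rel}"] = 1
    return row


# Example: requester whose first training task was posted in month 12.
for m in (5, 10, 11, 12, 14, 30):
    active = [name for name, v in event_dummies(m, 12).items() if v]
    print(m, active)
```

Each requester-month activates at most one indicator, and the baseline month activates none, so the estimated coefficients are read relative to that month.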

Figure 5 primarily examines the time it takes to complete online tasks, while Fig. 6a (left panel) and 6b (right panel) focus on the likelihood of a task being finished or discontinued, respectively. The event study presented in the panels demonstrates a significant drop in the average completion time of online tasks five months after the outbreak of the pandemic. In relation to Fig. 6, the estimated event-time path of the probability of a task being completed exhibits a gradual and consistent increase over time, while the probability of a task being discontinued follows the opposite trend. Specifically, the left panel of Fig. 6 portrays a smooth and steady rise in the likelihood of task completion over time, while the right panel shows a decline in the probability of tasks being discontinued.

Fig. 5 Task completion time (ln). Point estimates are displayed along with their 95% confidence intervals as described in Eq. 5. Leads and lags capture the difference between treated and control group, compared to the prevailing difference in the omitted base period indicated by the vertical line in the plot.

Fig. 6 Probability of a task being completed (left panel) and being discontinued (right panel). Point estimates are displayed along with their 95% confidence intervals as described in Eq. 5. Leads and lags capture the difference between treated and control group, compared to the prevailing difference in the omitted base period indicated by the vertical line in the plot.

As a robustness check, firstly, we re-estimated Eq. (4) by replacing Covidpeak with our alternative pandemic peaks used in Eqs. (1)–(3). As shown in Table 12, the effects of the pretask-training as a strategy on our outcomes of interest also hold in the case of the pandemic peak in Asia, Europe, the U.S., and Russia.

Moreover, we conduct permutation inference using placebo difference-in-differences estimates to provide more evidence that the observed effects are a result of online training during the pandemic and not an existing artifact of the data. To conduct the analysis, we exclusively used data collected prior to March 2020, thereby disregarding all data gathered during the periods when the treatment was actually implemented. Then, using the pre-March 2020 data, we picked two different periods, September 2019 and December 2019, and pretended that the treatment was applied at that time. We estimated the DiD using each pretend treatment date. Table 13 includes the DiD estimates obtained with the fake treatment periods. We did not find an “effect” at those pretend treatment dates, which provides additional support for the idea that online training is driving our reported results.
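The logic of the placebo exercise can be sketched with a simplified two-group, two-period DiD on synthetic data. The sketch is an illustration only: it uses a binary treated/control split rather than the continuous training share, noise-free hypothetical outcomes, and a month index in which 1 is January 2019, so that 9, 12 and 15 stand for September 2019, December 2019 and March 2020.

```python
from statistics import mean


def did_2x2(rows, cutoff):
    """(treated post - treated pre) - (control post - control pre).

    rows: iterable of (month, treated, outcome); post means month >= cutoff.
    """
    cells = {(g, p): [] for g in (0, 1) for p in (0, 1)}
    for month, treated, y in rows:
        cells[(treated, int(month >= cutoff))].append(y)
    m = {key: mean(values) for key, values in cells.items()}
    return (m[(1, 1)] - m[(1, 0)]) - (m[(0, 1)] - m[(0, 0)])


def make_rows(true_effect=-0.3, real_start=15, n_months=20):
    """Synthetic outcomes with a common trend and an effect only from real_start."""
    rows = []
    for month in range(1, n_months + 1):
        for treated in (0, 1):
            y = 1.0 + 0.5 * treated + 0.01 * month
            if treated and month >= real_start:
                y += true_effect
            rows.append((month, treated, y))
    return rows


rows = make_rows()
print("actual date (month 15):", round(did_2x2(rows, 15), 3))

# Placebo: keep only pre-treatment months and pretend treatment started earlier.
pre_rows = [r for r in rows if r[0] < 15]
for fake_start in (9, 12):
    print("placebo date (month %d):" % fake_start, round(did_2x2(pre_rows, fake_start), 3))
```

When the two groups share a common pre-period trend, the placebo estimates are zero, so a nonzero estimate at a fake date would signal diverging pre-trends rather than a training effect.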

5 Discussion

5.1 Impact on task completion

In the short run, the viability of home-based work undoubtedly became a key factor for the immediate protection of jobs during the pandemic and a way to stop the escalating income loss of workers (Avdiu and Nayyar 2020). Mobility restrictions have led many individuals to substitute on-site employment with remote work by deploying working initiatives on online labor platforms (Mueller-Langer and Gomez-Herrera 2021). This increasing labor inflow created new working conditions and behavioral trends for both employers (i.e., requesters) and employees (i.e., workers), reflecting the adaptability of human capital in response to external shocks. Human capital theory suggests that individuals, through education, training, and experience, develop skills that increase their productivity and adaptability in the labor market. The participation of elderly and male workers in online tasks during the pandemic can be seen as a utilization of their accumulated human capital, demonstrating their ability to transition to remote work environments. While papers exploring conventional labor markets have found that females suffered more than males from the COVID-19 shock, with female unemployment growing faster than males during the pandemic (Kikuchi et al. 2020), studies have also revealed that individuals in occupations that score highly in terms of working from home are more likely to be male (Mongey et al. 2021; Hensvik et al. 2021). This gender disparity in the adoption of remote work during the pandemic may reflect underlying differences in human capital accumulation between genders, highlighting the importance of addressing gender disparities in access to education and training opportunities to enhance overall labor market resilience.

Furthermore, while the demand for online work has increased steadily over time,Footnote 22 we observed that fewer workers were becoming involved, on average, per task. Recall that online labor markets provide the opportunity for potential workers to engage in more than one task simultaneously. Thus, many workers may be primarily interested in producing quick generic answers rather than correct ones to optimize their time efficiency and, in turn, earn more money (Eickhoff and de Vries 2013). Now, we observe that this “sloppy” behavior has decreased, with workers engaging less in many available tasks simultaneously. While the number of online jobs has increased, the number of final involved workers has decreased. A possible interpretation may rely on individual psychological and personality differences (Mourelatos et al. 2022).

As we mentioned in the dataset and empirical strategy section, the number of involved workers per task reflects only those who had accepted the task and not the workers who had successfully completed it and earned the reward. Therefore, a worker’s decision to engage or not engage with a task always has a potential risk. Workers may allocate their effort to several tasks, knowing that they will not earn compensation from the requester if their work output is considered low-quality through post hoc assessments or even for reasons that may be unfair or unclear (Horton and Chilton 2010; Felstiner 2011).

During the COVID-19 outbreak, psychologists and economists highlighted changing human behavior and revealed evidence that individuals are risk averse under ambiguity in economic contexts (Cori et al. 2020). This change in the risk-based decision-making mechanisms of individuals may correlate with personality factors and incentive strategies (Heckman et al. 2019). Human capital theory posits that individuals make decisions based on their expected returns on investment, considering factors such as risk aversion and uncertainty. Hence, one possible interpretation of our findings is that the pandemic’s consequences in real life may lead workers with particular personality traits (i.e., extraversion and openness) and motivations (i.e., extrinsic) to join online labor markets and may also change the behavioral approach toward employees already working online (Mourelatos et al. 2020; Volk et al. 2021; Fest et al. 2020). Our results are in line with Arechar and Rand’s (2021) experimental research on Amazon Mechanical Turk, which shows several demographic changes as a consequence of the influx of new participants into the MTurk subject pool who are more diverse and representative but also less attentive than previous MTurkers. This observation underscores the dynamic nature of human capital formation and utilization, suggesting that shifts in labor market participation during crises can have implications for the composition and behavior of the workforce.

Finally, and most importantly, requesters followed two strategies during the pandemic, either hiring with higher wages or requiring a pretask-training phase offering lower wages to workers. We argue that pretask-training can also be used as a way of quality assurance in online labor contexts. This process of enhancing the skills, capabilities, and knowledge of employees can provide benefits for both requesters and workers. Within the context of human capital theory, we understand that the role of training and skill development is crucial in enhancing productivity and improving labor market outcomes. This suggests that investments in training during the pandemic may contribute to the long-term resilience and adaptability of the workforce. On the other hand, research by Horton and Chilton (2010), Horton (2010), and Dube et al. (2020) highlights that this may cause changes in both supply and demand dynamics. While payments in crowdsourcing contexts are typically small, the monopsony power of requesters may have significant implications for the future. Unfortunately, our research team was unable to explore this further due to limited access to reward data, which would have allowed for the estimation of recruitment elasticities before and after the COVID-19 outbreak.

5.2 Implications for practitioners

During the last decade, several online labor markets have emerged, allowing workers from around the world to sell their labor to an equally global pool of buyers (Horton 2010). Despite their online context, many of the conventional labor market attributes and features also exist in these markets. Nevertheless, they are constantly under investigation to obtain deeper insights into the business strategies employed by market creators. Research has found that online labor markets are heavy-tailed markets (Ipeirotis 2011) in which there are still substantial search frictions (Horton 2017, 2019), barriers to entry (Stanton and Thomas 2016; Pallais 2014), and information asymmetries (Ipeirotis and Kokkodis 2016; Benson et al. 2020). Furthermore, there is evidence of employers’ monopsony power in online labor markets (Dube et al. 2020) and that online labor markets can be affected by external shocks taking place in conventional labor markets (Horton 2021).

For crowdsourcing scientists, the COVID-19 experience offers several insights. In this paper, we provide evidence suggesting that online labor markets can be affected by an external shock such as a global pandemic. Our results show that both the supply and demand sides tried to adapt to the new life and real labor conditions,Footnote 23 while the average number of online tasks increased. Hence, employers put additional effort into the design and the hiring phase of the task. Workers, however, did not follow a multiple-task engagement strategy during the pandemic period but rather pursued a more focused labor approach. These behavioral changes may reflect a more conservative approach to the online labor procedure by both sides of the market. However, more research is warranted to quantify the exact effect of the pandemic vs. generic trends over time in a given platform.

Our findings also offer several lessons for would-be market designers. First, beyond pretask-training, even more effective and personalized tools and quality assurance mechanisms will be needed to ensure that the online labor supply adequately meets labor demand, both in the short term, as some industries face immediate shortages, and in the longer term, as sectors and industries adjust to postcrisis conditions. Life limitations and restricted mobility have led many employers and workers to remain active in the online working environment, where they will try to facilitate post-pandemic recovery (Mueller-Langer and Gomez-Herrera 2021).

Second, over the following period, the increasingly lower degree of workers’ engagement in crowdsourcing jobs may signal to online labor market policy-makers that they must consider that workers appear to be particularly vulnerable to real economic shocks and face high levels of potential risk. For that reason, the continued rise of the online labor economy raises the importance of strengthening the social safety net for contingent online participants and the need to reduce the sources of potential risk and remedy externalities by establishing a more standardized online labor environment (Ipeirotis and Horton 2011; Lüttgens et al. 2014).

5.3 Limitations

It is important to recognize that the model's consideration of demographic factors may lack comprehensive information to fully elucidate the variation in task completion probabilities. Although our vector Xi encompasses various task characteristics, and Di vector various demographics, we openly acknowledge the possibility that unobserved variables, such as worker experience, task complexity, and other nuanced elements, may exert influence on task outcomes. Regrettably, due to constraints in data accessibility, we were unable to delve into these factors in greater detail, limiting our ability to draw more definitive causal interpretations. Therefore, our interpretations in this section of the paper should be approached with caution, as our aim was primarily to present and analyze notable trend dynamics within the investigated platform.

Furthermore, while the difference-in-differences methodology employed in our study provides a robust framework for estimating the causal effect of training on our outcomes precisely when the COVID-19 pandemic commenced, it is imperative to acknowledge and delve into the potential sources of bias, particularly self-selection among requesters. Despite the intentional implementation of the pandemic training policy across all platform requesters to ensure uniformity and enhance result quality, we recognize the need to address the limitations stemming from self-selection.

The uniform application of the training policy serves as a strategic measure to render the treatment exogenous, aligning with the principles of our research design. However, the existence of self-selection bias may introduce a layer of complexity to the interpretation of our findings. As highlighted in our earlier discussion, we concede that detailed demographic information about the requesters, beyond the available identifiers and task-specific characteristics, remains elusive for further exploration.

6 Conclusions

In psychology, researchers are typically interested in resilience at the individual level, i.e., how individual characteristics affect people’s ability to adapt to shocks and crises (e.g., Bonanno 2004). In the organizational literature, resilience is defined as an organization’s capacity to anticipate, resist adverse effects, maintain, or restore an acceptable level of functioning and recover from perturbations, crises, or failures (Duchek 2020). Economists have started to use the concept of resilience to describe how well actors at different levels (e.g., individuals, organizations and firms) are able to adapt to changes in their economic and technological environment (e.g., Riepponen et al. 2022). Adaptation to changes in working life status, such as unemployment and re-employment due to changes in economic conditions, are good examples of individual-level resilience research in labor market economics (e.g., Doran and Fingleton 2016). Our research expands the knowledge of this process in the case of online labor markets.

During the COVID-19 pandemic, the online labor platform offered requesters the opportunity to use pre-task training for workers in order to preserve labor quality and thereby enhance the resilience of the crowdsourcing process. By utilizing a difference-in-differences research design, we provide causal evidence that the online training policy, when adopted, leads to a lower online job completion time and a lower probability of a job being discontinued. At the same time, it increases the probability of a job being properly completed during the pandemic period. On the other hand, a requester who uses this quality-assurance strategy offers workers lower salaries, mainly below the average salary range of the crowdsourcing platform. This can be compared to a case where a firm, instead of hiring highly skilled, high-paid employees, hires less educated/low-paid employees and trains them within the company. The only difference is that in the online job market, employees themselves seem to pay for this training investment.
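To make the logic of the difference-in-differences comparison concrete, the following minimal sketch computes a two-group, two-period estimate on simulated data. It is an illustration only and not the specification estimated in this study: the function name `did_2x2`, the simulated coefficients, and the coding of the outcome as log completion time are all assumptions made for the example.

```python
import random
from statistics import fmean


def did_2x2(rows):
    """Two-group, two-period difference-in-differences.

    rows: iterable of (outcome, treated, post) with 0/1 indicators.
    Returns (treated post - treated pre) - (control post - control pre).
    """
    cells = {(t, p): [] for t in (0, 1) for p in (0, 1)}
    for y, t, p in rows:
        cells[(t, p)].append(y)
    means = {key: fmean(values) for key, values in cells.items()}
    return (means[(1, 1)] - means[(1, 0)]) - (means[(0, 1)] - means[(0, 0)])


# Simulated data (hypothetical values, not the study's data):
# outcome = log completion time, treated = task uses training,
# post = task carried out during the pandemic period.
random.seed(0)
TRUE_EFFECT = -0.20
rows = []
for _ in range(4000):
    t = random.randint(0, 1)
    p = random.randint(0, 1)
    y = 3.0 + 0.10 * t + 0.05 * p + TRUE_EFFECT * t * p + random.gauss(0, 0.3)
    rows.append((y, t, p))

estimate = did_2x2(rows)
print(f"DiD estimate: {estimate:.3f} (simulated effect: {TRUE_EFFECT})")
```

The group and period level differences (0.10 and 0.05 in the simulation) cancel in the double difference, so only the interaction effect remains. This holds under the parallel-trends assumption, which the simulation satisfies by construction.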

Online labor markets are an interesting environment in which to study the effect of the pandemic on employment. Crowd work marketplaces are complex and highly heterogeneous sociotechnical systems (Mourelatos et al. 2020). Online labor platforms bring together individuals from different countries, with different socioeconomic backgrounds and different cognitive and noncognitive skill levels. For that reason, conventional labor demand or supply shocks can always produce unpredictable side effects and reactions. Many observed disparities in labor market conditions between crowdworkers and traditional workers may be attributed to factors other than workers’ ability and skills (Cantarella and Strozzi 2021).Footnote 24

Our findings provide a better understanding of how the global pandemic has affected online labor markets. In particular, both employers and employees in our online labor context reacted to the exogenous shock of the pandemic by participating in online labor procedures with different risk strategies and labor approaches. It should be kept in mind that online labor markets are based on crowdsourcing employment, which involves individuals who come from in-person jobs and are driven by different incentives and goals. Future research could focus on analyzing the long-term effects of the pandemic on online work, deepening our understanding of the underlying recovery patterns and, more broadly, the resilience of online labor markets against external shocks.

Data availability

Data are available upon reasonable request.

Notes

Examples of human capital include communication skills, education, technical skills, creativity, experience, problem-solving skills, mental health, and individual resilience.

This option is offered by specific OLMs, e.g., Amazon Mechanical Turk, but not by the Toloka platform.

Human intelligence tasks (HITs) are single, self-contained, virtual tasks that a worker can work on, submit an answer to, and collect a reward for completing. HITs are created by requester customers in order to be completed by worker customers.

They define flexible work arrangements as “temporary help agency workers, on-call workers, contract workers, and independent contractors or freelancers”; these arrangements include work done via online platforms such as Amazon Mechanical Turk, TaskRabbit, and E-lance.

Geography profoundly shapes labour market outcomes, in that throughout history, workers have needed to live relatively near the productive capital and their fellow coworkers.

MTurk is extremely inexpensive both in terms of the cost of subjects and the time required to implement studies.

The skill acquisition system includes worker ratings based on service quality. After delivering a service meeting the requester's standards, workers are rated within the crowdsourcing system, reflecting their proficiency. This system calculates each worker’s average approval rate and tenure value, indicating their participation and performance across jobs. By incorporating requester feedback, this system aims to reduce noise and biases, promoting fairness and meritocracy for all participants.

These include the need for competence, autonomy, and relatedness. Along with satisfying these underlying psychological needs, intrinsic motivation also involves seeking out and engaging in activities that we find challenging, interesting, and internally rewarding without the prospect of any external reward.

Our sample includes only requesters, and their online tasks, that were active during our period of investigation.

We conducted Kolmogorov–Smirnov normality tests on our continuous variables; all p-values exceeded 0.05, so the null hypothesis of normality could not be rejected. Although the Shapiro–Wilk test is best suited to small samples, we also performed it, and all p-values again remained above 0.05.
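As an illustration of this kind of check, the sketch below implements a one-sample Kolmogorov–Smirnov test against a normal distribution fitted to the sample, using only the Python standard library. It is not the procedure used in this study: the function name `ks_normal` and the simulated samples are hypothetical, and the p-value is an asymptotic approximation with a common small-sample adjustment. Because the mean and standard deviation are estimated from the same data, this p-value tends to be conservative (the Lilliefors variant addresses this). A Shapiro–Wilk test is available in statistical libraries such as SciPy (`scipy.stats.shapiro`).

```python
import math
import random
from statistics import NormalDist, fmean, stdev


def ks_normal(sample):
    """One-sample KS test against a normal fitted to the sample itself.

    Returns (D, approximate p-value). Parameters are estimated from the
    data, so the p-value is conservative (see the Lilliefors test).
    """
    xs = sorted(sample)
    n = len(xs)
    fitted = NormalDist(fmean(xs), stdev(xs))
    d = 0.0
    for i, x in enumerate(xs):
        cdf = fitted.cdf(x)
        # The empirical CDF jumps from i/n to (i+1)/n at x; check both sides.
        d = max(d, (i + 1) / n - cdf, cdf - i / n)
    # Small-sample adjustment followed by the asymptotic Kolmogorov series.
    lam = (math.sqrt(n) + 0.12 + 0.11 / math.sqrt(n)) * d
    p = 2.0 * sum(
        (-1) ** (k - 1) * math.exp(-2.0 * k * k * lam * lam)
        for k in range(1, 101)
    )
    return d, min(max(p, 0.0), 1.0)


# Simulated samples (hypothetical, for illustration only).
random.seed(1)
normal_sample = [random.gauss(5.0, 2.0) for _ in range(500)]
skewed_sample = [random.expovariate(1.0) for _ in range(500)]

d_norm, p_norm = ks_normal(normal_sample)
d_skew, p_skew = ks_normal(skewed_sample)
print(f"normal sample: D={d_norm:.3f}, p={p_norm:.3f}")
print(f"skewed sample: D={d_skew:.3f}, p={p_skew:.3g}")
```

A p-value above 0.05 means only that the test fails to reject normality at the 5% level; it does not establish that the variable is normally distributed.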

Dividing the 2,984 tasks by the 10-month period before Covid-19 gives an average of roughly 299 online tasks per month, while dividing the 10,798 tasks by the 15-month Covid-19 period gives an average of roughly 719 tasks per month.

The Covid dummy equals one if the task was conducted during the Covid-19 outbreak and zero otherwise.

The completion time is included in its natural logarithmic form so that the estimated coefficients can be interpreted approximately as percentage changes.
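The following short sketch illustrates this interpretation. When the outcome is in natural logs and a regressor changes by one unit (for example, a dummy switching from zero to one), a coefficient β corresponds to an exact change of 100·(exp(β) − 1) percent, which is close to 100·β only when β is small. The function name `pct_change` and the coefficient values are hypothetical and are not estimates from this study.

```python
import math


def pct_change(beta):
    """Exact percentage change in y implied by a coefficient on log(y)."""
    return 100.0 * (math.exp(beta) - 1.0)


# Hypothetical coefficients: compare the exact change with the 100*beta shortcut.
for beta in (-0.05, -0.20, -0.50):
    print(f"beta={beta:+.2f}  approx={100 * beta:+.1f}%  exact={pct_change(beta):+.1f}%")
```

For larger coefficients in absolute value, the gap between the shortcut and the exact figure widens, so the exact transformation is the safer one to report.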

The only information at our disposal was an average wage of approximately 5 euros, a figure consistent with comparable microtask platforms (Hornuf and Vrankar 2022).

Empirical papers in the previous literature estimate ordered logit models and report marginal effects where covariates are evaluated at their mean values (e.g., Hayo and Seifert 2003).

We did not include the pandemic outbreaks in Oceania and Africa because only 2% of the online labor market’s workers and requesters come from these continents.

The average demographic percentages for workers (i.e., age, gender, country of origin) were provided by the online labor platform.

The evidence from the Chen and Horton (2016) experiment suggests that in a “spot” market such as an online labor market, workers still bring employee-like behavior to their interactions.

There is a growing literature on Difference-in-Differences (DiD) methodology that exploits variation across units that are treated in a staggered manner and remain treated in subsequent periods (Athey and Imbens 2022; Goodman-Bacon 2021; Callaway and Sant’Anna 2021). In these scenarios, conventional two-way fixed effects DiD specifications, as presented in Eq. (4), may yield estimates that are not valid for the parameters of interest. Essentially, these estimates are weighted averages of all possible 2 × 2 DiDs comparing treated and control units before and after treatment. Depending on the timing of treatment for each unit, such comparisons can be problematic, because some already-treated units may inadvertently serve as control units (Baker et al. 2022).

The eventdd package was used in Stata 17.

Requested tasks are increasing over time within the platform after the pandemic outbreak.

Examples include the excess supply stemming from competition from equally skilled but cheaper labor in other countries within the same online labor market; the scarcity and heterogeneity of demand for these kinds of activities; and the monopsonistic nature of crowdsourcing platforms, which, together with a broader lack of labor standards, enables the imposition of a heavy mark-up over online workers.

A small number of workers do not provide gender information in their profiles.

References

Acemoglu D, Restrepo P (2018) Artificial intelligence, automation and work (No. w24196). National Bureau of Economic Research

Adams-Prassl A, Boneva T, Golin M, Rauh C (2020a) Inequality in the impact of the coronavirus shock: evidence from real time surveys. J Public Econ 189:104245

Adams-Prassl A, Boneva T, Golin M, Rauh C (2020b) Work tasks that can be done from home: evidence on the variation within and across occupations and industries. IZA Discussion Paper No. 13374

Agrawal A, Horton J, Lacetera N, Lyons E (2015) Digitization and the contract labor market: a research agenda. University of Chicago Press, pp 219–256

Al-Omoush KS, Orero-Blat M, Ribeiro-Soriano D (2021) The role of sense of community in harnessing the wisdom of crowds and creating collaborative knowledge during the COVID-19 pandemic. J Bus Res 132:765–774

Altig D, Baker S, Barrero JM, Bloom N, Bunn P, Chen S, Thwaites G (2020) Economic uncertainty before and during the COVID-19 pandemic. J Public Econ 191:104274

Angrist JD, Pischke JS (2009) Mostly harmless econometrics: an empiricist’s companion. Princeton University Press

Arechar AA, Rand DG (2021) Turking in the time of COVID. Behav Res Methods 53:1–5

Athey S, Imbens GW (2022) Design-based analysis in difference-in-differences settings with staggered adoption. J Econom 226(1):62–79

Autor D (2001) Wiring the labor market. J Econ Perspect 15:25

Avdiu B, Nayyar G (2020) When face-to-face interactions become an occupational hazard: Jobs in the time of COVID-19. Econ Lett 197:109648

Baker AC, Larcker DF, Wang CCY (2022) How much should we trust difference-in-difference estimates? J Financ Econ 144(2):370–395

Baker SR, Bloom N, Davis SJ, Terry SJ (2020) Covid-induced economic uncertainty (No. w26983). National Bureau of Economic Research

Baqaee D, Farhi E (2020) Supply and demand in disaggregated Keynesian economies with an application to the COVID-19 crisis (No. w27152). Am Econ Rev 112:1397

Barach MA, Horton JJ (2021) How do employers use compensation history? Evidence from a field experiment. J Labor Econ 39(1):193–218

Bayo-Moriones A, Ortín-Ángel P (2006) Internal promotion versus external recruitment in industrial plants in Spain. ILR Rev 59(3):451–470

Benson A, Sojourner A, Umyarov A (2020) Can reputation discipline the gig economy? Experimental evidence from an online labor market. Manag Sci 66(5):1802–1825

Bonadio B, Huo Z, Levchenko AA, Pandalai-Nayar N (2020) Global supply chains in the pandemic. NBER Working Paper No. 27224. National Bureau of Economic Research, Cambridge

Bonanno GA (2004) Loss, trauma, and human resilience: have we underestimated the human capacity to thrive after extremely aversive events? Am Psychol 59(1):20–28

Brinca P, Duarte JB, Faria-e-Castro M (2021) Measuring labor supply and demand shocks during COVID-19. Eur Econ Rev 139:103901

Calanter P, Zisu D (2022) EU policies to combat the energy crisis. Glob Econ Obs 10(1):26–33

Callaway B, Sant’Anna PHC (2021) Difference-in-differences with multiple time periods. J Econom 225(2):200–230

Candela M, Luconi V, Vecchio A (2020) Impact of the COVID-19 pandemic on the Internet latency: a large-scale study. Comput Netw 182:107495

Cantarella M, Strozzi C (2021) Workers in the crowd: the labor market impact of the online platform economy. Ind Corp Chang 30(6):1429–1458

Carlsson-Szlezak P, Reeves M, Swartz P (2020a) Understanding the economic shock of coronavirus. Harvard Bus Rev 27:4–5

Carlsson-Szlezak P, Reeves M, Swartz P (2020b) What coronavirus could mean for the global economy. Harvard Bus Rev 3:10

Carmel E, Káganer E (2014) Ayudarum: an Austrian crowdsourcing company in the Startup Chile accelerator program. J Bus Econ 84:469–478

Chan J, Wang J (2018) Hiring preferences in online labor markets: evidence of a female hiring bias. Manag Sci 64(7):2973–2994

Chandler D, Kapelner A (2013) Breaking monotony with meaning: motivation in crowdsourcing markets. J Econ Behav Organ 90:123–133

Chang Y, Chien C, Shen LF (2021) Telecommuting during the coronavirus pandemic: future time orientation as a mediator between proactive coping and perceived work productivity in two cultural samples. Person Individ Differ 171:110508

Chapkovski P (2023) Conducting interactive experiments on Toloka. J Behav Exp Financ 37:100790

Chen DL, Horton JJ (2016) Research note—are online labor markets spot markets for tasks? A field experiment on the behavioral response to wage cuts. Inf Syst Res 27(2):403–423

Colovic A, Caloffi A, Rossi F (2022) Crowdsourcing and COVID-19: how public administrations mobilize crowds to find solutions to problems posed by the pandemic. Public Adm Rev 82(4):756–763

Cori L, Bianchi F, Cadum E, Anthonj C (2020) Risk perception and COVID-19. Int J Environ Res Public Health 17(9):3114

Cortes GM, Forsythe E (2020) Heterogeneous labor market impacts of the COVID-19 pandemic. ILR Rev 76:30–55

Dąbrowska J, Keränen J, Mention AL (2021) The emergence of community-driven platforms in response to COVID-19: GetUsPPE, a multi-sided platform that emerged during the COVID-19 pandemic, offers key insights on how to mobilize and leverage diverse actors to provide solutions in emergencies. Res Technol Manag 64(5):31–38

Del Rio-Chanona RM, Mealy P, Pichler A, Lafond F, Farmer JD (2020) Supply and demand shocks in the COVID-19 pandemic: an industry and occupation perspective. Oxf Rev Econ Policy 36:S94–S137

Difallah D, Filatova E, Ipeirotis P (2018) Demographics and dynamics of Mechanical Turk workers. In: Proceedings of the eleventh ACM international conference on web search and data mining, pp 135–143

Doran J, Fingleton B (2016) Employment resilience in Europe and the 2008 economic crisis: insights from micro-level data. Reg Stud 50(4):644–656

Dube A, Jacobs J, Naidu S, Suri S (2020) Monopsony in online labor markets. Am Econ Rev Insights 2(1):33–46

Dube A, Manning A, Naidu S (2017) Monopsony and employer mis-optimization account for round number bunching in the wage distribution. Unpublished manuscript

Duchek S (2020) Organizational resilience: a capability-based conceptualization. Bus Res 13(1):215–246

Eickhoff C, de Vries AP (2013) Increasing cheat robustness of crowdsourcing tasks. Inf Retrieval 16(2):121–137

Elenev V, Landvoigt T, Van Nieuwerburgh S (2020) Can the Covid bailouts save the economy? NBER Working Paper No. 27207. National Bureau of Economic Research, Cambridge

Fairris D, Pedace R (2004) The impact of minimum wages on job training: an empirical exploration with establishment data. South Econ J 70(3):566–583

Farrell AM, Grenier JH, Leiby J (2017) Scoundrels or stars? Theory and evidence on the quality of workers in online labor markets. Account Rev 92(1):93–114

Felstiner A (2011) Working the crowd: employment and labor law in the crowdsourcing industry. Berkeley J Emp Lab L 32:143

Fernández D, Harel D, Ipeirotis P, McAllister T (2019) Statistical considerations for crowdsourced perceptual ratings of human speech productions. J Appl Stat 46(8):1364–1384

Fest S, Kvaloy O, Nieken P, Schöttner A (2020) Motivation and incentives in an online labor market. German Economic Association

Forsythe E, Kahn LB, Lange F, Wiczer D (2020) Labor demand in the time of COVID-19: evidence from vacancy postings and UI claims. J Public Econ 189:104238