Abstract

This paper sheds light on the question of whether a rules-based or general principles-based decision aid is preferable in the context of increased information load by experimentally investigating how different types of decision aids interact with increases in information load in a structured capital budgeting decision-making task. The experiment employed a 2 × 2 between-subjects design and was run in a course on management control systems with 136 master’s degree students at a German university. Subjects were tasked with reviewing investment proposals that contained differing amounts of information (low vs. high information load, i.e., irrelevant information cues in addition to those relevant for the decision). The second manipulation referred to the type of decision aid—either a detailed, rules-based capital budgeting guideline with clear cut-off rates, or the advice to employ generally accepted criteria for investment decision-making. The dependent variables investigated were perceived task complexity, decision accuracy, and decision confidence. Increases in information load and provision of a decision aid based on general principles led to an increase in perceived task complexity. There was only limited evidence for experimental conditions affecting decision accuracy, but the group of subjects relying on the rules-based capital budgeting guideline reported significantly higher decision confidence.

1 Introduction

Even though capital budgeting decisions have a major impact on financial performance, many firms decentralize at least some of these decisions. Despite potential agency problems, delegating authority to lower levels of the organizational hierarchy can be beneficial, for example, when information is only available at the local or divisional level or is costly to obtain and process (see the summary by Hoang et al. 2018). To ensure that the firm’s capital is still allocated wisely to the most profitable projects while considering both opportunities and risks, decision-makers are provided with accounting information on these projects. Often, compulsory guidelines are provided for the decision-making process to ensure that capital budgeting decisions are made in compliance with corporate goals (e.g., Istvan 1961; Mukherjee 1988; Segelod 1995, 1997).

Nevertheless, there is no evidence to date on how the design of these guidelines and the information provided within investment proposals affect individual decision-making. Our research question is therefore whether, in the context of increased information load, a rules-based decision aid or reliance on general principles is preferable. To this end, our paper experimentally analyzes the effects of increases in information load on perceived task complexity, decision accuracy, and decision confidence in the presence of two forms of decision aids, that is, a rules-based capital budgeting manual (checklist-type procedure) vs. a set of general principles for investment valuation. Our results show how important variables in the domain of decision performance are influenced by variations in the information load and decision aids provided, thus informing the question of whether principles-based or rules-based approaches should be preferred (e.g., Nelson 2003). The rules vs. principles discussion mainly refers to accounting standards (Benston et al. 2006; Nelson 2003; Sundvik 2019) but has also been applied in the domain of corporate governance (Arjoon 2006) and can be extended to decision-making in the management accounting domain. While a rules-based approach relies on a more detailed specification of instructions (as illustrated by the checklist-type approach in our experiment), it could lead to overly mechanical behavior (Dowling and Leech 2007) and bureaucratization effects (Benston et al. 2006) and, in that way, to errors, thus lowering decision accuracy. This effect might only occur at a certain level of complexity, i.e., when a certain amount of information is provided. It is therefore necessary to investigate the two variables (information load and type of decision aid) in conjunction.

The contribution of our paper is two-fold. Firstly, our findings support practitioners in the capital budgeting domain (i.e., those in the role of designing and implementing processes and the respective approval mechanisms) in understanding how to provide decision-makers with management accounting information and the relevant tools for structured decision-making, potentially driving more effective capital allocation. Secondly, our findings provide additional transparency with regard to the underlying theories of decision-making performance in the context of information overload by investigating changes in contextual factors (the task, provision of different types of decision aids) in conjunction with increases in information load.

2 Literature review

The research question at hand focuses on the effectiveness of different types of decision aids in the context of increases in information load, investigated for a task set in the capital budgeting domain. As there is no research that focuses specifically on the combination of the two variables in the given context, several research streams need to be considered.

Firstly, effects of increases in information load impairing managerial decision-making have been researched across a broad domain. Schick et al. (1990), Eppler and Mengis (2004), and more recently Roetzel (2019), provide reviews of the existing literature. Specifically in the literature on capital budgeting, Swain and Haka (2000) provide evidence that an increase in information load leads to changes in search patterns in an unstructured decision-making task, but do not investigate decision accuracy. Based on an experiment conducted in Volnhals (2010), Hirsch and Volnhals (2012) find that decision quality decreased beyond a certain point of information input and that subjects were not sufficiently aware of information overload effects and therefore exhibited overconfidence concerning the quality of their decisions. In a related field, Roetzel et al. (2020) investigate the impact of information load on escalation of commitment. They find a U-shaped effect, with higher information load mitigating escalation of commitment up to a certain point but then leading to increased escalation.

However, decision-making should not be analyzed solely with reference to information load. Bonner and Sprinkle (2002), extending the model proposed by Bonner (1999), describe performance in a given task as being determined by a broad set of variables relating to person, task, environmental, and incentive scheme characteristics. Therefore, to comprehensively assess the impact of increases in information (or data, i.e., irrelevant information cues; Iselin 1993) load, task characteristics must also be taken into account (Iselin 1988; Libby and Lewis 1977; Schroder et al. 1967).

Still, to date, only a few studies have addressed information load and further task characteristics in conjunction. For example, Chan (2001) found no significant effect of graphs as decision aids in improving decision-making under information overload, whereas Umanath and Vessey (1994) show that graphical representation can increase accuracy in a bankruptcy prediction task. Blocher et al. (1986) find an interaction between task complexity and graphical vs. tabular representation, with the former being preferable for less complex tasks, i.e., with low information load. Kelton and Murthy (2016) investigated the impact of interactive drilldown functionalities in firms’ web-based interactive financial statements and found them to reduce perceived cognitive load and earnings fixation under certain conditions. In the capital budgeting context, management accounting researchers have not yet investigated whether a variation in task structure through different kinds of decision aids influences individuals’ ability to cope with increases in information load, even though capital budgeting manuals are widely used in organizations (Istvan 1961; Mukherjee 1988; Segelod 1995, 1997).

Furthermore, the literature has found variations in the effectiveness of different types of decision aids. Van Rinsum et al. (2018) and Wheeler and Arunachalam (2008), for example, found that a checklist-type decision aid might lead to judgment biases or an increase in confirmatory search behavior. Another potential limitation of decision aid use is overly mechanistic behavior (e.g., Dowling and Leech 2007). A comparison of different kinds of capital budgeting guidelines (e.g., rules-based vs. principles-based decision aids) thus contributes to our understanding of which type of decision aid might be preferable.

3 Hypotheses development

3.1 Relevant theoretical frameworks

Both the effect of increases in information load and changes in task characteristics are relevant to the research question formulated above. We therefore rely on a combination of several frameworks to identify the variables of interest and formulate our hypotheses. The model by Schroder et al. (1967) forms the basis for predicting the effects of increases in information load. Furthermore, Schick et al. (1990) explicitly define information overload with reference to the time at hand, i.e., information cues per time available. The task employed here does not restrict the time, as information overload effects are expected to occur independently of the time available. However, the factors shown to affect decision-making processes and outcomes include not only the amount of information available but also the context (e.g., task type, instructions) in which decision-making takes place (e.g., Libby and Lewis 1977). Employing different kinds of decision aids ultimately changes the nature of the task. This is made transparent in the model by Libby and Lewis (1977) as well as in the models on task performance (see Bonner 1999; Bonner and Sprinkle 2002). Characteristics of the task at hand (e.g., complexity of the task; Wood 1986) are likely to impact the quality of managerial decisions. Finally, related to information overload effects, models such as those by Eppler and Mengis (2004) or Roetzel (2019) consider task characteristics (among other factors) a potential antecedent to information overload, underlining the importance of examining both increases in information load and task characteristics in conjunction. Eppler and Mengis (2004) point out the need for continuous evaluation of outcomes and adjustment of the variables that cause information overload. Adjusting the information set and decision aids and repeating the respective task, however, is not within the scope of the present research.

The variables of interest in our research are perceived task complexity, decision accuracy, and decision confidence. Perceived task complexity serves as an indicator for the variation in the task triggered by the application of differing types of decision aids (Bonner and Sprinkle 2002). Decision accuracy is a major criterion in assessing whether these practices, here the application of different types of decision aids, are desirable (Sprinkle 2003). Finally, decision confidence is of interest as people are more likely to act upon their judgments if they are more confident (Norman 1975). It is thus desirable that decision confidence is high when decision accuracy is high, because otherwise high confidence may instigate undesirable actions (Chung and Monroe 2000).

3.2 Perceived task complexity

According to Wood (1986), task complexity can be divided into three elements: component complexity, coordinative complexity, and dynamic complexity (not relevant in our context). The level of component complexity depends on the number of actions required to perform a task and the related number of information cues that must be processed. Coordinative complexity refers to the form of the relationship between task input (actions and information cues) and task output (the resulting product). The more constraints (e.g., timing, sequence) have to be considered when performing a task, the higher the coordinative complexity.

The design of the two types of decision aids employed in our experiment can be related to the principles versus rules-based discussion in accounting contexts (e.g., Nelson 2003). Whereas decisions in the “capital budgeting manual” condition had to be made based on thresholds and a set of clear rules, decisions in the “general principles” condition had to be made by prioritizing investments based on general guidance. Applying the task-complexity concept of Wood (1986), Nelson (2003) states that additional rules (as is the case in the “capital budgeting manual” condition) have ambiguous effects, as more rules are likely to increase component complexity but might also reduce coordinative complexity. Wood (1986) notes that the weights associated with the different components should be highest for dynamic complexity, followed by coordinative complexity, then component complexity. We expect the decrease in coordinative complexity to be larger than the increase in component complexity when subjects are provided with a capital budgeting manual, which leads to the first hypothesis:

Hypothesis 1: Perceived task complexity will be higher for the “general principles” condition than for the “capital budgeting manual” condition.

Increases in information load are likely to result in increased component complexity, as more information cues must be considered (Wood 1986), leading to an increase in perceived overall task complexity:

Hypothesis 2: Perceived task complexity will be higher for the “high information load” condition than for the “low information load” condition.

3.3 Decision accuracy

Following Eppler and Mengis (2004), we use the term “decision accuracy” to refer to the objective correctness of a decision made. The task employed in our experiment is a structured task with a clear definition of the correct answer to each of the questions presented to the subjects. Therefore, decision accuracy can be measured unambiguously.

According to Bonner and Sprinkle (2002), task complexity may affect performance in several ways: by decreasing effort duration and intensity, by making individuals focus more or less on strategy development, or by requiring higher skills for more complex tasks. More specifically, Bonner and Sprinkle (2002), citing expected utility theory and its adaptation by Payne et al. (1993), assume effort duration and intensity to decrease if a task is more complex, as individuals might consider the relationship between effort and performance as less favorable. This, in turn, might lead them to invest less effort, which is amplified further when self-efficacy regarding the task is low or cannot easily be assessed as may be the case for simpler tasks. More complex tasks also require higher skill levels and more strategy development, which is only beneficial in repeated settings but diverts effort from the task in the short run (Bonner and Sprinkle 2002).

As task structure increases, task complexity decreases (Bonner and Sprinkle 2002; Wood 1986). With regards to our experiment, subjects in the “capital budgeting manual” condition faced a more structured task than subjects in the “general principles” condition. In addition, subjects in the “general principles” condition needed to rely on their knowledge to prioritize the investment alternatives, which was not the case in the “capital budgeting manual” condition. It was also likely that in the “general principles” condition, more strategy development would be necessary.

However, there were also factors that might counteract these effects. Firstly, subjects in the “capital budgeting manual” condition needed to familiarize themselves with longer and more detailed instructions, which might divert effort from the task to the instructions. In addition, a decision aid employing checklist mechanisms might lead to a bureaucratization effect and overly “mechanical” behavior that might in turn lead to errors (Dowling and Leech 2007).

Still, as we assume that the factors favoring the more structured task have a stronger influence on decision accuracy than these counteracting effects, we postulate the third hypothesis:

Hypothesis 3: Decision accuracy will be higher for the “capital budgeting manual” condition than for the “general principles” condition.

As noted above, increases in information load might also cause increases in task complexity, affecting performance. Luft and Shields (2010) highlight two reasons why increases in information load above a certain level are likely to impair decision quality: Firstly, via sub-optimal strategies in selecting information, an increase in absolute quantity of information might decrease the relative amount of information investigated (Payne et al. 1993). Secondly, with an increase in information quantity, the selection process requires more of the limited human information processing capacity, which leads to an inverted-U relationship between information quantity and information integration (Schroder et al. 1967).

In this context, Iselin (1993) differentiates between information load and data load, defining data load as information cues that are irrelevant to the decision. A slightly different definition is that of data having no value or meaning as they lack context (e.g., Rowley and Hartley 2016). According to this definition, data might not lead to information overload, as subjects will not consider integrating these cues into their decision-making process at all and will not even need to filter out the irrelevant information. However, irrelevant information as understood in our experiment (following Iselin 1993) is more than incoherent pieces of data: in line with typical investment decision-making situations, it was operationalized as, e.g., additional KPIs and/or a more detailed split of KPIs (see the supplementary materials for the experimental materials, which have been made available for download by the journal together with all Tables and Figures provided as appendices).

In our experiment, we manipulated information load by adding irrelevant information cues. Research in different fields has shown that individuals seek irrelevant information and subsequently incorporate it into their judgments (Bastardi and Shafir 1998). The presence of additional information, even if irrelevant, can therefore lead to diluted judgments (Hackenbrack 1992) or to overconfidence in the accuracy of one’s answers (Fleisig 2011). Moreover, irrelevant information is likely to increase demands on the capacity for filtering, which can lead to errors (Iselin 1993). All in all, the presence of irrelevant information can be hypothesized to impair decision-making performance:

Hypothesis 4: Decision accuracy will be lower for the “high information load” condition than for the “low information load” condition.

3.4 Decision confidence

Chung and Monroe (2000) found a negative relationship between task difficulty and confidence in an audit setting. In the context of general knowledge questions, Kelley and Lindsay (1993, p. 2) argue that the “fluency” with which an answer comes to mind positively affects confidence in one’s answer. As described above, task difficulty is hypothesized to be lower in the “capital budgeting manual” condition, which should positively affect decision confidence. Lower task difficulty might also contribute to the “fluency” (Kelley and Lindsay 1993, p. 2) of the decisions made:

Hypothesis 5: Decision confidence will be higher for the “capital budgeting manual” than for the “general principles” condition.

Concerning the effects of increases in information load on decision confidence, the predictions are less clear. On the one hand, increases in information load might lead to a decrease in decision confidence via increases in task complexity (see hypothesis 2). On the other hand, additional information might lead to overconfidence via the impression of having used many information cues (Einhorn et al. 1979; Shepard 1964). Fleisig (2011) experimentally demonstrated that adding information may lead to overconfidence in knowledge retrieval. As we cannot predict which effect might be stronger, we do not formulate a hypothesis for the effects of increases in information load on decision confidence. Figure 1 below summarizes the research design.

4 Research design

4.1 Overview of the experimental task

The experimental task employed a 2 × 2 between-subjects design, referring to differing decision aids (“capital budgeting manual” vs. “general principles”) and information load (“low” vs. “high”). Our experiment was conducted with 136 master's degree students participating in a course on management control systems at a German university in December 2013. As the experimental task contributed to the learning goals of the course, there were no monetary incentives. The materials were pretested by twelve people with an educational background in business administration. There was no time limit for the completion of the task and the experiment lasted for approximately 50 min. In all groups, subjects received investment proposals and information on budget constraints for different product segments of a fictitious company. Manipulations were implemented via (1) information load in the investment proposals (“low” vs. “high”) and (2) the decision aid to be used ("capital budgeting manual" vs. "general principles"). The experimental materials can be found in the supplementary materials and are summarized in the following sections.

In all groups, subjects received seven investment proposals and information on budget constraints for different product segments of a fictitious company (“Smith PLC”). As noted above, manipulations were implemented through (1) information load in the investment proposals (“low” vs. “high”) and (2) the decision aid to be used (“capital budgeting manual” vs. “general principles”). All groups received the same instructions with regard to budget constraints, stating that they should not approve more investment proposals than would be covered by the budget available for the different fictitious product segments (household, entertainment, and telecommunications). This was followed by the manipulation of the decision aid, in which subjects were provided with either a capital budgeting manual or an overview of general principles. Subjects then reviewed the investment proposals, which they received as a separate handout and which constituted the information load manipulation. For each proposal, they were asked to decide whether to accept or reject it (“Do you approve the investment described in investment proposal X?—yes or no”) and to indicate their decision confidence on a 7-point Likert scale (“On a scale from 1 to 7, how sure are you of the decision made?”) (Hirsch and Volnhals 2012; Holthoff et al. 2015). If they decided to reject a proposal, subjects were also asked to briefly comment on the reasons. The experiment concluded with a post-experimental questionnaire. At certain points during the experiment, subjects were asked to write down the time (a stopwatch was projected onto the wall). The primary dependent variables of interest were perceived task complexity, decision accuracy, and subjective decision confidence. Figure 2 illustrates the structure of the experiment.

4.2 Manipulation of the decision aid

Subjects in the "capital budgeting manual" condition were advised to rely on an investment manual (three pages) that clearly specified which thresholds to use, for example regarding the discount rates to be employed for different investment categories and countries or the required payback period. Subjects in the "general principles" condition were instructed to rely on generally accepted criteria for investment valuation that were then specified as net present value, payback period, and risk. In addition, they received information concerning the order of riskiness of different investment categories and countries that had to be taken into account. Furthermore, subjects in the “general principles” condition were reminded that the discount rate consisting of the weighted average cost of capital (WACC) used for calculating the net present value was already adjusted for the risk category of the investment category as well as for country-specific risks.

To execute the experimental task, subjects in the “capital budgeting manual” condition had to compare the values in the investment proposals to the thresholds specified in the capital budgeting manual, whereas subjects in the “general principles” condition had to prioritize investment proposals per product segment based on the principles described in the instructions. Both groups should arrive at the same decisions, but through different lines of reasoning.

The following example illustrates the rationale for the decision regarding the investment proposals in the telecommunications segment. There were two investment proposals, each with an initial investment of €250,000. From the budget constraint for the telecommunications segment (€250,000), it was clear that only one investment proposal could be approved. Subjects relying on the capital budgeting manual would see that the payback period for investment proposal “F” was above the threshold specified in the capital budgeting manual, whereas subjects in the “general principles” condition would compare the two investment proposals on the criteria described in the general principles sections and see that alternative “G” dominates alternative “F,” being equal on all criteria except for the payback period, which was lower for “G.”
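The two routes to the same decision in the telecommunications example can be restated as a short sketch. It is illustrative only: the NPVs, payback periods, and the payback threshold below are hypothetical placeholders rather than values from the experimental materials, and the class and function names are chosen for illustration. Risk is omitted because the two proposals are described as equal on all criteria except the payback period.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Proposal:
    name: str
    investment: int       # initial investment in EUR (from the example)
    npv: float            # net present value in EUR (hypothetical)
    payback_years: float  # payback period in years (hypothetical)

BUDGET = 250_000          # telecommunications budget (from the example)
MAX_PAYBACK_YEARS = 4.0   # hypothetical threshold, not from the manual

# Equal on all criteria except the payback period, which is lower for G.
F = Proposal("F", 250_000, 40_000.0, 5.0)
G = Proposal("G", 250_000, 40_000.0, 3.0)

def fund_within_budget(proposals, budget):
    """Approve proposals in the given order while the budget allows."""
    approved, remaining = [], budget
    for p in proposals:
        if p.investment <= remaining:
            approved.append(p.name)
            remaining -= p.investment
    return approved

def approve_by_manual(proposals, budget, max_payback):
    """Rules-based route: screen against fixed thresholds, then fund."""
    passed = [p for p in proposals
              if p.npv > 0 and p.payback_years <= max_payback]
    return fund_within_budget(passed, budget)

def dominates(a, b):
    """a dominates b: at least as good on every criterion, better on one."""
    at_least = a.npv >= b.npv and a.payback_years <= b.payback_years
    strictly = a.npv > b.npv or a.payback_years < b.payback_years
    return at_least and strictly

def approve_by_principles(proposals, budget):
    """Principles-based route: drop dominated proposals, then fund."""
    candidates = [p for p in proposals
                  if not any(dominates(q, p) for q in proposals if q is not p)]
    return fund_within_budget(candidates, budget)

manual_decision = approve_by_manual([F, G], BUDGET, MAX_PAYBACK_YEARS)
principles_decision = approve_by_principles([F, G], BUDGET)
print(manual_decision, principles_decision)
```

Under these assumed values, both routes approve only proposal “G”: the manual route rejects “F” at the threshold check, while the principles route rejects it because “G” dominates it.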

Table 1 provides an overview of the rationale for approving or rejecting decisions for each investment proposal. The decision to reject was described as giving the investment proposal back to the requestor. All groups received a glossary with definitions for the terms used in the experimental materials (e.g., NPV, payback period, WACC). We included a reverse order of investment proposals across all cells to control for possible order effects.

4.3 Design of the capital budgeting manual and investment proposals

The layout and contents of the fictitious capital budgeting manual and the investment proposals used in the experiment were inspired by empirical studies on capital budgeting, in particular those analyzing capital budgeting manuals (Mukherjee 1988; Segelod 1995, 1997). We also used studies on capital budgeting practice (Arnold and Hatzopoulos 2000; Brunzell et al. 2013; Graham and Harvey 2001; Istvan 1961; Oblak and Helm 1980; Pike 1996; Ryan and Ryan 2002), practitioners' literature (Bragg 2011; Fabozzi et al. 2008; Moles et al. 2011; veb.ch 2011), and textbooks (Ross 2007), trying to balance the need for a certain degree of realism with the need for a focus on the most important characteristics for the experimental task.

The capital budgeting manual contained the following sections: “goals and contents of the capital budgeting manual,” “classification of investments,” “contents of investment proposals,” and “valuation of investments.” These sections described which discount rates to use depending on country and investment category and when to accept or reject investment alternatives based on thresholds for NPV and payback period.
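As a reminder of what the two thresholded measures compute, the following minimal sketch calculates NPV and an undiscounted payback period for a single proposal and applies cut-offs to both. The cash flows, the discount rate, and both cut-off values are hypothetical and are not taken from the experimental manual; whether the manual used a discounted or undiscounted payback period is likewise an assumption here.

```python
def npv(rate, initial_investment, cash_flows):
    """Net present value: discounted inflows minus the initial outlay.

    cash_flows[t - 1] is the inflow at the end of year t.
    """
    discounted = sum(cf / (1 + rate) ** t
                     for t, cf in enumerate(cash_flows, start=1))
    return discounted - initial_investment

def payback_period(initial_investment, cash_flows):
    """Undiscounted payback period in years (linear interpolation within
    the recovery year); returns None if the outlay is never recovered."""
    if initial_investment <= 0:
        return 0.0
    cumulative = 0.0
    for year, cf in enumerate(cash_flows, start=1):
        if cumulative + cf >= initial_investment:
            return (year - 1) + (initial_investment - cumulative) / cf
        cumulative += cf
    return None

# All values below are hypothetical and purely illustrative.
RATE = 0.10               # assumed risk-adjusted discount rate
MIN_NPV = 0.0             # assumed NPV cut-off
MAX_PAYBACK_YEARS = 4.0   # assumed payback cut-off
investment = 250_000
inflows = [100_000, 100_000, 100_000, 100_000]

project_npv = npv(RATE, investment, inflows)
project_payback = payback_period(investment, inflows)
accept = (project_npv > MIN_NPV
          and project_payback is not None
          and project_payback <= MAX_PAYBACK_YEARS)
print(round(project_npv, 2), project_payback, accept)
```

A proposal is accepted in this sketch only if it passes both cut-offs, which mirrors the checklist character of the rules-based condition.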

As noted above, for the manipulation of information load in the investment proposals, there was one version that contained relevant information cues and very few irrelevant information cues ("low information load") whereas the other version contained the same relevant information cues plus a high number of irrelevant information cues ("high information load"). Table 2 illustrates the contents of the investment proposals for low versus high information load.

4.4 Post-experimental questionnaire

Besides manipulation checks focusing on the experimental materials the subjects received, a variety of items to control for possible intervening variables were included. We employed scales that measured personal characteristics, that is, subjective knowledge and experience in investment decision-making; the “Big Five” dimensions on personality, especially conscientiousness (Lang et al. 2001; Rammstedt and John 2007); subjects’ time perspectives (Zhang et al. 2013); and general sociological factors. With regards to perceived task characteristics, we included scales for task complexity and task attractiveness (Fessler 2003; Scott and Erskine 1980). As a measure for effort duration, we used the time spent on the tasks recorded by the subjects (Bonner and Sprinkle 2002), and we also asked subjects to fill in a self-report measure of effort intensity (Yeo and Neal 2004), time pressure (Glover 1997), motivation, and expected performance on the task.

4.5 Variable measurement

We relied on established scales where available. Table 3 provides an overview of the most important variables and the respective measurement (see footnote 1).

Single-item measures bear the risk of not adequately capturing the constructs to be measured and their use has raised concerns with regards to reliability and validity compared to multi-item constructs (see Fuchs and Diamantopoulos 2009 for an overview). In a number of cases, we did resort to using single-item measures in order to not overload participants with an even longer questionnaire and risk potential break-offs (e.g., Fuchs and Diamantopoulos 2009). This was especially relevant for the measure of decision confidence that was repeated after each of the seven decisions to be taken.

5 Results

5.1 Descriptives and manipulation checks

In the course of the experiment, 136 questionnaires were collected. As manipulation checks for the between-subjects variables, we used two basic questions regarding the experimental materials the subjects received.

The first manipulation check referred to the “capital budgeting manual” versus “general principles” manipulation. Subjects chose one out of three possible answers regarding the experimental materials received. The second manipulation check referred to the amount of information in the investment proposals (high vs. low information load manipulation). Again, one out of three answers had to be chosen.

Out of the 136 subjects, 98 answered both questions correctly. Two of these 98 subjects, based on their comments, apparently did not take the task seriously or did not complete the questionnaire and were therefore excluded. This leaves us with 96 questionnaires.

Table 4 shows the details for each question presented above (see footnote 2):

The analyses were also run for the total subject pool, including the subjects who failed to answer either one or both manipulation check questions correctly. When taking all 136 subjects into account, a total of four subjects were excluded from the analysis as they did not take the task seriously (two in addition to the two subjects mentioned above), which resulted in a group of 132 subjects. Results based on the total subject pool (n = 132) were qualitatively the same for most analyses. Where this was not the case, the results for the total group are reported in the footnotes (see footnote 3).

The following analysis results are based on the 96 subjects described above. All of these subjects indicated that they studied business or economics as a major. Eighty-nine subjects indicated German as their mother tongue. The average age was 25.03 years (n = 94; two subjects did not indicate their age; the mean age was approximately equal across groups, ranging from 24.80 to 25.52). Fifty-four subjects were female and 42 were male. Table 5 shows the resulting number of subjects in each cell.

In the post-experimental questionnaire, several questions were included that were used to verify that there were no systematic differences between the groups. One-way ANOVAs conducted across all four groups revealed no significant differences between groups for self-reports (7-point Likert scales) for motivation, the influence of seeing a stopwatch and having to report the time, estimated theoretical knowledge on investment decision-making, practical experience in investment decision-making, and motivation for completing the task. Furthermore, there was no significant difference across groups for age, the grade they presented to enter university, or the score for a selected number of questions on investment decision-making. These results are further supported by the results of a Kruskal–Wallis H test that shows no significant differences between the four experimental groups for the most important subject characteristics (Table 6):

In addition to the basic manipulation checks described above, we were interested in how subjects perceived the information load in the investment proposals. This can be considered an additional check to verify whether the information load manipulation was effective. We therefore included a question regarding the perceived amount of information in the investment proposals (on a 7-point Likert scale anchored by “very little information” and “very much information”) and a question regarding the estimated percentage of information used.

Subjects in the high information load condition were expected to score higher on the first question and lower on the second question; the reverse was expected for the subjects in the low information load condition. Tables 7 and 8 in the supplementary materials show the means, standard deviations, and medians for the two variables across experimental cells.

As predicted, the scores for “perceived information load” are significantly higher in the “high information load” condition (Mdn = 6) than in the “low information load” condition (Mdn = 4); U = 1986.50, z = 6.27, p < 0.001, r = 0.64. Correspondingly, subjects in the “high information load” condition reported significantly lower scores for “percentage of information used” than in the “low information load” condition. Medians are 30.00% and 65.00% respectively (U = 360.50, z = – 5.73, p < 0.001, r = – 0.59).
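The effect size r reported with each Mann–Whitney test is presumably the common conversion r = z / √N. The paper does not state the formula, so this is an assumption, and the function name below is purely illustrative. A minimal Python sketch shows that the first result is consistent with this conversion when N is taken to be the 96 subjects of the main sample:

```python
import math


def effect_size_r(z: float, n: int) -> float:
    """Convert a z statistic into the effect size r = z / sqrt(N).

    Assumption: this is the conversion behind the reported r values;
    the source does not state the formula explicitly.
    """
    if n <= 0:
        raise ValueError("n must be a positive number of observations")
    return z / math.sqrt(n)


# Perceived information load: z = 6.27, assuming all 96 subjects answered.
r_perceived = effect_size_r(6.27, 96)
print(round(r_perceived, 2))  # 0.64
```

Under the same formula, the second result (z = −5.73, r = −0.59) would imply a slightly smaller N, which would fit a few missing answers to that question.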

Interestingly, for the “low information load” condition, there was a statistically significant difference for “percentage of information used” between the “capital budgeting manual” and the “general principles” groups, although the number of information cues inspected should be the same for both groups. Subjects in the “capital budgeting manual” condition reported having used more information (Mdn = 70.00%) than subjects in the “general principles” condition (Mdn = 50.00%); U = 382.00, z = 2.66, p = 0.008, r = 0.39.Footnote 4 A possible reason for the “capital budgeting manual” group reporting having used more information might be that subjects subconsciously considered the contents of the capital budgeting manual as information cues. There is, however, no statistically significant difference for “used information” between those who relied on the capital budgeting manual and those who relied on general principles for the “high information load” condition.

In addition to the questions regarding perceived information load and use, questions regarding the characteristics of the decision aid (“general principles” vs. “capital budgeting manual”) were included. With regard to the rules versus principles discussion, we wanted to discover whether the conditions differed in the typical characteristics of rules-based instructions. Inspired by Nelson (2003, p. 91), we therefore included statements referring to “bright-line thresholds,” a high number of rules, very detailed rules, and very precise rules. There was also one question asking if general principles had to be followed. For this purpose, 7-point Likert scales with anchors “does not apply at all” and “completely applies” were used. Our data provide evidence of a difference for the “bright-line thresholds” statement: subjects in the “capital budgeting manual” condition scored higher (Mdn = 7) than subjects in the “general principles” conditionFootnote 5 (Mdn = 5.50); U = 1489.50, z = 2.88, p = 0.004, r = 0.30.Footnote 6

5.2 Results on perceived task complexity

Perceived task complexity was measured using the scale employed by Scott and Erskine (1980)—three 7-point Likert scales anchored by “difficult-easy,” “complex-simple,” and “varied–routine.” Reliability, as measured by Cronbach’s Alpha, improved from 0.69 to 0.83 when the item “varied-routine” was deleted. A score was calculated by summing the scores on the two remaining items.Footnote 7
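The reliability check described above can be sketched as follows. This is a minimal illustration of the standard formula for Cronbach's Alpha, not the authors' analysis script, and the item scores are invented for demonstration only.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's Alpha for a list of items.

    `items` holds one list of scores per item, with subjects in the
    same order in every list.
    """
    k = len(items)
    if k < 2:
        raise ValueError("at least two items are required")
    if len({len(scores) for scores in items}) != 1:
        raise ValueError("all items need the same number of subjects")
    totals = [sum(subject_scores) for subject_scores in zip(*items)]
    sum_item_variances = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - sum_item_variances / variance(totals))


# Hypothetical scores of five subjects (NOT data from the experiment).
difficult_easy = [2, 4, 5, 6, 7]
complex_simple = [1, 4, 5, 6, 7]
varied_routine = [5, 2, 6, 1, 4]  # deliberately unrelated to the other two

alpha_three_items = cronbach_alpha([difficult_easy, complex_simple, varied_routine])
alpha_two_items = cronbach_alpha([difficult_easy, complex_simple])
print(alpha_three_items < alpha_two_items)  # True
```

In the invented data, dropping the item that does not move with the other two raises Alpha, which mirrors the reasoning for deleting “varied-routine” from the scale.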

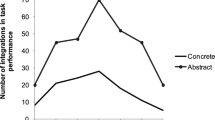

Hypothesis 1 predicts that perceived task complexity is higher for the “general principles” condition. A two-way independent ANCOVA with gender, age, and score of the knowledge questions as covariates revealed a significant main effect of whether subjects received general principles or the capital budgeting manual, F(1, 82) = 5.11, p = 0.026. Thus, hypothesis 1 is supported. Hypothesis 2 predicts that perceived task complexity is higher in the “high information load” condition. For the ANCOVA described above, the main effect of information load on perceived task complexity was also significant, F(1, 82) = 8.53, p = 0.005; Fig. 3. Therefore, hypothesis 2 is supported as well. The interaction term was not significant, F(1, 82) = 0.00, p = 0.995. The detailed results can be found in the supplementary materials (Tables 9–11).Footnote 8

Mann–Whitney U-tests run separately for the “general principles” and “capital budgeting manual” conditions showed that the increase in perceived task complexity caused by an increase in information load was statistically significant only for the “general principles” group (U = 339.50, z = 2.07, p = 0.039, r = 0.31) and not for the “capital budgeting manual” group (U = 389.50, z = 1.44, p = 0.150, r = 0.20).Footnote 9 Using the Jonckheere-Terpstra test for ordered alternatives, we found evidence that perceived task complexity was influenced by both increases in information load and whether subjects relied on general principles or the capital budgeting manual. This result indicates a trend of increasing perceived task complexity from “capital budgeting manual” and “low information load” to “general principles” and “high information load” (J = 2122.00, z = 2.72, p = 0.007, r = 0.28).
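For readers unfamiliar with the Jonckheere-Terpstra test, the J statistic can be sketched as a count over all pairs of observations from differently ranked groups. The code below is a minimal illustration with invented scores. The ordering of the two intermediate cells is not stated in the text and would have to be assumed, and the normal approximation used to obtain z and p is not covered.

```python
def jonckheere_terpstra_j(groups):
    """Jonckheere-Terpstra J statistic.

    `groups` lists the samples in the hypothesized order (lowest expected
    scores first). For every pair of groups (earlier, later), each pair of
    observations counts 1 if the later group's value is larger and 0.5 if
    the two values are tied.
    """
    j = 0.0
    for earlier in range(len(groups)):
        for later in range(earlier + 1, len(groups)):
            for x in groups[earlier]:
                for y in groups[later]:
                    if x < y:
                        j += 1.0
                    elif x == y:
                        j += 0.5
    return j


# Hypothetical complexity scores for three ordered cells (NOT study data).
print(jonckheere_terpstra_j([[1, 2], [3, 4], [5, 6]]))  # 12.0
```

A J value close to its maximum (here 12, the total number of cross-group pairs) indicates that scores rise consistently along the hypothesized order.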

5.3 Results on decision accuracy

To test hypotheses 3 and 4, we investigated two outcome measures. The first outcome measure was a score computed as the sum of correct decisions. The second measure was the correct budget allocation per segment (household, telecommunications, or entertainment), coded as a dummy variable with “1” signifying a correct budget allocation and “0” signifying an incorrect budget allocation. In the case of the telecommunications budget, for example, the dummy variable was coded as “1” if subjects approved proposal “G” and rejected proposal “F” based on the payback period (see Table 1) and as “0” in all other cases.
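The two outcome measures can be expressed as a short coding sketch. The function names and the "approve"/"reject" labels are illustrative assumptions. Only the solution for proposals F and G is given in the text, so the answer key for the remaining proposals is passed in as a parameter rather than guessed.

```python
from typing import Mapping


def telecom_allocation_correct(decisions: Mapping[str, str]) -> int:
    """Dummy variable: 1 if proposal G was approved and proposal F rejected."""
    return int(decisions.get("G") == "approve" and decisions.get("F") == "reject")


def sum_of_correct_decisions(decisions: Mapping[str, str],
                             answer_key: Mapping[str, str]) -> int:
    """First outcome measure: number of decisions that match the answer key."""
    return sum(decisions.get(proposal) == correct
               for proposal, correct in answer_key.items())


# Partial answer key taken from the text; other proposals are left out
# because their correct decisions are not stated in this section.
telecom_key = {"F": "reject", "G": "approve"}

subject = {"F": "approve", "G": "approve"}  # hypothetical subject
print(telecom_allocation_correct(subject))             # 0
print(sum_of_correct_decisions(subject, telecom_key))  # 1
```

The example shows why the two measures can diverge: a subject with one wrong decision still scores on the sum but receives a 0 on the segment dummy.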

Figures 4 and 5 show the frequency distributions for the sum of correct decisions, the first comparing the “general principles” and “capital budgeting manual” conditions (across both information load groups), and the second comparing the “high information load” and “low information load” conditions (across both decision aid groups). Both figures indicate that scores were concentrated at the maximum number of correct decisions (which was 7) and that the distributions are quite similar, suggesting that neither manipulation affected decision accuracy as measured by the sum of correct decisions.

Hypothesis 3 predicts decision accuracy to be higher in the capital budgeting manual condition. For both the general principles and the capital budgeting manual conditions the medians are 7, and the difference for scores in the two groups was not statistically significant (U = 1212.00, z = 0.84, p = 0.401, r = 0.09).Footnote 10 Based on our first measure of decision accuracy, hypothesis 3 is therefore not supported.

Hypothesis 4 suggests that decision accuracy will be lower for the high information load condition. The median for the sum of correct decisions was 7.00 for both the “high information load” and the “low information load” groups. As can be assumed from the frequency distribution, the difference between scores in the two groups was not significant (U = 1089.50, z = – 0.34, p = 0.732, r = – 0.04). Hypothesis 4 is therefore not supported when applying the first measure of decision accuracy.

Nevertheless, based on the second measure of decision accuracy described above (the correct allocation of the available budget per segment), the distribution of the scores for the correct allocation of the telecommunications budget (rejecting investment proposal F and approving investment proposal G) suggests that in the “capital budgeting manual” condition, a higher proportion of subjects allocated the budget correctly (98.00% vs. 77.78%). The association between the type of decision aid subjects received and whether they would allocate the telecommunications budget correctly was significant (Fisher’s exact test: p = 0.003, odds ratio 14.00). Still, the odds ratio must be interpreted with caution, as there was only one subject who did not allocate the budget correctly in the “capital budgeting manual” condition. We therefore find limited support for hypothesis 3 that predicts decision accuracy to be higher in the capital budgeting manual condition.
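The reported odds ratio and Fisher's exact test can be retraced with a small sketch. The cell counts below are not given in the text. They are an assumption reconstructed from the reported percentages: 49 of 50 subjects correct (98.00%) with the capital budgeting manual and 35 of 45 correct (77.78%) with general principles. The two-sided p-value sums the probabilities of all tables that are no more likely than the observed one, which is one common definition and not necessarily the one used by the authors' software.

```python
from math import comb


def odds_ratio(a: int, b: int, c: int, d: int) -> float:
    """Odds ratio for the 2x2 table [[a, b], [c, d]]."""
    return (a * d) / (b * c)


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total_tables = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x in the top-left cell.
        return comb(row1, x) * comb(row2, col1 - x) / total_tables

    p_observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance guards against floating-point ties.
    return sum(prob(x) for x in range(lowest, highest + 1)
               if prob(x) <= p_observed * (1 + 1e-7))


# Assumed counts: rows = manual, general principles; columns = correct, incorrect.
manual_correct, manual_wrong = 49, 1
principles_correct, principles_wrong = 35, 10

print(odds_ratio(manual_correct, manual_wrong, principles_correct, principles_wrong))  # 14.0
p_value = fisher_exact_two_sided(manual_correct, manual_wrong,
                                 principles_correct, principles_wrong)
```

With these assumed counts, the odds ratio matches the reported 14.00 and the p-value rounds to the reported 0.003. The single incorrect subject in the manual condition also shows why the odds ratio is fragile: one additional incorrect allocation would halve it.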

This result is primarily driven by the “high information load” condition: the effect of the type of decision aid was significant in the “high information load” condition (Fisher’s exact p = 0.002) but not in the “low information load” condition (Fisher’s exact p = 0.362). Table 15 in the supplementary materials shows the cross-tabulation with the resulting and expected counts for correctly and incorrectly allocated telecommunications budgets. In the “high information load” group, only 14 subjects in the general principles condition allocated the budget correctly, compared with 30 subjects in the capital budgeting manual condition.

For the allocation of the budgets for household and entertainment, the proportions of correct decisions do not differ statistically across cells (not tabulated). There was also no statistically significant difference when comparing proportions of correct decisions between low and high information load.

5.4 Results on decision confidence

Decision confidence was hypothesized to be higher in the “capital budgeting manual” condition than in the “general principles” condition (Hypothesis 5). Subjects indicated their confidence on a 7-point Likert scale after each of the seven approve or reject decisions. These scores were summed to calculate a confidence score.Footnote 11

A two-way independent ANCOVA with decision confidence as the dependent variable and gender, age, and score of the knowledge questions as covariates revealed a significant main effect of whether subjects relied on the capital budgeting manual or on general principles, F(1, 72) = 12.34, p = 0.001. Hypothesis 5 is therefore supported. In addition, there was a marginally significant main effect of an increase in information load, F(1, 72) = 3.81, p = 0.055Footnote 12; Fig. 6. The interaction between the two independent variables was not significant, F(1, 72) = 0.19, p = 0.664. The detailed results can be found in the supplementary materials (Tables 16–18).Footnote 13

Kendall’s tau showed a significant positive correlation between decision confidence and decision accuracy (τb = 0.26, p = 0.005), suggesting that higher decision confidence goes hand in hand with higher decision accuracy.
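Because accuracy scores cluster at the maximum of 7, the data contain many ties, which is the situation the tau-b variant is designed for. The following is a minimal sketch of the tau-b coefficient itself, with invented scores. It does not compute the p-value, and it is not the authors' analysis code.

```python
import math


def kendall_tau_b(x, y):
    """Kendall's tau-b for two equally long sequences, with tie correction."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    concordant = discordant = tied_x_only = tied_y_only = 0
    for i in range(len(x)):
        for j in range(i + 1, len(x)):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx == 0 and dy == 0:
                continue  # tied on both variables: excluded from both counts
            if dx == 0:
                tied_x_only += 1
            elif dy == 0:
                tied_y_only += 1
            elif dx * dy > 0:
                concordant += 1
            else:
                discordant += 1
    untied = concordant + discordant
    denominator = math.sqrt((untied + tied_x_only) * (untied + tied_y_only))
    if denominator == 0:
        raise ValueError("tau-b is undefined when a variable is constant")
    return (concordant - discordant) / denominator


# Hypothetical confidence scores and sums of correct decisions (NOT study data).
confidence = [30, 35, 35, 42, 44, 47]
accuracy = [5, 6, 7, 7, 7, 7]
tau_b = kendall_tau_b(confidence, accuracy)
```

Pairs tied on only one variable enter the denominator but not the numerator, so heavy clustering at the top of the accuracy scale pulls the coefficient toward zero.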

The Jonckheere-Terpstra test for ordered alternatives indicates a trend (decline in decision confidence) from “capital budgeting manual” and “low information load” to “general principles” and “high information load” (J = 926.00, z = – 3.26, p = 0.001, r = – 0.35).

As predicted, values for Kendall’s tau show that perceived task complexity and decision confidence are negatively correlated (τb = – 0.31, p < 0.001).

5.5 Additional analyses

When providing information to decision-makers or offering a decision aid, one could wonder whether personal characteristics of the decision-maker affect how effectively information or a decision aid is utilized. If there are notable differences, a standardized decision aid or provision of the same amount of information might not lead to the same desired results for every decision-maker. Early management information systems articles called for further research to investigate how to provide personalized information based on decision-maker characteristics (e.g., Mason and Mitroff 1973). However, it would be costly to develop personalized decision aids or provide information based on individuals’ preferences or individual choice processes, and this would also require insights into individuals’ decision processes (Snowball 1979). Early articles dealing with information overload on a conceptual level discussed whether individuals with differing structures would face information overload at similar levels (e.g., Wilson 1973). In addition, a number of articles analyzed the effect of experience on decision-making performance (e.g., Iselin 1988; Simnett 1996). However, with some exceptions (e.g., Benbasat and Dexter 1979, who analyze low vs. high analytic personality types) personality traits of the decision-maker have received less attention. We therefore analyzed whether personality dimensions and a measure of individuals’ time perspectivesFootnote 14 (Zhang et al. 2013; Zimbardo and Boyd 1999) influence decision-making performance in our experimental setting to derive whether a standardized approach is suitable.

As described above, in the post-experimental questionnaire, subjects answered a number of questions on personal characteristics, in particular the “Big Five” dimensions (Lang et al. 2001; Rammstedt and John 2007) and time perspectives (Zhang et al. 2013; Zimbardo and Boyd 1999).

The “Big Five” have emerged as a widely used measure for personality, consisting of the dimensions extraversion, agreeableness, conscientiousness, neuroticism, and openness (Digman 1990). The “conscientiousness” dimension was of particular interest. Barrick and Mount (1991) summarize the dimension as measuring “personal characteristics such as persistent, planful, careful, responsible, and hardworking, which are important attributes for accomplishing work tasks in all jobs” (Barrick and Mount 1991, p. 5). In their meta-reviews, Barrick and Mount (1991) and Hurtz and Donovan (2000) analyze the influence of the big five personality dimensions on different criteria for job performance across several occupational groups. They found conscientiousness to consistently predict job performance, while other personality dimensions only correlated with either selected criteria or selected job groups.

The post-experimental questionnaire included a short version of the big five inventory (Rammstedt and John 2007), with the exception of the conscientiousness scale, where the long form was used (Lang et al. 2001). Cronbach’s Alpha for the conscientiousness scale improved from 0.780 to 0.785 when excluding the item “tend to be disorganized” from the analysis. Including conscientiousness in models for perceived task complexity and decision confidence did not reveal a significant influence of the covariate. The same was the case for decision time (see Tables 22–30 in the supplementary materials for results). However, in a two-way independent ANCOVA with effort intensity as the dependent variable and decision aid and information load as the between-subject factors, conscientiousness as a covariate had a significant positive effect. Subjects scoring high on the conscientiousness scale also scored higher on effort intensity, F(1, 90) = 14.96, p < 0.001; details can be found in the supplementary materials in Tables 31–33.Footnote 15 Kendall’s tau, a non-parametric measure of correlation, likewise showed a significant positive correlation between conscientiousness and effort intensity (τb = 0.31, p < 0.001).

Finally, our analysis shows a significant influence when including gender as an additional independent variable in the model for decision confidence, F(1, 77) = 4.98, p = 0.029. The effect of the type of decision aid continued to be significant in the model, F(1, 77) = 10.86, p = 0.001. Male subjects were more confident with their decisions than female subjects (also see Tables 37–39 in the supplementary materials).

In addition, there was a three-way interaction effect between gender, information load, and decision aid in the ANOVA with task complexity as the dependent variable, F(1, 88) = 4.00, p = 0.049.Footnote 16 With general principles as a decision aid, male subjects reacted to an increase in information load with an increase in perceived task complexity, while the score for perceived task complexity stayed flat for female subjects (see Fig. 7). This was not the case with the capital budgeting manual as decision aid, where perceived task complexity increased for both male and female subjects when information load increased (see Fig. 8, also see Tables 40–42 in the supplementary materials).

However, including gender as an additional between-subjects factor reduces group sizes further (see Tables 43–45 in the supplementary materials). The results therefore must be interpreted with caution.

6 Discussion

Overall, our results support hypothesis 1 (perceived task complexity will be higher for the “general principles” condition than for the “capital budgeting manual” condition), hypothesis 2 (perceived task complexity will be higher for the “high information load” condition than for the “low information load” condition), and hypothesis 5 (decision confidence will be higher for the “capital budgeting manual” than for the “general principles” condition). There is limited support for hypothesis 3 (decision accuracy will be higher for the “capital budgeting manual” condition than for the “general principles” condition). Finally, hypothesis 4, which predicted lower decision accuracy for high information load, was not supported.

Trends in the analysis of perceived task complexity suggest that increases in information load and the move from the “capital budgeting manual” condition to the “general principles” condition resulted in increasing perceived task complexity, with information load being the more important factor with regards to changes in perceived task complexity. Correspondingly, trends in decision confidence suggest a decline in confidence. In this case, however, the move from the “capital budgeting manual” to the “general principles” had a more pronounced effect on the decline in decision confidence than an increase in information load.

When comparing the results of our experiment with prior literature, the specifics of the task at hand need to be considered, specifically the type of information that was presented (relevant vs. irrelevant vs. redundant), the nature of the task (structured vs. unstructured), and the surrounding environment (e.g., time pressure, incentives). Comparisons therefore need to be drawn with caution. Bearing these restrictions in mind, the following can be said about where our findings agree with or deviate from those of prior literature:

Decision accuracy is a frequently analyzed dependent variable in information overload research. In general, increases in information load lead to a decrease in decision accuracy beyond a certain point (e.g., Chan 2001; Hirsch and Volnhals 2012; Impink et al. 2021). However, most research does not explicitly focus on an increase in the number of irrelevant information cues. To the authors’ knowledge, only Iselin (1993) and Rakoto (2005) have explicitly considered the effect of an increase in irrelevant information. Unlike Iselin (1993), we did not find a significant effect of increases in the number of irrelevant information cues on decision accuracy. This might be because Iselin (1993) used an unstructured decision task, namely a bankruptcy prediction task, whereas our results are based on a structured decision task with an unambiguous solution. In line with our results, Rakoto (2005) found no significant effect of increases in the number of irrelevant information cues on decision accuracy.

Even though it is not the main variable of interest, another comparison can be drawn with regards to relative cue usage. In line with Shields (1983), Stocks and Tuttle (1998), Swain and Haka (2000), Tuttle and Burton (1999), and Hioki et al. (2020), we find relative cue usage to be lower with increasing information load—however, extant literature does not explicitly consider the effect of increases in irrelevant information cues.

The effect of increases in information load on decision confidence was only marginally significant; when including all subjects regardless of their result in the manipulation check, it was significant. This is largely in line with prior literature: Agnew and Szykman (2005) (first experiment), Hirsch and Volnhals (2012), Keasey and Watson (1986), Simnett (1996), and Snowball (1980) find no significant effects on decision confidence, whereas the second experiment in Agnew and Szykman (2005) does find a negative impact on decision confidence. Similarly to Chung and Monroe (2000), we found that increases in task complexity are negatively associated with decision confidence.

With regards to overarching frameworks for the effects of increases in information load, our findings underline the importance of investigating task characteristics together with increases in information load. Effects of increases in information load are embedded in a number of contextual factors, as pointed out by e.g., Eppler and Mengis (2004) and Roetzel (2019). While employing slightly differing terminology, both models name task characteristics, information characteristics, decision-maker characteristics, and characteristics of the data source (such as IT systems) as factors influencing decision processes and outcome. They also highlight the fact that the different factors are interlinked and influence each other.

While we do not find significant interactions between the type of decision aid employed and the amount of information provided, we do observe that task characteristics play a role and affect both perceived task complexity and decision confidence. As we did not adjust the task during the experiment, we cannot conclude which effect a differently designed decision aid or a different type of information provision would have on measures of decision performance. This would be a potential field for future research.

The results from our experiment contribute to a better understanding of how to design capital budgeting guidelines and investment proposals. In our experiment, reliance on a capital budgeting manual resulted in an increase of the second measure of decision accuracy (correct budget allocation per segment), although this was only the case for one out of three budget allocations. In addition, decision confidence was higher in the “capital budgeting manual” condition and correlated with decision accuracy. This indicates support for providing decision-makers with a capital budgeting manual. However, the experimental task was not designed to detect any drawbacks a capital budgeting manual might have (e.g., bureaucratization effects). With regards to generalizability of the results, the following aspects need to be considered: The experimental task was restricted to a short time period, whereas in practice, management accountants are likely to review investment proposals repeatedly over a longer time span. In addition, the organizational context in which decision-making in the capital budgeting domain typically takes place cannot easily be induced in an experimental setting. The fact that subjects in the capital budgeting manual condition reported having used more information compared to subjects in the “general principles” condition for the “low information load” groups might indicate that the use of a capital budgeting manual influences the way decision-makers judge the amount of information considered. Subjects relying on a capital budgeting manual exhibited higher decision confidence. As long as decision confidence is positively associated with decision accuracy, this is a desirable result. However, if the use of a capital budgeting manual leads to very high confidence in decisions made and decision-makers become overconfident, there may be adverse effects.

With regard to the amount of information contained in the investment proposals, perceived task complexity was higher for the “high information load” condition. As indicated above, increases in task complexity may lead to decreases in performance. Increases in perceived task complexity can therefore be considered as a first step towards a potential decline in decision-making performance. This implies that decision-makers should only be provided with the information essential for the decision at hand, because even additional information that is irrelevant to the decision raises perceived task complexity. The observed effect on perceived task complexity should be a conservative measure, as the distinction between relevant and irrelevant information cues was relatively easily made in the experimental task. In a field setting, irrelevant information might not be as easily distinguishable from the more important information cues, and the effect on perceived task complexity is thus likely to be higher. Furthermore, concluding from the additional effects of the type of decision aid provided, task structure and information load should be considered in conjunction: the less structured a task, the more important it is to not provide too much data.

With regard to the realism of the experimental task, we adapted the format of the investment proposals to the documents typically used in practice: information was not presented in tabular format with alternatives in rows and attributes in columns, a format typically employed to study search strategies (Shields 1980; Swain and Haka 2000), but was presented in the form of investment proposals. We assume this format is quite close to the format decision-makers are likely to encounter in practice. As mentioned above, we employed a structured decision task. In practice, most decision tasks taking place in upper and middle management are unstructured decision tasks (Iselin 1993), and additional information will most likely not only be irrelevant information but a mix of relevant, redundant, and irrelevant information, potentially leading to a U-curved relationship as initially put forward by Schroder et al. (1967). As there was a clear dominance of one alternative in the “general principles” condition, subjects did not need to trade off attributes against each other (non-compensatory decision strategy), which in turn should not lead to the decision-maker experiencing conflict (Zakay 1985). A clear dominance of such kind, however, is unlikely to be encountered in practice. The highly structured task, combined with a clear dominance of one investment alternative over others, is likely to have contributed to the clustering of scores for decision accuracy at the maximum point of the scale, raising the potential concern that the task might have been too easy. However, the changes in perceived task complexity, decision accuracy, and decision confidence triggered by the provision of a capital budgeting manual or by increases in information load seen in our results are likely to be at the lower end of possible effects; the findings are therefore still valid. Extending the task to less structured decisions or to decisions between alternatives where dominance is less obvious would add to our understanding of how these mechanisms operate in a different task environment. However, the drawback of employing a less structured task would probably be that a clear measure of task performance is difficult to formulate if there are no clear right or wrong answers (Wheeler and Murthy 2011).

In experimental decision aid research, there is often a control group that does not receive any decision aid (Wheeler and Murthy 2011). We chose not to include a group without any decision aid as this would have altered the task in a substantial way—differentiating between relevant and irrelevant information would not have been possible without guidelines as to which information cues to consider.

In addition, the experiment relied on a student sample, offered no monetary incentives, and imposed no time limit for performing the task. Whether student subjects should be used as a surrogate for practitioners in experimental accounting research has long been a matter of discussion (e.g., Ashton and Kramer 1980; Liyanarachchi 2007). There is moderate support for the view that in decision-making experiments such as the one described here, results for students are not too different from those of practitioners (Ashton and Kramer 1980). In addition, as the students were majoring in business or economics, it can be assumed that they had sufficient knowledge to complete the task and that results therefore should not differ substantially from those that professionals would have achieved. Incentives do have the potential to influence task performance, e.g., for decision accuracy as found by Tuttle and Burton (1999). As the task was related to the course content, no monetary incentives were provided. Applying time pressure can exacerbate the effects of increases in information load (e.g., Hirsch and Volnhals 2012). Investigating which (additional) effect time pressure might have had on decision-making performance was not in the scope of the experiment described.

Besides task characteristics, personal characteristics may also impact task performance (Bonner and Sprinkle 2002). However, the analysis conducted here only produced limited evidence with regards to the effect of personal characteristics.

From the experimental data, it is unclear which information subjects integrated into their judgments, for example whether subjects in the “high information load” condition considered information that was irrelevant to the decision. Although the comments on the reason for rejection of an investment proposal provide some general hints as to which information cues were used, this does not allow for a systematic analysis of information acquired during the decision process. Process tracing methods, as utilized by, for example, Swain and Haka (2000), can be a way to discover more about the information acquisition process during the capital budgeting review process.

Some of the limitations mentioned above are closely linked to opportunities for future research. Future research could further investigate decision aids for unstructured decision-making, capital budgeting decisions in an organizational context, and the application of process tracing technology to further investigate information use. Furthermore, the setting of the experimental task could be adapted to include monetary incentives and to evaluate whether limiting the time available leads to differing results. The exclusion rates from the manipulation checks were quite high. Even though this did not affect the homogeneity of the experimental groups, the lack of incentives might have reduced subjects’ diligence in answering the questions. As pointed out above, we applied single-item measures for selected variables to keep the questionnaire parsimonious. Evaluating (selected aspects of) the experiment with multi-item measures would be beneficial not only to validate the findings but also for future researchers who investigate, e.g., decision confidence in similar settings. In addition, a larger sample size should be considered.

Summarizing the implications for practice, management accountants should ensure that only relevant information is provided to the decision-maker. In addition, provision of a clearly structured decision aid was beneficial in the context investigated. While a decision aid is relevant for aiding human decision-making, a next step to improve decision-making could be to have a decision model either make suggestions or even replace human decision-makers. This step is especially relevant and feasible for highly structured tasks, such as the one investigated here. Overall, the experiment further contributes to our understanding of how a decision aid can improve decision-making in the capital budgeting context.

Data availability

Data will be made available upon reasonable request. Supplementary materials are available online.

Notes

The exact wording can be found in the experimental materials in the supplementary materials (in German).

Three subjects did not answer the manipulation check questions.

“n = 132” in the footnotes refers to the total number of subjects included in the respective analysis. However, as not all questions have been answered by all subjects, some results include missing values, effectively reducing the n for which the analysis could be run.

For n = 132, the difference was only marginally significant with Mdn = 60% for the “general principles” condition and Mdn = 70% for the “capital budgeting manual” condition: U = 608.50, z = 1.73, p = 0.087, r = 0.22.
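A common convention for the effect size of a Mann–Whitney test is r = z/√N. The notes do not state which formula or which N entered the calculation, so the following Python sketch only illustrates that convention as a plausibility check. The function names `effect_size_r` and `implied_n` and the value N = 62 are hypothetical and not taken from the original analysis.

```python
import math


def effect_size_r(z: float, n: int) -> float:
    """Effect size r = z / sqrt(N) (assumed convention, not confirmed by the paper)."""
    if n <= 0:
        raise ValueError("n must be positive")
    return z / math.sqrt(n)


def implied_n(z: float, r: float) -> float:
    """Number of observations implied by a reported z and r under r = z / sqrt(N)."""
    if r == 0:
        raise ValueError("r must be non-zero")
    return (z / r) ** 2


# Values reported in the note above: z = 1.73, r = 0.22.
n_estimate = implied_n(1.73, 0.22)
print(f"implied N is roughly {n_estimate:.0f}")

# Round trip with a hypothetical N of 62 observations.
print(f"r = {effect_size_r(1.73, 62):.2f}")
```

Because z and r are reported to two decimals, any N recovered this way is only approximate.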

One subject answered “5–6,” which was coded as 5.5.

For n = 132 subjects, there was a significant difference for two additional questions: the “general principles” group scored higher (Mdn = 6.00) than the “capital budgeting manual” group (Mdn = 5.00) on the question of whether general principles had to be followed: U = 1740.50, z = −2.03, p = 0.043, r = −0.18. In addition, surprisingly, the “general principles” group also scored higher (Mdn = 4.00) than the “capital budgeting manual” group (Mdn = 3.00) on the question of whether a high number of rules needed to be considered: U = 1741.50, z = −2.02, p = 0.044, r = −0.18.

Analysis of perceived task complexity included 89 subjects as not all subjects filled out the questions for perceived task complexity or the included control variables.

To investigate whether the distribution of the perceived task complexity score is approximately normal, the normality of residuals across all experimental groups was examined. A Shapiro–Wilk test, the histogram, and the normal Q–Q plot of residuals indicate that the data are sufficiently close to normality (see Tables 12–14 and Figs. 9, 10 in the supplementary materials).

The effect was also significant for the capital budgeting manual condition when including n = 132 subjects: U = 651.50, z = 2.09, p = 0.037, r = 0.26.

One of the 96 subjects did not answer all questions and was excluded from this analysis.

Analysis of decision confidence included 79 subjects as some subjects did not fill out the confidence scales for all decisions or the included control variables.

When including n = 132, the effect was significant with F(1, 95) = 4.32, p = 0.040.

To investigate whether the distribution of the decision confidence score is approximately normal, the normality of residuals across all experimental groups was examined. A Shapiro–Wilk test, the histogram, and the normal Q–Q plot of residuals indicate that the data are sufficiently close to normality (see Tables 19–21 and Figs. 11, 12 in the supplementary materials).

Analysis of time perspectives was of a more exploratory nature. As it did not yield significant results, we do not report the effects in detail.

Results should be interpreted in light of the following deviations from the ANCOVA assumptions: Levene’s test was significant, pointing to heteroscedasticity. In addition, the Shapiro–Wilk test was significant, indicating a deviation from normality. However, the distribution of residuals in a histogram and a Q–Q plot, together with the values for skewness (−0.90) and kurtosis (0.84), does not indicate an extreme departure from normality (see Tables 34–36 and Figs. 13, 14 in the supplementary materials).
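Skewness and kurtosis values such as those cited in the note above summarize the shape of the residual distribution. The following Python sketch shows the moment-based definitions. It assumes excess kurtosis (a normal distribution scores 0) and uncorrected population moments, whereas statistical packages often apply small-sample corrections, so it is not necessarily the computation behind the reported values. The function name `shape_statistics` and the sample residuals are hypothetical.

```python
from statistics import fmean


def shape_statistics(values):
    """Return (skewness, excess kurtosis) from uncorrected central moments."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean = fmean(values)
    deviations = [v - mean for v in values]
    m2 = sum(d ** 2 for d in deviations) / n  # variance (population form)
    if m2 == 0:
        raise ValueError("all observations are identical")
    m3 = sum(d ** 3 for d in deviations) / n
    m4 = sum(d ** 4 for d in deviations) / n
    skewness = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0
    return skewness, excess_kurtosis


# Hypothetical residuals with a longer left tail (illustration only).
residuals = [-3.0, -1.0, 0.0, 0.5, 1.0, 1.0, 1.5]
skew, kurt = shape_statistics(residuals)
print(f"skewness = {skew:.2f}, excess kurtosis = {kurt:.2f}")
```

A negative skewness, as in the note above, indicates a longer left tail; values near zero on both statistics are what a normal distribution would produce.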

When including n = 132 subjects, the interaction effect was no longer significant with F(1, 123) = 1.33, p = 0.250.

References

Agnew JR, Szykman LR (2005) Asset allocation and information overload: the influence of information display, asset choice, and investor experience. J Behav Financ 6(2):57–70

Arjoon S (2006) Striking a balance between rules and principles-based approaches for effective governance: a risks-based approach. J Bus Ethics 68(1):53–82

Arnold GC, Hatzopoulos PD (2000) The theory-practice gap in capital budgeting: evidence from the United Kingdom. J Bus Financ Acc 27(5–6):603–626

Arnold V, Collier PA, Leech SA, Sutton SG (2000) The effect of experience and complexity on order and recency bias in decision making by professional accountants. Acc Financ 40(2):109–134

Ashton RH, Kramer SS (1980) Students as surrogates in behavioral accounting research: some evidence. J Account Res 18(1):1–15

Barrick MR, Mount MK (1991) The big five personality dimensions and job performance: a meta-analysis. Pers Psychol 44(1):1–26

Bastardi A, Shafir E (1998) On the pursuit and misuse of useless information. J Pers Soc Psychol 75(1):19–32

Benbasat I, Dexter AS (1979) Value and events approaches to accounting: an experimental evaluation. Account Rev 54(4):735–749

Benston GJ, Bromwich M, Wagenhofer A (2006) Principles- versus rules-based accounting standards: the FASB’s standard setting strategy. Abacus 42(2):165–188

Blocher E, Moffie RP, Zmud RW (1986) Report format and task complexity: interaction in risk judgments. Acc Org Soc 11(6):457–470

Bonner SE (1999) Judgment and decision-making research in accounting. Acc Horiz 13(4):385–398

Bonner SE, Sprinkle GB (2002) The effects of monetary incentives on effort and task performance: theories, evidence, and a framework for research. Acc Org Soc 27(4–5):303–345

Bragg SM (2011) The new CFO financial leadership manual, 3rd edn. Wiley, Hoboken, NJ

Brunzell T, Liljeblom E, Vaihekoski M (2013) Determinants of capital budgeting methods and hurdle rates in Nordic firms. Acc Financ 53(1):85–110

Chan SY (2001) The use of graphs as decision aids in relation to information overload and managerial decision quality. J Inf Sci 27(6):417–425

Chung J, Monroe G (2000) The effects of experience and task difficulty on accuracy and confidence assessments of auditors. Acc Financ 40(2):135–151

Digman JM (1990) Personality structure: emergence of the five-factor model. Annu Rev Psychol 41(1):417–440

Dowling C, Leech S (2007) Audit support systems and decision aids: current practice and opportunities for future research. Int J Account Inf Syst 8(2):92–116

Einhorn HJ, Kleinmuntz DN, Kleinmuntz B (1979) Linear regression and process-tracing models of judgment. Psychol Rev 86(5):465–485

Eppler MJ, Mengis J (2004) The concept of information overload: a review of literature from organization science, accounting, marketing, MIS, and related disciplines. Inf Soc 20(5):325–344

Fabozzi FJ, Peterson Drake P, Polimeni RS (2008) The complete CFO handbook: from accounting to accountability. John Wiley and Sons, Hoboken, NJ

Fessler NJ (2003) Experimental evidence on the links among monetary incentives, task attractiveness, and task performance. J Manag Account Res 15(1):161–176

Fleisig D (2011) Adding information may increase overconfidence in accuracy of knowledge retrieval. Psychol Rep 108(2):379–392

Fuchs C, Diamantopoulos A (2009) Using single-item measures for construct measurement in management research: conceptual issues and application guidelines. Die Betriebswirtschaft 02:195

Glover SM (1997) The influence of time pressure and accountability on auditors’ processing of nondiagnostic information. J Account Res 35(2):213–226

Graham JR, Harvey CR (2001) The theory and practice of corporate finance: evidence from the field. J Financ Econ 60(2–3):187–243

Hackenbrack K (1992) Implications of seemingly irrelevant evidence in audit judgment. J Account Res 30(1):126

Hioki K, Suematsu E, Miya H (2020) The interaction effect of quantity and characteristics of accounting measures on performance evaluation. Pac Account Rev 32(3):305–321

Hirsch B, Volnhals M (2012) Information Overload im betrieblichen Berichtswesen—ein unterschätztes Phänomen. Die Betriebswirtschaft 72(1):23–55

Hoang D, Gatzer S, Ruckes M (2018) The economics of capital allocation in firms: evidence from internal capital markets. Retrieved from https://econpapers.wiwi.kit.edu/downloads/KITe_WP_115.pdf

Holthoff G, Hoos F, Weissenberger BE (2015) Are we lost in translation? The impact of using translated IFRS on decision-making. Account Eur 12(1):107–125

Hurtz GM, Donovan JJ (2000) Personality and job performance: the big five revisited. J Appl Psychol 85(6):869–879

Impink J, Paananen M, Renders A (2021) Regulation-induced disclosures: evidence of information overload? Abacus, advance online publication

Iselin ER (1988) The effects of information load and information diversity on decision quality in a structured decision task. Acc Org Soc 13(2):147–164

Iselin ER (1993) The effects of the information and data properties of financial ratios and statements on managerial decision quality. J Bus Financ Acc 20(2):249–266

Istvan DF (1961) Capital-expenditure decisions: how they are made in large corporations. Bureau of Business Research, Graduate School of Business, Indiana University, Bloomington, Indiana

Keasey K, Watson R (1986) The prediction of small company failure: some behavioural evidence for the UK. Acc Bus Res 17(65):49–57

Kelley CM, Lindsay D (1993) Remembering mistaken for knowing: ease of retrieval as a basis for confidence in answers to general knowledge questions. J Mem Lang 32(1):1–24

Kelton AS, Murthy US (2016) The effects of information disaggregation and financial statement interactivity on judgments and decisions of nonprofessional investors. J Inf Syst 30(3):99–118

Lang FR, Lüdtke O, Asendorpf JB (2001) Testgüte und psychometrische Äquivalenz der deutschen Version des Big Five Inventory (BFI) bei jungen, mittelalten und alten Erwachsenen. Diagnostica 47(3):111–121

Libby R, Lewis BL (1977) Human information processing research in accounting: the state of the art. Acc Org Soc 2(3):245–268

Liyanarachchi GA (2007) Feasibility of using student subjects in accounting experiments: a review. Pac Acc Rev 19(1):47–67

Luft J, Shields MD (2010) Psychology models of management accounting. Found Trends Account 4(3–4):199–345

Mason RO, Mitroff II (1973) A program for research on management information systems. Manag Sci 19(5):475–487

Moles P, Parrino R, Kidwell DS (2011) Fundamentals of corporate finance. Wiley, Hoboken, NJ

Mukherjee TK (1988) The capital budgeting process of large U.S. firms: an analysis of capital budgeting manuals. Manag Financ 14(2–3):28–35

Nelson MW (2003) Behavioral evidence on the effects of principles- and rules-based standards. Account Horiz 17(1):91–104

Norman R (1975) Affective-cognitive consistency, attitudes, conformity, and behavior. J Pers Soc Psychol 32(1):83–91

Oblak DJ, Helm RJ Jr (1980) Survey and analysis of capital budgeting methods used by multinationals. Financ Manag 9(4):37–41

Payne JW, Bettman JR, Johnson EJ (1993) The adaptive decision maker. Cambridge University Press, New York, NY

Pike R (1996) A longitudinal survey on capital budgeting practices. J Bus Financ Acc 23(1):79–92

Rakoto P (2005) Caractéristiques de l’information, surcharge d’information et qualité de la prédiction. Comptabilité - Contrôle - Audit 11(1):23

Rammstedt B, John OP (2007) Measuring personality in one minute or less: a 10-item short version of the Big Five Inventory in English and German. J Res Pers 41(1):203–212

Roetzel PG (2019) Information overload in the information age: a review of the literature from business administration, business psychology, and related disciplines with a bibliometric approach and framework development. Bus Res 12(2):479–522

Roetzel PG, Pedell B, Groninger D (2020) Information load in escalation situations: combustive agent or counteractive measure? J Bus Econ 90(5):757–786

Ross SA (2007) Modern financial management, 8th edn. McGraw-Hill Irwin, New York, NY

Rowley JE, Hartley RJ (2016) Organizing knowledge: an introduction to managing access to information, 4th edn. Routledge, Abingdon, Oxon, New York, NY

Ryan PA, Ryan GP (2002) Capital budgeting practices of the Fortune 1000: how have things changed? J Bus Manag 8(4):355

Schick AG, Gordon LA, Haka S (1990) Information overload: a temporal approach. Acc Org Soc 15(3):199–220

Schroder H, Driver M, Streufert S (1967) Human information processing. Holt, Rinehart and Winston, New York

Scott W, Erskine J (1980) The effects of variations in task design and monetary reinforcers on task behavior. Org Behav Hum Perform 25(3):311–335

Segelod E (1997) The content and role of the investment manual—a research note. Manag Account Res 8(2):221–231

Segelod E (1995) Resource allocation in divisionalized groups: a study of investment manuals and corporate level means of control. Avebury, Aldershot, UK

Shepard RN (1964) On subjectively optimum selection among multi-attribute alternatives. In: Shelley MW, Bryan GL (eds) Human judgments and optimality. Wiley, New York, NY, pp 257–281

Shields MD (1980) Some effects on information load on search patterns used to analyze performance reports. Acc Org Soc 5(4):429–442

Shields MD (1983) Effects of information supply and demand on judgment accuracy: evidence from corporate managers. Account Rev 58(2):284–303

Simnett R (1996) The effect of information selection, information processing and task complexity on predictive accuracy of auditors. Acc Org Soc 21(7–8):699–719

Snowball D (1979) Information load and accounting reports: too much, too little or just right? Cost Manag 15(3):22–28

Snowball D (1980) Some effects of accounting expertise and information load: an empirical study. Acc Org Soc 5(3):323–338

Sprinkle GB (2003) Perspectives on experimental research in managerial accounting. Acc Org Soc 28(2–3):287–318

Stocks MH, Tuttle B (1998) An examination of information presentation effects on financial distress predictions. In: Sutton SG (ed) Advances in accounting information systems, 6th edn. JAI Press, Stamford, Conn., pp 107–128

Sundvik D (2019) The impact of principles-based vs rules-based accounting standards on reporting quality and earnings management. J Appl Acc Res 20(1):78–93

Swain MR, Haka SF (2000) Effects of information load on capital budgeting decisions. Behav Res Account 12:171–198