Abstract

The most commonly used variant of conjoint analysis is choice-based conjoint (CBC). Here, hierarchical Bayesian (HB) multinomial logit (MNL) models are widely used for preference estimation at the individual respondent level. A new and very flexible approach to address multimodal and skewed preference heterogeneity in the context of CBC is the Dirichlet Process Mixture (DPM) MNL model. The number and masses of components do not have to be predisposed like in the latent class (LC) MNL model or in the mixture-of-normals (MoN) MNL model. The aim of this Monte Carlo study is to evaluate the performance of Bayesian choice models (basic MNL, HB-MNL, MoN-MNL, LC-MNL and DPM-MNL models) under varying data conditions (especially under multimodal heterogeneity structures) using statistical criteria for parameter recovery, goodness-of-fit and predictive accuracy. The core finding from this Monte Carlo study is that the standard HB-MNL model appears to be highly robust in multimodal preference settings.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Addressing consumer heterogeneity in choice models is an issue in the marketing literature since the mid-1990s (e.g., Allenby and Ginter 1995; Allenby and Rossi 1998; Rossi et al. 1996). Using appropriate statistical estimation techniques makes it possible for researchers and practitioners to analyze and fully understand markets with truly heterogeneous and/or segment-specific market structures. To date, the most widely applied discrete choice model is the multinomial logit (MNL) model (e.g., Horowitz and Nesheim 2021; Keane et al. 2021), which dates back to McFadden (1973). Considering random taste variation in the MNL model nowadays allows the researcher to derive implications at the individual respondent level and also to avoid or relax the stuck-in-the-middle problem by using individual-level estimates for decisions at the market level (e.g. if a firm plans to launch one new product for an aggregate of consumers). Wedel et al. (1999) distinguished between continuous and discrete representations of consumer heterogeneity. Although the “true” distribution of consumer heterogeneity is often continuous, the concept of the existence of a discrete number of market segments is often more attractive and easier to understand, especially from a managerial point of view (e.g., Ebbes et al. 2015; Tuma and Decker 2013). Whereas discrete approaches often over-simplify the concept of heterogeneity, continuous approaches may not be flexible enough to reproduce consumer heterogeneity adequately, especially if a unimodal heterogeneity distribution is assumed (Allenby and Rossi 1998; Rossi et al. 2005).

Choice models accounting for discrete and continuous representations of heterogeneity became popular for analyzing stated preferences using choice-based conjoint (CBC) data, too (Louviere and Woodworth 1983). On the one hand, the finite mixture MNL approach, proposed by Kamakura and Russell (1989) for the analysis of panel data, was applied to CBC data (DeSarbo et al. 1995; Kamakura et al. 1994; Moore et al. 1998). This approach, also known as latent class (LC) MNL model, divides the market into a manageable number of homogeneous segments with different preference and elasticity structures. On the other hand, Allenby et al. (1995), Allenby and Ginter (1995) and Lenk et al. (1996) published milestone articles for the application of models with continuous representations of heterogeneity to CBC data using hierarchical Bayesian (HB) estimation techniques. Using a normal distribution became the standard procedure to represent preference heterogeneity, referred to as HB-MNL model in the following (e.g., Kim et al. 2007; Webb et al. 2021). A number of researchers have tested and compared the capability and the statistical performance of HB-MNL vs. LC-MNL models, providing ambiguous findings, see e.g. Paetz and Steiner (2017) or Paetz et al. (2019) for detailed reviews. Andrews et al. (2002a) reported that the HB-MNL model worked quite robust even in case of multimodal preference structures. However, it is well known that the thin tails of the normal distribution tend to shrink unit-level estimates toward the center of the data (Rossi et al. 2005). This shrinkage, especially in multimodal data settings, could mask important information (e.g., new or different market structures) (Rossi et al. 2005).

As a generalization of the finite mixture model, the mixture-of-normals (MoN) approach avoids the drawbacks of both the LC-MNL (assumption of homogeneous market segments) and the HB-MNL model (assumption of a unimodal heterogeneity distribution), see Lenk and DeSarbo (2000). Here, a mixture of several multivariate normal distributions representing consumer heterogeneity is applied to a MNL model (Allenby et al. 1998). Using a sufficient number of components, any desired heterogeneity distribution can be approximated using a MoN (e.g., heavy-tailed, multimodal and skewed distributions), see Rossi et al. (2005) or Train (2009). Ebbes et al. (2015) and Chen et al. (2017) more recently reported a better performance of MoN-MNL models in comparison to LC-MNL models in data sets with a large within-segment consumer heterogeneity (Chen et al. 2017) and in the presence of continuous heterogeneity structures (Ebbes et al. 2015).

An additional variant of a discrete choice model for capturing consumer heterogeneity is a hierarchical MNL model with a Dirichlet Process Prior (Voleti et al. 2017). In this way, the researcher is able to model heterogeneity of an unknown form, which allows to classify this approach (as well as the MoN) as Bayesian semi-parametric method (Ansari and Mela 2003; Rossi 2014). One variant of a hierarchical MNL model with a Dirichlet Process Prior is the Dirichlet Process Mixture (DPM) MNL model. Here, part-worth utilities are drawn from continuous distributions (here multivariate normal distributions), where population means and covariances follow a Dirichlet Process. In other words, the continuous distribution is centered around the discrete part-worth utilities of the Dirichlet Process Prior (Voleti et al. 2017). The consideration of within-segment heterogeneity is – as well as in the MoN-MNL − a strength of the DPM-MNL model. Ferguson (1973) and Antoniak (1974) introduced the Dirichlet Process, and although e.g. Escobar and West illustrated Bayesian density estimation based on a Dirichlet Process already in 1995 (Escobar and West 1995), its application in the context of CBC data has been proposed only recently. An advantage of the DPM-MNL is that the number and composition of components are determined as a result a posteriori. Post hoc procedures (e.g., Andrews and Currim 2003) to find the optimal number of segments (components) − like in LC-MNL or MoN-MNL models − are no longer required (Ebbes et al. 2015; Kim et al. 2004; Voleti et al. 2017). Table 1 summarizes the strengths and weaknesses for each of the four model types (LC-MNL, HB-MNL, MoN-MNL, DPM-MNL).

A number of Monte Carlo studies related to conjoint analysis and discrete choice models have been conducted previously, focusing on

-

the comparison of different conjoint segmentation methods (Vriens et al. 1996),

-

the comparison of different variants of MNL models to capture preference heterogeneity (in particular comparing HB-MNL or MoN-MNL versus LC-MNL models, see Andrews et al. (2002a, 2002b), Otter et al. (2004), Chen et al. (2017), and Ebbes et al. (2015)),

-

the comparison of HB-MNL models involving different levels of information (Wirth 2010),

-

the analysis of the statistical capabilities of the HB-MNL model for extreme settings of CBC design parameters (Hein et al. 2020), or

-

the capability of DPM-MNL models to capture differently shaped heterogeneity distributions (Burda et al. 2008; Li and Ansari 2014).

To the best of our knowledge, no Monte Carlo study has yet systematically explored the comparative performance between LC-MNL, HB-MNL, MoN-MNL and DPM-MNL models for CBC data, with all of these models embedded in the same fully Bayesian estimation framework.

In a study by Voleti et al. (2017), the four models were empirically compared on the basis of eleven CBC data sets. The data sets varied in the number of respondents, the number of choice tasks per respondent, the number of alternatives per task, the number of attributes as well as the number of part-worth utilities to be estimated per respondent (which also depends on the number of attribute levels). The authors focused on the predictive accuracy of the different approaches and found that the DPM-MNL outperformed the competing models in terms of holdout sample hit rates and holdout sample hit probabilities. Importantly, on average, the HB-MNL model provided the second-best predictive performance. More, recently, Goeken et al. (2021) also compared the HB-MNL, the MoN-MNL, and the DPM-MNL models (but not the LC-MNL) in an empirical study, applying them to a real multi-country CBC data set for tires. The authors reported a slightly higher cross-validated hit rate for the DPM-MNL compared to both the MoN-MNL and the HB-MNL, thus confirming the tendency of a better predictive performance of the DPM-MNL in empirical settings. But again, the HB-MNL model was close to the DPM-MNL in its predictive accuracy.

Voleti et al. (2017, p. 334) further stated that the “recovery of parameters is also a relevant objective. However, the only way to address this issue is through computer simulations. […] We leave it to future research to address the issue of parameter recovery under alternative assumptions regarding the true distribution of heterogeneity.” Since Goeken et al. (2021) as well focused on empirical data and did not provide any findings for simulated data, we pick up the suggestion of Voleti et al. (2017) in this paper, and study the statistical performance of choice models with different representations of heterogeneity in a Monte Carlo study for CBC data. In particular, we compare the LC-MNL, HB-MNL, MoN-MNL and DPM-MNL models under varying experimental conditions for parameter recovery, goodness-of-fit and predictive accuracy. Like Andrews et al. (2002a), we further incorporate the aggregate MNL model that completely ignores heterogeneity as a benchmark for all heterogeneous models. As opposed to earlier simulation studies, we compare these choice models in one Monte Carlo study and estimate all models within the same Bayesian estimation framework.

Parameter recovery is an important criterion for product design decisions as parameters (part-worth utilities in CBC studies) relate to values of product attribute levels and managers are interested to find the best attribute levels for their products. How well a method can recover hidden “true” utility structures can only be studied with artificial data, but knowing which method under which condition is theoretically better in this aspect constitutes an important asset for managers. Independent of whether companies tailor their products to individual customers or not, it is essential and also standard to measure parameter recovery at the individual respondent level (e.g., Andrews et al. 2002a, 2002b, 2008). In other words, although managers might not be interested in parameter values (preference structures) of specific respondents, a better parameter recovery at the individual respondent level should enable managers to come closer to the real expectations (true preferences) of customers even if product line decisions are subsequently made on a more aggregate level. Market simulations using choice simulators are typically conducted based on individual parameters, even more so as it is well-known that parameter estimates from aggregate models can be strongly biased (“stuck-in-the-middle”). Sometimes, however, companies are also interested in knowing preference parameters of individual respondents, like e.g. in the discrete choice experiments for app-based recommender systems conducted by Danaf et al. (2019). There are also examples for commercial applications where individual-level estimates were the focus, e.g. studies about individual preferences for hair coloration or for preferred products in online shopping trips. Not least, we generally expect personalization efforts of firms and related CBC experiments to further increase in digital environments.

On the other hand, studying the predictive accuracy of the different models under experimental conditions can either generalize the empirical findings of Voleti et al. (2017) or reveal conditions where a different predictive performance can be expected. Predictive accuracy is as well an important dimension for management decisions, since managers are interested in predicting shares of choice (preference shares) as accurate as possible. It has been shown, however, that a model with a high predictive accuracy not necessarily must provide a high accuracy in recovering true parameter values (and the reverse). While minimizing errors in shares-of-choice forecasts represents a natural aggregate measure, it is further also common to assess the predictive validity of a model based on individual-level measures like hit rates or hit probabilities, as used in Voleti et al. (2017). If actual market share data are not available to validate shares of choice predictions, model validation can also be based on individual-level measures (like hit rates in holdout tasks) to find the best model for market simulations. We use the latter approach to provide comparability to Voleti et al. (2017).

To carve out differences in the statistical performance between the classes of models with discrete versus continuous representations of heterogeneity, we specifically vary the levels of within-segment and between-segment heterogeneity. In particular, we want to investigate (1) which representation of heterogeneity is favorable to analyze CBC data, (2) if there is a clear recommendation toward one model for discovering multimodal heterogeneous preference structures and (3) whether (and if how) related findings vary depending on specific levels of our experimental factors. Furthermore, we are particularly interested in (4) how robust the HB-MNL model performs especially in terms of parameter recovery and predictive accuracy compared to the other heterogeneous models due to its underlying unimodal preference distribution which seems least appropriate for segmented markets as considered here. Finally, we want to prove (5) whether the empirical findings of Voleti et al. (2017) with regard to the predictive performance of the models hold for simulated data, too.

In the next section, we propose the design of our Monte Carlo study. In particular, we describe the different choice models, the estimation process, the performance measures used, and the data generation process including experimental factors and factor levels. We subsequently present the results of the Monte Carlo study, discuss implications and provide an outlook onto future research perspectives. We used the R software (R Core Team 2020) for data generation, choice design construction, model estimation and model evaluation. For model estimation, we used the bayesm package (Rossi 2019) within the R software.

2 Design of the Monte Carlo study

2.1 Models

Since the 1990s, hierarchical Bayesian models have been used for part-worth utility estimation in a CBC framework. The strength of these methods is the ability to yield part-worth utilities at the individual respondent level even when little individual respondent information is available. This is possible by using prior distributions, which borrow information from the sample population (population mean and population covariance). Using a multivariate normal distribution as a first-stage prior has become the state–of–the–art to represent heterogeneity. However, the use of a single normal distribution can be considered as a very conservative approach. Unit-level estimates are shrank toward the population mean, which may mask potential multimodalities in consumer preferences (Rossi et al. 2005). Using a mixture of normal distributions as a first-stage prior can relax this weakness. In particular, multimodal heterogeneity structures as well as thick tails and skewed distributions can be modelled that way. Allenby et al. (1998) pointed out that many distributions can be approximated by using the MoN approach.

Let us denote the utility respondent \(n\) \((n=1,\dots ,N)\) obtains from alternative \(j\) \(\left(j=1,\dots ,J\right)\) in choice situation \(s\) \((s=1,\dots ,S)\) as

where \({\mathrm{V}}_{\mathrm{njs}}={\upbeta }_{\mathrm{n}}^{\mathrm{^{\prime}}}{\mathrm{x}}_{\mathrm{njs}}\) and \({\upvarepsilon }_{\mathrm{njs}}\) represent the deterministic utility and the stochastic utility components, respectively. \({\upbeta }_{\mathrm{n}}\) denotes the vector of part-worth utilities of respondent \(n\), and \({\mathrm{x}}_{\mathrm{njs}}\) is a binary coded vector indicating the attribute levels of alternative \(j\) offered to respondent \(n\) in choice situation \(s\). Assuming that the error term \({\varepsilon }_{njs}\) follows a Gumbel distribution we obtain the MNL model (Train 2009):

To be able to model multimodality with a mixture-of-normals approach consisting of \(T\) components, we can specify the hierarchical model as follows (Rossi et al. 2005; Rossi 2014):

\({l}_{n}\in \left\{\left.1,\dots ,T\right\}\right.\) indicates the components from which respondent \(n\) can be drawn and follows a multinomial distribution. \(p\in {\mathbb{R}}^{T}\) denotes the associated probabilities of the multinomial distribution which follow a Dirichlet distribution. \(\alpha \in {\mathbb{R}}^{T}\) can be interpreted as a tightness parameter, which has an influence on the masses of the components. Rossi (2014) for example shows that larger values of \(\alpha\) are associated with a higher prior probability for models with a large number of components. The corresponding population means \({b}_{t}\) and the covariance matrices \({W}_{t}\) with \(t\in \left\{\left.1,\dots ,T\right\}\right.\) are normal and inverse Wishart distributed, respectively. The dimensions of \({b}_{t}\) and \({W}_{t}\) depend on the number of parameters to be estimated. With this model framework, the MoN-MNL model and some nested model variants can be estimated based on CBC data. For \({W}_{{l}_{n}}\ne 0\) and \(T=1\) for example we obtain the HB-MNL model. For diagonal elements of \({W}_{{l}_{n}}\) close to zero we can further approximateFootnote 1 the LC-MNL (\(T\ne 1\)) and the aggregate MNL (\(T=1\)) model (Allenby et al. 1998; Lenk and DeSarbo 2000). A reasonable choice of prior settings therefore leads to an approximated LC-MNL and MNL model with a discrete distribution of heterogeneity. By weighting the estimated part-worth utilities of a LC-MNL with the posterior membership probabilities, we obtain part-worth utilities on an individual level (Andrews et al. 2002a).

Using a Dirichlet Process allows for a countable infinite number of components by supplementing the component parameters with additional priors. The DPM-MNL model can therefore be seen as an extension of the MoN-MNL approach. Rossi (2014) comments on a better approximation of multimodal distributions when using Dirichlet Processes. One possible reason for this superiority is that the Dirichlet Process offers the benefits of automatically inferring the number of mixture components. Rossi (2014) stated that in practical applications no more than about 20 components are used in a MoN approach. In some cases this a priori specified number of components in a MoN approach is not near the limiting case (Rossi 2014). Another possible reason for this superiority is that additional priors are placed on the parameters and hyper-parameters of the Dirichlet Process resulting in substantial performance differences and more flexible prior assumptions. To obtain the DPM-MNL model, we replace the Dirichlet prior by a Dirichlet Process:

\({\alpha }_{DPP}\in {\mathbb{R}}\) is referred to as concentration parameter or Dirichlet Process tightness parameter. Similar to the MoN-MNL, increasing \({\alpha }_{DPP}\) puts a higher prior probability on models with a large number of components (Rossi 2014). Rossi (2014) chooses a flexible priorFootnote 2 for the concentration parameter based on Conley et al. (2008). The advantage of this prior (as compared to e.g. gamma priors) is that the implications for the distribution of the number of possible components are more intuitive to assess (for more details see e.g. Rossi (2014)):

Here, \(\underline{\alpha }\in {\mathbb{R}}\) and \(\overline{\alpha }\in {\mathbb{R}}\) are chosen to reflect the range of the probable number of components, and \(\omega\) is a power parameter. Conley et al. (2008) as well as other authors (e.g., Voleti et al. 2017) describe the modus operandi of the Dirichlet Process and especially of the concentration parameter \({\alpha }_{DPP}\) with the help of the stick-breaking representation published by Sethuraman (1994). There, the draws from the Dirichlet Process can be represented as an infinite mixture of discrete “atoms” with specific probabilities. Following Conley et al. (2008) the baseline distribution \({G}_{0}\) is parametrized as follows:

The priors on \(a, \nu\) and \(u\) are:

where \(d\) is the dimension of the data (here the number of mean part-worth utilities) and \(\mathrm{U}\) is the uniform distribution. Appropriate prior settings as well as more information on the estimation process are presented in the next section.

2.2 Estimation

In the following, MNL, LC-MNL, HB-MNL, MoN-MNL as well as DPM-MNL models were estimated using Bayesian procedures to obtain part-worth utilities. Markov chain Monte Carlo (MCMC) methods were applied to generate draws from posterior distributions.

We used a Gibbs Sampler with a random walk Metropolis step for the MNL coefficients \({\beta }_{n}\) for each respondent \(n\) as outlined in Sect. 5.5 of Rossi et al. (2005) and Sect. 5.2 of Rossi (2014). In addition to the “default” prior settings suggested by Rossi (2014),Footnote 3 we tested a variety of additional prior settings. We finally adapted the prior settings (in particular the settings for the prior covariance matrix \(\Sigma\)) partly from Sawtooth Software (Sawtooth Software 2016) as they provided the best results in terms of part-worth recovery. Specifically, we chose the following prior configuration to estimate MoN-MNL models (compare Eq. (3)):

where \(d\) represents the dimension of the data (here the number of mean part-worth utilities). The prior covariance matrix \(\Sigma\) was chosen according to Sawtooth Software (2016) with a prior variance of \(2\). Since we can approximate the LC model by the MoN model for diagonal elements of \(\Sigma\) being close to \(0\) (Allenby et al. 1998), we modified the parameters of the inverse Wishart distribution to estimate the LC models as followsFootnote 4:

where \(\mathrm{I}\) is the identity matrix. Note that the prior covariance matrix \(\Sigma\) of the inverse Wishart distribution is a positive-definite matrix. Therefore, we approximate the LC-MNL model by setting the diagonal elements of \(\Sigma\) close to zero. The estimation of both the MoN-MNL and the LC-MNL model was carried out for a fixed number of components \(T\in \left\{\left.1,\dots ,6\right\}\right.\), which implicitly included the HB-MNL (\(T=1, {W}_{{l}_{n}}\ne 0\)) and the simple MNL model (\(T=1, {W}_{{l}_{n}}=0\)).

To estimate the DPM-MNL model, we set the power parameter \(\omega\) to 0.8 (Conley et al. 2008). Following Rossi (2014), we set the other prior parameters as follows:

\(\underline{\alpha }\) and \(\overline{\alpha }\) were chosen to provide a broad prior support for values from 1 to 50 components. We also performed a sensitivity analysis regarding these prior settings and found out that results only differed marginally for different choices of \(\underline{\alpha }\) and \(\overline{\alpha }\). This is in line with the findings reported by Rossi (2014) and Voleti et al. (2017).

The MCMC sampler was run for \(\mathrm{200,000}\) iterations with a burn-in period of \(\mathrm{190,000}\) iterations. We used only every \(50\)th draw of the remaining 10,000 draws after convergence to reduce autocorrelation among the draws. We evaluated the performance of the various models based on individual draws after the burn-in phase. More precisely, each measure of performance was at first computed on draw-level, and subsequently averaged across the draws. This procedure enables to fully exploit the posterior distribution and also prevents the label switching problem (Frühwirth-Schnatter et al. 2004; Rodríguez and Walker 2014). We monitored the time-series plots of parameters and performance measures to ensure convergence of the MCMC chains. We furthermore calculated Gelman and Rubin’s potential scale reduction factor to formally prove convergence (Gelman and Rubin 1992). Each check demonstrated that all MCMC chains appeared to reach stable states.

2.3 Experimental design

The choice of the experimental factors and factor levels leans on the Monte Carlo designs used by Vriens et al. (1996), Andrews et al. (2002a), Andrews et al. (2002b) and Andrews and Currim (2003). Overall, six factors were experimentally manipulated in the current study: the model complexity (number of attributes and attribute levels), the number of segments, the separation between segments (between-segment heterogeneity), the segment masses, the degree of within-segment heterogeneity, as well as the number of choice sets to be evaluated per respondent. All factors and their corresponding factor levels used, together with some additional notes, are shown in Table 2, and we will refer back to this table several times in the following.

The more attributes (and attribute levels) are relevant for preference formation, the more parameters (part-worth utilities) a conjoint choice model has. Since attributes in conjoint studies are specified with a discrete number of levels each (including the metric attributes), effects- or dummy-coding is used for parameter estimation (see Sect. 2.1). We vary the number of attributes and levels by analyzing treatments with 6 attributes with 3 levels each, 9 attributes with 4 levels each, or 12 attributes with 5 levels each, leading to choice models with 12, 27, or 48 individual parameters to be estimated for each respondent.Footnote 5 A larger number of individual parameters leads to a higher model complexity (factor 1) and given a certain number of choices per respondent to a smaller number of degrees of freedom for model estimation. It can therefore be assumed that a larger number of parameters at the individual respondent level lead to less reliable parameter estimates in all models. Note that we assign no specific meaning to the attributes and do not consider one attribute explicitly to represent the price attribute, since the interpretation of the attributes can be held arbitrary in our Monte Carlo study. Price is often (very) important in empirical studies as well as generally relevant from an economic point of view in CBC studies if related quantities like willingness-to-pay or expected revenue or profit calculations are additionally considered. On the other hand, there are many situations where price is not relevant in choice experiments. Detergents for example have different fragrances and clients are often only interested in preferences for fragrances in combination with the brand (different fragrances do not affect the price of detergents). Smartphone apps are usually not price relevant and can be added along the preferences of the customers. However, apps are relevant for purchase decisions and clients are therefore interested in customers’ preferences for apps. Lastly, the development of new cars traditionally goes through a three-stage preference elicitation process: conjoint on design (design clinic), conjoint on features (concept clinic), conjoint on pricing (pricing clinic). In the first stage, price is never included since the “look” of the car is the primary focus here. Price is mostly considered only in the last stage, but some manufactures already additionally conduct a price-only conjoint study in the second stage where preferences for additional features (e.g. color, interior design, entertainment features) are collected.

Given a segmented market structure as assumed in our Monte Carlo study, we expect a better performance of the LC-MNL, MoN-MNL and DPM-MNL models compared to simple MNL and HB-MNL models, since the former models can handle multiple segments (factor 2). The simple MNL is not able to detect any segment structures due to its assumption of parameter homogeneity. Similarly, from a theoretical perspective, the assumption of a unimodal prior in the HB-MNL model is per se not in line with the existence of segmented markets or should make it at least much more difficult to identify existing multimodal preference structures. We therefore expect a worse performance of the HB-MNL model for multimodal preference structures in terms of parameter recovery and prediction accuracy, as well. If segments are less clearly separated from each other (factor 3), i.e. the closer segment centroids are to each other and hence the less between-segment heterogeneity exists, the less distinct the disadvantage for the HB-MNL model is expected to be (e.g., Andrews et al. 2002a).

Including factor 4 allows us to consider more or less (within-segment) heterogeneity in the part-worth utility structures across respondents (Andrews et al. 2002a; Hein et al. 2019; Vriens et al. 1996). Since HB models borrow information from all individuals (respondents) for parameter estimation, the degree of heterogeneity in a sample might affect the individual-level parameter estimates. Previous Monte Carlo studies report different findings about whether models with continuous or discrete representations of heterogeneity are better suited to capture existing preference heterogeneity. While Andrews et al. (2002a, 2002b) have shown that continuous and discrete approaches worked similarly well concerning parameter recovery and predictive validity (Andrews et al. 2002a, 2002b), Otter et al. (2004) reported that the discrete (continuous) approach performed superiorly if the underlying heterogeneity distribution was strictly discrete (continuous). In addition, for sparse data at the individual respondent level, Otter et al. (2004) found the discrete approach to provide a superior parameter recovery and predictive performance.Footnote 6

We expect that both a smaller within-segment and between-segment heterogeneity should positively affect the performance of simple MNL and LC-MNL models, because both only use discrete support points. When the inner-segment heterogeneity is large, we expect a better performance of HB-MNL, MoN-MNL and DPM-MNL models. Furthermore, it can be assumed that it is more difficult to identify the “true” segment structure (factor 2) for more heterogeneous samples, especially if the separation between segments is small (factor 3). The heterogeneity levels are chosen according to Andrews et al. (2002a). Based on a variety of Monte Carlo studies in the context of finite mixture models summarized in a meta-study of Tuma and Decker (2013), we generated preference structures for 2, 3 and 4 segments. We further expect problems for the LC-MNL model in identifying small segments, i.e. when the masses of components are rather small. In other words, we expect a better performance of LC-MNL models in the symmetric case when the number of respondents is equal across segments compared to the asymmetric case when segment sizes are different from each other (one large, one or several small segments, factor 5) (Andrews and Currim 2003; Dias and Vermunt 2007).

Factor 6 addresses the implementation of CBC studies in market research practice and the related problem that clients want to incorporate more and more attributes while keeping the choice task manageable for respondents (e.g., Hauser and Rao 2004; Hein et al. 2020). In their meta-analyses of empirical CBC studies, both Hoogerbrugge and van der Wagt (2006) and Kurz and Binner (2012) could show that using too many choice tasks per respondent (more than about 15) did no longer increase or may even decrease the predictive performance of HB models, since respondents tend to apply simplification strategies or become disengaged in later choice tasks (also referred to as “individual choice task threshold”). If a choice design comprises more choice tasks than manageable for a single respondent, the researcher can split the design into several versions. Of course, in a Monte Carlo study the number of choice tasks to be completed by a respondent is not relevant as artificial respondents do not become fatigue. Still, by varying the length of the choice task we are able to analyze the statistical effects of shorter-than-optimal designs (regarding the criterion of orthogonality on the individual respondent level) on the model performance. We expect a worse performance of models when splitting the choice task into several versions since then the choice design does not allow an uncorrelated estimation of main effects (Street et al. 2005).

Note that we did not vary factor 6 for treatments with 12 individual parameters (factor 1, see Table 2) since here the resulting optimal number of 18 choice sets per respondent is (nearly) compatible with the “individual choice tasks threshold” of respondents (see next section for more details). Thus, we obtain \({2}^{3}\times {3}^{2}+{2}^{4}\times 3=120\) experimental data conditions (treatments) and with one replication (i.e., two runs) per treatment 240 data sets. In empirical applications, only the number of parameters to be estimated at the individual respondent level (factor 1) and the number of choice sets respectively the number of versions (factor 6) are observable prior to estimation. In contrast, the number of segments (factor 2), the separation of segments (factor 3), the amount of heterogeneity across respondents (factor 4), and the masses of segments (factor 5) are not known a priori to model estimation. Table 2 provides an overview of all factors and their corresponding factor levels used in our Monte Carlo study together with additional notes.

Generally, we expect the MoN-MNL and especially the DPM-MNL models to outperform the other models (with regard to both parameter recovery, model fit and predictive validity) because they accommodate both within-segment and between-segment heterogeneity. Voleti et al. (2017) only focused on predictive capabilities and found out that the DPM-MNL model can improve the predictive validity. However, for their data sets, the MoN-MNL model was not able to improve the predictive validity over HB-MNL models. On the other hand, data in CBC studies are generally quite sparse on the individual respondent level, and it is therefore not clear whether the performance of the more complex MoN-MNL and DPM-MNL models necessarily outperforms the more restrictive (single) multivariate HB-MNL model.Footnote 7 To the best of our knowledge, no Monte Carlo study related to conjoint data has yet compared the goodness of parameter recovery of MoN-MNL and DPM-MNL models. In particular, we will analyze how well these two types of models are able to detect the “true” part-worth utility structure compared to the other models in extreme scenarios (e.g., 4 segments, small separation, large heterogeneity and asymmetric segment masses). We further controlled for the “overlapping mixtures problem” by holding the sample size constant (Kim et al. 2004), and used 600 respondents following the study of Wirth (2010).

2.4 Data generation

The following section describes how the synthetic data sets were generated in our Monte Carlo study. The data generation process can be divided into the construction of the choice task design, the generation of individual part-worth utilities, and the generation of choice decisions based on the choice task design and individual part-worth utilities. The data sets that support the findings of this study are available from the corresponding author upon request.

2.4.1 Choice task design

Following Street et al. (2005) and Street and Burgess (2007), we constructed optimal choice designs. Determinants for the design generation were the model complexity (factor 1) as well as the number of choice task versions (factor 6). The number of individual parameters, i.e. part-worth utilities to be estimated for each respondent, results from the specification of the number of attributes and attribute levels, as already outlined in the last subsection and summarized in Table 3 below. We used symmetrical designs (i.e., the same number of attribute levels across attributes) to control the number-of-levels effect (Verlegh et al. 2002).

Depending on the number of attributes and attribute levels, we chose an orthogonal array from Kuhfeld (2019) as a starting orthogonal design to fix the first alternative in each choice set. Further alternatives were then added to the first options in each choice set by generating systematic level changes via modulo arithmetic.

As a result, as many pairs of alternatives in a choice set had assigned different levels for each attribute (Street et al. 2005). To ensure an equal distribution of attribute levels (per attribute) across the choice sets (level balance) as well as an equal distribution of attribute levels across alternatives within each choice set (minimal overlap) we constructed choice sets with 3, 4, or 5 alternatives for treatments with 3, 4 or 5 attribute levels (Table 3), respectively (Street and Burgess 2007). The information matrices of our CBC designs were thus diagonal so that estimates of main effects were uncorrelated. By comparing the determinants of the information matrices with the determinants of the information matrices of an optimal design, we obtained a D-efficiency of 100% for each of our generated choice designs.Footnote 8 Based on the generated optimal choice tasks each synthetic respondent completed all corresponding choice sets on the one hand. This ensured that all main effects could be estimated completely independently from each other on the individual respondent level. On the other hand, the choice task length of these optimal designs may be far too large for real respondents. We therefore split up the generated optimal choice tasks into several versions (where necessary) in order to limit the number of choice sets per respondent to a manageable number (factor 6). As a consequence, choice designs were no longer optimal on an individual respondent level because desirable properties such as orthogonality or level balance could have been negatively affected by the split. However, since the choice sets were randomly split into several versions, they were at least near-optimal (Street and Burgess 2007). For the treatments with 6 attributes with 3 levels each the starting orthogonal design comprised 18 alternatives, which can be just considered a manageable number. Therefore, a split of the optimal design across respondents was not necessary here. In contrast, treatments including 9 attributes with 4 levels each resulted in an optimal choice design with 32 choice sets. Accordingly, the design was divided into two versions with a length of 16 choice sets each. Similarly, the optimal design for treatments involving 12 attributes with 5 levels each was divided into 5 versions with a length of 20 choice sets each. This procedure ensured desirable properties for optimal or at least near-optimal discrete choice experiments (Street and Burgess 2007). Table 4 summarizes how factor 6 was operationalized depending on the model complexity (factor 1). To be able to assess the predictive validity of the competing models, three additional holdout choice tasks were randomly generated for each respondent.

2.4.2 Part-worth utilities

For each of the 240 data sets (i.e., for each treatment and replication), individual part-worth utilities were generated in such a way that they followed a mixture of multivariate normal distributions. Leaning on Wirth (2010), elements of a vector of initial “true” mean part-worth utilities (\({\beta }_{start}\in {\mathbb{R}}^{d}\), where \(d\) is the total number of attribute levels) were drawn from a uniform distribution within the range between − 5 and + 5. Such a range for mean betas is typical for empirical applications (cf. Wirth 2010). We can confirm this finding of Wirth based on an inspection of a random sample of 250 real-world HB-CBC studies conducted at one of the largest market research institutes worldwide (with 6 to 12 attributes, 3 to 5 attribute levels, and 11 to 15 choice tasks).Footnote 9

The generation of mean part-worth utilities (centroids) for the segments (factor 2) closely follows the studies of Andrews et al. (2002a) and Andrews and Currim (2003) and is based on the generation of a separation vector that controls the distance between the segment centroids. In particular, the separation between segments was manipulated by generating a vector \({sep}_{z}\in {\mathbb{R}}^{d}\) with \(\mathrm{z}\in \left\{1, 2,\dots ,Z\right\}\) as segment index and \({sep}_{z}\sim N\left(\mathrm{1,0.1}\right)\) for a small separation and \(N\left(2, 0.2\right)\) for a large separation (factor 3), see Andrews and Currim (2003). These vectors were then added to the initial vector of “true” mean part-worth utilities to generate the segment-specific centroids (i.e., “true” segment mean part-worth utilities):

\({SIGNS}_{z}\in {\mathbb{R}}^{d\times d}\) denotes a diagonal matrix containing the values − 1 and + 1, each of which were randomly drawn based on a Bernoulli distribution with parameter 0.5. Finally, the generated segment mean part-worth utilities were rescaled to become zero-based, i.e. so that each first level of an attribute constitutes the reference category with a corresponding part-worth utility of zero. Note that multiplying segment-specific part-worth utilities by a constant factor like in Vriens et al. (1996) or Andrews et al. (2002b) also scales the separation of segments. However, Andrews et al. (2002a) demonstrated that such a procedure affects the scale factor of the MNL model, which makes it difficult to assess parameter recovery (Andrews et al. 2002a). Similarly, multiplying the separation vectors \({sep}_{z}\) by a constant other than − 1 or + 1 would confound the scale factor of the MNL and thus the sensitivity of respondents, too (Andrews and Currim 2003).

Next, inner-segment heterogeneity (factor 4) was generated by adding quantities to the mean segment part-worth utilities \({\beta }_{z}\). These quantities were drawn from a multivariate normal distribution with mean vector 0 and covariance matrix \({V}_{{\beta }_{z}}\in {\mathbb{R}}^{d\times d}\), the latter which was determined by:

\({\mathrm{\rm I}}_{{\beta }_{z}}\in {\mathbb{R}}^{d\times d}\) denotes the identity matrix, and the scalar \(v\) controls the degree of inner-segment heterogeneity with either \(v=0.05\) (small heterogeneity) or \(v=0.25\) (large heterogeneity), see Andrews et al. (2002a).Footnote 10 In addition, segment masses (factor 5) were defined to be either equal or unequal. In the symmetric case, the relative size of segment \(z\) is equal to \(1/Z\). In the asymmetric case, the relative size of the largest segment was fixed to \(1.5\times (1/Z) ,\) while the remaining respondents were split equally across the other segments with relative segment sizes of \((1-1.5\times(1/Z))/(Z-1).\)

Table 5 shows the resulting segment masses for the symmetric versus asymmetric case depending on the number of segments considered.

2.4.3 Generation of choices

Based on the generated choice task designs and the generated “true” individual part-worth utilities, deterministic utilities \({\mathrm{V}}_{\mathrm{njs}}={\upbeta }_{\mathrm{n}}^{\mathrm{^{\prime}}}{\mathrm{x}}_{\mathrm{njs}}\) could be at first computed for each respondent for each alternative in each choice set. Stochastic utilities were subsequently computed by adding a Gumbel distributed error term with standard error variance to the deterministic utilities. Simulated choices were obtained by assuming that each respondent chooses the alternative with the highest stochastic utility from a choice set. Based on the simulated choices part-worth utilities were re-estimated by the different models.

2.5 Measures of performance

We estimated 13 different models for each data set: one aggregate MNL model (as benchmark model), one HB-MNL model, one DPM-MNL model, as well as each five LC-MNL and MoN-MNL models with two to six components. Model selection was at first performed for the estimated LC-MNL and MoN-MNL models to determine the appropriate number of segments, respectively. Though “true” preference structures for a maximum of four segments were generated, we decided to estimate LC-MNL and MoN-MNL models for five and six segments in addition to explore the capabilities of the two types of models to find the “true” number of segments. Subsequent to the model selection process where the best LC-MNL and MoN-MNL solutions were retained, we assessed the statistical performance of the five different types of models. This means that a total of 240 (data sets) × 5 (models) = 1,200 observations were subjected to analysis of variance (ANOVA), i.e. the type of model was included as additional factor in the ANOVAs. Following previous Monte Carlo studies (e.g., Andrews et al. 2002a; Hein et al. 2019; Vriens et al. 1996), we evaluated the performance of the competing models in terms of parameter recovery, goodness-of-fit and predictive accuracy. We used three measures for parameter recovery, three measures for goodness-of-fit, and two measures for predictive accuracy. Each performance measure was computed 200 times based on the 200 individual HB draws that were saved after the burn-in phase (see Sect. 2.2 above) to fully exploit the information of the posterior distribution. Finally, the draw-based scores were averaged to compare the performance of the models along the measures used.

2.5.1 Model selection

For model selection, we computed the marginal likelihood (ML) by means of the Harmonic Mean estimator (Frühwirth-Schnatter 2004; Newton and Raftery 1994; Rossi et al. 2005):

where \(r=1,\dots ,R\) denotes the \(r\)-th draw of the Markov chain used for computing the harmonic mean. The ML penalizes models for complexity, i.e. models with a larger number of parameters get a higher penalty (Frühwirth-Schnatter 2006; Rossi 2014), and it is common practice to prefer more parsimonious models in the model selection process. Following Wirth (2010) and Rossi (2014), we here used the log marginal likelihood (LML) in order to minimize overflow problems. Similar to Elshiewy et al. (2017), we plotted the LML values against the number of components estimated by the LC-MNL or MoN-MNL models and used the “elbow”-criterion for model selection. Furthermore, we examined the more informative sequence plots of the log-likelihood values to identify possible outliers as suggested in Rossi et al. (2005). Note that the approximation of the LML can be influenced by outliers in the vector of log-likelihoods. Following Voleti et al. (2017) and Zhao et al. (2015), we further applied the deviance information criterion (DIC, Spiegelhalter et al. 2002, 2014) and the Watanabe-Akaike information criterion (WAIC, Watanabe 2010) as additional measures for model selection. The latter (WAIC) is closely related to leave-one-out cross-validation, as discussed in Vehtari et al. (2017). Like the LML, DIC and WAIC as well penalize models for complexity. Contrary to these “explicit” model selection procedures (estimating models with a different number of components and selecting the best one), Rossi (2014, p. 29) has suggested to start with a sufficiently large number of components (we here set \(T=6\), see above) and to allow the MCMC sampler to “shut down” a number of the components in the posterior (also see Goeken et al. 2021 for an application). We also tested this kind of model selection in our Monte Carlo study.

2.5.2 Parameter recovery

Parameter recovery was measured by the Pearson correlation between the generated (“true”) and the re-estimated individual part-worths on the individual draw-level. Since Pearson correlations are not interval-scaled, they were rescaled using Fisher’s z-transformation prior to computing the mean Pearson correlation across respondents, and retransformed afterwards to their original scale (Hein et al. 2019, 2020).

As a measure of parameter recovery in absolute terms, the root mean square error (RMSE) between “true” \(({\upbeta }_{\mathrm{nal}})\) and re-estimated part-worth utilities \(\hat{\beta }_{{{\text{nal}}}}^{{\text{r}}}\) was calculated:

where N, A and L refer to the number of respondents, the number of attributes and the number attribute levels.

In addition to the Pearson correlation and the RMSE, we further determined the proportion of “true” part-worth utilities covered by the 95% credible interval of the draws of the posterior distribution, referred to as %TrueBetas (Hein et al. 2020).

2.5.3 Model fit

The percent certainty, the root likelihood, and the in-sample hit rate were used as measures to compare the goodness-of-fit between models. The percent certainty (PC), also referred to as pseudo R2, McFadden’s R2, or likelihood-ratio-index, compares the likelihood of an estimated (final) model to the likelihood of the null model, i.e. a model without any explanatory variables (Hauser 1978; Ogawa 1987):

where \({LL}_{final}^{r}\) and \({LL}_{null}\) denote the log-likelihood of the (final) estimated model based on draw \(r\) and the null log-likelihood.

Log-likelihood values were calculated by

where \({\mathrm{S}}_{\mathrm{n}}\) denotes the number of choice sets offered to respondent \(n\). \({Y}_{njs}\) indicates whether respondent \(n\) has chosen alternative \(j\) from choice set \(s\), and \({\widehat{P}}_{njs}^{r}\) is the choice probability of respondent \(n\) for choosing alternative \(j\) in choice set \(s\) based on draw \(r\). \({LL}_{null}\) represents the chance likelihood, that means \({\widehat{\beta }}^{r}={\left(0,\dots ,0\right)}^{T} \forall r.\)

The root likelihood (RLH) is the geometric mean of hit probabilities (e.g., Jervis et al. 2012)

A RLH value equal to the reciprocal of the number of alternatives in a choice set (here: \(1/J\)) corresponds to completely uninformative utilities of all alternatives (i.e., each alternative has the same utility). In other words, the RLH of the null model equals \(1/J\).

The in-sample hit rate (IHR) represents the percentage of first choice hits in the estimation sample (e.g., Andrews et al. 2002b; Voleti et al. 2017). The term first choice hit means that the alternative chosen by a respondent from a choice set is assigned the highest deterministic utility based on the re-estimated part-worth utilities. Note that the first choice rule is invariant to the value of the scale parameter of the Gumbel distribution.

2.5.4 Predictive accuracy

The hit rate was further computed for holdout choice sets to assess the predictive accuracy, referred to as holdout sample hit rate (HHR). For this, three holdout choice tasks were randomly generated for each respondent. Further, we computed the root mean square error between the “true” and predicted deterministic utilities (RMSE(V)) for each draw (e.g., Andrews et al. 2002b):

\({\mathrm{V}}_{\mathrm{njs}}\) and \({\widehat{\mathrm{V}}}_{\mathrm{njs}}^{\mathrm{r}}\) denote the “true” versus predicted deterministic utilities (the latter based on draw-level) for respondent \(n\), alternative \(j\) and choice set \(s\), respectively. The number of holdout choice tasks \({\mathrm{S}}_{\mathrm{n}}\) was held constant in all treatments \({(\mathrm{S}}_{\mathrm{n}}=3)\).

3 Results and discussion

3.1 Effects on parameter recovery, fit and predictive accuracy

The impact of the six experimental factors and the type of model (aggregate MNL, HB-MNL, LC-MNL, MoN-MNL and DPM-MNL) on each of the eight measures of performance was examined by analysis of variance for main effects and first-order interaction effects. The ANOVAs were based on a total of 1,200 observations (240 data sets times 5 models) with 1,130 degrees of freedom for error. Prior to that, the best LC-MNL and MoN-MNL solutions were selected in a first attempt by applying the “elbow” criterion to the plots of the LML values versus the number of components (2 to 6). Figure 1 displays examples for selecting the right number of components via the “elbow” criterion. In the refinement subsection (Sect. 3.2), we will discuss the results from applying the DIC, WAIC and the “shut down” procedure suggested by Rossi (2014) for model selection.

Panels A-C show three different scenarios for treatments with 2, 3, or 4 “true” segments where the LC-MNL model was estimated for 2 to 6 segments. In all three scenarios, the “true” number of components was clearly identifiable by means of the elbow criterion. Using the LC-MNL model, we were able to recover the “true” number of segments by the elbow criterion uniquely in 82% of all data sets. Panels D-F show another three plots for treatments with 2, 3, or 4 “true” segments, this time relating to estimations based on the MoN-MNL model (again for 2 to 6 components). The picture is completely different here since in neither case the “true” number of segments is identified. First, no clear elbow is visible each time, rather the LML continues to improve for an increasing number of components. And second, if one dared to recognize an elbow, it would suggest the wrong number of segments in each of the three scenarios.Footnote 11 We think plots like the ones in panels D-F are too diffuse to justify a unique solution (i.e., a clear elbow), thus we chose the solution with six components in such cases. In contrast to the LC-MNL model, we were able to identify the “true” number of components via the elbow criterion in only 2% (!) of all data sets when using the MoN approach (in 5 out of 240 data sets). Overall, the model selection process for the MoN models resulted in 67 solutions with five components (28%) and 136 solutions with six components (57%). In another 13% of the cases, wrong solutions with two to four segments were suggested. Note that also the DPM-MNL model returned the “true” number of components in only 14% of all cases (34 out of 240 data sets). The capability of the DPM-MNL model to recover the “true” number of segments was therefore rather disappointing, too.

We further conducted chi-squared tests to assess significant relationships between the experimental factors and the number of re-estimated components (for LC-MNL and MoN-MNL models based on the best solutions determined by model selection by LML). In the cases where we obtained significant results we subsequently analyzed for each respective factor level how often the “true” number of segments could be identified.

It turned out that the “true” number of segments was correctly recovered by the LC-MNL model at all times for treatments with equal segment masses (symmetric case). In contrast, the hit ratio was only 63% for treatments with unequal segment masses (76 out of 120 cases). The number of components suggested by the DPM-MNL depends on the model complexity, the degree of between-segment heterogeneity (separation), and the degree of inner-segment heterogeneity. Fewer components were suggested for treatments with more parameters to be estimated. In particular, a maximum of two segments was found for the treatments with 12 attributes and 5 levels each (81% one-component solutions, 19% two-component solutions). Furthermore, the DPM-MNL models yielded more components in treatments with a small separation between the segments or with a small degree of inner-segment heterogeneity. On the one hand, if the “true” components overlap because they are less clearly separated from each other, the DPM-MNL models tend to a larger number of components. The reason for this result may be that the preference structure of respondents appears more diffuse with less clearly separated segments so that more components are needed to reproduce this diffuse preference pattern. On the other hand, if the “true” components overlap due to a large degree of heterogeneity within segments, the DPM-MNL models tend to a smaller number of components. This may be because preference structures appear to be less multimodal when the “true” segment structures become blurred by a large inner-segment heterogeneity. No significant relationships were found for the MoN-MNL model since 85% of the selected solutions were either 5-component or 6-component solutions. Overall, the LC-MNL model seems to be the best approach by far to recover the “true” number of segments, in particular for scenarios with equal segment masses.

Taking into account only the best LC-MNL and MoN-MNL solutions per data set, we used 1,200 observations (240 data sets times 5 types of models) for analysis of variance.Footnote 12 R-squares (adjusted R-squares) range between 0.533 (0.504) and 0.949 (0.946), whereas half of the R squares are larger than 0.9. Most of the main effects (86%) are highly significant (p < 0.001), indicating differences in the measures of performance between the corresponding factor levels.

First of all, we recognize that many measures of performance are not significantly affected by the factor segment masses (p > 0.05), and if they are (as for the Pearson correlation as well as for IHR and HHR) that F-values turn out rather small compared to other factors. Very high F-values are observed for the type of model which substantially affects all three types of performance measures (recovery, fit, and prediction). In addition, the number of choice sets per respondent represent the factor which most strongly impacts the predictive accuracy (with F-values of 786 and 203 for HHR and RMSE(V)). Higher F-values pointing to substantial differences between factor levels are further observed for the number of parameters in the model (model complexity) and the separation between segments (between-segment heterogeneity).

Furthermore, 62% of the first-order interaction effects are significant. We here, however, consistently observe rather low F-values for nearly all interactions except for some between the type of model and the factor separation (Pearson correlation and the goodness-of-fit statistics). Note that 85% of the interaction effects between the type of model and any of the other factors turn out significant. Here, similar to the main effects, the factor segment masses seems to play a minor role again (as 5 out of 8 interactions between this factor and the type of model are not significant). On the other hand, the separation between segments and the number of parameters in the model (model complexity) are the two factors which most strongly interact with the type of model, in particular w.r.t. goodness-of-fit. It is further noticeable that only 53% of the remaining interaction effects (i.e., excluding interactions where the type of model is involved) are significant. Consequently, the type of model plays a very important role for the goodness of parameter recovery, fit and prediction.

Since even small differences between factor levels may turn out significant for large sample sizes such as in this study (N = 1200), we further report related effect sizes measured by Eta square (η2) in Table 6. Following the guidelines of Cohen (1988), we interpret values of η2 below 0.06 as small effects, between 0.06 and 0.14 as medium-sized effects, and higher than 0.14 as large effects.

In the following, we concentrate on a more detailed interpretation of factors which show at least medium effect sizes. We observe the largest effect sizes for the type of model with very large effect sizes for all goodness-of-fit measures (> 0.72) and the %TrueBetas measure of parameter recovery, large effect sizes for the Pearson correlation (0.29) and the HHR (0.29), and medium effect sizes for both RMSE measures (0.10). In other words, the effect sizes of the type of model on all performance measures are substantial and most of them are large or very large. We further observe medium effect sizes (a) for the number of parameters in the model (model complexity) on parameter recovery (0.10 for both Pearson correlation and RMSE) and the HHR (0.11), (b) for the separation between segments on the Pearson correlation (which measures parameter recovery in relative terms), and (c) for the number of choice sets per respondent on both predictive accuracy statistics, the HHR (0.11) and RMSE(V) (0.08). That also means that 75% of the effect sizes for main effects can be classified as small, and more than half of them are below 0.01. All interaction effects where the type of model is not involved show small if not (as in the very most cases) negligible effect sizes near zero. Considering interactions where the type of model is involved only few (8 out of 48, 17%) show medium-sized effects, and these with only one exception relate to interactions of the type of model with the separation between segments or the model complexity. In particular, we observe medium-sized interactions between (a) the type of model and the separation between segments on the Pearson correlation, PC, RLH, and the HHR, between (b) the type of model and the number of parameters in the model on the RMSE (which measures parameter recovery in absolute terms) and both predictive validity measures (HHR, RMSE(V)), and between (c) the type of model and the degree of inner-segment heterogeneity on RMSE(V). Altogether, the effect sizes provide a rather clear picture: the type of model in particular, and further the number of parameters in the model (model complexity), the separation between segments, as well as the number of choice sets per respondent seem to be the primary drivers for the model performance, while the number of segments, the inner-segment heterogeneity (except for the one interaction effect), and the segment masses do not show any noticeable and in most cases even negligible effect sizes on the model performance. Obviously, the model performance is not substantially affected by the number of segments, although the aggregate MNL and the HB-MNL are not at all or only conditionally able to recover segments. It was further not expected that the degree of inner-segment heterogeneity plays such a weak role especially for the goodness of-parameter recovery.Footnote 13

Table 7 provides the means of the eight performance measures for each individual factor level and further reports significant differences between factor levels based on post hoc tests. For the post hoc tests, we applied the Bonferroni correction to control for the family-wise error rate. For interpreting factor level differences, we again focus on factors which show at least a medium effect size. Rather surprisingly, the HB-MNL model performs excellently in terms of parameter recovery. While, except for the aggregate MNL model, Pearson correlations are comparable across models with high values above 0.95, the HB-MNL model shows a much better performance with regard to the RMSE measure. Especially the DPM-MNL model and the MoN-MNL model perform considerably worse here, showing much larger absolute deviations between the “true” and re-estimated part-worth utilities (DPM-MNL: 3.091; MoN-MNL: 2.608) than the LC-MNL (2.159), the aggregate MNL (2.466) and the HB-MNL (1.650) models. Concerning the percentage of “true” part-worth utilities that lie in the corresponding 95% credible intervals of the draws, we observe that models with a discrete representation of heterogeneity perform inferior and provide inacceptable results (LC-MNL: 0.014, aggregate MNL 0.021). But again, the HB-MNL model (0.746) performs markedly better than DPM-MNL (0.623) and MoN-MNL (0.585) models.

We observe similar results when comparing the predictive accuracy between the models. Except for the MNL model, the HHR between models differ only marginally with values around 83%. The absolute deviations between “true” and re-estimated total utilities of alternatives are much larger for the DPM-MNL (7.469), the MoN-MNL (6.352) and the aggregate MNL model (6.275) than for the HB-MNL (4.199) and the LC-MNL models (5.395). Again, the HB-MNL model here provides the lowest errors. The better performance of the HB-MNL and LC-MNL models in predicting total utilities of alternatives (RMSE(V)) corresponds with the better performance of both models in terms of absolute errors with regard to parameter recovery (RMSE).

We further observe that DPM-MNL and MoN-MNL models provide the best model fit with respect to all three fit statistics (PC, RLH, IHR), whereas the aggregate MNL model performs by far worst. That the aggregate MNL model performs so much worse here compared to the other four models seems to be the reason for the very large effect sizes of the type of model on the three model fit measures (this also applies to the %TrueBetas measure and in alleviated form to Pearson correlations and holdout sample hit rates, where the aggregate MNL is inferior while the other models perform comparable). On the other hand, including the aggregate MNL model in the ANOVAs provided evidence that it performs not worse than the MoN-MNL model or even significantly better than the DPM-MNL model regarding absolute errors in both parameter recovery and prediction accuracy, respectively. We later check if or how much the ANOVA results for the type of model change when the aggregate MNL model is removed from the analyses (see refinements, Sect. 3.2).

Moreover, optimal choice designs on an individual respondent level enable significantly better predictions compared to the case where respondents evaluate only a smaller (manageable) number of choice sets. We further observe slightly higher Pearson correlations for a smaller separation between segments. In addition, we recognize the best parameter recovery both in relative (Pearson correlation) and absolute (RMSE) terms (and also for the %TrueBetas measure) for the most complex treatment with 12 attributes with 5 levels each (A12L5). Similarly, we also observe a very high HHR (0.83) for the most complex treatment (A12L5), which is markedly higher than for the less complex treatment with 9 attributes with 4 levels (A9L4). We will discuss this in more detail next.

We did not expect these results but rather that a higher number of parameters in the model should lead to a worse parameter recovery and a worse prediction accuracy. However, remember that in our design setup a larger number of attribute levels (3, 4, or 5) not only increased the model complexity but also involved larger choice sets containing more alternatives (e.g., 5 alternatives in treatments with 5 attribute levels, while only 3 alternatives in treatments with 3 attribute levels) as well as a much higher optimal number of choice sets to be evaluated by a respondent (compare Table 4). On the one hand, a higher number of alternatives per choice set makes it more difficult to predict respondents’ “true” choices correctly, which should decrease the HHR. On the other hand, more choice sets for each respondent lead to more information on an individual level, which in turn should improve parameter recovery and prediction accuracy. Obviously, the much larger optimal number of choice sets (100 per respondent) for the most complex treatment (A12L5) compared to the two other treatments (A6L3: 18 choice sets per respondent; A9L4: 32 choice sets per respondent) favors the good performance with regard to parameter recovery and prediction accuracy.

To fully understand the effects of (a) the number of parameters in the model, (b) the level of inner-segment heterogeneity and (c) the separation between segments on the performance measures, it is helpful to examine their interaction effects with the type of model. As before, we only focus on interaction effects which showed an at least medium effect size (see Figs. 2 and 3).

Considering the interaction effects between model complexity and type of model (Fig. 2, panels A-C), we observe that the aggregate MNL model has by far the lowest HHR (panel A). This in particular applies to the most complex treatment (A12L5) where the HHR is about 10% lower than for all competing models (panel A). The corresponding interaction effects on absolute prediction errors (RMSE(V)) and absolute parameter recovery errors (RMSE) show similar patterns (panels B and C). For the treatments with 6 attributes with 3 levels (A6L3) and 9 attributes with 4 levels (A9L4) the aggregate MNL, the LC-MNL and the HB-MNL models perform almost equally well (with slight advantages for the HB-MNL model). For the most complex treatment with 12 attributes with 5 levels (A12L5) the HB-MNL clearly outperforms all other models, whereas the aggregate MNL and the LC-MNL models perform worst here. In addition, we observe that for the less complex treatments (A6L3, A9L4) both absolute error measures turn out very large for the MoN-MNL and DPM-MNL models. The number of re-estimated components (for the MoN-MNL and the DPM-MNL models) seems to play a negligible role for the most complex treatment (A12L5). For the DPM-MNL model a maximum of two segments was found for the treatments with 12 attributes and 5 levels each. The MoN model overestimates the number of “true” segments in most cases (see above). However, both models show similar absolute errors for the most complex treatment (lower than the absolute errors for the less complex treatments but higher compared to the HB-MNL model).

A very similar pattern is found for the interaction effect between inner-segment heterogeneity and type of model on absolute prediction errors (RMSE(V)), see panel D in Fig. 2. Here, for treatments with a low inner-segment heterogeneity, the aggregate MNL, the LC-MNL and the HB-MNL models perform again almost equally well (once more with slight advantages in favor of the HB-MNL model), while the MoN-MNL and DPM-MNL models provide inacceptable large prediction errors. As discussed above, this result may be associated with the finding that DPM-MNL (and also MoN-MNL) models tend to more components for treatments with a smaller inner-segment heterogeneity. For treatments with a high inner-segment heterogeneity, the HB-MNL once more clearly outperforms all other models.

When examining the interactions between the separation of segments and the type of model (Fig. 3) on Pearson correlations (parameter recovery), PC, RLH (model fit) and HHR, the most noticeable point is that the aggregate MNL model doesn’t work competitively, in particular not if segments are clearly separated from each other. For the treatments with a large separation, all models with continuous representations of heterogeneity (HB-MNL, MoN-MNL, DPM-MNL) perform nearly equally well. The LC-MNL model performs slightly worse in terms of goodness-of-fit (PC, RLH) but is competitive in terms of Pearson correlations and HHRs. Rather similar results can be observed for treatments with a small separation between segments with the exception that the aggregate MNL doesn’t perform such bad here or even comparable to the other models in terms of Pearson correlations and HHRs. For the same two performance measures, the LC-MNL model shows even a slightly better performance than the models with continuous representations of heterogeneity.

We summarize the main results about effect sizes and the impacts of factor levels on the model performance in Table 8.

3.2 Refinements

We performed sensitivity analyses to check if our ANOVA results stayed robust for two differing scenarios.Footnote 14 First, we excluded the aggregate MNL model as benchmark model from all ANOVAs. We did this check due to the huge effect sizes (> 0.7) we observed for effects of the type of model on all goodness-of-fit statistics (PC, RLH, IHR) and the %TrueBetas measure of parameter recovery (cf. Table 6). The ANOVA results changed only little, providing strong evidence that our findings for the different models are highly robust. The huge effects sizes for the type of model on all goodness-of-fit measures are lower than those reported in Table 6 but still large (> 0.44). The effect sizes for the type of model on the Pearson correlation and HHR turn out only small after removing the MNL model from the ANOVAs. Further, we now observe medium effect sizes for the model complexity on RLH and on IHR, medium effect sizes of the degree of within-segment heterogeneity on all goodness-of-fit measures, and medium to large effect sizes for the number of choice sets per respondent on the RMSE (0.06) and on the Pearson correlation (0.185). For the latter factor, corresponding correlations are still high (optimal number of choice sets: 0.975; manageable number of choice sets: 0.957). As expected (cf. Figure 3) the interaction effects between the type of model and the separation between segments on the Pearson correlation, PC, RLH, and HHR become negligible. As mentioned above, it was not expected that the degree of inner-segment heterogeneity plays such a weak role, especially for the goodness of-fit measures. After dropping the MNL model, we now observe medium effect sizes of the factor heterogeneity on goodness of-fit measures (but only on goodness-of-fit measures). A small heterogeneity enables a significantly better fit compared to a large heterogeneity.

Second, we re-estimated all LC-MNL and MoN-MNL models for the given “true” number of segments instead of determining the best solutions by model selection (e.g., see Vriens et al. 1996). As a result, the MoN-MNL model now comes up with much lower absolute errors of parameter recovery (RMSE: 1.826) and prediction accuracy (RMSE(V): 4.518) as well as with a strongly improved %TrueBetas measure of parameter recovery. All other results regarding effect sizes of the experimental factors and the means of performance measures by experimental condition remained extremely robust. Under this approach, the HB-MNL model and the MoN-MNL model work similarly effective in terms of parameter recovery, goodness-of-fit and predictive accuracy. In particular, the MoN-MNL model reveals slight advantages over the HB-MNL model with respect to Pearson correlations (HB-MNL: 0.968, MoN-MNL: 0.978) and the HHR (HB-MNL: 0.832, MoN-MNL: 0.851), whereas the HB-MNL model performs better w.r.t. the %TrueBetas measure of parameter recovery (HB-MNL: 0.746, MoN-MNL: 0.689). At this point, it is important to note that the “true” number of segments or components is not known in empirical studies.

As noted in Sect. 2.5, we also applied the DIC, WAIC and the “shut down” procedure suggested by Rossi (2014) as alternatives for model selection in addition to the LML criterion. For the LC-MNL model, the true number of segments could be identified as well in the majority of cases when using the DIC (87%) or the WAIC (78%), compared to 82% before via the LML. For the MoN-MNL model, the recovery rate could be improved by using the DIC (9%) or the WAIC (26%), compared to only 2% before (LML). Still, the ability of the MoN-MNL model to identify true segment structures remains quite modest. A somewhat different picture results from using the “shut down” procedure of Rossi (2014) for model selection. For the LC-MNL model, the “true” number of segments could be recovered for only 15% of the simulated data sets, while at least in 38% of all cases by the MoN-MNL model. One possible reason for the rather poor performance of the “shut down” variant obtained for the LC-MNL model could be that the prior configurations used in this paper are a bit more informative than those suggested by Rossi (2014), still they are uninformative. Interestingly, the eight performance measures are hardly affected by the choice of the model selection procedure and remained highly stable for the different model types, as displayed in Table 10 in the Appendix.Footnote 15

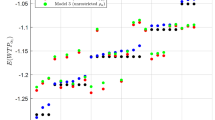

Allenby and Rossi (1998) have already noted that the posterior means of individual-level parameters do not have to follow a normal distribution even if the heterogeneity model is represented by the single normal distribution, as in the HB-MNL model. The rationale behind this is that the single normal distribution is only part of the prior, and the posterior is affected by the individual respondent data (cf. Allenby and Rossi 1998, p. 71). Thus, the distribution of the individual-level parameters could be multimodal even if the heterogeneity model is wrong, which would explain – at least to some degree – the very good performance of the HB-MNL model in our study.Footnote 16 The following density plots displayed in Fig. 4 should bring more light into this issue, showing for selected treatments how multimodal the simulated preference distributions were and how well individual-level parameters were recovered. In addition, Fig. 4 provides examples for treatments when MoN-MNL and DPM-MNL models overestimated the “true” number of components.

Selected density plots for “true” distributions of part-worth utilities (black lines) versus re-estimated distributions of part-worth utilities by model type (HB-MNL: blue lines; MoN-MNL: green lines; DPM-MNL: red lines). Upper left panel: 3 “true” segments, large separation, small heterogeneity. Upper right panel: 2 segments, small separation, large heterogeneity. Lower left panel: 2 segments, large separation, large heterogeneity. Lower right panel: 2 segments, small separation, small heterogeneity (note that the two true segments are not visible in the lower right panel due to their small separation and the coarse scaling required to represent the estimated components from the DPM-MNL model; for a finer resolution, see the bottom part of Fig. 5 in the Appendix where the DPM-MNL has been excluded)

Shown are selected individual-level preference distributions for true versus re-estimated part-worth utilities for different numbers of segments and different combinations of factor levels regarding the factors separation of segments (between-segment heterogeneity) and within-segment heterogeneity. The solid black lines refer to the generated “true” preference distributions, while the dashed and/or dotted lines refer to the re-estimated part-worth distributions obtained from the HB-MNL (blue), MoN-MNL (green), and DPM-MNL (red) models.