Abstract

Reaction times (RTs) are an essential metric for understanding the link between brain and behaviour. As research is reaffirming the tight coupling between neuronal and behavioural RTs, thorough statistical modelling of RT data is essential to enrich current theories and motivate novel findings. A statistical distribution is proposed herein that is able to model the complete RT distribution, including location, scale and shape: the generalised-exponential-Gaussian (GEG) distribution. The GEG distribution enables shifting the attention from traditional means and standard deviations to the entire RT distribution. The mathematical properties of the GEG distribution are presented and investigated via simulations. Additionally, the GEG distribution is illustrated with four real-life data sets. Finally, we discuss how the proposed distribution can be used for regression analyses via generalised additive models for location, scale and shape (GAMLSS).

Introduction

The brain is a system consisting of billions of neurons designed to perceive and respond to external stimuli in a highly nonlinear and complex way. Reaction times, broadly defined as the time lapse between the presentation of a stimulus and a response to it, have proven to be a ubiquitous metric, used extensively in experimental psychology, cognitive neuroscience, psychophysiology and behavioural neuroscience to explain the mechanisms supporting higher- and lower-order cognitive processes. Research suggests there is a tight coupling between neuronal RTs and behavioural RTs (see Palmer et al. 2007; Galashan et al. 2013; Mukamel and Fried 2012; Levakova et al. 2015) and that neural processing, signal transmission, and decision processes are bundled within that time lapse (see Voelker et al. 2017; see Figure 1 in Commenges and Seal (1986) for a representation of the association between stimulus, neuronal RTs, and behavioural RTs). The importance of investigating RTs has been demonstrated via computational, human- and animal-based behavioural approaches. From a computational perspective, for example, it has been shown that behavioural RTs can be used as biomarkers for characterising neurological diseases (Maia and Kutz 2017). From a human behavioural perspective, evidence shows that RTs can indeed allow differentiation between certain brain diseases. For example, Jahanshahi et al. (1993) showed that patients with Parkinson’s disease, Huntington’s disease and cerebellar disease exhibit different average RTs, and Osmon et al. (2018) demonstrated the clinical advantage of examining the distribution of RTs from patients with attention-deficit/hyperactivity disorder and from neurotypical participants. Animal-based research has shown how and when specific sets of neurons fire to selected stimuli under highly controlled experimental settings (e.g. Sun et al. 2019; Luna et al. 2005; Múnera et al. 2012; Veit et al. 2014).

Various distributions, including the Inverse-Gaussian (or Wald), Weibull, Log-Normal, Weibull-Gaussian, and Gamma, have been used to fit behavioural and neural reaction time data. For example, Palmer et al. (2007) used the Weibull distribution, Leiva et al. (2015) used the Birnbaum–Saunders distribution, and Seal et al. (1983) used the Gamma distribution. Other distributions have recently been proposed for modelling RT data. For example, Tejo et al. (2018, 2019) and Martínez-Flórez et al. (2020) have put forward the shifted Birnbaum–Saunders, shifted Gamma, and Exponential-Centred Skew-Normal distributions, respectively, as good fits to RTs. In addition, Osmon et al. (2018) fitted the participants’ RTs with the three-parameter Johnson’s SU distribution,Footnote 1 and Foroni et al. (2020) found the four-parameter Sinh-Arcsinh distribution gave the best fit for simple choice RTs. However, the distribution most commonly used to fit RT data is the Exponential Gaussian (also known as Exponentially Modified Gaussian or Ex-Gaussian distribution; here referred to as EG). This is a three-parameter distribution that can fit the data’s location, scale and rightward exponential shape. The EG hence shows a positive skew, which is the canonical shape of both neuronal and behavioural RTs [e.g. see Figure 4 in Hanes and Schall (1996), Figure 2 in Hauser et al. (2018), and Figure 2 in Mormann et al. (2008) for neuronal RT shapes, Figure 7 in Osmon et al. (2018) and Figures 3C, 4C, and 5B in Fischer and Wegener (2018) for behavioural RT shapes]. Although flexible enough to fit the typical positively skewed distribution of RTs, the EG cannot fit RTs that exhibit a normal-like or even negatively skewed shape. Normal-like and negatively skewed shapes have been reported for rate RTs (reciprocal RTs, i.e. 1/RT) [see Figure 2A in Harris et al. (2014)] and recognition (go/no-go) RTs [see Figure 2 in Limongi et al. (2018)], respectively.

In this article, a four-parameter distribution called the Generalised Exponential Gaussian is proposed. Similar to the EG distribution, the proposed distribution can fit positively skewed RT shapes, but it has the advantage of also fitting Gaussian-like and negatively skewed RT shapes. The article unfolds as follows: first, statistical arguments in favour of asymmetric distributions are presented; second, the properties of the EG are outlined; third, the details of the proposed distribution are described; fourth, the results of a computer simulation examining the properties of the proposed distribution are reported; fifth, the proposed distribution is illustrated via four data sets; sixth, generalised additive models for location, scale and shape (GAMLSS) are briefly presented, as this is the only existing regression framework suitable to model regression data in a fully distributional fashion; and finally, the statistical and practical implications of the proposed distribution are discussed in relation to GAMLSS.

Asymmetric distributions as better alternatives to model non-normal data

Most statistical analyses featured in published research rely on techniques that assume, among other things, that data follow a normal distribution. In practice, however, data tend to follow non-normal shapes [see Bono et al. (2017)]. When faced with non-normal distributions, the common approach is to transform the numeric variables. Although transformations can indeed be successful, they bring challenges in relation to the interpretability of the new metric and back-transformation (Marmolejo-Ramos et al. 2015; Pek et al. 2018; Vélez et al. 2015). Specifically, it is not always possible to find a back-transformation that enables interpretation of the parameter estimate, and this issue is more pronounced when there are several variables with different transformations [see Azzalini and Capitanio (1999)]. Distributions more flexible than the traditional Gaussian have been proposed to overcome these challenges. These newly proposed distributions enable data with different degrees of asymmetry and kurtosis to be tackled. Some of these distributions are the Skew-Normal [SN; Azzalini (1985)], Power-Normal [PN; Durrans (1992); Pewsey et al. (2012)], and Skew-Normal Alpha-Power [here called SNAP for short; Martínez-Flórez et al. (2014)].

The SN model is defined by the probability density function (PDF)

\[ \phi _{SN}(z;\lambda )=2\,\phi (z)\,\Phi (\lambda z),\quad z\in {\mathbb{R}}, \]

where \(\phi\) and \(\Phi\) are the density and distribution functions, respectively, of the standard normal distribution, and \(\lambda\) is a skewness parameter. This distribution is denoted by \(Z\sim {SN(\lambda )}\) and, in addition to the work by Azzalini (1985), it has been extensively studied by Henze (1986), Chiogna (1998), Gómez et al. (2007) and Pewsey et al. (2012).

Alternatively, Lehmann (1953) proposed a family of distributions with distribution function \(F(z;\alpha )=\{F(z)\}^{\alpha }\), where F is a distribution function with \(z\in {\mathbb{R}}\), and \(\alpha \in \mathbb{Z}^+\). In general terms, this distribution is generated from the distribution of the maximum of the sample. This model is known in the literature as Lehmann’s alternative model and is widely discussed by Gupta and Gupta (2008).Footnote 2

The PN model, denoted by \(Z\sim PN(\alpha )\) and introduced by Durrans (1992), has the following PDF:

\[ \phi _{PN}(z;\alpha )=\alpha \,\phi (z)\{\Phi (z)\}^{\alpha -1},\quad z\in {\mathbb{R}}, \]

where \(\phi\) and \(\Phi\) are the density and distribution functions, respectively, of the standard normal distribution and \(\alpha\) is a shape parameter. This distribution has multiple applications in cases where data cannot be handled through normal distributions and, instead, data present high or low asymmetry and/or kurtosis.
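As a minimal sketch, the SN and PN densities can be evaluated with the Python standard library alone, assuming the usual forms \(2\phi (z)\Phi (\lambda z)\) (Azzalini 1985) and \(\alpha \phi (z)\{\Phi (z)\}^{\alpha -1}\) (Durrans 1992). The function names and the trapezoidal check are illustrative choices, not part of the original study.

```python
import math


def phi(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def Phi(z):
    """Standard normal distribution function (erfc keeps the lower tail accurate)."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def sn_pdf(z, lam):
    """Skew-Normal density: 2 * phi(z) * Phi(lam * z)."""
    return 2.0 * phi(z) * Phi(lam * z)


def pn_pdf(z, alpha):
    """Power-Normal density: alpha * phi(z) * Phi(z)**(alpha - 1).

    Assumes z is not so far in the lower tail that Phi(z) underflows to zero.
    """
    return alpha * phi(z) * Phi(z) ** (alpha - 1.0)


def integrate(f, lo=-10.0, hi=10.0, steps=4000):
    """Trapezoidal rule, used only to check that a density integrates to one."""
    h = (hi - lo) / steps
    total = 0.5 * (f(lo) + f(hi))
    for i in range(1, steps):
        total += f(lo + i * h)
    return total * h
```

Setting \(\lambda =0\) in `sn_pdf` or \(\alpha =1\) in `pn_pdf` returns the standard normal density, which is a quick way to confirm that both families nest the Gaussian case.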

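Martínez-Flórez et al. (2014) proposed the SNAP distribution (\(SNAP(\lambda ,\alpha )\)), a more flexible extension of the previous two distributions. This distribution not only contains the SN and PN distributions as special cases, but it also includes the normal distribution. The PDF of the SNAP distribution is as follows:

\[ \phi _{SNAP}(z;\lambda ,\alpha )=\alpha \,\phi _{SN}(z;\lambda )\{\Phi _{SN}(z;\lambda )\}^{\alpha -1},\quad z\in {\mathbb{R}}, \]

where \(\phi _{SN}\) and \(\Phi _{SN}\) are the density and distribution functions of the SN distribution; \(\alpha =1\) gives the SN, \(\lambda =0\) gives the PN, and \(\lambda =0\) with \(\alpha =1\) gives the normal distribution.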

The SN, PN, and SNAP distributions illustrate the versatility of asymmetric and generalised distributions for fitting non-Gaussian data (Table 1 provides a summary of key aspects of these distributions). Although it could be argued that these distributions provide good-enough fits to RT data, they have not been investigated in the context of RT experiments. The EG distribution, however, is the most commonly used distribution to fit RTs obtained in (neuro)psychological experiments [see Dawson (1988)].

The Ex-Gaussian distribution and its statistical properties

This probability model was introduced by Hohle (1965) through the convolution of two independent random variables, one Normal and one Exponential. This distribution was conceived between 1956 and 1963 (Christie and Luce 1956) while searching for a model that could represent the disjunctive structure of RTs. That is, the RT is composed of an exponentially distributed decision time plus a variable time or motor RT. After studying the cumulative distribution function (CDF) of the RT for different intensities of auditory stimuli, McGill (1963) concluded that the variable had an exponential form with similar time constants. This led to the assumption that at least one component of the total RT had an exponential distribution. Given that the time constants implied by the curves appeared to be almost independent of the stimulus intensity, McGill (1963) assumed that this component was the time required for the motor response, while the other component was assumed to be the time taken to make a decision. Specifically, the EG’s exponential distribution component has a constant average response \(\tau\) (Luce 1986; McGill 1963) while the remaining part of the EG follows a normal distribution \(N(\mu ,\sigma ^{2})\) (Hohle 1965). These statistical properties of the EG distribution are described in more detail in “Appendix” [see also section 13.3.2.1 in Rigby et al. (2020)].

The generalised exponential-Gaussian distribution

Based on the distribution of the sample’s maximum, Lehmann (1953) proposed the family of distributions \(\mathbb{F}_F=\{F(x)\}^\alpha\) where \(F(\cdot )\) is an absolutely continuous distribution function and \(\alpha\) is a rational number. In the context of hydrology, Durrans (1992) extended this model to a family of distributions \(\mathbb{G}_F=\{F(x)\}^\alpha\) where \(F(\cdot )\) is a distribution function and \(\alpha \in {\mathbb{R}}^+\). This family of distributions has PDF \(g(x)=\alpha f(x)\{F(x)\}^{\alpha -1}\), where \(f=dF\), and is known as the distribution of fractional order statistics [see also Stigler (1977)]. When \(F=\Phi\) (i.e. the Normal distribution’s CDF), it is known as the generalised normal distribution. This is a flexible distribution that can be conceived as an extreme values distribution given that \(\alpha \in {\mathbb{R}}^+\). Also, when \(\alpha \in \mathbb{N}\), this distribution can fit data with positive or negative skews. Finally, when \(\alpha =1\), the original distribution’s PDF \(f(\cdot )\) is obtained.

Following the above-mentioned work by Durrans (1992), a generalisation of the distribution of the maximum in the \(f_{EG}(\cdot )\) is proposed by considering an \(\alpha -\)fractional order statistic where \(\alpha \in {\mathbb{R}}^+\). That is, the goal is to extend the EG distribution by incorporating a new parameter that controls the skewness and kurtosis of the distribution, i.e. the distribution’s shape. As the new distribution has skewness and kurtosis values above and below those possible by the original \(f_{EG}(\cdot )\), it is hence much more flexible than the traditional EG distribution in accommodating skewness and kurtosis.

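According to the results given by Durrans (1992) and Pewsey et al. (2012), the exponential extension of the EG distribution is given by the following PDF:

\[ f_{GEG}(x;\tau ,\mu ,\sigma ,\alpha )=\alpha \,f_{EG}(x;\tau ,\mu ,\sigma )\{F_{EG}(x;\tau ,\mu ,\sigma )\}^{\alpha -1},\quad x\in {\mathbb{R}}, \]

where \(f_{EG}(\cdot )\) and \(F_{EG}(\cdot )\) are the PDF and CDF of the EG distribution,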

such that \(\alpha \in {\mathbb{R}}^+\) is a shape parameter that controls skewness and \(\tau\) regulates kurtosis; \(\mu\) and \(\sigma\) are the location and scale parameters respectively (such that \(\sigma >0\) and \(-\infty<\mu <\infty\)). This distribution is called from here on the Generalised Exponential Gaussian (GEG) distribution and it is denoted by \(X\sim GEG(\tau ,\mu ,\sigma ,\alpha )\). Note that when \(\alpha =1\), the GEG reduces to the EG distribution. For the EG distribution, when \(\tau \rightarrow 0\), then \(\mathbb{E}(X)\rightarrow \mu , ~~Var(X)\rightarrow \sigma ^{2},~~skew\rightarrow 0\quad \text {and}\quad kurt\rightarrow 3\); i.e. \(EG(\tau ,\mu ,\sigma ^{2})\rightarrow N(\mu ,\sigma ^{2})\). Additionally, when \(\tau \rightarrow 0\) and \(\alpha \ne 1\), the GEG distribution converges to the PN distribution; i.e. \(GEG(\tau ,\mu ,\sigma ,\alpha )\rightarrow PN(\mu ,\sigma ,\alpha )\). Figure 1 displays some of the shapes the GEG distribution can take.
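The construction can be sketched numerically in Python. The sketch assumes the standard EG parametrisation (a \(N(\mu ,\sigma ^{2})\) variable plus an independent exponential variable with mean \(\tau\)) and the Durrans-type form \(\alpha f_{EG}\{F_{EG}\}^{\alpha -1}\) for the GEG density. All function names are hypothetical, and no claim is made that this matches the authors' implementation.

```python
import math


def norm_cdf(z):
    """Standard normal distribution function."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def _eg_tail(x, tau, mu, sigma):
    """exp((mu - x)/tau + sigma^2/(2 tau^2)) * Phi((x - mu)/sigma - sigma/tau).

    Computed in log space to avoid overflow. Assumes tau is not tiny relative
    to sigma; otherwise Phi(.) underflows and the term is set to zero.
    """
    p = norm_cdf((x - mu) / sigma - sigma / tau)
    if p == 0.0:
        return 0.0
    return math.exp((mu - x) / tau + sigma**2 / (2.0 * tau**2) + math.log(p))


def eg_pdf(x, tau, mu, sigma):
    """Density of the EG (Ex-Gaussian) distribution."""
    return _eg_tail(x, tau, mu, sigma) / tau


def eg_cdf(x, tau, mu, sigma):
    """Distribution function of the EG distribution, clamped to [0, 1]."""
    value = norm_cdf((x - mu) / sigma) - _eg_tail(x, tau, mu, sigma)
    return min(1.0, max(0.0, value))


def geg_pdf(x, tau, mu, sigma, alpha):
    """GEG density: alpha * f_EG(x) * F_EG(x)**(alpha - 1)."""
    big_f = eg_cdf(x, tau, mu, sigma)
    if big_f == 0.0:
        return 0.0  # far lower tail: avoid 0**negative when alpha < 1
    return alpha * eg_pdf(x, tau, mu, sigma) * big_f ** (alpha - 1.0)
```

With \(\alpha =1\), `geg_pdf` returns exactly `eg_pdf`, mirroring the special case stated in the text.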

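The CDF of the random variable \(X\sim GEG(\tau ,\mu ,\sigma ,\alpha )\) is given by:

\[ F_{GEG}(x;\tau ,\mu ,\sigma ,\alpha )=\{F_{EG}(x;\tau ,\mu ,\sigma )\}^{\alpha },\quad x\in {\mathbb{R}}. \]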

To generate a random variable with GEG distribution, a uniform random variable U in (0,1) should be used. So, letting

\[ U=\{F_{EG}(X;\tau ,\mu ,\sigma )\}^{\alpha }, \]

then

\[ U^{1/\alpha }=F_{EG}(X;\tau ,\mu ,\sigma ), \]

from where it follows that

\[ X=F_{EG}^{-1}(U^{1/\alpha };\tau ,\mu ,\sigma ), \]

where \(F_{EG}^{-1}(\cdot ;\tau ,\mu ,\sigma )\) is the inverse distribution (quantile) function of the EG distribution with parameters \(\tau ,\mu ,\sigma\), which is available in the R packages emg and gamlss.dist (via the function ‘exGAUS’).
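The inverse-transform recipe above can be sketched in Python without external packages. The sketch assumes the standard EG CDF (Normal plus exponential with mean \(\tau\)) and inverts it by bisection rather than calling the R functions mentioned in the text. The function names, the bracketing interval and the tolerance are illustrative assumptions.

```python
import math
import random


def norm_cdf(z):
    """Standard normal distribution function."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def eg_cdf(x, tau, mu, sigma):
    """EG distribution function, with the exponential term evaluated in log space."""
    z = (x - mu) / sigma
    p = norm_cdf(z - sigma / tau)
    tail = 0.0
    if p > 0.0:
        tail = math.exp(-(x - mu) / tau + sigma**2 / (2.0 * tau**2) + math.log(p))
    return min(1.0, max(0.0, norm_cdf(z) - tail))


def geg_cdf(x, tau, mu, sigma, alpha):
    """GEG distribution function: the EG CDF raised to the power alpha."""
    return eg_cdf(x, tau, mu, sigma) ** alpha


def eg_quantile(p, tau, mu, sigma, tol=1e-10):
    """Invert the EG CDF by bisection on a wide bracket (an assumed range)."""
    lo, hi = mu - 12.0 * sigma, mu + 12.0 * sigma + 50.0 * tau
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if eg_cdf(mid, tau, mu, sigma) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def geg_quantile(u, tau, mu, sigma, alpha):
    """X = F_EG^{-1}(U^{1/alpha}), the inversion step described in the text."""
    return eg_quantile(u ** (1.0 / alpha), tau, mu, sigma)


def geg_sample(n, tau, mu, sigma, alpha, seed=None):
    """Draw n GEG variates by feeding uniform draws through the quantile function."""
    rng = random.Random(seed)
    return [geg_quantile(rng.random(), tau, mu, sigma, alpha) for _ in range(n)]
```

For example, `geg_sample(5, tau=0.5, mu=0.0, sigma=1.0, alpha=1.75, seed=1)` returns five simulated values. Bisection is slower than a dedicated quantile routine but keeps the sketch dependency-free.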

The survival, inverse risk, and hazard functions of the GEG distribution are:

\[ S_{GEG}(x)=1-\{F_{EG}(x;\tau ,\mu ,\sigma )\}^{\alpha }, \]

\[ r_{GEG}(x)=\frac{f_{GEG}(x)}{F_{GEG}(x)}=\alpha \,r_{EG}(x), \]

\[ h_{GEG}(x)=\frac{f_{GEG}(x)}{S_{GEG}(x)}=\frac{\alpha \,f_{EG}(x)\{F_{EG}(x)\}^{\alpha -1}}{1-\{F_{EG}(x)\}^{\alpha }}, \]

where \(r_{EG}(\cdot )=f_{EG}(\cdot )/F_{EG}(\cdot )\) is the inverse risk function of the EG distribution defined in Eq. (11) in “Appendix”. The inverse risk function of the GEG distribution is therefore directly proportional to that of the EG distribution, and both functions increase or decrease over the same intervals. Some properties of the exponentiated generalized class of distributions can be found in Cordeiro et al. (2013). Details regarding the GEG distribution’s moments, log-likelihood function, score function, and information matrices are presented in “Appendix”.

A simulation-based assessment of the generalised exponential-Gaussian distribution

A simulation was carried out to investigate the maximum likelihood estimates (MLEs) of the GEG’s parameters across several data generating process (DGP) scenarios. While \(\mu\) and \(\sigma\) were set at 0 and 1 respectively (as no major impact of location and scale on the quality of the estimates was expected), \(\tau\) and \(\alpha\) were varied such that \(\tau \in \{0.5,\, 1.25\}\) and \(\alpha \in \{0.75,\, 1.75,\, 2.75\}\). Variations of these parameter values were assessed across small and large sample sizes such that \(n\in \{50;\, 100;\, 200;\, 400;\, 800;\, 1600\}\). Each of the resulting 36 DGPs was replicated 1000 times.

For each setting, the empirical bias, root mean squared error (rMSE), and coverage probability were estimated (see Table 2). Coverage probabilities refer to 95% Wald confidence intervals that use the inverse of the observed Fisher information as the asymptotic covariance matrix for all four distributional parameters \(\mu\), \(\sigma\), \(\tau\) and \(\alpha\). The bias and the rMSE are reported as the median value across the 1000 simulations in each DGP. The empirical coverage probabilities were obtained by averaging over the replications. Given that the numerical estimation of the MLEs sometimes failed for small sample sizes, the number of converged optimisations for each DGP is reported.
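The three summary criteria can be sketched as follows for a single parameter. This is one possible reading of the description above: the median is used to summarise the deviations and squared deviations, and coverage is the share of replications whose 95% Wald interval contains the true value. The function names and the exact aggregation are assumptions, not the authors' code.

```python
import math
import statistics

Z_95 = 1.959964  # standard normal quantile for a two-sided 95% Wald interval


def empirical_bias(estimates, true_value):
    """Median deviation of the estimates from the true parameter value."""
    return statistics.median(e - true_value for e in estimates)


def empirical_rmse(estimates, true_value):
    """Square root of the median squared deviation (assumed aggregation).

    Replacing statistics.median with statistics.mean gives the classical rMSE.
    """
    return math.sqrt(statistics.median((e - true_value) ** 2 for e in estimates))


def wald_coverage(estimates, std_errors, true_value):
    """Share of replications whose Wald interval contains the true value."""
    hits = sum(
        1
        for est, se in zip(estimates, std_errors)
        if abs(est - true_value) <= Z_95 * se
    )
    return hits / len(estimates)
```

Only converged replications would be passed to these functions, which is why the number of converged optimisations is worth reporting alongside the criteria.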

The results indicate that, as expected from standard asymptotic consistency and normality of MLEs, all criteria improve as the sample size increases, regardless of the specific parameter setting (i.e. the bias and the rMSE decrease while the coverage approaches the nominal level of 95%). It is also evident that larger values of \(\tau\) and/or \(\alpha\) are associated with decreasing statistical performance; thus, larger sample sizes are required to obtain reliable estimates.

Illustration of the generalised exponential-Gaussian distribution via published data sets

In this section, the versatility of the GEG distribution is illustrated via four data sets in which motor or neuronal RTs are featured.

- ADHD’s simple RTs in Osmon et al. (2018) [here ADHD data set]. Osmon et al. (2018) obtained three different types of RTs from 27 neurotypical participants and 28 participants diagnosed with attention-deficit/hyperactivity disorder (ADHD). The RTs obtained during the simple RT task are featured in this study. In this task, participants had to press the same centrally located key when a stimulus appeared, regardless of the location on a computer screen (right or left side). As each participant had a fixed number of 120 trials and there were 28 ADHD participants, a total of 3360 trials were obtained.

- Monkey S’s RTs in a reaching task in Kuang et al. (2016) [here M.S. data set]. The goal of this study was to investigate the neuronal activity in the posterior parietal cortex in two rhesus monkeys while they performed centre-out hand reaches under either normal or prism-reversed viewing conditions. All the trials in all 107 sessions for the ‘normal right’ condition from monkey S were used. There were between 19 and 62 trials across sessions and the median number of trials across sessions was \(40 \pm 14.82\). This gives a total of 3996 trials and RTs. After removing RTs < 50 ms, 3980 RTs remained. It is important to acknowledge that the lower limit of 50 ms is somewhat arbitrary and there are no agreed rules in the monkey literature on what the lower and upper boundaries should be. However, considering usual visual information processing latencies (ventral stream: \(\sim\)50 ms; dorsal stream: \(\sim\)100 ms), imposing a 50 ms constraint seems to be a minimal requirement.

- Crows’ RTs in a visual task in Veit et al. (2014) [here C.D. data set]. This study aimed to investigate the neuronal correlates of visual working memory in four trained carrion crows. The experimental set-up required crows to remember a visual stimulus for later comparison while the activity of neurons in the nidopallium caudolaterale (a higher association brain area functionally akin to the prefrontal cortex in monkeys) was recorded. Veit et al. (2014) reported a histogram of the RTs of 162 visually responsive neurons (i.e. neurons from the four crows for which RTs could be estimated) in Figure 4B in their paper.

- Synchronised cortical state and neuronal RTs [here S.S.N. data set]. Fazlali et al. (2016) investigated the link between spontaneous activity in the locus coeruleus (a key neuromodulatory nucleus in the brainstem) and synchronised/desynchronised states in the vibrissal barrel somatosensory cortex (BC) in Wistar rats. One of the analyses looked at neuronal responses in the BC during the two cortical states. The authors found that neuronal RTs in the BC were faster during the desynchronised than during the synchronised cortical state (in their study neuronal RTs were defined as the first time bin exceeding background activity by three standard deviations). The distribution of the BC’s neuronal RTs in the synchronised cortical state is featured in this study. Figure 6B and the section ‘Reduced response latency in desynchronised state’ in Fazlali et al. (2016) provide details for this data set.

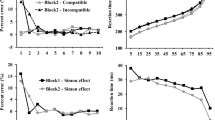

Table 3 reports the goodness-of-fit of the GEG and other distributions to these data sets (to improve numerical stability, all data were divided by a constant factor of 100). The results indicated that the SNAP and SN distributions provided the best fits in the C.D. and S.S.N. data sets, respectively, and that the GEG distribution gave the best fit in the remaining two data sets (bearing in mind that the lower the AIC and/or BIC, the better the fit). Note that we are comparing distributions with differing numbers of parameters, which gives more complex distributions an inherent advantage in fitting the data. However, both AIC and BIC penalise model complexity, so valid comparisons can be made across distributions with different numbers of parameters.
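How AIC and BIC trade fit against complexity can be illustrated with a small Python sketch. It uses the standard definitions \(AIC=2k-2\ell\) and \(BIC=k\log n-2\ell\), where \(\ell\) is the maximised log-likelihood and k the number of parameters. It fits only the Normal and Exponential distributions because their MLEs have closed forms. The data values are hypothetical and are not taken from Table 3.

```python
import math


def aic(loglik, k):
    """Akaike information criterion: 2k - 2 * log-likelihood."""
    return 2.0 * k - 2.0 * loglik


def bic(loglik, k, n):
    """Bayesian information criterion: k * log(n) - 2 * log-likelihood."""
    return k * math.log(n) - 2.0 * loglik


def normal_loglik(data):
    """Maximised Normal log-likelihood (MLE mean and variance plugged in)."""
    n = len(data)
    mean = sum(data) / n
    var = sum((x - mean) ** 2 for x in data) / n
    return -0.5 * n * (math.log(2.0 * math.pi * var) + 1.0)


def exponential_loglik(data):
    """Maximised Exponential log-likelihood (MLE rate = 1 / sample mean)."""
    n = len(data)
    mean = sum(data) / n
    return -n * (math.log(mean) + 1.0)


# Hypothetical RTs (already divided by 100), for illustration only.
rts = [2.1, 2.4, 2.2, 3.9, 2.6, 2.3, 5.0, 2.8, 2.5, 3.1]
n = len(rts)
fits = {
    "NO": (normal_loglik(rts), 2),        # two parameters: mean and sd
    "EXP": (exponential_loglik(rts), 1),  # one parameter: rate
}
for name, (loglik, k) in fits.items():
    print(name, round(aic(loglik, k), 2), round(bic(loglik, k, n), 2))
```

The distribution with the lower criterion value is preferred. The extra parameter of the Normal model costs 2 units of AIC and \(\log n\) units of BIC, so it is favoured only if it raises the log-likelihood by more than that penalty.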

Although the ECSN (Exponential-Centred Skew-Normal) distribution gave the second-best fit in the C.D. data set, this result is not reliable given difficulties in convergence, leaving that second place for the GEG distribution. In the case of the S.S.N. data set, the LN and GEG distributions gave the second- and third-best fits, respectively.

Table 3 also shows that the NO distribution tended to give poor fits (i.e. very high AIC and/or BIC) due to its natural inability to fit asymmetric data and its definition on the real line, which does not match the non-negativity of RTs. Such a result reinforces the claim that methods that assume normality in the response variable (e.g. ordinary least squares, t-test and ANOVA) are not suitable to analyse and model RT data. The results of the LN distribution also indicate that a logarithmic transformation is usually not enough to make the distribution of RTs adhere to a normal law. That the EXP distribution gave the highest AIC and/or BIC suggests that having just one parameter (rate or inverse scale in the case of this distribution) limits this distribution’s flexibility to match the shape of RT data. Overall, the results thus suggest the GEG distribution provides a good fit to these behavioural and neuronal RT data sets (see Fig. 2). A future study should aim to fit several suitable distributions to a much larger collection of real-life neuronal and behavioural data sets to obtain a fine-grained picture of which distributions tend to give the best fit across data sets of comparable characteristics.

Fig. 2. Empirical CDFs of four real-life data sets and four fitted theoretical CDFs. The data distributions are represented by black dots (eCDF). Note that the NO distribution tends to miss the tails of the data (e.g. in data sets M.S. and C.D.) and in other cases it misses the data locations (e.g. in the C.D. data set). Note there is a trade-off between interpretability and goodness of fit (i.e. accuracy and flexibility) that requires careful consideration when selecting a distribution to model data. GEG = four-parameter Generalised Exponential-Gaussian distribution; NO = two-parameter Normal distribution; G = two-parameter Gamma distribution; SN = three-parameter Skew-Normal distribution. The x axis represents RTs (these were divided by 100 to improve numerical stability). See Table 3 for the results of the fits.

GAMLSS in a nutshell: a regression framework for distributional modelling

In the regression context, it is traditional to investigate the effects of independent variables (IVs) on the mean of the dependent variable (DV) and this is achieved via ordinary least squares regression (also known as linear models, LM). Improvements on the LM approach have been reflected in the generalised linear model (GLM) by replacing the required normal distribution of the response variable with the exponential family of distributions (e.g. the Gamma distribution). Although GLM is more flexible than LM, both focus on the effects of the IVs on the DV’s mean. However, even if LM and GLM could also model the effects of the IVs on the DV’s standard deviation, the findings would be limited to the data’s location and scale parameters. Additionally, LM allows only a linear relationship between continuous IVs and DVs, and GLM assumes a linear relationship between the transformed response in terms of the link function and IVs. Generalised additive models (GAM), however, allow modelling such a relationship by using non-parametric (smooth) functions on the numeric IVs. Generalised additive models for location, scale and shape (GAMLSS) is the only existing regression framework that encompasses all these regression methods (Stasinopoulos et al. 2018; Kneib et al. 2021). It also has the extra property of allowing modelling of the effects of IVs on the DV’s location, scale and shape (i.e. skewness and kurtosis) via over 100 statistical distributions, thus enabling a comparison between many different models and proper distributional analysis [Rigby et al. (2020); see also Fig. 3].

Fig. 3. Cumulative distribution functions (CDFs) illustrating different normal (left plot) and non-normal distributions (right plot). Left plot (differences/similarities in location and scale): black and red CDFs have similar location and similar scale; blue and black/red CDFs have similar location and different scale; blue and green CDFs have different location and similar scale; green and black/red CDFs have different location and different scale. In all these cases, LM and GLM would be able to identify similarities/differences in location only (i.e. mean values); that is, standard techniques are good for detecting shifts but not shapes of distributions. Right plot (different types of shapes): black and blue CDFs represent distributions with positive skew, green and red CDFs represent distributions with negative skew, and the grey CDF represents a uniform distribution. The dotted grey horizontal line cuts through the distributions’ medians.

Statistically speaking, a GAMLSS model assumes \(Y_i \sim D(\varvec{\theta })\), where the \(Y_i\), for \(i=1,2,\ldots ,n\), are n independent observations with probability (density) function \(f_Y(y_i|{\varvec{\theta }})\) conditional on the distribution parameters \(\varvec{\theta }\) (usually up to four), each of which can be a function of the IVs. For \(k=1,2,3,4\), let \(g_k(.)\) be a known monotonic link function relating a distribution parameter to a predictor \(\varvec{\eta }_k\), such that

\[ g_k(\varvec{\theta }_k)=\varvec{\eta }_k=\mathbf{X}_k{\beta }_k+\sum _{j=1}^{J_k}s_{kj}(\mathbf{x}_{kj}), \]

where \(\mathbf{X}_k\) is a known design matrix, \({\beta }_k=(\beta _{k1}, \ldots , \beta _{k J_{k}^{'}} )^{\top }\) is a parameter vector of length \(J_{k}^{'}\), \(s_{kj}\) is a smooth non-parametric function of variable \(X_{kj}\) and the terms \(\mathbf{x}_{kj}\) are vectors of length n, for \(k=1,2,3,4\) and \(j=1,\ldots ,J_k\). Here, \(\varvec{\theta }\) \(=(\tau , \mu , \sigma , \alpha )\), D represents the GEG distribution, and each of the GEG distribution parameters can be modelled as linear or smooth functions of the IVs.
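The mapping from predictors to distribution parameters can be sketched in Python for the purely parametric case (no smooth terms). The sketch assumes an identity link for \(\mu\) and log links for the positive parameters \(\sigma\), \(\tau\) and \(\alpha\). These link choices, the toy design matrix and the coefficient values are all hypothetical.

```python
import math


def linear_predictor(design, beta):
    """eta = X beta, with the design matrix given as a list of rows."""
    return [sum(x * b for x, b in zip(row, beta)) for row in design]


# Inverse link functions g_k^{-1}; the choice per parameter is an assumption.
INVERSE_LINKS = {"identity": lambda eta: eta, "log": math.exp}


def distribution_parameters(designs, coefs, links):
    """Return theta_k = g_k^{-1}(X_k beta_k) for every distribution parameter."""
    params = {}
    for name, design in designs.items():
        eta = linear_predictor(design, coefs[name])
        inverse = INVERSE_LINKS[links[name]]
        params[name] = [inverse(e) for e in eta]
    return params


# Toy example: two observations, an intercept and one binary IV.
X = [[1.0, 0.0], [1.0, 1.0]]
designs = {"mu": X, "sigma": X, "tau": X, "alpha": X}
coefs = {
    "mu": [2.0, 0.5],     # the IV shifts the location
    "sigma": [0.0, 0.2],  # the IV changes the scale (log scale)
    "tau": [-0.7, 0.0],   # no effect on tau
    "alpha": [0.5, 0.0],  # no effect on alpha
}
links = {"mu": "identity", "sigma": "log", "tau": "log", "alpha": "log"}
theta = distribution_parameters(designs, coefs, links)
```

Each observation thus receives its own \((\tau ,\mu ,\sigma ,\alpha )\), which is what allows the IVs to act on scale and shape as well as on location.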

In a nutshell, GAMLSS is thus an interpretable, flexible and sophisticated framework for data modelling. It is interpretable in that it is conceived in the well-known regression framework; it is flexible in that it allows modelling of the response variable via several candidate statistical distributions; and it is sophisticated in that numeric and categorical IVs can be subjected to cutting-edge smoothing algorithms. Thus, GAMLSS could be an educated analytical approach to respond to the current lack of statistical sophistication and rigour permeating research in neuroscience (Nieuwenhuis et al. 2011).

To illustrate the potential of GAMLSS for the analysis of RT data, the data set shown in Figure 2F in Schledde et al. (2017) was examined via GAMLSS. In that study, the authors recorded from the motion-sensitive area MT in monkeys and investigated the latency of neurons in response to stimulus changes under different conditions of attention. Two monkeys were engaged in a change-detection paradigm and the neuron under investigation was responding to a speed change either with spatial attention directed to its receptive field or away from it (i.e. attend-in and attend-out), and with feature attention directed either towards the motion domain or towards the colour domain (i.e. speed and colour tasks). The authors found that the response latency of the neurons depended significantly on the attentional condition, with both spatial and feature attention having an influence on the shape of the distribution of latencies.

Such data can be represented as the regression model ‘\(RT \sim A * T\)’; where RT are the neuronal latencies, A and T are the 2-level categorical variables attention (attend-in and attend-out) and task (colour and speed), respectively, and ‘\(*\)’ stands for main effects and interactions. That model is equivalent to a \(2\times 2\) ANOVA design, which is traditionally assessed via LM. The design therefore implies four conditional distributions whose ECDFs are shown in Figure 2F in Schledde et al. (2017). There can be several analytical options, but for illustration purposes only a main-effects-with-no-random-effects model was considered. A GAMLSS modelling of the conditional distributions via the 10 probability distributions considered in Table 3 indicated that while the G distribution gave the best fit for one conditional distribution, the NO distribution fitted the three remaining conditional distributions best. A marginal distributional modelling (i.e. all the RTs) showed the EG distribution gave the best fit (the GEG distribution being the second-best fit). Two versions of the regression model shown above were considered: a model in which the DV was modelled via the NO distribution and a model in which the DV was modelled via the EG distribution.Footnote 3 While the NO model corresponds to the classic LM, the EG model is achievable only via GAMLSS. In both cases only the location parameter was investigated and RTs were divided by 100 to improve numerical stability. The results showed that the EG model (AIC = −73.86) provided a better fit to the data than the NO model (AIC = −70.09).Footnote 4

Discussion and conclusion

The GEG distribution was proposed as a candidate statistical model of behavioural and neuronal RTs and its statistical properties were described and examined via simulations. Given that the GEG is a four-parameter distribution, it can readily adopt non-normal shapes typically found in RT data, and this was exemplified via real-life data sets. It is a common practice to apply non-linear transformations to RT data to meet parametric assumptions and thus approximate normality or improve symmetry. However, the GEG distribution and the other distributions considered here enable working with the original shape of the data and therefore sidestep unnecessary non-linear transformations. The following paragraphs discuss statistical and practical implications of the GEG distribution within a GAMLSS framework for the analysis of neuronal and behavioural RTs.

Some statistical graphics aspects relating to GAMLSS

Commenges and Seal (1986) argue that explaining the relationship between neuronal RTs and behavioural RTs in well-controlled experiments depends on the statistical methods used for the data analysis. The GEG distribution is well suited to characterising the distribution of both types of RTs conditioned on the specific variables manipulated in an experiment. However, the explanatory power of the GEG can only be appreciated when this distribution is used within a distributional modelling approach. Such a method was briefly described above: GAMLSS (Stasinopoulos et al. 2017). A key step, though, in the distributional modelling of data is the use of statistical graphical techniques that allow the distribution of the data to be examined. Traditionally, bar plots have been used for such a purpose but they do not allow the shape of the data to be visualised; boxplots and violin plots are better techniques. However, empirical cumulative distribution function (ECDF) plots are the optimal approach to investigate the shape of data and are instrumental in comparing vectors of data. An example of ECDFs representing neuronal RTs can be seen in Figure 4A in Mormann et al. (2008). Indeed, the study by Mormann et al. (2008) is an excellent example of how neuronal RTs can provide insight into neuronal firing associated with brain connectivity and stimuli.
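For readers unfamiliar with ECDFs, a minimal Python sketch of how the plotted points are obtained follows. It uses the usual definition, the proportion of observations less than or equal to x. The function names are illustrative and plotting is left to whichever graphics library is preferred.

```python
def ecdf(data):
    """Return the sorted values and the cumulative proportions i/n.

    With tied values, the last proportion attached to a value is the ECDF there.
    """
    xs = sorted(data)
    n = len(xs)
    return xs, [(i + 1) / n for i in range(n)]


def ecdf_at(data, x):
    """Evaluate the ECDF at x: the share of observations <= x."""
    return sum(1 for value in data if value <= x) / len(data)
```

Plotting the two lists returned by `ecdf` for each experimental condition on the same axes gives a direct visual comparison of location, scale and shape.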

A hypothetical scenario where GAMLSS can be used

The explanatory and predictive power of statistical distributions can only be achieved via the GAMLSS framework. The following lines depict a hypothetical experiment in which GAMLSS could be used to model RTs via the GEG distribution to better understand neuronal activity. A study conceptualised from the two-stream model of higher-order visual processing (Goodale and Milner 1992) measures extracellular RTs in neurons specialised in the shape of visual stimuli. The study’s goal is to characterise neuronal RTs conditioned on IVs of interest in the experiment; of particular interest is the presentation time of the stimuli. Hence, single- and multiple-neuron recordings are performed in cortical visual areas V1, V2, V3, and V4 from a small sample of neurotypical human adult participants. The researchers define neuronal RTs as the time lapse between the presentation of the stimulus and the moment the neuron generates an action potential. Since each neuron ‘sees’ all stimuli, RT distributions per neuron for all trials and stimuli are obtained. Further suppose that data from a reasonable number of neurons in each V area are obtained (e.g. 10 neurons per area per participant). The stimuli consist of equal numbers of two-dimensional round and angular shapes of equal size and colour (e.g. all black). The task consists of making saccades from a fixation point to selected coordinates on a computer screen where the shapes are shown individually and randomly at a fixed interstimulus interval but at three different presentation times (e.g. stimuli are exposed for 10, 30, and 50 ms; i.e. each image is seen three times in total).

A traditional LM or GLM model to analyse the data could be conceived as \(RT \sim V * T * S\), such that RT, V, T, and S stand for the resulting marginal distribution of neuronal RTs, the four V cortical areas, the three presentation times, and the two types of shapes, respectively; and \(*\) stands for the main effects and interactions. The results would inform whether, for example, there are differences in mean RTs among the four V areas, the three presentation times, and/or the two types of shapes. Also, the model would indicate, for example, if there is an interaction between V and T such that differences in mean RTs between V areas may occur at certain presentation times. The other two two-way interactions and the three-way interaction could also be investigated. The issue with modelling such data via LM or GLM is that the findings are limited to mean RTs. Regardless of the main or interaction effects on the mean RTs, it may be the case that there are effects on the RTs’ variability (i.e. the RT’s scale). Neither LM nor GLM can detect potential effects of the IVs on changes in the RT’s scale. GAMLSS, on the other hand, is able to examine effects that the IVs can have on the RT’s location parameter (as LM and GLM do) but can also determine if the same IVs (or a subset of them) can affect the response’s variability (see Fig. 3). Importantly, while LM can model the DV via the Normal distribution only and GLM can do so via distributions from the Exponential family, GAMLSS can use those and any other statistical distribution implemented in the gamlss.dist R package (Rigby et al. 2020) or in a way that can be used within the GAMLSS framework [see Roquim et al. (2021) and the ‘RelDists’ package for examples of this approach].
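The point that a mean-only analysis can miss an effect on the scale can be illustrated with a small simulation. The sketch below is not the GAMLSS analysis itself; it simply draws ex-Gaussian values for two hypothetical presentation-time conditions that share the same location but differ in spread, and then compares means and standard deviations. All parameter values, the seed, and the names `rt_short` and `rt_long` are assumptions made for illustration.

```python
import random
import statistics


def simulate_eg(n, mu, sigma, tau, rng):
    """Draw n ex-Gaussian values: a normal plus an independent exponential."""
    # expovariate takes a rate, so a mean of tau corresponds to rate 1 / tau
    return [rng.gauss(mu, sigma) + rng.expovariate(1.0 / tau) for _ in range(n)]


rng = random.Random(2023)  # fixed seed so the sketch is reproducible

# Hypothetical conditions: identical mu and tau, different sigma
rt_short = simulate_eg(5000, mu=60.0, sigma=5.0, tau=10.0, rng=rng)
rt_long = simulate_eg(5000, mu=60.0, sigma=20.0, tau=10.0, rng=rng)

mean_diff = statistics.mean(rt_long) - statistics.mean(rt_short)
sd_ratio = statistics.stdev(rt_long) / statistics.stdev(rt_short)

print(f"difference in means: {mean_diff:.2f} ms")
print(f"ratio of standard deviations: {sd_ratio:.2f}")
```

Under these settings both conditions have a theoretical mean of 70 ms, whereas the theoretical standard deviations are about 11.2 ms and 22.4 ms. A model restricted to the mean would therefore report essentially no effect of the condition, while a model that also regresses the scale parameter on the condition could pick up the difference.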

Modelling the data of this hypothetical experiment via GAMLSS would allow understanding of the trial-to-trial RT signature of single and multiple neurons conditioned on the stimuli and task they are faced with. Furthermore, it could be inferred that signature RT distributions (location, scale and shape) should be able to differentiate between healthy and unhealthy neurons according to task demands (e.g. visual tasks, auditory tasks, type of stimuli). If there is a predictive goal, trees and forests for distributional regression could be used [see Schlosser et al. (2019) and the R package disttree], since they blend algorithmic modelling with GAMLSS modelling.

Applied implications of the GAMLSS modelling

The previous example illustrates how neuronal RTs can be used to explain the processing of stimuli in networks of brain areas. An actual example of the value of investigating neuronal RTs is provided in Figures 5D and 5E in Yoshor et al. (2007). Those figures show an increasing trend in neuronal RTs (Figure 5D) and time to peak (Figure 5E) from zone 1 (areas for shape and colour processing) to zone 4 (areas for object and face processing) in the visual cortex; in particular, there was an RT mean difference between neurons in zone 1 and zone 4. In a similar vein, Mormann et al. (2008) found that parahippocampal, entorhinal, hippocampal, and amygdalan neuronal average RTs were in accord with neuroanatomical evidence. Also, Lin et al. (2018) found increments in RTs’ average and variability from the calcarine fissure (low-order primary sensory cortex) to the fusiform gyrus (higher-order association area), areas within the ventral pathway of face processing. Such types of analytical approach contribute to better characterising brain areas and their functions by placing neurons along a sensory-motor spectrum (DiCarlo and Maunsell 2005). Very likely, a more sophisticated analysis of this type of data via GAMLSS would further the current understanding of neuronal activation in those areas.

Future challenges

Although using the GEG distribution for the analysis of RTs offers a promising avenue for new research, some aspects still need to be investigated. It was argued that the GEG is a four-parameter distribution flexible enough to capture changes in location, scale, and shape. The simulation study reported above indicated that the GEG’s shape parameters, that is, those governing skewness and kurtosis, require large samples to allow reliable estimates. Future work should investigate these parameters in more depth. A way to do so is via transformed moment kurtosis and skewness plots [see section 16.1.2 in Rigby et al. (2020)]. These types of plot enable the investigation of regions of possible combinations of transformed moment skewness and transformed moment kurtosis of the distributions, and so the flexibility of the GEG can be compared to that of other continuous distributions in terms of moment skewness and kurtosis.

Conclusion

In summary, distributional modelling of neuronal RTs enables fine-grained temporal profiles of brain areas and networks. The GEG has been proposed as a suitable distribution to fit RT data and its use in neuronal and behavioural statistical modelling will contribute to forging the link between neurometrics and psychometrics. Data sets featured in this article and related R codes are available at https://cutt.ly/IWJeSkO.

Notes

This study is perhaps one of the few studies, if not the only one, tackling RTs from a distributional perspective for understanding neuropsychological disorders.

Following the work by Lehmann (1953), Durrans (1992) extended the SN distribution for all \(\alpha \in {\mathbb{R}}^{+}\) and labelled it the distribution of fractional order statistics. These statistics are also known as AP, Exponentiated, or Generalised Gaussian distributions. These distributions have the PDF \(\varphi _F(z;\alpha )=\alpha f(z)\{F(z)\}^{\alpha -1}\), with z \(\in {\mathbb{R}}, ~~\alpha \in \mathbb{R}^+\), and where F is an absolutely continuous distribution function with probability density function \(f(z) =d/dz F(z)\). This new model gives rise to the family of AP (or Exponentiated) distributions, denoted by \(Z\sim AP(\alpha )\), which is a new alternative among families of distributions that model high degrees of asymmetry and kurtosis. The AP distribution therefore allows modelling data that do not follow a normal distribution and that rather exhibit high (or low) asymmetry and/or kurtosis.
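A minimal numerical sketch of the AP construction is given below, taking the standard normal as the base distribution \(F\) (an assumption made for illustration; any absolutely continuous \(F\) could be used). The function names are hypothetical. The snippet checks that \(\alpha =1\) recovers the base density and that the AP density integrates to approximately one for other values of \(\alpha\).

```python
import math


def norm_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def norm_cdf(z):
    """Standard normal distribution function, via the complementary error function."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def ap_normal_pdf(z, alpha):
    """AP (exponentiated) density alpha * f(z) * F(z)**(alpha - 1) with a normal base."""
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    return alpha * norm_pdf(z) * norm_cdf(z) ** (alpha - 1.0)


def trapezoid(func, lower, upper, steps=4000):
    """Composite trapezoidal rule on [lower, upper]."""
    h = (upper - lower) / steps
    total = 0.5 * (func(lower) + func(upper))
    for i in range(1, steps):
        total += func(lower + i * h)
    return total * h


for alpha in (0.5, 1.0, 3.0):
    area = trapezoid(lambda z: ap_normal_pdf(z, alpha), -10.0, 10.0)
    print(alpha, round(area, 6))
```

Values of \(\alpha\) above one push probability mass to the right of the base distribution and values below one push it to the left, which is how a single extra parameter changes asymmetry.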

Note that the GEG, ECSN, and SNAP distributions are not yet included in standard GAMLSS R packages such as gamlss.dist, and only the specific GAMLSS model required for the analysis of the data set of Schledde et al. (2017) was implemented. While the results reported in this manuscript indicate that it is worthwhile including the GEG distribution in the gamlss.dist R package, the other distributions should be included as well given evidence supporting their value (see references in the main text). Doing so, though, requires more theoretical work on determining several derivatives and other properties of these distributions that are needed for the internal optimisation of parameters. Research on this matter is under way.

Indeed, when both location and scale parameters were modelled, the EG model still provided a better fit (EG model’s AIC = − 78.62 and NO model’s AIC = − 78.59). Having said this, it is important that a GAMLSS model be selected based on its interpretability (Ramires et al. 2021).

References

Azzalini A (1985) A class of distributions which includes the normal ones. Scand J Stat 12(2):171–178

Azzalini A, Capitanio A (1999) Statistical applications of the multivariate skew normal distribution. J R Stat Soc B 61(3):579–602

Bono R, Blanca M, Arnau J, Gómez-Benito J (2017) Non-normal distribution commonly used in health, education, and social sciences: a systematic review. Front Psychol 8:1602

Chiogna M (1998) Some results on the scalar skew-normal distribution. Stat Methods Appl 7(1):1–13

Christie LS, Luce RD (1956) Decision structure and time relations in simple choice behavior. Bull Math Biophys 18:89–112

Commenges D, Seal J (1986) The formulae-relating slopes, correlation coefficients and variance ratios used to determine stimulus- or movement-related neuronal activity. Brain Res 383:350–352

Cordeiro GM, Ortega EMM, da Cunha DCC (2013) The exponentiated generalized class of distributions. J Data Sci 11:1–27

Dawson MRW (1988) Fitting the ex-Gaussian equation to reaction time distributions. Behav Res Methods Instrum Comput 20(1):54–57

DiCarlo J, Maunsell J (2005) Using neuronal latency to determine sensory-motor processing pathways in reaction time tasks. J Neurophysiol 93(5):2974–2986

Durrans S (1992) Distributions of fractional order statistics in hydrology. Water Resour Res 28:1649–1655

Fazlali Z, Ranjbar-Slamloo Y, Adibi M, Arabzadeh E (2016) Correlation between cortical state and locus coeruleus activity: implications for sensory coding in rat barrel cortex. Front Neural Circuits 10:14

Fischer B, Wegener D (2018) Emphasizing the “positive” in positive reinforcement: using nonbinary rewarding for training monkeys on cognitive tasks. J Neurophysiol 120(1):115–128

Foroni F, Wilcox R, de Bastiani F, Marmolejo-Ramos F (2020) Inductive-deductive asymmetry in valence activation in the affective priming paradigm: a multi statistical approaches test (in preparation)

Galashan F, Saßen H, Kreiter A, Wegener D (2013) Monkey area MT latencies to speed changes depend on attention and correlate with behavioral reaction times. Neuron 78(4):740–750

Gómez HW, Venegas O, Bolfarine H (2007) Skew-symmetric distributions generated by the distribution function of the normal distribution. Environmetrics 18:395–407

Goodale MA, Milner AD (1992) Separate visual pathways for perception and action. Trends Neurosci 15(1):20–25

Gupta D, Gupta R (2008) Analyzing skewed data by power normal model. TEST 17:197–210

Hanes DP, Schall JD (1996) Neural control of voluntary movement initiation. Science 274(5286):427–430

Harris C, Waddington J, Biscione V, Manzi S (2014) Manual choice reaction times in the rate-domain. Front Hum Neurosci 8:418

Hauser C, Zhu D, Stanford T, Salinas E (2018) Motor selection dynamics in FEF explain the reaction time variance of saccades to single targets. eLife 7:e33456

Henze N (1986) A probabilistic representation of the skew-normal distribution. Scand J Stat Ser B 61(13):271–275

Hohle RH (1965) Inferred components of reaction times as functions of fore period duration. J Exp Psychol 69(4):382–386

Jahanshahi M, Brown RG, Marsden CD (1993) A comparative study of simple and choice reaction time in Parkinson’s, Huntington’s and cerebellar disease. J Neurol Neurosurg Psychiatry 56:1169–1177

Kneib T, Silbersdorff A, Säfken B (2021) Rage against the mean: a review of distributional regression approaches. Econom Stat. https://doi.org/10.1016/j.ecosta.2021.07.006

Kuang S, Morel P, Gail A (2016) Planning movements in visual and physical space in monkey posterior parietal cortex. Cereb Cortex 26:731–747

Lehmann EL (1953) The power of rank tests. Ann Math Stat 24(1):23–43

Leiva V, Tejo M, Guiraud P, Schmachtenberg O, Orio P, Marmolejo-Ramos F (2015) Modeling neural activity with cumulative damage distributions. Biol Cybern 109(4):421–433

Levakova M, Tamborrino M, Ditlevsen S, Lansky P (2015) A review of the methods for neuronal response latency estimation. Biosystems 136:23–34

Limongi R, Bohaterewicz B, Nowicka M, Plewka A, Friston KJ (2018) Knowing when to stop: aberrant precision and evidence accumulation in schizophrenia. Schizophr Res 197:386–391

Lin J-F, Silva-Pereyra J, Chou C-C, Lin F-H (2018) The sequence of cortical activity inferred by response latency variability in the human ventral pathway of face processing. Sci Rep 8:5836

Luce RD (1986) Response times. Oxford University Press, New York

Luna R, Hernández A, Brody C, Romo R (2005) Neural codes for perceptual discrimination in primary somatosensory cortex. Nat Neurosci 8(9):1210–1218

Maia PD, Kutz JN (2017) Reaction time impairments in decision-making networks as a diagnostic marker for traumatic brain injuries and neurological diseases. J Comput Neurosci 42:323–347

Marmolejo-Ramos F, Cousineau D, Benites L, Maehara R (2015) On the efficacy of procedures to normalise Ex-Gaussian distributions. Front Psychol 5:1548

Martínez-Flórez G, Barrera-Causil C, Marmolejo-Ramos F (2020) The exponential-centred skew-normal distribution. Symmetry 12(7):1140

Martínez-Flórez G, Bolfarine H, Gómez H (2014) Skew-normal alpha-power model. Statistics 48(6):1414–1428

McGill WJ (1963) Stochastic latency mechanisms. In: Luce RD, Bush RR, Galanter E (eds) Handbook of mathematical psychology, vol 1. Wiley, New York, pp 309–359

Mormann F, Kornblith S, Quiroga Q, Kraskov A, Cerf M, Fried I, Koch C (2008) Latency and selectivity of single neurons indicate hierarchical processing in the human medial temporal lobe. J Neurosci 28(36):8865–8872

Mukamel R, Fried I (2012) Human intracranial recordings and cognitive neuroscience. Annu Rev Psychol 63:511–537

Múnera A, Cuestas D, Troncoso J (2012) Peripheral facial nerve lesions induce changes in the firing properties of primary motor cortex layer 5 pyramidal cells. Neuroscience 223:140–151

Nieuwenhuis S, Forstmann B, Wagenmakers EJ (2011) Erroneous analyses of interactions in neuroscience: a problem of significance. Nat Neurosci 14(9):1105–1107

Osmon D, Kazakov D, Santos O, Kassel M (2018) Non-gaussian distributional analyses of reaction times (RT): improvements that increase efficacy of RT tasks for describing cognitive processes. Neuropsychol Rev 28(3):359–376

Palmer C, Cheng S-Y, Seidemann E (2007) Linking neuronal and behavioral performance in a reaction-time visual detection task. J Neurosci 27(30):8122–8137

Pek J, Wong O, Wong A (2018) How to address non-normality: a taxonomy of approaches, reviewed, and illustrated. Front Psychol 9:2104

Pewsey A, Gómez H, Bolfarine H (2012) Likelihood-based inference for power distributions. TEST 21:775–789

Ramires TG, Nakamura LR, Righetto AJ, Carvalho RJ, Vieira LA, Pereira CA (2021) Comparison between highly complex location models and GAMLSS. Entropy 23(4):469

Rigby RA, Stasinopoulos MD, Heller GZ, De Bastiani F (2020) Distributions for modeling location, scale, and shape: using GAMLSS in R. CRC Press, Boca Raton

Roquim F, Ramires T, Nakamura L, Righetto A, Lima R, Gomes R (2021) Building flexible regression models: including the Birnbaum–Saunders distribution in the GAMLSS package. Semina: Ciências Exatas e Tecnológicas, 42(2):163–168

Schledde B, Galashan F, Przybyla M, Kreiter A, Wegener D (2017) Task-specific, dimension-based attentional shaping of motion processing in monkey area MT. J Neurophysiol 118(3):1542–1555

Schlosser L, Hothorn T, Stauffer R, Zeileis A (2019) Distributional regression forests for probabilistic precipitation forecasting in complex terrain. Ann Appl Stat 13(3):1564–1589

Seal J, Commenges D, Salamon R, Bioulac B (1983) A statistical method for the estimation of neuronal response latency and its functional interpretation. Brain Res 278:382–386

Stasinopoulos M, Rigby R, de Bastiani F (2018) GAMLSS: a distributional regression approach. Stat Model 18(3–4):248–273

Stasinopoulos MD, Rigby RA, Heller GZ, Voudouris V, De Bastiani F (2017) Flexible regression and smoothing: using GAMLSS in R. CRC Press, Boca Raton

Stigler S (1977) Fractional order statistics, with applications. J Am Stat Assoc 72(359):544–550

Sun H, Ma X, Tang L, Han J, Zhao Y, Xu X, Wang L, Zhang P, Chen L, Zhou J, Wang C (2019) Modulation of Beta oscillations for implicit motor timing in primate sensorimotor cortex during movement preparation. Neurosci Bull 35(5):826–840

Tejo M, Araya H, Niklitschek-Soto S, Marmolejo-Ramos F (2019) Theoretical models of reaction times arising from simple-choice tasks. Cogn Neurodyn 13(4):409–416

Tejo M, Niklitschek-Soto S, Marmolejo-Ramos F (2018) Fatigue-life distributions for reaction time data. Cogn Neurodyn 12(3):351–356

Veit L, Hartmann K, Nieder A (2014) Neuronal correlates of visual working memory in the corvid endbrain. J Neurosci 34(23):7778–7786

Vélez J, Correa J, Marmolejo-Ramos F (2015) A new approach to the Box-Cox transformation. Front Appl Math 1:12

Voelker P, Piscopo D, Weible A, Lynch G, Rothbart M, Posner M, Niell C (2017) How changes in white matter might underlie improved reaction time due to practice. Cogn Neurosci 8(2):112–135

Yoshor D, Bosking W, Ghose G, Maunsell J (2007) Receptive fields in human visual cortex mapped with surface electrodes. Cereb Cortex 17:2293–2302

Acknowledgements

F.M.-R. thanks Rogelio Luna for clarifying aspects related to neuronal latencies and brain areas and C.B.-C. thanks the Instituto Tecnológico Metropolitano (ITM) for its support. T.K. gratefully acknowledges financial support from the German Research Foundation (Deutsche Forschungsgemeinschaft; DFG), Grant KN 922/9-1. D.W. was supported by the German Research Foundation (Deutsche Forschungsgemeinschaft; DFG), grant WE 5469/2-1. F.D.B is grateful for the partial financial support of the National Council for Scientific and Technological Development (CNPq), grant 310050/2019-7. The authors thank Joan Gladwyn (https://properwords.co.nz/) for professionally proofreading the latest version of this manuscript.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Author information

Contributions

GMF conceived the proposed distribution, wrote its associated equations, and implemented the simulations; CBC checked the R codes, implemented the figures for the GEG distribution, and performed data analyses; SK provided data and checked the description of the studies; ZF provided data and checked the description of the studies; DW provided data and checked the description of the studies; TK revised the simulations and the implementation of the distributions, fitted the data sets and created the figures for the applications; FDB revised the data fitting and wrote the mathematics of GAMLSS; FMR wrote the motivation of the manuscript (i.e. linking the proposed distribution to reaction time data and distributional GAMLSS analyses), wrote the discussion section, acquired the data for illustrating the proposed distribution, and proofread and edited all sections.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Statistical details of the Ex-Gaussian distribution

For an exponentially distributed \(Y_1\) with mean \(\tau\) and \(Y_2\sim N(\mu ,\sigma ^{2})\), with \(Y_1\) and \(Y_2\) independent, it follows that the PDF of the random variable \(X=Y_1+Y_2\) is given by:

This PDF is denoted as \(EG(\tau ,\mu ,\sigma ^{2})\). On the other hand, the EG distribution’s CDF can be obtained as:

where \(z=\left( \frac{x-\mu }{\sigma }\right)\).
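For reference, the standard ex-Gaussian forms of the PDF and CDF can be written as follows. This is a sketch under the assumption that \(\tau\) is the mean of the exponential component, which agrees with the term \(\sigma /\tau\) used in the information-matrix section; \(\Phi\) denotes the standard normal distribution function and \(z\) is as defined above.

\[
f_{EG}(x;\tau ,\mu ,\sigma )=\frac{1}{\tau }\exp \left( \frac{\sigma ^{2}}{2\tau ^{2}}-\frac{x-\mu }{\tau }\right) \Phi \left( z-\frac{\sigma }{\tau }\right) ,
\]

\[
F_{EG}(x;\tau ,\mu ,\sigma )=\Phi (z)-\exp \left( \frac{\sigma ^{2}}{2\tau ^{2}}-\frac{x-\mu }{\tau }\right) \Phi \left( z-\frac{\sigma }{\tau }\right) .
\]

Differentiating the second expression with respect to \(x\) returns the first, because the two terms involving the normal density cancel.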

From this result, it follows that the survival functions, relative risk and hazard, are given by:

where \(S_N(t)\) is the survival function of the normal distribution.

Given the independence between the convoluted exponential and normal variables, it follows that

Likewise, the skewness and kurtosis coefficients are given by:

The kurtosis expression simplifies when written as excess kurtosis, \(kurt-3=\frac{6}{\left( \left( \frac{\sigma }{\tau }\right) ^2+1 \right) ^2}.\)

These formulas thus indicate that the EG distribution has positive asymmetry.
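The positive asymmetry can be checked with a short calculation based on cumulants, which add under independence. The sketch below assumes that \(\tau\) is the mean of the exponential component; the function name `eg_summary` and the parameter values are hypothetical.

```python
def eg_summary(tau, mu, sigma):
    """Mean, variance and skewness of the sum of N(mu, sigma^2) and an
    independent exponential variable with mean tau."""
    if tau <= 0 or sigma <= 0:
        raise ValueError("tau and sigma must be positive")
    mean = mu + tau                # first cumulants add
    variance = sigma**2 + tau**2   # second cumulants add
    third_cumulant = 2.0 * tau**3  # the normal part contributes zero
    skewness = third_cumulant / variance**1.5
    return mean, variance, skewness


mean, variance, skewness = eg_summary(tau=10.0, mu=60.0, sigma=5.0)
print(mean, variance, round(skewness, 4))
```

Since the third cumulant \(2\tau ^{3}\) is positive for every admissible \(\tau\), the skewness is always positive; it approaches 2, the skewness of the exponential distribution, as \(\sigma /\tau\) tends to zero, and approaches 0 as \(\sigma /\tau\) grows.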

In the context of an RT experiment, if T is the RT or response time, then, for a random sample \(T=(T_1, T_2,\ldots ,T_n)',\) with \(T_i\sim {EG(\tau ,\mu ,\sigma )},\) the distribution of the statistic \(T_{(n)}=max[T_1, T_2,\ldots ,T_n],\) where max denotes the maximum of the sample, is given by

Therefore, the PDF of \(T_{(n)}\) is given by the expression:

which is an expression similar to the PDF of the random variable AP(n).
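As a worked step, and assuming the \(T_i\) are independent and identically distributed with distribution function \(F_{EG}\) and density \(f_{EG}\), the standard result for the sample maximum gives

\[
F_{T_{(n)}}(t)=P(T_1\le t,\ldots ,T_n\le t)=\{F_{EG}(t;\tau ,\mu ,\sigma )\}^{n},
\]

\[
f_{T_{(n)}}(t)=n\,f_{EG}(t;\tau ,\mu ,\sigma )\{F_{EG}(t;\tau ,\mu ,\sigma )\}^{n-1}.
\]

The second line has the AP form \(\alpha f(z)\{F(z)\}^{\alpha -1}\) with \(\alpha =n\) and \(F=F_{EG}\), which is why the sample maximum is connected with the AP family.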

Statistical details of the generalised exponential-Gaussian distribution

Moments

The moments of order r of the GEG distribution are not available in closed form and thus need to be computed numerically. For \(X\sim GEG(\tau , \mu , \sigma , \alpha )\) it follows that

where \(F_{EG}^{-1}(\cdot ;\tau ,\mu ,\sigma )\) is the inverse function of the EG distribution with parameters \(\tau ,\mu ,\sigma\). This distribution is available in R packages such as emg and gamlss.
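One way to arrive at an expression of this kind, sketched here under the assumption that the GEG distribution function is \(\{F_{EG}(x;\tau ,\mu ,\sigma )\}^{\alpha }\) (the AP construction applied to the EG distribution), is the probability integral transform. If \(U\) is uniform on (0, 1), then \(X=F_{EG}^{-1}(U^{1/\alpha };\tau ,\mu ,\sigma )\) has the GEG distribution, so that

\[
\mathbb{E}(X^{r})=\int _{0}^{1}\left[ F_{EG}^{-1}\left( u^{1/\alpha };\tau ,\mu ,\sigma \right) \right] ^{r}du,\qquad r=1,2,\ldots
\]

The integral is one-dimensional over a bounded interval, so it can be approximated with any standard quadrature rule once the EG quantile function is available.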

The central moments, \(\acute{\mu _r}=\mathbb{E}(X-\mathbb{E}(X))^r\), for \(r=2,3,4\) can be calculated by the expressions,

Also, the variance, coefficients of variation, skewness, and kurtosis are given as:

Skewness and kurtosis coefficients in the range [− 1.20841, 2.01773] and [1.658303, 10.28841] were found when \(\mu =0,~\sigma =1\), \(\tau \in [0.2,200]\) and \(\alpha \in [0.25,20]\). These intervals contain skewness and kurtosis ranges of the PN and EG distributions; thus indicating that the GEG distribution is more flexible in terms of skewness and kurtosis than the PN and EG distributions. Furthermore, the GEG distribution’s skewness and kurtosis ranges provide better coverage than those of the SN (Azzalini 1985), and the SNAP (Martínez-Flórez et al. 2014) distributions. These results also indicate that while the EG distribution can only fit positively skewed data, the GEG distribution can fit data with positive skew, negative skew, and data with kurtosis larger than that of the EG distribution.

Log-likelihood function

The parameters of the \(GEG(\tau ,\mu ,\sigma ,\alpha )\) distribution are estimated via a maximum likelihood method. Thus, for a random sample of size n, \(X_1, X_2,\ldots , X_n\), where for \(i=1,2,\ldots ,n\), \(X_i\sim GEG(\tau ,\mu ,\sigma ,\alpha )\); the log-likelihood function of the parameter vector \(\theta =(\tau ,\mu ,\sigma ,\alpha )'\) is given by:
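Assuming the GEG density has the AP form \(\alpha f_{EG}(x)\{F_{EG}(x)\}^{\alpha -1}\), the log-likelihood is \(n\log \alpha +\sum _{i}\log f_{EG}(x_i)+(\alpha -1)\sum _{i}\log F_{EG}(x_i)\). The Python sketch below evaluates it using the standard ex-Gaussian PDF and CDF with \(\tau\) as the mean of the exponential component. The function names and the data values are hypothetical, and this plain implementation is not numerically hardened for observations far in the lower tail.

```python
import math


def norm_cdf(z):
    """Standard normal distribution function."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def eg_pdf(x, tau, mu, sigma):
    """Ex-Gaussian density (tau is the mean of the exponential part)."""
    z = (x - mu) / sigma
    weight = math.exp(sigma**2 / (2.0 * tau**2) - (x - mu) / tau)
    return weight * norm_cdf(z - sigma / tau) / tau


def eg_cdf(x, tau, mu, sigma):
    """Ex-Gaussian distribution function."""
    z = (x - mu) / sigma
    weight = math.exp(sigma**2 / (2.0 * tau**2) - (x - mu) / tau)
    return norm_cdf(z) - weight * norm_cdf(z - sigma / tau)


def geg_loglik(data, tau, mu, sigma, alpha):
    """Log-likelihood of an AP-type GEG model for a sample of RTs."""
    if min(tau, sigma, alpha) <= 0:
        raise ValueError("tau, sigma and alpha must be positive")
    total = len(data) * math.log(alpha)
    for x in data:
        total += math.log(eg_pdf(x, tau, mu, sigma))
        total += (alpha - 1.0) * math.log(eg_cdf(x, tau, mu, sigma))
    return total


rts = [55.0, 62.0, 68.0, 71.0, 80.0, 95.0]  # hypothetical RTs in ms
for alpha in (0.5, 1.0, 2.0):
    print(alpha, geg_loglik(rts, tau=10.0, mu=60.0, sigma=5.0, alpha=alpha))
```

With \(\alpha =1\) the function reduces to the ex-Gaussian log-likelihood, which gives a simple sanity check. In practice the function would be passed, with a sign change, to a numerical optimiser.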

Score function

The elements of the score function \(U(\theta )=(U(\tau ),U(\mu ),U(\sigma ),U(\alpha ))',\) defined as the derivatives of the log-likelihood function with respect to the parameters, are given by:

and

where \(w_i=\frac{\phi (z_i)}{\Phi (z_i)}\) with \(z_i=\frac{x_i-\mu }{\sigma }\). The corresponding score equations are obtained by equating the above equations to zero. The score equations can be solved by iterative numerical methods and this, in turn, leads to maximum likelihood estimators.
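As a worked example of one score equation, and assuming the AP-type log-likelihood \(\ell (\theta )=n\log \alpha +\sum _{i}\log f_{EG}(x_i)+(\alpha -1)\sum _{i}\log F_{EG}(x_i)\), the component for \(\alpha\) has a simple form because \(\alpha\) enters only through the first and last terms:

\[
U(\alpha )=\frac{\partial \ell }{\partial \alpha }=\frac{n}{\alpha }+\sum _{i=1}^{n}\log F_{EG}(x_i;\tau ,\mu ,\sigma ).
\]

Setting \(U(\alpha )=0\) gives, for fixed \((\tau ,\mu ,\sigma )\),

\[
\hat{\alpha }(\tau ,\mu ,\sigma )=-\frac{n}{\sum _{i=1}^{n}\log F_{EG}(x_i;\tau ,\mu ,\sigma )},
\]

which is positive because each \(\log F_{EG}(x_i)\) is negative. Substituting this expression back into the log-likelihood leaves a three-parameter profile problem for the iterative solver.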

Information matrices

The elements of the observed information matrix, \(J(\theta ),\) defined as minus the second derivatives of the log-likelihood function with respect to the parameters denoted by \(\kappa _{\tau \tau },~\kappa _{\mu \tau },~\kappa _{\sigma \tau },\cdots ,\kappa _{\alpha \alpha }\), are given by:

and

where \(w_i(\mu ,\sigma ,\tau )=\frac{\phi \left( \frac{x_i-\mu }{\sigma }-\frac{\sigma }{\tau }\right) }{\Phi \left( \frac{x_i-\mu }{\sigma }-\frac{\sigma }{\tau }\right) }\) and \(q_i=w_i(\mu ,\sigma ,\tau )\left[ \frac{x_i-\mu }{\sigma }-\frac{\sigma }{\tau }+w_i(\mu ,\sigma ,\tau ) \right]\).

For large n, the observed information matrix \(J(\theta )\), converges to the expected information matrix \(I(\theta )\). Hence, with the elements of the matrix \(J(\theta )\) and the power-normal family being characterised by having a non-singular information matrix [see Pewsey et al. (2012)], it can be concluded that

That is, the maximum likelihood estimator \(\hat{\theta }\) is asymptotically normally distributed with covariance matrix \(J^{-1}(\theta ).\) The standard errors of the parameter estimates can be obtained as the square roots of the diagonal elements of \(\hat{J}^{-1}(\hat{\theta })\), and these can in turn be used to construct confidence intervals for the model parameters.
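Written out, the usual Wald-type interval that follows from asymptotic normality is sketched below. The symbol \(\gamma\) is introduced here for the significance level, to avoid a clash with the shape parameter \(\alpha\), and \(z_{1-\gamma /2}\) denotes the corresponding standard normal quantile.

\[
\widehat{se}(\hat{\theta }_j)=\sqrt{\left[ \hat{J}^{-1}(\hat{\theta })\right] _{jj}},\qquad \hat{\theta }_j\pm z_{1-\gamma /2}\,\widehat{se}(\hat{\theta }_j),\qquad j=1,\ldots ,4.
\]

For the positive parameters \(\tau\), \(\sigma\) and \(\alpha\), such an interval may extend below zero in small samples; computing it on the log scale and transforming back is a common remedy.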

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Marmolejo-Ramos, F., Barrera-Causil, C., Kuang, S. et al. Generalised exponential-Gaussian distribution: a method for neural reaction time analysis. Cogn Neurodyn 17, 221–237 (2023). https://doi.org/10.1007/s11571-022-09813-2

DOI: https://doi.org/10.1007/s11571-022-09813-2