Abstract

In this paper we consider a numerical scheme for the treatment of an integro-differential equation that arises as the formulation of a nonlocal diffusion type equation. The discretization relies on the method of lines, while the integral operator is evaluated by quadrature formulae based on generalized Bernstein polynomials. Numerical evidence shows that the proposed method is a suitable and reliable approach for the problem.

1 Introduction

The application of mathematics to physics and life sciences allows the formulation of models that interpret the phenomena observed by experimentalists. A wealth of examples can be drawn from mechanics, material sciences, fluid mechanics [4] or human population dynamics [1]. For instance, with the advancement of technology, new materials have appeared that exhibit features departing from the classical assumptions of Newtonian fluids. They lie at the boundary between fluids and soft solids, with a behavior that is plastic rather than elastic. Rheology investigates the properties of these soft solids. Understanding them requires new reformulations of the classical equations of mathematical physics.

In physics in particular, attention has recently been paid to situations in which the energy at a point depends also on the neighboring points, up to a threshold distance which is a function of the range of the molecular forces. Thus a material that has two possible stable states can be set up from these microscopic interactions over a lattice of points. This gives a dynamical system in which the sum of the lattice interactions represents an approximated integral. In this way nonlocal alternatives of well-known equations, such as Phase-Field Klein-Gordon as well as Allen-Cahn, are obtained [5]. For instance, expressing the concentration of a species at a point in a two-element alloy gives rise to the nonlocal version of the Cahn-Hilliard equation [6,7,8].

As another example, a nonlocal version of the nonlinear Schrödinger equation, based on a convolution, has also been investigated. A numerical method has been proposed to reduce the computational cost of simulating the original equation. By means of partial differential equation techniques, the original integro-differential equation is recast as a system of partial differential equations, which can then be numerically integrated by an improved finite integration method [13].

Many numerical methods for nonlocal equations rely essentially on techniques from partial differential equations. See for instance the unconditionally energy stable finite difference convex splitting schemes of [2]: the first is first order accurate in time and second order accurate in space, the second is fully second order, and both are solved by efficient nonlinear multigrid methods. See also the more recent second order methods presented in [3].

In this paper we address the problem of nonlocal diffusion type equations, describing a fourth order scheme for their solution. The main novelty of this investigation is that the proposed line method avoids any reformulation of the problem, dealing instead directly with the original integro-differential formulation. To illustrate the method, we consider a relatively simple diffusive equation.

The paper is organized as follows. In the next section we describe the equation under study; the numerical scheme is presented in Sect. 3 and the needed quadratures in Sect. 4; numerical evidence in support of our results is reported in Sect. 5, and Sect. 6 concludes the paper.

2 The sample model

We illustrate the numerical method on a simple example, namely a diffusion equation in which nonlocal interactions are considered. Specifically, we consider the following nonlinear diffusion equation subject to nonlocal interactions

where the kernel function \(\varphi (y-x)\) is sufficiently smooth. The initial condition is

while the Dirichlet boundary conditions read

In the examples, we will simply take \(H_-=H_+\). For the subsequent illustration of the numerical method it is convenient to set a specific notation for the nonlocal interactions, namely

3 The method

We propose to use the method of lines, suitably adapted for this task. It can be summarized in the following steps:

- Collocate (1) at a set of \(n-1\) equispaced internal points \(\{x_i\}_{i=1}^{n-1}\) of the interval \((-a,a)\);
- Approximate the spatial derivatives \(\frac{\partial ^2 u(x,t)}{\partial x^2}\Big |_{x=x_i}\) by means of finite difference schemes;
- Approximate the integrals \(J(u,x_i,t)\) with a quadrature formula based on the Generalized Bernstein polynomials;
- Solve the resulting system of ordinary differential equations by applying the standard Runge-Kutta (4,5) method.

In more detail, let n denote the number of subintervals of the spatial mesh, so that the \(n+1\) equispaced nodes and the stepsize are

\[x_i = -a + ih, \quad i=0,\ldots ,n, \qquad h=\frac{2a}{n};\]

equation (1) is collocated at the internal nodes \(x_i\), \(i=1,\ldots ,n-1\):

At the points \(x_0\) and \(x_n\) the boundary conditions (2) are employed. The restriction of the solution at the nodes becomes a function solely of time, denoted by \(u_i(t) = u(x_i,t)\); its second partial derivative in space is discretized by means of the divided central difference scheme of order \(\mathcal {O}(h^4)\) (see for instance [10]):

For nodes whose stencil would involve points that lie outside the mesh, we suitably modify the finite difference formulae, while keeping their accuracy comparable with that of (4), namely order \(\mathcal {O}(h^4)\). These meshpoints near the boundary are those with index \(i=1\) and \(i=n-1\); the modified finite difference formulae are:
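As an illustration of how fourth-order weights of this kind can be obtained, the following sketch solves the Taylor moment conditions exactly in rational arithmetic. It assumes the classical five-point central stencil for the internal nodes and a six-point one-sided stencil for \(i=1\) (mirrored for \(i=n-1\)); the formulae (4)-(6) of the paper may be written differently. The function name `fd_weights` is hypothetical, and plain Gauss-Jordan elimination is used here instead of the recursive algorithm of [10].

```python
from fractions import Fraction
from math import factorial


def fd_weights(offsets, order):
    """Weights w_k such that sum_k w_k * f(x + s_k*h) ~ h**order * f^(order)(x).

    Solves the moment conditions sum_k w_k * s_k**m = m! * [m == order],
    m = 0..len(offsets)-1, exactly by Gauss-Jordan elimination.
    """
    n = len(offsets)
    # Augmented matrix of the Vandermonde-type linear system.
    rows = [[Fraction(s) ** m for s in offsets]
            + [Fraction(factorial(m) if m == order else 0)]
            for m in range(n)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        piv = rows[col][col]
        rows[col] = [v / piv for v in rows[col]]
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [x - factor * y for x, y in zip(rows[r], rows[col])]
    return [rows[r][n] for r in range(n)]


# Internal nodes: offsets -2..2 around x_i (assumed stencil).
central = fd_weights([-2, -1, 0, 1, 2], 2)
# Node i = 1: offsets -1..4, i.e. the nodes x_0..x_5 (assumed stencil).
one_sided = fd_weights([-1, 0, 1, 2, 3, 4], 2)

# Multiply by 12 to read the weights over the common denominator 12*h**2.
print([int(12 * w) for w in central])
print([int(12 * w) for w in one_sided])
```

Both stencils are exact for polynomials up to degree five, which gives an \(\mathcal {O}(h^4)\) truncation error for the second derivative. Since the second derivative is even under reflection, the weights for \(i=n-1\) are the same ones applied to \(u_n, u_{n-1}, \ldots , u_{n-5}\).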

Moreover in (3) we approximate the integrals \(J(u,x_i,t)\) by means of one of the two quadrature formulae that will be illustrated in Sect. 4; both of them are based on the uniform mesh \(x_i\), \(i=0,\ldots ,n\). The choice between these quadratures depends on the nature of the kernel \(\varphi (y-x)\). Hence, denoting by \(J_n(u,x,t)\) the quadrature rule used for the discretization of J, see (16), from the divided difference scheme (4)-(6), we get the following system of ODEs

with initial conditions \(u_i(0)=k(x_i)\), \(i=0,\ldots ,n\). The final discretized system of ordinary differential equations (7) is then solved by means of the Runge-Kutta (4, 5) method, implemented by the "ode45" Matlab integrator.
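The whole procedure can be sketched in a few lines. The code below is only a sketch under explicit assumptions: the model is taken in the form \(u_t = u_{xx} + J(u,x,t) + g(x,t)\) with a source term g, which need not coincide with (1); composite Simpson weights stand in for the quadrature \(J_n\); and a fixed-step classical Runge-Kutta scheme of order four stands in for the adaptive (4, 5) pair used by "ode45". All function and parameter names are hypothetical.

```python
import math


def second_derivative(u, h):
    """Fourth-order finite differences for u_xx at the internal nodes 1..n-1."""
    n = len(u) - 1
    c = 1.0 / (12.0 * h * h)
    d2 = [0.0] * (n + 1)  # entries 0 and n are never used
    d2[1] = c * (10*u[0] - 15*u[1] - 4*u[2] + 14*u[3] - 6*u[4] + u[5])
    for i in range(2, n - 1):
        d2[i] = c * (-u[i-2] + 16*u[i-1] - 30*u[i] + 16*u[i+1] - u[i+2])
    d2[n-1] = c * (10*u[n] - 15*u[n-1] - 4*u[n-2]
                   + 14*u[n-3] - 6*u[n-4] + u[n-5])
    return d2


def solve_mol(n, a, T, steps, phi, source, k, left, right):
    """Method of lines for the ASSUMED model u_t = u_xx + J + source."""
    h = 2.0 * a / n
    x = [-a + i * h for i in range(n + 1)]
    # Composite Simpson weights (n even): a stand-in for the rule J_n.
    w = [h / 3.0 * (1 if j in (0, n) else 4 if j % 2 else 2)
         for j in range(n + 1)]
    K = [[w[j] * phi(x[j] - x[i]) for j in range(n + 1)] for i in range(n + 1)]

    def rhs(t, v):
        u = [left(t)] + v + [right(t)]  # attach the Dirichlet values
        d2 = second_derivative(u, h)
        return [d2[i] + sum(kij * uj for kij, uj in zip(K[i], u))
                + source(x[i], t) for i in range(1, n)]

    v = [k(xi) for xi in x[1:n]]  # initial condition at the internal nodes
    dt, t = T / steps, 0.0
    for _ in range(steps):  # classical RK4, fixed step
        k1 = rhs(t, v)
        k2 = rhs(t + dt / 2, [vi + dt / 2 * s for vi, s in zip(v, k1)])
        k3 = rhs(t + dt / 2, [vi + dt / 2 * s for vi, s in zip(v, k2)])
        k4 = rhs(t + dt, [vi + dt * s for vi, s in zip(v, k3)])
        v = [vi + dt / 6 * (p + 2*q + 2*r + s)
             for vi, p, q, r, s in zip(v, k1, k2, k3, k4)]
        t += dt
    return x, [left(T)] + v + [right(T)]


# Manufactured test: phi = 1 and u(x,t) = exp(-t) * cos(pi*x/2) on [-1, 1].
def exact(x, t):
    return math.exp(-t) * math.cos(math.pi * x / 2)


def g(x, t):
    return math.exp(-t) * ((math.pi**2 / 4 - 1) * math.cos(math.pi * x / 2)
                           - 4 / math.pi)


xs, un = solve_mol(16, 1.0, 0.5, 500, lambda s: 1.0, g,
                   lambda x: exact(x, 0.0), lambda t: 0.0, lambda t: 0.0)
err = max(abs(ui - exact(xi, 0.5)) for xi, ui in zip(xs, un))
print(err)
```

With an explicit fixed-step integrator the time step must be of order \(h^2\) for stability, which is one reason an adaptive solver is convenient in practice.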

4 The quadrature formulae

Let us fix x and t. To approximate the integral

we suggest two possible strategies, according to the nature of the kernel \(\varphi \). The first deals with very smooth kernels; the second is reliable whenever the kernel presents some kind of pathology: weak singularity, high oscillations (see Fig. 1), “near” strong singularity, etc. In such cases the standard rules fail, whereas product integration rules provide satisfactory results because they integrate the pathology exactly. Each kernel has to be treated with a specific approach based on its nature, and this entails different ways of implementing the computation. For the pathological case we consider oscillating kernels of the type

for a “large” oscillation frequency \(\omega \).

Both the rules that we consider are based on the same Generalized Bernstein operator

where \(B_n\) is the ordinary Bernstein operator, shifted to the interval \([-a,a]\). Boolean sums based on the Bernstein operator \(B_n\) represent an adequate tool to attain our goal, since they are based on \(n+1\) equally spaced points in \([-a,a]\). Furthermore, unlike for the “originating” operator \(B_{n}\), the speed of convergence increases with the smoothness of the approximated function. To be more precise, we first recall that for \(r\in \mathbb {N}^*\), with \(1 \le r\le 2\ell \), the \(r\)-th Sobolev-type space is defined as

where \(\displaystyle \Vert f\Vert _{\infty }:=\max _{x\in [-a,a]}|f(x)|\) is the uniform norm, \(\phi (x)=\sqrt{a^2-x^2}\) and \(\mathcal{AC}\) denotes the space of all locally absolutely continuous functions on \([-a, a]\). Consequently, for each \(f \in W_r([-a,a])\) the error is estimated as \(\Vert f-B_{n,\ell }(f)\Vert _\infty = \mathcal {O}(\sqrt{n^{-r}})\), \(1 \le r\le 2\ell \). In other words, the convergence rate behaves like the square root of the best approximation error for this functional space. Note that \(C^r([-a,a])\subset W_r([-a,a])\). A survey on the Generalized Bernstein polynomials and their applications is contained in [12].
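To make the effect of the parameter \(\ell \) concrete, here is a minimal sketch that assumes the usual Boolean-sum definition \(B_{n,\ell } = I-(I-B_n)^{\ell }\). Writing \(B_{n,\ell }(f)=\sum _i p_{n,i}\, c_i\), the coefficients can be updated by the recursion \(c \leftarrow c + (f - Ac)\), where \(A_{ij}=p_{n,j}(x_i)\) and the starting vector is the vector of samples \(f(x_i)\). The function names are hypothetical and the test function \(e^x\) is only an example.

```python
from math import comb, exp


def bernstein_basis(n, x, a):
    """Values p_{n,0}(x), ..., p_{n,n}(x) of the Bernstein basis on [-a, a]."""
    s = (x + a) / (2.0 * a)
    return [comb(n, i) * s**i * (1.0 - s)**(n - i) for i in range(n + 1)]


def gb_coefficients(fvals, n, ell, a):
    """Coefficients c_i with B_{n,ell} f = sum_i p_{n,i} * c_i.

    Assumes B_{n,ell} = I - (I - B_n)**ell, which yields the recursion
    c <- c + (f - A c), started from c = f (the case ell = 1).
    """
    nodes = [-a + 2.0 * a * i / n for i in range(n + 1)]
    A = [bernstein_basis(n, xi, a) for xi in nodes]
    c = list(fvals)
    for _ in range(ell - 1):
        Ac = [sum(aij * cj for aij, cj in zip(row, c)) for row in A]
        c = [ci + fi - aci for ci, fi, aci in zip(c, fvals, Ac)]
    return c


def max_error(f, n, ell, a, samples=200):
    """Maximum of |f - B_{n,ell} f| over a uniform grid of sample points."""
    nodes = [-a + 2.0 * a * i / n for i in range(n + 1)]
    c = gb_coefficients([f(t) for t in nodes], n, ell, a)
    worst = 0.0
    for s in range(samples + 1):
        x = -a + 2.0 * a * s / samples
        approx = sum(p * ci for p, ci in zip(bernstein_basis(n, x, a), c))
        worst = max(worst, abs(approx - f(x)))
    return worst


err_bernstein = max_error(exp, 20, 1, 1.0)   # ordinary Bernstein operator
err_generalized = max_error(exp, 20, 4, 1.0)  # Boolean sum with ell = 4
print(err_bernstein, err_generalized)
```

For a smooth function the error with \(\ell =4\) should be markedly smaller than with \(\ell =1\) on the same \(n+1\) equispaced samples, in line with the estimate above.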

In what follows by writing \(g_x(y)\) we mean that any bivariate function g(x, y) is taken as function just of the variable y. Furthermore, from now on we use \(\mathcal {C}\) in order to denote a positive constant, which may have different values at different occurrences, and we write \(\mathcal {C}\ne \mathcal {C}(n,f,\ldots )\) to mean that \(\mathcal {C}>0\) is independent of \(n,f, \ldots \).

4.1 First case: the “smooth” kernel \(\varphi (y-x)\)

In this case we use the Generalized Bernstein quadrature formula studied in [11] and based on shifted Generalized Bernstein Polynomials:

where \(c_{i,j}^{(n,\ell )}\) are the entries of the matrix \(C_{n,\ell }\in {\mathbb {R}}^{(n+1)\times (n+1)}\),

and \({\textbf {I}}\) denotes the identity matrix. Furthermore

Letting

under the assumption

and setting

we have [9]

An analogous estimate was derived in [11] in the classical case on the interval [0, 1].
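A quadrature rule of this type can be sketched by integrating \(B_{n,\ell }(f)\) term by term. The sketch assumes that the matrix of coefficients equals \(\sum _{k=0}^{\ell -1}(\textbf{I}-A)^k\) with \(A_{ij}=p_{n,j}(x_i)\), which is equivalent to the Boolean-sum definition, and it uses the identity \(\int _{-a}^{a}p_{n,i}(y)\,dy = 2a/(n+1)\). The resulting weights are meant to play the role of the coefficients of the rule in (9), whose exact form in the paper may differ; the function names are hypothetical.

```python
from math import comb, exp


def bernstein_basis(n, x, a):
    """Values p_{n,0}(x), ..., p_{n,n}(x) of the Bernstein basis on [-a, a]."""
    s = (x + a) / (2.0 * a)
    return [comb(n, i) * s**i * (1.0 - s)**(n - i) for i in range(n + 1)]


def gb_quadrature(n, ell, a):
    """Nodes and weights of a quadrature rule obtained by integrating B_{n,ell} f.

    The weights are (2a/(n+1)) * 1^T * sum_{k=0}^{ell-1} (I - A)**k,
    accumulated through repeated row-vector times matrix products.
    """
    nodes = [-a + 2.0 * a * i / n for i in range(n + 1)]
    A = [bernstein_basis(n, xi, a) for xi in nodes]
    r = [1.0] * (n + 1)  # r = 1^T (I - A)**k, starting from k = 0
    total = r[:]
    for _ in range(ell - 1):
        rA = [sum(r[i] * A[i][j] for i in range(n + 1)) for j in range(n + 1)]
        r = [rj - raj for rj, raj in zip(r, rA)]
        total = [tj + rj for tj, rj in zip(total, r)]
    scale = 2.0 * a / (n + 1)
    return nodes, [scale * tj for tj in total]


def integrate(f, n, ell, a):
    nodes, weights = gb_quadrature(n, ell, a)
    return sum(wj * f(xj) for xj, wj in zip(nodes, weights))


exact = exp(1.0) - exp(-1.0)  # integral of exp over [-1, 1]
err_l1 = abs(integrate(exp, 20, 1, 1.0) - exact)
err_l3 = abs(integrate(exp, 20, 3, 1.0) - exact)
print(err_l1, err_l3)
```

For \(\ell =1\) all weights equal \(2a/(n+1)\); increasing \(\ell \) changes only the weights, not the equispaced nodes, so the rule can share the mesh of the finite difference scheme.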

4.2 Second case: the kernel \(\varphi (y-x)=e^{i\omega (y-x)}\)

By splitting this kernel into its real and imaginary parts, we obtain integrals of the type

Hence, by approximating f by \(B_{n,\ell }(f)\) we have

where \(p_{n,i}(y)\) and \(c_{i,j}^{(n,\ell )}\) are defined as in the previous subsection.

For the product rule to work, the integrals \(q_i(y)\) need to be accurately computed. To this end, we use the formula proposed in [9]. For the benefit of the reader, we now briefly recall it. Let \(N=\left\lfloor \omega \frac{a}{\pi }\right\rfloor +1\) and consider the partition \([-a,\ a]=\bigcup _{h=1}^N [t_{h-1}, t_h]\), \(t_h=-a+\frac{2a}{N}h.\) Hence, we have

where for \(h=1,2\dots ,N\) the transformations

map \([t_{h-1},\,t_h]\) into \([-1,\,1]\). Then, approximating each integral by the n-th Gauss-Legendre rule,

where \(\{z_k\}_{k=1}^{n}\) represent the zeros of the \(n-\)th Legendre polynomial and \(\{\lambda _k\}_{k=1}^{n}\) the corresponding Christoffel numbers, we have

Combining the above equation with (13), we obtain the following quadrature rule:

For the quadrature error, for any \(f\in W_r([-a,a])\), \(1 \le r \le 2\ell \), and for n sufficiently large, say \(n>n_0\) for a fixed \(n_0\), we have [9]

where \(\mathcal {C}\ne \mathcal {C}(n,f)\) and \(\Vert C_{n,\ell }\Vert _\infty \) denotes the infinity norm of the matrix \(C_{n,\ell }\) defined in (10).
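The splitting strategy recalled above can be illustrated as follows: the interval is divided into \(N=\left\lfloor \omega \frac{a}{\pi }\right\rfloor +1\) subintervals and a Gauss-Legendre rule is applied on each. This is only a sketch: the number m of Gauss points is a free parameter here, the nodes are computed by a plain Newton iteration, and the integrand `g` is a generic placeholder for the basis polynomials \(p_{n,i}\). All names are hypothetical.

```python
import math


def legendre_and_derivative(m, z):
    """P_m(z) and P_m'(z) from the three-term recurrence (valid for |z| < 1)."""
    p_prev, p = 1.0, z  # P_0 and P_1
    for j in range(2, m + 1):
        p_prev, p = p, ((2 * j - 1) * z * p - (j - 1) * p_prev) / j
    return p, m * (z * p - p_prev) / (z * z - 1.0)


def gauss_legendre(m):
    """Nodes and Christoffel numbers of the m-point Gauss-Legendre rule."""
    nodes, weights = [], []
    for k in range(1, m + 1):
        z = math.cos(math.pi * (k - 0.25) / (m + 0.5))  # initial guess
        for _ in range(50):  # Newton iterations on P_m
            p, dp = legendre_and_derivative(m, z)
            z -= p / dp
        _, dp = legendre_and_derivative(m, z)
        nodes.append(z)
        weights.append(2.0 / ((1.0 - z * z) * dp * dp))
    return nodes, weights


def oscillatory_integral(g, omega, a, m, trig=math.cos):
    """Approximate the integral of g(y) * trig(omega * y) over [-a, a]."""
    N = math.floor(omega * a / math.pi) + 1
    z, lam = gauss_legendre(m)
    total = 0.0
    for h in range(1, N + 1):
        t0 = -a + 2.0 * a * (h - 1) / N
        t1 = -a + 2.0 * a * h / N
        half, mid = 0.5 * (t1 - t0), 0.5 * (t1 + t0)
        # Map the Gauss nodes from [-1, 1] to [t0, t1] and accumulate.
        total += half * sum(l * g(mid + half * zk)
                            * trig(omega * (mid + half * zk))
                            for zk, l in zip(z, lam))
    return total


omega = 50.0
approx = oscillatory_integral(lambda y: 1.0, omega, 1.0, 10)
reference = 2.0 * math.sin(omega) / omega  # closed form for g = 1
print(abs(approx - reference))
```

With this choice of N each subinterval has length at most \(2\pi /\omega \), that is, at most one full oscillation of the kernel, so a moderate number of Gauss points per subinterval is enough.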

4.3 The approximation of J(u, x, t)

In summary, by using the results of the previous two subsections, to calculate (8) we have the following alternatives:

where \(D_j^{(\ell )}\) and \(P_{j}^{(\ell )}(x)\) are defined in (9) and (15).

5 Numerical experiments

In this section we report the results of some numerical experiments on the nonlocal diffusion model (1) for different choices of the kernel function \(\varphi (y-x)\). Without loss of generality we assume \(a=1\) and integrate up to time \(T=10\) throughout all our simulations, with the exception of Examples 5.5 and 5.6, in which we set \(a=2\) and \(a=3\) respectively.

To show the reliability of the proposed algorithm, we consider at first some examples constructed so that the analytical solution u(x, t) is known. In such cases we report the maximum absolute errors attained by the scheme. Denoting by \(u_n(x,t)\) the numerical solution of the discretized model for a fixed number n of meshnodes, we set

The tables also display the Estimated Order of Convergence (EOC) and the Mean Estimated Order of Convergence defined as follows:

where M is a fixed integer. In our case we set \(M=8\).
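For readers who wish to reproduce this kind of table, the sketch below computes an estimated order of convergence from a sequence of errors. It assumes the common definition \(\log (E_{n_k}/E_{n_{k+1}})/\log (n_{k+1}/n_k)\) and the arithmetic mean of the resulting values, which may differ in detail from the definitions used in the paper. The error values in the example are synthetic, generated from an exact \(n^{-4}\) law, and are not taken from the tables.

```python
import math


def eoc(ns, errors):
    """Estimated orders of convergence between consecutive mesh sizes."""
    return [math.log(errors[k] / errors[k + 1]) / math.log(ns[k + 1] / ns[k])
            for k in range(len(ns) - 1)]


def eoc_mean(ns, errors):
    """Arithmetic mean of the estimated orders of convergence."""
    values = eoc(ns, errors)
    return sum(values) / len(values)


# Synthetic data only: errors decaying exactly like 3 * n**(-4).
ns = [8 * 2**k for k in range(9)]     # 9 mesh sizes give M = 8 estimates
errors = [3.0 * n**-4 for n in ns]
orders = eoc(ns, errors)
mean_order = eoc_mean(ns, errors)
print(orders, mean_order)
```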

All the computed examples are carried out in Matlab R2022a in double precision on an M1 MacBook Pro under the macOS 12.4 operating system.

For the sake of brevity, from now on we also introduce the notation

In Examples 5.1 and 5.2 the solution u(x, t) is known and the kernels \(\varphi (y-x)\) are both smooth. Therefore, the method is based on the Generalized Bernstein formula given in Sect. 4.1. As Tables 1 and 2 show, the \(\text {EOC}_{\text {mean}}\) is consistent with the expected value 4, which coincides with the order of the divided difference schemes employed.

Example 5.3 considers a kernel of the type \(\sin (\omega (y-x))\). In this case the quadrature formula for the discretization of J(u, x, t) in (1) is the product formula described in Sect. 4.2. Table 3 shows the performance of the method for four different choices of the oscillatory parameter \(\omega \), while Table 4 makes a direct comparison between the results obtained by the method based on the quadrature (9) and on the product rule (15) respectively. Note that for high values of \(\omega \) the results indicate that the latter is the better choice for obtaining good approximations of the solution u(x, t).

Example 5.4 has the same known solution u(x, t) as Example 5.3, but the kernel \(\varphi (y-x)\) is of the type \(\cos (\omega (y-x))\). This choice is made in order to cover applications in which the kernel function is of oscillatory type, namely \(\varphi (y-x)=e^{i\omega (y-x)}\), as described in Sect. 4.2. The good performance of the method based on the product formula is displayed in Table 5, using the same four values of \(\omega \) as in the previous example. Note that these examples also share almost the same \(\text {EOC}_{\text {mean}}\), which again is approximately 4, as shown in Tables 6 and 7. Furthermore, in agreement with the theoretical estimates for the described quadrature formulae, convergence to the exact solution is achieved.

Examples 5.5 and 5.6 are variations of Examples 5.1 and 5.4 respectively. We consider them to show that increasing the value of a does not substantially affect the error propagation. In the first case we set \(a=2\) and in the second one \(a=3\) and \(\omega =80\). Tables 8 and 9 display the obtained results.

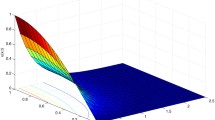

Finally, in Examples 5.7 and 5.8 the solution u(x, t) of the problem (1) is not known explicitly. Here the choices of \(\varphi (y-x)\) respectively are \(\sin (\omega (y-x))\) and \(e^{-(y-x)}\). Since in the previous examples we already tested the accuracy of our method, we just plot the approximated solution \(u_n(x,t)\) for \(n=256\). Hence, Figs. 2 and 3 display the approximated solutions, each of them obtained by the method based on the more suitable quadrature formula, according to the nature of the considered kernel \(\varphi (y-x)\). In both cases the plotted solutions \(u_{256}(x,t)\) satisfy the given initial and boundary conditions. Moreover, from Fig. 2 one can notice that the approximated solutions inherit the oscillating behavior of the kernel \(\sin (\omega (y-x))\): this can be easily observed when approaching the end of the time span [0, 10].

Overall from these results we infer that the proposed line method is a suitable and reliable discretization method for the problem (1).

Example 5.1

Example 5.5-Plot of the approximated solution u(x, t) for different values of \(\omega \)

Example 5.2

Example 5.3

Example 5.6-Plot of the approximated solution u(x, t)

Example 5.4

Example 5.5

Example 5.6

Example 5.7

Example 5.8

6 Conclusions

In this paper we have presented a line method for the numerical solution of evolution equations of nonlocal type. It is fourth order accurate in both space and time, in contrast to other currently employed methods that, possibly after a reformulation of the original integro-differential equation, use second order accurate finite difference schemes. Our numerical scheme relies on an accurate and efficient discretization of the integral term, achieved by using state-of-the-art quadratures based on Generalized Bernstein polynomials. The numerical examples support the analytical findings when the kernel of the integral is smooth or, alternatively, presents weak singularities or high frequency oscillations. The case of strongly singular kernels, which presents additional difficulties, is currently under investigation and will be examined in future research.

References

Banerjee, M., Petrovskii, S.V., Volpert, V.: Nonlocal reaction-diffusion models of heterogeneous wealth distribution. Mathematics 9, 351 (2021). https://doi.org/10.3390/math9040351

Baskaran, A., Hu, Z., Lowengrub, J.S., Wang, C., Wise, S.M., Zhou, P.: Energy stable and efficient finite-difference nonlinear multigrid schemes for the modified phase field crystal. J. Comput. Phys. 250, 270–292 (2013). https://doi.org/10.5555/2743136.2743331

Baskaran, A., Lowengrub, J.S., Wang, C., Wise, S.M.: Convergence analysis of a second order convex splitting scheme for the modified phase field crystal equation. SIAM J. Numer. Anal. 51(5), 2851–2873 (2013). https://doi.org/10.1137/120880677

Bates, P.W.: On some nonlocal evolution equations arising in materials science. Nonlinear dynamics and evolution equations. Fields Inst. Commun. 48, 13–52 (2006)

Bates, P.W., Brown, S., Han, J.: Numerical analysis for a nonlocal Allen-Cahn equation. Int. J. Numer. Anal. Model. 6(1), 33–49 (2009)

Cahn, J.W., Hilliard, J.E.: Free energy of a nonuniform system I. Interfacial free energy. J. Chem. Phys. 28, 258–267 (1958)

Elliott, C.M., Zheng, S.: On the Cahn-Hilliard equation. Arch. Rat. Mech. Anal. 96, 339–357 (1986)

Elliott, C.M., Zheng, S.: Global existence and stability of solutions to the phase field equations. Free Boundary Problems. Int. Ser. Numer. Math. 95, 46–58 (1990)

Fermo, L., Mezzanotte, D., Occorsio, D.: A product integration rule on equispaced nodes for highly oscillating integrals. (preprint submitted). arXiv:2207.08881

Fornberg, B.: Generation of finite difference formulas on arbitrarily spaced grids. Math. Comput. 51(184), 699–706 (1988)

Occorsio, D., Russo, M.G.: A Nyström method for Fredholm integral equations based on equally spaced knots. Filomat 28(1), 49–63 (2014). https://doi.org/10.2298/FIL1401049O

Occorsio, D., Russo, M.G., Themistoclakis, W.: Some numerical applications of generalized Bernstein Operators. Constr. Math. Anal. 4(2), 186–214 (2021). https://doi.org/10.33205/cma.868272

Zhao, W., Lei, M., Hon, Y.-C.: An improved finite integration method for nonlocal nonlinear Schrödinger equations. Comput. Math. Appl. 113, 24–33 (2022). https://doi.org/10.1016/j.camwa.2022.03.004

Acknowledgements

The authors are grateful to the referees for their careful reading of the manuscript and for the useful comments which helped to improve the quality of this paper. All the authors are members of the INdAM research group GNCS. Since the GNCS is a nationwide Italian group, it is difficult to list all its members and their corresponding affiliation details.

Funding

Open access funding provided by Università degli Studi della Basilicata within the CRUI-CARE Agreement. No funding was received for conducting this study.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare.

Additional information

This paper is dedicated to the memory of Professor Ilio Galligani.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mezzanotte, D., Occorsio, D., Russo, M.G. et al. A discretization method for nonlocal diffusion type equations. Ann Univ Ferrara 68, 505–520 (2022). https://doi.org/10.1007/s11565-022-00436-3

DOI: https://doi.org/10.1007/s11565-022-00436-3